1. Introduction

The Automatic Identification System (AIS) plays a pivotal role in the digitization of the shipping industry by providing frequent vessel movement data [1]. An AIS transponder is mandatory for all ships with a gross tonnage over 300 that sail in international waters, ships over 500 tons that do not sail internationally, and all passenger ships [2]. With over 310 billion AIS messages transmitted every year, the maritime sector has unquestionably entered the big data era [3]. AIS was originally intended to prevent ship collisions [1], but ongoing improvements in data quality and coverage have greatly expanded its potential applications. Although AIS data can be received for free with the appropriate infrastructure (e.g., base stations), organizations that collect and store global AIS data typically charge for access, creating a significant barrier to entry and hindering the big data potential of the maritime sector. Recently, a few providers, most notably AISStream (https://aisstream.io/ accessed on 4 November 2025) and AISHub (https://aishub.net accessed on 4 November 2025), have started offering real-time terrestrial AIS data at no cost. In this work, we focus on open-access sources, which can be sustainably collected across both large spatial and temporal scales. To address the challenge of incomplete port berth documentation, we propose an unsupervised spatial modeling framework that leverages freely available AIS data to localize berthing sites.

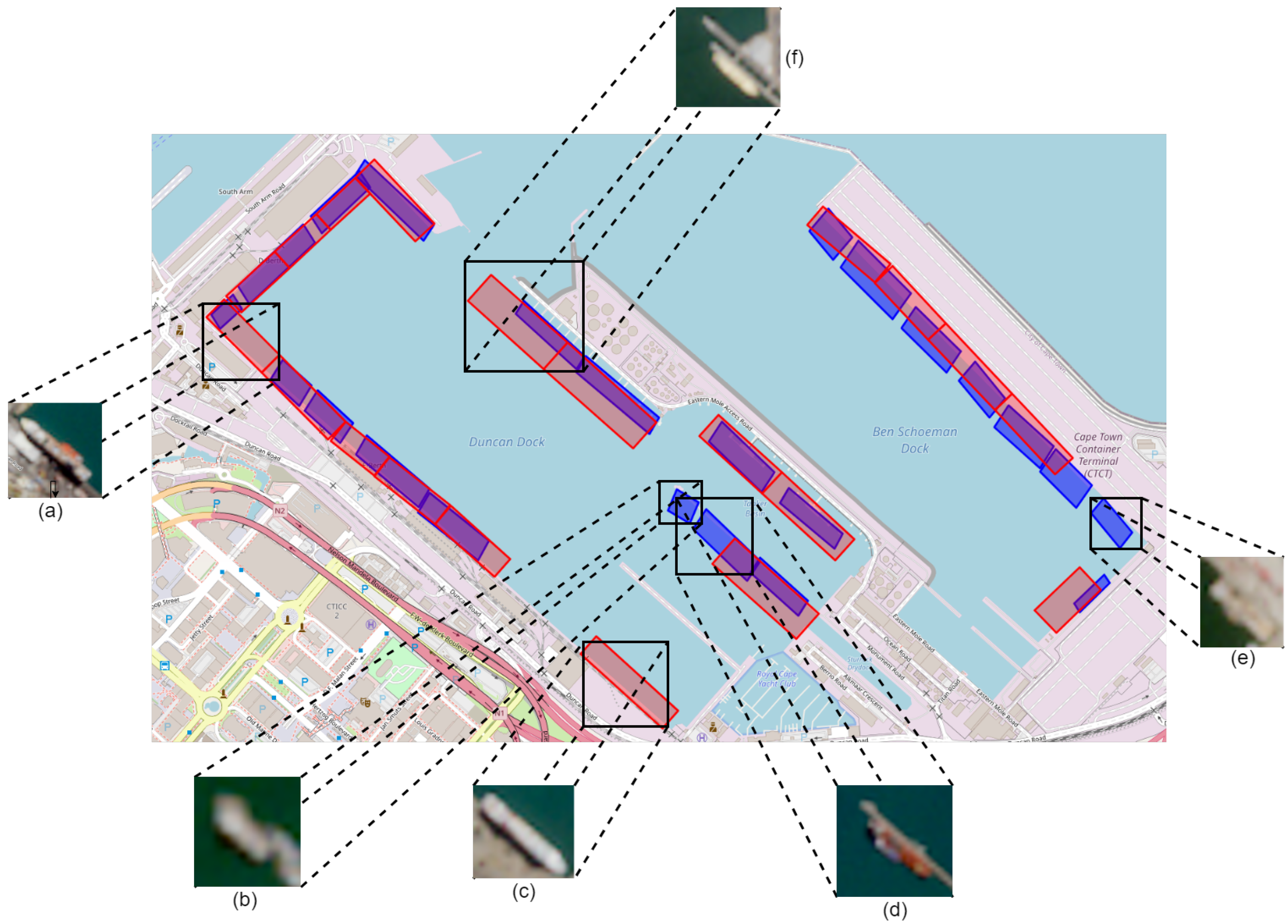

Port berths are designated locations within ports equipped with necessary facilities such as cranes and docking infrastructure (see Figure 1) that support essential maritime operations including cargo loading and unloading, refueling, and maintenance. Despite their critical role, many ports fail to document their location adequately, and existing documentation is often fraught with inaccuracies or omissions.

By accurately identifying and localizing port berths, we aim to fill these data gaps, ensuring that such vital information is both freely available and reliable. Importantly, port berth localization can enable AIS geofencing, i.e., AIS data overlaid on a set of berths to discern short-term and long-term patterns. Examples of applications include the generation of real-time and historical statistics, e.g., service time, the development of predictive data-driven models, and the use of data, statistics, and models by port authorities to make informed decisions regarding infrastructure investments, expansions (e.g., new terminals), or operational adjustments [5]. The shortage of complete and accurate port berth documentation motivates the development of automated berth localization methods. However, this problem remains challenging for several reasons: the inherent absence of reliable ground truth labels necessitates a fully unsupervised approach; AIS data are noisy, making it difficult to reliably distinguish stopped vessels from transient behaviors beyond simple speed-based criteria; accurately detecting port berths requires identifying collective spatial–temporal vessel patterns rather than single-vessel movements, inherently calling for hierarchical clustering approaches; and existing clustering methods typically demand extensive parameter fine-tuning, limiting their generalizability across diverse ports. Collectively, these factors underscore the complexity of developing robust, fully unsupervised, and universally applicable berth localization methods. Moreover, existing research addressing this problem remains scarce, as discussed in Section 1.

In this work, we devise an unsupervised method for optimizing data-driven models [6] and demonstrate its efficacy across various ports around the globe. By selecting a diverse set of ports (based on TEU), we ensure our sample encapsulates a wide range of port sizes and operational contexts, from small sea ports to some of the world’s largest shipping hubs (see Table 1). The promising results of our method across 11 ports underscore the proposed method’s adaptability and its potential applicability to ports globally. Our contributions are as follows:

Related Work

Vessel Stop Detection and Port Area Clustering. Identifying vessel stop episodes and berth locations from AIS data involves detecting meaningful spatial–temporal patterns. Wang and McArthur [14] and Nogueira et al. [15] exploited temporal and spatial gaps in GPS data to detect stop episodes and distinct movement patterns. Density-based methods, particularly DBSCAN, have proven effective in pinpointing stay points, as demonstrated by Hwang et al. [16] and Luo et al. [17]. Nevertheless, conventional DBSCAN faces scalability limitations, prompting advancements such as GriT-DBSCAN by Huang et al. [18], which introduced efficient grid-tree indexing, and the variant by Chen et al. [19], optimized for high-dimensional data by pruning unnecessary computations. Adaptive trajectory clustering methods were introduced by Tang et al. [20], who employed Douglas–Peucker and OPTICS algorithms, while Wang et al. [21] enhanced sequential trajectory clustering through structured pattern representations.

Spatial clustering methods explicitly tailored for maritime contexts have also been explored. Millefiori et al. [22] adapted Kernel Density Estimation within a map-reduce framework for defining port boundaries. Xiao et al. [23] combined DBSCAN and Gaussian Mixture Models (GMMs) to spatially cluster urban areas, and Yan et al. [24] integrated DBSCAN with Random Forests to identify vessel stopping information. The dynamic clustering method by Rehman and Belhaouari [25] avoided predefined cluster counts by dynamically adjusting granularity through iterative splitting and merging. Steenari et al. [26] specifically applied DBSCAN to berth localization, providing a useful comparative framework.

Unsupervised and Parameter-Free Clustering Methods. Acknowledging the challenges of parameter tuning, recent methodologies emphasize fully unsupervised, parameter-free clustering. Ansari et al. [27] provided an extensive review of such unsupervised approaches, including DBSCAN, OPTICS, and GMMs. End-to-end frameworks such as Neural Mixture Models with Expectation-Maximization by Tissera et al. [28], and Minimum Description Length-based spatial clustering by Kirkley [29], represent significant advances toward automated clustering without manual intervention. Additionally, Zhang et al. [30] utilized reinforcement learning to automate hyperparameter optimization in DBSCAN, reinforcing the potential of fully autonomous clustering methods.

Internal Validation Metrics for Unsupervised Clustering. Due to the absence of ground truth in berth localization, internal validation metrics are crucial. Chen et al. [31] and Wang et al. [32] employed the Kullback–Leibler divergence (KLD) to internally refine clustering outcomes. Punera and Ghosh [33] further validated KLD in consensus clustering frameworks, ensuring robust cluster structures from internal data distributions. Similarly, the Bhattacharyya distance (BD) was effectively applied by Lahlimi et al. [34] in hyperspectral band selection, and by You et al. [35] in speaker recognition, demonstrating the reliable unsupervised validation of clustering consistency.

Collectively, these works underscore the non-triviality of developing robust unsupervised, data-driven clustering methods, particularly in complex spatial–temporal contexts where ground truth is absent. Despite significant methodological advances, an application-specific method that is explicitly tailored and rigorously validated for port berth localization across ports of varying sizes and characteristics remains lacking.

The rest of the paper is organized as follows. In Section 2, we detail our method, including data preprocessing steps, spatial data augmentation strategy, model selection, and the use of GMMs for spatial clustering. Our experimental findings are detailed in Section 3. We conclude in Section 4, where we discuss our findings and contextualize them within the broader scope of port optimization.

2. Materials and Methods

2.1. AIS Data

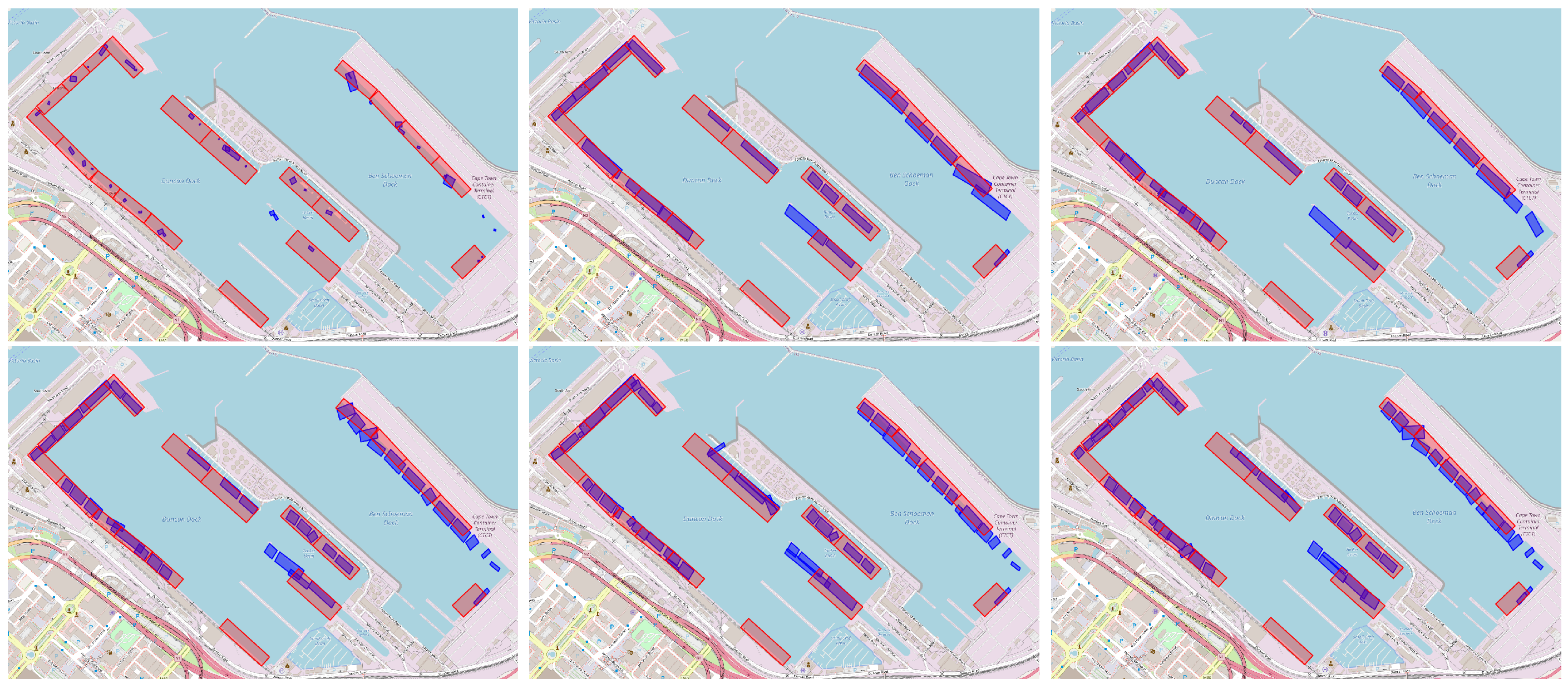

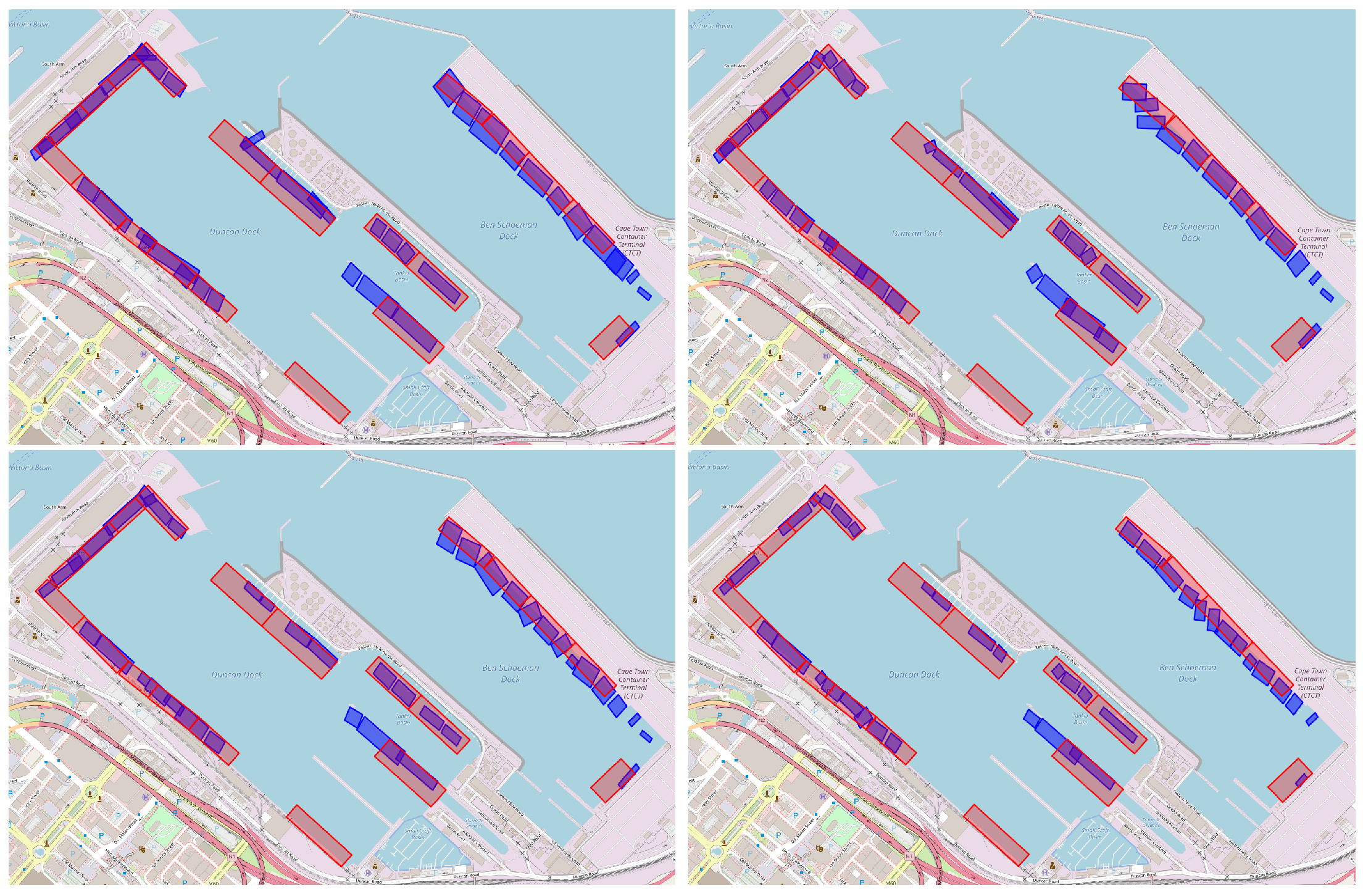

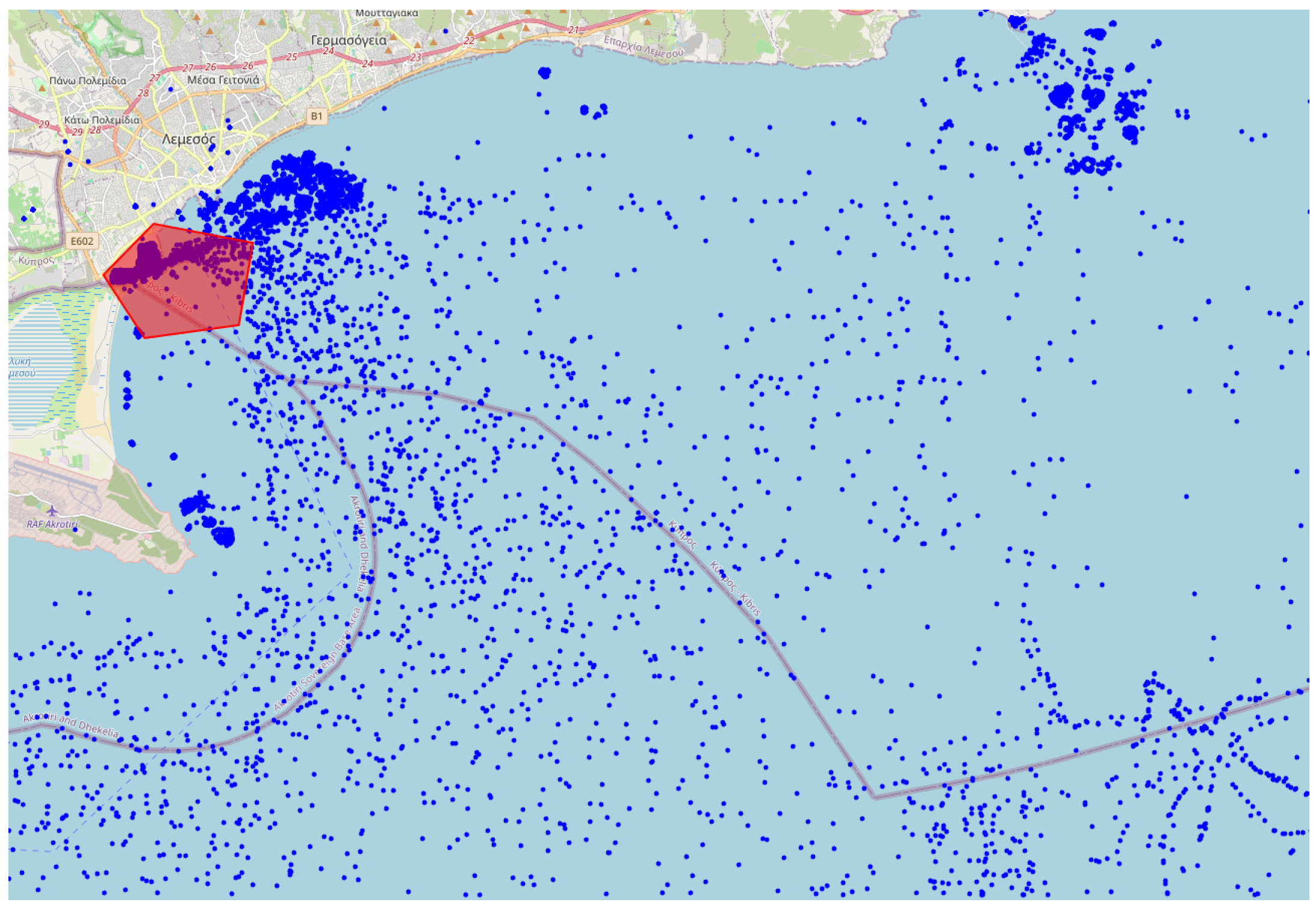

The AIS data utilized in this research were sourced using the Application Programming Interface (API) of AISStream.io, a platform that offers free access to terrestrial AIS data. Their network of AIS receiving stations, which varies in density globally, covers areas within approximately 200 km of the majority of the world’s coastlines. For a detailed visualization, a map of their AIS stations is available on their website. To receive AIS data from AISStream.io, one needs to define the region or regions of interest (ROIs) and, optionally, a list of vessels of interest based on Maritime Mobile Service Identities (MMSIs). The MMSI is a nine-digit number used for identifying vessels, ship stations, and coast stations in maritime communication and navigation systems. However, while an MMSI is linked to a single ship at a given time, it can be reassigned to different ships over time, and a ship might change its MMSI. Therefore, the MMSI is not a unique identifier, though it is used as an indicator for individual ships in this work. Finally, we do not utilize the vessel-specific filtering of the API. AIS data from the Cape Town and Limassol ports over a period of one month are shown in Figure 2 and Figure 3, respectively. The port-specific ROI is an area covering an entire port while avoiding adjacent waterways or nearby ports. An example of the Limassol ROI compared to all the data collected for that port is shown in Figure 3. To ensure a representative sample of ports of all sizes (based on TEU), the following sampling technique was employed. First, twelve port sizes in the range of 100 to

TEU were sampled using a uniform logarithmic distribution. Ports corresponding closely to these predetermined size categories were then selected (see

Table 1).

2.1.1. Period of Interest

We fixed the maximum period of interest (POI) at one month (1–31 October 2023), and collected AIS data across the ROIs. This setup reflects our scope: identifying port berths given up to one month of freely available AIS data. For the sensitivity analysis of the amount of data (discussed in Section 3), the POI varied. Specifically, we carried out four experiments with POIs that begin on the 1st of October and span three days, one week, two weeks, and the entire month, respectively.

The number of AIS messages received over a period of 1 month for each port is presented in Table 1 alongside each port’s TEU throughput. Almost no AIS messages were received for the port of Ambarli, which was therefore excluded.

2.1.2. Boundary Boxes for Port Berths

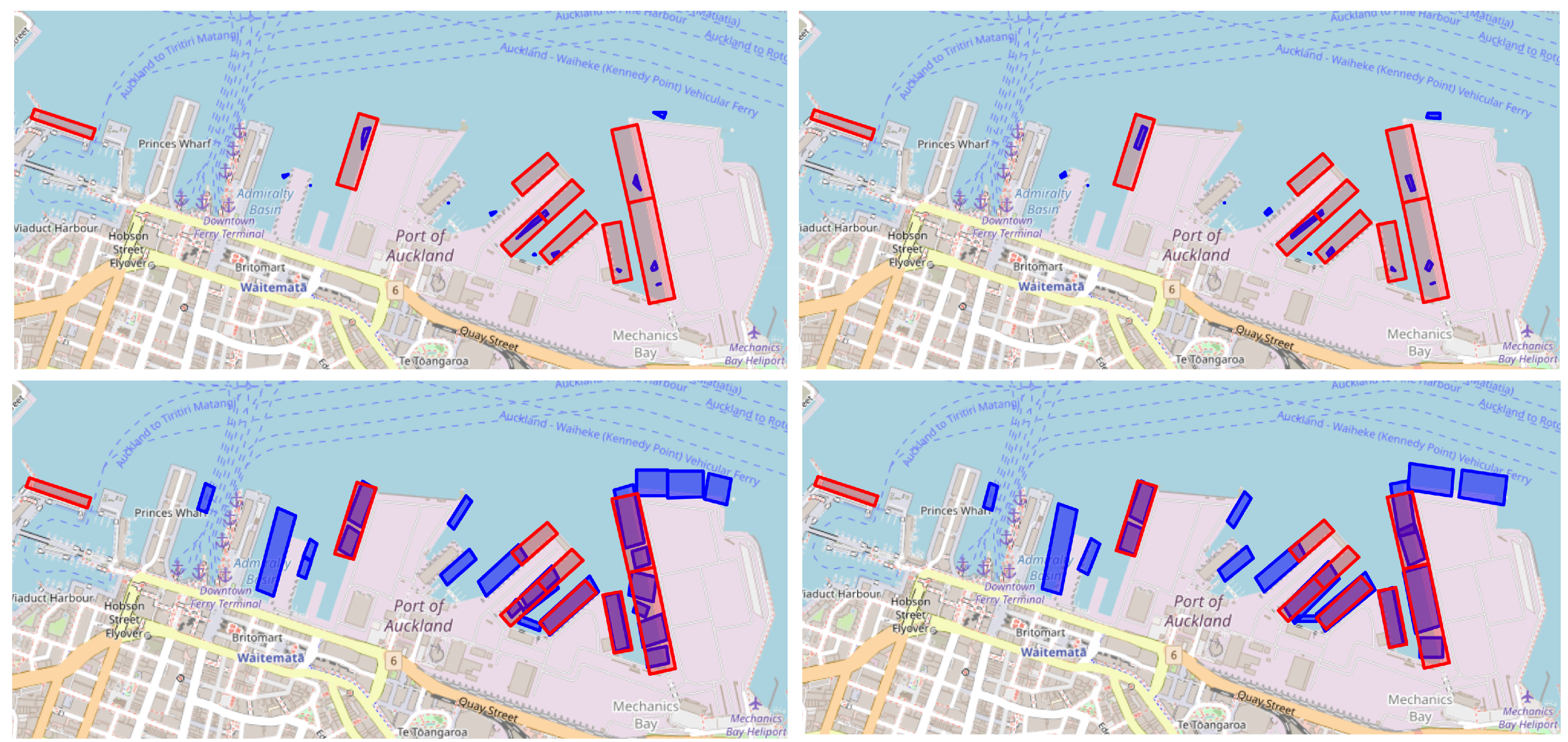

For qualitative model evaluation, rather than training, we collected port berth boundary boxes for the ports of interest. In particular, berth, terminal, and port area labels were obtained from the ShipNext website (https://shipnext.com/ accessed on 4 November 2025). The port berth areas are represented as rotated rectangles, as illustrated in Figure 4.

2.2. Methodology

2.2.1. Data Preprocessing

The preprocessing of the raw AIS data involves data cleaning, interpolation, and standardization. Data cleaning comprises three steps. First, the data are filtered by vessel type, so that only cargo and tanker ships remain, and by speed (<3 knots). Second, AIS records with either incomplete vessel dimensions or missing heading values are removed. Finally, for each vessel, consecutive messages indicating a substantial change in heading (>10 degrees) are removed, since such changes indicate either vessel movement or noisy or incorrectly recorded values.

Following the above, the AIS messages of each MMSI are resampled such that there is at most one AIS message per hour (the “interpolation” step). This is achieved by taking the last message of every hour, if available, for each vessel. An ablation study is carried out to determine the interpolation period, as discussed in Section 3.2. Finally, the latitude and longitude of all AIS messages are standardized to have a mean of 0 and a standard deviation (SD) of 1.
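The speed filter, hourly resampling, and standardization steps can be illustrated with a minimal sketch. It assumes that each AIS message is a dictionary with the hypothetical keys `mmsi`, `time`, `lat`, `lon`, and `sog` (speed over ground in knots); the vessel-type, dimension, and heading filters are omitted for brevity, and the population standard deviation is an assumption.

```python
from datetime import datetime
from statistics import mean, pstdev


def preprocess(messages, max_speed_knots=3.0):
    """Speed filter, hourly resampling, and standardization (illustrative only)."""
    # Keep slow-moving reports only.
    slow = [m for m in messages if m["sog"] < max_speed_knots]

    # Hourly resampling: keep the last message of every hour for each vessel.
    last_per_hour = {}
    for m in sorted(slow, key=lambda m: m["time"]):
        hour = m["time"].replace(minute=0, second=0, microsecond=0)
        last_per_hour[(m["mmsi"], hour)] = m  # later messages overwrite earlier ones
    kept = list(last_per_hour.values())
    if not kept:
        return []

    # Standardize latitude and longitude to mean 0 and SD 1.
    lats = [m["lat"] for m in kept]
    lons = [m["lon"] for m in kept]
    lat_mu, lon_mu = mean(lats), mean(lons)
    lat_sd = pstdev(lats) or 1.0  # guard against a zero SD
    lon_sd = pstdev(lons) or 1.0
    return [
        dict(m, lat_z=(m["lat"] - lat_mu) / lat_sd, lon_z=(m["lon"] - lon_mu) / lon_sd)
        for m in kept
    ]


example = [
    {"mmsi": 1, "time": datetime(2023, 10, 1, 10, 5), "lat": 34.0, "lon": 33.0, "sog": 0.1},
    {"mmsi": 1, "time": datetime(2023, 10, 1, 10, 50), "lat": 34.2, "lon": 33.2, "sog": 0.2},
    {"mmsi": 1, "time": datetime(2023, 10, 1, 10, 55), "lat": 35.0, "lon": 34.0, "sog": 9.0},
    {"mmsi": 1, "time": datetime(2023, 10, 1, 11, 10), "lat": 34.4, "lon": 33.4, "sog": 0.0},
]
cleaned = preprocess(example)
```

In this toy input, the fast report at 10:55 is dropped by the speed filter, so the 10:50 report is the one retained for the 10:00 hour.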

2.2.2. Data Split

For the purposes of hyperparameter tuning and evaluation (but not inference, see Section 2.4), the preprocessed AIS data for each port was split into two datasets. The splitting process involves the following steps:

Vessels are sorted in descending order based on the number of AIS messages they transmitted.

The sorted vessels are separated into two data sets by alternately assigning consecutive vessels to a different data set.

This methodology ensures that the resulting splits of the data have approximately equal numbers of AIS messages while maintaining vessel exclusivity within each split.
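The two splitting steps above can be sketched as follows. The function name and the input format (one MMSI per received message) are assumptions made for illustration.

```python
from collections import Counter


def split_vessels(mmsi_per_message):
    """Assign vessels alternately to two splits, most active vessels first."""
    counts = Counter(mmsi_per_message)
    # most_common() sorts vessels by message count in descending order.
    ranked = [mmsi for mmsi, _ in counts.most_common()]
    return set(ranked[0::2]), set(ranked[1::2])


messages = ["a"] * 5 + ["b"] * 4 + ["c"] * 3 + ["d"] * 1
split_1, split_2 = split_vessels(messages)
```

Because consecutive vessels in the ranking have similar message counts, alternating the assignment keeps the two splits roughly balanced while no vessel appears in both.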

2.2.3. DBSCAN

Density-Based Spatial Clustering of Applications with Noise (DBSCAN), first introduced by Ester et al. [36], is a non-parametric, density-based clustering algorithm, which groups points in a data set based on their proximity and the density of their surrounding points. DBSCAN is governed by two hyperparameters: $\varepsilon$, which defines the maximum radius of a neighborhood, and $\mathit{MinPts}$, which refers to the minimum number of points for a cluster to be formed.

In the present work, DBSCAN is applied to each vessel independently, clustering AIS data points based on vessel movement (or lack thereof). Since the AIS data of each vessel are reported hourly, intuitively, the algorithm creates a cluster in locations where the vessel reportedly stayed for at least $\mathit{MinPts}$ hours, as reported by AIS messages, with each reported location being within a distance of $\varepsilon$ from one another. Note that haversine distance was used to account for differences in distances between points at different latitudes. DBSCAN effectively identifies and filters out outliers; in our case, these can be AIS messages with vessel movement or insufficient duration of stay. Such points are shown as black dots in Figure 5a. We optimize these two hyperparameters using the Tree-structured Parzen Estimator (TPE), with a log uniform distribution of real numbers between 5 and 70 as the prior for $\varepsilon$, and a uniform distribution of integers between 2 and 25 as the prior for $\mathit{MinPts}$.
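To make the per-vessel filtering concrete, the following is a compact, unoptimized DBSCAN sketch with haversine distance. It is not the implementation used in this work; in particular, expressing the neighborhood radius in meters (`eps_m`) is an assumption, as is the mean Earth radius constant.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (assumed constant)


def haversine_m(p, q):
    """Great-circle distance in meters between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (p[0], p[1], q[0], q[1]))
    a = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def dbscan(points, eps_m, min_pts):
    """Return one label per point: 0, 1, ... for clusters and -1 for noise."""
    n = len(points)
    # Each point counts as its own neighbor (distance 0).
    neighbors = [[j for j in range(n) if haversine_m(points[i], points[j]) <= eps_m]
                 for i in range(n)]
    labels = [None] * n
    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point reached from a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_pts:
                queue.extend(neighbors[j])  # j is a core point: keep expanding
    return labels


# Hourly reports of one vessel: four near-identical positions and one distant report.
track = [(34.65, 33.0), (34.65001, 33.0), (34.65, 33.00001),
         (34.65001, 33.00001), (34.66, 33.0)]
labels = dbscan(track, eps_m=50.0, min_pts=3)
```

Points labeled -1 correspond to the reports that would be discarded as movement or as stays of insufficient duration.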

2.2.4. Spatial Data Augmentation

Following DBSCAN-based filtering, for each remaining AIS message, we generate additional points (10 during training, 20 during evaluation) by sampling from a uniform distribution within the area occupied by the vessel. This area is determined based on the vessel’s dimensions and heading, which are provided in the AIS data. The dimensions of the vessel are captured in four fields: “Dimension A”, “Dimension B”, “Dimension C”, and “Dimension D”. These fields report the distances from the reference point of the reported position to the ship’s bow, stern, port side, and starboard side, respectively. Based on these dimensions and the reported heading of the vessel, further points are generated as shown in Figure 6.
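A minimal sketch of this augmentation is given below. It assumes that the heading is measured in degrees clockwise from true north, and it converts meter offsets to degrees with a local flat-Earth approximation (about 111,320 m per degree of latitude); both the function names and this conversion are illustrative assumptions.

```python
import math
import random

M_PER_DEG_LAT = 111_320.0  # approximate meters per degree of latitude (assumed)


def sample_hull_offsets(dim_a, dim_b, dim_c, dim_d, heading_deg, n, rng):
    """Uniform (east, north) offsets in meters within the vessel's rectangle."""
    theta = math.radians(heading_deg)
    offsets = []
    for _ in range(n):
        along = rng.uniform(-dim_b, dim_a)   # stern (negative) to bow (positive)
        across = rng.uniform(-dim_c, dim_d)  # port (negative) to starboard (positive)
        east = along * math.sin(theta) + across * math.cos(theta)
        north = along * math.cos(theta) - across * math.sin(theta)
        offsets.append((east, north))
    return offsets


def augment(lat, lon, dims, heading_deg, n=10, seed=0):
    """Generate n (lat, lon) points inside the footprint of one AIS report."""
    rng = random.Random(seed)
    m_per_deg_lon = M_PER_DEG_LAT * math.cos(math.radians(lat))
    return [
        (lat + north / M_PER_DEG_LAT, lon + east / m_per_deg_lon)
        for east, north in sample_hull_offsets(*dims, heading_deg, n, rng)
    ]


points = augment(34.65, 33.0, dims=(100.0, 50.0, 10.0, 20.0), heading_deg=90.0, n=10)
```

With a heading of 0 degrees the bow points north, so the generated points span `dim_a + dim_b` meters in the north-south direction and `dim_c + dim_d` meters east-west.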

2.2.5. Geohash Encoding

Following the above, the spatial data of the AIS messages (i.e., longitude, latitude) can be encoded as geohashes—a practice that aims to declutter the dataset while significantly improving the efficiency of model training and inference. Guided by domain knowledge on cargo/tanker berth sizes and typical berth spacing, we set geohash precision to 9, which bins the data into

m ×

m squares, to declutter AIS points without limiting spatial resolution. We report on the effectiveness of our proposed method with and without geohash encoding in

Section 3.
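For readers unfamiliar with geohashes, the sketch below shows a standard geohash encoder, a helper that estimates the cell size for a given precision, and the decluttering step (keeping one entry per occupied cell). The helper names and the meters-per-degree constant are assumptions; this is not necessarily the library used in this work.

```python
import math

BASE32 = "0123456789bcdefghjkmnpqrstuvwxyz"


def geohash_encode(lat, lon, precision=9):
    """Encode a (lat, lon) pair in degrees as a geohash string."""
    lat_bounds, lon_bounds = [-90.0, 90.0], [-180.0, 180.0]
    chars, value, n_bits, use_lon = [], 0, 0, True  # bits alternate, longitude first
    while len(chars) < precision:
        bounds, coord = (lon_bounds, lon) if use_lon else (lat_bounds, lat)
        mid = (bounds[0] + bounds[1]) / 2
        if coord >= mid:
            value = value * 2 + 1
            bounds[0] = mid
        else:
            value = value * 2
            bounds[1] = mid
        use_lon = not use_lon
        n_bits += 1
        if n_bits == 5:  # every 5 bits form one base-32 character
            chars.append(BASE32[value])
            value, n_bits = 0, 0
    return "".join(chars)


def geohash_cell_size_m(precision, lat=0.0, m_per_deg=111_320.0):
    """Approximate (width, height) of a geohash cell in meters at a given latitude."""
    total_bits = 5 * precision
    lon_bits, lat_bits = (total_bits + 1) // 2, total_bits // 2
    width = 360.0 / 2 ** lon_bits * m_per_deg * math.cos(math.radians(lat))
    height = 180.0 / 2 ** lat_bits * m_per_deg
    return width, height


def occupied_cells(points, precision=9):
    """Declutter: collapse all points that share a cell into a single geohash."""
    return {geohash_encode(lat, lon, precision) for lat, lon in points}


width_m, height_m = geohash_cell_size_m(9)
cells = occupied_cells([(57.64911, 10.40744), (57.64911, 10.40744), (34.65, 33.0)])
```

Under these assumptions, a precision-9 cell is a few meters on a side at the equator and becomes narrower in the east-west direction at higher latitudes.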

2.2.6. Gaussian Mixture Model

A GMM is a probabilistic model that represents the data as a mixture of several Gaussian distributions, each of which can be thought of as a cluster [11]. GMM does not assign data points to single clusters though; instead, it assigns a probability distribution across all Gaussian components for each data point. One important hyperparameter in GMMs is the number of components (“ncomponents”), which dictates the number of Gaussian distributions underlying the model. In our context, the Gaussian distributions will be interpreted as representing potential berths in a port (illustrated in Figure 5b). Therefore, the value of “ncomponents” determines how many distinct berths (or other docking areas) a GMM will attempt to model. To determine the optimal “ncomponents”, we use the Minimum Description Length (MDL) criterion [9], which can be viewed as a formalization of Occam’s razor [10]. It provides a principled approach of balancing model complexity against explanatory power in light of the observed data [10]. Succinctly, MDL can be viewed as an approximation to Bayesian model selection, but without the requirement of an explicit prior. Instead of assigning subjective priors, it uses universal coding principles to measure model complexity objectively. Here, the description length is the length, in bits, needed to encode both the parameters of a GMM, and the data given the GMM [10]. Formally:

$$L(M, D) = L(M) + L(D \mid M), \qquad (1)$$

where $L(M)$ and $L(D \mid M)$ are the description lengths corresponding to the model $M$ and the data $D$ (given $M$), respectively. The description length can be further elaborated as follows:

$$L(M, D) = -\sum_{i=1}^{N} \log p(x_i \mid M) + \frac{k}{2} \log N, \qquad (2)$$

where $x_i$ are individual data points from $D$, $N$ their count, and $k$ the number of GMM parameters [10]. The number of parameters $k$ of a GMM with $K$ “ncomponents” can be written as follows:

$$k = K \left( d + \frac{d(d+1)}{2} \right) + (K - 1). \qquad (3)$$

Here, $K$ is the number of mixture components, i.e., number of Gaussians in the GMM, and $d$ is the number of dimensions of the (input) data (in our case, it is 2). Intuitively, Equation (3) accounts for the mean, covariance matrix, and weight parameters that come with every Gaussian.

This MDL-based approach to tuning “ncomponents” balances model complexity with goodness of fit, helping to avoid underfitting (too few berths modeled) or overfitting (e.g., modeling noise as berths). The process is as follows:

We define a range of possible “ncomponents” values: (3, 50) for smaller ports and (30, 250) for larger ports (Singapore and Antwerp in our case).

We fit a GMM with full covariance matrix, 2 restarts, and tolerance for each “ncomponents” value and for each data split.

We calculate the MDL for each fitted GMM on each data split. The “ncomponents” value yielding the lowest average MDL across the two data splits is selected.
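The selection procedure above can be sketched as follows, assuming that the log-likelihood of each fitted GMM on each data split has already been computed. The parameter count assumes full covariance matrices and mixture weights that sum to one, natural logarithms are used (so lengths are in nats rather than bits), and all function names are hypothetical.

```python
import math


def gmm_param_count(n_components, n_dims=2):
    """Free parameters of a full-covariance GMM (weights assumed to sum to one)."""
    per_component = n_dims + n_dims * (n_dims + 1) // 2  # mean + symmetric covariance
    return n_components * per_component + (n_components - 1)


def description_length(log_likelihood, n_params, n_points):
    """Two-part description length: data cost plus parameter cost."""
    return -log_likelihood + 0.5 * n_params * math.log(n_points)


def select_n_components(loglik_by_k, n_points_per_split, n_dims=2):
    """Pick the component count with the lowest MDL averaged over the splits.

    loglik_by_k maps each candidate count to one log-likelihood per data split.
    """
    best_k, best_score = None, math.inf
    for k, logliks in loglik_by_k.items():
        n_params = gmm_param_count(k, n_dims)
        scores = [description_length(ll, n_params, n)
                  for ll, n in zip(logliks, n_points_per_split)]
        average = sum(scores) / len(scores)
        if average < best_score:
            best_k, best_score = k, average
    return best_k


# Toy log-likelihoods for three candidate counts on two splits of 1000 points each.
toy = {3: [-500.0, -520.0], 4: [-480.0, -490.0], 5: [-479.0, -489.0]}
chosen = select_n_components(toy, n_points_per_split=[1000, 1000])
```

In the toy input, moving from 4 to 5 components barely improves the fit, so the extra parameter cost outweighs the gain and the smaller model is preferred.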

2.2.7. Hyperparameter Tuning Based on Kullback–Leibler Divergence

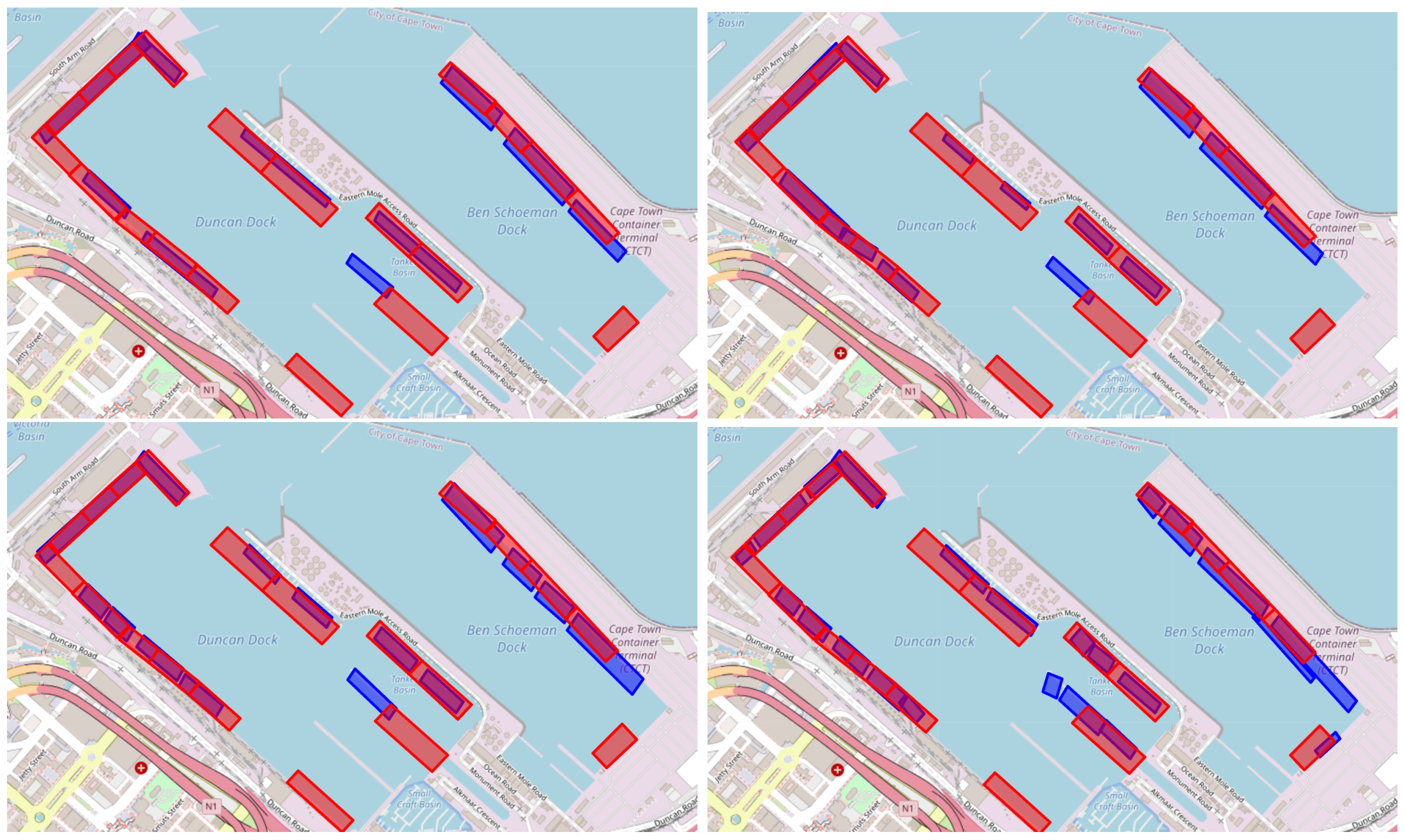

The following summarizes our hyperparameter optimization process:

We use the Tree-structured Parzen Estimator (TPE) to search the space of DBSCAN’s hyperparameters ($\varepsilon$ and $\mathit{MinPts}$) for 100 trials, with the first 30 serving as a warm start.

At every trial, we fit two GMMs, one for each augmented data split (as previously defined), using the optimal “ncomponents” value (determined as discussed in Section 2.2.6).

We assess the similarity between the two GMMs using the symmetric KLD, as described in the equations that follow.

Following the results on 100 trials, the trial yielding the lowest KLD score is selected as the best configuration (i.e., optimal $\varepsilon$ and $\mathit{MinPts}$ for DBSCAN and optimal “ncomponents” based on the MDL criterion) for that port.

We use Optuna for hyperparameter optimization [37]. The KLD [7] from distribution $p$ to distribution $q$ is defined as follows:

$$D_{\mathrm{KL}}(p \,\|\, q) = \int p(x) \log \frac{p(x)}{q(x)} \, dx. \qquad (4)$$

To ensure symmetry in our comparison, we use a symmetric variant of KLD which we define as:

where $p$ and $q$ in our case represent the underlying distributions of the GMMs fitted on different data splits.

The symmetric KLD quantifies the similarity between GMMs trained on different data splits, reflecting how well the chosen hyperparameters (of both DBSCAN and GMM) generalize across these complementary subsets of the data. Intuitively, a lower KLD indicates that the GMMs trained on different data splits identify a similar set of berths, suggesting that the model’s representation of port structure is consistent and robust across subsets of the data. The optimal hyperparameter configurations for all ports, automatically selected based on the described process, are listed in Appendix B.
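Since the KLD between two mixtures has no closed form in general, one common way to compute such a score is Monte Carlo sampling. The sketch below is an illustration under stated assumptions rather than the exact estimator used in this work: it takes the symmetric variant to be the sum of both directions (an averaged form would differ only by a constant factor and give the same ranking of trials), and it uses one-dimensional Gaussians as stand-ins for the fitted GMMs.

```python
import math
import random


def kl_monte_carlo(sample_p, logpdf_p, logpdf_q, n, rng):
    """Estimate KL(p || q) as the mean of log p(x) - log q(x) for x drawn from p."""
    total = 0.0
    for _ in range(n):
        x = sample_p(rng)
        total += logpdf_p(x) - logpdf_q(x)
    return total / n


def symmetric_kl(sample_p, logpdf_p, sample_q, logpdf_q, n=20_000, seed=0):
    """Symmetric score, assumed here to be KL(p || q) + KL(q || p)."""
    rng = random.Random(seed)
    return (kl_monte_carlo(sample_p, logpdf_p, logpdf_q, n, rng)
            + kl_monte_carlo(sample_q, logpdf_q, logpdf_p, n, rng))


def gaussian_1d(mu, sigma):
    """Toy density standing in for a fitted GMM: returns (sampler, log-density)."""
    def sample(rng):
        return rng.gauss(mu, sigma)

    def logpdf(x):
        return -0.5 * ((x - mu) / sigma) ** 2 - math.log(sigma * math.sqrt(2 * math.pi))

    return sample, logpdf


p_sample, p_logpdf = gaussian_1d(0.0, 1.0)
q_sample, q_logpdf = gaussian_1d(1.0, 1.0)
score = symmetric_kl(p_sample, p_logpdf, q_sample, q_logpdf)
```

For these two unit-variance Gaussians each direction has an exact KLD of 0.5, so the estimate should land close to 1; a score near 0 would indicate that the two models describe nearly the same distribution.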

2.3. Evaluation Approach

During evaluation, to assess the consistency and robustness of our models across different data splits, we employ the BD rather than the KLD. For hyperparameter tuning, KLD was more suitable as it more heavily penalizes inconsistencies. In contrast, for evaluation, the BD provides a more balanced assessment (especially since true ground truth is lacking), measuring distribution similarity without overly penalizing minor variations between GMMs trained on different data splits. The evaluation process includes the following steps:

Train GMMs on each of the two augmented data splits (generating 20 points rather than 10) using tuned hyperparameters for both DBSCAN and GMM. GMM hyperparameters “tolerance” and number of restarts are set to and 5 respectively.

Uniformly sample points from the port area.

Calculate the probability of these points under each of the two GMMs.

Use these probabilities to compute the Bhattacharyya coefficient.

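The Bhattacharyya coefficient is defined as follows:

$$\mathrm{BC}(p, q) = \int \sqrt{p(x)\, q(x)} \, dx, \qquad (6)$$

where $p(x)$ and $q(x)$ are the values of the probability density functions (PDFs) evaluated at the point $x$ for the two GMMs, respectively. To estimate this coefficient over the entire port area, we use Monte Carlo integration with a uniform distribution:

$$\mathrm{BC}(p, q) \approx \frac{A}{n} \sum_{i=1}^{n} \sqrt{p(x_i)\, q(x_i)}, \qquad (7)$$

where $A$ is the total area of the port and $x_1, \dots, x_n$ are the uniformly sampled points. Finally, we calculate the BD by taking the negative logarithm of the coefficient:

$$\mathrm{BD}(p, q) = -\ln \mathrm{BC}(p, q). \qquad (8)$$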

Intuitively, a lower BD indicates that the two GMMs assign similar probabilities to the same areas within the port, suggesting that our model consistently identifies similar berth structures across different data splits. For the Steenari et al. method [26], which produces non-probabilistic clusters, we assign a probability of 1 if a sample point falls within a cluster and 0 otherwise, allowing for comparison with our probabilistic approach. To obtain a robust estimate of performance, we calculate the averaged BD over 200 Monte Carlo reruns.
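The Monte Carlo estimate of the BD can be sketched as follows. The sketch assumes a rectangular port area given as a bounding box and two density callables (`pdf_p`, `pdf_q`) standing in for the fitted GMMs; these names and the single-run structure are illustrative assumptions.

```python
import math
import random


def bhattacharyya_distance(pdf_p, pdf_q, bbox, n=10_000, seed=0):
    """Monte Carlo BD between two densities over a rectangular area."""
    (x_min, x_max), (y_min, y_max) = bbox
    area = (x_max - x_min) * (y_max - y_min)
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        point = (rng.uniform(x_min, x_max), rng.uniform(y_min, y_max))
        total += math.sqrt(pdf_p(point) * pdf_q(point))
    coefficient = area * total / n  # Monte Carlo estimate of the integral
    if coefficient == 0.0:
        return math.inf  # no overlap was observed between the two densities
    return -math.log(coefficient)


box = ((0.0, 2.0), (0.0, 3.0))


def uniform_pdf(point):
    # Uniform density over the whole box (area 6), used as a sanity check.
    return 1.0 / 6.0


def left_half_pdf(point):
    return 1.0 / 3.0 if point[0] < 1.0 else 0.0


def right_half_pdf(point):
    return 1.0 / 3.0 if point[0] >= 1.0 else 0.0


same = bhattacharyya_distance(uniform_pdf, uniform_pdf, box)
disjoint = bhattacharyya_distance(left_half_pdf, right_half_pdf, box)
```

Identical densities give a coefficient of 1 and hence a BD of 0, whereas densities with disjoint support give a coefficient of 0 and an infinite BD. Averaging over reruns amounts to calling the function with different seeds.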

2.4. Port Berth Localization

To localize port berths, the entire data set is used alongside the optimal hyperparameter configuration of both the GMM and DBSCAN. Moreover, during augmentation, we generate 20 points rather than 10. Finally, the hyperparameters of the GMM, “tolerance” and number of restarts, are set to and 5 respectively. To generate boundary boxes, the augmented AIS points that fall in each component of the final GMM (of each port) are enclosed by a rotated rectangle, representing the area of the localized port berth. The rotated rectangle is calculated by finding the smallest possible rectangle that can contain all points in a cluster. To achieve this, we calculate the convex hull of the cluster points and then evaluate the bounding rectangles at different angles formed by the edges of the hull. The rectangle with the smallest area is selected as the final bounding box of each Gaussian component.
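The rotated-rectangle step can be sketched as follows, assuming that the cluster points are given in planar coordinates (e.g., after projection). The hull is computed with Andrew's monotone chain algorithm, which is a choice made for this illustration, and the function names are hypothetical.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def min_area_rectangle(points):
    """Return (area, corners) of the smallest rectangle aligned with a hull edge."""
    hull = convex_hull(points)
    best = None
    for i in range(len(hull)):
        (x1, y1), (x2, y2) = hull[i], hull[(i + 1) % len(hull)]
        angle = math.atan2(y2 - y1, x2 - x1)
        c, s = math.cos(angle), math.sin(angle)
        # Rotate the hull by -angle so that the current edge is axis-aligned.
        xs = [x * c + y * s for x, y in hull]
        ys = [-x * s + y * c for x, y in hull]
        area = (max(xs) - min(xs)) * (max(ys) - min(ys))
        if best is None or area < best[0]:
            box = [(min(xs), min(ys)), (max(xs), min(ys)),
                   (max(xs), max(ys)), (min(xs), max(ys))]
            # Rotate the box corners back into the original frame.
            corners = [(u * c - v * s, u * s + v * c) for u, v in box]
            best = (area, corners)
    return best


def rotate(point, degrees):
    t = math.radians(degrees)
    return (point[0] * math.cos(t) - point[1] * math.sin(t),
            point[0] * math.sin(t) + point[1] * math.cos(t))


# A 2 x 1 rectangle (plus one interior point) rotated by 30 degrees.
cluster = [rotate(p, 30.0) for p in [(0, 0), (2, 0), (2, 1), (0, 1), (1, 0.5)]]
area, corners = min_area_rectangle(cluster)
```

Only orientations aligned with a hull edge need to be checked, because a minimum-area enclosing rectangle always has one side collinear with an edge of the convex hull.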

2.5. Use of Generative AI Tools

During the preparation of this manuscript, the authors used a generative AI tool (OpenAI ChatGPT, GPT-4) to improve the clarity and phrasing of certain text passages. The tool was not used to generate original research content, study design, data, or analysis. After using the tool, the authors carefully reviewed and edited the output to ensure accuracy and compliance with the intended meaning. The final responsibility for all content rests solely with the authors.

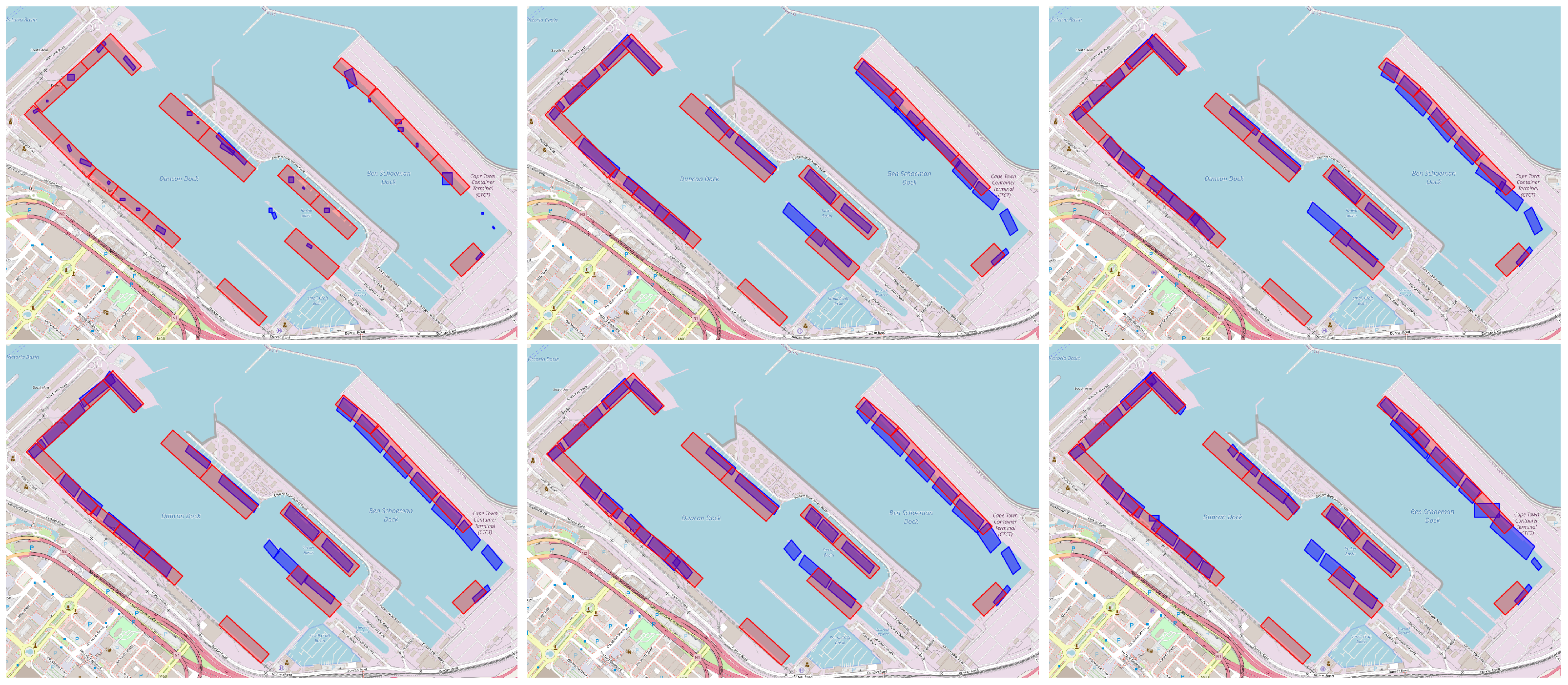

4. Discussion

In this work, we presented an unsupervised framework for port berth localization, evaluating two variants—one employing geohash encoding and one without—across a diverse set of 11 ports worldwide. Our results demonstrate a significant advancement over existing approaches in both quantitative and qualitative terms. By comparing our model predictions against satellite imagery and existing berth labels, we highlighted the limitations and inconsistencies in the current documentation. As presented, our models were able to identify berths that were overlooked or inaccurately represented by publicly available labels. Furthermore, the broad evaluation, encompassing ports of varying sizes and operational contexts, attests to the adaptability and generalizability of our approach.

A key factor in the success of our method lies in several novel methodological choices. First, the introduction of a spatial data augmentation strategy, guided by vessel dimensions and headings, enriched the model’s spatial representation and contributed to more coherent berth delineations. Second, our use of a KLD-based score, coupled with Bayesian optimization and the Minimum Description Length (MDL) principle, facilitated a principled and data-driven approach to hyperparameter tuning and model selection. Finally, the post-processing procedure, which transforms the probabilistic outputs of GMMs into polygonal berth boundaries, enabled a more intuitive and practical interpretation of the results. Each of these components, when combined, provided a robust and flexible framework capable of delivering reliable berth localization results across diverse maritime environments.

Our ablation studies further elucidated the factors influencing berth localization performance. Increasing the observation period (e.g., from a few days to one month) consistently yielded more stable and accurate GMM outputs, confirming the intuitive notion that more AIS messages provide a firmer statistical basis for identifying berths. Likewise, introducing spatial augmentation significantly enhanced clustering consistency, though the marginal gains diminished as the number of generated points increased, guiding us toward a balanced configuration. Setting the interpolation interval to one hour provided a balance between computational efficiency and performance. Notably, these adjustments benefited the geohash-enabled variant more consistently than its non-geohash counterpart, underscoring the advantages of encoding spatial information into geohashes. While non-geohash models still improved through augmentation and tuning, their gains were not as pronounced, reinforcing the conclusion that geohash encoding provides a more robust and reliable foundation for data-driven berth localization. Beyond accuracy, the geohash pipeline is more computationally efficient, reducing average end-to-end runtime from to min across the 11 ports (mean speedup ; median ; range –).

Beyond the empirical gains we reported, our work offers three concrete strengths that we believe the pattern recognition community can directly benefit from: (i) a fully unsupervised, end-to-end pipeline whose hyperparameters are selected by internal, information-theoretic criteria (KL/MDL) rather than labels—making it deployable at any port with sufficient AIS data; (ii) a hierarchical, two-stage design (ship-level DBSCAN followed by area-level GMM) that explicitly separates denoising/episode detection from berth inference, which others can reuse in similarly noisy spatial–temporal problems; and (iii) a distributional, model–model validation protocol (BD/KLD) that can act as a general recipe for evaluating clustering stability when ground truth is unavailable.

Despite these advances, some limitations remain, offering fertile ground for future investigation. Currently, our approach focuses primarily on cargo and tanker vessels; extending coverage to other vessel types, such as passenger or fishing ships, could broaden the applicability of our method. While the geohash-enabled variant consistently demonstrated superior performance, some of our methodological choices and hyperparameter configurations (e.g., interpolation period) were designed to be generally effective across a diverse set of ports, potentially biasing the outcome toward a “one-size-fits-all” solution. Future work could tailor these configurations specifically to each variant and port, potentially revealing scenarios where the non-geohash variant might excel or reducing the need for certain preprocessing steps. Additionally, while our method leverages freely available terrestrial AIS data, regions with sparse coverage or data quality issues (e.g., Port of Ambarli) may pose challenges to the application of our method.

By bridging the gap between raw AIS data and port berth localization, this work provides a strong foundation for more informed decision-making within maritime logistics and port management. As global data accessibility continues to improve, our framework’s adaptability and scalability position it as a valuable tool for stakeholders aiming to optimize port operations. Ultimately, advances in unsupervised berth localization can pave the way for more agile, data-driven maritime strategies and more resilient global trade networks.

Future Directions

Future research directions aimed at enhancing the method’s effectiveness and reliability include (i) extending the POI to multi-month/seasonal windows to capture intermittently used berths; (ii) incorporating multi-modal weak supervision (Sentinel-2/SAR imagery and third-party berth polygons) for semi/weakly supervised outline refinement; (iii) broadening evaluation across additional ports and vessel classes; and (iv) developing task-specific variants, e.g., ship-type/subtype–specific models and hotspot mapping beyond ports.