One-Dimensional Convolutional Neural Network for Object Recognition Through Electromagnetic Backscattering in the Frequency Domain

Abstract

1. Introduction

- Use of a 1D CNN model trained on frequency-domain backscattering responses for object detection and recognition, whereas most of the existing literature focuses on image-based CNNs.

- Design of an experimental framework to collect datasets from multiple realizations of the objects in each considered class, which represents a new challenge with respect to similar previous works.

- Investigation of the reliability of electromagnetic-based recognition as a complementary or alternative approach to vision-based systems.

- Analysis of the impact of acquisition settings on recognition accuracy, highlighting trade-offs between performance and processing time.

2. Background on Object Recognition

| Type | Ref. | Detection/Recognition | Notes |
|---|---|---|---|
| Image-Based | [3] | Detection, Recognition | Deep learning for object detection, semantic segmentation, and action recognition. |
| Image-Based | [4] | Detection | Camera-based detection and tracking for UAVs. |
| Image-Based | [1] | Recognition | Review of deep learning-based OR algorithms. |
| Image-Based | [5] | Recognition | OR with a voice assistant for visually impaired individuals. |
| Image-Based | [6] | Detection | Review of object detection techniques using images/videos with DL methods. |
| Image-Based | [7] | Detection | Deep learning methods for detecting objects in images. |
| Image-Based | [8] | Recognition | Overview of brain-inspired models for visual OR. |
| EM Wave-Based | [10,23,25] | Detection and Recognition | Review of (deep learning) techniques applied to radar signals for target detection/recognition. |
| EM Wave-Based | [16,17] | Recognition | Signal processing on UWB radar signals for OR. |
| EM Wave-Based | [21,22] | Recognition | Terahertz optical machine learning to recognize hidden objects. |
| EM Wave-Based | [27,28,29,30,31] | Recognition | Radar-based OR through high-resolution range profiles. |
| EM Wave-Based | [32] | Recognition | Moving target classification using 1D CNN based on Doppler spectrogram. |
| EM Wave-Based | [33] | Recognition | Microwave-based OR in the 2–8 GHz range. |
| EM Wave-Based | [34] | Recognition | Automotive radar for millimeter-wave OR. |
| EM Wave-Based | [24] | Detection | GPR application to detect buried explosive objects. |
| EM Wave-Based | [2] | Characterization | GPR application to obtain some features of buried objects. |

3. Experimental Framework

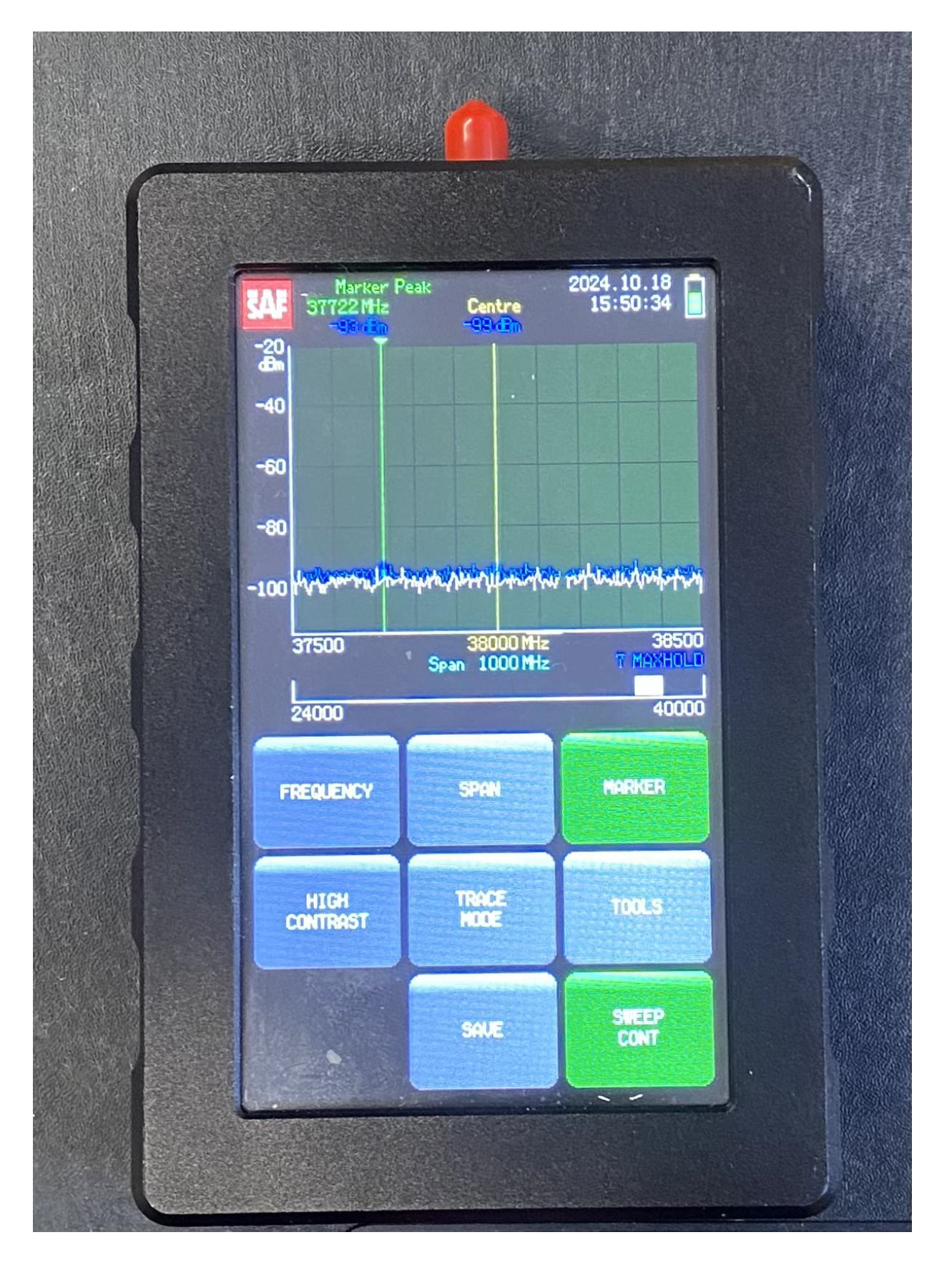

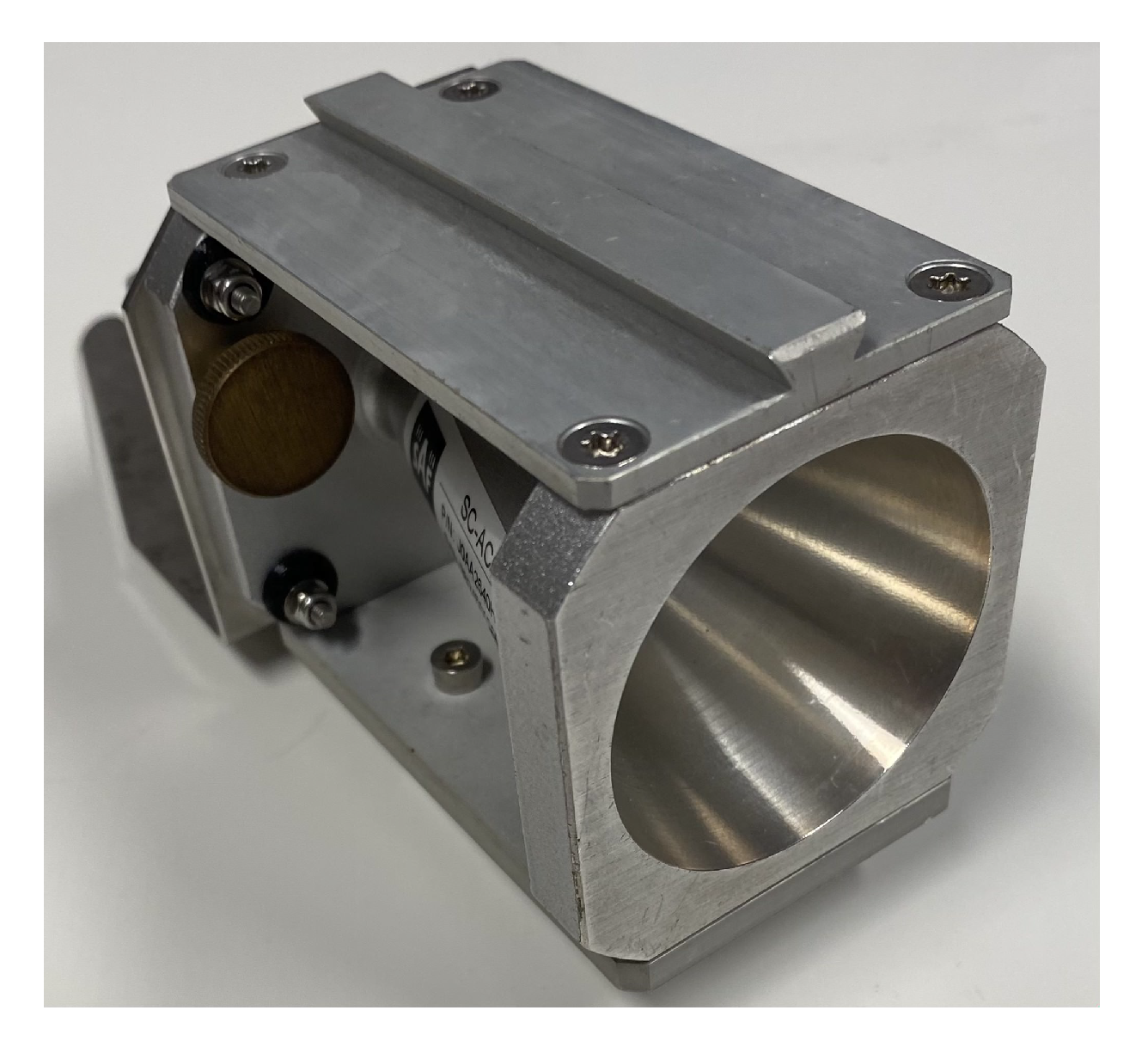

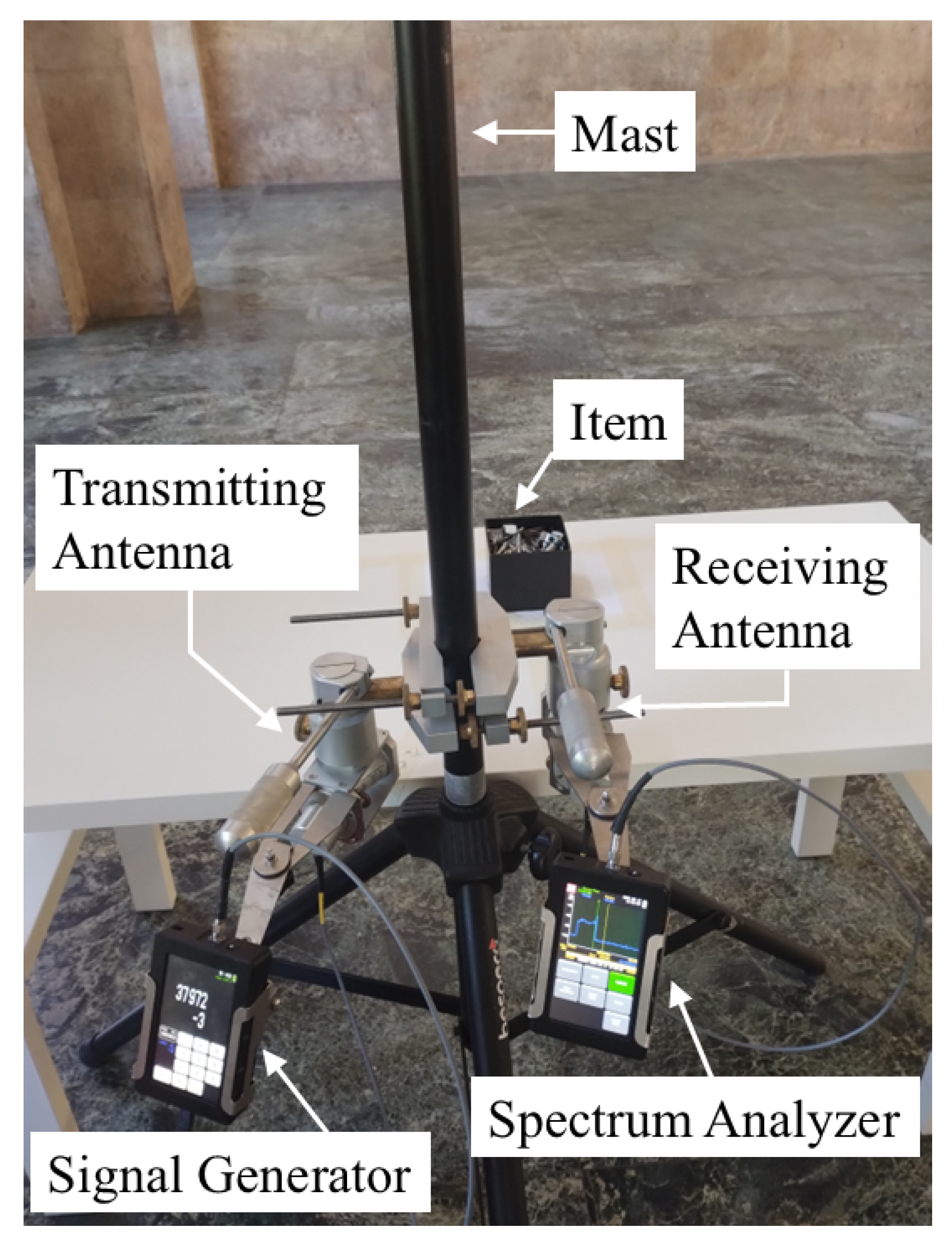

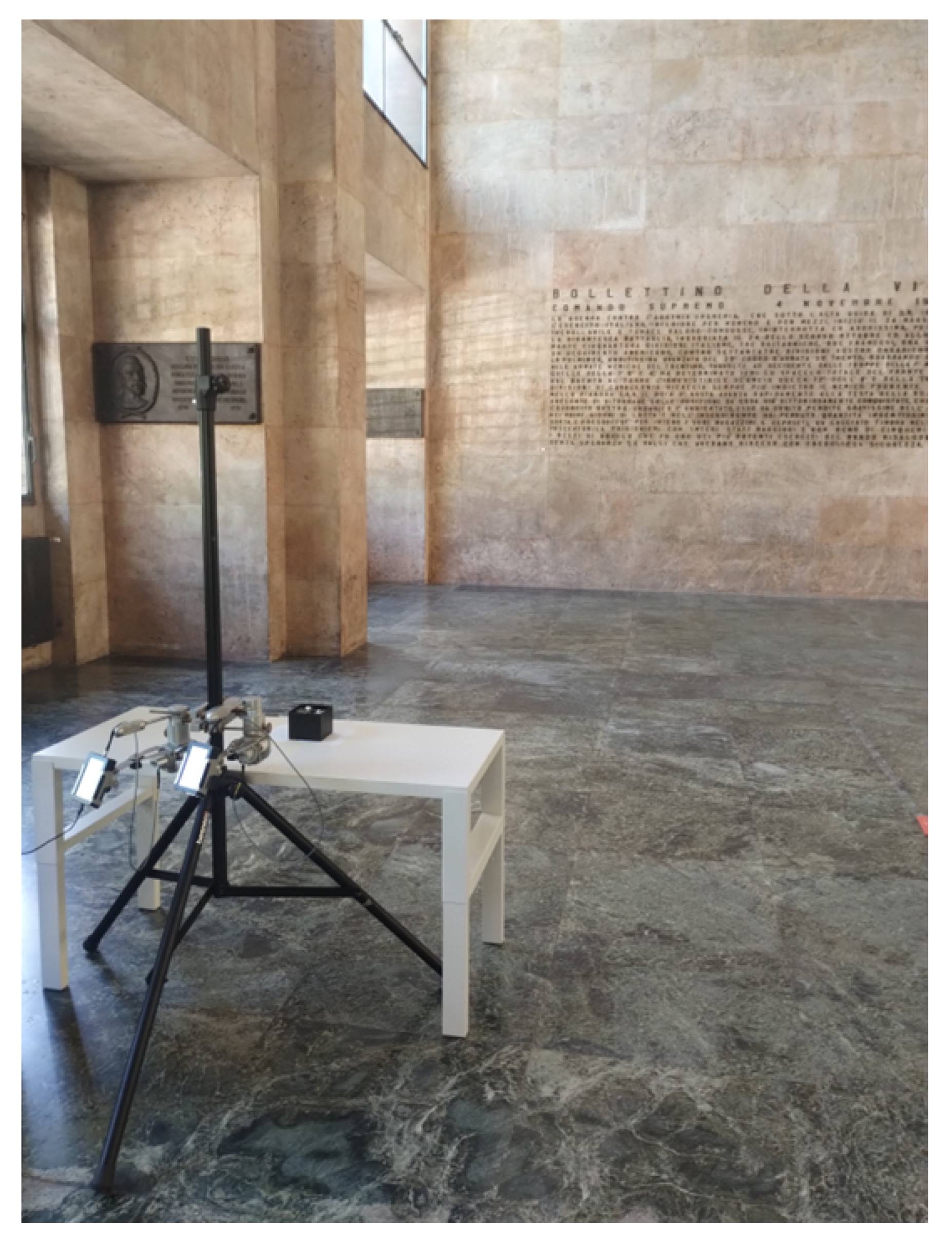

3.1. Measurement Equipment

- Conical Horn Antennas: These also operate across the frequency band from 26 GHz to 40 GHz, where the power gain ranges from 20.5 dBi to 21.5 dBi. The corresponding half-power beam-width is equal to about 13.5 deg. Vertical polarization was considered during the whole measurement activity (Figure 4).

3.2. Object Classes

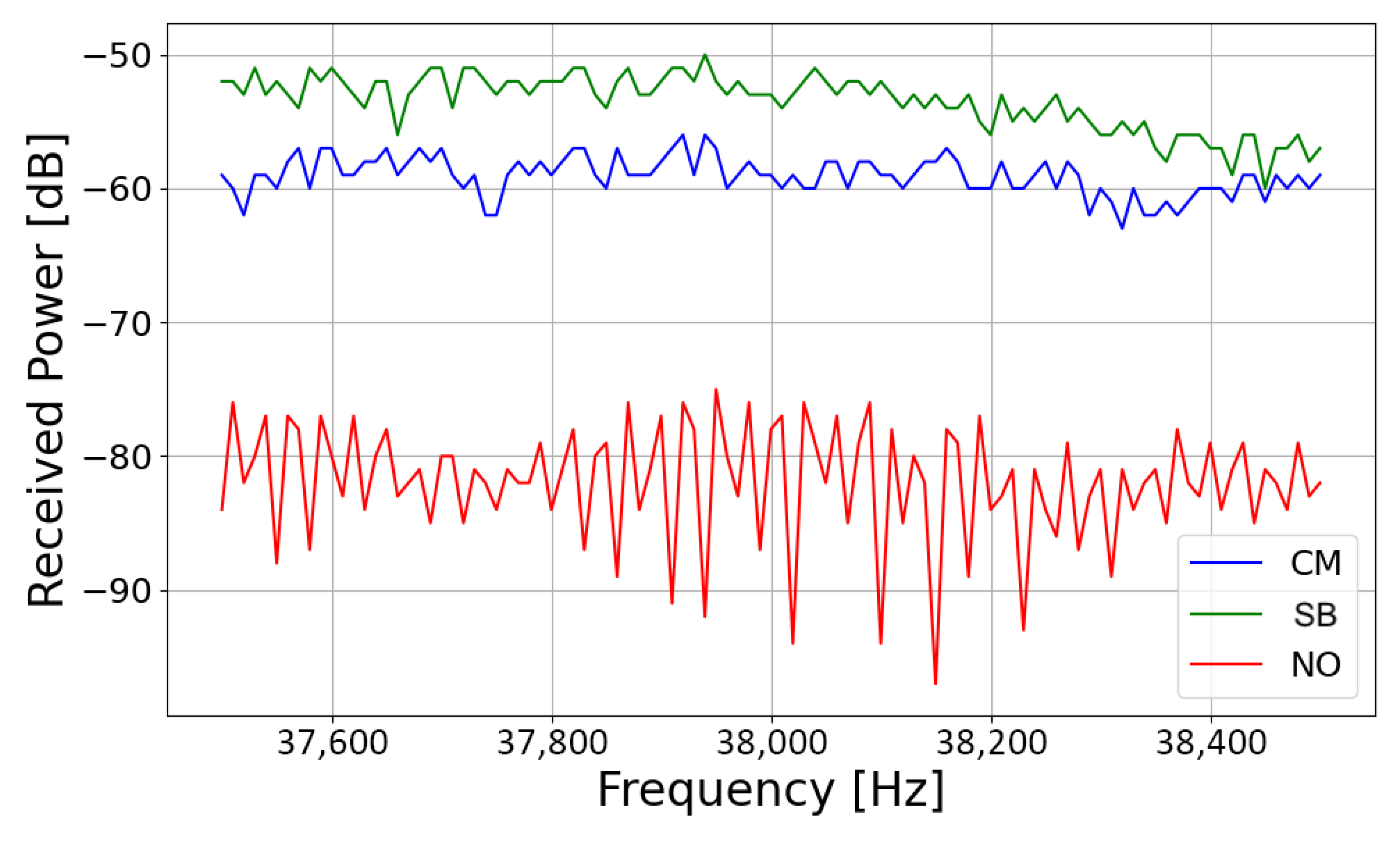

- Ceramic Mugs (CMs): A total of 18 different mugs, varying in size and shape, were considered (Figure 5). Each mug was measured three times, resulting in 54 measured frequency responses.

- Screw Boxes (SBs): A cardboard box was emptied and refilled with screws 18 times (Figure 5), so that each realization corresponds to a different arrangement of the screws and therefore, to some extent, to a different backscattered signal. Again, measurements were repeated three times for each realization, resulting in 54 measured frequency responses overall.
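The bookkeeping behind these counts can be sketched in a few lines of Python. This is only an illustration: the tuple layout, the function names, and in particular the idea of holding out whole realizations for testing are assumptions made here, not a description of the split used by the authors.

```python
from itertools import product

CLASSES = ("CM", "SB")   # Ceramic Mug, Screw Box
N_REALIZATIONS = 18      # distinct mugs, or distinct screw arrangements
N_REPETITIONS = 3        # repeated measurements per realization


def build_index():
    """Return one (class, realization, repetition) tuple per measured response."""
    return list(product(CLASSES, range(N_REALIZATIONS), range(N_REPETITIONS)))


def split_by_realization(index, held_out):
    """Hypothetical split: keep all repeats of a realization on the same side."""
    train = [s for s in index if s[1] not in held_out]
    test = [s for s in index if s[1] in held_out]
    return train, test


index = build_index()
per_class = {c: sum(1 for s in index if s[0] == c) for c in CLASSES}
train, test = split_by_realization(index, held_out={15, 16, 17})

print(per_class)              # {'CM': 54, 'SB': 54}
print(len(train), len(test))  # 90 18
```

Grouping by realization is one way to keep the three repeated measurements of the same object from appearing in both training and test data; whether that matters in practice depends on how similar the repeats are.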

3.3. Measurement Procedure and Data Collection

4. Machine Learning

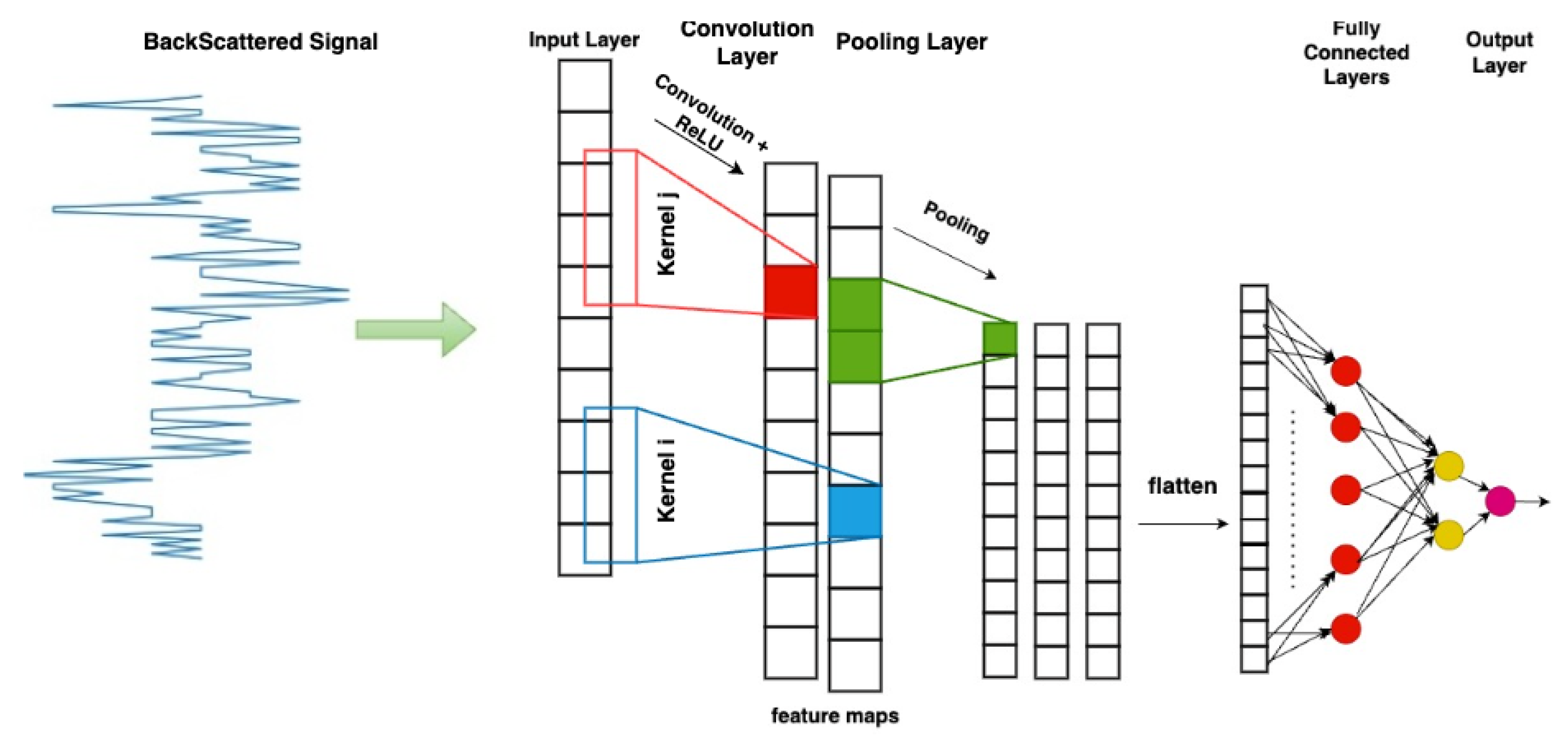

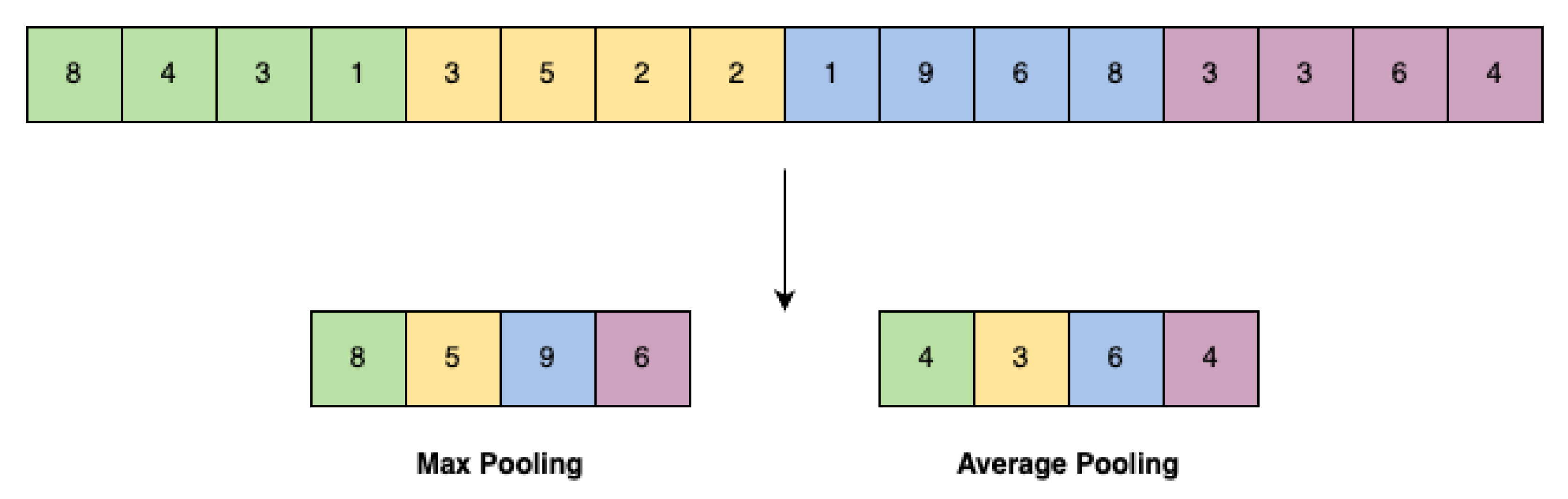

4.1. Convolutional Neural Networks

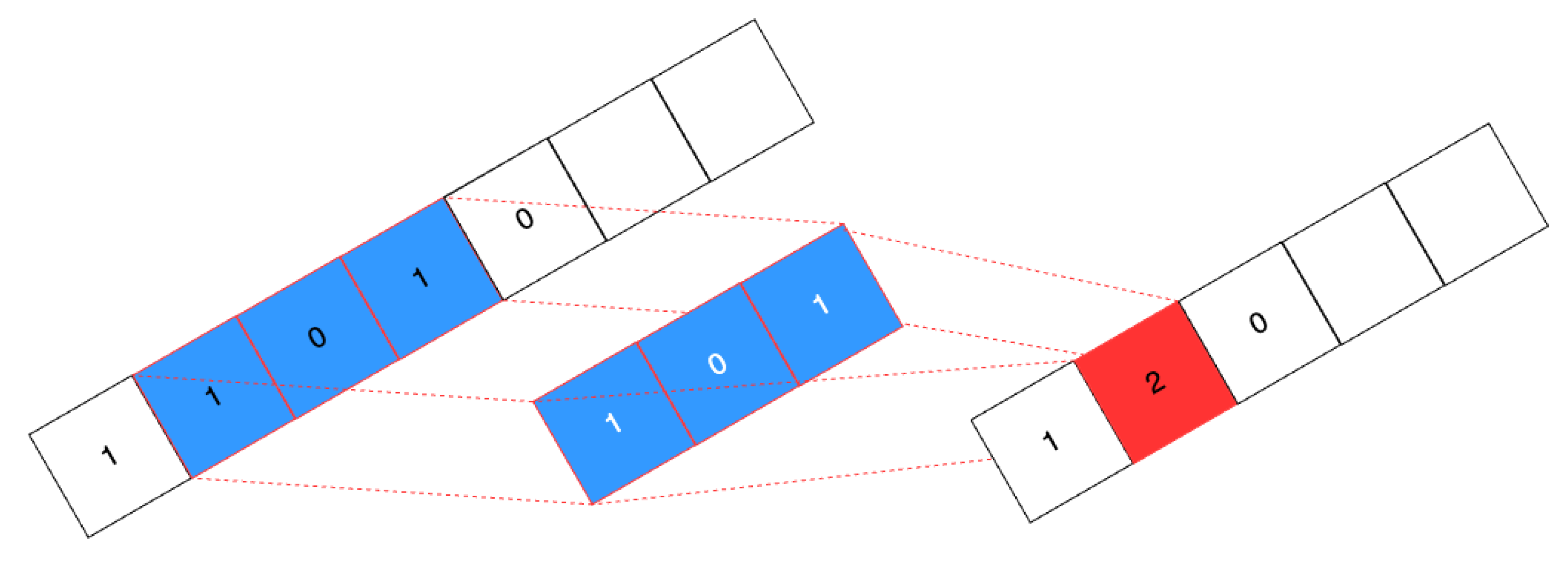

4.2. One-Dimensional CNNs

- Epochs: The number of times the entire training dataset is passed through the model. While more epochs can enhance performance, they also increase the risk of overfitting. Even though the same dataset is used in each epoch, the model updates its parameters (weights and biases) after every iteration based on the calculated loss and gradients. Within each epoch, the model refines these parameters, gradually improving its ability to generalize patterns in the data, rather than memorizing it.

- Learning Rate: Controls how much the model adjusts its weights during training. A high learning rate speeds up training but may overshoot optimal values, whereas a low rate ensures precise updates but slows convergence.

- Weight Decay: A regularization technique that discourages large weight values, helping to prevent overfitting and encouraging simpler models.

- Batch Size: The number of training samples used in a single update step. Larger batches provide more stable updates but require more memory, while smaller batches introduce more variability but can generalize better.

- Early Stopping: A technique that monitors the training process and stops it as soon as the step-by-step performance improvement becomes negligible, i.e., when further iterations are no longer worth the time they require.

- Accuracy: This is simply the probability of correct classification, i.e., the ratio between the number of correctly classified samples and the overall number of samples.

- Precision: This measures the proportion of correctly predicted positive observations out of all predictions made as positive. It represents the reliability of the positive classification.

- Recall: Also known as sensitivity, it is the probability of positive detection, that is, the probability that a positive sample is correctly classified. It accounts for the model’s ability to detect all relevant instances.

- F1 Score: The harmonic mean of precision and recall. It balances the trade-off between the two and is especially informative when the dataset is imbalanced.
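The four indicators above can all be derived from the entries of a binary confusion matrix. The following is a minimal sketch in plain Python; the function names and the toy labels are illustrative assumptions, and returning 0.0 for an undefined ratio is a convention chosen here.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary problem."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            if truth == positive:
                tp += 1
            else:
                fp += 1
        else:
            if truth == positive:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 score from the confusion counts."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Toy example: 3 TP, 1 FN, 1 FP, 3 TN
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
metrics = classification_metrics(y_true, y_pred)
print(metrics)  # every metric equals 0.75 for this toy case
```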

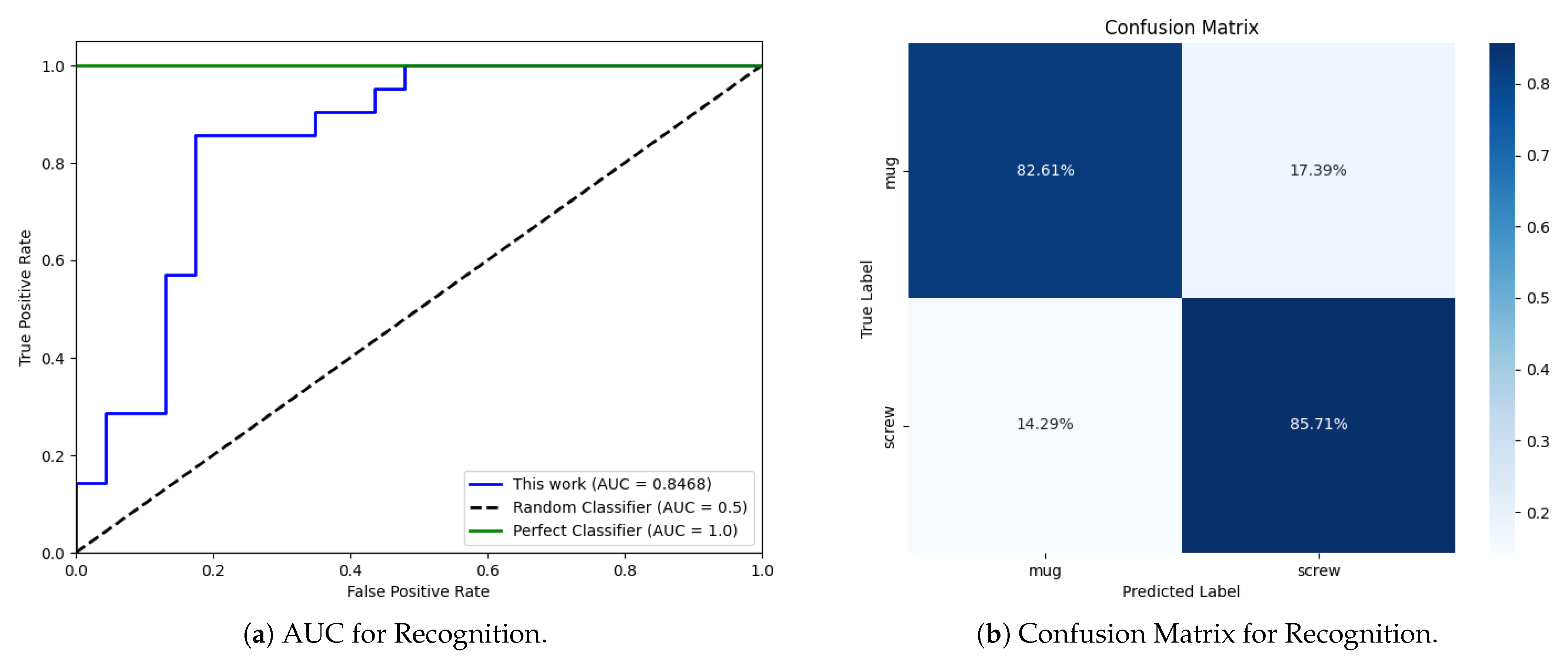

- Area Under the Curve (AUC): This represents the degree of separability between classes, based on the Receiver Operating Characteristic (ROC) curve. It provides insight into how well the model distinguishes between positive and negative classes.
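One way to make the separability interpretation concrete is the rank-based form of the AUC: the fraction of (positive, negative) sample pairs in which the positive sample receives the higher score, with ties counted as one half. The sketch below uses that equivalent formulation rather than integrating the ROC curve; the function name and the toy scores are illustrative assumptions.

```python
def roc_auc(y_true, scores, positive=1):
    """AUC as the probability that a positive sample outscores a negative one."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


# Toy example: 3 of the 4 positive/negative pairs are ranked correctly
auc = roc_auc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1])
print(auc)  # 0.75
```

The pairwise loop is quadratic in the number of samples, which is acceptable for datasets of a few hundred responses but would normally be replaced by a sort-based computation for larger ones.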

4.3. One-Dimensional CNN for Object Recognition

5. Results and Discussion

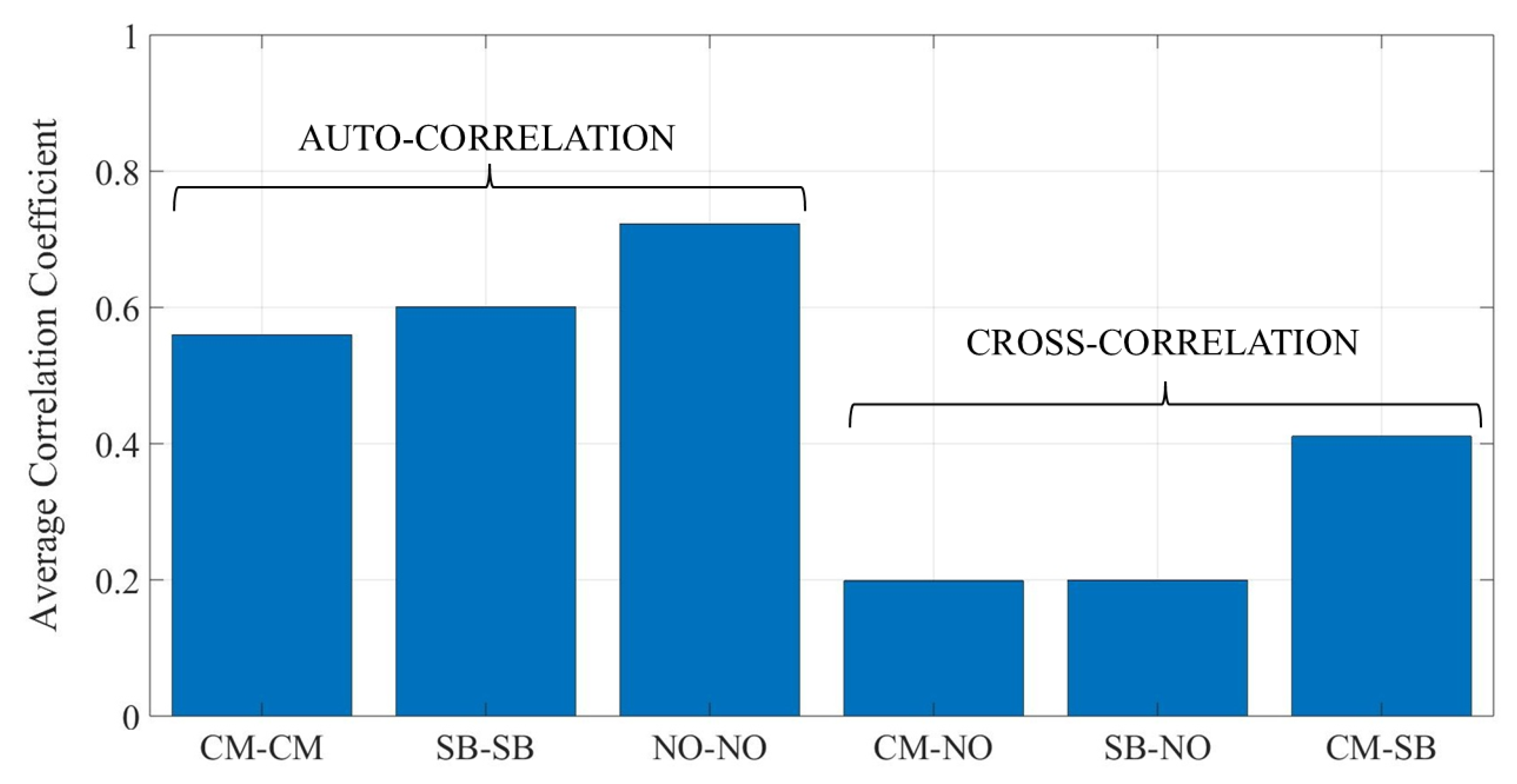

5.1. Correlation Analysis

5.2. Detection and Recognition Performance

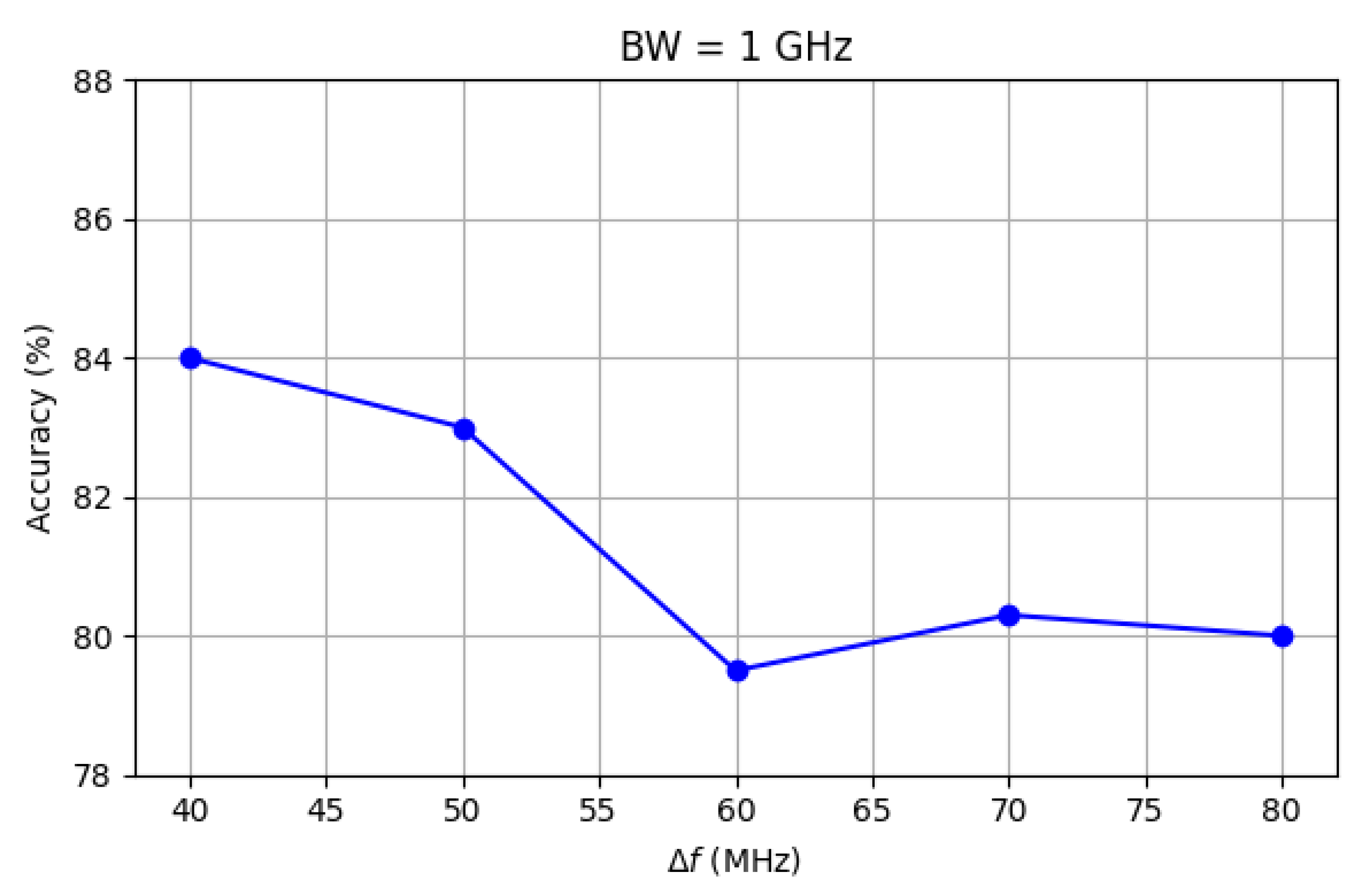

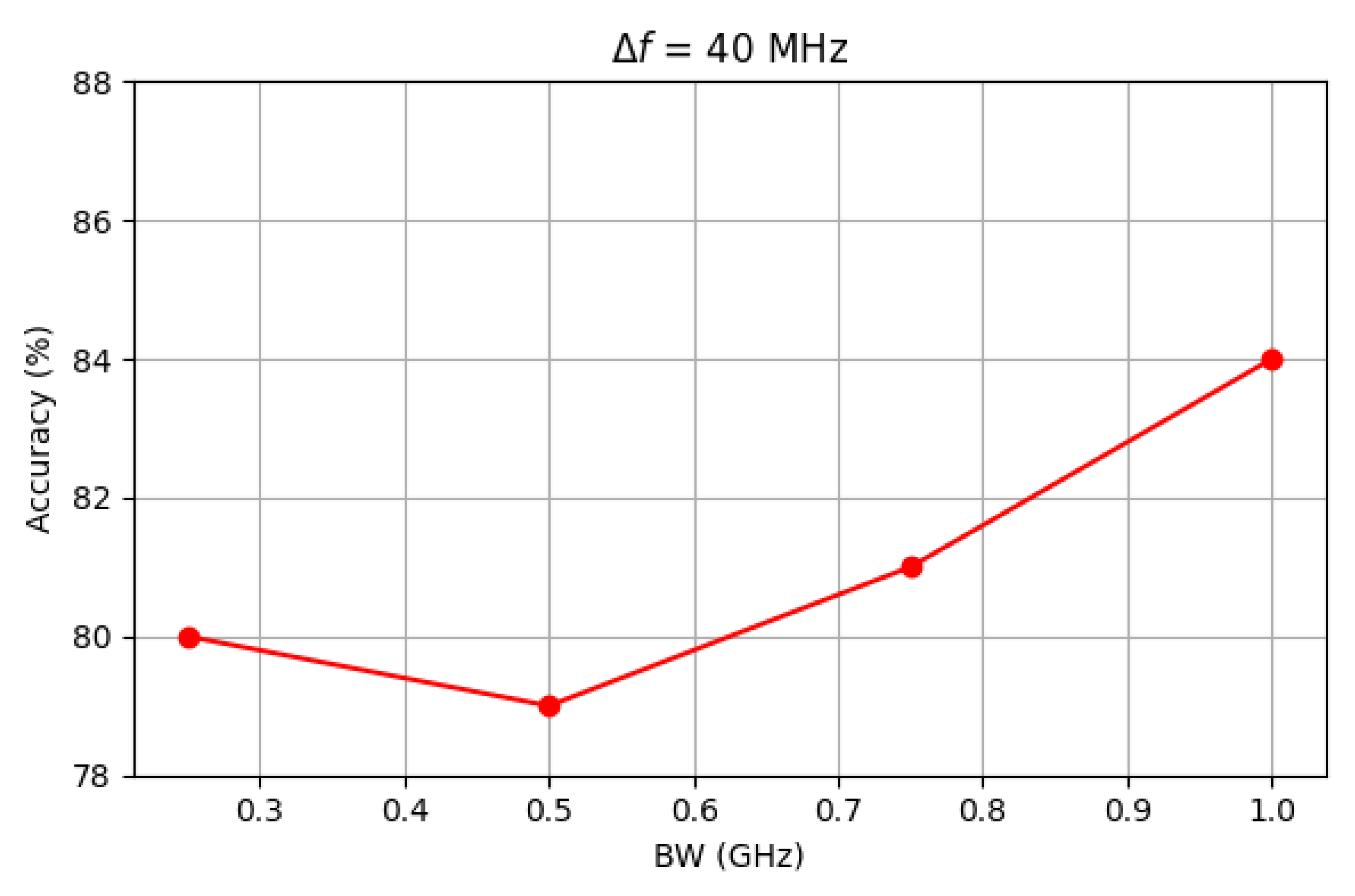

5.3. Impact of Acquisition Parameters

5.4. A Glance to Open Issues

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| AUC | Area Under the Curve |
| BW | Bandwidth |
| CL | Convolutional Layer |
| CM | Ceramic Mug |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| ECG | Electrocardiogram |
| FCL | Fully Connected Layer |
| HRRP | High Resolution Range Profile |
| KPI | Key Performance Indicator |
| ML | Machine Learning |
| NO | No Object |
| OR | Object Recognition |
| PL | Pooling Layer |
| RSS | Received Signal Strength |
| SA | Spectrum Analyzer |
| SB | Screw Box |
| SG | Signal Generator |

References

1. Mahanty, M.; Bhattacharyya, D.; Midhunchakkaravarthy, D. A review on deep learning-based object recognition algorithms. In Machine Intelligence and Soft Computing; Bhattacharyya, D., Saha, S.K., Fournier-Viger, P., Eds.; Springer Nature Singapore: Singapore, 2022; pp. 53–59.

2. Yurt, R.; Torpj, H.; Mahouti, P.; Kizilay, A.; Kozie, S. Buried object characterization using ground penetrating radar assisted by data-driven surrogate models. IEEE Access 2023, 11, 13309–13323.

3. Manakitsa, N.; Maraslidis, G.S.; Moysis, L.; Fragulis, G.F. A review of machine learning and deep learning for object detection, semantic segmentation, and human action recognition in machine and robotic vision. Technologies 2024, 12, 15.

4. Daramouskas, I.; Meimetis, D.; Patrinopoulou, N.; Lappas, V.; Kostopoulos, V.; Kapoulas, V. Camera-based local and global target detection, tracking, and localization techniques for UAVs. Machines 2023, 11, 315.

5. Jain, D.; Nailwal, I.; Ranjan, A.; Mittal, S. Object recognition with voice assistant for visually impaired. In Proceedings of the International Conference on Paradigms of Communication, Computing and Data Analytics, Delhi, India, 22–23 April 2023; Springer Nature: Singapore, 2023; pp. 537–545.

6. Murthy, C.B.; Hashmi, M.F.; Bokde, N.D.; Geem, Z.W. Investigations of object detection in images/videos using various deep learning techniques and embedded platforms—A comprehensive review. Appl. Sci. 2020, 10, 3280.

7. Pathak, A.R.; Pandey, M.; Rautaray, S. Deep learning approaches for detecting objects from images: A review. In Progress in Computing, Analytics and Networking; Pattnaik, P.K., Rautaray, S.S., Das, H., Nayak, J., Eds.; Springer: Singapore, 2018; pp. 491–499.

8. Yang, X.; Yan, J.; Wang, W.; Li, S.; Hu, B.; Lin, J. Brain-inspired models for visual object recognition: An overview. Artif. Intell. Rev. 2022, 55, 5263–5311.

9. Rodriguez-Conde, I.; Campos, C.; Fdez-Riverola, F. Optimized convolutional neural network architectures for efficient on-device vision-based object detection. Neural Comput. Appl. 2022, 34, 10469–10501.

10. Abdu, F.J.; Zhang, Y.; Fu, M.; Li, Y.; Deng, Z. Application of deep learning on millimeter-wave radar signals: A review. Sensors 2021, 21, 1951.

11. Kalbo, N.; Mirsky, Y.; Shabtai, A.; Elovici, Y. The security of IP-based video surveillance systems. Sensors 2020, 20, 4806.

12. Kokaly, R.; Clark, R.; Swayze, G.; Livo, K.; Hoefen, T.; Pearson, N.; Wise, R.; Benzel, W.; Lowers, H.; Driscoll, R. USGS Spectral Library Version 7; U.S. Geological Survey Data Series 1035; U.S. Geological Survey: Reston, VA, USA, 2017.

13. Křivánek, I. Dielectric properties of materials at microwave frequencies. Acta Univ. Agric. Silvic. Mendelianae Brun. 2008, 56, 125–131.

14. Bennamoun, M.; Mamic, M.J. Object recognition—Fundamentals and case studies. In Object Recognition; Singh, S., Ed.; Springer: London, UK, 2002; pp. 19–57.

15. Chin, R.T.; Dyer, C.R. Model-based recognition in robot vision. ACM Comput. Surv. 1986, 18, 67–108.

16. Salman, R.; Schultze, T.; Janson, M.; Wiesbeck, W.; Willms, I. Robust radar UWB object recognition. In Proceedings of the 2008 IEEE International RF and Microwave Conference, Kuala Lumpur, Malaysia, 2–4 December 2008.

17. Salman, R.; Willms, I. A novel UWB radar super-resolution object recognition approach for complex edged objects. In Proceedings of the 2010 IEEE International Conference on Ultra-Wideband, Nanjing, China, 20–23 September 2010.

18. Kiranyaz, S.; Ince, T.; Hamila, R.; Gabbouj, M. Convolutional neural networks for patient-specific ECG classification. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015.

19. Kiranyaz, S.; Ince, T.; Gabbouj, M. Real-time patient-specific ECG classification by 1-D convolutional neural networks. IEEE Trans. Biomed. Eng. 2016, 63, 664–675.

20. Kiranyaz, S.; Ince, T.; Gabbouj, M. Personalized monitoring and advance warning system for cardiac arrhythmias. Sci. Rep. 2017, 7, 9270.

21. Limbacher, B.; Schoenhuber, S.; Wenclawiak, M.; Kainz, M.A.; Andrews, A.M.; Strasser, G.; Darmo, J.; Unterrainer, K. Terahertz optical machine learning for object recognition. APL Photonics 2020, 5, 126103.

22. Danso, S.A.; Shang, L.; Hu, D.; Odoom, J.; Liu, Q.; Nyarko, B.N.E. Hidden dangerous object recognition in terahertz images using deep learning methods. Appl. Sci. 2022, 12, 7354.

23. Bai, X.; Yang, Y.; Wei, S.; Chen, G.; Li, H.; Tian, H.; Zhang, T.; Cui, H. A comprehensive review of conventional and deep learning approaches for ground-penetrating radar detection of raw data. Appl. Sci. 2023, 13, 7992.

24. Moalla, M.; Frigui, H.; Karen, A.; Bouzid, A. Application of convolutional and recurrent neural networks for buried threat detection using ground penetrating radar data. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7022–7034.

25. Jang, D.; Wang, Y.; Li, Y.; Lin, Y.; Shen, W. Radar target characterization and deep learning in radar automatic target recognition: A review. Remote Sens. 2023, 15, 3742.

26. Buchman, D.; Drozdov, M.; Krilavicius, T.; Maskeliunas, M.; Damasevicius, R. Pedestrian and animal recognition using Doppler radar signature and deep learning. Sensors 2022, 22, 3456.

27. Xia, Z.; Wang, P.; Liu, H. Radar HRRP open set target recognition based on closed classification boundary. Remote Sens. 2023, 15, 468.

28. Liu, Q.; Zhang, X.; Liu, Y. SCNet: Scattering center neural network for radar target recognition with incomplete target-aspects. Signal Process. 2024, 219, 109409.

29. Dong, Y.; Wang, P.; Fang, M.; Guo, Y.; Cao, L.; Yan, J.; Liu, H. Radar High-Resolution Range Profile Rejection Based on Deep Multi-Modal Support Vector Data Description. Remote Sens. 2024, 16, 649.

30. Chen, L.; Pan, Z.; Liu, Q.; Hu, P. HRRPGraphNet++: Dynamic Graph Neural Network with Meta-Learning for Few-Shot HRRP Radar Target Recognition. Remote Sens. 2025, 17, 2108.

31. Lunden, J.; Koivunen, V. Deep learning for HRRP-based target recognition in multistatic radar systems. In Proceedings of the 2016 IEEE Radar Conference, Philadelphia, PA, USA, 2–6 May 2016.

32. Yanik, M.E.; Rao, S. Radar-Based Multiple Target Classification in Complex Environments Using 1D-CNN Models. In Proceedings of the IEEE Radar Conference (RadarConf23), San Antonio, TX, USA, 1–5 May 2023; pp. 1–6.

33. Erdélyi, V.; Rizk, H.; Yamaguchi, H.; Higashino, T. Learn to see: A microwave-based object recognition system using learning techniques. In Proceedings of the 2021 International Conference on Distributed Computing and Networking (ICDCN ’21), Nara, Japan, 5–8 January 2021; ACM: New York, NY, USA; pp. 145–150.

34. Pathel, K.; Rambach, K.; Visentin, T.; Rusev, D.; Pfeiffer, M.; Yang, B. Deep learning-based object classification on automotive radar spectra. In Proceedings of the IEEE Radar Conference, Boston, MA, USA, 22–26 April 2019.

35. SAF Tehnika. Spectrum Compact Device. Available online: https://spectrumcompact.com/ (accessed on 9 September 2025).

36. Sarker, I.H. Machine learning: Algorithms, real-world applications and research directions. SN Comput. Sci. 2021, 2, 160.

37. Chalapathy, R.; Chawla, S. Deep learning for anomaly detection: A survey. arXiv 2019, arXiv:1901.03407.

38. Aditi; Dureja, A. A review: Image classification and object detection with deep learning. In Applications of Artificial Intelligence in Engineering; Gao, X.-Z., Kumar, R., Srivastava, S., Soni, B.P., Eds.; Springer: Singapore, 2021; pp. 69–91.

39. Pang, Y.; Cao, J. Deep learning in object detection. In Deep Learning in Object Detection and Recognition; Jiang, X., Hadid, A., Pang, Y., Granger, E., Feng, X., Eds.; Springer: Singapore, 2019; pp. 19–57.

40. O’Shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

41. Dhillon, A.; Verma, G.K. Convolutional neural network: A review of models, methodologies and applications to object detection. Prog. Artif. Intell. 2020, 9, 85–112.

42. Mortezapour Shiri, F.; Perumal, T.; Mustapha, N.; Mohamed, R. A comprehensive overview and comparative analysis on deep learning models: CNN, RNN, LSTM, GRU. arXiv 2023, arXiv:2305.17473.

43. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

44. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems 25; Pereira, F., Burges, C.J., Bottou, L., Weinberger, K.Q., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2012.

45. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Available online: http://www.deeplearningbook.org (accessed on 9 September 2025).

46. Avci, O.; Abdeljaber, O.; Kiranyaz, S.; Hussein, M.; Inman, D.J. Wireless and real-time structural damage detection: A novel decentralized method for wireless sensor networks. J. Sound Vib. 2018, 424, 158–172.

47. Abdeljaber, O.; Avci, O.; Kiranyaz, S.; Gabbouj, M.; Inman, D.J. Real-time vibration-based structural damage detection using one-dimensional convolutional neural networks. J. Sound Vib. 2017, 388, 154–170.

48. Ince, T.; Kiranyaz, S.; Eren, L.; Askar, M.; Gabbouj, M. Real-time motor fault detection by 1-D convolutional neural networks. IEEE Trans. Ind. Electron. 2016, 63, 7067–7075.

49. Kiranyaz, S.; Gastli, A.; Ben-Brahim, L.; Al-Emadi, N.; Gabbouj, M. Real-time fault detection and identification for MMC using 1-D convolutional neural networks. IEEE Trans. Ind. Electron. 2019, 66, 8760–8771.

50. Eren, L. Bearing fault detection by one-dimensional convolutional neural networks. Math. Probl. Eng. 2017, 2017, 8617315.

51. Zhang, W.; Li, C.; Peng, G.; Chen, Y.; Zhang, Z. A deep convolutional neural network with new training methods for bearing fault diagnosis under noisy environment and different working load. Mech. Syst. Signal Process. 2018, 100, 439–453.

52. Glorot, X.; Bordes, A.; Bengio, Y. Deep sparse rectifier neural networks. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010; Volume 15.

53. Glorot, X.; Bengio, Y. Understanding the difficulty of training deep feedforward neural networks. In Proceedings of the International Conference on Artificial Intelligence and Statistics, Sardinia, Italy, 13–15 May 2010.

54. Gholamalinezhad, H.; Khosravi, H. Pooling methods in deep neural networks: A review. arXiv 2020, arXiv:2009.07485.

55. Basha, S.H.S.; Dubey, S.R.; Pulabaigari, V.; Mukherjee, S. Impact of fully connected layers on performance of convolutional neural networks for image classification. Neurocomputing 2020, 378, 112–119.

56. Sharma, A.; Kumar, D. Hyperparameter optimization in CNN: A review. In Proceedings of the 2023 International Conference on Computing, Communication, and Intelligent Systems (ICCCIS), Greater Noida, India, 3–4 February 2023; pp. 237–242.

57. Powers, D.M.W. Evaluation: From precision, recall and f-measure to ROC, informedness, markedness and correlation. arXiv 2020, arXiv:2010.16061.

| Description | Value | Note |
|---|---|---|
| # Kernels | 32 | Convolutional Layer |
| Kernel size | 16 | Convolutional Layer |
| Activation function | ReLU | Convolutional Layer |
| Type of pooling | Max | Pooling Layer |
| Kernel size | 2 | Pooling Layer |
| # Neurons | 50 | 1st Fully Connected Layer |
| # Neurons | 2 | 2nd Fully Connected Layer |
| # Epochs | 100 | Candidate values: 50, 100, 200 |
| Learning Rate | 0.001 | Candidate values: 0.0001, 0.001, 0.01, 0.1 |
| Weight Decay | 0.001 | Candidate values: 0.001, 0.01, 0.1 |
| Batch Size | 2 | Candidate values: 2, 4, 8 |
| Early Stopping | 20 | |
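To get a feel for the size of the network described by the table above, the sketch below propagates the signal length through the layers and counts the trainable parameters. Several things are assumptions made for illustration only: a single input channel, a convolution with stride 1 and no padding, a pooling stride equal to the pooling kernel, and the hypothetical input length of 201 frequency points (the actual number of points per response is not stated in this section).

```python
def conv1d_out_len(n_in, kernel, stride=1, padding=0):
    """Output length of a 1D convolution (standard formula)."""
    return (n_in + 2 * padding - kernel) // stride + 1


def pool1d_out_len(n_in, kernel):
    """Output length of 1D pooling with stride equal to the kernel size (assumed)."""
    return n_in // kernel


def cnn_summary(n_points, in_channels=1):
    """Layer sizes and parameter counts for the tabulated architecture."""
    n_kernels, conv_k, pool_k = 32, 16, 2   # values from the table
    fc1, fc2 = 50, 2                        # values from the table
    conv_len = conv1d_out_len(n_points, conv_k)
    pool_len = pool1d_out_len(conv_len, pool_k)
    flat = n_kernels * pool_len             # features fed to the first FC layer
    params = {
        "conv": n_kernels * (in_channels * conv_k + 1),  # weights + biases
        "fc1": flat * fc1 + fc1,
        "fc2": fc1 * fc2 + fc2,
    }
    return {"conv_len": conv_len, "pool_len": pool_len, "flat": flat,
            "params": params, "total_params": sum(params.values())}


summary = cnn_summary(n_points=201)  # 201 points is a hypothetical input length
print(summary["conv_len"], summary["pool_len"], summary["flat"])  # 186 93 2976
print(summary["total_params"])                                    # 149496
```

Under these assumptions nearly all parameters sit in the first fully connected layer, so the parameter count scales almost linearly with the number of acquired frequency points.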

| Metrics | Accuracy | Precision | Recall | F1 Score | AUC |
|---|---|---|---|---|---|
| Detection | 100% | 100% | 100% | 100% | 100% |
| Recognition | 84% | 82% | 86% | 84% | 85% |
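As a quick consistency check on the recognition row, the F1 score can be recomputed from the reported precision $P = 0.82$ and recall $R = 0.86$ using the harmonic-mean definition. The symbols $P$ and $R$ are introduced here for convenience, and the inputs are the rounded table values:

$$
F_1 = \frac{2\,P\,R}{P + R} = \frac{2 \times 0.82 \times 0.86}{0.82 + 0.86} = \frac{1.4104}{1.68} \approx 0.84
$$

This agrees with the 84% reported in the table.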

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hossein zadeh, M.; Barbiroli, M.; Del Prete, S.; Fuschini, F. One-Dimensional Convolutional Neural Network for Object Recognition Through Electromagnetic Backscattering in the Frequency Domain. Sensors 2025, 25, 6809. https://doi.org/10.3390/s25226809
