FedPSFV: Personalized Federated Learning via Prototype Sharing for Finger Vein Recognition

Abstract

1. Introduction

2. Related Work

2.1. Finger Vein Recognition

2.2. Finger Vein Recognition via Federated Learning

3. Methodology

3.1. Problem Description

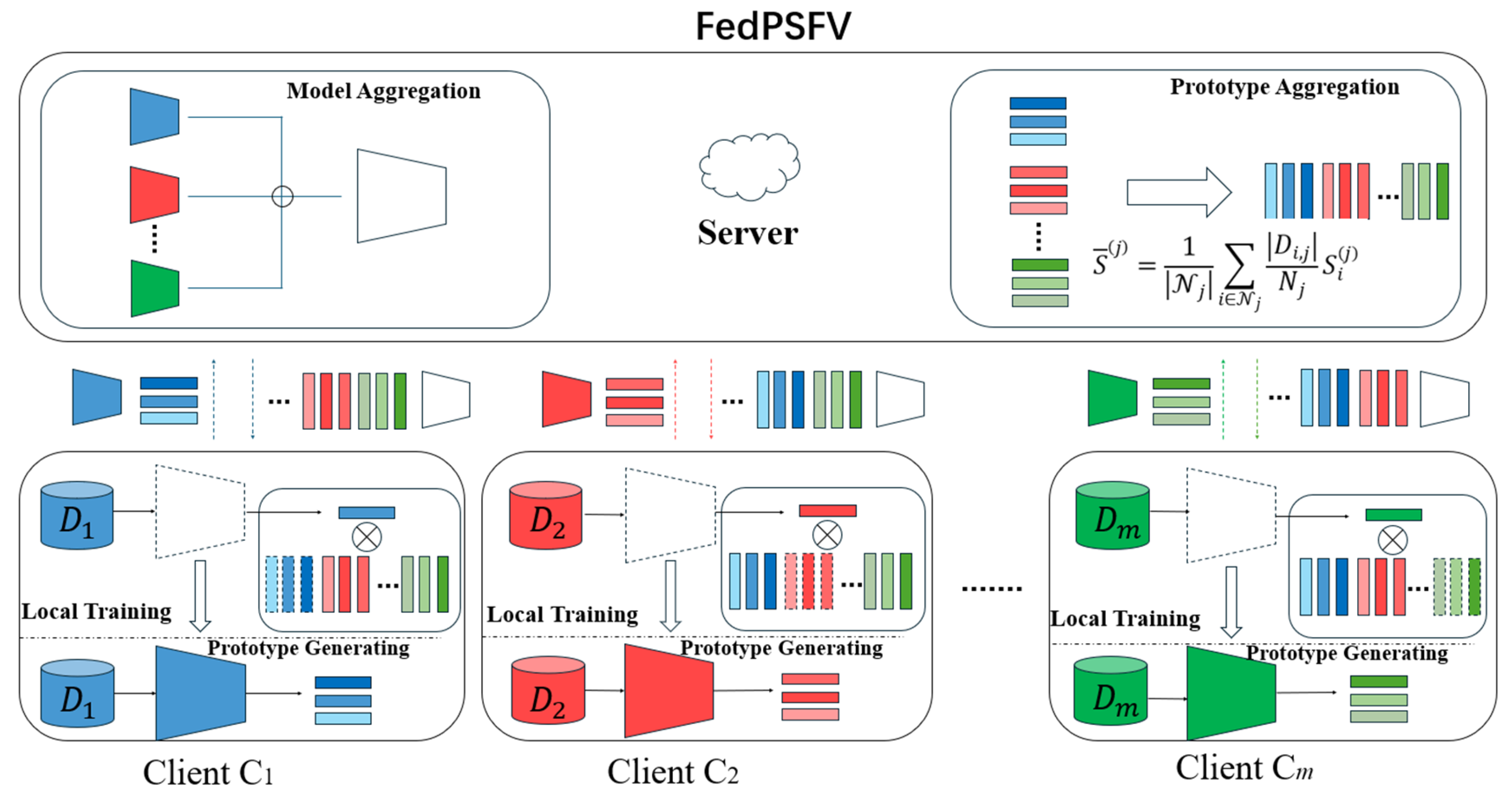

3.2. Framework of the FedPSFV Method

3.3. Local Training Process

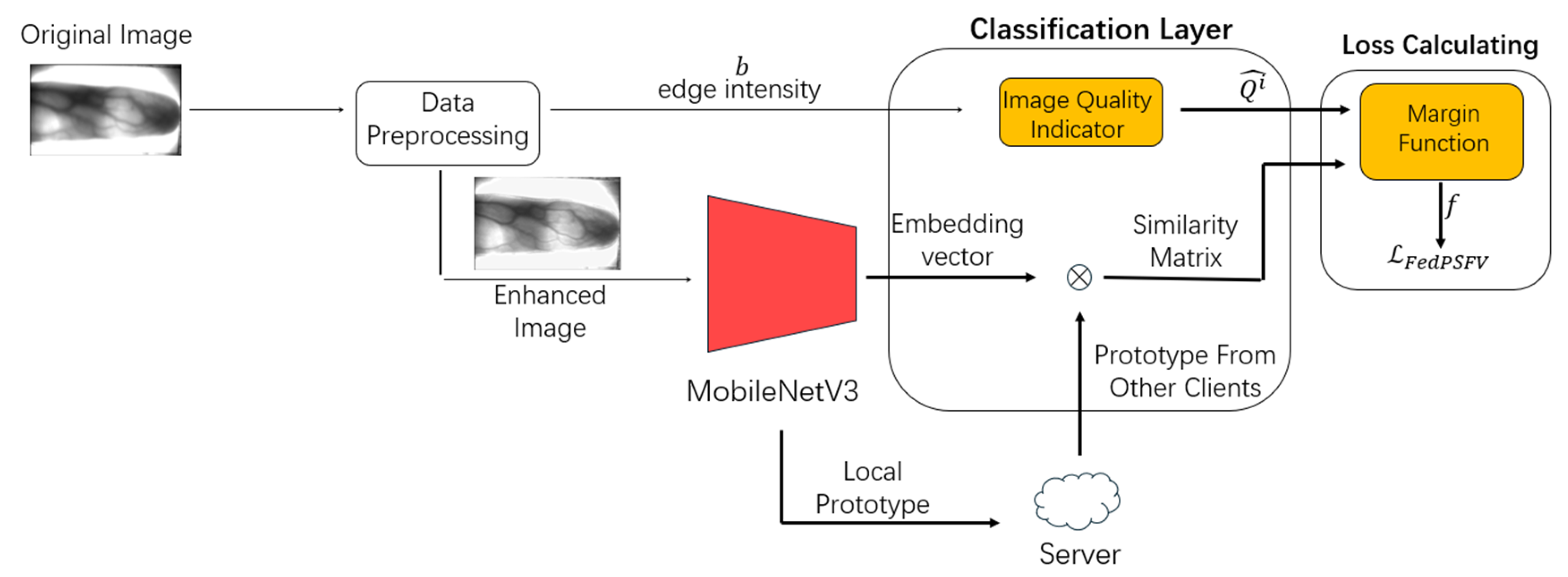

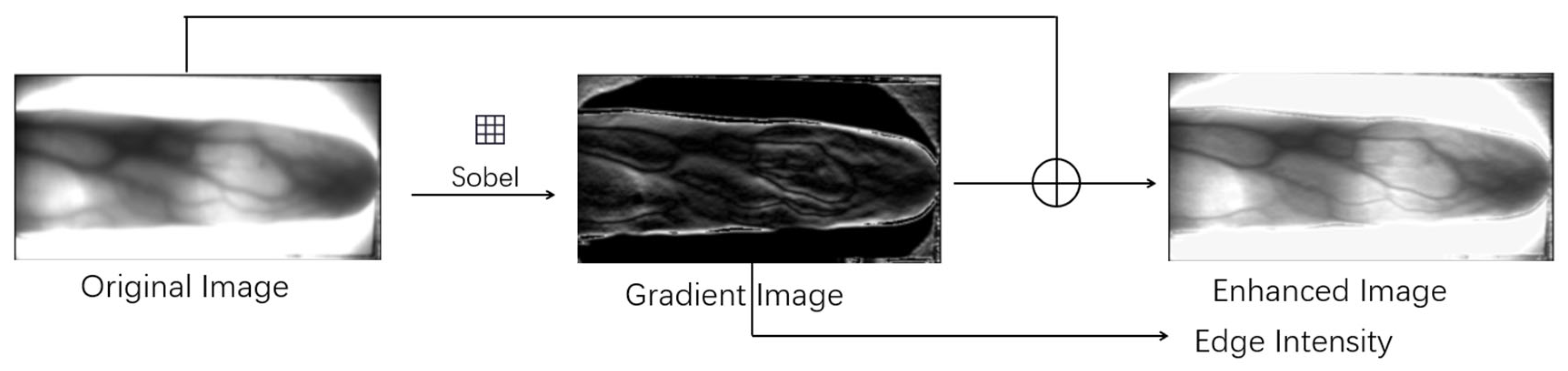

3.3.1. Data Preprocessing

3.3.2. Local Network Architecture

3.3.3. Loss Calculation

| Algorithm 1. Calculating edge intensity and margin loss | |
|---|---|
| Parameters: dataset Dk of client k; sample set of each class a; sample image I belonging to a sample set; edge intensity set Bk of the client; quality score set Qk of the client. Every client executes: | |
| 1: | Initialize the local classifier weight matrix Wk and the margin hyperparameter m |
| 2: | for each class a in Dk do |
| 3: | Initialize the set of edge intensities bi |
| 4: | for each sample image I in the sample set of class a do |
| 5: | Compute Ig from I via Equation (3) |
| 6: | Compute the enhanced image via Equation (2) using Ig and I |
| 7: | set |
| 8: | Compute the edge intensity b via Equation (5) using Ig and add it to bi |
| 9: | end for |
| 10: | Compute the mean of bi and add it to Bk |
| 11: | end for |
| 12: | Normalize the mean edge intensity of each category in Bk according to Equation (10) |
| 13: | Compute the quality score Qi of each category via Equation (11) using the normalized edge intensity, and add it to Qk |
| 14: | Compute θ using Dk, Wk, the embedding vectors produced during training, and the prototypes shared by other clients through the FedPSFV algorithm |
| 15: | Compute the margin loss via Equations (12) and (13) using Qk and θ |
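Steps 2 to 12 of Algorithm 1 (per-class mean edge intensity followed by normalization) can be illustrated with a minimal sketch. Equations (5) and (10) are not reproduced in this section, so the operators below are assumptions: a mean absolute finite-difference gradient stands in for the edge intensity, and min-max scaling stands in for the normalization. All function names are hypothetical, and each image is assumed to be a 2-D list of at least 2 × 2 grayscale values.

```python
from statistics import mean


def edge_intensity(gray):
    """Mean absolute finite-difference gradient of a 2-D grayscale image.

    ASSUMPTION: a stand-in for Equation (5), which is not shown here.
    """
    h, w = len(gray), len(gray[0])
    total, count = 0.0, 0
    for r in range(h - 1):
        for c in range(w - 1):
            dx = gray[r][c + 1] - gray[r][c]  # horizontal difference
            dy = gray[r + 1][c] - gray[r][c]  # vertical difference
            total += abs(dx) + abs(dy)
            count += 1
    return total / count


def class_edge_means(dataset):
    """Map each class label to the mean edge intensity of its images."""
    return {label: mean(edge_intensity(img) for img in images)
            for label, images in dataset.items()}


def min_max_normalize(values):
    """Scale per-class means to [0, 1].

    ASSUMPTION: a stand-in for Equation (10), which is not shown here.
    """
    lo, hi = min(values.values()), max(values.values())
    span = hi - lo
    return {k: 0.0 if span == 0 else (v - lo) / span
            for k, v in values.items()}


# Toy client with one sharp-edged class and one flat class.
dataset = {"a": [[[0, 10], [10, 0]]], "b": [[[5, 5], [5, 5]]]}
normalized = min_max_normalize(class_edge_means(dataset))
print(normalized)
```

In this toy case the class with strong edges receives the highest normalized value and the flat class the lowest, which is the ordering a quality score derived from edge intensity would be expected to build on.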

| Algorithm 2. Client training process and FedPSFV loss function | |
|---|---|
| Parameters: client serial number k, model parameters ωt after the t-th round of aggregation, and the set of global prototypes after the t-th round of aggregation | |
| ClientUpdate(k, ωt, global prototype set): | |
| set ωt,k ← ωt | |
| 1: | Compute Wk via Equation (9) and initialize the margin hyperparameter m |
| 2: | for each local epoch do |
| 3: | for each batch (xk, yk) ∈ Dk do |
| 4: | Compute the loss via Equations (13) and (16) using xk, ωt,k, Wk, and yk |
| 5: | Update the local model parameters ωt+1,k according to the loss |
| 6: | end for |
| 7: | end for |
| 8: | Compute the local prototype Sk via Equation (6) using xk and ωt+1,k |
| 9: | return ωt+1,k, Sk |
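Step 8 of Algorithm 2 computes a local prototype per client. Equation (6) is not reproduced in this section; the sketch below assumes the common prototype-learning definition in which a class prototype is the mean of the embedding vectors of that class. The function name and the plain-list representation of embeddings are hypothetical.

```python
def local_prototypes(embeddings, labels):
    """Return {class label: mean embedding vector} for one client.

    ASSUMPTION: Equation (6) is taken to be a class-wise mean of
    embeddings; the paper's exact definition is not shown here.
    """
    sums, counts = {}, {}
    for vec, y in zip(embeddings, labels):
        if y not in sums:
            sums[y] = [0.0] * len(vec)
            counts[y] = 0
        # Accumulate the embedding into the running sum of its class.
        sums[y] = [s + v for s, v in zip(sums[y], vec)]
        counts[y] += 1
    return {y: [s / counts[y] for s in total] for y, total in sums.items()}


# Three 2-D embeddings: two samples of class 0, one of class 1.
protos = local_prototypes([[1.0, 0.0], [3.0, 2.0], [0.0, 4.0]], [0, 0, 1])
print(protos)  # {0: [2.0, 1.0], 1: [0.0, 4.0]}
```

Under this assumption each client uploads one vector per class alongside its model parameters, rather than any raw finger vein image.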

3.4. Global Aggregation

| Algorithm 3. Global aggregation | |
|---|---|
| Parameters: the global prototype set, which excludes the prototypes owned by client i. The server executes: | |
| 1: | Initialize the feature representation layer parameters ω of the global model and the global prototype set |
| 2: | for each round t = 1, 2, … do |
| 3: | for each client k in parallel do |
| 4: | ωt+1,k, Sk ← ClientUpdate(k, ω, global prototype set) |
| 5: | end for |
| 6: | Update the parameters ω of the global model via Equation (17) using ωt+1,k |
| 7: | Update the global prototype set via Equation (18) using Sk |
| 8: | end for |
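The two server-side updates in steps 6 and 7 of Algorithm 3 can be sketched as follows. Equations (17) and (18) are not reproduced in this section, so equal-weight parameter averaging is an assumption standing in for Equation (17). The exclusion of a client's own prototypes follows the parameter description of Algorithm 3. Function names and the flat-list parameter representation are hypothetical.

```python
def average_parameters(client_params):
    """Element-wise mean of the clients' flat parameter lists.

    ASSUMPTION: equal-weight averaging as a stand-in for Equation (17).
    """
    n = len(client_params)
    return [sum(vals) / n for vals in zip(*client_params)]


def prototypes_for_client(all_prototypes, client_id):
    """Global prototype set for one client: everyone's prototypes but its own."""
    return {k: protos for k, protos in all_prototypes.items()
            if k != client_id}


# One aggregation step with three toy clients.
params = {0: [1.0, 2.0], 1: [3.0, 6.0], 2: [2.0, 1.0]}
protos = {0: {"a": [1.0]}, 1: {"b": [2.0]}, 2: {"c": [3.0]}}

global_params = average_parameters(list(params.values()))
sent_to_client_1 = prototypes_for_client(protos, 1)
print(global_params)             # [2.0, 3.0]
print(sorted(sent_to_client_1))  # [0, 2]
```

Because the prototype set differs per client, the server would build it once per recipient in each round, while the averaged parameters are shared by all clients.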

4. Experiments

4.1. Datasets and Evaluation Methods

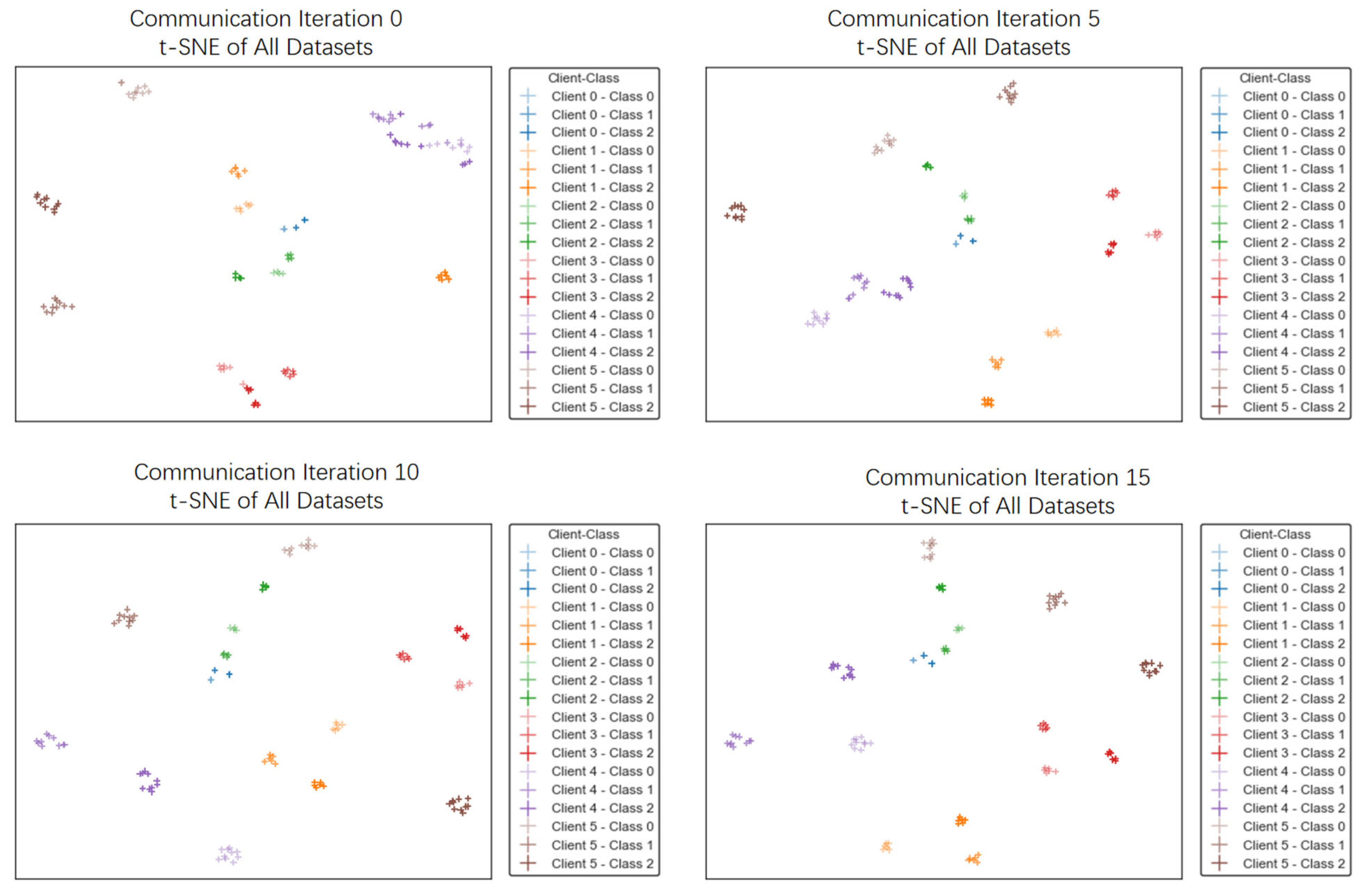

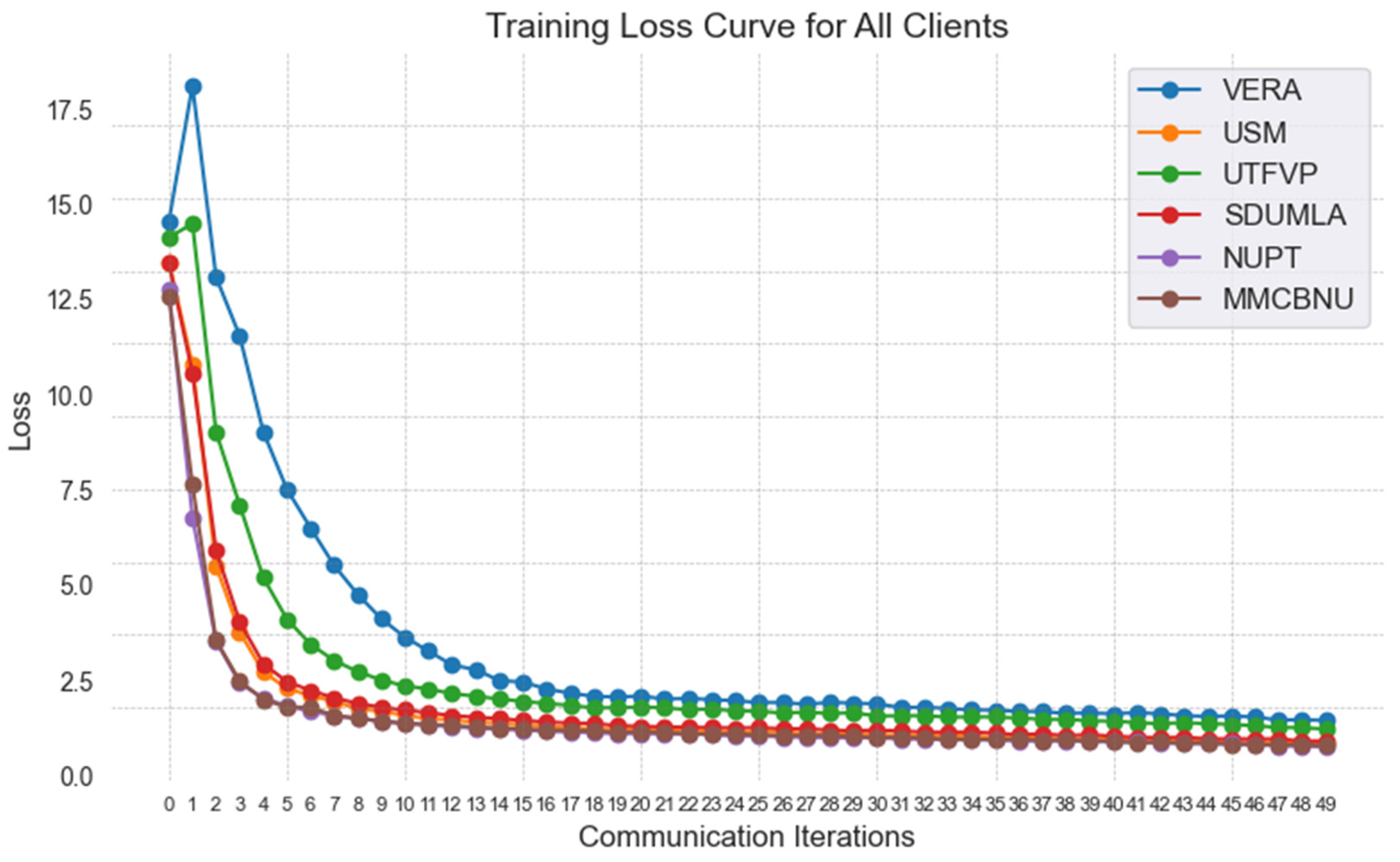

4.2. Experimental Results and Analysis

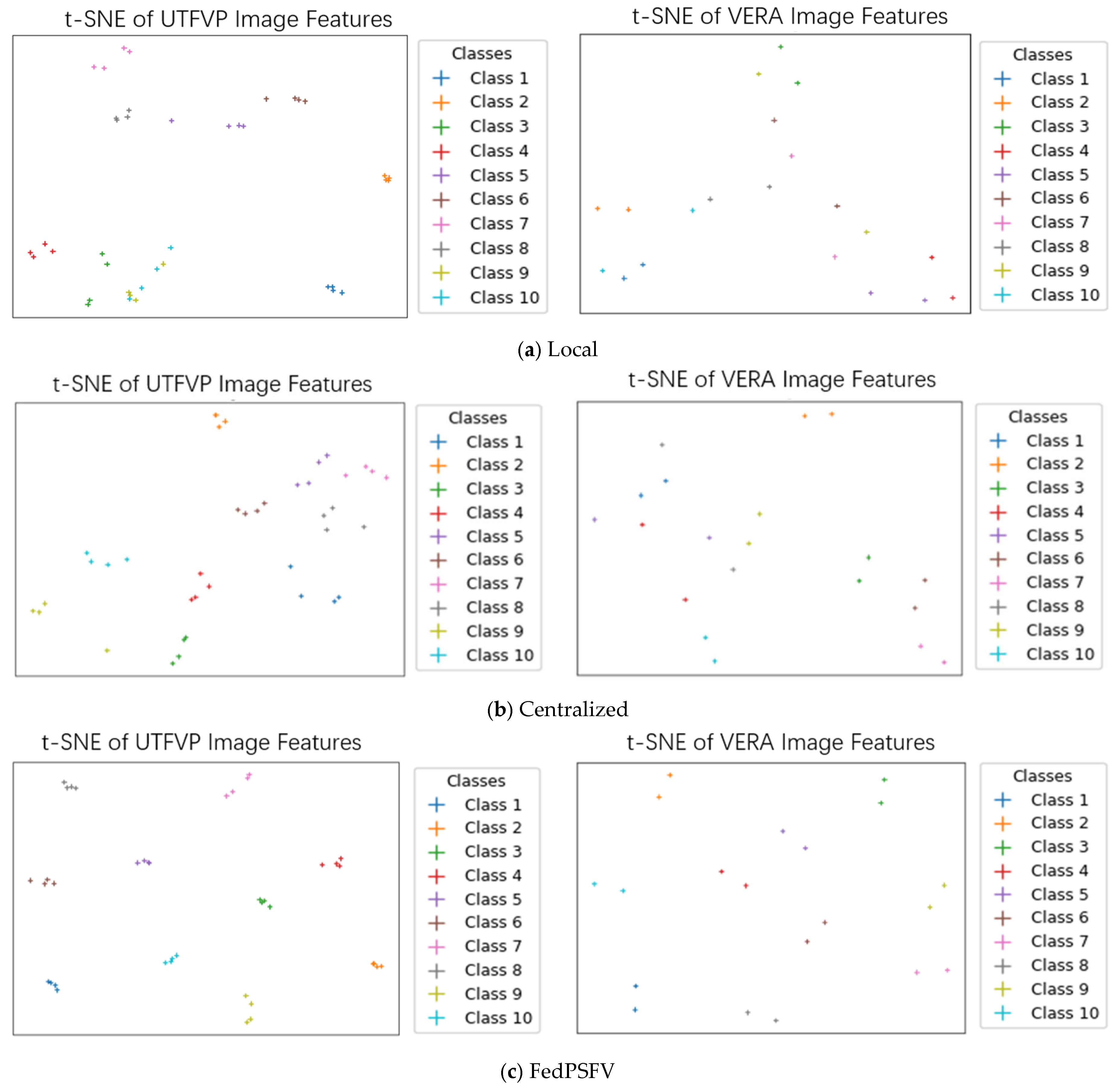

4.2.1. Comparison with Client-Independent Training and Client-Centralized Training Methods

4.2.2. Ablation Study

4.2.3. Comparison with Existing Methods

4.2.4. Communication Cost and Inference Efficiency

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Datasets | Local EER | Local TAR@FAR = 0.01 | Centralized EER | Centralized TAR@FAR = 0.01 | FedPSFV EER | FedPSFV TAR@FAR = 0.01 |
|---|---|---|---|---|---|---|
| SDUMLA | 5.15% | 89.35% | 3.52% | 91.84% | 0.65% | 99.79% |
| MMCBNU | 0.80% | 99.31% | 0.93% | 99.15% | 0.08% | 100.00% |
| USM | 5.21% | 81.31% | 4.64% | 95.85% | 0.40% | 99.69% |
| UTFVP | 9.57% | 80.71% | 4.77% | 91.39% | 0.53% | 99.75% |
| VERA | 6.04% | 70.00% | 2.60% | 90.12% | 1.06% | 99.29% |
| NUPT | 0.86% | 99.26% | 1.01% | 98.97% | 0.26% | 98.92% |
| Average | 4.61% | 86.66% | 2.91% | 94.55% | 0.50% | 99.57% |
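The two metrics reported in these tables, EER and TAR@FAR = 0.01, can be illustrated with a minimal sketch. The paper's matching protocol is not shown in this section, so the sketch assumes lists of similarity scores for genuine and impostor pairs in which a higher score means a closer match, and it only evaluates thresholds at observed score values. Function names and the toy scores are hypothetical.

```python
def far_tar(genuine, impostor, threshold):
    """False accept rate and true accept rate when accepting score >= threshold."""
    far = sum(s >= threshold for s in impostor) / len(impostor)
    tar = sum(s >= threshold for s in genuine) / len(genuine)
    return far, tar


def eer(genuine, impostor):
    """Equal error rate: error where FAR and FRR (1 - TAR) are closest."""
    best_gap, best_eer = None, None
    for t in sorted(set(genuine) | set(impostor)):
        far, tar = far_tar(genuine, impostor, t)
        frr = 1.0 - tar
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, best_eer = gap, (far + frr) / 2
    return best_eer


def tar_at_far(genuine, impostor, target_far=0.01):
    """Highest TAR among thresholds whose FAR does not exceed target_far."""
    best = 0.0
    for t in sorted(set(genuine) | set(impostor)):
        far, tar = far_tar(genuine, impostor, t)
        if far <= target_far:
            best = max(best, tar)
    return best


# Toy scores: one genuine pair (0.4) scores below one impostor pair (0.6).
genuine = [0.9, 0.8, 0.7, 0.4]
impostor = [0.6, 0.3, 0.2, 0.1]
print(eer(genuine, impostor))         # 0.25
print(tar_at_far(genuine, impostor))  # 0.75
```

A lower EER and a higher TAR at a fixed FAR both indicate better separation between genuine and impostor scores, which is how the columns above should be read.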

| Datasets | W/O DPS and DAA EER | W/O DPS and DAA TAR@FAR = 0.01 | W/O DAA EER | W/O DAA TAR@FAR = 0.01 | W/O DPS EER | W/O DPS TAR@FAR = 0.01 | FedPSFV EER | FedPSFV TAR@FAR = 0.01 |
|---|---|---|---|---|---|---|---|---|
| SDUMLA | 2.71% | 95.37% | 1.92% | 99.25% | 2.23% | 95.98% | 0.65% | 99.79% |
| MMCBNU | 0.08% | 100.00% | 0.39% | 99.89% | 0.72% | 98.94% | 0.08% | 100.00% |
| USM | 2.64% | 95.42% | 0.62% | 99.45% | 0.63% | 99.70% | 0.40% | 99.69% |
| UTFVP | 0.36% | 99.98% | 0.50% | 99.82% | 0.29% | 100.00% | 0.53% | 99.75% |
| VERA | 1.36% | 98.29% | 0.82% | 100.00% | 1.08% | 96.67% | 1.06% | 99.29% |
| NUPT | 0.33% | 98.08% | 1.62% | 97.85% | 0.38% | 99.84% | 0.26% | 98.92% |
| Average | 1.25% | 97.86% | 0.98% | 99.38% | 0.89% | 98.52% | 0.50% | 99.57% |

| Datasets | FedPSFV with EfficientNetV2-S EER | FedPSFV with EfficientNetV2-S TAR@FAR = 0.01 | FedPSFV with ConvNeXt-Tiny EER | FedPSFV with ConvNeXt-Tiny TAR@FAR = 0.01 | FedPSFV with MobileNetV3 Large EER | FedPSFV with MobileNetV3 Large TAR@FAR = 0.01 |
|---|---|---|---|---|---|---|
| SDUMLA | 3.60% | 91.69% | 1.34% | 98.61% | 0.65% | 99.79% |
| MMCBNU | 1.35% | 98.20% | 0.84% | 99.49% | 0.08% | 100.00% |
| USM | 0.47% | 99.83% | 0.31% | 99.90% | 0.40% | 99.69% |
| UTFVP | 5.38% | 84.32% | 0.48% | 99.60% | 0.53% | 99.75% |
| VERA | 13.57% | 60.00% | 6.62% | 85.52% | 1.06% | 99.29% |
| NUPT | 0.79% | 99.29% | 0.54% | 99.45% | 0.26% | 98.92% |
| Average | 4.19% | 88.89% | 1.69% | 97.10% | 0.50% | 99.57% |

| Datasets | Contrast 0.6 EER | Contrast 0.6 TAR@FAR = 0.01 | Contrast 0.8 EER | Contrast 0.8 TAR@FAR = 0.01 | Contrast 1.0 EER | Contrast 1.0 TAR@FAR = 0.01 |
|---|---|---|---|---|---|---|
| SDUMLA | 0.56% | 99.79% | 0.92% | 99.18% | 0.65% | 99.79% |
| MMCBNU | 0.04% | 99.98% | 0.28% | 99.95% | 0.08% | 100.00% |
| USM | 0.40% | 99.87% | 0.44% | 99.87% | 0.40% | 99.69% |
| UTFVP | 0.80% | 99.60% | 0.21% | 100.00% | 0.53% | 99.75% |
| VERA | 2.45% | 97.43% | 1.85% | 98.54% | 1.06% | 99.29% |
| NUPT | 3.14% | 98.84% | 5.45% | 95.12% | 0.26% | 98.92% |
| Average | 1.23% | 99.25% | 1.53% | 98.78% | 0.50% | 99.57% |

| Datasets | pFedSim EER | pFedSim TAR@FAR = 0.01 | MOON EER | MOON TAR@FAR = 0.01 | FedFV EER | FedFV TAR@FAR = 0.01 | DDP-FV EER | DDP-FV TAR@FAR = 0.01 | Ditto EER | Ditto TAR@FAR = 0.01 | FedAS EER | FedAS TAR@FAR = 0.01 | FedPSFV EER | FedPSFV TAR@FAR = 0.01 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| SDUMLA | 2.20% | 96.33% | 3.31% | 93.05% | 2.64% | 94.93% | 2.52% | 96.23% | 6.21% | 90.19% | 2.92% | 95.34% | 0.65% | 99.79% |
| MMCBNU | 0.45% | 99.78% | 0.79% | 99.42% | 0.95% | 99.04% | 0.61% | 99.63% | 0.94% | 99.12% | 1.08% | 98.82% | 0.08% | 100.00% |
| USM | 2.65% | 94.64% | 1.71% | 96.77% | 2.19% | 95.17% | 3.24% | 90.26% | 4.36% | 88.96% | 3.62% | 89.04% | 0.40% | 99.69% |
| UTFVP | 2.18% | 95.38% | 2.92% | 92.36% | 3.20% | 91.27% | 2.90% | 93.81% | 9.71% | 76.85% | 4.81% | 86.61% | 0.53% | 99.75% |
| VERA | 8.91% | 64.14% | 6.61% | 73.60% | 7.04% | 69.05% | 6.40% | 89.95% | 8.33% | 83.12% | 5.35% | 80.90% | 1.06% | 99.29% |
| NUPT | 1.09% | 98.79% | 0.83% | 99.17% | 0.89% | 99.19% | 0.92% | 99.12% | 1.13% | 98.75% | 0.92% | 99.19% | 0.26% | 98.92% |
| Average | 2.91% | 91.51% | 2.70% | 92.40% | 2.82% | 91.44% | 2.77% | 94.83% | 5.11% | 89.50% | 3.12% | 91.65% | 0.50% | 99.57% |

| Method | Upload | Download |
|---|---|---|
| pFedSim, MOON, FedFV, DDP-FV, Ditto, FedAS | 3.00 M | 3.00 M |
| FedPSFV | 3.00 M + 0.26 M | 3.00 M + 1.56 M |
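The per-round totals and the relative overhead implied by this table can be worked out directly. The sketch below uses only the figures in the table and keeps the unit "M" as given; the variable names are hypothetical.

```python
# Figures taken from the communication cost table (unit "M" as given there).
BASELINE_M = 3.00    # upload and download of the compared methods
PROTO_UP_M = 0.26    # extra upload of FedPSFV
PROTO_DOWN_M = 1.56  # extra download of FedPSFV

upload_total = BASELINE_M + PROTO_UP_M
download_total = BASELINE_M + PROTO_DOWN_M

# Overhead relative to the 3.00 M shared by all methods.
upload_overhead_pct = 100 * PROTO_UP_M / BASELINE_M
download_overhead_pct = 100 * PROTO_DOWN_M / BASELINE_M

print(f"upload:   {upload_total:.2f} M (+{upload_overhead_pct:.1f}%)")
print(f"download: {download_total:.2f} M (+{download_overhead_pct:.1f}%)")
# upload:   3.26 M (+8.7%)
# download: 4.56 M (+52.0%)
```

The extra cost is therefore concentrated on the download side, where the additional 1.56 M is six times the additional upload of 0.26 M.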

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xu, H.; Guo, Y.; Qu, Y.; Guo, J.; Ren, H. FedPSFV: Personalized Federated Learning via Prototype Sharing for Finger Vein Recognition. Sensors 2025, 25, 6790. https://doi.org/10.3390/s25216790
