Robust 3D Skeletal Joint Fall Detection in Occluded and Rotated Views Using Data Augmentation and Inference–Time Aggregation

Abstract

1. Introduction

- We introduce a “Synthetic Viewpoint Generation” data augmentation pipeline that efficiently generates realistic occluded and rotated skeletal sequences specifically for training fall detection models, significantly improving their stability and their robustness to occlusion and camera-perspective variation.

- We propose a simple but effective inference-time aggregation method that leverages multiple rotated views of a single input to achieve a consensus-based final fall prediction, further enhancing model performance.

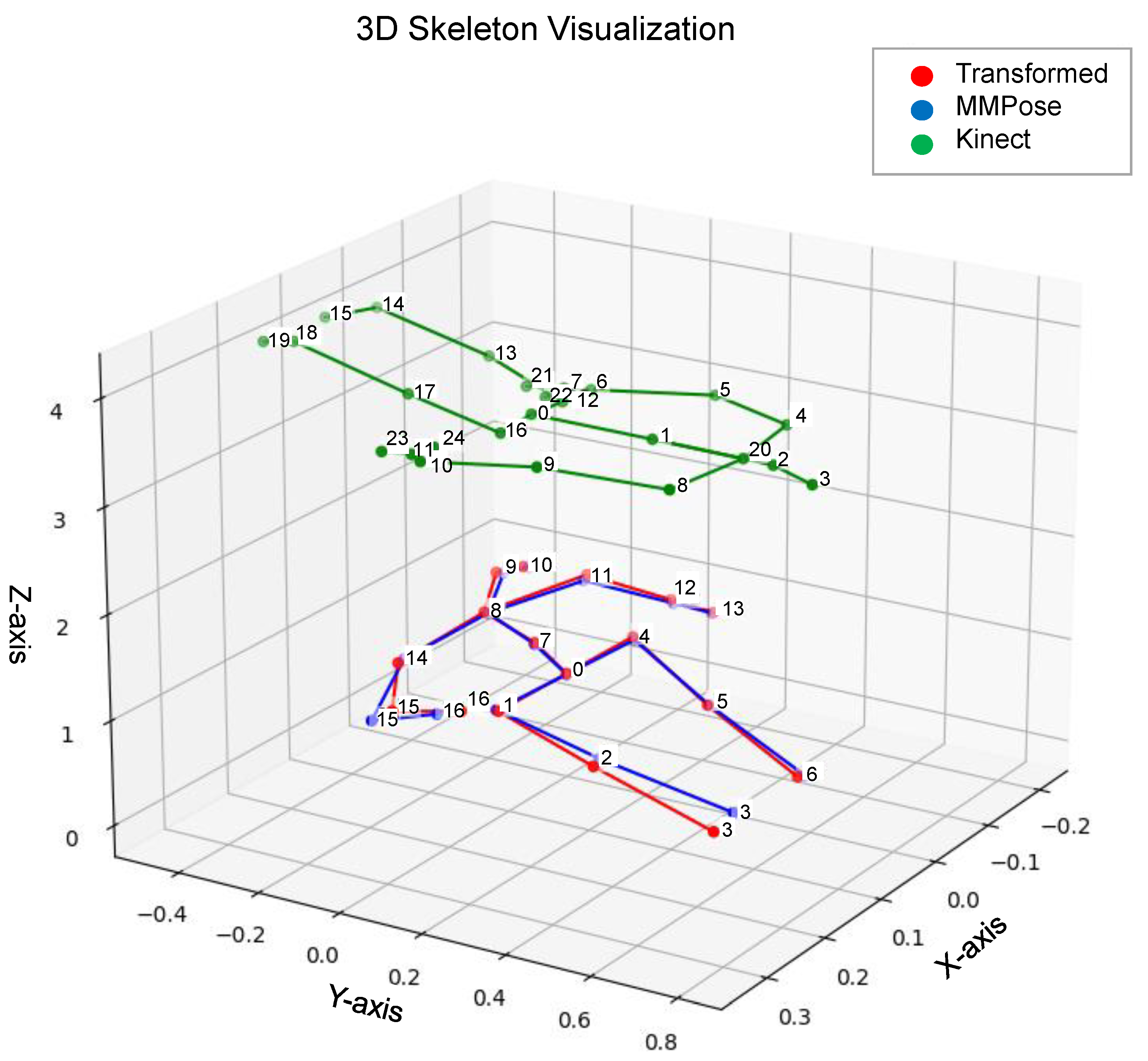

- We propose a per-joint affine transformation to convert the Kinect skeleton format to the MMPose format, enabling the model to be trained on standard Kinect datasets.

- We conduct a comprehensive ablation study to systematically quantify the impact of each of our contributions on the accuracy of fall detection.
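The inference-time aggregation contribution can be illustrated with a minimal sketch. It assumes, for illustration only, that the trained classifier is any callable mapping a (T, V, 3) skeleton sequence to a fall probability, that the extra views are produced by rotating about the vertical z-axis, and that "consensus" means a strict majority vote over thresholded per-view predictions. The function names, the angle set and the 0.5 threshold are hypothetical and are not the authors' settings.

```python
from typing import Callable, Sequence

import numpy as np


def rotation_z(theta_deg: float) -> np.ndarray:
    """3x3 rotation matrix about the vertical (z) axis."""
    t = np.deg2rad(theta_deg)
    c, s = np.cos(t), np.sin(t)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def aggregate_prediction(
    seq: np.ndarray,
    classify: Callable[[np.ndarray], float],
    angles_deg: Sequence[float] = (-30.0, 0.0, 30.0),  # hypothetical view set
    threshold: float = 0.5,  # hypothetical decision threshold
) -> tuple[int, float]:
    """Majority vote over rotated copies of one (T, V, 3) skeleton sequence.

    Returns (final_label, fraction_of_views_voting_fall)."""
    votes = []
    for angle in angles_deg:
        R = rotation_z(angle)
        rotated = seq @ R.T  # applies x' = R x to every joint of every frame
        votes.append(classify(rotated) >= threshold)
    fall_fraction = float(np.mean(votes))
    label = int(fall_fraction > 0.5)  # strict majority; ties count as "no fall"
    return label, fall_fraction
```

Averaging the per-view probabilities before thresholding would be an equally plausible consensus rule; voting is shown here only because it makes the per-view agreement explicit.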

2. Related Work

3. Methodology

3.1. Dataset Preparation

3.1.1. Le2i Dataset

- 1.

- Baseline Dataset:This dataset was established by extracting the initial 3D joint coordinates from the RGB videos in both the training and testing subsets, utilizing the MMPose framework, as detailed in Section 3.2. This corpus served as the essential performance baseline for subsequent comparative analysis.

- 2.

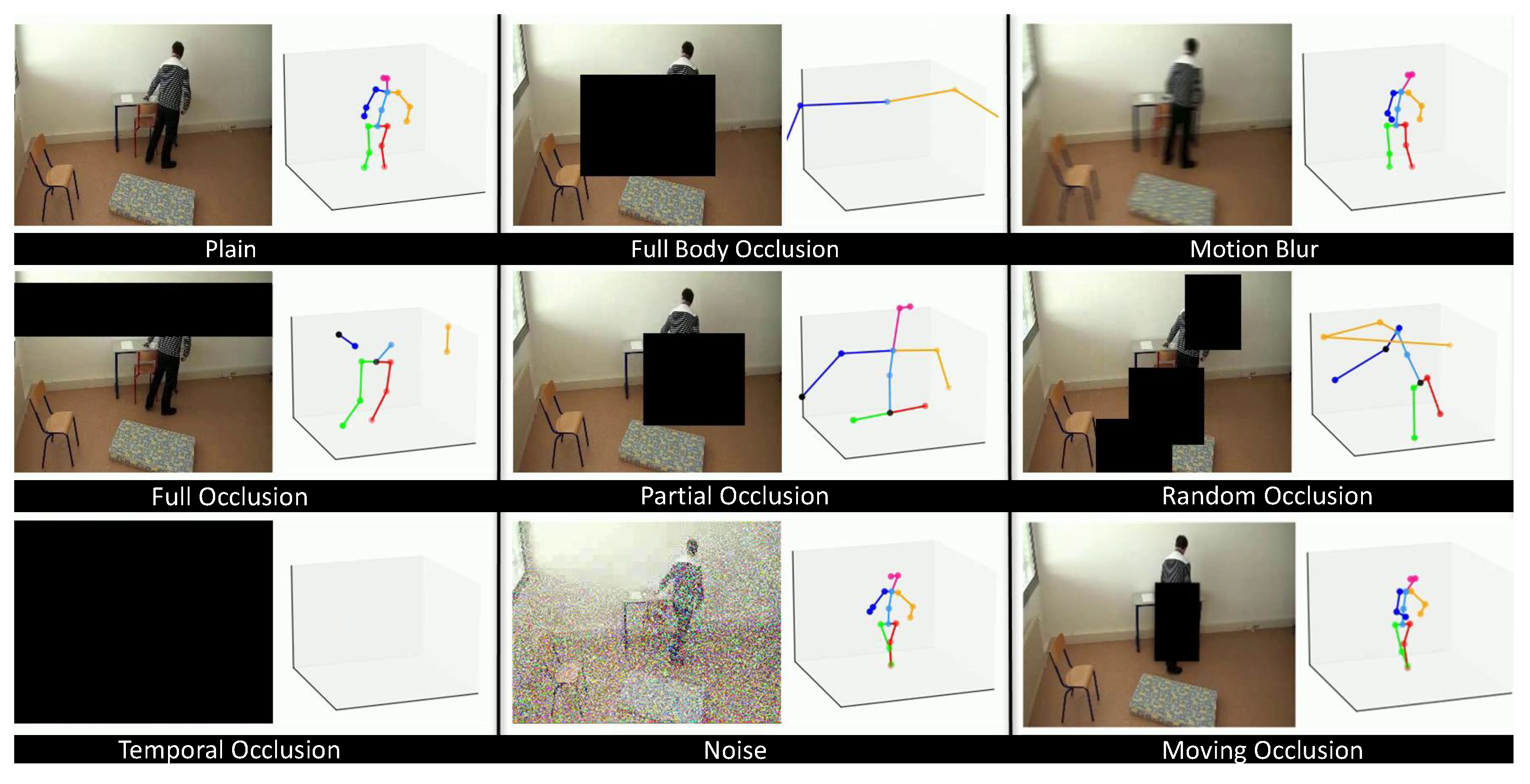

- Occluded Dataset: This dataset was derived from the baseline by applying various types of random occlusions as illustrated in Figure 1. This method simulates realistic scenarios where a subject might be partially obscured before or during a fall. We utilized distinct occlusion modalities, including random black boxes over sequential frames and temporal occlusions, where all frames are rendered black for a short duration. From a single source video, 10 unique occluded variations were generated. The joint predictions were subsequently re-extracted from these altered frames, thereby yielding intentionally degraded coordinate data for robustness training. The resulting corpus was subjected to a fixed, random split for training and testing evaluation.

- 3.

- Rotated Dataset: This dataset was generated to enhance the model’s robustness against camera viewpoint variations. This was achieved through Synthetic Viewpoint Generation, a technique that programmatically augments each skeletal sequence by creating multiple synthetic views via 3D rotations.Let a skeleton sequence be represented as:where denotes the 3D coordinates of joint v at frame t, with frames and joints.We applied two-axis rotations to each sequence: a vertical (z-axis) rotation and a horizontal (x-axis) tilt. The rotation angles were defined as:For each pair of angles , we constructed a combined rotation matrix:whereEach joint position was then transformed as:This procedure generated:synthetic viewpoints per original skeleton sequence, thereby enriching the dataset and enhancing the model’s ability to generalize across different camera perspectives.A significant challenge we faced was the severe class imbalance within the dataset, as the number of “fall” instances was disproportionately large compared to actual “no fall” events. To address this, we applied a data augmentation strategy to the minority class. By generating additional data for “no fall” events, we created a more balanced training set, which was critical for building a robust model that performs accurately on both fall and non-fall scenarios.
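The rotation step of the Rotated Dataset can be sketched in a few lines of NumPy. This is a minimal sketch, assuming the combined matrix is composed as R = R_x(φ) R_z(θ) (the multiplication order is our assumption), that coordinates are rotated about the origin of the pose estimator's coordinate frame, and that the angle sets passed in are illustrative; the helper names `rot_z`, `rot_x` and `synthetic_views` are hypothetical.

```python
import itertools

import numpy as np


def rot_z(theta: float) -> np.ndarray:
    """Rotation about the vertical z-axis (angle in radians)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def rot_x(phi: float) -> np.ndarray:
    """Horizontal tilt about the x-axis (angle in radians)."""
    c, s = np.cos(phi), np.sin(phi)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def synthetic_views(seq, thetas_deg, phis_deg) -> np.ndarray:
    """Rotate a (T, V, 3) sequence once per (theta, phi) pair.

    Returns an array of shape (|Theta| * |Phi|, T, V, 3)."""
    seq = np.asarray(seq, dtype=float)
    views = []
    for theta, phi in itertools.product(thetas_deg, phis_deg):
        R = rot_x(np.deg2rad(phi)) @ rot_z(np.deg2rad(theta))  # R = R_x(phi) R_z(theta)
        views.append(seq @ R.T)  # x'_{t,v} = R x_{t,v} for every frame and joint
    return np.stack(views)
```

Because every view is a pure rotation, joint distances from the origin are preserved. If the skeletons are not root-centred, the rotation also moves the subject within the scene, so subtracting a root joint before rotating is a common alternative.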

3.1.2. NTU RGB+D Dataset

3.2. Skeletal Feature Extraction

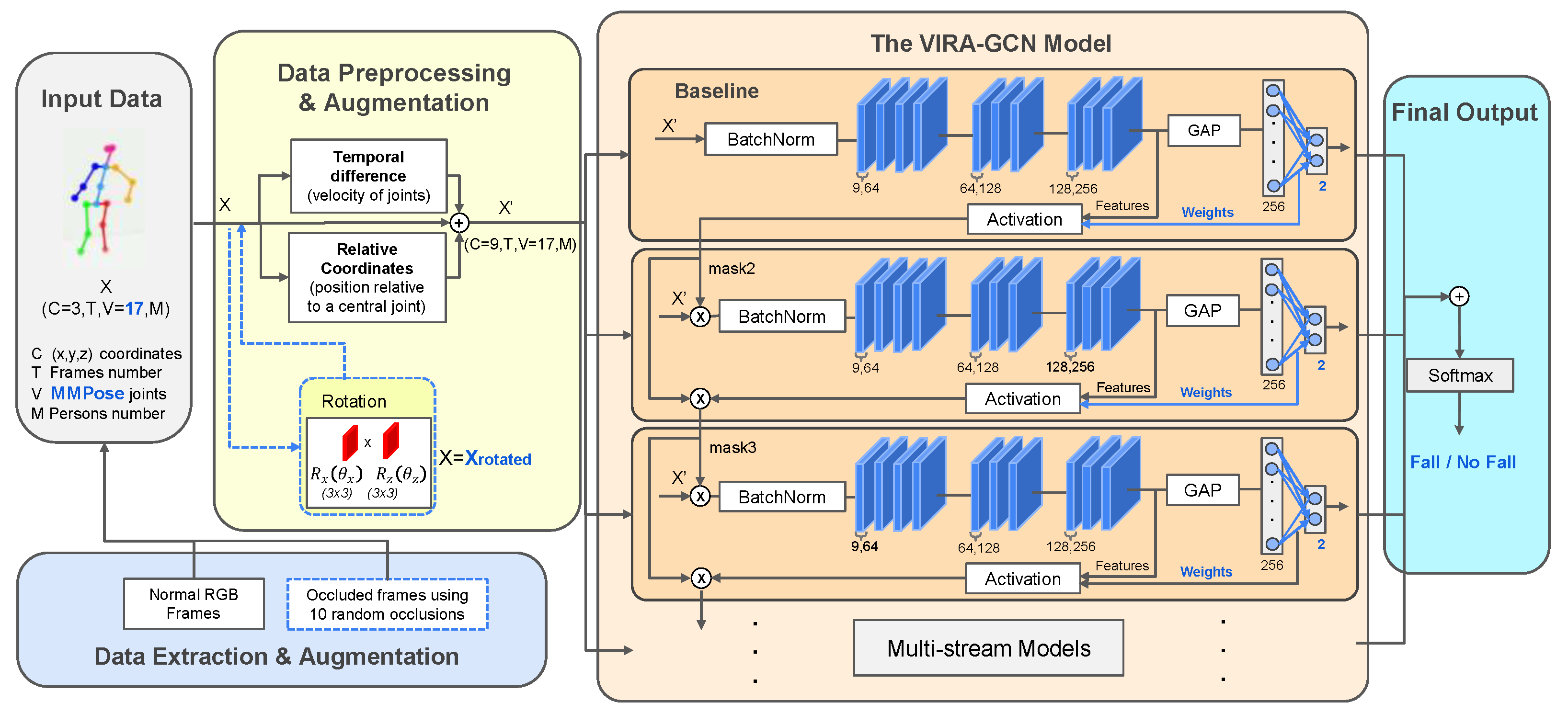

3.3. Proposed Model Architecture

- Input Dimensionality Reduction: We adapted the input layer to process a more concise 17-joint skeleton, which aligns with modern pose estimation frameworks (e.g., MMPose). The original model was designed for the larger 25-joint skeleton from Kinect.

- Realistic Data Augmentation for Robustness: The original study simulated occlusion artificially, an approach that is fundamentally limited in how well it represents real-world operating conditions. That method directly altered the joint coordinates, which may not reflect what a pose estimator actually outputs for an occluded frame. To overcome this limitation, we implemented a preprocessing pipeline with two forms of realistic data augmentation: Realistic Occlusion, which simulates partial visibility of body parts due to environmental factors, and Viewpoint Rotation, which simulates the diversity of camera perspectives encountered in naturalistic settings. As detailed in Section 3.1.1, this augmentation is intended to let the model identify falls accurately across a representative range of visual conditions, including when full-body visibility is compromised.

- Task Re-purposing for Binary Classification: We adapted the base RA-GCNv2 architecture from general action recognition to a binary classification task. This involved re-purposing the final layers and the loss to discriminate between fall and non-fall events. The modification focuses the model’s learning on the kinematic indicators that characterize a fall, such as rapid changes in vertical displacement or a sudden collapse, thereby optimizing its sensitivity for the target safety application.
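The Realistic Occlusion augmentation described above operates on video frames rather than on joint coordinates. The following is a minimal sketch of that idea, assuming videos are stored as (T, H, W, C) uint8 arrays and using one random black box over a run of consecutive frames plus one temporal blackout per variant; the box size range, run lengths and function names are hypothetical. Pose re-extraction with MMPose on each variant is not shown.

```python
import numpy as np


def occlude_video(frames: np.ndarray, rng: np.random.Generator,
                  box_frac=(0.2, 0.4), box_len=15, blackout_len=5) -> np.ndarray:
    """Return an occluded copy of a (T, H, W, C) uint8 video.

    Applies (1) one random black box held over box_len consecutive frames and
    (2) one temporal blackout in which whole frames are set to black.
    All parameter values are illustrative, not the authors' settings."""
    out = frames.copy()
    T, H, W = frames.shape[:3]

    # (1) Spatial occlusion: a black rectangle persisting over consecutive frames.
    bh = int(H * rng.uniform(*box_frac))
    bw = int(W * rng.uniform(*box_frac))
    y0 = rng.integers(0, H - bh + 1)
    x0 = rng.integers(0, W - bw + 1)
    t0 = rng.integers(0, max(T - box_len, 0) + 1)
    out[t0:t0 + box_len, y0:y0 + bh, x0:x0 + bw] = 0

    # (2) Temporal occlusion: entire frames blacked out for a short duration.
    s0 = rng.integers(0, max(T - blackout_len, 0) + 1)
    out[s0:s0 + blackout_len] = 0
    return out


def make_variants(frames: np.ndarray, n_variants: int = 10, seed: int = 0) -> list:
    """Generate n independently occluded copies of one source video."""
    rng = np.random.default_rng(seed)
    return [occlude_video(frames, rng) for _ in range(n_variants)]
```

Re-running the pose estimator on these frames, rather than zeroing joints directly, is what lets the degraded skeletons reflect how a real estimator behaves under occlusion.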

3.3.1. Training Pipeline Optimization

3.3.2. Inference-Time Aggregation

3.4. Kinect to MMPose Data Transformation

4. Experiments and Results

4.1. Experimental Design

4.1.1. Evaluation Datasets

- Plain (Le2i): This dataset comprises the raw, unaugmented 3D skeleton joint sequences extracted directly from the Le2i video corpus using MMPose. Evaluation on this set establishes the baseline performance of the models on clean data from the source domain.

- Occluded (Le2i): This set, also in the MMPose format, consists of skeleton joints derived from Le2i videos that were intentionally subjected to realistic visual occlusions. Performance here quantifies the model’s robustness to a common real-world data corruption: partial visibility of the subject.

- Rotated (Le2i): This set is composed of 3D skeleton sequences from the Le2i test set that were synthetically transformed via viewpoint rotations. Adhering to the MMPose format, this evaluation measures the model’s invariance to synthetic changes in camera perspective within the source domain.

- Mntuplain (NTU MMPose): This dataset features 3D skeleton joints extracted from the original NTU RGB+D videos using the MMPose framework. Evaluation on this set provides a direct measure of the model’s generalization performance when confronted with the standard skeleton format of the target domain.

- Kntuplain (NTU Kinect Transformed): This critical cross-domain evaluation set comprises 3D skeleton joints transformed from the original NTU RGB+D Kinect sensor data into the MMPose format using the proposed per-joint affine transformation. Performance on this set assesses how well the transformation aligns the NTU Kinect data with the model’s training space, and thus the model’s robustness to sensor heterogeneity.
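The per-joint affine transformation used to produce Kntuplain can be illustrated as one least-squares fit per joint, y_j = A_j x_j + b_j. This is a minimal sketch under stated assumptions: paired Kinect and MMPose samples of the same frames are available (NTU RGB+D provides both RGB video and Kinect skeletons), the 25 Kinect joints have already been reduced to the 17 MMPose joints by a correspondence mapping that is not shown, and ordinary least squares is our choice of estimator. The function names are hypothetical.

```python
import numpy as np


def fit_per_joint_affine(kinect: np.ndarray, mmpose: np.ndarray) -> np.ndarray:
    """Fit one 3D affine map per joint by least squares.

    kinect, mmpose: paired arrays of shape (N, J, 3) with matching joint order.
    Returns params of shape (J, 4, 3): rows 0-2 hold A_j^T, row 3 holds b_j."""
    N, J, _ = kinect.shape
    params = np.empty((J, 4, 3))
    ones = np.ones((N, 1))
    for j in range(J):
        X = np.hstack([kinect[:, j, :], ones])  # (N, 4) homogeneous source coordinates
        solution, *_ = np.linalg.lstsq(X, mmpose[:, j, :], rcond=None)
        params[j] = solution
    return params


def apply_per_joint_affine(kinect: np.ndarray, params: np.ndarray) -> np.ndarray:
    """Map (..., J, 3) Kinect joints into MMPose space: y_j = A_j x_j + b_j."""
    X = np.concatenate([kinect, np.ones(kinect.shape[:-1] + (1,))], axis=-1)
    return np.einsum("...jk,jkl->...jl", X, params)
```

Fitting each joint independently lets the map absorb joint-specific offsets, such as a Kinect joint sitting at a slightly different anatomical location than its MMPose counterpart, which a single global transform could not capture.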

4.1.2. Training Model Variants

- (Model Le2i-Plain): This preliminary model was trained exclusively on the Plain subset of the Le2i skeleton data. It serves as a fundamental reference point, establishing the performance achievable without the introduction of any data augmentation.

- (Model Le2i-Occluded): This second preliminary model expanded the training set to include both the Plain and the Occluded sequences from the Le2i dataset. Its purpose was to quantify the incremental robustness gained solely from simulating occlusions.

- (Model Le2i-FullAug): This primary model was trained exclusively on a fully augmented version of the Le2i dataset, combining the raw skeleton data (Plain), sequences subjected to realistic visual occlusions (Occluded), and sequences augmented with synthetic viewpoint rotations (Rotated). Its purpose was to establish the maximum performance and robustness achievable solely within the well-controlled Le2i domain, and to serve as a baseline for quantifying the generalization failure, or domain shift, when evaluated on the distinct NTU RGB+D dataset.

- (Model Le2i-NTU-DomainAug): This model was introduced to address the identified challenge of cross-dataset generalization. It expands the training regime with augmented data from both the Le2i and NTU RGB+D domains: it retains the full Le2i augmented training set and adds the Kinect-transformed NTU data (Kntuplain) together with its synthetic viewpoint rotations (Rotated Kntuplain). By explicitly exposing the model to the characteristics of both datasets, this variant aims to generalize robustly across heterogeneous data sources and to close the performance gap observed in cross-domain evaluation.

4.2. Transformation Results

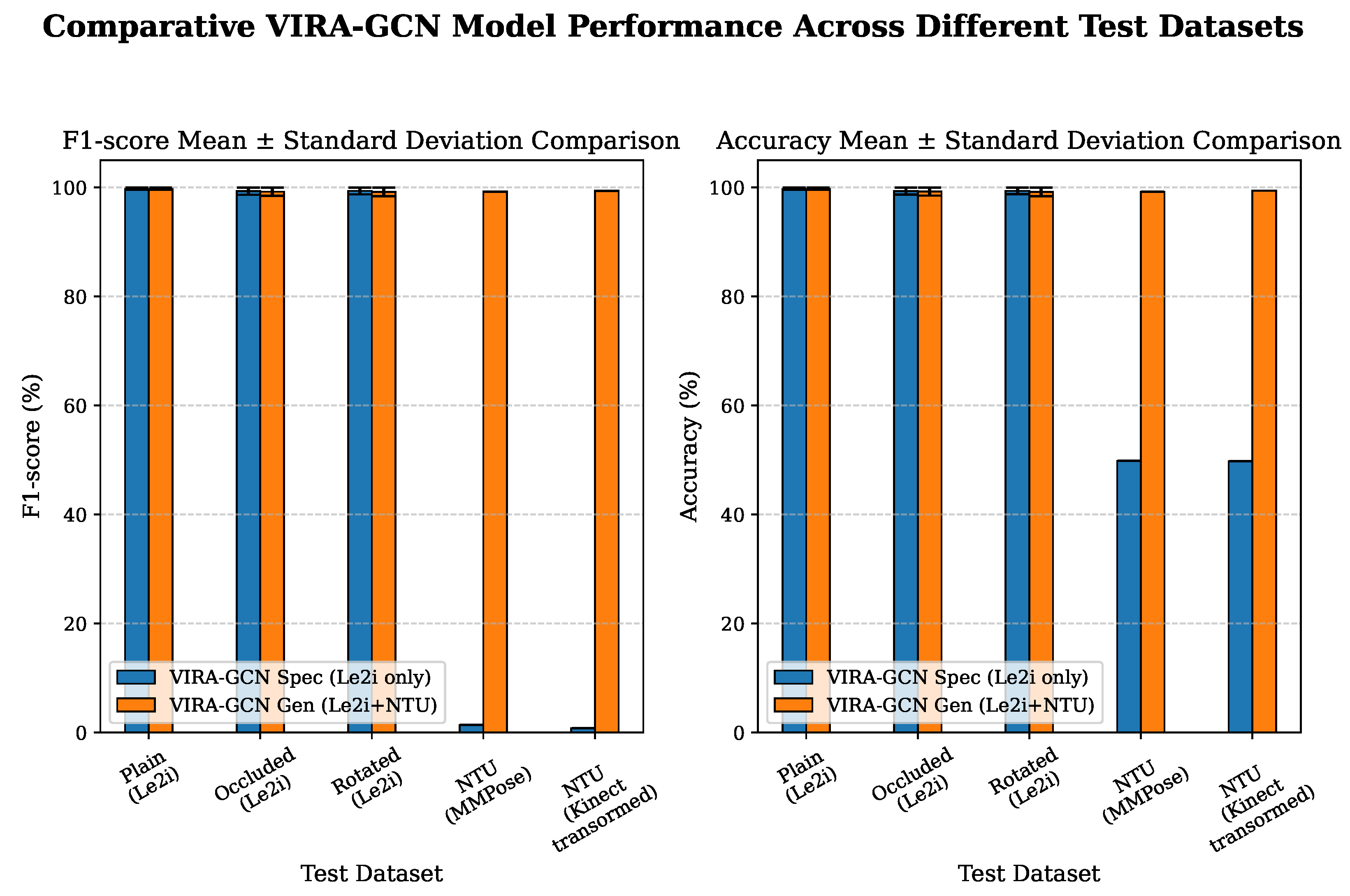

4.3. Comparative Model Performance

4.3.1. Initial Augmentation Impact (Le2i-Plain Dataset)

4.3.2. Performance on Le2i Domain Datasets

4.3.3. Performance on NTU Domain Datasets

- Mntuplain (NTU MMPose): F1-score of approximately 99.25% and accuracy of approximately 99.22%.

- Kntuplain (NTU Kinect Transformed): F1-score of approximately 99.40% and accuracy of approximately 99.42%.
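The F1-score, accuracy, sensitivity and specificity reported in this section and in the result tables follow the standard confusion-matrix definitions. The sketch below computes them from binary labels, assuming that “fall” is the positive class (label 1); the function name is hypothetical and the source does not describe its metric code.

```python
def binary_metrics(y_true, y_pred) -> dict:
    """Accuracy, sensitivity, specificity, precision and F1 (1 = fall)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    def ratio(num, den):
        return num / den if den else 0.0  # guard against empty classes

    sensitivity = ratio(tp, tp + fn)  # recall on the fall class
    specificity = ratio(tn, tn + fp)  # recall on the no-fall class
    precision = ratio(tp, tp + fp)
    accuracy = ratio(tp + tn, len(pairs))
    f1 = ratio(2 * precision * sensitivity, precision + sensitivity)
    return {"accuracy": accuracy, "sensitivity": sensitivity,
            "specificity": specificity, "precision": precision, "f1": f1}
```

For example, with 4 falls and 6 non-falls where one fall is missed and one non-fall is flagged, accuracy is 0.8, sensitivity and precision are both 0.75 (so F1 is 0.75), and specificity is 5/6.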

4.4. Computational Analysis and Hardware Configuration

5. Discussion

5.1. The Critical Challenge of Domain Shift

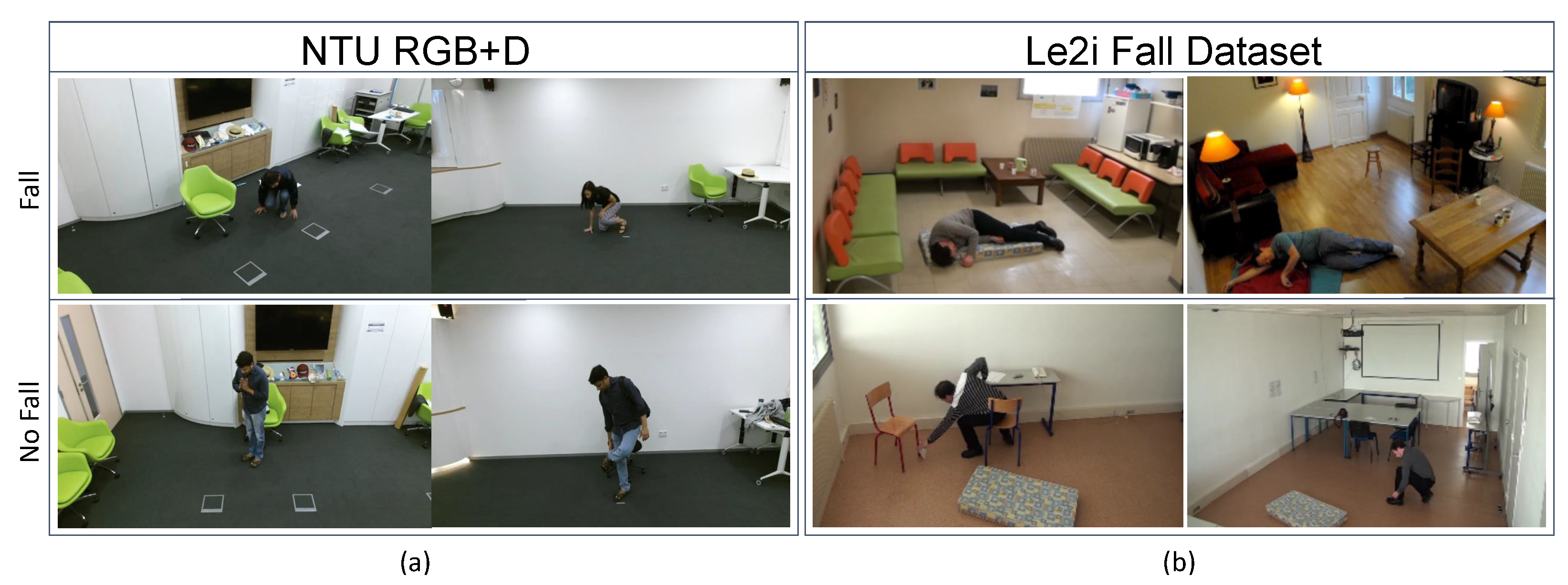

- Fall Action Heterogeneity: As qualitatively illustrated in Figure 6, the NTU dataset generally features slower, more controlled descents in which subjects often remain centered. Conversely, the Le2i dataset captures more realistic and complex scenarios, including falls initiated during activities such as walking, after which subjects remain prone for the rest of the sequence. The overfit to the complex dynamics of Le2i and could not reliably recognize the subtler, stylized falls of the NTU domain.

Figure 6. Comparative analysis of fall and non-fall actions in the (a) NTU RGB+D and (b) Le2i datasets. The Le2i dataset presents greater complexity by including non-fall actions that are visually similar to falls in NTU RGB+D, posing a significant challenge for detection models. The NTU dataset, by contrast, contains more structured and controlled fall events.

- Data Transformation Efficacy: Although our per-joint affine transformation established a consistent mapping from the Kinect to the MMPose format (Figure 4), the persistently poor cross-domain performance of indicated that the failure was attributable to intrinsic domain differences in fall dynamics rather than to mere data-format misalignment. The transformation was necessary, but not sufficient, to bridge the domain gap.

- Implication for Generalization: These results suggest that standard models are prone to overfitting to specific recording conditions and sensor modalities. The success of the strategy described in Section 5.2 indicates that closing this gap requires explicit exposure to the target domain.

5.2. Overcoming the Generalization Deficit

- Domain Adaptation through Augmentation: Including the augmented NTU data taught the model to recognize the specific fall characteristics and environmental conditions of the NTU dataset (e.g., slower falls and post-fall recovery actions). This indicates that the performance degradation was not an inherent flaw of the model architecture (VIRA-GCN) but a failure to generalize caused by the scarcity of domain-specific features in the original training data.

- Efficacy of the Transformation Pipeline: The fact that performed equally well on both the MMPose-extracted NTU data (Mntuplain) and the Kinect-transformed NTU data (Kntuplain) provides strong validation of our per-joint affine transformation method. It confirms that the transformation produces a consistently aligned skeleton format, allowing data from different sources (Kinect and MMPose) to be combined for robust cross-domain training.

5.3. State-of-the-Art Performance

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. The United Nations Department of Economic and Social Affairs (UN DESA). World Population Ageing 2020 Highlights: Living Arrangements of Older Persons; (ST/ESA/SER.A/451); United Nations: New York, NY, USA, 2020.
2. WHO. WHO Global Report on Falls Prevention in Older Age; WHO: Geneva, Switzerland, 2021.
3. Nazar, M.; Ahsan, A.; Khan, M.I. A review of vision-based fall detection systems for elderly people: A performance analysis. J. King Saud Univ. Comput. Inf. Sci. 2020, 32, 808–819.
4. Shaha, I.; Islam, M.; Hasan, S.; Zahan, M.L.N.; Islam, T.; Kamal, A.M.H.; Chowdhury, M.M.I.; Hasan, M.A.; Khan, F.M.H. Exploring Human Pose Estimation and the Usage of Synthetic Data for Elderly Fall Detection in Real-World Surveillance. IEEE Access 2022, 10, 94249–94261.
5. Zhang, A.; Feng, X.; Su, X.; Li, F. Robust Self-Adaptation Fall-Detection System Based on Camera Height. Sensors 2019, 19, 2390.
6. Sannino, M.M.; De Masi, L.; D’Angelo, V.; Sannino, L.; D’Agnese, M.; Sannino, F. Fall Detection with Multiple Cameras: An Occlusion-Resistant Method Based on 3-D Silhouette Vertical Distribution. IEEE J. Biomed. Health Inform. 2020, 24, 262–273.
7. Wang, J.; Zhang, Z.; Li, B.; Lee, S.; Sherratt, R.S. An enhanced fall detection system for elderly person monitoring using consumer home networks. IEEE Trans. Consum. Electron. 2014, 60, 23–29.
8. Desai, K.; Mane, P.; Dsilva, M.; Zare, A.; Shingala, P.; Ambawade, D. A novel machine learning based wearable belt for fall detection. In Proceedings of the 2020 IEEE International Conference on Computing, Power and Communication Technologies (GUCON), Online, 2–4 October 2020; pp. 502–505.
9. Chelli, A.; Pätzold, M. A Machine Learning Approach for Fall Detection and Daily Living Activity Recognition. IEEE Access 2019, 7, 38670–38687.
10. Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 150–166.
11. MMPose Contributors. MMPose: An OpenMMLab Pose Estimation Toolbox and Benchmark. 2020. Available online: https://github.com/open-mmlab/mmpose (accessed on 19 October 2025).
12. Yao, L.; Yang, W.; Huang, W. An improved feature-based method for fall detection. Teh. Vjesn. 2019, 26, 1363–1368.
13. Bian, Z.-P.; Hou, J.; Chau, L.-P.; Magnenat-Thalmann, N. Fall Detection Based on Body Part Tracking Using a Depth Camera. IEEE J. Biomed. Health Inform. 2015, 19, 430–439.
14. Debard, G.; Karsmakers, P.; Deschodt, M.; Vlaeyen, E.; Bergh, J.; Dejaeger, E.; Milisen, K.; Goedemé, T.; Tuytelaars, T.; Vanrumste, B. Camera Based Fall Detection Using Multiple Features Validated with Real Life Video. In Proceedings of the 7th International Conference on Intelligent Environments, Nottingham, UK, 25–28 July 2011; Volume 10.
15. Lu, N.; Wu, Y.; Feng, L.; Song, J. Deep Learning for Fall Detection: Three-Dimensional CNN Combined with LSTM on Video Kinematic Data. IEEE J. Biomed. Health Inform. 2019, 23, 314–323.
16. Tian, Y.; Lee, G.-H.; He, H.; Hsu, C.-Y.; Katabi, D. RF-based fall monitoring using convolutional neural networks. In Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies, Singapore, 8–12 October 2018; Volume 2, pp. 1–24.
17. Chen, Y.; Li, W.; Wang, L.; Hu, J.; Ye, M. Vision-based fall event detection in complex background using attention guided bi-directional LSTM. IEEE Access 2020, 8, 161337–161348.
18. Dutt, M.; Gupta, A.; Goodwin, M.; Omlin, C.W. An interpretable modular deep learning framework for video-based fall detection. Appl. Sci. 2024, 14, 4722.
19. Tsai, T.H.; Hsu, C.W. Implementation of fall detection system based on 3D skeleton for deep learning technique. IEEE Access 2019, 7, 153049–153059.
20. Keskes, O.; Noumeir, R. Vision-Based Fall Detection Using ST-GCN. IEEE Access 2021, 9, 28224–28236.
21. Egawa, R.; Miah, A.S.M.; Hirooka, K.; Tomioka, Y.; Shin, J. Dynamic fall detection using graph-based spatial temporal convolution and attention network. Electronics 2023, 12, 3234.
22. Shin, J.; Miah, A.S.M.; Egawa, R.; Hirooka, K.; Hasan, M.A.M.; Tomioka, Y.; Hwang, Y.S. Fall recognition using a three stream spatio temporal GCN model with adaptive feature aggregation. Sci. Rep. 2025, 15, 10635.
23. Kepski, M.; Kwolek, B. A New Multimodal Approach to Fall Detection. PLoS ONE 2014, 9, e92886.
24. Shahroudy, A.; Liu, J.; Ng, T.T.; Wang, G. NTU RGB+D: A Large Scale Dataset for 3D Human Activity Analysis. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1010–1019.
25. Charfi, I.; Mitéran, J.; Dubois, J.; Atri, M.; Tourki, R. Optimised spatio-temporal descriptors for real-time fall detection: Comparison of SVM and Adaboost based classification. J. Electron. Imaging 2013, 22, 043009.
26. Kepski, M.; Kwolek, B. A New Fall Detection Dataset and the Comparison of Fall Detection Methods. In Proceedings of the 2019 International Conference on Computer Vision and Graphics, Warsaw, Poland, 16–18 September 2019; Springer: Cham, Switzerland, 2019; pp. 11–22.
27. Ma, X.; Wang, H.; Xue, B.; Zhou, M.; Ji, B.; Li, Y. Depth-based human fall detection via shape features and improved extreme learning machine. IEEE J. Biomed. Health Inform. 2014, 18, 1915–1922.
28. Soto-Valero, L.G.; Medina-Merodio, M. SisFall: A Fall and Movement Dataset. Sensors 2017, 17, 198.
29. TST Fall Detection Dataset v2. IEEE Dataport, 2016. Available online: https://ieee-dataport.org/documents/tst-fall-detection-dataset-v2 (accessed on 19 October 2025).
30. Yu, X.; Jang, J.; Xiong, S. KFall: A Comprehensive Motion Dataset to Detect Pre-impact Fall for the Elderly Based on Wearable Inertial Sensors. Front. Aging Neurosci. 2021, 13, 692865.
31. Vavoulas, G.; Chatzaki, C.; Malliotakis, T.; Pediaditis, M. The MobiAct Dataset: Recognition of Activities of Daily Living using Smartphones. In Proceedings of the 2nd International Conference on Information and Communication Technologies for Ageing Well and e-Health, Rome, Italy, 21–22 April 2016; pp. 143–151.
32. Saleh, M.; Abbas, M.; Le Jeannès, R.B. FallAllD: An Open Dataset of Human Falls and Activities of Daily Living for Classical and Deep Learning Applications. IEEE Sens. J. 2021, 21, 5110–5120.
33. Klenk, J.; Schwickert, L.; Jäkel, A.; Krenn, M.; Bauer, J.M.; Becker, C. The FARSEEING real-world fall repository: A large-scale collaborative database to collect and share sensor signals from real-world falls. IEEE Trans. Biomed. Eng. 2016, 63, 1339–1346.
34. Fang, H.; Li, J.; Tang, H.; Xu, C.; Zhu, H.; Xiu, Y.; Li, Y.-L.; Lu, C. AlphaPose: Whole-Body Regional Multi-Person Pose Estimation and Tracking in Real-Time. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 7157–7173.
35. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.-L.; Yong, M.G.; Lee, J.; et al. MediaPipe: A Framework for Building Perception Pipelines. arXiv 2019, arXiv:1906.08172.
36. Song, Y.-F.; Zhang, Z.; Shan, C.; Wang, L. Richly Activated Graph Convolutional Network for Robust Skeleton-based Action Recognition. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 1915–1925.
37. Li, W.; Wang, Y.; Wang, Y.; Li, B.; Zhang, H.; Wu, Y. Lightweight Spatio-Temporal Graph Convolutional Networks for Skeleton-based Action Recognition. In Proceedings of the 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Virtual, 6–12 June 2021; pp. 1605–1609.
38. Alaoui, A.; El-Yaagoubi, M.; Chehri, A. V2V-PoseNet: A View-Invariant Pose Estimation Network for Action Recognition. In Proceedings of the International Conference on Advanced Communication Technologies and Information Systems (ICACTIS), Coimbatore, India, 19–20 March 2021; pp. 1–6.
39. Zi, Q.; Liu, Y.; Zhang, S.; Yang, T. YOLOv7-DeepSORT: An Improved Human Fall Detection Method. Sensors 2023, 23, 789.
40. Li, H.; Wei, S.; Xu, Y.; Yang, J.; Zhang, Z. A Novel Fall Detection Method Based on AlphaPose and Spatio-Temporal Graph Convolutional Networks. Sensors 2023, 23, 5565.
41. Alanazi, S.; Alshahrani, S.; Alqahtani, A.; Alghamdi, A. 3D Multi-Stream CNNs for Real-Time Fall Detection. IEEE Access 2023, 11, 15993–16004.
42. Shin, J.; Kim, S.; Kim, D. Three-Stream Spatio-Temporal Graph Convolutional Networks for Human Fall Detection. In Proceedings of the 2024 International Conference on Information and Communication Technology Convergence (ICTC), Jeju Island, Republic of Korea, 16–18 October 2024; pp. 1205–1210.

| Dataset | RGB Videos | Kinect Data | Viewpoint Variation | Recording Environment |
|---|---|---|---|---|
| Le2i Fall Dataset [25] | ✔ | ✔ | | Indoor Home |
| UR Fall Detection [26] | ✔ | ✔ | | Indoor Lab |
| SDUFall [27] | ✔ | ✔ | | Indoor Home |
| SisFall [28] | | | | Indoor Home |
| NTU RGB+D [24] | ✔ | ✔ | | Indoor Lab |
| TST Fall Detection [29] | ✔ | ✔ | | Indoor Lab |
| KFall Dataset [30] | ✔ | | | Indoor Home |
| MobiAct [31] | | | | Indoor Lab |
| FallAllD [32] | ✔ | | | Uncontrolled |
| FARSEEING [33] | | | | Uncontrolled |

| Framework | Year | Target Scope | Nb Extracted Joints | Accuracy AP (%) | Cost (GFLOPs) | Efficiency |
|---|---|---|---|---|---|---|
| OpenPose [10] | 2017 | Multi-person 2D/3D Pose | 15–25 up to 135 | 61.8 | 160 | Slowest (GPU-Intensive) |
| AlphaPose [34] | 2017 | High-Accuracy 2D/3D Pose | 17 up to 136 | 73.3 | 5.91 → 15.99 | Fast (Requires GPU) |
| MediaPipe [35] | 2019 | Real-time Mobile Pipelines | 33 | - | 29.3 → 35.4 | Extremely Fast on Mobile/CPU |
| MMPose [11] | 2020 | SOTA Toolkit Benchmark | 17 up to 133 | 75.8 | 1.93 | 90+ FPS CPU / 430+ FPS GPU |

| Model Name | Test Type | F1-Score Mean (%) | Accuracy Mean (%) | Sensitivity Mean (%) | Specificity Mean (%) |
|---|---|---|---|---|---|
| | Plain | 94.52 | 94.25 | 90.34 | 99.09 |
| | Plain | 99.50 | 99.69 | 99.25 | 100.00 |
| | Plain | 99.81 | 99.81 | 99.62 | 100.00 |

| Model Name | Test Type | F1-Score Mean (%) | Accuracy Mean (%) | Sensitivity Mean (%) | Specificity Mean (%) |
|---|---|---|---|---|---|
| | Plain | | | | |
| | Occluded | | | | |
| | Rotated | | | | |
| | Mntuplain | | | | |
| | Kntuplain | | | | |
| | Plain | | | | |
| | Occluded | | | | |
| | Rotated | | | | |
| | Mntuplain | | | | |
| | Kntuplain | | | | |

| Reference Hardware | Model Parameters | Computational Complexity | Inference Latency |
|---|---|---|---|
| NVIDIA RTX 3090 | 4.06 M | 7.85 GFLOPs | 7.50 ms/sample |

| Model Methodology | Year | Accuracy (%) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|
| Lightweight ST-GCN [37] | 2021 | 96.10 | 92.50 | 95.70 |
| V2V-PoseNet [38] | 2021 | 93.67 | 100.00 | 87.00 |
| YOLOv7-DeepSORT [39] | 2023 | 94.50 | 98.60 | 97.00 |
| AlphaPose ST-GCN [40] | 2023 | 98.70 | 97.78 | 100.00 |
| 3D Multi-Stream CNNs [41] | 2023 | 99.44 | 99.12 | 99.12 |
| Three-Stream ST-GCN [42] | 2024 | 99.64 | 97.25 | - |
| VIRA-GCN (Ours) | 2025 | 99.81 | 99.62 | 100.00 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zobi, M.; Bolzani, L.; Tabii, Y.; Oulad Haj Thami, R. Robust 3D Skeletal Joint Fall Detection in Occluded and Rotated Views Using Data Augmentation and Inference–Time Aggregation. Sensors 2025, 25, 6783. https://doi.org/10.3390/s25216783
