Drowsiness Classification in Young Drivers Based on Facial Near-Infrared Images Using a Convolutional Neural Network: A Pilot Study

Abstract

1. Introduction

- Novel interpretation of NIR facial images: This study proposes treating 940 nm NIR facial images as physiological indicators rather than behavioral cues, highlighting their potential to capture drowsiness-related changes in facial skin tone.

- Demonstration of CNN-based drowsiness classification: By applying a CNN to facial NIR images acquired at 940 nm, this study demonstrates the feasibility of multi-level drowsiness classification.

- Pilot study toward practical applications: Because the 940 nm wavelength is already used in commercial driver monitoring systems, this approach offers a practical pathway toward real-world implementation.

2. Related Work

2.1. Research Gap

2.2. Present Approach

3. Materials and Methods

3.1. Facial NIR Imaging System and Experimental Setup

3.1.1. Experimental Systems

3.1.2. Procedure and Conditions

3.2. Drowsiness Annotation by Facial Expression Evaluation

3.3. Dataset

3.3.1. Input Images

3.3.2. Labels

3.4. CNN Modeling

3.5. Model Evaluation Method

3.6. Grad-CAM

4. Results

4.1. CNN Performance

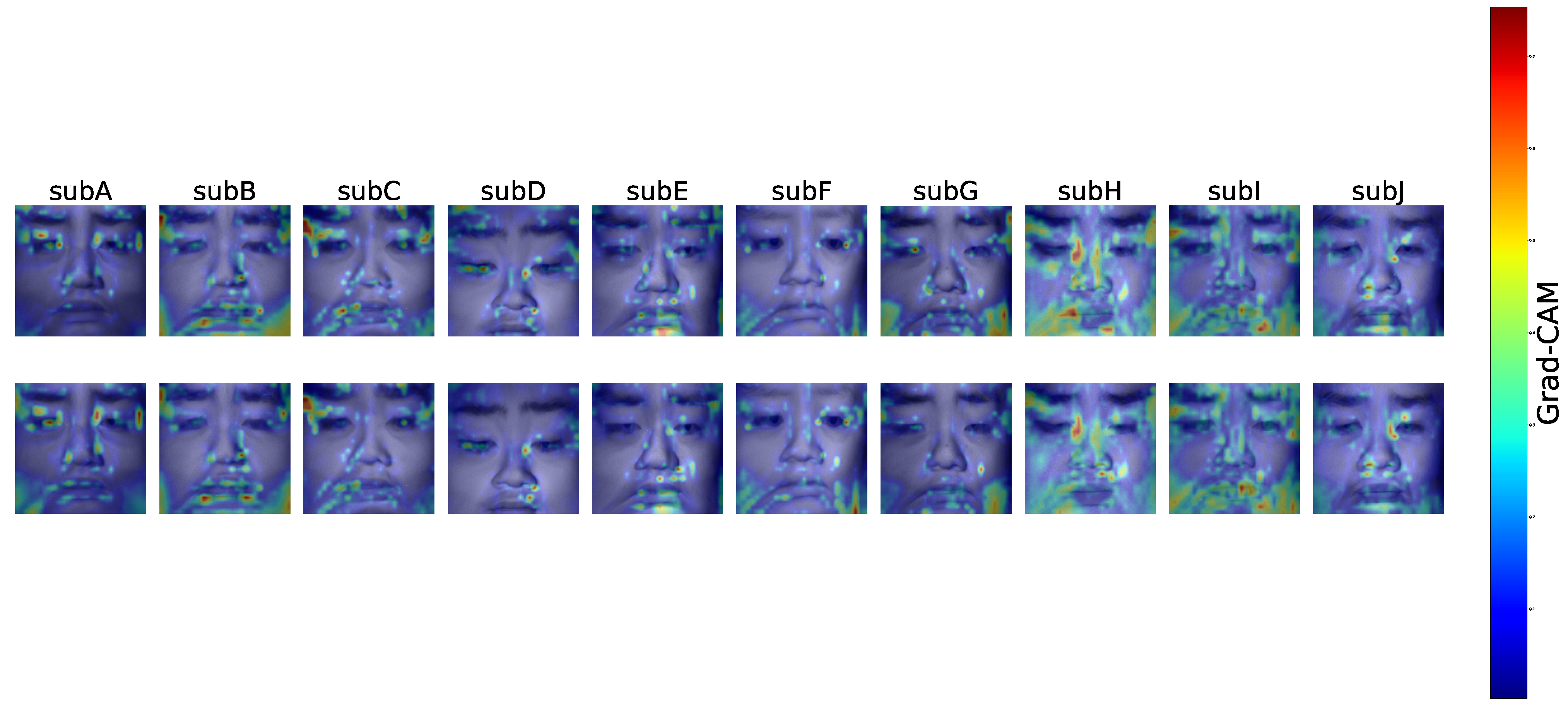

4.2. Average Grad-CAM Activation Map

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- World Health Organization. Global Status Report on Road Safety 2023; World Health Organization: Geneva, Switzerland, 2023; Available online: https://www.who.int/teams/social-determinants-of-health/safety-and-mobility/global-status-report-on-road-safety-2023 (accessed on 25 October 2025).

- National Highway Traffic Safety Administration. Drowsy Driving: Asleep at the Wheel; U.S. Department of Transportation: Washington, DC, USA, 2017. Available online: https://www.nhtsa.gov/risky-driving/drowsy-driving (accessed on 25 October 2025).

- European Parliament and Council. Regulation (EU) 2019/2144 of 27 November 2019 on Type-Approval Requirements for Motor Vehicles and Their Trailers, and Systems, Components and Separate Technical Units Intended for Such Vehicles. Off. J. Eur. Union 2019, L 325, 1–40.

- Ministry of Land, Infrastructure, Transport and Tourism. Guidelines for Driver Monitoring Systems to Detect Drowsiness and Inattention; Ministry of Land, Infrastructure, Transport and Tourism: Tokyo, Japan, 2020; Available online: https://www.mlit.go.jp/report/press/content/001377623.pdf (accessed on 25 October 2025).

- GB/T 41797–2022; Road Vehicles—Driver Monitoring System—Performance Requirements and Test Methods. Standardization Administration of China: Beijing, China, 2022. Available online: https://www.chinesestandard.net/PDF.aspx/GBT41797-2022?English_GB/T%2041797-2022 (accessed on 25 October 2025).

- U.S. Senate. Stay Aware for Everyone (SAFE) Act of 2021; U.S. Government Publishing Office: Washington, DC, USA, 2021.

- Business Wire. Global and China Automotive DMS/OMS Research Report, 2023–2024. 8 April 2024. Available online: https://www.businesswire.com/news/home/20240408995808/en/ (accessed on 25 October 2025).

- Gerber, M.A.; Schroeter, R.; Ho, B. A human factors perspective on how to keep SAE Level 3 conditional automated driving safe. Transp. Res. Interdiscip. Perspect. 2023, 22, 100959.

- Morales-Alvarez, W.; Sipele, O.; Léberon, R.; Tadjine, H.H.; Olaverri-Monreal, C. Automated Driving: A Literature Review of the Take over Request in Conditional Automation. Electronics 2020, 9, 2087.

- Sigari, M.-H.; Pourshahabi, M.-R.; Soryani, M.; Fathy, M. A Review on Driver Face Monitoring Systems for Fatigue and Distraction Detection. Int. J. Adv. Sci. Technol. 2014, 64, 73–100.

- Ji, Q.; Yang, X. Real-time eye, gaze, and face pose tracking for monitoring driver vigilance. Real-Time Imaging 2002, 8, 357–377.

- Yae, J.-H.; Oh, Y.D.; Kim, M.S.; Park, S.H. A Study on the Development of Driver Behavior Simulation Dummy for the Performance Evaluation of Driver Monitoring System. Int. J. Precis. Eng. Manuf. 2024, 25, 641–652.

- Kim, D.; Park, H.; Kim, T.; Kim, W.; Paik, J. Real-time Driver Monitoring System with Facial Landmark-based Behavior Recognition. Sci. Rep. 2023, 13, 18264.

- Halin, A.; Verly, J.G.; Van Droogenbroeck, M. Survey and Synthesis of State of the Art in Driver Monitoring. Sensors 2021, 21, 5558.

- Sharma, P.K.; Chakraborty, P. A Review of Driver Gaze Estimation and Application in Gaze Behavior Understanding. Eng. Appl. Artif. Intell. 2024, 133, 108117.

- Lachance-Tremblay, J.; Tkiouat, Z.; Léger, P.-M.; Cameron, A.-F.; Titah, R.; Coursaris, C.K.; Sénécal, S. A gaze-based driver distraction countermeasure: Comparing effects of multimodal alerts on driver’s behavior and visual attention. Int. J. Hum.-Comput. Stud. 2025, 193, 103366.

- Meyer, J.E.; Llaneras, R.E. Behavioral Indicators of Drowsy Driving: Active Search Mirror Checks; Safe-D Project Report 05-084; Virginia Tech Transportation Institute (VTTI): Blacksburg, VA, USA, 2022. Available online: https://rosap.ntl.bts.gov/view/dot/63152 (accessed on 25 October 2025).

- Soleimanloo, S.S.; Wilkinson, V.E.; Cori, J.M.; Westlake, J.; Stevens, B.; Downey, L.A.; Shiferaw, B.A.; Rajaratnam, S.M.W.; Howard, M.E. Eye-blink parameters detect on-road track-driving impairment following severe sleep deprivation. J. Clin. Sleep Med. 2019, 15, 1271–1284.

- Qu, F.; Dang, N.; Furht, B.; Nojoumian, M. Comprehensive study of driver behavior monitoring systems using computer vision and machine learning techniques. J. Big Data 2024, 11, 32.

- Okajima, T.; Yoshida, A.; Oiwa, K.; Nagumo, K.; Nozawa, A. Drowsiness Estimation Based on Facial Near-Infrared Images Using Sparse Coding. IEEJ Trans. Electr. Electron. Eng. 2023, 18, 1961–1963.

- Perkins, E.; Sitaula, C.; Burke, M.; Marzbanrad, F. Challenges of Driver Drowsiness Prediction: The Remaining Steps to Implementation. IEEE Trans. Intell. Veh. 2022, 8, 1319–1338.

- Saleem, A.A.; Siddiqui, H.U.R.; Raza, M.A.; Rustam, F.; Dudley, S.; Ashraf, I. A systematic review of physiological signals based driver drowsiness detection systems. Cogn. Neurodynamics 2023, 17, 1229–1259.

- Prahl, S. Optical Absorption of Hemoglobin; Oregon Medical Laser Center: Portland, OR, USA, 1999; Available online: https://omlc.org/spectra/hemoglobin/ (accessed on 25 October 2025).

- Jacques, S.L. Optical properties of biological tissues: A review. Phys. Med. Biol. 2013, 58, R37–R61.

- Takano, M.; Nagumo, K.; Nanai, Y.; Oiwa, K.; Nozawa, A. Remote blood pressure measurement from near-infrared face images: A comparison of the accuracy by the use of first and second biological optical window. Biomed. Opt. Express 2024, 15, 1523–1537.

- Oiwa, K.; Ozawa, Y.; Nagumo, K.; Nishimura, S.; Nanai, Y.; Nozawa, A. Remote Blood Pressure Sensing Using Near-Infrared Wideband LEDs. IEEE Sens. J. 2021, 21, 24327–24337.

- Nakagawa, M.; Oiwa, K.; Nanai, Y.; Nagumo, K.; Nozawa, A. A comparative study of linear and nonlinear regression models for blood glucose estimation based on near-infrared facial images from 760 to 1650 nm wavelength. Artif. Life Robot. 2024, 29, 501–509.

- Nakagawa, M.; Oiwa, K.; Nanai, Y.; Nagumo, K.; Nozawa, A. Feature Extraction for Estimating Acute Blood Glucose Level Variation From Multiwavelength Facial Images. IEEE Sens. J. 2024, 23, 20247–20257.

- AMS OSRAM. In-Cabin Sensing Systems Today Operate at 940 nm Wavelength While Some Also Use 850 nm. Automotive & Mobility—In-Cabin Sensing. 2021. Available online: https://ams-osram.com/applications/automotive-mobility/in-cabin-sensing (accessed on 25 October 2025).

- Automotive Focus Shifts to Driver Monitoring Systems. Embedded (EE Times). 1 September 2021. Available online: https://www.embedded.com/automotive-focus-shifts-to-driver-monitoring-systems/ (accessed on 25 October 2025).

- Mouser Electronics White Paper: Driver Monitor System Yields Safety Gains. All About Circuits. 9 August 2022. Available online: https://www.allaboutcircuits.com/uploads/articles/Driver_Monitor_System_Yields_Safety_Gains.pdf (accessed on 25 October 2025).

- Lambert, D.K.; Itsede, F.; Tomura, K.; Nohara, A.; Chou, K.; Carty, D. Windshield with Enhanced Infrared Reflectivity Enables Packaging a Driver Monitor System in a Head-Up Display; SAE Technical Paper. No. 2021-01-0105; SAE International: Warrendale, PA, USA, 2021.

- Smart Eye: AIS Driver Monitoring System; Product Page Including Field of View and Wavelength Specifications (940 nm); Macnica Corporation: Yokohama, Japan, 2023; Available online: https://www.macnica.co.jp/en/business/semiconductor/manufacturers/smarteye/products/144981/ (accessed on 25 October 2025).

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 26th Annual Conference on Neural Information Processing Systems (NeurIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Arefnezhad, S.; Keshavarz, A.; Lin, C.; Lin, F.; Tavakoli, N.; Chou, C.A. Driver drowsiness estimation using EEG signals with a dynamical encoder–decoder modeling framework. Sci. Rep. 2022, 12, 16435.

- Khan, M.J.; Hong, K.-S. Hybrid EEG–fNIRS-based eight-command decoding for BCI: Application to drowsiness detection. Front. Hum. Neurosci. 2015, 9, 610–621.

- Kitajima, H.; Numata, N.; Yamamoto, K.; Goi, Y. Prediction of Automobile Driver Sleepiness (1st Report, Rating of Sleepiness Based on Facial Expression and Examination of Effective Predictor Indexes of Sleepiness). Trans. Jpn. Soc. Mech. Eng. (C Ed.) 1997, 63, 93–100. (In Japanese)

- Uchiyama, Y.; Sawai, S.; Omi, T.; Yamauchi, K.; Tamura, K.; Sakata, T.; Nakajima, K.; Sakai, H. Convergent validity of video-based observer rating of drowsiness, against subjective, behavioral, and physiological measures. PLoS ONE 2023, 18, e0285557.

- Nagumo, K.; Kobayashi, T.; Oiwa, K.; Nozawa, A. Face Alignment in Thermal Infrared Images Using Cascaded Shape Regression. Int. J. Environ. Res. Public Health 2021, 18, 1776.

- Nagumo, K.; Oiwa, K.; Nozawa, A. Spatial normalization of facial thermal images using facial landmarks. Artif. Life Robot. 2021, 26, 481–487.

- Sundelin, T.; Lekander, M.; Kecklund, G.; Van Someren, E.J.W.; Olsson, A.; Axelsson, J. Cues of fatigue: Effects of sleep deprivation on facial appearance. Sleep 2013, 36, 1355–1360.

- Matsukawa, T.; Ozaki, M.; Nishiyama, T.; Imamura, M.; Kumazawa, T.; Iijima, T. Comparison of changes in facial and thigh skin blood flows during general anesthesia. Anesthesiology 1997, 86, 603–609.

- Aristizabal-Tique, A.; Farup, I.; Hovde, Ø.; Khan, R. Facial skin color and blood perfusion modulations induced by mental and physical workload: A multispectral imaging study. J. Biomed. Opt. 2023, 28, 035002.

- Ahlström, C.; Fors, C.; Anund, A. Comparing driver sleepiness in simulator and on-road studies using EEG and HRV. Accid. Anal. Prev. 2018, 111, 238–246.

- Caldwell, J.A.; Hall, S.; Erickson, B.S. Physiological correlates of drowsiness during simulator and real driving. Front. Hum. Neurosci. 2020, 14, 574890.

- Morales, E.; Navarro, J.; Diaz-Piedra, C. Physiological correlates of fatigue in simulated and real driving: Heart rate and skin temperature comparisons. Appl. Ergon. 2021, 93, 103375.

- Takano, M.; Nagumo, K.; Lamsal, R.; Nozawa, A. Correction of Artifacts from Environmental Temperature and Time-of-day Variations in Facial Thermography for Field Workers’ Health Monitoring. Adv. Biomed. Eng. 2025, 14, 54–61.

| Main Indicator/Input | Model/Method | Key Findings/Characteristics | Main Limitation |
|---|---|---|---|
| Blink kinematics [18] | Statistical/regression analysis | Blink parameters associated with degraded driving performance after sleep deprivation | Mainly detects advanced drowsiness; limited sensitivity at early stages |
| Behavioral indicators (mirror checks, head/body posture) [17] | Statistical feature analysis | Mirror-check frequency and other behavioral indicators examined against drowsiness progression; no significant difference found | Changes are weak at the early drowsiness stage |
| Facial landmarks (68 points) [13] | Deep learning | Classified driver behaviors from facial landmark dynamics in infrared facial images | Depends on temporal sequences; limited instantaneous response |
| Behavioral indicators [12] | Simulation dummy design/system evaluation | Developed a driver-monitoring evaluation dummy and validated DMS performance under fatigue scenarios | Hardware-oriented validation; does not propose a learning model |
| Temporal gaze variation [16] | On-road experiment with multimodal alerts | Investigated gaze behavior and visual attention during distraction countermeasures | Not targeted at subtle or short-term drowsiness |
| EEG power ratios ( etc.) [36] | Deep learning | Demonstrated deep-learning-based vigilance estimation from EEG signals under sleep deprivation | Requires electrode attachment; sensitive to noise |
| Prefrontal hemoglobin changes [37] | LDA/SVM | Hybrid EEG–fNIRS framework; reported vigilance/drowsiness-related cerebral hemodynamic changes | Bulky device; slow temporal response |

| Phase | Duration | Eye State |
|---|---|---|
| Rest 1 | 1 min | Eyes open |
| | 1 min | Eyes closed |
| | 1 min | Eyes open |
| Driving | 40 min | Not controlled |
| Rest 2 | 1 min | Eyes open |
| | 1 min | Eyes closed |
| | 1 min | Eyes open |

| Drowsiness Level | State | Example of Facial Expression and Motions |
|---|---|---|
| Level 1 | Awake | · Eye movements are quick and frequent |
| | | · Blink cycles are stable at approximately two blinks per two seconds |
| | | · Body motions are active |
| Level 2 | Slightly drowsy | · Lips are parted |
| | | · Eye movements are slow |
| Level 3 | Drowsy | · Blinks are slow and frequent |
| | | · Repositions body on the seat |
| | | · Touches face with a hand |
| Level 4 | Very drowsy | · Blinks appear to be made deliberately |
| | | · Shakes head |
| | | · Yawns frequently |
| Level 5 | Extremely drowsy | · Eyelids are closing |
| | | · Leans head back and forth |

| Subject | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 |
|---|---|---|---|---|---|
| SubA | 440 | 880 | 680 | 400 | 0 |
| SubB | 480 | 280 | 560 | 1080 | 0 |
| SubC | 340 | 1580 | 480 | 0 | 0 |
| SubD | 640 | 620 | 1140 | 0 | 0 |
| SubE | 320 | 280 | 880 | 920 | 0 |
| SubF | 480 | 1040 | 460 | 360 | 60 |
| SubG | 580 | 760 | 1060 | 0 | 0 |
| SubH | 320 | 200 | 400 | 1160 | 320 |
| SubI | 340 | 560 | 720 | 760 | 20 |
| SubJ | 320 | 180 | 180 | 1480 | 240 |

| Drowsiness Level | Pattern A | Pattern B | Pattern C |
|---|---|---|---|
| Level 1 | Low | Low | Low |
| Level 2 | High | Low | Medium |
| Level 3 | High | High | High |
| Level 4 | High | High | High |
| Level 5 | High | High | High |
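The three label patterns collapse the five facial-expression levels into two or three classes. As a bookkeeping check, the minimal Python sketch below assumes the level-to-class mapping in this table and the per-subject level counts listed earlier, and sums the counts per class; it should reproduce the pattern-wise totals in the next table (for example, 4260 Low versus 19,740 High for Pattern A). The names `PATTERNS`, `LEVEL_COUNTS`, `pattern_counts` and `pattern_totals` are hypothetical and are not taken from the authors' code.

```python
"""Collapse per-level sample counts into the class counts of each label pattern.

Hypothetical helper names; the counts are copied from the level-count table.
"""

# Label patterns: drowsiness level (1-5) -> class name.
PATTERNS = {
    "A": {1: "Low", 2: "High", 3: "High", 4: "High", 5: "High"},
    "B": {1: "Low", 2: "Low", 3: "High", 4: "High", 5: "High"},
    "C": {1: "Low", 2: "Medium", 3: "High", 4: "High", 5: "High"},
}

# Per-subject sample counts for Levels 1-5.
LEVEL_COUNTS = {
    "SubA": [440, 880, 680, 400, 0],
    "SubB": [480, 280, 560, 1080, 0],
    "SubC": [340, 1580, 480, 0, 0],
    "SubD": [640, 620, 1140, 0, 0],
    "SubE": [320, 280, 880, 920, 0],
    "SubF": [480, 1040, 460, 360, 60],
    "SubG": [580, 760, 1060, 0, 0],
    "SubH": [320, 200, 400, 1160, 320],
    "SubI": [340, 560, 720, 760, 20],
    "SubJ": [320, 180, 180, 1480, 240],
}


def pattern_counts(level_counts, mapping):
    """Sum one subject's level counts into the classes of a label pattern."""
    out = {}
    for level, n in enumerate(level_counts, start=1):
        cls = mapping[level]
        out[cls] = out.get(cls, 0) + n
    return out


def pattern_totals(pattern):
    """Sum the class counts of one label pattern over all subjects."""
    totals = {}
    for counts in LEVEL_COUNTS.values():
        for cls, n in pattern_counts(counts, PATTERNS[pattern]).items():
            totals[cls] = totals.get(cls, 0) + n
    return totals


if __name__ == "__main__":
    for name in PATTERNS:
        print(name, pattern_totals(name))
```

Each subject contributes 2400 samples in total, so the per-class sums for any pattern also add up to 2400 per subject.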

| Subject | Pattern A: Low | Pattern A: High | Pattern B: Low | Pattern B: High | Pattern C: Low | Pattern C: Medium | Pattern C: High |
|---|---|---|---|---|---|---|---|
| SubA | 440 | 1960 | 1320 | 1080 | 440 | 880 | 1080 |
| SubB | 480 | 1920 | 760 | 1640 | 480 | 280 | 1640 |
| SubC | 340 | 2060 | 1920 | 480 | 340 | 1580 | 480 |
| SubD | 640 | 1760 | 1260 | 1140 | 640 | 620 | 1140 |
| SubE | 320 | 2080 | 600 | 1800 | 320 | 280 | 1800 |
| SubF | 480 | 1920 | 1520 | 880 | 480 | 1040 | 880 |
| SubG | 580 | 1820 | 1340 | 1060 | 580 | 760 | 1060 |
| SubH | 320 | 2080 | 520 | 1880 | 320 | 200 | 1880 |
| SubI | 340 | 2060 | 900 | 1500 | 340 | 560 | 1500 |
| SubJ | 320 | 2080 | 500 | 1900 | 320 | 180 | 1900 |
| Total | 4260 | 19,740 | 10,640 | 13,360 | 4260 | 6380 | 13,360 |

| Subject | Pattern A: Low | Pattern A: High | Pattern B: Low | Pattern B: High | Pattern C: Low | Pattern C: Medium | Pattern C: High |
|---|---|---|---|---|---|---|---|
| SubA | 320 | 320 | 480 | 480 | 180 | 180 | 180 |
| SubB | 320 | 320 | 480 | 480 | 180 | 180 | 180 |
| SubC | 320 | 320 | 480 | 480 | 180 | 180 | 180 |
| SubD | 320 | 320 | 480 | 480 | 180 | 180 | 180 |
| SubE | 320 | 320 | 480 | 480 | 180 | 180 | 180 |
| SubF | 320 | 320 | 480 | 480 | 180 | 180 | 180 |
| SubG | 320 | 320 | 480 | 480 | 180 | 180 | 180 |
| SubH | 320 | 320 | 480 | 480 | 180 | 180 | 180 |
| SubI | 320 | 320 | 480 | 480 | 180 | 180 | 180 |
| SubJ | 320 | 320 | 480 | 480 | 180 | 180 | 180 |
| Total | 3200 | 3200 | 4800 | 4800 | 1800 | 1800 | 1800 |
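In this table every subject contributes the same number of samples to each class, and each per-class quota equals the smallest class count observed for any subject in the preceding pattern count table (320, 480 and 180 for Patterns A, B and C). One reading consistent with these numbers is random undersampling to that minimum. The sketch below assumes exactly that rule, with selection without replacement and a fixed seed; both are assumptions for illustration rather than the authors' documented procedure, and `ROWS`, `COLUMNS`, `quota` and `undersample` are hypothetical names.

```python
import random

# Pattern-wise class counts per subject, copied from the pattern count table.
# Column order: A Low, A High, B Low, B High, C Low, C Medium, C High.
ROWS = {
    "SubA": (440, 1960, 1320, 1080, 440, 880, 1080),
    "SubB": (480, 1920, 760, 1640, 480, 280, 1640),
    "SubC": (340, 2060, 1920, 480, 340, 1580, 480),
    "SubD": (640, 1760, 1260, 1140, 640, 620, 1140),
    "SubE": (320, 2080, 600, 1800, 320, 280, 1800),
    "SubF": (480, 1920, 1520, 880, 480, 1040, 880),
    "SubG": (580, 1820, 1340, 1060, 580, 760, 1060),
    "SubH": (320, 2080, 520, 1880, 320, 200, 1880),
    "SubI": (340, 2060, 900, 1500, 340, 560, 1500),
    "SubJ": (320, 2080, 500, 1900, 320, 180, 1900),
}

# Column slice [start, stop) of each pattern inside a row.
COLUMNS = {"A": (0, 2), "B": (2, 4), "C": (4, 7)}


def quota(pattern):
    """Smallest class count of `pattern` over all subjects (assumed balancing rule)."""
    lo, hi = COLUMNS[pattern]
    return min(min(row[lo:hi]) for row in ROWS.values())


def undersample(frame_ids, k, seed=0):
    """Randomly keep k of one subject's frame IDs for one class, without replacement."""
    rng = random.Random(seed)
    return sorted(rng.sample(frame_ids, k))


if __name__ == "__main__":
    for name in COLUMNS:
        print(name, quota(name))
    # Example: reduce SubA's 1080 Pattern C "High" frames to the Pattern C quota.
    kept = undersample(list(range(1080)), quota("C"))
    print(len(kept))
```

Undersampling per subject (rather than pooling all subjects) keeps every subject equally represented in each class, which matches the uniform rows of the balanced table.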

| Layer | Output Shape | Activation | Parameters |
|---|---|---|---|
| Conv1 | (259, 259, 32) | ReLU | 320 |
| Conv2 | (257, 257, 64) | ReLU | 18,496 |
| MaxPool1 | (128, 128, 64) | – | 0 |
| Conv3 | (126, 126, 64) | ReLU | 36,928 |
| MaxPool2 | (63, 63, 64) | – | 0 |
| Conv4 | (61, 61, 64) | ReLU | 36,928 |
| MaxPool3 | (30, 30, 64) | – | 0 |
| Conv5 | (28, 28, 64) | ReLU | 36,928 |
| MaxPool4 | (14, 14, 64) | – | 0 |
| Dropout1 | (14, 14, 64) | – | 0 |
| Flatten | (12,544) | – | 0 |
| FC1 | (128) | ReLU | 1,605,760 |
| Dropout2 | (128) | – | 0 |
| FC2 (Output) | (2 or 3) | Softmax | 258 (2 classes); 387 (3 classes) |
| Total | | | 1,735,618 (2 classes); 1,735,747 (3 classes) |
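The output shapes and parameter counts in the architecture table can be reproduced with a few lines of arithmetic. The sketch below assumes a single-channel 261 × 261 input (inferred from Conv1's output shape and its 320 parameters), 3 × 3 convolutions with stride 1 and no padding, and non-overlapping 2 × 2 max pooling; these settings are inferred, not stated in the text, and `layer_summary` is a hypothetical helper rather than the authors' model code. Dropout layers are skipped because they change neither the shape nor the parameter count.

```python
# Assumed input: one-channel 261 x 261 NIR image (inferred, not stated in the text).
INPUT_SHAPE = (261, 261, 1)

# Convolution/pooling stack from the architecture table (dropout omitted).
LAYERS = [
    ("Conv1", "conv", 32), ("Conv2", "conv", 64), ("MaxPool1", "pool", None),
    ("Conv3", "conv", 64), ("MaxPool2", "pool", None),
    ("Conv4", "conv", 64), ("MaxPool3", "pool", None),
    ("Conv5", "conv", 64), ("MaxPool4", "pool", None),
]


def layer_summary(n_classes, input_shape=INPUT_SHAPE, k=3, pool=2, hidden=128):
    """Return ([(name, output_shape, params), ...], total_params).

    Assumes valid (unpadded) k x k convolutions with stride 1 and
    non-overlapping pool x pool max pooling that floors odd sizes.
    """
    h, w, c = input_shape
    rows, total = [], 0
    for name, kind, filters in LAYERS:
        if kind == "conv":
            params = k * k * c * filters + filters  # kernel weights + biases
            h, w, c = h - k + 1, w - k + 1, filters
        else:
            params = 0
            h, w = h // pool, w // pool
        rows.append((name, (h, w, c), params))
        total += params
    flat = h * w * c
    rows.append(("Flatten", (flat,), 0))
    for name, n_in, units in (("FC1", flat, hidden), ("FC2", hidden, n_classes)):
        params = n_in * units + units  # weights + biases
        rows.append((name, (units,), params))
        total += params
    return rows, total


if __name__ == "__main__":
    rows, total = layer_summary(n_classes=2)
    for name, shape, params in rows:
        print(f"{name:<9} {str(shape):<16} {params:>10,}")
    print(f"Total: {total:,}")
```

Calling `layer_summary(3)` gives the three-class head used for Pattern C; only the FC2 row and the total change.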

| Subject | Pattern A | Pattern B | Pattern C |
|---|---|---|---|
| SubA | 47.3 | 64.0 | 45.2 |
| SubB | 76.1 | 66.0 | 55.7 |
| SubC | 92.5 | 63.3 | 59.1 |
| SubD | 93.4 | 89.1 | 80.4 |
| SubE | 98.1 | 97.7 | 97.4 |
| SubF | 99.8 | 93.2 | 89.8 |
| SubG | 99.7 | 95.2 | 97.0 |
| SubH | 99.8 | 99.0 | 99.1 |
| SubI | 99.7 | 97.5 | 97.0 |
| SubJ | 100.0 | 99.8 | 98.7 |
| Mean ± SD | 90.7 ± 16.9 | 86.5 ± 15.5 | 81.9 ± 20.8 |
| Median | 98.9 | 94.2 | 93.4 |
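The summary rows of the per-subject results table can be recomputed from the individual values. The sketch below uses Python's standard `statistics` module and assumes the reported SD is the sample standard deviation (n − 1 denominator), which matches the table to one decimal place. Recomputed from the rounded per-subject values, Pattern A's mean comes out at 90.6 rather than 90.7, presumably because the reported mean was computed before rounding; the other summary values agree. `SCORES` and `describe` are hypothetical names.

```python
import statistics

# Per-subject values (SubA ... SubJ), copied from the results table.
SCORES = {
    "A": [47.3, 76.1, 92.5, 93.4, 98.1, 99.8, 99.7, 99.8, 99.7, 100.0],
    "B": [64.0, 66.0, 63.3, 89.1, 97.7, 93.2, 95.2, 99.0, 97.5, 99.8],
    "C": [45.2, 55.7, 59.1, 80.4, 97.4, 89.8, 97.0, 99.1, 97.0, 98.7],
}


def describe(values):
    """Mean, sample standard deviation (n - 1 denominator) and median."""
    return statistics.mean(values), statistics.stdev(values), statistics.median(values)


if __name__ == "__main__":
    for pattern, values in SCORES.items():
        mean, sd, med = describe(values)
        print(f"Pattern {pattern}: {mean:.1f} ± {sd:.1f} (median {med:.1f})")
```

With only ten subjects and a few low outliers (SubA to SubC), the median is a more representative summary than the mean here, which is why both are reported.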


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nomura, A.; Yoshida, A.; Torii, T.; Nagumo, K.; Oiwa, K.; Nozawa, A. Drowsiness Classification in Young Drivers Based on Facial Near-Infrared Images Using a Convolutional Neural Network: A Pilot Study. Sensors 2025, 25, 6755. https://doi.org/10.3390/s25216755
