Abstract

Eye movements are an important tool for investigating cognition, and they also serve as input in human–computer interfaces for assistive technology. They can be measured with camera-based eye tracking or with electro-oculography (EOG). EOG does not rely on eye visibility and can be recorded even when the eyes are closed. We investigated the feasibility of detecting the gaze direction with EOG while the eyes are closed. A total of 15 participants performed a proprioceptive calibration task with open and closed eyes while their eye movements were recorded with a camera-based eye tracker and with EOG. The calibration was guided by the participants’ hand movements following a pattern of felt dots on cardboard. Our cross-correlation analysis revealed reliable temporal synchronization between the gaze-related signals and the instructed trajectory across all conditions. Statistical comparison tests and equivalence tests showed that, with the eyes open, EOG-based gaze estimates were statistically equivalent to the gaze direction measured by the camera-based eye tracker. Because camera-based eye-tracking glasses cannot track closed eyes, we evaluated the EOG-based gaze estimates in the eyes-closed trials by comparing them with the instructed trajectory. Guided by proprioceptive cues, the EOG signals followed the instructed path and achieved significantly greater accuracy than shuffled control data representing chance-level performance. These results demonstrate the advantage of EOG when camera-based eye tracking is infeasible and pave the way for eye-movement input interfaces for blind people, research on eye movement direction when the eyes are closed, and the early detection of diseases.

1. Introduction

Eye tracking is the measurement of eye movements, or more specifically, the gaze direction and fixation patterns. On the one hand, the gaze direction can be controlled voluntarily. Therefore, it is used for human–computer interfaces where limb movement is not possible [1] or when a hands-free interaction is desirable [2]. On the other hand, involuntary eye movements are often correlated with cognitive processes in the human brain, such as attention and preferences [3,4].

Considering these two use cases, our focus was on the voluntarily controlled gaze direction because it is useful for gaze-based interaction designs for vulnerable populations such as blind people and locked-in patients [5]. For example, blind people could use their eyes as a joystick during audio- and tactile-guided navigation [6,7]. In particular, when the hands are used as a surface for tactile displays [8], eye movements can provide an additional input channel for navigation and object manipulation. In this case, blind people are not using their eyes for vision anymore; therefore, the eyes’ voluntary movements are free for interaction.

Gaze direction is typically represented in a two-dimensional reference plane. Vergence cues to gaze depth are small but have been used to estimate gaze depth in virtual and augmented reality displays and in non-display interactions [9,10,11]. However, when people interact with screens, the vergence between the eyes remains essentially the same, which makes it difficult to extract depth cues and to use them for pointing at visual targets [12]. Furthermore, when gaze is used as an input with the eyes closed, depth cues are unavailable. Therefore, we focused on gaze estimation in a two-dimensional plane.

Different techniques can be used to measure the gaze direction, each with distinct advantages and shortcomings. One such technology is computer vision (CV), in which an infrared light pattern is projected onto the eye, and the reflection and its changes are recorded with a camera [13]. This technique achieves good results, is easy to set up, and is non-invasive. Its downsides include the need for an unobstructed view of the eyes, sensitivity to illumination changes, and interference from other light sources, such as the infrared portion of sunlight or reflections on glasses [14,15,16,17]. When the eye area is partially or intermittently covered by eyelids, hair, or external accessories, the accuracy and reliability of CV-based eye tracking can be significantly compromised. This is particularly problematic for users with conditions that cause partial eyelid closure or obstruction of the eye region, such as ptosis (drooping eyelids) or habitual squinting resulting from poor visual acuity [18,19,20]. Additionally, image processing in CV-based systems often requires substantial computational resources. Recent research has sought to reduce this demand with techniques such as feature-map similarity knowledge transfer, which enables accurate gaze estimation from low-resolution images [21]. Another line of work improved computational efficiency by suppressing redundant facial information and enhancing the extraction of gaze-relevant features through attention-based mechanisms [22]. An alternative method uses search coils [23]: contact lenses containing a very small metal coil are placed on the eyes, and the coils are measured within an electromagnetic field.
The movement of the search coils leads to changes in the magnetic field that can be measured with very high precision, even with closed eyes. However, the wire attached to the eye causes some discomfort, so the coils should only be used for up to 30 min, as recommended by the manufacturers [24]. Electro-oculography (EOG) is a third, less invasive technique that, as we will show, can measure the gaze direction with closed eyes. The electrical potential difference between the cornea at the front of the eye and the retina at the back of the eye can be measured with electrodes placed horizontally and vertically around the eyes. However, the accuracy of EOG is often limited by noise from overlapping biological signals, such as facial electromyography (EMG). Additionally, the resting potential of the eye depends on the lighting: it decreases in darkness, reaching a dark trough after 8–12 min, and rises after the lights are turned on again, peaking about 10 min later [25]. Therefore, changes in lighting can also cause drifts in EOG measurements.

Depending on the situation, it can still be worthwhile to choose EOG over CV despite the challenges of EOG measurement. A summary comparison of EOG- and CV-based eye-tracking methods is provided in Table 1. The limitations of CV-based eye tracking, including sensitivity to reflections, poor performance with glasses, and failure under eye occlusion, make it unsuitable for certain scenarios in which EOG remains usable. These scenarios include sleep monitoring, use in very bright or very dark environments, and use with participants wearing prescription lenses. However, most video-based and EOG-based eye-tracking techniques rely on visual fixation for calibration. They are therefore inherently unsuitable not only for closed-eye conditions but also for individuals who are blind or partially sighted and cannot perceive visual targets even with their eyes open.

Table 1.

Comparison of EOG- and CV-based eye tracking.

To address this critical limitation, we introduced a proprioceptive calibration method that uses a tactile cardboard interface with a pattern of felt dots. This task leverages the body’s internal sense of limb position to guide gaze alignment, thereby allowing users to perform guided fixations under closed-eye conditions to enable consistent and repeatable calibration without relying on visual input [30].

The main contributions of this study are as follows:

- We introduced a proprioceptive calibration method that enables EOG calibration in the absence of visual input.

- We proposed a novel approach for estimating the gaze direction using EOG signals when the eyes are closed.

- We established camera-based eye tracking as a benchmark for evaluating EOG-based gaze direction estimation by comparing both modalities under eyes-open conditions.

- We validated the effectiveness of EOG-based gaze estimation under eyes-closed conditions by comparing aligned EOG trajectory data with shuffled (chance-level) control data.

Estimating the gaze direction with closed eyes would be beneficial when designing supportive technology for groups who depend on human–computer interaction for their well-being and quality of life, such as people with locked-in syndrome [5] or amyotrophic lateral sclerosis [1]. Furthermore, it would enable experiments with blind participants and the investigation of cognition through eye tracking when retinal input is unavailable, for example, during audio-guided navigation [31]. EOG is also widely used in sleep monitoring, particularly within polysomnography (PSG), where it supports sleep staging by capturing gross eye movements [32]. Beyond sleep staging, several studies in sleep and dream research have analyzed the characteristics and directions of eye movements during REM sleep, and our method offers a potential tool to support such investigations. For instance, previous work has shown alignment between EOG-based eye movement directions and dream reports [33], demonstrated that smooth-pursuit tracking during REM sleep more closely resembles perception than imagination [34], and revealed that REM gaze shifts in mice are linked to shifts in virtual head direction [35].

The remainder of this paper is organized as follows. Section 2 reviews prior research on gaze estimation, beginning with EOG-based approaches under eyes-open conditions, followed by techniques developed for eyes-closed scenarios. Section 3 describes the experimental details, including participant recruitment, the experimental design, the apparatus, and the data-collection procedures. Section 4 details the analytical methods used in this study, including the signal preprocessing pipeline and evaluation strategies. Section 5 presents the experimental findings. Section 6 interprets the results in the context of the existing literature. Finally, Section 7 outlines the current limitations of the study and proposes directions for future research.

2. Related Works

Gaze estimation is widely used in vision science and HCI for applications such as attention analysis, intention recognition, and hands-free interaction control. Among gaze estimation methods, EOG with open eyes is a common input in HCI for controlling wheelchairs, playing games, and writing text [36,37,38]. It is also used to recognize user activities such as reading, writing, or watching videos [39]. Several algorithms have been proposed to estimate the gaze direction from EOG signals with open eyes [40,41,42,43]. These methods typically require calibration to determine the direction and amplitude of eye movements. This calibration often associates EOG signal amplitudes with known gaze targets to derive user-specific transfer functions, based on the well-established linear relationship between the EOG amplitude and the visual angle of gaze shifts [37,39,44]. For example, Yan et al. guided participants to fixate on 24 predefined gaze targets positioned at angular offsets of 30°, 45°, 60°, and 75° to the left and right, across three vertical levels (up, middle, and down) [45]. Manabe et al. proposed a horizontal gaze estimation approach using nine predefined gaze targets ranging from −40° to +40° in 10° steps [46]. Instead of using a predefined calibration grid, Barbara et al. employed a biophysical battery model of the eye and estimated its parameters from EOG signals recorded while the participants fixated on targets that appeared at random positions on the screen [47]. However, these calibrations require open eyes to fixate on screen-based targets, which limits their applicability in closed-eye scenarios.
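The linear amplitude-to-angle relationship mentioned above can be turned into a per-user transfer function with an ordinary least-squares fit. The sketch below is illustrative only: it assumes a nine-target horizontal grid like the one described for Manabe et al., uses synthetic EOG amplitudes (an arbitrary 16 µV/deg gain, 5 µV offset, and 3 µV noise), and the function names `fit_linear_calibration` and `eog_to_angle` are hypothetical.

```python
import numpy as np


def fit_linear_calibration(angles_deg, eog_uv):
    """Least-squares fit of eog = gain * angle + offset; returns (gain, offset)."""
    gain, offset = np.polyfit(angles_deg, eog_uv, deg=1)
    return float(gain), float(offset)


def eog_to_angle(eog_uv, gain, offset):
    """Invert the linear transfer function to estimate the gaze angle in degrees."""
    return (np.asarray(eog_uv, dtype=float) - offset) / gain


# Nine horizontal targets from -40 deg to +40 deg in 10 deg steps.
angles = np.arange(-40, 41, 10)
rng = np.random.default_rng(0)
# Synthetic user (not real data): 16 uV/deg gain, 5 uV offset, 3 uV noise.
eog = 16.0 * angles + 5.0 + rng.normal(0.0, 3.0, size=angles.size)

gain, offset = fit_linear_calibration(angles, eog)
estimated = eog_to_angle(eog, gain, offset)
print(f"gain = {gain:.2f} uV/deg, offset = {offset:.2f} uV")
print(np.round(estimated, 1))
```

The key limitation noted in the text is visible here: the fit needs known target angles, which in the reviewed methods come from visual fixation on screen targets.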

In 1980, a study of eye movements in blind people estimated the gaze direction (eye-in-head position) based on a maximum-movement calibration [48]. Hsieh et al. investigated the relationship between EOG signals and visual features of closed-eye motion to synthesize artificial EOG signals using computer vision for non-invasive sleep tracking [49]. Recent work has explored various modalities and applications for gaze estimation and interaction under closed-eye conditions. Findling et al. introduced camera-based recognition of closed-eye gaze gestures using optical flow from eye-facing cameras in smart glasses [50]. They proposed four closed-eye gaze gesture protocols and a processing pipeline for classifying eyelid movement patterns. Extending this work, the authors applied EOG to enable closed-eye gaze gesture input for secure mobile authentication [51]. They designed a nine-character EOG-based gesture alphabet and used sensors embedded in the nose pads and bridge of smart glasses to detect gaze gestures for password entry. Tamaki et al. also explored EOG-based closed-eye interaction for users with severe motor impairments, reliably detecting upward eye movements with a combination of thresholding and k-nearest neighbor classification [52]. These gesture-based studies demonstrate that EOG can operate under eyes-closed conditions, but they did not attempt to estimate the continuous gaze direction or analyze fine-grained gaze movements. Beyond interaction systems, Ben Barak-Dror et al. demonstrated a touchless short-wave infrared (SWIR) imaging method capable of tracking pupil dynamics and estimating the gaze direction through closed eyelids [53]. This approach uses a U-Net architecture to reconstruct an open-eye model from closed-eye SWIR images, enabling the estimation of pupil size and gaze shifts with sub-second temporal resolution.
To validate the accuracy of the gaze estimates, the study employed a protocol in which the participants kept one eye open while holding the other eye closed with a finger, so that the estimated gaze could be compared with simultaneous open-eye measurements used as the ground truth. This comparison relies on the assumption that both eyes move conjugately, which does not hold universally; for instance, it does not hold for individuals with strabismus. Moreover, physically holding one eye closed with a finger may restrict natural ocular motion, potentially compromising the validity of the recorded eye movement data. Additionally, the need for active illumination and computational resources makes vision-based methods less suitable for long-term applications such as sleep monitoring, dream research, or critical care settings, where low-power and unobtrusive methods are preferred. MacNeil et al. proposed a calibrated EOG method that aligns EOG signals with ground-truth data from a pupil–corneal reflection tracker to measure closed-eye movement kinematics [54,55]. The calibration factor was estimated with open eyes under normal illumination and then applied to the EOG signals recorded in darkness and during tasks performed with the eyes closed. Although this study demonstrates the feasibility of closed-eye movement tracking with calibrated EOG, it focused solely on horizontal eye movements because of the poor signal quality of the vertical EOG channel. In addition, MacNeil et al.’s memory-based design assumes that participants can visually perceive and memorize the calibration pattern, which is not feasible for blind individuals, and it may introduce additional sources of error from relying on mental imagery and saccade execution without visual feedback.
To address these limitations, we propose a tactile-guided proprioceptive calibration method that uses a pattern of felt dots on cardboard to guide the gaze with the eyes open or closed, under both illuminated and dark conditions. This method does not require visual fixation and provides tactile spatial references, which are intended to be more reliable than memory-based calibration. In addition, we examined both horizontal and vertical eye movements. In summary, our method differs from prior work in several key aspects: (1) it is grounded in physiological signals and does not depend on visual access; (2) it supports both horizontal and vertical gaze estimation; and (3) it is inherently compatible with individuals who are blind or partially sighted, as it requires neither sighted calibration nor visual stimuli at any stage of the procedure.

3. Materials and Methods

3.1. Participants

Five volunteers (three female; mean age: 25.6 years, SD = 2.7) participated in a pilot study, which served as an initial test of the experimental procedure and apparatus. Based on its outcome, the main experiment was carried out with the finalized procedure using paid participants. During the pilot study, the procedure was changed, and we experienced technical difficulties with the eye tracker. Only the last pilot participant followed the same procedure as the main experiment, so only these data were kept for further analysis. For the main experiment, ten paid participants (five female; mean age: 28 years, SD = 9.8) were recruited. One participant completed only half of the trials due to limited time, performing only the lights-off condition. In total, data from 11 participants (one pilot and ten main-experiment participants) were analyzed. The study was conducted in accordance with the Declaration of Helsinki. The experimental procedure was explained to the participants beforehand, and all gave informed consent to participate.

3.2. Experiment Design

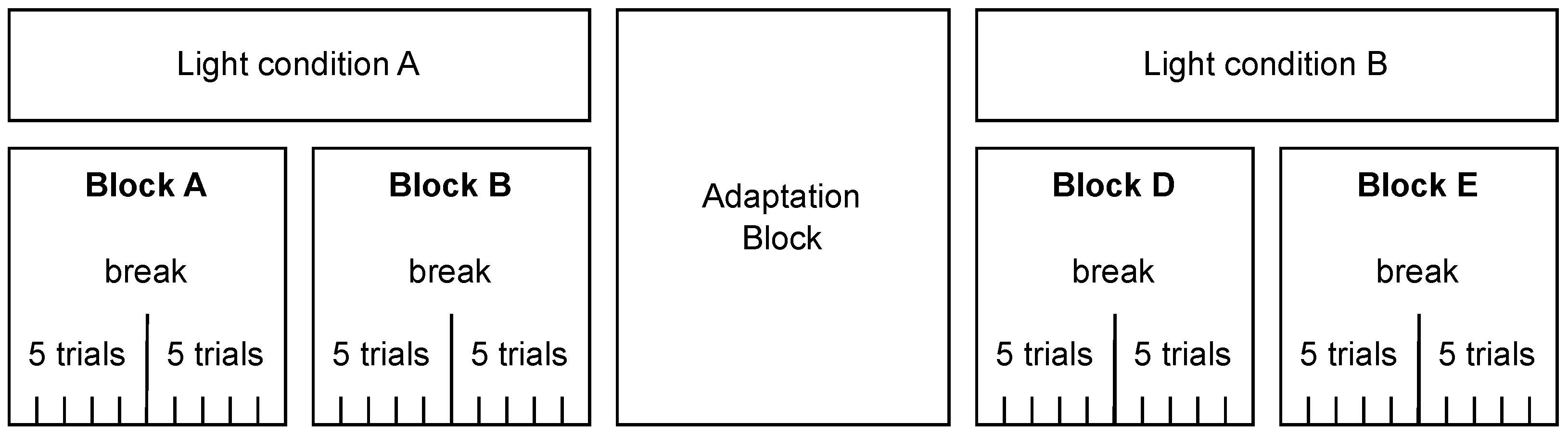

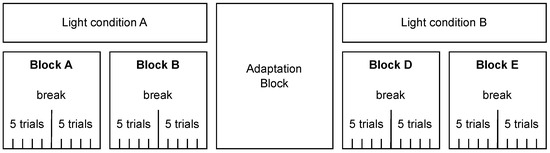

We used a within-subjects design with the factors light (on/off) and eyes (open/closed). Each participant completed four blocks (two per light condition; see Figure 1); the order of light conditions and blocks was randomized. Each block comprised 10 trials with the eye state (open/closed) randomized across blocks. The participants took a short break after every fifth trial. Between the two light conditions, an adaptation phase with lights off was inserted; the participants kept their eyes closed and listened to an audiobook read in English.

Figure 1.

Block diagram of the experimental design. Each participant completed four blocks of trials (Blocks A–B under light condition A and Blocks D–E under light condition B), with the order of light conditions and blocks randomized. Light conditions A and B could be lights on or off. Each block consisted of ten trials, with a short break after five trials. There was an adaptation phase with lights off and eyes closed between the two light conditions.

3.3. Apparatus

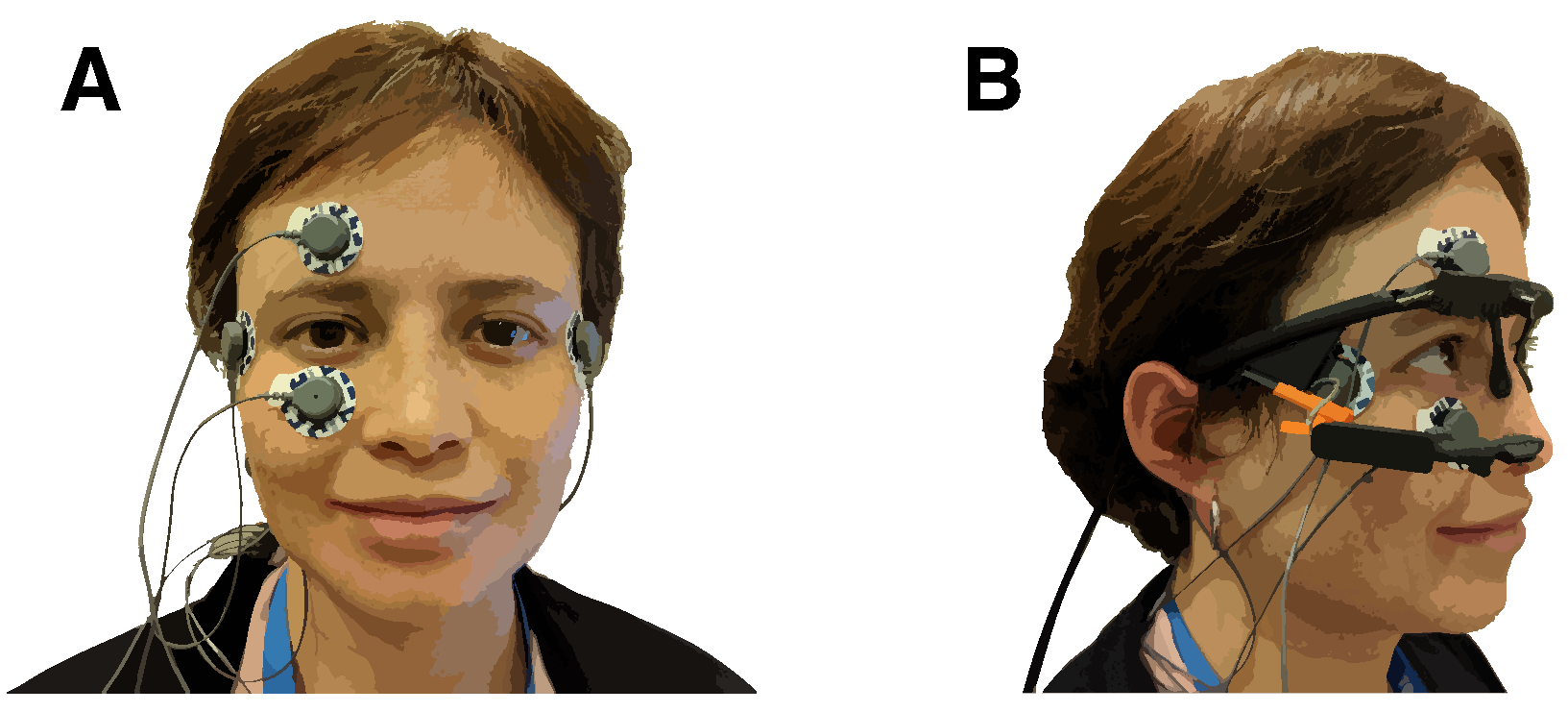

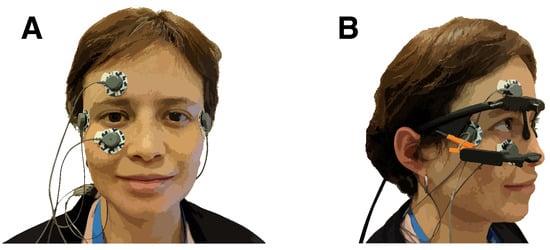

The experiment took place in a soundproof booth. The participants were seated at a table with their heads on a chin rest. In front of the participants, at a distance of 28 cm, was the target pattern on a cardboard square taped to a screen. The target pattern used in the experiment was made from round felt stickers. The participants wore active noise-canceling headphones (ATH-ANC7b-SViS, Audio-Technica, Tokyo, Japan) to hear the instructions and suppress environmental noise. The participants also wore eye-tracking glasses (Pupil Core, Pupil Labs, Berlin, Germany) with a world-facing camera and monocular tracking of the right eye [56]. The eye-tracking glasses were connected to a MacBook Air (3.1 GHz Intel Core i7, 16 GB RAM, 2017, macOS High Sierra, Apple, Cupertino, CA, USA) running Pupil Capture (version 1.13.31, Pupil Labs, Berlin, Germany). The experiment was controlled by custom software written in C++11 with openFrameworks 0.9.4 [57]. Eye movement was recorded using electro-oculography (EOG). The participants wore disposable electrodes (Kendall ARBO H124SG, CardinalHealth, Dublin, OH, USA) above and below their right eye (vertical direction) and outside their right and left eye (horizontal direction), as shown in Figure 2. A fifth electrode on the mastoid behind the right ear served as a reference. The electrodes were connected to a Shimmer3 ECG/EMG Bluetooth device (Shimmer, Dublin, Ireland) that was placed into a pouch strapped diagonally across the participants’ backs.

Figure 2.

(A) Electrode placement. Electrodes above and below the right eye were used to collect vertical eye movement. Electrodes below the temples at the lateral side of the eyes recorded horizontal eye movement. A ground electrode was attached to the right mastoid bone. (B) The eye tracker was placed above the electrodes.

An Arduino microcontroller (Arduino SA, Monza, Italy), connected to the MacBook Air via USB 2.0, received synchronization signals from the custom software over a serial port. A Shimmer3 Expansion device was connected to the Arduino with a 3.5 mm audio-jack cable and recorded the synchronization signals: the 5 V level on the audio-jack cable was interrupted for 50 ms whenever a synchronization signal was received. Both Shimmer devices recorded to internal memory and streamed data to a Lenovo IdeaPad 330S 15.6 (Lenovo, Beijing, China). Shimmer ConsensysPRO v1.5.0 was used to synchronize the recordings from both Shimmer devices and to export the data for further processing.
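Because each event is encoded as a 50 ms drop of the 5 V level, event onsets can be recovered from the recorded synchronization channel by searching for short low intervals. The following is a minimal sketch, not the authors' code: the 512 Hz sampling rate, the 2.5 V threshold, the ±15 ms duration tolerance, and the function name `find_sync_pulses` are all assumptions for illustration.

```python
import numpy as np


def find_sync_pulses(sync_v, fs, threshold_v=2.5, expected_ms=50.0, tol_ms=15.0):
    """Return sample indices where the sync line drops low for about expected_ms."""
    low = np.asarray(sync_v) < threshold_v
    # +1 where the line goes low (falling edge), -1 where it recovers.
    edges = np.diff(low.astype(np.int8))
    starts = np.flatnonzero(edges == 1) + 1
    ends = np.flatnonzero(edges == -1) + 1
    onsets = []
    for s in starts:
        later_ends = ends[ends > s]
        if later_ends.size == 0:
            break  # line still low at the end of the recording; ignore
        dur_ms = (later_ends[0] - s) * 1000.0 / fs
        if abs(dur_ms - expected_ms) <= tol_ms:
            onsets.append(s)
    return np.array(onsets, dtype=int)


# Synthetic 5 s recording with 50 ms interruptions at 1 s and 3 s.
fs = 512  # hypothetical sampling rate (Hz)
sync = np.full(5 * fs, 5.0)
for onset_s in (1.0, 3.0):
    i = int(onset_s * fs)
    sync[i:i + int(0.05 * fs)] = 0.0
onsets = find_sync_pulses(sync, fs)
print(onsets / fs)
```

Checking the pulse duration, rather than only detecting falling edges, guards against brief glitches on the line being mistaken for events.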

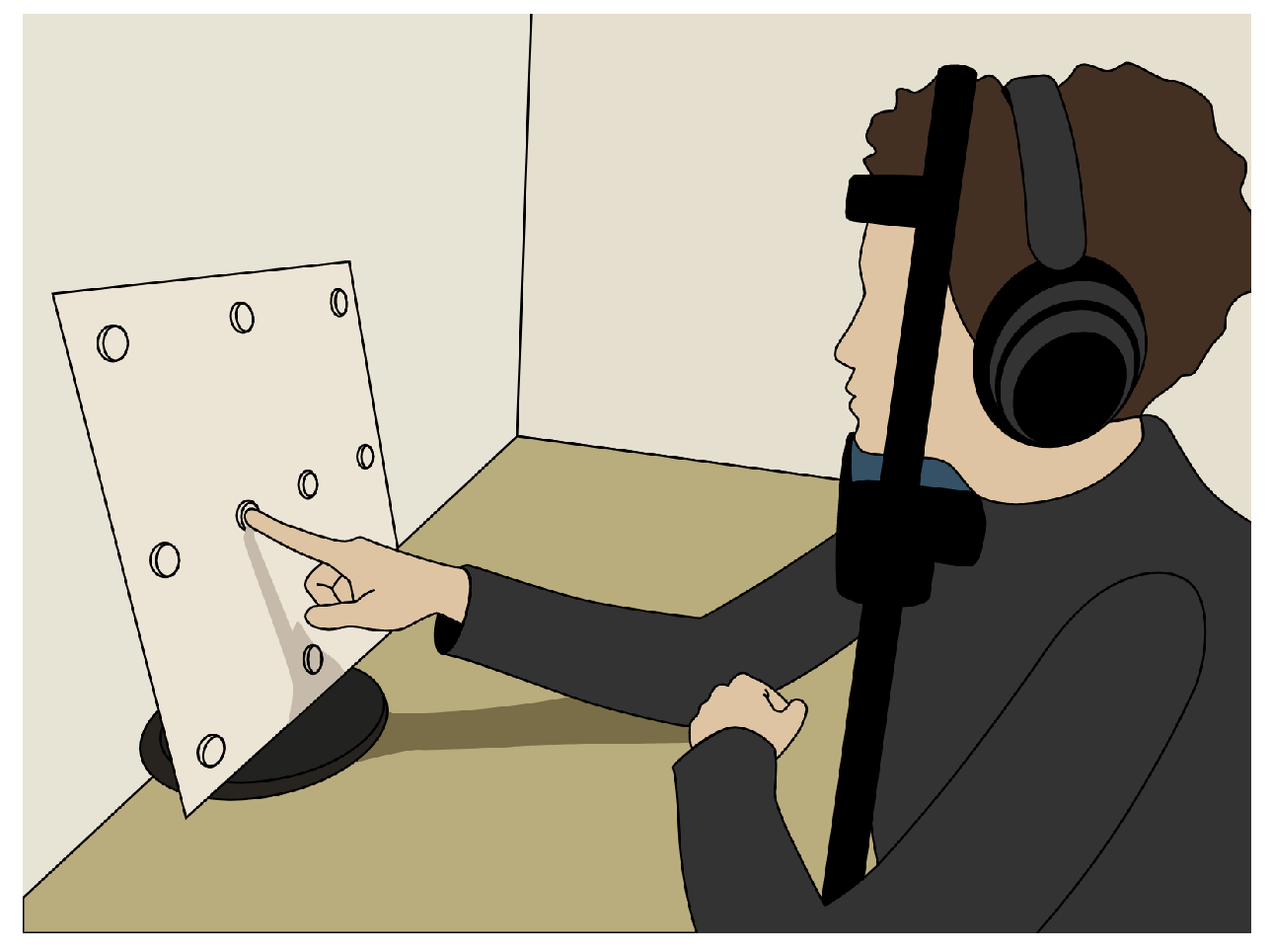

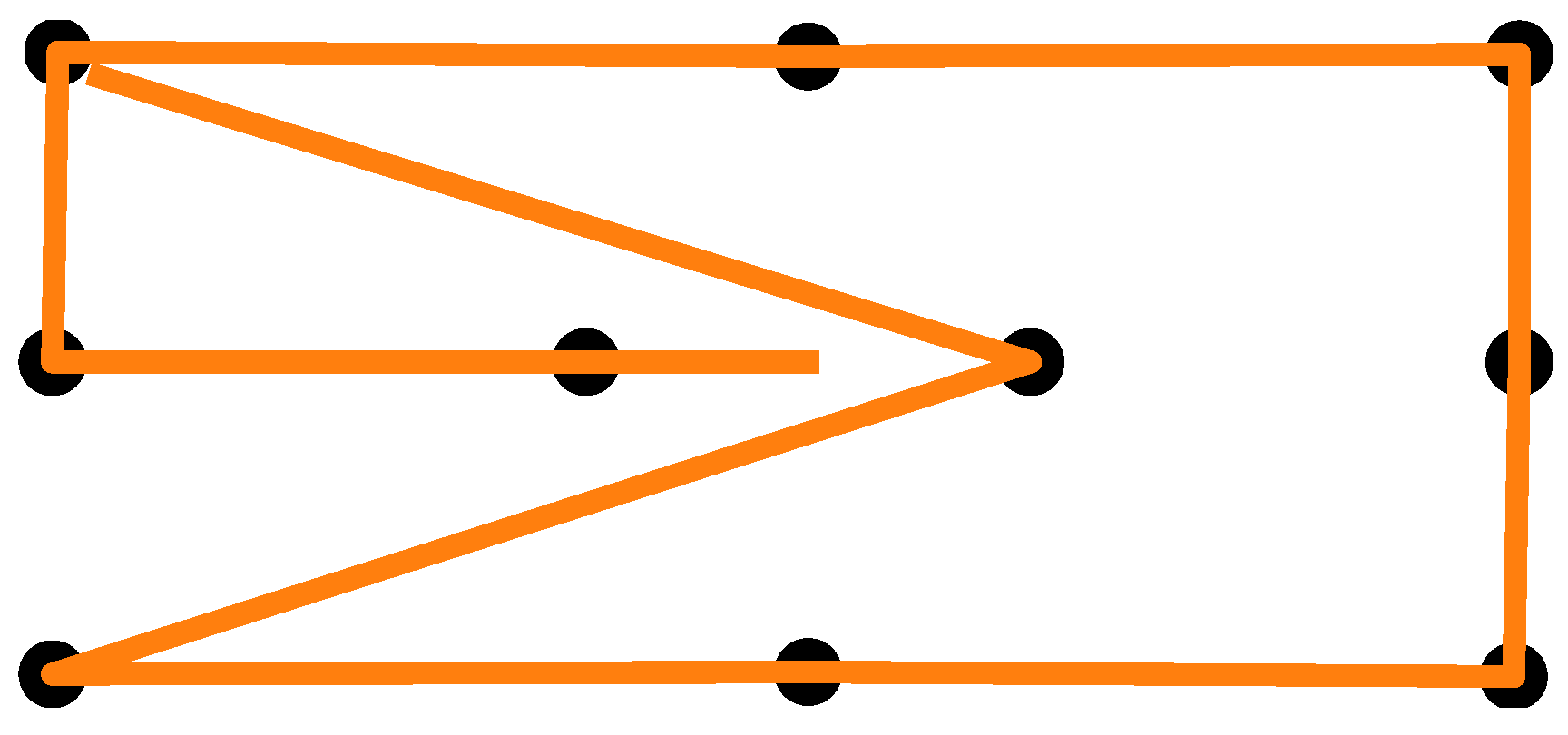

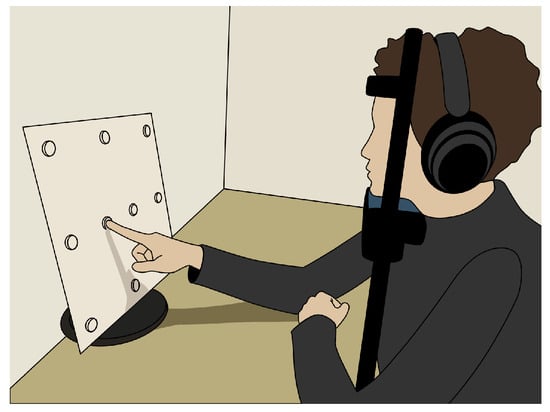

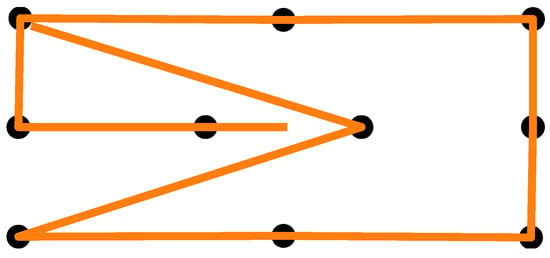

3.4. Procedure

After the participants gave consent to participate in the study, their skin above and below the right eye, at the outer corners of both eyes, and behind the right ear was cleaned with alcohol wipes. EOG electrodes were placed on the cleaned skin areas and connected to a Shimmer unit stored in a small bag over the participant’s shoulder. A quick visual check of the signal quality was performed to confirm the electrode placement. Next, the participants were given the eye-tracking glasses, and the experimenter adjusted the eye camera. The participants were asked to use a headrest mounted on the table in front of them to keep their head stable. A widescreen monitor was placed 28 cm in front of the headrest. The eye tracker was calibrated using the Tobii Glasses PyController 2.2.4 [58]. After successful calibration, a cardboard sheet featuring felt-dot tactile markers (raised dots, as shown in Figure 3) was attached to the monitor. The participants completed two to three practice trials to familiarize themselves with the tactile pattern and the auditory signals. The experiment employed a 2 × 2 within-subjects factorial design with lighting condition (lights on vs. lights off) and eye state (eyes open vs. eyes closed) as factors, resulting in four experimental conditions. The order of the lighting conditions was counterbalanced across participants, and within each lighting condition, the order of eye states was also counterbalanced. The participants performed two blocks in each lighting condition, with a short one-minute break after five trials and between blocks. Each block consisted of 10 trials. In all four experimental conditions, the participants were instructed to touch the markers on the cardboard with their index finger and direct their gaze toward the tip of their finger.
When the participants heard an instructional beep at 250 Hz, they moved their finger between the markers while maintaining real or imagined eye contact with their fingertip, depending on the experimental condition. The marker pattern is shown in Figure 4. Each trial began with a 2 s initial phase, during which the participants fixated on the center of the cardboard. Afterward, they sequentially fixated on the 10 markers shown in Figure 4, each for 2 s. The upper-left marker was visited twice, resulting in a total of 11 fixations. Together with the initial phase, this yielded a trial duration of 24 s (2 s + 11 × 2 s). After the first lighting condition, the lights in the room were turned off or kept off, and the participants closed their eyes for 15 min while listening to an English audiobook read by a male voice. This adaptation phase was intended to mitigate EOG signal drift caused by changes in lighting, but it was included for all condition orders. After the adaptation phase, the participants performed two more blocks in the other lighting condition, again with a one-minute break between blocks. After the experiment, the participants removed the eye-tracking glasses, and the experimenter disconnected the Shimmer unit and helped the participant remove the electrodes.
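The trial timing above defines a step-wise target trajectory: a 2 s center fixation followed by 11 fixations of 2 s each, or 24 s in total, which gives 720 samples at the 30 Hz analysis rate used later. The sketch below builds such a trajectory. The marker coordinates are hypothetical placeholders because the real layout is only shown in Figure 4, and the assumption that the repeated upper-left marker is visited first and last is ours, as is the function name `build_target_trajectory`.

```python
import numpy as np


def build_target_trajectory(fixation_xy, fs=30, dwell_s=2.0, start_xy=(0.0, 0.0)):
    """Step-wise (x, y) target: an initial center fixation, then each marker,
    each held for dwell_s seconds. Returns an (n_samples, 2) array."""
    points = np.asarray([start_xy] + list(fixation_xy), dtype=float)
    samples_per_fixation = int(round(dwell_s * fs))
    return np.repeat(points, samples_per_fixation, axis=0)


# Hypothetical layout of the 10 markers (normalized units, upper left first).
markers = [(-1, 1), (0, 1), (1, 1), (1, 0), (0, 0.5),
           (-1, 0), (-1, -1), (0, -1), (1, -1), (0, -0.5)]
sequence = markers + [markers[0]]  # upper-left marker visited twice -> 11 fixations
trajectory = build_target_trajectory(sequence)
print(trajectory.shape)  # (720, 2)
```

Separate horizontal and vertical target trajectories, as used in the analysis, correspond to the two columns of this array.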

Figure 3.

Sketch of the measurement setup. The participants were seated at a table with the target pattern in front of them. The head was stabilized by a chin rest, and the participants wore headphones.

Figure 4.

Calibration pattern used as a guide for the eye movement.

4. Analysis

4.1. Processing

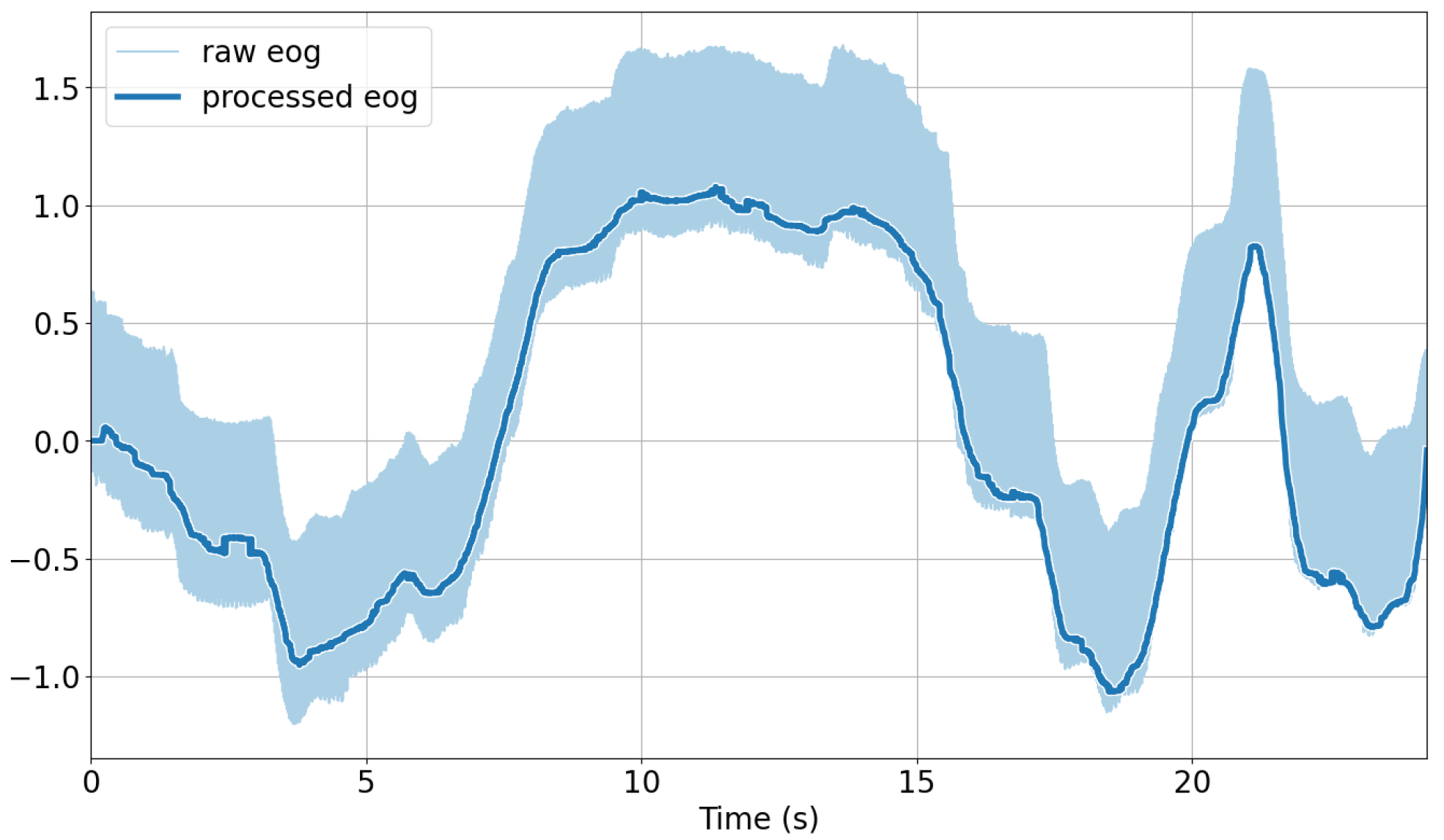

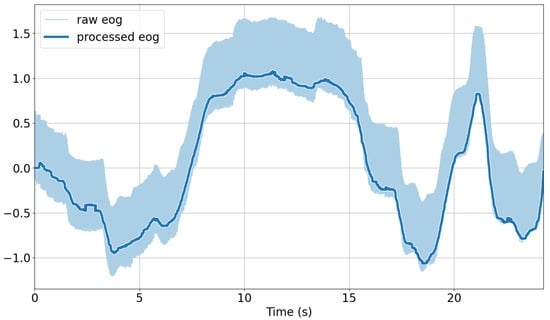

EOG: The signals were linearly detrended using the detrend function of the SciPy Python library [59]. A median filter with a window length of 200 ms was then applied to the detrended signals using SciPy’s medfilt function. Finally, blinks were removed from the vertical signal component using the algorithm devised by Bulling et al. [39], which identifies blinks and interpolates across them linearly. First, the continuous 1D wavelet coefficients were computed at a scale of 20 using a Haar mother wavelet. A blink was characterized by a large positive peak directly followed by a negative peak in the resulting coefficient vector. Peaks were detected where the coefficient magnitude exceeded the mean plus one standard deviation. Because the interval between the two peaks of a blink is typically shorter than 0.5 s, this value was used as the maximum peak-to-peak interval for classifying a peak pair as a blink. Each identified blink was then replaced by linear interpolation, using five samples on either side of the interval. For analysis purposes, a sliding-window standard deviation with a window length of 31 ms was also extracted. An illustration of the raw and preprocessed EOG signals is shown in Figure 5.
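The preprocessing chain described above can be sketched with SciPy as follows. This is one possible reading, not the authors' implementation: the Haar response is discretized as a difference of two half-window sums over `scale` samples, thresholds are set at the mean ± 1 SD of the coefficients, each blink is bridged from 5 samples before its positive peak to 5 samples after its negative peak, and the 250 Hz sampling rate and all function names are hypothetical.

```python
import numpy as np
from scipy.signal import detrend, find_peaks, medfilt


def odd_window(ms, fs):
    """Convert a window length in ms to an odd sample count (medfilt needs odd sizes)."""
    n = max(1, int(round(ms * fs / 1000.0)))
    return n if n % 2 == 1 else n + 1


def preprocess_eog(x, fs):
    """Linear detrend followed by a ~200 ms median filter."""
    return medfilt(detrend(np.asarray(x, dtype=float), type="linear"),
                   kernel_size=odd_window(200, fs))


def haar_response(x, scale=20):
    """Single-scale Haar response: (sum of the next half-window, including the
    current sample) minus (sum of the previous half-window), scaled by
    1/sqrt(scale). Positive on rising edges, negative on falling edges."""
    half = scale // 2
    kernel = np.r_[np.ones(half), -np.ones(half)] / np.sqrt(scale)
    return np.convolve(x, kernel, mode="same")


def remove_blinks(x, fs, scale=20, max_gap_s=0.5, pad=5):
    """Flag a blink where a positive peak is followed within max_gap_s by a
    negative peak, then bridge the segment (plus pad samples) linearly."""
    x = np.asarray(x, dtype=float).copy()
    c = haar_response(x, scale)
    upper, lower = c.mean() + c.std(), c.mean() - c.std()
    pos, _ = find_peaks(c, height=upper)    # peaks above mean + 1 SD
    neg, _ = find_peaks(-c, height=-lower)  # troughs below mean - 1 SD
    blinks = []
    for p in pos:
        candidates = neg[(neg > p) & (neg - p <= max_gap_s * fs)]
        if candidates.size:
            blinks.append((int(p), int(candidates[0])))
    for start, end in blinks:
        a, b = max(start - pad, 0), min(end + pad, x.size - 1)
        x[a:b + 1] = np.linspace(x[a], x[b], b - a + 1)
    return x, blinks


# Synthetic vertical EOG: a sustained gaze shift at 2 s and a 200 ms blink at 4 s.
fs = 250  # hypothetical sampling rate (Hz)
t = np.arange(6 * fs) / fs
rng = np.random.default_rng(1)
vertical = np.zeros(t.size)
vertical[t >= 2.0] += 2.0
vertical[(t >= 4.0) & (t < 4.2)] += 5.0
vertical += rng.normal(0.0, 0.05, t.size)

clean, blinks = remove_blinks(preprocess_eog(vertical, fs), fs)
print(blinks)
```

The pairing rule is what separates blinks from gaze shifts: the step at 2 s produces only a positive peak with no negative partner within 0.5 s, so it is preserved.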

Figure 5.

Comparison of raw and preprocessed EOG signals over time.

CV: The recordings of the eye-tracking glasses were filtered with a Hampel filter, which removes outliers that deviate from the median of a 100 ms sliding window by more than a set number of (robustly estimated) standard deviations. Finally, a median filter with a window length of 100 ms was applied.
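SciPy has no built-in Hampel filter, so a small NumPy implementation is sketched below, followed by SciPy's `medfilt`. The outlier threshold of 3 robust standard deviations (1.4826 × MAD) is a common default but an assumption here, as are the 120 Hz eye-camera rate, the rounding of 100 ms to a 13-sample window, and the function name `hampel`.

```python
import numpy as np
from scipy.signal import medfilt


def hampel(x, half_window, n_sigmas=3.0):
    """Replace samples that deviate from the local median by more than n_sigmas
    robust standard deviations (1.4826 * MAD) with that local median."""
    x = np.asarray(x, dtype=float)
    y = x.copy()
    for i in range(x.size):
        window = x[max(0, i - half_window): i + half_window + 1]
        med = np.median(window)
        sigma = 1.4826 * np.median(np.abs(window - med))
        if abs(x[i] - med) > n_sigmas * sigma:
            y[i] = med
    return y


fs = 120  # hypothetical eye-camera sampling rate (Hz)
half = int(round(0.1 * fs)) // 2  # ~100 ms window -> 2 * half + 1 = 13 samples
t = np.arange(2 * fs) / fs
gaze_x = np.sin(2 * np.pi * 0.5 * t)
gaze_x[50] += 10.0  # simulated tracking glitch
filtered = medfilt(hampel(gaze_x, half), kernel_size=2 * half + 1)
```

Using the median and MAD instead of the mean and standard deviation keeps a single large glitch from inflating the threshold that is supposed to catch it.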

Both the EOG and the eye-tracking signals were then transformed to z-scores (mean subtracted and divided by the standard deviation).

4.2. Comparison of EOG and Eye-Tracking Similarity Between Eyes-Open Lights-On and Lights-Off Conditions

To assess the similarity between the EOG and eye-tracking signals under different lighting conditions during eyes-open trials, we computed a grand-average time series for each participant, modality, and lighting condition by averaging the signals across the 10 corresponding trials (i.e., EOG and eye tracking, each with lights on and with lights off). One participant who did not complete the lights-on condition was excluded from this comparison. To quantify the alignment between EOG and eye tracking, we computed the cosine similarity between paired signals: (1) EOG vs. eye tracking under lights-on conditions and (2) EOG vs. eye tracking under lights-off conditions. This was performed separately for the horizontal and vertical components.
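Cosine similarity compares the shapes of two equally long signals regardless of their overall scale, which suits z-scored signals from different sensors. The sketch below uses hypothetical data (10 trials of 720 samples per modality that follow the same step-wise target plus independent noise). Z-scoring each trial before averaging is our assumption, and the helper names are hypothetical.

```python
import numpy as np


def zscore(x):
    """Subtract the mean and divide by the (population) standard deviation."""
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std()


def grand_average(trials):
    """Average an (n_trials, n_samples) array of z-scored trials over trials."""
    return np.mean([zscore(trial) for trial in trials], axis=0)


def cosine_similarity(a, b):
    """Cosine of the angle between two equally long signals (1 = same shape)."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Hypothetical data: 10 trials x 720 samples (24 s at 30 Hz) per modality.
rng = np.random.default_rng(2)
target = np.repeat([0, -1, 0, 1, 1, 0, -1, -1, 0, 1, 0, -1], 60).astype(float)
eog_trials = target + rng.normal(0.0, 0.5, size=(10, target.size))
et_trials = target + rng.normal(0.0, 0.5, size=(10, target.size))
similarity = cosine_similarity(grand_average(eog_trials), grand_average(et_trials))
print(f"cosine similarity: {similarity:.3f}")
```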

4.3. Similarity Between Ground Truth, Eye Tracking, and EOG

To assess the temporal similarity between the physiological signals and the target trajectories, we computed the normalized cross-correlation for each participant and each pair of signals of interest. The analysis compared the EOG signals (recorded during eyes-open and eyes-closed trials) and the eye-tracking signals (recorded during eyes-open trials) with the corresponding horizontal and vertical target trajectories. The cross-correlation was computed in full mode (i.e., over all overlapping lags), and the resulting coefficients and lags were normalized to the [0, 1] range. For this analysis, we used grand averages pooled across both lighting conditions. The eyes-closed EOG signals were averaged over the 20 eyes-closed trials from both lighting conditions. As no eye-tracking data were available for the eyes-closed conditions, only the EOG signals were analyzed in that context. Similarly, the eyes-open EOG and eye-tracking signals were averaged over the 20 eyes-open trials from both lighting conditions. One participant completed only half of the experiment (the lights-off condition, i.e., 10 trials per eye state); hence, the individual grand averages were based on either 10 or 20 trials. The group-level grand averages for each eye state were therefore calculated from a total of 210 trials (10 participants × 20 trials + 10 trials). These averaged signals were used in the subsequent statistical analyses.
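A full-mode normalized cross-correlation and its lag axis can be computed with `scipy.signal.correlate` and `scipy.signal.correlation_lags`. In the sketch below, the coefficients are normalized by the norms of the mean-removed signals so that they lie in [−1, 1], and min–max scaling is shown as one possible reading of the "[0, 1]" normalization, which the text does not specify further. The 300 ms delay, the noise level, and the helper names are hypothetical.

```python
import numpy as np
from scipy.signal import correlate, correlation_lags


def normalized_xcorr(x, y):
    """Full-mode cross-correlation of mean-removed signals, divided by the
    product of their norms so coefficients lie in [-1, 1]. A positive peak
    lag means x lags behind y."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    y = np.asarray(y, dtype=float) - np.mean(y)
    r = correlate(x, y, mode="full") / (np.linalg.norm(x) * np.linalg.norm(y))
    lags = correlation_lags(x.size, y.size, mode="full")
    return r, lags


def minmax(a):
    """Rescale an array linearly to the [0, 1] range."""
    a = np.asarray(a, dtype=float)
    return (a - a.min()) / (a.max() - a.min())


fs = 30  # Hz, as for the downsampled signals
target = np.repeat([0, -1, 0, 1, 1, 0, -1, -1, 0, 1, 0, -1], 60).astype(float)
delay = 9  # hypothetical 300 ms response delay
rng = np.random.default_rng(3)
eog = np.r_[np.full(delay, target[0]), target[:-delay]] + rng.normal(0.0, 0.05, target.size)

r, lags = normalized_xcorr(eog, target)
peak = int(np.argmax(r))
print(f"peak r = {r[peak]:.3f} at lag {lags[peak]} samples ({lags[peak] / fs:.2f} s)")
r01, lags01 = minmax(r), minmax(lags)
```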

4.4. Statistical Comparisons

All the statistical analyses were conducted in Python 3.8.18, utilizing the SciPy (v1.10.1) and Statsmodels (v0.14.1) libraries.

4.4.1. Comparing EOG and Eye-Tracking Signals for the Eyes-Open Condition

Time-series analyses were performed for the eyes-open condition to compare the processed EOG and eye-tracking signals of each participant along the horizontal and vertical directions. For each participant, we used the grand average of the normalized signals across all eyes-open trials and compared the two modalities at each time point. Both the EOG and eye-tracking signals were downsampled to the eye tracker’s frame rate of 30 Hz, so each 24 s trial consisted of 720 time-aligned samples. Before the statistical comparison, we assessed the normality of the paired differences using the Shapiro–Wilk test. If the normality assumption was met, we used a parametric paired t-test; otherwise, we used a non-parametric Wilcoxon signed-rank test. In addition, we applied two one-sided tests (TOST) for equivalence to evaluate whether the differences between modalities were small enough to be considered practically negligible. The smallest effect size of interest (SESOI) was set to a Cohen’s d of 0.1, which is often interpreted as a trivially small effect according to widely used benchmarks [60,61]. We converted this SESOI into raw measurement units using the standard deviation of the paired differences, yielding symmetric lower and upper equivalence bounds. The equivalence test assessed whether the 90% confidence interval (CI) of the mean difference fell entirely within these bounds, indicating practical equivalence.
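The decision procedure above (normality check on the paired differences, then a paired t-test or Wilcoxon test, plus a TOST with bounds of ±0.1 × SD of the differences) can be sketched with SciPy and statsmodels as follows. The function name `compare_modalities` and the synthetic grand averages are hypothetical. Note that `ttost_paired` is t-based, so this sketch uses a parametric TOST regardless of the normality outcome; the text does not state which TOST variant was used.

```python
import numpy as np
from scipy import stats
from statsmodels.stats.weightstats import ttost_paired


def compare_modalities(eog, et, sesoi_d=0.1, alpha=0.05):
    """Paired difference test chosen by Shapiro-Wilk on the paired differences,
    plus a paired TOST with bounds of +/- sesoi_d * SD(differences)."""
    eog, et = np.asarray(eog, dtype=float), np.asarray(et, dtype=float)
    diff = eog - et
    if stats.shapiro(diff).pvalue > alpha:
        name, p_diff = "paired t-test", stats.ttest_rel(eog, et).pvalue
    else:
        name, p_diff = "Wilcoxon signed-rank", stats.wilcoxon(eog, et).pvalue
    # Convert the SESOI (Cohen's d for paired data) into raw units.
    bound = sesoi_d * diff.std(ddof=1)
    # TOST at alpha = 0.05 corresponds to the 90% CI lying within +/- bound.
    p_tost, _, _ = ttost_paired(eog, et, -bound, bound)
    return {"test": name, "p_difference": float(p_diff), "bound": float(bound),
            "p_tost": float(p_tost), "equivalent": bool(p_tost < alpha)}


# Hypothetical grand averages: 720 samples (24 s at 30 Hz) per modality.
rng = np.random.default_rng(4)
shared = np.repeat([0, -1, 0, 1, 1, 0, -1, -1, 0, 1, 0, -1], 60).astype(float)
eog_avg = shared + rng.normal(0.0, 0.1, shared.size)
et_avg = shared + rng.normal(0.0, 0.1, shared.size)
result = compare_modalities(eog_avg, et_avg)
print(result)
```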

4.4.2. Comparing EOG Signals and the Ground Truth Trajectory for the Eyes-Closed Condition

Because camera-based eye tracking cannot serve as a reference when the eyes are closed, we conducted a control analysis using a surrogate data approach, following the method described in [53], to evaluate whether the EOG signals captured the target trajectories during the eyes-closed conditions. Specifically, we compared the mean absolute error (MAE) of the true data (EOG signals aligned with their paired target trajectories) with that of the surrogate data (EOG signals aligned with shuffled target trajectories). The surrogate data were generated by randomly shuffling the trajectory sequence 100 times to avoid bias from any single random permutation. After checking for normality with Shapiro–Wilk tests, we applied either a paired t-test or a non-parametric Wilcoxon signed-rank test to determine the statistical significance of the MAE differences.
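A minimal sketch of the surrogate comparison is shown below. It assumes that "shuffling the trajectory sequence" means randomly reordering the 2 s fixation segments (60 samples at 30 Hz) and averaging the MAE over 100 shuffles. The data for 11 participants are simulated, and the helper names are hypothetical.

```python
import numpy as np
from scipy import stats


def mae(a, b):
    """Mean absolute error between two equally long signals."""
    return float(np.mean(np.abs(np.asarray(a, dtype=float) - np.asarray(b, dtype=float))))


def surrogate_mae(eog, target, seg_len, n_shuffles=100, rng=None):
    """Average MAE between eog and copies of target whose fixation segments
    (seg_len samples each) are randomly reordered."""
    rng = np.random.default_rng() if rng is None else rng
    segments = np.asarray(target, dtype=float).reshape(-1, seg_len)
    return float(np.mean([mae(eog, segments[rng.permutation(len(segments))].ravel())
                          for _ in range(n_shuffles)]))


def paired_test(true_vals, surrogate_vals, alpha=0.05):
    """Shapiro-Wilk on the paired differences, then paired t-test or Wilcoxon."""
    true_vals, surrogate_vals = np.asarray(true_vals), np.asarray(surrogate_vals)
    if stats.shapiro(true_vals - surrogate_vals).pvalue > alpha:
        return "paired t-test", float(stats.ttest_rel(true_vals, surrogate_vals).pvalue)
    return "Wilcoxon signed-rank", float(stats.wilcoxon(true_vals, surrogate_vals).pvalue)


rng = np.random.default_rng(5)
target = np.repeat([0, -1, 0, 1, 1, 0, -1, -1, 0, 1, 0, -1], 60).astype(float)
true_maes, surr_maes = [], []
for _ in range(11):  # 11 simulated participants
    eog = target + rng.normal(0.0, 0.5, target.size)
    true_maes.append(mae(eog, target))
    surr_maes.append(surrogate_mae(eog, target, seg_len=60, rng=rng))
test_name, p = paired_test(true_maes, surr_maes)
print(test_name, p)
```

Averaging over many permutations yields a stable chance-level estimate per participant, so that the paired test compares each participant's true MAE against a single representative surrogate value.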

5. Results

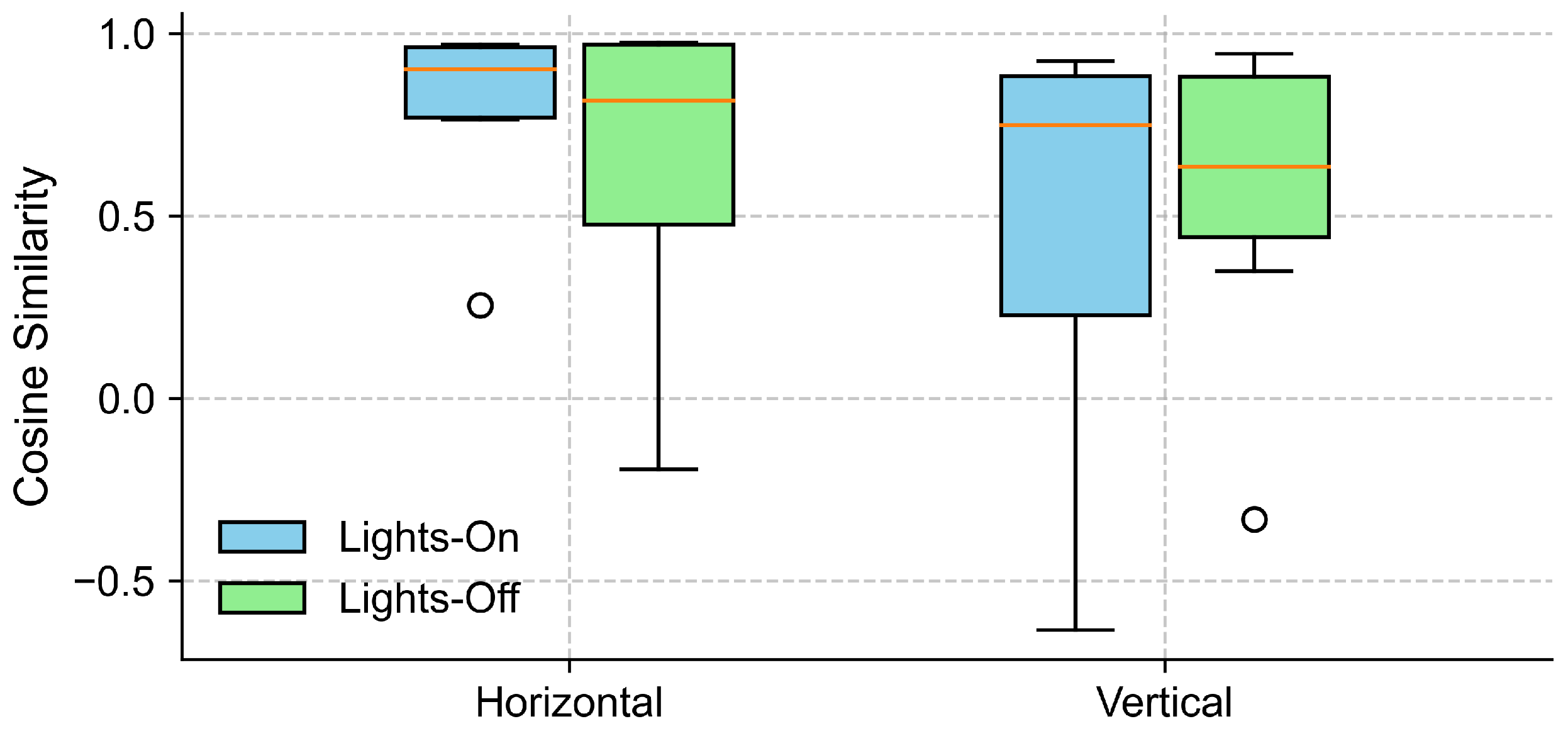

5.1. Effect of Lighting Conditions on Similarity Between EOG and Eye-Tracking Signals Under Eyes-Open Conditions

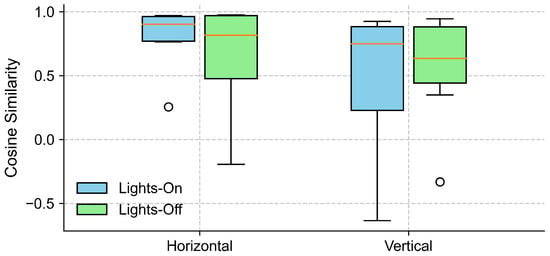

To examine the effect of lighting conditions on the alignment between the EOG and eye-tracking signals, we computed the cosine similarity between the grand-averaged EOG and eye-tracking signals separately for lights-on and lights-off conditions, in both the horizontal and vertical gaze directions (see Section 4.2). As shown in Figure 6, the horizontal direction yielded a high cosine similarity across the participants under both lighting conditions, with slightly higher and less variable values observed for the lights-on trials. In contrast, the cosine similarity in the vertical direction exhibited a greater variability.
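For reference, the cosine similarity between two grand-averaged traces x and y is x·y / (‖x‖‖y‖). A minimal Python sketch is shown below; we assume the signals have already been normalized as described in Section 4.2 (that preprocessing is not reproduced here), and the function name is illustrative.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length signal vectors, in [-1, 1]."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


# Scaling one signal does not change the similarity; a phase shift does.
t = np.linspace(0.0, 2 * np.pi, 1000, endpoint=False)
eog = np.sin(t)
print(cosine_similarity(eog, 3.0 * eog))              # 1.0 (up to rounding)
print(cosine_similarity(eog, np.sin(t + np.pi / 3)))  # cos(pi/3) = 0.5
```

Because the measure is invariant to a uniform gain, a difference in amplitude units between EOG and the eye tracker does not lower it, whereas temporal misalignment or a DC offset does. In the analysis above, it would be computed once per participant, direction, and lighting condition.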

Figure 6.

Cosine similarity between EOG and eye-tracking signals under different lighting conditions. The boxplots show the distribution of cosine similarity values across participants for each gaze direction (horizontal and vertical), comparing lights-on (blue) and lights-off (green) conditions. Each box represents the interquartile range (IQR), with the horizontal line indicating the median, and the whiskers extending to the minimum and maximum values within 1.5 × IQR. Circles represent outliers.

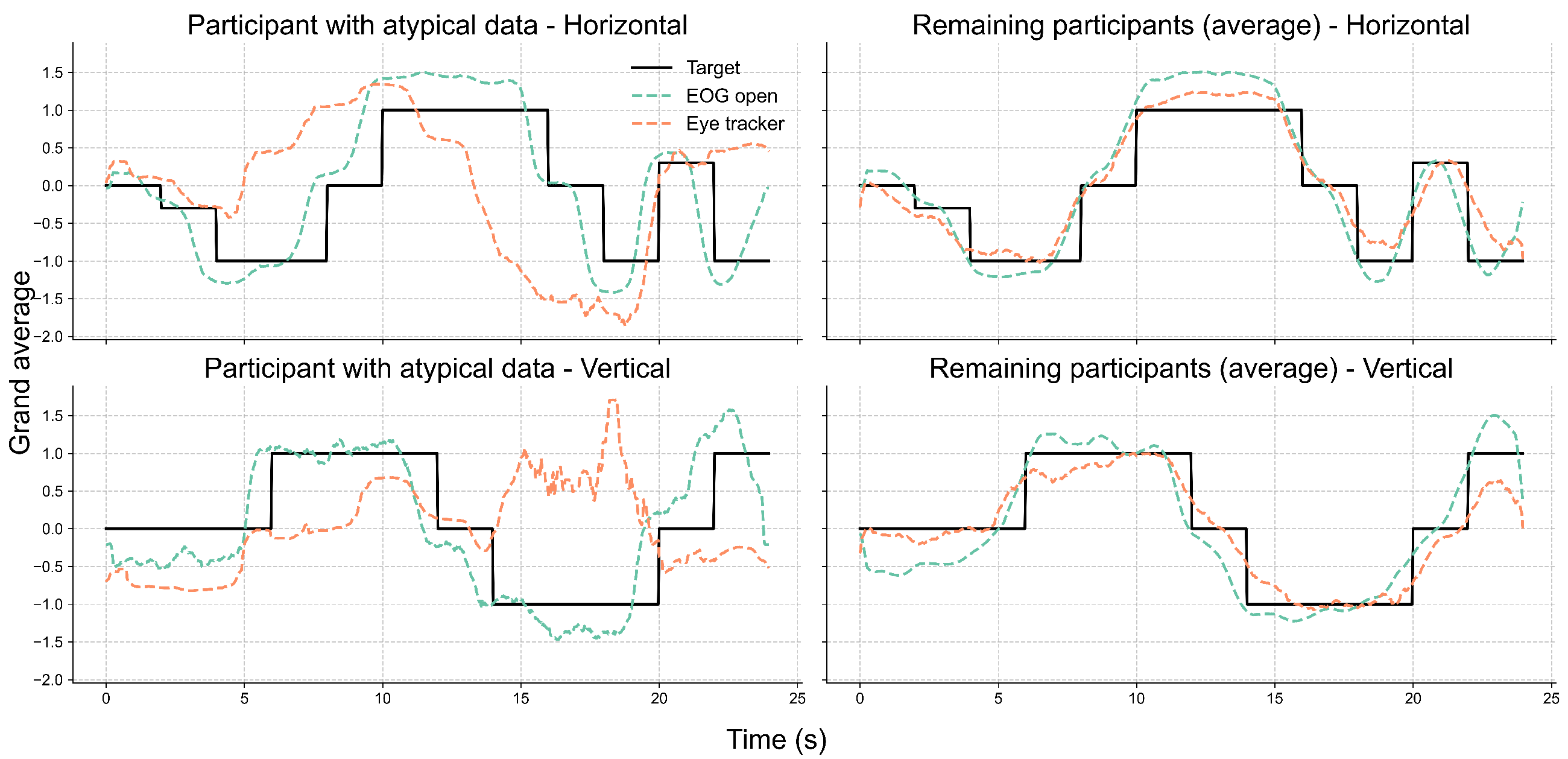

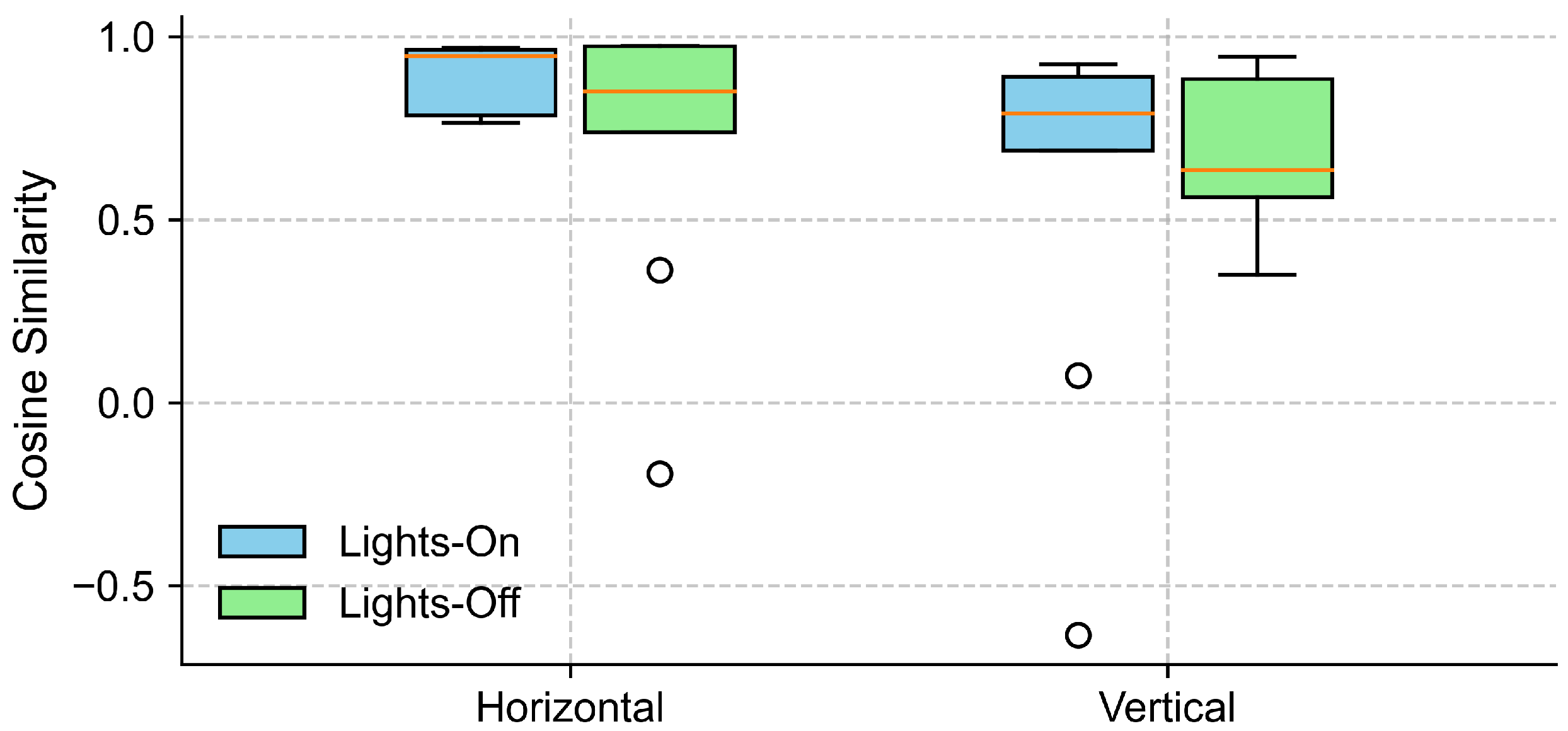

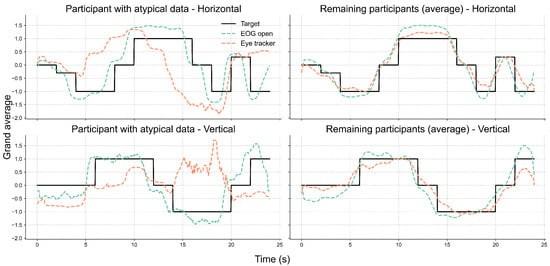

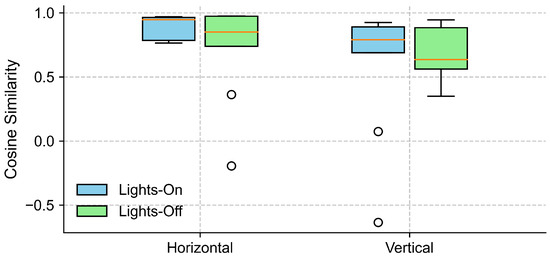

Further inspection revealed that the extreme outliers were primarily attributable to the data from one participant. This participant had myopia requiring –6D corrective lenses and was not wearing their glasses during the experiment. Figure 7 illustrates the EOG and eye-tracking signals during the eyes-open trials for this participant and the average signals from the remaining participants. The eye-tracking signals from this participant were poorly aligned with both the target trajectory and the corresponding EOG signals. In contrast, the EOG signals were unaffected by the participant’s uncorrected myopia and closely followed the target trajectory. Therefore, we recalculated the cosine similarity after excluding this participant. The updated results in Figure 8 show a clear reduction in variability across all conditions, particularly in the vertical direction under the lights-on condition. Table 2 summarizes the statistical tests assessing the effect of lighting conditions. We first tested the cosine similarity values under the “lights-on” and “lights-off” conditions for normality using the Shapiro–Wilk test. Most conditions deviated significantly from normality, except for the vertical “lights-off” condition (Table 2). Based on these results, we employed the non-parametric Wilcoxon signed-rank test to compare the similarity scores between the lighting conditions. No significant differences were found in either the horizontal or the vertical direction (Table 2), and the corresponding effect sizes were small to moderate.
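The text does not state which effect size accompanies the Wilcoxon tests. A common choice is r = |Z|/√n, with rough benchmarks of 0.1 (small), 0.3 (moderate), and 0.5 (large). The sketch below assumes that definition, computes Z from the normal approximation of the signed-rank statistic with the usual tie correction, and takes n as the number of non-zero paired differences; the function name and data are hypothetical. SciPy’s own p-value may come from the exact distribution, so r and p are not derived from the same approximation.

```python
import numpy as np
from scipy import stats


def wilcoxon_with_r(x, y):
    """Wilcoxon signed-rank p-value plus effect size r = |Z| / sqrt(n).

    Z is the normal approximation of the signed-rank statistic with the
    standard tie correction; n counts the non-zero paired differences.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    d = x - y
    d = d[d != 0]                           # zero differences are discarded
    n = d.size
    ranks = stats.rankdata(np.abs(d))       # tied |d| share an average rank
    t_plus = ranks[d > 0].sum()             # sum of ranks of positive differences
    mean = n * (n + 1) / 4
    _, tie_sizes = np.unique(ranks, return_counts=True)
    var = n * (n + 1) * (2 * n + 1) / 24 - np.sum(tie_sizes**3 - tie_sizes) / 48
    z = (t_plus - mean) / np.sqrt(var)
    r = abs(z) / np.sqrt(n)
    p = stats.wilcoxon(x, y).pvalue
    return float(p), float(r)


# Hypothetical per-participant cosine similarities (lights-on vs. lights-off).
lights_on = np.linspace(0.80, 0.95, 14)
lights_off = lights_on - np.arange(1, 15) * 0.01   # every participant drops
print(wilcoxon_with_r(lights_on, lights_off))      # r is about 0.88
```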

Figure 7.

Comparison of eye movement signals between a participant with myopia and uncorrected vision and the group average. Each subplot displays the grand-averaged signals over time for the horizontal (top row) and vertical (bottom row) directions. The left column shows data from one participant whose eye-tracking signals exhibited low alignment with the EOG and target trajectories. The right column presents the average signals from the remaining participants, excluding that case. Black lines represent the target trajectory, while dashed lines indicate the EOG (green) and eye-tracker (orange) signals recorded under the eyes-open condition.

Figure 8.

Cosine similarity between EOG and eye-tracking signals under different lighting conditions, excluding data from one participant with myopia (–6D corrective lens) and uncorrected vision. The boxplots show the distribution of cosine similarity values across participants for each gaze direction (horizontal and vertical), comparing lights-on (blue) and lights-off (green) conditions. Each box represents the interquartile range (IQR), with the horizontal line indicating the median, and the whiskers extending to the minimum and maximum values within 1.5 × IQR. Circles represent outliers.

Table 2.

Normality (Shapiro–Wilk) and Wilcoxon signed-rank test results for cosine similarity between lights-on and lights-off conditions.

5.2. Grand Average of Instructed Trajectory vs. Sensed Gaze Across Lighting Conditions

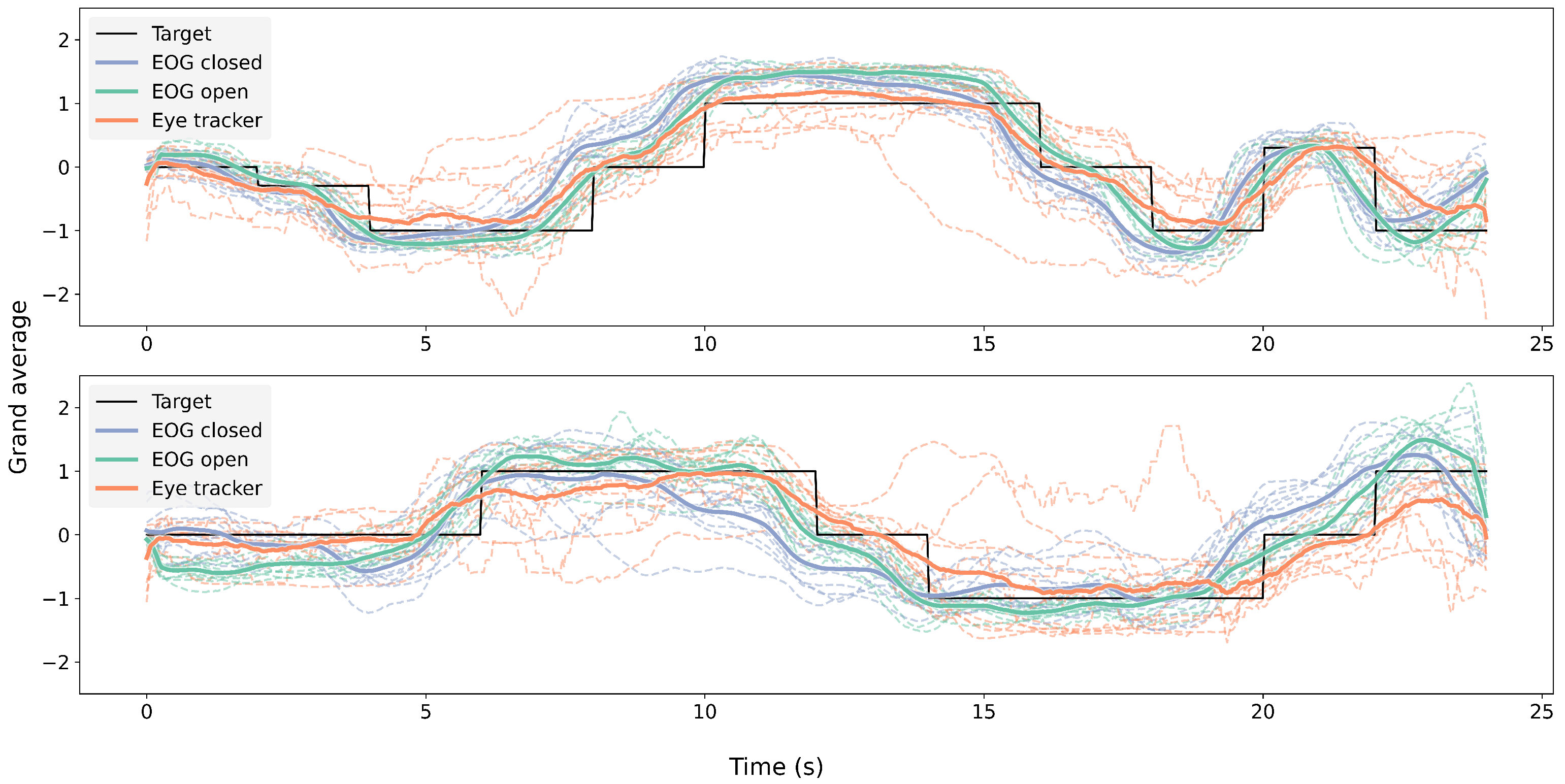

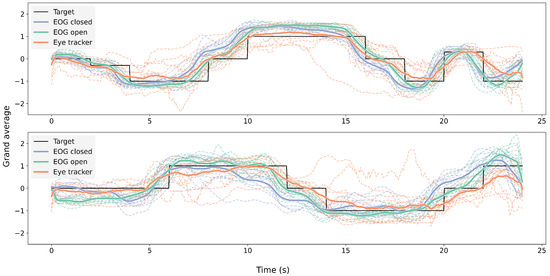

Figure 9 shows the grand average of EOG signals during the eyes-open and eyes-closed trials, the eye-tracking signals during the eyes-open trials, and the corresponding target trajectories in both the horizontal and vertical directions across 11 participants.

Figure 9.

Grand average per participant of EOG with open (green) and closed (blue) eyes and eye-tracking signals (orange) with open eyes. The upper graph shows horizontal eye movement, while the bottom graph shows vertical eye movement.

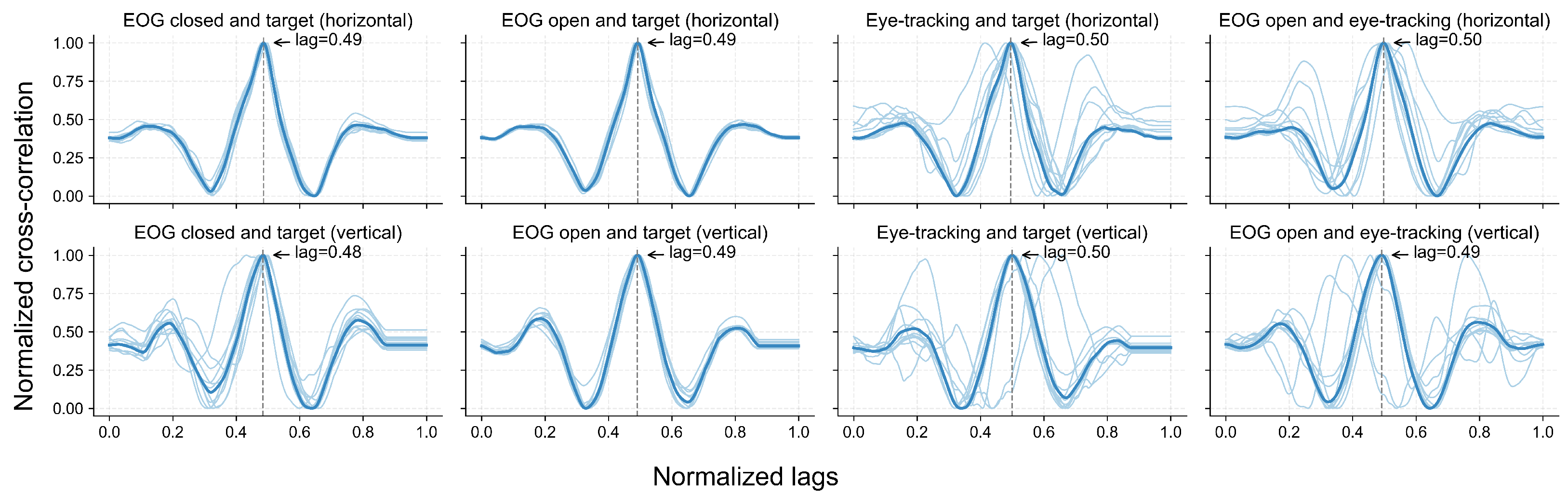

5.3. Cross-Correlation Between Instructed Trajectory and Sensed Gaze

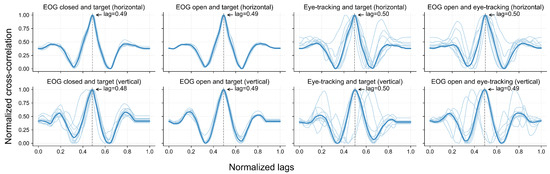

Figure 10 illustrates the cross-correlation between pairs of signals, or between a signal and the target trajectory, in the horizontal and vertical directions. After the lag axis was scaled to the [0, 1] range, a correlation peak at a normalized lag of about 0.5 indicates that the two signals were most strongly correlated when they were temporally aligned; that is, a peak at 0.5 corresponds to zero lag. Across all comparisons, the group-level cross-correlation curves exhibited a clear peak near a normalized lag of 0.5. In the horizontal direction, the average delay between the EOG and eye-tracking signals was approximately 50 ms, with the EOG preceding the eye-tracking signal. In the vertical direction, this delay was approximately 200 ms, again with the EOG preceding the eye-tracking signal.
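The relation between the normalized lag axis and the delay in milliseconds can be illustrated with a short sketch. It assumes a full cross-correlation of z-scored, equal-length signals computed with `numpy.correlate`; the exact normalization used for Figure 10 is not specified in the text, and the function name and synthetic signals are hypothetical. For n samples, the normalized lag is (lag + n − 1)/(2n − 2), so zero lag maps to 0.5, and the lag resolution is one sample period, 1/fs (about 33 ms at 30 Hz).

```python
import numpy as np


def xcorr_peak(a, b, fs):
    """Peak of the cross-correlation between equal-length signals a and b.

    Returns (lag_samples, lag_ms, normalized_lag). A positive lag means that
    `a` is a delayed copy of `b` (i.e., `b` precedes `a`). The normalized lag
    maps the lag range [-(n-1), n-1] onto [0, 1], so zero lag lies at 0.5.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.size != b.size:
        raise ValueError("signals must have equal length")
    n = a.size
    a = (a - a.mean()) / a.std()             # z-score so the peak is scale-free
    b = (b - b.mean()) / b.std()
    c = np.correlate(a, b, mode="full") / n  # c[k] = sum_m a[m + k] * b[m]
    lags = np.arange(-(n - 1), n)
    i = int(np.argmax(c))
    lag = int(lags[i])
    return lag, 1000.0 * lag / fs, i / (c.size - 1)


# Synthetic check: `eye_tracker` is the EOG trace delayed by 3 samples at 30 Hz.
rng = np.random.default_rng(0)
base = rng.standard_normal(400)
eog = base[10:310]
eye_tracker = base[7:307]          # eye_tracker[m] == eog[m - 3]
lag, lag_ms, norm_lag = xcorr_peak(eye_tracker, eog, fs=30.0)
print(lag, lag_ms, round(norm_lag, 3))   # 3 100.0 0.505
```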

Figure 10.

Normalized cross-correlation between eye movement signals and target trajectories. Each subplot shows the normalized cross-correlation between signal pairs or between a signal and the target trajectory, including EOG under eyes-open and eyes-closed conditions, eye-tracking data under eyes-open conditions, and target trajectories, in both horizontal and vertical directions. Light blue lines represent individual participants (N = 11), while the dark blue line indicates the group-level results. Dashed vertical lines mark the lag at which the maximum correlation occurs.

5.4. Comparison of EOG and Eye-Tracking Signals Under Eyes-Open Conditions

Shapiro–Wilk tests indicated that the data deviated from a normal distribution. Therefore, we used non-parametric Wilcoxon signed-rank tests instead of paired t-tests and applied the Bonferroni correction for multiple comparisons.
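The Bonferroni correction multiplies each p-value by the number of comparisons m (capping at 1), which is equivalent to comparing the raw p-values with α/m. The number of comparisons included in the correction is not stated in the text, so m = 4 in this minimal sketch is purely hypothetical.

```python
import numpy as np


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: min(1, m * p) for m comparisons."""
    p = np.asarray(p_values, dtype=float)
    return np.minimum(1.0, p * p.size)


# Hypothetical example with m = 4 comparisons; significant if adjusted p < 0.05.
adjusted = bonferroni([0.004, 0.02, 0.3, 0.6])
print(adjusted)  # approximately [0.016, 0.08, 1.0, 1.0]
```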

Table 3 summarizes the results of the Wilcoxon signed-rank tests and equivalence tests for the horizontal and vertical gaze components under eyes-open conditions. The test statistics and equivalence outcomes for each individual participant are reported. Additionally, the All row indicates the group-level analysis based on the average signals across all participants.

Table 3.

Summary of Wilcoxon signed-rank tests and equivalence tests (TOSTs) comparing EOG and eye-tracking signals for both the horizontal (X) and vertical (Y) gaze directions under eyes-open conditions, including both individual and group-level results.

At the group level, no statistically significant differences were observed between the EOG and eye-tracking signals for horizontal or vertical movements (Table 3, “All” row). Additionally, the 90% confidence intervals of the mean differences ([−0.019, 0.019] for horizontal; [−0.027, 0.027] for vertical) fell entirely within the predefined equivalence bounds, confirming statistical equivalence. This pattern was consistent across all participants: all p-values exceeded 0.05, and all 90% confidence intervals remained within the participant-specific equivalence bounds. Even in cases with relatively wide bounds (e.g., P01 and P05), the equivalence criteria were still met. These results show that the EOG and eye-tracking signals did not differ significantly and were statistically equivalent in both spatial dimensions.

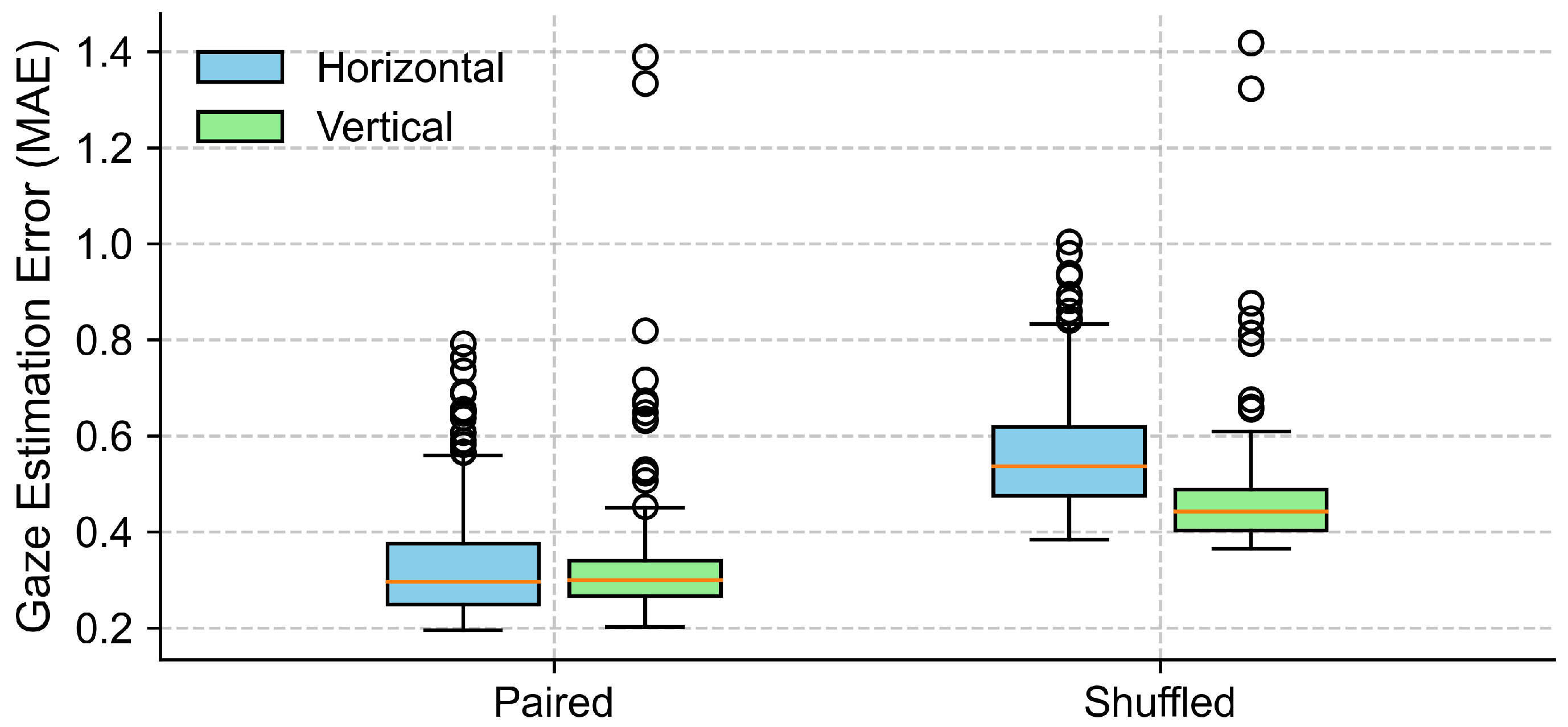

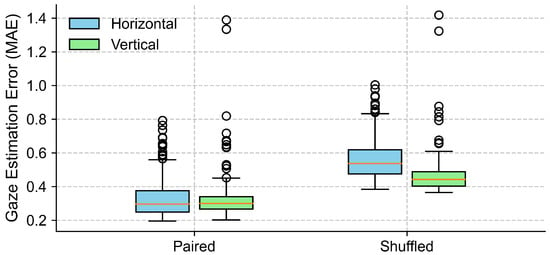

5.5. Control Analysis of EOG Accuracy Under Eyes-Closed Conditions Using Surrogate Data

To evaluate how well EOG signals captured gaze trajectories under eyes-closed conditions, we compared the MAE between the true data and surrogate data in the horizontal and vertical directions. As shown in Figure 11 and Table 4, the paired condition yielded lower MAEs than the shuffled condition. In the horizontal direction, the average MAE increased from the paired to the shuffled condition (Table 4). The Shapiro–Wilk test indicated a violation of the normality assumption (p < 0.001), so a non-parametric Wilcoxon signed-rank test was applied. This test confirmed a statistically significant difference between the conditions (p < 0.001), with a large effect size (Cohen’s d = 4.58). Similarly, in the vertical direction, the average MAE rose from the paired to the shuffled condition (Table 4). The normality assumption was again violated (Shapiro–Wilk p < 0.001), and the Wilcoxon signed-rank test confirmed that the difference was statistically significant (p < 0.001), with an effect size of Cohen’s d = 1.85. These results suggest that EOG signals contain meaningful information about the eye movement direction during eye closure, and that the gaze direction estimated from EOG was significantly more accurate than that derived from the shuffled control data, which reflect chance-level performance.

Figure 11.

Comparison of gaze estimation errors (MAE) between true (paired) and surrogate (shuffled) data for both horizontal and vertical directions. Each box represents the distribution of trial-level mean absolute errors (MAEs), with lower values indicating better alignment between EOG signals and target trajectories. The boxes represent the interquartile range (IQR), with the horizontal line indicating the median, and the whiskers extending to the minimum and maximum values within 1.5 × IQR. Circles represent outliers.

Table 4.

Comparison of gaze estimation error (MAE) between true (paired) and surrogate (shuffled) data for X and Y axes.

6. Discussion

We designed an experiment to evaluate gaze direction estimation with EOG and an eye tracker under four conditions: lights on and eyes open, lights on and eyes closed, lights off and eyes open, and lights off and eyes closed. A gaze direction was instructed using audio and proprioceptive cues. With these data, we assessed the agreement between the gaze direction estimates from EOG and video-based eye tracking. Our results demonstrated a strong alignment between EOG-based and eye-tracking-based gaze signals in both the horizontal and vertical directions when the participants’ eyes were open. We compared the similarity between the EOG and eye-tracking signals under both lights-on and lights-off conditions to understand whether ambient lighting influenced gaze measurements. The results showed a comparable performance across both conditions. In addition, we investigated whether detecting the eye movement direction using EOG under the eyes-closed condition is possible. During the eyes-closed trials, the EOG signals retained meaningful directional trends that followed the target trajectory that the participants were instructed to follow. Our statistical analyses also confirmed that the EOG-based gaze estimates were significantly more accurate than chance.

In the literature, ambient light is recognized as a confounding factor for eye tracking and, to a lesser extent, for EOG. However, our results showed that the similarity between the EOG and eye-tracking signals remained high and comparable under both lights-on and lights-off conditions (see Figure 8). The statistical tests showed no significant differences between lighting conditions in either the horizontal or vertical direction, indicating no detectable effect of ambient illumination on signal alignment in this sample (see Table 2). The eye-tracking system performed stably regardless of lighting, likely because its infrared (IR) illuminator enables robust tracking even in the absence of visible light. One participant with myopia exhibited consistently lower similarity values under both lighting conditions and in both directions. This discrepancy was likely due to frequent squinting caused by the uncorrected refractive error. Squinting could have partially occluded the eyes and thereby hindered video-based eye tracking. Nevertheless, the EOG signals from this participant remained well aligned with the target trajectory, which highlights the usability of EOG under such challenging conditions.

The grand-average signals from both modalities closely followed the target trajectory over time across both lighting conditions, as illustrated in Figure 9, with some deviation across participants. The cross-correlation analysis (Figure 10) further supported this finding, revealing consistent correlation peaks near a normalized lag of 0.5 across all signal pairs. Notably, the EOG signals tended to slightly precede the eye-tracking signals, which may reflect the earlier detection of oculomotor activity via EOG compared to the visual-capture latency of eye trackers. Similar observations of electromyography (EMG) signals preceding computer vision detection have been reported in prior studies [62,63].

Furthermore, we found EOG tracking to be statistically equivalent to eye tracking when the eyes were open, as indicated by the Wilcoxon signed-rank tests and two one-sided tests (TOSTs) in both the horizontal and vertical directions; with the eyes closed, EOG followed the instructed gaze trajectory significantly better than chance. On closer inspection, the alignment between EOG and eye tracking was stronger in the horizontal direction than in the vertical direction. This was reflected in the higher similarity and tighter overlap between the horizontal EOG and eye-tracking signals (Figure 8 and Figure 9), the sharper and more consistent cross-correlation peaks (Figure 10), and the smaller MAE and larger effect size for the horizontal axis in the gaze estimation error analysis (Table 4). This finding aligns with previous studies noting that vertical EOG signals were often too noisy and too strongly influenced by horizontal ocular activity to extract useful vertical eye movement features [54,55]. The reduced reliability of vertical EOG signals may be explained by their greater susceptibility to noise and to artifacts from involuntary actions such as blinking and squinting.

With eyes closed, the gaze detected from the EOG signals preserved a direction that was qualitatively consistent with the target trajectory, as shown in Figure 9. Our quantitative analysis (Figure 11, Table 4) further confirmed that the EOG-based gaze estimates were significantly more accurate than those derived from shuffled (chance-level) data, for both the horizontal and vertical components. The large effect sizes observed underscore the robustness of this effect. To further assess the adequacy of our sample size, we conducted a post hoc power analysis based on the observed effect sizes (Cohen’s d = 4.58 for horizontal and d = 1.85 for vertical error comparisons). With a sample size of 11 participants and a significance level of alpha = 0.05 (two-tailed), the statistical power for both comparisons exceeded 0.99. These results indicate that our study had sufficient power to detect the observed effects.
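The post hoc power calculation can be reproduced approximately with the noncentral t distribution. The text does not state which software or test model was used; this sketch assumes a two-sided paired t-test (a one-sample t-test on the differences) and treats the reported Cohen’s d values as the paired effect size d_z. Since the actual comparisons used Wilcoxon signed-rank tests, the result is only an approximation, and the function name is illustrative.

```python
import numpy as np
from scipy import stats


def paired_t_power(d_z, n, alpha=0.05):
    """Power of a two-sided paired t-test (one-sample t-test on differences)."""
    df = n - 1
    nc = d_z * np.sqrt(n)                    # noncentrality parameter
    t_crit = stats.t.ppf(1 - alpha / 2, df)  # two-tailed critical value
    # P(|T| > t_crit) when T follows a noncentral t distribution.
    return float(stats.nct.sf(t_crit, df, nc) + stats.nct.cdf(-t_crit, df, nc))


for label, d in [("horizontal", 4.58), ("vertical", 1.85)]:
    print(label, round(paired_t_power(d, n=11), 4))
```

With d = 0, the function returns the nominal α, which is a quick sanity check of the two-tailed setup.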

Together, our findings support the feasibility of using EOG as a viable alternative to video-based eye tracking under standard conditions, in which video-based tracking may still suffer from reduced accuracy due to eyelid interference or blinking, as we observed in one myopic participant. Furthermore, both video-based and conventional EOG-based gaze estimation methods typically require a calibration procedure that relies on visual fixation and is therefore limited to open-eye conditions. In contrast, the proposed proprioceptive calibration method enables EOG-based gaze estimation without visual input, making it a promising standalone tool for use with the eyes closed or whenever visual input is unavailable. This is especially important for investigating the relationship between eye movements and sensory processes other than vision. For example, it could be used to study eye movements while participants listen to sound stimuli with their eyes closed, as in sleep research, and to measure the eye movements of blind individuals. Enabling eyes-closed tracking could serve as the basis for uncovering the physiological mechanisms of automatic eye movements during navigation, such as head and gaze anticipation in both blind and sighted people [31]. Other applications of EOG measurement with the eyes closed include detecting involuntary eye movements during closed-eye periods [64], which would allow sleep quality assessment or the early detection of diseases such as Parkinson’s disease [65,66]. Moreover, in interaction design, blind people no longer use their eyes for vision, which frees an interaction channel under voluntary movement control. This new channel would be especially useful when the hands are occupied as tactile sensory substitution devices and are therefore unavailable for freely interacting with and manipulating objects.

Given its simplicity, cost-effectiveness, and robustness to lighting and occlusion, EOG could be integrated into mobile or wearable systems where camera-based solutions are less practical.

7. Limitations and Future Work

The proprioceptive calibration method proposed in this study provides a practical solution for estimating the gaze direction in scenarios where visual input is unavailable or the eyes are closed. Unlike common calibration techniques that depend on visual fixation, this method derives user-specific parameters for interpreting EOG signals by guiding users through tactile targets and auditory instructions. However, even for identical eye movements, the resulting EOG signals can vary substantially across individuals and recording sessions due to anatomical differences and variability in electrode placement. Therefore, although the calibration is effective within a session, it must be repeated for new sessions or users to ensure consistent accuracy. Developing robust methods that generalize across users and sessions without repeated calibration is a valuable and challenging direction for future research. In particular, future work could explore techniques that compensate for variations in electrode placement or that model user-specific signal characteristics. It would also be worthwhile to compare the proposed proprioceptive calibration with alternative methods suitable for closed-eye conditions, such as those based on verbal instructions. In this study, we prioritized multiple short calibration trials and did not evaluate the long-term robustness of the method in continuous, single-session recordings. While the temporal robustness of EOG-based gaze estimation has been well studied under open-eye conditions, such analyses have yet to be extended to closed-eye scenarios. Future work should investigate the stability of EOG signals and the performance of the gaze estimation method over extended recording periods with the eyes closed, to assess its reliability in long-term applications such as sleep monitoring or assistive interfaces.

Our results revealed that vertical gaze direction estimates tended to be noisier and less reliable than horizontal ones (Figure 8, Figure 9 and Figure 10). This imbalance was reflected in weaker alignment with CV-based gaze estimates and larger errors along the vertical axis. Future work should explore signal-processing techniques or sensor configurations that improve the quality of vertical EOG signals and thereby the robustness of full 2D gaze estimation. Moreover, the current study focused primarily on offline signal processing and statistical validation to demonstrate the feasibility of the proposed method. Extending this work to real-time closed-eye gaze estimation is a critical next step toward practical, interactive applications.

Another limitation of this study is that the evaluations were conducted in the laboratory. While this was important for validating the core method under consistent conditions, it limited insight into the method’s real-world applicability, particularly in dynamic or mobile environments such as wearable or assistive technologies. Future work should evaluate the sensing performance in real-world settings to assess its practical usability in gaze-based interaction applications. Additionally, we observed a notable drop in performance in one myopic participant’s CV-based eye-tracking data, while their EOG signals remained stable and of good quality. This case highlights the need to further investigate system performance across a broader range of user profiles and levels of visual acuity. Future work should include a more diverse participant pool to better capture inter-individual variability and strengthen the external validity of the findings. Investigating user-specific factors that affect signal quality and system performance, such as anatomical variation, ocular conditions, and the use of corrective lenses during EOG recording, will be essential for developing more generalizable and user-adaptive EOG-based gaze estimation systems.

Author Contributions

Conceptualization: M.P.-H. and F.D.; Data Curation: M.P.-H. and F.D.; Formal Analysis: M.P.-H., F.D. and X.W.; Funding Acquisition: M.P.-H.; Investigation: M.P.-H., F.D. and X.W.; Methodology: M.P.-H., F.D. and X.W.; Software: F.D.; Supervision: M.P.-H. and K.K.; Validation: M.P.-H., F.D., X.W. and K.K.; Visualization: F.D. and X.W.; Writing—Original Draft Preparation: M.P.-H., F.D. and X.W.; Writing—Review and Editing Preparation: M.P.-H., F.D., X.W. and K.K. All authors have read and agreed to the published version of the manuscript.

Funding

X.W. was supported by Japan Society for the Promotion of Science (25KJ182900); K.K., X.W. and M.P.-H. were supported by Japan Society for the Promotion of Science (22H00539); and M.P.-H. was supported by Japan Society for the Promotion of Science (22K21309 and 25K21250).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all participants involved in the study.

Data Availability Statement

The original data presented in the study are openly available in OSF: https://doi.org/10.17605/OSF.IO/PTVKQ (accessed on 26 October 2025).

Acknowledgments

We would like to thank Shimpei Yamagishi for their technical and administrative support.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the study’s design; in the collection, analysis, or interpretation of data; or in the writing of the manuscript.

References

- Chang, W.D.; Cha, H.S.; Kim, D.Y.; Kim, S.H.; Im, C.H. Development of an electrooculogram-based eye-computer interface for communication of individuals with amyotrophic lateral sclerosis. J. Neuroeng. Rehabil. 2017, 14, 89.

- Chieh, T.C.; Mustafa, M.M.; Hussain, A.; Hendi, S.F.; Majlis, B.Y. Development of vehicle driver drowsiness detection system using electrooculogram (EOG). In Proceedings of the 2005 1st International Conference on Computers, Communications, & Signal Processing with Special Track on Biomedical Engineering, Kuala Lumpur, Malaysia, 14–16 November 2005; pp. 165–168.

- Fukuda, K.; Stern, J.A.; Brown, T.B.; Russo, M.B. Cognition, blinks, eye-movements, and pupillary movements during performance of a running memory task. Aviat. Space Environ. Med. 2005, 76, C75–C85.

- Tsai, Y.F.; Viirre, E.; Strychacz, C.; Chase, B.; Jung, T.P. Task performance and eye activity: Predicting behavior relating to cognitive workload. Aviat. Space Environ. Med. 2007, 78, B176–B185.

- Lee, K.R.; Chang, W.D.; Kim, S.; Im, C.H. Real-Time “Eye-Writing” Recognition Using Electrooculogram. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 37–48.

- Katz, B.F.G.; Kammoun, S.; Parseihian, G.; Gutierrez, O.; Brilhault, A.; Auvray, M.; Truillet, P.; Denis, M.; Thorpe, S.; Jouffrais, C. NAVIG: Augmented reality guidance system for the visually impaired. Virtual Real. 2012, 16, 253–269.

- Bleau, M.; Kafle, K.; Wang, M.; Kabore, S.S.; Cueva-Vargas, J.L.; Nemargut, J.P. International prevalence of tactile map usage and its impact on navigational independence and well-being of people with visual impairments. Sci. Rep. 2025, 15, 27245.

- Xu, C.; Israr, A.; Poupyrev, I.; Bau, O.; Harrison, C. Tactile display for the visually impaired using TeslaTouch. In Proceedings of the CHI ’11 Extended Abstracts on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; pp. 317–322.

- Duchowski, A.T.; House, D.H.; Gestring, J.G. Comparing Estimated Gaze Depth in Virtual and Physical Environments. In Proceedings of the Symposium on Eye Tracking Research & Applications (ETRA), Safety Harbor, FL, USA, 26–28 March 2014; Association for Computing Machinery: New York, NY, USA, 2014.

- Kuo, T.; Shih, K.; Chung, S.; Chen, H.H. Depth from Gaze. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 2910–2914.

- Schirm, J.; Gómez-Vargas, A.R.; Perusquía-Hernández, M.; Skarbez, R.T.; Isoyama, N.; Uchiyama, H.; Kiyokawa, K. Identification of Language-Induced Mental Load from Eye Behaviors in Virtual Reality. Sensors 2023, 23, 6667.

- Batmaz, A.U.; Turkmen, R.; Sarac, M.; Barrera Machuca, M.D.; Stuerzlinger, W. Re-investigating the Effect of the Vergence-Accommodation Conflict on 3D Pointing. In Proceedings of the 29th ACM Symposium on Virtual Reality Software and Technology, VRST ’23, New York, NY, USA, 9–11 October 2023.

- Hansen, D.W.; Ji, Q. In the Eye of the Beholder: A Survey of Models for Eyes and Gaze. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 478–500.

- Constable, P.A.; Bach, M.; Frishman, L.; Jeffrey, B.G.; Robson, A.G. Request for Input from the ISCEV Membership: ISCEV Standard EOG (2017) Update–October 2016 Draft. Available online: https://www.iscev.org/standards/pdfs/ISCEV-EOG-Standard-2017-Draft-01.pdf (accessed on 24 June 2025).

- Zibandehpoor, M.; Alizadehziri, F.; Larki, A.A.; Teymouri, S.; Delrobaei, M. Electrooculography Dataset for Objective Spatial Navigation Assessment in Healthy Participants. Sci. Data 2025, 12, 553.

- Robert, F.M.; Otheguy, M.; Nourrit, V.; de Bougrenet de la Tocnaye, J.L. Potential of a Laser Pointer Contact Lens to Improve the Reliability of Video-Based Eye-Trackers in Indoor and Outdoor Conditions. J. Eye Mov. Res. 2024, 17, 1–16.

- Kourkoumelis, N.; Tzaphlidou, M. Eye Safety Related to Near Infrared Radiation Exposure to Biometric Devices. Sci. World J. 2011, 11, 902610.

- Gowrisankaran, S.; Sheedy, J.E.; Hayes, J.R. Eyelid Squint Response to Asthenopia-Inducing Conditions. Optom. Vis. Sci. 2007, 84, 611.

- Bacharach, J.; Lee, W.W.; Harrison, A.R.; Freddo, T.F. A review of acquired blepharoptosis: Prevalence, diagnosis, and current treatment options. Eye 2021, 35, 2468–2481.

- Ripa, M.; Cuffaro, G.; Pafundi, P.C.; Valente, P.; Battendieri, R.; Buzzonetti, L.; Mattei, R.; Rizzo, S.; Savino, G. An epidemiologic analysis of the association between eyelid disorders and ocular motility disorders in pediatric age. Sci. Rep. 2022, 12, 8840.

- Yan, C.; Pan, W.; Dai, S.; Xu, B.; Xu, C.; Liu, H.; Li, X. FSKT-GE: Feature maps similarity knowledge transfer for low-resolution gaze estimation. IET Image Process. 2024, 18, 1642–1654.

- Yan, C.; Pan, W.; Xu, C.; Dai, S.; Li, X. Gaze Estimation via Strip Pooling and Multi-Criss-Cross Attention Networks. Appl. Sci. 2023, 13, 5901.

- Robinson, D.A. A Method of Measuring Eye Movement Using a Scleral Search Coil in a Magnetic Field. IEEE Trans. Bio-Med. Electron. 1963, 10, 137–145.

- Sprenger, A.; Neppert, B.; Köster, S.; Gais, S.; Kömpf, D.; Helmchen, C.; Kimmig, H. Long-term eye movement recordings with a scleral search coil-eyelid protection device allows new applications. J. Neurosci. Methods 2008, 170, 305–309.

- Creel, D.J. The electrooculogram. In Handbook of Clinical Neurology; Clinical Neurophysiology: Basis and Technical Aspects; Levin, K.H., Chauvel, P., Eds.; Elsevier: Amsterdam, The Netherlands, 2019; Volume 160, Chapter 33; pp. 495–499.

- Majaranta, P.; Bulling, A. Eye Tracking and Eye-Based Human–Computer Interaction. In Advances in Physiological Computing; Fairclough, S.H., Gilleade, K., Eds.; Human–Computer Interaction Series; Springer: London, UK, 2014; pp. 39–65. [Google Scholar] [CrossRef]

- Mack, D.J.; Schönle, P.; Fateh, S.; Burger, T.; Huang, Q.; Schwarz, U. An EOG-based, head-mounted eye tracker with 1 kHz sampling rate. In Proceedings of the 2015 IEEE Biomedical Circuits and Systems Conference (BioCAS), Atlanta, GA, USA, 22–24 October 2015; pp. 1–4. [Google Scholar] [CrossRef]

- Le Meur, O.; Le Callet, P.; Barba, D. Predicting visual fixations on video based on low-level visual features. Vis. Res. 2007, 47, 2483–2498. [Google Scholar] [CrossRef]

- Andersson, R.; Nyström, M.; Holmqvist, K. Sampling Frequency and Eye-Tracking Measures: How Speed Affects Durations, Latencies, and More. J. Eye Mov. Res. 2009, 3, 1–12. [Google Scholar] [CrossRef]

- Darling, W.G.; Wall, B.M.; Coffman, C.R.; Capaday, C. Pointing to One’s Moving Hand: Putative Internal Models Do Not Contribute to Proprioceptive Acuity. Front. Hum. Neurosci. 2018, 12, 177. [Google Scholar] [CrossRef] [PubMed]

- Dollack, F.; Perusquía-Hernández, M.; Kadone, H.; Suzuki, K. Head Anticipation During Locomotion with Auditory Instruction in the Presence and Absence of Visual Input. Front. Hum. Neurosci. 2019, 13, 293. [Google Scholar] [CrossRef] [PubMed]

- van Gorp, H.; van Gilst, M.M.; Overeem, S.; Dujardin, S.; Pijpers, A.; van Wetten, B.; Fonseca, P.; van Sloun, R.J.G. Single-channel EOG sleep staging on a heterogeneous cohort of subjects with sleep disorders. Physiol. Meas. 2024, 45, 055007. [Google Scholar] [CrossRef] [PubMed]

- Herman, J.H.; Erman, M.; Boys, R.; Peiser, L.; Taylor, M.E.; Roffwarg, H.P. Evidence for a Directional Correspondence Between Eye Movements and Dream Imagery in REM Sleep. Sleep 1984, 7, 52–63. [Google Scholar] [CrossRef]

- LaBerge, S.; Baird, B.; Zimbardo, P.G. Smooth tracking of visual targets distinguishes lucid REM sleep dreaming and waking perception from imagination. Nat. Commun. 2018, 9, 3298. [Google Scholar] [CrossRef]

- Senzai, Y.; Scanziani, M. A cognitive process occurring during sleep is revealed by rapid eye movements. Science 2022, 377, 999–1004. [Google Scholar] [CrossRef]

- Bulling, A.; Roggen, D.; Tröster, G. It’s in your eyes: Towards context-awareness and mobile HCI using wearable EOG goggles. In Proceedings of the 10th International Conference on Ubiquitous Computing, UbiComp ’08, Seoul, Republic of Korea, 21–24 September 2008; Association for Computing Machinery: New York, NY, USA, 2008; pp. 84–93.

- Bulling, A.; Roggen, D.; Tröster, G. Wearable EOG goggles: Eye-based interaction in everyday environments. In Proceedings of the CHI ’09 Extended Abstracts on Human Factors in Computing Systems, CHI EA ’09, Boston, MA, USA, 4–9 April 2009; Association for Computing Machinery: New York, NY, USA, 2009; pp. 3259–3264.

- Chang, W.D. Electrooculograms for Human–Computer Interaction: A Review. Sensors 2019, 19, 2690.

- Bulling, A.; Ward, J.A.; Gellersen, H.; Tröster, G. Eye Movement Analysis for Activity Recognition Using Electrooculography. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 741–753.

- Merino, M.; Rivera, O.; Gómez, I.; Molina, A.; Dorronzoro, E. A Method of EOG Signal Processing to Detect the Direction of Eye Movements. In Proceedings of the 2010 First International Conference on Sensor Device Technologies and Applications, Venice, Italy, 18–25 July 2010; pp. 100–105.

- Hládek, L.; Porr, B.; Brimijoin, W.O. Real-time estimation of horizontal gaze angle by saccade integration using in-ear electrooculography. PLoS ONE 2018, 13, e0190420.

- Toivanen, M.; Pettersson, K.; Lukander, K. A probabilistic real-time algorithm for detecting blinks, saccades, and fixations from EOG data. J. Eye Mov. Res. 2015, 8, 1–14.

- Chang, W.D.; Cha, H.S.; Im, C.H. Removing the Interdependency between Horizontal and Vertical Eye-Movement Components in Electrooculograms. Sensors 2016, 16, 227.

- Ryu, J.; Lee, M.; Kim, D.H. EOG-based eye tracking protocol using baseline drift removal algorithm for long-term eye movement detection. Expert Syst. Appl. 2019, 131, 275–287.

- Yan, M.; Tamura, H.; Tanno, K. A Study on Gaze Estimation System using Cross-Channels Electrooculogram Signals. In Proceedings of the International MultiConference of Engineers and Computer Scientists 2014, Hong Kong, 12–14 March 2014.

- Manabe, H.; Fukumoto, M.; Yagi, T. Direct Gaze Estimation Based on Nonlinearity of EOG. IEEE Trans. Biomed. Eng. 2015, 62, 1553–1562.

- Barbara, N.; Camilleri, T.A.; Camilleri, K.P. EOG-Based Gaze Angle Estimation Using a Battery Model of the Eye. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 6918–6921.

- Leigh, R.; Zee, D. Eye movements of the blind. Investig. Ophthalmol. Vis. Sci. 1980, 19, 328–331.

- Hsieh, C.W.; Chen, H.S.; Jong, T.L. The study of the relationship between electro-oculogram and the features of closed eye motion. In Proceedings of the 2008 International Conference on Information Technology and Applications in Biomedicine, Shenzhen, China, 30–31 May 2008; pp. 420–422.

- Findling, R.; Nguyen, L.; Sigg, S. Closed-Eye Gaze Gestures: Detection and Recognition of Closed-Eye Movements with Cameras in Smart Glasses. In Proceedings of the Advances in Computational Intelligence: 15th International Work-Conference on Artificial Neural Networks, IWANN 2019, Gran Canaria, Spain, 12–14 June 2019; pp. 322–334.

- Findling, R.D.; Quddus, T.; Sigg, S. Hide my Gaze with EOG! Towards Closed-Eye Gaze Gesture Passwords that Resist Observation-Attacks with Electrooculography in Smart Glasses. In Proceedings of the 17th International Conference on Advances in Mobile Computing & Multimedia, MoMM2019, Munich, Germany, 2–4 December 2019; Association for Computing Machinery: New York, NY, USA, 2020; pp. 107–116.

- Tamaki, D.; Fujimori, H.; Tanaka, H. An Interface using Electrooculography with Closed Eyes. Int. Symp. Affect. Sci. Eng. 2019, ISASE2019, 1–4.

- Ben Barak-Dror, O.; Hadad, B.; Barhum, H.; Haggiag, D.; Tepper, M.; Gannot, I.; Nir, Y. Touchless short-wave infrared imaging for dynamic rapid pupillometry and gaze estimation in closed eyes. Commun. Med. 2024, 4, 1–12.

- MacNeil, R.R.; Gunawardane, P.; Dunkle, J.; Zhao, L.; Chiao, M.; de Silva, C.W.; Enns, J.T. Using electrooculography to track closed-eye movements. J. Vis. 2021, 21, 1898.

- MacNeil, R.R. Tracking the Closed Eye by Calibrating Electrooculography with Pupil-Corneal Reflection. Ph.D. Thesis, University of British Columbia, Vancouver, BC, Canada, 2020.

- Kassner, M.; Patera, W.; Bulling, A. Pupil: An Open Source Platform for Pervasive Eye Tracking and Mobile Gaze-based Interaction. arXiv 2014, arXiv:1405.0006.

- openFrameworks, Community. openFrameworks. Available online: https://openframeworks.cc/ (accessed on 31 August 2025).

- De Tommaso, D.; Wykowska, A. TobiiGlassesPySuite: An Open-source Suite for Using the Tobii Pro Glasses 2 in Eye-tracking Studies. In Proceedings of the 11th ACM Symposium on Eye Tracking Research & Applications, ETRA ’19, Denver, CO, USA, 25–28 June 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 46:1–46:5.

- Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental algorithms for scientific computing in Python. Nat. Methods 2020, 17, 261–272.

- Lakens, D.; Scheel, A.M.; Isager, P.M. Equivalence Testing for Psychological Research: A Tutorial. Adv. Methods Pract. Psychol. Sci. 2018, 1, 259–269.

- Maxwell, S.E.; Lau, M.Y.; Howard, G.S. Is psychology suffering from a replication crisis? What does “failure to replicate” really mean? Am. Psychol. 2015, 70, 487–498.

- Cohn, J.F.; Schmidt, K.L. The timing of facial motion in posed and spontaneous smiles. Int. J. Wavelets, Multiresolution Inf. Process. 2004, 2, 121–132.

- Perusquía-Hernández, M.; Ayabe-Kanamura, S.; Suzuki, K. Human perception and biosignal-based identification of posed and spontaneous smiles. PLoS ONE 2019, 14, e0226328.

- Händel, B.F.; Chen, X.; Inbar, M.; Kusnir, F.; Landau, A.N. A quantitative assessment of EOG eye tracking during free viewing in sighted and in congenitally blind. Brain Res. 2025, 1864, 149794.

- Cooray, N. Proof of concept: Screening for REM sleep behaviour disorder with a minimal set of sensors. Clin. Neurophysiol. 2021, 132, 904–913.

- Antoniades, C.A.; Spering, M. Eye movements in Parkinson’s disease: From neurophysiological mechanisms to diagnostic tools. Trends Neurosci. 2024, 47, 71–83.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).