Indoor Object Measurement Through a Redundancy and Comparison Method

Abstract

1. Introduction

1.1. Background

1.2. State of the Art and Gap

- Operate on standard consumer devices;

- Exploit redundant scene cues to rescale monocular estimates;

- Provide a pragmatic bridge between lightweight 2D detectors and more resource-demanding 3D spatial reasoning systems.

1.3. Research Approach and Objective

- A redundancy architecture that leverages recognisable scene objects as internal reference markers to rescale monocular estimates;

- An implementation pathway that can run on consumer hardware or be deployed via modest cloud resources;

- An integration concept that allows correction indices learned from single-view imagery to be refined by, or exported to, spatial reasoning models (for example SpatialLM) for improved generalisation across scenes and devices.

- Construction site inspections: rapid on-site checks can be carried out by anyone, not necessarily a professional.

- Real estate evaluation and property listings: automated extraction of room dimensions and window/wall measurements to accelerate valuation and generate floor plans for listings.

- Auditing and retrofit planning: estimation of wall and window areas to support audits such as thermal or seismic performance calculations, reducing the need for time-consuming manual measurements on-site.

- On-site clash detection/quality control: as with comparable BIM-based workflows, lightweight scans during construction phases can detect misalignments relative to the intended plans before expensive corrections are committed to.

- Heritage and small-scale surveying: inexpensive documentation of indoor geometries where expensive surveying equipment is impractical.

- Maintain detection precision and recall above 0.98 on the validation set.

- Achieve stable mAP@50 and mAP@50–95 values (≥0.99) during training, confirming consistent localization and classification performance.

- Demonstrate proportional correction of monocular estimates using internal scene references, such that validation height and distance deviations are minimized within the practical resolution of smartphone cameras.

2. Materials and Methods

2.1. Case Study Introduction

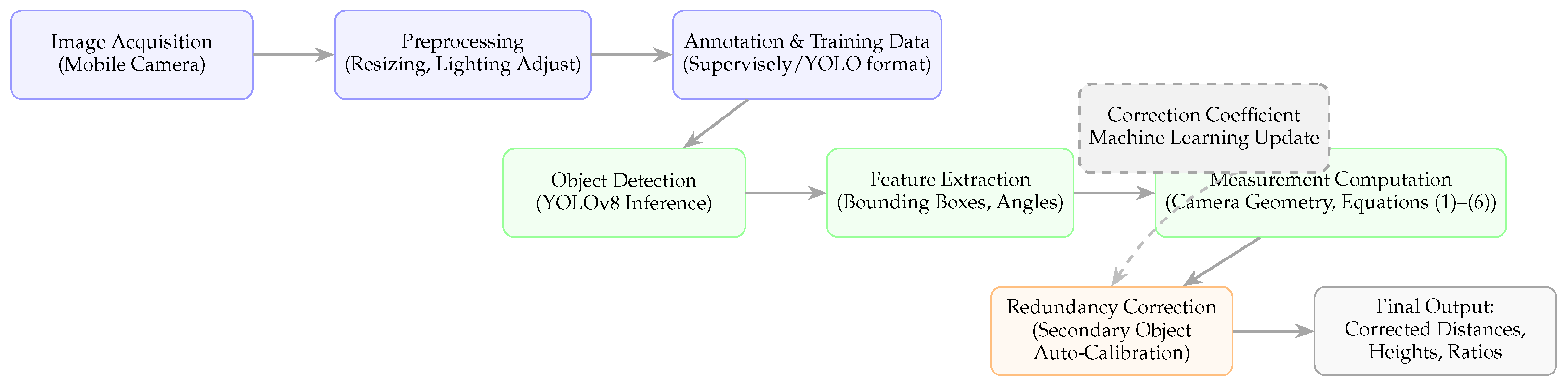

2.2. System Overview and Workflow Structure

2.3. Correction Coefficient Definition

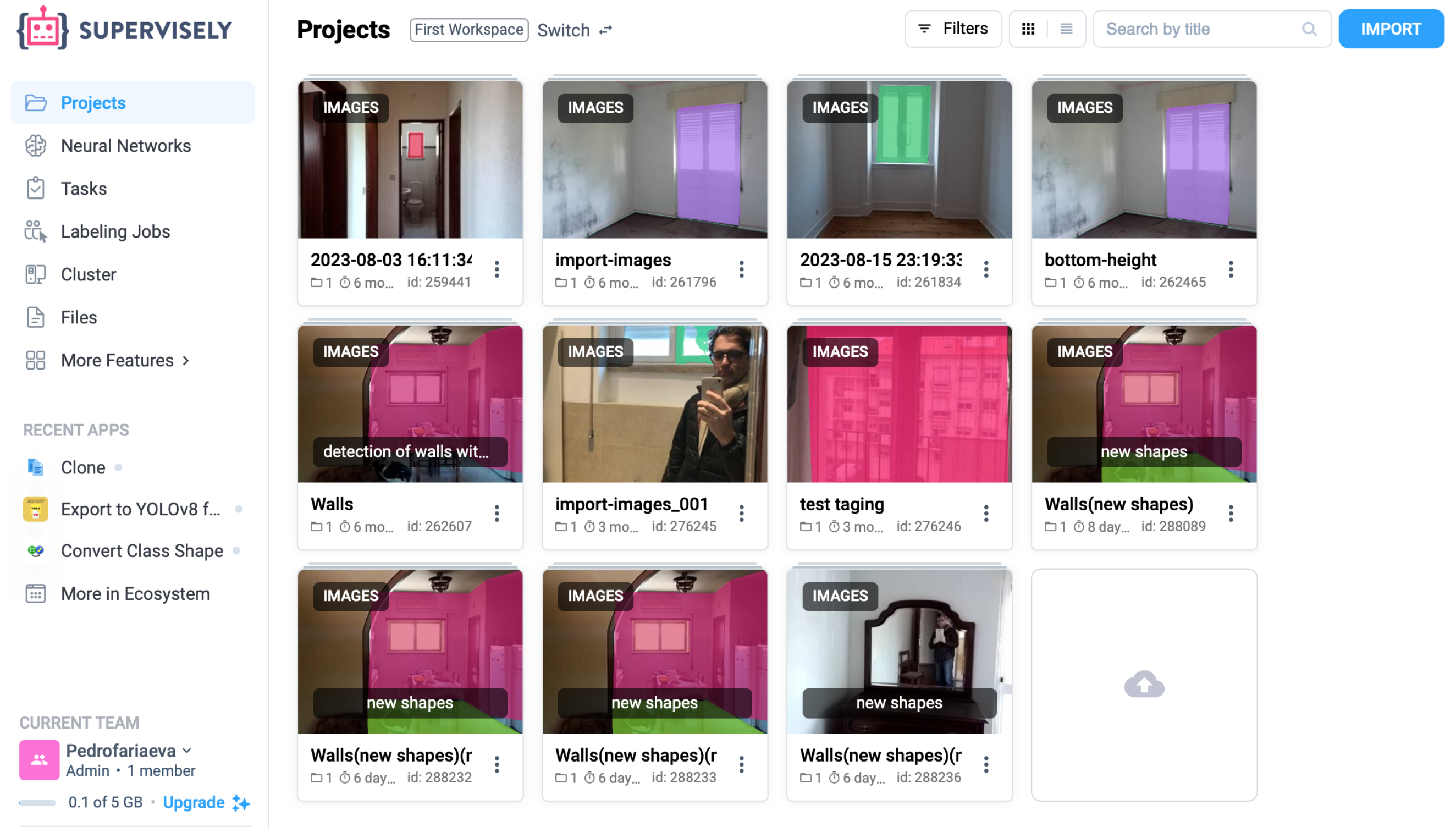

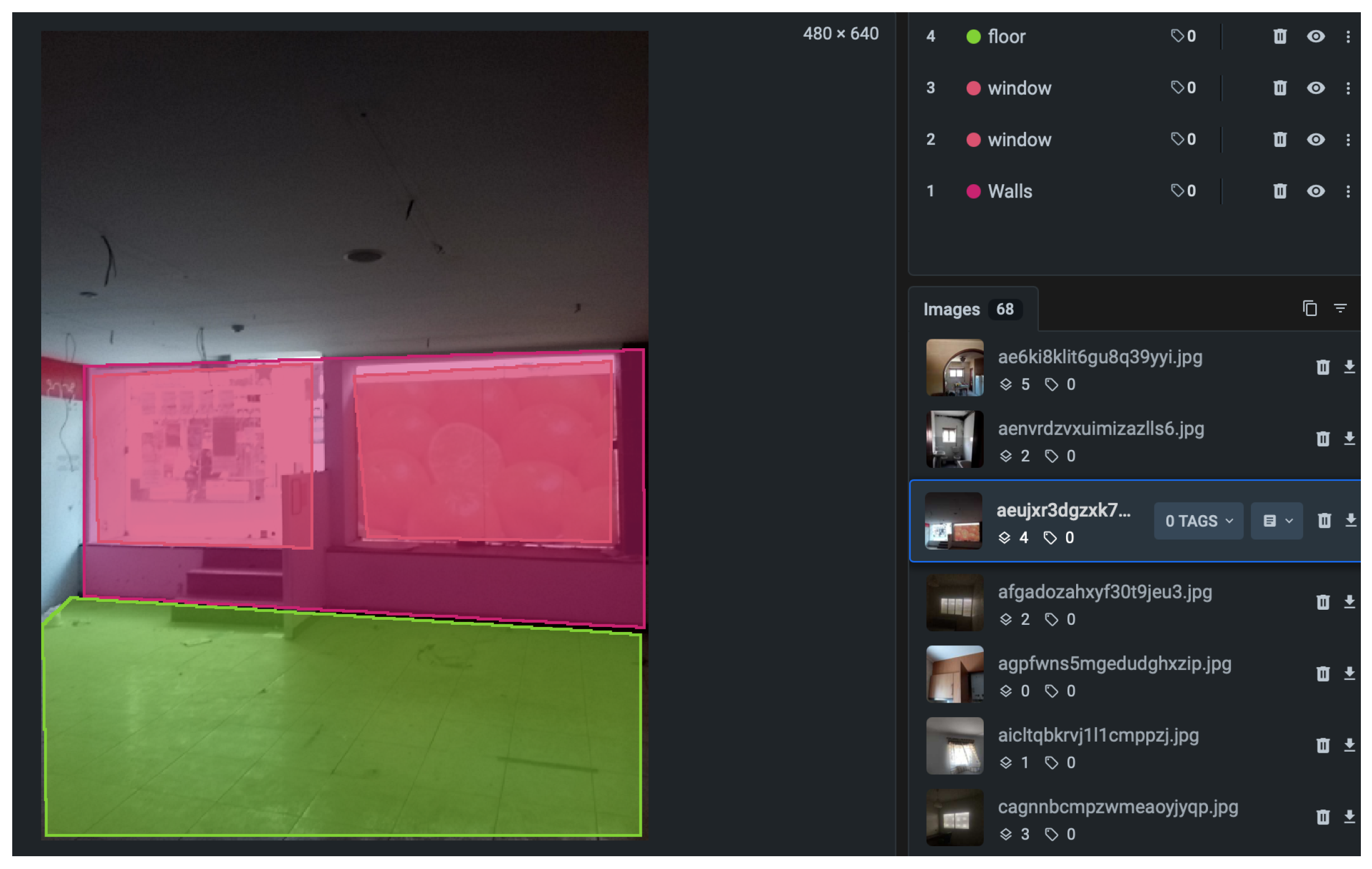

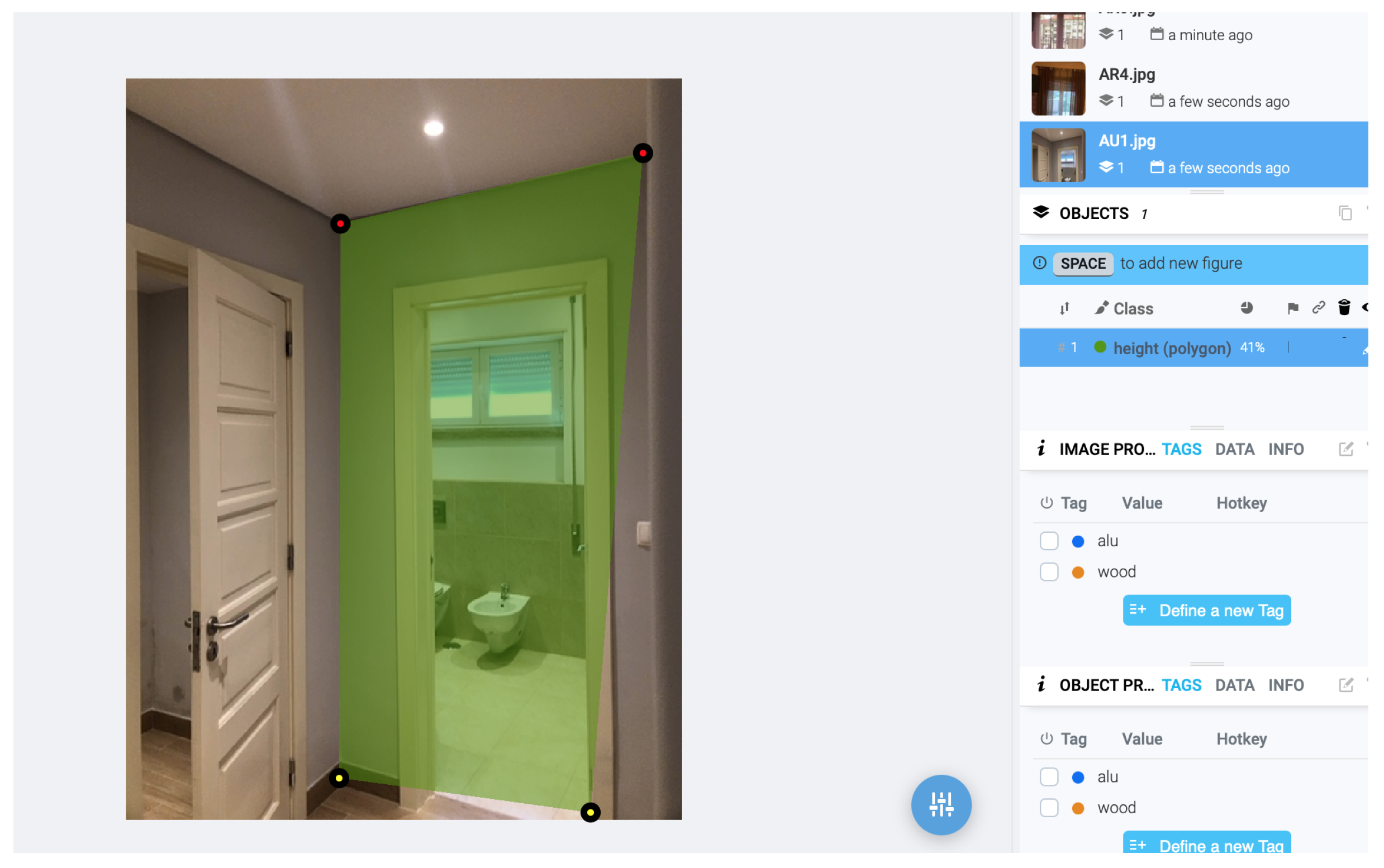

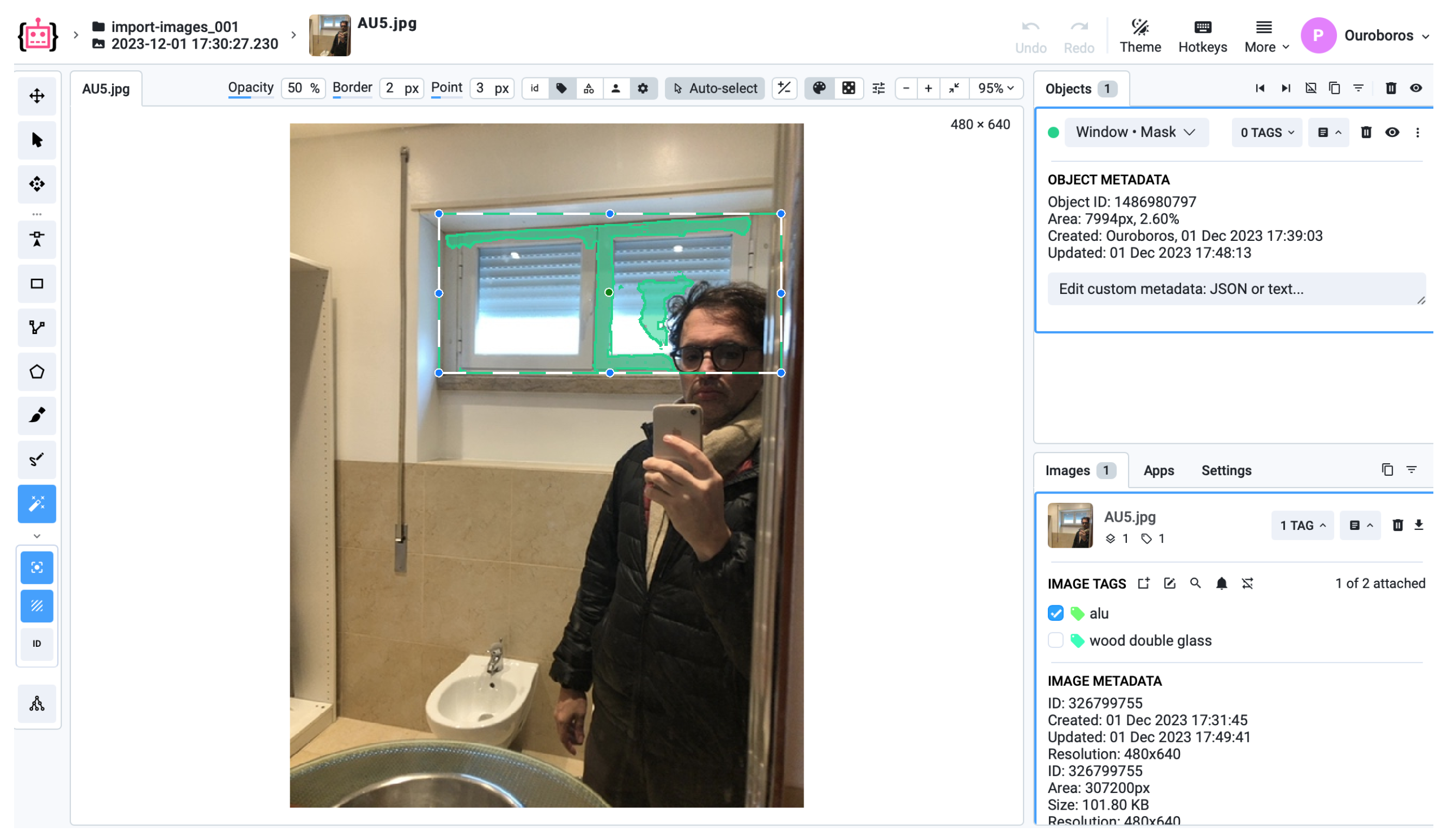

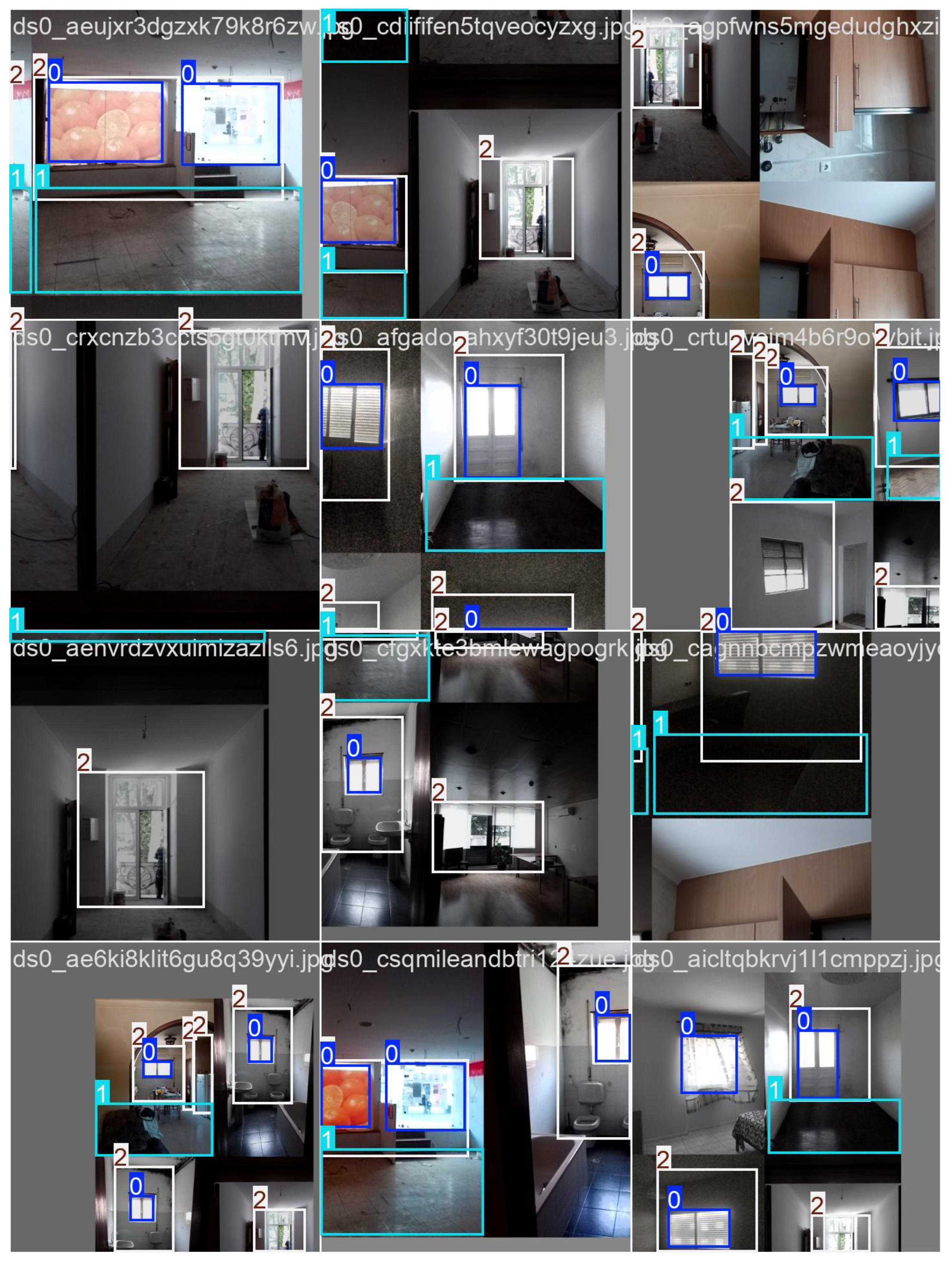

2.4. Creating the Computer Vision Datasets and Case Study Implementation

- window/metal/singleGlass/blindersTrue

- window/metal/singleGlass/blindersFalse

- window/metal/doubleGlass/6 mm/blindersTrue

- window/metal/doubleGlass/6 mm/blindersFalse
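
The slash-separated class labels above can be read as a small hierarchy. The sketch below shows one way to split such a label into fields. The field names (`object_type`, `frame_material`, `glazing`, `glass_spec`, `has_blinders`) and the `parse_label` helper are illustrative assumptions, not names taken from the dataset tooling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class WindowLabel:
    # Field names are hypothetical; the source only gives the label strings.
    object_type: str
    frame_material: str
    glazing: str
    glass_spec: Optional[str]  # e.g. "6 mm"; absent for the single-glass labels
    has_blinders: bool


def parse_label(label: str) -> WindowLabel:
    """Split a label such as 'window/metal/doubleGlass/6 mm/blindersTrue'."""
    parts = label.split("/")
    if len(parts) not in (4, 5):
        raise ValueError(f"unexpected label depth: {label!r}")
    flag = parts[-1]
    if flag not in ("blindersTrue", "blindersFalse"):
        raise ValueError(f"unexpected blinders flag: {flag!r}")
    # The optional fourth level only appears in the five-part labels.
    spec = parts[3] if len(parts) == 5 else None
    return WindowLabel(parts[0], parts[1], parts[2], spec, flag == "blindersTrue")
```

Keeping the blinders flag as a boolean and the glass specification as an optional field lets the four listed classes be filtered or grouped without string matching.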

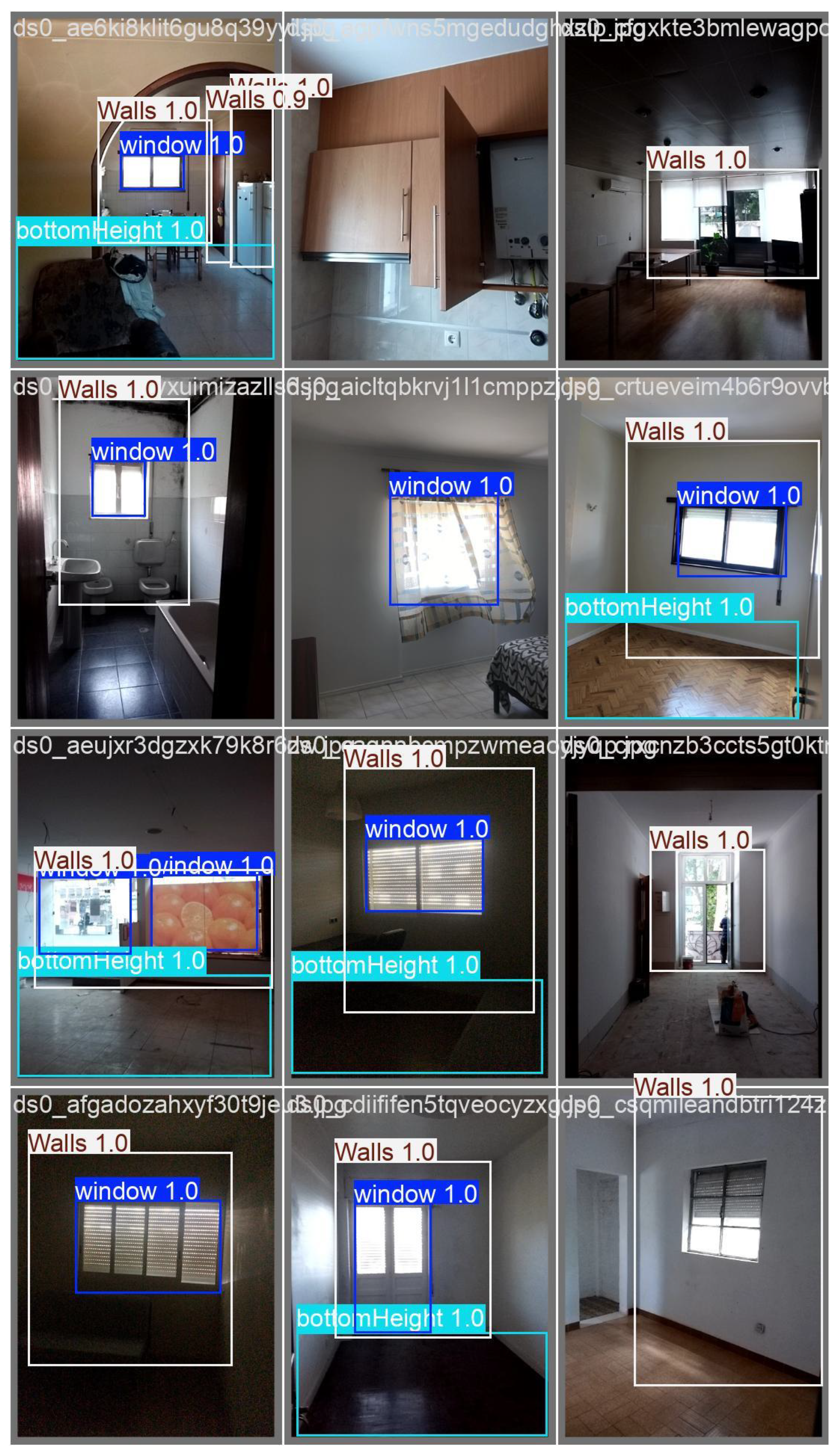

2.5. Creating Simple Models for Object Detection

Training Convergence and Validation Consistency

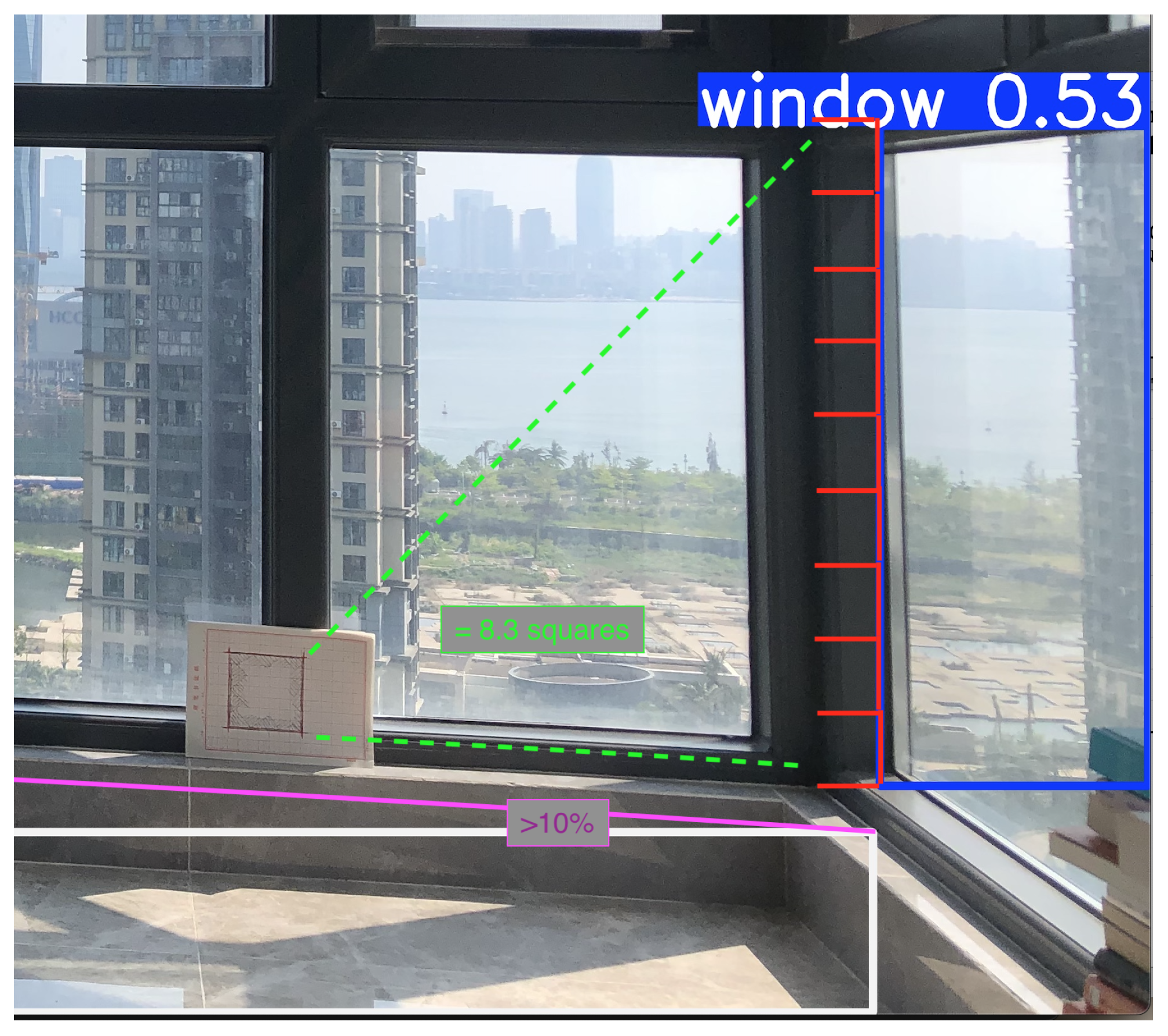

2.6. Apply the Method for Object Detection Measurement

- D—distance between the camera and the object (cm);

- —actual height of the object (cm);

- f—focal length of the camera lens (mm);

- —height of the object in the image (pixels);

- —height of the camera sensor (mm);

- —height of the detected object in the image (pixels).
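
The variables listed above match the usual pinhole-camera relation between real size, image size, and distance. The sketch below is a minimal version of that relation, not necessarily the exact formula used in the study. The parameter names are assumptions, since several symbols are missing from the list, and one of the two pixel quantities is assumed to be the full image height.

```python
def distance_to_object_cm(focal_length_mm: float,
                          real_height_cm: float,
                          image_height_px: float,
                          object_height_px: float,
                          sensor_height_mm: float) -> float:
    """Pinhole estimate of camera-to-object distance.

    D = (f * H_real * image_height_px) / (object_height_px * sensor_height_mm)
    f and the sensor height are both in mm, so their ratio is unitless and
    D comes out in the same unit as the real height (cm here).
    """
    if object_height_px <= 0 or sensor_height_mm <= 0:
        raise ValueError("object height and sensor height must be positive")
    return (focal_length_mm * real_height_cm * image_height_px) / (
        object_height_px * sensor_height_mm)


def object_height_cm(focal_length_mm: float,
                     distance_cm: float,
                     image_height_px: float,
                     object_height_px: float,
                     sensor_height_mm: float) -> float:
    """Same relation solved for the real height when the distance is known."""
    if focal_length_mm <= 0 or image_height_px <= 0:
        raise ValueError("focal length and image height must be positive")
    return (distance_cm * object_height_px * sensor_height_mm) / (
        focal_length_mm * image_height_px)


# Illustrative numbers only (not measurements from the study).
d = distance_to_object_cm(4.0, 150.0, 3000.0, 500.0, 4.8)
```

With these illustrative inputs the estimate is 750 cm, and feeding that distance back into `object_height_cm` recovers the 150 cm height.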

Summary of the Geometry Pipeline

- Object detection via YOLOv8;

- Pixel-to-real scaling using a known reference marker;

- Correction coefficient normalisation;

- Geometric inference of object dimensions.
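
The last three steps of the pipeline summarised above can be sketched as follows, starting from bounding boxes that a detector has already produced. This is a simplified illustration: the `Box` type, the function names, and the example values are assumptions, and the scaling is only valid if the reference marker and the target lie at a similar depth from the camera.

```python
from dataclasses import dataclass


@dataclass
class Box:
    # Hypothetical container for one detection; only vertical extent is used.
    label: str
    y_top: float
    y_bottom: float

    @property
    def height_px(self) -> float:
        return abs(self.y_bottom - self.y_top)


def cm_per_pixel(marker: Box, marker_height_cm: float) -> float:
    """Pixel-to-real scale from a marker whose true height is known."""
    if marker.height_px == 0:
        raise ValueError("marker box has zero height")
    return marker_height_cm / marker.height_px


def height_from_marker(target: Box, marker: Box, marker_height_cm: float) -> float:
    """Infer the target height by applying the marker scale to its box."""
    return target.height_px * cm_per_pixel(marker, marker_height_cm)


def correction_index(uncorrected_cm: float, marker_based_cm: float) -> float:
    """Ratio between the uncorrected estimate and the marker-based one."""
    if marker_based_cm <= 0:
        raise ValueError("marker-based estimate must be positive")
    return uncorrected_cm / marker_based_cm


# Illustrative values only: a 20 cm marker spanning 100 px, a window spanning 400 px.
marker = Box("marker", y_top=500.0, y_bottom=600.0)
window = Box("window", y_top=100.0, y_bottom=500.0)
window_cm = height_from_marker(window, marker, marker_height_cm=20.0)
index = correction_index(uncorrected_cm=120.0, marker_based_cm=window_cm)
```

Here the marker yields 0.2 cm per pixel, the window comes out at 80 cm, and an uncorrected estimate of 120 cm would give a correction index of 1.5.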

2.7. Early Conclusions

2.8. Future Work

3. Discussion and Results

3.1. Further Implementation into Applications: Training the Application to Understand How Devices Measure

3.2. Further NoSQL Collection Integration, Including Correctional Indexation and False Positives

3.3. Further Implementations into Applications: Training the Application to Understand Geometry

3.4. Further Implementations into Applications: Using Third-Party Cloud Web Services

3.5. Further Implementations into Applications: Real-Time Measures, Seismic Detection, and Energy Efficiency

3.6. Final Statements

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Marcel, S.; Rodriguez, Y. Torchvision the machine-vision package of torch. In Proceedings of the 18th ACM International Conference on Multimedia, Firenze, Italy, 25–29 October 2010.

- Khanam, R.; Asghar, T.; Hussain, M. Comparative Performance Evaluation of YOLOv5, YOLOv8, and YOLOv11 for Solar Panel Defect Detection. Solar 2025, 5, 6.

- Kang, S.; Hu, Z.; Liu, L.; Zhang, K.; Cao, Z. Object Detection YOLO Algorithms and Their Industrial Applications: Overview and Comparative Analysis. Electronics 2025, 14, 1104.

- Li, Z.; Li, J.; Zhang, C.; Dong, H. Lightweight Detection of Train Underframe Bolts Based on SFCA-YOLOv8s. Machines 2024, 12, 714.

- Barrak, A.; Petrillo, F.; Jaafar, F. Serverless on Machine Learning: A Systematic Mapping Study. IEEE Access 2022, 10, 105417–105436.

- Dantas, J.; Khazaei, H.; Litoiu, M. Application Deployment Strategies for Reducing the Cold Start Delay of AWS Lambda. In Proceedings of the 2022 IEEE 15th International Conference on Cloud Computing (CLOUD), Barcelona, Spain, 10–16 July 2022; pp. 1–8.

- Mankala, C.K.; Silva, R.J. Sustainable Real-Time NLP with Serverless Parallel Processing on AWS. Information 2025, 16, 903.

- Karim, F. YOLO—You Only Look Once, Real-Time Object Detection Explained. Available online: https://towardsdatascience.com/yolo-you-only-look-once-real-time-object-detection-explained-492dc9230006 (accessed on 21 May 2024).

- Nguyen, L.A.; Tran, M.D.; Son, Y. Empirical Evaluation and Analysis of YOLO Models in Smart Transportation. AI 2024, 5, 2518–2537.

- Sodhro, A.H.; Kannam, S.; Jensen, M. Real-time efficiency of YOLOv5 and YOLOv8 in human intrusion detection across diverse environments and recommendation. Internet Things 2025, 33, 101707.

- Yang, L.; Asli, B.H.S. MSConv-YOLO: An Improved Small Target Detection Algorithm Based on YOLOv8. J. Imaging 2025, 11, 285.

- Ultralytics. YOLOv8 Documentation. Available online: https://docs.ultralytics.com/pt (accessed on 21 May 2024).

- Parisot, O. Method and Tools to Collect, Process, and Publish Raw and AI-Enhanced Astronomical Observations on YouTube. Electronics 2025, 14, 2567.

- Trigka, M.; Dritsas, E. A Comprehensive Survey of Machine Learning Techniques and Models for Object Detection. Sensors 2025, 25, 214.

- Qing, C.; Xiao, T.; Zhang, S.; Li, P. Region Proposal Networks (RPN) Enhanced Slicing for Improved Multi-Scale Object Detection. In Proceedings of the 7th International Conference on Communication Engineering and Technology (ICCET), Tokyo, Japan, 22–24 February 2024; pp. 66–70.

- Fu, K.; Chang, Z.; Zhang, Y.; Xu, G.; Zhang, K.; Sun, X. Rotation-Aware and Multi-Scale Convolutional Neural Network for Object Detection in Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2020, 161, 294–308.

- Ranftl, R.; Bochkovskiy, A.; Koltun, V. Vision Transformers for Dense Prediction. arXiv 2021, arXiv:2103.13413.

- Xian, K.; Cao, Z.; Shen, C.; Lin, G. Towards robust monocular depth estimation: A new baseline and benchmark. Int. J. Comput. Vis. 2024, 132, 2401–2419.

- Jasmari, J. Smartphone Distance Measurement Application Using Image Processing Methods. IOP Conf. Ser. Earth Environ. Sci. 2022, 1064, 012010.

- Khan, M.S.; Imran, A. The Art of Seeing: A Computer Vision Journey into Object Detection. 2024. Available online: https://www.researchsquare.com/article/rs-4361138/v1 (accessed on 20 July 2024).

- Jae, E.; Lee, J.M.; Hwang, K.; Jung, I.H. Real Distance Measurement Using Object Detection of Artificial Intelligence. Turk. J. Comput. Math. Educ. 2021, 12, 557–563.

- Chen, S.; Fang, X.; Shen, J.; Wang, L.; Shao, L. Single-Image Distance Measurement by a Smart Mobile Device. IEEE Trans. Cybern. 2017, 47, 4451–4462.

- Mehta, T.; Riddhi, L.; Mohana, U. Simulation and Performance Analysis of 3D Object Detection Algorithm Using Deep Learning for Computer Vision Applications. In Proceedings of the 2023 2nd International Conference on Applied Artificial Intelligence and Computing (ICAAIC), Salem, India, 4–6 May 2023; pp. 535–541.

- Basavaraj, U.; Raghuram, H.; Mohana, U. Real-Time Object Distance and Dimension Measurement Using Deep Learning and OpenCV. In Proceedings of the 2023 Third International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, India, 2–4 February 2023; pp. 929–932.

- Rosebrock, A. Find Distance from Camera to Object/Marker Using Python and OpenCV. PyImageSearch. 19 January 2015. Available online: https://pyimagesearch.com/2015/01/19/find-distance-camera-objectmarker-using-python-opencv/ (accessed on 21 May 2024).

- GeeksforGeeks. Realtime Distance Estimation Using OpenCV Python. Available online: https://www.geeksforgeeks.org/realtime-distance-estimation-using-opencv-python/ (accessed on 21 May 2024).

- Sharma, A.; Singh, A.; Kumari, V. Real-Time Object Dimension Measurement. Ind. Eng. J. 2022, 15, 96–101.

- Alnori, A.; Djemame, K.; Alsenani, Y. Agnostic Energy Consumption Models for Heterogeneous GPUs in Cloud Computing. Appl. Sci. 2024, 14, 2385.

- Ultralytics. Connect to Roboflow. Available online: https://roboflow.com/?ref=ultralytics (accessed on 21 May 2024).

- Roboflow. University—Date Fruit Classification. Available online: https://universe.roboflow.com/university-ndbmn/date-fruit-classification (accessed on 21 May 2024).

- Wang, R.; Li, X.; Ao, S.; Ling, C. Pelee: A Real-Time Object Detection System on Mobile Devices. arXiv 2018, arXiv:1804.06882.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2016, arXiv:1506.02640.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242.

- Vercel. Vercel Partners: AWS. Available online: https://vercel.com/partners/aws (accessed on 21 May 2024).

- Lin, L.; Yang, J.; Wang, Z.; Zhou, L.; Chen, W.; Xu, Y. Compressed Video Quality Index Based on Saliency-Aware Artifact Detection. Sensors 2021, 21, 6429.

- Scikit-Video. strred Module Source Code. Available online: http://www.scikit-video.org/stable/_modules/skvideo/measure/strred.html (accessed on 21 May 2024).

- Scikit-Image. Getting Started. Available online: https://scikit-image.org/docs/stable/user_guide/getting_started.html (accessed on 21 May 2024).

- Farhan, A.; Kurnia, K.A.; Saputra, F.; Chen, K.H.-C.; Huang, J.-C.; Roldan, M.J.M.; Lai, Y.-H.; Hsiao, C.-D. An OpenCV-Based Approach for Automated Cardiac Rhythm Measurement in Zebrafish from Video Datasets. Biomolecules 2021, 11, 1476.

- Rusu-Both, R.; Socaci, M.-C.; Palagos, A.-I.; Buzoianu, C.; Avram, C.; Vălean, H.; Chira, R.-I. A Deep Learning-Based Detection and Segmentation System for Multimodal Ultrasound Images in the Evaluation of Superficial Lymph Node Metastases. J. Clin. Med. 2025, 14, 1828.

- Paniego, S.; Sharma, V.; Cañas, J.M. Open Source Assessment of Deep Learning Visual Object Detection. Sensors 2022, 22, 4575.

- Amazon Web Services. Amazon Machine Images (AMIs). AWS Documentation. Available online: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/AMIs.html (accessed on 11 October 2024).

- Zhang, Y.; Huo, L.; Li, H. Automated Recognition of a Wall Between Windows from a Single Image. J. Sens. 2017, 2017, 7051931.

- Walsh, I.; Fishman, D.; Garcia-Gasulla, D.; Titma, T.; Pollastri, G.; Harrow, J.; Psomopoulos, F.E.; Tosatto, S.C.E. DOME: Recommendations for Supervised Machine Learning Validation in Biology. Nat. Methods 2020, 18, 1122–1127.

- Othman, N.; Salur, M.; Karakose, M.; Aydin, I. An Embedded Real-Time Object Detection and Measurement of Its Size. In Proceedings of the 2018 International Conference on Artificial Intelligence and Data Processing (IDAP), Malatya, Turkey, 28–30 September 2018.

- Pacios, D.; Ignacio-Cerrato, S.; Vázquez-Poletti, J.L.; Moreno-Vozmediano, R.; Schetakis, N.; Stavrakakis, K.; Di Iorio, A.; Gomez-Sanz, J.J.; Vazquez, L. Amazon Web Service–Google Cross-Cloud Platform for Machine Learning-Based Satellite Image Detection. Information 2025, 16, 381.

- Vinay, S.B. AI and Machine Learning Integration with AWS SageMaker: Current Trends and Future Prospects. Int. J. Artif. Intell. Tools 2024, 1, 1–24.

- Mao, Y.; Zhong, J.; Fang, C.; Zheng, J.; Tang, R.; Zhu, H.; Tan, P.; Zhou, Z. SpatialLM: Training Large Language Models for Structured Indoor Modeling. arXiv 2025, arXiv:2506.07491.

- Győrödi, C.A.; Dumşe-Burescu, D.V.; Zmaranda, D.R.; Győrödi, R.Ş. A Comparative Study of MongoDB and Document-Based MySQL for Big Data Application Data Management. Big Data Cogn. Comput. 2022, 6, 49.

- Chi, Y. Integrating MongoDB with AWS Smithy: Enhancing Data Management and API Development. 2024. Available online: https://www.researchgate.net/publication/381548147_Integrating_MongoDB_with_AWS_Smithy_Enhancing_Data_Management_and_API_Development (accessed on 21 May 2024).

- McGlone, C.; Shufelt, J. Incorporating Vanishing-Point Geometry in Building Extraction Techniques. In Proceedings of the Optical Engineering and Photonics in Aerospace Sensing, Orlando, FL, USA, 24 September 1993.

- McGlone, C.; Shufelt, J.A. Projective and Object Space Geometry for Monocular Building Extraction. In Proceedings of the 1994 Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 54–61.

- Tsai, F.; Chang, H. Detection of Vanishing Points Using Hough Transform for Single View 3D Reconstruction. In Proceedings of the 34th Asian Conference on Remote Sensing 2013, ACRS 2013, Bali, Indonesia, 20–24 October 2013; Volume 2, pp. 1182–1189.

- Chang, H. Single View Reconstruction Using Refined Vanishing Point Geometry. Ph.D. Thesis, National Central University, Taoyuan City, Taiwan, 2017.

- Omari, M.; Kaddi, M.; Salameh, K.; Alnoman, A. Advancing Image Compression Through Clustering Techniques: A Comprehensive Analysis. Technologies 2025, 13, 123.

- FastAPI. Available online: https://fastapi.tiangolo.com/ (accessed on 21 May 2024).

- Schuszter, I.C.; Cioca, M. Increasing the Reliability of Software Systems Using a Large-Language-Model-Based Solution for Onboarding. Inventions 2024, 9, 79.

- LiveOverflow. FastAPI—Easy REST API Creation with Python [Video]. YouTube 2019. Available online: https://www.youtube.com/watch?v=7t2alSnE2-I (accessed on 21 May 2024).

- Amazon Web Services. Host Instance Storage. Available online: https://docs.aws.amazon.com/sagemaker/latest/dg/host-instance-storage.html (accessed on 21 May 2024).

- Faria, P.; Simoes, T.; Kai, M.; Yan, Q. Automation and Classification Cascade Methods for Object Detection: Organising Data Stack for Supervised Learning Before Running Unnecessary Pathfinders. In Proceedings of the 2024 International Conference on Image Processing, Computer Vision and Machine Learning (ICICML), Shenzhen, China, 21–24 November 2024; Available online: https://www.icicml.org (accessed on 21 May 2024).

- de Groot, P.; Pelissier, M.; Refayee, H.; Hout, M. Deep Learning Seismic Object Detection Examples. 2021. Available online: https://www.researchgate.net/publication/356988233_Deep_Learning_Seismic_Object_Detection_Examples (accessed on 21 May 2024).

- Waas, L.; Enjellina. Review of BIM-Based Software in Architectural Design: Graphisoft Archicad vs. Autodesk Revit. J. Artif. Intell. Archit. 2022, 1, 14–22.

- Apple Inc. Measurement. Available online: https://developer.apple.com/documentation/foundation/measurement (accessed on 21 May 2024).

- Apple Developer. Machine Learning. Available online: https://developer.apple.com/machine-learning/ (accessed on 21 May 2024).

- Truong, T.X.; Nhu, V.-H.; Phuong, D.T.N.; Nghi, L.T.; Hung, N.N.; Hoa, P.V.; Bui, D.T. A New Approach Based on TensorFlow Deep Neural Networks with ADAM Optimizer and GIS for Spatial Prediction of Forest Fire Danger in Tropical Areas. Remote Sens. 2023, 15, 3458.

- Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2015, arXiv:1405.0312v3.

| Approach & Reference | Main Contribution | Observed Limitation |

|---|---|---|

| Ultralytics/YOLO-based detection [4,5,6,9,10,11] | Real-time object recognition with high mAP; lightweight deployment | Focus on detection accuracy only; lacks geometric or measurement validation in indoor settings; limited to small objects within camera frame |

| Trigka & Dritsas (2025) [14] | Identification of YOLO limitations for objects larger than the camera frame | Demonstrates incomplete detection and scaling issues in reference-scarce indoor environments |

| Monocular estimation studies (e.g., depth inference approaches) [15,16,17,18] | Calibration-based distance measurement and single-camera geometry correction; related methods such as MiDaS provide dense depth inference | Conducted under controlled conditions; MiDaS and similar models rely on synthetic or mixed-data pretraining, incompatible with the real-scene geometric validation required in this study; limited scene diversity; minimal redundancy; only predefined markers or known intrinsics |

| SpatialLM framework [13] | Large-scale spatial reasoning and 3D layout interpretation | Requires 3D input (point clouds) and high computational cost; does not provide empirical monocular measurement validation; unsuitable for mobile real-time applications |

| Metric | Epoch 1 | Epoch 200 | Epoch 350 | Epoch 458 | Epoch 500 |

|---|---|---|---|---|---|

| Precision | 1.000 | 0.982 | 0.990 | 0.991 | 0.995 |

| Recall | 0.480 | 0.890 | 0.960 | 1.000 | 0.99235 |

| mAP@50 | 0.330 | 0.930 | 0.985 | 0.995 | 0.99227 |

| mAP@50–95 | 0.130 | 0.880 | 0.980 | 0.995 | 0.99227 |

| val/box_loss | 1.000 | 0.380 | 0.220 | 0.163 | 0.15552 |

| val/cls_loss | 1.000 | 0.620 | 0.420 | 0.380 | 0.37131 |

| val/dfl_loss | 1.000 | 0.820 | 0.760 | 0.720 | 0.72389 |

| lr/pg0 | – | 0.0007 | 0.0003 | 0.00014 |
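
The detection targets stated in the objectives (precision and recall above 0.98, mAP@50 and mAP@50–95 of at least 0.99) can be checked against the tabulated values with a few lines of Python. This is a sketch: the dictionary keys and the `unmet_targets` helper are illustrative names, and every target is treated as a "greater than or equal" threshold for simplicity.

```python
# Thresholds taken from the stated objectives; all treated as >= here.
TARGETS = {"precision": 0.98, "recall": 0.98, "mAP50": 0.99, "mAP50-95": 0.99}

# Values copied from the Epoch 200 and Epoch 500 columns of the table above.
EPOCH_200 = {"precision": 0.982, "recall": 0.890, "mAP50": 0.930, "mAP50-95": 0.880}
EPOCH_500 = {"precision": 0.995, "recall": 0.99235, "mAP50": 0.99227, "mAP50-95": 0.99227}


def unmet_targets(metrics: dict, targets: dict = TARGETS) -> list:
    """Return the names of metrics that fall below their target threshold."""
    return [name for name, threshold in targets.items()
            if metrics.get(name, 0.0) < threshold]


print(unmet_targets(EPOCH_200))  # recall and both mAP values are still below target
print(unmet_targets(EPOCH_500))  # empty list: all four targets are met
```

On the tabulated values, all four targets are met at epoch 500, while at epoch 200 only precision has reached its threshold.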

| Output |

|---|

| Mac-XXXX: ML pedro$ python3 distance_and_height.py |

| Focal Length: 4.0 mm |

| Distance from camera to window: 1344.00 cm |

| Height of the window (without marker): 150.00 cm |

| Height of the window (with marker): 83.00 cm |

| Index factor between methods: 1.80 |

| Mac-XXX: ML pedro$ |
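
The index factor in the output above appears to be the ratio between the height estimated without the marker and the height estimated with it. The sketch below recomputes that ratio from the two printed heights. The function names are assumptions and this is not the `distance_and_height.py` script itself.

```python
def index_factor(height_without_marker_cm: float,
                 height_with_marker_cm: float) -> float:
    """Ratio between the uncorrected and the marker-based height estimates."""
    if height_with_marker_cm <= 0:
        raise ValueError("marker-based height must be positive")
    return height_without_marker_cm / height_with_marker_cm


def rescale(uncorrected_cm: float, factor: float) -> float:
    """Apply a previously derived index factor to an uncorrected estimate."""
    if factor <= 0:
        raise ValueError("index factor must be positive")
    return uncorrected_cm / factor


# Heights as printed in the output table (already rounded to two decimals).
factor = index_factor(150.00, 83.00)
print(f"Index factor between methods: {factor:.2f}")
```

Recomputed from the rounded printed heights, the ratio is about 1.807 and formats as 1.81, slightly above the reported 1.80. The source does not say why. The script may have truncated the value or used unrounded intermediate heights.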


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Faria, P.; Simões, T.; Marques, T.; Finn, P.D. Indoor Object Measurement Through a Redundancy and Comparison Method. Sensors 2025, 25, 6744. https://doi.org/10.3390/s25216744
