TreeHelper: A Wood Transport Authorization and Monitoring System

Abstract

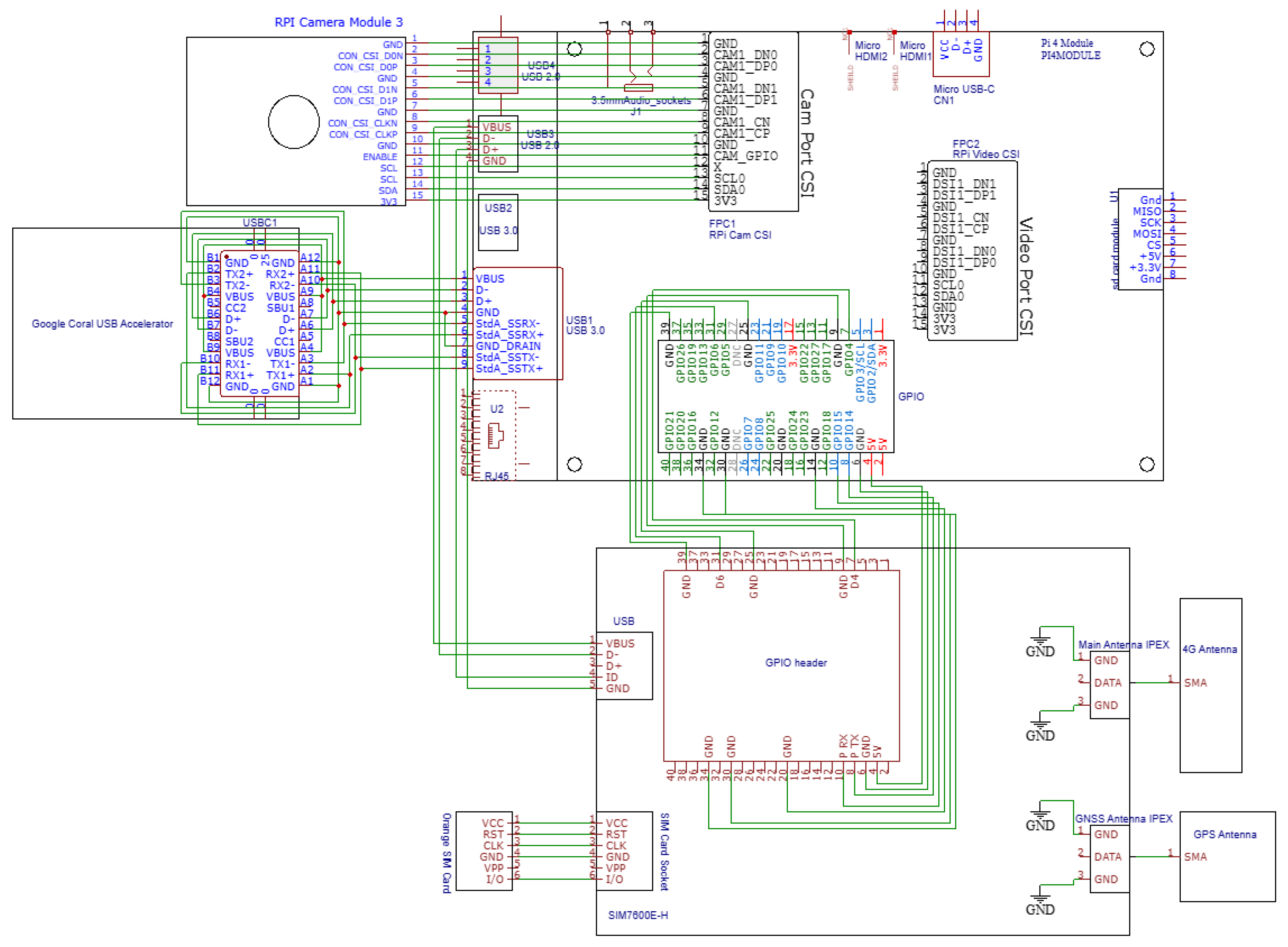

1. Introduction

1.1. Context and Motivation

- Continuously capture frames and maintain a GPS fix on the device.

- Detect logging trucks locally and in real time using a compact YOLOv11n model.

- Extract the vehicle license plate by sending a frame to a cloud automatic license plate recognition (ALPR) service, which returns the plate as a string.

- Query the backend authorization endpoint with the plate string in order to determine whether a valid transport authorization exists.

- Trigger an alert when no authorization is found: notify the competent authorities via SMS, including the plate and GPS coordinates in the message, so that they can intervene promptly.

- Apply safeguards for reliability to minimize false or duplicate alerts.
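One possible safeguard against duplicate alerts is a per-plate cooldown: once an alert has been sent for a plate, further alerts for the same plate are suppressed for a fixed time. The sketch below illustrates the idea only. The class name `AlertThrottle` and the 600-second default are assumptions made for illustration and are not taken from the system.

```python
import time
from typing import Callable, Dict


class AlertThrottle:
    """Suppress repeated alerts for the same plate within a cooldown window."""

    def __init__(self, cooldown_s: float = 600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.cooldown_s = cooldown_s      # hypothetical value, tune per deployment
        self._clock = clock               # injectable so the logic can be tested
        self._last_alert: Dict[str, float] = {}

    def should_alert(self, plate: str) -> bool:
        """Return True only if no alert for this plate was sent recently."""
        now = self._clock()
        last = self._last_alert.get(plate)
        if last is not None and now - last < self.cooldown_s:
            return False                  # duplicate inside the window
        self._last_alert[plate] = now
        return True
```

Calling `should_alert(plate)` right before the SMS step would let the first sighting through and drop repeats while the same truck stays in view.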

1.2. State of the Art

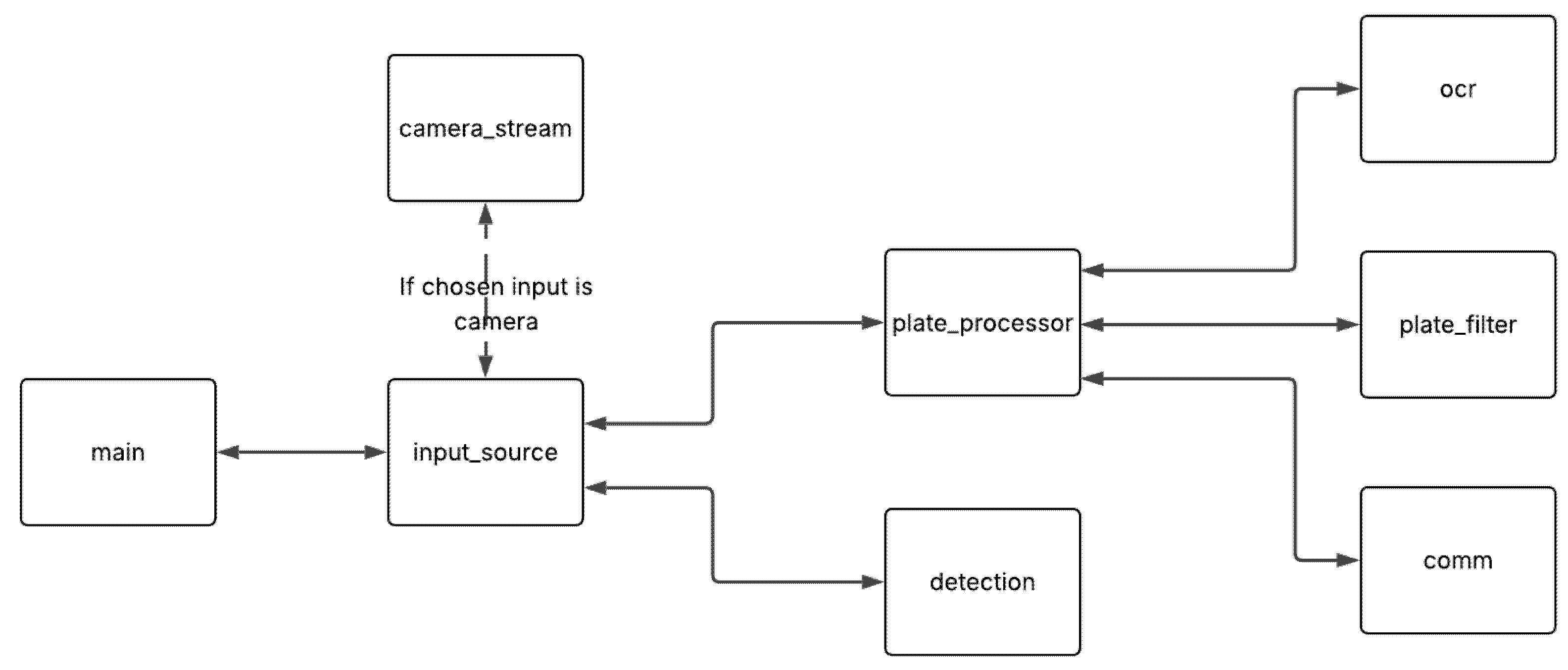

2. Materials and Methods

2.1. Design

- The monitoring device receives input: a live camera feed, a video, or an image.

- The monitoring device obtains its GPS coordinates.

- The monitoring device detects if a logging truck is in a frame.

- If a truck is detected, the frame is captured.

- The system sends the captured frame to the Cloud ALPR service.

- The Cloud ALPR service extracts the license plate number as a string.

- The monitoring device receives the license plate number.

- The monitoring device accesses a website endpoint to check if the license plate number corresponds to a granted authorization.

- If the authorization check in the previous step returns a negative answer, the monitoring device sends an SMS to the authorities.
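The control flow of these steps can be sketched as a single function that receives each stage as a callable. This is a minimal sketch, not the authors' implementation: `process_frame`, `Outcome`, the callable names and the SMS wording are all hypothetical, and the real detector, ALPR client, authorization check and SMS sender would be passed in.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Outcome:
    truck_detected: bool
    plate: Optional[str] = None
    authorized: Optional[bool] = None
    alert_sent: bool = False


def process_frame(
    frame: object,
    gps: Tuple[float, float],
    detect_truck: Callable[[object], bool],
    read_plate: Callable[[object], Optional[str]],
    is_authorized: Callable[[str], bool],
    send_sms: Callable[[str], None],
) -> Outcome:
    """Run one frame through the detection, ALPR, authorization and alert steps."""
    if not detect_truck(frame):
        return Outcome(truck_detected=False)
    plate = read_plate(frame)              # cloud ALPR call in the real system
    if plate is None:
        return Outcome(truck_detected=True)
    if is_authorized(plate):
        return Outcome(True, plate, True)
    lat, lon = gps
    # Hypothetical message format: plate plus coordinates, as the design requires.
    send_sms(f"Unauthorized wood transport: plate {plate}, GPS {lat:.5f},{lon:.5f}")
    return Outcome(True, plate, False, alert_sent=True)
```

Passing the stages as arguments keeps the decision logic testable on a desktop machine without a camera, a network connection, or a SIM module.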

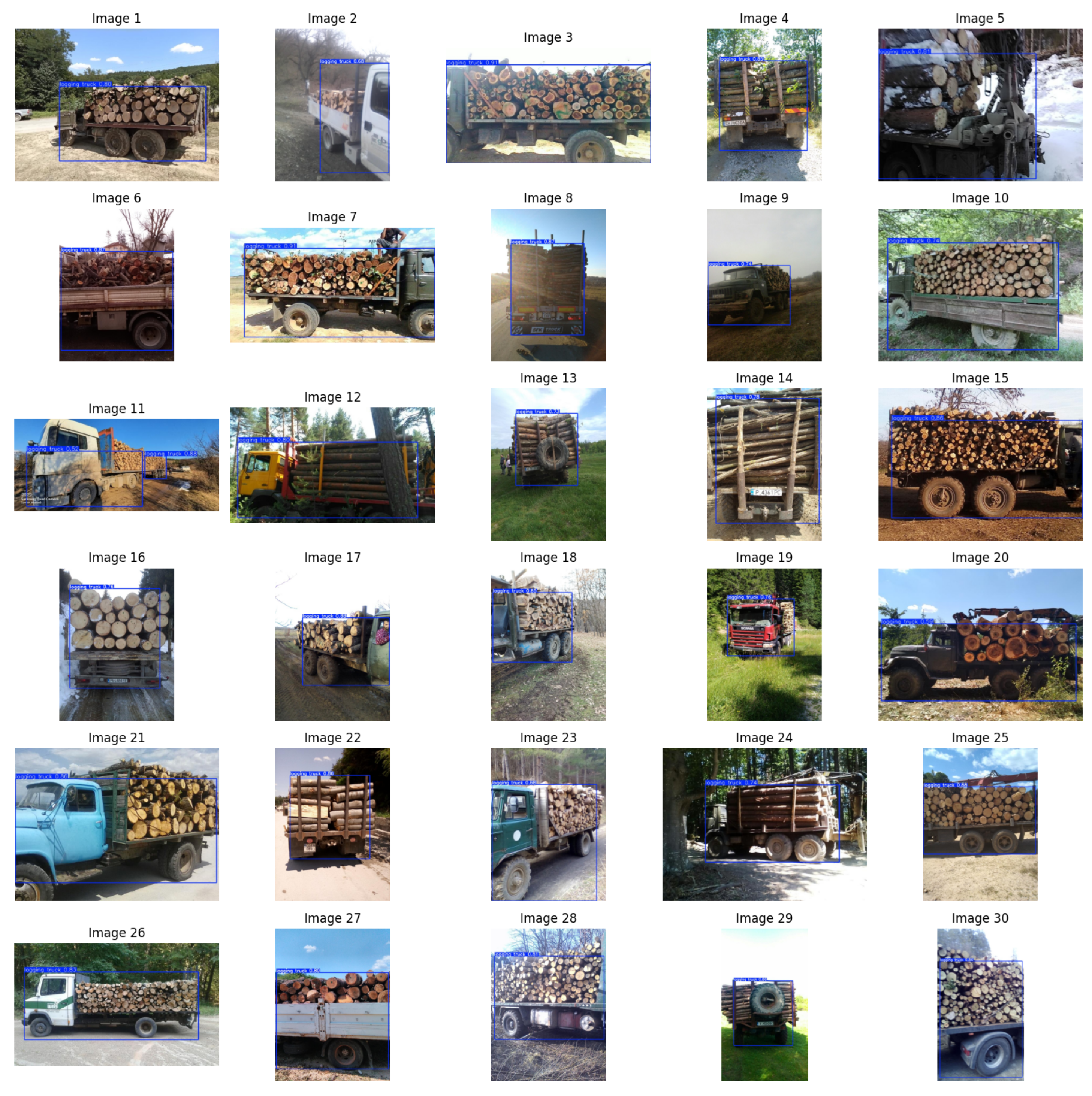

2.2. Implementation-Machine Learning Model

- Train Set: 1052 images (60%)

- This portion is used directly during model training. The model learns patterns from these images, identifying trucks, understanding bounding boxes and associating visual features with logging trucks.

- Validation Set: 350 images (20%)

- These images are evaluated during training but are not used to update the model weights. They help tune hyper-parameters and monitor performance after each epoch. Loss, precision and other metrics are calculated on this set in order to detect overfitting and underfitting.

- Test Set: 350 images (20%)

- This set is used only after training is completed. It evaluates the model’s generalization, i.e., how well it performs on unseen data.
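The split sizes above follow from a 60/20/20 partition of 1752 images. The source does not say how the split was produced, so the following is only an illustrative sketch of a reproducible shuffle-and-slice split. The function name `split_dataset` and the fixed seed are assumptions.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence[str], val_frac: float = 0.2,
                  test_frac: float = 0.2, seed: int = 0
                  ) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle items and split them into train, validation and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)   # fixed seed for a reproducible split
    n_val = round(len(shuffled) * val_frac)
    n_test = round(len(shuffled) * test_frac)
    val = shuffled[:n_val]
    test = shuffled[n_val:n_val + n_test]
    train = shuffled[n_val + n_test:]       # remainder goes to training
    return train, val, test
```

Applied to 1752 file names, this yields 1052 training, 350 validation and 350 test items, matching the counts listed above.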

- data = .../data.yaml – path to the dataset configuration file.

- model = yolo11n.pt – specifies the usage of the YOLOv11n model.

- epochs = 100 – number of training epochs.

- imgsz = 640 – resizes all images to 640 × 640 pixels for uniform input size.
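With the Ultralytics Python package, these parameters map directly onto keyword arguments of `YOLO(...).train(...)`. The source lists the parameters but does not state whether the command line or the Python API was used, so the snippet below is a sketch under that assumption. The helper names `build_train_args` and `train` are hypothetical, and the dataset path is left for the caller to supply.

```python
from typing import Any, Dict


def build_train_args(data_yaml: str) -> Dict[str, Any]:
    """Collect the training parameters listed above into keyword arguments."""
    return {
        "data": data_yaml,   # path to the dataset configuration file
        "epochs": 100,       # number of training epochs
        "imgsz": 640,        # input images are resized to 640 x 640
    }


def train(data_yaml: str, weights: str = "yolo11n.pt"):
    """Train YOLOv11n with the Ultralytics Python API (assumed to be installed)."""
    from ultralytics import YOLO  # imported lazily so this file loads without it

    model = YOLO(weights)         # loads the pretrained YOLOv11n checkpoint
    return model.train(**build_train_args(data_yaml))
```

Calling `train(...)` with the path to the dataset's `data.yaml` would start a run with the settings above.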

2.3. Implementation—Raspberry Pi Software

- The first four rows represent the bounding box: [x_center, y_center, width, height].

- The fifth row contains confidence scores for each prediction.
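Given this layout, decoding the raw output amounts to reading the five rows column by column, discarding low-confidence candidates, and converting center/size boxes to corner coordinates. The sketch below uses plain Python sequences for clarity, whereas on the device the output would typically be a NumPy array. The function name `decode_predictions` and the 0.5 threshold are assumptions, and non-maximum suppression is not included.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float, float]  # x1, y1, x2, y2, confidence


def decode_predictions(output: Sequence[Sequence[float]],
                       conf_threshold: float = 0.5) -> List[Box]:
    """Convert a 5 x N output (rows: xc, yc, w, h, conf) into corner boxes."""
    xc_row, yc_row, w_row, h_row, conf_row = output
    boxes: List[Box] = []
    for xc, yc, w, h, conf in zip(xc_row, yc_row, w_row, h_row, conf_row):
        if conf < conf_threshold:
            continue                     # drop low-confidence candidates
        boxes.append((xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2, conf))
    return boxes
```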

- The frame is saved temporarily as an image file.

- The image is sent to the OCR API (with a method imported from ocr.py).

- If a license plate number is returned, it is sent to the plate filter.

- If the plate filter verifies the plate, it is passed to the SIM module code (from comm.py) to check for authorization.

- If the plate is not authorized, the SIM module sends the alert SMS to the authorities, including the location coordinates.
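The source does not describe what the plate filter checks. One plausible form, sketched here purely as an assumption, normalizes the OCR string and accepts it only if it matches the standard Romanian plate layout (county code, two or three digits, three letters). The function name `filter_plate` and the pattern are illustrative, and foreign or special plates would be rejected by this rule.

```python
import re
from typing import Optional

# Assumed pattern for standard Romanian plates: "B" plus 2-3 digits, or a
# two-letter county code plus 2 digits, followed by 3 letters.
_PLATE_RE = re.compile(r"^(B\d{2,3}|[A-Z]{2}\d{2})[A-Z]{3}$")


def filter_plate(raw: Optional[str]) -> Optional[str]:
    """Normalize an OCR result and return it only if it looks like a plate."""
    if not raw:
        return None
    plate = re.sub(r"[^A-Z0-9]", "", raw.upper())   # strip spaces, dashes, noise
    return plate if _PLATE_RE.match(plate) else None
```

A filter of this kind helps keep partial or misread strings from reaching the authorization check and triggering false alerts.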

- initialize_sim: Sends the PIN code of the SIM card and checks that the card is ready.

- initialize_http: Attaches to the mobile data service and sets up the Access Point Name (net) for HTTP.

- initialize_sms: Configures SMS mode and message center.

- initialize: Combines all of the previously mentioned methods and fetches the GPS coordinates using a dedicated method.
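The start-up sequence can be illustrated with standard 3GPP AT commands. The source does not name the modem or the exact commands used in comm.py, so this sketch only builds the command strings and leaves out serial I/O, response parsing, and the vendor-specific HTTP and GPS commands. All function names and parameter values here are hypothetical.

```python
from typing import List


def sim_init_commands(pin: str) -> List[str]:
    """AT commands to query the SIM state and unlock it with the PIN."""
    return ["AT+CPIN?", f'AT+CPIN="{pin}"']


def data_init_commands(apn: str) -> List[str]:
    """AT commands to define the PDP context (APN) and attach to packet service."""
    return [f'AT+CGDCONT=1,"IP","{apn}"', "AT+CGATT=1"]


def sms_init_commands(service_center: str) -> List[str]:
    """AT commands to select SMS text mode and set the message center number."""
    return ["AT+CMGF=1", f'AT+CSCA="{service_center}"']


def initialize_commands(pin: str, apn: str, service_center: str) -> List[str]:
    """Full start-up sequence: SIM, then mobile data, then SMS."""
    return (sim_init_commands(pin) + data_init_commands(apn)
            + sms_init_commands(service_center))
```

In a real driver each command would be written to the modem's serial port and its reply checked before continuing. For example, the PIN should only be sent if `AT+CPIN?` reports that the SIM is waiting for it.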

3. Results

3.1. Machine Learning Model

- box_loss: bounding box regression loss, indicating how well the model learns to predict bounding box locations.

- cls_loss: classification loss, indicating how well the model recognizes the “logging truck” class.

- dfl_loss: distribution focal loss, which refines the precision of bounding box regression.

- precision(B): proportion of detected objects that are correct.

- recall(B): proportion of actual objects that were successfully detected.

- mAP50(B): mean Average Precision computed at an IoU threshold of 0.5.

- mAP50-95(B): mean Average Precision averaged over IoU thresholds from 0.5 to 0.95 in steps of 0.05.
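The quantities behind these metrics are simple to compute by hand. As a minimal sketch, the helpers below calculate the IoU of two boxes, which decides whether a detection counts as correct at a given threshold, and precision and recall from counts of true positives, false positives and false negatives. The function names are illustrative and are not part of any library.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # x1, y1, x2, y2


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

For instance, two 2 × 2 boxes offset horizontally by one unit overlap with an IoU of 1/3, so that detection would not count as a match at the 0.5 threshold used by mAP50.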

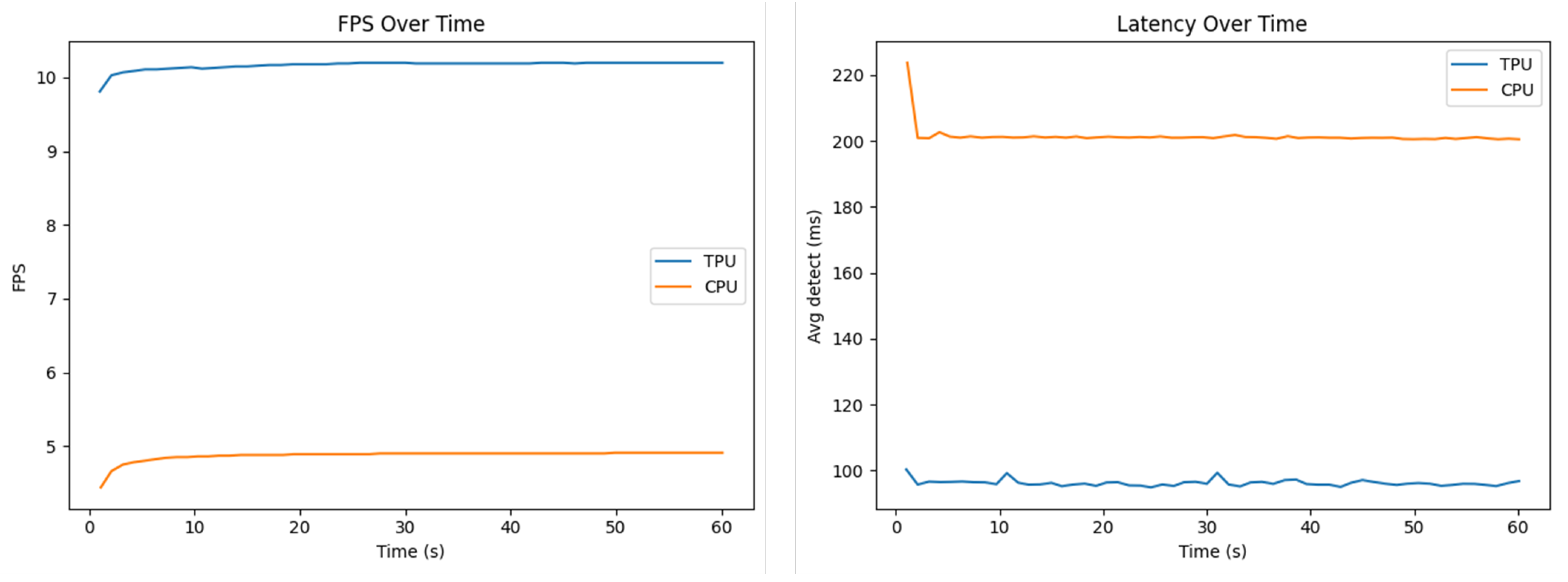

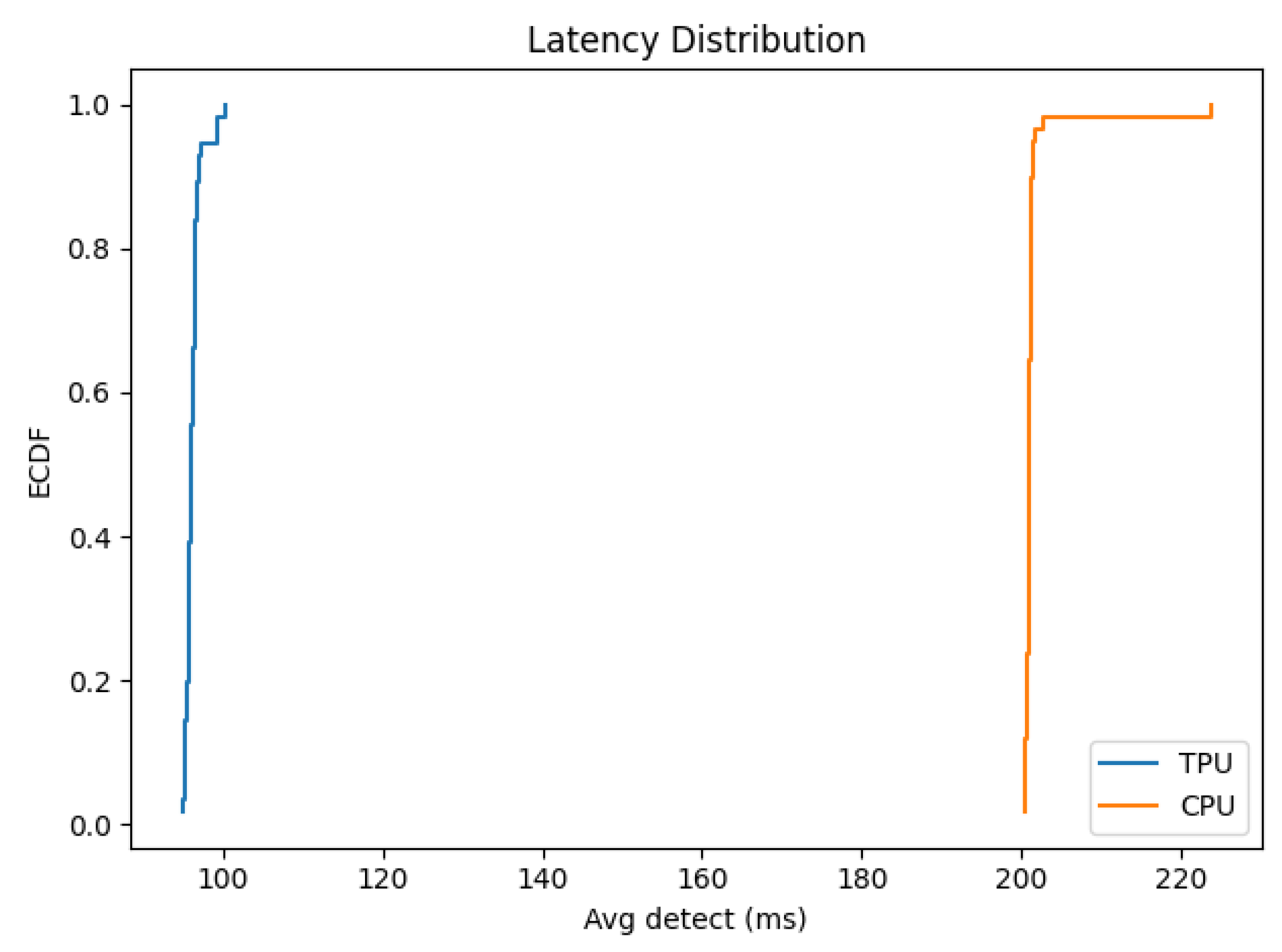

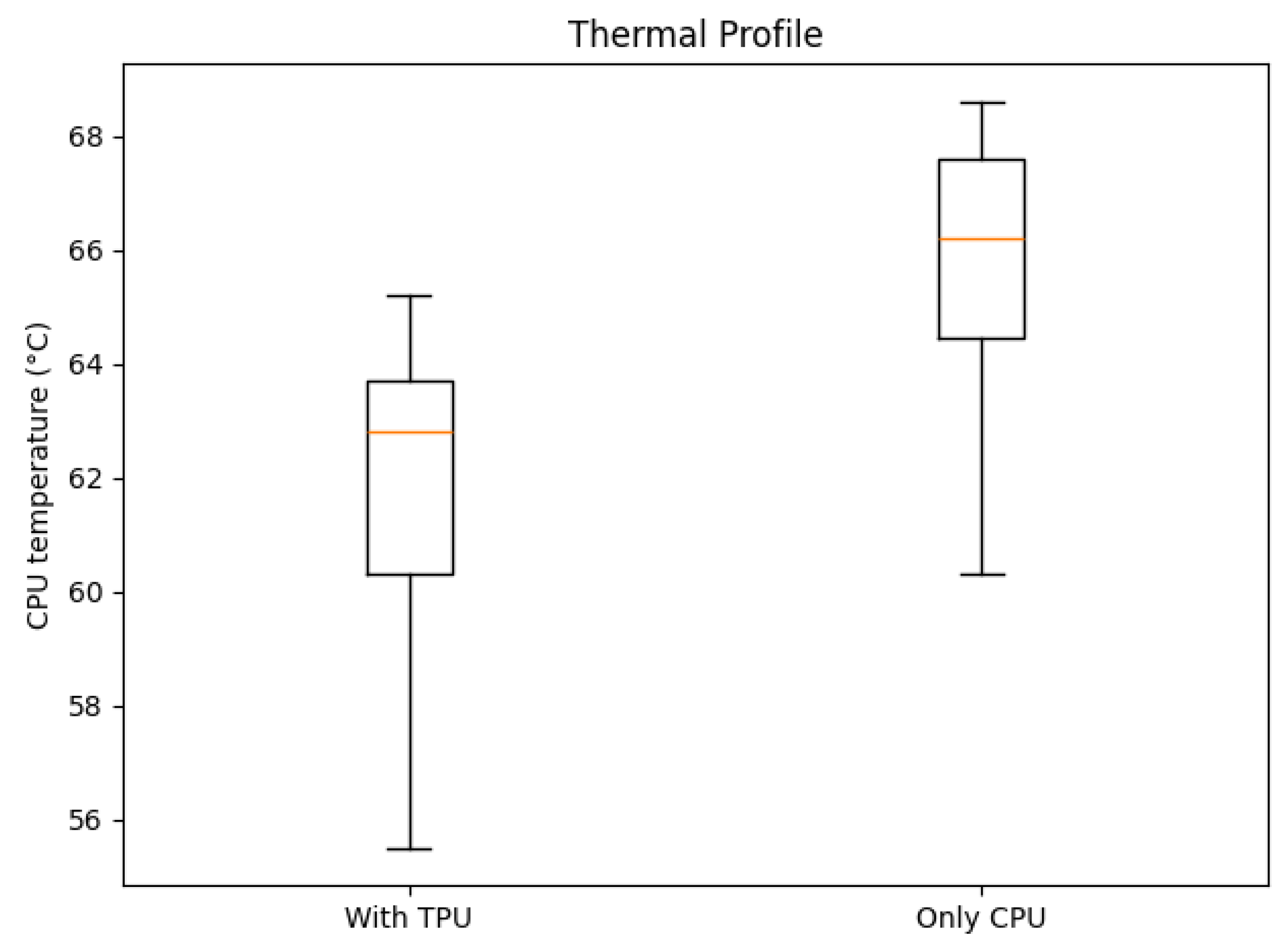

3.2. Live Detection Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Operator | Count | Status |
|---|---|---|
| STRIDED_SLICE | 12 | More than one subgraph is not supported |
| STRIDED_SLICE | 13 | Mapped to Edge TPU |
| TRANSPOSE | 2 | Operation is otherwise supported, but not mapped due to some unspecified limitation |
| TRANSPOSE | 8 | More than one subgraph is not supported |
| TRANSPOSE | 3 | Mapped to Edge TPU |
| SOFTMAX | 2 | More than one subgraph is not supported |
| PAD | 5 | Mapped to Edge TPU |
| PAD | 2 | More than one subgraph is not supported |
| PACK | 2 | More than one subgraph is not supported |
| LOGISTIC | 34 | Mapped to Edge TPU |
| LOGISTIC | 44 | More than one subgraph is not supported |
| ADD | 10 | More than one subgraph is not supported |
| ADD | 7 | Mapped to Edge TPU |
| SPLIT | 1 | Mapped to Edge TPU |
| SPLIT | 4 | For example, a fully-connected or softmax layer with 2D output |
| FULLY_CONNECTED | 4 | More than one subgraph is not supported |
| MUL | 34 | Mapped to Edge TPU |
| MUL | 46 | More than one subgraph is not supported |
| CONV_2D | 46 | More than one subgraph is not supported |
| CONV_2D | 163 | Mapped to Edge TPU |
| DEPTHWISE_CONV_2D | 6 | More than one subgraph is not supported |
| RESIZE_NEAREST_NEIGHBOR | 2 | More than one subgraph is not supported |
| RESHAPE | 13 | More than one subgraph is not supported |
| RESHAPE | 5 | Mapped to Edge TPU |
| CONCATENATION | 15 | More than one subgraph is not supported |
| CONCATENATION | 8 | Mapped to Edge TPU |
| MAX_POOL_2D | 3 | Mapped to Edge TPU |
| QUANTIZE | 3 | More than one subgraph is not supported |
| SUB | 3 | More than one subgraph is not supported |

| System | Technology | Automation Level |
|---|---|---|
| SUMAL 1.0 | Desktop software | Low |
| Inspectorul Pădurii | Web and mobile application | Low |
| SUMAL 2.0 | Web, mobile, and GPS system | Medium |
| Vodafone Smart Forest | Acoustic IoT and AI | High |
| Rainforest Connection (RFCx) | Acoustic IoT and AI | High |
| GreenSoal | Acoustic IoT and AI | High |
| XyloTron | AI wood structure analysis | Medium |
| PatrolVision | YOLO, OCR | Medium |
| Forest Guard | YOLO, OCR, mobile application | Medium |
| TreeHelper | YOLO, OCR, web application, 4G communication, GPS | High |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zvîncă, A.-M.; Petruc, S.-I.; Bogdan, R.; Marcu, M.; Popa, M. TreeHelper: A Wood Transport Authorization and Monitoring System. Sensors 2025, 25, 6713. https://doi.org/10.3390/s25216713
