In this section, we review existing research on monocular image-based pose estimation for non-cooperative spacecraft, which can be broadly classified into two categories: traditional methods and deep learning-based methods. Additionally, we provide a concise overview of existing algorithms for visible and infrared image fusion.

2.1. Traditional Methods

Traditional monocular vision-based pose estimation methods primarily depend on handcrafted features and prior geometric model knowledge of specific spacecraft. These methods employ traditional feature detection techniques, such as SIFT [21] and ORB [22], to extract features from images. The extracted features are then matched with predefined features of the known spacecraft, establishing correspondences between 2D keypoints and 3D model points to estimate the pose.
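
The role of the 2D-3D correspondences can be illustrated with a pinhole projection: a pose solver searches for the rotation and translation that minimize the reprojection error between projected model points and detected keypoints. The following is a minimal sketch, assuming an ideal pinhole camera without lens distortion; the function names, intrinsics, and model points are hypothetical and are not taken from any of the cited works.

```python
import math

def quat_to_rot(q):
    """Convert a quaternion (w, x, y, z) to a 3x3 rotation matrix."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)  # normalize defensively
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]

def project(points_3d, q, t, fx, fy, cx, cy):
    """Project 3D model points (target frame) to pixels for the pose (q, t)."""
    R = quat_to_rot(q)
    pixels = []
    for X in points_3d:
        # Camera-frame coordinates: Xc = R X + t
        xc, yc, zc = (sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3))
        pixels.append((fx * xc / zc + cx, fy * yc / zc + cy))
    return pixels

def reprojection_rmse(points_3d, keypoints_2d, q, t, fx, fy, cx, cy):
    """Root-mean-square pixel distance between projected and observed keypoints."""
    projected = project(points_3d, q, t, fx, fy, cx, cy)
    sq = [(u - uo) ** 2 + (v - vo) ** 2
          for (u, v), (uo, vo) in zip(projected, keypoints_2d)]
    return math.sqrt(sum(sq) / len(sq))

# Hypothetical model points (metres) and camera intrinsics (pixels).
model = [(0.5, 0.5, 0.0), (-0.5, 0.5, 0.0), (-0.5, -0.5, 0.0),
         (0.5, -0.5, 0.0), (0.0, 0.0, 0.3)]
K = dict(fx=1000.0, fy=1000.0, cx=640.0, cy=480.0)
true_q = (math.cos(0.1), 0.0, math.sin(0.1), 0.0)  # 0.2 rad about the y axis
true_t = (0.1, -0.2, 10.0)

observed = project(model, true_q, true_t, **K)  # noise-free synthetic keypoints
err_true = reprojection_rmse(model, observed, true_q, true_t, **K)
err_wrong = reprojection_rmse(model, observed, (1.0, 0.0, 0.0, 0.0), true_t, **K)
print(err_true, err_wrong)
```

The correct pose reproduces the synthetic keypoints, whereas the identity rotation leaves a residual of several pixels. That residual is the quantity a PnP-type solver drives toward zero.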

D’Amico et al. [11] used the Canny edge detector to extract edge features from images and determine the pose of the target spacecraft through model matching. Liu [12] utilized elliptical information to calculate the spacecraft orientation and distance parameters, leveraging the asymmetry of these features to ensure the accuracy of the pose estimation. Despite the effectiveness of these methods, their reliance on individual model characteristics does not guarantee robustness in pose estimation. To address these limitations, Sharma et al. [13] proposed weak gradient elimination (WGE), where the Sobel operator and Hough transform were constrained by geometric features to extract multiple image features, thereby improving the accuracy of pose estimation.

However, traditional pose estimation methods often require specific environmental conditions, such as good lighting and clear image quality. In complicated and changeable environments, such as those with strong light or shadows, the accuracy of these methods is limited by the precision of the model and the matching algorithm.

2.2. Deep Learning-Based Methods

With the development of deep learning technology, many deep learning-based pose estimation methods have emerged in recent years. These approaches can be categorized into single-stage and multi-stage methods, depending on whether the pose of the non-cooperative object is obtained directly.

The multi-stage method relies primarily on geometric keypoints of the non-cooperative object. A neural network-based keypoint regression model is designed to predict the 2D bounding box and the pixel positions of the 2D keypoints of the object. The correspondences between the 2D keypoints and their 3D counterparts are then passed to a PnP or EPnP solver to obtain the 6-DOF pose of the camera relative to the object. Park [23] was the first to use an object detection network for region-of-interest (ROI) detection to locate the spacecraft in the image, then regressed the keypoint coordinates, and finally used the PnP method to solve for the pose. Similarly, Chen et al. [24] first detected the 2D bounding box of the object, used HRNet [25] and heat maps to obtain keypoints, and then determined the relative orientation of the object and camera through the RANSAC PnP algorithm. Lorenzo et al. [26] combined a CEPPnP solver with an extended Kalman filter and a depth network, using heat maps to predict the 2D pixel coordinates of feature points for non-cooperative spacecraft. Huo et al. [27] replaced the prediction head of tiny-YOLOv3 with a keypoint detection head and designed a reliability judgment model and a keypoint existence judgment model to enhance the robustness of the pose estimation algorithm. The lightweight feature extraction network also facilitates deployment in space environments. Wang et al. [28] designed a keypoint detection network based on the Transformer model and introduced a representation containing keypoint coordinates and index entries. The final relative position of the camera and object was determined using the PnP method. Liu et al. [29] proposed a deformable-transformer-based single-stage end-to-end SpaceNet (DTSE-SpaceNet) that integrates object detection with keypoint regression. Yu et al. [30] adopted a wire-frame-based landmark description method, performing direct coordinate classification for satellite landmark positioning, and finally used the PnP method to obtain the target pose. Overall, detecting keypoints and then solving the 2D-3D correspondences with a PnP solver tends to be more physically interpretable and theoretically robust.
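
Several of the methods above predict one heat map per keypoint and read the keypoint location from its peak. The sketch below illustrates one common way to decode such a heat map, assuming a single-peaked map: a coarse argmax followed by an intensity-weighted centroid in a small window for sub-pixel refinement. It is not the decoding scheme of any specific cited network, and all names and sizes are hypothetical.

```python
import math

def make_gaussian_heatmap(height, width, cx, cy, sigma=1.5):
    """Synthetic heat map with a Gaussian blob centred at (cx, cy)."""
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
             for x in range(width)] for y in range(height)]

def decode_keypoint(heatmap, radius=2):
    """Return (u, v, score): sub-pixel peak location and peak value."""
    height, width = len(heatmap), len(heatmap[0])
    # 1) Coarse location: the pixel with the highest response.
    score, px, py = max((heatmap[y][x], x, y)
                        for y in range(height) for x in range(width))
    # 2) Refinement: intensity-weighted centroid around the peak.
    #    The truncated window biases the estimate slightly toward the peak pixel.
    sw = sx = sy = 0.0
    for y in range(max(0, py - radius), min(height, py + radius + 1)):
        for x in range(max(0, px - radius), min(width, px + radius + 1)):
            v = heatmap[y][x]
            sw += v
            sx += v * x
            sy += v * y
    return sx / sw, sy / sw, score

heatmap = make_gaussian_heatmap(32, 48, cx=20.3, cy=11.6)
u, v, score = decode_keypoint(heatmap)
print(round(u, 2), round(v, 2))
```

The decoded pixel coordinates, together with the known 3D model points, form the 2D-3D correspondences that are handed to the PnP solver.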

Although deep learning-based multi-stage pose estimation methods show potential, almost all of their training images are synthetic. In practical space control missions, spacecraft are mostly non-cooperative objects, so the keypoint regression used by the multi-stage approach may fail. The single-stage method instead estimates the pose of the target directly from the input image with a single model. The nonlinear mapping between the image and the pose is typically learned by a neural network through end-to-end training, which can simplify the processing pipeline and enhance real-time performance. Additionally, the single-stage method does not require additional information such as the 3D model of the spacecraft and the corresponding 2D keypoints, making it more suitable for deployment in the real space environment. However, the single-stage method requires the same camera parameters during training and testing.

Sharma et al. [

31] use AlexNet to classify the input images with discrete orientation labels and transform the regression problem of orientation estimation into a classification problem. However, this method requires the orientation space to be discretized to a very detailed extent to ensure the accuracy of orientation estimation and it is limited by the number of training data samples. To address this issue, Proença and Gao [

32] proposed a deep learning framework for spacecraft orientation estimation based on soft classification. This framework directly obtained the relative position through a regression method and the relative orientation through probabilistic direction soft classification; additionally, they proposed the URSO dataset. Garcia et al. [

33] proposed a two-branch spacecraft pose estimation network. The first branch outputs the object position, and the second branch uses ROI images to return the spacecraft orientation quaternion. Huang et al. [

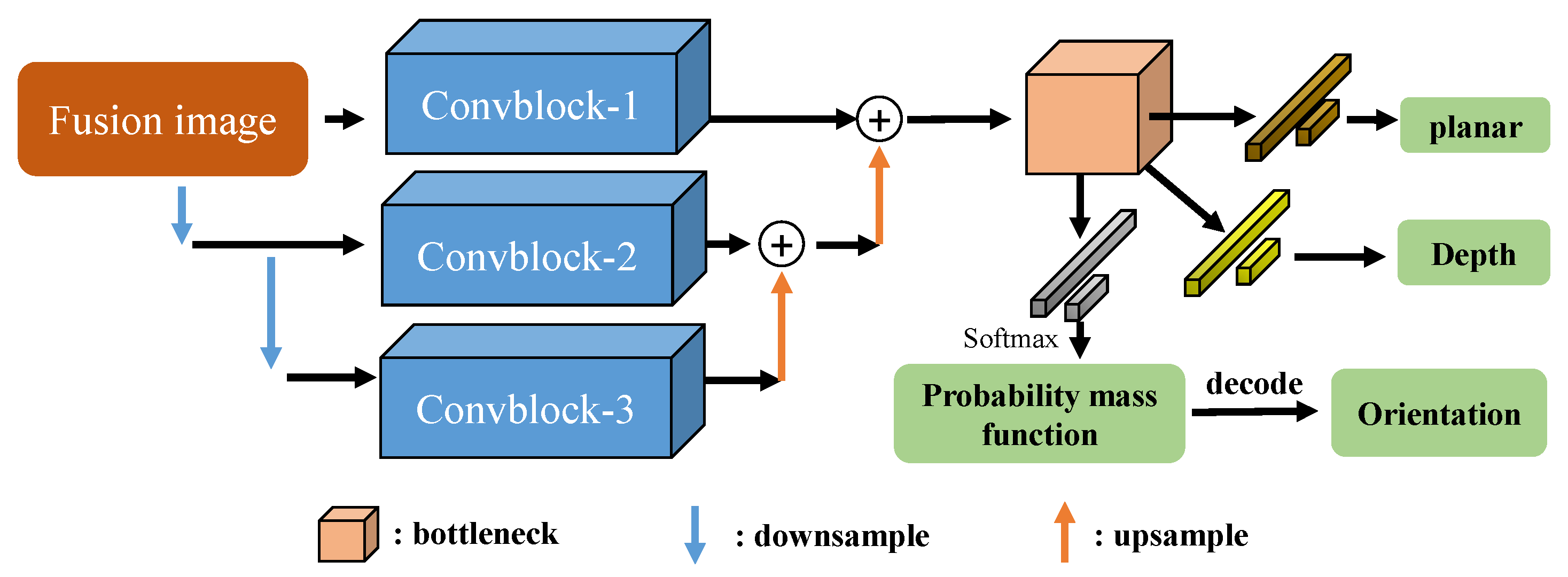

34] proposed a pose estimation network with three branches. The first branch returns the quaternion information of the object through soft classification coding, the second branch returns the 3-DOF position information, and the third branch determines whether the results of the first two branches need to be output. Sharma et al. [

35] proposed the Spacecraft Pose Network (SPN), which consists of three branch networks. These networks realize spacecraft position estimation, probability mass function prediction after discrete soft classification, and final orientation regression, respectively. The relative position of the spacecraft is obtained using the Gauss-Newton algorithm, and the target pose can be estimated using only grayscale images. To solve the problem of domain differences, Park et al. [

36] improved upon SPN and proposed a new multi-task network architecture SPNv2.
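
The difference between hard and soft orientation classification can be sketched as follows. The example assumes a toy discretization (rotations about a single axis in 30° steps), a Gaussian soft label over the angular distance to each bin, and decoding by weighted quaternion averaging via the dominant eigenvector of the weighted outer-product matrix. These choices, and all function names, are illustrative assumptions rather than the exact formulations of the cited networks, which discretize the full 3D orientation space.

```python
import math

def z_rotation_quat(deg):
    """Unit quaternion (w, x, y, z) for a rotation of `deg` degrees about z."""
    half = math.radians(deg) / 2.0
    return (math.cos(half), 0.0, 0.0, math.sin(half))

def angle_between(q1, q2):
    """Rotation angle (rad) between two unit quaternions; q and -q are equal."""
    dot = abs(sum(a * b for a, b in zip(q1, q2)))
    return 2.0 * math.acos(min(1.0, dot))

def soft_encode(q, bins, sigma):
    """Soft label: Gaussian weight on the angular distance to each bin."""
    weights = [math.exp(-angle_between(q, b) ** 2 / (2 * sigma ** 2)) for b in bins]
    total = sum(weights)
    return [w / total for w in weights]

def soft_decode(probs, bins, iters=50):
    """Weighted quaternion average: dominant eigenvector of sum_i p_i q_i q_i^T."""
    M = [[sum(p * b[i] * b[j] for p, b in zip(probs, bins)) for j in range(4)]
         for i in range(4)]
    best = max(range(len(bins)), key=lambda i: probs[i])
    v = list(bins[best])  # start power iteration from the most likely bin
    for _ in range(iters):
        v = [sum(M[i][j] * v[j] for j in range(4)) for i in range(4)]
        norm = math.sqrt(sum(c * c for c in v))
        v = [c / norm for c in v]
    return tuple(v)

bins = [z_rotation_quat(a) for a in range(0, 360, 30)]  # 12 coarse bins
target = z_rotation_quat(40.0)                          # lies between two bins
probs = soft_encode(target, bins, sigma=math.radians(20.0))

hard = bins[max(range(len(bins)), key=lambda i: probs[i])]  # hard classification
decoded = soft_decode(probs, bins)                          # soft classification
hard_err_deg = math.degrees(angle_between(hard, target))
soft_err_deg = math.degrees(angle_between(decoded, target))
print(hard_err_deg, soft_err_deg)
```

With hard labels the error is bounded below by the bin spacing, which is why a very fine discretization is needed. The soft decoding interpolates between neighbouring bins and, in this toy setting, recovers the target to well under one bin width.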

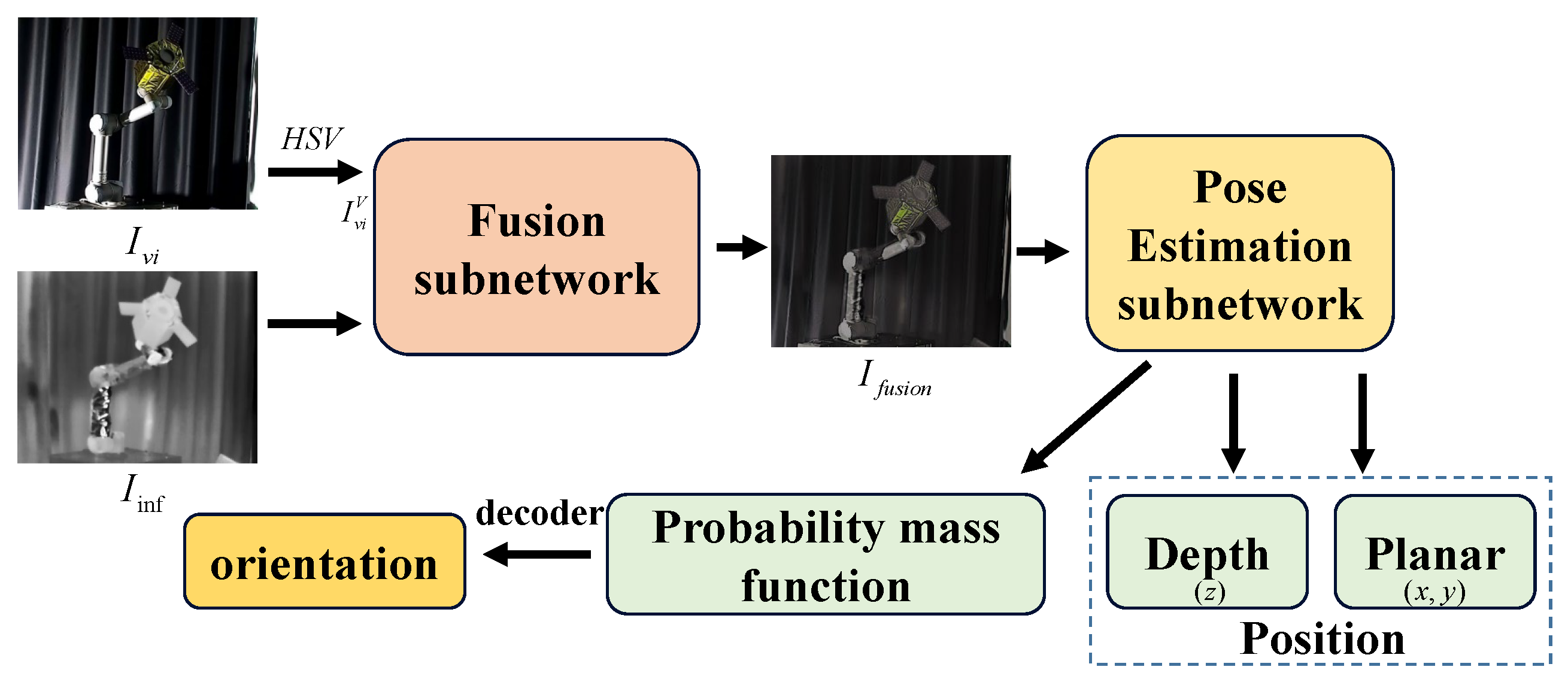

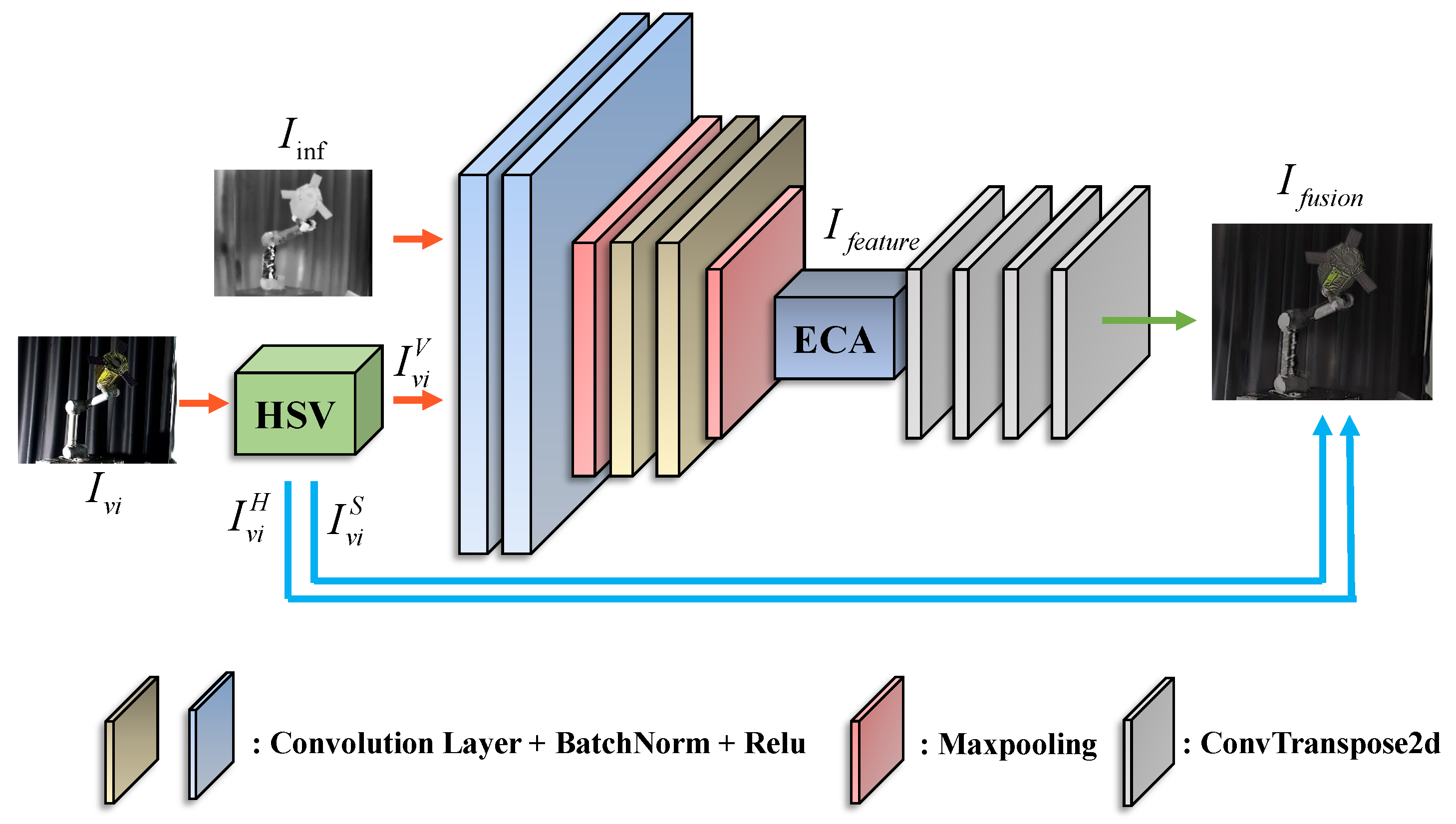

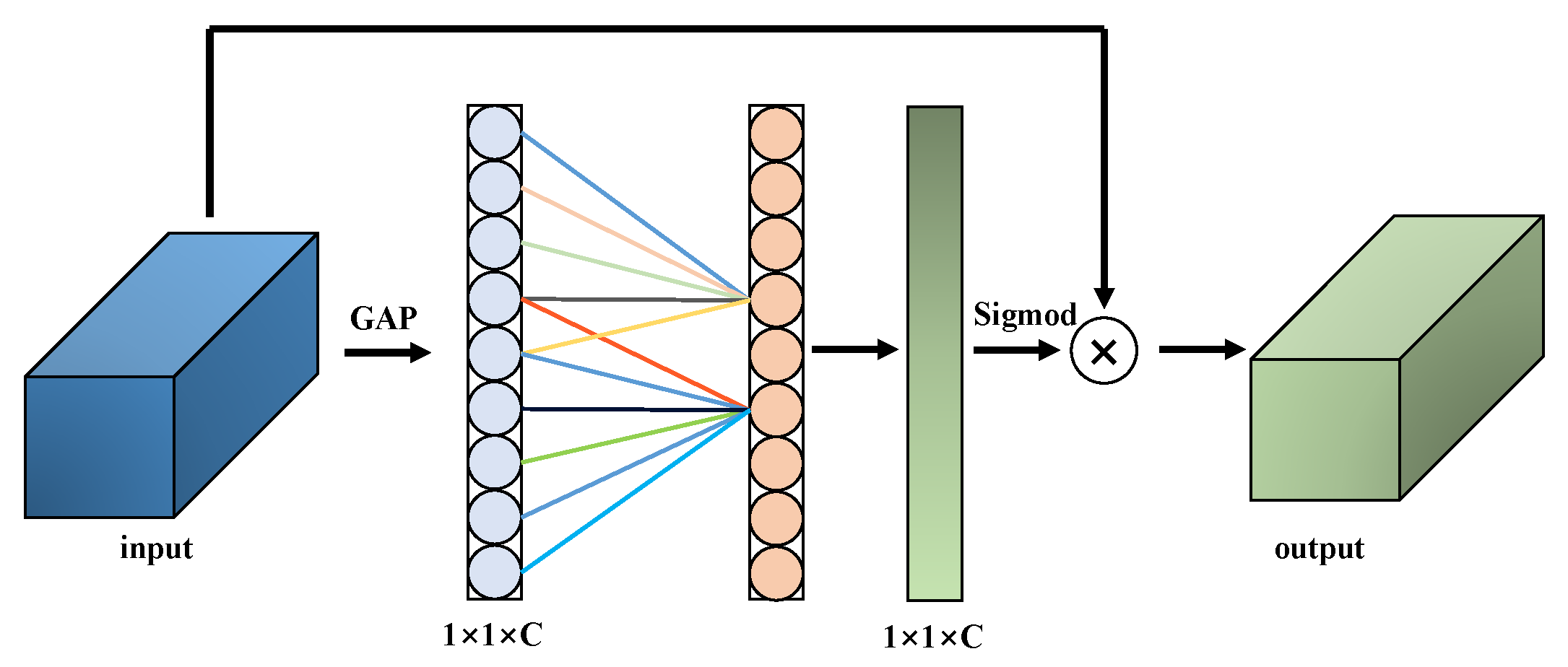

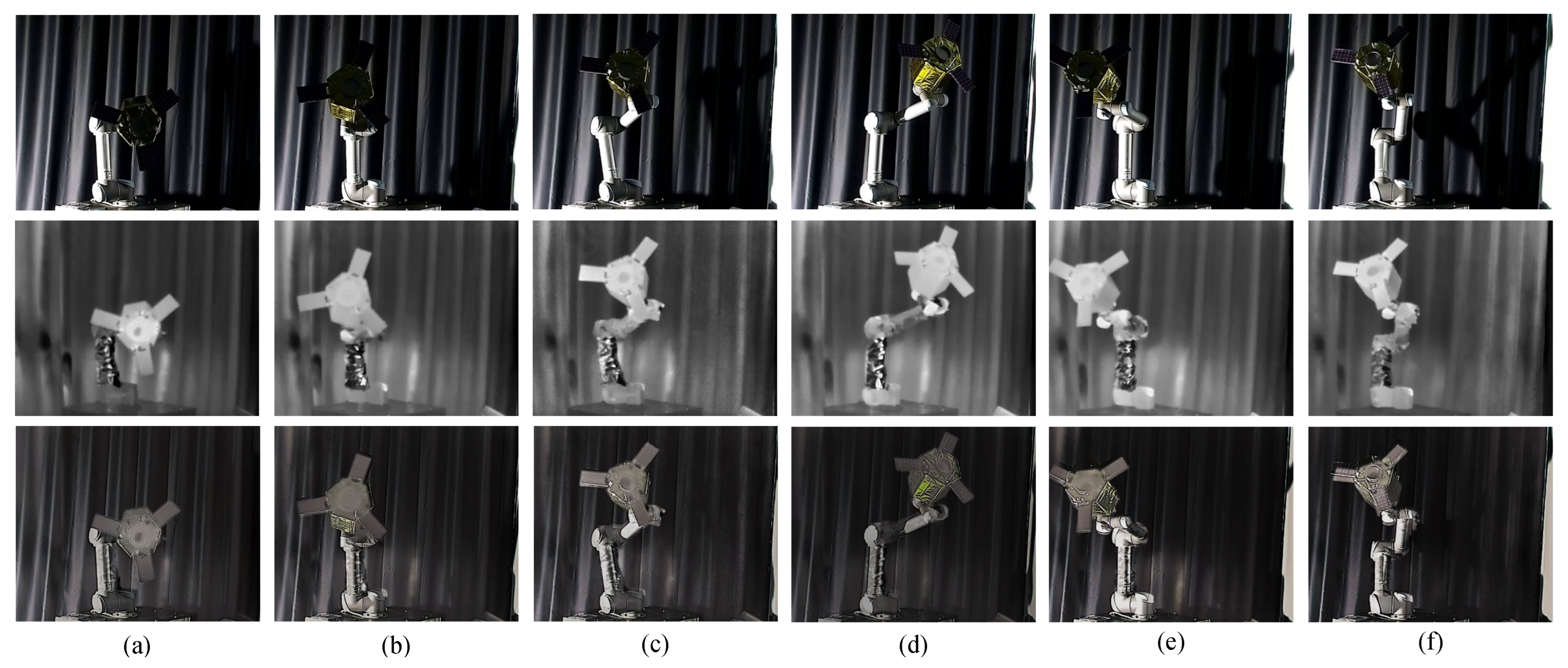

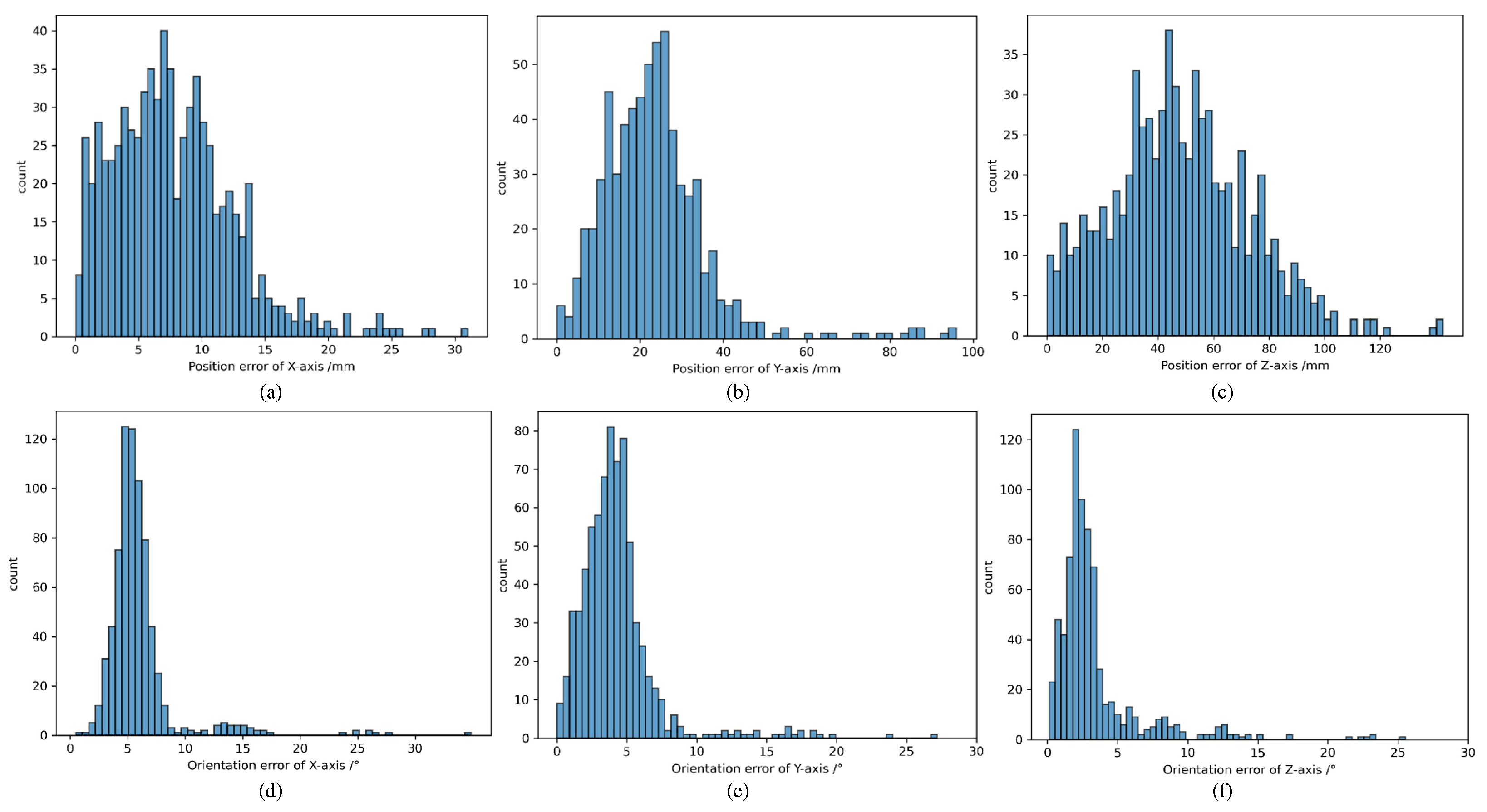

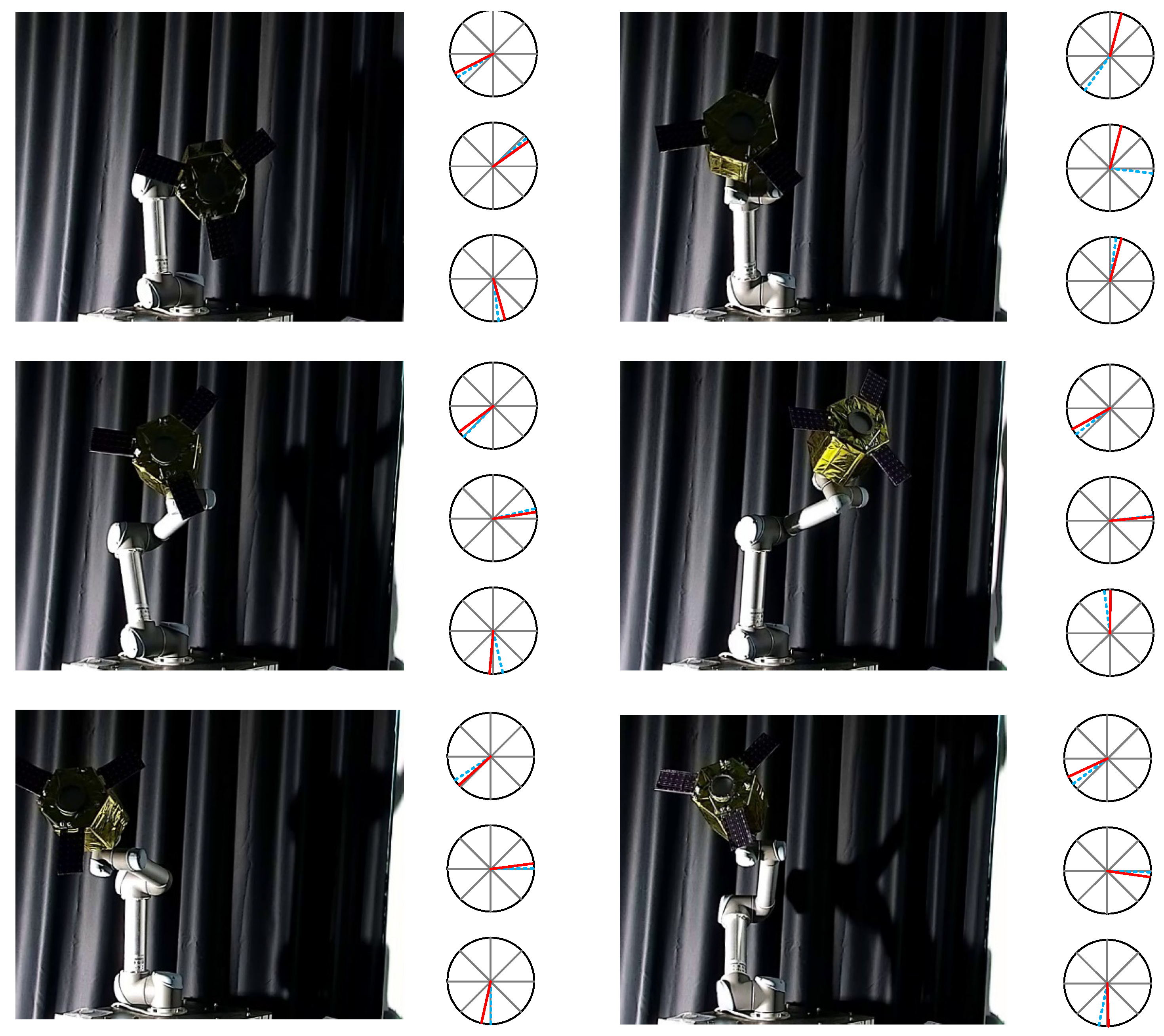

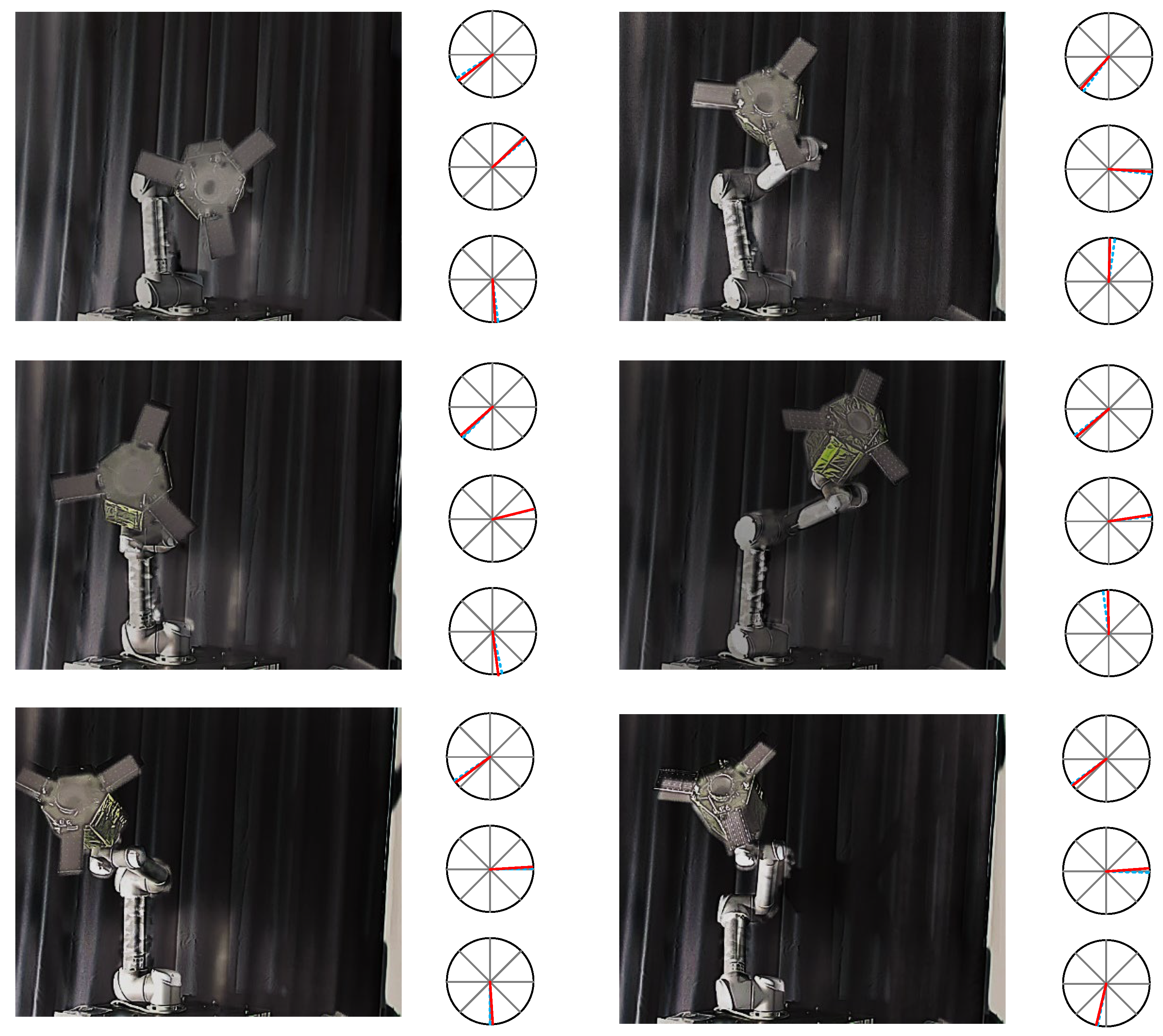

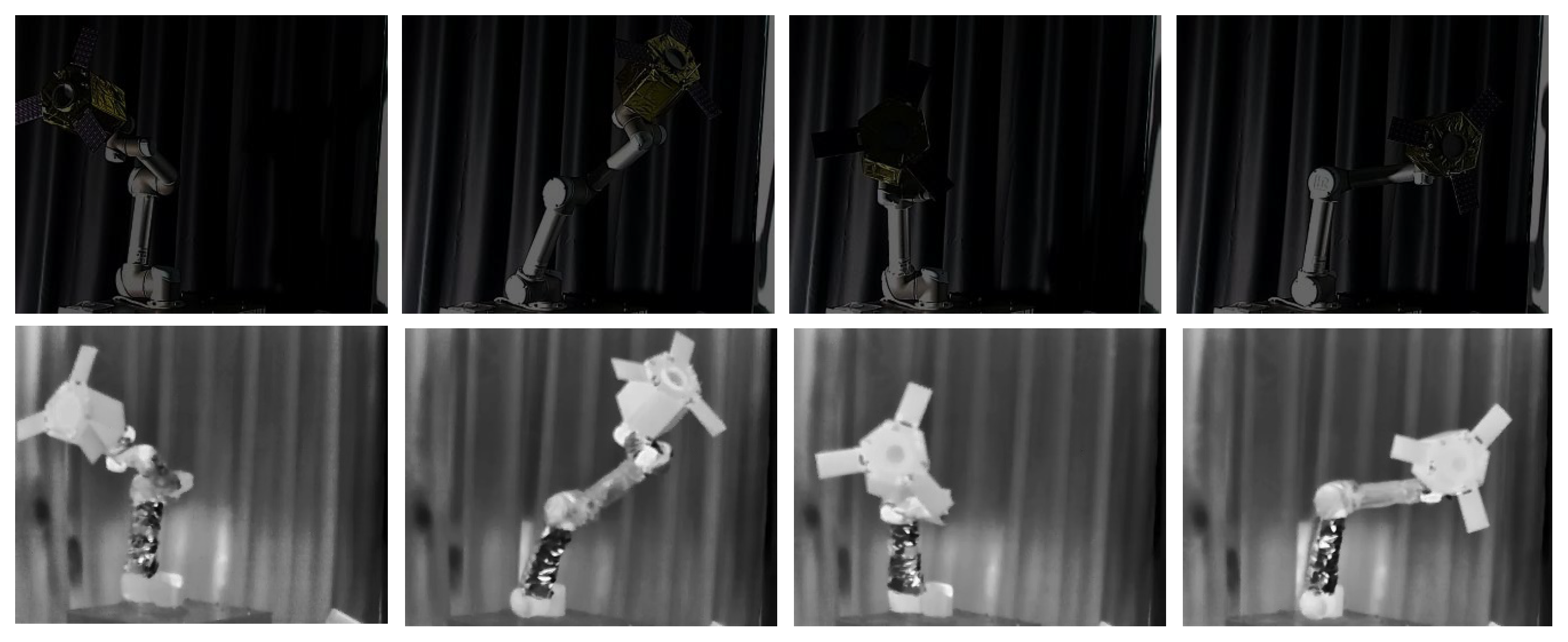

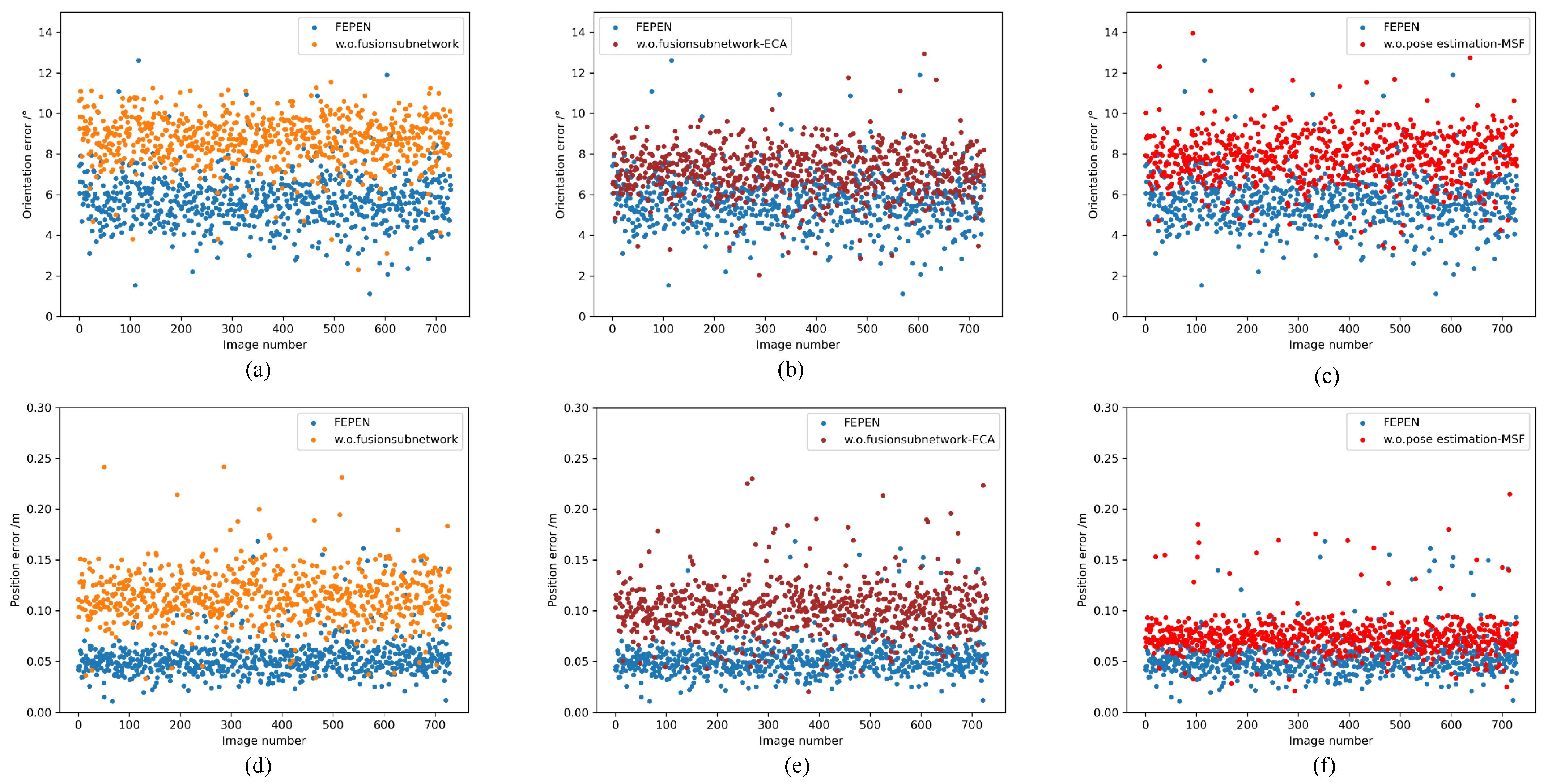

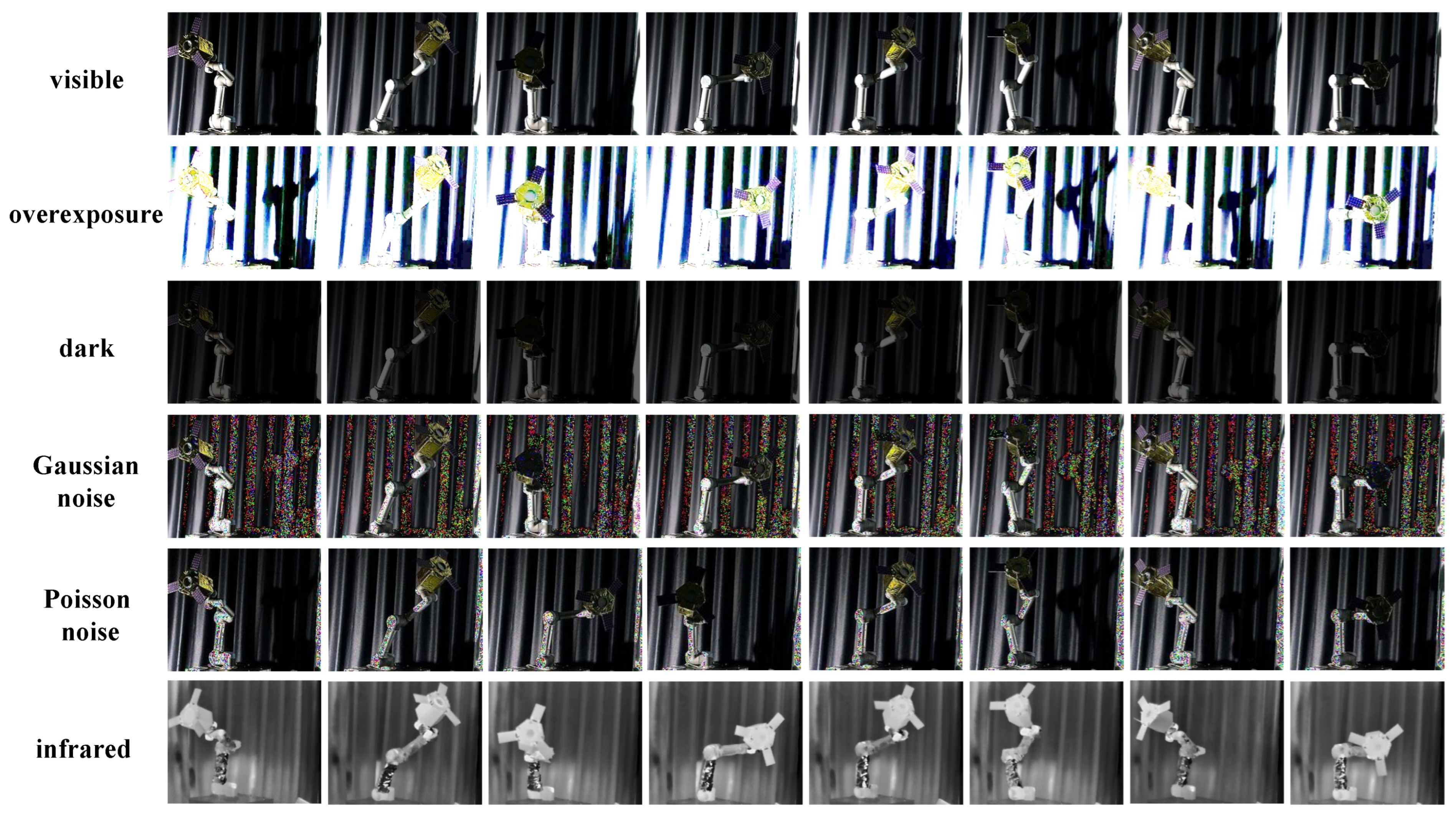

The single-stage pose estimation method exhibits lower accuracy than multi-stage approaches. However, it offers distinct advantages, including reduced dependency on additional labels, enhanced adaptability to complex environments through end-to-end training, and minimized reliance on data preprocessing. Existing pose estimation methods, whether single-stage or multi-stage, predominantly rely on visible images as network inputs. While effective in terrestrial applications, this approach presents significant limitations in space environments. Visible sensors often fail to capture object details under low-light or high-contrast conditions, while infrared sensors struggle in scenarios with minimal thermal gradients or high reflectivity. To address these challenges, we propose the Visible and Infrared Fused Pose Estimation Framework (VIPE) for space non-cooperative objects. VIPE leverages complementary features from both visible and infrared spectra through a novel fusion mechanism, providing richer and more robust input representations. This fusion not only overcomes the limitations of single-modal approaches but also significantly enhances pose estimation accuracy and reliability across diverse orbital conditions, marking a critical advancement for space applications.

2.3. Visible-Infrared Image Fusion

Infrared and visible image fusion has been widely researched to combine the thermal radiation information from infrared images with the detailed texture features from visible images, aiming to improve scene perception for downstream vision tasks. Traditional fusion methods can be broadly classified into two categories: multi-scale transformation-based approaches [37] and sparse representation-based methods [38]. Because these methods rely on handcrafted fusion rules, their performance is highly dependent on the chosen feature extraction and fusion strategies, which may result in information loss, particularly in complex scenarios.
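
A handcrafted fusion rule of the kind used by traditional methods can be sketched with a toy two-scale decomposition, which stands in here for a full multi-scale transform. Each image is split into a smooth base layer (box blur) and a detail layer (residual); base layers are averaged and, per pixel, the detail with the larger magnitude is kept. This is an illustrative assumption only, not the algorithm of any cited work, and all names are hypothetical.

```python
def box_blur(img, radius=1):
    """Mean filter over a (2*radius+1)^2 window, truncated at image borders."""
    height, width = len(img), len(img[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            vals = [img[j][i]
                    for j in range(max(0, y - radius), min(height, y + radius + 1))
                    for i in range(max(0, x - radius), min(width, x + radius + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out

def fuse_two_scale(visible, infrared, radius=1):
    """Fuse two registered grayscale images of identical size."""
    base_v = box_blur(visible, radius)
    base_i = box_blur(infrared, radius)
    height, width = len(visible), len(visible[0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            detail_v = visible[y][x] - base_v[y][x]
            detail_i = infrared[y][x] - base_i[y][x]
            # Handcrafted rules: average the bases, keep the stronger detail.
            detail = detail_v if abs(detail_v) >= abs(detail_i) else detail_i
            fused[y][x] = 0.5 * (base_v[y][x] + base_i[y][x]) + detail
    return fused

# Toy inputs: a featureless visible image and an infrared image with a hot spot.
visible = [[0.2] * 5 for _ in range(5)]
infrared = [[0.0] * 5 for _ in range(5)]
infrared[2][2] = 1.0

fused = fuse_two_scale(visible, infrared)
print(round(fused[2][2], 3))
```

The hot spot that is invisible in the visible image survives in the fused result. The fixed rules (average and max-magnitude) also show why such methods depend so strongly on the chosen decomposition and fusion strategy.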

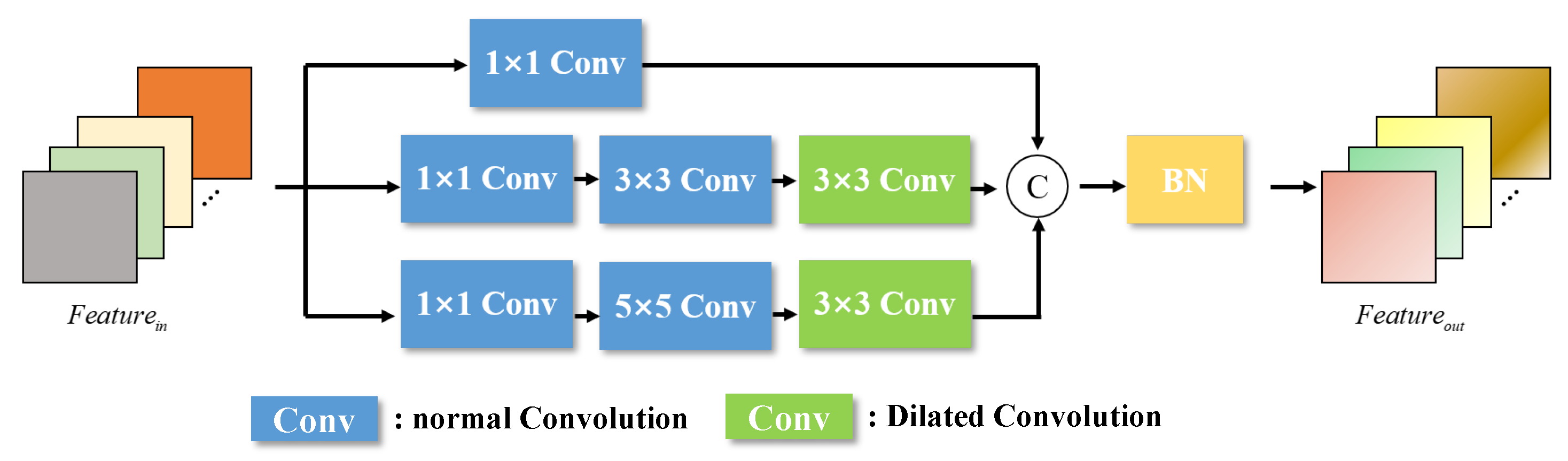

Recent advances in deep learning have revolutionized this field. Convolutional neural network (CNN)-based frameworks, such as IFCNN [39] and FusionGAN [40], leverage hierarchical features to automatically learn fusion rules through adversarial or non-adversarial training. Attention mechanisms further improve feature selection by highlighting salient regions across modalities. AttentionFGAN [41], which combines attention mechanisms with generative adversarial networks, significantly improves the visual quality of fused images. Emerging trends focus on hybrid architectures combining deep networks with traditional methods. DenseFuse [42] integrates multi-scale analysis with dense connectivity to enhance feature representation. In addition, research on spacecraft pose estimation based on RGB-Thermal (RGB-T) fusion remains relatively limited. Rondao et al. [43] developed the ChiNet framework, which effectively enhances RGB images by incorporating long-wave infrared data, thereby providing richer feature representations while mitigating the impact of artifacts commonly encountered in visible-light imaging of space objects. Notably, Li et al. [44] proposed DCTNet, a heterogeneous dual-branch multi-cascade network for infrared and visible image fusion. DCTNet employs a transformer-based branch and a CNN-based branch to extract global and local features, respectively, followed by a multi-cascade fusion module to integrate complementary information. U2Fusion [45] adapts to various fusion tasks through a single model. However, its generic design does not address the unique challenges of space environments, such as extreme lighting conditions and the need for precise pose estimation. In a complementary study, Hogan et al. [46] demonstrated that thermal imaging sensors offer a viable alternative to conventional visible-light cameras under low-illumination conditions. Their work introduced a novel convolutional neural network architecture for spacecraft relative attitude prediction, achieving promising results. Furthermore, recent advances in Transformer-based models [47] have shown significant potential in capturing long-range dependencies between heterogeneous modalities, with successful applications in RGB-T fusion for visual tracking of non-cooperative space objects.

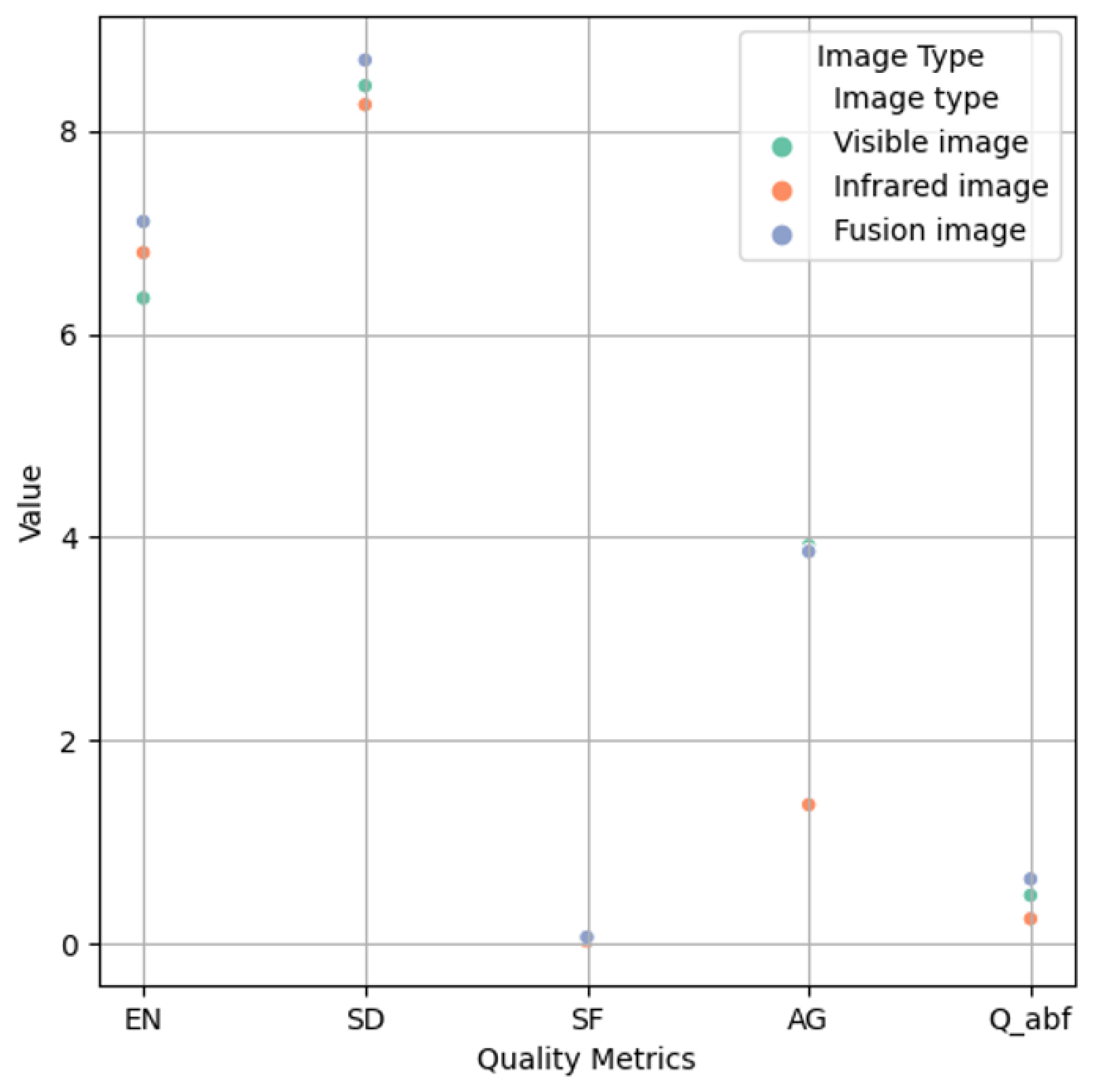

In contrast to the research above, this paper treats image fusion as the upstream task of pose estimation and builds a novel pose estimation framework for space non-cooperative objects based on infrared and visible images.