Electrical connectors are essential elements in electric energy storage systems, providing the pathways that link storage units in both series and parallel arrangements. Their stability is crucial for ensuring reliable current transfer during charge and discharge operations. If a connector fails, the current path is interrupted, which may cause instability of the system, accelerated deterioration of the cells, and ultimately premature system failure. One of the key degradation mechanisms of connector contacts is stress relaxation, in which the material gradually loses its ability to maintain stress under constant strain. To evaluate this mechanism, a constant-stress ADT is conducted at three elevated temperature levels, namely 65 °C, 85 °C, and 100 °C, as shown in

Figure 2. Six test units are subjected to these stresses, with inspections scheduled at fixed times

, and at each inspection the stress relaxation measurement

is recorded, where

denotes the number of observations for unit

i under stress level

. The reference stress level is 40 °C, while failure is defined once the stress relaxation exceeds 30% (

ω = 30%). The dataset for this analysis originates from [

34], and the summary results are listed in

Table 1.

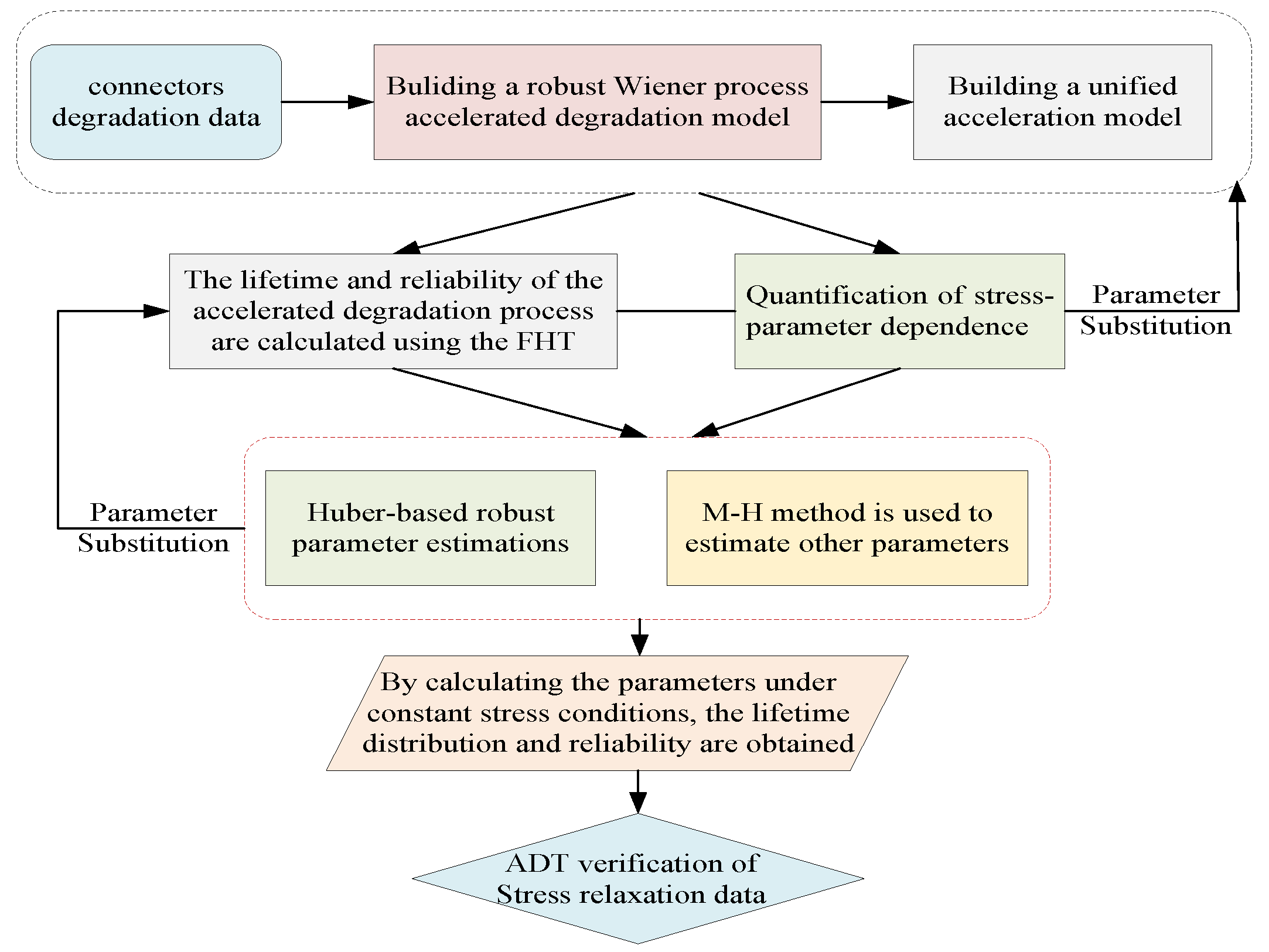

5.1. Estimation of the Parameters

To identify the unknown parameters of the ADT model, a sequential estimation strategy is adopted. In the initial stage, the parameters associated with the Huber-type robust formulation are obtained, and in the subsequent stage, the remaining unknown quantities are determined. The numerical outcomes of this procedure are summarized in

Table 2.

In particular,

Table 2 reports the estimates for

. When carrying out the simulation, it is necessary to verify that the fitted values obtained from the observed data exhibit good agreement with the actual model parameters, thereby confirming the validity of the estimation process.
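As a rough illustration of the Huber-type first stage, the sketch below fits a single slope by iteratively reweighted least squares with Huber weights. The trend form (a line through the origin in an already-transformed time `s`), the function names, the threshold `delta`, and the toy data are all assumptions made for illustration; the trend model of Equation (25) and its tuning are not reproduced here.

```python
def huber_weight(residual, delta):
    """Huber weight: 1 inside the threshold, delta/|r| outside it."""
    r = abs(residual)
    return 1.0 if r <= delta else delta / r


def fit_slope(s, y, weights=None):
    """Weighted least-squares slope for y ~ a * s (no intercept)."""
    if weights is None:
        weights = [1.0] * len(s)
    num = sum(w * si * yi for w, si, yi in zip(weights, s, y))
    den = sum(w * si * si for w, si in zip(weights, s))
    return num / den


def huber_slope(s, y, delta=1.0, n_iter=50, tol=1e-10):
    """Huber M-estimate of the slope via iteratively reweighted least squares."""
    a = fit_slope(s, y)  # start from the ordinary least-squares fit
    for _ in range(n_iter):
        w = [huber_weight(yi - a * si, delta) for si, yi in zip(s, y)]
        a_new = fit_slope(s, y, w)
        if abs(a_new - a) < tol:
            return a_new
        a = a_new
    return a


# Hypothetical toy data: true slope 2, with one contaminated reading.
s = [float(k) for k in range(1, 11)]
y = [2.0 * si for si in s]
y[-1] += 30.0

a_ols = fit_slope(s, y)
a_huber = huber_slope(s, y)
print(f"OLS slope: {a_ols:.4f}, Huber slope: {a_huber:.4f}")
```

In this toy case the single outlier pulls the least-squares slope well away from 2, while the Huber estimate stays close to it, which is the behavior a robust first stage is meant to provide.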

To assess how well the estimated trend parameters capture the underlying degradation behavior, the obtained estimates are substituted into Equation (

25) to generate the predicted trend curves for each stress condition. For clarity,

Figure 3 illustrates a side-by-side comparison between the predicted trajectories and the actual observed degradation paths of the product.

The comparison covers the three accelerated stress conditions. At 65 °C, the estimated curve fits the empirical data well, showing that the model can effectively capture the relatively gradual and smooth degradation pattern occurring at lower stress levels. Only minor deviations are observed, suggesting that the estimation remains stable throughout the time horizon.

When the stress level is increased to 85 °C, the degradation process becomes noticeably faster. The estimated trend is able to follow this accelerated deterioration and provides a close approximation to the actual measurements. Although the observed data exhibit some fluctuations, the predicted trajectory still captures the overall progression of degradation with good accuracy.

At 100 °C, the degradation intensifies substantially, leading to a much sharper trend compared with the other two cases. Even under this extreme stress level, the estimated curve remains consistent with the empirical observations, indicating that the proposed method is capable of modeling both the rate and the magnitude of degradation. Overall, the figure demonstrates that the estimated model reproduces the observed degradation behavior reliably across different stress levels, thereby validating its applicability for accelerated degradation testing and lifetime prediction.

After the trend parameters have been obtained through the Huber-based approach, attention is directed toward estimating the other parameters in the ADT framework. These two groups of parameters are interdependent, since the trend estimates appear in Equation (

22) during the evaluation of the likelihood function. The second phase of the estimation strategy is therefore carried out with the M-H algorithm.

Table 3 presents the outcomes of this procedure, reporting quantities such as posterior mean, variance, Monte Carlo error, and the posterior quantiles (2.5%, median, 97.5%). Such statistical summaries provide a reliable basis for further investigations of lifetime prediction and reliability evaluation.
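The second stage can be pictured with a minimal random-walk Metropolis-Hastings sketch that also computes the kinds of summaries listed above. The toy log-posterior, the proposal step size, the chain length, and the batch-means estimate of the Monte Carlo error are assumptions for illustration only; the likelihood built from Equation (22) is not reproduced here.

```python
import math
import random
import statistics


def metropolis_hastings(log_post, x0, step, n_iter, burn_in, seed=0):
    """Random-walk Metropolis-Hastings sampler for one scalar parameter."""
    rng = random.Random(seed)
    x, lp = x0, log_post(x0)
    kept = []
    for i in range(n_iter):
        cand = x + rng.gauss(0.0, step)  # symmetric Gaussian proposal
        lp_cand = log_post(cand)
        # 1 - random() lies in (0, 1], so the logarithm is always defined.
        if math.log(1.0 - rng.random()) < lp_cand - lp:
            x, lp = cand, lp_cand
        if i >= burn_in:  # discard the burn-in draws
            kept.append(x)
    return kept


def summarize(chain, n_batches=20):
    """Posterior mean, variance, batch-means Monte Carlo error, and quantiles."""
    ordered = sorted(chain)

    def quantile(p):
        return ordered[min(len(ordered) - 1, int(p * len(ordered)))]

    size = len(chain) // n_batches
    batch_means = [
        statistics.fmean(chain[b * size:(b + 1) * size]) for b in range(n_batches)
    ]
    return {
        "mean": statistics.fmean(chain),
        "variance": statistics.variance(chain),
        "mc_error": statistics.stdev(batch_means) / math.sqrt(n_batches),
        "q2.5": quantile(0.025),
        "median": quantile(0.5),
        "q97.5": quantile(0.975),
    }


def toy_log_post(x):
    """Hypothetical target: normal with mean 2 and standard deviation 0.5."""
    return -0.5 * ((x - 2.0) / 0.5) ** 2


chain = metropolis_hastings(toy_log_post, x0=0.0, step=1.0,
                            n_iter=12000, burn_in=2000)
summary = summarize(chain)
print(summary)
```

The burn-in of 2000 iterations mirrors the value quoted in the text; everything else about the target and the tuning is a placeholder.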

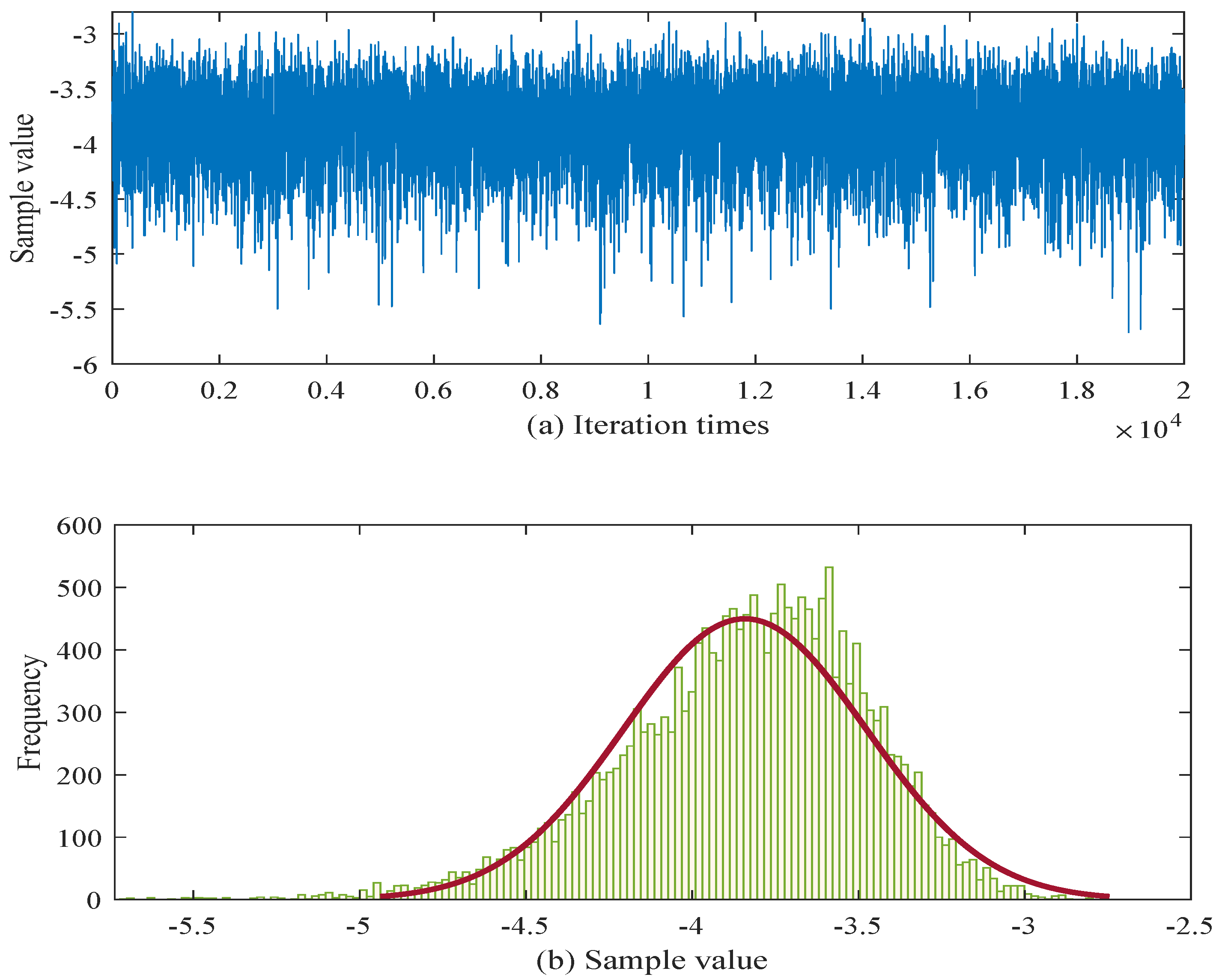

In order to provide a more intuitive view of the parameter estimation results, the posterior samples after a burn-in of 2000 iterations are illustrated in

Figure 4 and

Figure 5.

Figure 4 corresponds to the parameter

. The trace plot shows that after burn-in, the chain fluctuates steadily within a bounded region (from approximately

to

), without noticeable drift, which indicates satisfactory convergence and acceptable mixing of the Markov chain. The associated histogram is unimodal and relatively concentrated, with the bulk of the posterior mass centered around the mid-

range. This suggests that the M–H sampler has produced a stable estimate of

with moderate posterior uncertainty.

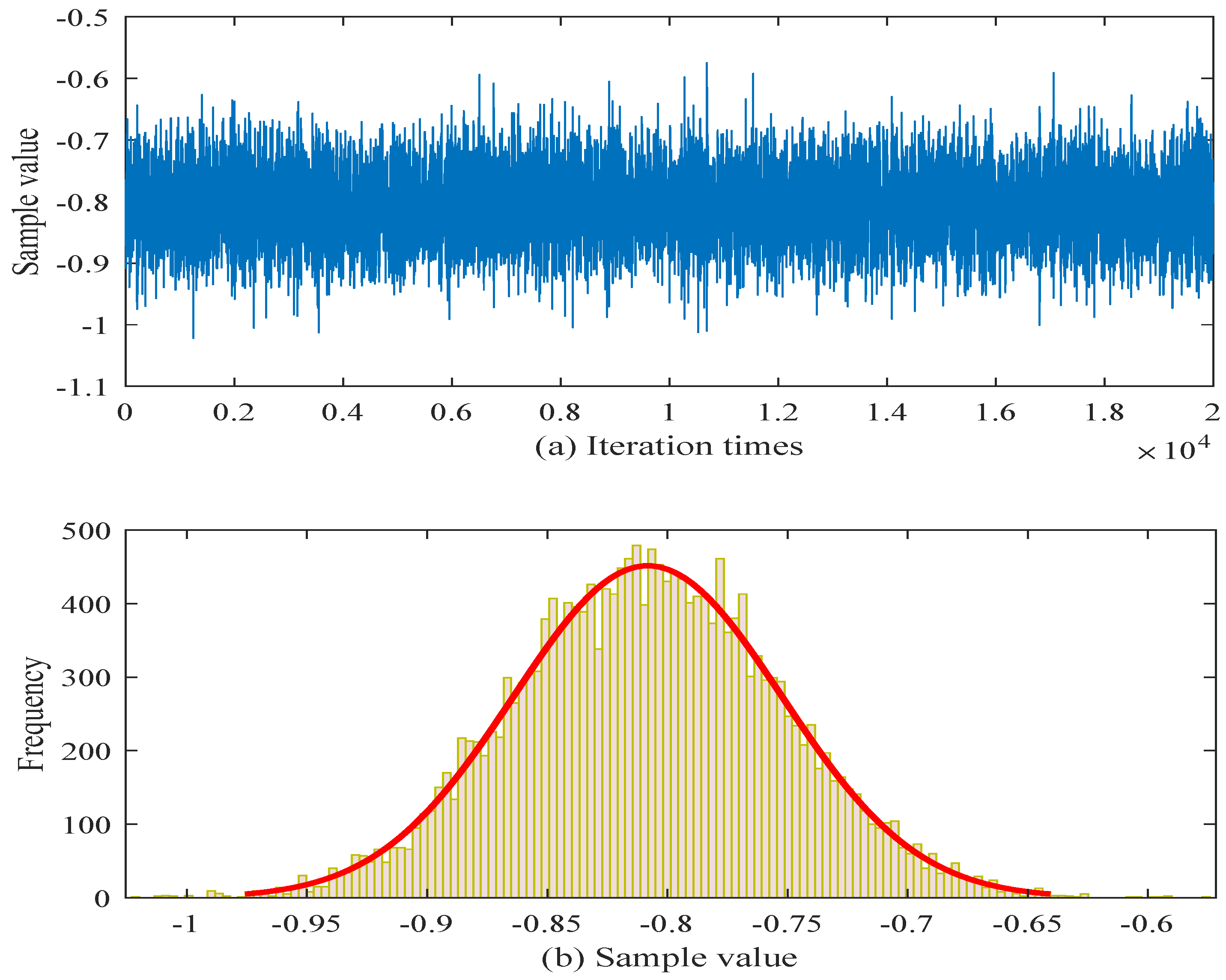

Figure 5 depicts the results for

. The trace exhibits a similarly stable pattern, oscillating within a narrower interval (roughly between

and

) and showing no signs of poor mixing or long-range dependence. The corresponding histogram is sharply peaked and more concentrated than that of

, with most of the posterior mass around

to

. This indicates that the posterior distribution of

is well identified and exhibits lower variance.

Both figures indicate that, after the 2000-iteration burn-in, the M–H algorithm produces stable chains and unimodal posterior distributions for

and

, providing evidence that the estimation procedure is reliable for these parameters. To mitigate the internal uncertainty associated with limited sample sizes, a parameter estimation approach based on interval estimation is adopted. Consequently, according to Equation (

32), the estimated values obtained from the interval estimation framework are summarized in

Table 4.

To enable comparison with the proposed model, we also include a traditional Wiener process-based model that ignores the effect of internal uncertainties; in this case,

is set to zero, and the model is denoted as M1. In addition, we examine the other scenarios introduced in

Section 2. For case (1), the subcases (a)–(c) are labeled M2, M3, and M4, respectively. For case (2), the subcases (a)–(c) are denoted M5, M6, and M7, respectively. For case (3), the model is referred to as M8. These baseline models are incorporated into the case study for benchmarking purposes. The estimated parameter values of these models are listed in

Table 5.

According to

Table 2 and

Table 5, although the sets of parameters to be estimated differ, the results for the time-transformation parameter

c are quite similar across all models. Hence,

c can be considered independent of stress, which aligns with our earlier assumption.

Table 2,

Table 3,

Table 4 and

Table 5 present the parameter estimates obtained from different modeling approaches applied to the same degradation dataset. Variations in the estimated values arise from differences in model formulation and the treatment of uncertainty, which in turn affect the scale and shape of the fitted distributions. Nevertheless, parameter estimates alone cannot fully determine which model provides the most accurate representation of connector degradation under accelerated testing with uncertainties. A broader evaluation of model performance is therefore necessary to assess their comparative suitability.

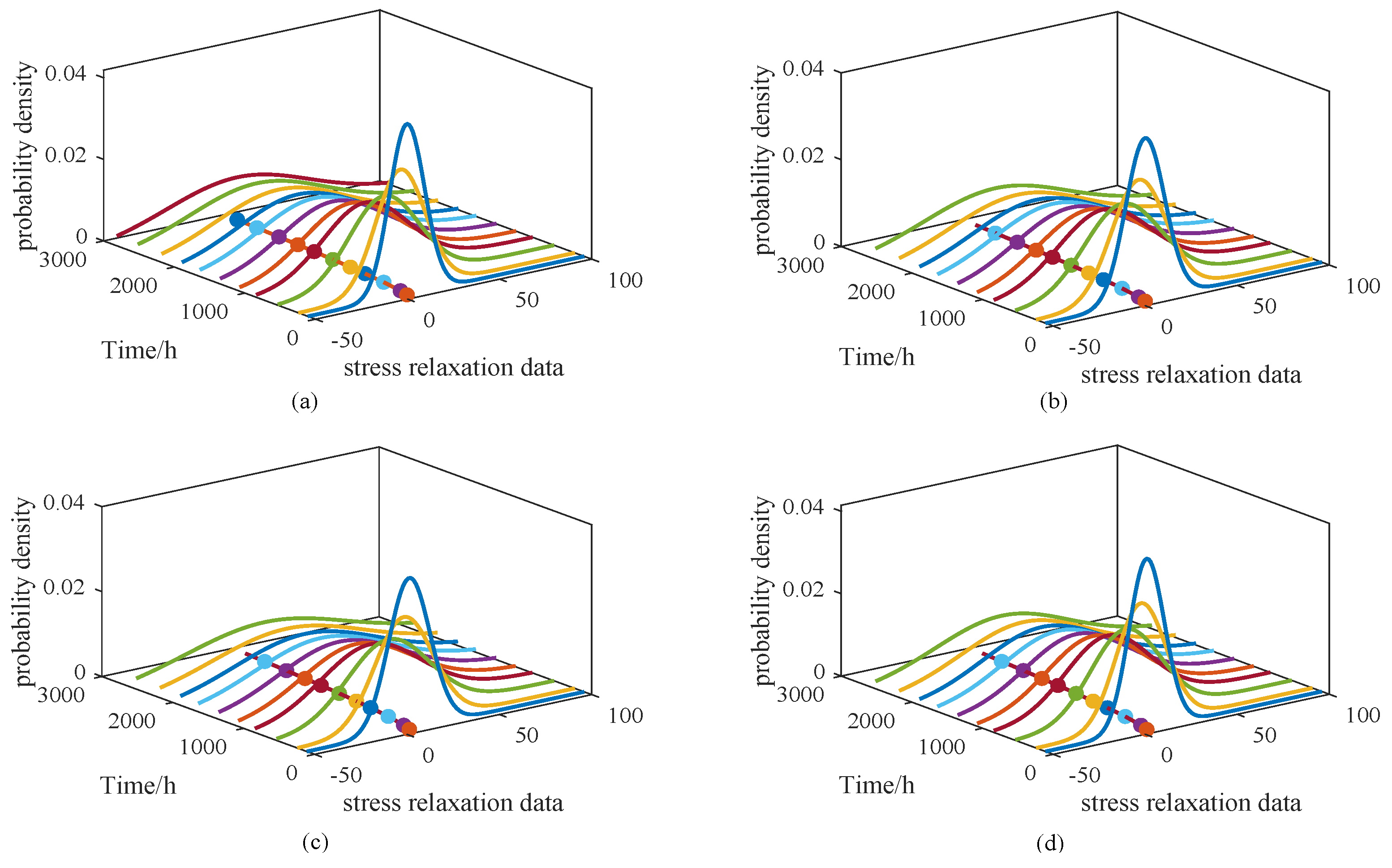

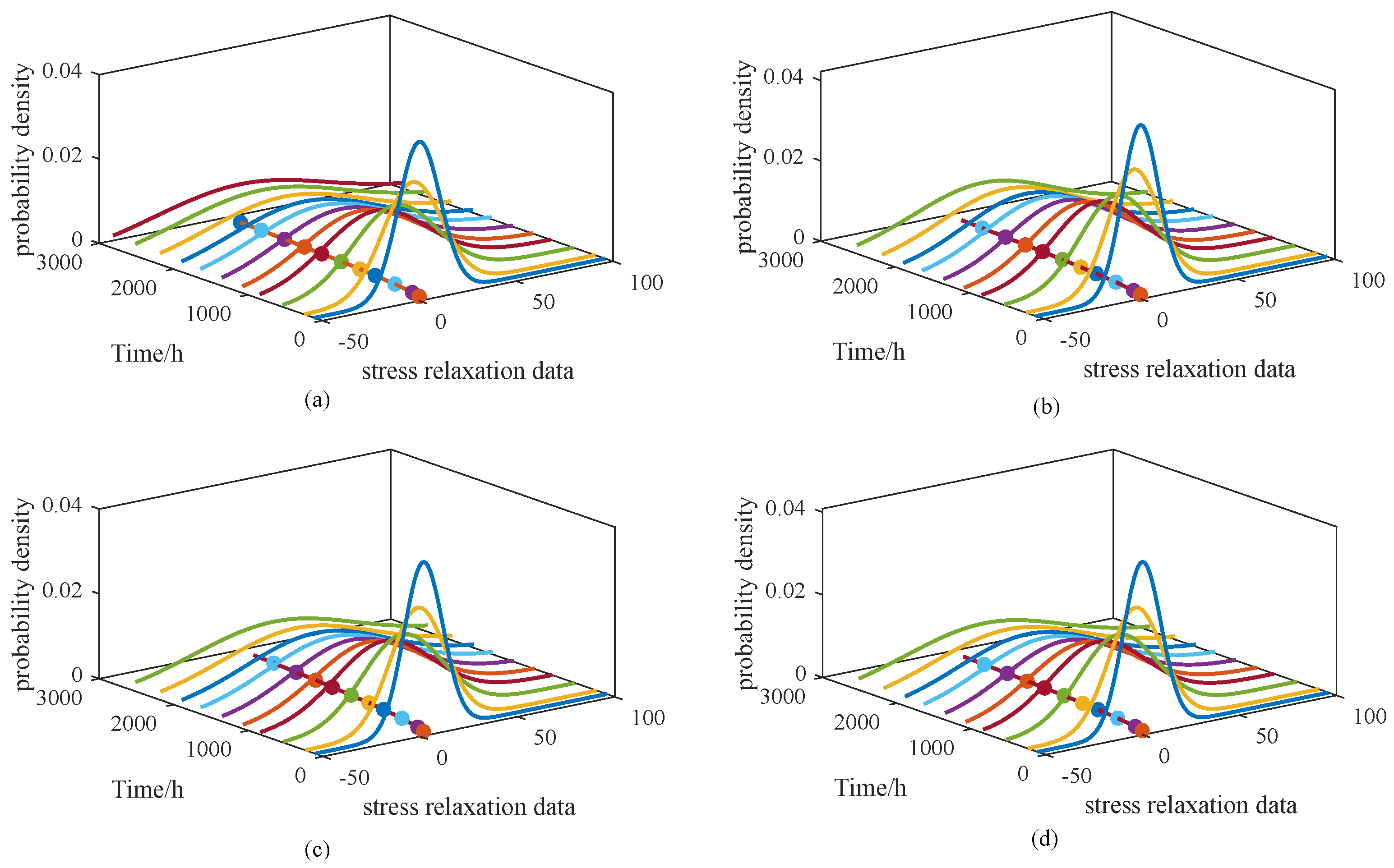

Figure 6 and

Figure 7 illustrate the probability density functions (PDFs) for models M1–M4 and M5–M8, respectively. To simplify the presentation, results are shown only for the 65 °C condition. Alongside the PDFs, the corresponding degradation trajectories are also plotted, enabling direct visual comparison of the models under consistent parameter settings.

From

Figure 6, it can be observed that the PDFs of M1 and M4 are relatively narrow and peaked, whereas those of M2 and M3 appear broader, suggesting that the degradation behavior in M1 and M4 is more concentrated. In

Figure 7, the PDF of M5 is noticeably wider than those of the other three models. Such broader distributions imply a more dispersed degradation process, corresponding to a larger variance. However, the overall degradation trends remain consistent, showing no substantial differences. To further illustrate the performance of the proposed approach,

Figure 8 presents the PDF of the model under a stress level of 65 °C, along with the PDF curves at three sampling times: 108 h, 534 h, and 1074 h.

From

Figure 8a, the probability density functions (PDFs) of the proposed model are shown to closely follow the overall degradation trajectory under the 65 °C stress condition. The degradation trend is effectively captured, and the fitted PDFs provide a clear representation of the probabilistic distribution of degradation states across the time horizon. This demonstrates that the model not only traces the mean degradation path but also quantifies the associated uncertainty through the shape and spread of the PDFs.

Figure 8b presents the PDFs at selected sampling times (108 h, 1074 h, and 2810 h). At the initial stage (108 h), the distribution is narrow and sharply peaked, reflecting a concentrated degradation state with low variance. As time advances, the distributions become wider, showing greater dispersion and increasing uncertainty. These observations confirm that the proposed model can dynamically capture both the central tendency and the variability of degradation behavior over time, which is essential for reliable lifetime prediction and accurate reliability assessment.

To provide stronger evidence of the distinctions observed in the figures, a quantitative evaluation of model performance is carried out. The computed metrics are reported in Section 5.3 to compare model accuracy across operational conditions.

5.2. Lifetime and Reliability Analysis

The primary goal of ADT is to evaluate product lifetime and reliability under nominal operating conditions. Achieving this requires converting stress-dependent parameters into their counterparts at the normal stress level. Accordingly, Equation (

21) is recalculated under the condition of normal stress, with the transformation expressed as follows:

where

denotes the nominal operating stress level given in

Table 1. Because the parameter

c does not vary with stress, it remains unchanged and requires no transformation.

From Equations (

33) and (

12), the cumulative distribution function (CDF) of the FHT under nominal stress conditions can be directly derived. By substituting Equation (

33), the closed-form expressions are obtained, and the corresponding CDF curves are plotted in

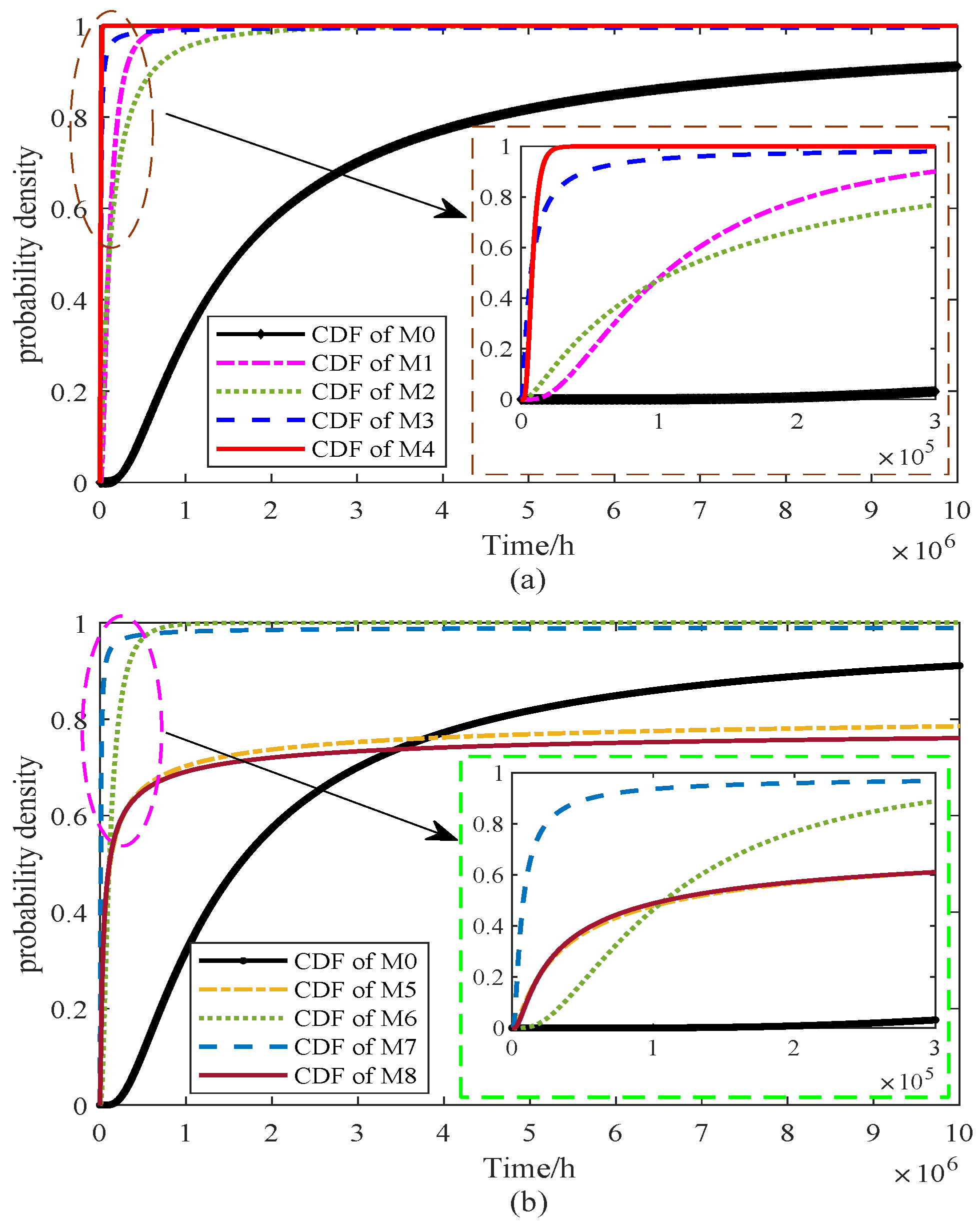

Figure 9. For clarity of discussion, the models are organized into two groups, each including the proposed approach for comparison. In this context, the proposed model is denoted as M0.
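For orientation, the sketch below evaluates the textbook first-hitting-time CDF of a plain Wiener process with positive drift, which has the inverse Gaussian form. It is closest in spirit to a conventional baseline without internal uncertainty and is not the closed-form expression of the proposed model. The power-law time scale `t**c`, the function names, and the numerical values of `mu` and `sigma` are assumptions; only the threshold of 30 is taken from the failure definition given earlier.

```python
import math


def std_normal_cdf(x):
    """Standard normal CDF, written with erfc for accuracy in the tails."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


def fht_cdf(t, mu, sigma, omega, c=1.0):
    """CDF of the first hitting time of threshold omega for
    X(t) = mu * t**c + sigma * B(t**c), with mu > 0 (inverse Gaussian form)."""
    if t <= 0.0:
        return 0.0
    lam = t ** c  # transformed time scale (assumed power law)
    root = sigma * math.sqrt(lam)
    first = std_normal_cdf((mu * lam - omega) / root)
    tail = std_normal_cdf(-(mu * lam + omega) / root)
    # Combine exp(.) and the tail in log space so a large exponent cannot overflow.
    if tail == 0.0:
        second = 0.0
    else:
        second = math.exp(2.0 * mu * omega / sigma ** 2 + math.log(tail))
    return min(1.0, first + second)


def reliability(t, mu, sigma, omega, c=1.0):
    """Reliability is the complement of the first-hitting-time CDF."""
    return 1.0 - fht_cdf(t, mu, sigma, omega, c)


# Hypothetical nominal-stress parameters; omega = 30 matches the 30% threshold.
mu, sigma, omega = 0.05, 0.3, 30.0
for t in (10.0, 300.0, 600.0, 1200.0, 5000.0):
    print(t, round(fht_cdf(t, mu, sigma, omega), 4))
```

Plotting `fht_cdf` over a time grid for each model's nominal-stress parameters would give curves of the kind compared in the CDF figure.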

From

Figure 9a, it is evident that the cumulative distribution functions (CDFs) of models M1–M4 differ noticeably from that of the proposed model M0. Specifically, the CDFs of M3 and M4 rise more steeply, implying shorter predicted lifetimes and smaller variances, while M1 and M2 exhibit relatively flatter curves, indicating longer tails and greater dispersion in the estimated lifetimes. In

Figure 9b, the comparison of M0 with models M5–M8 underscores the differing stress–parameter mapping assumptions. The CDF curve of M5 is shifted left relative to M8, indicating earlier failure predictions, while M6 and M7 produce narrower distributions, suggesting reduced variability and a tendency to underestimate uncertainty. By contrast, M8 shows the slowest rise, corresponding to the longest predicted lifetime but potentially leading to an overestimation of reliability. Overall, the CDF of M0 rises neither the fastest nor the slowest; instead, it provides a balanced representation across the entire time horizon, supporting its suitability for characterizing lifetime distributions under nominal stress conditions. To further highlight the differences among the models, the PDFs derived from Equation (

13) are presented in

Figure 10.

From

Figure 10a, M1 and M3 exhibit sharp early peaks, suggesting shorter lifetimes with limited variability. In contrast, M2 and M4 produce flatter peaks, reflecting greater dispersion and delayed failures. In

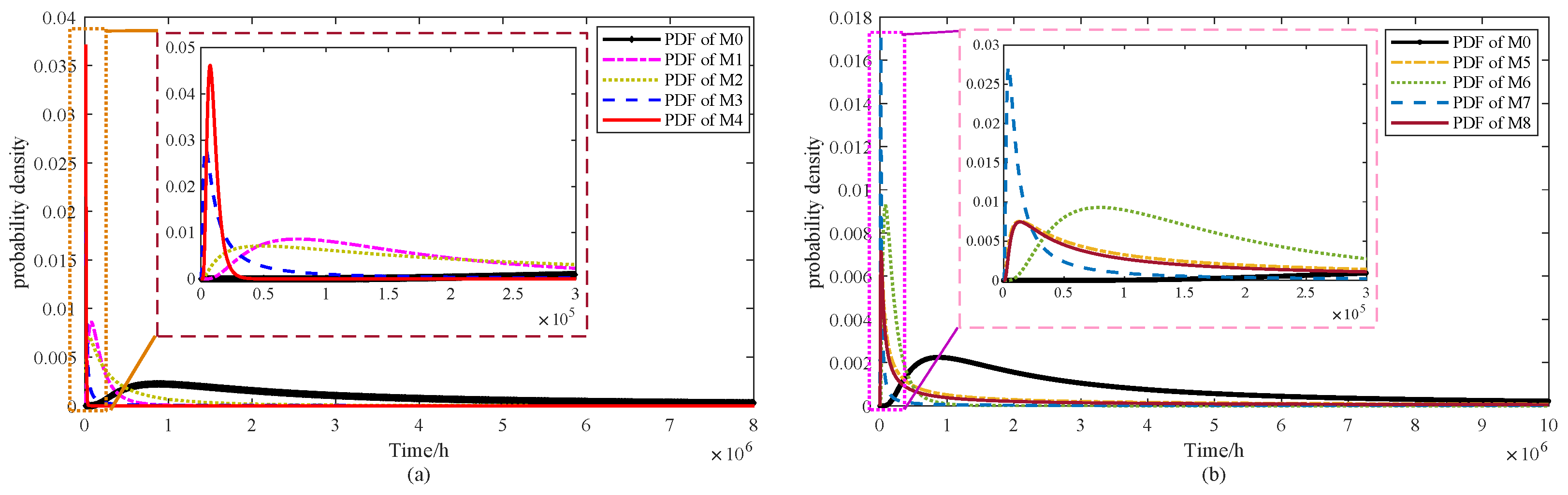

Figure 10b, the PDFs of M5–M8 are compared with M0. Both M5 and M8 display moderately wide peaks, suggesting moderate but not excessive variability. Model M6 yields the broadest distribution among them, whereas M7 shows a narrow and pronounced peak, indicating concentrated predictions with reduced uncertainty. M0 is distinctive in presenting the widest distribution overall, capturing the largest uncertainty range while remaining consistent with the degradation trend. This underscores the strength of the proposed model in representing both variability and realism in lifetime prediction. In addition, the PDF and CDF of M0 obtained through confidence interval estimation are illustrated in

Figure 11.

From

Figure 11, the point-estimated probability density function (PDF) of model

exhibits a distinct unimodal shape, with the 95% confidence band being relatively narrow around the peak and gradually widening toward the tails. This indicates that the central part of the lifetime distribution is estimated with high stability, whereas predictions at the extremes are more sensitive to uncertainty. The corresponding cumulative distribution function (CDF) shows a similar pattern: the confidence interval remains tight around the median region (e.g.,

), suggesting reliable estimation of central quantiles, while the interval widens toward the lower and upper tails, reflecting increased variability in early-failure and high-reliability quantiles (e.g.,

and

).

It should be emphasized that the widening of the confidence intervals in the tail regions does not imply poor robustness of the proposed method. Robust estimation in this study is primarily applied to the mean degradation parameters, ensuring that the central trend remains stable even under data contamination. The interval estimation, on the other hand, addresses the additional uncertainty arising from limited sample sizes by explicitly quantifying its impact on variance-related parameters. Consequently, the combination of robust mean estimation and interval-based variance quantification allows

not only to provide stable central predictions but also to transparently capture the sensitivity of extreme predictions, thereby enhancing the overall credibility of the lifetime analysis. Based on Equation (

14), the reliability curves of these models are shown in

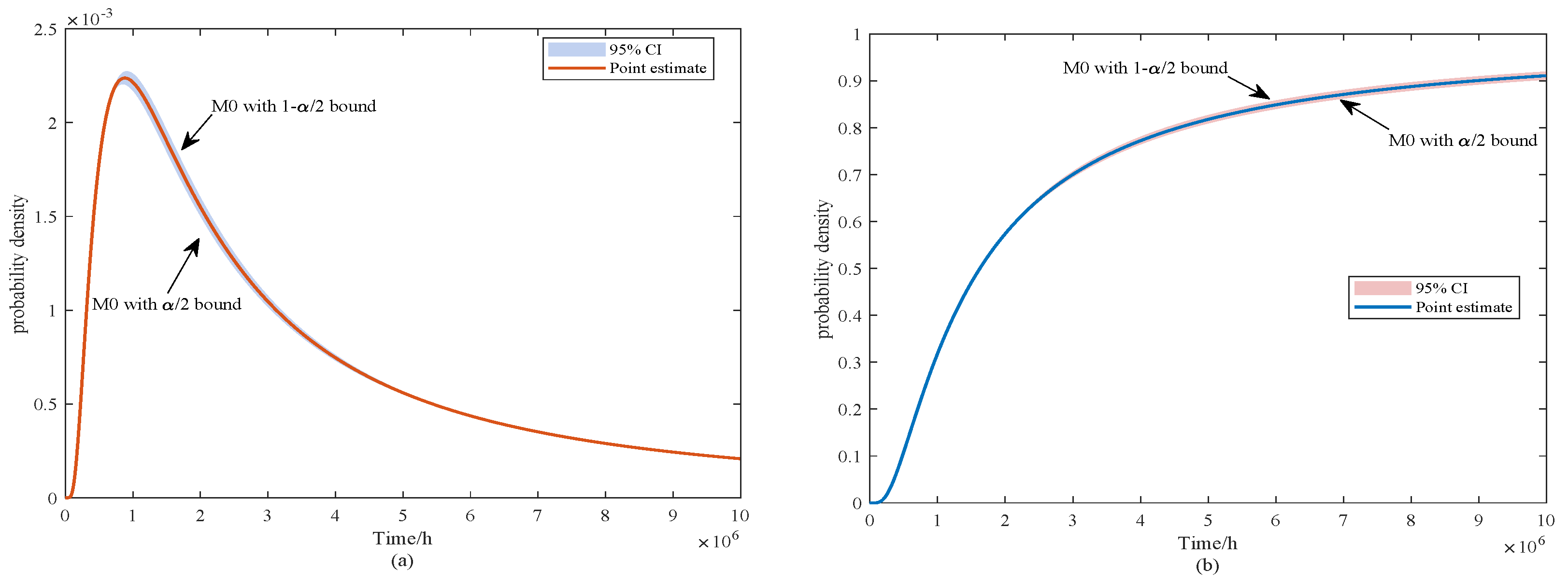

Figure 12.

Figure 12 presents the reliability curves of the nine models (M0–M8), where panels (a) and (j) correspond to the proposed method (M0) and its interval performance, respectively, and panels (b)–(i) display the baseline models. For a quantitative comparison, the median lifetime

(defined as the time at which the reliability function

) was approximated from the plots. The proposed model M0 and its interval values achieve the longest predicted lifetime with

h. Among the baseline models, M8 also shows a relatively long lifetime (

h), though still significantly shorter than M0. In contrast, M3, M4 and M7 show the most rapid reliability decay, with

values on the order of

h, indicating much earlier predicted failures. The other models (M1, M2, M5, and M6) fall between these extremes, typically with

in the range of

–

h. The slope and spread of the curves further reflect model-specific uncertainty. The proposed method (M0) exhibits the broadest distribution with a gradual decline, capturing greater variability in the degradation process. Conversely, M3, M4 and M7 display steep drops, suggesting concentrated lifetime predictions that may underestimate uncertainty. M8 exhibits intermediate behavior, with reliability maintained longer than most baseline models, which may indicate an overestimation of reliability.
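Rather than reading the median lifetime off a plot, it can be obtained numerically by solving for the time at which the reliability equals 0.5. The bisection sketch below assumes a continuous, decreasing reliability function; the exponential toy curve and the bracketing interval are placeholders, not any of the models M0–M8.

```python
import math


def median_lifetime(reliability, t_lo, t_hi, tol=1e-9, max_iter=200):
    """Solve R(t) = 0.5 by bisection, assuming R is continuous and decreasing."""
    if not (reliability(t_lo) >= 0.5 >= reliability(t_hi)):
        raise ValueError("R(t) = 0.5 is not bracketed by [t_lo, t_hi]")
    for _ in range(max_iter):
        mid = 0.5 * (t_lo + t_hi)
        if reliability(mid) > 0.5:
            t_lo = mid  # median lies to the right of mid
        else:
            t_hi = mid  # median lies at or to the left of mid
        if t_hi - t_lo < tol:
            break
    return 0.5 * (t_lo + t_hi)


def toy_reliability(t):
    """Hypothetical reliability curve: exponential with mean life 1000 h."""
    return math.exp(-t / 1000.0)


t_median = median_lifetime(toy_reliability, 0.0, 1.0e5)
print(f"median lifetime: {t_median:.3f} h")
```

Passing a model's own reliability function in place of `toy_reliability` would give a value that does not depend on plot resolution.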

Overall, the results demonstrate that the proposed model M0 outperforms the baseline approaches by providing efficient lifetime estimates and effectively characterizing the uncertainty in the reliability distribution, thereby offering a more realistic representation of long-term degradation behavior.

To assess the quality of the models more rigorously, we conduct a quantitative evaluation. In the next section, these models are examined from multiple perspectives.

5.3. Model Comparison Results

This section evaluates the nine models with respect to their capacity to capture degradation dynamics and predict product lifetime. As noted in

Section 4, the mean function of a stochastic process provides a reliable description of the general degradation pattern. Therefore, the degree of agreement between the model-predicted mean trajectory and the experimental degradation data can be used as an indicator of model accuracy. Based on the parameter estimates listed in

Table 2 and

Table 5,

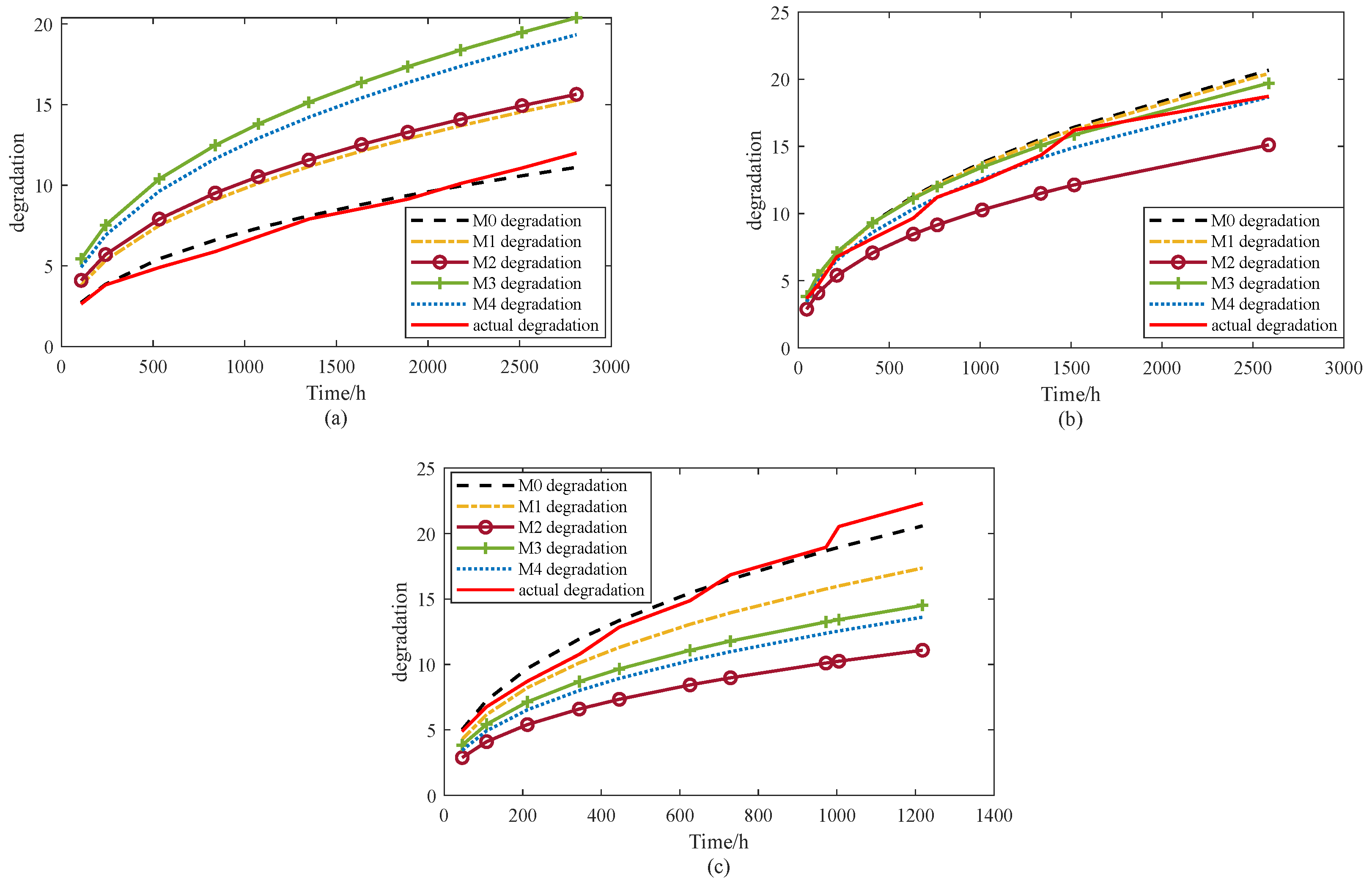

Figure 13 and

Figure 14 present a comparison of the mean degradation paths for M0 and the baseline models, offering a clearer assessment of how well each approach represents the underlying degradation behavior. For clarity, two figures are provided to compare the average degradation trends of models M0–M8 with the degradation data.

Figure 13 presents the results for M0–M4, while

Figure 14 illustrates the results for M5–M8.

As shown in

Figure 13, under stress levels of 65 °C and 100 °C, model M0 provides the closest fit to the observed degradation trend. At 85 °C, the best agreement is observed for models M3 and M4, although M0 and M1 also demonstrate acceptable performance. At 65 °C, M3 exhibits the poorest fit, whereas M2 shows the weakest performance under both 85 °C and 100 °C. These results suggest that M2 fails to adequately represent the accelerated degradation process under uncertainty, which may in turn compromise the accuracy of lifetime predictions derived from this model.

Figure 14 illustrates that, consistent with the findings in

Figure 13, the proposed model M0 delivers the best performance under the 65 °C and 100 °C stress conditions. At 100 °C, models M5, M6, and M8 approximate the observed degradation trajectory reasonably well during the initial 300 h; however, their accuracy declines as time progresses, suggesting that these models fail to adequately capture the underlying uncertainty. Under 85 °C, model M7 exhibits the closest fit to the degradation data, while the remaining models show comparable but slightly weaker performance. Nevertheless, M7 performs poorly at both 65 °C and 100 °C, lagging behind the others and highlighting its limited robustness.

In summary, visual inspection confirms that the proposed model M0 achieves the most consistent overall performance. It not only accounts for internal uncertainty within the modeling framework but also incorporates robust estimation techniques to mitigate the effects of external disturbances. As a result, M0 demonstrates superior adaptability and predictive accuracy compared with the baseline models. To further validate these conclusions, a quantitative evaluation is conducted using several widely adopted error metrics, including the root mean square error (RMSE), mean absolute error (MAE), relative average deviation (RAD), and relative standard deviation (RSD). These indices provide a rigorous basis for performance comparison across different stress levels, and the corresponding results are summarized in

Table 6.
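The first two indices have standard definitions, sketched below for a predicted mean path against observed measurements. RAD and RSD are left out because their exact definitions are not stated in this section. The function names and the numbers in the example are illustrative assumptions, not values from the dataset.

```python
import math


def rmse(predicted, observed):
    """Root mean square error between two equally long sequences."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("sequences must be non-empty and of equal length")
    return math.sqrt(
        sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(predicted)
    )


def mae(predicted, observed):
    """Mean absolute error between two equally long sequences."""
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("sequences must be non-empty and of equal length")
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(predicted)


# Hypothetical mean-path predictions and measurements (in % stress relaxation).
predicted = [1.0, 2.0, 3.0]
observed = [1.0, 2.0, 5.0]
print(rmse(predicted, observed), mae(predicted, observed))
```

Because RMSE squares the residuals, it penalizes a few large misfits more heavily than MAE does, so reporting both separates models with occasional large deviations from models with uniformly moderate error.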

Table 6 presents the quantitative results of all models in terms of RMSE, MAE, RAD, and RSD. Overall, the proposed model M0 achieves the best accuracy, with the lowest RMSE (0.8751), MAE (0.6883), and RAD (0.066). These values are substantially smaller than those of the baseline models, confirming that M0 provides the most reliable approximation of the observed degradation behavior. Interestingly, M0 also yields the largest RSD (5.1109), which indicates that it captures a wider spread of variability while maintaining the lowest average errors. This balance suggests that M0 is not only accurate but also capable of representing the inherent uncertainty more comprehensively.

By contrast, models M2, M3, and M4 exhibit the poorest performance, with RMSE values exceeding 4.6 and MAE values above 3.6, as well as large RAD values (0.37–0.44). Although their RSD values are moderately high (3.95–4.96), the high error metrics imply that these models fail to effectively represent the accelerated degradation process. Models M1, M5, M6, and M8 perform better, with intermediate RMSE and MAE values in the range of 2.0–2.5 and RAD values around 0.22–0.24, but they still fall short of M0 in both accuracy and robustness. Model M7 shows accuracy comparable to that of M2–M4 and additionally suffers from instability across stress levels, as indicated by its high errors and relatively large RSD.

In summary, the results demonstrate that M0 consistently outperforms the competing models. While several baselines achieve moderate accuracy (M1, M5, M6, and M8), they either underestimate uncertainty or fail to capture the degradation dynamics under varying stress conditions. M2–M4 and M7, on the other hand, are clearly less reliable due to their high errors. Thus, M0 achieves the most favorable trade-off between accuracy and uncertainty representation, confirming its superiority in accelerated degradation modeling.

To complement the previous evaluation, two commonly used information criteria are applied: the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC) [

35]. The AIC focuses on predictive capability by trading off model fit against the number of parameters, whereas the BIC imposes a stricter penalty on model complexity, especially as the sample size increases, and therefore often selects more parsimonious models to mitigate overfitting. By considering both measures, a balanced assessment of model adequacy can be achieved over the entire stress testing period. The resulting values, along with the mean time to failure (MTTF) estimates, are summarized in

Table 7.
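Both criteria follow from the maximized log-likelihood, the number of free parameters, and the sample size. The sketch below uses the standard definitions, AIC = 2k − 2 ln L and BIC = k ln n − 2 ln L; the numerical inputs are hypothetical and do not correspond to any model in the table.

```python
import math


def aic(log_likelihood, n_params):
    """Akaike Information Criterion: 2k - 2 ln L."""
    return 2.0 * n_params - 2.0 * log_likelihood


def bic(log_likelihood, n_params, n_obs):
    """Bayesian Information Criterion: k ln n - 2 ln L."""
    return n_params * math.log(n_obs) - 2.0 * log_likelihood


# Hypothetical fit: maximized log-likelihood -400 with 5 parameters, 100 observations.
log_l, k, n = -400.0, 5, 100
print(f"AIC = {aic(log_l, k):.2f}, BIC = {bic(log_l, k, n):.2f}")
```

Since ln n exceeds 2 once n is at least 8, the BIC penalty per parameter is larger than that of the AIC for any realistic sample size, which is why the BIC tends to favor more parsimonious models.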

Table 7 reports the AIC, BIC, and MTTF values for all models. Among them, the proposed model M0 achieves the lowest AIC (810.64, with interval [810.3301, 811.0032]) and the lowest BIC (817.09, with interval [816.7816, 817.4547]), demonstrating the best overall trade-off between model fit and complexity. In contrast, several alternative models (e.g., M3, M4, and M7) yield considerably higher AIC and BIC values, suggesting inferior performance due to either poorer fit or over-parameterization. Regarding the mean time to failure (MTTF), M0 provides an estimate of

, which lies within a reasonable range under the accelerated testing framework. Models M5 and M8 report even higher MTTF values (

and

, respectively). Although these results suggest longer predicted lifetimes, their larger AIC and BIC scores imply weaker model adequacy. From a reliability perspective, such elevated MTTF estimates may reflect an overestimation of product durability, leading to overly optimistic reliability predictions. Consequently, despite the longer MTTF reported by M5 and M8, M0 remains the most reliable model, offering both accurate lifetime characterization and the best performance on the model selection criteria.