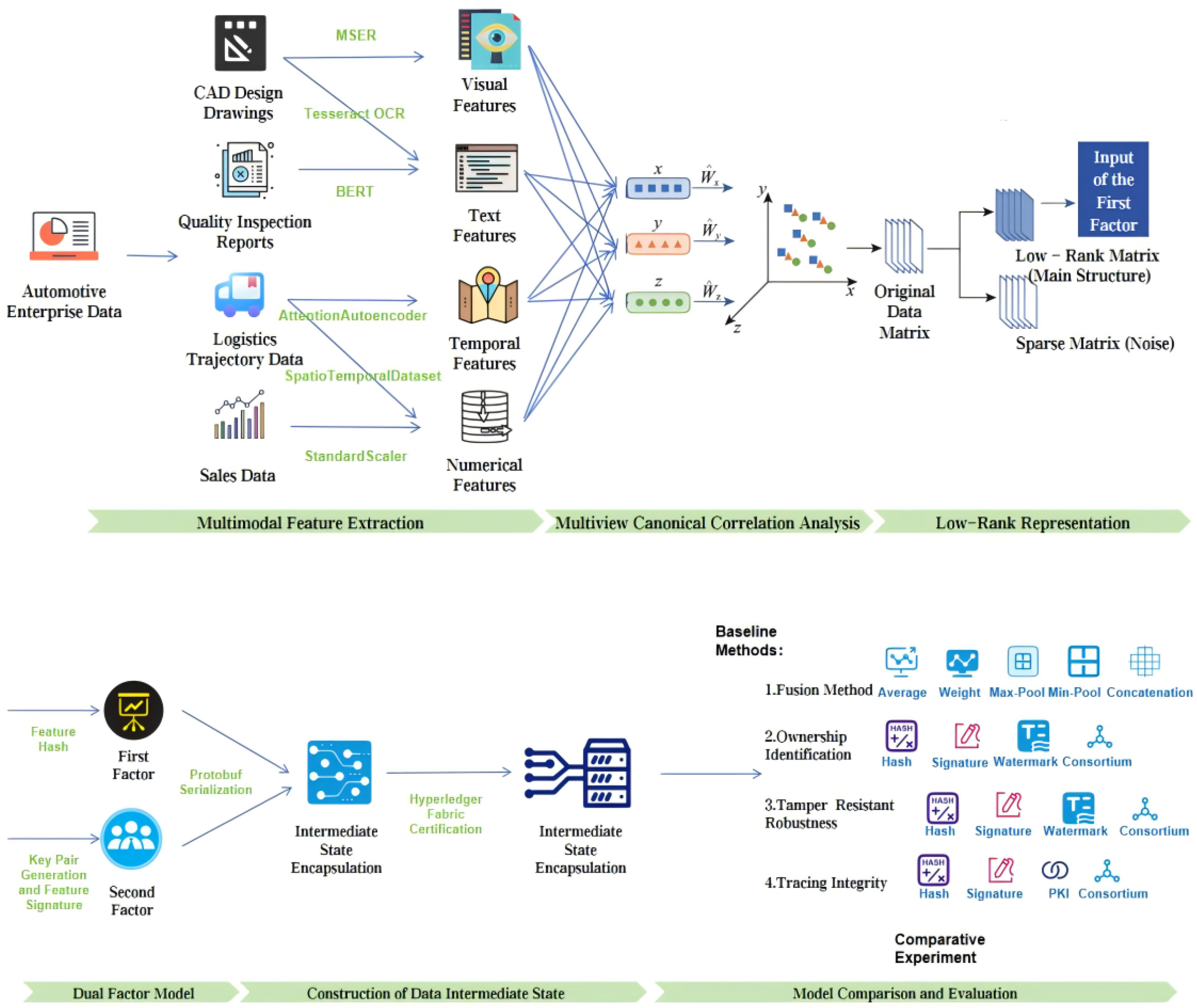

This study aims to address the core issues of “difficulty in defining ownership” and “difficulty in cross-domain tracing” in data circulation, focusing on the following aspects as shows in

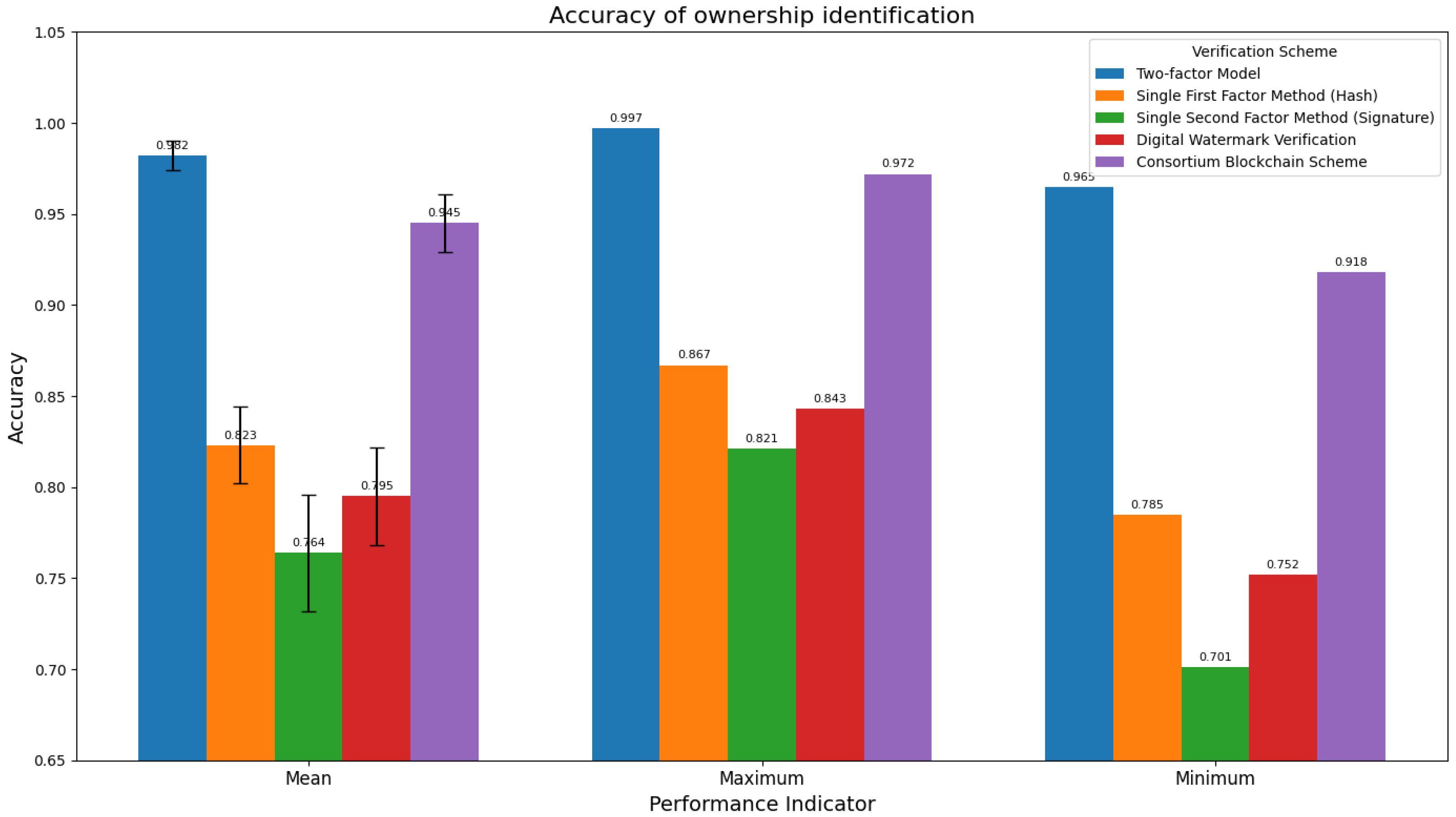

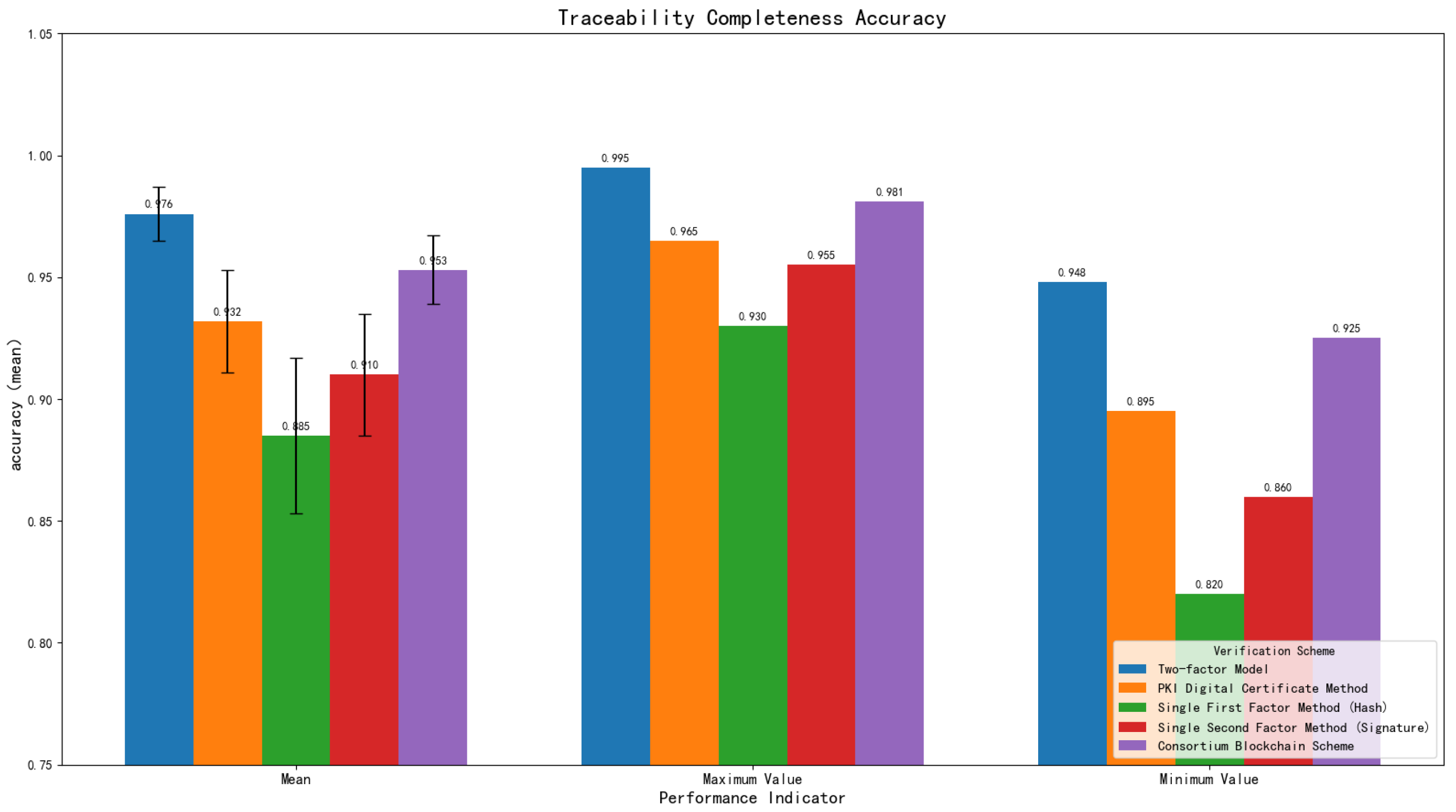

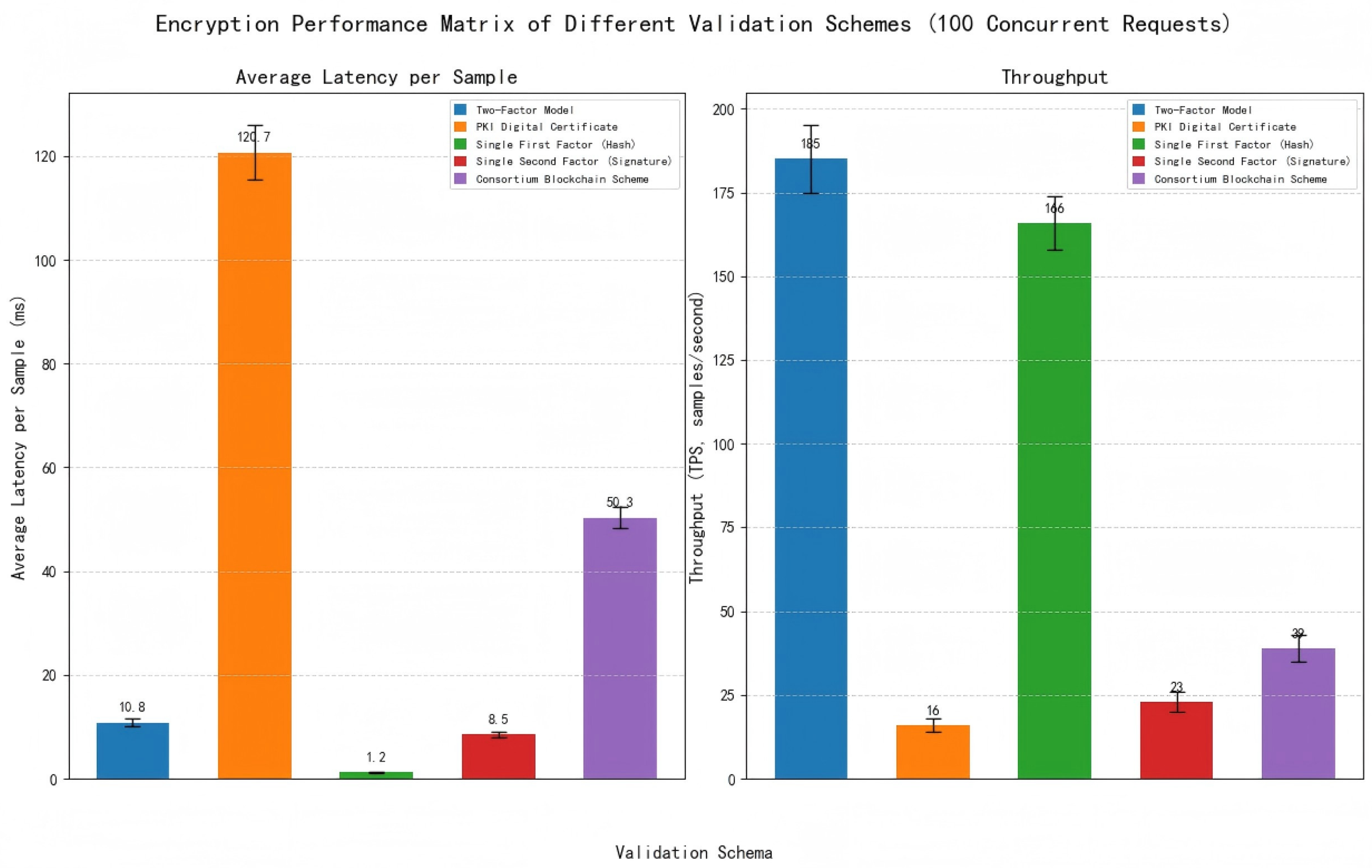

Figure 1: Firstly, it designs a multimodal feature collaborative extraction mechanism, conducts in-depth research on feature extraction methods for heterogeneous modal data such as text, image, numerical, and spatio-temporal data, and constructs an efficient feature fusion strategy to provide a solid data foundation for dual-factor ownership confirmation. Secondly, based on the results of multimodal feature fusion, it builds a dual-factor ownership identification system, designs the generation mechanisms of the first factor (feature hash) and the second factor (digital signature), and forms an ownership confirmation framework with both “content uniqueness” and “identity non-repudiation”. Thirdly, it explores the structured encapsulation method of data intermediate state and designs a collaborative mechanism between alliance chain and IPFS distributed storage to realize data ownership identification and cross-domain tracing. Finally, it carries out empirical research with the automotive supply chain as the application scenario, and verifies the effectiveness and feasibility of the dual-factor model through comparative experiments in modal fusion, ownership identification, tamper resistance, and cross-domain tracing.

2.1. Multimodal Feature Collaborative Extraction

This section focuses on three core sensor data types (image/time-series/text) typical in IoT scenarios, with each feature extraction module corresponding to specific sensor data sources as detailed below:

Text feature extraction (Section 2.1.1): Used for text-type sensor data, e.g., auto-generated quality inspection reports from industrial detection sensors and running logs from IoT terminal devices.

Visual feature extraction (Section 2.1.2): Designed for image-type sensor data, such as CAD drawings generated by 3D scanning sensors and component surface images captured by industrial cameras.

Spatio-temporal/numerical feature extraction (Section 2.1.3 and Section 2.1.4): Applied to time-series sensor data, including GPS trajectory data from location sensors and part tolerance measurement data from industrial dimension gauges.

2.1.1. Text Feature Extraction

Text data—such as quality inspection reports auto-generated by industrial sensors and IoT device logs—are common modalities in data circulation. This study adopts a multi-level text feature extraction method to achieve comprehensive feature representation from lexical, syntactic, to semantic levels.

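First, TF-IDF extracts basic text features. Term Frequency (TF) is a word’s frequency in a document: $\mathrm{TF}(t,d) = n_{t,d} / \sum_{k} n_{k,d}$, where $n_{t,d}$ is word t’s count in document d. Inverse Document Frequency (IDF) reflects a word’s discriminative power: $\mathrm{IDF}(t) = \log\left(N / |\{d : t \in d\}|\right)$, where $N$ is the total number of documents. TF-IDF is their product: $\mathrm{TF\text{-}IDF}(t,d) = \mathrm{TF}(t,d) \times \mathrm{IDF}(t)$.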
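As a minimal sketch of the TF-IDF weighting described above, the following pure-Python function assumes the common unsmoothed definitions (term count divided by document length, and the logarithm of the total number of documents over the number of documents containing the term). The function name `tf_idf`, the whitespace tokenization, and the sample documents are illustrative assumptions, not the study's implementation.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per tokenized document."""
    n_docs = len(docs)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        counts = Counter(doc)
        total = len(doc)
        weights.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical inspection-report snippets, tokenized on whitespace.
docs = [
    "weld seam crack detected".split(),
    "weld seam passed inspection".split(),
    "paint thickness passed inspection".split(),
]
scores = tf_idf(docs)
```

A term that appears in only one report ("crack") receives a higher weight than one shared by several reports ("weld"), which is the discriminative behaviour the IDF factor is meant to provide.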

Meanwhile, the Doc2Vec algorithm is combined to generate document embedding vectors, capturing contextual semantic associations of the text by learning paragraph-level vector representations. The document vector is learned through the following optimization objective: $\max \frac{1}{T}\sum_{t=k}^{T-k}\log p(w_t \mid w_{t-k},\ldots,w_{t+k}, d)$, where $w_t$ is the word vector, $d$ is the document vector, and $p(\cdot)$ is calculated using the softmax function.

Second, the pre-trained language model BERT is used to extract deep semantic features of the text. BERT learns contextual semantic information of the text through a bidirectional Transformer architecture, with its core self-attention mechanism calculated as follows: $\mathrm{Attention}(Q,K,V) = \mathrm{softmax}\left(QK^{\top}/\sqrt{d_k}\right)V$, where $Q$, $K$, and $V$ are the query, key, and value matrices, respectively, and $d_k$ is the feature dimension.
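To make the self-attention computation concrete, the sketch below implements standard scaled dot-product attention (softmax of the scaled query-key products, applied to the values) on plain Python lists. It is a toy illustration only: BERT itself uses learned projections and multiple heads, and the function names here are assumptions.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for row-major matrices (lists of lists)."""
    d_k = len(K[0])
    output = []
    for q in Q:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Weighted sum of the value rows.
        output.append([
            sum(w * v[j] for w, v in zip(weights, V))
            for j in range(len(V[0]))
        ])
    return output
```

With a zero query, every key receives the same weight, so the output is simply the average of the value rows.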

For special text scenarios in the engineering field, a multimodal text processing flow is constructed: for text information in CAD drawings, the MSER algorithm is used for text region detection; for PDF-format quality inspection reports, text content is extracted using the pdfplumber tool. In the implementation, cosine similarity is used to measure the feature consistency between the first and last parts of the document: $\cos(\mathbf{a},\mathbf{b}) = \mathbf{a}\cdot\mathbf{b} / (\|\mathbf{a}\|\,\|\mathbf{b}\|)$. The final feature vector is constructed by concatenating TF-IDF features (dimension m), Doc2Vec vectors (dimension 100), and the similarity value, forming a comprehensive feature representation with a dimension of $m + 101$.
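The concatenation step can be sketched as follows. The helper names and the idea of passing precomputed vectors for the first and last parts of the document are assumptions made for illustration; only the three components (TF-IDF features, a 100-dimensional Doc2Vec vector, and one similarity value) come from the text.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_text_feature(tfidf_vec, doc2vec_vec, head_vec, tail_vec):
    """Concatenate TF-IDF (m dims), Doc2Vec (100 dims) and one similarity value."""
    similarity = cosine_similarity(head_vec, tail_vec)
    return list(tfidf_vec) + list(doc2vec_vec) + [similarity]
```

For a TF-IDF vector of length m = 5, the result has 5 + 100 + 1 = 106 entries.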

2.1.2. Visual Feature Extraction

Visual data (such as component images captured by industrial cameras and CAD drawings derived from 3D scanning sensors) plays a significant role in IoT-driven data circulation. This study integrates traditional computer vision methods with mathematical models to construct a multi-level visual feature extraction framework, enabling comprehensive representation from low-level features to high-level semantics.

In the preprocessing stage, Gaussian blur is used for denoising, which smooths the image through convolution operations to remove high-frequency noise. Its core formula is $G(x,y) = \frac{1}{2\pi\sigma^2}\exp\left(-\frac{x^2+y^2}{2\sigma^2}\right)$. Subsequently, the OTSU adaptive thresholding method is applied for image binarization, which automatically determines the optimal threshold by maximizing inter-class variance: $t^{*} = \arg\max_{t}\sigma_B^2(t)$, where $\sigma_B^2(t) = \omega_0(t)\,\omega_1(t)\,[\mu_0(t) - \mu_1(t)]^2$, with $\omega_0$, $\omega_1$ the proportions and $\mu_0$, $\mu_1$ the mean gray levels of the two classes split at threshold $t$. This converts grayscale images into black-and-white binary images, laying the foundation for subsequent feature extraction.

The feature extraction framework consists of three progressive modules: low-level feature detection, mid-level relationship analysis, and high-level semantic understanding. Low-level feature detection uses traditional methods to build a basic feature space: linear features are detected via probabilistic Hough transform, whose probabilistic model is based on Bayesian decision theory, modeling likelihood through a Gaussian distribution of distances, $p(d \mid \ell) \propto \exp\left(-d^2/(2\sigma_d^2)\right)$.
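The OTSU binarization step in the preprocessing stage can be sketched in pure Python as an exhaustive search for the threshold that maximizes the between-class variance of the gray-level histogram. This is a generic textbook formulation operating on a flat list of integer gray levels; the function names and the tie-breaking rule (the first maximizing threshold wins) are assumptions, and a production pipeline would more likely call an image library.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t maximizing between-class variance (class 0: p <= t)."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    count0, sum0 = 0, 0
    for t in range(levels):
        count0 += hist[t]
        sum0 += t * hist[t]
        if count0 == 0:
            continue  # class 0 still empty
        count1 = total - count0
        if count1 == 0:
            break  # class 1 empty from here on
        mu0 = sum0 / count0
        mu1 = (sum_all - sum0) / count1
        between = (count0 / total) * (count1 / total) * (mu0 - mu1) ** 2
        if between > best_var:
            best_t, best_var = t, between
    return best_t


def binarize(pixels, threshold):
    """Map each gray level to 0 (at or below threshold) or 1 (above)."""
    return [1 if p > threshold else 0 for p in pixels]
```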

Circle detection is optimized using an improved Hough transform, incorporating prior probability of radii to enhance accuracy for hole features in engineering drawings. Mid-level relationship analysis achieves structural understanding by constructing a shape relationship network. The adjacency matrix quantifies relationships between geometric elements using directional cosine similarity: when , ; otherwise, .

Here, $\theta_{ij}$ is the angle between elements i and j, and $d_{ij}$ is the Euclidean distance between them. Spatial relationships are converted into numerical representations via 8-neighborhood analysis. High-level semantic feature extraction adopts a transfer learning strategy, using a pre-trained ResNet architecture to generate 2048-dimensional deep features, which are concatenated with 8-dimensional geometric statistical vectors (e.g., counts of lines/circles, mean and standard deviation of lengths). An attention mechanism is introduced in the fusion stage, with weights calculated via a two-layer neural network to form hybrid features that balance geometric precision and semantic abstraction. To adapt to multimodal fusion, kernel principal component analysis is used to reduce the feature dimension to 50, retaining 92.3% of information, providing structured visual features for subsequent rights confirmation.

2.1.3. Numerical Feature Extraction

Numerical data, primarily from industrial sensors (e.g., dimension gauges and pressure transducers) and IoT meters, are widely present in supply chains (e.g., part tolerance measurements and cargo weight readings). This study constructs a multi-dimensional numerical feature extraction framework that integrates traditional statistical methods with deep learning technologies. The basic statistical feature layer adopts an extended set of statistics, including indicators of central tendency and dispersion such as mean ($\mu$), median, and standard deviation ($\sigma$). It adds skewness ($\gamma_1 = E[(x-\mu)^3]/\sigma^3$) and kurtosis ($\gamma_2 = E[(x-\mu)^4]/\sigma^4$) to describe the data distribution pattern, and enhances the robust measurement of data fluctuation characteristics through interquartile range ($\mathrm{IQR} = Q_3 - Q_1$, where $Q_3$ is the upper quartile and $Q_1$ is the lower quartile) and coefficient of variation ($\mathrm{CV} = \sigma/\mu$).
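The basic statistical feature layer can be illustrated with the standard library alone. The sketch below assumes population (not sample) moments and the default quartile method of `statistics.quantiles`; the source does not state which conventions were used, so both choices are assumptions, as is the function name.

```python
import statistics


def numeric_features(values):
    """Central tendency, dispersion and shape statistics for one numeric series."""
    n = len(values)
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)  # population standard deviation
    q1, _, q3 = statistics.quantiles(values, n=4)
    if sigma > 0:
        skewness = sum((v - mu) ** 3 for v in values) / (n * sigma ** 3)
        kurtosis = sum((v - mu) ** 4 for v in values) / (n * sigma ** 4)
    else:
        skewness = kurtosis = 0.0  # constant series: shape is undefined
    return {
        "mean": mu,
        "median": statistics.median(values),
        "std": sigma,
        "skewness": skewness,
        "kurtosis": kurtosis,
        "iqr": q3 - q1,
        "cv": sigma / mu if mu != 0 else float("nan"),
    }
```

For the symmetric series 1 through 7 this yields a mean of 4, a standard deviation of 2, zero skewness, and an IQR of 4.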

The time-series feature extraction adopts a two-layer architecture: the bottom layer uses sliding window technology to calculate rolling mean ($\bar{x}_t = \frac{1}{w}\sum_{i=0}^{w-1} x_{t-i}$, where w is the window size) and standard deviation for 7-day/4-week cycles, and combines with month-on-month growth rate ($g_t = (x_t - x_{t-1})/x_{t-1}$) to capture short-term fluctuations; the upper layer implements an improved Gated Recurrent Unit (MGU) network, which dynamically integrates entity features such as vehicle ID and dealer ID through the calculation of forget gate ($f_t = \mathrm{sigmoid}(W_f[h_{t-1}, x_t] + b_f)$) and candidate state ($\tilde{h}_t = \tanh(W_h[f_t \odot h_{t-1}, x_t] + b_h)$), generating a 128-dimensional deep time-series feature vector $h_{\mathrm{MGU}}$.
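The bottom layer of this architecture (rolling statistics plus period-over-period growth) can be sketched without any framework. The function names are illustrative, and returning NaN when the previous value is zero is an assumption about how division by zero might be handled.

```python
import math


def rolling_mean(series, w):
    """Mean of each trailing window of size w (first result covers items 0..w-1)."""
    return [sum(series[i - w + 1:i + 1]) / w for i in range(w - 1, len(series))]


def rolling_std(series, w):
    """Population standard deviation of each trailing window of size w."""
    result = []
    for i in range(w - 1, len(series)):
        window = series[i - w + 1:i + 1]
        mean = sum(window) / w
        result.append(math.sqrt(sum((v - mean) ** 2 for v in window) / w))
    return result


def growth_rate(series):
    """Relative change between consecutive values; NaN where the base is zero."""
    return [
        (cur - prev) / prev if prev != 0 else float("nan")
        for prev, cur in zip(series, series[1:])
    ]
```

With daily readings, `rolling_mean(series, 7)` corresponds to the 7-day cycle mentioned above; a 4-week cycle would use the same function on weekly aggregates with `w = 4`.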

The logistics scenario-specific module adopts a spatio-temporal fusion network, constructs spatio-temporal sequences through SpatioTemporalDataset, and uses the PyTorch deep learning framework to extract spatio-temporal correlation features of transportation paths. The model output forms a window-level feature representation after average pooling over the time dimension ($\bar{h} = \frac{1}{T}\sum_{t=1}^{T} h_t$, where T is the number of time steps and $h_t$ is the feature vector at time t).

2.1.4. Spatio-Temporal Feature Extraction

Spatio-temporal data—collected by GPS sensors, in-vehicle IoT devices, and logistics tracking sensors—integrates temporal and spatial information, with key applications in IoT-enabled supply chains and transportation. This study adopts a spatio-temporal joint feature extraction method to achieve the effective representation of spatio-temporal data. In the temporal dimension, a Memory Gated Unit (MGU) is used to construct a recurrent neural network for capturing temporal dependencies.

Multi-scale convolutions (with convolution kernel sizes of 3/5/7) are combined to extract feature patterns at different time granularities. The convolution operation formula is $y_t = \sum_{k=1}^{K} w_k\, x_{t+k-1}$, where K is the convolution kernel size. The model dynamically focuses on key time steps through a self-attention mechanism, with the attention weight calculated as $\alpha_t = \exp(e_t) / \sum_{j}\exp(e_j)$, where $e_t$ is the attention score of time step t. Meanwhile, it fuses the entity embedding features (16-dimensional embedding vector) of vehicles, dealers, and brands to construct a 128-dimensional deep temporal feature vector containing entity information.
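The following toy example shows what running kernels of size 3, 5, and 7 over one series looks like, together with a softmax that turns per-step scores into attention weights. Uniform averaging kernels stand in for the learned convolution weights, and "valid" (no padding) convolution is assumed; both are illustrative choices rather than details from the source.

```python
import math


def conv1d_valid(x, kernel):
    """1-D convolution without padding: output length is len(x) - len(kernel) + 1."""
    size = len(kernel)
    return [
        sum(kernel[k] * x[t + k] for k in range(size))
        for t in range(len(x) - size + 1)
    ]


def multi_scale_features(x, sizes=(3, 5, 7)):
    """Apply one averaging kernel per scale (placeholder for learned weights)."""
    return {size: conv1d_valid(x, [1.0 / size] * size) for size in sizes}


def attention_weights(scores):
    """Softmax over time-step scores, so the weights sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]
```

Larger kernels produce shorter, smoother outputs, which is how the different sizes capture patterns at different time granularities.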

For the spatial dimension, the Euclidean distance between consecutive sampling points is calculated using longitude and latitude coordinates: $d_i = \sqrt{(x_{i+1}-x_i)^2 + (y_{i+1}-y_i)^2}$, where $(x_i, y_i)$ are the coordinates of sampling point i (with a precision of 0.1 km). Combined with the driving direction change rate ($\Delta\theta_i = |\theta_{i+1} - \theta_i|$, with a 5° tolerance), spatial morphological features are constructed. A sliding window technique (window size = 10) is used to extract the spatio-temporal sequences of speed, acceleration, and driving direction. Features are computed per window, forming a multimodal feature matrix containing spatial distance, time difference, and direction change.
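A hedged sketch of the per-step spatial features and the sliding windows is given below. It treats coordinates as planar values, so distances come out in coordinate units; converting to kilometres, the 0.1 km precision, and the 5° tolerance are left out. The tuple layout `(timestamp, lon, lat)` and the function names are assumptions.

```python
import math


def heading_change(h1, h2):
    """Smallest absolute difference between two headings, in degrees (0..180)."""
    diff = abs(h2 - h1) % 360.0
    return min(diff, 360.0 - diff)


def step_features(track):
    """One [distance, time difference, direction change] row per consecutive pair.

    track is a list of (timestamp, lon, lat) tuples.
    """
    rows = []
    prev_heading = None
    for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:]):
        distance = math.hypot(x1 - x0, y1 - y0)
        heading = math.degrees(math.atan2(y1 - y0, x1 - x0))
        change = 0.0 if prev_heading is None else heading_change(prev_heading, heading)
        rows.append([distance, t1 - t0, change])
        prev_heading = heading
    return rows


def sliding_windows(rows, size=10):
    """All contiguous windows of the given size (empty if too few rows)."""
    return [rows[i:i + size] for i in range(len(rows) - size + 1)]
```

Each window returned by `sliding_windows` is a small matrix whose columns are spatial distance, time difference, and direction change, matching the three quantities named in the text.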

In terms of spatio-temporal feature fusion, an autoencoder architecture based on the attention mechanism is designed, with the loss function defined as $\mathcal{L} = \|X - \hat{X}\|_2^2$, where $\hat{X}$ is the reconstruction of the input $X$. The non-linear mapping relationship of spatio-temporal features is learned through the encoding–decoding process. The model compresses the 10 × 15 spatio-temporal feature matrix into a 64-dimensional fusion vector, where the temporal dimension uses mean pooling: $\bar{h} = \frac{1}{10}\sum_{t=1}^{10} h_t$ (time_steps = 10), and the spatial dimension retains coordinate correlation. Finally, the end-to-end feature extraction of logistics trajectory data is achieved, with the output dimension transformation process $\mathbb{R}^{10 \times 15} \rightarrow \mathbb{R}^{64}$.
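The mean pooling over the temporal dimension can be shown in a few lines. The sketch pools a 10 × 15 matrix (10 time steps, 15 features) into a 15-dimensional vector; the subsequent learned mapping to 64 dimensions is part of the autoencoder and is not reproduced here. The function name is an assumption.

```python
def mean_pool_time(matrix):
    """Average a (time_steps x features) matrix over its time axis."""
    time_steps = len(matrix)
    return [sum(column) / time_steps for column in zip(*matrix)]


# Toy input: row t holds the value t in all 15 feature columns.
window = [[float(t)] * 15 for t in range(10)]
pooled = mean_pool_time(window)
```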

2.1.5. Multimodal Feature Fusion

The multimodal feature fusion is realized through a two-level architecture of “multi-view fusion + low-rank representation”, with the specific process as follows.

First, preprocess and align the four core feature views (CAD design features, logistics trajectory features, quality inspection features, and sales data features) by filling missing values with the mean, applying Z-score standardization ($z = (x - \mu)/\sigma$), and unifying the number of samples (minimum 28) to ensure consistent scales for heterogeneous features.

Then, construct a unified feature space using Multiset Canonical Correlation Analysis (MCCA): calculate the covariance matrix of the horizontally concatenated views $X = [X_1, X_2, X_3, X_4]$ as $C = \frac{1}{n-1}X^{\top}X$, perform eigenvalue decomposition $C = V\Lambda V^{\top}$, select the top 50 eigenvectors to form the projection matrix W, map each view to the shared space via $Z_i = X_i W_i$ (where $W_i$ is the block of W belonging to view i), and concatenate them into a 50-dimensional fusion vector.

Next, apply low-rank decomposition to the MCCA output $Z$ with the objective function $\min_{L,E} \|L\|_{*} + \lambda\|E\|_{1}$ (s.t. $Z = L + E$) to obtain a low-rank matrix $L$, retaining the core feature structures, and an error matrix E filtering noise.

Finally, map the low-rank matrix to a standard-dimensional vector (64-256 dimensions) via a fully connected layer, providing a standardized feature interface for blockchain certification.

Key innovations of this architecture include adaptive weighting between modalities via an attention mechanism, a hierarchical strategy of “intramodal fusion → cross-modal global fusion”, maximizing view correlations through CCA ($\max_{w_i, w_j} \mathrm{corr}(X_i w_i, X_j w_j)$), and enhancing feature robustness through low-rank decomposition.
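The preprocessing and alignment step that precedes MCCA can be sketched as follows: missing entries are replaced by the column mean, the column is Z-score standardized, and all views are truncated to the smallest sample count. Representing missing values as `None`, using the population standard deviation, and truncating (rather than resampling) are assumptions made for this illustration.

```python
import math


def impute_and_standardize(column):
    """Fill None with the column mean, then apply Z-score standardization."""
    observed = [v for v in column if v is not None]
    if not observed:
        raise ValueError("column has no observed values")
    mu = sum(observed) / len(observed)
    filled = [mu if v is None else v for v in column]
    # Imputing with the mean leaves the mean unchanged.
    sigma = math.sqrt(sum((v - mu) ** 2 for v in filled) / len(filled))
    if sigma == 0:
        return [0.0] * len(filled)
    return [(v - mu) / sigma for v in filled]


def align_views(views):
    """Truncate every view to the smallest number of samples among them."""
    n = min(len(rows) for rows in views.values())
    return {name: rows[:n] for name, rows in views.items()}
```

After this step every view has the same number of rows and zero-mean, unit-variance columns, which is what a correlation-based method such as MCCA expects as input.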

2.2. Dual-Factor Ownership Identification System

2.2.1. First Factor: Multimodal Feature Hash Generation

As the “digital fingerprint” of data content, the first factor realizes the fusion and hash generation of sensor-derived multimodal features, with a lightweight processing flow (adapted to IoT edge devices) through three stages: feature standardization → multi-view fusion → dimensionality reduction and hashing. This process can unify heterogeneous feature spaces into fixed-length hash vectors while maintaining the uniqueness and immutability of data content.

The multimodal feature fusion adopts a three-level progressive architecture: first, it integrates CAD design data through weighted adaptive fusion (30% for geometric features, 20% for text features, and 50% for semantic features), and reduces the dimensionality to 50 via PCA; second, it uses an attention mechanism for fusion, dynamically learning weight allocation through a three-layer neural network, where semantic features obtain the highest attention weight (0.62 ± 0.08); finally, it maps heterogeneous features such as logistics, sales, and quality data to a shared subspace through multi-view CCA (MCCA) fusion. The mathematical expression of the fusion process is $F = \mathrm{MCCA}\left(W_g F_g \oplus W_t F_t \oplus W_s F_s,\ F_{\mathrm{logistics}},\ F_{\mathrm{sales}},\ F_{\mathrm{quality}}\right)$, where $W_g$, $W_t$, and $W_s$ represent the dynamic weight matrices of geometric, text, and semantic features respectively, ⊕ denotes the feature concatenation operation, and MCCA is the multi-view canonical correlation analysis operator.

In the dimensionality reduction and hash generation stage, the fused high-dimensional features (with dimension $d$) are reduced to 100 dimensions using PCA, with a variance explanation rate of 92.3%; the SHA-256 algorithm is used to convert the real-valued feature vector into a 256-bit hash value, and the implementation process is $H = \mathrm{SHA256}(\mathrm{PCA}_{100}(F))$.

In the experimental automotive supply chain scenario, the system first processes CAD drawings (mainly geometric features), quality inspection reports (mainly text features), logistics trajectories (mainly spatio-temporal features), and sales data (mainly numerical and spatio-temporal features), respectively, to generate feature hashes for each modality; then it generates a comprehensive feature vector through MCCA fusion; finally, it generates a 128-byte first factor through PCA dimensionality reduction and SHA-256 hashing.
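The hashing stage can be illustrated with `hashlib`. The sketch rounds each component before serializing it as a big-endian double so that tiny floating-point noise does not change the digest; that rounding, the byte layout, and the function names are assumptions. Concatenating four 32-byte per-modality digests is one way to arrive at a 128-byte value, but the source does not spell out how its 128-byte first factor is laid out.

```python
import hashlib
import struct


def feature_hash(vector, decimals=6):
    """SHA-256 digest (32 bytes) of a real-valued feature vector."""
    payload = b"".join(
        # "+ 0.0" turns a rounded -0.0 into 0.0 so both serialize identically.
        struct.pack(">d", round(v, decimals) + 0.0)
        for v in vector
    )
    return hashlib.sha256(payload).digest()


def first_factor(modal_vectors):
    """Concatenate per-modality digests in a fixed (sorted-by-name) order."""
    return b"".join(
        feature_hash(modal_vectors[name]) for name in sorted(modal_vectors)
    )


# Hypothetical reduced feature vectors for the four modalities.
modalities = {
    "cad": [0.12, -0.40, 0.88],
    "quality": [0.05, 0.31],
    "logistics": [1.50, -0.20, 0.00],
    "sales": [0.70],
}
factor = first_factor(modalities)
```

Changing any single component beyond the rounding precision yields a completely different digest, which is the property the first factor relies on for tamper detection.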

2.2.2. Second Factor: Digital Signature

The second factor, a “cryptographic proof” of both owner and IoT device identity, binds ownership to sensor nodes via ECDSA signatures, linking private keys to hardware identifiers of industrial cameras, GPS modules, and RFID readers. This study adopts ECDSA (Elliptic Curve Digital Signature Algorithm) as the core signature algorithm, implementing a combined scheme based on the P-256 elliptic curve (NIST secp256r1) and the SHA-256 hash algorithm.

Key pair generation proceeds as follows: a 256-bit private key is generated by a cryptographically secure pseudo-random number generator, and the corresponding public key is calculated via elliptic curve point multiplication.

Signature generation involves two-level processing: hash preprocessing, which normalizes and encodes the hash value of the first factor to ensure the input data format complies with the ANSI X9.62 standard, and elliptic curve signing, which performs ECDSA signing on the preprocessed hash value using the private key to generate the (r, s) pair, with Low-S value conversion applied to prevent signature malleability attacks. The mathematical representation of the second factor is $S = \mathrm{ECDSA\_Sign}(SK, H)$, where SK denotes the 256-bit ECDSA private key, H is the SHA-256 hash value of the first factor, and the signature result S carries the r and s components (64 bytes as raw r‖s; the ASN.1 DER encoding adds a few bytes of framing).

The verification phase employs a three-step verification framework: first, parsing the signature to obtain the (r, s) components and verifying their validity; second, reconstructing the signature equation using the signer’s public key and the SHA-256 hash algorithm; and finally, confirming whether $r \equiv x_R \pmod{n}$ holds through elliptic curve point verification, where $R = u_1 G + u_2 PK$, $u_1 = H s^{-1} \bmod n$, $u_2 = r s^{-1} \bmod n$, PK is the signer’s public key, G is the curve base point, and n is the group order.
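The Low-S conversion mentioned above is small enough to show directly. For any valid ECDSA signature (r, s), the pair (r, n − s) is also valid for the same message, so normalizing s to the lower half of the range removes that ambiguity. The sketch uses the published group order of P-256 and a fixed-width 64-byte r‖s encoding; the function names are assumptions, and actual signing would be delegated to a vetted cryptographic library rather than implemented by hand.

```python
# Order n of the NIST P-256 (secp256r1) base point.
P256_N = 0xFFFFFFFF00000000FFFFFFFFFFFFFFFFBCE6FAADA7179E84F3B9CAC2FC632551


def to_low_s(r, s):
    """Return (r, s) with s normalized into the lower half of [1, n-1]."""
    if not (1 <= r < P256_N and 1 <= s < P256_N):
        raise ValueError("r and s must lie in [1, n-1]")
    if s > P256_N // 2:
        s = P256_N - s
    return r, s


def encode_raw(r, s):
    """Fixed-width big-endian r || s encoding (32 + 32 = 64 bytes)."""
    return r.to_bytes(32, "big") + s.to_bytes(32, "big")


def decode_raw(blob):
    """Inverse of encode_raw; rejects inputs that are not exactly 64 bytes."""
    if len(blob) != 64:
        raise ValueError("expected 64 bytes")
    return int.from_bytes(blob[:32], "big"), int.from_bytes(blob[32:], "big")
```

A verifier that only accepts low-S signatures can reject any blob for which `to_low_s(*decode_raw(blob))` differs from the decoded pair.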

2.3. Design of Intermediate State Data Model

The data intermediate state serves as a critical hub linking raw data and blockchain certification, achieving the standardized organization of multimodal features, ownership metadata, and inter-modal associations through structured encapsulation. Its core design principles encompass semantic integrity, storage efficiency, traceability, security authentication, and distributed scalability. Semantic integrity is maintained by preserving core features of multimodal data, with a feature structure recording names and hash values of each modal feature to ensure reconstructable original semantics during cross-domain verification. Storage efficiency is optimized via Protobuf binary serialization, while traceability is enabled by the timestamp-based recording of data generation time and department-level ownership tracking through a dedicated field, supporting full-lifecycle auditing. Security authentication is ensured through the inclusion of public_key and base64-encoded signature fields in each feature entry, verifying data sources and preventing tampering. Distributed scalability is achieved by integrating an ipfs_hash field to associate with the IPFS distributed storage system, enabling off-chain anchoring and efficient access to large-scale raw data.

For cross-domain scenarios, the intermediate state model supports two core capabilities:

1. Cross-domain Metadata Synchronization: The feature_hashes (first factor) and signature (second factor) in the intermediate state are synchronized across consortium chain nodes of different domains (e.g., automotive enterprise domain, supplier domain, and logistics domain) through a lightweight consensus mechanism (a simplified version of PBFT), ensuring consistent ownership identification across domains.

2. Cross-domain Data Addressing: The ipfs_hash enables cross-domain nodes to directly retrieve original sensor data from the IPFS network without transferring full data across domains, avoiding latency caused by cross-domain data transmission. This solves the “traceability chain breakage” problem mentioned in Section 1.3.

The core structure of the intermediate state data model is defined with IntermediateState as the top-level container, encompassing timestamp, version, features, and ipfs_hash, while Feature functions as a feature entry containing the name, hash (first factor), department, public_key, and signature (second factor). This model employs pure Protobuf for structured definition and binary serialization, implementing data assembly logic through strict structural constraints and ultimately generating binary files as the core carrier for blockchain certification. For hierarchical storage, a rigorous on-chain/off-chain separation architecture is adopted: core metadata—including version, timestamp, feature_hashes, and ipfs_hash—is stored on-chain, whereas original multimodal files (e.g., CAD drawings and quality inspection reports) are stored via the IPFS network, with only tamper-proof IPFS content-addressed hashes retained on-chain.
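The structure described above can be mirrored with Python dataclasses to show how the fields relate and which of them end up on-chain. This is only a stand-in: the source defines the model in Protobuf, whereas the sketch uses JSON for serialization, and the field types (integer timestamp, string hashes and keys) and method names are assumptions.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class Feature:
    name: str
    hash: str          # first factor (feature hash), hex-encoded here
    department: str
    public_key: str
    signature: str     # second factor, base64-encoded


@dataclass
class IntermediateState:
    timestamp: int
    version: str
    ipfs_hash: str
    features: List[Feature] = field(default_factory=list)

    def on_chain_metadata(self):
        """Core metadata kept on-chain; raw files stay in IPFS."""
        return {
            "version": self.version,
            "timestamp": self.timestamp,
            "feature_hashes": [f.hash for f in self.features],
            "ipfs_hash": self.ipfs_hash,
        }

    def to_bytes(self):
        """JSON stand-in for the Protobuf binary serialization."""
        return json.dumps(asdict(self), sort_keys=True).encode("utf-8")
```

Keeping `on_chain_metadata` separate from the full record reflects the on-chain/off-chain split: only hashes and addressing information are certified on the chain, while the original files are referenced through `ipfs_hash`.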