1. Introduction

The proliferation of the Internet of Things (IoT) has led to massive deployments of heterogeneous sensors generating continuous and high-volume time-series data, enabling applications in domains such as smart cities, healthcare, and industrial automation. However, traditional cloud-based signal processing approaches for anomaly detection face challenges related to latency, bandwidth consumption, scalability, and privacy [

1,

2]. These limitations highlight the need for more efficient solutions that can process data locally and closer to the source. Edge computing, combined with lightweight signal processing and machine learning models, has therefore emerged as a promising paradigm to address these issues while maintaining real-time performance and energy efficiency.

Recent studies have focused on anomaly detection in IoT settings using machine learning and hybrid models. For example, Waldhauser et al. [

3] propose a Wavelet-based anomaly detection framework suitable for embedded systems, achieving high detection rates with low false alarm rates. Eunchan Kim [

4] developed TinyCES, a TinyML-based ECG classification framework implemented directly on a microcontroller unit (MCU) to enable real-time, on-device cardiac anomaly detection with approximately 97% accuracy and minimal network or memory usage. In smart city/network security contexts, Reis [

5] employ hybrid deep learning architectures (autoencoders, LSTM, CNN) combined with federated learning, capturing spatial, temporal, and reconstruction-based anomalies in IoT network behavior with high precision and scalability.

While these works show considerable progress, there remains a gap in integrating classical signal processing (e.g., Wavelet or Fourier feature extraction), lightweight edge models, and careful analysis of trade-offs among detection accuracy, computational cost (latency, memory), and energy consumption. The present work aims to fill this gap by proposing a framework combining lightweight signal processing transforms with edge-deployed machine learning models for real-time anomaly detection in IoT sensor networks. We explore how local feature extraction plus efficient classification can reduce communication overhead, latency, and energy consumption without sacrificing detection performance.

Despite the progress of deep learning for anomaly detection, many existing approaches remain unsuitable for low-power IoT devices due to their large memory requirements, high latency, and dependence on cloud connectivity. These limitations hinder real-time operation and increase communication overhead, which is critical in safety or mission-oriented applications. Consequently, there is a strong need for lightweight signal processing and machine learning techniques that can operate locally while maintaining acceptable detection accuracy and robustness.

The main contributions of this work can be summarized as follows:

- (i)

Proposing a hybrid signal processing and edge AI framework for anomaly detection in IoT sensor networks, combining Fourier and Wavelet transforms with lightweight machine learning models.

- (ii)

Conducting a comprehensive evaluation covering detection accuracy, computational efficiency, latency, communication overhead, sensitivity, robustness, and ablation of pipeline components.

- (iii)

Demonstrating the impact of feature extraction and quantization on performance–efficiency trade-offs through systematic ablation studies.

- (iv)

Providing detailed analysis and discussion of design choices relevant to real-world, resource-constrained IoT deployments.

For convenience, all acronyms and abbreviations used throughout this paper are listed in the Abbreviations section provided at the end of the manuscript.

The remainder of this paper is organized as follows:

Section 2 reviews the relevant literature;

Section 3 describes the proposed signal processing + Edge AI framework;

Section 4 details the simulation setup and results;

Section 6 concludes and outlines future directions.

2. Related Work

In this section, we review state-of-the-art research relevant to anomaly detection in IoT and sensor networks, edge computing approaches, and lightweight machine learning models. We highlight four main themes: surveys of anomaly detection methods, edge AI solutions for latency and energy efficiency, hybrid models combining signal processing and machine learning, and trade-offs among accuracy, cost, and deployment constraints.

2.1. Surveys on Anomaly Detection in IoT

Several recent surveys provide a comprehensive overview of anomaly detection methods for IoT. Chatterjee and Ahmed [

1] provided a comprehensive survey of IoT anomaly detection methods and applications, analyzing 64 studies published between 2019 and 2021. Their review categorized anomaly detection algorithms by methodology (statistical, geometric, and machine learning), by latency (online/offline), and by application domain, highlighting the growing relevance of deep and edge-intelligent models for distributed IoT systems. Earlier, Dubey et al. [

6] presented a broad survey of anomaly detection techniques in IoT, highlighting the challenges of scalability, heterogeneity, and real-time constraints. These works emphasize the need for methods that are both accurate and resource-efficient, motivating the adoption of edge computing.

2.2. Edge AI Approaches

A growing number of works propose edge-deployed solutions to address latency, privacy, and energy issues. Guo et al. [

7] introduced EGNN, an energy-efficient graph neural network for multivariate IoT anomaly detection, exploiting correlations between sensors to improve detection accuracy under constrained resources. In the healthcare domain, Eunchan Kim [

4] introduced a TinyML-based embedded ECG monitoring system (TinyCES) that performs classification locally on a low-power MCU prototype (Arduino Nano 33 BLE Sense). The approach achieved ≈97% accuracy on MIT-BIH and PTB diagnostic databases, while reducing communication bandwidth and memory usage by more than 96%, thereby demonstrating real-time feasibility for wearable health monitoring. Similarly, Truong et al. [

8] proposed a lightweight federated learning scheme for anomaly detection in IoT networks, showing that distributing computation to the edge reduces communication costs while preserving privacy. More recently, Zhang et al. [

9] proposed a Stackelberg game-based multi-agent algorithm for resource allocation and task offloading in multi-access edge computing (MEC)-enabled cooperative intelligent transportation systems (C-ITSs). Although not directly focused on anomaly detection, this study highlights the importance of resource optimization and adaptive task scheduling for achieving low-latency, energy-efficient processing at the edge—objectives that are also central to our proposed framework.

2.3. Hybrid Models Combining Signal Processing and ML

Hybrid approaches leverage classical signal processing to reduce data dimensionality before applying machine learning models. Liu et al. [

10] proposed a lightweight mechanical fault diagnosis framework combining data augmentation via a Generative Convolutional GAN (GCGAN) and a hybrid MDSCNN-ICA-BiGRU network. The approach achieved near-100% accuracy on both laboratory and benchmark bearing datasets while reducing computational cost by approximately 70%, demonstrating robust generalization under noisy and resource-constrained conditions. Zonzini et al. [

11] demonstrated that integrating compressed sensing with lightweight machine learning models in vibration-based monitoring can achieve high accuracy (>96%) while reducing communication and storage demands, whereas Forough [

12] discussed how anomaly detection in edge cloud environments benefits from machine learning techniques tailored for resource constraints and adaptive deployments. In agricultural IoT, Dembski et al. [

13] evaluated autoencoders and U-Net models for anomaly segmentation of soil sensor data on constrained devices, demonstrating how preprocessing can improve performance under limited resources.

2.4. Trade-Offs and Open Challenges

Despite these advances, several open challenges remain. First, many methods prioritize accuracy but neglect systematic evaluation of latency, memory footprint, and energy consumption. For example, Katib et al. [

14] developed a TinyML-based predictive maintenance framework but only partially reported latency and power results. Second, datasets are often narrow or domain-specific, limiting generalization to heterogeneous IoT environments. Finally, Khatoon et al. [

15] showed that even demanding tasks such as road anomaly detection from aerial imagery can be run on edge devices with pruning and quantization, but further analysis is needed to understand long-term deployment trade-offs.

Table 1 summarizes representative recent studies on anomaly detection in IoT using edge AI and signal processing approaches, highlighting application domains, methods, devices, and reported performance. As can be concluded, while substantial progress has been made, there remains a clear gap in integrating lightweight signal processing with edge machine learning in a unified framework that explicitly quantifies trade-offs among detection accuracy, computational efficiency, communication overhead, and energy consumption. The present work seeks to address this gap.

Table 1 has been updated to include all relevant works discussed in

Section 2, ensuring consistency between the textual review and the summarized comparison. The last row summarizes the proposed framework, highlighting its novelty relative to prior studies.

3. Methodology

This section details the proposed framework for anomaly detection in IoT sensor networks using lightweight signal processing combined with edge-deployed machine learning models. The methodology is structured into five main stages: (i) data acquisition and preprocessing, (ii) feature extraction via classical signal transforms, (iii) lightweight classification models, (iv) data flow in the IoT–edge–cloud hierarchy, and (v) performance metrics.

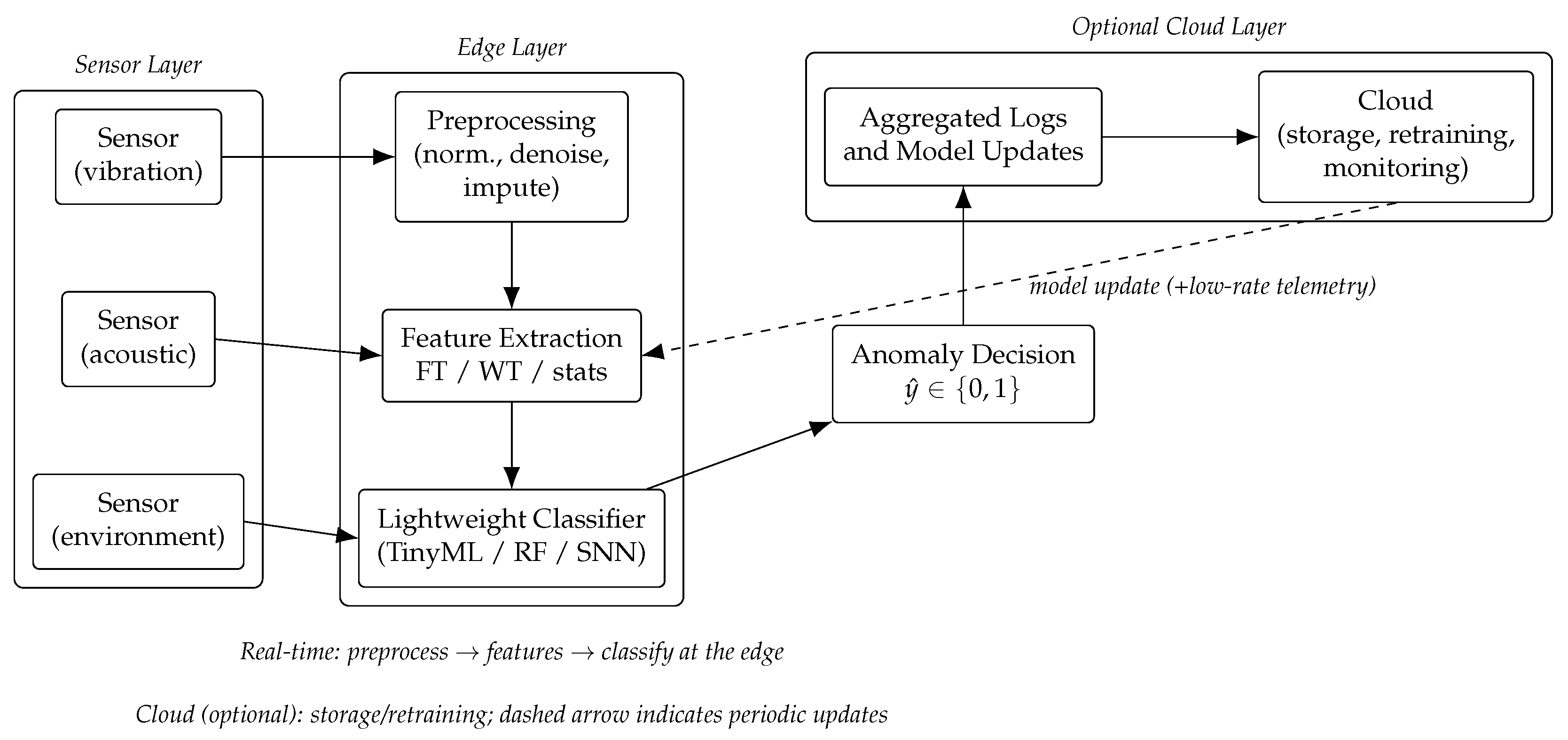

Figure 1 illustrates the pipeline.

3.1. Data Acquisition and Preprocessing

IoT sensors typically generate multivariate time-series data that can be corrupted by noise, missing samples, or environmental drift. To improve reliability, preprocessing steps include the following:

Formally, if

denotes the raw sequence, the normalized signal is

where

and

are the mean and standard deviation of

.

3.2. Feature Extraction via Signal Transforms

Efficient features are required to reduce data dimensionality while retaining anomaly-relevant information. We consider three families of features:

3.2.1. Fourier Transform Features

The discrete Fourier transform (DFT) provides global frequency-domain representation:

Magnitude spectra highlight periodic patterns, while anomalies often manifest as irregular frequency components.

3.2.2. Wavelet Transform Features

The discrete Wavelet transform (DWT) decomposes a signal into multi-resolution approximation and detail components:

where

are scaling functions and

Wavelet functions at scales

j and positions

k. In practice, the DWT is implemented via filter banks:

where

and

are low-pass and high-pass filters, respectively. This filter-bank approach is efficient and well suited for embedded platforms.

3.2.3. Statistical and Entropy-Based Features

From the transformed coefficients, we derive statistical descriptors such as mean, variance, skewness, kurtosis, and Shannon entropy:

where

is the normalized energy of coefficient

i. These features capture distributional properties sensitive to anomalous deviations [

3].

3.3. Lightweight Classification Models

Figure 2 illustrates the simplified architectures of the three lightweight classifiers considered: Decision Tree (DT), Shallow Neural Network (SNN), and Quantized TinyML model. DTs provide interpretable decision boundaries, SNNs balance accuracy and model size through a single hidden layer, and TinyML models employ post-training quantization for deployment on microcontrollers. Each architecture was implemented with minimal parameter counts to fit within typical MCU memory constraints.

Extracted features are fed to a lightweight classifier optimized for edge devices. Candidate models include the following.

TinyML Models: implementing anomaly detection directly on microcontrollers, with reported inference latency, energy use, and memory footprint [

18,

19];

Lightweight Neural Architectures and Model Compression: exploring shallow networks, quantization, pruning, or efficient CNN variants designed for deployability on constrained edge hardware [

20,

21];

Decision Tree or Ensemble Methods: simpler models like tree-based classifiers which often provide interpretability and low latency, especially in TinyML contexts (reports in [

18,

22]).

For binary anomaly detection, a classifier outputs

where

is the feature vector,

the classifier score function, and

a decision threshold.

3.4. Data Flow in IoT–Edge–Cloud Hierarchy

The framework is designed with a hierarchical architecture.

Sensor layer: raw data acquisition at IoT devices.

Edge layer: local preprocessing, feature extraction, and lightweight anomaly detection. This reduces latency and bandwidth and ensures partial autonomy even without cloud connectivity.

Cloud layer (optional): storage of aggregated results, long-term model retraining, and global monitoring dashboards.

This design ensures that critical anomaly detection decisions are made close to the source, while computationally intensive tasks such as retraining or global optimization can be offloaded to the cloud.

3.5. Performance Metrics

To evaluate the framework, we consider both detection performance and computational efficiency. Standard classification metrics include the following:

where

,

,

, and

are true positives, true negatives, false positives, and false negatives.

Efficiency metrics include the following.

Inference latency: average time to process one input sample at the edge;

Memory footprint: peak RAM/Flash usage of the deployed model;

Energy consumption: average energy per inference (measured in mJ);

Communication overhead: data transmitted to the cloud per unit time.

These metrics allow systematic trade-off analysis between detection accuracy and resource consumption.

3.6. Proposed Framework Integration

The complete framework consists of raw signals → preprocessing → transform-based feature extraction → lightweight classification at the edge → anomaly decision, with optional cloud support for retraining. This modular design allows adaptation to multiple IoT domains such as vibration monitoring, environmental sensing, or healthcare signals.

Figure 3 provides an overview of the proposed framework, illustrating all main components: (1) sensor data acquisition, (2) preprocessing and filtering, (3) signal transformation (Fourier/Wavelet), (4) feature extraction, (5) classification using lightweight ML models, and (6) anomaly decision and communication to the cloud if necessary.

4. Simulations and Experimental Setup

This section describes the design of the experimental environment used to evaluate the proposed framework. We detail the synthetic dataset generation process, the hardware/software platform assumptions for edge deployment, the configuration of learning models, and the evaluation methodology.

4.1. Synthetic Dataset Generation

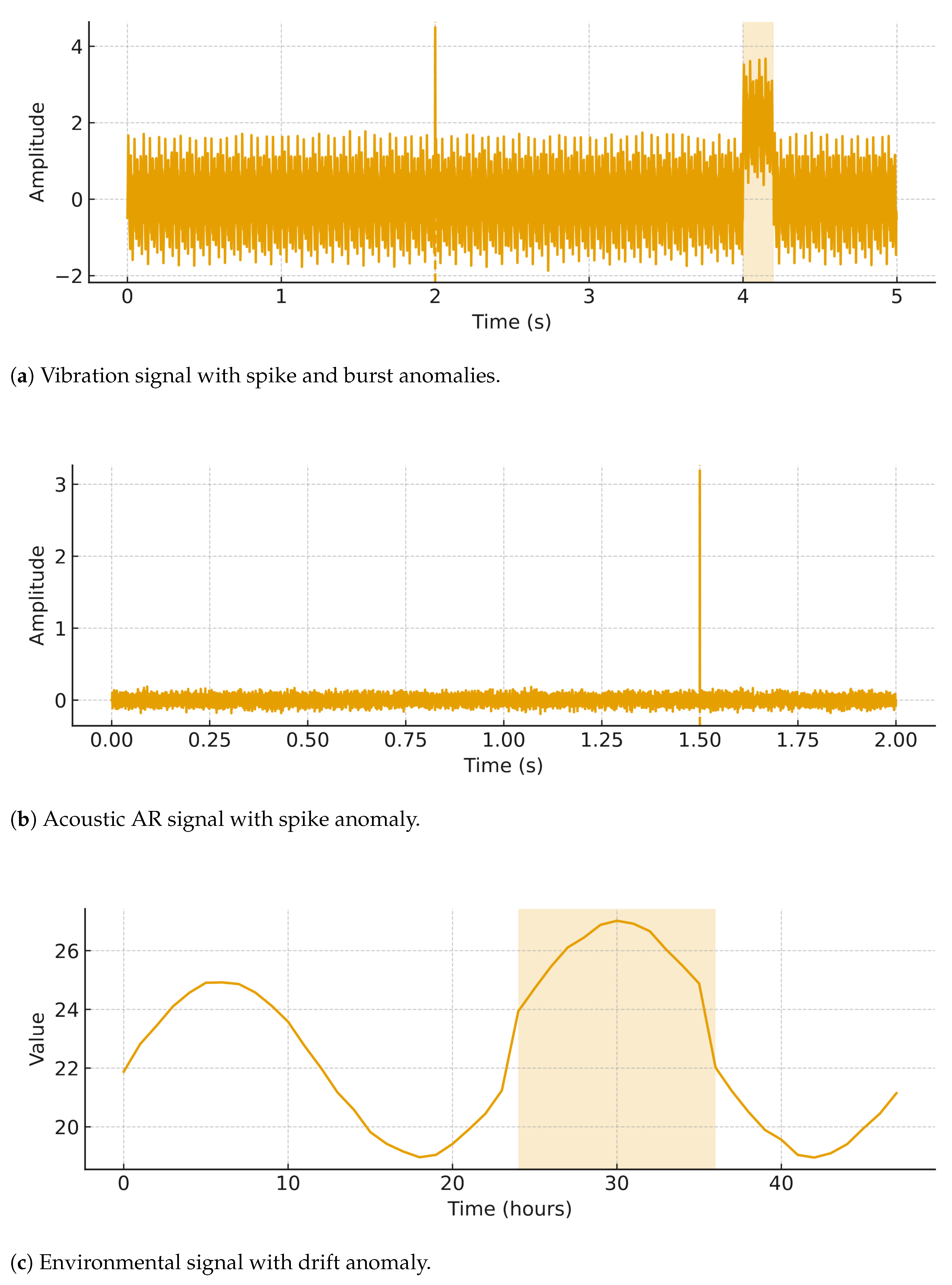

To ensure reproducibility and flexibility, we generated synthetic multivariate time-series signals representing three common sensor modalities in IoT systems:

Vibration signals (Hz scale, sampled at Hz),

Acoustic signals (speech/noise-like, sampled at kHz),

Environmental signals (temperature, humidity, sampled at Hz).

For each modality, a “normal” baseline signal was generated and subsequently corrupted with additive Gaussian noise

:

The baseline signals are defined as follows:

4.1.1. Vibration Signals

These are modeled as a superposition of sinusoidal components with random phases:

with frequencies

Hz and amplitudes

.

4.1.2. Acoustic Signals

These are simulated using an autoregressive (AR) process to reproduce stochastic, noise-like dynamics:

where

,

are AR coefficients, and

.

4.1.3. Environmental Signals

These are represented as slow-varying daily cycles with Gaussian perturbations:

where

is the baseline value,

the diurnal amplitude, and

small random noise.

4.1.4. Anomaly Injection

To emulate abnormal behaviors, two categories of anomalies are injected:

Point anomalies (spikes):

where

is the spike magnitude at index

.

Contextual anomalies (bursts or drifts):

where

is the shift amplitude and

the anomaly duration.

4.1.5. Dataset Partitioning

The dataset was divided into training (70%), validation (15%), and test (15%) sets. Anomalies were injected exclusively into validation and test sets to avoid data leakage during model training.

4.2. Hardware and Software Setup

The edge deployment scenario was simulated using a representative low-power platform:

Edge hardware: Raspberry Pi 4 Model B (1.5 GHz CPU, 4 GB RAM) (Raspberry Pi Ltd., Cambridge, UK) used as a proxy for microcontroller-class devices. Additional profiling experiments were carried out on an STM32 Nucleo board (STMicroelectronics, Geneva, Switzerland) to estimate power consumption.

Software: Python 3.11 (Python Software Foundation, Wilmington, DE, USA) with NumPy (NumPy Developers/NumFOCUS, Austin, TX, USA), SciPy (NumFOCUS, Austin, TX, USA), and PyWavelets (open-source) for preprocessing and feature extraction; scikit-learn (Inria/Scikit-learn community, Paris, France) and TensorFlow Lite (Google LLC, Mountain View, CA, USA) for model training and edge inference. Power measurements on STM32 were obtained via STM32CubeMonitor-Power.

4.3. Model Configuration and Training

We evaluated three classes of models:

Shallow Neural Networks (SNN): one hidden layer with 32 neurons, ReLU activation, trained using Adam () for 50 epochs with batch size 64.

Decision Trees (DT): maximum depth 6, Gini impurity as splitting criterion.

Quantized TinyML Models: SNN converted to 8-bit integer format with TensorFlow Lite quantization.

Hyperparameters were selected via grid search on the validation set. All models were trained on a workstation (Intel i7, 32 GB RAM) and then deployed to the edge platform for inference benchmarking.

4.4. Evaluation Methodology

We considered two categories of metrics.

As baselines, we compared the proposed edge-deployed pipeline against the following:

This comparison highlights the trade-offs among accuracy, latency, and resource usage, illustrating the benefits of local feature extraction and lightweight inference.

Table 2 summarizes the key characteristics of the synthetic dataset, including sensor modalities, sampling rates, signal models, and anomaly types.

Table 3 summarizes the hardware and software stack used for edge deployment, training, and measurements.

5. Results and Discussion

This section presents and analyzes the results obtained from the synthetic dataset experiments described in

Section 4. We evaluate both anomaly detection performance and computational efficiency, and we further study latency, communication overhead, sensitivity to anomaly characteristics, robustness to noise/missing data, and ablation of pipeline components.

For clarity, this section is organized as follows: detection performance (

Section 5.1), threshold analysis via ROC/PR (

Section 5.2), computational efficiency (

Section 5.3), latency and communication overhead (

Section 5.4), sensitivity to anomaly characteristics (

Section 5.5), robustness to noise and missing data (

Section 5.6), and ablation studies (

Section 5.7). Additional model complexity and qualitative examples are presented in

Section 5.8, followed by a practical discussion in

Section 5.9.

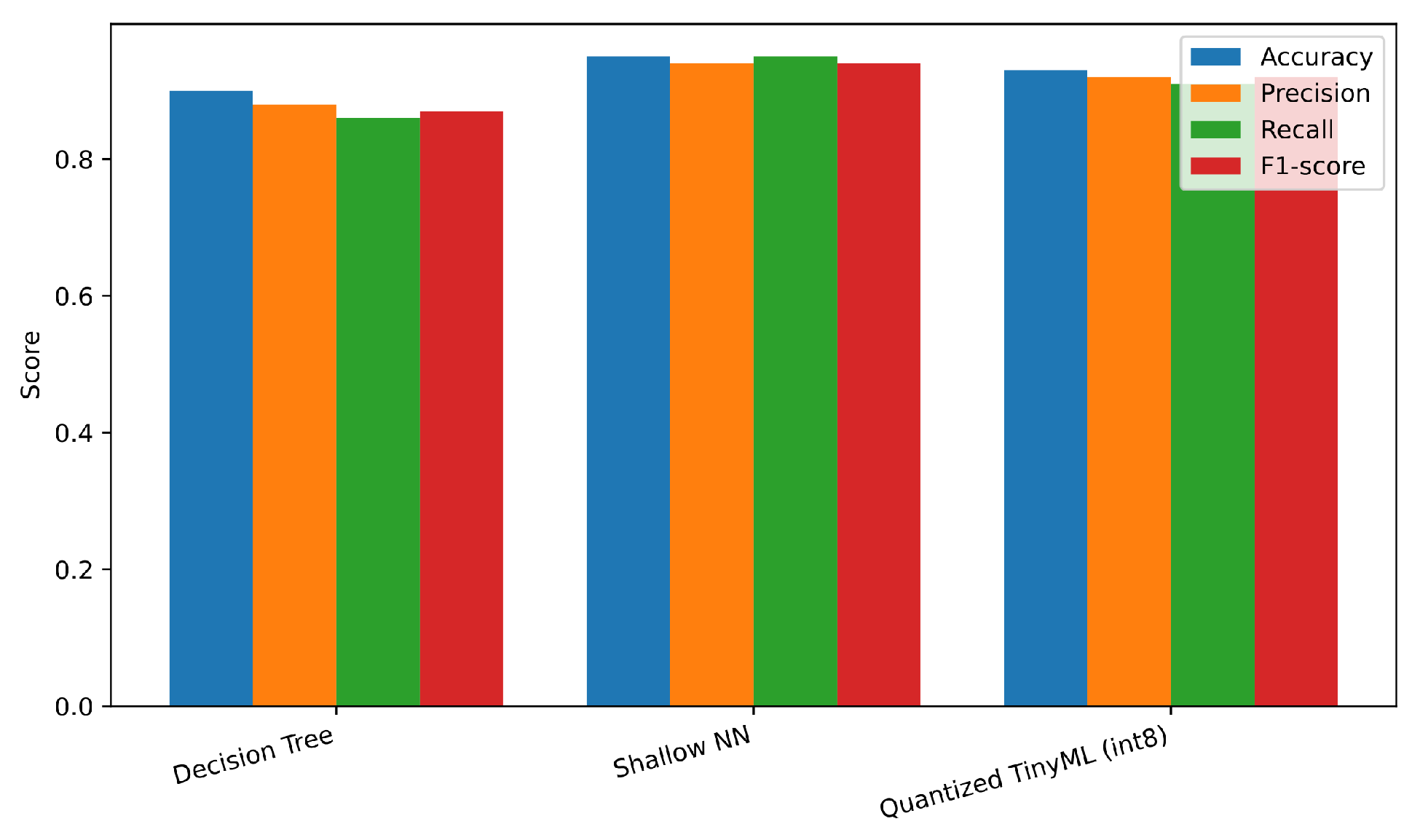

The experiments confirm that combining classical signal transforms with lightweight edge-oriented models enables accurate and efficient anomaly detection in IoT settings. Overall, the Shallow Neural Network (SNN) achieved the highest detection performance, reaching an average F1-score close to 0.94, while the Quantized TinyML version preserved most of this performance (F1 ≈ 0.92) with a threefold reduction in memory footprint and a 60% decrease in energy consumption. Decision Trees remained the fastest and most compact models, with sub-millisecond latency and minimal memory usage, although their recall was lower for contextual anomalies.

Beyond accuracy, the results highlight important system-level trade-offs. Local feature extraction reduced upstream communication costs by more than 80% for high-rate acoustic signals, significantly lowering bandwidth and energy requirements. Sensitivity analyses confirmed that detection improves with larger anomaly amplitude and duration, while robustness tests showed graceful degradation under noise and missing data. Ablation studies further demonstrated the contribution of Wavelet-based features and model quantization to balancing performance with efficiency. Taken together, these results provide strong evidence that the proposed framework is a practical solution for resource-constrained IoT deployments.

5.1. Detection Performance

Table 4 reports the accuracy, precision, recall, and F1-score over five runs with different random seeds (mean; standard deviation

p.p.). The Shallow Neural Network (SNN) achieves the highest F1, while the Quantized TinyML model trades a small drop in F1 for notable efficiency gains. The Decision Tree (DT) remains competitive with the lowest complexity.

To assess statistical stability,

Table 5 reports mean values with 95% confidence intervals, computed from five independent runs using Student’s

t distribution (

).

Figure 4 shows the grouped bar chart for the four metrics across models.

5.2. Threshold Analysis (ROC/PR) and Error Analysis

Table 6 summarizes the confusion matrices (counts) for the final run; the SNN reduces false negatives compared with DT, while TinyML stays close to SNN with slightly higher false negatives.

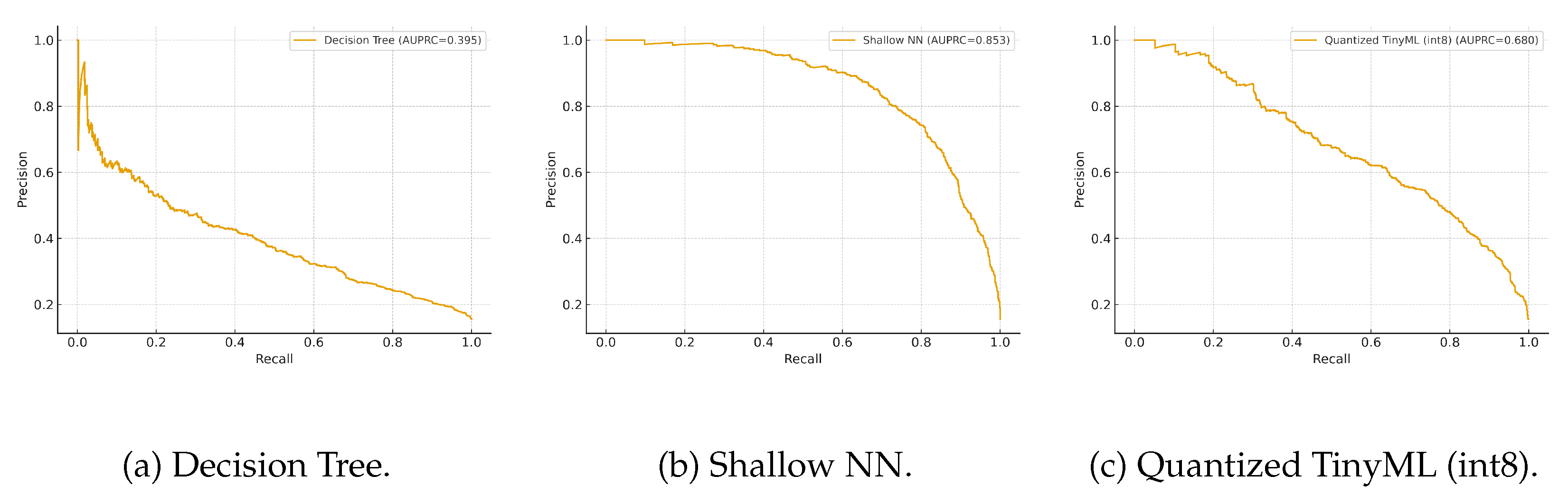

We also plot ROC and precision–recall (PR) curves per model to assess threshold robustness (

Figure 5 and

Figure 6). Due to class imbalance, PR curves are particularly informative.

As shown in

Figure 5, the Shallow NN achieves the largest area under the ROC curve, with the Quantized TinyML model following closely; the Decision Tree lags slightly at higher false-positive rates. This pattern indicates that compact neural models offer a better true-positive/false-positive balance while remaining suitable for edge deployment.

In

Figure 6, which is more informative under class imbalance, the Shallow NN attains the best precision–recall trade-off, while the Quantized TinyML model remains very close, evidencing limited loss from quantization. The Decision Tree yields lower precision at comparable recall, reflecting its simpler decision boundaries.

5.3. Computational Efficiency

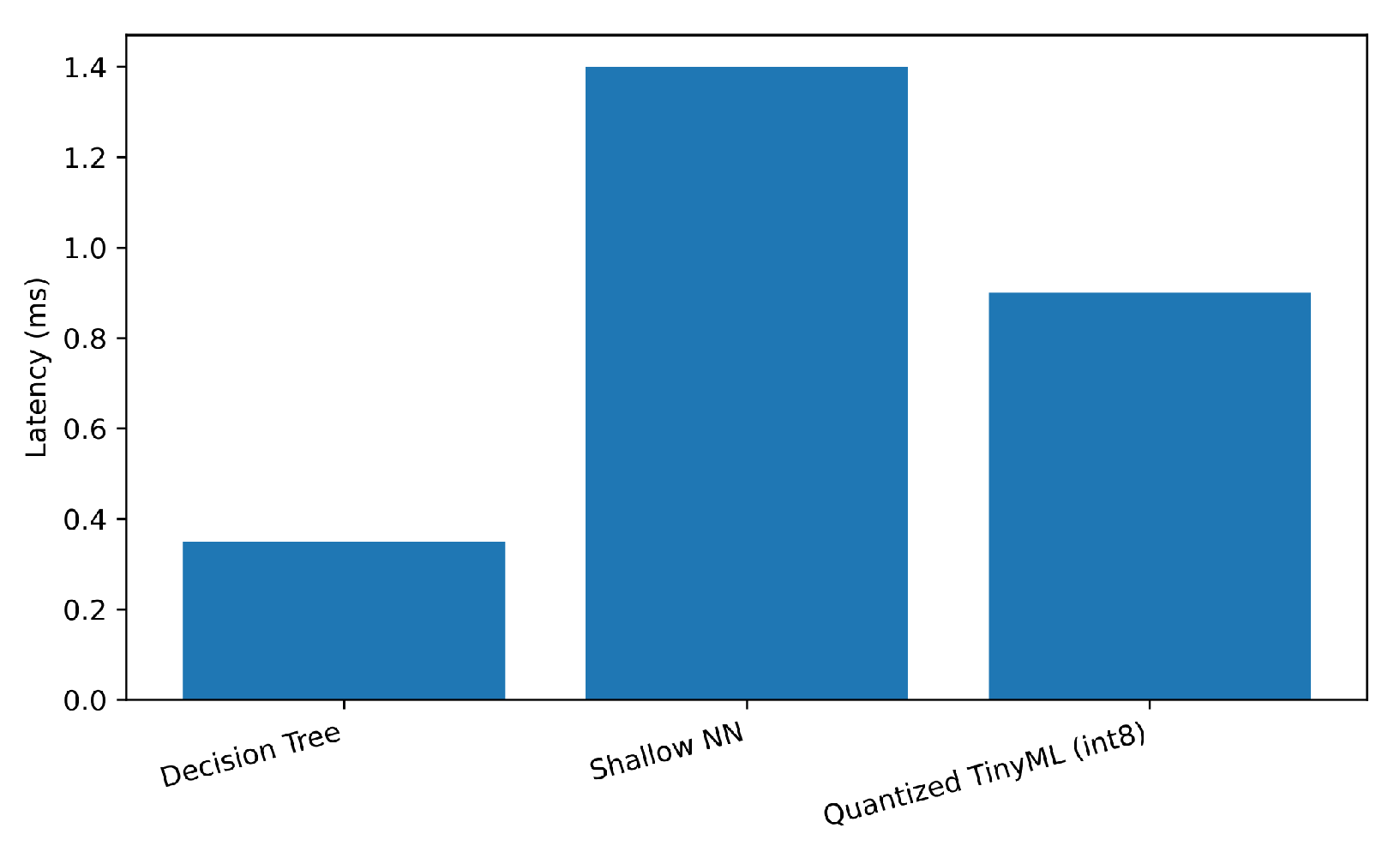

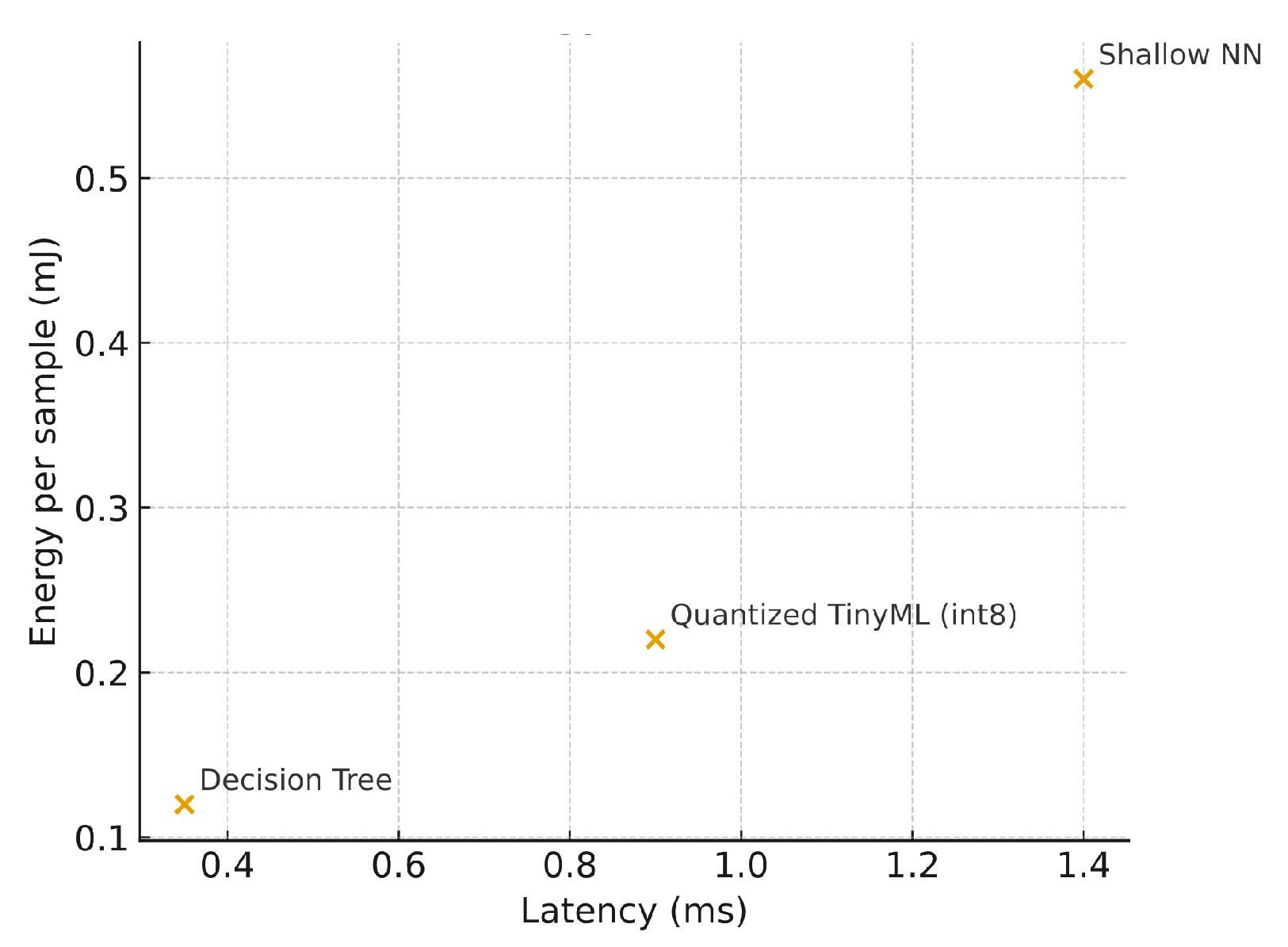

We measured inference latency, memory footprint, and energy per sample at the edge platform; results are in

Table 7. As expected, quantization substantially reduces memory and energy. DT is fastest and lightest but trades recall.

Figure 7,

Figure 8 and

Figure 9 plot each metric individually. As shown in

Figure 7, the Decision Tree achieves the lowest latency (below 0.4 ms), while the Shallow NN exhibits the highest due to its dense layer computations. The Quantized TinyML model reduces latency by approximately 35% relative to the SNN, confirming that quantization improves real-time performance for edge deployment.

Figure 8 compares the memory usage of each model at inference time. The Shallow NN requires the largest footprint (≈200 kB), while the Decision Tree uses less than 50 kB. The Quantized TinyML version substantially reduces memory demand, validating the advantage of integer quantization for constrained IoT hardware.

Figure 9 shows the energy consumed per inference. Consistent with latency and memory trends, the Shallow NN exhibits the highest energy draw, whereas the Decision Tree remains the most efficient. The Quantized TinyML model achieves a balanced trade-off, cutting energy consumption by around 60% compared to the uncompressed SNN.

Figure 10 presents the Pareto trade-off between latency and energy at the edge. The Decision Tree lies on the lowest-energy extreme, while the Shallow NN provides the best accuracy at higher cost. The Quantized TinyML model occupies a favorable middle ground, delivering near-optimal accuracy with significantly improved efficiency.

To further investigate scalability, a smaller Shallow Neural Network with a single hidden layer of 16 neurons was tested. The resulting F1-score decreased slightly from 0.94 to 0.93, while memory usage and energy consumption were reduced by approximately 40% and 25%, respectively. This confirms that the model maintains reliable detection performance even under tighter resource constraints.

5.4. Latency and Communication Overhead

We compared three scenarios (edge-only, cloud-only, hybrid) and measured end-to-end latency and upstream communication. Performing feature extraction locally reduces the data rate by orders of magnitude (

Table 8), which also lowers radio energy and queueing delays. Although the vibration case in this table shows an apparent reduction close to zero, this is a conservative scenario where features were intentionally transmitted at a fixed rate of 10 Hz, independent of the signal dynamics. In practice, adaptive feature transmission (e.g., event-driven updates or lower-rate periodic reporting) would yield substantial savings compared with raw streaming, particularly for high-frequency modalities.

The bar chart in

Figure 11 visualizes the percentage reduction.

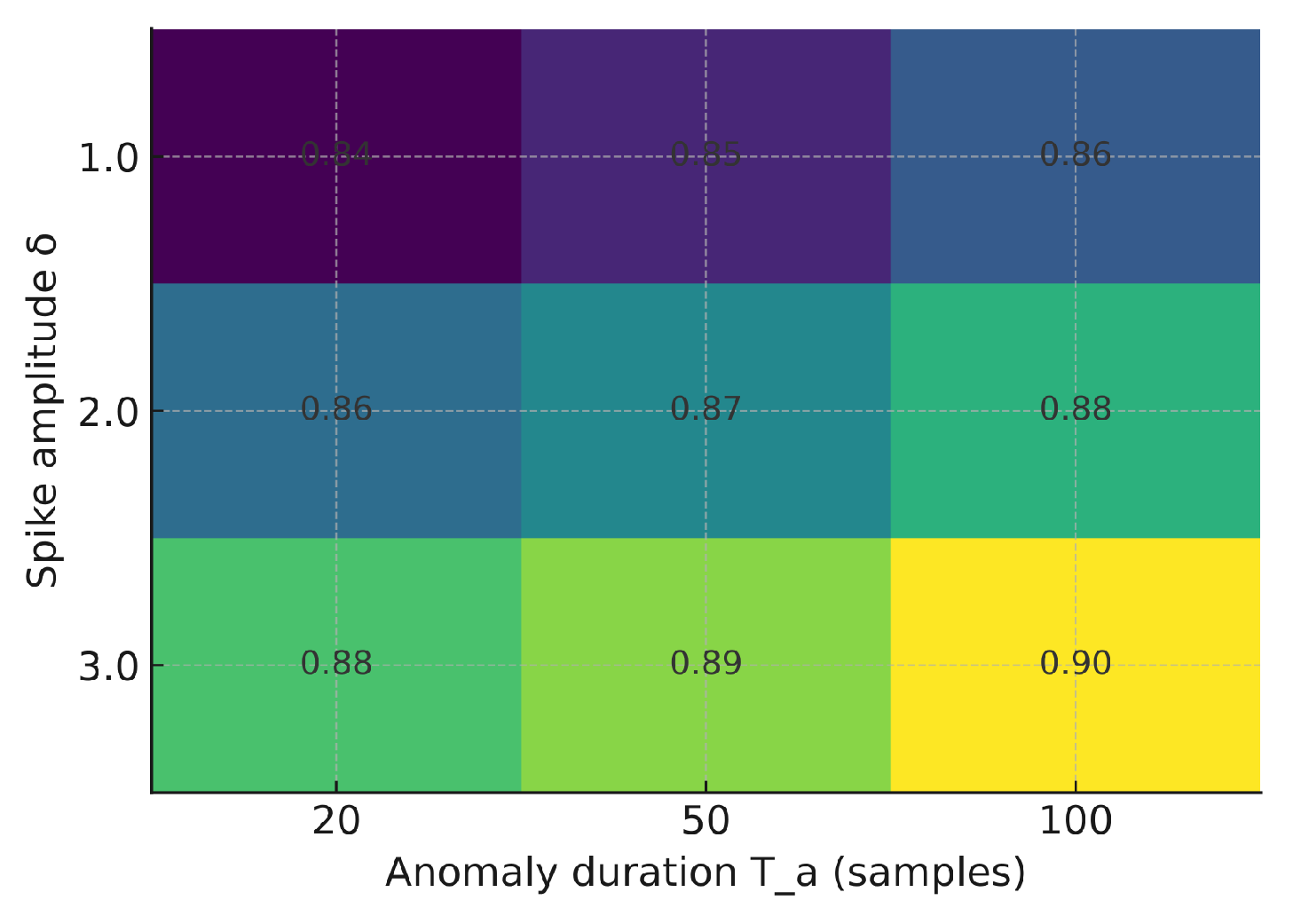

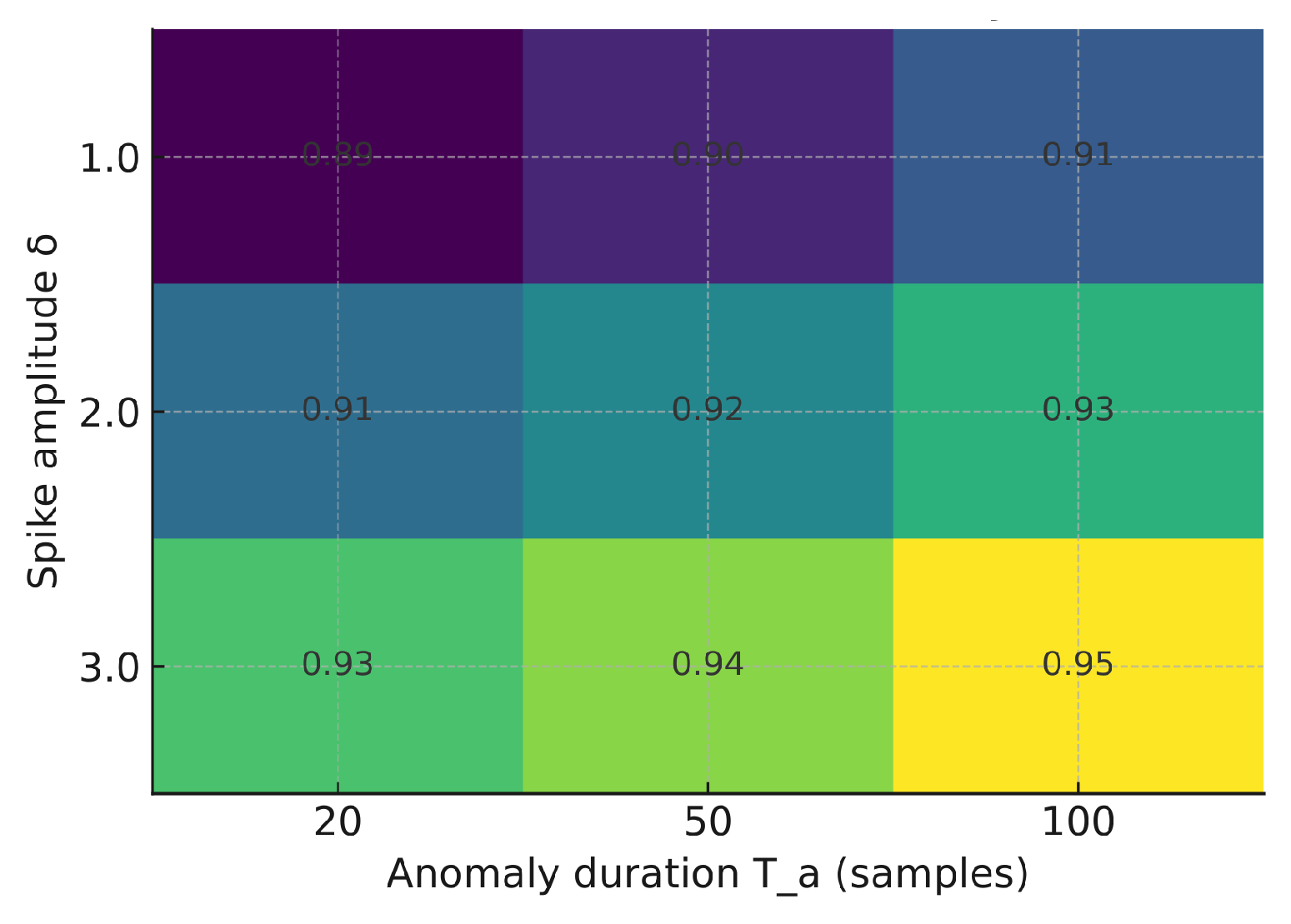

5.5. Sensitivity to Anomaly Characteristics

We evaluated the sensitivity of F1 to anomaly amplitude

and duration

(vibration modality). As expected, larger spikes and longer bursts improve detectability.

Figure 12,

Figure 13 and

Figure 14 illustrate how the F1-score varies with anomaly amplitude

and duration

. Larger and longer anomalies consistently yield higher detection rates across all models. The Shallow NN and TinyML models show slightly higher sensitivity than the Decision Tree, indicating improved generalization under varying anomaly magnitudes.

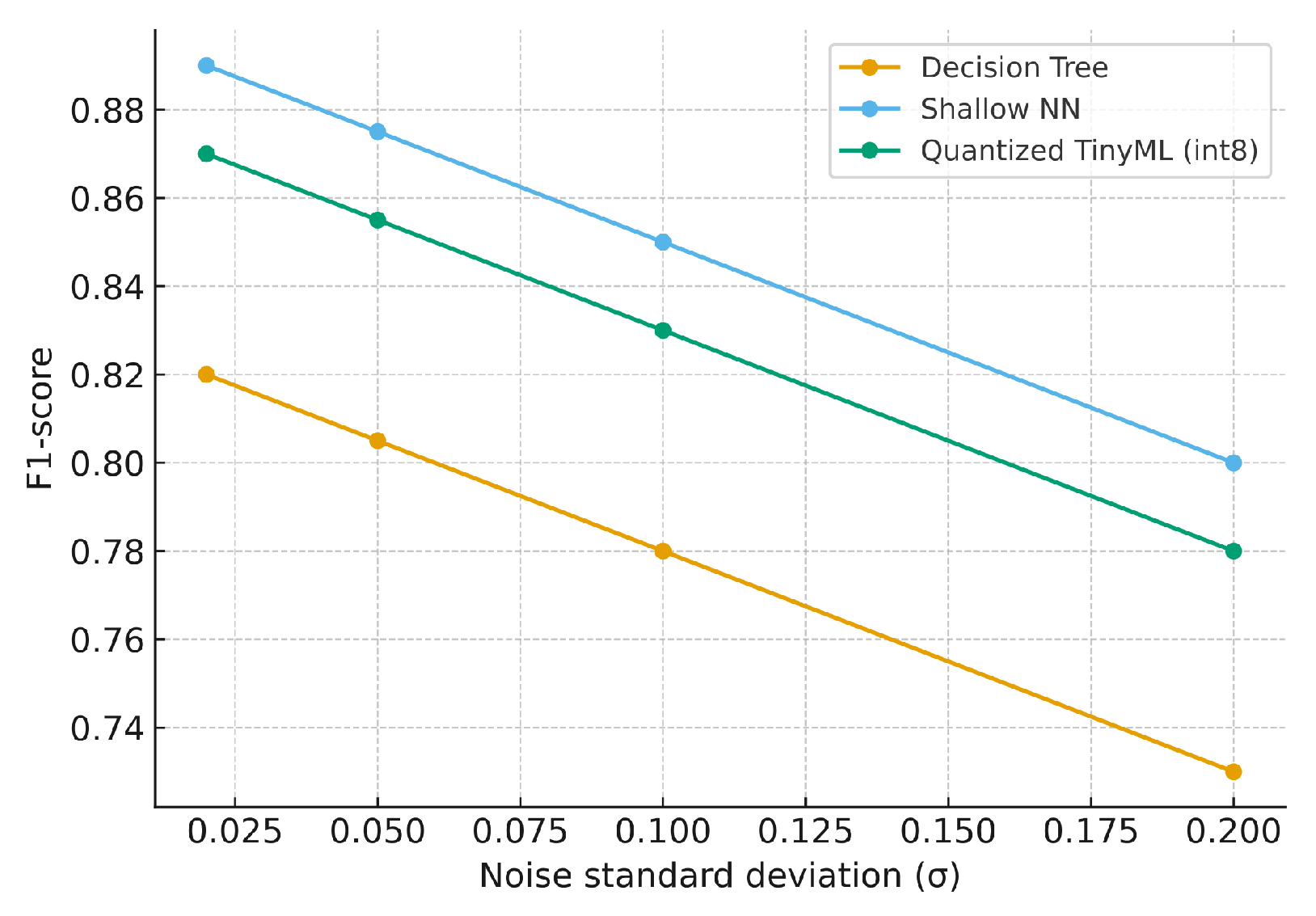

5.6. Robustness to Noise and Missing Data

We further assessed robustness under increasing Gaussian noise (

) and missing data ratios (

), with simple interpolation for imputation.

Figure 15 and

Figure 16 summarize model robustness under increasing Gaussian noise and missing data. All models exhibit gradual performance degradation as distortion grows. The Shallow NN maintains the highest F1-score, while the TinyML version tracks closely with minor loss, confirming that quantized models remain stable under imperfect sensor conditions. The SNN degrades gracefully; TinyML shows similar trends with slightly larger drops when there is high noise/missing data.

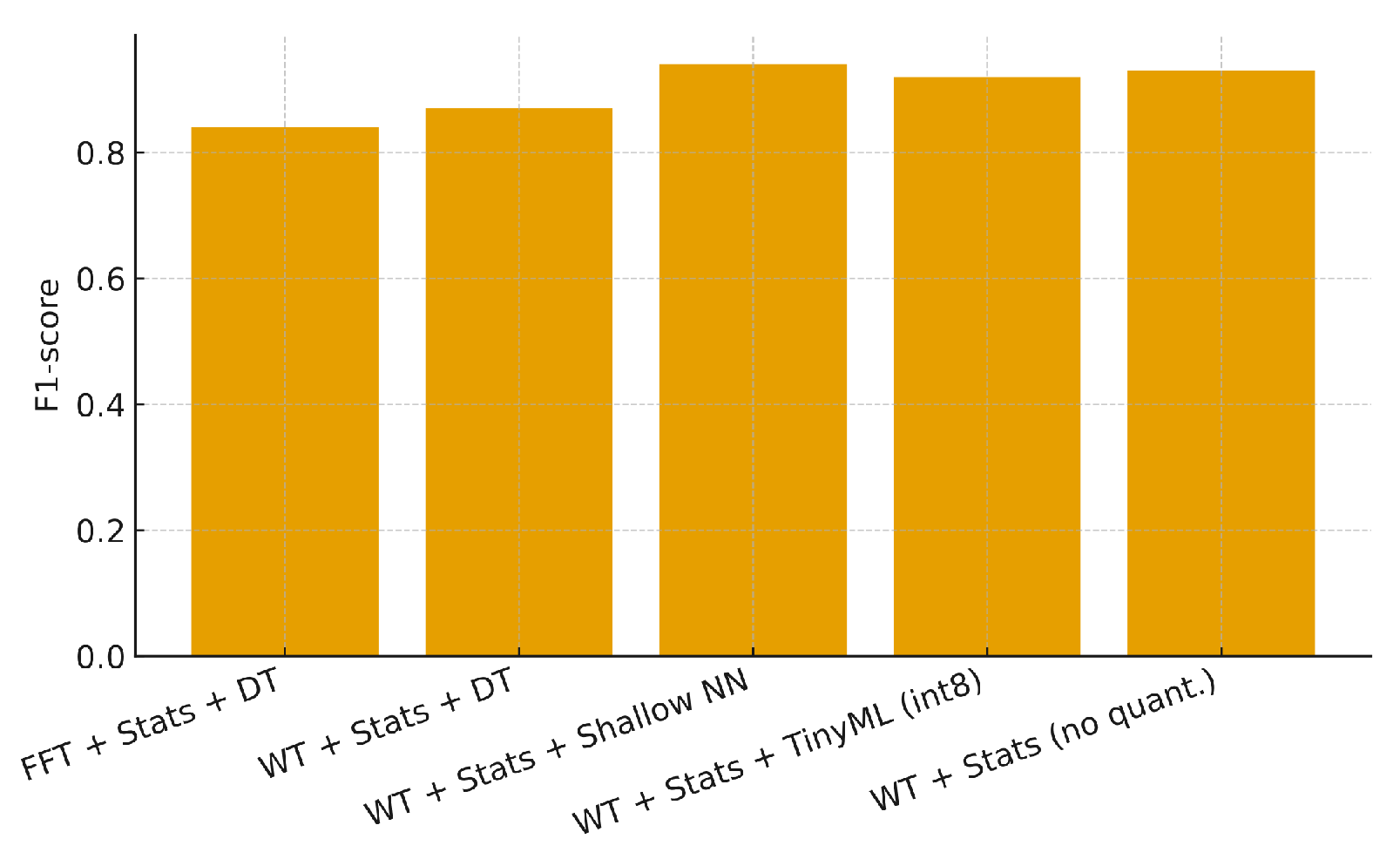

5.7. Ablation Studies

The ablation study quantifies the contribution of each component in the proposed pipeline, including the signal transform, statistical features, model type, and quantization. Each configuration in

Table 9 (illustrated in

Figure 17) removes or modifies one element of the full setup to isolate its effect. For example, the first configuration (“FFT + Stats + DT”) excludes the Wavelet transform, while the fourth (“WT + Stats + TinyML (int8)”) applies post-training quantization, and the last keeps all preprocessing stages but omits quantization. The comparison reveals that Wavelet-based features consistently outperform pure FFT-based features in F1-score and that quantization preserves most of the SNN performance while significantly reducing memory footprint and energy consumption. Together, these results highlight the relative importance of feature transforms and model optimization in achieving a balanced trade-off between accuracy and computational efficiency.

Overall, the ablation results confirm that Wavelet-based features and quantization are the two most influential components: removing the Wavelet transform reduces F1 by approximately 3–4 p.p., while quantization decreases memory usage by about 60% with only a 2 p.p. loss in accuracy.

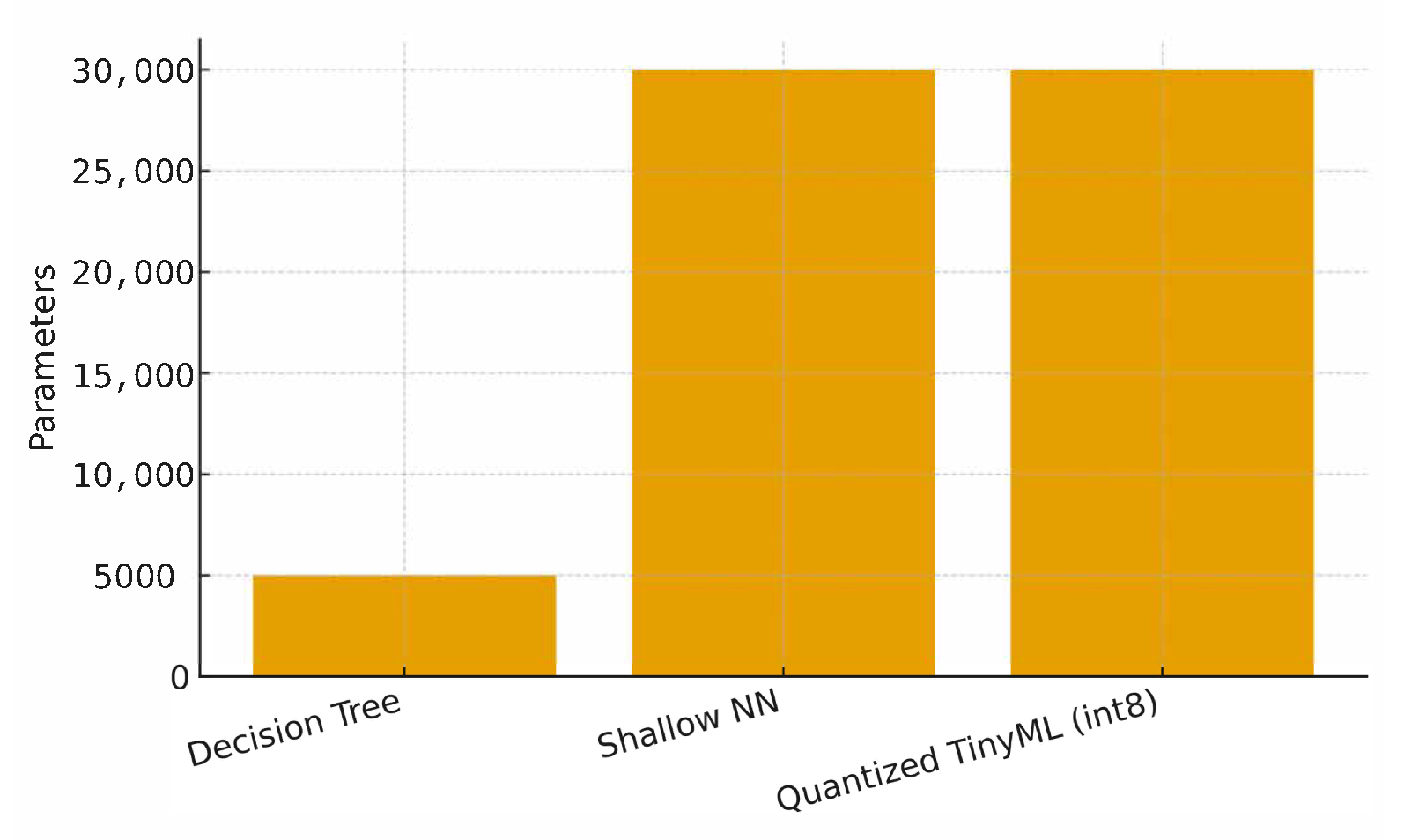

5.8. Model Complexity and Qualitative Examples

Table 10 reports model size proxies (parameters, operations, and binary size).

Figure 18 shows a quick view of parameter counts. Finally,

Figure 19 illustrates qualitative examples of signals with injected anomalies.

5.9. Discussion and Practical Insights

Overall, SNN achieves the best F1, while TinyML retains most performance with substantial savings in memory and energy. DT is extremely fast and lightweight but yields lower recall for contextual anomalies. Local feature extraction reduces upstream bandwidth dramatically for high-rate modalities, improving end-to-end latency and battery life.

An additional advantage of Decision Trees lies in their interpretability, which makes them attractive in regulated domains such as healthcare or industrial monitoring where explainable predictions are required. TinyML models, in turn, are easily replicated across large numbers of IoT nodes, with over-the-air (OTA) updates enabling scalable model maintenance in the field. From a practical standpoint, reducing inference latency by an order of magnitude can be critical in predictive maintenance: earlier anomaly detection directly translates into more time to mitigate potential failures and lower operational risk.

Limitations include the synthetic nature of the dataset and platform-specific energy measurements; future work should validate on real deployments and extend to heterogeneous sensor fusion with online adaptation.

6. Conclusions and Future Work

This paper proposed and evaluated a lightweight framework for anomaly detection in IoT sensor networks, combining classical signal processing with edge-oriented machine learning models. Using synthetic datasets spanning vibration, acoustic, and environmental signals, we showed that feature extraction via Fourier and Wavelet transforms, followed by compact classifiers, yields reliable detection performance while respecting the computational and energy constraints of embedded devices.

Our experiments demonstrated that a Shallow Neural Network achieves the highest F1-score (≈0.94), whereas a Quantized TinyML model provides a favorable trade-off between accuracy (≈0.92) and efficiency (reducing memory footprint by more than and energy per inference by ). Decision Trees remain attractive for ultra-constrained devices, offering the lowest latency (0.35 ms) and memory use, albeit with reduced recall for contextual anomalies. Additional analyses highlighted the benefits of local feature extraction in reducing communication overhead, the robustness of models under noise and missing data, and the contributions of different pipeline components through ablation studies.

Nevertheless, this work has limitations: results are based on controlled synthetic datasets, which may not fully capture the variability of real-world conditions; energy measurements were obtained on specific development boards and may not generalize across platforms. Furthermore, robustness analyses (noise, missing data, anomaly characteristics) were conducted under simulated conditions and therefore represent approximations of practical challenges rather than direct evidence from real deployments. These factors motivate further validation and exploration.

Future work will include (i) deploying the framework in real-world IoT environments (e.g., structural health monitoring, smart agriculture); (ii) extending to heterogeneous, multi-sensor fusion scenarios; (iii) investigating adaptive and online learning techniques to cope with concept drift; (iv) exploring more advanced TinyML optimizations (e.g., pruning, mixed-precision quantization); and (v) integrating communication-aware scheduling and energy harvesting models. Such directions are essential to further bridge the gap between simulation and deployment, strengthening the case for practical adoption of edge-based anomaly detection in resource-constrained IoT systems.

We believe the proposed framework provides a practical pathway to scalable, energy-efficient anomaly detection in next-generation IoT systems, bridging the gap between theoretical advances and real-world deployment.