Marine-Inspired Multimodal Sensor Fusion and Neuromorphic Processing for Autonomous Navigation in Unstructured Subaquatic Environments

Highlights

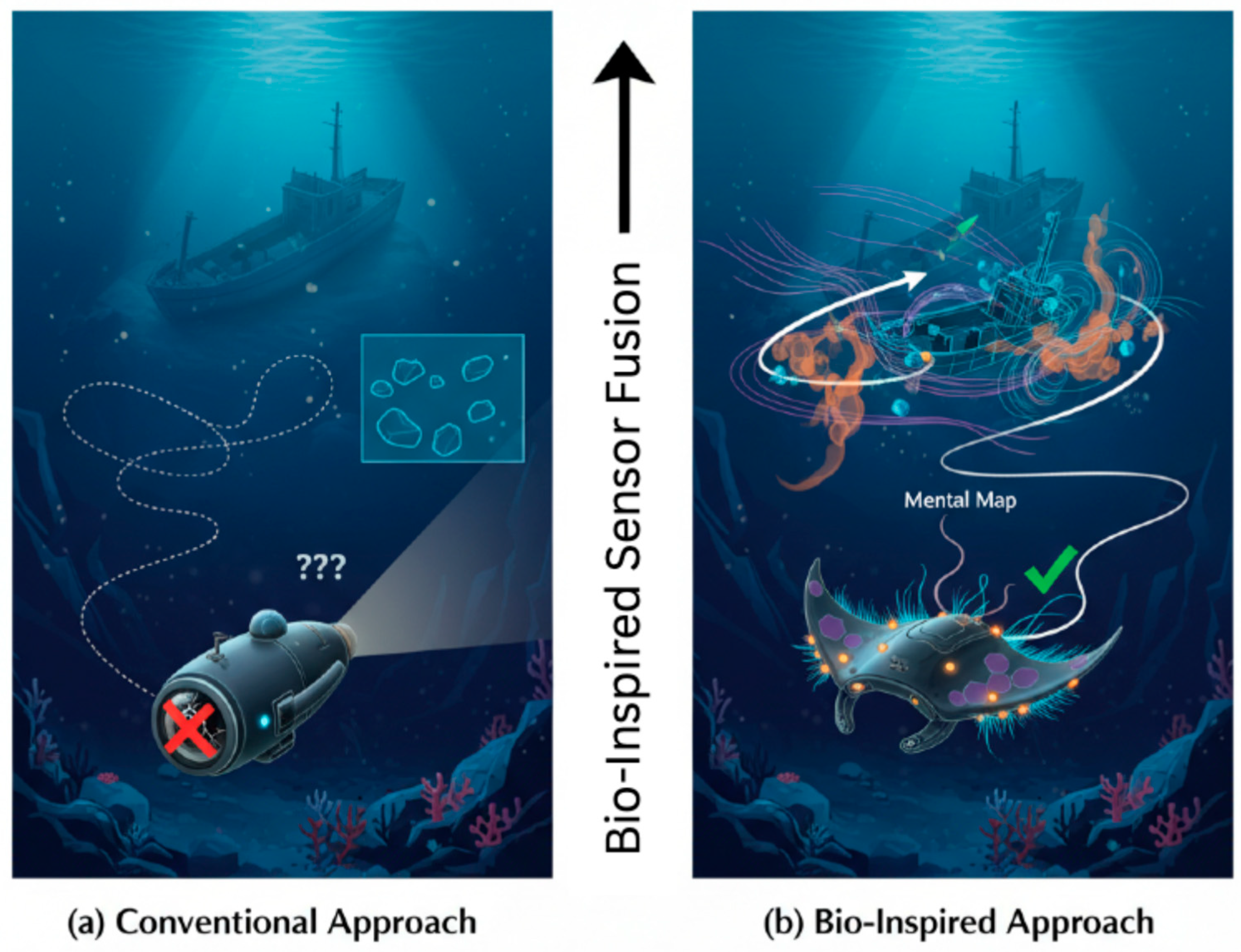

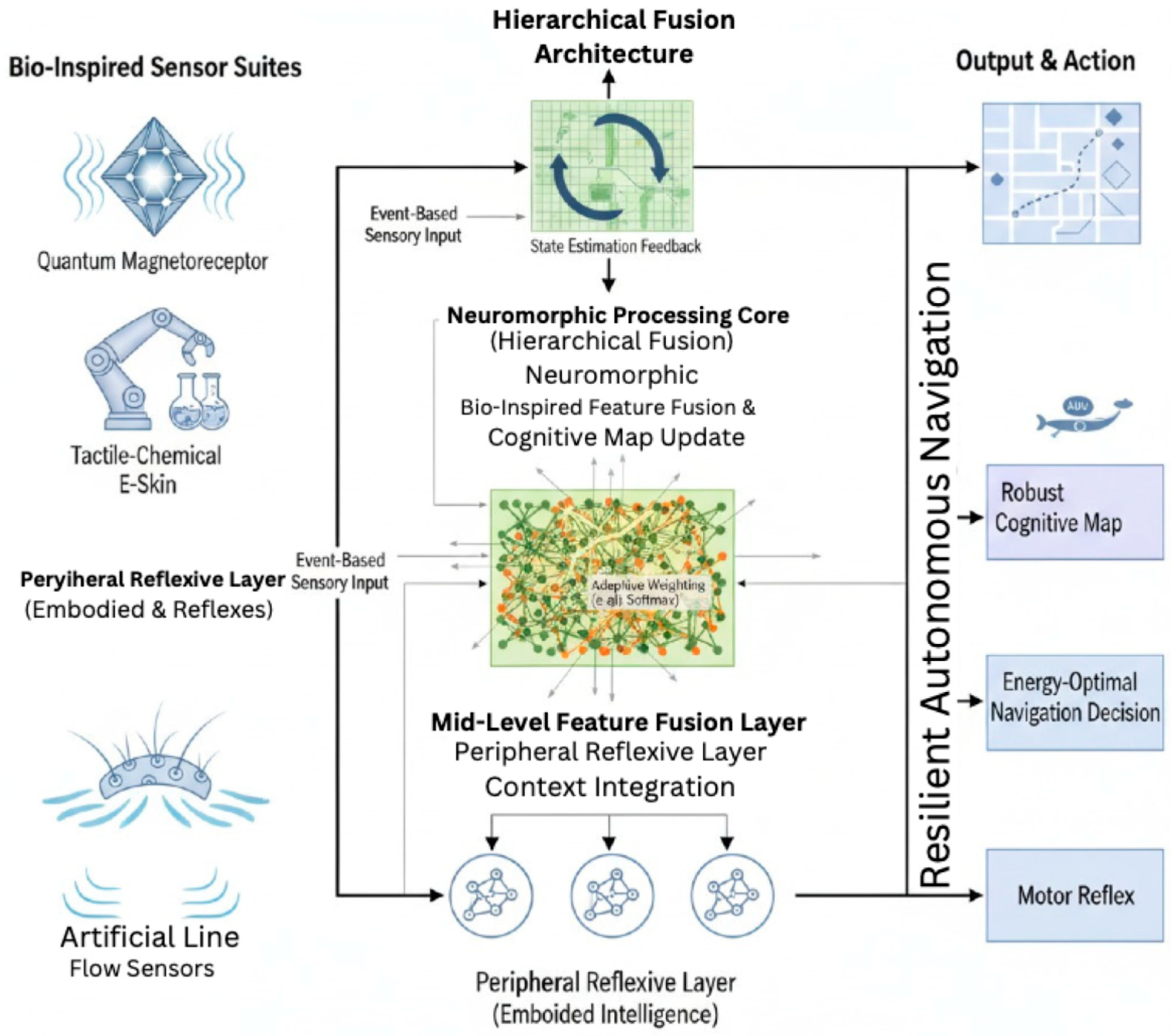

- A novel bio-inspired neuromorphic framework was developed, co-designing marine-inspired sensors (quantum magnetoreception, tactile-chemical sensing, and hydrodynamic flow detection) with event-based neuromorphic processors.

- The proposed architecture is theorized to significantly reduce positional drift and improve recovery from disorientation compared to state-of-the-art navigation systems.

- This work provides a robust, energy-efficient paradigm for autonomous underwater navigation in GPS-denied, murky, or complex environments, enabling longer missions for deep-sea exploration and infrastructure inspection.

- It demonstrates the transformative potential of tightly coupling bio-inspired sensing with neuromorphic processing, offering a blueprint for next-generation autonomous systems that mimic the fault tolerance and efficiency of marine organisms.

Abstract

1. Introduction

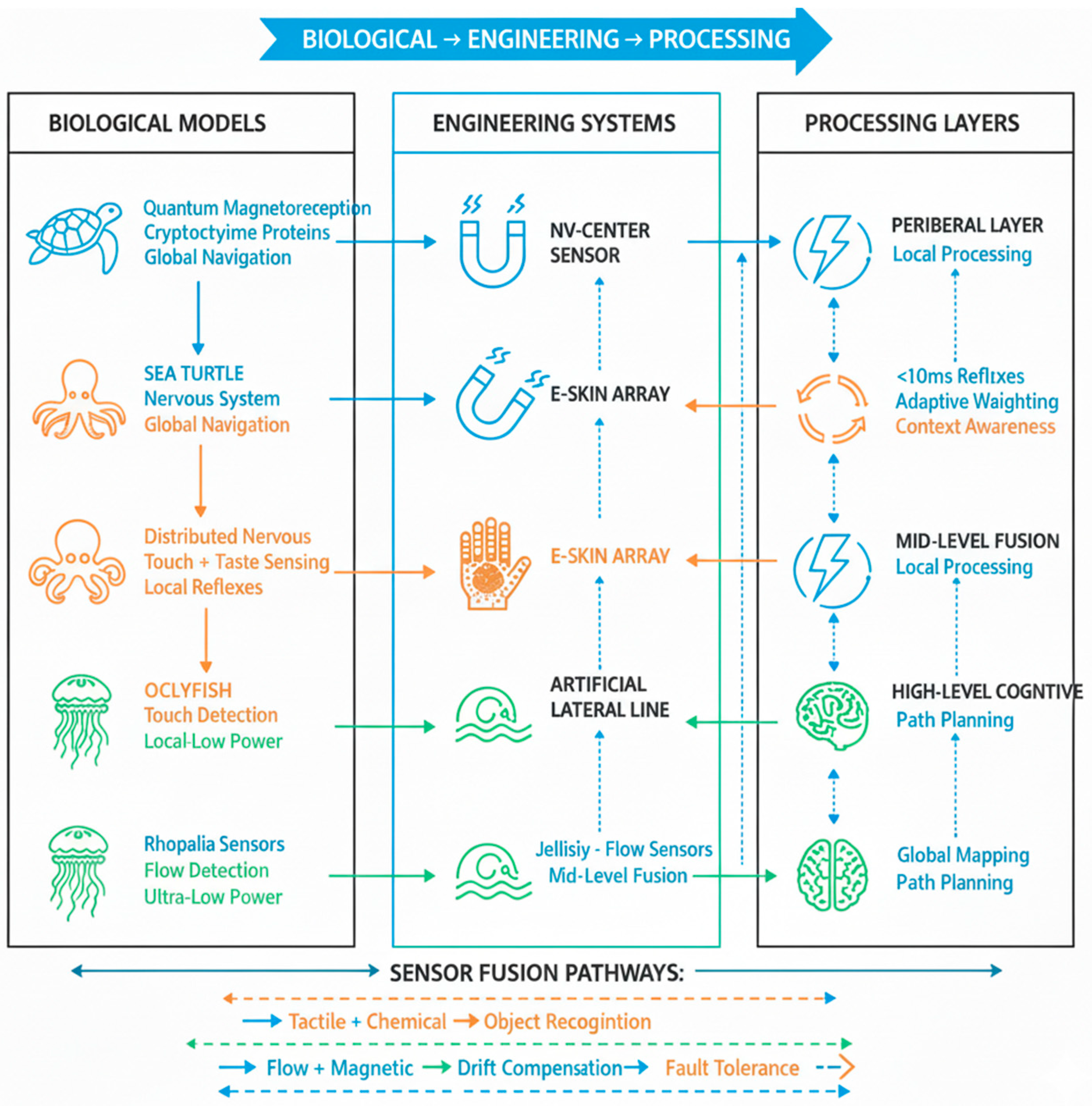

2. Biological Navigation Strategies in Marine Fauna

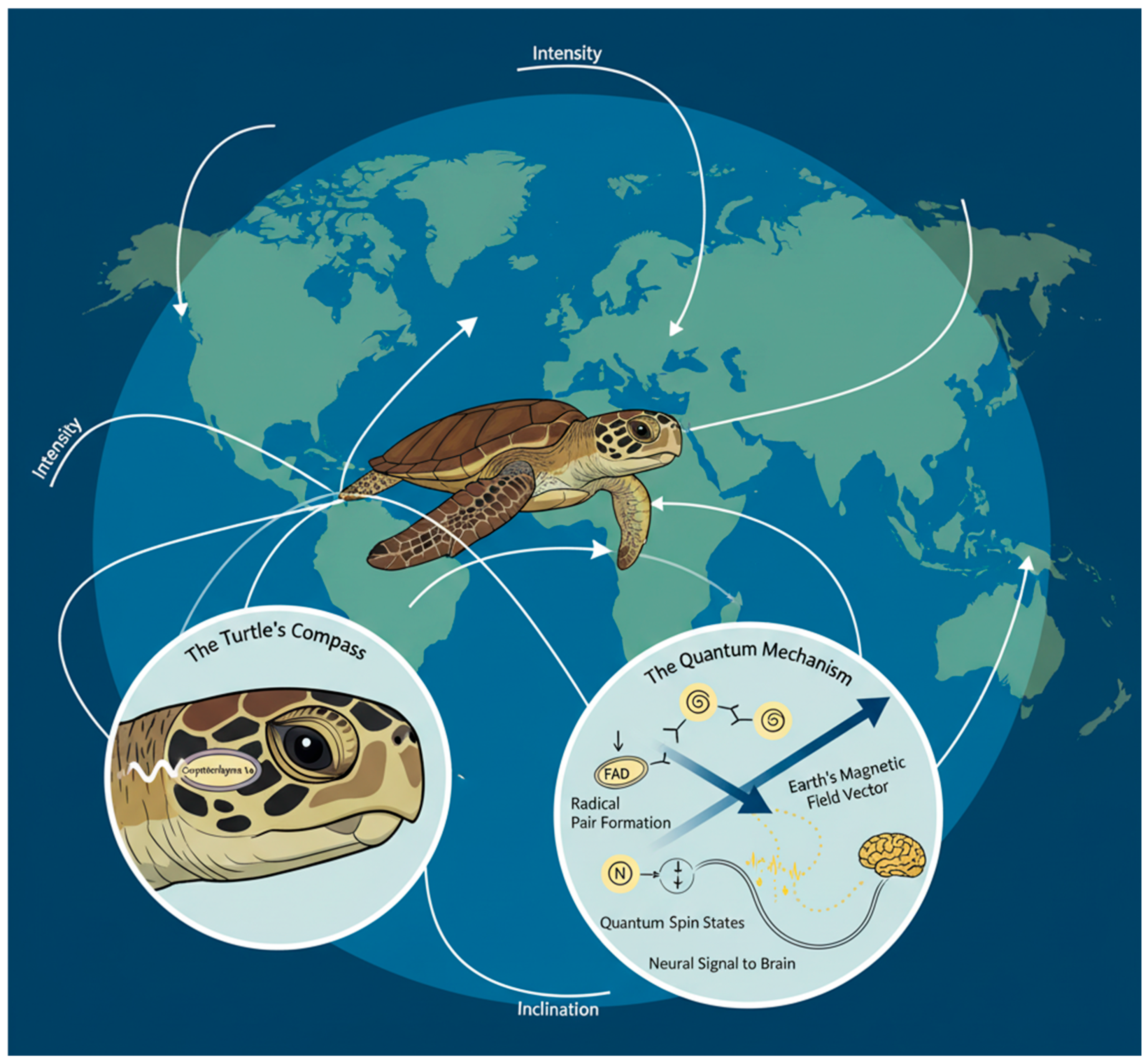

2.1. Long-Range Piloting: The Case of Sea Turtle Magnetoreception

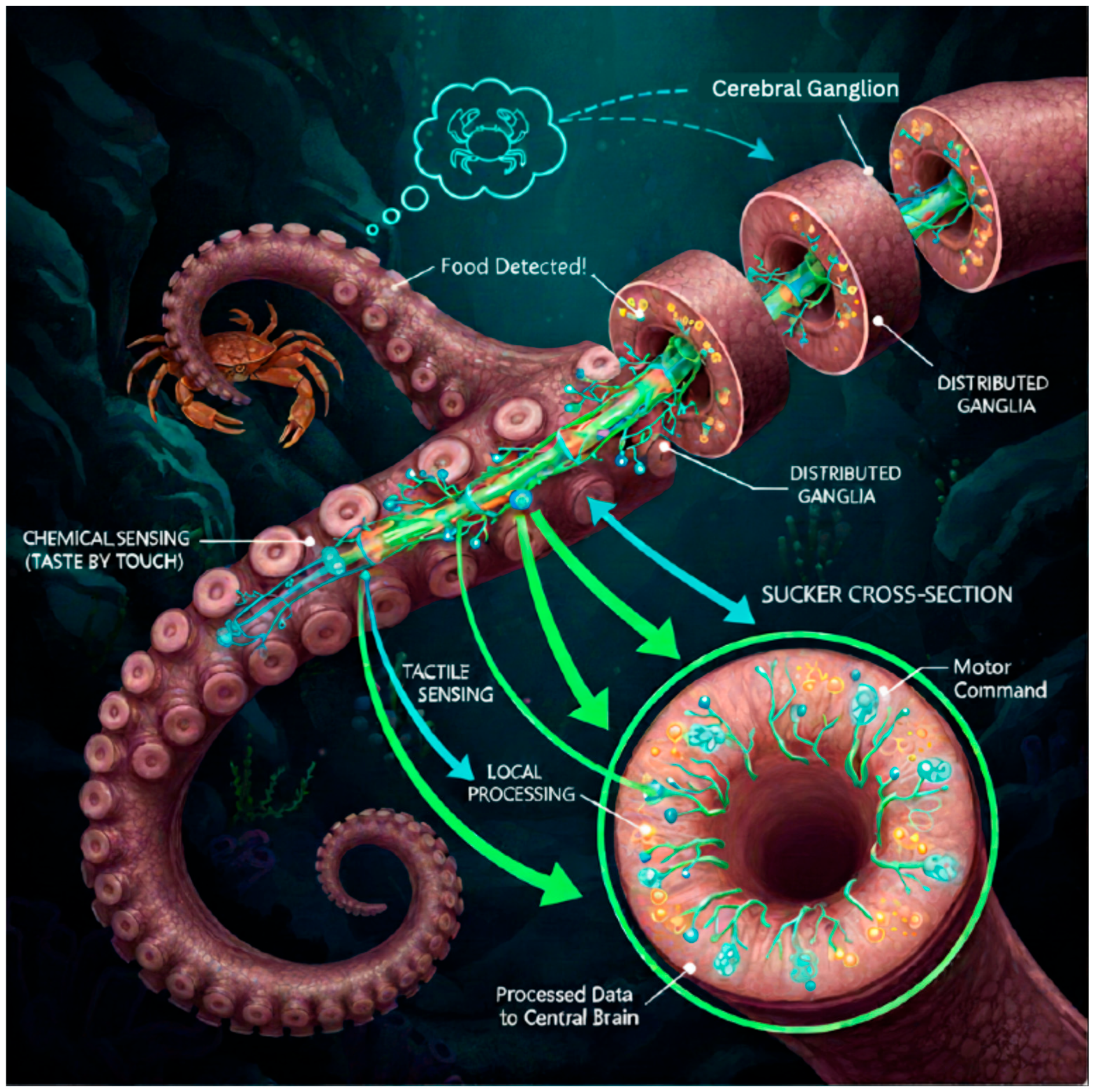

2.2. Short-Range, High-Resolution Sensing: Octopus Tactile-Chemotactic Integration

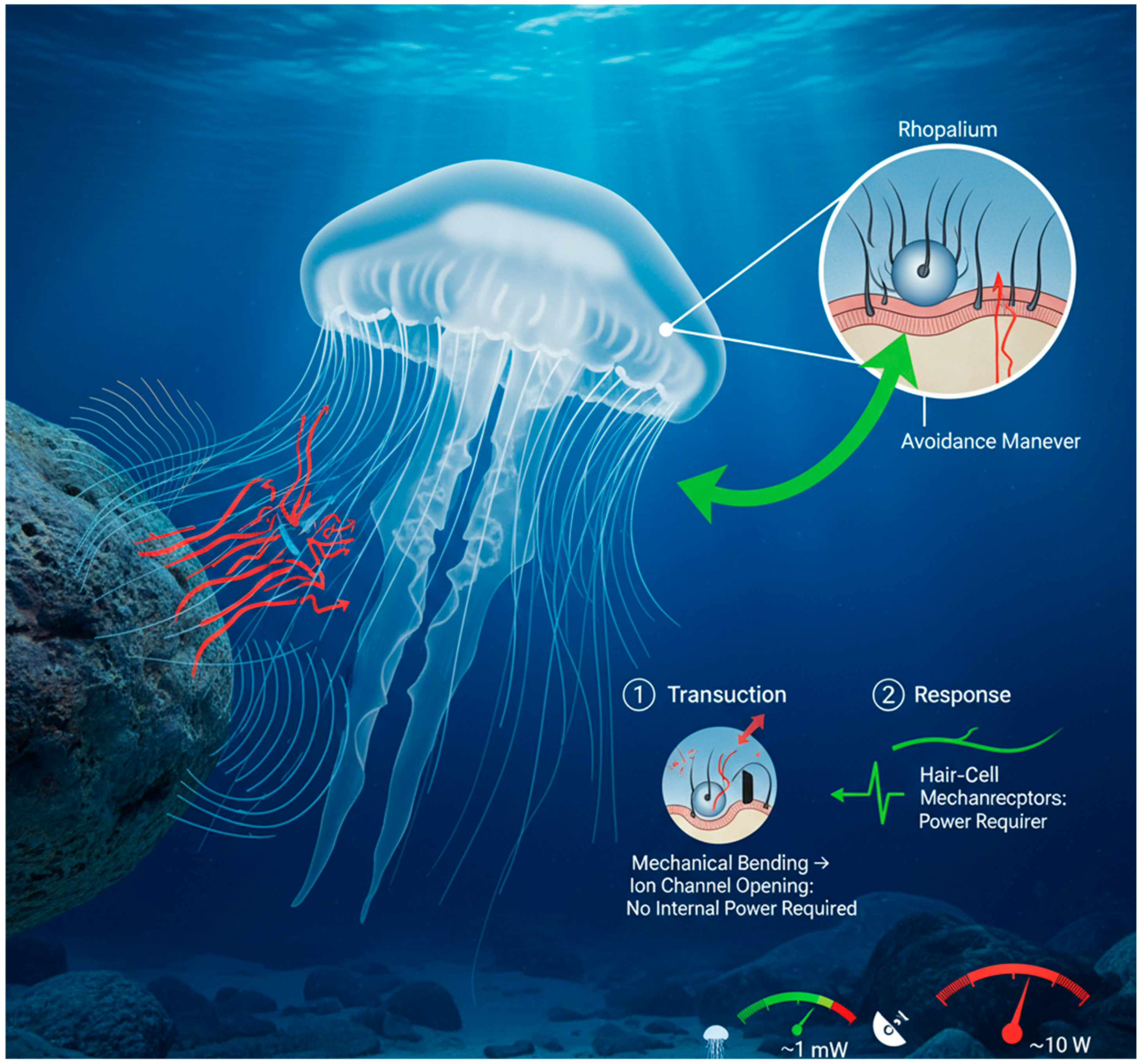

2.3. Energy-Efficient Situational Awareness: Jellyfish Flow Sensing

3. Engineering Analogues: From Biology to Sensors

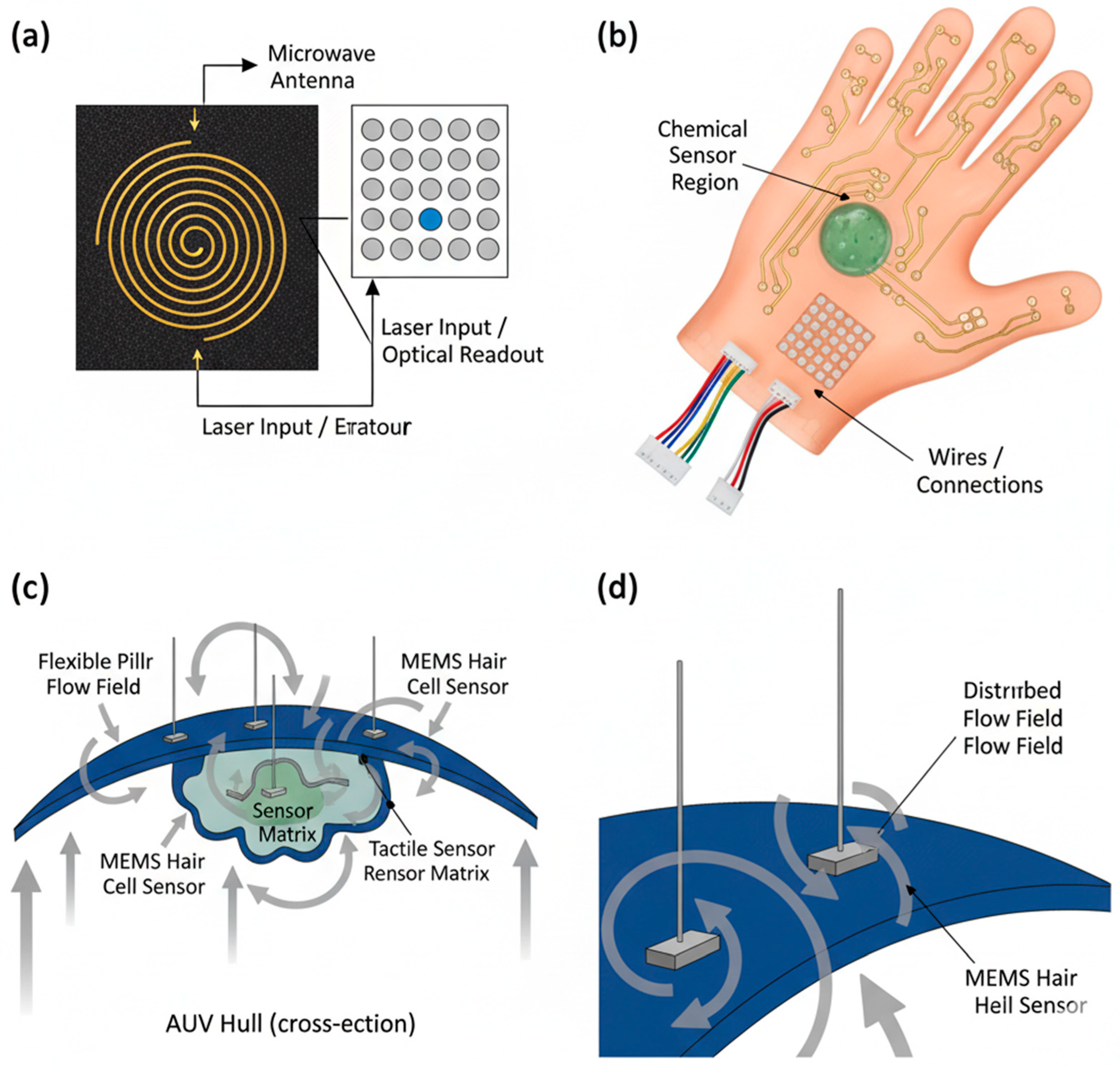

3.1. Quantum-Inspired Magnetoreceptors

3.2. Biomimetic Tactile and Chemical Sensor Arrays

Validation of Tactile-Chemical Sensing

3.3. Bio-Inspired Flow and Hydrodynamic Sensors

3.4. Comparative Analysis of Underwater Navigation Algorithms

3.5. Prototype Validation Result

4. The Neuromorphic Processing Paradigm

| Platform (Chip/System) | Core/Neuron Count | SNN Support & Key Features | Power Profile | Suitability for Bio-Fusion (Key Advantages) | Relevant Bio-Inspired/Robotic Studies |
|---|---|---|---|---|---|
| Intel Loihi 2 [54,61] | Up to 1 million programmable neurons per chip; Scalable. | Native asynchronous SNN; Online learning (e.g., STDP); Programmable neuron models. | ~10–100 mW/chip (highly workload-dependent). | High. Advanced programmability and learning capabilities ideal for adaptive mid-level and high-level fusion. Scalable for distributed processing. | [62] (Robotic tactile perception); [63,64] (Odor source localization). |
| IBM TrueNorth [54,61] | 1 million neurons, 256 million synapses per chip; Synchronous operation. | Digital, event-driven SNN; Fixed LIF neuron model; Extremely low power per event. | ~70 mW/chip (typical) for continuous operation. | Medium-High. Exceptional power efficiency for static, pre-defined networks. Suitable for fixed reflexive and fusion SNNs. Less flexible for online learning. | [63,64] (Real-time audio source separation). |
| SpiNNaker (SpiNNaker 2) [65] | Millions of ARM cores emulating billions of neurons (system-level). | Real-time SNN simulation; Flexible software-defined models; Optimized for large-scale neural simulations. | Watts to tens of watts (system-level, depends on scale). | Medium. High flexibility for research and prototyping complex, large-scale fusion architectures. Higher power than dedicated chips. | [66] (Closed-loop robotic control); [67] (Large-scale sensory integration). |
| BrainChip Akida [68] | 1.2 million neurons per chip; Event-based fabric. | Native SNN with on-chip learning; Focus on sensor-edge processing; Direct event-based sensor interface. | Sub-mW to mW range for inference tasks. | High. Designed for low-power, always-on sensing at the edge. Ideal for peripheral reflexive layer and lightweight mid-level fusion on the AUV itself. | [69] (Visual and auditory pattern recognition). |
| Dynap-SE2 [70] | ~1000 analog neurons per chip; Analog-mixed signal. | Ultra-low latency analog SNNs; Sub-millisecond response; Direct analog sensor interface. | ~100 µW–1 mW per chip. | Very High for Reflexive Layer. Unmatched speed and power efficiency for low-level, hardwired reflexive behaviors. Less suitable for complex learning. | [71] (Pole-balancing robot control); [44] (Fast tactile-driven control). |
| INRC (Intel Neuromorphic Research Community) Platforms (e.g., Kapoho Bay, Nahuku) [72] | Configurable arrays of Loihi chips. | Scalable systems for complex algorithms; Combines multiple Loihi chips for larger networks. | Scales with number of chips (Watts range). | High for Prototyping. Ideal for developing and testing the complete hierarchical architecture before deployment on a more power-optimized single chip. | [73] (Navigation and mapping in simulated environments). |
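
Several platforms in the table above are built around the leaky integrate-and-fire (LIF) neuron. The following is a minimal sketch of that neuron model in plain Python, not the implementation used by any of the listed chips. The function name `simulate_lif` and all parameter values (time step, time constant, threshold) are illustrative assumptions and do not come from the source.

```python
def simulate_lif(input_current, dt=1e-3, tau=20e-3,
                 v_rest=0.0, v_thresh=1.0, v_reset=0.0):
    """Euler-integrate one leaky integrate-and-fire neuron.

    input_current: sequence of input drive values, one per time step
                   (dimensionless here; all constants are assumed values).
    Returns the list of step indices at which the neuron spiked.
    """
    v = v_rest
    spike_steps = []
    for step, drive in enumerate(input_current):
        # Leaky integration: dv/dt = (-(v - v_rest) + drive) / tau
        v += dt * (-(v - v_rest) + drive) / tau
        if v >= v_thresh:
            # Emit an event and reset the membrane potential.
            spike_steps.append(step)
            v = v_reset
    return spike_steps


if __name__ == "__main__":
    weak = simulate_lif([0.5] * 1000)     # drive stays below threshold
    strong = simulate_lif([2.0] * 1000)   # drive exceeds threshold
    print(len(weak), len(strong))
```

With a constant drive below the threshold the membrane potential settles without ever firing, so the neuron produces no output events. This is the property that makes event-driven hardware attractive for always-on sensing: quiet inputs generate no downstream activity.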

SNN Architecture and Training for Bio-Inspired Fusion

5. Bio-Inspired Multimodal Fusion Architecture: Methodology and Implementation

5.1. Fusion Architecture Design Principles

5.2. Hierarchical Processing Layers

5.3. Simulation Setup and Preliminary Validation

6. Current Challenges and Future Research Directions

Long-Term Reliability Assessment

7. Conclusions

Author Contributions

Funding

Informed Consent Statement

Acknowledgments

Conflicts of Interest

References

1. Katzschmann, R.K.; DelPreto, J.; MacCurdy, R.; Rus, D. Exploration of underwater life with an acoustically controlled soft robotic fish. Sci. Robot. 2018, 3, eaar3449.
2. Mohan, M.M.; Baluprithiviraj, S.; Sridhar, P.K.; Varsinishrilaya, N.; Narendh, N.; Devi, T.K. Smart Driving Assistant for Upclimbing Hill Slope Area. In Proceedings of the 2022 International Conference on Automation, Computing and Renewable Systems (ICACRS), Pudukkottai, India, 13–15 December 2022; pp. 1344–1346.
3. Hore, P.J.; Mouritsen, H. The Radical-Pair Mechanism of Magnetoreception. Annu. Rev. Biophys. 2016, 45, 299–344.
4. Christensen, L.; Fernández, J.d.G.; Hildebrandt, M.; Koch, C.E.S.; Wehbe, B. Recent Advances in AI for Navigation and Control of Underwater Robots. Curr. Robot. Rep. 2022, 3, 165–175.
5. Sun, L.; Wang, Y.; Hui, X.; Ma, X.; Bai, X.; Tan, M. Underwater Robots and Key Technologies for Operation Control. Cyborg Bionic Syst. 2024, 5, 0089.
6. Ding, F.; Wang, R.; Zhang, T.; Zheng, G.; Wu, Z.; Wang, S. Real-time Trajectory Planning and Tracking Control of Bionic Underwater Robot in Dynamic Environment. Cyborg Bionic Syst. 2024, 5, 0112.
7. Campi, M.C.; Carè, A.; Garatti, S. The scenario approach: A tool at the service of data-driven decision making. Annu. Rev. Control 2021, 52, 1–17.
8. Abuin, A.; Iradier, E.; Fanari, L.; Montalban, J.; Angueira, P. High Efficiency Wireless-NOMA Solutions for Industry 4.0. In Proceedings of the 2022 IEEE 18th International Conference on Factory Communication Systems (WFCS), Pavia, Italy, 27–29 April 2022.
9. Pradhan, R.; Gummadi, A.; Tanusha, P.; Sowmya, G.; Vaitheeshwari, S.; Valavan, M.P. Neuromorphic Computing Architectures for Energy Efficient Edge Devices in Autonomous Vehicles. In Proceedings of the 2024 IEEE International Conference on Intelligent Techniques in Control, Optimization and Signal Processing, INCOS, Tamil Nadu, India, 14–16 March 2024.
10. Horsevad, N.; Kwa, H.L.; Bouffanais, R. Beyond Bio-Inspired Robotics: How Multi-Robot Systems Can Support Research on Collective Animal Behavior. Front. Robot. AI 2022, 9, 865414.
11. Hore, P.J.; Mouritsen, H. The Quantum Nature of Bird Migration: Migratory birds travel vast distances between their breeding and wintering grounds. New research hints at the biophysical underpinnings of their internal navigation system. Sci. Am. 2022, 326, 26.
12. Mhaisen, N.; Allahham, M.S.; Mohamed, A.; Erbad, A.; Guizani, M. On Designing Smart Agents for Service Provisioning in Blockchain-Powered Systems. IEEE Trans. Netw. Sci. Eng. 2022, 9, 401–415.
13. Wang, T.; Halder, U.; Gribkova, E.; Gillette, R.; Gazzola, M.; Mehta, P.G. Neural models and algorithms for sensorimotor control of an octopus arm. Biol. Cybern. 2025, 119, 25.
14. Luo, A.; Pande, S.S.; Turner, K.T. Versatile Adhesion-Based Gripping via an Unstructured Variable Stiffness Membrane. Soft Robot. 2022, 9, 1177–1185.
15. Wang, T.; Joo, H.J.; Song, S.; Hu, W.; Keplinger, C.; Sitti, M. A versatile jellyfish-like robotic platform for effective underwater propulsion and manipulation. Sci. Adv. 2023, 9, eadg0292.
16. Zhang, H.H.; Chao, J.B.; Wang, Y.W.; Liu, Y.; Xu, Y.X.; Yao, H.; Jiang, L.; Li, X.H. Electromagnetic-Thermal Co-Design of Base Station Antennas With All-Metal EBG Structures. IEEE Antennas Wirel. Propag. Lett. 2023, 22, 3008–3012.
17. Milford, M.; Wyeth, G. Persistent Navigation and Mapping using a Biologically Inspired SLAM System. Int. J. Rob. Res. 2010, 29, 1131–1153.
18. Davies, M.; Srinivasa, N.; Lin, T.-H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A Neuromorphic Manycore Processor with On-Chip Learning. IEEE Micro 2018, 38, 82–99.
19. Yang, Y.; Zhao, L.; Liu, X. Iterative Zero-Shot Localization via Semantic-Assisted Location Network. IEEE Robot. Autom. Lett. 2022, 7, 5974–5981.
20. Chang, Z.; Wu, H.; Li, C. YOLOv4-tiny-based robust RGB-D SLAM approach with point and surface feature fusion in complex indoor environments. J. Field Robot. 2023, 40, 521–534.
21. Bonnen, T.; Yamins, D.L.K.; Wagner, A.D. When the ventral visual stream is not enough: A deep learning account of medial temporal lobe involvement in perception. Neuron 2021, 109, 2755–2766.e6.
22. Ben-Noah, I.; Friedman, S.P.; Berkowitz, B. Dynamics of Air Flow in Partially Water-Saturated Porous Media. Rev. Geophys. 2023, 61, e2022RG000798.
23. Dias, P.G.F.; Silva, M.C.; Filho, G.P.R.; Vargas, P.A.; Cota, L.P.; Pessin, G. Swarm Robotics: A Perspective on the Latest Reviewed Concepts and Applications. Sensors 2021, 21, 2062.
24. Koca, K.; Genç, M.S.; Özkan, R. Mapping of laminar separation bubble and bubble-induced vibrations over a turbine blade at low Reynolds numbers. Ocean Eng. 2021, 239, 109867.
25. Berthold, P. A comprehensive theory for the evolution, control and adaptability of avian migration. Ostrich 1999, 70, 1–11.
26. Zhang, D.; Zhang, J.; Wu, Y.; Xiong, X.; Yang, J.; Dickey, M.D. Liquid Metal Interdigitated Capacitive Strain Sensor with Normal Stress Insensitivity. Adv. Intell. Syst. 2022, 4, 2100201.
27. Sehner, S.; Willems, E.P.; Vinicus, L.; Migliano, A.B.; van Schaik, C.P.; Burkart, J.M. Problem-solving in groups of common marmosets (Callithrix jacchus): More than the sum of its parts. Proc. Natl. Acad. Sci. USA 2022, 1, pgac168.
28. van Giesen, L.; Kilian, P.B.; Allard, C.A.H.; Bellono, N.W. Molecular Basis of Chemotactile Sensation in Octopus. Cell 2020, 183, 594–604.e14.
29. Ma, Q. Somatotopic organization of autonomic reflexes by acupuncture. Curr. Opin. Neurobiol. 2022, 76, 102602.
30. Tao, Y.; Wei, Y.; Ge, J.; Pan, Y.; Wang, W.; Bi, Q.; Sheng, P.; Fu, C.; Pan, W.; Jin, L.; et al. Phylogenetic evidence reveals early Kra-Dai divergence and dispersal in the late Holocene. Nat. Commun. 2023, 14, 6924.
31. Glanzman, D.L. The Cellular Mechanisms of Learning in Aplysia: Of Blind Men and Elephants. Biol. Bull. 2006, 210, 271–279.
32. Tramacere, F.; Beccai, L.; Kuba, M.; Gozzi, A.; Bifone, A.; Mazzolai, B. The Morphology and Adhesion Mechanism of Octopus vulgaris Suckers. PLoS ONE 2013, 8, e65074.
33. Andersson, A.; Karlsson, S.; Ryman, N.; Laikre, L. Monitoring genetic diversity with new indicators applied to an alpine freshwater top predator. Mol. Ecol. 2022, 31, 6422–6439.
34. Wang, Y.; Underwood, C.J. Apomixis. Curr. Biol. 2023, 33, R293–R295.
35. Xu, J.; Huang, J.; Chen, L.; Chen, M.; Wen, X.; Zhang, P.; Li, S.; Ma, B.; Zou, Y.; Wang, Y.; et al. Degradation characteristics of intracellular and extracellular ARGs during aerobic composting of swine manure under enrofloxacin stress. Chem. Eng. J. 2023, 471, 144637.
36. Puri, P.; Wu, S.T.; Su, C.Y.; Aljadeff, J. Peripheral preprocessing in Drosophila facilitates odor classification. Proc. Natl. Acad. Sci. USA 2024, 121, e2316799121.
37. Bertrand, O.J.N.; Sonntag, A. The potential underlying mechanisms during learning flights. J. Comp. Physiol. A Neuroethol. Sens. Neural Behav. Physiol. 2023, 209, 593–604.
38. Li, S.; Kociolek, A.M.; Mariano, L.A.; Loh, P.Y. Grip Force Modulation on Median Nerve Morphology Changes. J. Orthop. Res. 2025, 43, 1179–1190.
39. Wang, T.; Halder, U.; Gribkova, E.; Gazzola, M.; Mehta, P.G. Modeling the Neuromuscular Control System of an Octopus Arm. arXiv 2022, arXiv:2211.06767.
40. Papadakis, E.; Tsakiris, D.P.; Sfakiotakis, M. An Octopus-Inspired Soft Pneumatic Robotic Arm. Biomimetics 2024, 9, 773.
41. Rawlings, B.S.; van Leeuwen, E.J.C.; Davila-Ross, M. Chimpanzee communities differ in their inter- and intrasexual social relationships. Learn. Behav. 2023, 51, 48–58.
42. Yoon, I.; Mun, J.; Min, K.S. Comparative Study on Energy Consumption of Neural Networks by Scaling of Weight-Memory Energy Versus Computing Energy for Implementing Low-Power Edge Intelligence. Electronics 2025, 14, 2718.
43. Kohl, K.D.; Dieppa-Colón, E.; Goyco-Blas, J.; Peralta-Martínez, K.; Scafidi, L.; Shah, S.; Zawacki, E.; Barts, N.; Ahn, Y.; Hedayati, S.; et al. Gut Microbial Ecology of Five Species of Sympatric Desert Rodents in Relation to Herbivorous and Insectivorous Feeding Strategies. Integr. Comp. Biol. 2022, 62, 237–251.
44. Choe, J.; Lee, J.; Yang, H.; Nguyen, H.N.; Lee, D. Sequential Trajectory Optimization for Externally-Actuated Modular Manipulators with Joint Locking. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 8 August 2024; pp. 8700–8706.
45. Smith, E.; Meger, D.; Pineda, L.; Calandra, R.; Malik, J.; Soriano, A.R.; Drozdzal, M. Active 3D Shape Reconstruction from Vision and Touch. Adv. Neural Inf. Process. Syst. 2021, 19, 16064–16078. Available online: https://arxiv.org/pdf/2107.09584 (accessed on 19 September 2025).
46. Edmunds, P.J.; Perry, C.T. Decadal-scale variation in coral calcification on coral-depleted Caribbean reefs. Mar. Ecol. Prog. Ser. 2023, 713, 1–19.
47. Olson, C.S.; Moorjani, A.; Ragsdale, C.W. Molecular and Morphological Circuitry of the Octopus Sucker Ganglion. J. Comp. Neurol. 2025, 533, e70055.
48. Murillo, O.D.; Petrosyan, V.; LaPlante, E.L.; Dobrolecki, L.E.; Lewis, M.T.; Milosavljevic, A. Deconvolution of cancer cell states by the XDec-SM method. PLoS Comput. Biol. 2023, 19, e1011365.
49. Zhang, H.; Zhang, G.; Lan, Y.; Xiao, J.; Wang, Y.; Song, G.; Wei, H. A Modeling Study on Population Dynamics of Jellyfish Aurelia aurita in the Bohai and Yellow Seas. Front. Mar. Sci. 2022, 9, 842394.
50. Sarro, E.; Tripodi, A.; Woodard, S.H. Bumble Bee (Bombus vosnesenskii) Queen Nest Searching Occurs Independent of Ovary Developmental Status. Integr. Org. Biol. 2022, 4, obac007.
51. Fassbinder-Orth, C.A. Methods for Quantifying Gene Expression in Ecoimmunology: From qPCR to RNA-Seq. Integr. Comp. Biol. 2014, 54, 396–406.
52. Minford, P. Monetary Union: A Desperate Gamble. J. Staple Inn Actuar. Soc. 1998, 33, 63–88.
53. Solé, M.; Lenoir, M.; Fontuño, J.M.; Durfort, M.; Van Der Schaar, M.; André, M. Evidence of cnidarians sensitivity to sound after exposure to low frequency noise underwater sources. Sci. Rep. 2016, 6, 37979.
54. Indiveri, G.; Linares-Barranco, B.; Hamilton, T.J.; van Schaik, A.; Etienne-Cummings, R.; Delbruck, T.; Liu, S.-C.; Dudek, P.; Häfliger, P.; Renaud, S.; et al. Neuromorphic silicon neuron circuits. Front. Neurosci. 2011, 5, 9202.
55. Barry, J.F.; Turner, M.J.; Schloss, J.M.; Glenn, D.R.; Song, Y.; Lukin, M.D.; Park, H.; Walsworth, R.L. Optical magnetic detection of single-neuron action potentials using quantum defects in diamond. Proc. Natl. Acad. Sci. USA 2016, 113, 14133–14138.
56. Stromatias, E.; Neil, D.; Pfeiffer, M.; Galluppi, F.; Furber, S.B.; Liu, S.C. Robustness of spiking Deep Belief Networks to noise and reduced bit precision of neuro-inspired hardware platforms. Front. Neurosci. 2015, 9, 141542.
57. Merolla, P.A.; Arthur, J.V.; Alvarez-Icaza, R.; Cassidy, A.S.; Sawada, J.; Akopyan, F.; Jackson, B.L.; Imam, N.; Guo, C.; Nakamura, Y.; et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 2014, 345, 668–673.
58. Posch, C.; Serrano-Gotarredona, T.; Linares-Barranco, B.; Delbruck, T. Retinomorphic event-based vision sensors: Bioinspired cameras with spiking output. Proc. IEEE 2014, 102, 1470–1484.
59. Kheradpisheh, S.R.; Ganjtabesh, M.; Thorpe, S.J.; Masquelier, T. STDP-based spiking deep convolutional neural networks for object recognition. Neural Netw. 2018, 99, 56–67.
60. Masquelier, T.; Guyonneau, R.; Thorpe, S.J. Competitive STDP-Based Spike Pattern Learning. Neural Comput. 2009, 21, 1259–1276.
61. Furber, S. Large-scale neuromorphic computing systems. J. Neural Eng. 2016, 13, 051001.
62. Amir, A.; Datta, P.; Risk, W.P.; Cassidy, A.S.; Kusnitz, J.A.; Esser, S.K.; Andreopoulos, A.; Wong, T.M.; Flickner, M.; Alvarez-Icaza, R.; et al. Cognitive computing programming paradigm: A Corelet Language for composing networks of neurosynaptic cores. In Proceedings of the International Joint Conference on Neural Networks, Dallas, TX, USA, 4–9 August 2013.
63. Hasani, R.; Lechner, M.; Amini, A.; Rus, D.; Grosu, R. Liquid Time-constant Networks. Proc. AAAI Conf. Artif. Intell. 2021, 35, 7657–7666.
64. Cao, Y.; Chen, Y.; Khosla, D. Spiking Deep Convolutional Neural Networks for Energy-Efficient Object Recognition. Int. J. Comput. Vis. 2015, 113, 54–66.
65. Wang, Y.; Taniguchi, T.; Lin, P.-H.; Zicchino, D.; Nickl, A.; Sahliger, J.; Lai, C.-H.; Song, C.; Wu, H.; Dai, Q.; et al. Time-resolved detection of spin–orbit torque switching of magnetization and exchange bias. Nat. Electron. 2022, 5, 840–848.
66. Wang, Z.; Han, J. Welcome Message. In Proceedings of the 2021 IEEE International Conference on Robotics and Biomimetics, ROBIO, Sanya, China, 27–31 December 2021.
67. Chowdhury, S.S.; Sharma, D.; Kosta, A.; Roy, K. Neuromorphic computing for robotic vision: Algorithms to hardware advances. Commun. Eng. 2025, 4, 152.
68. Hasani, R.; Lechner, M.; Amini, A.; Liebenwein, L.; Ray, A.; Tschaikowski, M.; Teschl, G.; Rus, D. Closed-form continuous-time neural networks. Nat. Mach. Intell. 2022, 4, 992–1003.
69. Zhao, X.; Gerasimou, S.; Calinescu, R.; Imrie, C.; Robu, V.; Flynn, D. Bayesian learning for the robust verification of autonomous robots. Commun. Eng. 2024, 3, 18.
70. Saint-André, V.; Charbit, B.; Biton, A.; Rouilly, V.; Possémé, C.; Bertrand, A.; Rotival, M.; Bergstedt, J.; Patin, E.; Albert, M.L.; et al. Smoking changes adaptive immunity with persistent effects. Nature 2024, 626, 827–835.
71. Pecheux, N.; Creuze, V.; Comby, F.; Tempier, O. Self Calibration of a Sonar–Vision System for Underwater Vehicles: A New Method and a Dataset. Sensors 2023, 23, 1700.
72. Novo, A.; Lobon, F.; De Marina, H.G.; Romero, S.; Barranco, F. Neuromorphic Perception and Navigation for Mobile Robots: A Review. ACM Comput. Surv. 2024, 56, 1–37.
73. Li, Z.; Johnson, W. Quantifying the effects of hindcast surface winds and ocean currents on oil spill contact probability in the Gulf of Mexico. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, OCE, Monterey, CA, USA, 19–23 September 2016.
74. Demir, M. SAR image reconstruction from 1-bit quantized data with sign-flip errors. In Proceedings of the 2021 29th Signal Processing and Communications Applications Conference (SIU), Istanbul, Turkey, 9–11 June 2021.
75. Lim, S.L.; Bentley, P.J. The ‘Agent-Based Modeling for Human Behavior’ Special Issue. Artif. Life 2023, 29, 1–2.
76. Chen, Y.J.; Huang, H.; Bu, W.; Chen, Z.S. Optimization strategy for bio-inspired lateral line sensor arrays in autonomous underwater helicopter. Eng. Appl. Comput. Fluid Mech. 2025, 19, 2436087.
77. Lu, X.; Aung, S.T.; Kyaw, H.H.S.; Funabiki, N.; Aung, S.L.; Soe, T.T. A study of grammar-concept understanding problem for C programming learning. In Proceedings of the 2021 IEEE 3rd Global Conference on Life Sciences and Technologies (LifeTech), Nara, Japan, 9–11 March 2021; pp. 158–161.
78. Table of Contents. IEEE Trans. Nucl. Sci. 2023, 70, 869–871.
79. Vianello, E.; Werner, T.; Bichler, O.; Valentian, A.; Molas, G.; Yvert, B.; De Salvo, B.; Perniola, L. Resistive memories for spike-based neuromorphic circuits. In Proceedings of the 2017 IEEE 9th International Memory Workshop (IMW), Monterey, CA, USA, 14–17 May 2017.
80. Wolf, T.; Neumann, P.; Nakamura, K.; Sumiya, H.; Isoya, J.; Wrachtrup, J. Subpicotesla Diamond Magnetometry. Phys. Rev. X 2015, 5, 041001.
81. Zhang, L.; Li, H.; Zhang, X.; Li, Q.; Zhu, G.; Liu, F.Q. A marine coating: Self-healing, stable release of Cu2+, anti-biofouling. Mar. Pollut. Bull. 2023, 195, 115524.
82. Zhang, J.; Meng, X.; Han, T.; Wei, X.; Wang, L.; Zhao, Y.; Fu, G.; Tian, N.; Wang, Q.; Qin, S.; et al. Optical magnetic field sensors based on nanodielectrics: From biomedicine to IoT-based energy internet. IET Nanodielectr. 2023, 6, 116–129.
83. Hoang, K.L.H.; Wolf, S.I.; Mombaur, K. Benchmarking Stability of Bipedal Locomotion Based on Individual Full Body Dynamics and Foot Placement Strategies-Application to Impaired and Unimpaired Walking. Front. Robot. AI 2018, 5, 374830.

| Research Focus | Key Finding | Methodology |
|---|---|---|
| Neural Architecture [29] | Mapped distinct neural populations in arms for chemo-tactile integration vs. proprioception. | Immunohistochemistry, neural tracing |
| Sucker Mechanics [31] | Quantified the pressure sensitivity range of individual suckers (0.5–120 kPa). | Micro-force sensors, high-speed video |
| Chemical Sensing [32] | Identified 15 unique protein receptors in sucker epithelium tuned to specific amino acids from prey. | Transcriptomics, electrophysiology |
| Distributed Control [33] | Demonstrated arm coordination and object retrieval without central brain input in de-brained specimens. | Behavioral experiments, lesion studies |
| Embodied Intelligence [20] | A soft robotic arm with local reflex loops successfully navigated a maze to find a chemical target. | Robotics validation, PID control |
| Sensor Fabrication [34] | Developed a flexible, multimodal “e-sucker” capable of simultaneous tactile and pH sensing. | Nanomaterial synthesis, characterization |
| Information Filtering [35] | <70% of raw sensory data from suckers is processed locally; only high-value data is transmitted centrally. | Neural recording, computational modeling |
| Motor Program Encoding [36] | Found that motor programs for complex gestures like “twist and pull” are encoded within arm ganglia. | Electrostimulation, kinematic analysis |
| Texture Discrimination [37] | Arms can discriminate textures with sub-millimeter features using dynamic sucker motion. | Behavioral assays, material science |
| Grip Force Modulation [38] | Grip force is automatically adjusted based on chemical detection of prey struggle indicators. | Force plate measurement, HPLC |
| Neural Simulation [39] | Created a computational model of the arm’s nervous system that successfully replicates grasping reflexes. | Spiking neural network (SNN) simulation |
| Material Compliance [40] | Showed that the softness of arm tissue is critical for conforming to objects and enhancing tactile feedback. | Finite Element Analysis (FEA), mechanical testing |
| Cross-Modal Learning [41] | Octopuses can learn to associate a specific texture with a food reward using tactile sensing alone. | Operant conditioning experiments |
| Energy Efficiency [42] | Measured the extremely low power consumption of peripheral neural processing in arms (<5 mW). | Calorimetry, electrophysiology |
| Damage Response [43] | Arms exhibit immediate localized gait adaptation to compensate for sucker damage or loss. | Behavioral observation, lesion studies |
| Closed-Loop Control [44] | Implemented a neuromorphic chip to process tactile data and control a gripper in under 10 ms. | Neuromorphic engineering, robotics |
| 3D Shape Recognition [45] | Arms can reconstruct the 3D shape of hidden objects through targeted exploratory grasping motions. | Kinematic tracking, machine learning |
| Chemical Communication [46] | Preliminary evidence suggests suckers may also detect chemical signals from other octopuses. | Mass spectrometry, behavioral ecology |
| Hydrodynamic Sensing [47] | Suckers are sensitive to minute hydrostatic pressure changes, aiding in prey detection. | Particle Image Velocimetry (PIV), sensor design |
| Synergy with Vision [48] | Detailed how central brain fuses ambiguous visual data with definitive chemotactic arm data for decision-making. | Neural recording, behavioral tracking |

| Biological Model | Sensing Principle | Engineering Analogue | Technology Readiness Level (TRL) | Key Advantages | Major Challenges |
|---|---|---|---|---|---|
| Sea Turtle (Magnetoreception) [11,12,21,22] | Quantum-assisted radical pair mechanism in cryptochrome proteins sensing Earth’s magnetic field vector. | Nitrogen-Vacancy (NV) center magnetometers in diamond. Solid-state quantum sensors initialized and read with lasers and microwaves. | TRL 4–5 (Lab validation in relevant environment) | Absolute, drift-free measurement; high sensitivity; robust to pressure/temperature; provides both intensity and direction. | High power consumption for laser/microwave systems; miniaturization of peripheral electronics; sensitivity to vibrational noise. |
| Octopus (Touch-Taste) [26,28,30,31,32,33,34,35,36,37,38,43,44,45] | Distributed mechano- and chemoreceptors in suckers enabling localized “peripheral intelligence” and reflexive control. | Soft, multimodal E-skins using conductive polymers, liquid metals, and hydrogels, with tactile and chemical sensing physically integrated on the same hardware alongside embedded processing. | TRL 3–4 (Proof-of-concept & lab validation) | Enables complex manipulation in unstructured environments; reduces central processing load via embodied intelligence; damage-resistant. | Integrating chemical and tactile sensing without cross-talk; sealing sensitive chemicals in aqueous environments; achieving high spatial resolution at low cost. |
| Jellyfish/Fish (Hydrodynamic Flow) [15,47,49,50,53] | Hair cells in lateral line or rhopalia detecting flow velocity and pressure gradients for passive obstacle detection and rheotaxis. | MEMS or polymer-based Artificial Hair Cell (AHC) sensors arranged in arrays to form an artificial lateral line. | TRL 4–6 (Lab to early prototype testing in water) | Ultra-low power consumption (µW–mW range); always-on passive sensing; detects both living and static obstacles. | Susceptibility to biofouling; signal interpretation in highly turbulent or noisy flows; calibration and drift over long deployments. |
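
To connect the always-on flow sensors in the table above to an event-based processor, a sampled signal has to be turned into sparse events. The sketch below shows one common option, send-on-delta (threshold-crossing) encoding. It is an illustrative assumption, not the encoding described by the source; the function name `delta_encode`, the sample values, and the threshold are all hypothetical.

```python
def delta_encode(samples, threshold):
    """Send-on-delta encoder for a sampled sensor signal.

    Emits (sample_index, polarity) events whenever the signal has moved
    by at least `threshold` away from the level of the last event.
    polarity is +1 for an increase and -1 for a decrease.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    events = []
    if not samples:
        return events
    reference = samples[0]  # level at which the last event was emitted
    for index, value in enumerate(samples[1:], start=1):
        # A large jump produces several events of the same polarity.
        while value - reference >= threshold:
            reference += threshold
            events.append((index, +1))
        while reference - value >= threshold:
            reference -= threshold
            events.append((index, -1))
    return events


if __name__ == "__main__":
    # Hypothetical flow-velocity samples in arbitrary integer units.
    flow = [0, 0, 5, 5, 5, 0]
    print(delta_encode(flow, threshold=2))
```

A steady flow produces no events at all, so the downstream processing load scales with how much the environment changes rather than with the sampling rate.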

| Algorithm Type | Key Features | Advantages | Limitations | Power Consumption |
|---|---|---|---|---|
| EKF-SLAM | Probabilistic, Gaussian assumptions | Mature technology, reliable in clear waters | High computational load, sensitive to sensor noise | 50–100 W |
| Visual SLAM | Feature-based, camera-centric | High resolution in clear water | Fails in turbid conditions, high processing load | 30–80 W |
| Proposed Bio-inspired SNN | Event-driven, adaptive fusion | Robust to sensor failure, low power | Requires specialized hardware | 5–20 W |
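
The power ranges in the table above can be turned into a rough endurance comparison with simple arithmetic: hours of operation equal the energy budget divided by the power draw. The sketch below does this for a hypothetical 1000 Wh budget reserved for navigation processing. That budget, and the names `NAV_POWER_W`, `endurance_hours`, and `endurance_range`, are assumptions for illustration; propulsion, sensors, and other loads are ignored.

```python
# Power ranges (watts) copied from the comparison table.
NAV_POWER_W = {
    "EKF-SLAM": (50, 100),
    "Visual SLAM": (30, 80),
    "Proposed Bio-inspired SNN": (5, 20),
}


def endurance_hours(battery_wh, power_w):
    """Hours a given energy budget lasts at a constant power draw."""
    if power_w <= 0:
        raise ValueError("power_w must be positive")
    return battery_wh / power_w


def endurance_range(battery_wh, power_range_w):
    """Return (shortest, longest) endurance for a (min_w, max_w) power range."""
    low_w, high_w = power_range_w
    return endurance_hours(battery_wh, high_w), endurance_hours(battery_wh, low_w)


if __name__ == "__main__":
    budget_wh = 1000.0  # hypothetical energy budget, not from the source
    for name, power_range in NAV_POWER_W.items():
        shortest, longest = endurance_range(budget_wh, power_range)
        print(f"{name}: {shortest:.1f} to {longest:.1f} h")
```

Under this assumed budget, the 5–20 W range corresponds to 50–200 h of navigation processing, against 10–20 h for the 50–100 W range. Whether that advantage carries over to total mission time depends on loads the table does not cover.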

| Challenge Category | Specific Challenges | Future Research Directions |
|---|---|---|
| Sensor Integration & Fusion | Disparate data types (vector, event-based, continuous); Temporal alignment of multi-scale data; Physical integration on AUVs (EM interference, wiring, sensor placement) | Develop hierarchical SNN architectures for multi-time-scale fusion; Dynamic, context-aware fusion models; Optimize sensor placement and packaging to minimize interference |
| Neuromorphic Computing Scalability & Deployment | Limited neuron/synapse count on single chips; Sensitivity to environmental factors (pressure, temperature); Immature SNN training methods for navigation tasks | Research multi-chip neuromorphic systems with event-based communication; Develop robust online learning algorithms (e.g., STDP-based reinforcement learning); Harden hardware for harsh underwater conditions |
| Long-Term Reliability & Robustness | Biofouling of flow sensors; Degradation of soft e-skins in seawater; Stability of quantum magnetometer systems under vibration | Integrate anti-fouling coatings without compromising sensitivity; Develop ruggedized, environmentally sealed sensor packages; Advance from TRL 3–5 to TRL 6–7 for real-world deployment |
| Validation & Benchmarking | Lack of real-world testing in unpredictable conditions; No standardized metrics for bio-inspired systems; Simulations and tank tests not representative of open-water challenges | Establish standardized metrics (energy efficiency, latency, fault tolerance); Conduct long-duration field trials in progressively challenging environments; Develop protocols for graceful degradation and adaptive capability assessment |
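
Graceful degradation under sensor failure, listed above as something to benchmark, can be illustrated with a very small fusion rule. The sketch below combines scalar estimates by inverse-variance weighting and simply skips any sensor that reports nothing. It is a conventional stand-in chosen for illustration, not the SNN-based fusion proposed in the paper; the function name `fuse_estimates`, the sensor names, and the numbers are hypothetical.

```python
def fuse_estimates(readings):
    """Inverse-variance fusion of scalar estimates with sensor dropout.

    readings: dict mapping sensor name -> (value, variance), or None when
              that sensor has failed or produced no measurement.
    Returns (fused_value, fused_variance), or None if no sensor is usable.
    """
    valid = [r for r in readings.values() if r is not None]
    if not valid:
        return None
    for _, variance in valid:
        if variance <= 0:
            raise ValueError("variance must be positive")
    weights = [1.0 / variance for _, variance in valid]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, valid)) / total_weight
    # The fused variance shrinks as more independent sensors contribute.
    return fused_value, 1.0 / total_weight


if __name__ == "__main__":
    # Hypothetical position estimates (metres) along one axis.
    all_ok = {"magnetic": (10.0, 1.0), "flow": (20.0, 1.0)}
    flow_lost = {"magnetic": (10.0, 1.0), "flow": None}
    print(fuse_estimates(all_ok))
    print(fuse_estimates(flow_lost))
```

When one sensor drops out, the estimate falls back to the remaining source with a larger variance instead of failing outright. A fault-tolerance metric of the kind the table calls for could track how that variance grows as sensors are removed.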

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sheikder, C.; Zhang, W.; Chen, X.; Li, F.; Liu, Y.; Zuo, Z.; He, X.; Tan, X. Marine-Inspired Multimodal Sensor Fusion and Neuromorphic Processing for Autonomous Navigation in Unstructured Subaquatic Environments. Sensors 2025, 25, 6627. https://doi.org/10.3390/s25216627
