This section introduces the COCOWEARS Framework, a novel approach for the efficient and accelerated development of next-generation wearable devices.

The importance of a dedicated framework for wearable system development lies in its ability to optimize design efficiency across multiple crucial aspects:

2.1. The Proposed Framework

In COCOWEARS, PBD inspired the definition of constraints, adopting a top-down approach to guide platform selection based on factors such as speed, power dissipation, and hardware availability. Meanwhile, SPINE influenced the architecture of software components and hardware accelerators, ensuring their reusability and adaptability across different implementations. Unlike purely software-oriented frameworks such as SPINE, COCOWEARS must deal with the inherent complexity of heterogeneous systems, i.e., supporting both software routines and hardware accelerators, each with different execution paradigms, resource constraints, latency profiles, and communication fabrics. For this reason, a major part of our effort has been devoted to modeling and conceptualizing an architecture and interface abstraction capable of working seamlessly with both paradigms.

The COCOWEARS Framework is designed to optimize the development of wearable systems by systematically selecting the most suitable platform. The platform selection process is based on high-level constraints defined by the developer, who specifies requirements such as computation speed, delay, occupied area (memory footprint for software components and chip area for FPGA implementations), power consumption, and the tasks to be performed (e.g., machine learning classification, signal filtering, or data compression). The developer does not need prior knowledge of the optimal execution platform, as COCOWEARS aims to autonomously determine the best deployment based on the application's constraints and operations. While full automation is the long-term goal, the current focus is on defining the methodology and architecture.

Central to this approach are high-level interfaces that abstract communication with both hardware accelerators and software components. These components must be pre-built, characterized, and made available in a repository to enable future automated platform selection and seamless task migration with minimal developer intervention.

2.1.1. Repository-Driven Platform Selection

At its core, COCOWEARS relies on a structured repository of pre-implemented hardware and software components to make informed platform selection decisions. This repository is conceptually divided into two sets:

Hardware Components Set—Contains pre-implemented modules designed for FPGA-based acceleration, such as signal filtering blocks, matrix operations, machine learning classifiers (e.g., KNN), or control units.

Software Components Set—Includes implementations that run on CPUs/MCUs, handling tasks such as digital filtering (e.g., IIR filters), basic classification algorithms, and general-purpose processing.

Ideally, for each operation, the repository should contain both hardware and software implementations, ensuring a one-to-one correspondence between the two sets. This allows COCOWEARS to analyze existing components, compare their performance metrics (e.g., latency, resource usage, power consumption), and determine whether a task should be implemented in hardware or software.

However, if only one implementation instance (hardware or software) exists, the framework will work with the available option. If a hardware implementation is missing, the framework suggests either using the software alternative or guiding the developer in implementing a hardware version, following structured design guidelines for reusability. If a software implementation is absent, the developer may need to rely on an FPGA-based solution or create a software alternative within the platform’s constraints. By structured design guidelines, we mean that every module—whether hardware or software—must interact with the rest of the system through a common, well-defined interface. In practice, this means that a hardware accelerator and its software counterpart expose identical input/output contracts, data formats, handshaking semantics, and control signals. Because both variants “speak the same language” at the architectural boundary, they can be swapped or replaced without altering the overarching system flow, thus preserving consistency and enabling seamless fallback or hybrid execution.
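The comparison and fallback logic described above can be sketched as follows. This is a minimal illustration, not the framework's actual selection engine: the record fields (`latencyUs`, `powerMw`), the `Constraints` structure, and the rule "lowest power among the variants that meet the constraints" are all hypothetical assumptions chosen for clarity.

```cpp
#include <cassert>
#include <optional>
#include <vector>

// Hypothetical characterization record for one repository entry
// (field names and units are illustrative assumptions).
enum class Target { Software, Hardware };

struct Implementation {
    Target target;
    double latencyUs;  // measured latency per invocation, in microseconds
    double powerMw;    // average power while active, in milliwatts
};

struct Constraints {
    double maxLatencyUs;
    double maxPowerMw;
};

struct Selection {
    std::optional<Implementation> choice;  // empty if the repository has no variant
    bool meetsConstraints;                 // false when falling back to what exists
};

// Picks, among the variants of one operation, the admissible one with the
// lowest power. If none satisfies the constraints (e.g., only a slow software
// variant exists), it falls back to the first available variant and flags it,
// leaving the developer to decide whether to build the missing counterpart.
Selection selectImplementation(const std::vector<Implementation>& variants,
                               const Constraints& c) {
    assert(c.maxLatencyUs > 0.0 && c.maxPowerMw > 0.0);
    std::optional<Implementation> best;
    for (const Implementation& v : variants) {
        const bool admissible = v.latencyUs <= c.maxLatencyUs && v.powerMw <= c.maxPowerMw;
        if (admissible && (!best || v.powerMw < best->powerMw)) best = v;
    }
    if (best) return {best, true};
    if (!variants.empty()) return {variants.front(), false};
    return {std::nullopt, false};
}
```

A real selection step would weigh more metrics (area, memory footprint) and could use a different cost function; the point here is only that a one-to-one hardware/software pairing turns selection into a comparison, while a missing counterpart degrades gracefully to "use what exists and flag it".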

After this evaluation process, COCOWEARS suggests how to interconnect the selected components, ensuring an optimized workflow in which software does not introduce bottlenecks that limit hardware efficiency. This structured approach aims to ensure seamless integration, high performance, and energy-efficient execution of wearable systems.

At the end of the selection process, COCOWEARS provides the developer with recommendations on the most suitable approach for implementing the wearable system, choosing from three alternatives:

Software-Only Solution: The CPU is responsible for both data management and processing, as no hardware accelerators are present. This solution prioritizes flexibility and ease of development, making it ideal for scenarios where high computational power is not required.

Hardware-Only Solution: The CPU serves solely as a coordinator, overseeing data acquisition, task distribution, and system control, while all computational workloads are executed on the FPGA hardware accelerators. This approach optimizes speed and latency, making it suitable for applications requiring significant processing power. However, due to the complexity of FPGA development, this solution may not always be accessible to all developers.

Hybrid Approach: The CPU acts as a coordinator and also performs processing, working alongside FPGA-based accelerators to balance computational efficiency, energy consumption, and scalability. By leveraging both architectures, this model ensures optimized execution performance while maintaining ease of development, making it a strong compromise between the software-only and hardware-only solutions.
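The three alternatives can be read as a property of where each processing task ends up. The sketch below, under the simplifying assumption that every processing task is placed either on the CPU or on the FPGA (the `Placement` and `Solution` names are hypothetical), derives the overall solution type from the per-task placements.

```cpp
#include <cassert>
#include <vector>

enum class Placement { Cpu, Fpga };
enum class Solution { SoftwareOnly, HardwareOnly, Hybrid };

// Derives the overall solution from per-task placements. In the
// hardware-only case the CPU still coordinates, but runs no processing task.
Solution classifySolution(const std::vector<Placement>& tasks) {
    assert(!tasks.empty());
    bool anyCpu = false;
    bool anyFpga = false;
    for (Placement p : tasks) {
        if (p == Placement::Cpu) anyCpu = true;
        else anyFpga = true;
    }
    if (anyCpu && anyFpga) return Solution::Hybrid;
    return anyFpga ? Solution::HardwareOnly : Solution::SoftwareOnly;
}
```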

A fundamental principle of the COCOWEARS Framework—beyond guiding the developer in platform selection—is its emphasis on code and component reusability. By leveraging a structured repository of pre-implemented hardware and software components, developers can significantly simplify the development process, making it more adaptable to new requirements while maintaining efficiency. This approach reduces time to market, as developers can reuse validated and characterized components rather than designing them from scratch. Additionally, it minimizes potential errors, ensuring that previously tested modules contribute to greater system reliability. Furthermore, the flexibility and scalability of the framework allow developers to build more complex and efficient systems, while maintaining the ability to extend functionality as needed. Whether integrating new components or refining existing ones, COCOWEARS ensures a structured, reusable, and optimized development cycle, making wearable system design more accessible and effective.

All materials developed so far, including the hardware and software components used to validate and test the proposed concepts, are released as open source and can be accessed from the official project repository (https://github.com/FPorreca/COCOWEARS (accessed on 27 October 2025)).

2.1.2. Design of Reusable and Customizable Components

To achieve component reusability, each module within the COCOWEARS Framework must be structured following specific design rules. These standardized guidelines ensure that components, whether hardware or software, can be seamlessly integrated, swapped, or extended without affecting overall system functionality.

By adhering to these rules, developers gain the ability to achieve the following:

Interchange hardware and software implementations of the same task, enabling flexibility in platform selection. For example, a KNN classifier or an IIR filter can be implemented either in software (CPU) or hardware (FPGA) depending on performance requirements.

Expand functionality by designing new components or modifying existing ones while ensuring compatibility. This structured approach allows wearable systems to evolve without requiring extensive rework.

Guarantee interoperability among all components, maintaining smooth communication across sensor nodes, processing units, and hardware accelerators regardless of their implementation type.

Similarly to what has been developed in [

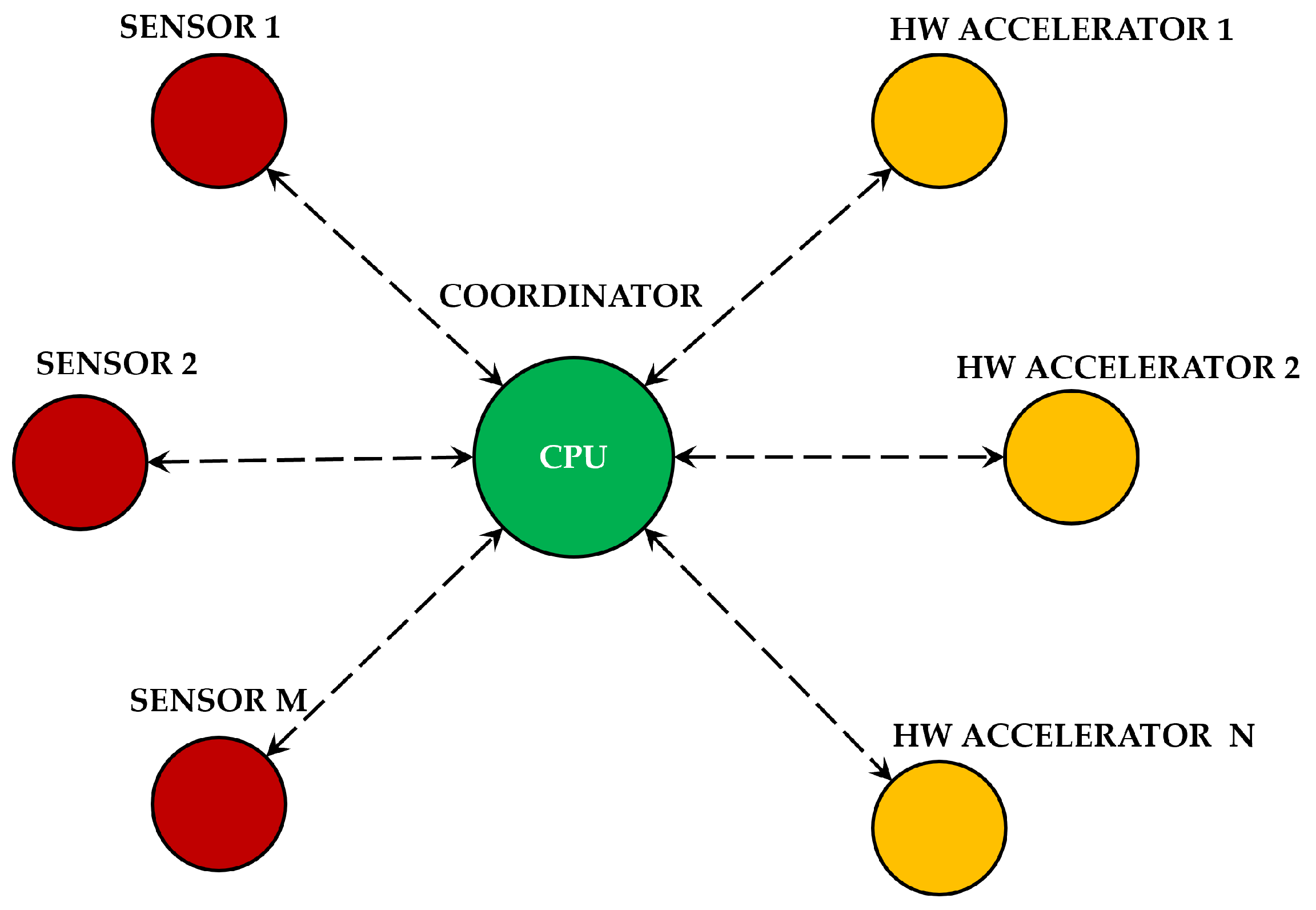

3], the architecture of the COCOWEARS framework follows a star topology (see

Figure 1), with three main components: the coordinator, the sensor nodes, and the hardware accelerator nodes.

The coordinator, represented by the CPU of the SoC, plays a central role in the system’s operation. It manages the network and collects data from the sensor nodes using serial protocols. When data processing requires high computational power or low latency, the coordinator offloads computations to a hardware accelerator node implemented in the FPGA. Otherwise, it directly processes the data using software components.

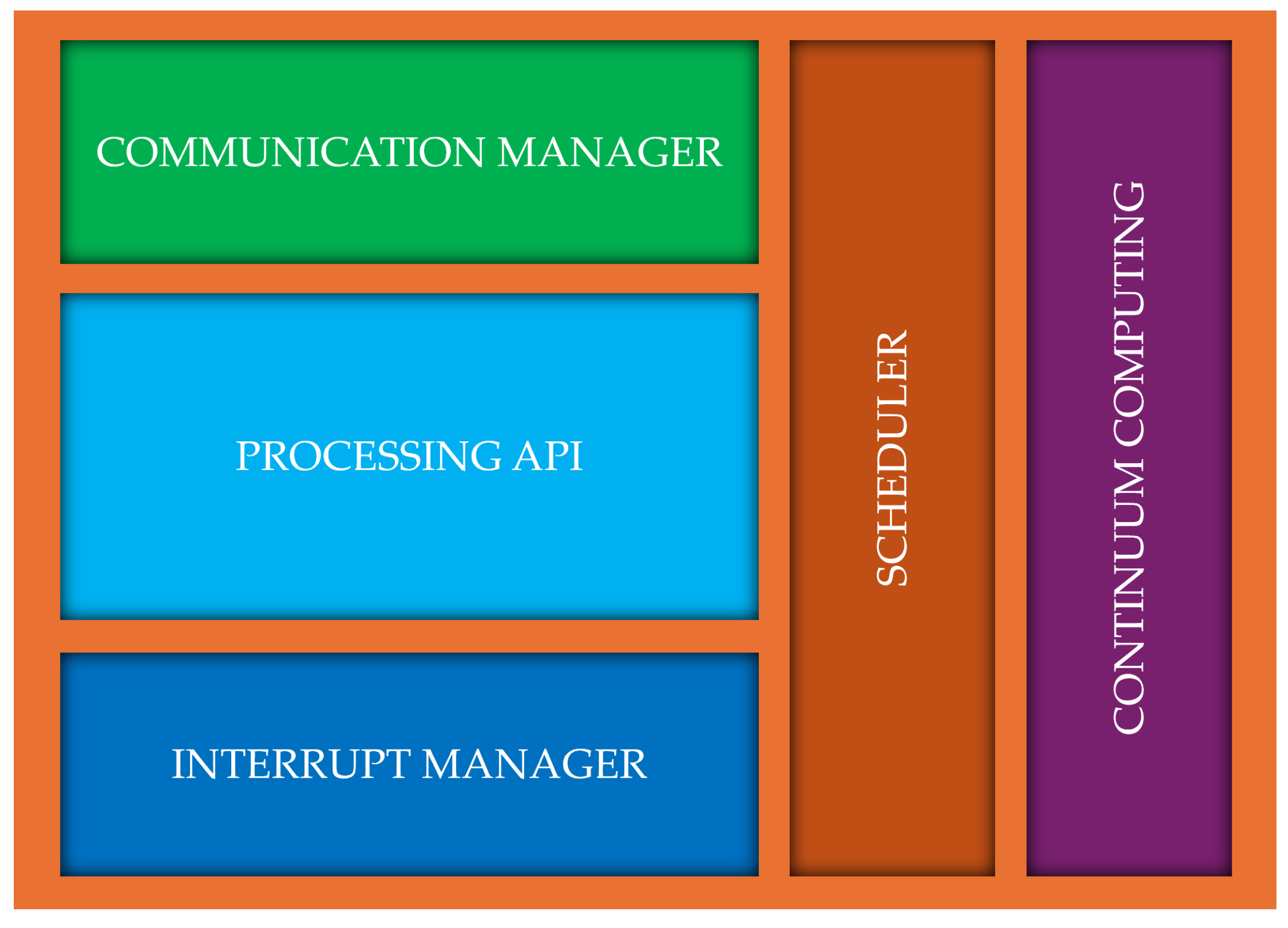

Since the coordinator executes software components, it ensures proper integration between hardware and software, adapting execution based on the framework’s selection. If hardware accelerators are required, the coordinator is also responsible for data exchange between the CPU and the FPGA, optimizing the communication flow. The coordinator handles external communication, acting as a gateway in scenarios where cloud processing is essential or when integrating into a computing continuum architecture. This ensures that wearable systems remain scalable and interconnected, maintaining flexibility across local and distributed processing environments. The functional architecture of the COCOWEARS node is shown in more detail in Figure 2, and the functional architecture of the COCOWEARS coordinator is shown in Figure 3.

By structuring the wearable system according to the components of the framework, the development process becomes significantly easier and the system much more scalable. This approach enables the creation of a versatile library containing hardware and software components, which can be utilized as needed based on specific requirements. To ensure seamless interchangeability, both hardware accelerators and software modules must expose common APIs. For instance, in the case of classifiers such as kNN, the API is as follows:

```cpp
int classify(Classifier c, int* instanceVector, int iSize,
             int* trainingSet, int tSetSize);
```

Its use would look like the following instruction, where the declarations of the parameters passed to the function are omitted for brevity:

```cpp
// The result variable is not named "class", which is a reserved word in C++.
int predictedClass = classify(KNN, instanceV, INST_SIZE,
                              trainingS, TRAINING_SIZE);
```

Specifically:

Classifier: Contains attributes related to the classifier to use, including the type (e.g., kNN, decision tree, …) and relevant parameters (e.g., the number of nearest neighbors, in the case of kNN).

instanceVector: A pointer to the input vector containing the new instance to be classified.

iSize: The size of instanceVector.

trainingSet: A pointer to the training data required by the classifier model.

tSetSize: The size of the training set.
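To make the API concrete, the following is a possible software-side kNN implementation behind the same `classify` signature. It is a sketch, not the repository's code: the `Classifier` fields, the `ClassifierType` enum, and the training-set layout (`tSetSize` rows, each holding `iSize` feature values followed by one integer label) are assumptions. The distance is the Manhattan metric, which the Weka simulations reported later in this section identified as the most suitable one.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdlib>
#include <map>
#include <utility>
#include <vector>

// Hypothetical classifier descriptor; only kNN is modelled here.
enum class ClassifierType { KNN };

struct Classifier {
    ClassifierType type;
    int k;  // number of nearest neighbours
};

// Assumed layout: tSetSize rows, each with iSize features followed by a label.
int classify(Classifier c, int* instanceVector, int iSize,
             int* trainingSet, int tSetSize) {
    assert(c.type == ClassifierType::KNN && c.k > 0 && c.k <= tSetSize);
    std::vector<std::pair<long, int>> dist;  // (Manhattan distance, label)
    dist.reserve(tSetSize);
    for (int r = 0; r < tSetSize; ++r) {
        const int* row = trainingSet + r * (iSize + 1);
        long d = 0;
        for (int f = 0; f < iSize; ++f)
            d += std::labs(static_cast<long>(row[f]) - instanceVector[f]);
        dist.emplace_back(d, row[iSize]);
    }
    // Only the k closest rows need to be ordered.
    std::partial_sort(dist.begin(), dist.begin() + c.k, dist.end());
    std::map<int, int> votes;
    for (int i = 0; i < c.k; ++i) ++votes[dist[i].second];
    // Majority vote; ties go to the smallest label (std::map iterates in order).
    int best = votes.begin()->first;
    int bestCount = 0;
    for (const auto& [label, count] : votes) {
        if (count > bestCount) { best = label; bestCount = count; }
    }
    return best;
}
```

Because the caller only sees `classify`, a hardware-backed version with the same signature could replace this function without touching the calling code.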

The input data and the returned result (i.e., the predicted class label) are consistent across both hardware and software implementations, ensuring uniformity. However, the source and handling of these data differ:

Hardware Implementation: Data are sent to the hardware accelerator via the ARM Advanced eXtensible Interface (AXI)-Lite protocol, and the result is extracted from the hardware accelerator’s register through the same protocol.

Software Implementation: The software routine processes the data locally and returns the result directly.

This consistent interface allows for the seamless replacement of hardware modules with software implementations and vice versa, without disrupting the overall system flow. The components of this library can be used by developers to build the system, allowing them to transparently utilize software functions or hardware accelerators. This means that developers can effectively employ hardware accelerators even without expertise in VHDL development.
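One way to express this interchangeability in the coordinator's C++ code is an abstract interface with one software and one hardware implementation. In the sketch below, the class names are hypothetical, and the register layout of the hardware variant (nine input words at offsets 0 to 8, the result at offset 9, mirroring the ten registers of the kNN accelerator) is an illustrative assumption rather than the actual memory map.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>

// Hypothetical common contract: both variants take the same inputs and
// return the same predicted class label.
class ClassifierBackend {
public:
    virtual ~ClassifierBackend() = default;
    virtual int classify(const int32_t* features, int n) = 0;
};

// Software variant: delegates to a local routine (e.g., a kNN function).
class SoftwareBackend : public ClassifierBackend {
public:
    using Routine = int (*)(const int32_t*, int);
    explicit SoftwareBackend(Routine r) : routine_(r) { assert(r != nullptr); }
    int classify(const int32_t* features, int n) override { return routine_(features, n); }
private:
    Routine routine_;
};

// Hardware variant: writes the features into AXI-Lite input registers and
// reads the label from the result register (assumed layout, see lead-in).
class HardwareBackend : public ClassifierBackend {
public:
    explicit HardwareBackend(volatile uint32_t* regs) : regs_(regs) {}
    int classify(const int32_t* features, int n) override {
        assert(n == kNumInputs);
        for (int i = 0; i < n; ++i) regs_[i] = static_cast<uint32_t>(features[i]);
        // A real driver must make sure the accelerator has finished before this
        // read; completion handling is omitted because it is not specified here.
        return static_cast<int>(regs_[kResultIndex]);
    }
private:
    static constexpr int kNumInputs = 9;
    static constexpr int kResultIndex = 9;
    volatile uint32_t* regs_;
};
```

Application code holds a `std::unique_ptr<ClassifierBackend>` and calls `classify`; which backend sits behind the pointer is decided once, from the framework's recommendation.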

2.1.3. The Coordinator

The coordinator is the core of the system and is always present. It runs a C++ application specifically optimized to ensure high performance and reliability, orchestrating both software execution and hardware acceleration management. This application is designed as a modular system, integrating various software components that handle distinct functionalities:

Communication Manager—A software routine responsible for handling wired and wireless network protocols, ensuring fast and secure communication with external sensors and hardware accelerators. The communication manager not only acquires the data from the sensors but is also in charge of configuring the sensors with the appropriate register settings when necessary.

Processing API—A collection of software routines essential for the application, including operations such as kNN classification, IIR filtering, and other processing tasks. These routines handle operations that tolerate slower execution: running them locally avoids the overhead of sending data to hardware accelerators, which would otherwise keep the CPU busy and slow down other operations.

Interrupt Manager—Manages interrupt signals originating from processing APIs (software routines) or hardware accelerators, ensuring timely response and efficient execution.

Scheduler—Organizes the execution of the various components based on the recommendations generated by the framework, ensuring efficient scheduling that prevents software routines from becoming a bottleneck for hardware accelerators and optimizes overall system performance. At the current stage, the proposed framework supports only a simple interrupt-based mechanism for scheduling software tasks, without assigning any specific priority. More complex and efficient scheduling mechanisms are planned for future work.

Continuum Computing Module—Acts as the gateway for external communications, managing connections to cloud computing resources or enabling distributed processing within a computing continuum architecture when necessary.

By integrating these components, the coordinator efficiently balances hardware acceleration and software execution, ensuring seamless interoperability between modules while optimizing latency, processing speed, and resource allocation.
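The current interrupt-based, priority-less scheduling can be pictured as follows: interrupt sources only enqueue work, and the main loop drains the queue in arrival order. This is a host-side model with hypothetical names; on a real target the queue would be a fixed-size, interrupt-safe ring buffer rather than a `std::deque` of `std::function` objects, which may allocate.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical model of priority-less, interrupt-driven dispatch.
class SimpleScheduler {
public:
    using Task = std::function<void()>;

    // Called from the (modelled) interrupt handler: it only records the work.
    void onInterrupt(Task t) { pending_.push_back(std::move(t)); }

    // Called from the main loop: runs every pending task in FIFO order,
    // with no notion of priority, and returns how many were executed.
    int runPending() {
        int executed = 0;
        while (!pending_.empty()) {
            Task t = std::move(pending_.front());
            pending_.pop_front();
            t();
            ++executed;
        }
        return executed;
    }

private:
    std::deque<Task> pending_;
};
```

A priority-aware scheduler, as planned for future work, would replace the FIFO with an ordered queue, leaving the interrupt-side `onInterrupt` call unchanged.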

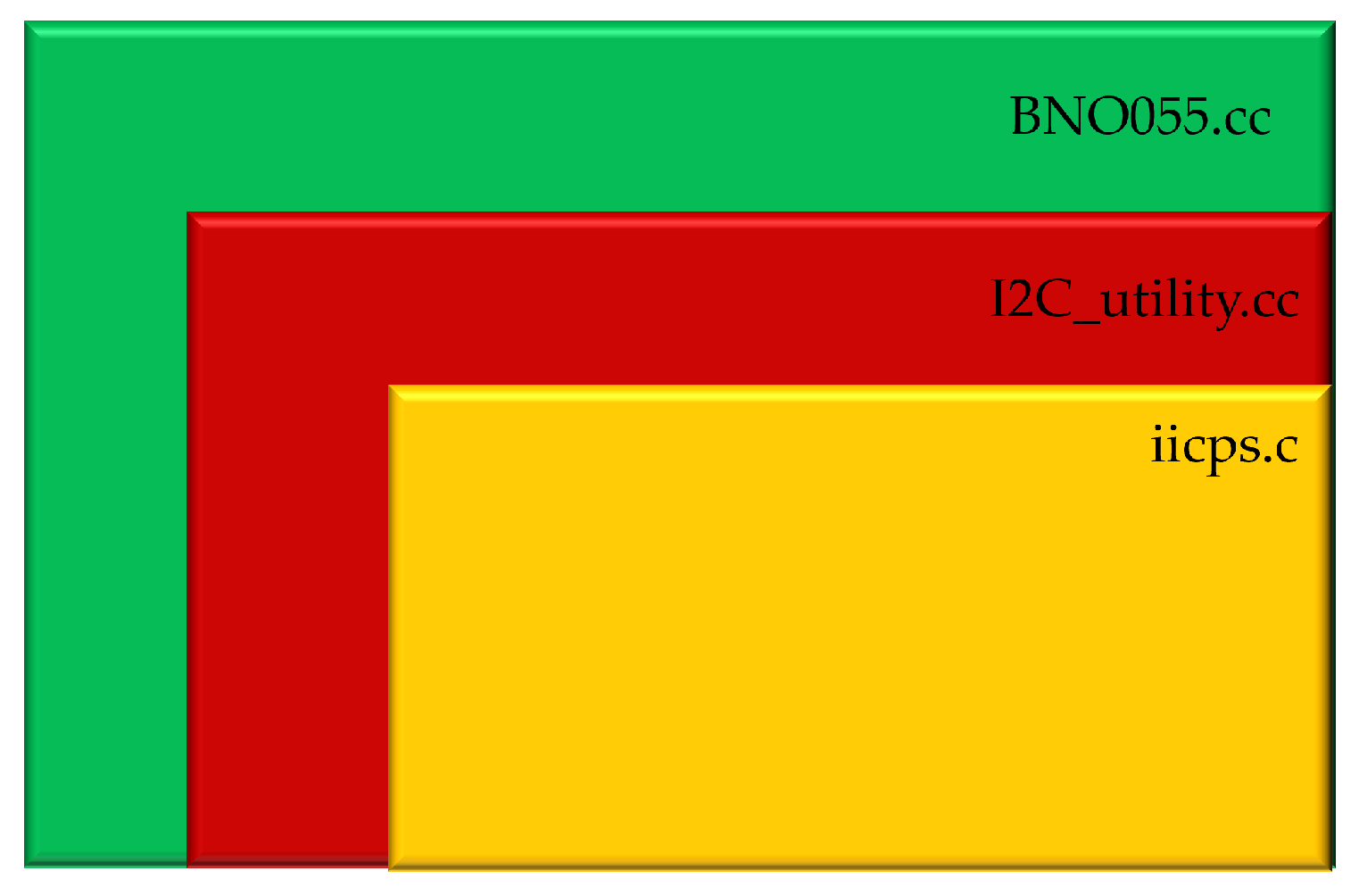

To enhance software reusability, we have developed, for the communication manager, a library for configuring and acquiring data from sensors that use the I²C serial protocol. The library is organized in three levels (see Figure 4): two levels are entirely reusable for any I²C sensor, and one level is sensor-specific. This approach reduces the designer’s customization effort, since only the latter level needs to be modified to adapt the system to the specific characteristics of a sensor featuring an I²C interface. For instance, this may involve adjusting the number of bytes to be transferred, which depends on the nature of the physical quantity measured by the sensor. It is worth noting that the I²C interface employs a standardized communication protocol, which is also commonly used in sensors implemented on flexible substrates, particularly those designed for wearable systems [15]. Note that Figure 4 illustrates only the modular design of the I²C interface in the current prototype, chosen because the BNO055 sensor uses I²C. However, the communication manager is a conceptual container, not a monolithic block: it hosts protocol-specific modules to support other standards (e.g., UART or SPI). The same layered architecture, separating the high-level, protocol-agnostic logic from low-level drivers, applies universally. Thus, replacing or adding support for, say, an SPI sensor (e.g., MAX6675) or a UART sensor (e.g., URM06) requires modifying only the low-level implementation, while preserving the common interfaces and keeping the rest of the system unchanged.
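The separation between protocol-agnostic logic and protocol-specific drivers can be sketched with a small abstract interface. All names here are hypothetical: `RegisterBus` stands for "whatever low-level driver is in use" (an I²C driver built on IICPS, an SPI driver, and so on), and `FakeBus` is a host-side stand-in that lets the high-level code be exercised without hardware.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Low-level driver contract (hypothetical): one implementation per protocol.
class RegisterBus {
public:
    virtual ~RegisterBus() = default;
    // Sends the register address, then reads len bytes back.
    virtual bool readRegisters(uint8_t reg, uint8_t* out, std::size_t len) = 0;
};

// High-level, protocol-agnostic logic: identical whether the bus is I2C or SPI.
class SensorReader {
public:
    explicit SensorReader(RegisterBus& bus) : bus_(bus) {}
    // Reads a little-endian signed 16-bit value starting at reg.
    bool readInt16(uint8_t reg, int16_t& value) {
        uint8_t raw[2];
        if (!bus_.readRegisters(reg, raw, 2)) return false;
        value = static_cast<int16_t>(raw[0] | (raw[1] << 8));
        return true;
    }
private:
    RegisterBus& bus_;
};

// Host-side stand-in for a real driver: serves reads from an in-memory register file.
class FakeBus : public RegisterBus {
public:
    explicit FakeBus(std::vector<uint8_t> mem) : mem_(std::move(mem)) {}
    bool readRegisters(uint8_t reg, uint8_t* out, std::size_t len) override {
        if (reg + len > mem_.size()) return false;
        for (std::size_t i = 0; i < len; ++i) out[i] = mem_[reg + i];
        return true;
    }
private:
    std::vector<uint8_t> mem_;
};
```

Supporting an SPI sensor would then mean writing another `RegisterBus` implementation, while `SensorReader` and everything above it stay unchanged.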

The first level consists of the Xilinx IICPS library, which provides all the APIs needed to use the built-in I²C controllers, interacting directly with the hardware, of the off-the-shelf heterogeneous SoC families suitable for implementing the proposed framework, such as the Zynq-7000 or Zynq UltraScale+.

The second level is a purpose-built library that can be reused for any project using the I²C protocol. Built on the functions of the IICPS library, it provides several helper methods that shield the developer from low-level technical details.

In particular, the main methods present in this library are the following:

Begin(void): Initializes the processor’s I²C controller and configures it according to the parameters defined by the constructor (I²C address, communication clock frequency).

Read(u8 *MsgPtr, s32 ByteCount): Reads the number of bytes specified by the ByteCount parameter. Driving the SDA and SCL lines to perform the read is delegated to the lower-level IICPS library.

Write(u8 *MsgPtr, s32 ByteCount): Writes the number of bytes specified by the ByteCount parameter. As for Read, driving the SDA and SCL lines is delegated to the lower-level IICPS library.

Write_then_read(u8 *write_buffer, size_t write_len, u8 *read_buffer, size_t read_len): Performs two successive operations, a write followed by a read, without interrupting the communication. This is the most useful and widely used method, since the upper-level library employs it whenever a sensor value needs to be obtained: to read a specific register of an I²C slave device, the address of the register is sent first, and then the contents of that register are read. The code of this method is reported in Appendix A.

The third and final level is tailored to the specific device with which one wishes to communicate via the I²C protocol, which makes it non-reusable. In this case, when developing a library for the BNO055 sensor, we list all the register addresses of this sensor in the header file. The methods in this library use this information, along with the methods provided by the lower-level library, to initialize, configure, and acquire data from the sensor with just a few lines of code. This approach has been widely adopted in microcontroller development, where its effectiveness in ensuring flexibility and modularity is well established. However, to the best of our knowledge, it has not yet been implemented on more complex platforms such as those used in this work. This makes our effort a pioneering demonstration of the framework’s scalability and applicability to sophisticated systems, such as wearable devices, highlighting its potential to bridge the gap between compact embedded systems and intricate, high-performance platforms.
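A sensor-specific third level on top of a `write_then_read`-style second level might look like the sketch below. The abstract `I2CDevice` class is an assumption introduced so the code can run on a host (the real second level is a concrete class over IICPS, whose code is in Appendix A), and `FakeBno` is a hypothetical stand-in for the sensor. The register addresses used (CHIP_ID at 0x00 reading 0xA0, accelerometer data starting at 0x08, default address 0x28) follow the Bosch BNO055 datasheet and are worth double-checking against the revision in use.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Level-2 style contract (abstract here only to allow host testing).
class I2CDevice {
public:
    virtual ~I2CDevice() = default;
    virtual bool write_then_read(const uint8_t* write_buffer, std::size_t write_len,
                                 uint8_t* read_buffer, std::size_t read_len) = 0;
};

// Level 3: a subset of the BNO055 register map, as it would appear in the header.
namespace bno055 {
constexpr uint8_t kAddress = 0x28;          // default 7-bit address
constexpr uint8_t kRegChipId = 0x00;
constexpr uint8_t kChipIdValue = 0xA0;
constexpr uint8_t kRegAccDataXLsb = 0x08;   // X, Y, Z follow as LSB/MSB pairs
}

class Bno055 {
public:
    explicit Bno055(I2CDevice& dev) : dev_(dev) {}
    // Register read = send the register address, then read len bytes.
    bool readRegister(uint8_t reg, uint8_t* out, std::size_t len) {
        return dev_.write_then_read(&reg, 1, out, len);
    }
    bool isPresent() {
        uint8_t id = 0;
        return readRegister(bno055::kRegChipId, &id, 1) && id == bno055::kChipIdValue;
    }
    // Raw accelerometer axes (X, Y, Z), little-endian.
    bool readAccelRaw(int16_t xyz[3]) {
        uint8_t raw[6];
        if (!readRegister(bno055::kRegAccDataXLsb, raw, 6)) return false;
        for (int i = 0; i < 3; ++i)
            xyz[i] = static_cast<int16_t>(raw[2 * i] | (raw[2 * i + 1] << 8));
        return true;
    }
private:
    I2CDevice& dev_;
};

// Host-side stand-in for the sensor: a flat register file.
class FakeBno : public I2CDevice {
public:
    uint8_t regs[0x80] = {};
    bool write_then_read(const uint8_t* w, std::size_t wl, uint8_t* r, std::size_t rl) override {
        if (wl != 1 || w[0] + rl > sizeof(regs)) return false;
        for (std::size_t i = 0; i < rl; ++i) r[i] = regs[w[0] + i];
        return true;
    }
};
```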

2.1.4. The Sensor Node

Sensor nodes are critical components in any sensor network, serving as the primary source of data collection. These nodes can vary widely in their design and functionality, depending on the specific application and the type of data they are meant to collect. Each sensor node consists of two main components: the interface and the sensor itself.

The interface is responsible for communication with the rest of the system and supports various protocols such as I²C, SPI, and UART, as well as wireless standards such as IEEE 802.15.4 [16] and Bluetooth. The appropriate protocol is selected based on factors such as data rate, power consumption, and communication range. The sensor interacts directly with the physical environment, measuring parameters such as temperature, humidity, pressure, light, and motion. Analog sensors require an ADC (Analog-to-Digital Converter) to convert signals into a digital format, while digital sensors provide direct digital output to the system.

Before data collection can begin, each sensor requires configuration, which involves adjusting specific register values to set parameters such as measurement range, sampling rate, and power mode. The communication manager, operating on the coordinator side, is responsible for transmitting these configuration commands to the sensor nodes. COCOWEARS is structured to manage communication entirely in software through the CPU. Once the sensor data reaches the CPU, it can either be processed directly or sent to hardware accelerators for more efficient computation. This interaction follows a standardized ARM Advanced eXtensible Interface (AXI)-Lite protocol, ensuring reusability across different components. Specifically:

The CPU transfers data to dedicated hardware registers within the FPGA via AXI Lite.

The accelerators read the data from these registers and execute processing tasks.

Once computations are completed, results are stored in new output registers, which the CPU can access through AXI Lite to retrieve the processed data.
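On the CPU side, the three steps above reduce to writes and reads of memory-mapped 32-bit registers. The sketch below is a hypothetical minimal wrapper: on the target, the base pointer would be the accelerator's address assigned in the hardware design, and the word-indexed layout is an illustrative assumption; on a host it can point at an ordinary array.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// CPU-side view of an AXI-Lite register bank, indexed in 32-bit words.
class AxiLiteRegs {
public:
    explicit AxiLiteRegs(volatile uint32_t* base) : base_(base) { assert(base != nullptr); }
    void write(std::size_t word, uint32_t value) { base_[word] = value; }
    uint32_t read(std::size_t word) const { return base_[word]; }
private:
    volatile uint32_t* base_;  // volatile: every access must really reach the bus
};

// Step 1: the CPU copies the inputs into consecutive input registers.
void sendInputs(AxiLiteRegs& regs, const uint32_t* in, std::size_t n, std::size_t firstWord) {
    for (std::size_t i = 0; i < n; ++i) regs.write(firstWord + i, in[i]);
}

// Step 3: once the accelerator has finished (step 2, in the FPGA fabric),
// the CPU reads the output registers back.
void readOutputs(const AxiLiteRegs& regs, uint32_t* out, std::size_t m, std::size_t firstWord) {
    for (std::size_t i = 0; i < m; ++i) out[i] = regs.read(firstWord + i);
}
```

How the CPU learns that step 2 has completed (a fixed wait, a status register, or an interrupt) is left open here, since it is not specified in the text.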

By employing this structured approach, COCOWEARS ensures a seamless integration between software-driven communication and hardware-accelerated processing, enabling efficient execution of tasks while maintaining flexibility and scalability.

2.1.5. The Hardware Accelerator Node

The hardware accelerators are developed in a hardware description language (HDL) and implemented on the FPGA chip (see next paragraph for more details). They are responsible for processing the data from the sensors when the CPU lacks the computational power or when the task has strict timing constraints. Additionally, offloading complex tasks from the CPU to the hardware accelerator reduces the overall power consumption of the system. This is particularly important in wearable applications, where battery life is a critical factor. A hardware accelerator is usually composed of three main components:

Processing unit: This component performs operations such as mathematical calculations, data encryption, signal processing, and more.

Memory: Hardware accelerators often include memory that allows them to store processed data or the partial results produced along the computation chain.

Communication Interfaces: To interact with the rest of the system, hardware accelerators include communication interfaces. These interfaces can use various protocols, such as the Advanced eXtensible Interface (AXI) [17], to ensure seamless data transfer between the accelerator and other components of the SoC.

These components are usually controlled by an FSM (Finite State Machine) that manages all the control signals, ensuring that the hardware accelerator operates efficiently and effectively.
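As an illustration of such a controller, here is a cycle-level behavioural model in C++ (not HDL) of a generic accelerator FSM. The states (Idle, Load, Compute, Done), the `start`/`ack` handshake, and the fixed compute length are hypothetical; an actual accelerator's FSM is defined by its VHDL design and may differ.

```cpp
#include <cassert>

// Behavioural model of a generic accelerator controller; one call to tick()
// corresponds to one clock edge.
enum class State { Idle, Load, Compute, Done };

class AcceleratorFsm {
public:
    explicit AcceleratorFsm(int computeCycles) : computeCycles_(computeCycles) {
        assert(computeCycles > 0);
    }
    // 'start' is sampled in Idle; 'ack' returns the FSM from Done to Idle.
    void tick(bool start, bool ack) {
        switch (state_) {
        case State::Idle:    if (start) state_ = State::Load; break;
        case State::Load:    counter_ = computeCycles_; state_ = State::Compute; break;
        case State::Compute: if (--counter_ == 0) state_ = State::Done; break;
        case State::Done:    if (ack) state_ = State::Idle; break;
        }
    }
    State state() const { return state_; }
    bool busy() const { return state_ == State::Load || state_ == State::Compute; }
private:
    int computeCycles_;
    int counter_ = 0;
    State state_ = State::Idle;
};
```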

Notably, our approach is compatible with the presence of multiple wearable devices. For instance, in the use case presented in the following section, both a localization system and a human activity recognition system were implemented on the same platform. We would like to emphasize that the HW/SW co-design approach allows the implementation, within the programmable logic section, of hardware architectures that can operate in parallel and concurrently, each performing its own data processing independently, without the need to schedule different processes on the same hardware at separate time instants. The resulting outputs can then be jointly processed within an information fusion paradigm to provide a more structured and comprehensive understanding.

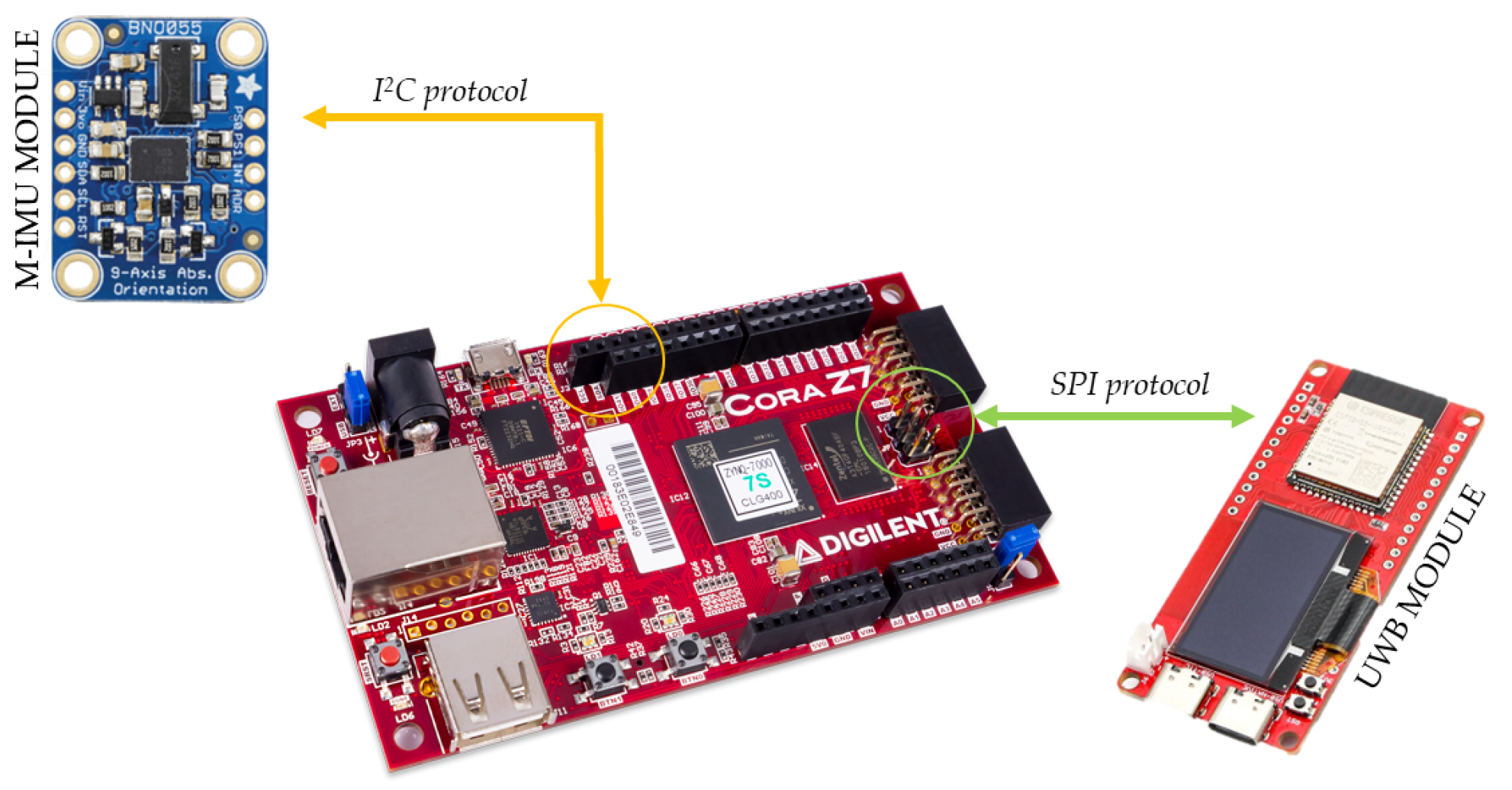

2.2. The Use Case

Serving as the bridge between COCOWEARS’ conceptual architecture and its real-world validation, we selected the Digilent Cora Z7-07S development board as our reference platform. This low-cost, off-the-shelf solution, built around the Xilinx Zynq-7000 APSoC, directly reflects the framework’s abstractions. It integrates a general-purpose processor, or Processing System (PS), and a Programmable Logic (PL) fabric within a single chip: the on-chip ARM Cortex-A9 core plays the role of the coordinator, running the C++ orchestrator and handling all software routines, whereas the programmable FPGA fabric hosts the hardware accelerators. At its heart lies the XC7Z007S-1CLG400 SoC, combining a single-core 667 MHz Cortex-A9 processor with reconfigurable logic for true HW/SW co-design [18]. Thanks to its extensive GPIO array and dedicated I²C, SPI, and UART connectors, the Cora Z7-07S accepts a variety of analog and digital sensors, which makes it well suited to demonstrating the reusability of COCOWEARS in a wearable context. Expansion headers support off-the-shelf Wi-Fi and Bluetooth Low Energy (BLE) radios, closing the loop on COCOWEARS’ computing continuum vision by enabling cloud offloading when on-device resources are stretched.

The chosen use case concerns a system for real-time location monitoring and activity recognition of people within an indoor environment. Localization was performed using ultra-wideband (UWB) technology and trilateration algorithms, while activity recognition was based on data from a magneto-inertial sensor processed using a kNN-type machine learning algorithm. A wearable prototype was developed to implement this system, designed to be worn like a smartwatch on the dominant wrist. To minimize intrusiveness, a compact 3D-printed casing was created to house the device. The prototype includes a BNO055 smart sensor, which integrates an accelerometer, gyroscope, magnetometer, and an embedded microcontroller running sensor fusion software. For localization, ESP32 MaUWB boards equipped with UWB transceivers and wireless connectivity (Wi-Fi/Bluetooth) were used to collect and transmit positional data by communicating with fixed anchors in the environment. The sensors are housed within the case and connected externally to the development board via a flexible cable. The case, sealed by its polycarbonate cover, is shown in Figure 5; the sensors and the display showing the university logo are visible through the cover.

The ESP32 MaUWB board for localization and the BNO055 magneto-inertial sensor constitute the sensor node, according to the COCOWEARS architecture shown in Figure 1. The BNO055 sensor integrates a hardware I²C communication interface, so the communication manager of the coordinator can interact directly with the sensor. The UWB chip for localization is connected to an ESP32 microcontroller; therefore, the communication manager of the coordinator interacts with the ESP32 microcontroller, which in turn manages the UWB chip. The communication between the coordinator’s communication manager (master) and the microcontroller (slave) takes place over the SPI protocol. On the ESP32 side, the SPI slave interface was implemented using the Espressif SPI Slave Driver software API. The wiring connections between the sensors and the development board follow the schematic shown in Figure 6. This setup uses the dedicated physical pins of the Cora Z7-07S for the serial communication protocols. By following the schematic, each sensor is correctly interfaced with the development board, ensuring reliable data transmission and optimal performance of the system.

The sensor node connected to the board is managed by the coordinator, i.e., the Arm core of the SoC. The Communication Manager component of the coordinator directly interacts with the hardware of the main development board and exchanges data with the sensors using serial protocols. Specifically, for the chosen use case, the communication manager handles the communication with the magneto-inertial sensor through its I²C interface and with the localization sensor through the SPI protocol.

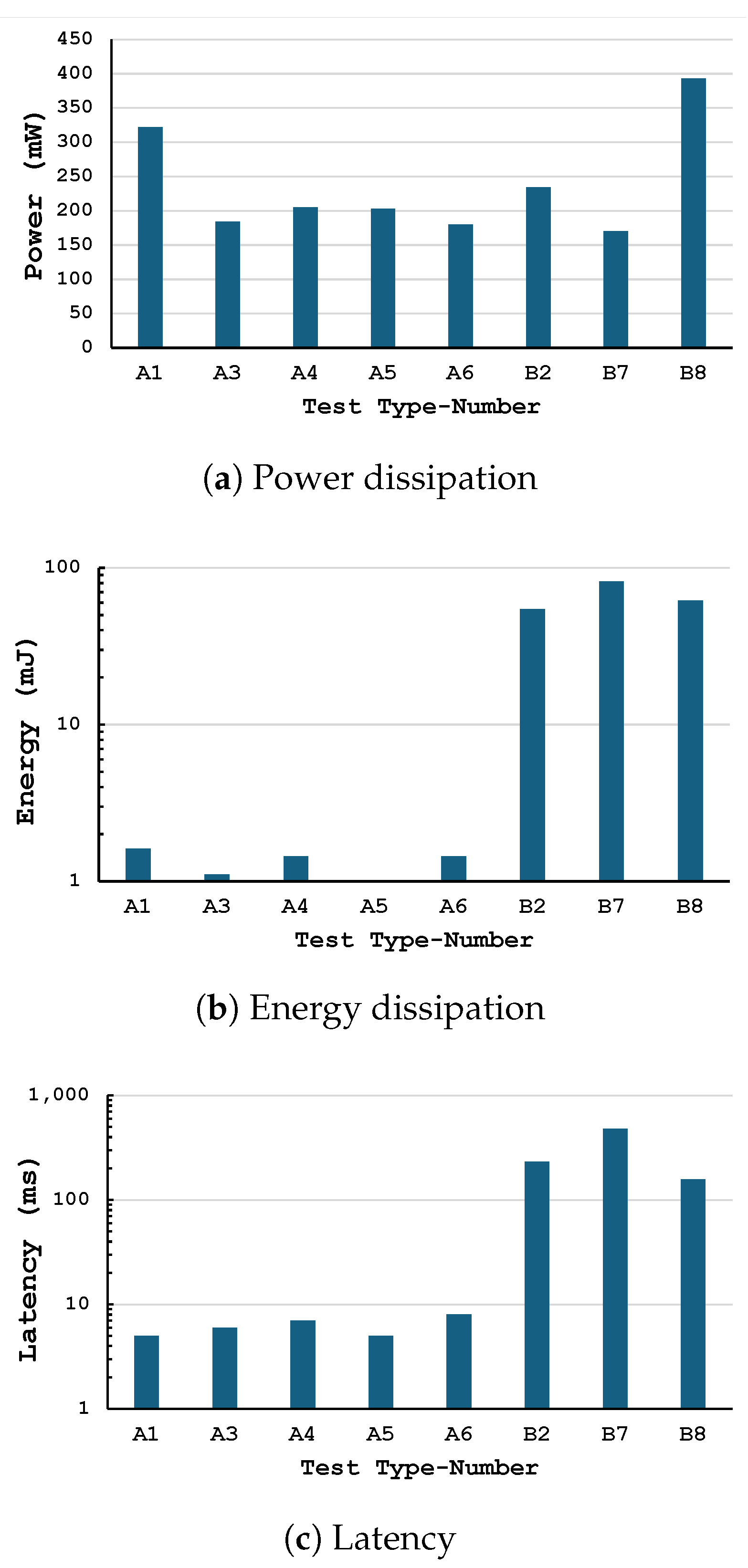

Once data are acquired, they must be processed. A priori, it is not possible to determine whether it is more convenient to process the data by hardware acceleration on the FPGA or by a software routine on the CPU. Our approach is to develop both solutions and adopt the one that is most convenient in terms of resource consumption (battery power) and performance (execution speed). The metrics that characterize each solution were extracted through software simulations. Interchangeability between a software and a hardware solution is ensured through the interface, which must be the same for both solutions.

In this case, the operation of acquiring data from the sensors and sending them to the hardware accelerator is managed sequentially, eliminating the need for the scheduler and the interrupt manager. Additionally, the gateway, which typically plays a role when local hardware or software resources are insufficient for processing tasks, is not used in this setup. This is because the selected development board is capable of executing all operations locally, whether entirely in software, entirely in hardware, or through a hybrid approach.

2.3. HW Accelerator Design

For the chosen use case, two hardware accelerators have been developed, one for the trilateration calculation and one for the kNN classification. These accelerators were designed in VHDL and rigorously simulated and tested in the Xilinx Vivado development environment to verify their performance and reliability. Following successful validation, they were implemented on the physical prototype. In this implementation, the operation of acquiring data from the sensors and sending it to the hardware accelerators was managed sequentially. Consequently, components such as the interrupt controller were neither implemented nor used, simplifying the system’s design for this specific application.

2.3.1. Trilateration

Once the CPU acquires the data from the sensors, it transfers the information to the hardware accelerator using the AXI protocol. For the chosen use case, at least three anchors are needed to determine the position of the tag in the plane. Each anchor is characterized by three parameters: a pair of coordinates representing its spatial position and a distance measurement from the tag. These values are processed by the hardware accelerator according to the equations presented earlier, ultimately yielding the x and y coordinates of the tag.
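For reference, a common closed-form way to obtain (x, y) from three anchors is to write each range as a circle equation, (x − xᵢ)² + (y − yᵢ)² = dᵢ², subtract the first equation from the other two to cancel the quadratic terms, and solve the resulting 2×2 linear system. The floating-point sketch below follows that standard formulation; it is offered as a golden model for checking results and may differ in detail from the equations implemented in the accelerator.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

struct Anchor { double x, y, d; };  // anchor position (m) and measured range to the tag (m)
struct Point { double x, y; };

// Closed-form 2D trilateration: subtracting circle 1 from circles 2 and 3 gives
//   A x + B y = C  and  D x + E y = F,
// solved here with Cramer's rule. Returns nullopt for collinear anchors.
std::optional<Point> trilaterate(const Anchor& a1, const Anchor& a2, const Anchor& a3) {
    const double A = 2.0 * (a2.x - a1.x), B = 2.0 * (a2.y - a1.y);
    const double C = a1.d * a1.d - a2.d * a2.d
                   - a1.x * a1.x + a2.x * a2.x - a1.y * a1.y + a2.y * a2.y;
    const double D = 2.0 * (a3.x - a1.x), E = 2.0 * (a3.y - a1.y);
    const double F = a1.d * a1.d - a3.d * a3.d
                   - a1.x * a1.x + a3.x * a3.x - a1.y * a1.y + a3.y * a3.y;
    const double det = A * E - B * D;
    if (std::fabs(det) < 1e-9) return std::nullopt;  // anchors on a line: no unique fix
    return Point{(C * E - B * F) / det, (A * F - C * D) / det};
}
```

With noisy ranges and more than three anchors, the same linear system is usually solved in the least-squares sense instead.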

The accelerator was designed using fixed-point arithmetic, considering the characteristics of the system generating the data to be processed. This approach helps reduce resource consumption and optimize both execution speed and power consumption. Specifically, the accuracy of the localization system, which is approximately 25 cm in this case, was taken into account. Consequently, an appropriate word length was chosen to ensure that computational accuracy is maintained throughout the processing chain.
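The word-length reasoning can be illustrated with a simple Q-format model. The text does not report the word length actually used, so the format below (signed 32-bit words with 8 fractional bits, i.e., a resolution of 2⁻⁸ m ≈ 3.9 mm, well below the ~25 cm system accuracy) is purely an assumption for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical Q-format: signed 32-bit words with kFracBits fractional bits.
constexpr int kFracBits = 8;
using fixed_t = int32_t;
constexpr double kLsbMeters = 1.0 / (1 << kFracBits);  // 2^-8 m ≈ 3.9 mm

fixed_t toFixed(double meters) {
    return static_cast<fixed_t>(std::lround(meters * (1 << kFracBits)));
}

double toDouble(fixed_t v) { return static_cast<double>(v) / (1 << kFracBits); }

// The product of two Q.8 values carries 16 fractional bits: widen to 64 bits,
// then shift back (arithmetic shift, i.e., rounding toward minus infinity).
fixed_t mulFixed(fixed_t a, fixed_t b) {
    const int64_t p = static_cast<int64_t>(a) * b;
    return static_cast<fixed_t>(p >> kFracBits);
}
```

Choosing the word length then amounts to checking that quantization error (at most half an LSB per conversion) and the growth of intermediate products stay well inside the error budget set by the UWB measurements.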

For data processing, cascaded DSPs were utilized to take advantage of the dedicated fast hardware interconnections available for these specialized devices, thereby enhancing the computational speed. These DSP blocks, specifically the DSP48E1, are advanced digital signal processing units that feature integrated functionalities such as multipliers, adders, and accumulators. Their compact structure and optimized design make them ideal for accelerating computational tasks in FPGA implementations. Additionally, Xilinx IP Core dividers with a radix-2 algorithm were employed. The minimum clock period of the circuit is 5 ns, corresponding to a maximum operating frequency of 200 MHz. The circuit has an initial latency of 50 clock cycles, after which it can generate a useful result every clock cycle, thanks to the perfectly balanced pipeline.
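The timing figures above translate directly into latency and throughput. Assuming the 50-cycle initial latency is counted up to the first valid output, and one new result per cycle afterwards, N consecutive position estimates take (50 + N − 1) cycles:

$$
\begin{aligned}
f_{\max} &= \frac{1}{T_{\mathrm{clk}}} = \frac{1}{5\ \mathrm{ns}} = 200\ \mathrm{MHz},\\
t_{\mathrm{first}} &= 50\, T_{\mathrm{clk}} = 250\ \mathrm{ns},\\
t_{N} &= (50 + N - 1)\, T_{\mathrm{clk}}, \qquad t_{1000} = 1049 \times 5\ \mathrm{ns} = 5.245\ \mu\mathrm{s}.
\end{aligned}
$$

These values cover the accelerator datapath only; AXI transfers between the CPU and the FPGA add to the end-to-end time.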

2.3.2. kNN

Before implementing the kNN algorithm in hardware, the concept of using a single magneto-inertial sensor placed on the user’s dominant wrist was validated through simulations with the Weka software v3.8 (https://ml.cms.waikato.ac.nz/weka (accessed on 27 October 2025)). According to the simulations, the system can distinguish between sitting and walking activities with about 94% accuracy.

The decision to use the kNN algorithm for classification in this work was based on its widespread popularity in the literature as a technique for human activity recognition (HAR). Numerous studies have demonstrated its effectiveness in classifying movement patterns using wearable sensor data, making it a well-established choice for this type of application. While other machine learning algorithms could achieve similar objectives, kNN remains a frequently adopted method due to its simplicity, adaptability, and strong performance in recognizing human activities. Furthermore, the choice to rely on a single sensor was made to ensure that the device remains less intrusive for the user and to simplify its development within the context of the chosen use case. This approach balances functionality and practicality while maintaining alignment with the overarching goals of the system.

The hardware accelerator used for kNN classification processes input data transferred via the AXI Lite Protocol. It utilizes a total of 10 registers: three registers are assigned to each of the sensors (accelerometer, magnetometer, and gyroscope) for data acquisition, and one register is reserved for storing the classification results.

For the development of the computational module, low-power techniques such as clock gating and fixed-point arithmetic were employed. The word length was carefully chosen based on the characteristics of the dataset to ensure efficient processing. DSPs were again utilized to calculate the distance, leveraging the dedicated internal links to enhance execution speed. The dataset, comprising 7206 instances, was stored on-chip using the BRAM slices of the FPGA device.

The Weka simulations identified the Manhattan distance as the most suitable metric for this case. To implement it in hardware, a dedicated absolute value function was developed. The architecture leverages the DSP configuration capabilities to compute the absolute difference without disrupting the high-speed PCIN-PCOUT path. This is achieved by adjusting the INMODE and carry-in signals to perform the operation as a sum, avoiding the need for external logic or two’s complement computation. This solution improves speed and reduces area and power consumption.
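When verifying such a datapath, a bit-true software reference is handy. The sketch below is a plain golden model of the absolute difference and Manhattan distance, not a model of the DSP48E1 configuration trick itself; the 16-bit feature width is an assumption, and the subtraction is widened to 32 bits so it cannot overflow.

```cpp
#include <cassert>
#include <cstdint>

// Golden model: |a - b| computed in a wider type so the subtraction cannot overflow.
uint32_t absDiff(int16_t a, int16_t b) {
    const int32_t d = static_cast<int32_t>(a) - static_cast<int32_t>(b);
    return static_cast<uint32_t>(d < 0 ? -d : d);
}

// Manhattan (L1) distance between an input instance and one stored training row.
// In hardware, the accumulation runs along the cascaded DSP chain instead.
uint32_t manhattan(const int16_t* x, const int16_t* row, int n) {
    uint32_t acc = 0;
    for (int i = 0; i < n; ++i) acc += absDiff(x[i], row[i]);
    return acc;
}
```

Comparing the accelerator's distances against this model over the stored dataset is one straightforward way to confirm that the fixed-point datapath introduces no classification differences.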

Figure 7 illustrates a DSP stage used for comparing input features. The developed circuit performs a classification in 7226 clock cycles. The minimum clock period is 9 ns, which corresponds to a maximum operating frequency of approximately 111 MHz. It is worth noting that the implemented hardware accelerator does not cause any loss of classification accuracy, despite being designed to operate with fixed-point arithmetic. This behavior is typical of machine learning applications, which are inherently robust to the noise introduced by reduced numerical precision in the underlying data representation.
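The reported cycle count and clock period give the classification time directly. Assuming operation at the maximum frequency and counting only the accelerator's computation (AXI-Lite transfers excluded):

$$
\begin{aligned}
t_{\mathrm{class}} &= 7226 \times 9\ \mathrm{ns} = 65\,034\ \mathrm{ns} \approx 65.0\ \mu\mathrm{s},\\
f_{\max} &= \frac{1}{9\ \mathrm{ns}} \approx 111.1\ \mathrm{MHz},\\
R &= \frac{1}{t_{\mathrm{class}}} \approx 1.54 \times 10^{4}\ \text{classifications/s}.
\end{aligned}
$$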