The Enhance-Fuse-Align Principle: A New Architectural Blueprint for Robust Object Detection, with Application to X-Ray Security

Abstract

1. Introduction

2. Related Work

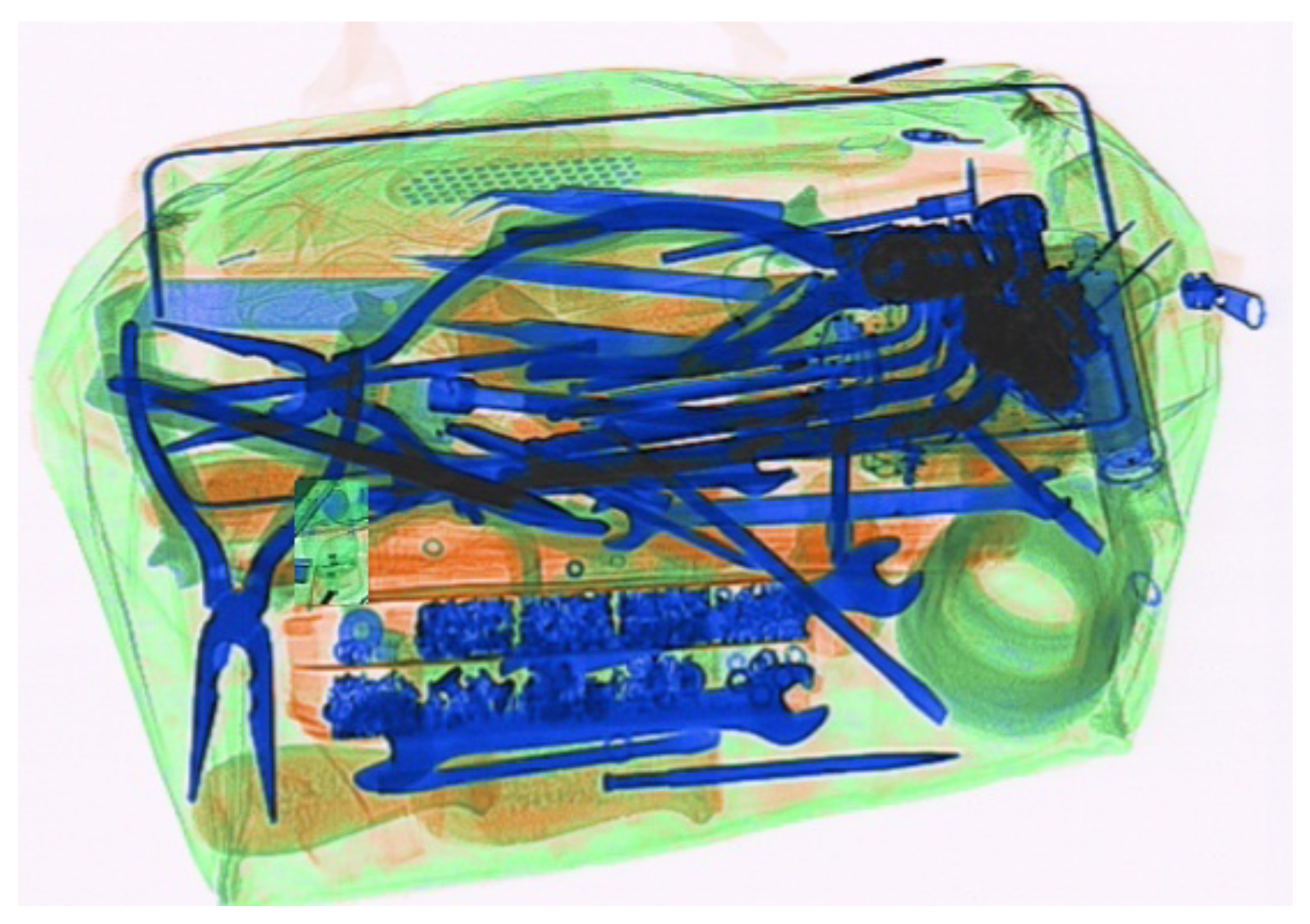

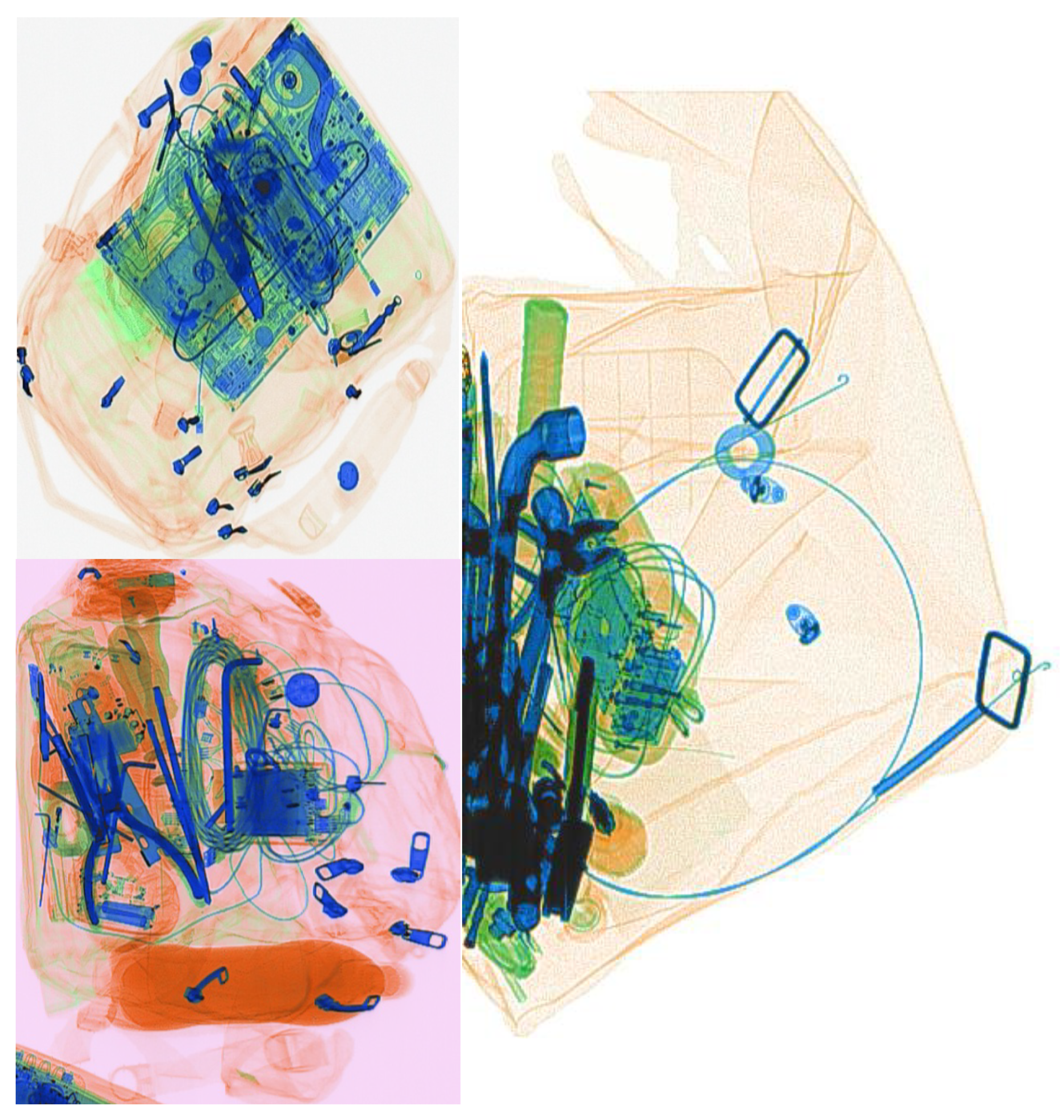

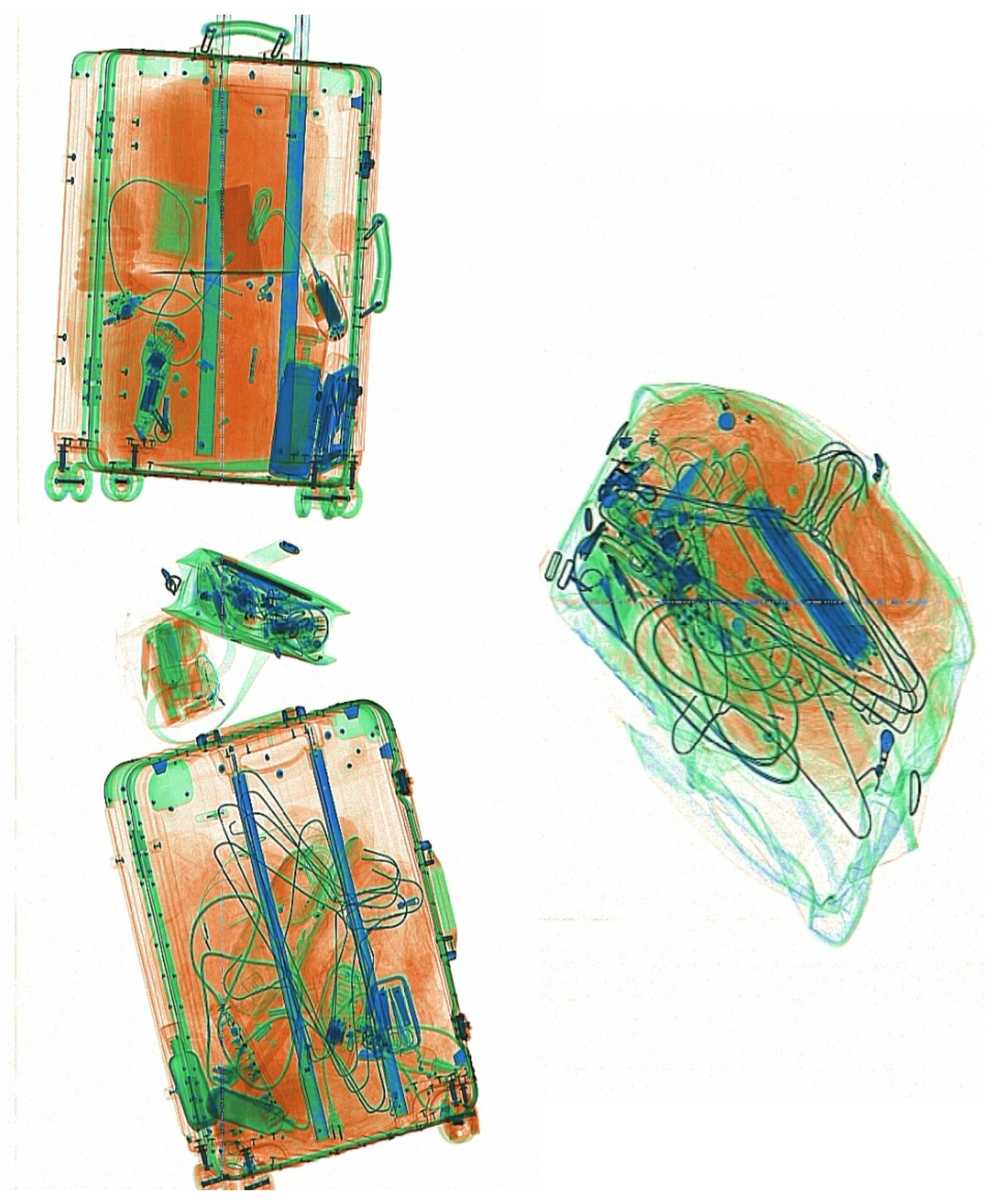

2.1. Challenges in Medical Imaging Object Detection

2.2. Remote Sensing and Satellite Imaging Challenges

3. Method

3.1. Overall Architecture and Design Philosophy

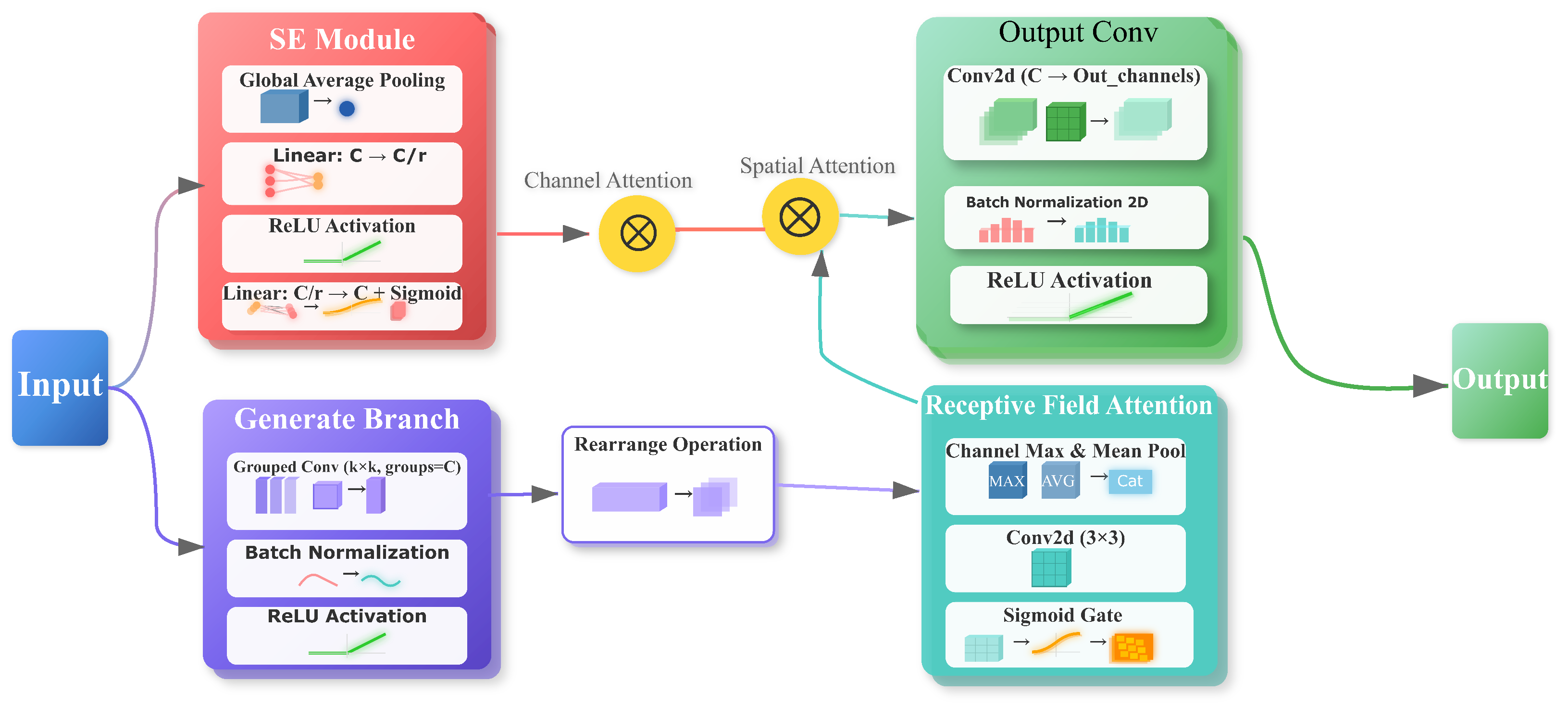

3.2. Enhance Module—RFCBAMConv

3.3. Fuse Module—BiFPN

3.4. Align Module

3.4.1. Enhanced Contextual Feature Alignment (ECFA)

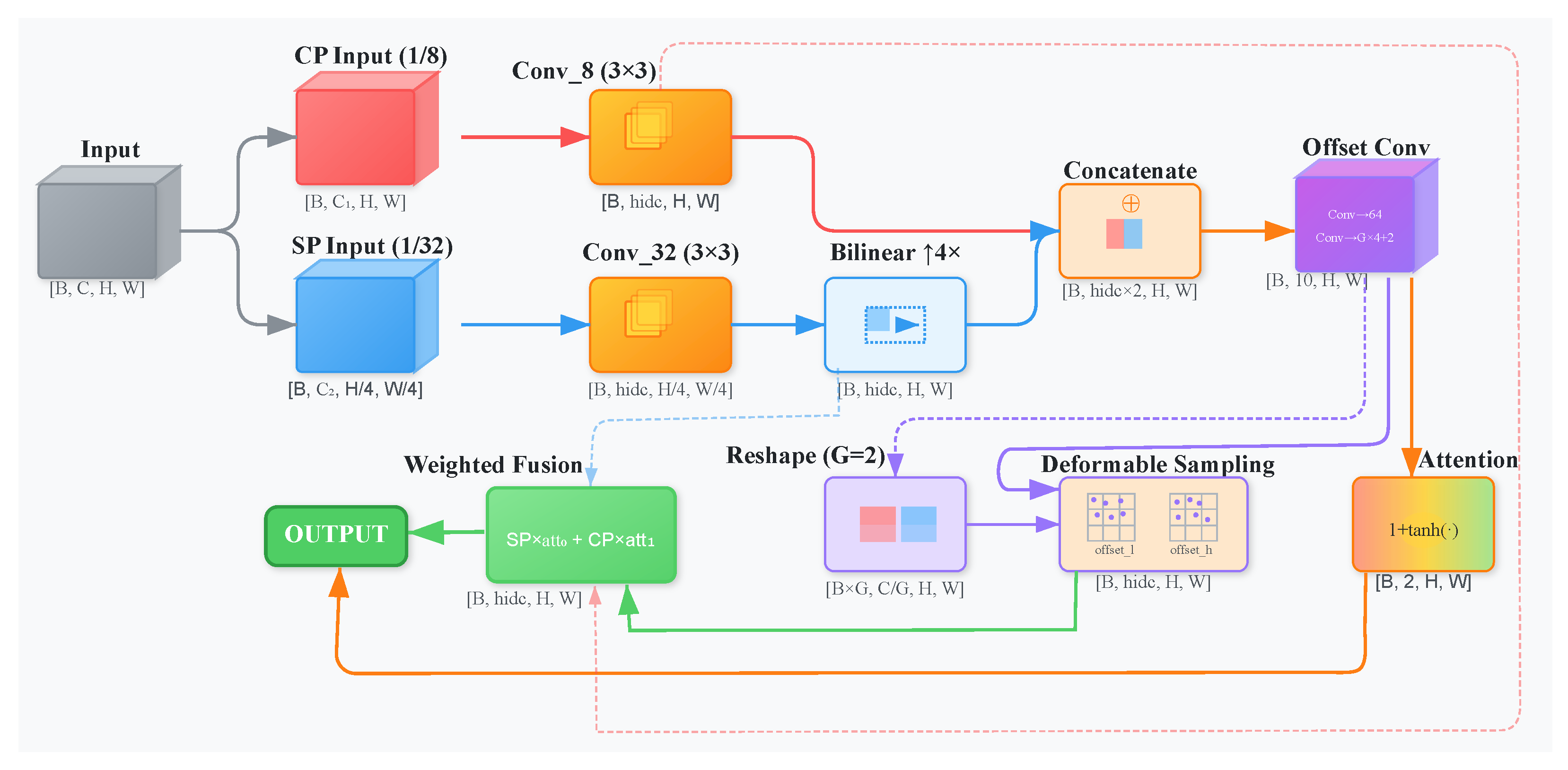

3.4.2. Adaptive Spatial Feature Alignment (ASFA)

4. Experiments and Results

4.1. Experimental Configuration and Protocol

4.2. Comprehensive Performance Analysis and Benchmarking

4.3. Ablation Study

4.4. Dataset Evaluation

4.5. Visual Performance Analysis

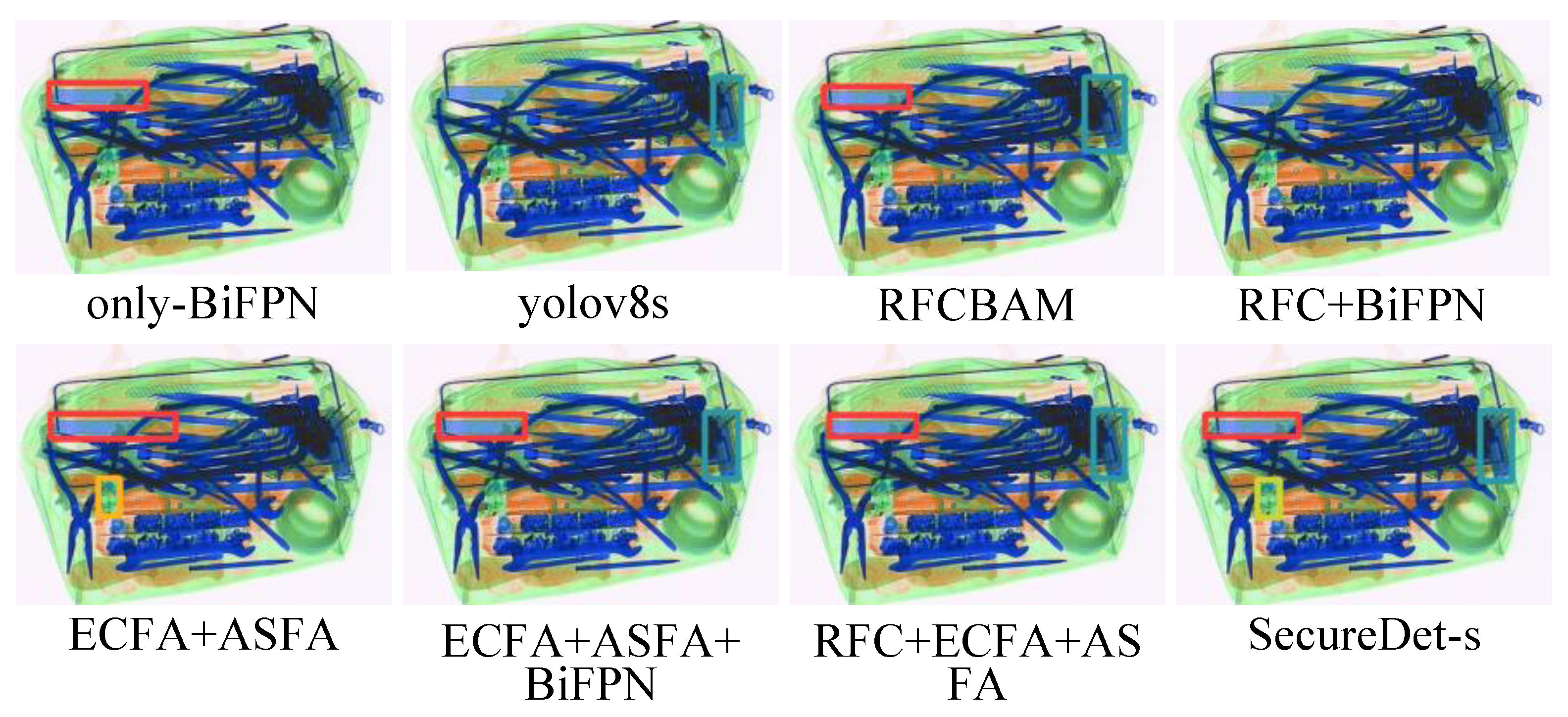

4.5.1. Ablation Study Visualization Analysis

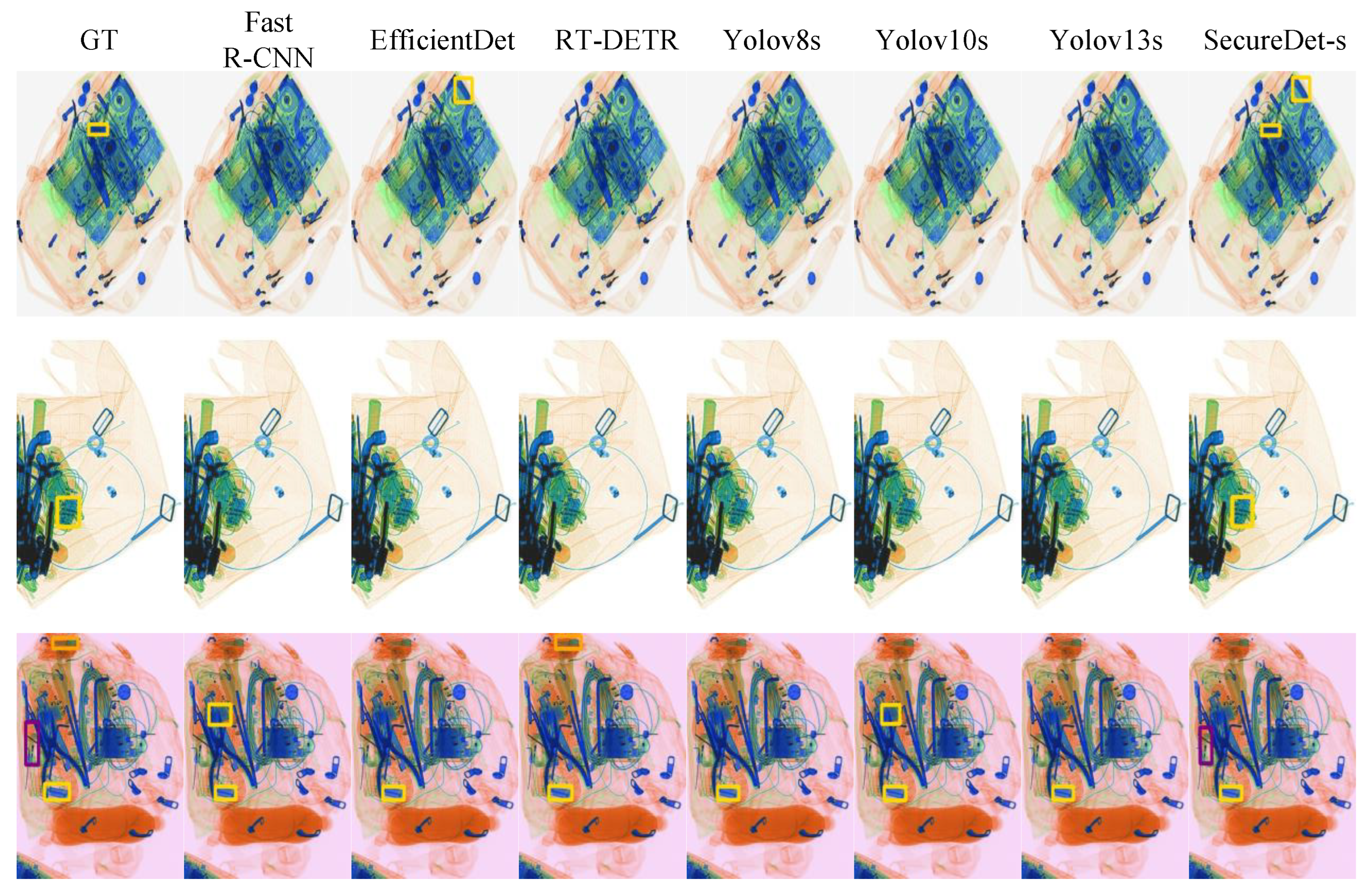

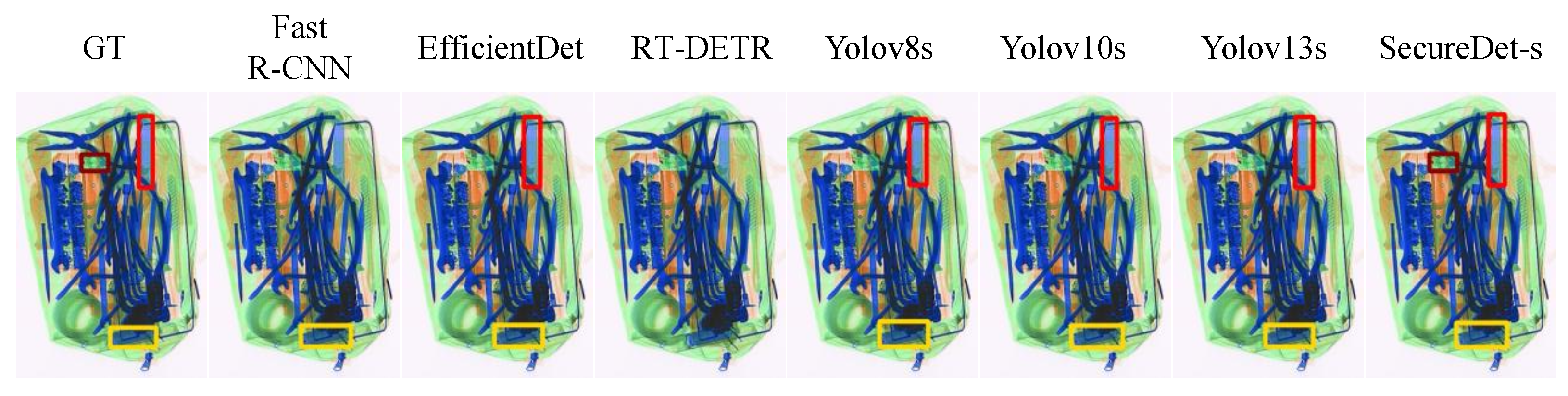

4.5.2. Severe Occlusion Scenario Analysis

4.5.3. Small-Object Detection Performance

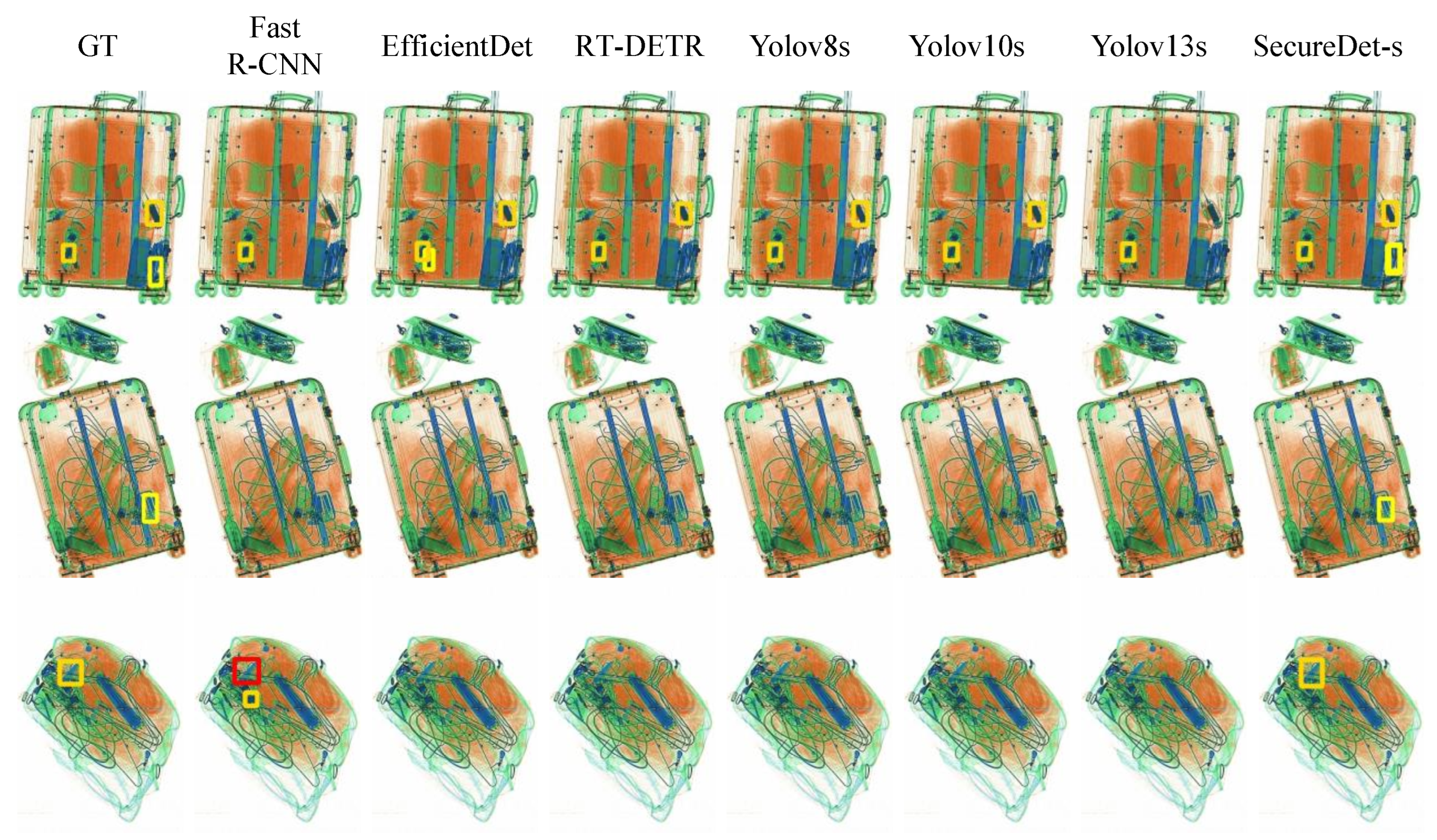

4.5.4. Multi-Scale Detection Performance

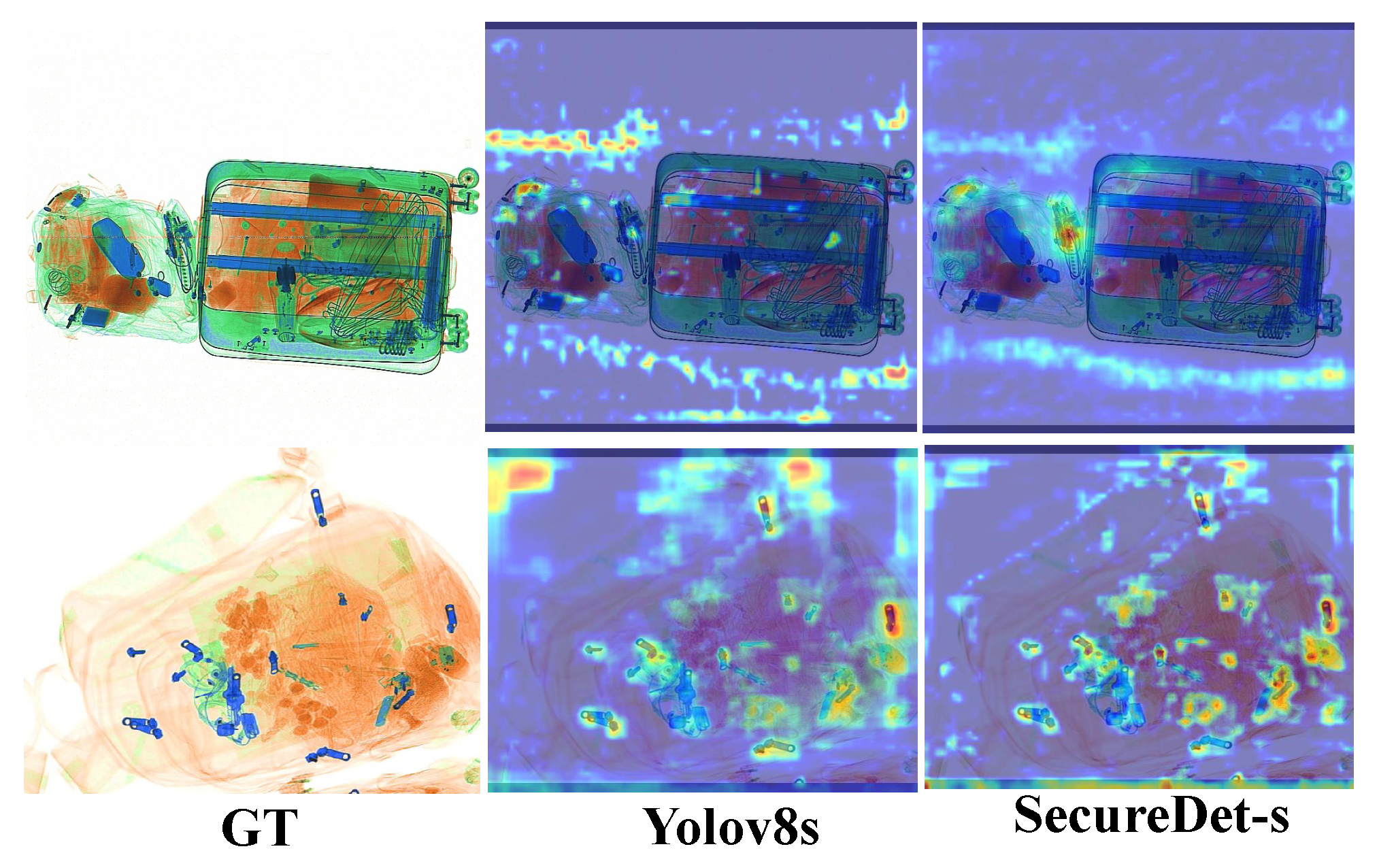

4.5.5. Attention Visualization of Feature Learning and Feature Impact

5. Discussion

5.1. Architectural Synergy as the Core Contribution

- Enhance First: The RFCBAMConv module first enriches the backbone features, making them more discriminative and more robust to noise and blur. This step matters because standard feature detectors often degrade on blurred images, a common issue under low-light or rapid-scan conditions [48].

- Align Second: The ECFA and ASFA modules then explicitly model contextual relationships and correct for spatial misalignments across feature hierarchies.

- Fuse Last: Only after features are enhanced and aligned does the BiFPN module effectively fuse multi-scale information, leading to the peak performance of the full SecureDet model.
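
The Fuse step relies on BiFPN, whose original formulation (Tan et al., EfficientDet, in the reference list) combines same-resolution inputs with learnable non-negative weights through "fast normalized fusion": O = Σᵢ wᵢ·Iᵢ / (ε + Σⱼ wⱼ), with each wᵢ passed through a ReLU. The NumPy sketch below shows only that weighting step, assuming the inputs have already been resized to a common shape; whether SecureDet uses exactly this variant is not stated in this section.

```python
import numpy as np


def fast_normalized_fusion(inputs, raw_weights, eps=1e-4):
    """Fuse same-shape feature maps with non-negative, normalized weights.

    inputs: list of arrays with identical shape (already resized).
    raw_weights: one learnable scalar per input; a ReLU keeps them >= 0.
    eps: small constant that keeps the denominator away from zero.
    """
    w = np.maximum(np.asarray(raw_weights, dtype=float), 0.0)  # ReLU on weights
    w = w / (eps + w.sum())                                      # normalize to roughly sum to 1
    return sum(wi * x for wi, x in zip(w, inputs))               # weighted sum of maps


if __name__ == "__main__":
    p4 = np.ones((2, 2))       # hypothetical top-down feature
    p4_in = 3 * np.ones((2, 2))  # hypothetical lateral input
    print(fast_normalized_fusion([p4, p4_in], [1.0, 1.0]))
```

Compared with a softmax over the weights, this normalization avoids exponentials; the EfficientDet authors report it as a faster alternative with similar accuracy.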

5.2. Mapping Architectural Innovations to X-Ray Image Characteristics and Remaining Challenges

5.3. Overcoming Data Scarcity and the “Black Box” Problem

5.4. Implications and Future Directions

- XAI Integration: Applying methods like SHAP and Grad-CAM to understand which input features (e.g., textures, material densities) the model prioritizes for different contraband types.

- Semi-Supervised Learning: Exploring non-fully supervised learning paradigms to leverage the vast amounts of unlabeled X-ray data available, thereby reducing the reliance on costly manual annotation and addressing the “label scarcity” challenge head-on.

- Multi-Modal Fusion: Incorporating data from other sensor modalities, such as dual-energy X-ray or 3D computed tomography (CT) scans, to provide richer information for detection.

- Deployment and Optimization: Further optimizing the model for deployment on edge devices with limited computational resources, ensuring its practical applicability in a wider range of security checkpoints.
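
Grad-CAM, named in the XAI item above, weights each channel of a chosen feature map by the spatial average of the gradient of a target score, then keeps the positive part of the weighted channel sum. The NumPy sketch below implements only that combination step; it assumes the activations and their gradients have already been obtained from a framework's automatic differentiation, and which detection score to back-propagate for a given contraband class is left open.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heat map from one layer's activations and gradients.

    activations, gradients: arrays of shape (K, H, W); gradients hold
    d(score)/d(activations) for the output being explained.
    """
    weights = gradients.mean(axis=(1, 2))                  # alpha_k: pooled gradients, shape (K,)
    cam = np.tensordot(weights, activations, axes=1)       # sum_k alpha_k * A^k, shape (H, W)
    cam = np.maximum(cam, 0.0)                             # keep positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam                 # scale to [0, 1] for display


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    acts = rng.random((8, 5, 5))           # hypothetical feature map
    grads = rng.standard_normal((8, 5, 5))  # hypothetical gradients
    print(grad_cam(acts, grads).shape)
```

The resulting map is usually upsampled to the input resolution and overlaid on the X-ray image.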

6. Conclusions

Limitations Under Extreme Occlusion and Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| YOLO | You Only Look Once |
| mAP | mean Average Precision |
| RFCBAMConv | Receptive Field CBAM Convolution |
| BiFPN | Bidirectional Feature Pyramid Network |
| ECFA | Enhanced Contextual Feature Alignment |
| ASFA | Adaptive Spatial Feature Alignment |
| CBAM | Convolutional Block Attention Module |
| FPN | Feature Pyramid Network |
| SGD | Stochastic Gradient Descent |
| GPU | Graphics Processing Unit |

Appendix A. Supplementary Statistical Analysis

| Figure | Method | GT | TP | FN | FP | Precision | Recall | F1 |
|---|---|---|---|---|---|---|---|---|
| Figure 6 | only-BiFPN | 3 | 1 | 2 | 0 | 1.000 | 0.333 | 0.500 |
| | YOLOv8s | 3 | 1 | 2 | 0 | 1.000 | 0.333 | 0.500 |
| | RFCBAM | 3 | 2 | 1 | 1 | 0.667 | 0.667 | 0.667 |
| | RFC + BiFPN | 3 | 0 | 3 | 2 | 0.000 | 0.000 | 0.000 |
| | ECFA + ASFA | 3 | 2 | 1 | 1 | 0.667 | 0.667 | 0.667 |
| | ECFA + ASFA + BiFPN | 3 | 2 | 1 | 1 | 0.667 | 0.667 | 0.667 |
| | RFC + ECFA + ASFA | 3 | 2 | 1 | 0 | 1.000 | 0.667 | 0.800 |
| | SecureDet-s | 3 | 3 | 0 | 0 | 1.000 | 1.000 | 1.000 |
| Figure 7 | Fast R-CNN | 5 | 2 | 4 | 1 | 0.667 | 0.333 | 0.444 |
| | EfficientDet | 5 | 3 | 4 | 0 | 1.000 | 0.429 | 0.600 |
| | RT-DETR | 5 | 2 | 3 | 0 | 1.000 | 0.400 | 0.571 |
| | YOLOv8s | 5 | 2 | 3 | 0 | 1.000 | 0.400 | 0.571 |
| | YOLOv10s | 5 | 2 | 3 | 1 | 0.667 | 0.400 | 0.500 |
| | YOLOv13s | 5 | 2 | 3 | 0 | 1.000 | 0.400 | 0.571 |
| | SecureDet-s | 5 | 5 | 0 | 1 | 0.833 | 1.000 | 0.909 |
| Figure 8 | Fast R-CNN | 5 | 2 | 3 | 1 | 0.667 | 0.400 | 0.500 |
| | EfficientDet | 5 | 2 | 3 | 1 | 0.667 | 0.400 | 0.500 |
| | RT-DETR | 5 | 2 | 3 | 0 | 1.000 | 0.400 | 0.571 |
| | YOLOv8s | 5 | 2 | 3 | 0 | 1.000 | 0.400 | 0.571 |
| | YOLOv10s | 5 | 2 | 3 | 0 | 1.000 | 0.400 | 0.571 |
| | YOLOv13s | 5 | 1 | 4 | 0 | 1.000 | 0.200 | 0.333 |
| | SecureDet-s | 5 | 5 | 0 | 0 | 1.000 | 1.000 | 1.000 |
| Figure 9 | Fast R-CNN | 3 | 1 | 2 | 0 | 1.000 | 0.333 | 0.500 |
| | EfficientDet | 3 | 2 | 1 | 0 | 1.000 | 0.667 | 0.800 |
| | RT-DETR | 3 | 0 | 3 | 0 | 0.000 | 0.000 | 0.000 |
| | YOLOv8s | 3 | 2 | 1 | 0 | 1.000 | 0.667 | 0.800 |
| | YOLOv10s | 3 | 2 | 1 | 0 | 1.000 | 0.667 | 0.800 |
| | YOLOv13s | 3 | 2 | 1 | 0 | 1.000 | 0.667 | 0.800 |
| | SecureDet-s | 3 | 3 | 0 | 0 | 1.000 | 1.000 | 1.000 |
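
The Precision, Recall, and F1 columns follow the standard definitions P = TP / (TP + FP), R = TP / (TP + FN), and F1 = 2PR / (P + R). The short sketch below recomputes them from the per-image counts; it maps an undefined 0/0 ratio to 0.0, which is an assumption that matches how the RT-DETR row for Figure 9 is reported.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F1) from per-image detection counts.

    Zero denominators are mapped to 0.0 (assumed reporting convention).
    """
    precision = tp / (tp + fp) if tp + fp > 0 else 0.0
    recall = tp / (tp + fn) if tp + fn > 0 else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


if __name__ == "__main__":
    # (TP, FP, FN) counts copied from three rows of the table above.
    rows = {
        "Figure 6, RFCBAM": (2, 1, 1),
        "Figure 7, SecureDet-s": (5, 1, 0),
        "Figure 9, RT-DETR": (0, 0, 3),
    }
    for name, (tp, fp, fn) in rows.items():
        p, r, f1 = detection_metrics(tp, fp, fn)
        print(f"{name}: P={p:.3f} R={r:.3f} F1={f1:.3f}")
```

Computing recall as TP / (TP + FN) reproduces every listed Recall value. For the Figure 7 Fast R-CNN and EfficientDet rows, TP + FN (6 and 7) does not equal the listed GT of 5.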

Appendix B. Supplementary Retrieved Images for Zoom-In Comparison

References

1. Moodley, T.; Crush, L.; Brits, M. Lung tumor segmentation: A review of the state of the art. Front. Comput. Sci. 2024, 6, 1423693.

2. Wei, Y.; Tao, R.; Wu, Z.; Ma, Y.; Zhang, L.; Liu, X. Occluded Prohibited Items Detection: An X-ray Security Inspection Benchmark and De-occlusion Attention Module. In Proceedings of the 28th ACM International Conference on Multimedia (MM), Seattle, WA, USA, 12–16 October 2020; pp. 138–146.

3. Tao, R.; Wei, Y.; Jiang, B.; Li, B.; You, Y.; Liu, X. Towards real-world X-ray security inspection: A high-quality benchmark and lateral inhibition module. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 10203–10212.

4. Chen, L.; Yang, F.; Wang, H.; Zhang, Y.; Liu, X. Atomic number prior guided network for prohibited items detection from heavily cluttered X-ray imagery. Front. Phys. 2022, 10, 1026209.

5. Viriyasaranon, T.; Jung, S.W.; Hwang, S.J. MFA-net: Object detection for complex X-ray cargo and baggage security imagery. PLoS ONE 2022, 17, e0272961.

6. Oulhissane, H.A.; Bouchentouf, T.; Oulad-Abbou, D.; Yahyaouy, A.; Sabri, A. Enhanced detonators detection in X-ray baggage inspection by image manipulation and deep convolutional neural networks. Sci. Rep. 2023, 13, 4185.

7. Tsai, P.F.; Liao, C.H.; Yuan, S.M. Using Deep Learning with Thermal Imaging for Human Detection in Heavy Smoke Scenarios. Sensors 2022, 22, 5351.

8. Bhadoriya, A.S.; Vegamoor, V.; Rathinam, S. Vehicle Detection and Tracking Using Thermal Cameras in Adverse Visibility Conditions. Sensors 2022, 22, 4567.

9. Wang, W.; Jing, B.; Yu, X.; Sun, Y.; Yang, L.; Wang, C. YOLO-OD: Obstacle Detection for Visually Impaired Navigation Assistance. Sensors 2024, 24, 7621.

10. Yang, X.; Yang, J.; Yan, J.; Zhang, Y.; Zhang, T.; Guo, Z.; Sun, X.; Fu, K. Oriented Object Detection in Optical Remote Sensing Images using Deep Learning: A Survey. arXiv 2023, arXiv:2302.10473v4.

11. Dubovik, O.; Schuster, G.L.; Xu, F.; Hu, Y.; Bösch, H.; Landgraf, J.; Li, Z. Grand challenges in satellite remote sensing. Front. Remote Sens. 2021, 2, 619818.

12. Wang, Y.; Zhang, F.L.; Dodgson, N.A. Target Scanpath-Guided 360-Degree Image Enhancement. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 8169–8177.

13. Mileto, A.; Guimaraes, L.S.; McCollough, C.H.; Fletcher, J.G.; Yu, L. CT noise-reduction methods for lower-dose scanning: Strengths and weaknesses of iterative reconstruction algorithms and new techniques. RadioGraphics 2021, 41, 1493–1508.

14. Yao, Z.; Ge, J.; Cao, P.; Wu, M.; Qian, J.; Li, Y.; Reynaerts, D. Advancements in process monitoring and quality control for electrical discharge machining: A comprehensive review. J. Mater. Process. Technol. 2025, 345, 119081.

15. Guo, C.; Fan, B.; Zhang, Q.; Xiang, S.; Pan, C. AugFPN: Improving multi-scale feature learning for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 12595–12604.

16. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

17. Lin, T.Y.; Dollár, P.; Girshick, R.B.; He, K.; Hariharan, B.; Belongie, S.J. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

18. Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

19. Miao, C.; Xie, L.; Wan, F.; Su, C.; Liu, H.; Jiao, J.; Ye, Q. SIXray: A Large-Scale Security Inspection X-Ray Benchmark for Prohibited Item Discovery in Overlapping Images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

20. Mery, D.; Riffo, V.; Zscherpel, U.; Mondragón, G.; Lillo, I.; Zuccar, I.; Lobel, H.; Carrasco, M. GDXray: The Database of X-ray Images for Nondestructive Testing. J. Nondestruct. Eval. 2015, 34, 42.

21. Zhang, Y.; Yang, L.; Chen, J.; Fredericksen, M.; Hughes, D.P.; Chen, D.Z. Deep adversarial networks for biomedical image segmentation utilizing unannotated images. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Lima, Peru, 4–8 October 2020; pp. 1–9.

22. Karimi, D.; Dou, H.; Warfield, S.K.; Gholipour, A. Deep learning with noisy labels: Exploring techniques and remedies in medical image analysis. Med. Image Anal. 2020, 65, 101759.

23. Wang, Y.; Zhang, F.L.; Dodgson, N.A. ScanTD: 360° scanpath prediction based on time-series diffusion. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024; pp. 7764–7773.

24. Wen, J.; Cui, J.; Zhao, Z.; Yan, R.; Gao, Z.; Dou, L.; Chen, B.M. SyreaNet: A Physically Guided Underwater Image Enhancement Framework Integrating Synthetic and Real Images. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 5177–5183.

25. Al-Antari, M.A.; Han, S.M.; Kim, T.S. See through the noise: Revolutionizing medical image diagnosis with quadratic convolutional neural network (Q-CNN). Int. J. Mach. Learn. Cybern. 2024, 15, 1435–1456.

26. Adegun, A.A.; Viriri, S.; Ogundokun, R.O. State-of-the-Art Deep Learning Methods for Objects Detection in Remote Sensing Satellite Images. Sensors 2023, 23, 5849.

27. Wei, X.; Li, Z.; Wang, Y. SED-YOLO based multi-scale attention for small object detection in remote sensing. Sci. Rep. 2025, 15, 3125.

28. Liu, J.; Zhao, Y.; Chen, Y.; Zhang, B.; Zheng, W.; Yang, M. A survey of small object detection based on deep learning in aerial images. Artif. Intell. Rev. 2025, 58, 1–74.

29. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19.

30. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

31. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 346–361.

32. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

33. Schiopu, I.; Munteanu, A. Deep Learning Post-Filtering Using Multi-Head Attention and Multiresolution Feature Fusion for Image and Intra-Video Quality Enhancement. Sensors 2022, 22, 1353.

34. Xie, Y.; Fei, Z.; Deng, D.; Meng, L.; Niu, F.; Sun, J. MEEAFusion: Multi-Scale Edge Enhancement and Joint Attention Mechanism Based Infrared and Visible Image Fusion. Sensors 2024, 24, 5860.

35. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Montreal, QC, Canada, 7–12 December 2015.

36. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

37. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

38. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. arXiv 2023, arXiv:2304.08069.

39. Jocher, G.; Chaurasia, A.; Qiu, J. Ultralytics YOLOv8. 2023. Available online: https://github.com/ultralytics/ultralytics/blob/main/docs/en/models/yolov8.md (accessed on 15 December 2024).

40. Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

41. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

42. Jocher, G.; Qiu, J. Ultralytics YOLO11, Version 11.0.0. 2024. Available online: https://github.com/ultralytics/ultralytics/blob/main/docs/en/models/yolo11.md (accessed on 15 December 2024).

43. Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

44. Lei, M.; Li, S.; Wu, Y.; Hu, H.; Zhou, Y.; Zheng, X.; Ding, G.; Du, S.; Wu, Z.; Gao, Y. YOLOv13: Real-Time Object Detection with Hypergraph-Enhanced Adaptive Visual Perception. arXiv 2025, arXiv:2506.17733.

45. Smiti, A. A critical overview of outlier detection methods. Comput. Sci. Rev. 2020, 38, 100306.

46. Wu, M.; Arshad, M.H.; Saxena, K.K.; Qian, J.; Reynaerts, D. Profile prediction in ECM using machine learning. Procedia CIRP 2022, 113, 410–416.

47. Wu, M.; Yao, Z.; Ye, L.; Verbeke, M.; Karsmakers, P.; Reynaerts, D. Geometrical Feature Classification in Electrical Discharge Machining Using In-Process Monitoring and Machine Learning. Procedia CIRP 2025, 137, 462–467.

48. Zhao, Z. BALF: Simple and Efficient Blur Aware Local Feature Detector. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024; pp. 3362–3372.

49. Luo, J.; Wu, Q.; Wang, Y.; Zhou, Z.; Zhuo, Z.; Guo, H. MSHF-YOLO: Cotton growth detection algorithm integrated multi-semantic and high-frequency features. Digit. Signal Process. 2025, 167, 105423.

50. Yan, S.; Wang, Y.; Zhao, K.; Shi, P.; Zhao, Z.; Zhang, Y.; Li, J. HeMoRa: Unsupervised Heuristic Consensus Sampling for Robust Point Cloud Registration. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 10–17 June 2025; pp. 1363–1373.

51. Guo, H.; Wu, Q.; Wang, Y. AUHF-DETR: A Lightweight Transformer with Spatial Attention and Wavelet Convolution for Embedded UAV Small Object Detection. Remote Sens. 2025, 17, 1920.

52. Wu, M.; Guo, Z.; Qian, J.; Reynaerts, D. Multi-Ion-Based Modelling and Experimental Investigations on Consistent and High-Throughput Generation of a Micro Cavity Array by Mask Electrolyte Jet Machining. Micromachines 2022, 13, 2165.

53. Wu, M.; Hazak Arshad, M.; Kumar Saxena, K.; Reynaerts, D.; Guo, Z.; Liu, J. Experimental and Numerical Investigations on Fabrication of Surface Microstructures Using Mask Electrolyte Jet Machining and Duckbill Nozzle. J. Manuf. Sci. Eng.-Trans. ASME 2023, 145, 051006.

54. Wu, M.; Guo, Z.; He, J.; Chen, X. Modeling and simulation of the material removal process in electrolyte jet machining of mass transfer in convection and electric migration. Procedia CIRP 2018, 68, 488–492.

55. Chen, B.; Zha, J.; Cai, Z.; Wu, M. Predictive modelling of surface roughness in precision grinding based on hybrid algorithm. CIRP J. Manuf. Sci. Technol. 2025, 59, 1–17.

56. Zhang, X.; Wang, J.; Wei, J.; Yuan, X.; Wu, M. A Review of Non-Fully Supervised Deep Learning for Medical Image Segmentation. Information 2025, 16, 433.

57. Ge, J.; Yao, Z.; Wu, M.; Almeida, J.H.S., Jr.; Jin, Y.; Sun, D. Tackling data scarcity in machine learning-based CFRP drilling performance prediction through a broad learning system with virtual sample generation (BLS-VSG). Compos. Part B Eng. 2025, 305, 112701.

58. Wu, M.; Yao, Z.; Verbeke, M.; Karsmakers, P.; Gorissen, B.; Reynaerts, D. Data-driven models with physical interpretability for real-time cavity profile prediction in electrochemical machining processes. Eng. Appl. Artif. Intell. 2025, 160, 111807.

59. Yao, Z.; Wu, M.; Qian, J.; Reynaerts, D. Intelligent discharge state detection in micro-EDM process with cost-effective radio frequency (RF) radiation: Integrating machine learning and interpretable AI. Expert Syst. Appl. 2025, 291, 128607.

60. Ahmedt-Aristizabal, D.; Armin, M.A.; Denman, S.; Fookes, C.; Petersson, L. Graph-Based Deep Learning for Medical Diagnosis and Analysis: Past, Present and Future. Sensors 2021, 21, 4758.

61. Wu, M.; Shukla, S.; Vrancken, B.; Verbeke, M.; Karsmakers, P. Data-Driven Approach to Identify Acoustic Emission Source Motion and Positioning Effects in Laser Powder Bed Fusion with Frequency Analysis. Procedia CIRP 2025, 133, 531–536.

62. Alonso-González, M.J.; Hoogendoorn-Lanser, S.; van Oort, N.; Cats, O.; Hoogendoorn, S. Drivers and barriers in adopting Mobility as a Service (MaaS) – A latent class cluster analysis of attitudes. Transp. Res. Part A Policy Pract. 2020, 132, 378–401.

| Component | Setting |
|---|---|
| Channel attention bottleneck | two FC layers, reduction ratio , activation: sigmoid |
| Spatial attention | sigmoid |
| Context construction | depth-wise separable ; spatial unfold (patch/stride: design-formula) |
| Attention application | sequential weighting: then |
| Output layer | with stride ; BatchNorm + ReLU |
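
The settings above describe CBAM-style attention: a channel branch built from a two-layer bottleneck with a sigmoid, a spatial branch ending in a sigmoid, and sequential application of the two. The NumPy sketch below follows the original CBAM formulation (Woo et al., in the reference list): a shared MLP on average- and max-pooled channel descriptors, then a convolution over the channel-wise mean and max maps. The channel-then-spatial order, the reduction ratio r = 16, and the 7×7 spatial kernel are CBAM defaults assumed here because the table's values did not survive; the receptive-field unfolding step of RFCBAMConv is not modeled.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """Per-channel weights for a (C, H, W) map.

    w1 has shape (C // r, C) and w2 has shape (C, C // r): the two FC layers
    of the bottleneck, shared by the average- and max-pooled descriptors.
    """
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # FC -> ReLU -> FC

    avg = x.mean(axis=(1, 2))  # (C,)
    mx = x.max(axis=(1, 2))    # (C,)
    return sigmoid(mlp(avg) + mlp(mx))


def spatial_attention(x, kernel):
    """Per-pixel weights (H, W) from a conv over channel-wise mean and max maps.

    kernel has shape (2, k, k) with odd k; zero padding keeps H and W.
    """
    maps = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(maps, ((0, 0), (pad, pad), (pad, pad)))
    _, h, w = maps.shape
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)


def cbam(x, w1, w2, kernel):
    """Sequential weighting: channel attention first, then spatial attention."""
    x = x * channel_attention(x, w1, w2)[:, None, None]
    return x * spatial_attention(x, kernel)[None, :, :]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    c, h, w = 32, 8, 8
    r, k = 16, 7  # assumed: CBAM's default reduction ratio and kernel size
    x = rng.standard_normal((c, h, w))
    w1 = 0.1 * rng.standard_normal((c // r, c))
    w2 = 0.1 * rng.standard_normal((c, c // r))
    kernel = 0.1 * rng.standard_normal((2, k, k))
    print(cbam(x, w1, w2, kernel).shape)  # (32, 8, 8)
```

The explicit loops keep the convolution readable; a real layer would use a framework's 2D convolution.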

| Variant | Backbone | Depth Multiplier | Width Multiplier | Design Objective |
|---|---|---|---|---|
| SecureDet-n | YOLOv8n | 0.33 | 0.25 | Maximize efficiency for edge devices |
| SecureDet-s | YOLOv8s | 0.33 | 0.50 | Balance speed and accuracy for general scenarios |
| SecureDet-m | YOLOv8m | 0.67 | 0.75 | Pursue highest accuracy for high-performance servers |
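
In YOLOv8-style compound scaling, the depth multiplier scales how many times each block is repeated and the width multiplier scales each layer's channel count. The sketch below mirrors, to our understanding, the rounding rules in the Ultralytics model parser (channels rounded up to a multiple of 8; repeats rounded and kept at one or more); it ignores the per-variant maximum-channel cap, and the base values of 6 repeats and 256 channels are illustrative only.

```python
import math


def scale_channels(base_channels: int, width: float, divisor: int = 8) -> int:
    """Scale a layer's channel count and round up to a multiple of `divisor`."""
    return math.ceil(base_channels * width / divisor) * divisor


def scale_repeats(base_repeats: int, depth: float) -> int:
    """Scale how often a block is repeated; single blocks stay unscaled."""
    return max(round(base_repeats * depth), 1) if base_repeats > 1 else base_repeats


if __name__ == "__main__":
    variants = {
        "SecureDet-n": (0.33, 0.25),
        "SecureDet-s": (0.33, 0.50),
        "SecureDet-m": (0.67, 0.75),
    }
    for name, (depth, width) in variants.items():
        # Illustrative base block: 6 repeats, 256 channels.
        print(name, scale_repeats(6, depth), scale_channels(256, width))
```

With these bases the three variants get 2, 2, and 4 repeats and 64, 128, and 192 channels respectively.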

| Item | Setting |
|---|---|
| Input resolution | |
| Batch size | 16 |
| Epochs | 300 |
| Optimizer | SGD (initial learning rate ) |
| Losses | BCE (cls, obj) + CIoU (box) |
| Schedule | cosine with warmup; EMA; weight decay; light label smoothing (design-formula) |
| Augmentation | strong data augmentation (design-formula) |
| Early stopping | by validation mAP@0.5:0.95 |
| Inference thresholds | confidence , NMS IoU |
| Metrics | Precision, Recall, mAP@0.5, mAP@0.5:0.95; Params, GFLOPs, FPS |
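
The inference-thresholds row refers to a confidence cut-off followed by non-maximum suppression (NMS) at an IoU threshold; the specific values did not survive extraction. Below is a minimal, class-agnostic greedy NMS sketch in plain Python. The threshold values in the usage example are hypothetical, and production detectors typically apply NMS per class in a batched, vectorized form.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(ix2 - ix1, 0.0) * max(iy2 - iy1, 0.0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_and_nms(boxes, scores, conf_thres, iou_thres):
    """Drop boxes below conf_thres, then run greedy NMS; return kept indices."""
    # Candidates sorted by descending confidence.
    order = sorted((i for i, s in enumerate(scores) if s >= conf_thres),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[j]) <= iou_thres for j in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30), (0, 0, 5, 5)]
    scores = [0.9, 0.8, 0.7, 0.1]
    # Hypothetical thresholds, not the values used in the paper.
    print(filter_and_nms(boxes, scores, conf_thres=0.25, iou_thres=0.5))  # [0, 2]
```

Box 1 overlaps box 0 with IoU 0.81 and is suppressed, and box 3 falls below the confidence cut-off. The same IoU measure defines the matching thresholds behind mAP@0.5 and mAP@0.5:0.95.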

| Method | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) | GFLOPs | FPS |
|---|---|---|---|---|---|---|---|
| YOLOv8s | 91.065 | 72.725 | 79.287 | 66.379 | 13.8 | 28.6 | 83.4 |
| YOLOv8m | 83.59 | 81.3 | 81.32 | 68.3 | 25.9 | 78.9 | 45.3 |
| YOLOv8n | 86.894 | 64.541 | 73.339 | 56.079 | 3.2 | 8.8 | 122.3 |
| Fast R-CNN | 84.781 | 62.537 | 70.849 | 54.065 | N/A | N/A | N/A |
| RT-DETR-R50 | 87.757 | 65.618 | 74.346 | 61.428 | 22.8 | 139.8 | 58.7 |
| EfficientDet-d2 | 89.274 | 70.922 | 79.086 | 63.398 | 8.1 | 11.0 | 41.57 |
| YOLOv10l | 91.242 | 71.123 | 77.723 | 64.497 | 24.4 | 120.3 | 46.4 |
| YOLOv10x | 91.988 | 73.633 | 78.466 | 69.487 | 29.5 | 160.4 | 35.5 |
| YOLOv11n | 83.235 | 59.895 | 69.843 | 52.575 | 2.9 | 6.5 | 97.8 |
| YOLOv11s | 90.775 | 72.218 | 79.597 | 66.088 | 9.4 | 21.5 | 61.1 |
| YOLOv11m | 93.929 | 73.03 | 82.088 | 71.686 | 20.1 | 68.0 | 25.46 |
| YOLOv13n | 84.2 | 40.7 | 50.5 | 37.5 | 3.1 | 7.5 | 126.9 |
| YOLOv13s | 84.3 | 53.3 | 62.2 | 47.0 | 9.3 | 21.4 | 92.3 |
| YOLOv13m | 85.107 | 60.112 | 68.311 | 53.279 | 20.2 | 67.5 | 53.5 |
| SecureDet-n | 88.006 | 67.618 | 75.529 | 59.97 | 3.0 | 7.1 | 113.1 |
| SecureDet-s | 93.558 | 73.034 | 81.628 | 69.544 | 7.55 | 21.4 | 78.5 |
| SecureDet-m | 92.602 | 74.611 | 82.258 | 72.312 | 19.2 | 58.9 | 59.2 |
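
The mAP@0.5 column averages per-class average precision (AP) at an IoU threshold of 0.5, while mAP@0.5:0.95 further averages AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95 (the COCO convention). The sketch below computes AP with all-point (continuous) interpolation of the precision envelope and prepends a precision of 1.0 at recall 0, which is one common convention (used, to our understanding, by Ultralytics); other toolkits, such as COCO's 101-point interpolation, can give slightly different numbers, so this is not necessarily the exact evaluation code behind the table.

```python
def average_precision(recall, precision):
    """All-point interpolated AP from a PR curve sorted by increasing recall."""
    r = [0.0] + list(recall) + [1.0]
    p = [1.0] + list(precision) + [0.0]
    # Precision envelope: make precision non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Area under the step curve; terms where recall does not change add zero.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def map_50_95(aps_per_threshold):
    """Mean of the AP values at IoU thresholds 0.50:0.05:0.95 (10 values)."""
    if len(aps_per_threshold) != 10:
        raise ValueError("expected one AP per IoU threshold 0.50, 0.55, ..., 0.95")
    return sum(aps_per_threshold) / 10


if __name__ == "__main__":
    # Toy PR curve: first half of recall at full precision, rest at 0.5.
    print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
```

Class-level mAP is then the mean of these per-class values.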

| Configuration | RFCBAM | BiFPN | ECFA | ASFA | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | Params (M) |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv8s (Baseline) | | | | | 91.07 | 72.73 | 79.29 | 66.38 | 11.13 |
| only-BiFPN | | ✓ | | | 87.87 | 70.08 | 77.93 | 59.99 | 7.37 |
| RFCBAM | ✓ | | | | 90.01 | 71.76 | 79.85 | 64.91 | 11.41 |
| RFC+BiFPN | ✓ | ✓ | | | 90.05 | 72.18 | 78.95 | 64.61 | 10.95 |
| ECFA+ASFA | | | ✓ | ✓ | 91.59 | 73.52 | 80.79 | 65.90 | 12.50 |
| ECFA+ASFA+BiFPN | | ✓ | ✓ | ✓ | 89.88 | 69.11 | 77.88 | 61.75 | 7.07 |
| RFC+ECFA+ASFA | ✓ | | ✓ | ✓ | 88.88 | 72.63 | 78.82 | 64.43 | 13.22 |
| SecureDet-s (Full Model) | ✓ | ✓ | ✓ | ✓ | 93.56 | 73.03 | 81.63 | 69.54 | 7.55 |
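
To read the ablation as changes relative to the baseline, the sketch below computes the difference in mAP@0.5:0.95 (percentage points) and the relative change in parameters for each configuration, using values copied from the table. It uses the ablation table's own baseline row (11.13 M parameters), which differs from the 13.8 M listed for YOLOv8s in the benchmark table.

```python
# Values copied from the ablation table: (mAP@0.5:0.95 in %, Params in M).
ABLATION = {
    "YOLOv8s (Baseline)": (66.38, 11.13),
    "only-BiFPN": (59.99, 7.37),
    "RFCBAM": (64.91, 11.41),
    "RFC+BiFPN": (64.61, 10.95),
    "ECFA+ASFA": (65.90, 12.50),
    "ECFA+ASFA+BiFPN": (61.75, 7.07),
    "RFC+ECFA+ASFA": (64.43, 13.22),
    "SecureDet-s (Full Model)": (69.54, 7.55),
}


def deltas_vs_baseline(table, baseline="YOLOv8s (Baseline)"):
    """Return {config: (mAP change in points, Params change in percent)}."""
    base_map, base_params = table[baseline]
    return {
        name: (round(m - base_map, 2), round(100 * (p / base_params - 1), 1))
        for name, (m, p) in table.items()
    }


if __name__ == "__main__":
    for name, (d_map, d_params) in deltas_vs_baseline(ABLATION).items():
        print(f"{name:26s} mAP@0.5:0.95 {d_map:+.2f} pts, Params {d_params:+.1f}%")
```

For the full model this gives +3.16 points of mAP@0.5:0.95 with about 32.2% fewer parameters, while every partial combination stays below the baseline on that metric.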

| Dataset | Method | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) |
|---|---|---|---|---|---|
| OPIXray | Fast R-CNN | 81.065 | 72.725 | 79.287 | 38.379 |
| | YOLOv8s | 90.466 | 86.531 | 89.889 | 43.242 |
| | RT-DETR | 89.5 | 86.535 | 90.491 | 54.469 |
| | EfficientDet | 86.409 | 82.528 | 87.925 | 42.674 |
| | YOLOv12s | 84.781 | 62.537 | 89.849 | 42.065 |
| | SecureDet-s | 89.808 | 87.106 | 91.156 | 54.436 |
| HiXray | Fast R-CNN | 82.176 | 61.172 | 69.42 | 51.885 |
| | YOLOv8s | 87.832 | 70.47 | 78.099 | 62.295 |
| | RT-DETR | 93.431 | 73.481 | 81.259 | 68.345 |
| | EfficientDet | 90.18 | 76.73 | 80.982 | 50.011 |
| | YOLOv12s | 89.274 | 70.922 | 79.086 | 63.398 |
| | SecureDet-s | 84.795 | 81.11 | 83.585 | 69.12 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lin, Y.; Lin, Y.; Wu, H.; Wu, M. The Enhance-Fuse-Align Principle: A New Architectural Blueprint for Robust Object Detection, with Application to X-Ray Security. Sensors 2025, 25, 6603. https://doi.org/10.3390/s25216603

Lin Y, Lin Y, Wu H, Wu M. The Enhance-Fuse-Align Principle: A New Architectural Blueprint for Robust Object Detection, with Application to X-Ray Security. Sensors. 2025; 25(21):6603. https://doi.org/10.3390/s25216603

Chicago/Turabian Style: Lin, Yuduo, Yanfeng Lin, Heng Wu, and Ming Wu. 2025. "The Enhance-Fuse-Align Principle: A New Architectural Blueprint for Robust Object Detection, with Application to X-Ray Security" Sensors 25, no. 21: 6603. https://doi.org/10.3390/s25216603

APA Style: Lin, Y., Lin, Y., Wu, H., & Wu, M. (2025). The Enhance-Fuse-Align Principle: A New Architectural Blueprint for Robust Object Detection, with Application to X-Ray Security. Sensors, 25(21), 6603. https://doi.org/10.3390/s25216603