Emotion Recognition Using Temporal Facial Skin Temperature and Eye-Opening Degree During Digital Content Viewing for Japanese Older Adults

Abstract

1. Introduction

- Many studies represent emotions along arousal and valence dimensions, which makes it difficult to evaluate whether intervals of emotional arousal and emotional non-arousal can be accurately distinguished. Other methods classify multiple emotions together with a neutral state (defined as emotional non-arousal in this study). Accurate emotion estimation requires first determining whether an emotion is present or absent; however, these studies do not focus on distinguishing emotional arousal intervals from non-arousal intervals.

- The proportion of older adults in the global population has been rising steadily in recent years, making population aging a global trend. However, the datasets used in existing emotion-recognition research have been drawn primarily from younger individuals, and datasets specifically targeting older adults have not been adequately explored.

- Previous research has demonstrated the significance of skin temperature variations and eye information in emotion recognition. Furthermore, multimodal approaches that combine multiple methods and features outperform unimodal approaches that rely on a single feature. However, combining thermal and visible images, particularly to integrate changes in skin temperature with variations in eye state, remains underexplored.

- A novel emotion-recognition method that uses skin temperature and eye-opening degree (EOD) to distinguish between emotional arousal and non-arousal states is proposed.

- The effectiveness of combining thermal and visible images, particularly for capturing skin temperature changes and EOD, is demonstrated.

- The effectiveness of noncontact features for emotion recognition is assessed.

- The proposed method is compared with existing emotion-recognition methods that are based on noncontact features.

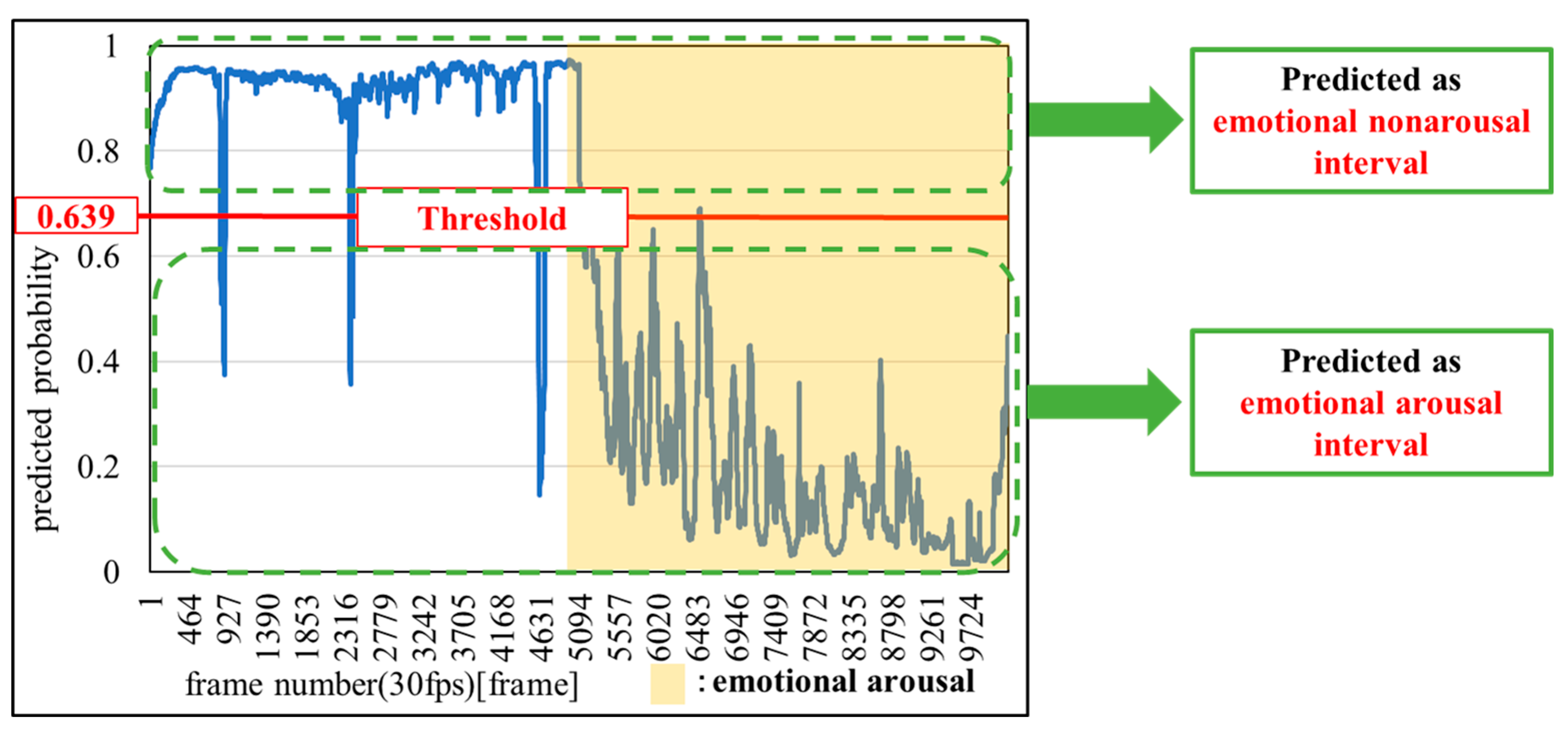

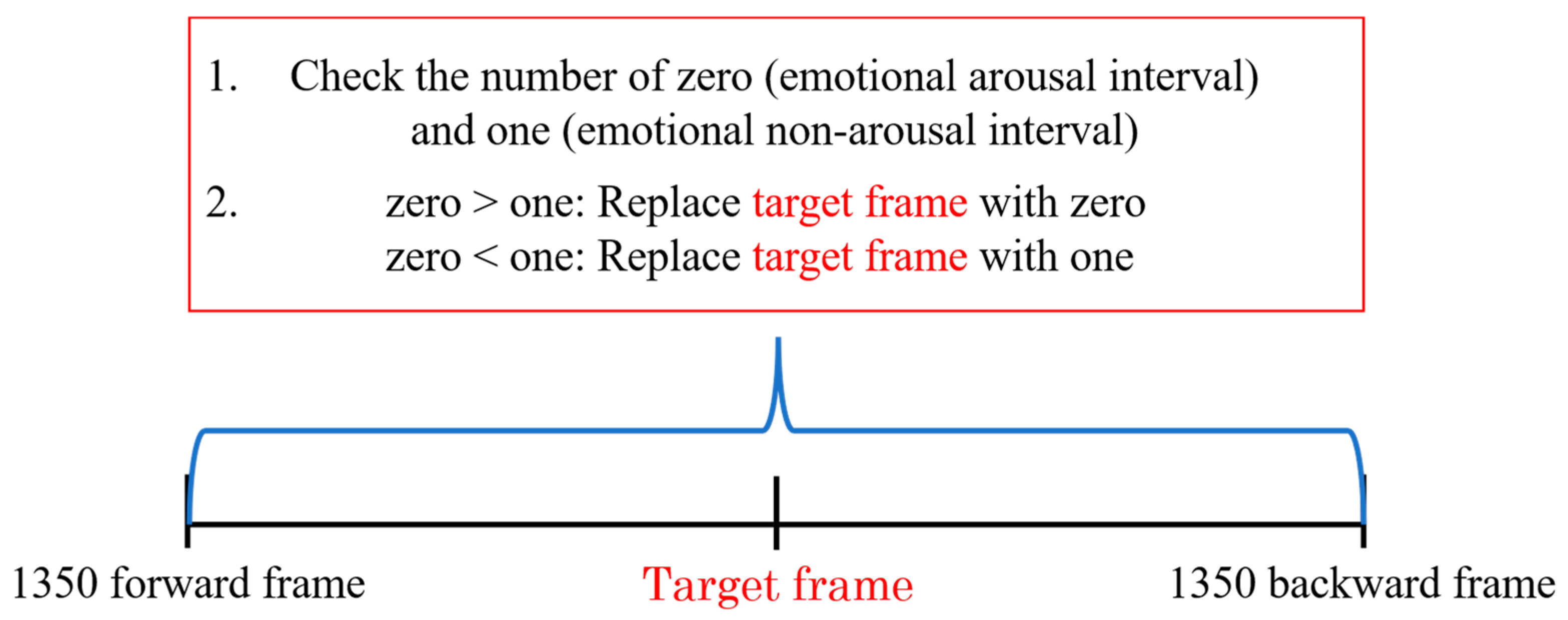

- The study examines whether emotion-recognition accuracy improves when the threshold for classifying emotional arousal and non-arousal is set automatically for each participant and each estimate is corrected using the preceding and subsequent estimates.

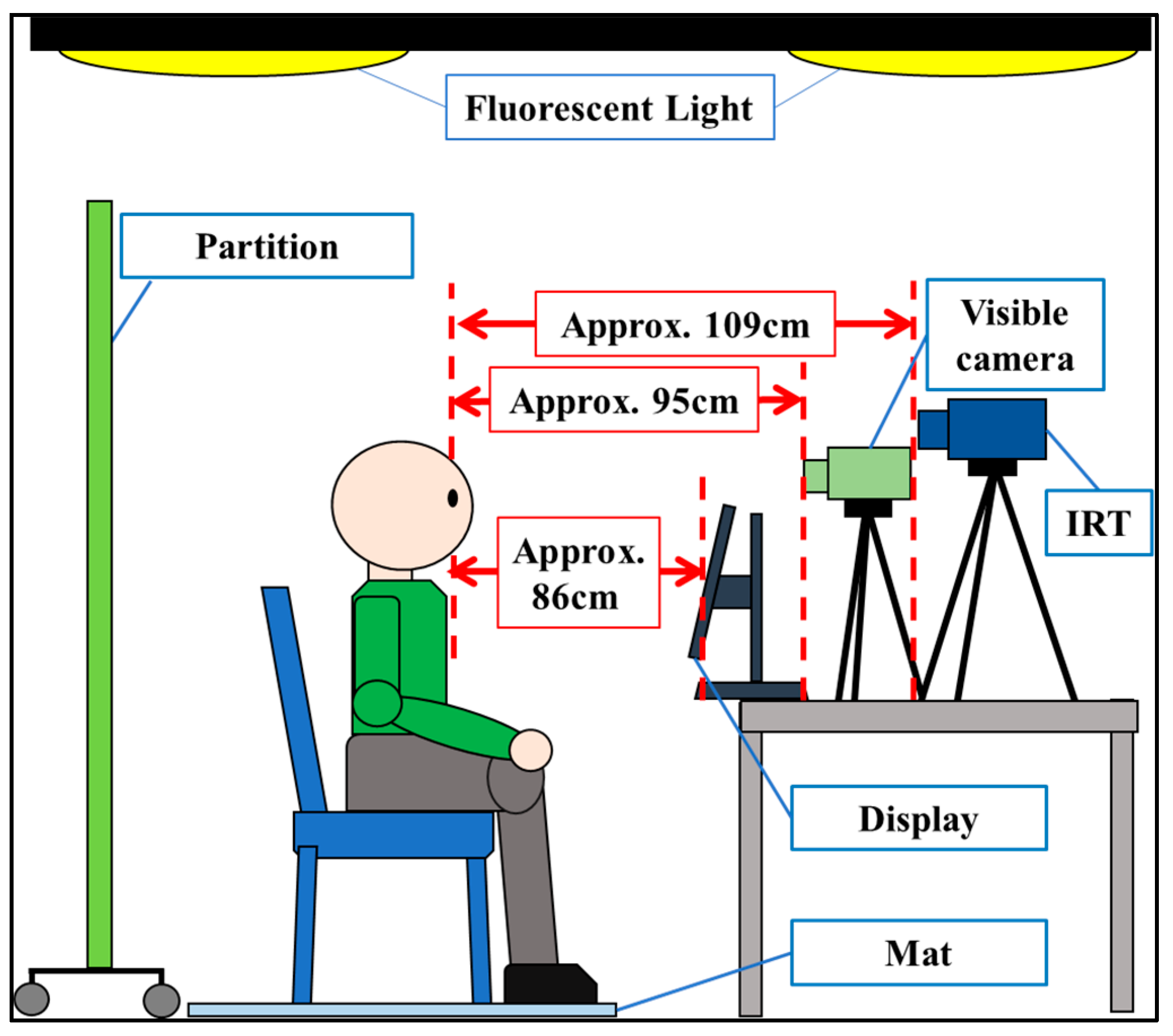

2. Data Acquisition

- Room temperature: 21.4–26.4 °C

- Humidity: 48.2–69.5%

- Illumination (above the participant): 703–889 lx

- Illumination (front of the participant): 255–361 lx

3. Proposed Methodology

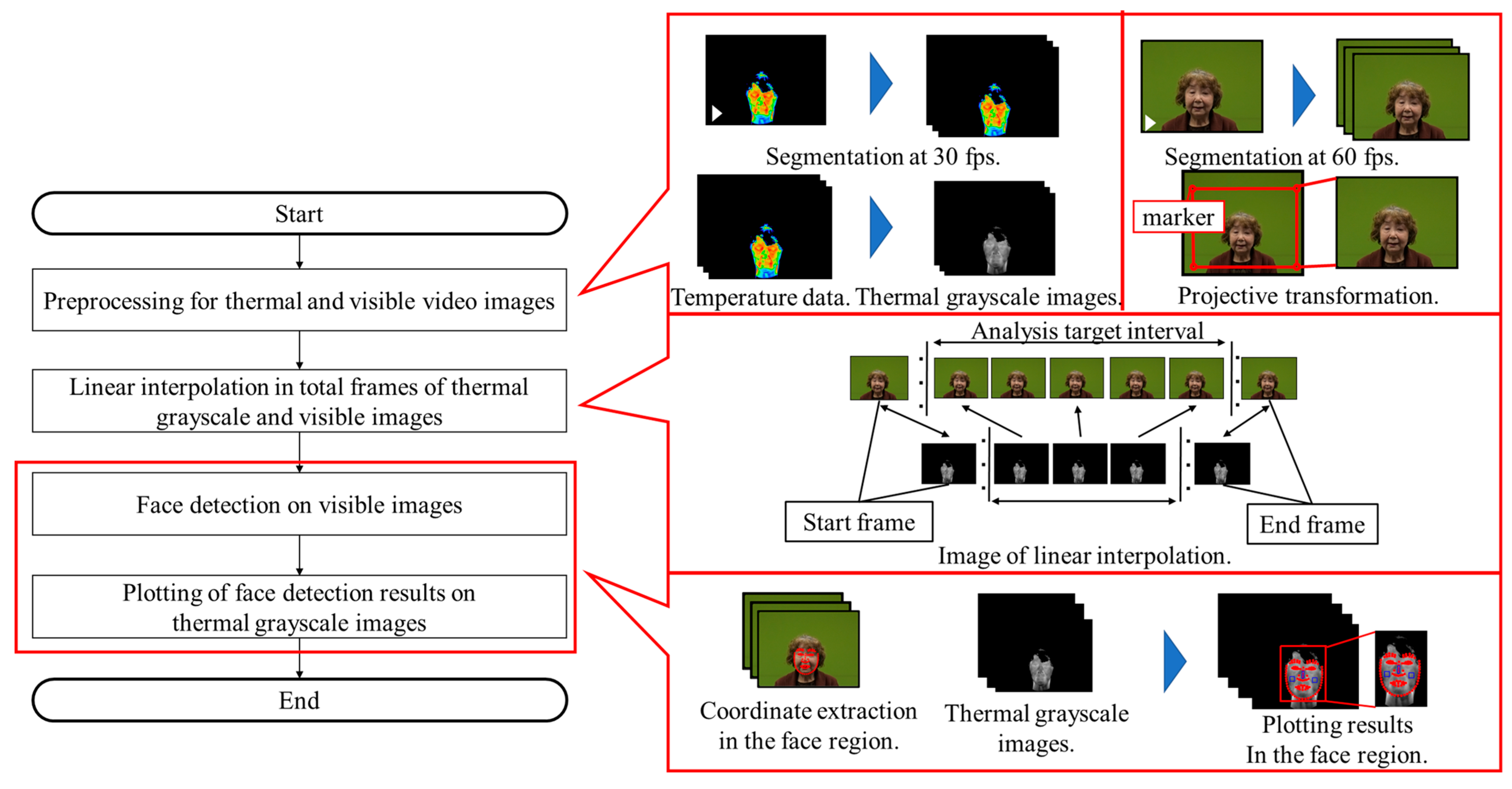

3.1. Face Detection Method

3.1.1. Preprocessing of Thermal and Visible Video Images

3.1.2. Linear Interpolation in Total Frames of Thermal Grayscale and Visible Images
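The heading above names linear interpolation across the total frames of the thermal grayscale and visible sequences. Assuming the aim is to bring two sequences with different frame counts to a common length, a minimal NumPy sketch could look like the following. The function name `resample_frames` is hypothetical, and the article's exact procedure may differ.

```python
import numpy as np


def resample_frames(frames: np.ndarray, target_count: int) -> np.ndarray:
    """Linearly interpolate a (T, H, W) frame stack to `target_count` frames.

    Hypothetical helper: each output frame is a weighted blend of the two
    nearest input frames on a shared time axis spanning the whole recording.
    """
    frames = np.asarray(frames, dtype=np.float64)
    src_count = frames.shape[0]
    if src_count == target_count:
        return frames.copy()
    # Positions of the output frames, expressed in input-frame units.
    pos = np.linspace(0.0, src_count - 1, target_count)
    lo = np.floor(pos).astype(int)
    hi = np.minimum(lo + 1, src_count - 1)
    # Broadcast the blend weight over the spatial axes.
    w = (pos - lo).reshape(-1, *([1] * (frames.ndim - 1)))
    return (1.0 - w) * frames[lo] + w * frames[hi]
```

For example, stretching a four-frame stack to seven frames inserts one blended frame between each original pair, so that both sequences can then be indexed frame by frame.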

3.1.3. Face Detection on Visible Images

3.1.4. Plotting of Face Detection Results on Thermal Grayscale Images

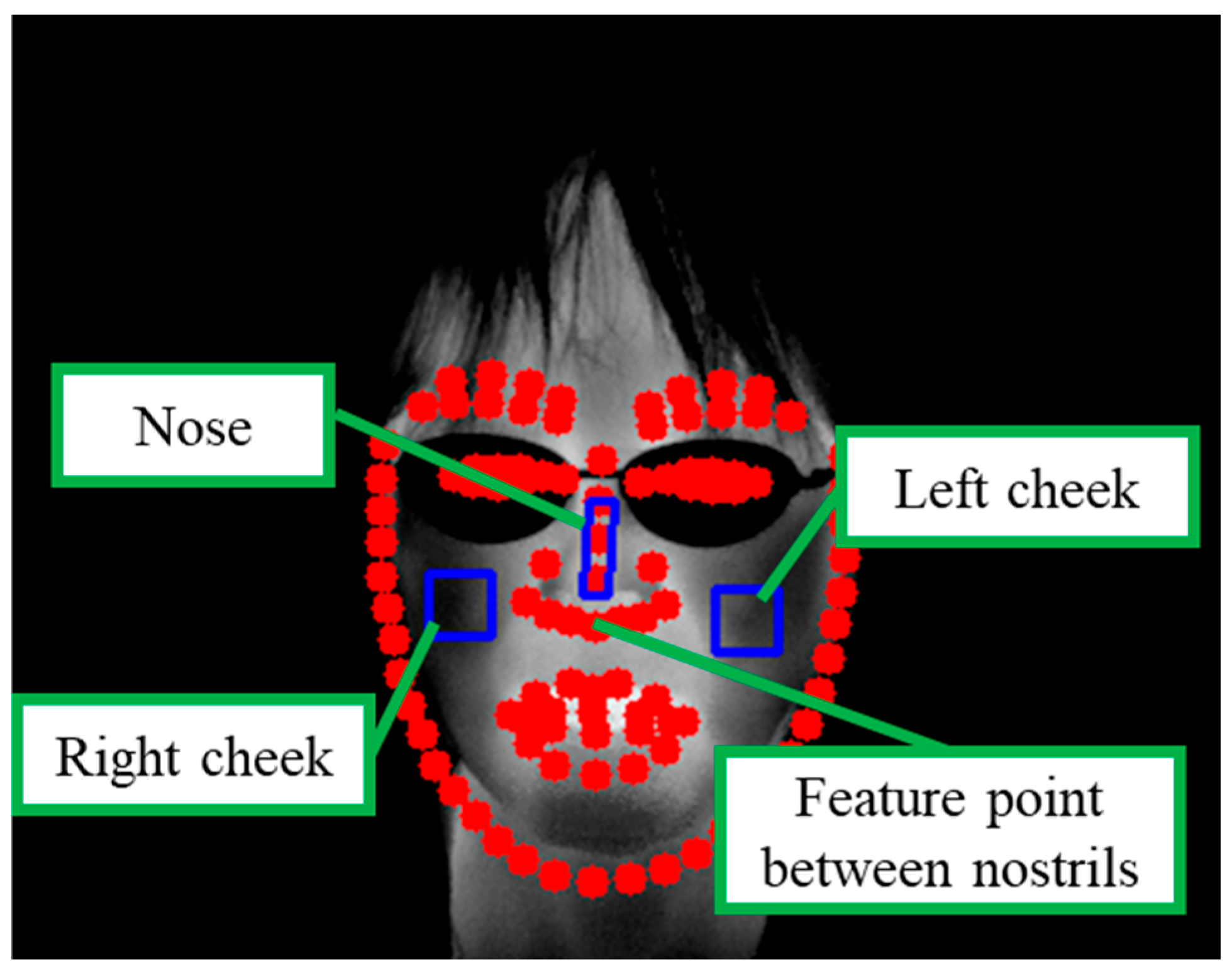
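Plotting a face box detected on the visible image onto the thermal grayscale image requires some mapping between the two cameras' pixel grids. The sketch below assumes a pre-calibrated, axis-aligned scale and offset between the cameras; the `Box` type, the `visible_to_thermal` helper, and all calibration values are hypothetical, and a real setup may need a full homography instead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box in pixel coordinates (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float


def visible_to_thermal(box: Box, scale_x: float, scale_y: float,
                       offset_x: float = 0.0, offset_y: float = 0.0) -> Box:
    """Map a face box from visible-image pixels to thermal-image pixels.

    Assumes a hypothetical calibration in which thermal = visible * scale + offset
    independently along each axis.
    """
    return Box(
        x1=box.x1 * scale_x + offset_x,
        y1=box.y1 * scale_y + offset_y,
        x2=box.x2 * scale_x + offset_x,
        y2=box.y2 * scale_y + offset_y,
    )
```

With a scale of 0.5 and offsets of (10, -5), a visible box (300, 150, 900, 750) maps to (160, 70, 460, 370) in thermal pixels.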

3.2. Setting ROIs

- Nose: Area between the apex and root of the nose, excluding the nostrils (10 × 30 pixels);

- Cheeks: Area below the eyes, excluding the eyes, nose, and mouth (20 × 20 pixels).
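The ROI sizes above (nose 10 × 30 pixels, cheeks 20 × 20 pixels) suggest a fixed-size box per region on each thermal frame. A minimal sketch of averaging the pixel values inside such a box follows; reading "10 × 30" as width × height and locating each ROI by a center point are assumptions, and `roi_mean` is a hypothetical helper.

```python
import numpy as np

# ROI sizes from the article, read as (width, height); the orientation is an assumption.
ROI_SIZES = {"nose": (10, 30), "right_cheek": (20, 20), "left_cheek": (20, 20)}


def roi_mean(frame: np.ndarray, center: tuple[int, int], size: tuple[int, int]) -> float:
    """Mean pixel value of a width x height box centred on (cx, cy).

    `frame` is indexed as frame[y, x]; the box is clipped to the frame borders.
    """
    cx, cy = center
    w, h = size
    x0 = max(cx - w // 2, 0)
    y0 = max(cy - h // 2, 0)
    x1 = min(x0 + w, frame.shape[1])
    y1 = min(y0 + h, frame.shape[0])
    return float(frame[y0:y1, x0:x1].mean())
```

Applied to every frame, this yields one time series per ROI; how the article obtains the ROI center points is not stated here, so any landmark-based placement would be an additional assumption.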

3.3. Feature Extraction

3.3.1. LT

3.3.2. LT Difference

- Nose and right cheek;

- Nose and left cheek;

- Right and left cheeks.
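Given per-frame LT series for the nose and both cheeks, the three pairs listed above can be turned into difference features frame by frame. A minimal sketch, assuming the sign convention "first ROI minus second ROI" (the article's convention is not stated here) and a hypothetical dictionary layout keyed by ROI name:

```python
import numpy as np

# The three ROI pairs listed in the article.
PAIRS = [("nose", "right_cheek"), ("nose", "left_cheek"), ("right_cheek", "left_cheek")]


def lt_differences(lt: dict[str, np.ndarray]) -> dict[str, np.ndarray]:
    """Frame-wise LT differences (first ROI minus second ROI) for each listed pair."""
    return {f"{a}-{b}": np.asarray(lt[a]) - np.asarray(lt[b]) for a, b in PAIRS}
```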

3.3.3. ATC

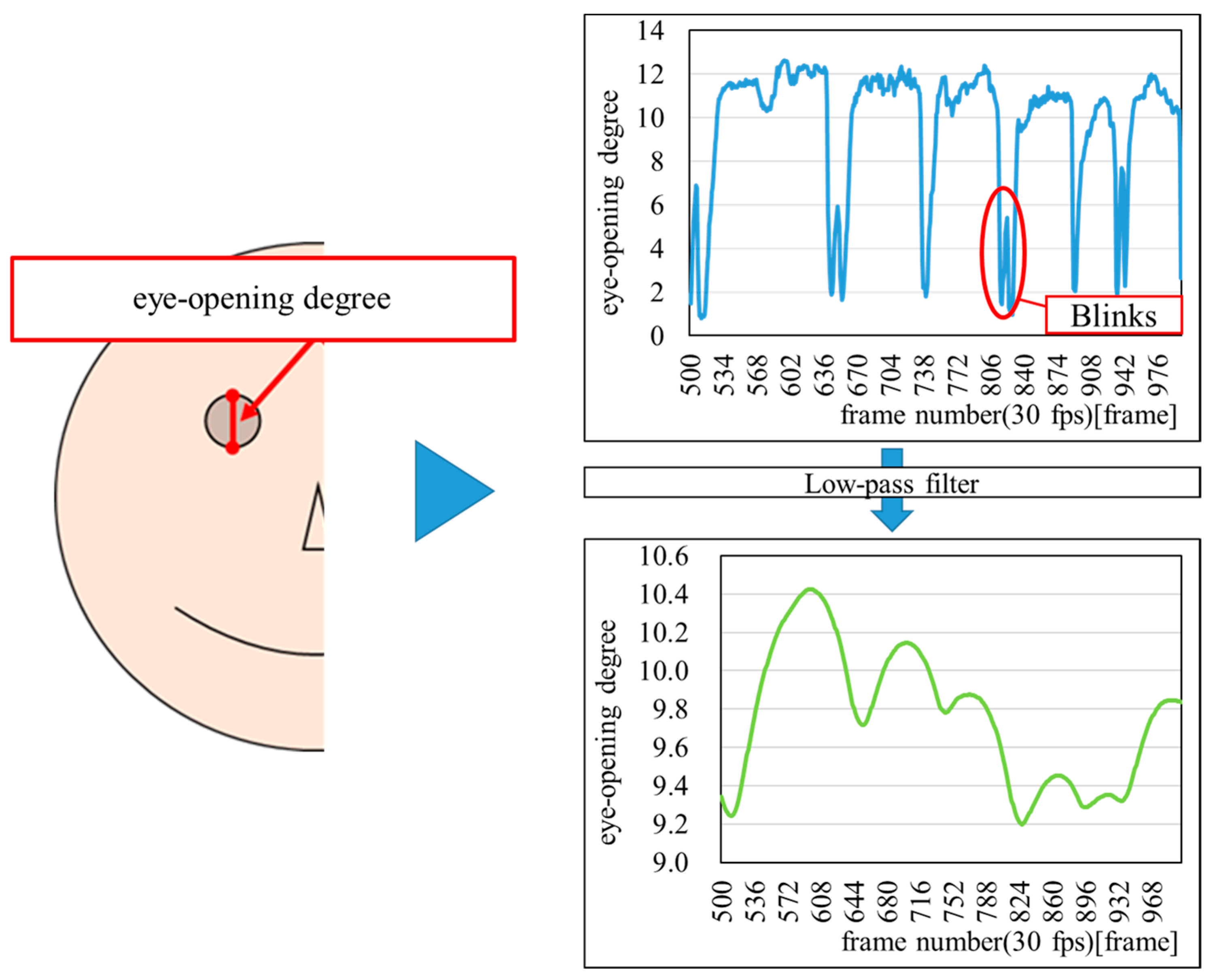
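The definition of the amount of temperature change (ATC) is not spelled out in this section. One plausible reading, assumed here purely for illustration, is the change in an ROI's LT relative to its value a fixed number of frames earlier. The 30-frame lag (about one second, matching the one-second lookback of 30 listed in the hyperparameter table) and the helper name are hypothetical.

```python
import numpy as np


def amount_of_temperature_change(lt: np.ndarray, lag: int = 30) -> np.ndarray:
    """Hypothetical ATC: LT at frame t minus LT at frame t - lag.

    The first `lag` frames have no earlier reference and are set to 0.
    """
    if lag < 1:
        raise ValueError("lag must be >= 1")
    lt = np.asarray(lt, dtype=np.float64)
    atc = np.zeros_like(lt)
    atc[lag:] = lt[lag:] - lt[:-lag]
    return atc
```

A baseline-relative definition (change from the value at the start of each clip) would be an equally plausible alternative; swapping it in only changes the subtraction line.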

3.3.4. EOD
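This section does not give the exact formula for EOD. As a stand-in, the sketch below uses the widely used eye aspect ratio (EAR) of Soukupová and Čech, which measures eye openness from six eyelid landmarks; this is a substituted technique, not necessarily the article's definition, and the landmark source is assumed.

```python
import math
from typing import Sequence

Point = tuple[float, float]


def eye_aspect_ratio(p: Sequence[Point]) -> float:
    """Eye aspect ratio from six landmarks p1..p6.

    p1 and p4 are the eye corners; p2, p3 lie on the upper lid and p6, p5 on the
    lower lid. EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|); larger means more open.
    """
    if len(p) != 6:
        raise ValueError("expected six landmarks")
    d = math.dist
    return (d(p[1], p[5]) + d(p[2], p[4])) / (2.0 * d(p[0], p[3]))
```

For an eye 3 units wide with both lid gaps of 2 units, the ratio is 4 / 6 ≈ 0.667; a closing eye drives it toward 0.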

3.4. Emotion Recognition

3.4.1. BLSTM
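The hyperparameter table lists a lookback of 30 (one second) and 11 input features for the BLSTM. A minimal sketch of slicing a per-frame feature series into overlapping windows of that shape follows; labelling each window with its last frame's label is an assumption, and `make_windows` is a hypothetical helper. The resulting array has the (samples, timesteps, features) layout that recurrent layers such as a bidirectional LSTM typically consume.

```python
import numpy as np


def make_windows(features: np.ndarray, labels: np.ndarray, lookback: int = 30):
    """Cut a (T, F) feature series into overlapping (lookback, F) windows.

    Returns X with shape (T - lookback + 1, lookback, F) and y with shape
    (T - lookback + 1,); each window takes the label of its last frame.
    """
    features = np.asarray(features, dtype=np.float32)
    labels = np.asarray(labels)
    n = features.shape[0] - lookback + 1
    if n <= 0:
        raise ValueError("series shorter than lookback")
    X = np.stack([features[i:i + lookback] for i in range(n)])
    y = labels[lookback - 1:]
    return X, y
```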

3.4.2. Classification Threshold
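The introduction describes thresholds set automatically for each participant and a correction of each estimate using the preceding and subsequent estimates. The sketch below illustrates both ideas with two swapped-in techniques that are assumptions, not the article's confirmed method: Otsu's method applied to one participant's predicted arousal probabilities, and a majority vote over neighbouring binary estimates.

```python
import numpy as np


def otsu_threshold(scores: np.ndarray, bins: int = 256) -> float:
    """Otsu's threshold on one participant's predicted arousal probabilities in [0, 1]."""
    scores = np.asarray(scores, dtype=np.float64)
    hist, edges = np.histogram(scores, bins=bins, range=(0.0, 1.0))
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist)                      # samples in bins 0..k
    w1 = w0[-1] - w0                          # samples above bin k
    m0 = np.cumsum(hist * centers)            # cumulative first moment
    mu0 = np.divide(m0, w0, out=np.zeros_like(m0), where=w0 > 0)
    mu1 = np.divide(m0[-1] - m0, w1, out=np.zeros_like(m0), where=w1 > 0)
    between = w0 * w1 * (mu0 - mu1) ** 2      # between-class variance (unnormalised)
    # Upper edge of the best split bin: scores >= threshold are classed as arousal.
    return float(edges[np.argmax(between) + 1])


def majority_smooth(labels: np.ndarray, radius: int = 2) -> np.ndarray:
    """Replace each binary estimate by the majority of itself and `radius` neighbours per side."""
    labels = np.asarray(labels, dtype=int)
    padded = np.pad(labels, radius, mode="edge")   # repeat end values at the borders
    window = 2 * radius + 1                        # odd window, so no ties
    counts = np.convolve(padded, np.ones(window, dtype=int), mode="valid")
    return (counts * 2 > window).astype(int)
```

Usage: `labels = (scores >= otsu_threshold(scores)).astype(int)` followed by `majority_smooth(labels, radius=1)` removes isolated single-frame flips such as a lone arousal estimate surrounded by non-arousal estimates.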

3.5. Performance Metrics
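The results are reported as F1 scores per participant and as an average. A minimal sketch of a binary F1 score follows, assuming emotional arousal (label 1) is the positive class; the article may use a different averaging scheme. It then checks that the arithmetic mean of the per-participant F1 scores reported in the results table reproduces the stated 92.21%.

```python
import numpy as np


def f1_score(y_true, y_pred) -> float:
    """Binary F1 with emotional arousal (label 1) as the positive class."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = int(np.sum(y_true & y_pred))
    fp = int(np.sum(~y_true & y_pred))
    fn = int(np.sum(y_true & ~y_pred))
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Per-participant F1 scores (%) as reported in the article's results table.
PER_PARTICIPANT_F1 = {"A": 82.28, "B": 86.49, "C": 98.76, "D": 90.24, "E": 97.32,
                      "F": 97.89, "G": 96.76, "H": 94.95, "I": 85.24}

# Arithmetic mean over the nine participants: 829.93 / 9 ≈ 92.21.
average_f1 = round(sum(PER_PARTICIPANT_F1.values()) / len(PER_PARTICIPANT_F1), 2)
```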

3.6. Emotion Recognition

4. Analysis of Results and Discussion

5. Conclusions

- The proposed method achieves an average F1 score of 92.21% in classifying emotional arousal and non-arousal intervals.

- The proposed method outperforms existing emotion-recognition methods that rely on noncontact information.

- The proposed method effectively integrates thermal and visible images, providing enhanced recognition performance.

- The proposed method highlights the significance of skin temperature variations and eye openness in emotion recognition.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ECG | Electrocardiogram |
| EMG | Electromyogram |
| EEG | Electroencephalography |
| HR | Heart Rate |
| GSR | Galvanic Skin Response |
| EDA | Electrodermal Activity |
| RSP | Respiration |
| MFCC | Mel Frequency Cepstral Coefficient |
| GWO | Gray Wolf Optimization |
| GL-MFO | Grunwald–Letnikov Moth Flame Optimization |
| IRT | Infrared Thermography |
| ROIs | Regions of Interest |
| LT | Luminance Temperature |
| ATC | Amount of Temperature Change |
| EOD | Eye-opening Degree |
| BLSTM | Bidirectional Long Short-term Memory |

References

- Keltner, D.; Kring, A.M. Emotion, social function and psychopathology. Rev. Gen. Psychol. 1998, 2, 320–342. [Google Scholar] [CrossRef]

- Kaplan, S.; Cortina, J.; Ruark, G.; Laport, K.; Nicolaides, V. The role of organizational leaders in employee emotion management: A theoretical model. Leadersh. Q. 2014, 25, 563–580. [Google Scholar] [CrossRef]

- Liu, M.; Duan, Y.; Ince, R.A.A.; Chen, C.; Garrod, O.G.B.; Schyns, P.G.; Jack, R.E. Facial expressions elicit multiplexed perceptions of emotion categories and dimensions. Curr. Biol. 2022, 32, 200–209. [Google Scholar] [CrossRef]

- Zhang, J.; Yin, Z.; Chen, P.; Nichele, S. Emotion recognition using multi-modal data and machine learning techniques: A tutorial and review. Inf. Fusion 2020, 59, 103–126. [Google Scholar] [CrossRef]

- Nayak, S.; Nagesh, B.; Routray, A.; Sarma, M. A human-computer interaction framework for emotion recognition through time-series thermal video sequences. Comput. Electr. Eng. 2021, 93, 107280. [Google Scholar] [CrossRef]

- Dou, W.; Wang, K.; Yamauchi, T. Face Expression Recognition with Vision Transformer and Local Mutual Information Maximization. IEEE Access 2024, 12, 169263–169276. [Google Scholar] [CrossRef]

- Kikuchi, R.; Shirai, H.; Chikako, I.; Suehiro, K.; Takahashi, N.; Saito, H.; Kobayashi, T.; Watanabe, F.; Satake, H.; Sato, N.; et al. Feature Analysis of Facial Color Information During Emotional Arousal in Japanese Older Adults Playing eSports. Sensors 2025, 25, 5725. [Google Scholar] [CrossRef] [PubMed]

- Meléndez, J.C.; Satorres, E.; Reyes-Olmedo, M.; Delhom, I.; Real, E.; Lora, Y. Emotion recognition changes in a confinement situation due to COVID-19. J. Environ. Psychol. 2020, 72, 101518. [Google Scholar] [CrossRef] [PubMed]

- Ziccardi, S.; Crescenzo, F.; Calabrese, M. “What Is Hidden behind the Mask?” Facial Emotion Recognition at the Time of COVID-19 Pandemic in Cognitively Normal Multiple Sclerosis Patients. Diagnostics 2022, 12, 47. [Google Scholar] [CrossRef]

- Kapitány-Fövény, M.; Vetró, M.; Révy, G.; Fabó, D.; Szirmai, D.; Hullám, G. EEG based depression detection by machine learning: Does inner or overt speech condition provide better biomarkers when using emotion words as experimental cues? J. Psychiatr. Res. 2024, 178, 66–76. [Google Scholar] [CrossRef]

- Priya, P.; Firdaus, M.; Ekbal, A. A multi-task learning framework for politeness and emotion detection in dialogues for mental health counselling and legal aid. Expert Syst. Appl. 2023, 224, 120025. [Google Scholar] [CrossRef]

- Denervaud, S.; Mumenthaler, C.; Gentaz, E.; Sander, D. Emotion recognition development: Preliminary evidence for an effect of school pedagogical practices. Learn. Instr. 2020, 69, 101353. [Google Scholar] [CrossRef]

- Ungureanu, F.; Lupu, R.G.; Cadar, A.; Prodan, A. Neuromarketing and visual attention study using eye tracking techniques. In Proceedings of the 2017 21st International Conference on System Theory, Control and Computing (ICSTCC), Sinaia, Romania, 19–21 October 2017; pp. 553–557. [Google Scholar] [CrossRef]

- Mancini, E.; Galassi, A.; Ruggeri, F.; Torroni, P. Disruptive situation detection on public transport through speech emotion recognition. Intell. Syst. Appl. 2024, 21, 200305. [Google Scholar] [CrossRef]

- Wang, J.; Gong, Y. Recognition of multiple drivers’ emotional state. In Proceedings of the 2008 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4. [Google Scholar] [CrossRef]

- Ekman, P.; Friesen, W.V. Unmasking the Face: A Guide to Recognizing Emotions from Facial Clues; Malor Books: Los Altos, CA, USA, 2003; p. 22. [Google Scholar]

- Russell, J.A. A circumplex model of affect. J. Pers. Soc. Psychol. 1980, 39, 1161–1178. [Google Scholar] [CrossRef]

- Birdwhistell, R.L. Kinesics and Context: Essays on Body Motion Communication; University of Pennsylvania Press: Philadelphia, PA, USA, 1970. [Google Scholar]

- Almeida, J.; Vilaça, L.; Teixeira, I.N.; Viana, P. Emotion Identification in Movies through Facial Expression Recognition. Appl. Sci. 2021, 11, 6827. [Google Scholar] [CrossRef]

- Dumitru; Goodfellow, I.; Cukierski, W.; Bengio, Y. Challenges in Representation Learning: Facial Expression Recognition Challenge, Kaggle. 2013; Available online: https://kaggle.com/competitions/challenges-in-representation-learning-facial-expression-recognition-challenge (accessed on 14 September 2025).

- Dhall, A.; Goecke, R.; Lucey, S.; Gedeon, T. Static facial expression analysis in tough conditions: Data, evaluation protocol and benchmark. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 2106–2112. [Google Scholar] [CrossRef]

- Manalu, H.V.; Rifai, A.P. Detection of human emotions through facial expressions using hybrid convolutional neural network-recurrent neural network algorithm. Intell. Syst. Appl. 2024, 21, 200339. [Google Scholar] [CrossRef]

- Saganowski, S.; Komoszyńska, J.; Behnke, M.; Perz, B.; Kunc, D.; Klich, B.; Kaczmarek, Ł.D.; Kazienko, P. Emognition dataset: Emotion recognition with self-reports, facial expressions, and physiology using wearables. Sci. Data 2022, 9, 158. [Google Scholar] [CrossRef]

- Alhagry, S.; Fahmy, A.A.; El-Khoribi, R.A. Emotion recognition based on EEG using LSTM recurrent neural network. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 355–358. [Google Scholar] [CrossRef]

- Saha, P.; Kunju, A.K.A.; Majid, M.E.; Kashem, S.B.A.; Nashbat, M.; Ashraf, A.; Hasan, M.; Khandakar, A.; Hossain, M.S.; Alqahtani, A.; et al. Novel multimodal emotion detection method using Electroencephalogram and Electrocardiogram signals. Biomed. Signal Process. Control 2024, 92, 106002. [Google Scholar] [CrossRef]

- Abtahi, F.; Ro, T.; Li, W.; Zhu, Z. Emotion Analysis Using Audio/Video, EMG and EEG: A Dataset and Comparison Study. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 10–19. [Google Scholar] [CrossRef]

- Cruz-Albarran, I.A.; Benitez-Rangel, J.P.; Osornio-Rios, R.A.; Morales-Hernandez, L.A. Human emotions detection based on a smart-thermal system of thermographic images. Infrared Phys. Technol. 2017, 81, 250–261. [Google Scholar] [CrossRef]

- Nandini, D.; Yadav, J.; Rani, A.; Singh, V. Design of subject independent 3D VAD emotion detection system using EEG signals and machine learning algorithms. Biomed. Signal Process. Control 2023, 85, 104894. [Google Scholar] [CrossRef]

- Lee, M.S.; Lee, Y.K.; Pae, D.S.; Lim, M.T.; Kim, D.W.; Kang, T.K. Fast Emotion Recognition Based on Single Pulse PPG Signal with Convolutional Neural Network. Appl. Sci. 2019, 9, 3355. [Google Scholar] [CrossRef]

- Mellouk, W.; Handouzi, W. CNN-LSTM for automatic emotion recognition using contactless photoplythesmographic signals. Biomed. Signal Process. Control 2023, 85, 104907. [Google Scholar] [CrossRef]

- Umair, M.; Rashid, N.; Shahbaz Khan, U.; Hamza, A.; Iqbal, J. Emotion Fusion-Sense (Emo Fu-Sense)—A novel multimodal emotion classification technique. Biomed. Signal Process. Control 2024, 94, 106224. [Google Scholar] [CrossRef]

- Gannouni, S.; Aledaily, A.; Belwafi, K.; Aboalsamh, H. Emotion detection using electroencephalography signals and a zero-time windowing-based epoch recognition and relevant electrode identification. Sci. Rep. 2021, 11, 7071. [Google Scholar] [CrossRef]

- Hu, F.; He, K.; Wang, C.; Zheng, Q.; Zhou, B.; Li, G.; Sun, Y. STRFLNet: Spatio-Temporal Representation Fusion Learning Network for EEG-Based Emotion Recognition. IEEE Trans. Affect. Comput. 2025, 1–16. [Google Scholar] [CrossRef]

- Kachare, P.H.; Sangle, S.B.; Puri, D.V.; Khubrani, M.M.; Al-Shourbaji, I. STEADYNet: Spatiotemporal EEG Analysis for Dementia Detection Using Convolutional Neural Network. Cogn. Neurodyn. 2024, 18, 3195–3208. [Google Scholar] [CrossRef]

- Liu, H.; Lou, T.; Zhang, Y.; Wu, Y.; Xiao, Y.; Jensen, C.S.; Zhang, D. EEG-Based Multimodal Emotion Recognition: A Machine Learning Perspective. IEEE Trans. Instrum. Meas. 2024, 73, 1–29. [Google Scholar] [CrossRef]

- Pradhan, A.; Srivastava, S. Hierarchical extreme puzzle learning machine-based emotion recognition using multimodal physiological signals. Biomed. Signal Process. Control 2023, 83, 104624. [Google Scholar] [CrossRef]

- Schmidt, P.; Reiss, A.; Duerichen, R.; Marberger, C.; Laerhoven, K.V. Introducing WESAD, a Multimodal Dataset for Wearable Stress and Affect Detection. In Proceedings of the ICMI ’18: The 20th ACM International Conference on Multimodal Interaction, Boulder, CO, USA, 16–20 October 2018; pp. 400–408. [Google Scholar] [CrossRef]

- Jaswal, R.A.; Dhingra, S. Empirical analysis of multiple modalities for emotion recognition using convolutional neural network. Meas. Sens. 2023, 26, 100716. [Google Scholar] [CrossRef]

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61. [Google Scholar] [CrossRef]

- Zhang, J.; Zheng, K.; Mazhar, S.; Fu, X.; Kong, J. Trusted emotion recognition based on multiple signals captured from video. Expert Syst. Appl. 2023, 233, 120948. [Google Scholar] [CrossRef]

- Chatterjee, S.; Saha, D.; Sen, S.; Oliva, D.; Sarkar, R. Moth-flame optimization based deep feature selection for facial expression recognition using thermal images. Multimed. Tools Appl. 2024, 83, 11299–11322. [Google Scholar] [CrossRef]

- Kopaczka, M.; Kolk, R.; Merhof, D. A fully annotated thermal face database and its application for thermal facial expression recognition. In Proceedings of the 2018 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Houston, TX, USA, 14–17 May 2018; pp. 1–6. [Google Scholar] [CrossRef]

- Yamada, M.; Kageyama, Y. Temperature analysis of face regions based on degree of emotion of joy. Int. J. Innov. Comput. Inf. Control 2022, 18, 1383–1394. [Google Scholar]

- Bhattacharyya, A.; Chatterjee, S.; Sen, S.; Sinitca, A.; Kaplun, D.; Sarkar, R. A deep learning model for classifying human facial expressions from infrared thermal images. Sci. Rep. 2021, 11, 20696. [Google Scholar] [CrossRef]

- Sathyamoorthy, B.; Snehalatha, U.; Rajalakshmi, T. Facial emotion detection of thermal and digital images based on machine learning techniques. Biomed. Eng.—Appl. Basis Commun. 2023, 35, 2250052. [Google Scholar] [CrossRef]

- Melo, W.C.; Granger, E.; Lopez, M.B. Facial expression analysis using Decomposed Multiscale Spatiotemporal Networks. Expert Syst. Appl. 2024, 236, 121276. [Google Scholar] [CrossRef]

- Zhang, Y.; Cheng, C.; Zhang, Y. Multimodal emotion recognition based on manifold learning and convolution neural network. Multimed. Tools Appl. 2022, 81, 33253–33268. [Google Scholar] [CrossRef]

- Tanabe, R.; Kageyama, Y.; Zou, M. Emotional Arousal Recognition by LSTM Model Based on Time-Series Thermal and Visible Images. In Proceedings of the 10th IIAE International Conference on Intelligent Systems and Image Processing 2023 (ICISIP2023), Beppu, Japan, 4–8 September 2023; p. GS2–1. [Google Scholar]

- Tanabe, R.; Kikuchi, R.; Zou, M.; Kageyama, Y.; Suehiro, K.; Takahashi, N.; Saito, H.; Kobayashi, T.; Watanabe, F.; Satake, H.; et al. Feature Selection for Emotion Recognition using Time-Series Facial Skin Temperature and Eye Opening Degree. In Proceedings of the 11th IIAE International Conference on Intelligent Systems and Image Processing 2024 (ICISIP2024), Ehime, Japan, 13–17 September 2024; p. GS7–3. [Google Scholar]

- Tanabe, R.; Kikuchi, R.; Zou, M.; Kageyama, Y.; Suehiro, K.; Takahashi, N.; Saito, H.; Kobayashi, T.; Watanabe, F.; Satake, H.; et al. Emotional Recognition Method through Fusion of Temporal Skin Temperature and Eye State Changes. In Proceedings of the 13th International Conference on Soft Computing and Intelligent Systems and 25th International Symposium on Advanced Intelligent Systems 2024 (SCIS&ISIS2024), Himeji, Japan, 9–13 November 2024; p. SS9–1. [Google Scholar]

- Nippon Avionics Co., Ltd. R500EX-S Japanese Version Catalog. Available online: http://www.avio.co.jp/products/infrared/lineup/pdf/catalog-r500exs-jp.pdf (accessed on 14 September 2025).

- Nippon Avionics Co., Ltd. R550S Japanese Version Catalog. Available online: https://www.avio.co.jp/products/infrared/lineup/pdf/catalog-r550-jp.pdf (accessed on 14 September 2025).

- Panasonic Co., Ltd. Digital 4K Video Camera HC-VX2M. Available online: https://panasonic.jp/dvc/c-db/products/HC-VX2M.html (accessed on 14 September 2025).

- Insightface. Available online: https://insightface.ai/ (accessed on 14 September 2025).

- Chen, A.; Wang, F.; Liu, W.; Chang, S.; Wang, H.; He, J.; Huang, Q. Multi-information fusion neural networks for arrhythmia automatic detection. Comput. Methods Programs Biomed. 2020, 193, 105479. [Google Scholar] [CrossRef]

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980. [Google Scholar] [CrossRef]

- Heaton, J. Ian Goodfellow, Yoshua Bengio, and Aaron Courville: Deep learning. Genet. Program. Evolvable Mach. 2018, 19, 305–307. [Google Scholar] [CrossRef]

- Taylor, J.B. My Stroke of Insight; Yellow Kite: London, UK, 2009. [Google Scholar]

| Author | Techniques | Dataset | Targets | Features |
|---|---|---|---|---|
| Almeida et al. [19] | Xception | FER2013 | Natural emotions | Facial expression |
| | | SFEW | | |
| Manalu et al. [22] | Custom CNN-RNN | Emognition Wearable Dataset 2020 | Natural emotions | Facial expression |
| | InceptionV3-RNN | | | |
| | MobileNetV2-RNN | | | |
| Pradhan et al. [36] | HEPLM | WESAD | Natural emotions | ECG, EDA, EMG, RSP, skin temperature |
| Jaswal et al. [38] | GWO + CNN | Personal | Natural emotions | EEG, MFCC |
| Zhang et al. [40] | Mask R-CNN | Personal | Natural emotions | HR (noncontact) |
| | | | | Eye state change |
| | | | | HR (noncontact), eye state change, facial expression |
| Chatterjee et al. [41] | MobileNet | Thermal Face Database | Intentional emotions | Thermal images (skin temperature) |
| | MobileNet + GL-MFO | | | |

| Types of Emotions |
|---|
| Sympathy |
| Encouragement |
| Gratitude |
| Surprise |
| Impression |
| Admiration |
| High Praise |
| Amusement |
| Interest |
| Concern |
| Concentration |
| Disappointment |
| Sadness |
| Boredom |

| Hyperparameter | Value |
|---|---|
| Batch size | 1024 |
| Lookback | 30 (one second) |
| Intermediate layer | 100 |
| Epochs | 100 |
| Loss function | Binary cross-entropy |
| Optimizer | Adam [56] |
| Number of features | 11 |

| Participant | F1 Score (%) |
|---|---|
| A | 82.28 |
| B | 86.49 |
| C | 98.76 |
| D | 90.24 |
| E | 97.32 |
| F | 97.89 |
| G | 96.76 |
| H | 94.95 |
| I | 85.24 |
| Average | 92.21 |

| Author | Techniques | Dataset | Features | F1 Score (%) |
|---|---|---|---|---|
| The authors of the present study | BLSTM | Personal | Skin temperature and eye-opening degree | 92.21 |
| Almeida et al. [19] | Xception | FER2013 | Facial expression | 84.74 |
| | | SFEW | | 70.02 |
| Manalu et al. [22] | Custom CNN–RNN | Emognition Wearable Dataset 2020 | Facial expression | 72.12 |
| | Inception V3–RNN | | | 71.34 |
| | MobileNet V2–RNN | | | 61.07 |
| Pradhan et al. [36] | HEPLM | WESAD | ECG, EDA, EMG, RSP, and skin temperature | 98.21 |
| Jaswal et al. [38] | GWO + CNN | Personal | EEG and MFCC | 98.08 |
| Zhang et al. [40] | Mask R-CNN | Personal | HR (noncontact) | 58.28 |
| | | | Eye state change | 83.10 |
| | | | HR (noncontact), eye state change, and facial expression | 84.99 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tanabe, R.; Kikuchi, R.; Zou, M.; Suehiro, K.; Takahashi, N.; Saito, H.; Kobayashi, T.; Satake, H.; Sato, N.; Kageyama, Y. Emotion Recognition Using Temporal Facial Skin Temperature and Eye-Opening Degree During Digital Content Viewing for Japanese Older Adults. Sensors 2025, 25, 6545. https://doi.org/10.3390/s25216545
