Fall Detection in Elderly People: A Systematic Review of Ambient Assisted Living and Smart Home-Related Technology Performance

Highlights

- Non-wearable sensors and hybrid solutions (wearable + non-wearable sensors) achieved the highest fall detection performance.

- Deep learning methods produced the best performance results.

- This work presents a systematic review of the performance of fall detection systems.

- It identifies the performance advantages of different solutions so that researchers, practitioners, and policymakers can design and implement more effective fall detection systems.

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

3.1. Search Strategy

3.2. Selection Criteria

3.3. Data Extraction and Classification

3.4. Data Analysis

3.5. Statistical Analysis

4. Results

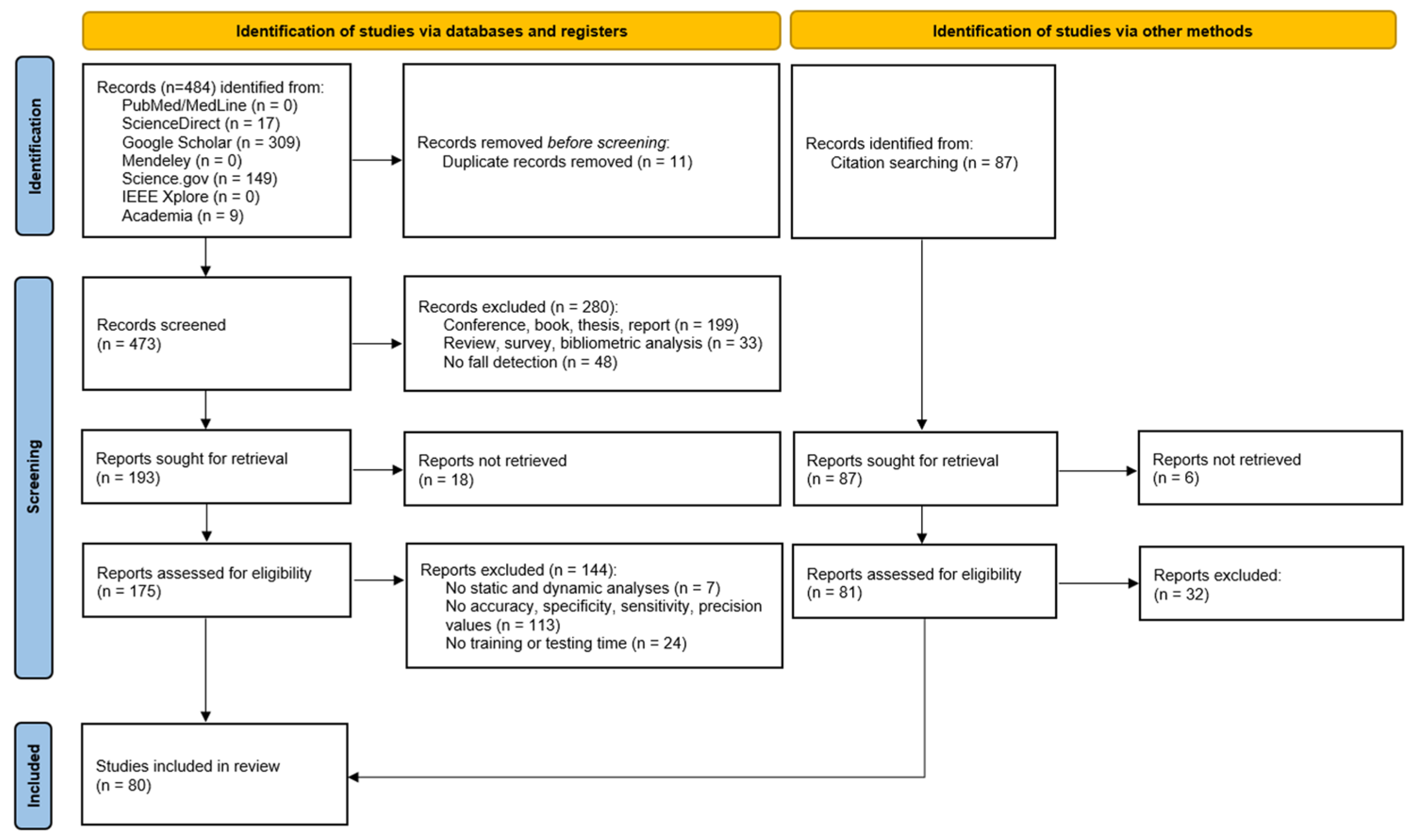

4.1. Search Results

4.2. Study Characteristics

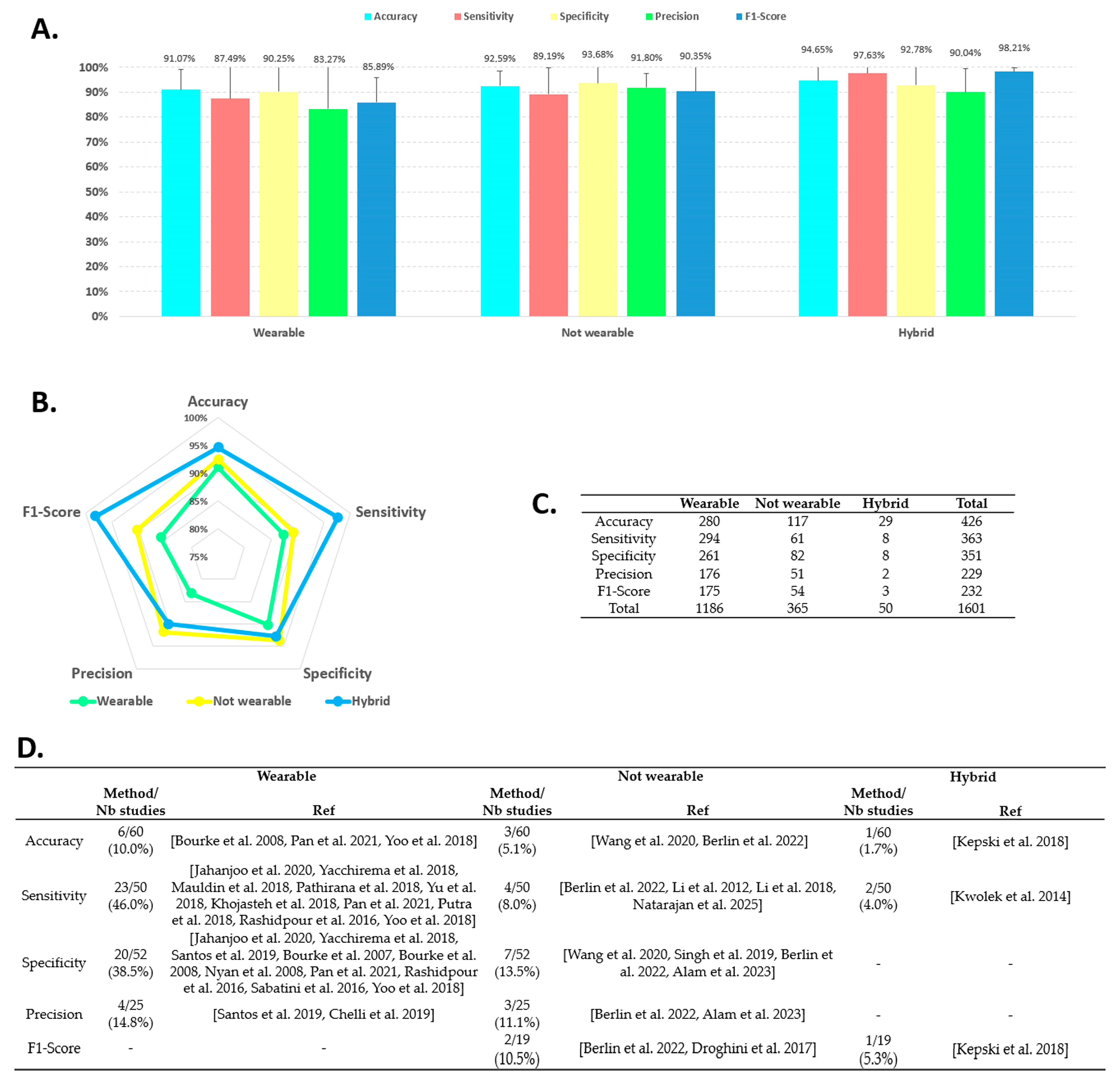

4.3. AAL Fall Detection Performance per Sensor Category

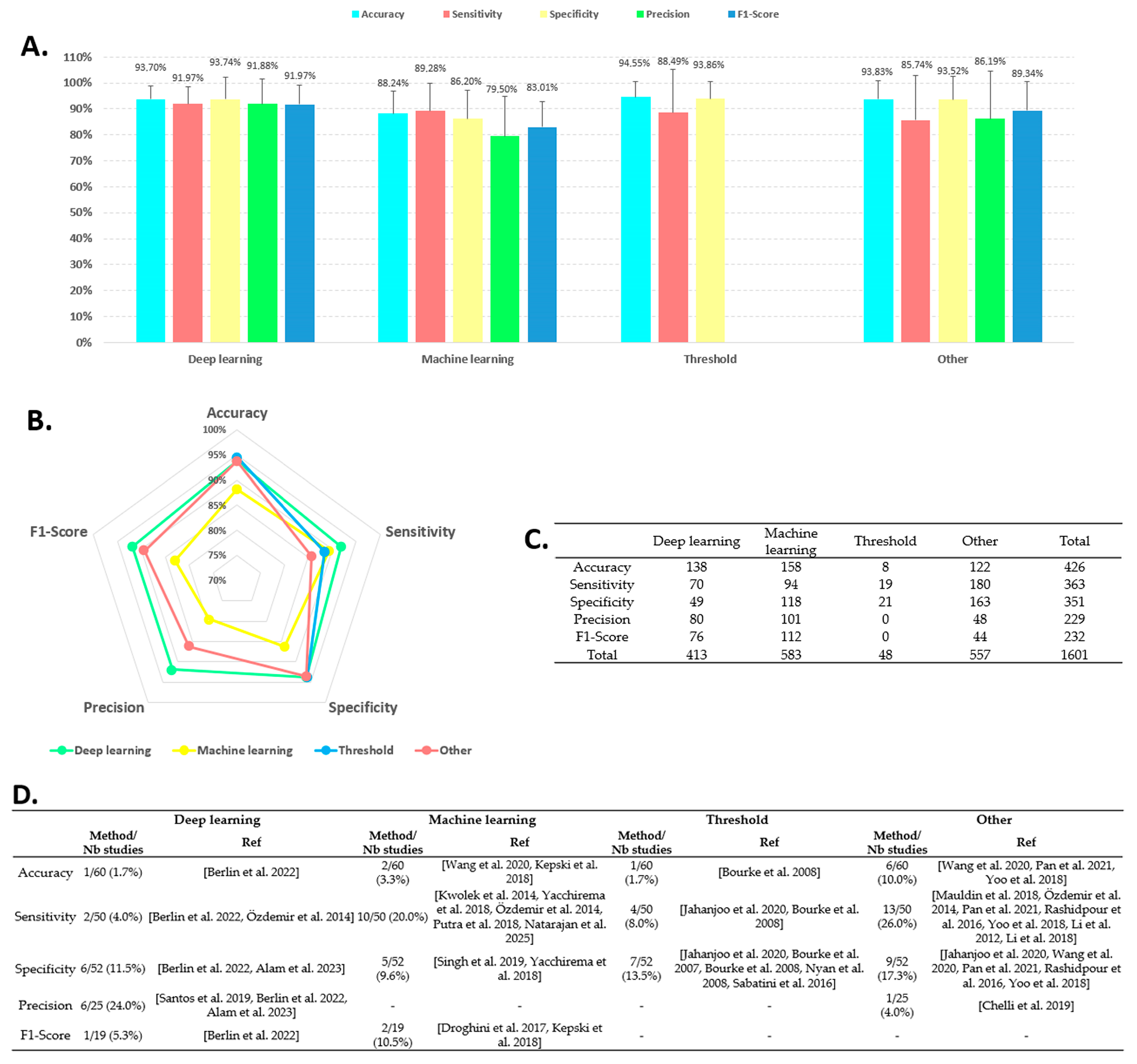

4.4. AAL Fall Detection Performance per Method

4.5. AAL Fall Detection with 100% Performance

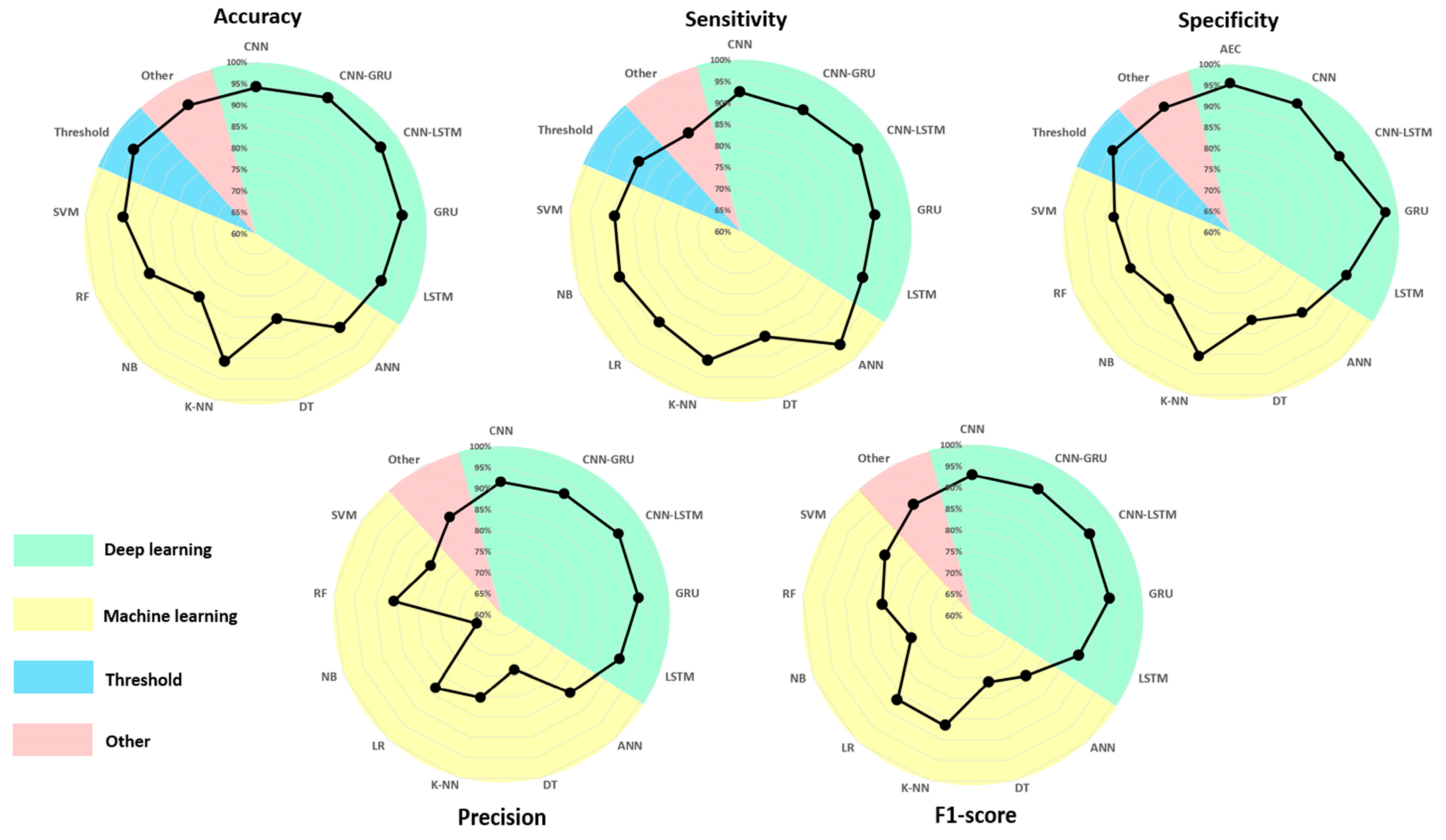

4.6. AAL Fall Detection Performance per Algorithm

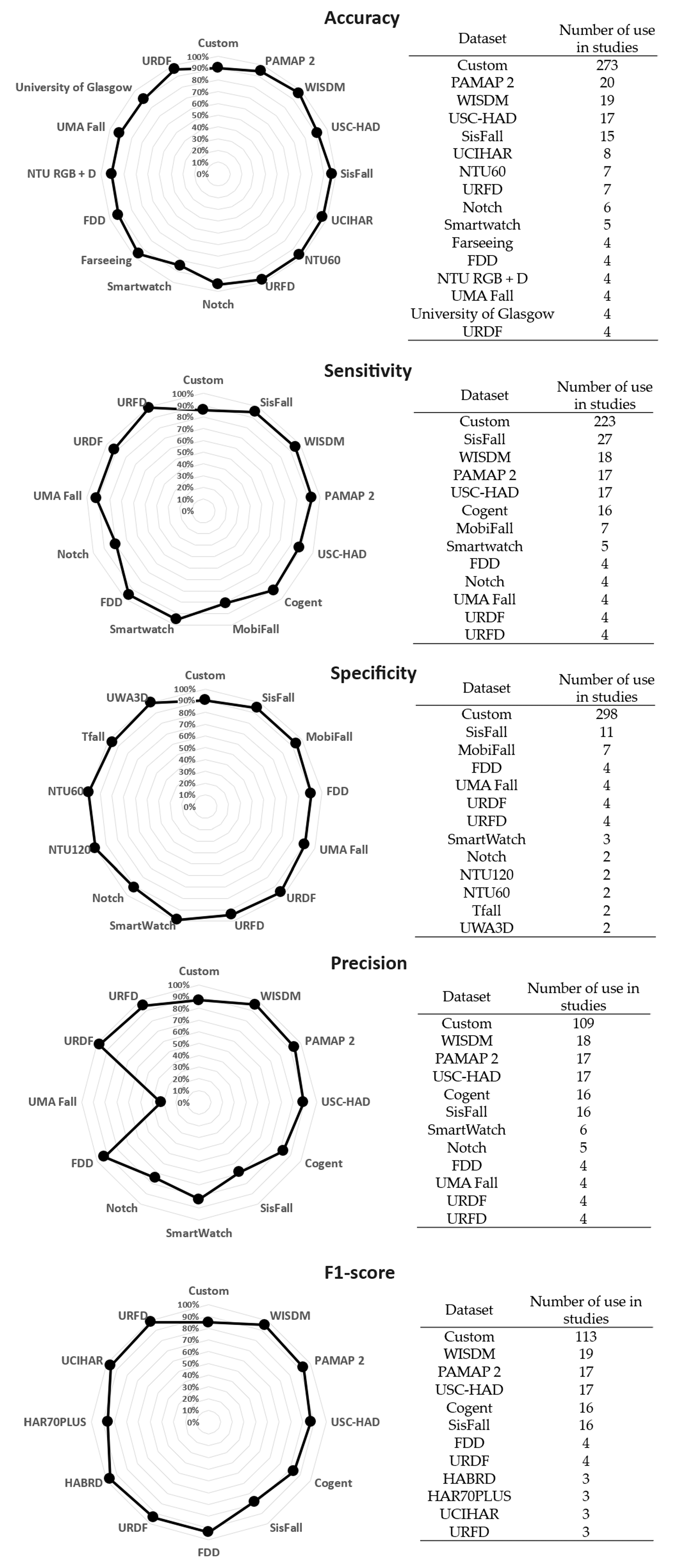

4.7. AAL Fall Detection Performance per Dataset

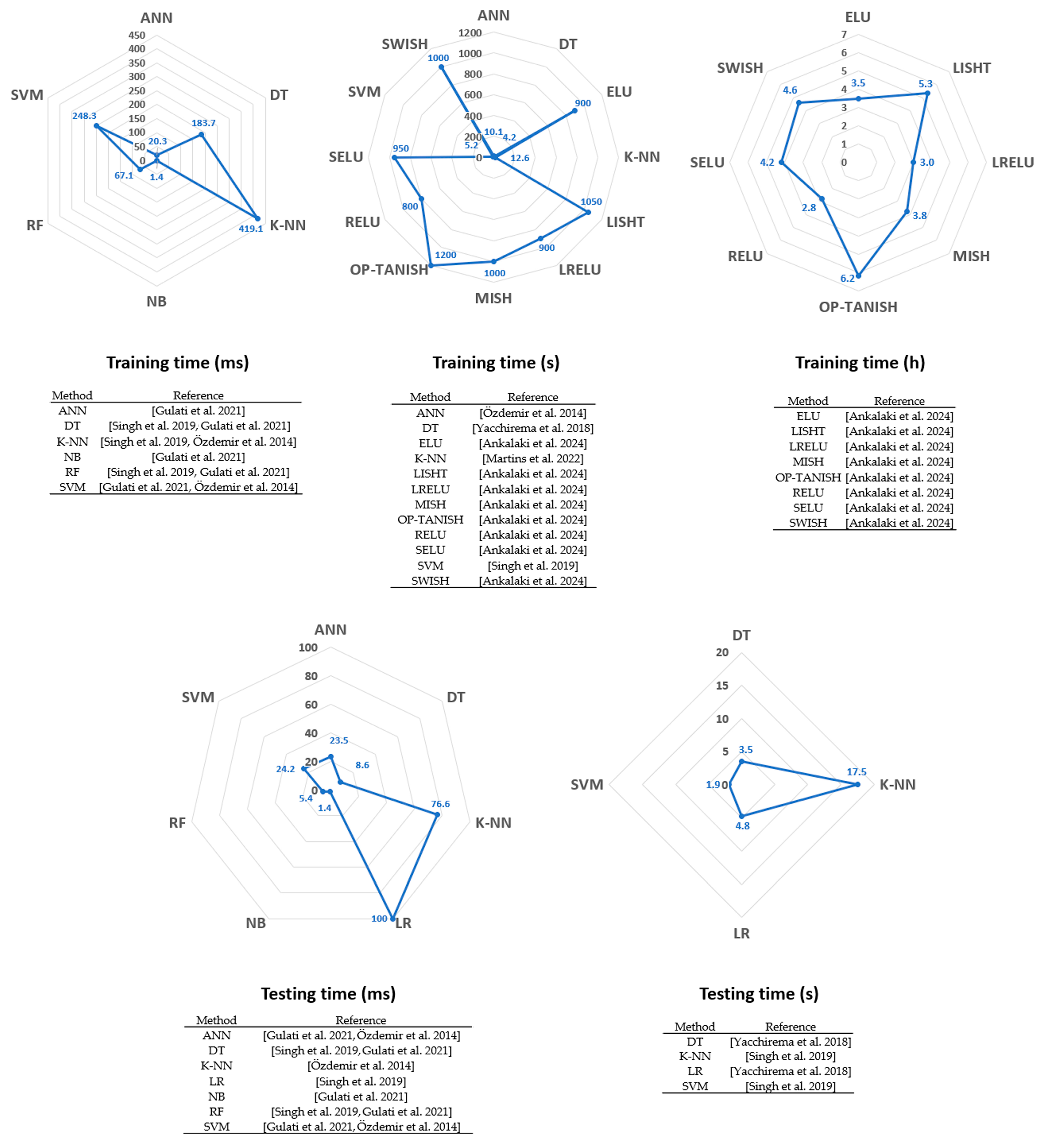

4.8. Algorithm Training and Testing Time

5. Discussion

5.1. Performance by Sensor Category

5.2. Performance by Method

5.3. Algorithms

5.4. Datasets

5.5. Training and Testing Time

5.6. Limitations

5.7. General Outcomes and Future Research Directions

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| A | Accelerometer |
| A + R | Antennas and Receiver |
| AAL | Ambient Assisted Living |
| Acc | Accuracy |
| AEC | Auto-encoder |
| ANN | Artificial Neural Network |
| AS | Acoustic sensor |
| AV | Angular Velocity |
| C | Camera |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| DT | Decision Tree |
| Dyn | Dynamic |
| ECG | Electrocardiogram |
| EMG | Electromyography |
| Ext | Exterior sensor |
| F1 | F1-score |
| G | Gyroscope |
| GRU | Gated Recurrent Unit |
| HML | Hybrid Machine Learning |
| HMM | Hidden Markov Model |
| IR | Infrared sensor |
| KNN | k-Nearest Neighbor |
| LR | Linear regression |
| LSTM | Long Short-Term Memory |
| M | Magnetometer |
| Mag | Magnetic sensor |
| ML | Machine Learning |
| MLP | Multi-layer Perceptron |
| NB | Naive Bayes |
| O | Orientation sensor |
| P | Pressure Sensor |
| Prec | Precision |
| PRISMA | Preferred Reporting Items for Systematic reviews and Meta-Analyses |
| R | Radar |
| RF | Random Forest |
| Sens | Sensitivity |
| Spec | Specificity |
| Stat | Static |
| SVM | Support Vector Machines |
| T | Thermal sensor |
| V | Vibration sensor |

References

- United Nations. World Population Prospects: The 2017 Revision, Key Findings and Advance Tables; Working Paper ESA/P/WP/248; Department of Economic and Social Affairs, Population Division: New York, NY, USA, 2017. [Google Scholar]

- Centers for Disease Control and Prevention. Falls Are Leading Cause of Injury and Death in Older Americans. Available online: https://www.cdc.gov/media/releases/2016/p0922-older-adult-falls.html (accessed on 25 August 2025).

- Turner, S.; Kisser, R.; Rogmans, W. Falls Among Older Adults in the EU-28: Key Facts from the Available Statistics. Available online: https://eupha.org/repository/sections/ipsp/Factsheet_falls_in_older_adults_in_EU.pdf (accessed on 25 August 2025).

- Li, L.T.; Wang, S.Y. Disease burden and risk factors of falls in the elderly. Chin. J. Epidemiol. 2001, 22, 262–264. [Google Scholar]

- Kumari, P.; Mathew, L.; Syal, P. Increasing trend of wearables and multimodal interface for human activity monitoring: A review. Biosens. Bioelectron. 2017, 90, 298–307. [Google Scholar] [CrossRef] [PubMed]

- Kumar, P.; Suresh, S. Deep-HAR: An ensemble deep learning model for recognizing the simple, complex, and heterogeneous human activities. Multimed. Tools Appl. 2023, 82, 30435–30462. [Google Scholar] [CrossRef]

- Nouredanesh, M.; Gordt, K.; Schwenk, M.; Tung, J. Automated detection of multidirectional compensatory balance reactions: A step towards tracking naturally occurring near falls. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 478–487. [Google Scholar] [CrossRef]

- De la Cal, E.A.; Fáñez, M.; Villar, M.; Villar, J.R.; González, V.M. A low-power HAR method for fall and high-intensity ADLs identification using wrist-worn accelerometer devices. Log. J. IGPL 2023, 31, 375–389. [Google Scholar] [CrossRef]

- Garcia-Ceja, E.; Galván-Tejada, C.E.; Brena, R. Multi-view stacking for activity recognition with sound and accelerometer data. Inf. Fusion 2018, 40, 45–56. [Google Scholar] [CrossRef]

- Xie, J.; Guo, K.; Zhou, Z.; Yan, Y.; Yang, P. ART: Adaptive and real-time fall detection using COTS smart watch. In Proceedings of the 2020 6th International Conference on Big Data Computing and Communications (BIGCOM), Deqing, China, 24–25 July 2020; pp. 33–40. [Google Scholar]

- Zhang, S.; Mc Cullagh, P. Situation Awareness Inferred from Posture Transition and Location. IEEE Trans. Hum. Mach. Syst. 2017, 47, 814–821. [Google Scholar] [CrossRef]

- Badgujar, S.; Pillai, A.S. Fall detection for elderly people using machine learning. In Proceedings of the 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kharagpur, India, 1–3 July 2020; pp. 1–4. [Google Scholar]

- Haghi, M.; Geissler, A.; Fleischer, H.; Stoll, N.; Thurow, K. Ubiqsense: A personal wearable in ambient parameters monitoring based on IoT platform. In Proceedings of the 2019 International Conference on Sensing and Instrumentation in IoT Era (ISSI), Lisbon, Portugal, 29–30 August 2019; pp. 1–6. [Google Scholar]

- Andò, B.; Baglio, S.; Lombardo, C.O.; Marletta, V. A multisensor data-fusion approach for ADL and fall classification. IEEE Trans. Instrum. Meas. 2016, 65, 1960–1967. [Google Scholar] [CrossRef]

- Jahanjoo, A.; Naderan, M.; Rashti, M.J. Detection and multi-class classification of falling in elderly people by deep belief network algorithms. J. Ambient Intell. Hum. Comput. 2020, 11, 4145–4165. [Google Scholar] [CrossRef]

- Zerrouki, N.; Harrou, F.; Sun, Y.; Houacine, A. Vision-based human action classification using adaptive boosting algorithm. IEEE Sens. J. 2018, 18, 5115–5121. [Google Scholar] [CrossRef]

- Guerra, B.M.V.; Ramat, S.; Beltrami, G.; Schmid, M. Recurrent network solutions for human posture recognition based on Kinect skeletal data. Sensors 2023, 23, 5260. [Google Scholar] [CrossRef] [PubMed]

- Fan, L.; Li, T.; Yuan, Y.; Katabi, D. In-home daily-life captioning using radio signals. In Proceedings of the Computer Vision—ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 105–123. [Google Scholar]

- Wang, F.; Gong, W.; Liu, J.K.; Wu, K. Channel Selective Activity Recognition with WiFi: A Deep Learning Approach Exploring Wideband Information. IEEE Trans. Netw. Sci. Eng. 2020, 7, 181–192. [Google Scholar] [CrossRef]

- Ding, C.; Zhang, L.; Chen, H.; Hong, H.; Zhu, X.; Fioranelli, F. Sparsity-Based Human Activity Recognition with Point Net Using a Portable FMCW Radar. IEEE Internet Things J. 2023, 10, 10024–10037. [Google Scholar] [CrossRef]

- Chen, J.; Wang, C.; Liu, Y. Vibration Signal Based Abnormal Gait Detection and Recognition. IEEE Access 2024, 12, 89845–89855. [Google Scholar] [CrossRef]

- Singh, S.; Aksanli, B. Non-Intrusive Presence Detection and Position Tracking for Multiple People Using Low-Resolution Thermal Sensors. J. Sens. Actuator Netw. 2019, 8, 40. [Google Scholar] [CrossRef]

- Mashiyama, S.; Hong, J.; Ohtsuki, T. Activity recognition using low resolution infrared array sensor. In Proceedings of the 2015 IEEE International Conference on Communications (ICC), London, UK, 8–12 June 2015; pp. 495–500. [Google Scholar]

- Clapés, A.; Pardo, À.; Pujol Vila, O.; Escalera, S. Action detection fusing multiple Kinects and a WIMU: An application to in-home assistive technology for the elderly. Mach. Vis. Appl. 2018, 29, 765–788. [Google Scholar] [CrossRef]

- Kwolek, B.; Kepski, M. Human fall detection on embedded platform using depth maps and wireless accelerometer. Comput. Methods Prog. Biomed. 2014, 117, 489–501. [Google Scholar] [CrossRef]

- Fetzer, T.; Ebner, F.; Bullmann, M.; Deinzer, F.; Grzegorzek, M. Smartphone-based indoor localization within a 13th century historic building. Sensors 2018, 18, 4095. [Google Scholar] [CrossRef]

- Madhu, B.; Mukherjee, A.; Islam, M.Z.; Mamun-Al-Imran, G.M.; Roy, R.; Ali, L.E. Depth motion map based human action recognition using adaptive threshold technique. In Proceedings of the 2021 5th International Conference on Electrical Information and Communication Technology (EICT), Khulna, Bangladesh, 18–20 November 2021; pp. 1–6. [Google Scholar]

- Liu, C.; Jiang, Z.; Su, X.; Benzoni, S.; Maxwell, A. Detection of Human Fall Using Floor Vibration and Multi-Features Semi-Supervised SVM. Sensors 2019, 19, 3720. [Google Scholar] [CrossRef]

- Vallabh, P.; Malekian, R.; Ye, N.; Bogatinoska, D.C. Fall detection using machine learning algorithms. In Proceedings of the 2024 24th International Conference on Software, Telecommunications and Computer Networks (SoftCOM) 2016, Split, Croatia, 22–24 September 2016; pp. 1–9. [Google Scholar] [CrossRef]

- Ando, B.; Baglio, S.; Marletta, V.; Crispino, R. A neurofuzzy approach for fall detection. In Proceedings of the 2017 International Conference on Engineering, Technology and Innovation (ICE/ITMC), Madeira, Portugal, 27–29 June 2017; pp. 1312–1316. [Google Scholar] [CrossRef]

- Lee, S. Fall detection using wavelet transform and neural network. Int. J. Comput. Sci. Electron. Eng. 2014, 2, 113–116. [Google Scholar]

- Hussain, F.; Hussain, F.; Ehatisham-ul-Haq, M.; Azam, M.A. Activity-Aware Fall Detection and Recognition Based on Wearable Sensors. IEEE Sens. J. 2019, 19, 4528–4536. [Google Scholar] [CrossRef]

- Zurbuchen, N.; Bruegger, P.; Wilde, A.A. Comparison of Machine Learning Algorithms for Fall Detection using Wearable Sensors. In Proceedings of the 2020 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 19–21 February 2020. [Google Scholar]

- He, J.; Hu, C.; Wang, X. A Smart Device Enabled System for Autonomous Fall Detection and Alert. Int. J. Distrib. Sens. Netw. 2016, 20, 2308183. [Google Scholar] [CrossRef]

- Yacchirema, D.; de Puga, J.S.; Palau, C.; Esteve, M. Fall detection system for elderly people using IoT and big data. Procedia Comput. Sci. 2018, 130, 603–610. [Google Scholar] [CrossRef]

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef]

- Fakhrulddin, A.H.; Fei, X.; Li, H. Convolutional neural networks (CNN) based human fall detection on body sensor networks (BSN) sensor data. In Proceedings of the 2017 4th International Conference on Systems and Informatics (ICSAI), Hangzhou, China, 11–13 November 2017. [Google Scholar]

- Santos, G.L.; Endo, P.T.; Monteiro, K.H.d.C.; Rocha, E.D.S.; Silva, I.; Lynn, T. Accelerometer-Based Human Fall Detection Using Convolutional Neural Networks. Sensors 2019, 19, 1644. [Google Scholar] [CrossRef]

- Ajerla, D.; Mahfuz, S.; Zulkernine, F. A real-time patient monitoring framework for fall detection. Wirel. Commun. Mob. Comput. 2019, 2, 9507938. [Google Scholar] [CrossRef]

- Theodoridis, T.; Solachidis, V.; Vretos, N.; Daras, P. Human Fall Detection from Acceleration Measurements Using a Recurrent Neural Network. In Precision Medicine Powered by pHealth and Connected Health, Proceedings of the ICBHI 2017. IFMBE Proceedings, Thessaloniki, Greece, 16–17 November 2017; Maglaveras, N., Chouvarda, I., de Carvalho, P., Eds.; Springer: Singapore, 2018; p. 66. [Google Scholar]

- Mauldin, T.R.; Canby, M.E.; Metsis, V.; Ngu, A.H.H.; Rivera, C.C. Smart-Fall: A smartwatch-based fall detection system using deep learning. Sensors 2018, 18, 3363. [Google Scholar] [CrossRef] [PubMed]

- Torti, E.; Fontanella, A.; Musci, M.; Blago, N.; Pau, D.; Leporati, F. Embedding recurrent neural networks in wearable systems for real-time fall detection. Microprocess. Microsyst. 2019, 71, 102895. [Google Scholar] [CrossRef]

- Al-Hassani, R.T.; Atilla, D.C. Human Activity Detection Using Smart Wearable Sensing Devices with Feed Forward Neural Networks and PSO. Appl. Sci. 2023, 13, 3716. [Google Scholar] [CrossRef]

- Zhang, X.; Xie, Q.; Sun, W.; Wang, T. Fall detection method based on spatio-temporal coordinate attention for high-resolution networks. Complex. Intell. Syst. 2025, 11, 1. [Google Scholar] [CrossRef]

- Benhaili, Z. Detecting human fall using IoT devices for healthcare applications. Int. J. Artif. Intell. 2025, 14, 561–569. [Google Scholar] [CrossRef]

- Droghini, D.; Ferretti, D.; Principi, E.; Squartini, S.; Piazza, F. A combined One-class SVM and Template-matching Approach for Useraided Human Fall Detection by Means of Floor Acoustic Features. Comput. Intell. Neurosci. 2017, 3, 1512670. [Google Scholar]

- Gibson, R.M.; Amira, A.; Ramzan, N.; Casaseca-De-La-Higuera, P.; Pervez, Z. Multiple comparator classifier framework for accelerometer-based fall detection and diagnostic. Appl. Soft Comput. 2016, 39, 94–103. [Google Scholar] [CrossRef]

- Berlin, S.J.; John, M. Vision based human fall detection with Siamese convolutional neural networks. J. Ambient. Intell. Hum. Comput. 2022, 13, 5751–5762. [Google Scholar] [CrossRef]

- Gulati, N.; Kaur, P.D. An argumentation enabled decision making approach for Fall Activity Recognition in Social IoT based Ambient Assisted Living systems. Future Gener. Comput. Syst. 2021, 122, 82–97. [Google Scholar] [CrossRef]

- Li, Y.; Liu, P.; Fang, Y.; Wu, X.; Xie, Y.; Xu, Z.; Ren, H.; Jing, F. A Decade of Progress in Wearable Sensors for Fall Detection (2015–2024): A Network-Based Visualization Review. Sensors 2025, 25, 2205. [Google Scholar] [CrossRef]

- Sanchez-Comas, A.; Synnes, K.; Hallberg, J. Hardware for Recognition of Human Activities: A Review of Smart Home and AAL Related Technologies. Sensors 2020, 20, 4227. [Google Scholar] [CrossRef]

- Casilari-Pérez, E.; García-Lago, F. A comprehensive study on the use of artificial neural networks in wearable fall detection systems. Expert. Syst. Appl. 2019, 138, 112811. [Google Scholar] [CrossRef]

- Iadarola, G.; Mengarelli, A.; Crippa, P.; Fioretti, S.; Spinsante, S. A Review on Assisted Living Using Wearable Devices. Sensors 2024, 24, 7439. [Google Scholar] [CrossRef]

- Amir, N.I.M.; Dziyauddin, R.A.; Mohamed, N.; Ismail, N.S.N.; Kaidi, H.M.; Ahmad, N. Fall Detection System using Wearable Sensor Devices and Machine Learning: A Review. TechRxiv 2024. [Google Scholar] [CrossRef]

- Islam, M.M.; Tayan, O.; Islam, M.I.; Islam, M.S.; Nooruddin, S.; Kabir, M.N. Deep Learning Based Systems Developed for Fall Detection: A Review. IEEE Access 2020, 8, 166117–166137. [Google Scholar] [CrossRef]

- Gaya-Morey, F.X.; Manresa-Yee, C.; Buades-Rubio, J.M. Deep learning for computer vision based activity recognition and fall detection of the elderly: A systematic review. Appl. Intell. 2024, 54, 8982–9007. [Google Scholar] [CrossRef]

- Guerra, B.M.V.; Torti, E.; Marenzi, E.; Schmid, M.; Ramat, S.; Leporati, F.; Danese, G. Ambient assisted living for frail people through human activity recognition: State-of-the-art, challenges and future directions. Front. Neurosci. 2023, 17, 1256682. [Google Scholar] [CrossRef] [PubMed]

- Ren, L.; Peng, Y. Research of Fall Detection and Fall Prevention Technologies: A Systematic Review. IEEE Access 2019, 7, 77702–77722. [Google Scholar] [CrossRef]

- Subramaniam, S.; Faisal, A.I.; Deen, M.J. Wearable Sensor Systems for Fall Risk Assessment: A Review. Front. Digit. Health 2022, 4, 921506. [Google Scholar] [CrossRef]

- Patel, S.; Park, H.; Bonato, P.; Chan, L.; Rodgers, M. A Review of Wearable Sensors and Systems with Application in Rehabilitation. J. Neuroeng. Rehabil. 2012, 9, 21. [Google Scholar] [CrossRef]

- Pathirana, P.N.; Karunarathne, M.S.; Williams, G.L.; Nam, P.T.; Durrant-Whyte, H. Robust and accurate capture of human joint pose using an inertial sensor. IEEE J. Transl. Eng. Health Med. 2018, 6, 2700913. [Google Scholar] [CrossRef]

- Cicirelli, G.; Marani, R.; Petitti, A.; Milella, A.; D’Orazio, T. Ambient assisted living: A review of technologies, methodologies and future perspectives for healthy aging of population. Sensors 2021, 21, 3549. [Google Scholar] [CrossRef]

- Pham, N.P.; Dao, H.V.; Phung, H.N.; Ta, H.V.; Nguyen, N.H.; Hoang, T.T. Classification different types of fall for reducing false alarm using single accelerometer. In Proceedings of the 2018 IEEE Seventh International Conference on Communications and Electronics (ICCE), Hue, Vietnam, 18–20 July 2018. [Google Scholar]

- Wu, Y.; Su, Y.; Hu, Y.; Yu, N.; Reng, R. A Multi-sensor Fall Detection System based on Multivariate Statistical Process Analysis. J. Med. Biol. Eng. 2019, 39, 336–351. [Google Scholar] [CrossRef]

- Yu, S.; Chen, H.; Brown, R.A. Hidden Markov model-based fall detection with motion sensor orientation calibration: A case for real-life home monitoring. IEEE J. Biomed. Health Inform. 2018, 22, 1847–1853. [Google Scholar] [CrossRef]

- Kaur, A.P.; Nsugbe, E.; Drahota, A.; Oldfield, M.; Mohagheghian, I.; Sporea, R.A. State-of-the-art fall detection techniques with emphasis on floor-based systems—A review. Biomed. Eng. Adv. 2025, 9, 100179. [Google Scholar] [CrossRef]

- Li, X.; Pang, T.; Liu, W.; Wang, T. Fall detection for elderly person care using convolutional neural networks. In Proceedings of the 10th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics, Shanghai, China, 14–16 October 2017. [Google Scholar]

- Shu, F.; Shu, J. An eight-camera fall detection system using human fall pattern recognition via machine learning by a low-cost android box. Sci. Rep. 2021, 11, 2471. [Google Scholar] [CrossRef] [PubMed]

- Bharathiraja, N.; Indhuja, R.; Krishnan, P.; Anandhan, S.; Hariprasad, S. Real-time fall detection using ESP32 and AMG8833 thermal sensor: A non-wearable approach for enhanced safety. In Proceedings of the Second International Conference on Augmented Intelligence and Sustainable Systems (ICAISS), Trichy, India, 23–25 August 2023. [Google Scholar] [CrossRef]

- Popescu, M.; Li, Y.; Skubic, M.; Rantz, M. An acoustic fall detector system that uses sound height information to reduce the false alarm rate. In Proceedings of the 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–24 August 2008. [Google Scholar] [CrossRef]

- Kittiyanpunya, C.; Chomdee, P.; Boonpoonga, A.; Torrungrueng, D. Millimeter-Wave Radar-Based Elderly Fall Detection Fed by One-Dimensional Point Cloud and Doppler. IEEE Access 2023, 11, 76269–76283. [Google Scholar] [CrossRef]

- Clemente, J.; Li, F.; Valero, M.; Song, W. Smart seismic sensing for indoor fall detection, location, and notification. IEEE J. Biomed. Health Inform. 2020, 24, 524–532. [Google Scholar] [CrossRef]

- Li, X.; Nie, L.; Xu, H.; Wang, X. Collaborative Fall Detection Using Smart Phone and Kinect. Mob. Netw. Appl. 2018, 23, 775–788. [Google Scholar] [CrossRef]

- Harris, J.D.; Quatman, C.E.; Manring, M.M.; Siston, R.A.; Flanigan, D.C. How to Write a Systematic Review. Am. J. Sports Med. 2014, 42, 2761–2768. [Google Scholar] [CrossRef]

- Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Med. 2009, 6, 1006–1012. [Google Scholar] [CrossRef]

- Özdemir, A.T.; Barshan, B. Detecting falls with wearable sensors using machine learning techniques. Sensors 2014, 14, 10691–10708. [Google Scholar] [CrossRef]

- Agrawal, D.K.; Usaha, W.; Pojprapai, S.; Wattanapan, P. Fall Risk Prediction Using Wireless Sensor Insoles with Machine Learning. IEEE Access 2023, 11, 23119–23126. [Google Scholar] [CrossRef]

- Ankalaki, S.; Thippeswamy, M.N. A novel optimized parametric hyperbolic tangent swish activation function for 1D-CNN: Application of sensor-based human activity recognition and anomaly detection. Multimed. Tools Appl. 2024, 83, 61789–61819. [Google Scholar] [CrossRef]

- Bourke, A.K.; O’Brien, J.V.; Lyons, G.M. Evaluation of a threshold-based tri-axial accelerometer fall detection algorithm. Gait Posture 2007, 26, 194–199. [Google Scholar] [CrossRef]

- Bourke, A.K.; Lyons, G.M. A Threshold-based Fall-detection Algorithm Using A Bi-axial Gyroscope Sensor. Med. Eng. Phys. 2008, 30, 84–90. [Google Scholar] [CrossRef]

- Butt, F.S.; La Blunda, L.; Wagner, M.F.; Schäfer, J.; Medina-Bulo, I.; Gómez-Ullate, D. Fall Detection from Electrocardiogram (ECG) Signals and Classification by Deep Transfer Learning. Information 2021, 12, 63. [Google Scholar] [CrossRef]

- Chandramouli, N.A.; Natarajan, S.; Alharbi, A.H. Enhanced human activity recognition in medical emergencies using a hybrid deep CNN and bi-directional LSTM model with wearable sensors. Sci. Rep. 2024, 14, 30979. [Google Scholar] [CrossRef]

- Chelli, A.; Patzold, M. A machine learning approach for fall detection and daily living activity recognition. IEEE Access Pract. Innov. 2019, 7, 38670–38687. [Google Scholar] [CrossRef]

- Chen, K.H.; Hsu, Y.W.; Yang, J.J.; Jaw, F.S. Evaluating the specifications of built-in accelerometers in smartphones on fall detection performance. Instrum. Sci. Technol. 2018, 46, 194–206. [Google Scholar] [CrossRef]

- He, J.; Bai, S.; Wang, X. An Unobtrusive Fall Detection and Alerting System Based on Kalman Filter and Bayes Network Classifier. Sensors 2017, 17, 1393. [Google Scholar] [CrossRef]

- Jantaraprim, P.; Phukpattaranont, P.; Limsakul, C.; Wongkittisuksa, B. A system for improving fall detection performance using critical phase fall signal and a neural network. Songklanakarin J. Sci. Technol. 2012, 34, 637–644. [Google Scholar]

- Kerdegari, H.; Mokaram, S.; Samsudin, K.; Ramli, A.R. A pervasive neural network based fall detection system on smart phone. J. Ambient. Intell. Smart Environ. 2015, 7, 221–230. [Google Scholar] [CrossRef]

- Khojasteh, S.B.; Villar, J.R.; Chira, C.; González, V.M.; de la Cal, E. Improving fall detection using an on-wrist wearable accelerometer. Sensors 2018, 18, 1350. [Google Scholar] [CrossRef] [PubMed]

- Kraft, D.; Srinivasan, K.; Bieber, G. Deep Learning Based Fall Detection Algorithms for Embedded Systems, Smartwatches, and IoT Devices Using Accelerometers. Technologies 2020, 8, 72. [Google Scholar] [CrossRef]

- Liaqat, S.; Dashtipour, K.; Shah, S.A.; Rizwan, A.; Alotaibi, A.A.; Althobaiti, T.; Arshad, K.; Assaleh, K.; Ramzan, N. Novel Ensemble Algorithm for Multiple Activity Recognition in Elderly People Exploiting Ubiquitous Sensing Devices. IEEE Sens. J. 2021, 21, 18214–18221. [Google Scholar] [CrossRef]

- Martins, L.M.; Ribeiro, N.F.; Soares, F.; Santos, C.P. Inertial Data-Based AI Approaches for ADL and Fall Recognition. Sensors 2022, 22, 4028. [Google Scholar] [CrossRef] [PubMed]

- Medrano, C.; Igual, R.; García-Magariño, I.; Plaza, I.; Azuara, G. Combining novelty detectors to improve accelerometer-based fall detection. Med. Biol. Eng. Comput. 2017, 55, 1849–1858. [Google Scholar] [CrossRef] [PubMed]

- Miah, A.S.M.; Hwang, Y.S.; Shin, J. Sensor-Based Human Activity Recognition Based on Multi-Stream Time-Varying Features With ECA-Net Dimensionality Reduction. IEEE Access 2024, 12, 151649–151668. [Google Scholar] [CrossRef]

- Nyan, M.N.; Tay, F.E.H.; Murugasu, E. A wearable system for pre-impact fall detection. J. Biomech. 2008, 41, 3475–3481. [Google Scholar] [CrossRef]

- Özdemir, A.T.; Turan, A. An analysis on sensor locations of the human body for wearable fall detection devices: Principles and practice. Sensors 2016, 16, 1161. [Google Scholar] [CrossRef]

- Pan, D.; Liu, H.; Qu, D. Heterogeneous Sensor Data Fusion for Human Falling Detection. IEEE Access 2021, 9, 17610–17619. [Google Scholar] [CrossRef]

- Putra, I.P.E.S.; Brusey, J.; Gaura, E.; Vesilo, R. An Event-Triggered Machine Learning Approach for Accelerometer-Based Fall Detection. Sensors 2018, 18, 20. [Google Scholar] [CrossRef]

- Rashidpour, M.; Abdali-Mohammadi, F.; Fathi, A. Fall detection using adaptive neuro-fuzzy inference system. Int. J. Multimed. Ubiquitous Eng. 2016, 11, 91–106. [Google Scholar] [CrossRef]

- Ren, L.; Shi, W. Chameleon: Personalised and Adaptive Fall Detection of Elderly People in Home-based Environments. Int. J. Sens. Netw. 2016, 20, 163–176. [Google Scholar] [CrossRef]

- Rescio, G.; Leone, A.; Siciliano, P. Supervised Machine Learning Scheme for Electromyography-based Pre-fall Detection System. Expert. Syst. Appl. 2018, 100, 95–105. [Google Scholar] [CrossRef]

- Sabatini, A.M.; Ligorio, G.; Mannini, A.; Genovese, V.; Pinna, L. Prior-to- and Post-Impact Fall Detection Using Inertial and Barometric Altimeter Measurements. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 774–783. [Google Scholar] [CrossRef] [PubMed]

- Sarabia-Jácome, D.; Usach, R.; Palau, C.E.; Esteve, M. Highly-efficient fog-based deep learning AAL fall detection system. Internet Things 2020, 11, 100185. [Google Scholar] [CrossRef]

- Shahzad, A.; Kim, K. FallDroid: An automated smart-phone-based fall detection system using multiple kernel learning. IEEE Trans. Ind. Inf. 2018, 15, 35–44. [Google Scholar] [CrossRef]

- Suriani, N.S.; Rashid, F.N.; Yunos, N.Y. Optimal Accelerometer Placement for Fall Detection of Rehabilitation Patients. J. Telecommun. Electron. Comput. Eng. JTEC 2018, 10, 25–29. [Google Scholar]

- Tunca, C.; Salur, G.; Ersoy, C. Deep Learning for Fall Risk Assessment with Inertial Sensors: Utilizing Domain Knowledge in Spatio-Temporal Gait Parameters. IEEE J. Biomed. Health Inform. 2020, 24, 1994–2005. [Google Scholar] [CrossRef]

- Xi, X.; Tang, M.; Miran, S.M.; Luo, Z. Evaluation of Feature Extraction and Recognition for Activity Monitoring and Fall Detection Based on Wearable sEMG Sensors. Sensors 2017, 17, 1229. [Google Scholar] [CrossRef]

- Yoo, S.; Oh, D. An artificial neural network–based fall detection. Int. J. Eng. Bus. Manag. 2018, 10, 1847979018787905. [Google Scholar] [CrossRef]

- Yuwono, M.; Moulton, B.D.; Su, S.W.; Celler, B.G.; Nguyen, H.T. Unsupervised machine-learning method for improving the performance of ambulatory fall-detection systems. Biomed. Eng. Online 2012, 11, 9. [Google Scholar] [CrossRef]

- Adnan, S.M.; Irtaza, A.; Aziz, S.; Ullah, M.O.; Javed, A.; Mahmood, M.T. Fall Detection Through Acoustic Local Ternary Patterns. Appl. Acoust. 2018, 140, 296–300. [Google Scholar] [CrossRef]

- Alam, E.; Sufian, A.; Dutta, P.; Leo, M. Human Fall Detection Using Transfer Learning-Based 3D CNN. In Computational Technologies and Electronics, Proceedings of the ICCTE 2023. Communications in Computer and Information Science, Siliguri, India, 23–25 November 2023; Majumder, M., Zaman, J.K.M.S.U., Ghosh, M., Chakraborty, S., Eds.; Springer: Cham, Switzerland, 2023; Volume 2376. [Google Scholar] [CrossRef]

- de Miguel, K.; Brunete, A.; Hernando, M.; Gambao, E. Home Camera- Based Fall Detection System for the Elderly. Sensors 2017, 17, 2864. [Google Scholar] [CrossRef] [PubMed]

- Droghini, D.; Principi, E.; Squartini, S.; Olivetti, P.; Piazza, F. Human Fall Detection by Using an Innovative Floor Acoustic Sensor. Smart Innov. 2017, 69, 97–107. [Google Scholar] [CrossRef]

- Fan, K.; Wang, P.; Hu, Y.; Dou, B. Fall Detection via Human Posture Representation and Support Vector Machine. Int. J. Distrib. Sens. Netw. 2017, 13, 1550147717707418. [Google Scholar] [CrossRef]

- Guerra, B.M.V.; Ramat, S.; Beltrami, G.; Schmid, M. Automatic pose Recognition for monitoring dangerous situations in ambient-assisted living. Front. Bioeng. Biotechnol. 2020, 8, 415. [Google Scholar] [CrossRef]

- Guerra, B.M.V.; Schmid, M.; Beltrami, G.; Ramat, S. Neural networks for automatic posture recognition in ambient-assisted living. Sensors 2022, 22, 2609. [Google Scholar] [CrossRef]

- Helen Victoria, A.; Maragatham, G. Activity recognition of FMCW radar human signatures using tower convolutional neural networks. Wirel. Netw. 2021. [Google Scholar] [CrossRef]

- Hu, X.; Qu, X. An Individual-specific Fall Detection Model based on the Statistical Process Control Chart. Saf. Sci. 2014, 64, 13–21. [Google Scholar] [CrossRef]

- Huu, P.N.; Thi, N.N.; Ngoc, T.P. Proposing Posture Recognition System Combining MobilenetV2 and LSTM for Medical Surveillance. IEEE Access 2022, 10, 1839–1849. [Google Scholar] [CrossRef]

- Karayaneva, Y.; Sharifzadeh, S.; Jing, Y.; Tan, B. Human activity recognition for AI-enabled healthcare using low-resolution infrared sensor data. Sensors 2023, 23, 478. [Google Scholar] [CrossRef]

- Li, Y.; Ho, M.; Popescu, A. Microphone Array System for Automatic Fall Detection. IEEE Trans. Biomed. Eng. 2012, 59, 1291–1301. [Google Scholar] [CrossRef] [PubMed]

- Li, X.; Xu, G.; He, B.; Ma, X.; Xie, J. Pre-impact Fall Detection based on A Modified Zero Moment Point Criterion using Data from Kinect Sensors. IEEE Sens. J. 2018, 18, 5522–5531. [Google Scholar] [CrossRef]

- Li, H.; Mehul, A.; Le Kernec, J.; Gurbuz, S.Z.; Fioranelli, F. Sequential Human Gait Classification with Distributed Radar Sensor Fusion. IEEE Sens. J. 2020, 21, 7590–7603. [Google Scholar] [CrossRef]

- Li, M.; Sun, Q. 3D skeletal human action recognition using a CNN fusion model. Math. Probl. Eng. 2021, 2021, 6650632. [Google Scholar] [CrossRef]

- Li, C.; Wang, X.; Shi, J.; Wang, H.; Wan, L. Residual Neural Network Driven Human Activity Recognition by Exploiting FMCW Radar. IEEE Access 2023, 11, 111875–111887. [Google Scholar] [CrossRef]

- Martellli, D.; Artoni, F.; Monaco, V.; Sabatini, A.M.; Micera, S. Pre-Impact Fall Detection: Optimal Sensor Positioning Based on a Machine Learning Paradigm. PLoS ONE 2014, 9, e92037. [Google Scholar] [CrossRef][Green Version]

- Min, W.; Cui, H.; Rao, H.; Li, Z.; Yao, L. Detection of Human Falls on Furniture Using Scene Analysis Based on Deep Learning and Activity Characteristics. IEEE Access 2018, 6, 9324–9335. [Google Scholar] [CrossRef]

- Min, W.; Yao, L.; Lin, Z.; Liu, L. Support Vector Machine Approach to Fall Recognition based on Simplified Expression of Human Skeleton Action and Fast Detection of Start Key Frame using Torso Angle. IET Comput. Vis. 2018, 12, 1133–1140. [Google Scholar] [CrossRef]

- Natarajan, A.; Krishnasamy, V.; Singh, M. Device-Free Human Activity Recognition in Through-the-Wall Scenarios Using Single-Link Wi-Fi Channel Measurements. IEEE Sens. J. 2025, 25, 27556–27565. [Google Scholar] [CrossRef]

- Qiao, X.; Feng, Y.; Liu, S.; Shan, T.; Tao, R. Radar Point Clouds Processing for Human Activity Classification Using Convolutional Multilinear Subspace Learning. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5121117. [Google Scholar] [CrossRef]

- Spasova, V.; Iliev, I.; Petrova, G. Privacy preserving fall detection based on simple human silhouette extraction and a linear support vector machine. Int. J. Bioautomat. 2016, 20, 237–252. [Google Scholar]

- Zahan, S.; Hassan, G.M.; Mian, A. Modeling Human Skeleton Joint Dynamics for Fall Detection. In Proceedings of the 2021 Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, Australia, 29 November–1 December 2021; pp. 1–7. [Google Scholar] [CrossRef]

- Alabdulkreem, E.; Marzouk, R.; Alduhayyem, M.; Al-Hagery, M.A.; Motwakel, A.; Hamza, M.A. Chameleon Swarm Algorithm with Improved Fuzzy Deep Learning for Fall Detection Approach to Aid Elderly People. J. Disabil. Res. 2023, 2, 2–70. [Google Scholar] [CrossRef]

- Cao, X.; Wang, X.; Geng, X.; Wu, D.; An, H. An Approach for Human Posture Recognition Based on the Fusion PSE-CNN-BiGRU Model. CMES-Comp. Model. Eng. 2024, 140, 385–408. [Google Scholar] [CrossRef]

- Kepski, M.; Kwolek, B. Event-driven System for Fall Detection using Body-worn Accelerometer and Depth Sensor. IET Comput. Vis. 2018, 12, 48–58. [Google Scholar] [CrossRef]

- Sucerquia, A.; Lopez, J.D.; Vargas-Bonilla, J.F. Real-Life/Real-Time Elderly Fall Detection with a Triaxial Accelerometer. Sensors 2018, 18, 1101. [Google Scholar] [CrossRef]

- Habaebi, M.H.; Yusoff, S.H.; Ishak, A.N.; Islam, M.R.; Chebil, J.; Basahel, A. Wearable Smart Phone Sensor Fall Detection System. Int. J. Interact. Mob. Technol. 2022, 16, 72–93. [Google Scholar] [CrossRef]

- Agrawal, D.K.; Udgata, S.K.; Usaha, W. Leveraging Smartphone Sensor Data and Machine Learning Model for Human Activity Recognition and Fall Classification. Procedia Comput. Sci. 2024, 235, 1980–1989. [Google Scholar] [CrossRef]

- Stampfler, T.; Elgendi, M.; Fletcher, R.R.; Menon, C. Fall detection using accelerometer-based smartphones: Where do we go from here? Front. Public Health 2022, 17, 996021. [Google Scholar] [CrossRef]

- Vargas, V.; Ramos, P.; Orbe, E.A.; Zapata, M.; Valencia-Aragón, K. Low-Cost Non-Wearable Fall Detection System Implemented on a Single Board Computer for People in Need of Care. Sensors 2024, 24, 5592. [Google Scholar] [CrossRef]

- Auvinet, E.; Multon, F.; Saint-Arnaud, A.; Rousseau, J.; Meunier, J. Fall detection with multiple cameras: An occlusion-resistant method based on 3-D silhouette vertical distribution. IEEE Trans. Inf. Technol. Biomed. 2011, 15, 290–300. [Google Scholar] [CrossRef]

- Anderson, D.; Luke, R.H.; Keller, J.M.; Skubic, M.; Rantz, M.; Aud, M. Linguistic Summarization of Video for Fall Detection Using Voxel Person and Fuzzy Logic. Comput. Vis. Image Underst. 2009, 113, 80–89. [Google Scholar] [CrossRef]

- Wang, X.; Ellul, J.; Azzopardi, G. Elderly fall detection systems: A literature survey. Front. Robot. AI 2020, 7, 71. [Google Scholar] [CrossRef] [PubMed]

- Jung, S.; Hong, S.; Kim, J.; Lee, S.; Hyeon, T.; Lee, M.; Kim, D.H. Wearable fall detector using integrated sensors and energy devices. Sci. Rep. 2015, 5, 17081. [Google Scholar] [CrossRef] [PubMed]

- Singh, K.; Rajput, A.; Sharma, S. Human fall detection using machine learning methods: A survey. Int. J. Math. Eng. Manag. Sci. 2020, 5, 161–180. [Google Scholar] [CrossRef]

- Saleh, M.; Jeannès, R.L.B. Elderly fall detection using wearable sensors: A low cost highly accurate algorithm. IEEE Sens. J. 2019, 19, 3156–3164. [Google Scholar] [CrossRef]

- Wang, G.; Li, Q.; Wang, L.; Zhang, Y.; Liu, Z. Elderly Fall Detection with an Accelerometer Using Lightweight Neural Networks. Electronics 2019, 8, 1354. [Google Scholar] [CrossRef]

- Ding, J.; Wang, Y. A WiFi-based smart home fall detection system using recurrent neural network. IEEE Trans. Consum. Electron. 2020, 66, 308–317. [Google Scholar] [CrossRef]

- Kim, T.H.; Choi, A.; Heo, H.M.; Kim, K.; Lee, K.; Mun, J.H. Machine Learning-Based Pre-Impact Fall Detection Model to Discriminate Various Types of Fall. J. Biomech. Eng. 2019, 141, 081010. [Google Scholar] [CrossRef]

- He, J.; Zhang, Z.; Wang, X.; Yang, S. A low power fall sensing technology based on FD-CNN. IEEE Sens. J. 2019, 19, 5110–5118. [Google Scholar] [CrossRef]

- Yhdego, H.; Li, J.; Morrison, S.; Audette, M.; Paolini, C.; Sarkar, M.; Okhravi, H. Towards musculoskeletal simulation-aware fall injury mitigation: Transfer learning with deep CNN for fall detection. In Proceedings of the Spring Simulation Conference (SpringSim), Tucson, AZ, USA, 29 April–2 May 2019; pp. 1–12. [Google Scholar]

- Mamdiwar, S.D.; Shakruwala, Z.; Chadha, U.; Srinivasan, K.; Chang, C.-Y. Recent Advances on IoT-Assisted Wearable Sensor Systems for Healthcare Monitoring. Biosensors 2021, 11, 372. [Google Scholar] [CrossRef]

- Verma, N.; Mundody, S.; Guddeti, R.M.R. An Efficient AI and IoT Enabled System for Human Activity Monitoring and Fall Detection. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT), Mandi, India, 24–28 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6. [Google Scholar]

- Habib, M.; Mohktar, M.; Kamaruzzaman, S.; Lim, K.; Pin, T.; Ibrahim, F. Smartphone-Based Solutions for Fall Detection and Prevention: Challenges and Open Issues. Sensors 2014, 14, 7181–7208. [Google Scholar] [CrossRef]

- Karar, M.E.; Shehata, H.I.; Reyad, O. A Survey of IoT-Based Fall Detection for Aiding Elderly Care: Sensors, Methods, Challenges and Future Trends. Appl. Sci. 2022, 12, 3276. [Google Scholar] [CrossRef]

| Database | Keyword Combination |
|---|---|
| PubMed/Medline | “wearable sensors”, (“Monitoring” OR “Ambient Assisted Living” OR “Assist* Living” OR “AAL” OR “Smart Home”), (“fall detection” OR “fall prevention” OR “fall risk assessment”), (“Static” OR “dynamic”), (“Elder*” OR “Senior” OR “old* people”), (“training time” OR “testing time”), (“specificity” OR “accuracy” OR “sensitivity” OR “precision”) |
| Google Scholar | “wearable sensors”, (“Monitoring” OR “Ambient Assisted Living” OR “Assist* Living” OR “AAL” OR “Smart Home”), (“fall detection” OR “fall prevention” OR “fall risk assessment”), (“Static” OR “dynamic”), (“Elder*” OR “Senior” OR “old* people”), (“training time” OR “testing time”), (“specificity” OR “accuracy” OR “sensitivity” OR “precision”) |
| ScienceDirect | “wearable sensors” AND “Ambient Assisted Living” AND “fall detection” AND “training time” AND “Elderly” AND accuracy AND specificity |
| Science.gov | Wearable sensors, fall detection, AAL, elder, accuracy |
| Academia | “wearable sensors”, “Ambient Assisted Living”, “fall detection”, accuracy, sensitivity, specificity |
| IEEE Xplore | sensor elderly AAL fall |
| Mendeley | wearable sensors AND Ambient Assisted Living AND fall detection AND training time AND Elderly AND accuracy AND sensitivity AND specificity |

| Authors | Sensor Type | Sensor Position | Sensor Characteristics | Used Method | Algorithms | Used Dataset | Input Data Type |
|---|---|---|---|---|---|---|---|
| Wearable sensors | | | | | | | |
| Agrawal et al., 2023 [77] | P | Foot | 20 Hz | ML | SVM, RF, LR, NB, DT, KNN | Custom | |
| Al-Hassani et al., 2023 [43] | A, G, O | | 100 Hz | DL | AEC | Custom | |
| Ankalaki et al., 2024 [78] | A, G, M | Various | | DL | CNN | UCI HAR, PAMAP2, Opportunity, Daphnet Gait HAR, UPFall, SIMADL | |
| Bourke et al., 2007 [79] | A | | ±10 g | Threshold | Threshold | Custom | Dyn |
| Bourke et al., 2008 [80] | G | Chest | G: 1 kHz | Threshold | Threshold | Custom | |
| Butt et al., 2021 [81] | ECG | Chest | | DL | CNN | Custom | |
| Chandramouli et al., 2024 [82] | | | | DL | CNN, LSTM | Actitracker, MHEALTH | |
| Chelli et al., 2019 [83] | A, G | Chest | A: ±8 g, 100 Hz, G: ±2000°/s, 100 Hz | ML, Other | KNN, SVM, ANN | Custom | |
| Chen et al., 2018 [84] | A | | ±2 g to ±4 g, 96.35 to 202.1 Hz | Threshold | Threshold | Custom | Stat |
| Gibson et al., 2016 [47] | A | Chest | 50 Hz | ML, Other | ANN, KNN | Custom | |
| Gulati et al., 2021 [49] | A, G | Wrist | | ML | RF, SVM, NB, DT, ANN | ADL, ARFall | Dyn |
| He et al., 2016 [34] | A, G | Neck | A: ±16 g, G: ±2000°/s | ML, Other | KNN, NB, ANN, DT | Custom | |
| He et al., 2017 [85] | A, G | | A: ±16 g, 100 Hz, G: 2000°/s, 100 Hz | ML, Other | KNN, NB, DT | Custom | |
| Jahanjoo et al., 2020 [15] | A | Waist | | Threshold | Threshold | tfall, MobiFall | |
| Jantaraprim et al., 2012 [86] | A | Chest | 1 kHz | Other | | Custom | |
| Kerdegari et al., 2015 [87] | A | Waist | ±3 g, 100 Hz | Other | | Custom | |
| Khojasteh et al., 2018 [88] | A | Wrist, Waist | 16 to 204.8 Hz | ML, Other | DT, SVM | UMAFall | |
| Kraft et al., 2020 [89] | A | Wrist, Waist | | DL | CNN | Notch, MUMA, SimFall, Smartwatch, SmartFall, UPFall | |
| Liaqat et al., 2021 [90] | A | In the pocket | | ML, DL, Other | LR, RF, KNN, SVM, DT, MLP, CNN, LSTM | Custom (experimental, 30 subjects, 6 ADL) | Stat |
| Martins et al., 2022 [91] | A, G, M | Lower back, Thighs, Waist, Foot | | ML, Other | KNN | SisFall, FallAllD, FARSEEING, UCI HAR, UMAFall, Custom | |
| Mauldin et al., 2018 [41] | A | Wrist, Waist | ±8 g to ±16 g, 21.25 to 100 Hz | ML, Other | NB, SVM | Smartwatch, Notch, Farseeing | |
| Medrano et al., 2017 [92] | A | | 50 Hz | ML, Other | SVM | tfall | Stat |
| Miah et al., 2024 [93] | A, G, M | | | ML, DL, Other | SVM, HMM, GRU, CNN, LSTM | WISDM, PAMAP2, USCHAD, Opportunity, UCI HAR | |
| Nyan et al., 2008 [94] | A, G | Waist, Thigh | A: ±4 g, G: 150°/s | Threshold | Threshold | Custom | |
| Özdemir et al., 2014 [76] | A, G, M | Head, Chest, Waist, Wrist, Thigh, Ankle | A: ±13 g, 25 Hz, G: ±1200°/s, 25 Hz, M: ±1.5 Gauss, 25 Hz | ML, Other | KNN, SVM, ANN | Custom | |
| Özdemir et al., 2016 [95] | A, G, M | Head, Chest, Waist, Wrist, Thigh, Ankle | A: ±13 g, 25 Hz, G: ±1200°/s, 25 Hz, M: ±1.5 Gauss, 25 Hz | ML, Other | KNN, SVM, ANN | Custom | |
| Pan et al., 2021 [96] | A, AV, Mag | Shoulder, Waist, Foot | | Other | | Custom | |
| Putra et al., 2018 [97] | A | Chest, Waist | 100, 200 Hz | DL | CNN | Cogent, SisFall | |
| Rashidpour et al., 2016 [98] | A, G | Thigh | A: ±2 g, 87 Hz, G: ±2000°/s, 200 Hz | Other | | MobiFall | |
| Ren et al., 2016 [99] | A | Waist | 62.5 Hz | Other | | Custom | |
| Rescio et al., 2018 [100] | EMG | Leg | 1 kHz | Other | | Custom | |
| Sabatini et al., 2016 [101] | A, G, M | Waist | A: ±4 g, 50 Hz, G: 2000°/s | Threshold | Threshold | Custom | |
| Santos et al., 2019 [38] | A | Wrist, Waist | ±8 g to ±16 g, 21.25 to 256 Hz | DL | CNN, LSTM | URFD, Notch, Smartwatch | |
| Sarabia-Jácome et al., 2020 [102] | A | Waist | ±16 g, 100 Hz | ML, DL, Other | LSTM, GRU, SVM, KNN | SisFall | Dyn |
| Shahzad et al., 2018 [103] | A | Waist, Thigh | 64 Hz | ML | SVM, ANN, KNN, NB | Custom | |
| Suriani et al., 2018 [104] | A | Hip, Thigh, Foot | ±3 g, 50 Hz | ML | KNN, SVM | Custom | |
| Torti et al., 2019 [42] | A | | | DL | LSTM | SisFall | Dyn |
| Tunca et al., 2019 [105] | A, G, M | Foot | | ML, DL | SVM, RF, MLP, HMM, LSTM | Custom | |
| Wu et al., 2018 [64] | A, AV | | A: ±16 g, 20 Hz, G: 2000°/s, 100 Hz | Other | | Custom | |
| Xi et al., 2017 [106] | EMG | Thigh, Leg | 1024 Hz | Other | | Custom | |
| Yacchirema et al., 2018 [35] | A | Waist | | ML, Other | DT, SVM, MLP, KNN | SisFall | |
| Yoo et al., 2018 [107] | A | Wrist | 50 Hz | Other | | Custom | Dyn |
| Yuwono et al., 2012 [108] | A | Right pocket | ±6 g, 20 Hz | Other | | Custom | |
| Non-wearable sensors | | | | | | | |
| Adnan et al., 2018 [109] | AS | Ext | 16 to 48 kHz | ML | SVM | Custom | |
| Alam et al., 2023 [110] | C | Ext | | DL | CNN | CAUCAFall, GMDCSA | |
| Berlin et al., 2022 [48] | C | Ext | | DL | CNN | URFD, FDD | |
| de Miguel et al., 2017 [111] | C | Ext | | ML | KNN | Custom | |
| Ding et al., 2023 [20] | R | Ext | | DL, ML, Other | CNN, KNN, LSTM | Custom | |
| Droghini et al., 2017 [46] | AS | Ext | 44.1 kHz | ML | SVM | Custom | |
| Droghini et al., 2017 [112] | AS | Ext | 44.1 kHz | ML | SVM | Custom | |
| Fan et al., 2017 [113] | C | Ext | | ML, Other | MLP, SVM | Custom | |
| Guerra et al., 2020 [114] | C | Ext | | Other | | Fall detection, Fall detection testing | Stat and Dyn |
| Guerra et al., 2022 [115] | C | Ext | | DL | GRU, LSTM | Custom | Dyn |
| Guerra et al., 2023 [17] | C | Ext | | DL | LSTM | Custom | Dyn |
| Helen Victoria et al., 2021 [116] | R | Ext | 400 MHz, 5.8 GHz | DL | CNN | University of Glasgow | |
| Hu et al., 2014 [117] | C | Ext | 100 Hz | Other | | Custom | |
| Huu et al., 2022 [118] | C | Ext | | DL, ML, Other | SVM, CNN, LSTM | Human pose, Custom | |
| Karayaneva et al., 2023 [119] | C | Ext | | DL, Other | CNN, LSTM | Custom | Stat and Dyn |
| Li et al., 2012 [120] | AS | Ext | 20 kHz | Other | | Custom | |
| Li et al., 2018 [121] | C | Ext | | Other | | Custom | |
| Li et al., 2020 [122] | R | Ext | 7.3 to 25 GHz | DL | LSTM | Custom | |
| Li et al., 2021 [123] | C | Ext | | Other | | NTU RGB + D | Dyn |
| Li et al., 2023 [124] | R | Ext | | DL, Other | CNN, LSTM | Custom | |
| Liu et al., 2019 [28] | V | Ext | | Other | | Custom | |
| Martelli et al., 2014 [125] | C | Ext | 100 Hz | DL | CNN | Custom | Dyn |
| Min et al., 2018 [126] | C | Ext | 256 Hz | DL | CNN | URFD, Custom | |
| Min et al., 2018 [127] | C | Ext | | ML | SVM | TST Fall | |
| Natarajan et al., 2025 [128] | | Ext | | ML | SVM | Custom | |
| Qiao et al., 2022 [129] | R | Ext | | DL, Other | CNN | Custom | |
| Singh et al., 2019 [22] | T | Ext | | ML | LR, SVM, KNN, DT, RF | Custom | Stat |
| Spasova et al., 2016 [130] | IR | Ext | | ML | SVM | Custom | Stat |
| Wang et al., 2020 [19] | A + R | Ext | | ML, Other | HML | Custom | |
| Zahan et al., 2021 [131] | C | Ext | | DL, Other | CNN | UWA3D, NTU60, NTU120 | |
| Hybrid solution | | | | | | | |
| Alabdulkreem et al., 2023 [132] | | | | DL, Other | CNN | Custom | |
| Benhaili et al., 2025 [45] | A, G, C | Waist | A: 50 Hz, G: 50 Hz, C: 200 Hz | DL, Other | CNN, LSTM, GRU | UCI HAR, MHEALTH, SisFall | |
| Cao et al., 2024 [133] | | | | DL | CNN, GRU | UCI HAR, HAR70PLUS, HABRD | Dyn |
| Kepski et al., 2018 [134] | A, G, M, C | | 250 Hz | ML | SVM, KNN | URFD | |
| Kwolek et al., 2014 [25] | A, C | Lower back, Ext | 256 Hz | ML | SVM | URFD | Dyn |
| Li et al., 2018 [73] | A, C | Waist, Ext | 50 Hz | ML, Threshold | SVM, Threshold | Custom | |
| Sucerquia et al., 2018 [135] | A, C | Waist | ±16 g, 200 Hz | Threshold | Threshold | SisFall | |

| Authors | Accuracy | Specificity | Sensitivity | Precision | F1-Score | Training Time | Testing Time |

|---|---|---|---|---|---|---|---|

| Wearable sensors | |||||||

| Agrawal et al., 2023 [77] | X | X | X | ||||

| Al-Hassani et al., 2023 [43] | X | X | X | X | |||

| Ankalaki et al., 2024 [78] | X | X | |||||

| Bourke et al., 2007 [79] | X | ||||||

| Bourke et al., 2008 [80] | X | X | X | ||||

| Butt et al., 2021 [81] | X | ||||||

| Chandramouli et al., 2024 [82] | X | ||||||

| Chelli et al., 2019 [83] | X | X | |||||

| Chen et al., 2018 [84] | X | X | |||||

| Gibson et al., 2016 [47] | X | X | X | X | X | ||

| Gulati et al., 2021 [49] | X | X | X | X | X | X | |

| He et al., 2016 [34] | X | X | |||||

| He et al., 2017 [85] | X | X | X | ||||

| Jahanjoo et al., 2020 [15] | X | X | |||||

| Jantaraprim et al., 2012 [86] | X | X | |||||

| Kerdegari et al., 2015 [87] | X | X | X | ||||

| Khojasteh et al., 2018 [88] | X | X | X | X | |||

| Kraft et al., 2020 [89] | X | X | X | X | |||

| Liaqat et al., 2021 [90] | X | X | X | X | |||

| Martins et al., 2022 [91] | X | X | X | X | X | X | |

| Mauldin et al., 2018 [41] | X | X | X | ||||

| Medrano et al., 2017 [92] | X | X | |||||

| Miah et al., 2024 [93] | X | X | X | X | |||

| Nyan et al., 2008 [94] | X | X | |||||

| Özdemir et al., 2014 [76] | X | X | X | X | X | ||

| Özdemir et al., 2016 [95] | X | X | |||||

| Pan et al., 2021 [96] | X | X | X | ||||

| Putra et al., 2018 [97] | X | X | X | ||||

| Rashidpour et al., 2016 [98] | X | X | |||||

| Ren et al., 2016 [99] | X | X | X | ||||

| Rescio et al., 2018 [100] | X | X | |||||

| Sabatini et al., 2016 [101] | X | X | |||||

| Santos et al., 2019 [38] | X | X | X | X | |||

| Sarabia-Jácome et al., 2020 [102] | X | X | X | ||||

| Shahzad et al., 2018 [103] | X | X | X | ||||

| Suriani et al., 2018 [104] | X | ||||||

| Torti et al., 2019 [42] | X | X | X | ||||

| Tunca et al., 2019 [105] | X | ||||||

| Wu et al., 2018 [64] | X | X | |||||

| Xi et al., 2017 [106] | X | X | X | ||||

| Yacchirema et al., 2018 [35] | X | X | X | X | X | ||

| Yoo et al., 2018 [107] | X | X | X | ||||

| Yuwono et al., 2012 [108] | X | ||||||

| Non-wearable sensors | |||||||

| Adnan et al., 2018 [109] | X | X | X | X | |||

| Alam et al., 2023 [110] | X | X | X | X | X | ||

| Berlin et al., 2022 [48] | X | X | X | X | X | ||

| de Miguel et al., 2017 [111] | X | X | X | X | |||

| Ding et al., 2023 [20] | X | X | X | X | X | X | |

| Droghini et al., 2017 [46] | X | ||||||

| Droghini et al., 2017 [112] | X | ||||||

| Fan et al., 2017 [113] | X | ||||||

| Guerra et al., 2020 [114] | X | X | X | X | |||

| Guerra et al., 2022 [115] | X | X | X | ||||

| Guerra et al., 2023 [17] | X | ||||||

| Helen Victoria et al., 2021 [116] | X | ||||||

| Hu et al., 2014 [117] | X | X | |||||

| Huu et al., 2022 [118] | X | X | X | ||||

| Karayaneva et al., 2023 [119] | X | ||||||

| Li et al., 2012 [120] | X | X | X | ||||

| Li et al., 2018 [121] | X | X | X | ||||

| Li et al., 2020 [122] | X | ||||||

| Li et al., 2021 [123] | X | ||||||

| Li et al., 2023 [124] | X | X | X | X | |||

| Liu et al., 2019 [28] | X | X | |||||

| Martelli et al., 2014 [125] | X | X | X | ||||

| Min et al., 2018 [126] | X | X | X | ||||

| Min et al., 2018 [127] | X | ||||||

| Natarajan et al., 2025 [128] | X | X | X | ||||

| Qiao et al., 2022 [129] | X | X | X | X | |||

| Singh et al., 2019 [22] | X | X | X | X | X | X | |

| Spasova et al., 2016 [130] | X | X | X | ||||

| Wang et al., 2020 [19] | X | X | X | ||||

| Zahan et al., 2021 [131] | X | X | X | X | X | ||

| Hybrid solution | |||||||

| Alabdulkreem et al., 2023 [132] | X | ||||||

| Benhaili et al., 2025 [45] | X | ||||||

| Cao et al., 2024 [133] | X | X | |||||

| Kepski et al., 2018 [134] | X | X | |||||

| Kwolek et al., 2014 [25] | X | X | X | X | |||

| Li et al., 2018 [73] | X | ||||||

| Sucerquia et al., 2018 [135] | X | X | X |
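
For readers comparing the metric columns above, the following is a minimal sketch of how the five performance measures relate to one another, assuming the standard confusion-matrix definitions (a "positive" is a fall event). The class and function names are hypothetical and chosen for illustration only; individual studies included in the review may compute or report these quantities differently.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Hypothetical container for one fall-detection experiment."""
    tp: int  # falls correctly detected
    tn: int  # non-fall activities correctly ignored
    fp: int  # non-fall activities flagged as falls
    fn: int  # falls that were missed


def safe_ratio(numerator: float, denominator: float) -> float:
    """Return numerator / denominator, or NaN when the ratio is undefined."""
    return numerator / denominator if denominator else math.nan


def fall_detection_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Standard definitions of the five metrics (assumed, not study-specific)."""
    total = c.tp + c.tn + c.fp + c.fn
    return {
        "accuracy": safe_ratio(c.tp + c.tn, total),
        "specificity": safe_ratio(c.tn, c.tn + c.fp),
        "sensitivity": safe_ratio(c.tp, c.tp + c.fn),
        "precision": safe_ratio(c.tp, c.tp + c.fp),
        "f1_score": safe_ratio(2 * c.tp, 2 * c.tp + c.fp + c.fn),
    }


if __name__ == "__main__":
    # Illustrative counts only; they are not taken from any reviewed study.
    example = ConfusionCounts(tp=45, tn=50, fp=0, fn=5)
    for name, value in fall_detection_metrics(example).items():
        print(f"{name}: {value:.1%}")
```

With these illustrative counts, specificity and precision both reach 100% while sensitivity is 90% and accuracy 95%, which shows why a perfect score on one metric does not by itself imply a perfect score on the others.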

| Authors | Accuracy | Specificity | Sensitivity | Precision | F1-Score | Datasets |

|---|---|---|---|---|---|---|

| Wearable sensors | ||||||

| Bourke et al., 2007 [79] | 100% (1) | Custom | ||||

| Bourke et al., 2008 [80] | 100% (1) | 100% (1) | 100% (1) | Custom | ||

| Chelli et al., 2019 [83] | 100% (1) | Custom | ||||

| Jahanjoo et al., 2020 [15] | 100% (4) | 100% (3) | tfall, MobiFall | |||

| Khojasteh et al., 2018 [88] | 100% (1) | UMAFall | ||||

| Mauldin et al., 2018 [41] | 100% (2) | Smartwatch, Notch, Farseeing | ||||

| Nyan et al., 2008 [94] | 100% (1) | Custom | ||||

| Özdemir et al., 2014 [76] | 100% (2) | Custom | ||||

| Pan et al., 2021 [96] | 100% (1) | 100% (1) | 100% (3) | Custom | ||

| Putra et al., 2018 [97] | 100% (4) | Cogent, SisFall | ||||

| Rashidpour et al., 2016 [98] | 100% (1) | 100% (1) | MobiFall | |||

| Sabatini et al., 2016 [101] | 100% (1) | Custom | ||||

| Santos et al., 2019 [38] | 100% (3) | 100% (3) | URFD, Notch, Smartwatch | |||

| Yacchirema et al., 2018 [35] | 100% (2) | 100% (2) | SisFall | |||

| Yoo et al., 2018 [107] | 100% (4) | 100% (5) | 100% (4) | Custom | ||

| Non-wearable sensors | ||||||

| Alam et al., 2023 [110] | 100% (1) | 100% (1) | Custom | |||

| Berlin et al., 2022 [48] | 100% (1) | 100% (2) | 100% (1) | 100% (2) | 100% (1) | URFD, FDD |

| Droghini et al., 2017 [112] | 100% (1) | Custom | ||||

| Li et al., 2012 [120] | 100% (1) | Custom | ||||

| Li et al., 2018 [121] | 100% (1) | Custom | ||||

| Natarajan et al., 2025 [128] | 100% (1) | Custom | ||||

| Singh et al., 2019 [22] | 100% (3) | Custom | ||||

| Wang et al., 2020 [19] | 100% (2) | 100% (1) | Custom | |||

| Hybrid solution | ||||||

| Kepski et al., 2018 [134] | 100% (1) | 100% (1) | URFD | |||

| Kwolek et al., 2014 [25] | 100% (2) | URFD |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gorce, P.; Jacquier-Bret, J. Fall Detection in Elderly People: A Systematic Review of Ambient Assisted Living and Smart Home-Related Technology Performance. Sensors 2025, 25, 6540. https://doi.org/10.3390/s25216540
