A2G-SRNet: An Adaptive Attention-Guided Transformer and Super-Resolution Network for Enhanced Aircraft Detection in Satellite Imagery

Abstract

1. Introduction

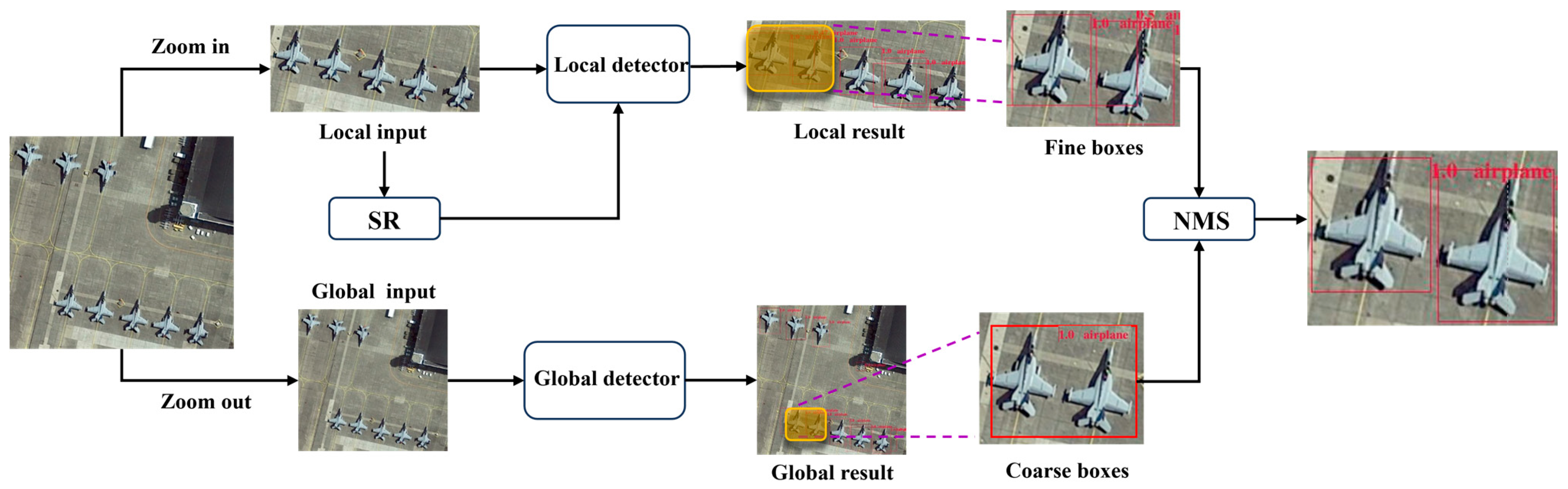

- A hierarchical global-local detection framework that employs a coarse-to-fine strategy for multi-scale aircraft detection in complex airport scenes. The framework first performs coarse detection on downsampled images to identify potential regions, then adaptively refines detection for small and densely clustered aircraft targets.

- A Saliency-Aware Tile Selection (SATS) module that uses learnable attention mechanisms to dynamically identify critical aircraft regions. This removes the need for manual threshold tuning while remaining compatible with standard detection architectures.

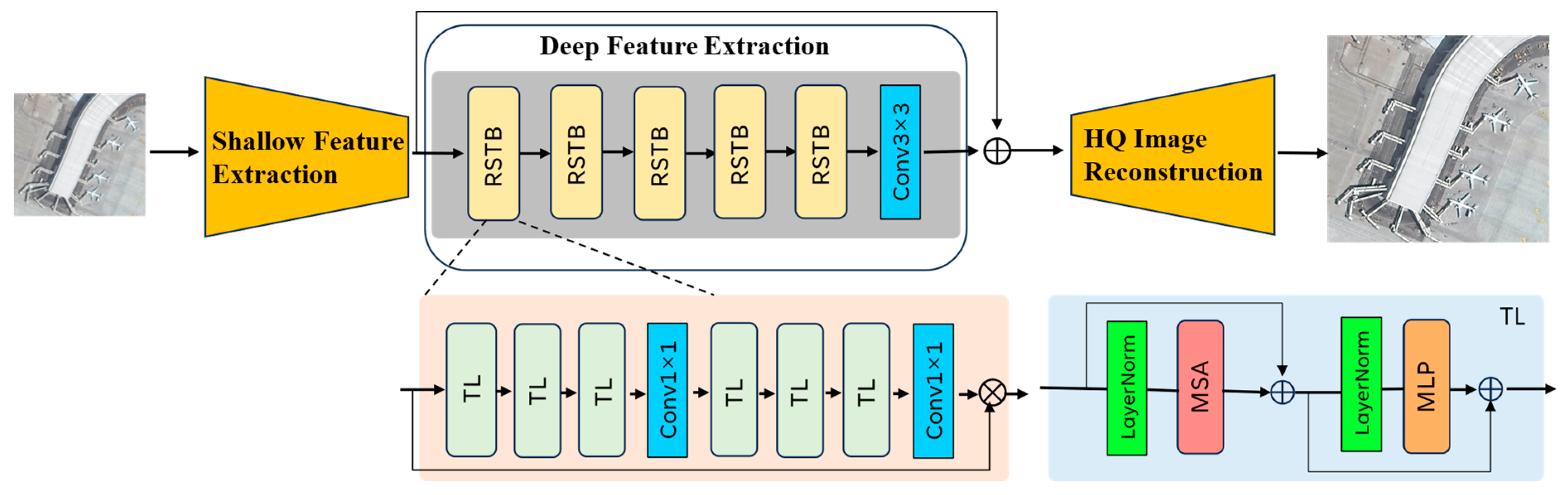

- A Transformer-based Local Tile Refinement (LTR) network that applies super-resolution (SR) only to the identified aircraft-dense regions, using multi-scale feature fusion and attention-guided upsampling, and skips non-target areas to keep computation low.
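The three contributions above describe a control flow that can be sketched in a few lines: score every tile of the coarse image, pick the salient ones, and refine only those. The sketch below is illustrative only. The function names, the tile grid, and in particular the fixed top-k `budget` rule are assumptions standing in for the learned SATS attention, which the paper describes as learnable rather than rule-based.

```python
from typing import Callable, Dict, List, Tuple

Tile = Tuple[int, int]  # (row, col) index in a hypothetical tile grid


def select_tiles(saliency: List[List[float]], budget: int) -> List[Tile]:
    """Return the `budget` highest-scoring tiles.

    Assumption: a plain top-k rule replaces the learned attention of SATS.
    """
    scored = [
        (score, (r, c))
        for r, row in enumerate(saliency)
        for c, score in enumerate(row)
    ]
    # Descending score; ties are broken by grid position for determinism.
    scored.sort(key=lambda item: (-item[0], item[1]))
    return [tile for _, tile in scored[:budget]]


def coarse_to_fine(
    saliency: List[List[float]],
    budget: int,
    refine: Callable[[Tile], str],
) -> Dict[Tile, str]:
    """Refine selected tiles only; every other tile keeps its coarse result."""
    selected = set(select_tiles(saliency, budget))
    results: Dict[Tile, str] = {}
    for r, row in enumerate(saliency):
        for c, _ in enumerate(row):
            tile = (r, c)
            results[tile] = refine(tile) if tile in selected else "coarse-only"
    return results


# Toy saliency scores for a 3 x 3 tile grid (made-up values).
saliency_map = [
    [0.05, 0.10, 0.02],
    [0.80, 0.15, 0.65],
    [0.03, 0.07, 0.01],
]
plan = coarse_to_fine(
    saliency_map, budget=2, refine=lambda tile: "super-resolve + re-detect"
)
```

With this toy input only tiles `(1, 0)` and `(1, 2)` are sent to the refinement step, which mirrors the efficiency argument: the cost of super-resolution scales with the number of selected tiles, not with the image size.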

2. Related Work

2.1. Object Detection Based on Deep Learning

2.2. Object Detection Based on SR

3. Methodology

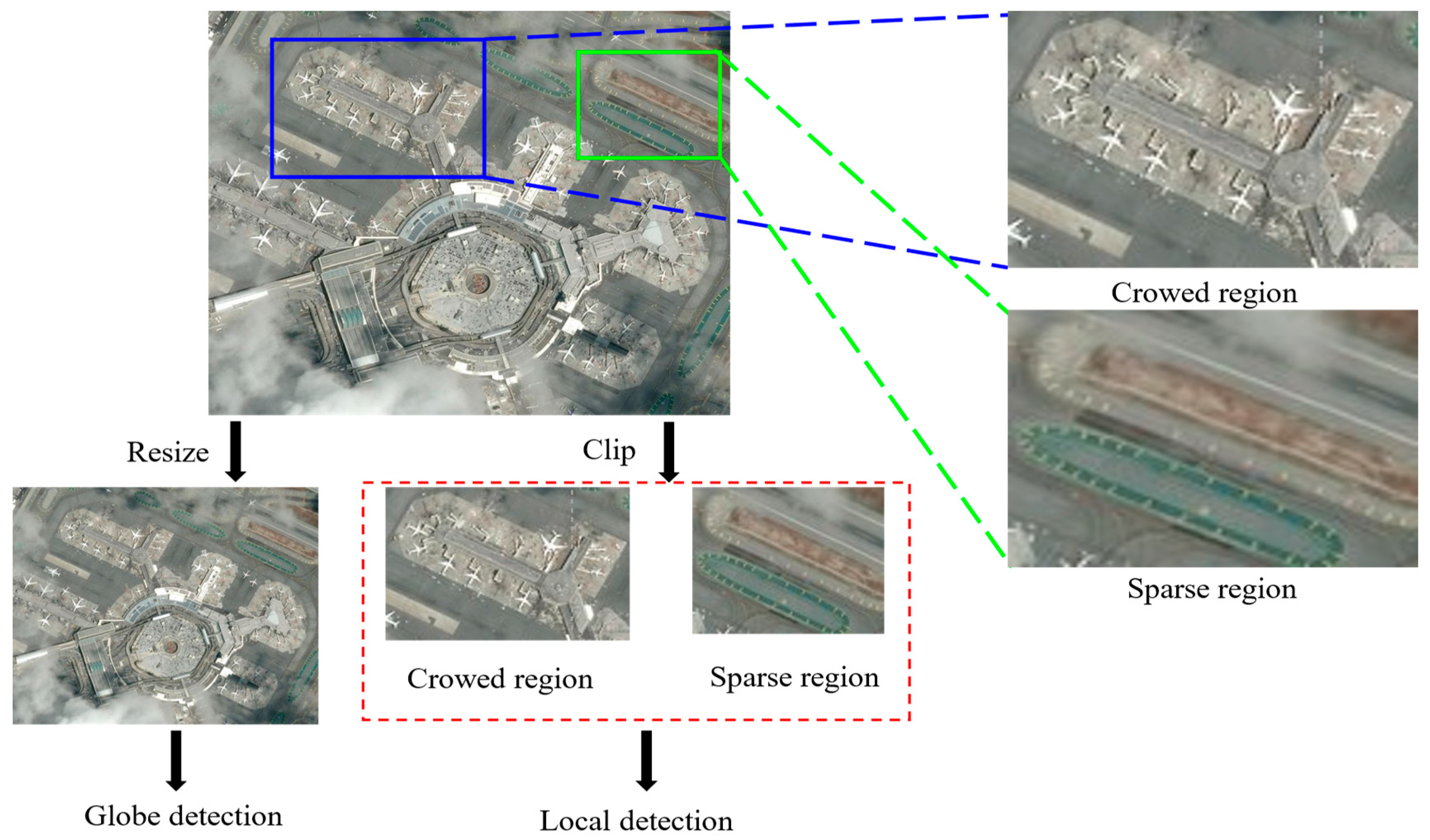

3.1. The Global-Local Coarse-to-Fine Detection Network

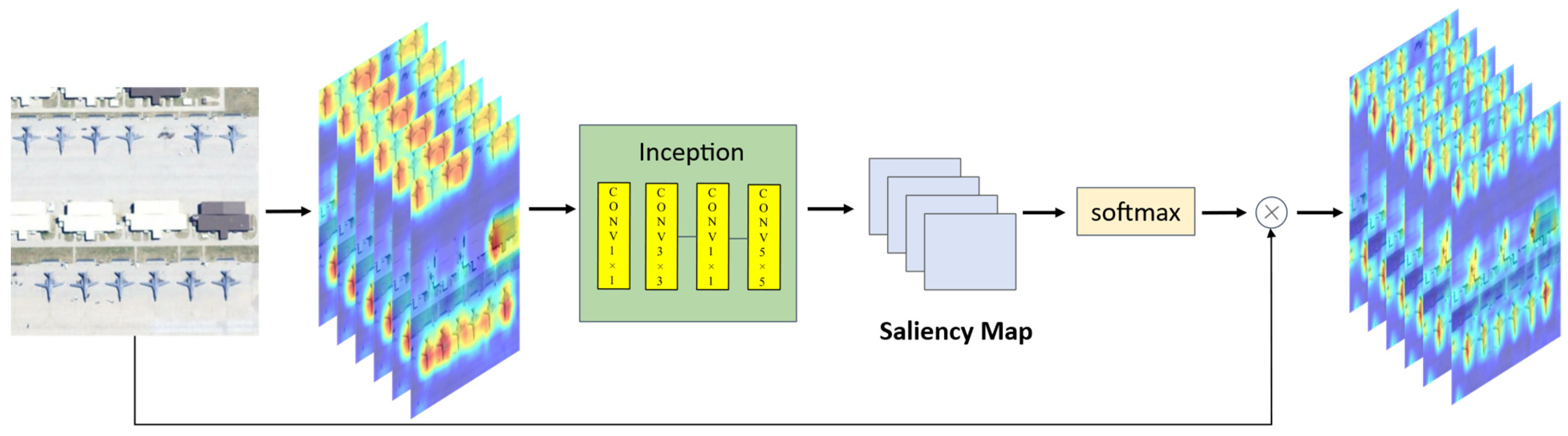

3.2. Saliency-Aware Tile Selection Algorithm

3.2.1. Theoretical Analysis of Saliency-Aware Tile Selection

3.2.2. Architecture and Workflow of Saliency-Aware Tile Selection

3.3. Local Tile Refinement Network

3.4. Training Data Augmentation

3.5. Loss Function

1. Hierarchical Tile Selection Loss

2. Reconstruction Loss

3. Saliency Consistency Loss

4. Experiment

4.1. Experimental Settings

- (1) DIOR: A widely adopted benchmark for remote sensing object detection, DIOR comprises 23,463 optical remote sensing images with 192,472 manually annotated object instances. The dataset spans 20 common object categories, with each image resized to 800 × 800 pixels and spatial resolutions ranging from 0.5 m to 30 m. Annotations are provided in the form of axis-aligned bounding boxes.

- (2) FAIR1M: Designed for fine-grained object recognition, FAIR1M is a large-scale dataset focusing on three major categories: aircraft, ships, and vehicles, further subdivided into 37 fine-grained classes. FAIR1M employs oriented bounding boxes for object annotations, stored in XML format, to better capture the orientation and aspect ratio of targets in remote sensing imagery.
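Because DIOR uses axis-aligned boxes and FAIR1M uses oriented boxes, a detector with an axis-aligned head needs some conversion between the two annotation styles. The sketch below shows one common option, taking the tightest axis-aligned rectangle around an oriented box. It assumes each oriented box is given as four `(x, y)` corner points; the function name is hypothetical, and the paper does not state which conversion, if any, was used.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def obb_to_aabb(corners: List[Point]) -> Tuple[float, float, float, float]:
    """Return (x_min, y_min, x_max, y_max) enclosing the four OBB corners."""
    if len(corners) != 4:
        raise ValueError("an oriented box is expected to have exactly 4 corners")
    xs = [x for x, _ in corners]
    ys = [y for _, y in corners]
    return (min(xs), min(ys), max(xs), max(ys))


# A square rotated by 45 degrees, centred on (10, 10).
rotated = [(10.0, 5.0), (15.0, 10.0), (10.0, 15.0), (5.0, 10.0)]
box = obb_to_aabb(rotated)  # (5.0, 5.0, 15.0, 15.0)
```

The enclosing rectangle here has twice the area of the rotated square, which illustrates why oriented annotations capture elongated or rotated targets more tightly than axis-aligned ones.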

4.2. Experimental Results and Analysis

4.2.1. Object Detection Performance Evaluation of DIOR

4.2.2. Object Detection Performance Evaluation of FAIR1M

5. Discussion

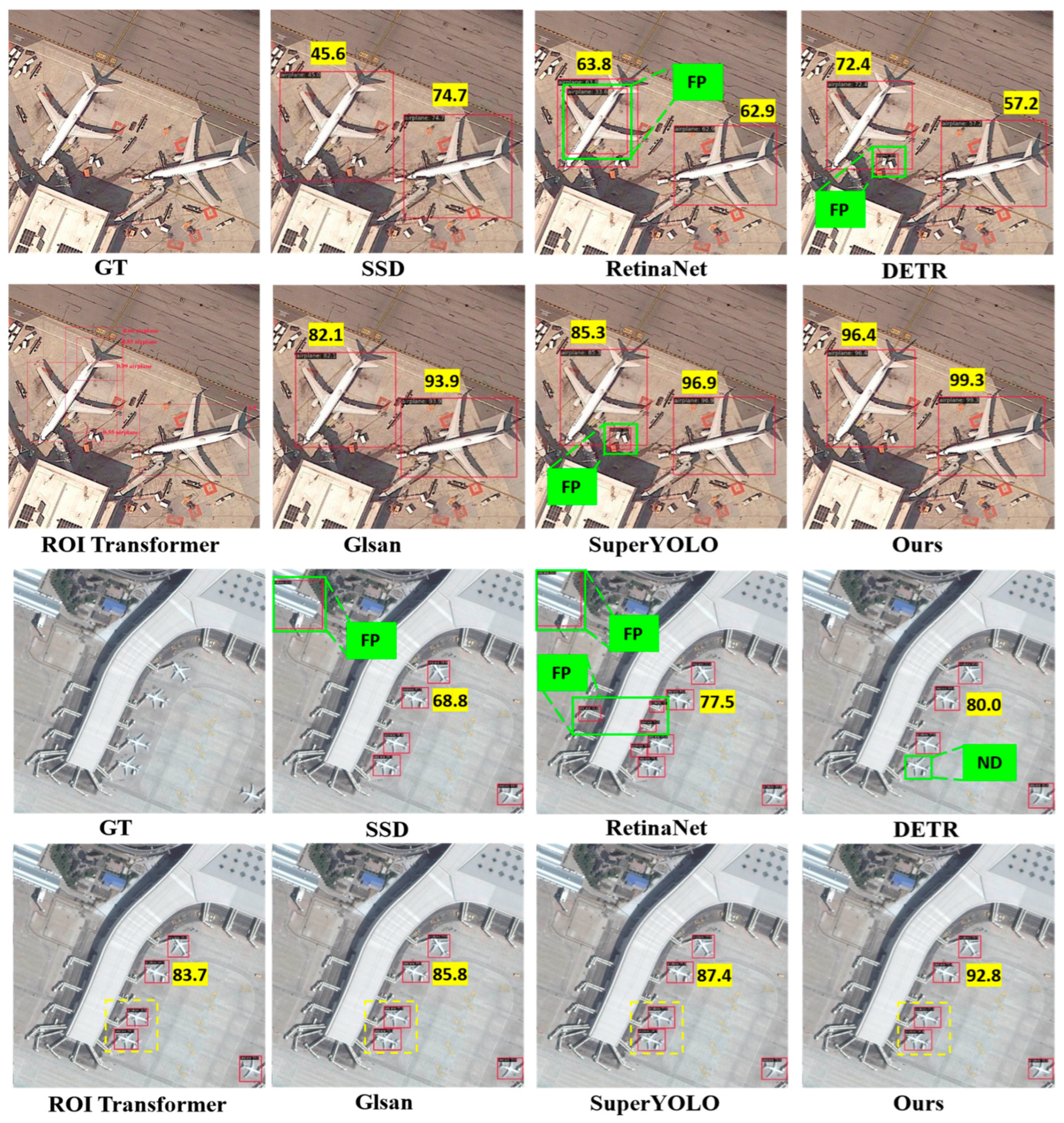

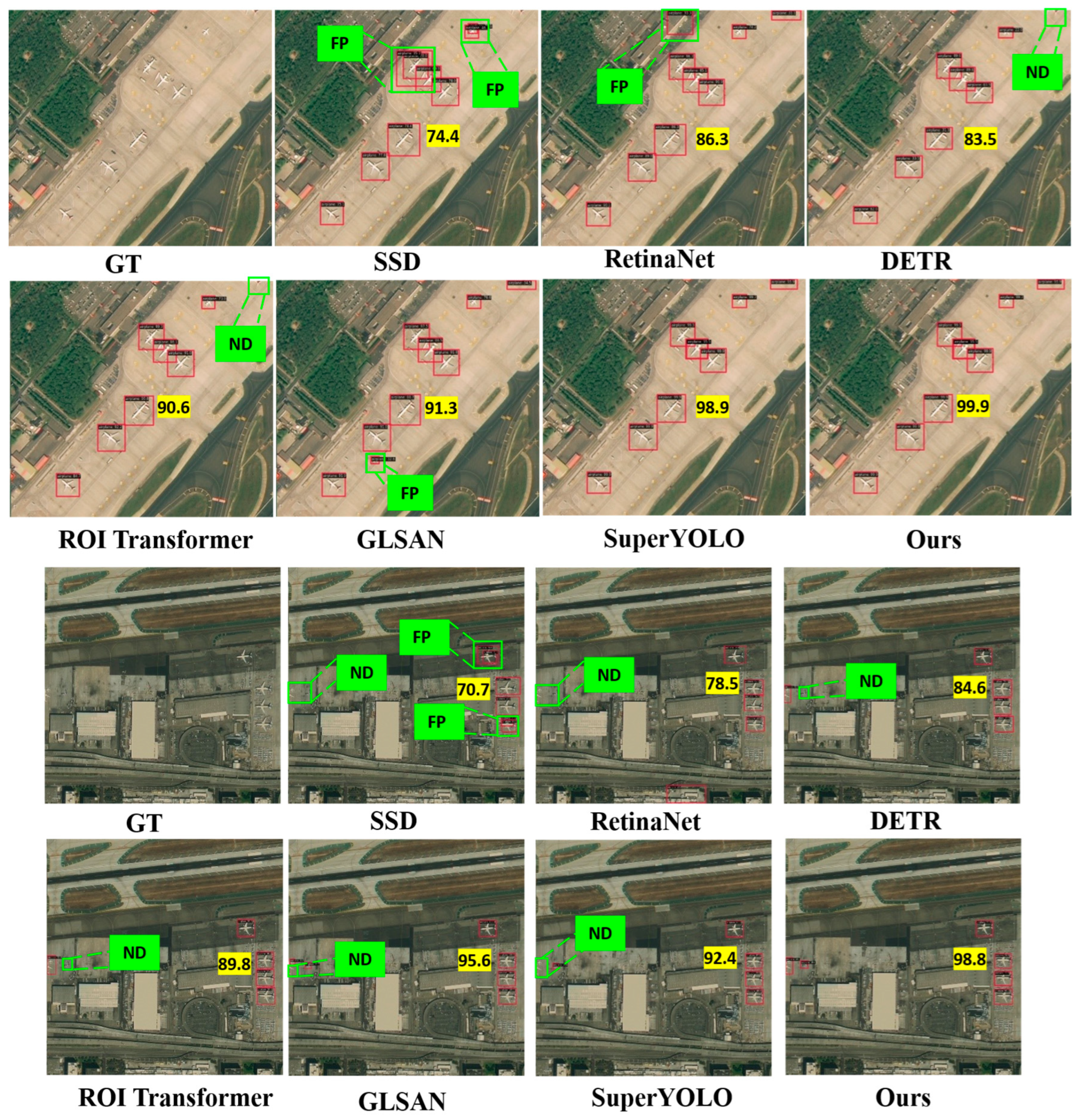

5.1. Accuracy Improvement

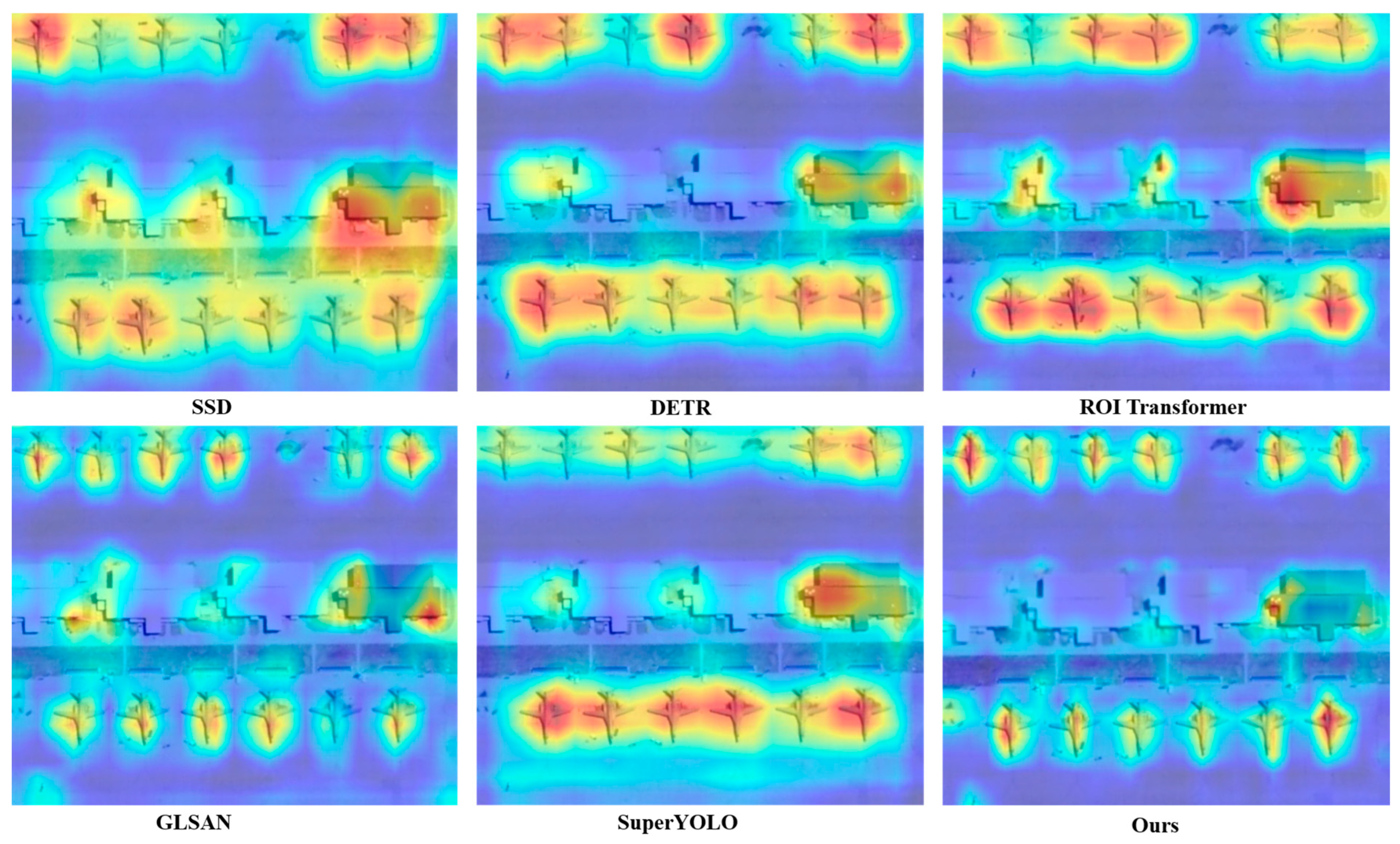

5.2. Visual Analysis

5.3. Ablation Study

5.3.1. Component-Wise Contribution Analysis

5.3.2. Saliency-Guided Attention Mechanism Effectiveness

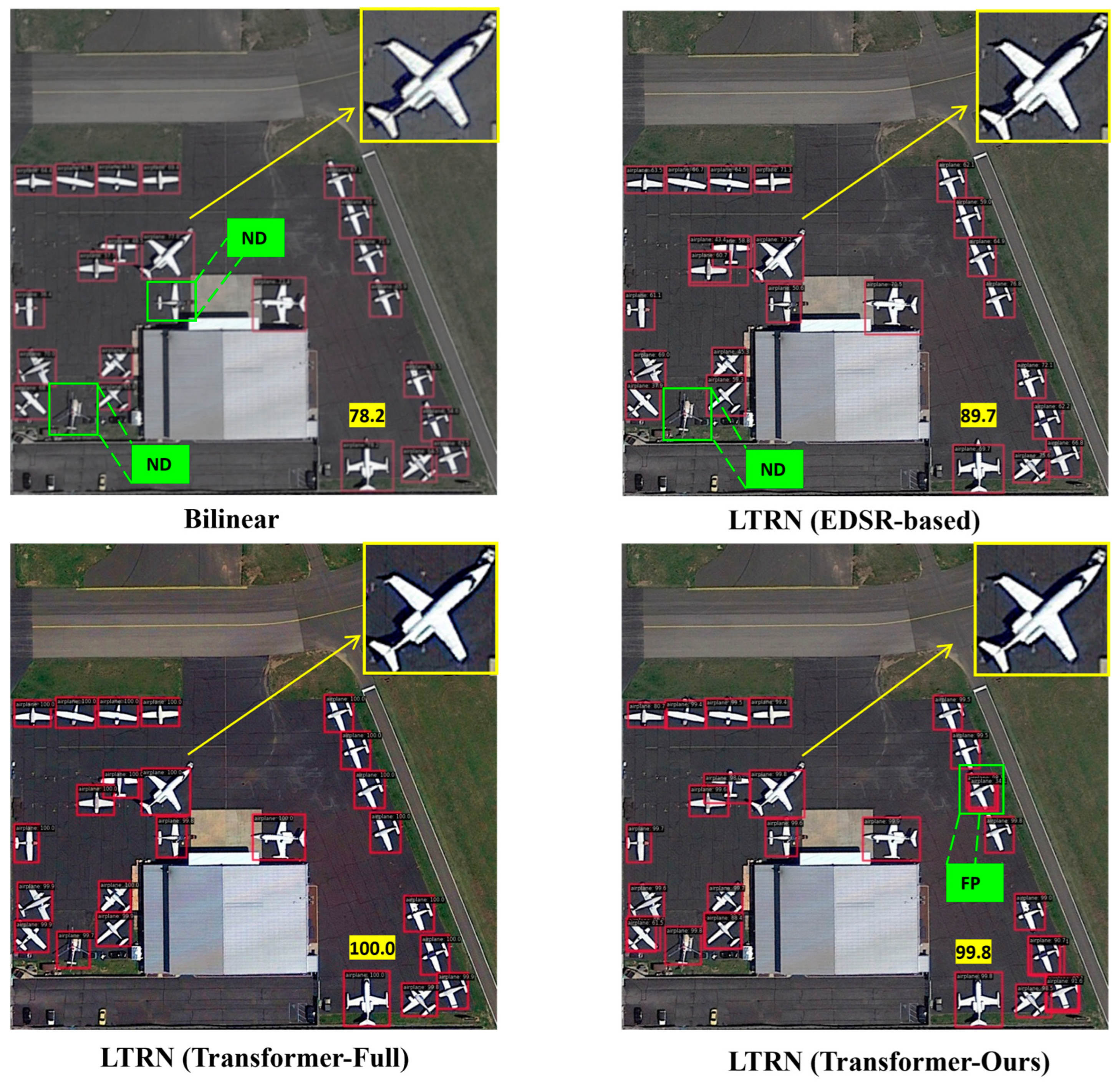

5.3.3. Impact of Local Super-Resolution Enhancement

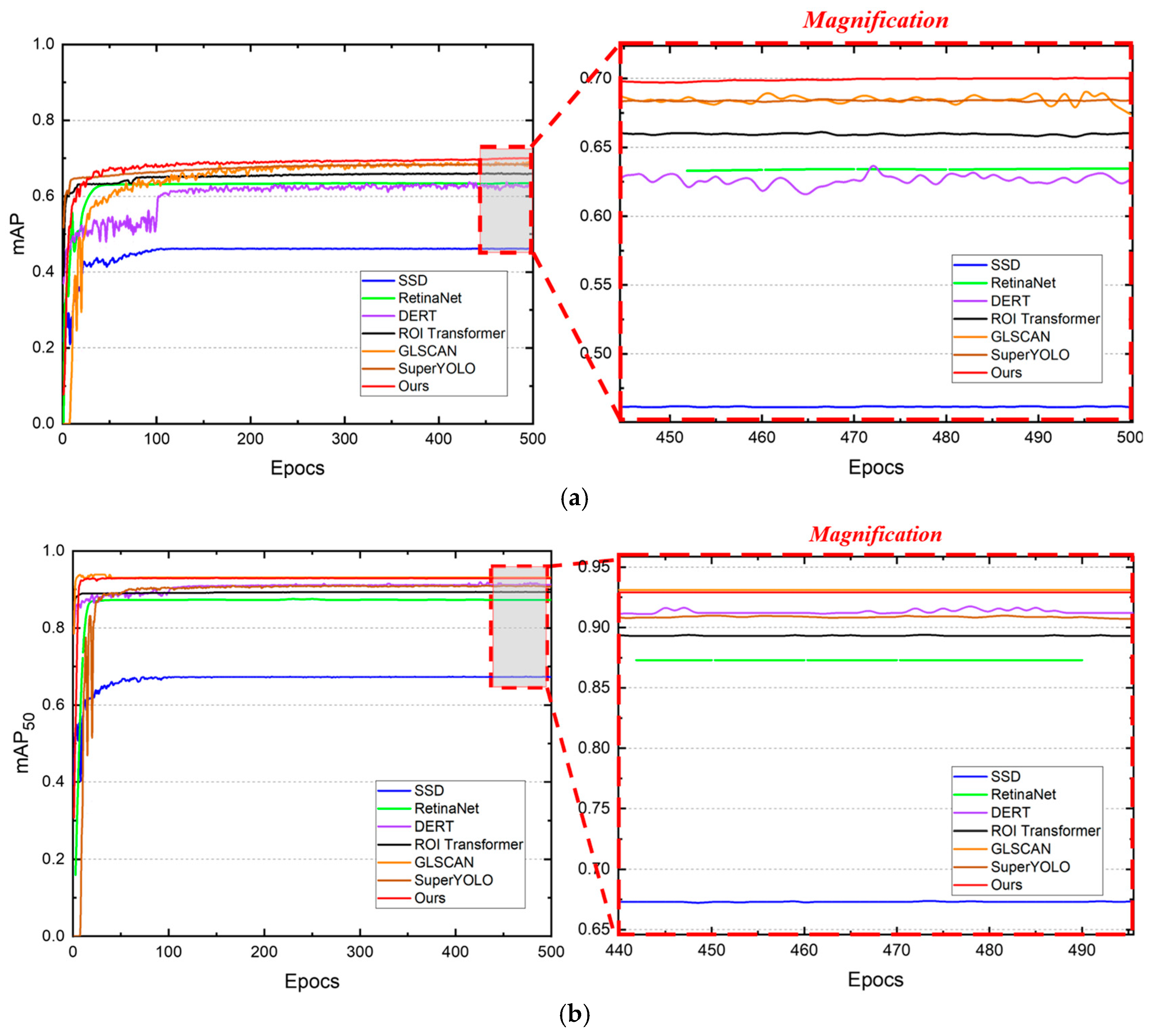

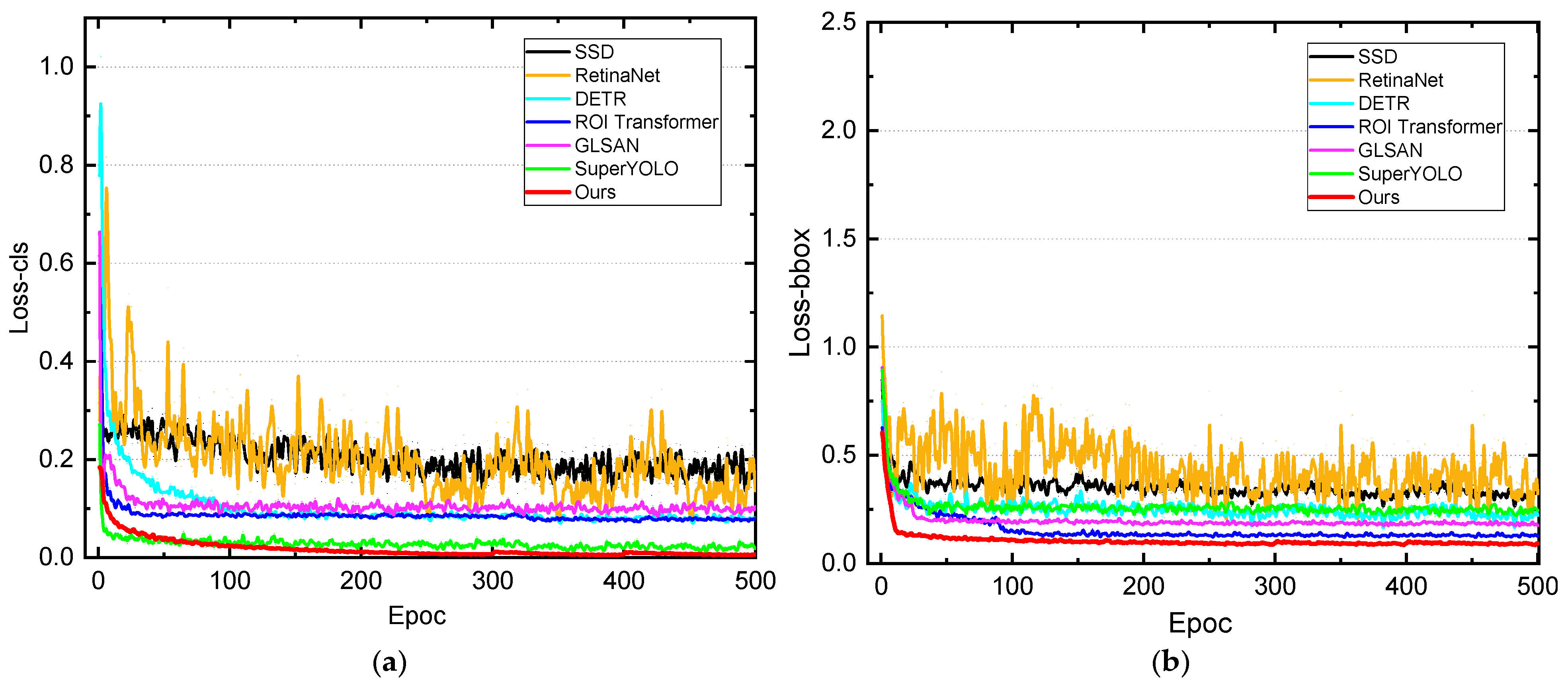

5.4. Loss Chart

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Methods | Baseline | Backbone | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|
| SSD | Single-Stage | VGG-16 | 0.673 | 0.459 | 0.144 | 0.423 | 0.638 |
| RetinaNet | Single-Stage | ResNet50 | 0.873 | 0.582 | 0.181 | 0.543 | 0.649 |
| DETR | End-to-End | ResNet50 | 0.912 | 0.603 | 0.201 | 0.565 | 0.659 |
| ROI Transformer | Faster R-CNN | ResNet50 | 0.893 | 0.531 | 0.235 | 0.543 | 0.575 |
| GLSAN | Faster R-CNN | ResNet50 | 0.929 | 0.647 | 0.349 | 0.599 | 0.643 |
| SuperYOLO | Modified YOLOv5s | CSPDarknet | 0.909 | 0.558 | 0.245 | 0.547 | 0.771 |
| Ours | Faster R-CNN | ResNet50 | 0.931 | 0.794 | 0.359 | 0.655 | 0.756 |
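The AP50 and AP75 columns in the table above differ only in the intersection-over-union (IoU) threshold at which a prediction counts as a match (0.5 and 0.75), while APS, APM, and APL split objects by size. The sketch below illustrates both ideas. It assumes the COCO-style area cut-offs of 32² and 96² pixels for the size buckets; the paper's exact evaluation protocol is not shown in this section, and the helper names are hypothetical.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def size_bucket(box: Box) -> str:
    """Assign a box to a size bucket (assumed COCO-style thresholds)."""
    area = (box[2] - box[0]) * (box[3] - box[1])
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


truth = (0.0, 0.0, 20.0, 20.0)
pred = (4.0, 0.0, 24.0, 20.0)  # same size, shifted 4 px to the right
overlap = iou(truth, pred)     # 320 / 480, about 0.667

matches_at_50 = overlap >= 0.50  # counts as a hit for AP50
matches_at_75 = overlap >= 0.75  # does not count for AP75
```

A 4-pixel shift on a 20-pixel target is enough to lose the match at the stricter threshold, which is one reason small-object localisation tends to show up more clearly in AP75 and APS than in AP50.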

| Methods | Baseline | Backbone | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|
| SSD | Single-Stage | VGG-16 | 0.598 | 0.311 | 0.074 | 0.271 | 0.568 |
| RetinaNet | Single-Stage | ResNet50 | 0.684 | 0.551 | 0.172 | 0.412 | 0.712 |
| DETR | End-to-End | ResNet50 | 0.659 | 0.515 | 0.122 | 0.417 | 0.611 |
| ROI Transformer | Faster R-CNN | ResNet50 | 0.739 | 0.524 | 0.281 | 0.468 | 0.587 |
| GLSAN | Faster R-CNN | ResNet50 | 0.718 | 0.625 | 0.386 | 0.507 | 0.745 |
| SuperYOLO | Modified YOLOv5s | CSPDarknet | 0.662 | 0.582 | 0.219 | 0.443 | 0.697 |
| Ours | Faster R-CNN | ResNet50 | 0.832 | 0.669 | 0.507 | 0.569 | 0.760 |

| Methods | Test data | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| Baseline | o | 0.725 | 0.521 | 0.188 | 0.289 | 0.589 |
| Baseline + SATSA | o + c | 0.844 | 0.744 | 0.281 | 0.412 | 0.712 |
| Baseline + SATSA + LTRN | o + c | 0.931 | 0.794 | 0.359 | 0.655 | 0.756 |

| Methods | FPS | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| Full Attention | 15.7 | 0.902 | 0.757 | 0.321 | 0.542 | 0.732 |
| Saliency-Guided (Ours) | 18.3 | 0.931 | 0.794 | 0.359 | 0.655 | 0.756 |

| Methods | APS | APM | APL | FLOPs | FPS |
|---|---|---|---|---|---|
| No Enhancement | 0.221 | 0.453 | 0.618 | 28% | 22.7 |
| Bilinear Upsampling | 0.287 | 0.524 | 0.682 | 35% | 20.1 |
| LTRN (EDSR-based) | 0.337 | 0.589 | 0.737 | 42% | 19.6 |
| LTRN (Transformer-Full) | 0.382 | 0.661 | 0.773 | 100% | 8.4 |
| LTRN (Transformer-Ours) | 0.359 | 0.655 | 0.756 | 65% | 18.3 |
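The accuracy and cost trade-off in the table above is easier to read as ratios against the most expensive variant. The short calculation below uses only the APS, FLOPs, and FPS values from that table. The helper name and the choice of LTRN (Transformer-Full) as the reference point are assumptions made for illustration.

```python
from typing import Dict, Tuple

# (APS, relative FLOPs in percent, FPS), copied from the table above.
variants: Dict[str, Tuple[float, float, float]] = {
    "No Enhancement": (0.221, 28.0, 22.7),
    "Bilinear Upsampling": (0.287, 35.0, 20.1),
    "LTRN (EDSR-based)": (0.337, 42.0, 19.6),
    "LTRN (Transformer-Full)": (0.382, 100.0, 8.4),
    "LTRN (Transformer-Ours)": (0.359, 65.0, 18.3),
}


def relative_to(name: str, reference: str) -> Dict[str, float]:
    """Express one variant's APS, FLOPs, and FPS as ratios of a reference."""
    aps, flops, fps = variants[name]
    ref_aps, ref_flops, ref_fps = variants[reference]
    return {
        "aps_retained": aps / ref_aps,
        "flops_ratio": flops / ref_flops,
        "speedup": fps / ref_fps,
    }


summary = relative_to("LTRN (Transformer-Ours)", "LTRN (Transformer-Full)")
for key, value in summary.items():
    print(f"{key}: {value:.3f}")
```

Read this way, the selective variant keeps roughly 94% of the full Transformer's APS at 65% of its FLOPs while running at a little over twice the frame rate.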

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, N.; Zhang, B.; He, H.; Gao, K.; Liu, Z.; Li, L. A2G-SRNet: An Adaptive Attention-Guided Transformer and Super-Resolution Network for Enhanced Aircraft Detection in Satellite Imagery. Sensors 2025, 25, 6506. https://doi.org/10.3390/s25216506