3.1. The Characteristics of Human and Machine

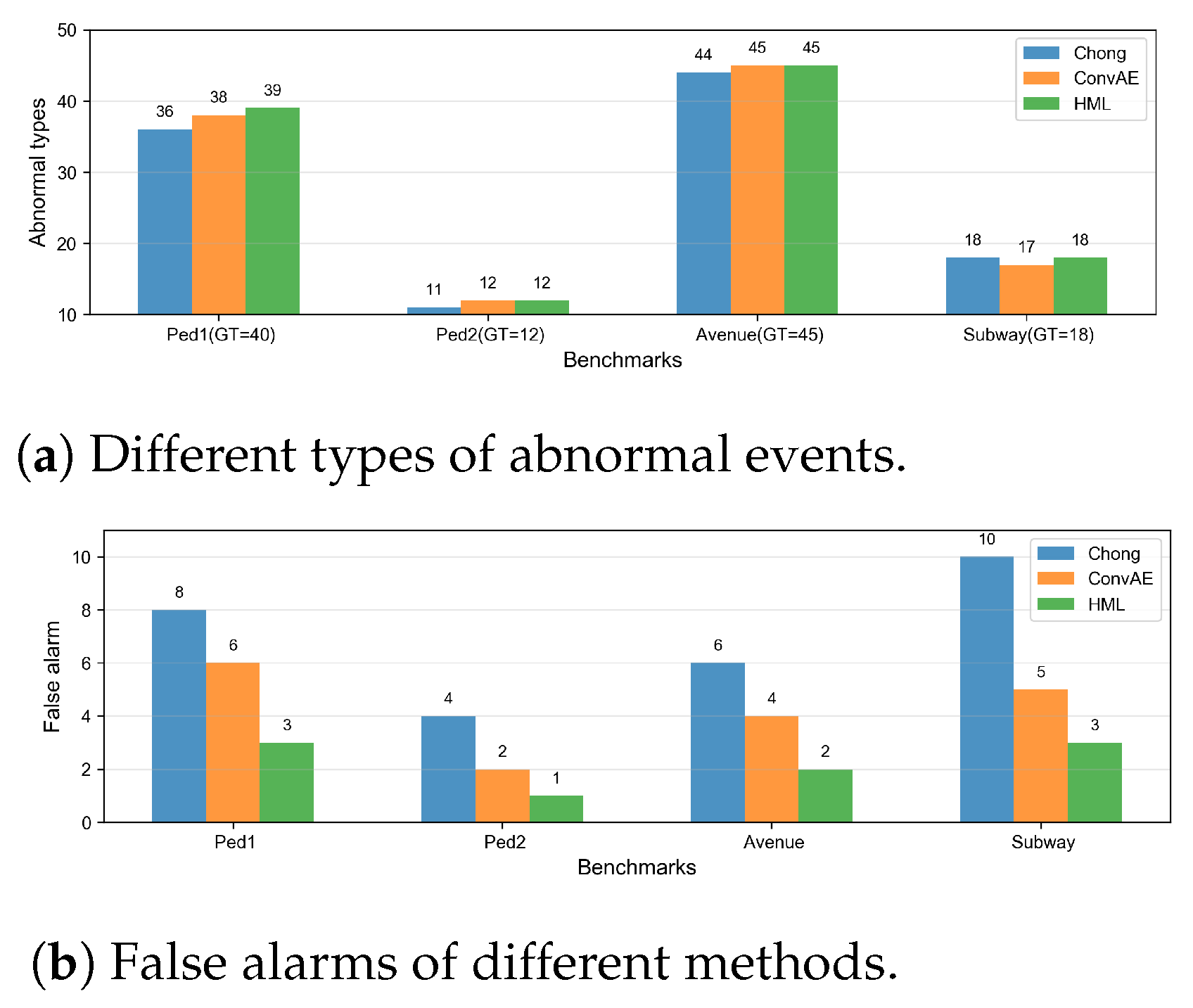

In intelligent systems, specialized models are often designed to address specific issues effectively. However, when faced with a new task, these models tend to fail due to differences in settings and requirements. It would be beneficial to establish a general framework that enables a model to handle data-driven tasks by leveraging human expertise and experience without the need to reconstruct a new system from scratch. Such a framework would allow humans to respond to dynamic situations, integrating human judgment with machine computation to create a complementary effect throughout the task-executing process. Consider a common scenario often depicted in television or film: investigating a homicide or identifying a suspect. Manually searching through vast amounts of surveillance footage to accurately pinpoint a suspect is highly labor-intensive. Although several classical deep learning methods have achieved competitive detection performance, they still suffer from false alarms and missed identifications. These errors arise due to factors such as varying lighting conditions, obstructions, and changes in the appearance of the same person across different outfits.

Humans and machines bring distinct characteristics to the same work. Humans contribute creativity, empathy, reasoning, analytical flexibility, and common sense, and these traits vary from person to person. Intelligent machines, in contrast, offer high speed, large storage, pattern recognition, and consistency. In recent years, a growing number of countries have begun to study human–machine collaborative intelligence.

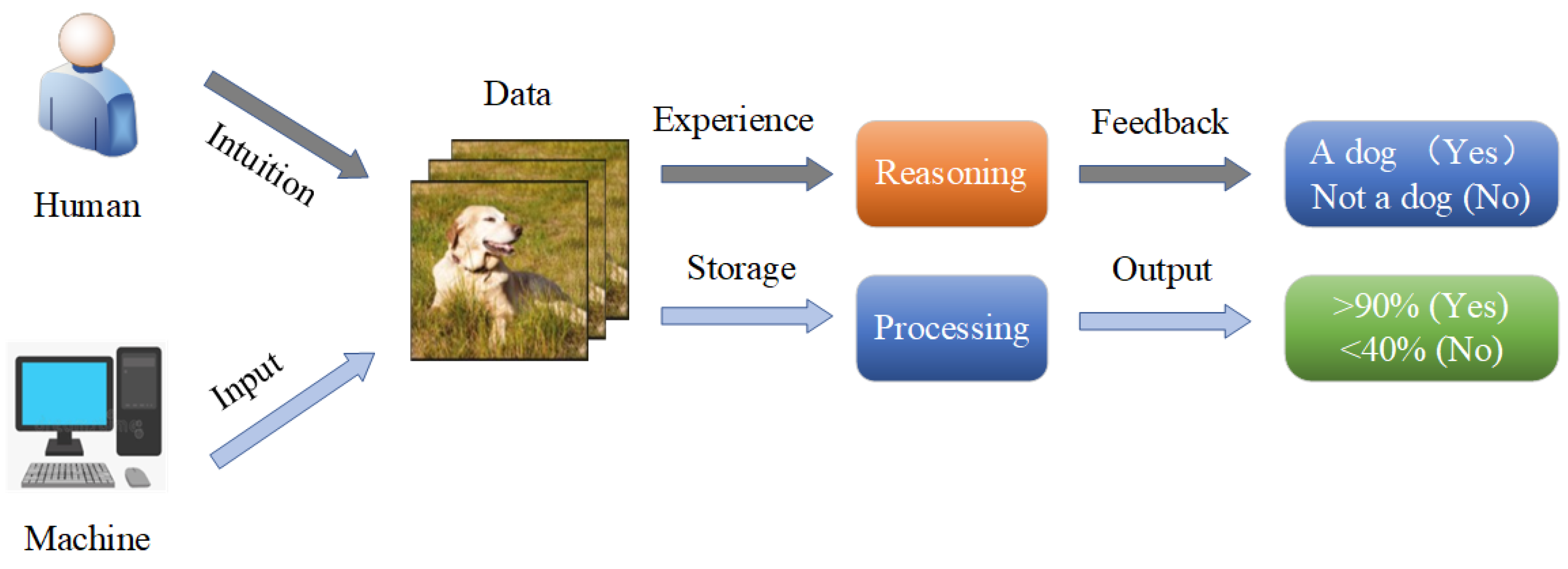

Through observation and careful analysis, we designed a human and machine computing process, which is illustrated in Figure 1. Humans and machines work in a common workspace; when dealing with the same task, they compute in different ways. Humans observe a task intuitively, use their experience to compute or reason, and give the final feedback. The machine first needs the primary data as input, then uses processors and memory for further processing, and finally outputs the result as probabilities.

Human–machine learning is an iterative, mutual learning method that integrates the cognitive reasoning of the human with the intelligent computing of the machine: the human and machine execute according to the rules and interact and cooperate with each other to give full play to their respective advantages on a specific streaming data-driven task. For an unsolved data-driven task, humans and machines work in a cooperative mode: machines provide powerful computing ability to process the most repetitive steps, whereas humans leverage their expertise and experience to guide the machines toward a better direction of optimization or give their judgment in a complicated situation that the machine cannot address properly. A streaming data-driven task is one in which the final outcome is computed from the original data and then executed in a human–machine cooperative mode. Throughout the whole performance cycle, the data are vital: they drive each processing stage forward, and without them nothing would run. In contrast to this category, another type of task depends on specific scenarios, such as autonomous driving and virtual reality applications.

Collaborative learning between humans and machines can be performed in three stages: (1) collecting samples, that is, selecting representative samples to reduce the size of the training set; (2) incorporating human intelligence to adjust the model and iterate in a closed loop; and (3) analyzing the decision-making output by estimating whether the machine algorithm’s output is correct or contains errors and then giving a highly reliable decision.

3.2. HMLSDS Framework

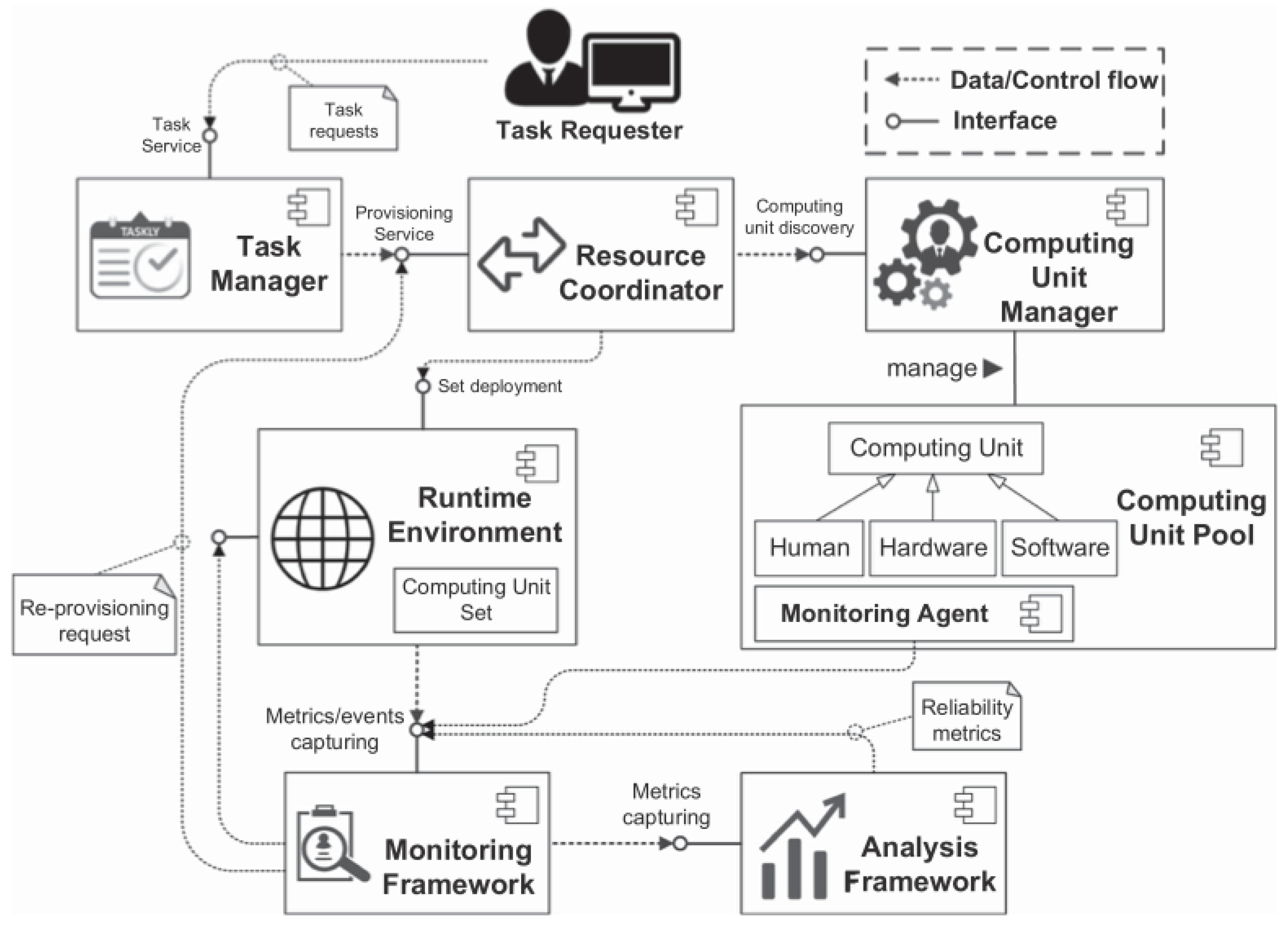

It is important to fuse human cognitive reasoning and intelligent machine computing for subtasks so that the two collaborate in a complementary way. Our proposed framework is illustrated in Figure 2. The framework contains three parts. (1) The first part is initialization, in which data are prepared for computation on a certain task. At this stage, both humans and machines perform a few tasks (human analysis and/or machine preprocessing, such as data cleaning, basic model training, and special sample collection). (2) The second part is computing; here, the machine selects a specific algorithm from the algorithm library, whereas the human draws on a knowledge base. The model is run iteratively until a predefined threshold is met or the budget is exhausted. (3) The third part is evaluation, which assesses the efficiency of human–machine learning. Most importantly, a proportion of the low-confidence data is collected for further training in an iterative pattern. The collected samples are mainly hard ones, such as heavily occluded crowds or a person who is not clearly visible but is dressed similarly to the target.

Compared with the usual ML procedure, our framework is distinctive in fusing human intelligence and machine intelligence; the advantage is that a smaller human workload yields higher model accuracy. Humans can clean data in the initialization stage; in addition, domain experts can give feedback on the low-confidence output of the model and add these data samples to the training phase to fine-tune the model. In our extensive experiments, the framework increased precision with a human workload of no more than 20%. Human–machine learning can also detect complex situations and take measures to prevent the model’s performance from deteriorating, which makes it more flexible and efficient.

Our framework is formalized as a five-tuple whose components are the human computing unit, the machine computing unit, the computing relationship between the relevant human and machine computing units, the mode of collaboration between humans and machines, and the performance of human–machine learning execution. A generic computing unit, whether human or machine, has a set of capabilities, each described by a capability type and a capability level. The capability type defines the kind of function that the computing unit has or is endowed with, and the capability level expresses how well it performs that function. There are two types of capability level: a Boolean level (i.e., the unit has or does not have a specific capability) and a continuous level, for example in (0, 1], which is a floating-point value representing the quality of the computing unit. These values can be calculated on the basis of a qualification test or a statistical measurement, such as those used on Amazon Mechanical Turk.
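As an illustration of the capability model described above, the following minimal sketch stores Boolean and continuous capability levels for a computing unit. The class name `ComputingUnit`, its methods, and the example capability types are hypothetical and are not part of the framework's published notation.

```python
from dataclasses import dataclass, field
from typing import Dict, Union

# A capability level is either Boolean (has / does not have) or a float in (0, 1].
CapabilityLevel = Union[bool, float]


@dataclass
class ComputingUnit:
    """Hypothetical container for a human or machine computing unit."""
    name: str
    kind: str  # "human" or "machine"
    capabilities: Dict[str, CapabilityLevel] = field(default_factory=dict)

    def add_capability(self, cap_type: str, level: CapabilityLevel) -> None:
        # Check bool first, because bool is a subclass of int in Python.
        if not isinstance(level, bool) and not (0.0 < level <= 1.0):
            raise ValueError("continuous capability level must be in (0, 1]")
        self.capabilities[cap_type] = level

    def has_capability(self, cap_type: str) -> bool:
        level = self.capabilities.get(cap_type, False)
        # Boolean levels answer directly; any stored continuous level counts as present.
        return level if isinstance(level, bool) else True

    def quality(self, cap_type: str) -> float:
        level = self.capabilities.get(cap_type, False)
        if isinstance(level, bool):
            return 1.0 if level else 0.0
        return float(level)


expert = ComputingUnit("expert_1", "human")
expert.add_capability("image_labeling", 0.92)  # e.g., from a qualification test
expert.add_capability("on_call", True)         # Boolean capability
```

Keeping both level types in one mapping lets a scheduler filter on Boolean capabilities and rank on continuous ones without separate code paths.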

We designed the detailed information of the framework as follows:

The human computing unit can be a single expert who is familiar with a specific field and has extensive experience, or a group of workers or experts. Abilities differ from person to person.

The machine computing unit is similar to the human computing unit in that each machine has its own performance parameters.

The computing relationship describes how the relevant human computing unit and machine computing unit are scheduled relative to each other. If a human computing unit and a machine computing unit work in parallel mode, the relationship takes the value “0”; in this mode, human experts can observe the intermediate result while an intelligent machine performs the task. The value “1” represents serial mode, which is more common: the machine completes the computing task, and the human then takes measures on the low-confidence output. Formally, the calculation relationship takes the value 0 for parallel mode and 1 for serial mode.

stands for the collaboration mode of human and machine computing units. There are three types of cooperation. We use

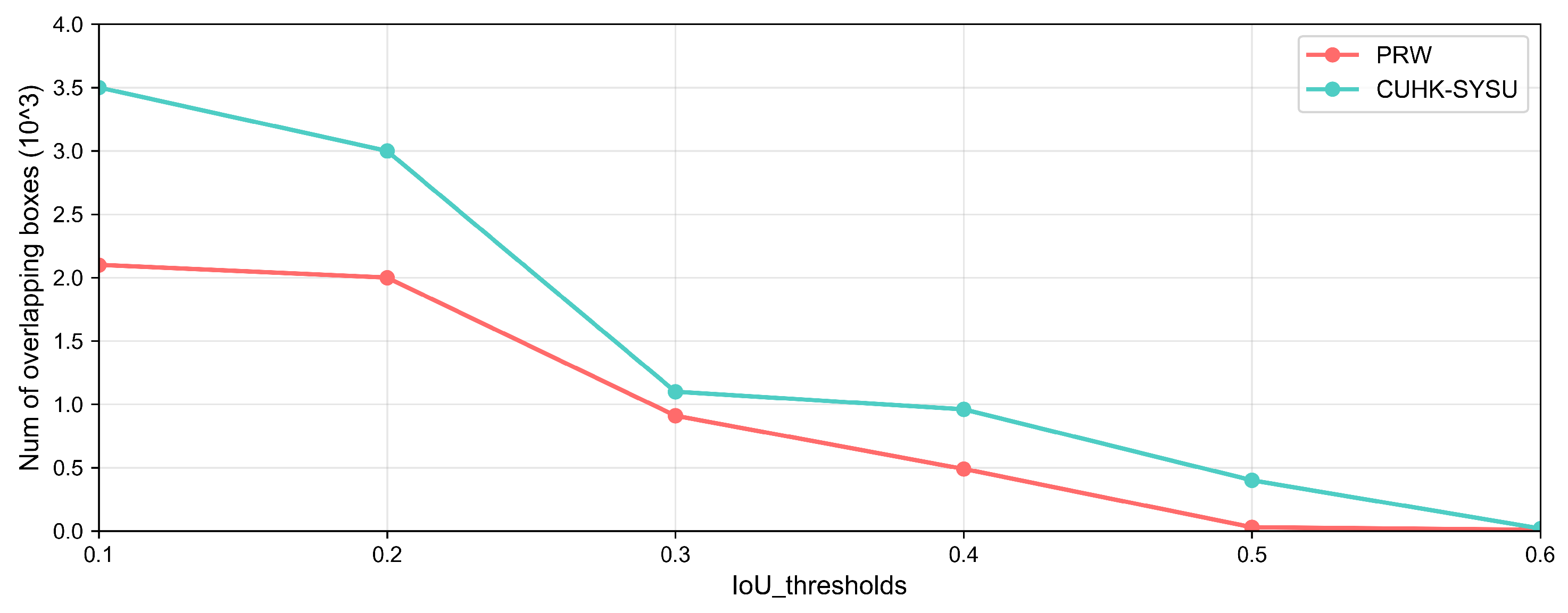

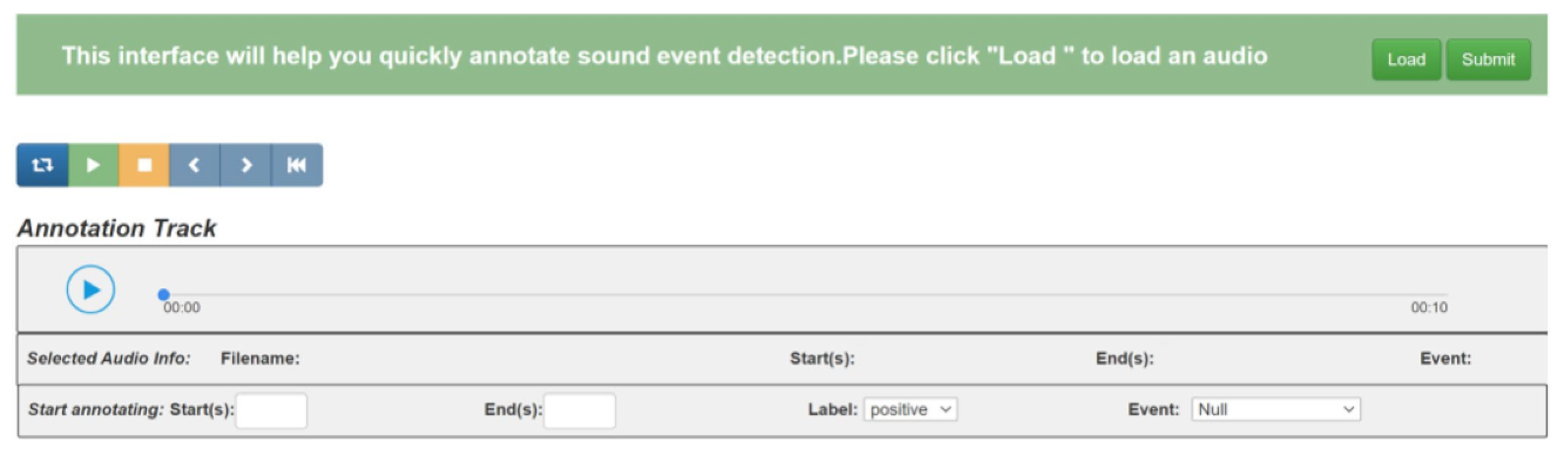

to represent the different modes. “1” represents a human computing unit that interacts with a machine computing unit. In this mode, an interactive interface is designed, in which human experts can observe the intermediate output and perform some operations, such as “mark”, “zoom in”, and “zoom out”. “2” represents humans giving feedback on the machine computation output; humans can then learn new knowledge from the machine and then give some advice to promote model construction. For example, in person re-identification tasks, humans find more overlap in some crowded frames and set the IoU to a smaller number, further reducing occlusions. “3” represents an augmented mode in which humans and machines can work collaboratively to enhance performance improvement; especially, human experience can augment the new method to address the current complex situation that only the machine can solve. For instance, in the sound event detection task, several sounds are mixed in a short slot; due to the different frequencies of each sound, the model can be designed to use several frequencies to discriminate coarse-grained classes.
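The relationship and collaboration-mode codes can be captured as enumerations. The sketch below is only an illustration: the class names, the field names of `HMLConfig`, and the example unit identifiers are assumptions, while the numeric values (0/1 and 1/2/3) follow the text.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Optional, Tuple


class Relationship(IntEnum):
    PARALLEL = 0  # human observes intermediate results while the machine runs
    SERIAL = 1    # machine finishes first, human handles low-confidence output


class CollaborationMode(IntEnum):
    INTERACTIVE = 1  # human operates on intermediate output (mark, zoom in, zoom out)
    FEEDBACK = 2     # human gives feedback on machine output
    AUGMENTED = 3    # human experience augments the method itself


@dataclass(frozen=True)
class HMLConfig:
    """Hypothetical record mirroring the five components of the framework."""
    human_units: Tuple[str, ...]
    machine_units: Tuple[str, ...]
    relationship: Relationship
    mode: CollaborationMode
    performance: Optional[float] = None  # filled in after execution


config = HMLConfig(
    human_units=("expert_1",),
    machine_units=("gpu_server",),
    relationship=Relationship.SERIAL,
    mode=CollaborationMode.FEEDBACK,
)
```

Using `IntEnum` keeps the numeric codes from the text usable directly while giving each code a readable name.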

The performance of the human–machine learning execution is described by three vital metrics: the accuracy of the final task, the human involvement in a given task, and a special metric, the ratio of human workload to task accuracy, which captures how much the final precision improves as the amount of human involvement increases.
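A minimal sketch of how these three metrics could be computed from counts is given below. The exact definitions are assumptions: accuracy is taken as the fraction of correct outputs, human involvement as the fraction of samples handled by humans, the ratio as a simple quotient, and `marginal_gain` as the accuracy gained per unit of additional involvement. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Performance:
    accuracy: float           # fraction of correct final outputs
    human_involvement: float  # fraction of samples handled by humans

    @property
    def workload_accuracy_ratio(self) -> float:
        # Assumed definition: human workload divided by task accuracy.
        if self.accuracy <= 0:
            raise ValueError("accuracy must be positive")
        return self.human_involvement / self.accuracy


def measure(n_total: int, n_correct: int, n_human: int) -> Performance:
    """Build a Performance record from raw counts."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    return Performance(n_correct / n_total, n_human / n_total)


def marginal_gain(before: Performance, after: Performance) -> float:
    """Accuracy gained per unit of additional human involvement."""
    delta = after.human_involvement - before.human_involvement
    if delta <= 0:
        raise ValueError("human involvement must increase")
    return (after.accuracy - before.accuracy) / delta
```

For example, `marginal_gain(measure(1000, 900, 100), measure(1000, 950, 200))` reports how much accuracy the extra 10% of human effort bought.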

To avoid ambiguity, we explicitly defined the two core metrics of the cost/benefit analysis, aligning them with standard practices in streaming ML research:

Human effort: quantified as the total labeling time (in person-hours) required to prepare training data for each baseline method and our proposed method. For consistency, we used a team of five annotators (with 2+ years of experience in video/image/audio labeling) and measured the time per sample;

Accuracy: task-specific metrics to reflect real-world utility:

(1) Video anomaly detection: F1-score (area under the curve, AUC) for the location of anomaly events.

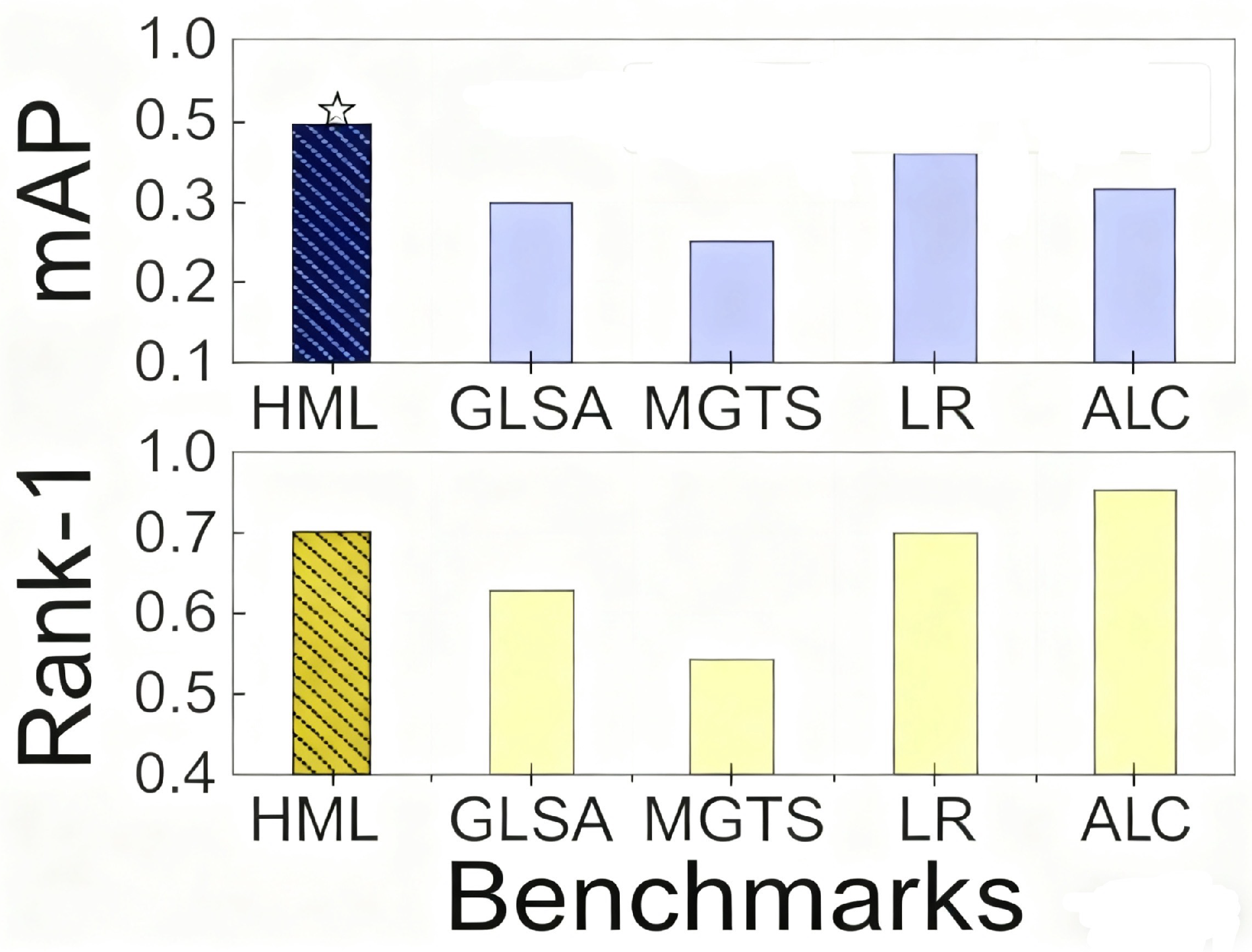

(2) Person re-identification: mean Average Precision (mAP) across five camera views.

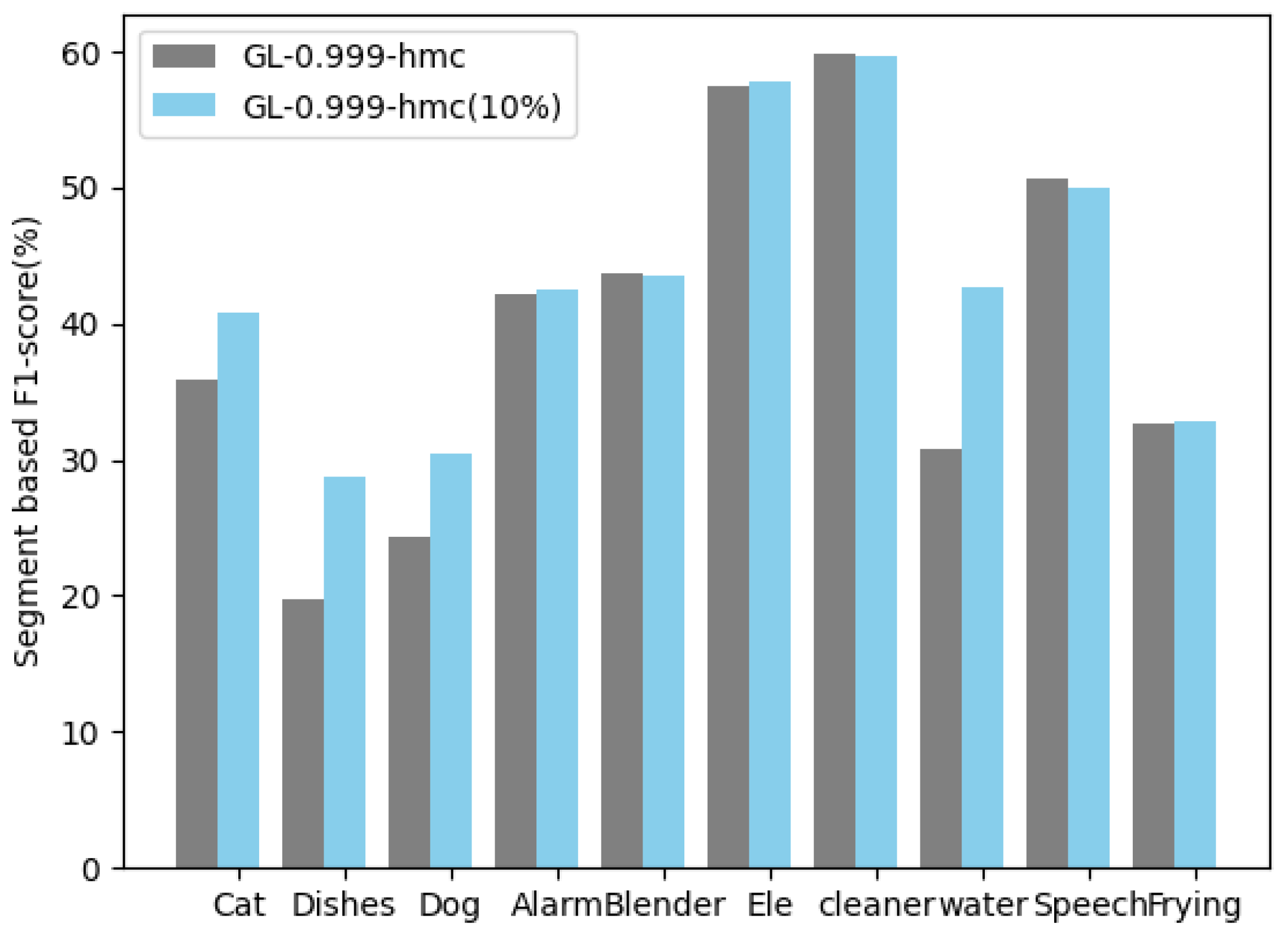

(3) Sound event detection: event-based F1-score (accounting for both category accuracy and temporal alignment of detected events).

For a specified task, we can represent the execution performance

as follows.

In the initialization part, the basic task requirements are established, such as task type (classification, identification, generation, etc.), desired accuracy, and expected computation time. After obtaining this essential information, the human can analyze the computational complexity of the task. In most cases, the collected datasets include samples that are more or less noisy, and humans and machines can discard or refine them. The preprocessed data can then be computed in the subsequent steps.

In the computing part, which is a vital section of our framework, machines run a specified algorithm on the preprocessed data using a server with a GPU or a common PC. Humans with sufficient knowledge or experience can observe the intermediate output and provide feedback to speed up model convergence. For example, in the identification task, humans can select misclassified samples and return them to the training stage as useful samples to train a more robust model for testing.

In the evaluation part, an expected threshold is set (according to the accuracy of a published advanced algorithm). When the accuracy is larger than the threshold, the result is output directly. For low-confidence output, the domain expert gives a confirmation or correction to enhance the final precision. We focus on the number of selected samples and the ratio of human workload to task performance as the main evaluation indices.

Our proposed framework contains three parts, as mentioned above; they are not isolated but run iteratively in a loop. In the training phase, some special samples can be obtained for better model construction based on expert feedback. In the testing phase, giving the final output is not a one-time process; instead, a proportion of the low-confidence output data should be collected as meaningful input samples for fine-tuning. Human and machine computing units work cooperatively in a common space in a specific collaborative style.
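The evaluation step and the collection of low-confidence samples can be sketched as a simple routing function. This is an illustrative sketch, not the framework's implementation: the function name, the tuple layout of a prediction, and the `expert_review` callback are assumptions.

```python
from typing import Callable, List, Tuple

Prediction = Tuple[str, str, float]  # (sample_id, predicted_label, confidence)


def evaluate_batch(
    predictions: List[Prediction],
    threshold: float,
    expert_review: Callable[[str, str], str],
) -> Tuple[List[Tuple[str, str]], List[Tuple[str, str]], float]:
    """Output confident predictions directly; send the rest to an expert.

    Returns the final outputs, the expert-checked hard samples to reuse
    for fine-tuning, and the fraction of samples the expert handled.
    """
    final_outputs: List[Tuple[str, str]] = []
    hard_samples: List[Tuple[str, str]] = []
    for sample_id, label, confidence in predictions:
        if confidence >= threshold:
            final_outputs.append((sample_id, label))
        else:
            # The expert confirms or corrects the machine's label.
            checked = expert_review(sample_id, label)
            final_outputs.append((sample_id, checked))
            hard_samples.append((sample_id, checked))
    workload = len(hard_samples) / len(predictions) if predictions else 0.0
    return final_outputs, hard_samples, workload
```

In a loop, the returned hard samples would be appended to the training set before the next fine-tuning round, and the workload fraction can be compared against the human budget.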

3.3. Rules for HMLSDS

In this section, we design basic rules for the framework to ensure that it works as smoothly as possible, mainly covering the task allocation mechanism and conflict resolution between human and machine judgements. Furthermore, model adjustment based on human feedback is a vital issue to be addressed. The workflow of the framework runtime is shown in Figure 3. The runtime starts when a new task arrives and the task requester sends messages to the task manager, which is responsible for constructing a queue of tasks to be processed. The prepared request is then delivered to the resource coordinator, which is responsible for assembling a set of computing units. Potential computing units are selected by a computing unit manager; all computing units are virtualized in a computing unit pool from which the manager chooses. In a similar way, runtime environments are deployed for particular tasks. The monitoring framework reports the current state of the process through an interactive interface. Finally, the analysis framework displays critical performance metrics. With a clear operational process in place, the task can be executed effectively. Depending on the application (and the research group), different abstractions and solutions will be used [37].
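The runtime workflow can be pictured with a small sketch in which a task manager queues requests, a computing unit manager selects candidates from a pool, and a resource coordinator assembles the units for a task. The class names follow the roles in the text, but every method, field, and the skill-matching rule are assumptions made for illustration.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, FrozenSet, List, Optional


@dataclass(frozen=True)
class Unit:
    name: str
    kind: str  # "human" or "machine"
    skills: FrozenSet[str]


@dataclass(frozen=True)
class Task:
    task_id: str
    required_skills: FrozenSet[str]


class TaskManager:
    """Queues incoming task requests in arrival order."""

    def __init__(self) -> None:
        self._queue: Deque[Task] = deque()

    def submit(self, task: Task) -> None:
        self._queue.append(task)

    def next_task(self) -> Optional[Task]:
        return self._queue.popleft() if self._queue else None


class ComputingUnitManager:
    """Selects candidate units from the virtualized pool."""

    def __init__(self, pool: List[Unit]) -> None:
        self._pool = list(pool)

    def select(self, required: FrozenSet[str]) -> List[Unit]:
        # Assumed rule: a unit qualifies if it covers at least one required skill.
        return [u for u in self._pool if u.skills & required]


class ResourceCoordinator:
    """Assembles the set of units that together cover a task."""

    def __init__(self, unit_manager: ComputingUnitManager) -> None:
        self._unit_manager = unit_manager

    def assemble(self, task: Task) -> List[Unit]:
        units = self._unit_manager.select(task.required_skills)
        covered = frozenset().union(*(u.skills for u in units))
        if not task.required_skills <= covered:
            raise LookupError(f"no units cover all skills for {task.task_id}")
        return units
```

Monitoring and analysis components are omitted here; they would observe the same task and unit records.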

Mathematical modeling of framework

1. As the core mathematical formulation of our HMLSDS framework, the dynamic feature alignment module with weakly supervised learning is designed to address the “non-stationary” nature of streaming data (i.e., concept drift, where the data distribution shifts over time). We formalized this problem and the framework’s solution with a clear mathematical notation as follows:

(1) Problem definition: modeling streaming data distribution shifts. We define the streaming data at time step $t$ as a sequence $\mathcal{D}_t = \{(x_i^t, y_i^t)\}_{i=1}^{N_t}$, where $x_i^t \in \mathbb{R}^D$ is the $i$-th input sample (e.g., video frame feature, audio spectrogram feature) at time $t$ with dimension $D$; $y_i^t$ is the weakly supervised label of $x_i^t$ (e.g., “anomaly present” for video, “target person present” for person re-identification), distinct from full supervised labels (which require fine-grained localization/timestamps); and $N_t$ is the number of samples arriving at time $t$ (it varies for streaming data, e.g., 30 samples/second for 30 fps video). The core challenge of concept drift is modeled as a time-varying probability distribution, $P_t(x, y) \neq P_{t+1}(x, y)$, where $P_t(x, y)$ is the joint distribution of input $x$ and label $y$ at time $t$. Traditional static models (e.g., supervised ViT-B) assume $P_t(x, y) = P_{t+1}(x, y)$, leading to performance degradation when drift occurs.

(2) Framework objective function: balancing adaptation and stability. To address drift while avoiding “catastrophic forgetting” (losing knowledge of past data), our framework optimizes a hybrid objective function that combines the following: a current-time supervision loss (to adapt to the latest data distribution); a past-feature alignment loss (to retain consistency with historical features); and a weak-label regularization term (to leverage sparse weak labels efficiently).

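The full objective at time $t$ is the following:

$$\mathcal{L}_t(\theta_t) = \lambda_1 \mathcal{L}_{\text{sup}} + \lambda_2 \mathcal{L}_{\text{align}} + \lambda_3 \mathcal{L}_{\text{reg}}$$

where $\theta_t$ denotes the model parameters at time $t$; $f_{\theta_t}(\cdot)$ is the model prediction function (e.g., feature extractor + task-specific classifier); and $\lambda_1, \lambda_2, \lambda_3$ are hyperparameters (set via cross-validation) that balance the three loss terms. The definitions of each loss component are provided in the following, with derivations linking them to drift mitigation.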

2. We created a detailed derivation of key loss components. To avoid “black-box” modeling, we derived each loss term with theoretical justification for its role in streaming tasks:

(1) Supervision loss ($\mathcal{L}_{\text{sup}}$): adapting to current data. For weakly supervised labels (e.g., binary “event present/absent”), we use a weighted cross-entropy loss to account for label uncertainty (weak labels are less precise than full labels). For a sample $x_i^t$ with a weak label $y_i^t$,

$$\mathcal{L}_{\text{sup}} = -\,w_i^t \left[ y_i^t \log f_{\theta_t}(x_i^t) + (1 - y_i^t) \log\left(1 - f_{\theta_t}(x_i^t)\right) \right]$$

where $w_i^t$ is a confidence weight for the weak label; higher weights are assigned to labels verified by 2+ annotators (reducing noise from ambiguous weak labels). Theoretical rationale: This loss ensures that the model learns the latest data distribution $P_t(x, y)$, addressing “sudden drift” (e.g., a new type of anomaly in video streams).

(2) Feature alignment loss ($\mathcal{L}_{\text{align}}$): mitigating catastrophic forgetting. To retain knowledge of past data, we align the feature space of the current model with the feature space of the previous time step ($t-1$). We define $F_{t-1}$ (historical feature set) and compute the Mahalanobis distance between the current and historical feature distributions (to measure the severity of the drift).

The alignment loss is shown below:

$$\mathcal{L}_{\text{align}} = \frac{1}{|F_t|} \sum_{f \in F_t} (f - \mu_{t-1})^{\top} \Sigma_{t-1}^{-1} (f - \mu_{t-1})$$

where $F_t$ is the current feature set, and $\mu_{t-1}$ and $\Sigma_{t-1}$ are the mean vector and covariance matrix of $F_{t-1}$ (precomputed and stored to avoid recomputing historical data). Theoretical rationale: The Mahalanobis distance accounts for correlations between feature dimensions (unlike the Euclidean distance), making it more robust to minor distribution shifts. Minimizing this loss ensures that the current model’s features remain consistent with historical features, preventing forgetting.

(3) Regularization loss ($\mathcal{L}_{\text{reg}}$): stabilizing weakly supervised learning. Since weak labels lack fine-grained information (e.g., no anomaly bounding boxes), we add an L2 regularization term on the model parameters to avoid overfitting to noisy weak labels: $\mathcal{L}_{\text{reg}} = \lVert \theta_t \rVert_2^2$. Theoretical rationale: This term constrains parameter updates, ensuring that the model generalizes to unseen streaming samples rather than memorizing sparse weak labels.
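To make the three loss terms concrete, the following dependency-free sketch evaluates each of them on plain Python lists. It rests on stated assumptions rather than on the authors' code: the supervision loss is taken as a binary weighted cross-entropy averaged over the batch, the alignment loss as the mean squared Mahalanobis distance of current features to stored historical statistics (with the inverse covariance precomputed), and the regularizer as a squared L2 norm. All function and parameter names are hypothetical.

```python
import math
from typing import List, Sequence


def weighted_bce(probs: Sequence[float], labels: Sequence[int],
                 weights: Sequence[float]) -> float:
    """Confidence-weighted binary cross-entropy, averaged over the batch."""
    eps = 1e-12  # keeps log() finite for probabilities of exactly 0 or 1
    total = 0.0
    for p, y, w in zip(probs, labels, weights):
        p = min(max(p, eps), 1.0 - eps)
        total += -w * (y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(probs)


def mahalanobis_sq(x: Sequence[float], mean: Sequence[float],
                   inv_cov: Sequence[Sequence[float]]) -> float:
    """Squared Mahalanobis distance of x from a stored mean and inverse covariance."""
    d = [a - b for a, b in zip(x, mean)]
    n = len(d)
    return sum(d[i] * inv_cov[i][j] * d[j] for i in range(n) for j in range(n))


def alignment_loss(features: List[Sequence[float]], hist_mean: Sequence[float],
                   hist_inv_cov: Sequence[Sequence[float]]) -> float:
    """Mean squared Mahalanobis distance of current features to historical statistics."""
    return sum(mahalanobis_sq(f, hist_mean, hist_inv_cov) for f in features) / len(features)


def l2_reg(params: Sequence[float]) -> float:
    """Squared L2 norm of the model parameters."""
    return sum(p * p for p in params)


def total_loss(l_sup: float, l_align: float, l_reg: float,
               lam_sup: float, lam_align: float, lam_reg: float) -> float:
    """Weighted sum of the three terms; the weights are tunable hyperparameters."""
    return lam_sup * l_sup + lam_align * l_align + lam_reg * l_reg
```

In practice these terms would be computed with an automatic differentiation library so that the parameters can be updated by gradient descent; the list-based version only shows what each term measures.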

3. Finally, we bridge theory and practice by linking the mathematical model with our experimental findings. For video anomaly detection, the feature alignment loss ($\mathcal{L}_{\text{align}}$) reduced the degradation of the F1-score from 12.3% (without alignment) to 3.1% after 1 h of streaming (under concept drift), matching the theoretical expectation that alignment mitigates forgetting. For weakly supervised labeling, the weighted supervision loss ($\mathcal{L}_{\text{sup}}$) improved label efficiency: using 10% weak labels achieved 96.7% of the accuracy of 98.8% of the full labels, which is consistent with the loss’s design to leverage sparse labels. By supplementing these explicit formulas, derivations, and theoretical connections, our goal is to make the mathematical foundation of our framework fully reproducible.

Task allocation mechanism

Building on our previous work [38], we divided the streaming data task into several functional subtasks; then, according to their functional coupling relationship, we expressed them as a finite set. The task has a fixed total number of subtasks; the subscript h designates a subtask assigned to a human worker, while a separate subscript denotes subtasks assigned to machines. In addition, each subtask carries a confidence or accuracy level, and the estimated accuracy rates of humans and machines are tracked separately. We use an indicator to assess whether the confidence of a subtask exceeds a configurable threshold: if it does, the indicator takes the value 1; otherwise, it is set to 0.
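The threshold indicator can be sketched in a few lines. The allocation rule shown here (give a subtask to the machine when the machine's estimated accuracy exceeds the threshold, otherwise to a human) is an assumption added for illustration, as are the function names and the dictionary layout.

```python
from typing import Dict


def indicator(confidence: float, threshold: float) -> int:
    """Return 1 if the confidence exceeds the threshold, otherwise 0."""
    return 1 if confidence > threshold else 0


def allocate(subtasks: Dict[str, Dict[str, float]], threshold: float) -> Dict[str, str]:
    """Assign each subtask to 'machine' or 'human'.

    `subtasks` maps a subtask id to estimated accuracies, e.g.
    {"human": 0.95, "machine": 0.70}.
    """
    assignment: Dict[str, str] = {}
    for subtask_id, accuracy in subtasks.items():
        # Assumed rule: trust the machine only above the threshold.
        if indicator(accuracy["machine"], threshold):
            assignment[subtask_id] = "machine"
        else:
            assignment[subtask_id] = "human"
    return assignment
```

Because the threshold is configurable, raising it shifts more subtasks to human workers, which trades workload for reliability.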

When both humans and machines have no prior experience or clear decision boundaries, the framework may adopt shared decision making, in which humans and machines jointly analyze the data and propose solutions. They may run classical methods for the corresponding tasks on the machine and then observe the accuracy of the operation, which can be dynamically adjusted by resorting to Bayesian theory, the Markov decision process, the tree-based self-adaptive approach [39], and other methods for handling uncertainty. Human–machine computing resources are allocated dynamically according to the performance and quality of task processing. For example, if the trend of task processing is not promising, we consider de-noising and purifying the data to improve its quality and switching to a more suitable model or algorithm. If the processing performance progresses in the desired direction, more human experts can be assigned to provide feedback so as to improve the final accuracy and reduce the total re-training cost.

Because human abilities are inherently imprecise, precisely quantifying the required human skill can be a burden on the system. We used a fuzzy rule-based quality estimation strategy to guarantee the initial assignment of a specific task. Dynamic user profiling evaluates and continuously updates profiles based on task performance (accuracy, reliability, speed). Then, we assessed the quality of user feedback and historical data upon task completion. Although a basic user profile is collected, it is not enough to ensure that a worker will perform well until the task is completed. The task allocation mechanism is therefore important for assigning different human workers to different subtasks at different levels. For instance, novices handle simpler tasks (labeling marks in an annotation task), while experts handle complex or high-risk tasks (medical diagnosis or content moderation tasks).

To ensure that task delegation remains fair and objective among users with differing skill levels, we used a simple strategy: each human has their own capability and user profile. We divided human ability into three levels: poor, good, and excellent. For example, in crowd-sourced labeling tasks for machine learning, tasks are dynamically assigned based on user accuracy rates and confidence levels. Novice workers label simpler images, while experts review ambiguous ones. A review mechanism ensures fairness, and the framework monitors task performance and takes measures to improve the overall task over time. Periodic monitoring and continuous improvement will be explored in future studies with regard to the following aspects:

(1) Performance analytics: continuously monitor task outcomes to ensure that assignments align with user capabilities.

(2) Feedback integration: collect and analyze user feedback to refine assignment algorithms and address fairness concerns.

(3) Bias adjustment: regularly audit task allocation patterns to identify and mitigate systemic biases.
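One way to picture fuzzy quality estimation with dynamic profiling is sketched below. The membership breakpoints (0.3, 0.6, 0.9), the exponential moving average used to update a profile score, and all function names are assumptions chosen for illustration; only the three ability levels (poor, good, excellent) come from the text.

```python
from typing import Dict


def memberships(score: float) -> Dict[str, float]:
    """Fuzzy membership of a profile score in [0, 1] to each ability level."""
    poor = min(1.0, max(0.0, (0.6 - score) / 0.3))       # full below 0.3, zero above 0.6
    good = max(0.0, 1.0 - abs(score - 0.6) / 0.3)         # triangle peaking at 0.6
    excellent = min(1.0, max(0.0, (score - 0.6) / 0.3))  # zero below 0.6, full above 0.9
    return {"poor": poor, "good": good, "excellent": excellent}


def tier(score: float) -> str:
    """Pick the ability level with the highest membership."""
    m = memberships(score)
    return max(m, key=m.get)


def update_score(old_score: float, task_accuracy: float, rate: float = 0.2) -> float:
    """Blend the latest task accuracy into the stored profile score."""
    return (1.0 - rate) * old_score + rate * task_accuracy


def eligible(worker_tier: str, task_difficulty: str) -> bool:
    """Assumed routing rule: harder tasks require a higher ability level."""
    rank = {"poor": 0, "good": 1, "excellent": 2}
    required = {"simple": 0, "moderate": 1, "complex": 2}
    return rank[worker_tier] >= required[task_difficulty]
```

A worker whose score rises through repeated `update_score` calls moves between levels gradually, which avoids reassigning tasks on the basis of a single good or bad result.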

Conflict resolution in decision making

We have established several schemes to address this issue.

(1) Machines provide a confidence score for their prediction, derived from a designed model or algorithm, together with the result; the expert then reviews the low-confidence output using their expertise and cognitive ability. Consider a high-stakes task, such as health diagnosis or care allocation, in which a machine recommends a diagnosis based on medical imaging but the doctor disagrees. The system shows the key characteristics that influenced the machine’s decision, allowing the doctor to verify or correct the outcome.

(2) Furthermore, if an expert cannot make a final decision, more experts may need to perform a comprehensive analysis or vote to obtain the final solution.

(3) The system combines human and machine predictions using a weighted decision model, whose weights are assigned based on the previous performance, task complexity and context. If the machine has historically outperformed in similar scenarios, its prediction might be weighted more heavily, whereas in ambiguous cases, human judgment might be given higher priority.

(4) A collaborative decision-making scheme involves performing and then iteratively refining the predictions by humans and machines. Combining human intuition and machine precision, human experts select hard samples for further training, and the machine improves its prediction accuracy gradually.
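The voting and weighted-decision schemes above can be illustrated with a short sketch. The linear weighting, the way the machine weight is derived from historical accuracy, and the function names are assumptions; a real deployment would also factor in task complexity and context.

```python
from collections import Counter
from typing import Dict, List


def machine_weight_from_history(machine_acc: float, human_acc: float) -> float:
    """Assumed rule: weight the machine by its share of historical accuracy."""
    total = machine_acc + human_acc
    if total <= 0:
        raise ValueError("accuracies must sum to a positive value")
    return machine_acc / total


def fuse(machine_probs: Dict[str, float], human_probs: Dict[str, float],
         machine_weight: float) -> str:
    """Combine per-label scores linearly and return the best label."""
    if not 0.0 <= machine_weight <= 1.0:
        raise ValueError("machine_weight must be in [0, 1]")
    labels = sorted(set(machine_probs) | set(human_probs))
    scores = {
        label: machine_weight * machine_probs.get(label, 0.0)
        + (1.0 - machine_weight) * human_probs.get(label, 0.0)
        for label in labels
    }
    return max(labels, key=lambda label: scores[label])


def majority_vote(expert_labels: List[str]) -> str:
    """Resolve disagreement among several experts by simple majority."""
    return Counter(expert_labels).most_common(1)[0][0]
```

With equal weights, a confident human judgment can override a moderately confident machine prediction; raising `machine_weight` reverses that when the machine has the stronger track record.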

To ensure that the final decision optimally balances precision and interpretability, our framework is designed so that the final precision is not lower than that of the classical or newly proposed approach; through the interactive interface, the expert can confirm or correct low-confidence output from the machine algorithm. For example, in crowded video scenes in which a target person must be found, the machine model is prone to false alarms and mistakes, whereas a human expert may find it easier to select the right person. We set metrics such as accuracy, cost, and total budget so that the human–machine cooperative learning framework works within defined constraints and, after computation and iterative evaluation, obtains a relatively efficient and optimal scheme that balances accuracy and interpretability.

Model adjusting based on human feedback

The framework partly leverages active learning in the training stage, since the machine model inevitably makes some errors, especially on specific hard samples. An expert can collect these hard samples and feed them in as training samples in the subsequent training process. Because these samples are representative, training performance improves over further epochs. Human experts can work in three collaboration modes: interactive mode, feedback mode, and augmented mode. Their feedback takes the following forms:

(1) Explicit feedback: Humans provide direct feedback on machine output, such as labels, corrections, or confidence scores for decisions.

(2) Implicit feedback: The framework observes user interactions and decision-making behaviors (e.g., selection, overrides, or usage patterns) to infer feedback.

(3) Structured annotations: Human feedback is collected using tools or interfaces that guide annotators to provide unbiased, high-quality input.

Feedback is used to fine-tune the model weights, ensuring alignment with the desired outcomes without overfitting to specific feedback instances.