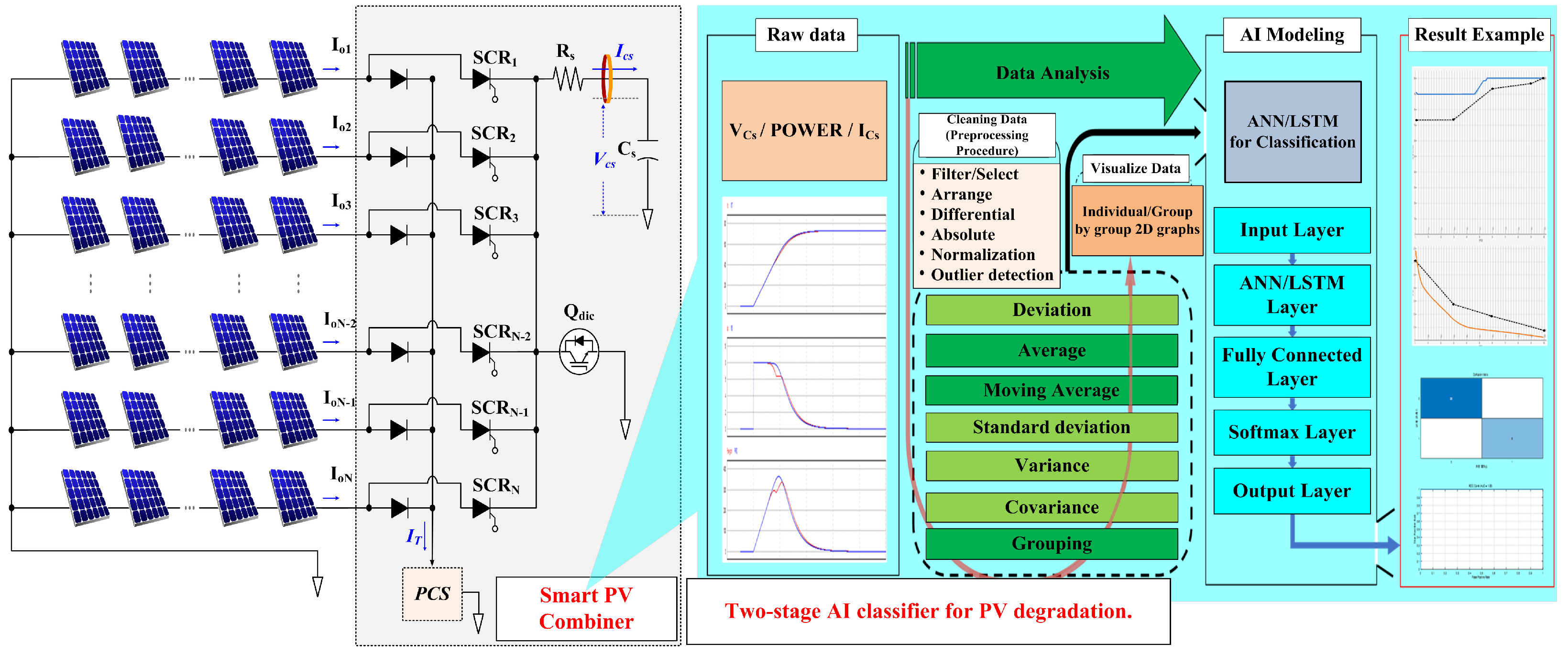

In this study, the artificial intelligence classification models were trained and evaluated in MATLAB R2024a on Windows 11 with an NVIDIA RTX 4090 graphics card. The solar module is the MSX-60, detailed in Table 2, which has a maximum output power of 60 W, an open-circuit voltage ($V_{oc}$) of 21.1 V, and a short-circuit current ($I_{sc}$) of 3.8 A. The dataset was constructed from raw simulation data, and each sample was labeled as either normal or abnormal for the analysis. Based on this dataset, Section 5.1 presents the experimental results of the first stage of the proposed degradation detection framework, which classifies normal and abnormal conditions, and Section 5.2 analyzes the performance of the final stage, which identifies the detailed degradation states of photovoltaic modules.
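As a quick, illustrative consistency check on the Table 2 ratings (not part of the MATLAB pipeline used in this study), the module's fill factor $FF = P_{max} / (V_{oc} I_{sc})$ follows directly from the three quoted values:

```python
# MSX-60 ratings quoted above (Table 2)
P_MAX_W = 60.0   # maximum output power [W]
V_OC_V = 21.1    # open-circuit voltage [V]
I_SC_A = 3.8     # short-circuit current [A]

def fill_factor(p_max: float, v_oc: float, i_sc: float) -> float:
    """Ratio of the maximum power to the ideal V_oc * I_sc rectangle."""
    return p_max / (v_oc * i_sc)

ff = fill_factor(P_MAX_W, V_OC_V, I_SC_A)
print(f"FF = {ff:.3f}")  # FF = 0.748
```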

5.1. Results of Experiments Classifying Normal and Abnormal States

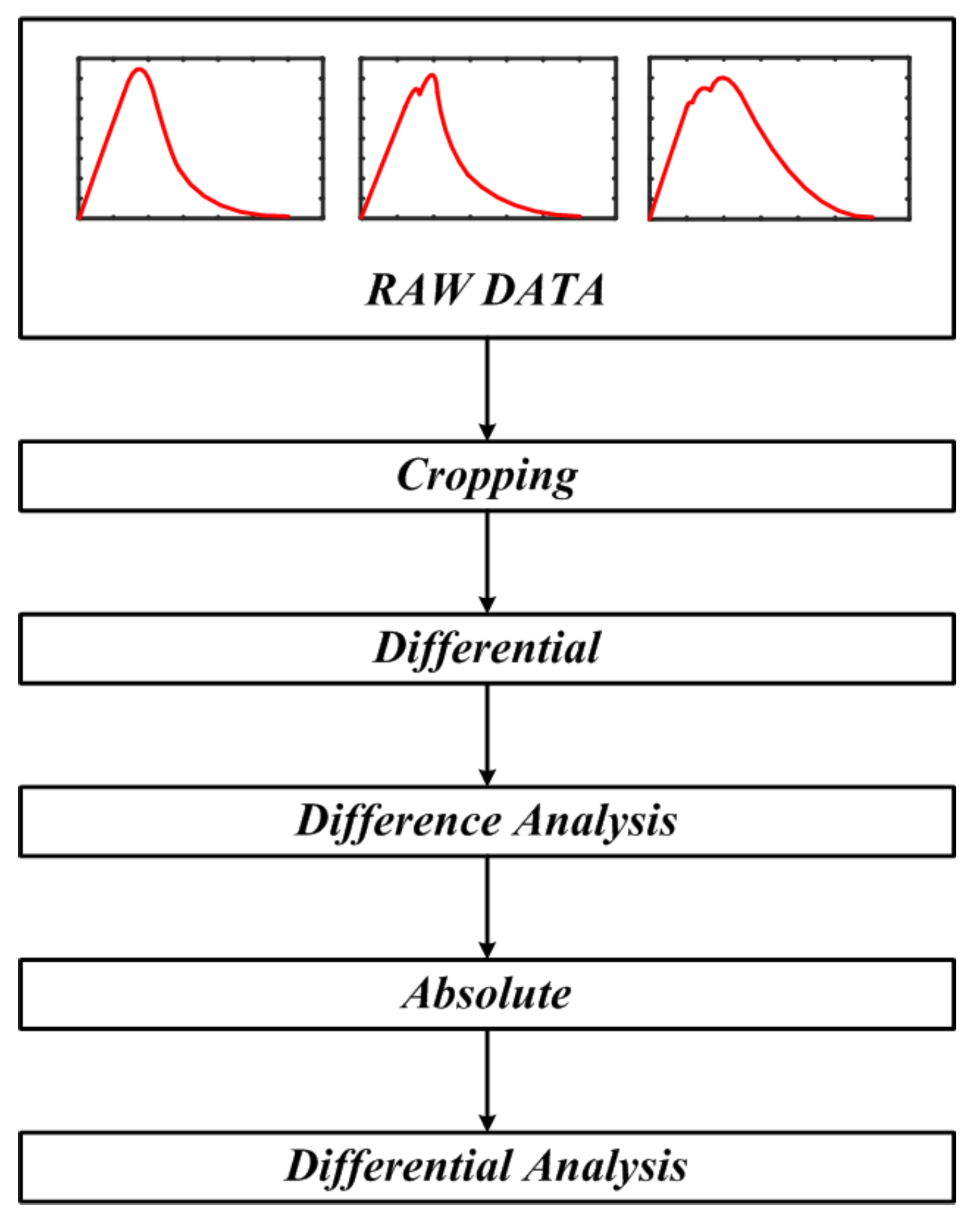

In this study, the entire dataset was divided into a 70% training set and a 30% validation set to train and validate the LSTM model for classifying normal and abnormal states. The comparative analysis covered logistic regression, SVM, random forest, a 1D-CNN, and the proposed shallow LSTM. To ensure a fair comparison, all models were evaluated with the same preprocessing pipeline and the same metrics: accuracy, AUC, confusion matrix, and ROC curve. The logistic regression model was constructed according to Equation (7), and the predicted probability $p$ was computed using the logistic function:

$$
p = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x_1 + \beta_2 x_2 + \beta_3 x_3)}} \tag{7}
$$

where $p$ represents the probability of belonging to class 1; $x_1$, $x_2$, and $x_3$ are the normalized input feature values; $\beta_0$ is the intercept; and $\beta_1$, $\beta_2$, and $\beta_3$ are the regression coefficients for the respective features. For the SVM, we applied z-score normalization to stabilize the margin and coefficient estimation. By contrast, the random forest, being tree-based and largely scale-invariant, was trained on the original (unnormalized) features. As a comparative baseline, we used a lightweight 1D-CNN that ingests a 3-D input tensor and stacks two 1-D convolutional blocks (batch normalization + ReLU), global average pooling, a fully connected layer, and a softmax classifier.
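The preprocessing and Equation (7) can be sketched as follows. This is a minimal illustration under stated assumptions: the three input features are synthetic stand-ins, the coefficients $\beta$ are placeholders rather than fitted values, and z-score statistics are estimated on the training split only (the text states z-score normalization explicitly only for the SVM).

```python
import numpy as np

def zscore_fit(X_train):
    """Per-feature mean and standard deviation, estimated on the training split only."""
    mu = X_train.mean(axis=0)
    sigma = X_train.std(axis=0)
    sigma[sigma == 0] = 1.0  # guard against constant features
    return mu, sigma

def logistic_prob(x_norm, beta0, beta):
    """Equation (7): p = 1 / (1 + exp(-(beta0 + beta1*x1 + beta2*x2 + beta3*x3)))."""
    return 1.0 / (1.0 + np.exp(-(beta0 + x_norm @ beta)))

# Synthetic three-feature data standing in for the real inputs (hypothetical)
rng = np.random.default_rng(0)
X = rng.normal(loc=[10.0, 800.0, 40.0], scale=[2.0, 100.0, 5.0], size=(1000, 3))
X_train, X_val = X[:700], X[700:]               # 70% / 30% split

mu, sigma = zscore_fit(X_train)
Xn_val = (X_val - mu) / sigma                    # reuse training statistics (no leakage)
beta0, beta = -4.6, np.array([0.3, -0.8, 1.2])   # placeholder coefficients, not fitted
p_val = logistic_prob(Xn_val, beta0, beta)
pred = (p_val >= 0.5).astype(int)                # class 1 = abnormal
```

In a real run, $\beta_0, \dots, \beta_3$ would be estimated by maximum likelihood on the normalized training features; reusing the training mean and standard deviation on the validation split keeps the evaluation free of information leakage.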

Figure 13 reports results for four baselines: (a) logistic regression, (b) SVM, (c) random forest, and (d) 1D-CNN, with each panel showing a confusion matrix and a ROC curve with AUC. Quantitatively, logistic regression (AUC ≈ 0.5291) and SVM (AUC ≈ 0.5469) performed comparably, close to chance level, whereas the 1D-CNN produced a lower AUC (≈ 0.4644). Random forest achieved by far the highest AUC (≈ 0.9987), and its confusion matrix also shows a relatively high recall for the abnormal class (Class 1).

As shown in Figure 14, the proposed shallow LSTM has an AUC of approximately 0.54, which is lower than that of random forest but comparable to those of logistic regression and SVM. We attribute this low AUC mainly to the severe class imbalance in the dataset (Normal:Abnormal ≈ 99:1): with so few abnormal samples, the AUC depends heavily on how a handful of minority-class samples are ranked, so it would be inappropriate to judge the model's performance on this single indicator alone. The proposed shallow LSTM can capture temporal features, such as inflection points and waveform distortions in the power curve, which are critical for long-term detection of PV module degradation. Accordingly, the results in Figure 14 should be viewed not as an absolute performance comparison but as evidence that the proposed method can detect abnormal patterns to a meaningful degree even under extreme class imbalance. Random forest achieved a higher AUC, but its memory footprint and inference latency limit real-time deployment. In contrast, the proposed shallow LSTM was selected as the final model for its deployability, its scalability to long, multichannel time-series data, and its amenability to lightweighting, quantization, and hardware acceleration.

Table 3 summarizes the training and test accuracies for each model. All models exhibit comparable accuracy (98–99%), and the small gap between training and test accuracy shows no explicit sign of overfitting.
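Under a 99:1 class ratio, accuracy alone says little about minority-class detection. The sketch below uses a hypothetical validation split of 3000 samples with that ratio and a trivial classifier that always predicts "Normal": it reaches 99% accuracy while its AUC, computed with the Mann–Whitney rank statistic, stays at the chance level of 0.5.

```python
import numpy as np

def roc_auc(y_true, scores):
    """ROC AUC via the Mann-Whitney rank statistic (tied scores get average ranks)."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(scores, kind="mergesort")
    sorted_scores = scores[order]
    ranks = np.empty(len(scores))
    i = 0
    while i < len(scores):
        j = i
        while j + 1 < len(scores) and sorted_scores[j + 1] == sorted_scores[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0  # average 1-based rank of the tie group
        i = j + 1
    n_pos = int(np.sum(y_true == 1))
    n_neg = len(y_true) - n_pos
    return (ranks[y_true == 1].sum() - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg)

# Hypothetical split with the ~99:1 Normal:Abnormal ratio reported above
y = np.array([0] * 2970 + [1] * 30)
always_normal = np.zeros_like(y)                  # predicts "Normal" for every sample
acc = (always_normal == y).mean()
auc = roc_auc(y, always_normal.astype(float))     # constant score -> every sample tied
print(f"accuracy = {acc:.2%}, AUC = {auc:.2f}")   # accuracy = 99.00%, AUC = 0.50
```

This is why the 98–99% accuracies in Table 3 should be read together with the ROC/AUC and confusion-matrix results rather than on their own.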

For the experimental validation, a PV array with uniform characteristics was constructed, and degradation was emulated artificially by partially shading selected modules. The resulting P–V curves showed a decrease in maximum power and additional inflection points; these features were used as the principal basis for degradation assessment. As shown in Figure 15, the dataset is clearly partitioned into three conditions (Normal, Single-String Damage, and Dual-String Damage), each with a distinct power waveform that can be identified by its number of inflection points: 0, 1, and 2, respectively.
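One simple way to turn this observation into a feature is to count the local maxima of a sampled P–V curve beyond the global maximum power point. The sketch below assumes, as a proxy, that each degraded string adds one extra local maximum; the helper `count_extra_peaks`, its thresholds, and the Gaussian-bump toy curves are all hypothetical and are not the measured waveforms of Figure 15.

```python
import numpy as np

def count_extra_peaks(power, min_rel_height=0.05, smooth=5):
    """Count local maxima beyond the global peak in a sampled P-V curve (hypothetical helper).

    A moving average suppresses sample noise; interior samples that exceed their
    left neighbour, are not below their right neighbour, and lie above
    min_rel_height * max(power) are counted as peaks.
    """
    p = np.convolve(np.asarray(power, dtype=float), np.ones(smooth) / smooth, mode="same")
    mid = p[1:-1]
    is_peak = (mid > p[:-2]) & (mid >= p[2:]) & (mid > min_rel_height * p.max())
    return int(is_peak.sum()) - 1 if is_peak.any() else 0

v = np.linspace(0.0, 1.0, 601)  # normalized voltage axis (toy)

def bump(center, width, height):
    """Gaussian bump used only to build toy multi-peak curves."""
    return height * np.exp(-((v - center) / width) ** 2)

curves = {
    "Normal": bump(0.70, 0.15, 1.0),
    "Single-String Damage": bump(0.30, 0.08, 0.6) + bump(0.75, 0.08, 0.9),
    "Dual-String Damage": bump(0.20, 0.06, 0.4) + bump(0.50, 0.06, 0.7) + bump(0.80, 0.06, 0.9),
}
for name, p in curves.items():
    print(name, count_extra_peaks(p))  # prints 0, 1 and 2 extra peaks respectively
```

On measured oscilloscope data, the smoothing window and the relative-height threshold would need tuning to the noise level.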

Oscilloscope-acquired data were exported to CSV and processed in MATLAB to evaluate the four baseline models and the proposed shallow LSTM. As summarized in Table 4, random forest achieved the highest performance (AUC = 1.000; training accuracy = 99.91%; test accuracy = 99.96%). The 1D-CNN was similarly strong (AUC = 0.9993; training/test accuracy = 97.99%/97.94%), and the proposed shallow LSTM also yielded competitive results (AUC = 0.9974; 96.41%/97.07%). By contrast, logistic regression (AUC = 0.8676; 78.79%/78.92%) and SVM (AUC = 0.8702; 77.59%/77.90%) underperformed. Contrary to the initial simulation-based results, validation with real-world measurements confirmed the effectiveness of the proposed LSTM model. The model appears to capture temporal patterns and waveform characteristics (e.g., inflection points and distortions in the power curve), allowing reliable discrimination between normal and abnormal states in noisy field data.

The hyperparameters used for training are listed in Table 5. The model was configured with an input size of 3 and 100 hidden units, and it was trained for 10 epochs with a batch size of 1000. Relative to the 6000-sample sequence length, 100 hidden units yield a compression ratio of about 1:60, preserving salient information while maintaining sufficient capacity. The resulting model size is ≈ 9.6 MB, which supports low-latency inference under resource constraints. In comparative experiments, 50 units risked insufficient capacity, whereas 200 or more units increased overfitting and computational cost; a hidden size of 100 units provided the best trade-off among performance, model complexity, and inference latency. We set a maximum of 100 epochs with early stopping: by halting training before the validation loss began to rise, we limited overfitting while keeping training adequate and runtime efficient. The Adam optimizer was used with a learning rate of 0.001, a well-established setting for Adam that yields stable, non-oscillatory convergence and enables fine-grained weight updates [25].
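The early-stopping rule can be sketched as a patience criterion on the validation loss, roughly analogous to the `ValidationPatience` option of MATLAB's `trainingOptions`. The patience value and the loss trace below are hypothetical; the text does not report the exact stopping criterion.

```python
def early_stopping_epoch(val_losses, patience=3, max_epochs=100):
    """Return (stop_epoch, best_epoch) for a patience-based early-stopping rule.

    Training halts once the validation loss has not improved for `patience`
    consecutive epochs, or when `max_epochs` is reached.
    """
    best_loss = float("inf")
    best_epoch = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch   # new best checkpoint
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch              # no improvement for `patience` epochs
    return min(len(val_losses), max_epochs), best_epoch

# Hypothetical validation-loss trace that bottoms out at epoch 5, then rises
losses = [0.90, 0.62, 0.48, 0.40, 0.35, 0.37, 0.39, 0.42, 0.46]
print(early_stopping_epoch(losses, patience=3))  # (8, 5)
```

Weights from the best epoch, not the last one, would normally be kept as the final model.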

5.2. Results of Degradation Stage Classification Experiment

For training and validating the model that classifies degradation stages, the entire dataset was partitioned into a 70% training set and a 30% test set. The comparison was conducted under the same conditions as the normal/abnormal classification, and all models were evaluated with the same preprocessing pipeline and identical metrics (accuracy, AUC, confusion matrix, and ROC curve). To reduce computational complexity, we applied principal component analysis (PCA) with 50 components as a preprocessing step for the logistic regression model and then computed class probabilities with the logistic function in Equation (8):

$$
p = \frac{1}{1 + e^{-(\beta_0 + \beta_1 z_1 + \beta_2 z_2 + \cdots + \beta_n z_n)}} \tag{8}
$$

where $p$ represents the predicted probability of belonging to the positive class (class 1); $z_1$, $z_2$, …, $z_n$ are the $n$ principal components obtained from PCA ($n = 50$); $\beta_0$ is the intercept; and $\beta_1$, $\beta_2$, …, $\beta_n$ are the regression coefficients for the corresponding principal components.
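A minimal sketch of the PCA-then-logistic pipeline behind Equation (8) follows. It assumes PCA is computed by SVD of the centered training matrix; the array sizes (70 training and 30 test sequences of length 6000) and the coefficients are illustrative placeholders, not the study's data or fitted model.

```python
import numpy as np

def pca_fit(X, n_components):
    """Principal axes of the centered training matrix via SVD."""
    mean = X.mean(axis=0)
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components].T            # shape (n_features, n_components)

def pca_transform(X, mean, components):
    """Project samples onto the principal axes -> scores z_1, ..., z_n."""
    return (X - mean) @ components

def logistic_prob(z, beta0, beta):
    """Equation (8): p = 1 / (1 + exp(-(beta0 + sum_i beta_i * z_i)))."""
    return 1.0 / (1.0 + np.exp(-(beta0 + z @ beta)))

rng = np.random.default_rng(1)
X_train = rng.normal(size=(70, 6000))           # hypothetical training sequences
X_test = rng.normal(size=(30, 6000))            # hypothetical test sequences

mean, comps = pca_fit(X_train, n_components=50)
Z_test = pca_transform(X_test, mean, comps)     # shape (30, 50)
beta0, beta = 0.0, rng.normal(scale=0.1, size=50)  # placeholder coefficients
p = logistic_prob(Z_test, beta0, beta)
```

Fitting the principal axes on the training split only and reusing them for the test split mirrors the normalization practice used for the other baselines.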

We applied z-score normalization to the SVM. By contrast, the random forest, being tree-based and largely scale-invariant, was trained on the original (unnormalized) features. The 1D-CNN baseline employed a lightweight stack of two 1-D convolutional blocks (batch normalization + ReLU), global average pooling, a fully connected layer, and a softmax output.

Figure 16 presents the confusion matrices and ROC/AUC curves for the four baseline models, (a) logistic regression, (b) SVM, (c) random forest, and (d) 1D-CNN, while Figure 17 shows the results for the proposed LSTM model. Logistic regression exhibited limited classification performance (AUC = 0.724; training accuracy = 60.00%; test accuracy = 42.03%); in its confusion matrix, 11 of 25 normal (Class 0) samples were misclassified as degraded (false positives; see Figure 16a). The SVM performed well, attaining an AUC of 1.00 with training and test accuracies of 100.0% and 96.67%, respectively (Figure 16b). The random forest also performed well (AUC = 1.00; training/test accuracy = 97.10%/90.00%), although some samples were misclassified (Figure 16c). The 1D-CNN likewise achieved AUC = 1.00 with training/test accuracies of 100.0%/96.67% (Figure 16d). The proposed LSTM achieved AUC = 1.00 and training/test accuracies of 100.0%/100.0%; its confusion matrix shows that all 27 normal and 3 degraded test samples were classified correctly (Figure 17). Validation performance remained stable throughout training, suggesting that the model learned the degradation characteristics without overfitting. These results are summarized in Table 6, which reports the AUC and the training/test accuracies for all models.

In summary, logistic regression was insufficient for discriminating between normal and degraded states in this study, whereas SVM, random forest, and 1D-CNN achieved near-perfect classification. The proposed shallow LSTM showed the most stable behavior across AUC and accuracy, reaching an AUC of 1.00 and 100.0% accuracy and classifying all test samples correctly.
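Because the test split contains 30 samples (27 normal and 3 degraded), each misclassification shifts test accuracy by about 3.33 percentage points: 96.67% and 90.00% correspond to 29/30 and 27/30 correct. The short calculation below also gives the exact two-sided 95% Clopper–Pearson lower bound for a perfect 30/30 score, which uses the closed form $(\alpha/2)^{1/n}$ that applies when every trial succeeds. It is an illustrative aid to interpreting Table 6, not an analysis reported by the study.

```python
N_TEST = 30  # 27 normal + 3 degraded test samples (Figure 17)

def accuracy_pct(n_correct: int, n: int = N_TEST) -> float:
    """Test accuracy in percent for n_correct hits out of n samples."""
    return 100.0 * n_correct / n

def exact_lower_bound_all_correct(n: int, alpha: float = 0.05) -> float:
    """Two-sided Clopper-Pearson lower bound for n successes in n trials: (alpha/2)**(1/n)."""
    return (alpha / 2.0) ** (1.0 / n)

for k in (30, 29, 27):
    print(f"{k}/{N_TEST} correct -> {accuracy_pct(k):.2f}%")
# 30/30 correct -> 100.00%
# 29/30 correct -> 96.67%
# 27/30 correct -> 90.00%
print(f"95% lower bound for 30/30: {exact_lower_bound_all_correct(N_TEST):.3f}")  # 0.884
```

With a test set this small, the difference between 100.0% and 96.67% amounts to a single sample.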

The key hyperparameters used for training are listed in Table 7. The model was configured with an input size of 6000 and 100 hidden units. As in Section 5.1, the input sequence included only the data essential for training. As with the previous model, the number of hidden units was varied across tests (10, 50, 100, 150, and 200), and the analysis was most stable with 100 hidden units. The model was trained for 100 epochs with a batch size of 1000, using the Adam optimizer with a learning rate of 0.001 to ensure stable training.
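To relate these settings to model size, the learnable parameters of a single LSTM layer can be counted from its weight shapes. The sketch assumes the layout used by MATLAB's `lstmLayer` (input weights $4H \times D$, recurrent weights $4H \times H$, bias $4H$) and counts the LSTM layer only, excluding the fully connected and classification layers.

```python
def lstm_param_count(input_size: int, hidden_units: int) -> int:
    """Learnable parameters of one LSTM layer: 4 gates x (input weights + recurrent weights + bias)."""
    d, h = input_size, hidden_units
    return 4 * h * d + 4 * h * h + 4 * h

for h in (10, 50, 100, 150, 200):  # hidden-unit sizes tested above
    n = lstm_param_count(6000, h)
    print(f"H = {h:3d}: {n:,} parameters, {n * 4 / 1e6:.2f} MB as float32")
```

For $D = 6000$ and $H = 100$ this gives 2,440,400 parameters, about 9.76 MB when stored as 32-bit floats; the input-weight term $4HD$ dominates, so memory grows almost linearly with the number of hidden units.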

Figure 18 shows the differences in output characteristics across various degradation states by visualizing the normalized data in three dimensions.

Figure 18a shows the visualization of the entire dataset with time, λ (irradiance), and power as the axes, showing how the normal and degraded states are distributed across the full λ range. Output decreases noticeably along the time axis, which can be interpreted as the effect of degradation factors such as increased resistance or current loss within the string.

Figure 18b shows the power waveform when a single panel is degraded. All curves exhibit similar inflection points; however, the output amplitude gradually decreases as the lambda value increases. Although the basic shape of the curve remains consistent, differences in the distribution of the output amplitude indicate that the degraded panel influences the overall system output. The inflection point’s amplitude, position, and slope change systematically with the degree of degradation. These attributes serve as sensitive diagnostic indicators of the degradation state.

Figure 18c compares curves extracted from Figure 18b at irradiance levels λ of 800, 850, 900, 950, 990, and 1000 W/m², using 1000 W/m² as the baseline. For ease of interpretation, each curve is annotated with an inflection-point marker indicating where the curvature changes. Owing to the normalization, the curve for λ = 1000 W/m² appears as a flat baseline with no inflection, whereas curves with λ < 1000 W/m² exhibit distinct peaks and inflection points. The peak deviation from the baseline is largest at λ = 800 W/m²; as λ increases from 800 to 990 W/m², both the peak height and the inflection-point amplitude gradually diminish, converging toward the baseline.
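Inflection-point markers of this kind can be placed, in spirit, by locating sign changes of the discrete second derivative of a curve. The exact marker-placement rule is not specified in the text, so the helper below is a generic, hypothetical sketch: `eps` is an assumed noise threshold, and measured curves would normally be smoothed first. It is demonstrated on $\tanh(x)$, whose only inflection point is at $x = 0$.

```python
import numpy as np

def inflection_points(x, y, eps=1e-9):
    """x-locations where the discrete curvature (second difference) changes sign.

    Samples with |second difference| < eps are treated as flat and skipped.
    """
    x = np.asarray(x, dtype=float)
    d2 = np.diff(np.asarray(y, dtype=float), n=2)   # d2[i] is centered on x[i + 1]
    idx = np.flatnonzero(np.abs(d2) >= eps)         # keep clearly curved samples
    signs = np.sign(d2[idx])
    flips = np.flatnonzero(signs[:-1] != signs[1:])
    # place each marker midway between the last sample before and the first after the flip
    return 0.5 * (x[idx[flips] + 1] + x[idx[flips + 1] + 1])

x = np.linspace(-3.0, 3.0, 601)
print(inflection_points(x, np.tanh(x)))  # a single marker near x = 0
```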

Figure 18d shows the condition in which two panels are degraded. In certain sections, abnormal sharp drops in output or irregular waveform distortions are observed, visually confirming that multiple abnormal conditions cumulatively affect the overall system response.

Figure 18e shows a detailed analysis of the fault condition in Figure 18d by extracting key λ values (800, 850, 900, 950, and 990 W/m²). In contrast to the single-panel degradation case in Figure 18c, each λ curve exhibits two distinct peaks, a signature feature that emerges when two panels are degraded. Markers were placed on these peaks to enable easy identification of changes in the positions and magnitudes of key feature points within the complex waveform.

These visualization results enable a clear comparison of how output waveforms vary under different degradation conditions and provide a basis for the quantitative analysis of key characteristics, such as the occurrence and number of inflection points and changes in curve slope. Based on these findings, a solid foundation was established for effectively distinguishing between single and multiple anomaly conditions, and the validity of the proposed degradation stage classification model was experimentally verified.