ViT-BiLSTM Multimodal Learning for Paediatric ADHD Recognition: Integrating Wearable Sensor Data with Clinical Profiles

Abstract

Highlights

- We propose a cross-attention multimodal framework that converts wrist-sensor time series into Gramian Angular Field (GAF) images, learns spatiotemporal representations with a combined Vision Transformer and Bidirectional Long Short-Term Memory (ViT-BiLSTM) encoder, and uses cross-attention to align image and clinical representations while suppressing noise, improving attention-deficit/hyperactivity disorder (ADHD) recognition.

- Compared with late concatenation and dual-stream baselines, cross-attention fusion achieves higher and more stable ADHD recognition accuracy across cross-validation folds.

- Integrating wearable data with lightweight clinical profiles enables scalable, objective ADHD screening in naturalistic, free-living settings.

- Consumer wearables combined with brief clinical inputs support low-cost ADHD screening in schools and communities, which could reduce referral delays and missed or inappropriate referrals. Because the devices are widely available and the workflow is lightweight, the approach can also scale to resource-limited settings and support population-level screening for public-health planning.
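
The time-series-to-image step named in the first highlight can be illustrated with a minimal sketch of a Gramian Angular Summation Field, following the general definition by Wang and Oates: rescale the series to [-1, 1], map each value to an angle with arccos, and fill the image with cos(φ_i + φ_j). This is an illustration under assumptions, not the authors' pipeline: the choice of the summation variant, the function name `gramian_angular_summation_field`, and the sample values are all hypothetical, and the image size and input signal used in the paper are not stated in this outline.

```python
import math


def gramian_angular_summation_field(series):
    """Encode a 1-D series as a GASF matrix (list of lists of floats)."""
    lo, hi = min(series), max(series)
    if hi == lo:
        raise ValueError("series must not be constant")
    # Rescale to [-1, 1] so that every value is a valid cosine.
    scaled = [(2 * x - hi - lo) / (hi - lo) for x in series]
    # Clamp against floating-point drift before taking arccos.
    phi = [math.acos(max(-1.0, min(1.0, s))) for s in scaled]
    # Entry (i, j) encodes the angular sum of samples i and j.
    return [[math.cos(a + b) for b in phi] for a in phi]


# Made-up acceleration magnitudes (in g), for illustration only.
signal = [0.02, 0.10, 0.45, 0.30, 0.05]
gasf = gramian_angular_summation_field(signal)

for row in gasf:
    print(" ".join(f"{value:6.2f}" for value in row))
```

The resulting matrix is symmetric, and its main diagonal retains the rescaled values (as cos 2φ_i), which is why the encoding preserves temporal order along the diagonal.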


1. Introduction

1.1. Background

1.2. Related Work

1.3. New Contributions

2. Materials and Methods

2.1. Participants and Data Collection

2.2. Data Preprocessing

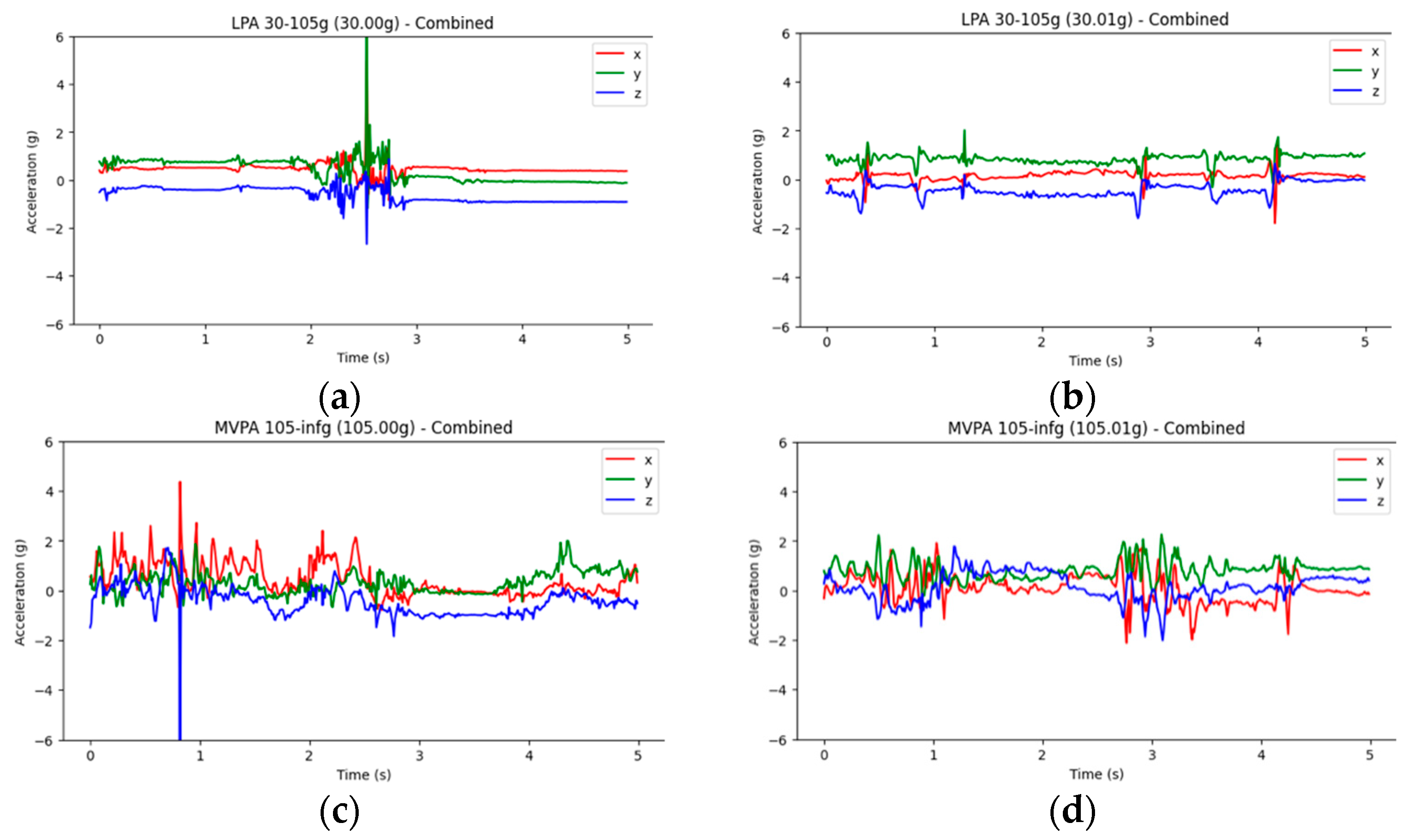

2.2.1. Physical Activity Acceleration to Generate an Image

2.2.2. Tabular Data

2.2.3. Preprocessing of Tabular Variables

2.3. Imaging Architectures

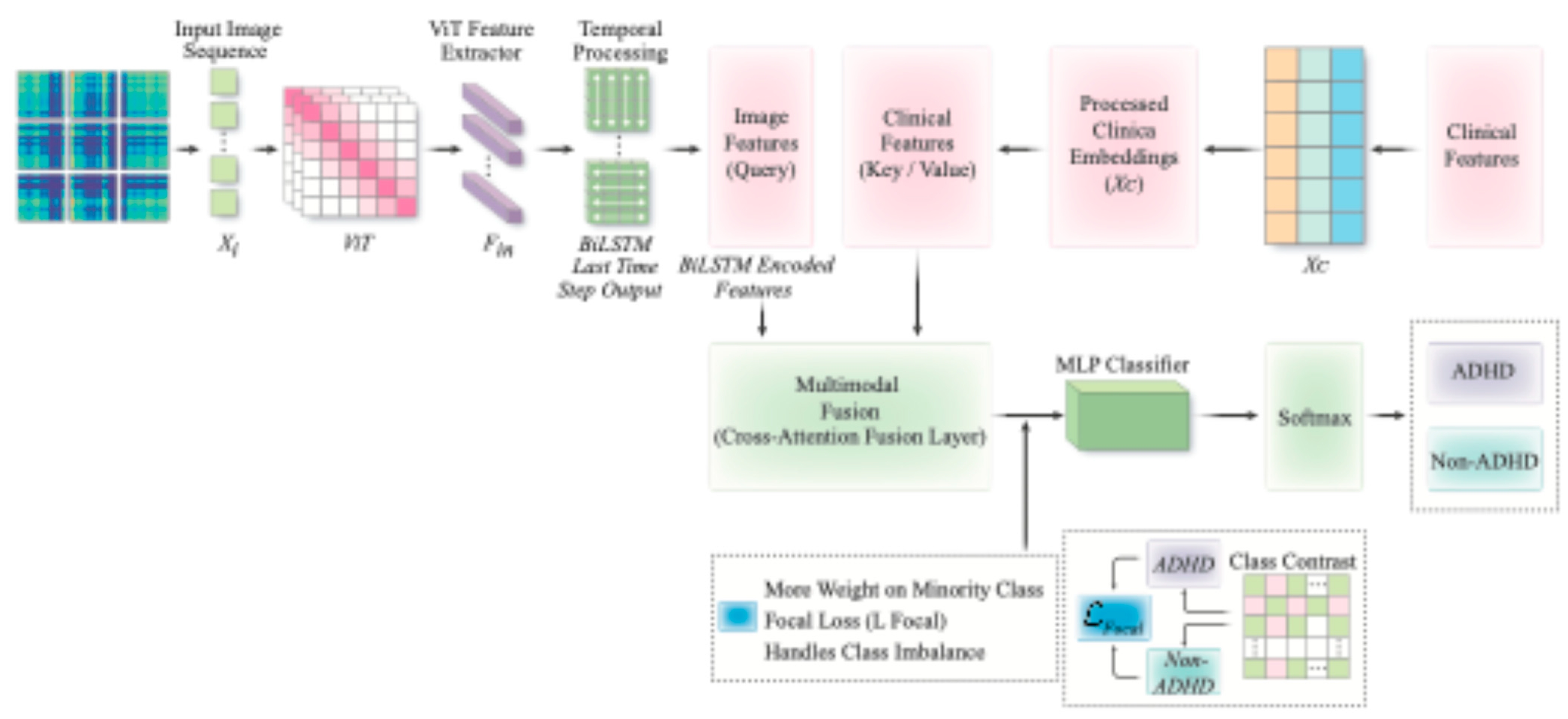

2.4. VB-Multimodal Architectures

2.5. Experimental Setup

2.6. Model Assessments

3. Results

3.1. Participants’ Characteristics

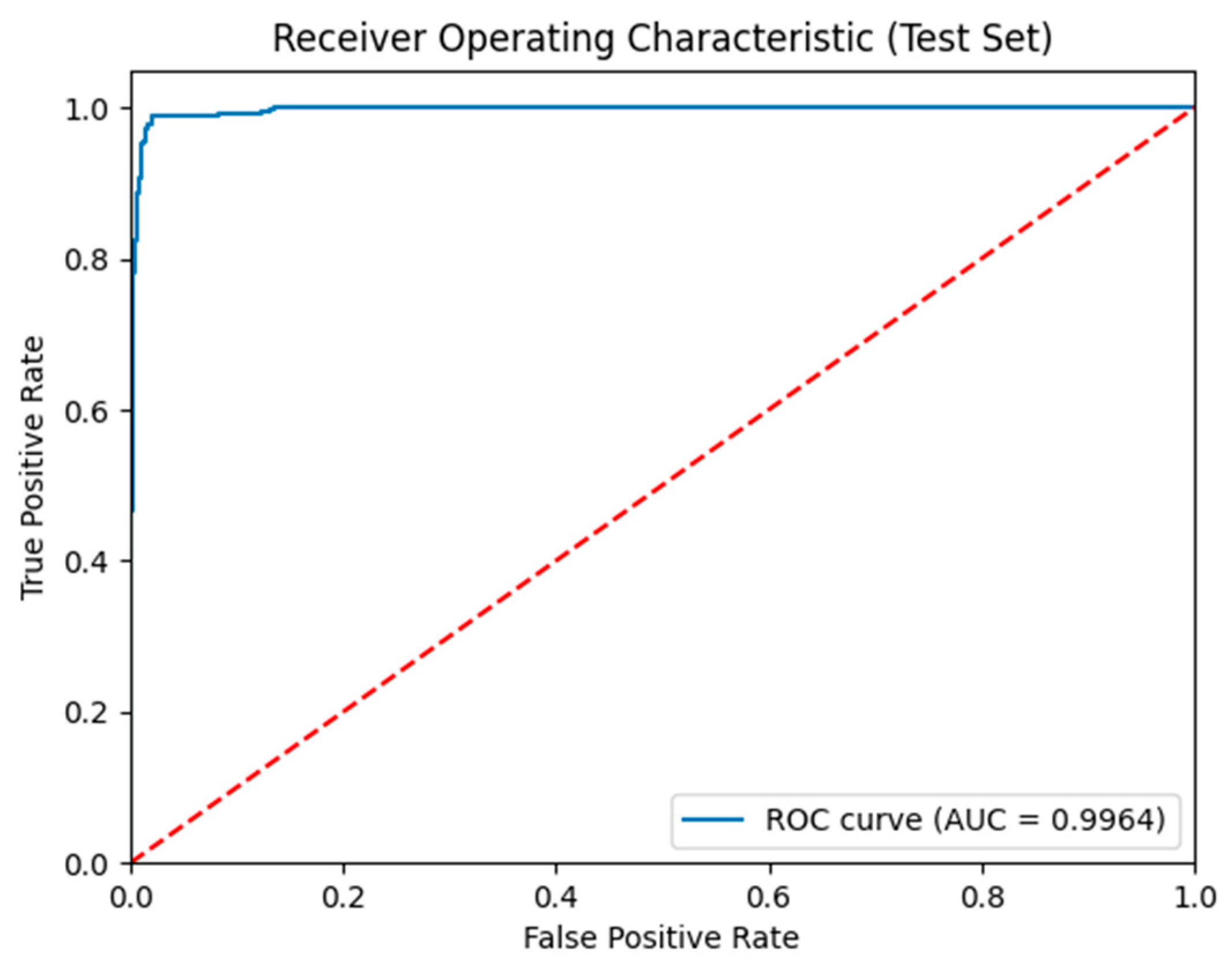

3.2. VB-Multimodal Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADHD | Attention-Deficit/Hyperactivity Disorder |
| GAF | Gramian Angular Field |
| ENMO | Euclidean Norm Minus One |
| ViT | Vision Transformer |
| BiLSTM | Bidirectional Long Short-Term Memory |
| EEG | Electroencephalography |
| SNAP-IV | Swanson, Nolan, and Pelham Questionnaire, 4th Edition |
| SD | Standard Deviation |
| BMI | Body Mass Index |
| SVM | Support Vector Machine |
| RF | Random Forest |
| XGB | XGBoost |
| LGB | Light Gradient Boosting Machine (LightGBM) |
| ANNs | Artificial Neural Networks |
| CNN | Convolutional Neural Network |
| ROC-AUC | Receiver Operating Characteristic–Area Under the Curve |

References

1. Wilson, R.B.; Vangala, S.; Reetzke, R.; Piergies, A.; Ozonoff, S.; Miller, M. Objective measurement of movement variability using wearable sensors predicts ASD outcomes in infants at high likelihood for ASD and ADHD. Autism Res. 2024, 17, 1094–1105.

2. Garcia-Ceja, E.; Stautland, A.; Riegler, M.A.; Halvorsen, P.; Hinojosa, S.; Ochoa-Ruiz, G.; Berle, J.O.; Forland, W.; Mjeldheim, K.; Oedegaard, K.J.; et al. OBF-Psychiatric, a motor activity dataset of patients diagnosed with major depression, schizophrenia, and ADHD. Sci. Data 2025, 12, 32.

3. Park, C.; Rouzi, M.D.; Atique, M.M.U.; Finco, M.G.; Mishra, R.K.; Barba-Villalobos, G.; Crossman, E.; Amushie, C.; Nguyen, J.; Calarge, C.; et al. Machine Learning-Based Aggression Detection in Children with ADHD Using Sensor-Based Physical Activity Monitoring. Sensors 2023, 23, 4949.

4. Meggs, J.; Young, S.; McKeown, A. A narrative review of the effect of sport and exercise on ADHD symptomatology in children and adolescents with ADHD. Ment. Health Rev. J. 2023, 28, 303–321.

5. Sacco, R.; Camilleri, N.; Eberhardt, J.; Umla-Runge, K.; Newbury-Birch, D. A systematic review and meta-analysis on the prevalence of mental disorders among children and adolescents in Europe. Eur. Child Adolesc. Psychiatry 2022, 33, 2877–2894.

6. Popit, S.; Serod, K.; Locatelli, I.; Stuhec, M. Prevalence of attention-deficit hyperactivity disorder (ADHD): Systematic review and meta-analysis. Eur. Psychiatry 2024, 67, e68.

7. Danielson, M.L.; Claussen, A.H.; Bitsko, R.H.; Katz, S.M.; Newsome, K.; Blumberg, S.J.; Kogan, M.D.; Ghandour, R. ADHD prevalence among US children and adolescents in 2022: Diagnosis, severity, co-occurring disorders, and treatment. J. Clin. Child Adolesc. Psychol. 2024, 53, 343–360.

8. Christiansen, H. Attention deficit/hyperactivity disorder over the life span. Verhaltenstherapie 2016, 26, 182–193.

9. Benzing, V.; Chang, Y.K.; Schmidt, M. Acute physical activity enhances executive functions in children with ADHD. Sci. Rep. 2018, 8, 12382.

10. Meijer, A.; Konigs, M.; Vermeulen, G.T.; Visscher, C.; Bosker, R.J.; Hartman, E.; Oosterlaan, J. The effects of physical activity on brain structure and neurophysiological functioning in children: A systematic review and meta-analysis. Dev. Cogn. Neurosci. 2020, 45, 100828.

11. Kim, W.-P.; Kim, H.-J.; Pack, S.P.; Lim, J.-H.; Cho, C.-H.; Lee, H.-J. Machine learning–based prediction of attention-deficit/hyperactivity disorder and sleep problems with wearable data in children. JAMA Netw. Open 2023, 6, e233502.

12. Liu, J.J.; Borsari, B.; Li, Y.; Liu, S.X.; Gao, Y.; Xin, X.; Lou, S.; Jensen, M.; Garrido-Martín, D.; Verplaetse, T.L.; et al. Digital phenotyping from wearables using AI characterizes psychiatric disorders and identifies genetic associations. Cell 2025, 188, 515–529.e15.

13. Parameswaran, S.; Gowsheeba, S.R.; Praveen, E.; Vishnuram, S.R. Prediction of Attention Deficit Hyperactivity Disorder Using Machine Learning Models. In Proceedings of the 2024 3rd International Conference on Artificial Intelligence for Internet of Things (AIIoT), Vellore, India, 3–4 May 2024; pp. 1–6.

14. Basic, J.; Uusimaa, J.; Salmi, J. Wearable motion sensors in the detection of ADHD: A critical review. In Nordic Conference on Digital Health and Wireless Solutions; Springer: Cham, Switzerland, 2024.

15. Lin, L.-C.; Ouyang, C.-S.; Chiang, C.-T.; Wu, R.-C.; Yang, R.-C. Quantitative analysis of movements in children with attention-deficit hyperactivity disorder using a smart watch at school. Appl. Sci. 2020, 10, 4116.

16. Muñoz-Organero, M.; Powell, L.; Heller, B.; Harpin, V.; Parker, J. Using recurrent neural networks to compare movement patterns in ADHD and normally developing children based on acceleration signals from the wrist and ankle. Sensors 2019, 19, 2935.

17. González Barral, C.; Servais, L. Wearable sensors in paediatric neurology. Dev. Med. Child Neurol. 2025, 67, 834–853.

18. Hoza, B.; Shoulberg, E.K.; Tompkins, C.L.; Martin, C.P.; Krasner, A.; Dennis, M.; Meyer, L.E.; Cook, H. Moderate-to-vigorous physical activity and processing speed: Predicting adaptive change in ADHD levels and related impairments in preschoolers. J. Child Psychol. Psychiatry 2020, 61, 1380–1387.

19. Koepp, A.E.; Gershoff, E.T. Leveraging an intensive time series of young children’s movement to capture impulsive and inattentive behaviors in a preschool setting. Child Dev. 2024, 95, 1641–1658.

20. Zhao, Y.; Dong, F.; Sun, T.; Ju, Z.; Yang, L.; Shan, P.; Li, L.; Lv, X.; Lian, C. Image expression of time series data of wearable IMU sensor and fusion classification of gymnastics action. Expert Syst. Appl. 2024, 238, 121978.

21. Farrahi, V.; Muhammad, U.; Rostami, M.; Oussalah, M. AccNet24: A deep learning framework for classifying 24-hour activity behaviours from wrist-worn accelerometer data under free-living environments. Int. J. Med. Inf. 2023, 172, 105004.

22. Borsos, B.; Allaart, C.G.; van Halteren, A. Predicting stroke outcome: A case for multimodal deep learning methods with tabular and CT perfusion data. Artif. Intell. Med. 2024, 147, 102719.

23. Steyaert, S.; Pizurica, M.; Nagaraj, D.; Khandelwal, P.; Hernandez-Boussard, T.; Gentles, A.J.; Gevaert, O. Multimodal data fusion for cancer biomarker discovery with deep learning. Nat. Mach. Intell. 2023, 5, 351–362.

24. Zhou, H.; Ren, D.; Xia, H.; Fan, M.; Yang, X.; Huang, H. Ast-gnn: An attention-based spatio-temporal graph neural network for interaction-aware pedestrian trajectory prediction. Neurocomputing 2021, 445, 298–308.

25. Kwon, S.; Kim, Y.; Bai, Y.; Burns, R.D.; Brusseau, T.A.; Byun, W. Validation of the Apple Watch for Estimating Moderate-to-Vigorous Physical Activity and Activity Energy Expenditure in School-Aged Children. Sensors 2021, 21, 6413.

26. White, J.W., III; Finnegan, O.L.; Tindall, N.; Nelakuditi, S.; Brown, D.E., III; Pate, R.R.; Welk, G.J.; de Zambotti, M.; Ghosal, R.; Wang, Y.; et al. Comparison of raw accelerometry data from ActiGraph, Apple Watch, Garmin, and Fitbit using a mechanical shaker table. PLoS ONE 2024, 19, e0286898.

27. Faedda, G.L.; Ohashi, K.; Hernandez, M.; McGreenery, C.E.; Grant, M.C.; Baroni, A.; Polcari, A.; Teicher, M.H. Actigraph measures discriminate pediatric bipolar disorder from attention-deficit/hyperactivity disorder and typically developing controls. J. Child Psychol. Psychiatry 2016, 57, 706–716.

28. O’Mahony, N.; Florentino-Liano, B.; Carballo, J.J.; Baca-Garcia, E.; Rodriguez, A.A. Objective diagnosis of ADHD using IMUs. Med. Eng. Phys. 2014, 36, 922–926.

29. Rahman, M.M. Enhancing ADHD Prediction in Adolescents through Fitbit-Derived Wearable Data. medRxiv 2024.

30. Jiang, Z.; Chan, A.Y.; Lum, D.; Wong, K.H.; Leung, J.C.; Ip, P.; Coghill, D.; Wong, R.S.; Ngai, E.C.; Wong, I.C. Wearable Signals for Diagnosing Attention-Deficit/Hyperactivity Disorder in Adolescents: A Feasibility Study. JAACAP Open 2024.

31. Duda, M.; Haber, N.; Daniels, J.; Wall, D.P. Crowdsourced validation of a machine-learning classification system for autism and ADHD. Transl. Psychiatry 2017, 7, e1133.

32. Muñoz-Organero, M.; Powell, L.; Heller, B.; Harpin, V.; Parker, J. Automatic Extraction and Detection of Characteristic Movement Patterns in Children with ADHD Based on a Convolutional Neural Network (CNN) and Acceleration Images. Sensors 2018, 18, 3924.

33. Vavoulis, D.V.; Cutts, A.; Thota, N.; Brown, J.; Sugar, R.; Rueda, A.; Ardalan, A.; Howard, K.; Matos Santo, F.; Sannasiddappa, T.; et al. Multimodal cell-free DNA whole-genome TAPS is sensitive and reveals specific cancer signals. Nat. Commun. 2025, 16, 430.

34. Floris, D.L.; Llera, A.; Zabihi, M.; Moessnang, C.; Jones, E.J.H.; Mason, L.; Haartsen, R.; Holz, N.E.; Mei, T.; Elleaume, C.; et al. A multimodal neural signature of face processing in autism within the fusiform gyrus. Nat. Ment. Health 2025, 3, 31–45.

35. Wang, L.; Luo, Z.; Zhang, T. A novel ViT-BILSTM model for physical activity intensity classification in adults using gravity-based acceleration. BMC Biomed. Eng. 2025, 7, 2.

36. Lin, W. Movement and Mental Health in Children. Lin, W., Ed.; Zenodo: Geneva, Switzerland, 2024; Available online: https://zenodo.org/records/14875672 (accessed on 13 October 2025).

37. Robusto, K.M.; Trost, S.G. Comparison of three generations of ActiGraph™ activity monitors in children and adolescents. J. Sports Sci. 2012, 30, 1429–1435.

38. van Hees, V.T.; Gorzelniak, L.; Dean Leon, E.C.; Eder, M.; Pias, M.; Taherian, S.; Ekelund, U.; Renstrom, F.; Franks, P.W.; Horsch, A.; et al. Separating movement and gravity components in an acceleration signal and implications for the assessment of human daily physical activity. PLoS ONE 2013, 8, e61691.

39. Wang, Z.; Oates, T. Encoding time series as images for visual inspection and classification using tiled convolutional neural networks. In Proceedings of the Workshops at the Twenty-Ninth AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015.

40. Hiscock, H.; Mulraney, M.; Efron, D.; Freed, G.; Coghill, D.; Sciberras, E.; Warren, H.; Sawyer, M. Use and predictors of health services among Australian children with mental health problems: A national prospective study. Aust. J. Psychol. 2020, 72, 31–40.

41. Abhayaratna, H.C.; Ariyasinghe, D.I.; Ginige, P.; Chandradasa, M.; Hansika, K.S.; Fernando, A.; Wijetunge, S.; Dassanayake, T.L. Psychometric properties of the Sinhala version of the Swanson, Nolan, and Pelham rating scale (SNAP-IV) Parent Form in healthy children and children with ADHD. Asian J. Psychiatry 2023, 83, 103542.

42. Tharwat, A. Classification assessment methods. Appl. Comput. Inform. 2021, 17, 168–192.

43. Chicco, D.; Jurman, G. The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation. BMC Genom. 2020, 21, 6.

44. Chicco, D.; Jurman, G. The Matthews correlation coefficient (MCC) should replace the ROC AUC as the standard metric for assessing binary classification. BioData Min. 2023, 16, 4.

45. Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

46. Boughorbel, S.; Jarray, F.; El-Anbari, M. Optimal classifier for imbalanced data using Matthews Correlation Coefficient metric. PLoS ONE 2017, 12, e0177678.

47. Chen, H.; Cui, J.; Zhang, Y.; Zhang, Y. VIT and Bi-LSTM for Micro-Expressions Recognition. In Proceedings of the 2022 IEEE 5th International Conference on Information Systems and Computer Aided Education (ICISCAE), Dalian, China, 23–25 September 2022; pp. 946–951.

48. Oyeleye, M.; Chen, T.; Su, P.; Antoniou, G. Exploiting Machine Learning and LSTM for Human Activity Recognition: Using Physiological and Biological Sensor Data from Actigraph. In Proceedings of the 2024 IEEE International Conference on Industrial Technology (ICIT), Bristol, UK, 25–27 March 2024; pp. 1–8.

49. Bi, Y.; Abrol, A.; Fu, Z.; Calhoun, V.D. A multimodal vision transformer for interpretable fusion of functional and structural neuroimaging data. Hum. Brain Mapp. 2024, 45, e26783.

50. Cai, G.; Zhu, Y.; Wu, Y.; Jiang, X.; Ye, J.; Yang, D. A multimodal transformer to fuse images and metadata for skin disease classification. Vis. Comput. 2023, 39, 2781–2793.

51. Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 87–110.

52. Shamshad, F.; Khan, S.; Zamir, S.W.; Khan, M.H.; Hayat, M.; Khan, F.S.; Fu, H. Transformers in medical imaging: A survey. Med. Image Anal. 2023, 88, 102802.

53. Liu, Y.; Zhang, Y.; Wang, Y.; Hou, F.; Yuan, J.; Tian, J.; Zhang, Y.; Shi, Z.; Fan, J.; He, Z. A Survey of Visual Transformers. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 7478–7498.

54. Tang, L.; Hu, Q.; Wang, X.; Liu, L.; Zheng, H.; Yu, W.; Luo, N.; Liu, J.; Song, C. A multimodal fusion network based on a cross-attention mechanism for the classification of Parkinsonian tremor and essential tremor. Sci. Rep. 2024, 14, 28050.

55. Sun, K.; Ding, J.; Li, Q.; Chen, W.; Zhang, H.; Sun, J.; Jiao, Z.; Ni, X. CMAF-Net: A cross-modal attention fusion-based deep neural network for incomplete multi-modal brain tumor segmentation. Quant. Imaging Med. Surg. 2024, 14, 4579.

56. Zhao, X.; Tang, C.; Hu, H.; Wang, W.; Qiao, S.; Tong, A. Attention mechanism based multimodal feature fusion network for human action recognition. J. Vis. Commun. Image Represent. 2025, 110, 104459.

57. Li, S.; Tang, H. Multimodal alignment and fusion: A survey. arXiv 2024, arXiv:2411.17040.

58. Gan, C.; Fu, X.; Feng, Q.; Zhu, Q.; Cao, Y.; Zhu, Y. A multimodal fusion network with attention mechanisms for visual–textual sentiment analysis. Expert Syst. Appl. 2024, 242, 122731.

59. Novosad, P. A task-conditional mixture-of-experts model for missing modality segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2024.

60. Niimi, J. An Efficient multimodal learning framework to comprehend consumer preferences using BERT and Cross-Attention. arXiv 2024, arXiv:2405.07435.

61. Saioni, M.; Giannone, C. Multimodal Attention is all you need. In Proceedings of the 10th Italian Conference on Computational Linguistics (CLiC-It 2024), Pisa, Italy, 4–6 December 2024.

62. Li, C.-Y.; Chang, K.-J.; Yang, C.-F.; Wu, H.-Y.; Chen, W.; Bansal, H.; Chen, L.; Yang, Y.-P.; Chen, Y.-C.; Chen, S.-P. Towards a holistic framework for multimodal LLM in 3D brain CT radiology report generation. Nat. Commun. 2025, 16, 2258.

63. Yuan, H.; Plekhanova, T.; Walmsley, R.; Reynolds, A.C.; Maddison, K.J.; Bucan, M.; Gehrman, P.; Rowlands, A.; Ray, D.W.; Bennett, D.; et al. Self-supervised learning of accelerometer data provides new insights for sleep and its association with mortality. NPJ Digit. Med. 2024, 7, 86.

64. Ng, J.Y.Y.; Zhang, J.H.; Hui, S.S.; Jiang, G.; Yau, F.; Cheng, J.; Ha, A.S. Development of a multi-wear-site, deep learning-based physical activity intensity classification algorithm using raw acceleration data. PLoS ONE 2024, 19, e0299295.

65. Djemili, R.; Zamouche, M. An efficient deep learning-based approach for human activity recognition using smartphone inertial sensors. Int. J. Comput. Appl. 2023, 45, 323–336.

| Reference | Dataset | Data Type | Architecture/Model | Validation Metrics | Validation Accuracy |
|---|---|---|---|---|---|
| 1. O’Mahony et al. (2014) [28] | Private; 6–11 years (n = 43, 26 male) | Tabular | SVM | Accuracy; Sensitivity; Specificity | SVM = 94% |
| 2. Faedda et al. (2016) [27] | Private; 5–18 years (n = 155, 97 male) | Tabular | RF; ANNs; PLS; MR; SVM | Accuracy; Kappa; ROC-AUC | RF = 79%; ANNs = 81%; PLS = 82%; MR = 82%; SVM = 83% |
| 3. Duda et al. (2017) [31] | Private; 10–14 years (n = 174, 93 male) | Tabular | SVM; Ridge; ENet; LDA | ROC-AUC | SVM = 78%; Ridge = 84%; ENet = 89%; LDA = 89% |
| 4. Muñoz-Organero et al. (2018) [32] | Private; 6–15 years (n = 22, 11 male) | Image | CNN | Accuracy; Sensitivity; Specificity | CNN = 93% |
| 5. Kim et al. (2023) [11] | ABCD dataset; 9–11 years (n = 1090, 513 male) | Tabular | RF; XGB; LGB | AUC; Sensitivity; Specificity | RF = 73%; XGB = 78%; LGB = 79% |
| 6. Park et al. (2023) [3] | Private; 7–16 years (n = 39, 31 male) | Tabular | RF | Accuracy; Recall; Precision; F1; AUC | RF = 78% |
| 7. Rahman (2024) [29] | ABCD dataset; 9–11 years (n = 450, 257 male) | Tabular | RF; Ada; DT; KNN; LGBM; LR; NB; SVM | Accuracy; Recall; Precision; F1 | RF = 87%; Ada = 65%; DT; KNN = 53%; LGBM = 74%; LR = 61%; NB = 58%; SVM = 53% |
| 8. Jiang et al. (2024) [30] | Private; 16–17 years (n = 30, 16 male) | Tabular | XGBoost | Accuracy; Recall; Precision; F1 | XGBoost = 93% |

| Characteristic | Total (n = 50) | Male (n = 29) | Female (n = 21) | p (male vs. female) |
|---|---|---|---|---|
| Age, years (Mean ± SD) | 9.16 ± 1.68 | 9.17 ± 1.79 | 9.14 ± 1.56 | 0.95 |
| BMI, kg/m² (Mean ± SD) | 16.57 ± 2.69 | 16.93 ± 2.95 | 16.07 ± 2.25 | 0.24 |
| Grade (n, %) | | | | 0.61 |
| Grade 1 | 10 (20.0) | 6 (20.7) | 4 (19.0) | |
| Grade 2 | 13 (26.0) | 7 (24.1) | 6 (28.6) | |
| Grade 3 | 11 (22.0) | 6 (20.7) | 5 (23.8) | |
| Grade 4 | 11 (22.0) | 6 (20.7) | 5 (23.8) | |
| Grade 5 | 2 (4.0) | 2 (6.9) | 0 (0.0) | |
| Grade 6 | 2 (4.0) | 2 (6.9) | 0 (0.0) | |
| Grade 7 | 1 (2.0) | 0 (0.0) | 1 (4.8) | |
| Socioeconomic factors (n, %) | | | | |
| Health state | | | | 0.89 |
| Poor | 7 (14.0) | 4 (13.8) | 3 (14.3) | |
| Good | 43 (86.0) | 25 (86.2) | 18 (85.7) | |
| Very Good | 0 (0.0) | 0 (0.0) | 0 (0.0) | |
| Diet state | | | | 0.56 |
| Poor | 13 (26.0) | 6 (20.7) | 7 (33.3) | |
| Good | 35 (70.0) | 22 (75.9) | 13 (61.9) | |
| Very Good | 2 (4.0) | 1 (3.4) | 1 (4.8) | |
| Parental education (n, %) | | | | 0.94 |
| Poor | 8 (16.0) | 5 (17.2) | 3 (14.3) | |
| Moderate | 40 (80.0) | 23 (79.3) | 17 (81.0) | |
| Good | 2 (4.0) | 1 (3.4) | 1 (4.8) | |
| SNAP-IV scores (Mean ± SD) | | | | |
| Inattention score | 15.76 ± 4.11 | 16.93 ± 4.02 | 14.14 ± 3.76 | 0.01 |
| Hyperactivity score | 13.80 ± 3.42 | 14.72 ± 3.39 | 12.52 ± 3.09 | 0.02 |
| Oppositional score | 13.60 ± 2.60 | 14.24 ± 2.84 | 12.71 ± 1.95 | 0.02 |
| SNAP-IV Total | 42.98 ± 9.13 | 45.69 ± 9.23 | 39.24 ± 7.72 | 0.01 |
| ADHD classification (n, %) | | | | |
| Non-ADHD (T < 55) | 37 (74.0) | 18 (62.1) | 19 (90.5) | 0.04 |
| ADHD (T ≥ 55) | 13 (26.0) | 11 (37.9) | 2 (9.5) | 0.02 |

| Model | Precision | Recall | F1 | Accuracy | AUC | MCC |
|---|---|---|---|---|---|---|
| VB-Multimodal | 0.97 | 0.97 | 0.97 | 0.97 | 0.99 | 0.93 |
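
For readers less familiar with the columns in the table above, the following minimal sketch computes precision, recall, F1, accuracy, and the Matthews correlation coefficient (MCC) from a binary confusion matrix. It is an illustration with made-up counts, not the authors' evaluation code; the function name `binary_metrics` is hypothetical, and whether the reported scores are per-class or averaged across classes and folds is not stated in this outline.

```python
import math


def binary_metrics(tp, fp, fn, tn):
    """Return common binary classification metrics from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    # MCC uses all four cells, so it stays informative under class imbalance.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"precision": precision, "recall": recall, "f1": f1,
            "accuracy": accuracy, "mcc": mcc}


# Made-up counts: a classifier that is mostly right ...
good = binary_metrics(tp=8, fp=2, fn=2, tn=8)
# ... and one whose predictions are mostly inverted.
inverted = binary_metrics(tp=2, fp=8, fn=8, tn=2)

print(good)
print(inverted)
```

MCC ranges from −1 to 1, with 0 corresponding to chance-level agreement, so a negative value indicates predictions that are anti-correlated with the labels.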

| Fusion strategy | Precision | Recall | F1 | Accuracy | AUC | MCC |
|---|---|---|---|---|---|---|
| Cross-attention | 0.97 | 0.97 | 0.97 | 0.97 | 0.99 | 0.93 |
| Simple concatenation | 0.42 | 0.35 | 0.37 | 0.35 | 0.29 | −0.32 |
| Weighted sum | 0.48 | 0.35 | 0.34 | 0.35 | 0.45 | −0.14 |
| Dual-stream | 0.58 | 0.46 | 0.47 | 0.46 | 0.35 | 0.02 |
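
To make the difference between the first two fusion strategies in the table above concrete, the sketch below contrasts single-head scaled dot-product cross-attention with plain concatenation. It is a simplified illustration, not the authors' architecture: learned query/key/value projections, multiple heads, and residual connections are omitted, the choice of the clinical embedding as the query is an assumption, and all names and values (`cross_attention`, `concat_fusion`, `clinical`, `image_tokens`) are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def cross_attention(query, tokens):
    """One query vector attends over a list of token vectors."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, t) / scale for t in tokens])
    dim = len(tokens[0])
    # Weighted average of the tokens; low-weight tokens contribute little.
    attended = [sum(w * t[d] for w, t in zip(weights, tokens))
                for d in range(dim)]
    return attended, weights


def concat_fusion(image_vec, clinical_vec):
    """Late concatenation: no interaction between the two modalities."""
    return image_vec + clinical_vec


# Hypothetical 4-dimensional embeddings, for illustration only.
clinical = [1.0, 0.0, 1.0, 0.0]
image_tokens = [
    [1.0, 0.0, 1.0, 0.0],   # aligned with the clinical vector
    [0.0, 1.0, 0.0, 1.0],   # orthogonal to it
    [0.5, 0.5, 0.5, 0.5],   # partially aligned
]

fused, weights = cross_attention(clinical, image_tokens)
concatenated = concat_fusion(image_tokens[0], clinical)
print(weights)
print(fused)
```

In this toy setup the image token most similar to the clinical vector receives the largest weight, which is the mechanism by which cross-attention can down-weight uninformative tokens; concatenation passes every feature through unchanged.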

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, L.; Yang, G. ViT-BiLSTM Multimodal Learning for Paediatric ADHD Recognition: Integrating Wearable Sensor Data with Clinical Profiles. Sensors 2025, 25, 6459. https://doi.org/10.3390/s25206459
