BiLSTM-LN-SA: A Novel Integrated Model with Self-Attention for Multi-Sensor Fire Detection

Abstract

1. Introduction

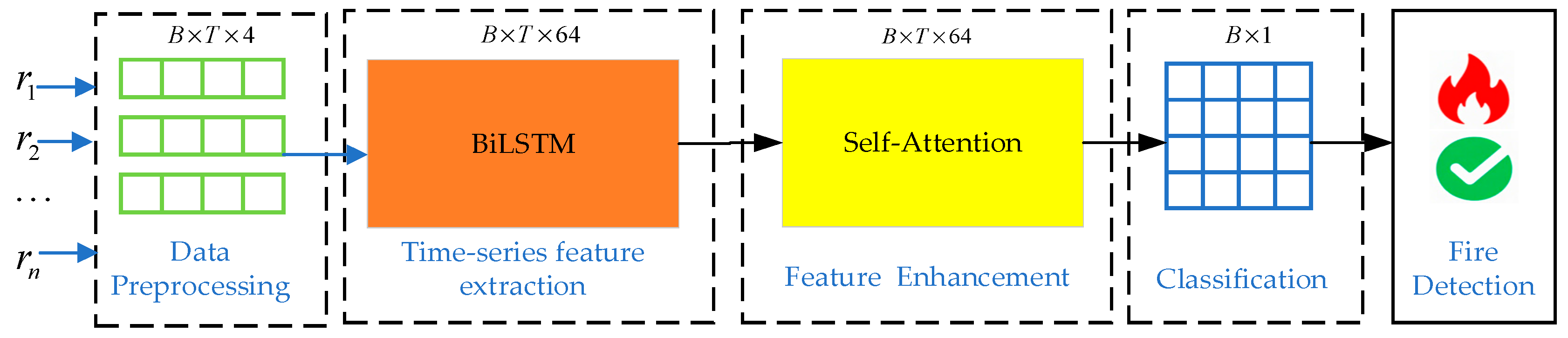

2. The Proposed Method

2.1. Data Preprocessing Module

- (1) Normalization:

- (2) Time-Series Data Generation:

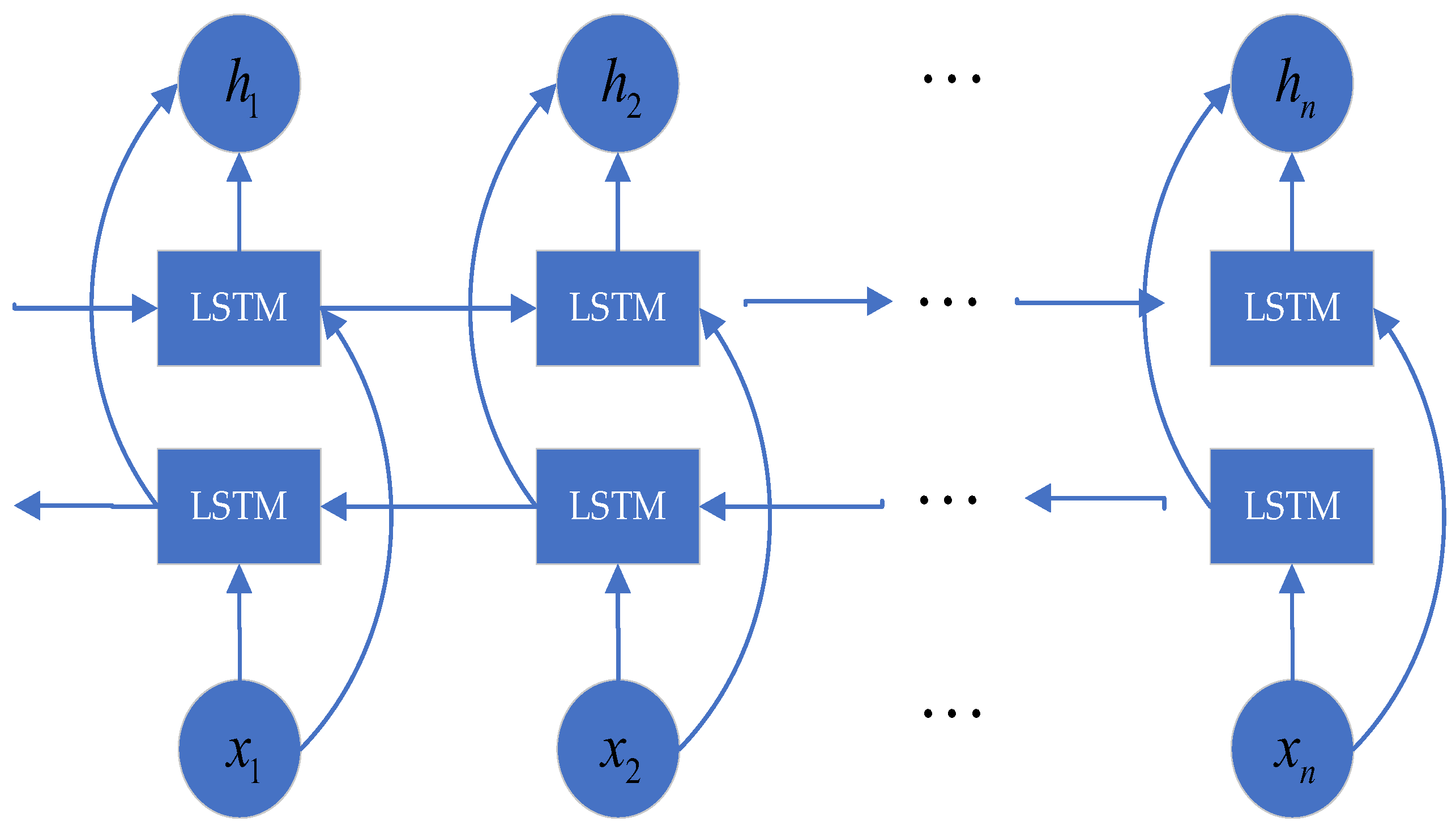

2.2. Time-Series Feature Extraction Module

2.3. Feature Enhancement Module

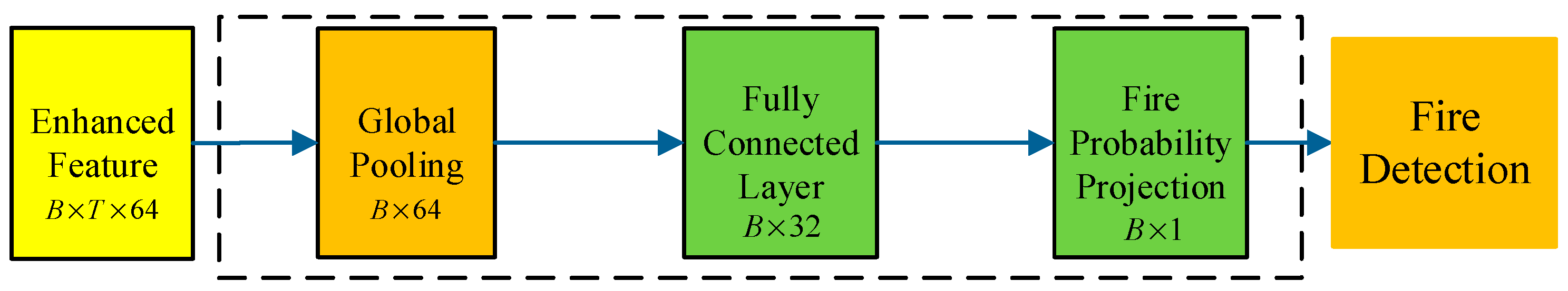

2.4. Classification Module

- (1) Global Average Pooling

- (2) Classification Head

3. Performance Evaluation and Analysis

3.1. Experimental Setup and Model Configuration

3.1.1. Dataset Description

- Normal indoor conditions

- Normal outdoor conditions

- Indoor wood fire within a firefighter training area

- Indoor gas fire within a firefighter training area

- Outdoor wood, coal, and gas grill fires

- High-humidity outdoor environments

3.1.2. Time-Series Data Construction

3.1.3. Model Configuration and Hyperparameters

- BiLSTM Module: This module consists of 2 stacked bidirectional LSTM layers. Each LSTM cell in each direction contains 32 hidden units, resulting in a concatenated output dimension of 64.

- Self-Attention Module: We employ a single-head self-attention mechanism. The dimension of the query and key vectors (d_k) is set to 64.

- Classification Head: The fully connected layer following global average pooling comprises 32 neurons with ReLU activation.
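The data flow implied by this configuration can be traced with a minimal pure-Python sketch. It is not the authors' implementation: the BiLSTM output is replaced by a synthetic 20 × 64 array, layer normalization is assumed to precede self-attention (as the model name suggests) and is applied without learned gain or bias, and the query, key, and value projections are assumed to be identity maps. All function names are hypothetical.

```python
import math

def layer_norm(x, eps=1e-5):
    """Normalize one feature vector to zero mean and unit variance (no learned gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(seq):
    """Single-head scaled dot-product attention.
    Assumption: identity Q/K/V projections, so d_k equals the feature size."""
    d_k = len(seq[0])
    out = []
    for q in seq:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in seq]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, seq)) for j in range(d_k)])
    return out

def global_average_pool(seq):
    """Average each feature over the time axis."""
    steps = len(seq)
    return [sum(step[j] for step in seq) / steps for j in range(len(seq[0]))]

# Synthetic stand-in for the BiLSTM output: 20 time steps, 2 x 32 = 64 features.
T, D = 20, 64
bilstm_out = [[math.sin(0.1 * t + 0.05 * j) for j in range(D)] for t in range(T)]

normed = [layer_norm(step) for step in bilstm_out]   # shape (20, 64)
attended = self_attention(normed)                    # shape (20, 64)
pooled = global_average_pool(attended)               # shape (64,)
print(len(attended), len(attended[0]), len(pooled))
```

The pooled 64-dimensional vector is what would feed the 32-neuron ReLU layer of the classification head; that layer is omitted here because its weights are learned.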

3.1.4. Training and Evaluation Procedure

- Batch size: set to 32, the number of samples processed in each batch;

- Time steps: set to 20, the number of consecutive sensor readings per sample;

- Sensors (sensor channels): set to 4 in this study.
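A minimal sketch of how input of this shape (batch of 32, 20 time steps, 4 sensor channels) could be built from a raw sensor log is given below. The stride of 1, the rule of labeling each window by its final reading, and the helper names `make_windows` and `batches` are assumptions made for illustration; the readings are synthetic.

```python
def make_windows(readings, labels, time_steps=20, stride=1):
    """Slice a (num_readings, num_sensors) series into overlapping windows.
    Assumption: each window takes the label of its final reading."""
    windows, window_labels = [], []
    for start in range(0, len(readings) - time_steps + 1, stride):
        end = start + time_steps
        windows.append(readings[start:end])
        window_labels.append(labels[end - 1])
    return windows, window_labels

def batches(items, batch_size=32):
    """Yield consecutive batches; the last one may be smaller."""
    for i in range(0, len(items), batch_size):
        yield items[i:i + batch_size]

# Synthetic log: 100 readings from 4 sensor channels, fire label from t = 60 onward.
readings = [[float(t), 2.0 * t, 0.5 * t, 1.0] for t in range(100)]
labels = [1 if t >= 60 else 0 for t in range(100)]

windows, window_labels = make_windows(readings, labels)
first_batch = next(batches(windows))
# Number of windows, then the (batch, time steps, sensors) shape of the first batch.
print(len(windows), len(first_batch), len(first_batch[0]), len(first_batch[0][0]))
```

With 100 readings and a window of 20, the sketch yields 81 windows, and the first batch has shape (32, 20, 4).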

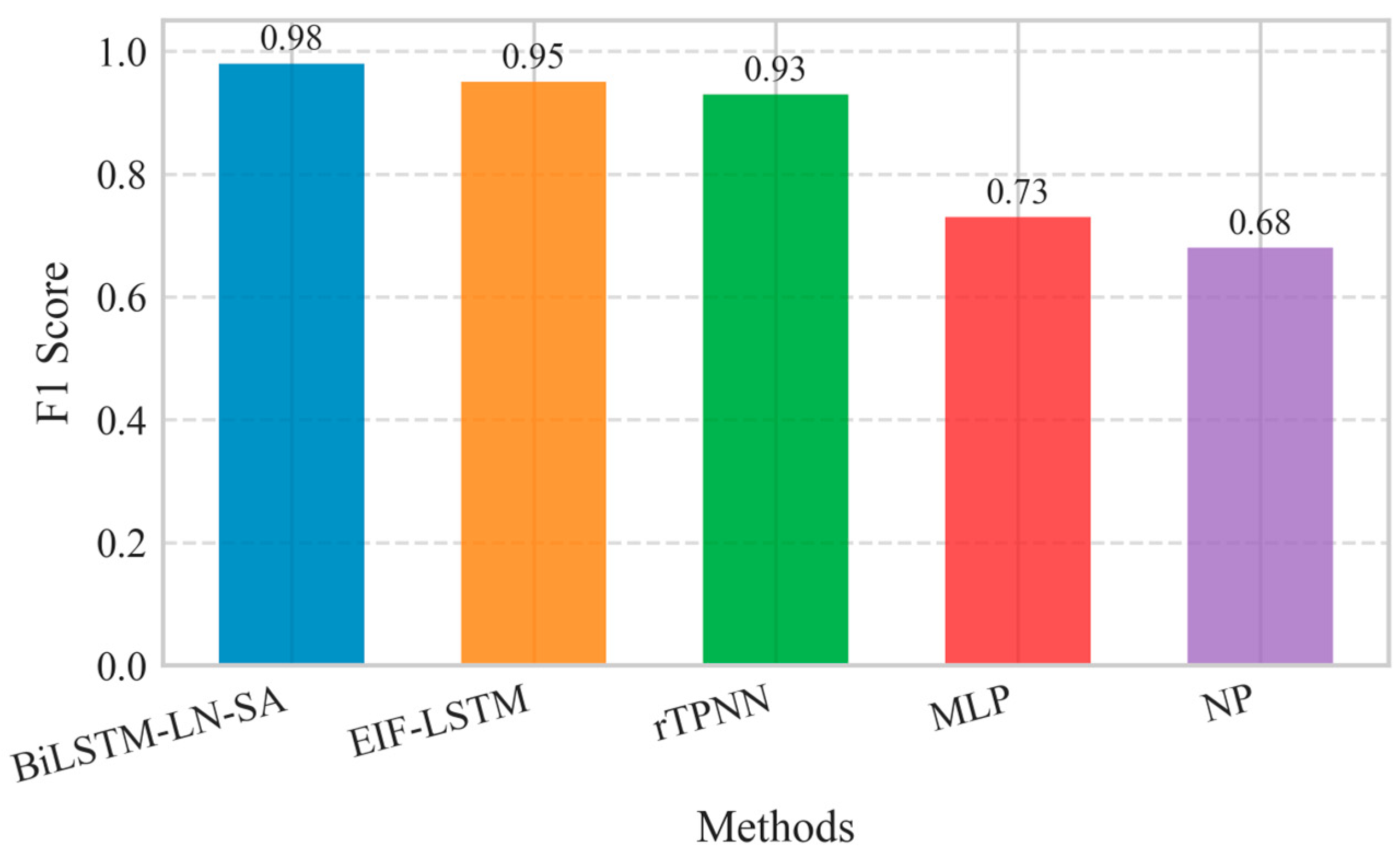

3.2. Results and Analysis

3.2.1. Metrics of Accuracy

3.2.2. Metrics of Confusion Matrix

3.2.3. Metrics of F1-Score

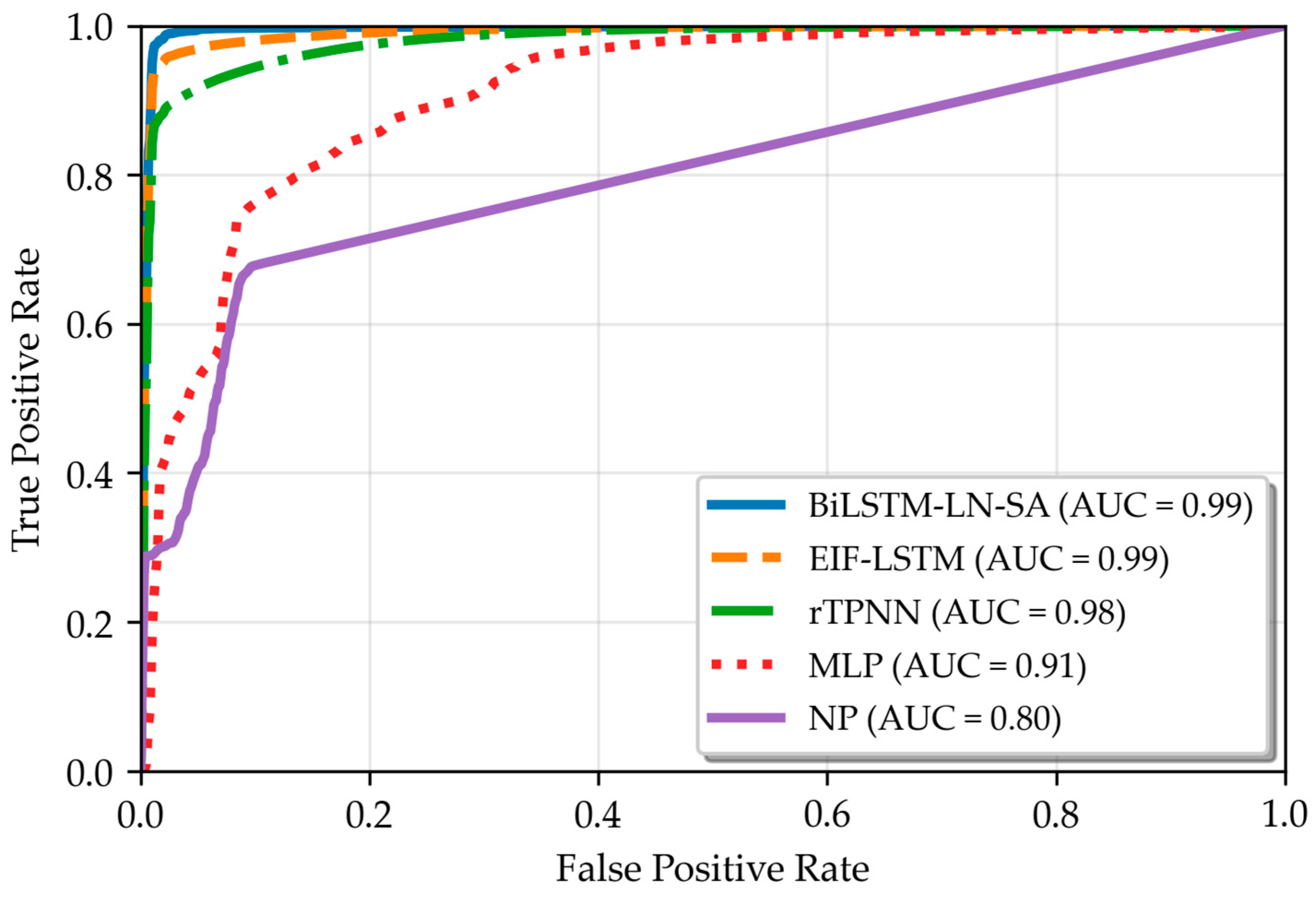

3.2.4. Metrics of ROC Curve

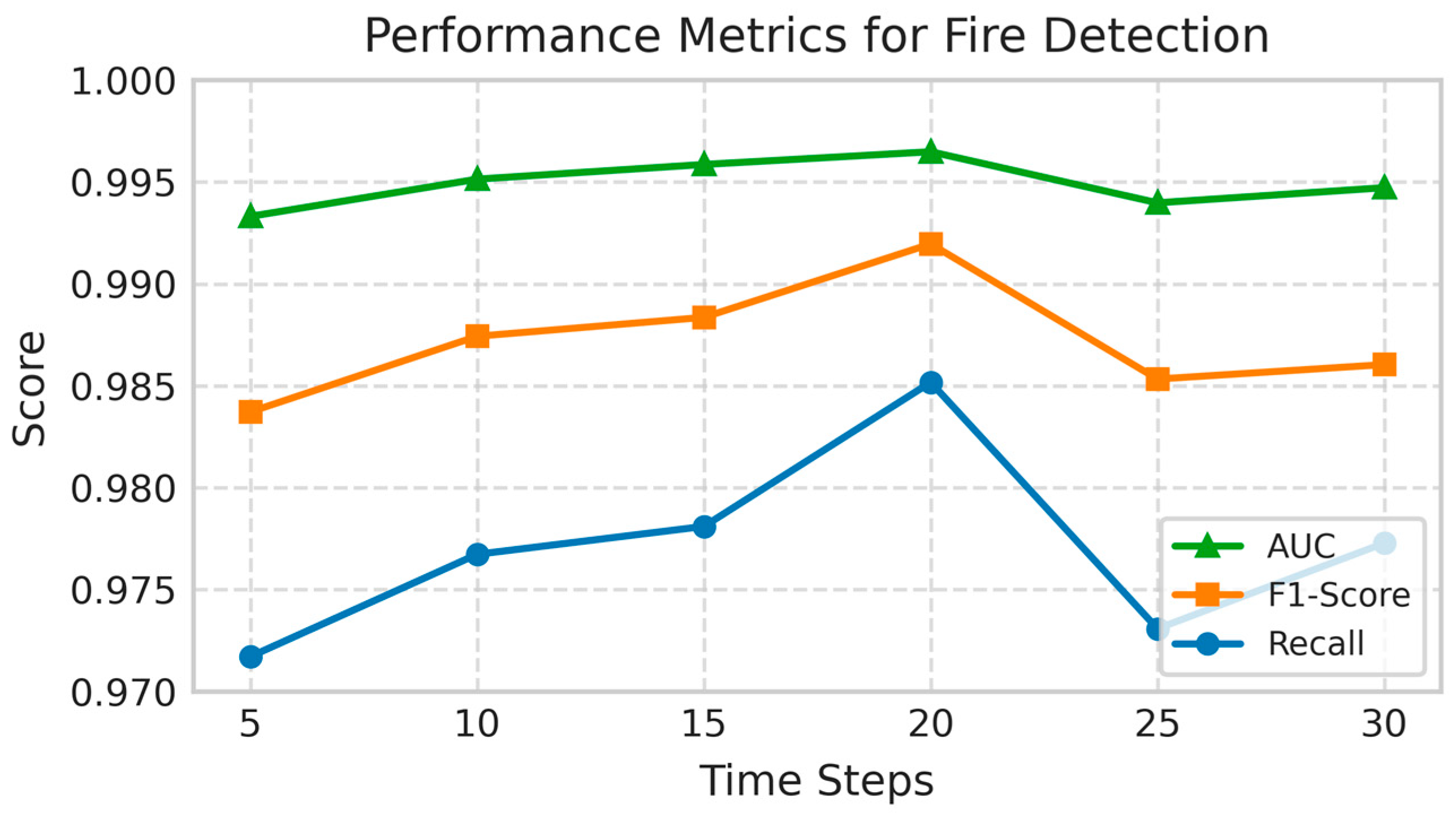

3.2.5. Analysis of the Relationship Between Step Size and Performance in Fire Detection Systems

3.2.6. Ablation Study: The Impact of Layer Normalization

3.2.7. Ablation Study: The Impact of Self-Attention Mechanism

3.2.8. Comprehensive Discussion: The Complementary Roles of Layer Normalization and Self-Attention

- (1) Self-Attention as the Core Feature Extractor: Removing the SA mechanism caused a severe, across-the-board performance drop, particularly in the safety-critical metrics. The False Positive Rate (FPR) increased from 1.50% to 5.10%, and the False Negative Rate (FNR) rose from 1.85% to 7.60%. This identifies SA as the primary driver of the model’s accuracy. By dynamically focusing on fire-critical time steps, it lets the model extract highly discriminative features from complex time-series data; without SA, the model’s capacity to capture essential fire dynamics is critically impaired.

- (2) Layer Normalization as the Stability Enhancer: In contrast, removing LN led to a more moderate performance decline, with FPR and FNR increasing to 4.80% and 3.20%, respectively. This pattern indicates that LN’s primary function is not to enhance representational power directly but to improve stability and generalization. By stabilizing feature distributions within the deep network, it mitigates the covariate shift induced by varying environmental conditions. This makes learning more reliable and lets the model focus on relative feature patterns rather than absolute values.

- (3) Synergistic Interaction Analysis: Taken together, the two ablation studies point to a synergistic relationship: the benefit of LN depends on the high-quality time-series features extracted by SA. SA generates the discriminative feature representations, while LN stabilizes training through internal normalization, which in turn lets components such as SA operate more stably and efficiently. This division of labor enables the BiLSTM-LN-SA model to achieve both high sensitivity and strong robustness in complex, multi-scenario environments.
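The size of each ablation effect can be read off directly as a difference in percentage points. The short sketch below uses only the FPR and FNR values quoted above; the variable and function names are illustrative.

```python
# FPR and FNR (in %) as quoted in the ablation discussion.
full_model = {"FPR": 1.50, "FNR": 1.85}
without_sa = {"FPR": 5.10, "FNR": 7.60}
without_ln = {"FPR": 4.80, "FNR": 3.20}

def point_increase(ablated, baseline):
    """Increase of each rate in percentage points, rounded to two decimals."""
    return {k: round(ablated[k] - baseline[k], 2) for k in baseline}

sa_effect = point_increase(without_sa, full_model)
ln_effect = point_increase(without_ln, full_model)
print("Removing SA:", sa_effect)
print("Removing LN:", ln_effect)
```

Removing SA raises FPR by 3.60 points and FNR by 5.75 points, while removing LN raises FPR by 3.30 points and FNR by 1.35 points. The two ablations therefore differ mainly in missed detections (FNR), which is where the loss of SA is most costly.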

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Methods | Training Mean | Training Std | Test Mean | Test Std |
|---|---|---|---|---|
| BiLSTM-LN-SA | 98.50 | 0.31 | 98.38 | 0.38 |
| EIF-LSTM | 96.15 | 0.41 | 95.30 | 0.49 |
| rTPNN | 94.10 | 2.32 | 93.85 | 2.16 |
| MLP | 87.95 | 2.02 | 88.27 | 2.41 |
| NP | 80.05 | 1.21 | 80.12 | 1.26 |

| Sensor Type | Training Mean | Training Std | Test Mean | Test Std |
|---|---|---|---|---|
| Temperature | 50.62 | 2.26 | 50.52 | 2.29 |
| TVOC | 85.89 | 0.91 | 85.75 | 0.80 |
| Carbon dioxide | 79.30 | 2.32 | 79.25 | 2.28 |
| NC2.5 | 84.95 | 1.36 | 84.72 | 1.30 |

| Methods | TPR (%) | FNR (%) | TNR (%) | FPR (%) |
|---|---|---|---|---|
| BiLSTM-LN-SA | 98.15 | 1.85 | 98.50 | 1.50 |
| EIF-LSTM | 95.20 | 4.80 | 96.90 | 3.10 |
| rTPNN | 91.27 | 8.73 | 95.27 | 4.73 |
| MLP | 85.27 | 14.73 | 91.27 | 8.73 |
| NP | 75.05 | 25.95 | 81.25 | 18.75 |

| Sensor Type | TPR (%) | FNR (%) | TNR (%) | FPR (%) |
|---|---|---|---|---|
| Temperature | 28.30 | 71.70 | 98.55 | 1.45 |
| TVOC | 85.40 | 14.60 | 99.10 | 0.90 |
| Carbon dioxide | 52.30 | 47.70 | 96.80 | 3.20 |
| NC2.5 | 82.90 | 17.10 | 98.71 | 1.29 |
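The four rates in these confusion-matrix tables follow from raw counts of true/false positives and negatives. The sketch below shows the standard definitions, together with the F1-score; the counts are hypothetical and chosen only to make the arithmetic easy to follow, and the function names are illustrative.

```python
def confusion_rates(tp, fn, tn, fp):
    """Return TPR, FNR, TNR, FPR as percentages from raw confusion-matrix counts."""
    positives = tp + fn   # all actual fire samples
    negatives = tn + fp   # all actual non-fire samples
    return {
        "TPR": 100.0 * tp / positives,
        "FNR": 100.0 * fn / positives,
        "TNR": 100.0 * tn / negatives,
        "FPR": 100.0 * fp / negatives,
    }

def f1_score(tp, fn, fp):
    """F1 = 2TP / (2TP + FP + FN), the harmonic mean of precision and recall."""
    return 2 * tp / (2 * tp + fp + fn)

# Hypothetical counts: 100 fire samples and 100 non-fire samples.
rates = confusion_rates(tp=90, fn=10, tn=95, fp=5)
print(rates)
print(round(f1_score(tp=90, fn=10, fp=5), 4))
```

By construction, TPR and FNR sum to 100%, as do TNR and FPR, which gives a quick consistency check for any row of such a table.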

| Methods | TPR (%) | FNR (%) | TNR (%) | FPR (%) |
|---|---|---|---|---|
| BiLSTM-LN-SA | 98.15 | 1.85 | 98.50 | 1.50 |
| BiLSTM-SA | 95.80 | 3.20 | 95.20 | 4.80 |

| Methods | TPR (%) | FNR (%) | TNR (%) | FPR (%) |
|---|---|---|---|---|
| BiLSTM-LN-SA (with SA) | 98.15 | 1.85 | 98.50 | 1.50 |
| BiLSTM-LN (without SA) | 92.40 | 7.60 | 94.90 | 5.10 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, Z.; Si, Y.; Yang, L.; Xu, N.; Zhang, X.; Wang, M.; Sun, X. BiLSTM-LN-SA: A Novel Integrated Model with Self-Attention for Multi-Sensor Fire Detection. Sensors 2025, 25, 6451. https://doi.org/10.3390/s25206451
