Real-Time Parking Space Detection Based on Deep Learning and Panoramic Images

Abstract

1. Introduction

- To address the incomplete coverage of complex conditions (fog, snow, sandstorms, and rain) in existing datasets, we construct a diverse parking space dataset, PSEX, using image depth information and a GAN.

- To enhance the contrast of parking space images in complex environments, we integrate a Style Attention Module (SANet) into the GAN framework.

- We propose an improved end-to-end PP-Yoloe model for parking space detection in complex scenes, which addresses the limited accuracy of existing two-stage approaches. Compared with the baseline PP-Yoloe, the proposed method improves both detection speed and accuracy.
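The first contribution relies on image depth information to synthesize adverse weather. One common way depth enters such a pipeline is the atmospheric scattering model, I = J·t + A·(1 − t) with transmission t = exp(−β·d). The sketch below illustrates that model for a single pixel. It is an assumption made for illustration only: the text here does not state which formulation the authors use, and the function name `add_fog` and the default values for `beta` and `airlight` are hypothetical.

```python
import math


def add_fog(pixel_rgb, depth_m, beta=0.05, airlight=(200, 200, 200)):
    """Blend one RGB pixel toward the airlight colour according to scene depth.

    Assumed model (not confirmed by the source): I = J * t + A * (1 - t),
    where t = exp(-beta * depth) is the transmission.
    """
    t = math.exp(-beta * depth_m)
    return tuple(round(c * t + a * (1.0 - t)) for c, a in zip(pixel_rgb, airlight))


# A nearby pixel keeps most of its colour; a distant one fades toward the airlight.
near = add_fog((100, 50, 0), depth_m=1.0)
far = add_fog((100, 50, 0), depth_m=60.0)
print(near, far)
```

Larger `beta` values correspond to denser fog, so sweeping `beta` over a range is one way to produce several severity levels from the same depth map.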

1.1. Related Works

1.1.1. Data Processing

1.1.2. Detection Algorithm

2. Materials and Methods

2.1. Data Augmentation

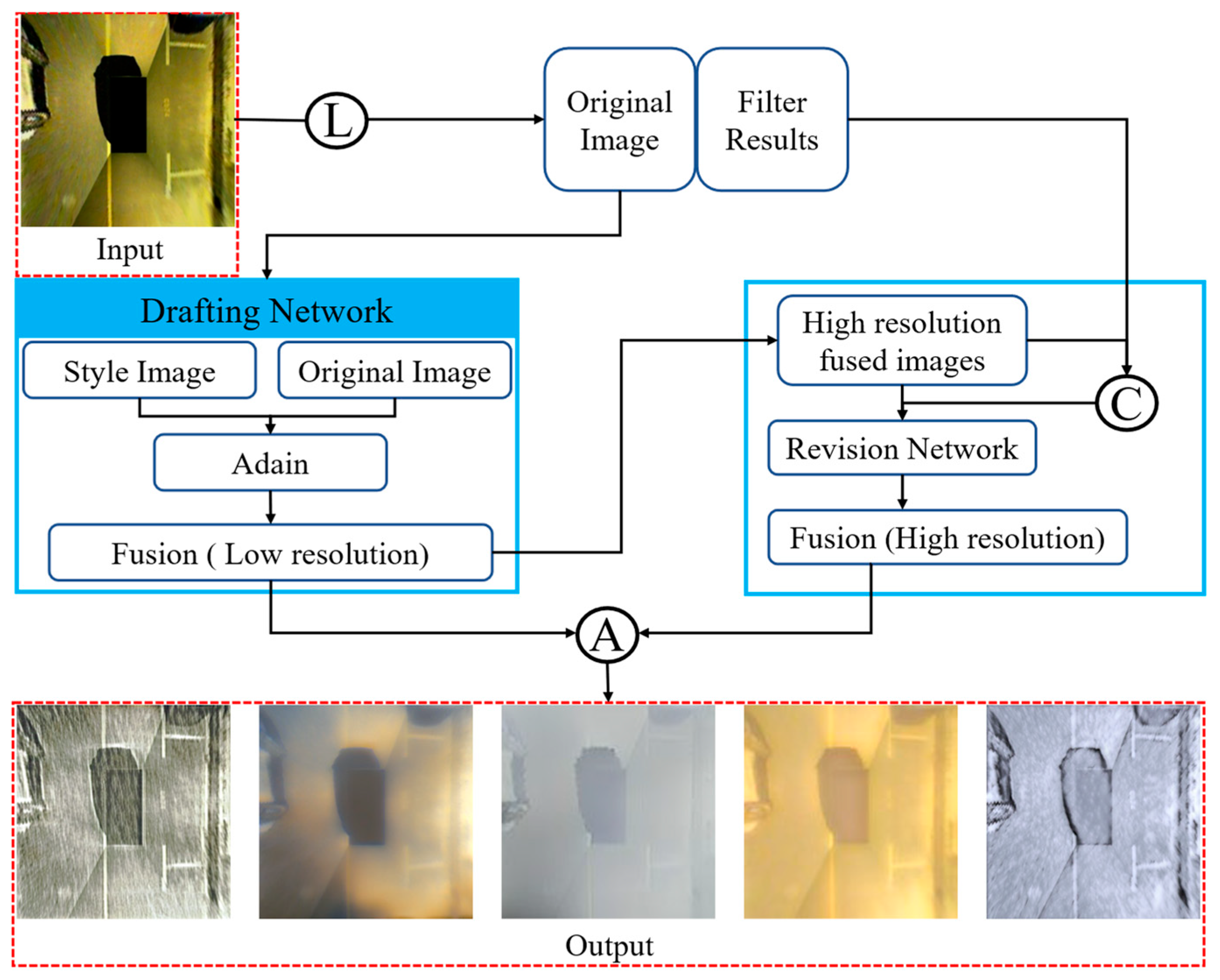

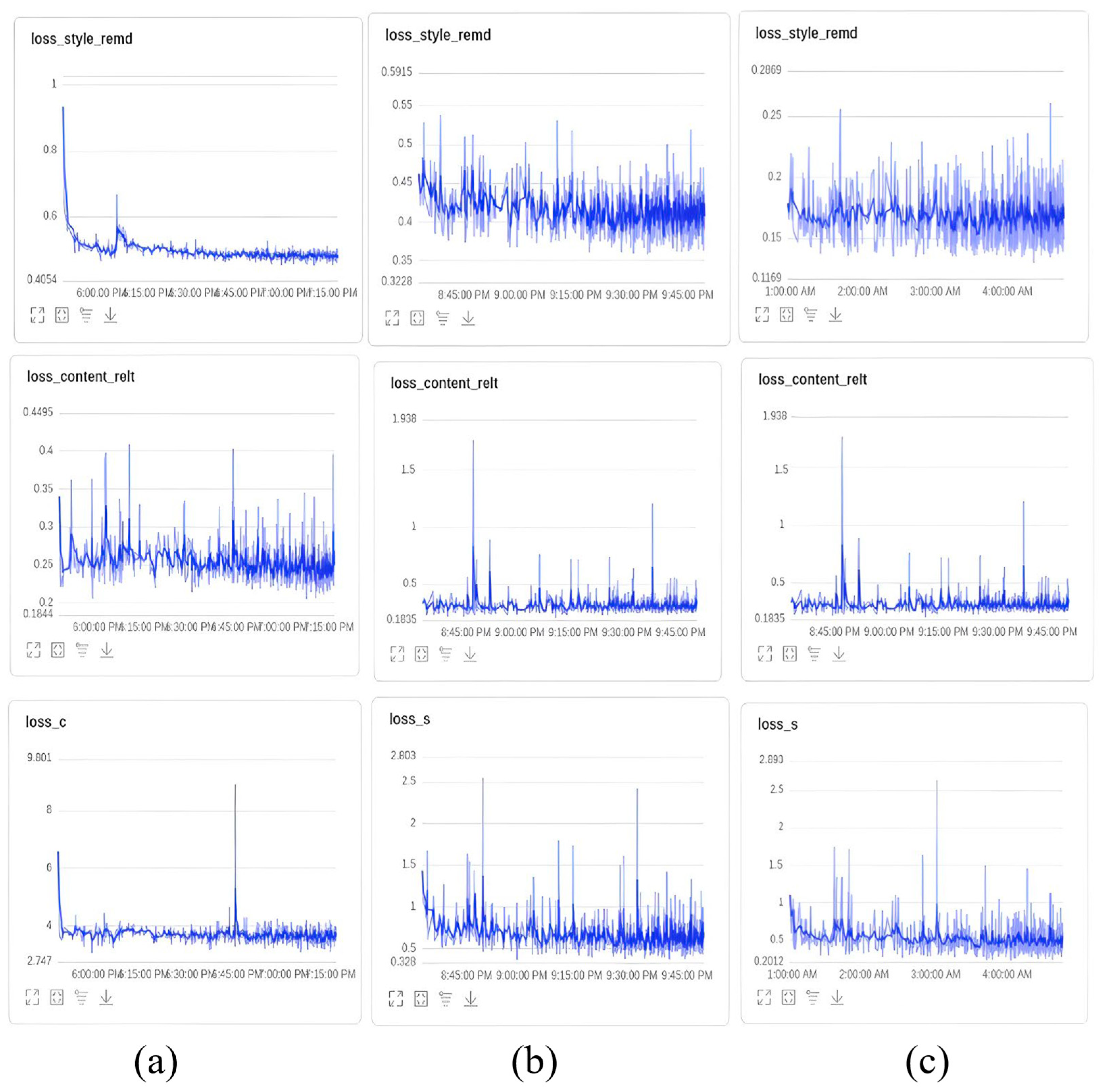

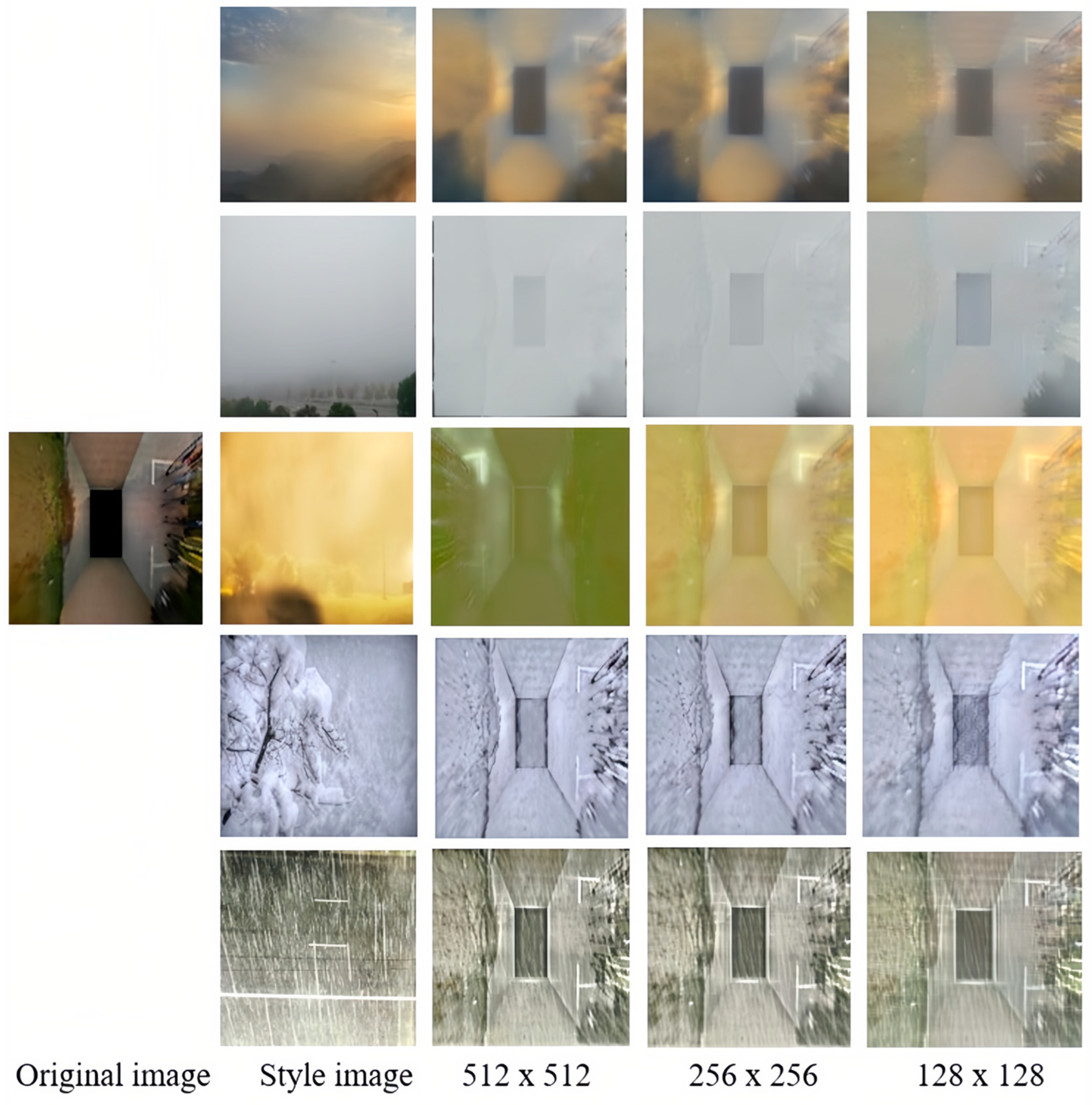

2.2. Data Augmentation Algorithm for Style Transfer

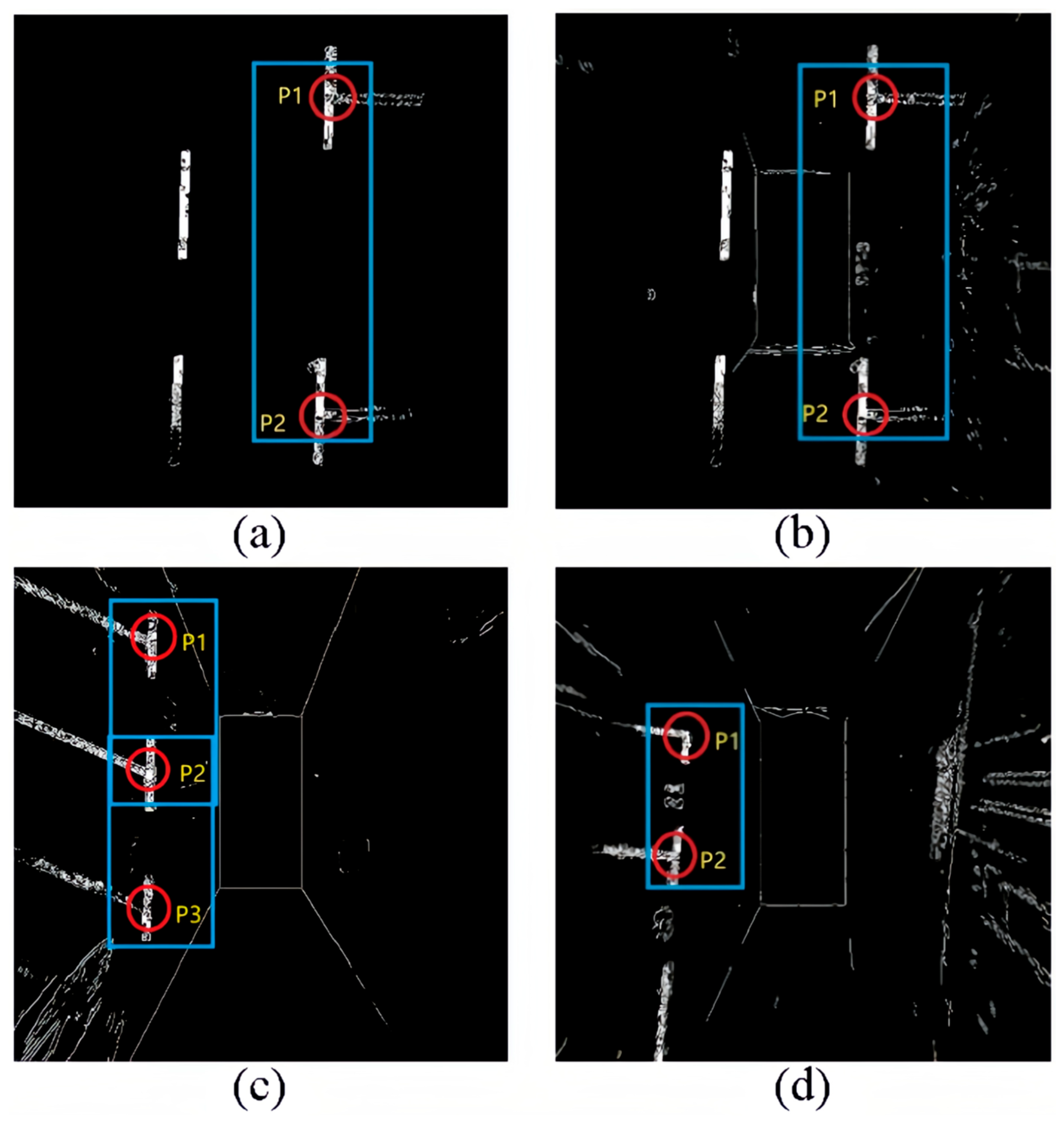

2.3. Dataset Validation

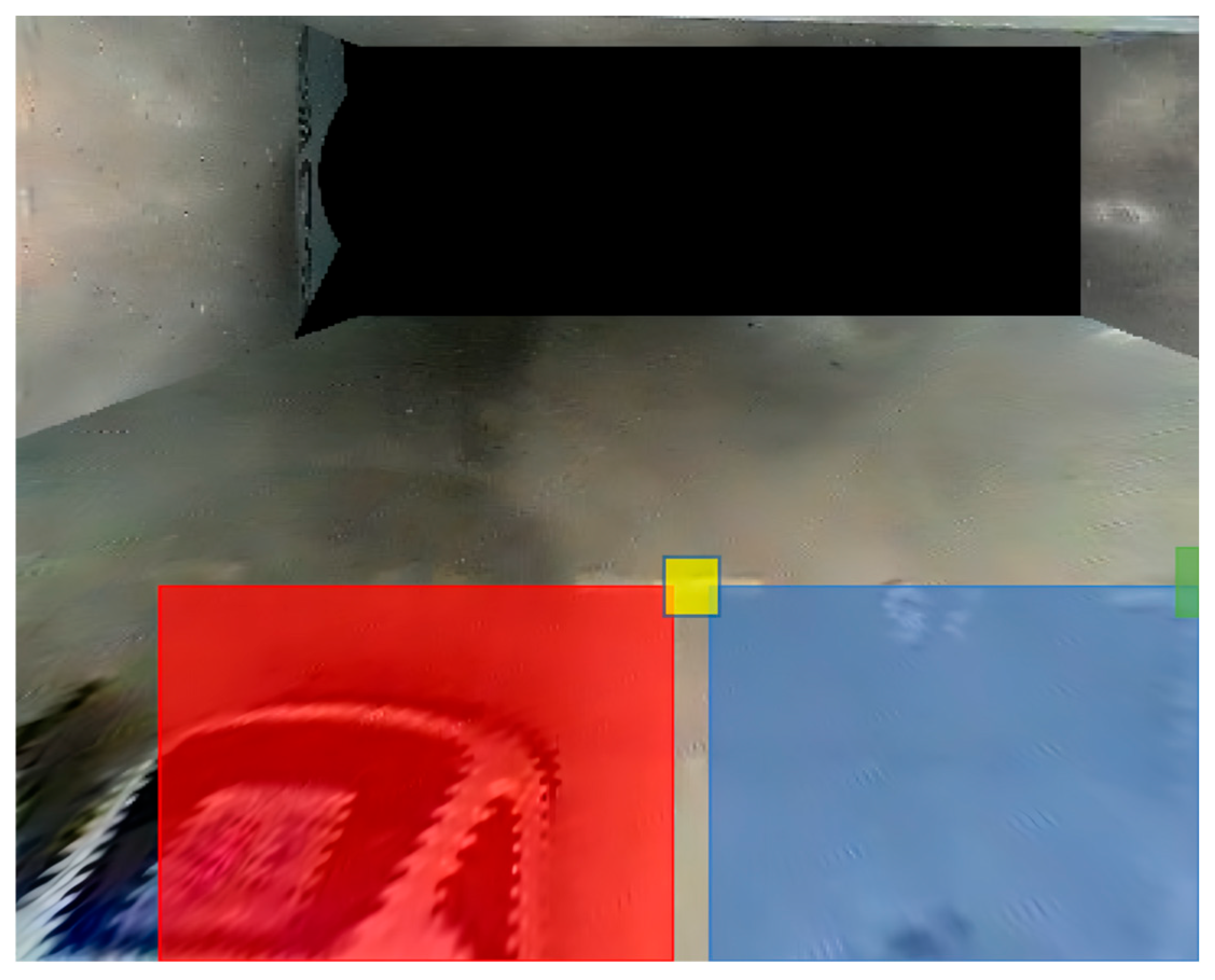

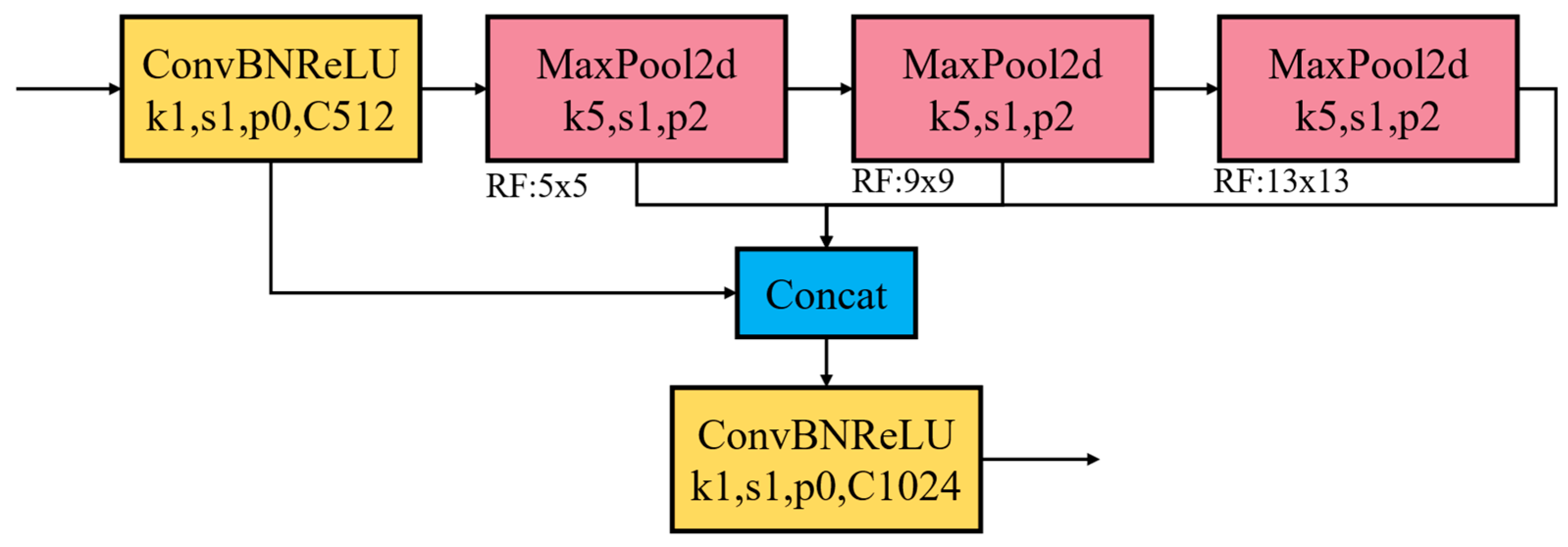

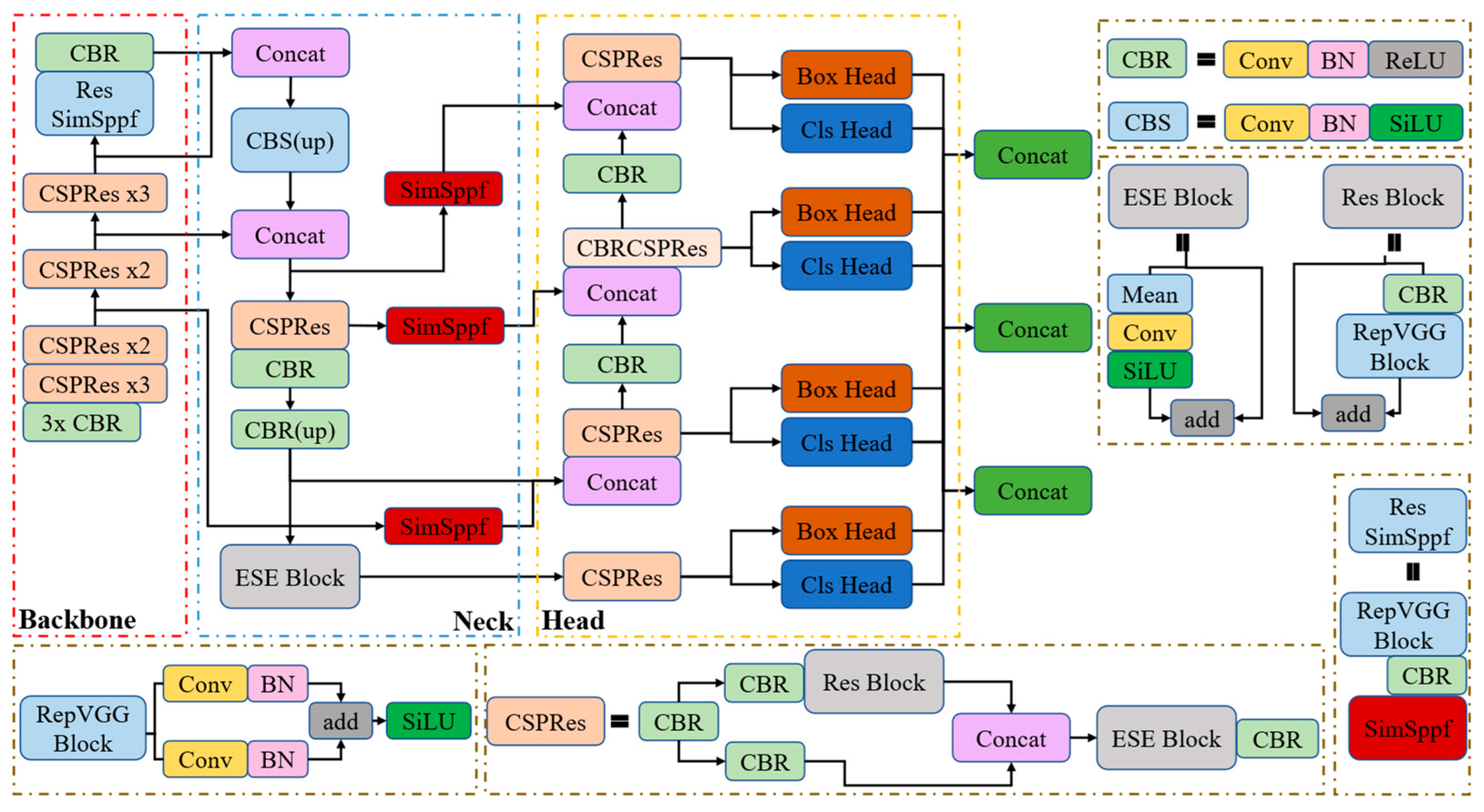

2.4. Parking Space Detection Algorithm

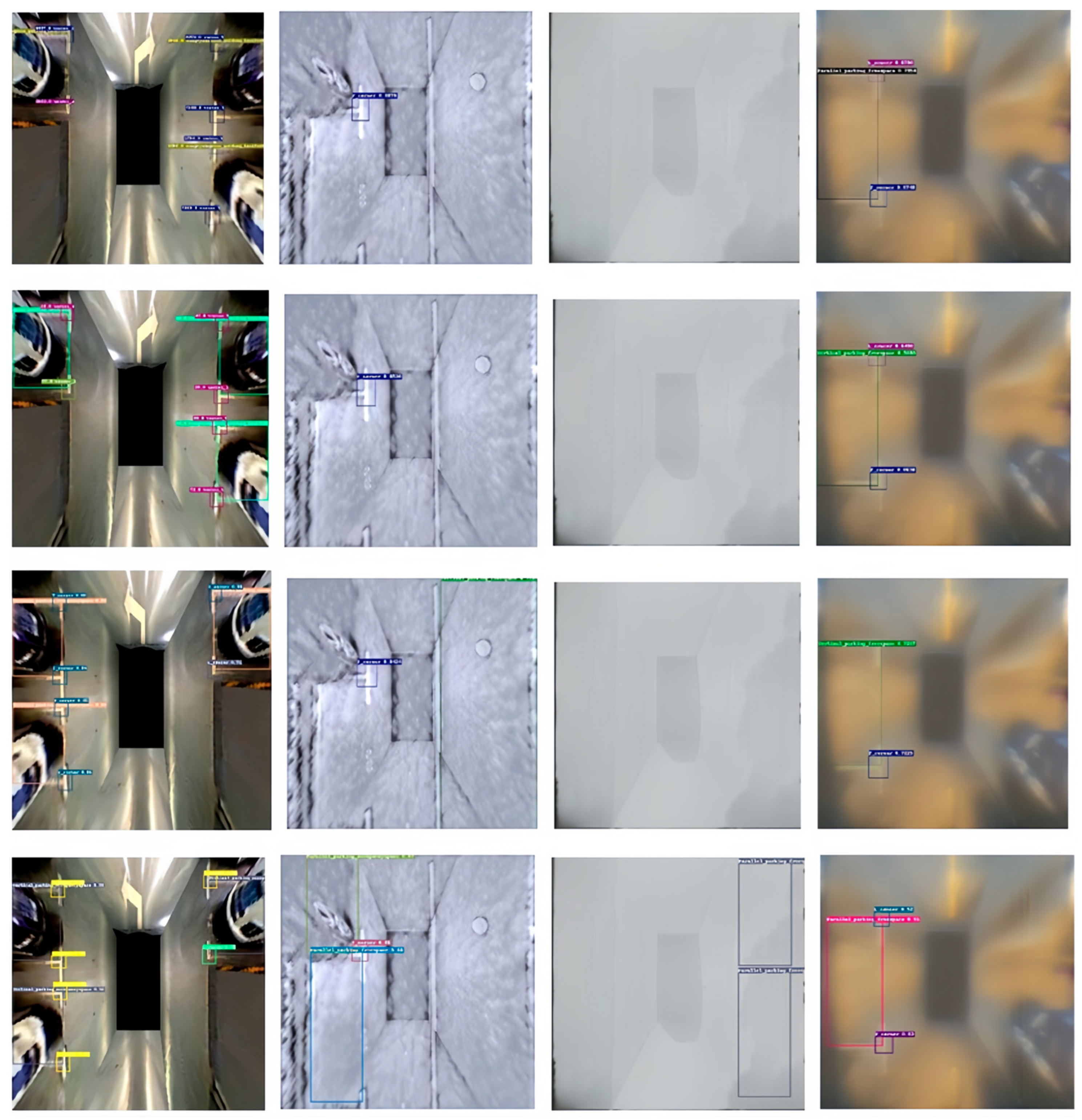

3. Results and Discussion

3.1. Data Augmentation Section

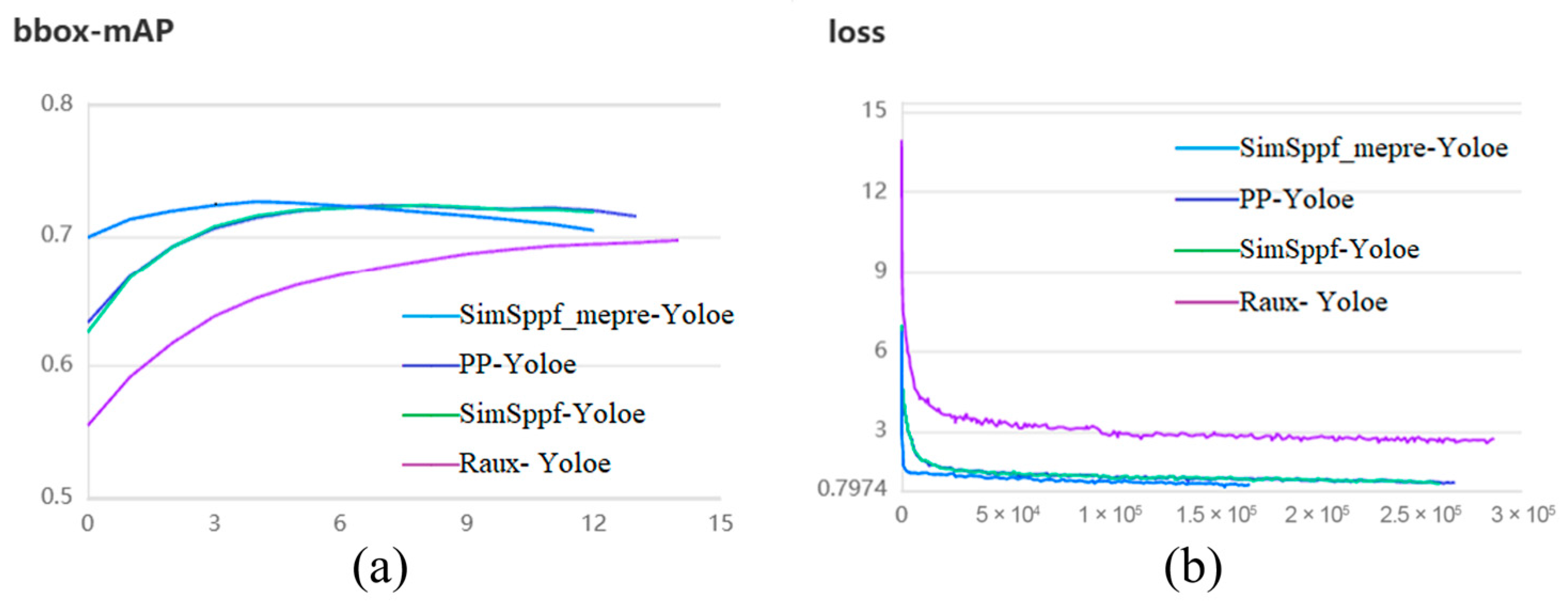

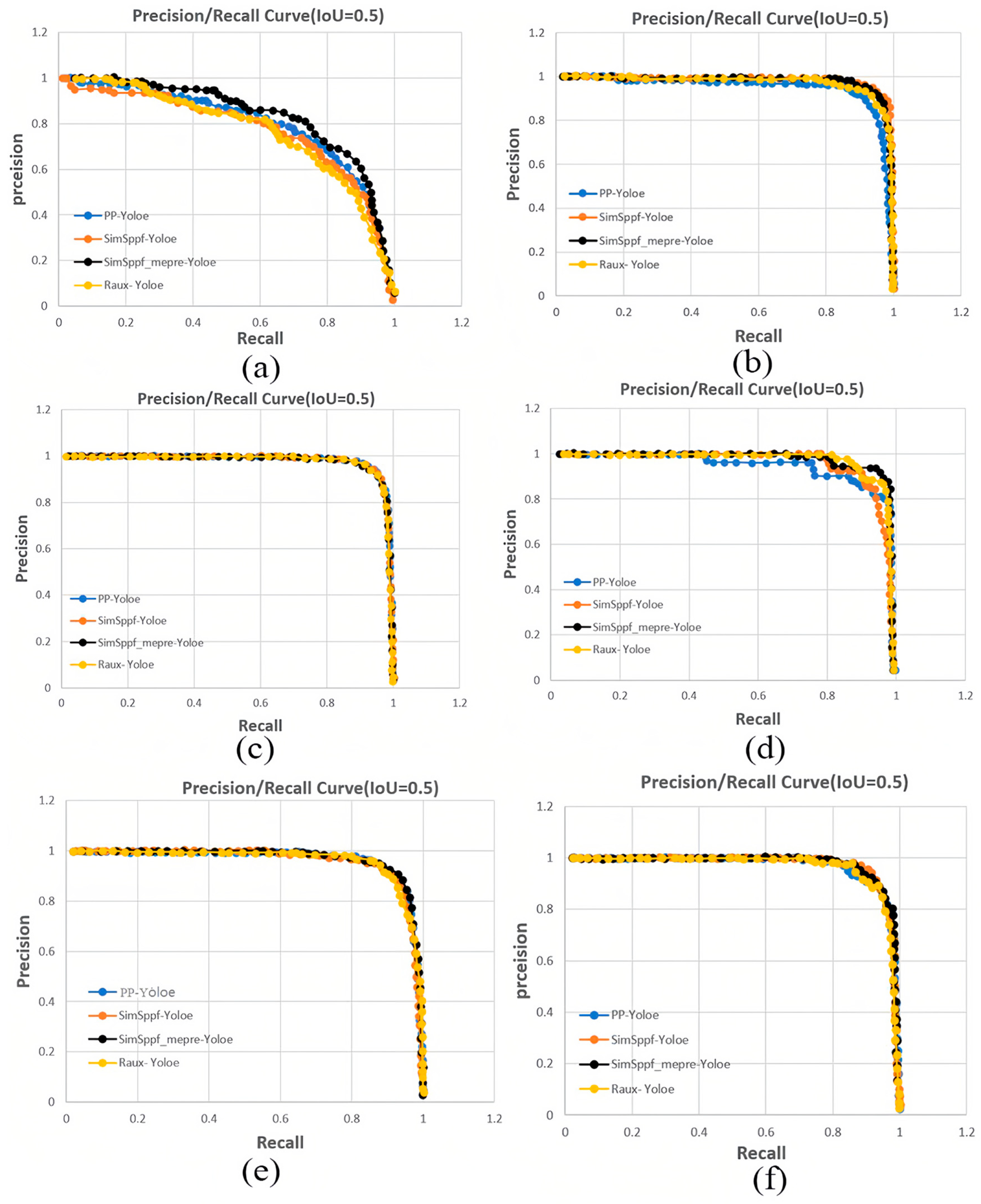

3.2. Parking Space Recognition and Detection Algorithms

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GAN | Generative Adversarial Networks |
| CNN | Convolutional Neural Network |
| AVM | Around View Monitor |
| CNNs | Convolutional Neural Networks |
| PSV | Panoramic Surround View |
| DCNN | Deep Convolutional Neural Network |
| AP | Average Precision |
| SPP | Spatial Pyramid Pooling |
| SPPF | Spatial Pyramid Pooling-Fast |
| SimSPPF | Simplified Spatial Pyramid Pooling-Fast |
| CBS | Conv-BN-SiLU |
| CBR | Conv-BN-ReLU |
| PR | Precision-recall |
| mAP | Mean Average Precision |
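The abbreviations SPP, SPPF, and SimSPPF refer to closely related pooling blocks. As commonly described, SPP applies stride-1 max pooling with kernel sizes 5, 9, and 13 in parallel, whereas SPPF chains three 5×5 poolings to reach the same receptive fields at lower cost; SimSPPF, as introduced with YOLOv6, keeps the chained pooling and uses Conv-BN-ReLU (CBR) in place of Conv-BN-SiLU (CBS). The plain-Python sketch below checks only the pooling equivalence on a small grid. It omits the convolutions and the channel concatenation, and the helper names are hypothetical rather than the authors' implementation.

```python
import random


def max_pool_same(grid, k):
    """Stride-1 max pooling that keeps the grid size.

    Cells outside the grid are ignored, which matches padding with -inf.
    """
    r = k // 2
    h, w = len(grid), len(grid[0])
    return [
        [
            max(
                grid[y][x]
                for y in range(max(0, i - r), min(h, i + r + 1))
                for x in range(max(0, j - r), min(w, j + r + 1))
            )
            for j in range(w)
        ]
        for i in range(h)
    ]


def spp_branches(grid, kernels=(5, 9, 13)):
    """Parallel pooling branches (SPP style): one pooling per kernel size."""
    return [grid] + [max_pool_same(grid, k) for k in kernels]


def sppf_branches(grid, k=5):
    """Chained pooling branches (SPPF / SimSPPF style): reuse the previous output."""
    p1 = max_pool_same(grid, k)
    p2 = max_pool_same(p1, k)
    p3 = max_pool_same(p2, k)
    return [grid, p1, p2, p3]


rng = random.Random(0)
grid = [[rng.randint(0, 99) for _ in range(16)] for _ in range(16)]
print(spp_branches(grid) == sppf_branches(grid))
```

The chained form computes three 5×5 poolings instead of one each of 5×5, 9×9, and 13×13, which is where the speed advantage of the "Fast" variants comes from.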


| Type | Original (count) | Style augmentation (count) |
|---|---|---|
| Indoor-parking-lot | 226 | 226 |
| Outdoor-normal-daylight | 546 | 5552 |
| Outdoor-rainy | 244 | 2464 |
| Outdoor-shadow | 1127 | 11,905 |
| Outdoor-slanted | 48 | 520 |
| Outdoor-street-light | 1477 | 1505 |
| Train | 9827 | 22,637 |

| Algorithm Type | Data Type | AP | Recall |
|---|---|---|---|
| YOLOv8 | No augmentation | 0.78 | 0.79 |
| YOLOv8 | Traditional data augmentation | 0.85 | 0.86 |
| YOLOv8 | GAN data augmentation | 0.90 | 0.89 |
| YOLOx | No augmentation | 0.71 | 0.73 |
| YOLOx | Traditional data augmentation | 0.80 | 0.82 |
| YOLOx | GAN data augmentation | 0.82 | 0.83 |
| Fast R-CNN | No augmentation | 0.69 | 0.66 |
| Fast R-CNN | Traditional data augmentation | 0.72 | 0.70 |
| Fast R-CNN | GAN data augmentation | 0.77 | 0.74 |

| Parking Information | mAP | PP-Yoloe | SimSppf-Yoloe | SimSppf_Mepre-Yoloe | Deviation (%) |
|---|---|---|---|---|---|
| Parallel_parking_freespace | mAP50 | 0.860 | 0.862 | 0.881 | +2.44% |
| Parallel_parking_freespace | mAP50:95 | 0.752 | 0.749 | 0.768 | +2.13% |
| Parallel_parking_occupancyspace | mAP50 | 0.732 | 0.744 | 0.793 | +8.33% |
| Parallel_parking_occupancyspace | mAP50:95 | 0.703 | 0.712 | 0.743 | +5.69% |
| Vertical_parking_freespace | mAP50 | 0.787 | 0.789 | 0.801 | +1.78% |
| Vertical_parking_freespace | mAP50:95 | 0.720 | 0.732 | 0.729 | +1.25% |
| Vertical_parking_occupancyspace | mAP50 | 0.766 | 0.776 | 0.789 | +3.00% |
| Vertical_parking_occupancyspace | mAP50:95 | 0.691 | 0.697 | 0.711 | +2.89% |
| T_corner | mAP50 | 0.687 | 0.701 | 0.750 | +9.17% |
| T_corner | mAP50:95 | 0.633 | 0.648 | 0.674 | +6.48% |
| L_corner | mAP50 | 0.457 | 0.467 | 0.545 | +19.26% |
| L_corner | mAP50:95 | 0.442 | 0.483 | 0.493 | +11.54% |
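The values in the last column of the table above are consistent with the relative change of SimSppf_Mepre-Yoloe with respect to the PP-Yoloe baseline, that is, (improved − baseline) / baseline × 100. The minimal Python sketch below reproduces a few entries under that reading; the function name `relative_change_pct` is hypothetical and not taken from the paper.

```python
def relative_change_pct(baseline, improved):
    """Relative change of `improved` over `baseline`, in percent."""
    return (improved - baseline) / baseline * 100.0


# (class, metric) -> (PP-Yoloe, SimSppf_Mepre-Yoloe), values copied from the table
rows = {
    ("Parallel_parking_freespace", "mAP50"): (0.860, 0.881),
    ("Vertical_parking_freespace", "mAP50:95"): (0.720, 0.729),
    ("T_corner", "mAP50"): (0.687, 0.750),
    ("L_corner", "mAP50"): (0.457, 0.545),
}

for (name, metric), (base, final) in rows.items():
    print(f"{name} {metric}: {relative_change_pct(base, final):+.2f}%")
```

Because the change is relative, classes with a low baseline such as L_corner show the largest percentages even when the absolute mAP gain is under 0.1.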

| Model | Metric | Ubuntu (PC), No_trt | Ubuntu (PC), Trt_16 | Ubuntu (PC), Trt_32 | Jetson AGX, No_trt | Jetson AGX, Trt_16 | Jetson AGX, Trt_32 | Jetson Nano, No_trt | Jetson Nano, Trt_16 | Jetson Nano, Trt_32 |
|---|---|---|---|---|---|---|---|---|---|---|
| PP-Yoloe | Latency (ms) | 35.2 | 11.4 | 28.8 | 143.4 | 30.0 | 80.0 | 845.0 | 342.7 | - |
| PP-Yoloe | FPS | 28.4 | 87.7 | 34.7 | 7.0 | 33.3 | 12.5 | 1.2 | 2.9 | - |
| SimSppf-Yoloe | Latency (ms) | 33.1 | 10.0 | 27.5 | 142.7 | 30.7 | 80.9 | 825.0 | 337.8 | - |
| SimSppf-Yoloe | FPS | 30.2 | 100.0 | 36.4 | 7.0 | 32.6 | 12.4 | 1.2 | 3.0 | - |
| SimSppf_Mepre-Yoloe | Latency (ms) | 34.6 | 11.3 | 27.0 | 141.6 | 29.7 | 79.5 | 828.8 | 337.9 | - |
| SimSppf_Mepre-Yoloe | FPS | 28.9 | 88.5 | 37.0 | 7.1 | 33.7 | 12.6 | 1.2 | 3.0 | - |
| Raux-Yoloe | Latency (ms) | 6.7 | 3.3 | 5.2 | 83.9 | 9.5 | 15.3 | 350.6 | 66.4 | - |
| Raux-Yoloe | FPS | 149.3 | 303.0 | 192.3 | 11.9 | 105.3 | 65.4 | 2.9 | 15.1 | - |
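The FPS rows in the table above agree with the latency rows under the usual conversion FPS = 1000 / latency (ms), which assumes one frame is processed at a time. The short Python sketch below applies that conversion to a few entries and derives a speed-up ratio; the function names are hypothetical, and the single-frame assumption is not stated in the text here.

```python
def fps_from_latency(latency_ms):
    """Frames per second for a given per-frame latency in milliseconds."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


def speedup(baseline_ms, candidate_ms):
    """How many times faster the candidate is than the baseline."""
    return baseline_ms / candidate_ms


# (model, platform, mode) -> latency in ms, values copied from the table
latency_ms = {
    ("PP-Yoloe", "Ubuntu (PC)", "No_trt"): 35.2,
    ("Raux-Yoloe", "Ubuntu (PC)", "No_trt"): 6.7,
    ("PP-Yoloe", "Jetson Nano", "Trt_16"): 342.7,
    ("Raux-Yoloe", "Jetson Nano", "Trt_16"): 66.4,
}

for key, ms in latency_ms.items():
    print(key, f"{fps_from_latency(ms):.1f} FPS")

pc_gain = speedup(
    latency_ms[("PP-Yoloe", "Ubuntu (PC)", "No_trt")],
    latency_ms[("Raux-Yoloe", "Ubuntu (PC)", "No_trt")],
)
print(f"Raux-Yoloe vs PP-Yoloe on PC without TensorRT: {pc_gain:.2f}x")
```

Comparing latencies rather than rounded FPS values avoids compounding the rounding already present in the FPS rows.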


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wei, W.; Chen, H.; Gong, J.; Che, K.; Ren, W.; Zhang, B. Real-Time Parking Space Detection Based on Deep Learning and Panoramic Images. Sensors 2025, 25, 6449. https://doi.org/10.3390/s25206449
