An AI-Based Integrated Multi-Sensor System with Edge Computing for the Adaptive Management of Human–Wildlife Conflict

Abstract

1. Introduction

1.1. The Global and European Context of Human–Wildlife Conflict

1.2. Podkarpackie Voivodeship as a European Conflict Hotspot

1.3. Review of Existing Non-Lethal Methods and Their Limitations

1.4. Aim and Scope of the Article

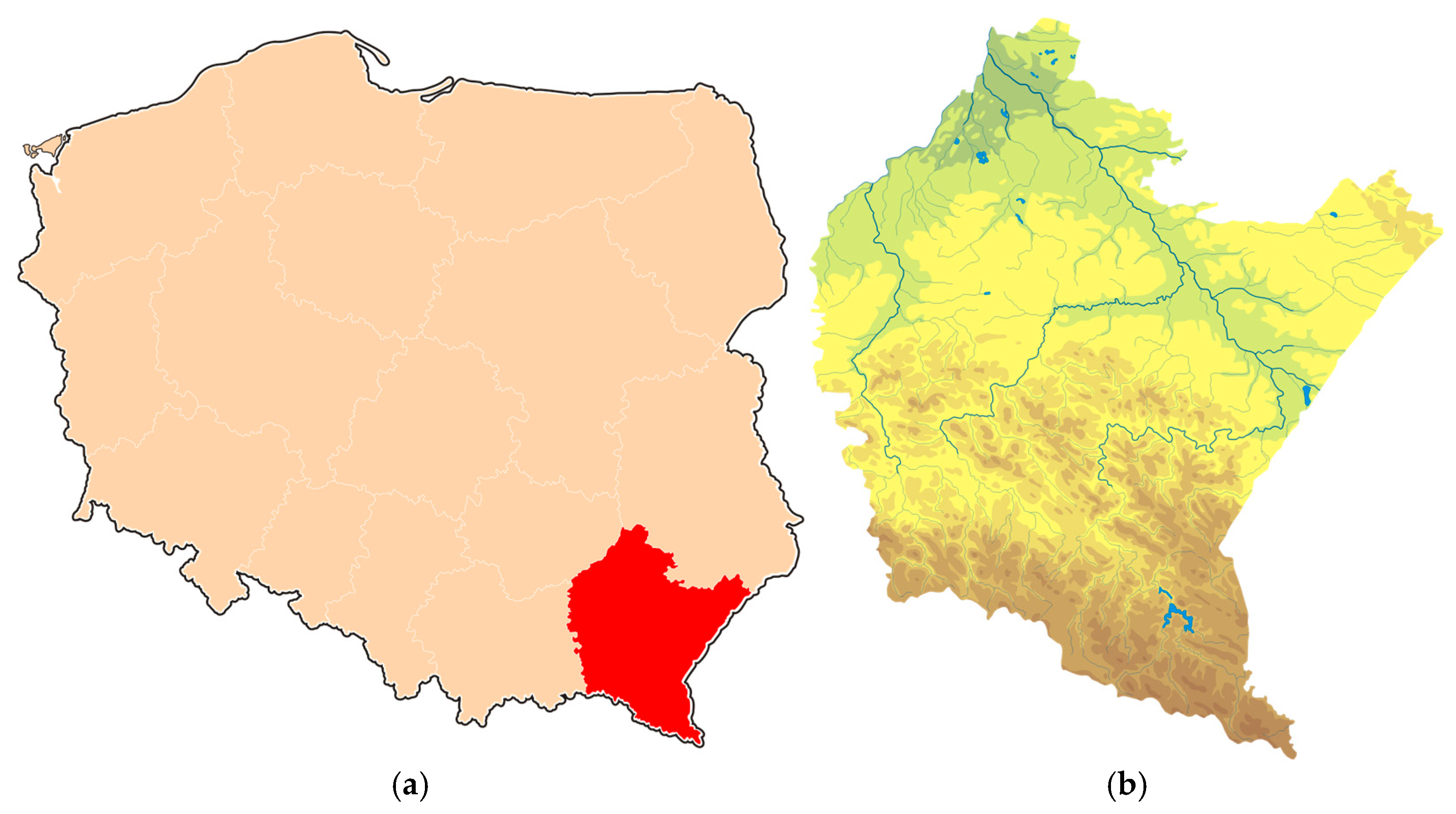

2. Case Study: Podkarpackie Voivodeship

2.1. Introduction to the Study Area

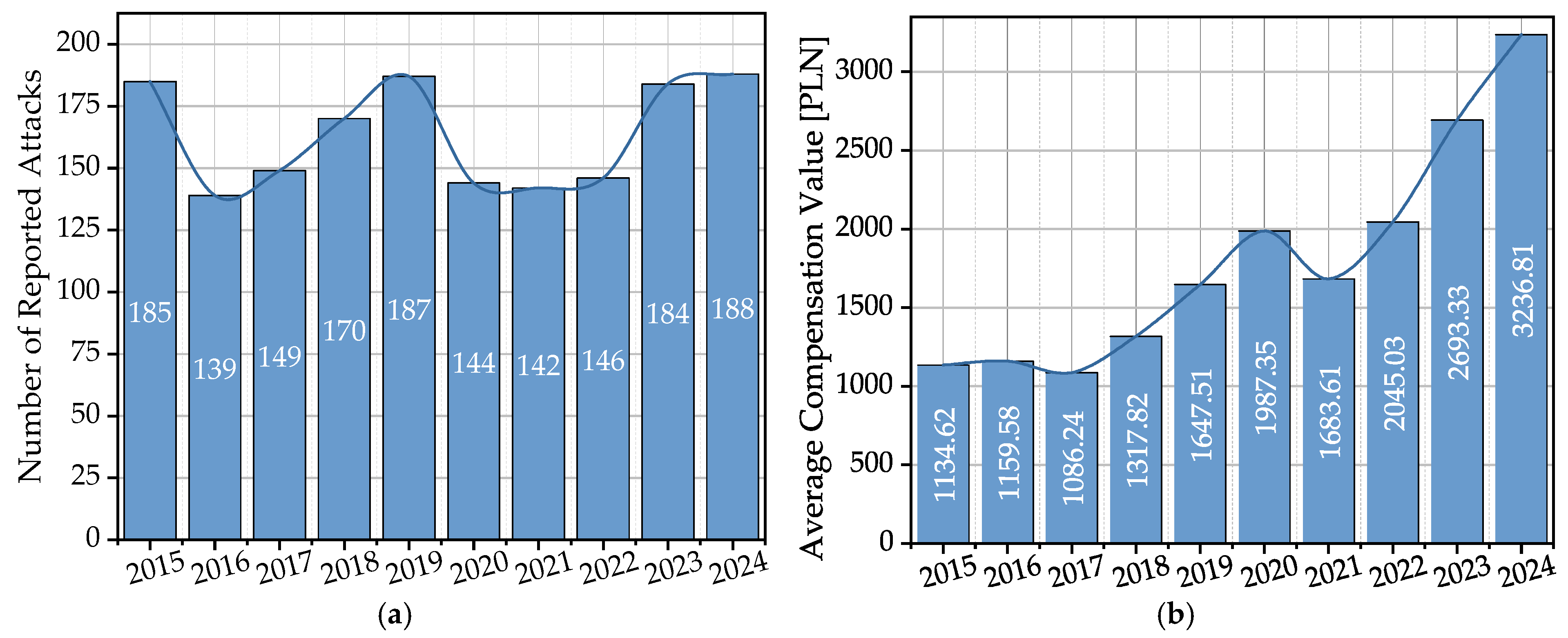

2.2. Scale and Temporal Dynamics of the Conflict

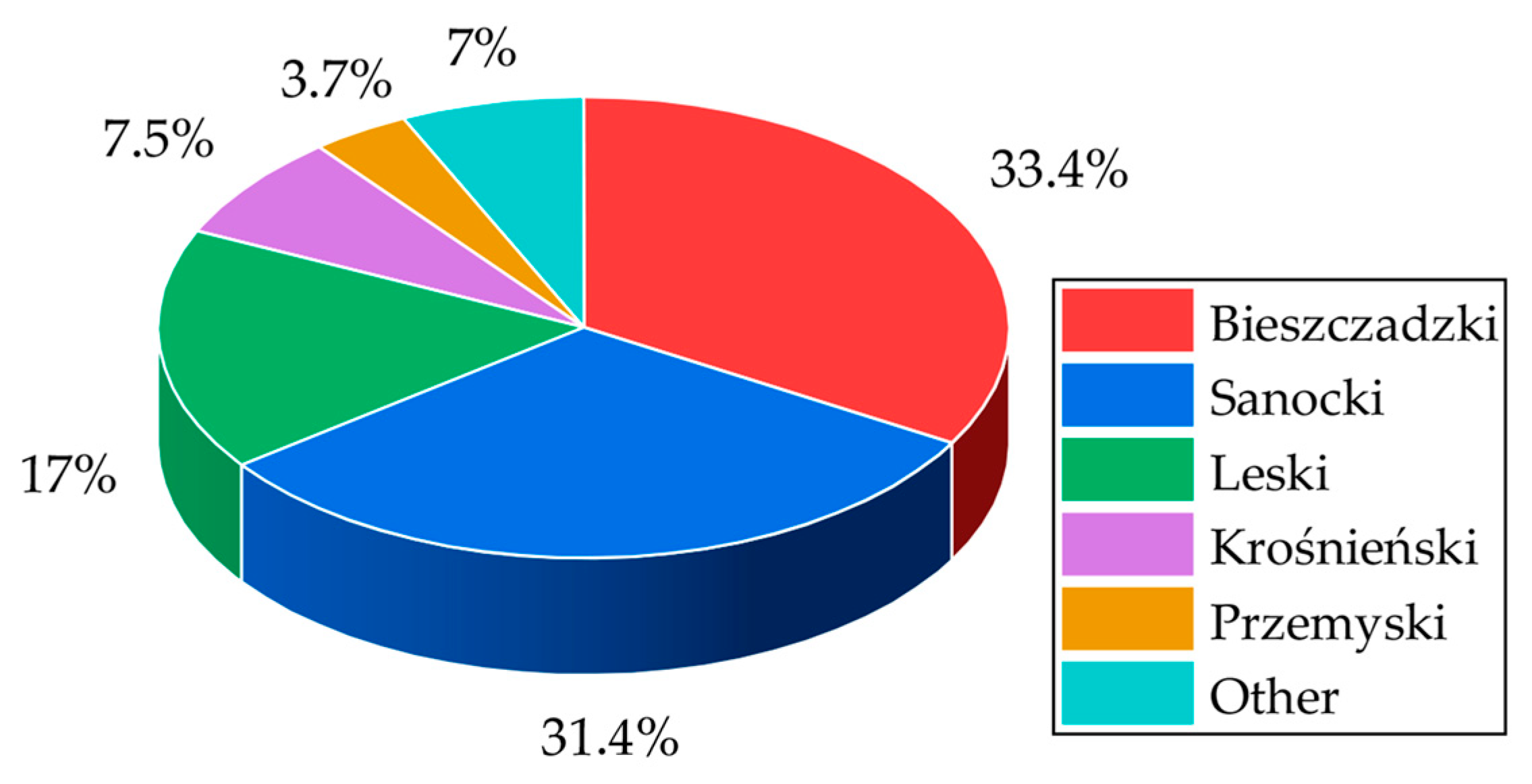

2.3. Spatial Concentration of the Conflict

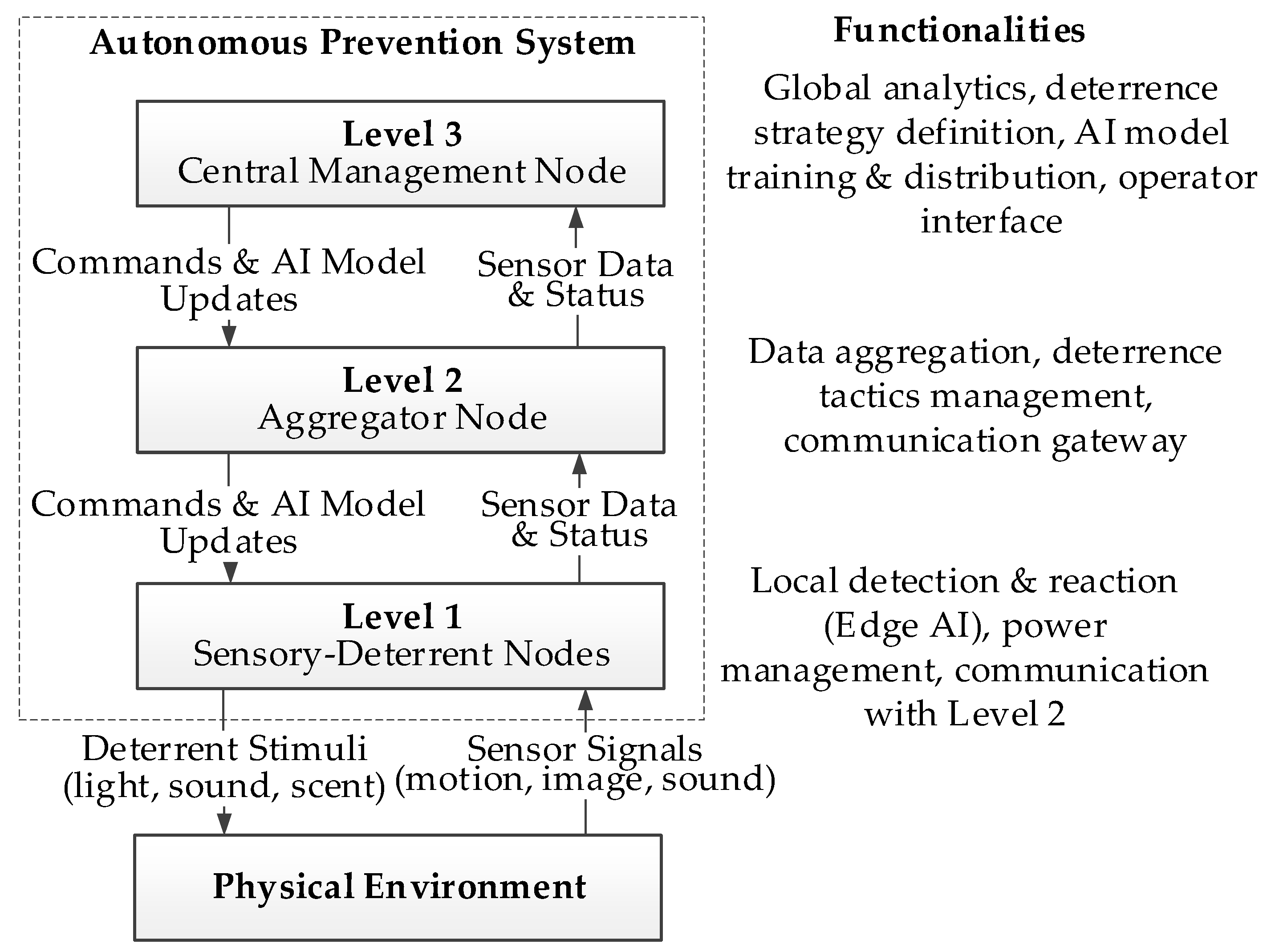

3. System Concept and Architecture

3.1. Rationale for System Architecture

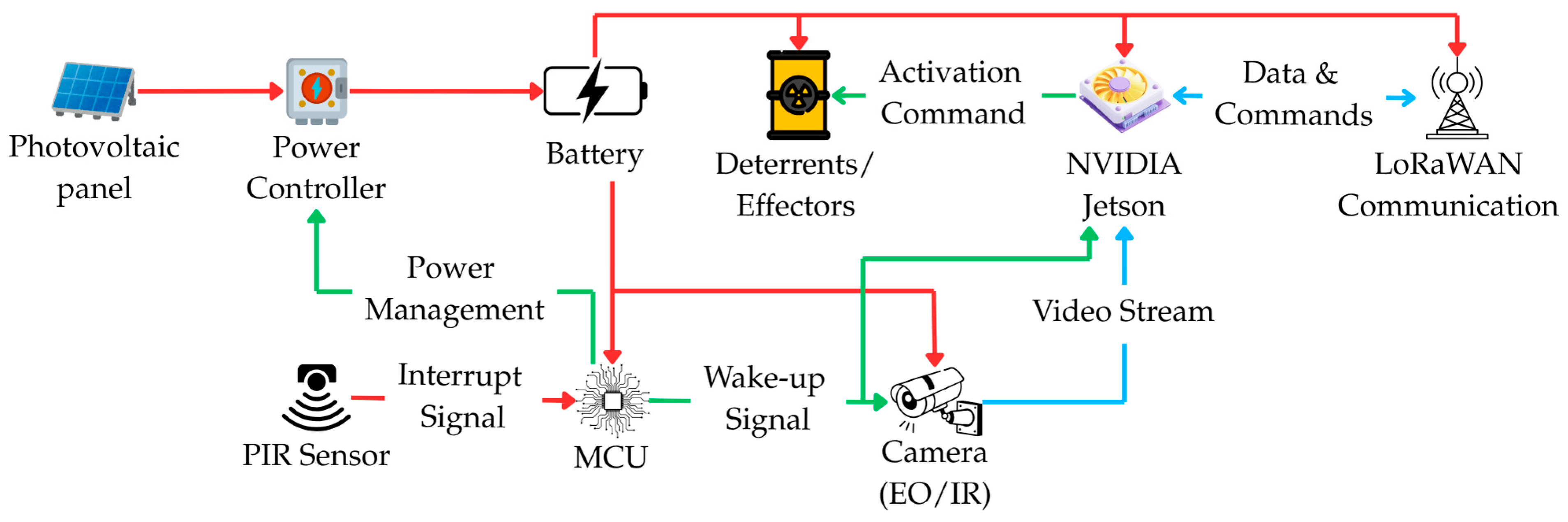

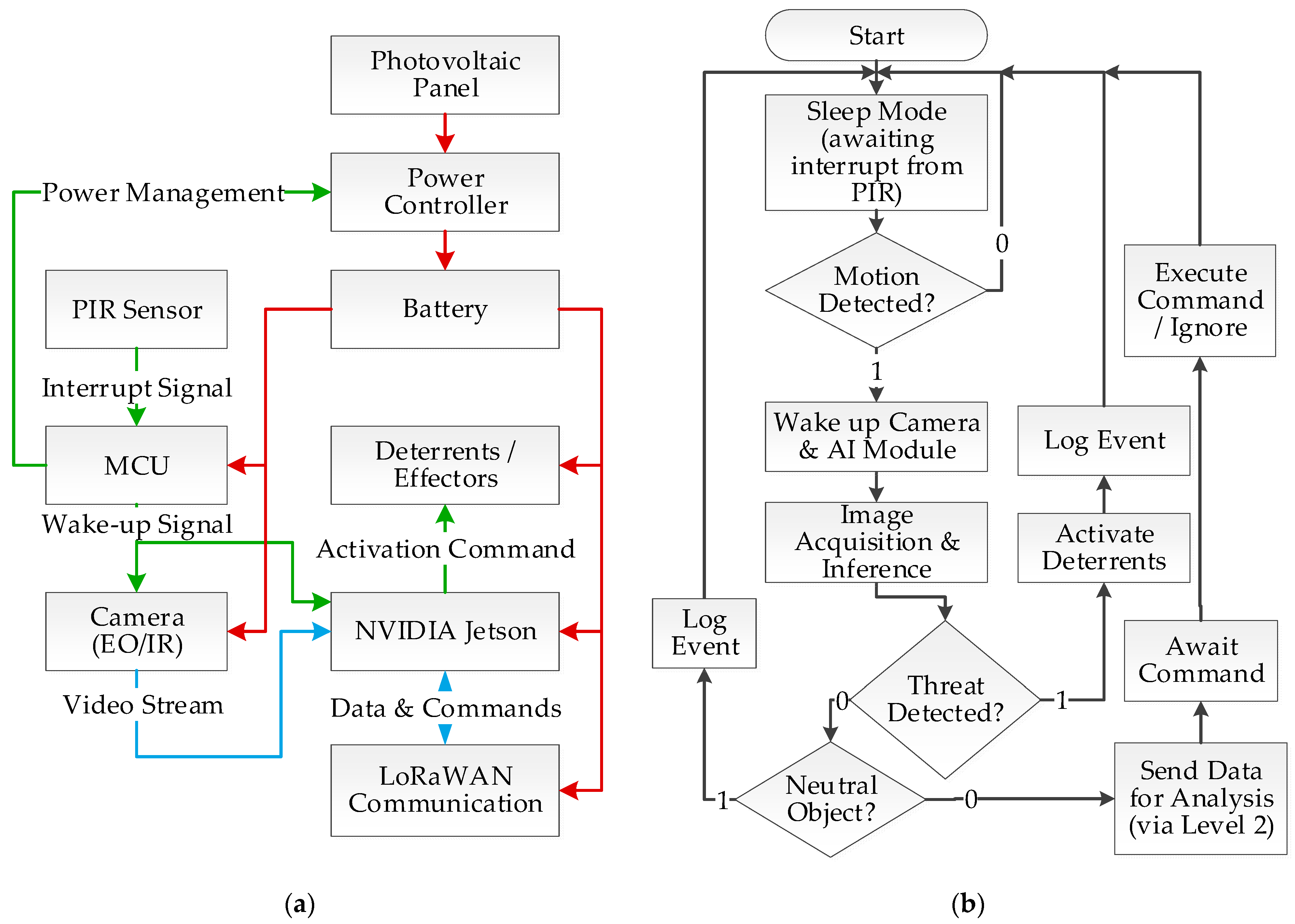

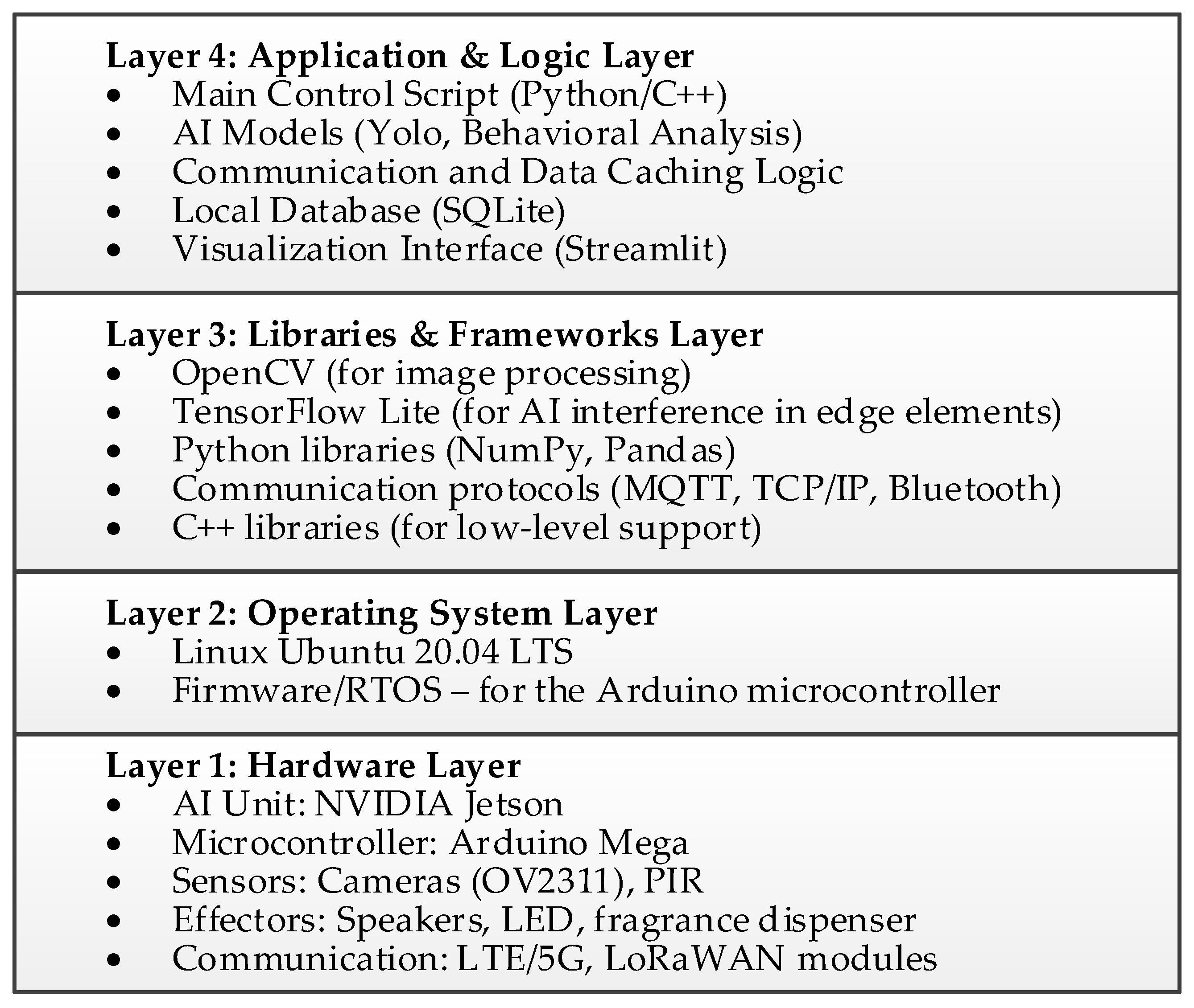

3.2. Architecture of the Sensory-Deterrent Node

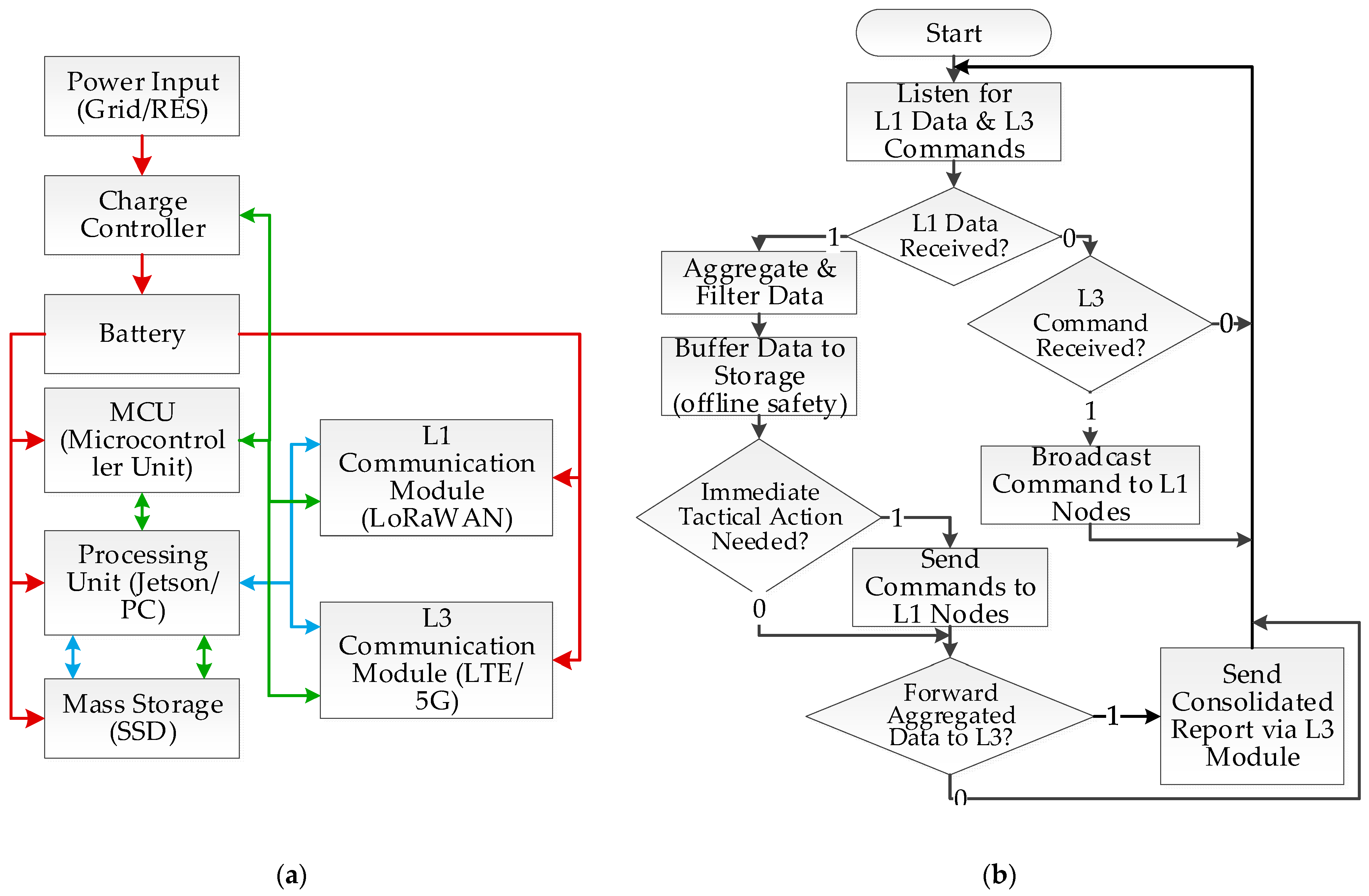

3.3. Architecture of the Aggregator Node

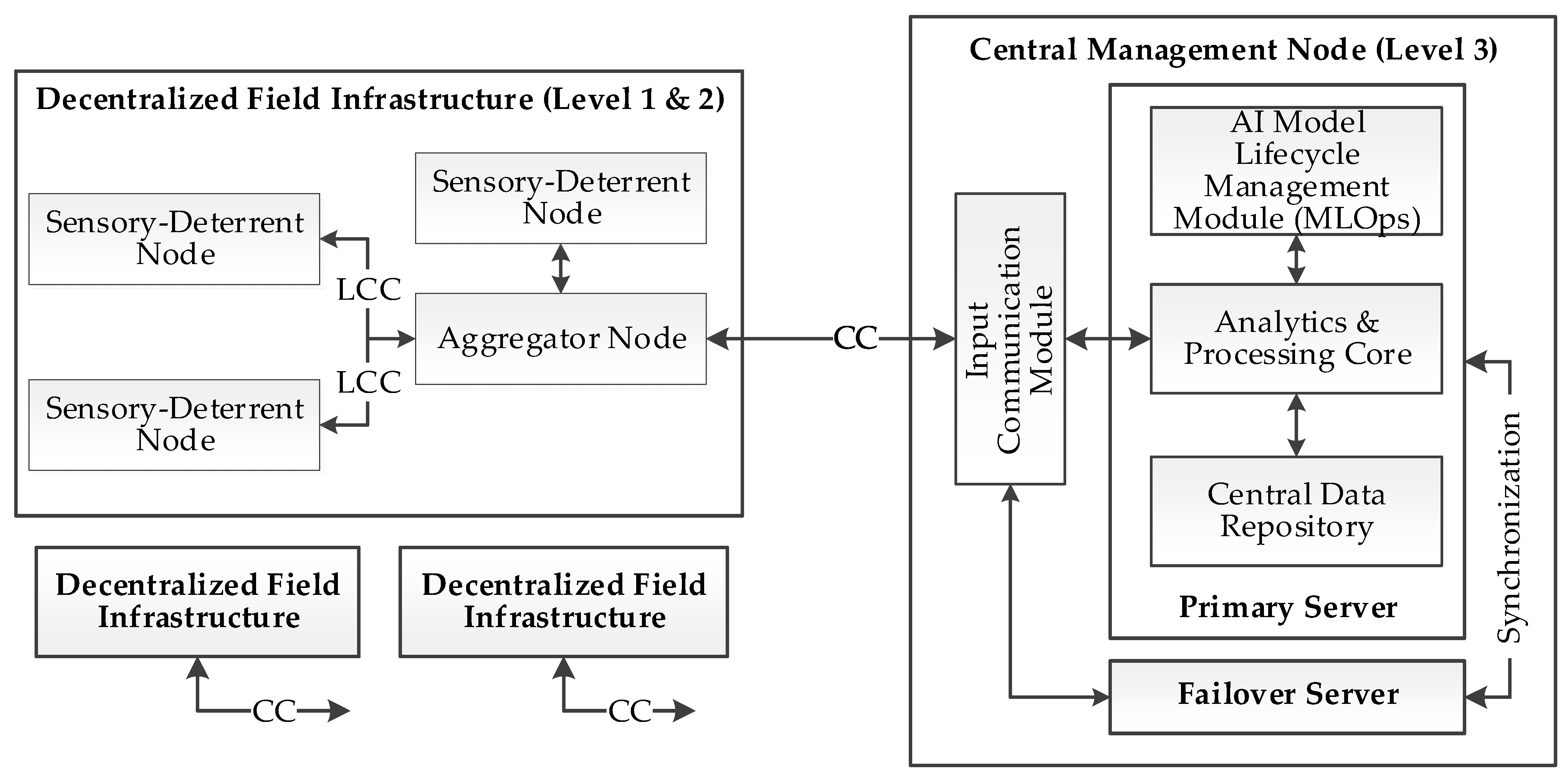

3.4. Architecture of the Central Management Node

4. Implementation and Prototyping

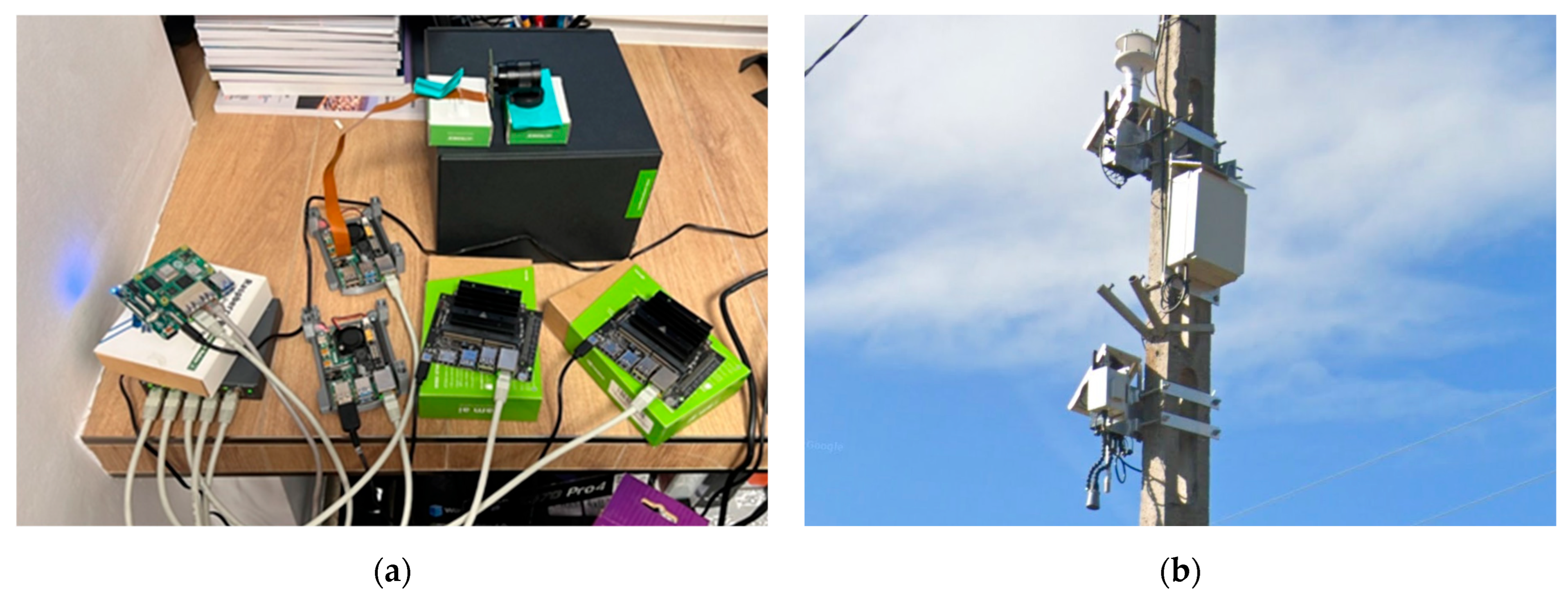

4.1. Hardware Prototype of the Sensory-Deterrent Node

4.2. Hardware Prototype of the Aggregator Node

4.3. Hardware Platform of the Central Node

4.4. Software Implementation

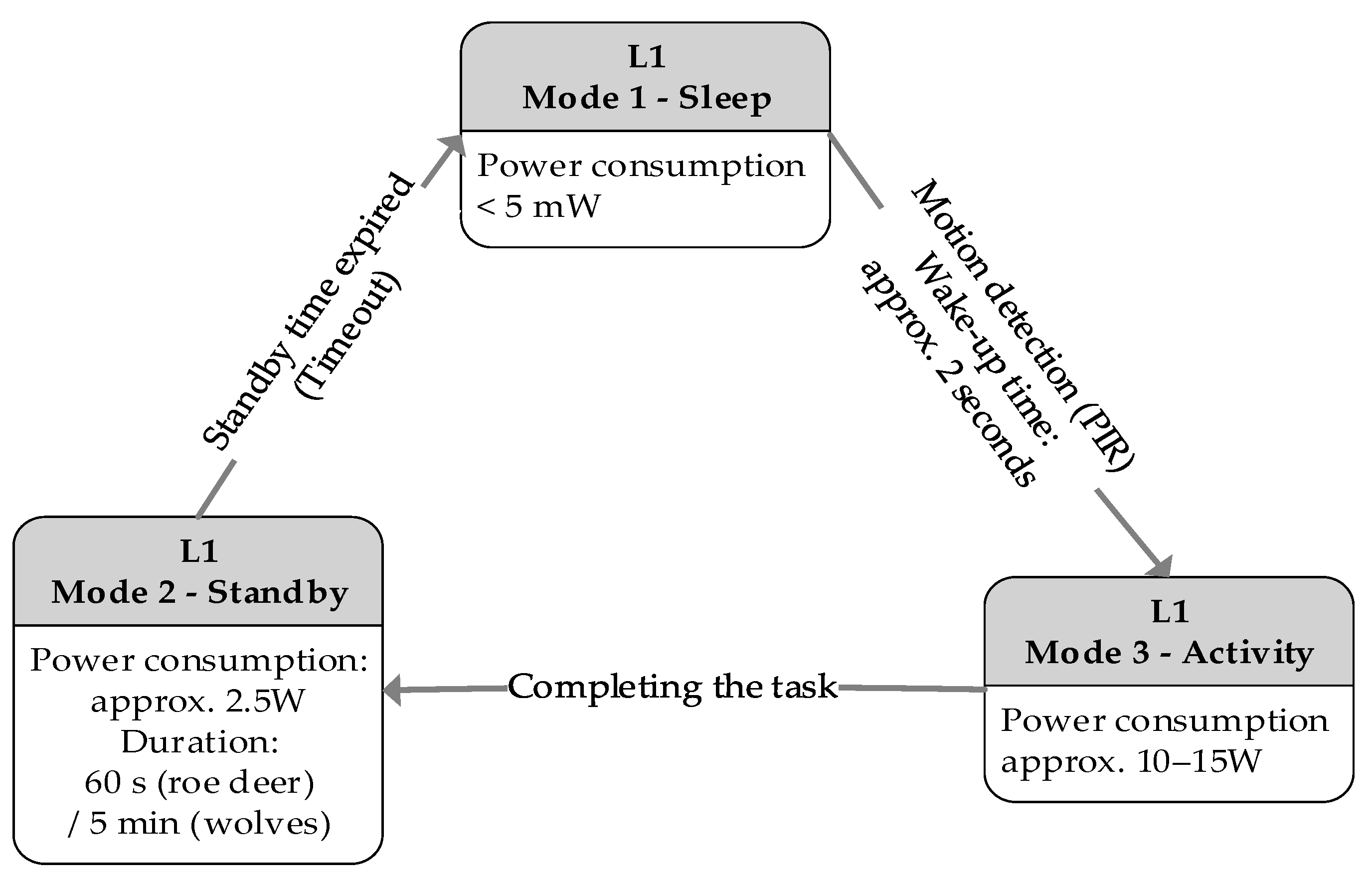

- Detection and Wake-up: The process begins when the ultra-low-power Passive Infrared (PIR) sensor, which continuously monitors the surroundings, detects motion. The detection raises an interrupt that immediately wakes the main microcontroller (MCU) from deep sleep.

- Data Acquisition: Immediately after waking, the MCU powers up the vision subsystem (EO/IR camera) and the Edge AI module (NVIDIA Jetson). The camera captures an image or a short video sequence, which is passed directly to the Jetson module for analysis.

- Inference and Classification: The Jetson module processes the image, and the deployed Convolutional Neural Network (CNN) model, e.g., from the YOLO family, classifies the detected object in real time.

- Decision-Making: Based on the inference result, the system autonomously takes one of three predefined actions: a. If a threat is recognized (e.g., a wolf), the effector subsystem is activated to deter the intruder. b. If the object is neutral (e.g., a human), the event is only recorded in the system log, and no deterrent action is taken. c. If clear classification is not possible (e.g., due to poor lighting or partial object occlusion), the visual data is flagged and sent via the aggregator node for advanced analysis at the central node.

- Return to Sleep: After the entire sequence is completed, regardless of the outcome, the node immediately returns to its power-saving deep sleep state to await the next event.

- Action Selection: The system randomly selects one action (single or combined) from a pool containing only those stimuli currently considered effective for the given species.

- Application and Monitoring: The selected action is executed, and the system, in full activity mode (3), monitors the animal’s behavioral response in real time using image analysis. The time allotted for assessing the reaction is species-dependent and based on the species’ ethology: a few seconds for timid roe deer, and up to 30 s for predators such as wolves.

- Adaptation and Escalation: a. If the animal leaves the protected area, the sequence ends successfully. b. If the action proves ineffective, the system records this fact. In the event of three consecutive failures of a given single stimulus, it is temporarily removed from the pool of effective actions for that species. c. If the animal remains in the area, the system, after a randomly selected time interval between 30 and 90 s, returns to step one to execute another deterrent action.

- Post-Interaction Power Management: After the sequence ends (either by successful deterrence or exhaustion of the action pool), the system transitions from full activity mode (3) to standby mode (2). The duration of this mode is a key, configurable parameter determined empirically. For roe deer, it is set to 60 s, whereas for wolves, due to their tendency to return, it has been extended to 5 min.

- Return to Sleep: After the defined standby time has elapsed, if the PIR sensor does not detect new movement, the system enters deep sleep mode (1), minimizing energy consumption.
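
The transitions between the three power modes described in the last two steps can be sketched as a small state machine. This is an illustrative sketch, not the authors' firmware: the names `PowerMode`, `next_mode`, and `DEFAULT_STANDBY_SECONDS` are hypothetical, and the rule that new PIR motion during standby returns the node to full activity is an assumption. Only the mode numbers (1 to 3) and the standby times (60 s for roe deer, 5 min for wolves) come from the text.

```python
from enum import IntEnum


class PowerMode(IntEnum):
    DEEP_SLEEP = 1     # mode (1): only the PIR sensor is powered
    STANDBY = 2        # mode (2): waiting in case the animal returns
    FULL_ACTIVITY = 3  # mode (3): camera, inference, and effectors active


# Standby durations stated in the text, in seconds.
STANDBY_SECONDS = {"roe_deer": 60, "wolf": 300}
# Assumption: fallback for species the text does not mention.
DEFAULT_STANDBY_SECONDS = 60


def next_mode(mode, species, seconds_in_mode, pir_motion, sequence_done):
    """Return the power mode for the next control tick."""
    if mode is PowerMode.DEEP_SLEEP:
        # A PIR interrupt wakes the node.
        return PowerMode.FULL_ACTIVITY if pir_motion else PowerMode.DEEP_SLEEP
    if mode is PowerMode.FULL_ACTIVITY:
        # Leave full activity only when the deterrent sequence has ended.
        return PowerMode.STANDBY if sequence_done else PowerMode.FULL_ACTIVITY
    # STANDBY: new motion re-activates the node (assumption); otherwise
    # fall back to deep sleep once the species-specific time has elapsed.
    if pir_motion:
        return PowerMode.FULL_ACTIVITY
    limit = STANDBY_SECONDS.get(species, DEFAULT_STANDBY_SECONDS)
    if seconds_in_mode >= limit:
        return PowerMode.DEEP_SLEEP
    return PowerMode.STANDBY
```

Keeping the standby durations in a per-species table mirrors the text's description of this value as a configurable, empirically determined parameter.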

Algorithm 1: Adaptive Deterrent Selection
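
The body of Algorithm 1 is not reproduced here, so the following is only a minimal sketch of the selection and adaptation steps listed above, not the authors' implementation. The class `DeterrentSelector` and the stimulus names used in the example are hypothetical. The sketch handles single stimuli only, and it does not model when a removed stimulus is re-admitted, because the text says the removal is temporary without giving the rule. The three-failure limit and the 30 to 90 s retry interval come from the text.

```python
import random

FAILURE_LIMIT = 3            # consecutive failures before removal (from the text)
RETRY_INTERVAL_S = (30, 90)  # random pause before the next attempt (from the text)


class DeterrentSelector:
    """Per-species pool of stimuli that are still considered effective."""

    def __init__(self, stimuli, rng=None):
        self.stimuli = list(stimuli)
        self.pools = {}     # species -> stimuli still in the pool
        self.failures = {}  # (species, stimulus) -> consecutive failures
        self.rng = rng or random.Random()

    def _pool(self, species):
        # Each species starts with the full set of stimuli.
        return self.pools.setdefault(species, list(self.stimuli))

    def select(self, species):
        """Randomly pick one stimulus, or None if the pool is exhausted."""
        pool = self._pool(species)
        return self.rng.choice(pool) if pool else None

    def report(self, species, stimulus, animal_left):
        """Record the outcome of one deterrent attempt."""
        key = (species, stimulus)
        if animal_left:
            self.failures[key] = 0  # a success resets the failure streak
            return
        self.failures[key] = self.failures.get(key, 0) + 1
        pool = self._pool(species)
        if self.failures[key] >= FAILURE_LIMIT and stimulus in pool:
            pool.remove(stimulus)

    def retry_delay(self):
        """Random wait in seconds before returning to the selection step."""
        return self.rng.uniform(*RETRY_INTERVAL_S)
```

A caller would loop over `select`, apply the stimulus, observe the animal for the species-specific assessment window, call `report`, and wait `retry_delay()` seconds before the next attempt while the animal remains in the area.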

- Aggregation and Filtering: Data from one or more nodes are collected and pre-processed in real time. The system filters out redundant information and can fuse data to build a consolidated picture of the situation in a given segment (e.g., an animal’s movement path).

- Buffering: All significant, aggregated data is saved to local storage, ensuring its safety and integrity in case of a temporary loss of connectivity with Level 3.

- Tactical Decision: Based on the aggregated data and the currently active strategy received from Level 3, the node autonomously decides on a potential, coordinated deterrent action, sending appropriate commands to selected Level 1 nodes.

- Reporting: Key aggregated information is then sent as a concise report to the central management node for further global analysis.
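
The buffering and reporting steps above amount to a store-and-forward pattern, which can be sketched as follows. This is an assumption-laden illustration: the class `ReportBuffer`, the `send` callback, and the `max_items` limit are hypothetical, and an in-memory queue stands in for the local storage mentioned in the text.

```python
from collections import deque


class ReportBuffer:
    """Store-and-forward buffer for aggregated reports bound for Level 3."""

    def __init__(self, send, max_items=1000):
        # send(report) -> bool; True means Level 3 acknowledged the report.
        self.send = send
        # Bounded queue: when full, the oldest report is dropped (assumption).
        self.pending = deque(maxlen=max_items)

    def submit(self, report):
        """Buffer a report first, then try to deliver everything pending."""
        self.pending.append(report)
        return self.flush()

    def flush(self):
        """Send pending reports in order; stop at the first failure."""
        sent = 0
        while self.pending:
            if not self.send(self.pending[0]):
                break  # link is down: keep the report for a later attempt
            self.pending.popleft()
            sent += 1
        return sent
```

A report is removed from the buffer only after the send callback confirms delivery, so a connectivity loss between Level 2 and Level 3 delays reports rather than losing them, up to the buffer limit.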

- Data Analytics and Collection Module: a. This process continuously listens for incoming data from aggregator nodes (Level 2). b. Upon receiving a consolidated report, the data is validated, pre-processed, and saved to the central data repository. c. Long-term statistical analyses run in the background to identify patterns, trends, and assess the overall effectiveness of deterrence strategies. The results are made available to the operator.

- AI Model Lifecycle Management (MLOps) Module: a. This service operates periodically or on demand by the operator. b. Using the data accumulated in the repository, it initiates the process of training or re-training classification models to improve their effectiveness or expand them to include new species. c. After a new model is successfully validated, this module is responsible for its secure distribution to the appropriate aggregator nodes, which then forward it to the units in the field.

- Operator Interface and System Management Module: a. This is a continuously running service that provides the Graphical User Interface (GUI) for the operator. b. It allows the operator to have a real-time view of the entire system’s status, visualize events on maps, and review historical data. c. Through this interface, the operator can define and modify global deterrence strategies, manage software updates, and manually verify events flagged by the system as ambiguous.

4.5. Object Detection Model and Training Process

- Level 1 (Sensory Node): Because of strict energy constraints and the need for rapid inference on the NVIDIA Jetson Orin Nano module, the YOLOv8-Nano model was used. It offers a favorable trade-off between inference speed and classification accuracy under edge conditions.

- Level 2 (Aggregator Node): With the greater computational power of the NVIDIA Jetson Orin NX module and a more robust power supply, this node utilizes the YOLOv8-Medium model. It is used for in-depth verification of ambiguous cases escalated from Level 1, ensuring higher detection confidence.

- Level 3 (Central Node): The central server, which functions as a “model factory,” employs the YOLOv8-Large model. Its task is to reach the highest achievable accuracy when the models are re-trained on new data.
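
The tiered use of models can be illustrated with a short cascade sketch, in which an ambiguous Level 1 result is re-checked by the larger Level 2 model. The code is hypothetical throughout: the models are passed in as plain callables rather than real YOLOv8 inference calls, and `Detection`, `CONFIDENCE_THRESHOLD`, and `THREAT_SPECIES` are assumptions, since the text does not state a confidence threshold or the configured threat list.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float


CONFIDENCE_THRESHOLD = 0.6         # hypothetical; not given in the text
THREAT_SPECIES = {"wolf", "bear"}  # hypothetical configuration


def decide(detection, threshold=CONFIDENCE_THRESHOLD):
    """Map a detection to one of three actions: deter, log, or escalate."""
    if detection is None or detection.confidence < threshold:
        return "escalate"  # no clear classification
    if detection.label in THREAT_SPECIES:
        return "deter"
    return "log"


def classify_with_escalation(frame, level1_model, level2_model):
    """Run the small model first; consult the larger one only when unsure.

    Returns (action, level), where level is the tier that made the decision.
    An "escalate" result at level 2 means the case still needs central or
    operator review.
    """
    action = decide(level1_model(frame))
    if action != "escalate":
        return action, 1
    return decide(level2_model(frame)), 2
```

Running the larger model only on escalated frames keeps the common case fast and cheap on the energy-constrained node while still allowing higher confidence for difficult images.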

4.6. Technical Validation and Field Test Methodology

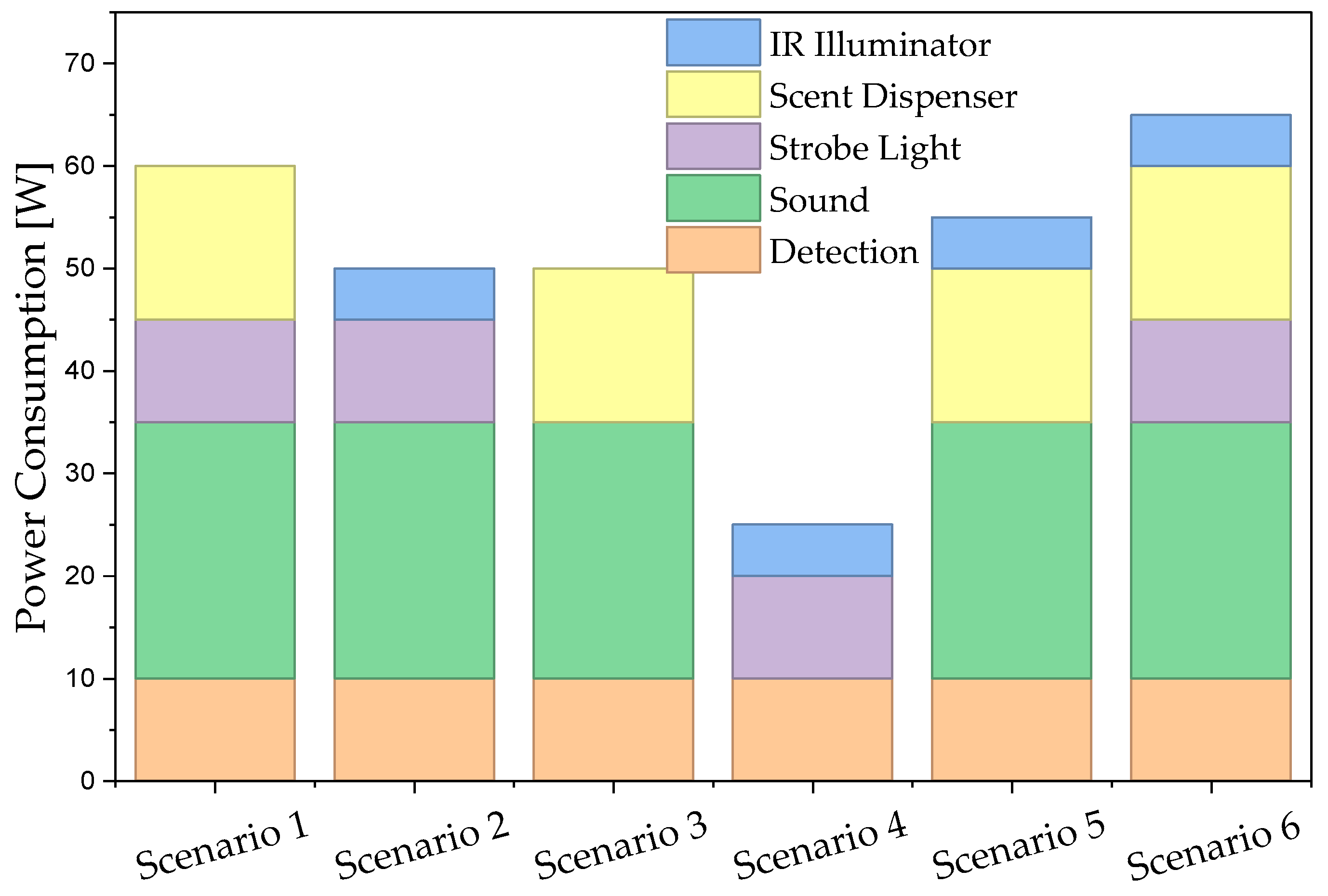

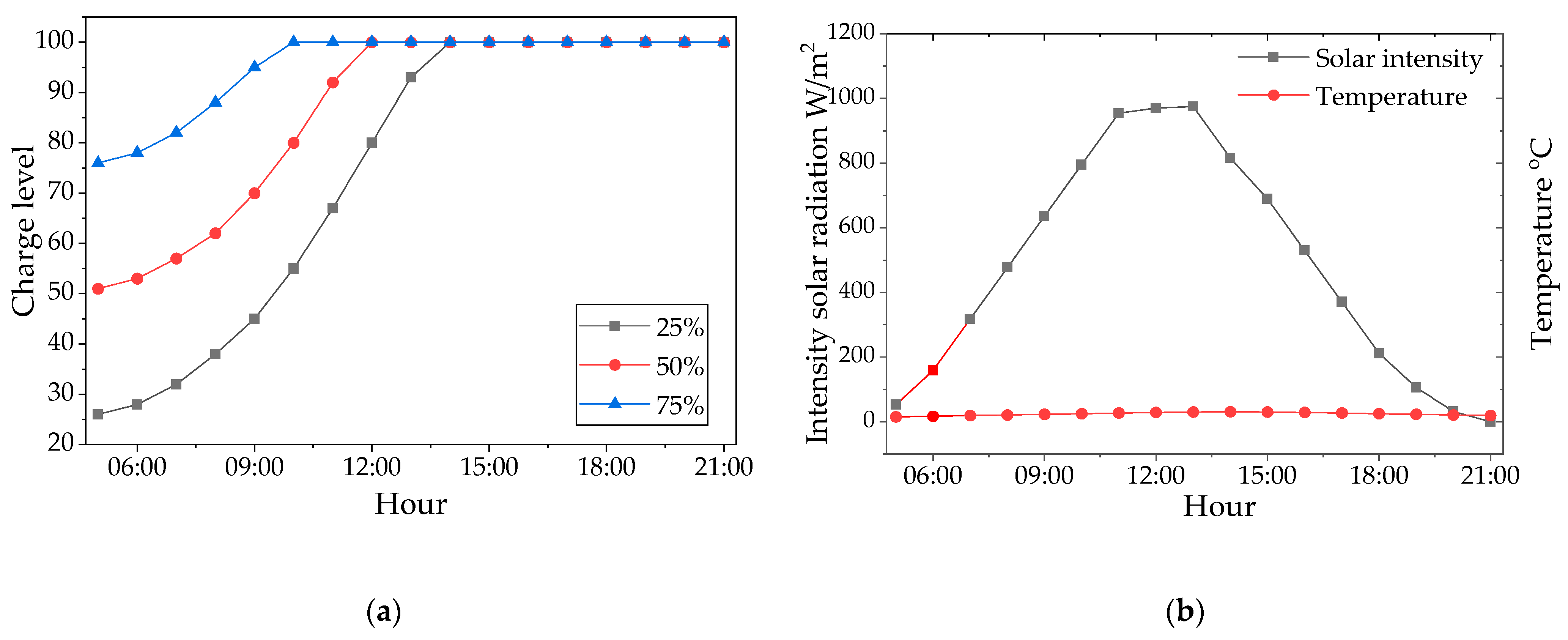

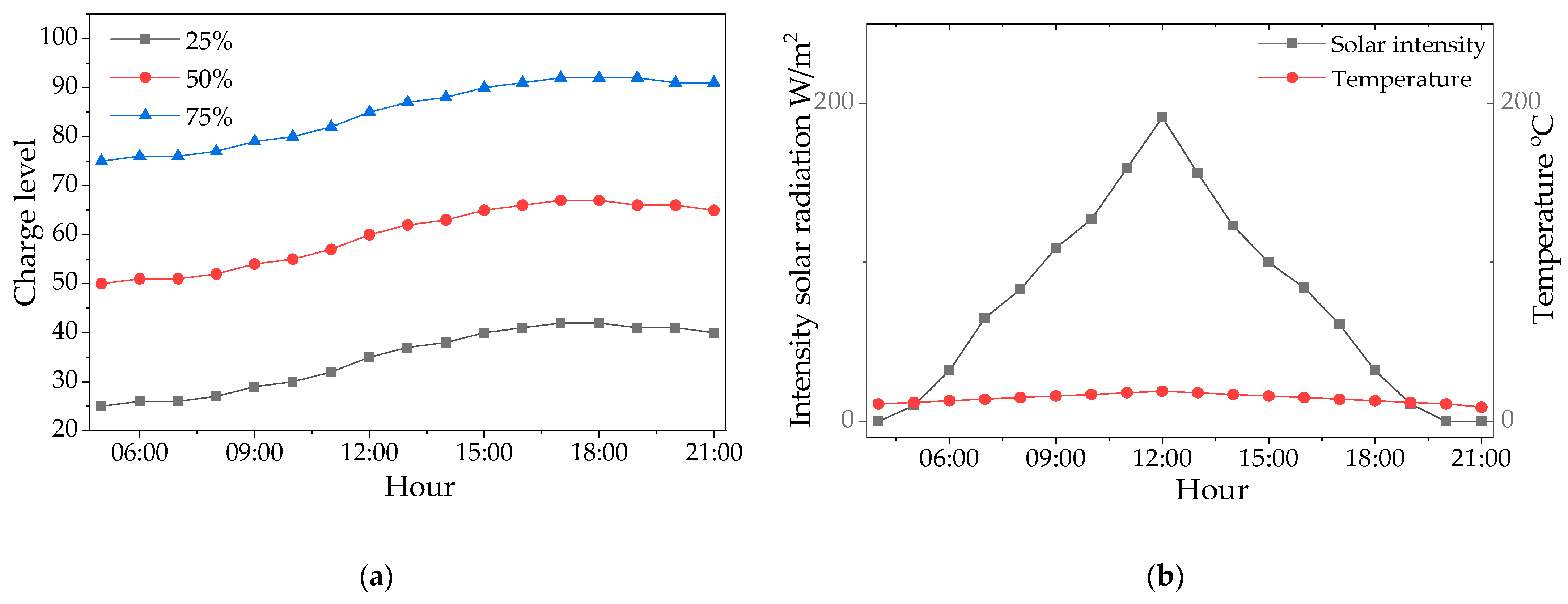

4.6.1. Technical Efficiency and Energy Balance

- Variant A (Passive Fence): The field was protected solely by a 170 cm high physical fence, consisting of four lines with spacing optimized for roe deer. In this variant, our system served only as a passive video monitoring tool to record intrusion attempts.

- Variant B (Electric Fence, 24/7): A fence with the same specifications as in Variant A was used, but it was kept energized around the clock.

- Variant C (Electric Fence, Night): An intermediate variant in which an astronomical clock automatically energized the fence only during nighttime hours (from sunset to sunrise).

- Variant D (Autonomous System): The field was protected exclusively by our sensory-deterrent system, without the use of any physical barrier in the form of a fence.

4.6.2. Network Scalability and Performance

- Critical alerts (animal detection) are sent with the highest priority via MQTT.

- Operational data (system status, battery level) is buffered and sent at scheduled intervals.

- Low-priority data (archival images, statistics) is transferred via HTTPS during peak energy hours (when the system receives the most energy from renewable sources).

- Local data buffering in aggregator nodes, enabling autonomy in case of connectivity loss with Level 3.

- Exponential backoff algorithms for transmission collisions.

- CSMA (Carrier Sense Multiple Access) protocol for efficient medium access.

- Adaptive packet size optimization based on current network conditions.
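
Two of the mechanisms listed above, traffic prioritization and exponential backoff, can be sketched briefly. The names `OutboundQueue`, `PRIORITY`, and `backoff_delay`, as well as the base delay, the cap, and the use of random jitter, are assumptions made for illustration; the text specifies only the three traffic classes and the use of backoff after collisions. The sketch models send ordering only, not the MQTT or HTTPS transports.

```python
import heapq
import itertools
import random

# Lower value is sent first; the three classes follow the text.
PRIORITY = {"alert": 0, "status": 1, "archive": 2}


class OutboundQueue:
    """Priority queue that keeps FIFO order within each traffic class."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker for equal priorities

    def put(self, kind, payload):
        heapq.heappush(self._heap, (PRIORITY[kind], next(self._order), payload))

    def get(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


def backoff_delay(attempt, base_s=1.0, cap_s=60.0, rng=random):
    """Exponential backoff with full jitter for a 0-based retry attempt."""
    ceiling = min(cap_s, base_s * 2 ** attempt)
    return rng.uniform(0.0, ceiling)
```

Randomizing the delay up to the exponential ceiling helps nodes that collided once avoid retrying at the same instant again.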

5. Results

5.1. Performance of the AI Detection Model

5.2. Biological Validation Results

6. Discussion

6.1. Interpretation of Results

6.2. Main Innovations and System Advantages

6.3. Limitations and Future Work

6.3.1. Limitations of the Study

6.3.2. Future Work

6.4. Broader Implications

- Adaptability: By using artificial intelligence at the network edge (Edge AI), the system actively counteracts habituation. It can dynamically change the type, intensity, and sequence of applied stimuli, making its actions unpredictable to predators and thus effective in the long term.

- Selectivity and Precision: Unlike passive systems, our solution first identifies the species through image analysis. This allows for a selective response—deterrence is triggered only upon detecting a defined threat (e.g., a wolf), while other, neutral animals (e.g., roe deer) can be ignored. This minimizes unnecessary stress on wildlife and allows for the precise tailoring of the stimulus to the specific species.

- Research Potential: The system is not just a preventive tool but also a platform for collecting unique ethological data. By recording animal responses to specific stimuli, it enables further research into their behavior. The collected data can be used to find and validate the most effective, harmless deterrence methods, making a valuable contribution to the science of wildlife welfare and management.

6.5. Economic Analysis and Comparison with Alternative Methods

6.6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| County | Number of Recorded Incidents (2015–2024) | Percentage Share of the Total |
|---|---|---|
| Bieszczadzki | 546 | 33.40% |
| Sanocki | 514 | 31.40% |
| Leski | 278 | 17.00% |
| Krośnieński | 122 | 7.50% |
| Przemyski | 61 | 3.60% |
| The remaining 14 counties | 114 | 7.00% |
| Total | 1635 | 100.00% |

| Communication Level | Access Technology | Application Protocol | Range | Security | Application |
|---|---|---|---|---|---|
| L1 ↔ L2 | LoRaWAN (SF8–SF9, BW 125 kHz) | MQTT | Up to 5 km | AES-128 | MQTT: sensor data, control commands |
| L2 ↔ L3 | LTE/4G/5G (private APN) | MQTT/HTTPS | Unlimited | SSL/TLS + PKI | MQTT: alerts; HTTPS: data archives |

| Parameter | Value | Notes |
|---|---|---|
| Hardware Platform (L1) | NVIDIA Jetson Orin Nano | ARM Cortex-A78AE 6-core, Ampere GPU (1024 CUDA cores) |
| Hardware Platform (L2) | NVIDIA Jetson Orin NX | Local inference and decision-making |
| Operating System | Ubuntu 20.04 LTS | Docker containers, Python, OpenCV, TensorFlow Lite |
| AI Model | YOLOv8-Nano/Medium/Large | Transfer learning from COCO, INT8 quantization |
| Input Resolution | 640 × 480 | Batch size = 1 |
| Inference Time (L1) | 20–30 ms | Single frame, end-to-end |
| System Response Time | 0.5–1 s (idle), ~2 s (sleep) | From PIR detection to decision |
| Power Consumption (sleep / idle / active) | <5 mW / 2.5 W / 10–15 W | Peaks up to 60 W with effectors |
| Battery Autonomy | ~15 days | 50 Ah LiFePO4 battery |
| Training Datasets | 5000–8000 images per class | Plus synthetic 3D and locally collected data |
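
The power and battery figures in the table can be related through a simple energy-budget calculation. The sketch below is illustrative only: the function names are hypothetical, the 12.8 V nominal pack voltage is an assumption (the table gives only the 50 Ah capacity), the active power uses the midpoint of the stated 10 to 15 W range, and effector peaks and any energy harvested from renewable sources are ignored.

```python
# Per-mode power draw in watts, taken from the table.
SLEEP_W = 0.005   # upper bound of "<5 mW"
IDLE_W = 2.5
ACTIVE_W = 12.5   # midpoint of the 10–15 W range (assumption)


def battery_energy_wh(capacity_ah=50.0, nominal_v=12.8, usable_fraction=1.0):
    """Stored energy in Wh; nominal_v = 12.8 V is an assumed pack voltage."""
    return capacity_ah * nominal_v * usable_fraction


def mean_power_w(sleep_frac, idle_frac, active_frac):
    """Time-weighted mean power for the given duty-cycle fractions."""
    if abs(sleep_frac + idle_frac + active_frac - 1.0) > 1e-9:
        raise ValueError("duty-cycle fractions must sum to 1")
    return sleep_frac * SLEEP_W + idle_frac * IDLE_W + active_frac * ACTIVE_W


def autonomy_days(energy_wh, power_w):
    """Days of operation on battery alone at a constant mean power."""
    return energy_wh / power_w / 24.0


def implied_mean_power_w(energy_wh, days):
    """Mean power that would drain the given energy in the given time."""
    return energy_wh / (days * 24.0)
```

Under these assumptions the pack stores 640 Wh, so an autonomy of about 15 days corresponds to a mean draw of roughly 1.8 W, which `implied_mean_power_w(battery_energy_wh(), 15)` reproduces.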

| Parameter | Small Network (≤20 Nodes) | Large Network (>20 Nodes) | Management Mechanism |
|---|---|---|---|
| Transmission Latency | 1–2 s | 3–5 s | QoS Prioritization |
| LoRaWAN Throughput | 5.5 kbps (SF8) | 0.3–1.1 kbps (SF10–SF12) | Adaptive SF Change |
| Collision Rate | <1% | 2–3% | TDMA + Backoff Algorithms |
| Time in Power-Saving Mode | 94% of uptime | 90% of uptime | Dynamic Power Management |
| Deployment Architecture | Single Aggregator | Multi-Aggregator Network | Automatic Load Balancing |

| Class (Species) | Precision | Recall | F1-Score | mAP@0.5 | Number of Photos in the Test Set |
|---|---|---|---|---|---|
| Wolf | 0.85 | 0.80 | 0.82 | 0.83 | 850 |
| Roe Deer | 0.90 | 0.85 | 0.87 | 0.88 | 1200 |
| Red Deer | 0.89 | 0.85 | 0.86 | 0.87 | 1090 |
| Human | 0.90 | 0.86 | 0.88 | 0.89 | 980 |
| Bear | 0.78 | 0.70 | 0.74 | 0.73 | 210 |
| Average/Total | 0.86 | 0.81 | 0.84 | 0.84 | 4330 |

| Protection Method | Number of System Activations | Number of Roe Deer Classified | Habituation Time (days) | Estimated Losses by Farmer (%) |
|---|---|---|---|---|
| Variant A (Passive fence) | 1130 | 745 | 28 | 30–50 |
| Variant B (Electric fence, 24/7) | 975 | 580 | >107 | 1–2 |
| Variant C (Electric fence, night) | 1015 | 630 | 50 | 20–30 |
| Variant D (Autonomous system) | 1195 | 830 | >107 | 2–5 |

| Protection Method | Investment Cost (PLN/EUR) | Total Daily Cost (PLN/EUR) | Autonomy | Effectiveness | Adaptability |
|---|---|---|---|---|---|
| Our System (1 node) | 7,500/1,750 | 63/15 | ≥15 days | 95% damage reduction | Full: dynamic stimuli and species selection |
| Regular Fence | 1,970/460 | 505/118 | None | 20–30% damage reduction | None: constant stimulus |
| Regular Fence with Fladry | 2,160/500 | 810/190 | None | 20–30% damage reduction | None: constant stimulus |
| Electric Fence | 3,370/880 | 1,300/300 | Requires power supply | 60–70% damage reduction | Limited: only pulse strength regulation |
| Electric Fence with Fladry | 3,570/830 | 1,600/370 | Requires power supply | 60–70% damage reduction | Limited: only pulse strength regulation |
| Shepherd Dog | 4,000/930 | 80/20 | Round-the-clock care | 80–90% damage reduction | Moderate: limited to known routes |
| Shepherd (Night Only) | 2,000/465 | 275/65 | Night duty | 70–80% damage reduction | Limited: no daytime operation |
| Shepherd with Shepherd Dog | 6,000/1,395 | 355/85 | Round-the-clock care | 90–95% damage reduction | Moderate: dog recognizes species, shepherd supervises |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hajder, M.; Kolbusz, J.; Liput, M. An AI-Based Integrated Multi-Sensor System with Edge Computing for the Adaptive Management of Human–Wildlife Conflict. Sensors 2025, 25, 6415. https://doi.org/10.3390/s25206415
