Large Language Models and 3D Vision for Intelligent Robotic Perception and Autonomy

Abstract

1. Introduction

2. Background

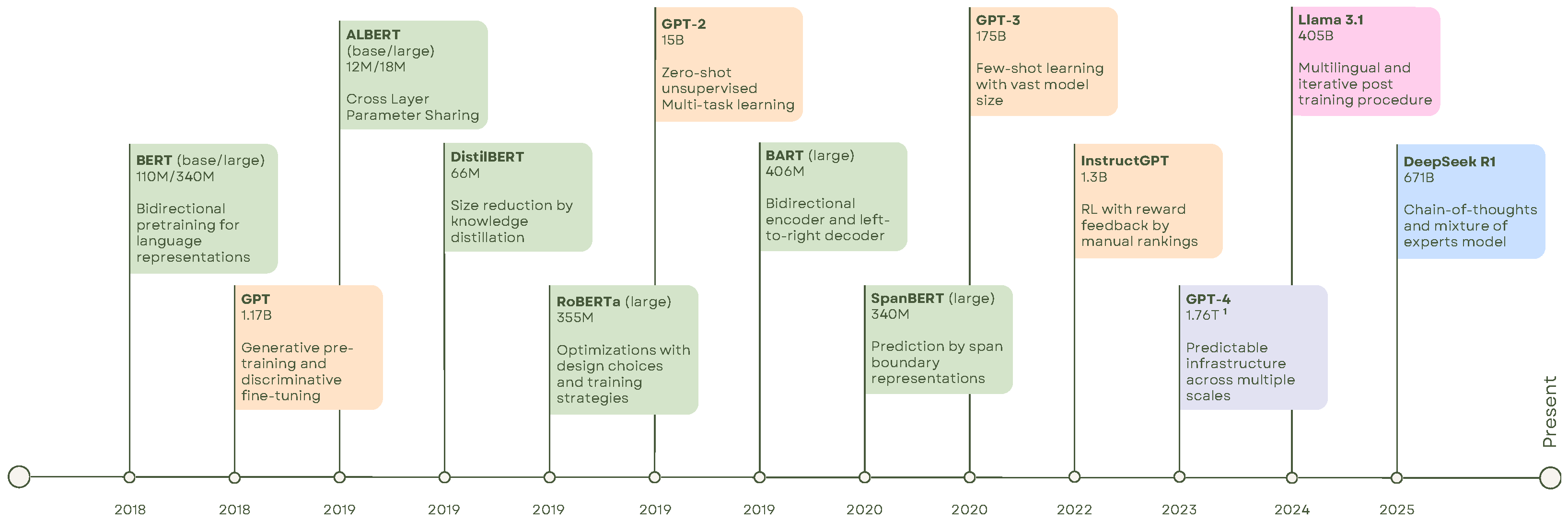

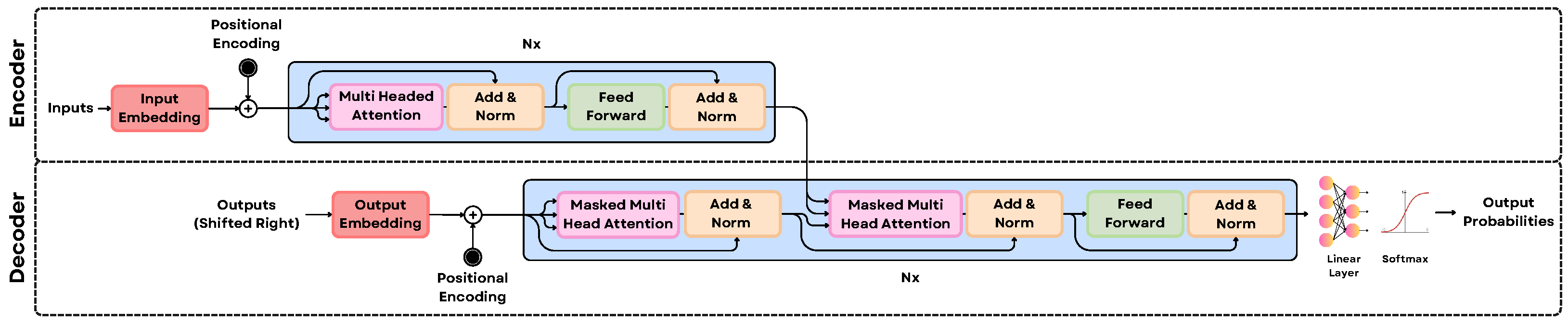

2.1. Overview of LLMs

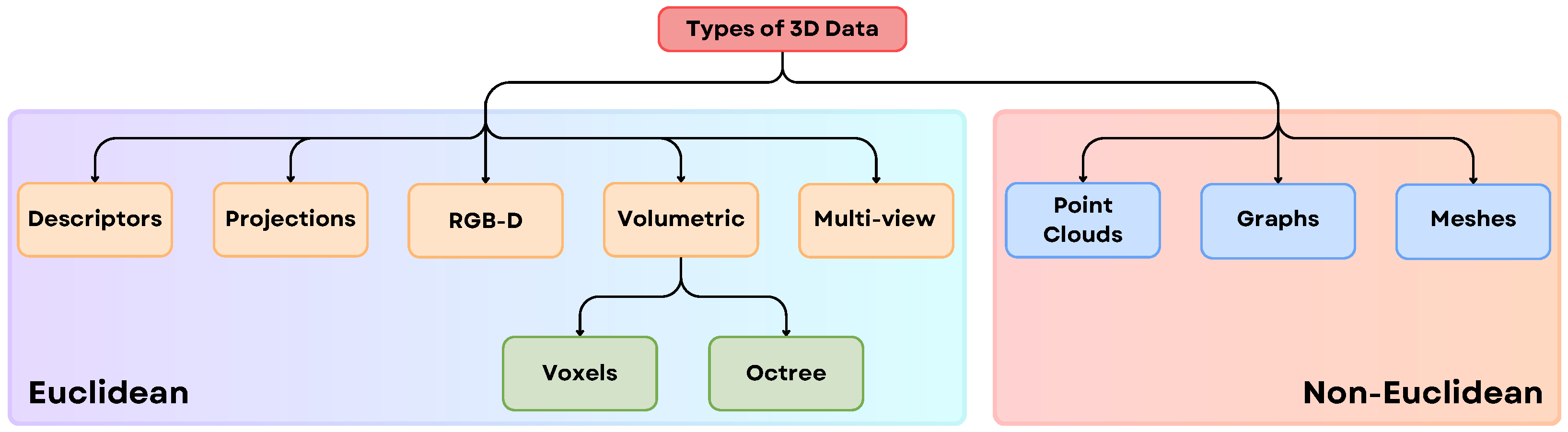

2.2. Fundamentals of 3D Vision

- Descriptors: These are compact mathematical or statistical summaries of 3D shapes, used for tasks like recognition or matching. Examples include shape histograms and curvature descriptors.

- Projections: 3D objects are represented through their 2D projections from multiple viewpoints, simplifying the representation while retaining key spatial information.

- RGB-D: Combines RGB color data with depth information captured by devices like Kinect. This format is widely used in robotics and augmented reality applications.

- Voxel Grid: A regular 3D grid of cells (voxels), each representing a portion of space and storing attributes like object presence, color, or density. It provides detailed 3D representations but can be memory-intensive, since the number of cells grows cubically with grid resolution.

- Octree: A hierarchical structure that recursively subdivides 3D space into eight smaller cubes (octants) only where needed, reducing memory usage while maintaining detail in occupied regions.

- Multi-view: Uses multiple 2D images of a 3D object captured from various angles, allowing algorithms to infer 3D structure.

- Point Clouds: A collection of points in 3D space, each representing a specific position on an object’s surface. Point clouds are produced by LiDAR scanning and are widely used in autonomous vehicles; they can be captured with devices such as LiDAR sensors, the Matterport Pro camera, and the Microsoft Kinect.

- Graphs: Represent 3D data as nodes and edges, where nodes correspond to object elements and edges represent relationships. Common in structural analysis and mesh processing.

- Meshes: Define 3D shapes using vertices, edges, and faces, forming a network of polygons (typically triangles). They are widely used in computer graphics.
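As a concrete instance of the shape-histogram descriptors listed above, the following minimal Python sketch computes the classic D2 shape distribution: a normalized histogram of Euclidean distances between randomly sampled point pairs. D2 is chosen here only for illustration and is not claimed to be the descriptor used by any method surveyed in this paper; the function name, bin count, number of sampled pairs, and fixed random seed are all assumptions.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def d2_descriptor(points: Sequence[Point], bins: int = 8, pairs: int = 2000,
                  seed: int = 0) -> List[float]:
    """D2 shape distribution: histogram of distances between random point pairs.

    Distances are divided by the largest sampled distance, so (up to sampling
    noise and rounding) the result does not change under rotation, translation,
    or uniform scaling of the input. Bin count, pair count, and seed are
    illustrative defaults, not tuned values.
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    dists = [math.dist(*rng.sample(points, 2)) for _ in range(pairs)]
    d_max = max(dists) or 1.0  # guard against all points coinciding
    hist = [0] * bins
    for d in dists:
        b = min(int(d / d_max * bins), bins - 1)  # d == d_max goes in the last bin
        hist[b] += 1
    return [count / pairs for count in hist]  # normalized to sum to 1


if __name__ == "__main__":
    # Hypothetical input: the eight corners of a unit cube.
    cube = [(float(x), float(y), float(z)) for x in (0, 1) for y in (0, 1) for z in (0, 1)]
    print([round(h, 3) for h in d2_descriptor(cube)])
```

Because only pairwise distances enter the histogram, the descriptor ignores where the object sits and how it is oriented, which is what makes such compact summaries convenient for recognition and matching.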
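The projection and multi-view representations can be illustrated by rendering a point cloud into several 2D depth images, one per viewing direction. The sketch below rests on a simplifying assumption: it uses three axis-aligned orthographic views of the unit cube with a per-pixel depth buffer, whereas practical multi-view pipelines usually render perspective views from cameras placed around the object. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def orthographic_depth_view(points: Sequence[Point], axis: int, res: int,
                            lo: float = 0.0, hi: float = 1.0) -> List[List[float]]:
    """Project points inside the cube [lo, hi)^3 onto a res x res depth image.

    The view looks along `axis` (0 = x, 1 = y, 2 = z) from the `lo` side; each
    pixel keeps the nearest depth, and pixels that receive no point stay at inf.
    """
    u_axis, v_axis = [a for a in range(3) if a != axis]  # the two image axes
    size = (hi - lo) / res                                 # pixel footprint
    image = [[math.inf] * res for _ in range(res)]
    for p in points:
        u = int((p[u_axis] - lo) // size)
        v = int((p[v_axis] - lo) // size)
        if 0 <= u < res and 0 <= v < res:
            image[v][u] = min(image[v][u], p[axis] - lo)   # depth buffer: keep closest
    return image


def multi_view(points: Sequence[Point], res: int) -> List[List[List[float]]]:
    """One depth view per coordinate axis: a minimal three-view set."""
    return [orthographic_depth_view(points, axis, res) for axis in range(3)]


if __name__ == "__main__":
    pts = [(0.1, 0.1, 0.1), (0.1, 0.1, 0.6), (0.9, 0.4, 0.2)]
    for axis, view in enumerate(multi_view(pts, res=2)):
        print("axis", axis, view)
```

Each view discards the coordinate along its viewing axis except for the nearest depth, which is why several viewpoints are combined to recover the 3D structure.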
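The link between the RGB-D and point cloud representations is the pinhole camera model: a pixel (u, v) with depth Z back-projects to X = (u - c_x) Z / f_x and Y = (v - c_y) Z / f_y. The minimal sketch below applies this to an aligned depth/color image pair. It assumes the two images are already registered to the same pixel grid and that depth is in meters; the function name, the intrinsics (fx, fy, cx, cy), and the pixel values are hypothetical and do not describe any particular sensor such as the Kinect.

```python
from typing import List, Tuple

XYZ = Tuple[float, float, float]
RGB = Tuple[int, int, int]


def rgbd_to_point_cloud(depth: List[List[float]], color: List[List[RGB]],
                        fx: float, fy: float, cx: float, cy: float) -> List[Tuple[XYZ, RGB]]:
    """Back-project an aligned depth/color image pair into colored 3D points.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, with Z the
    depth in meters. Pixels with depth <= 0 (no sensor return) are skipped.
    """
    cloud: List[Tuple[XYZ, RGB]] = []
    for v, row in enumerate(depth):        # v: pixel row
        for u, z in enumerate(row):        # u: pixel column
            if z <= 0.0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            cloud.append(((x, y, z), color[v][u]))
    return cloud


if __name__ == "__main__":
    # Hypothetical 2x3 image pair and intrinsics; 0.0 marks a missing depth reading.
    depth = [[1.0, 1.0, 0.0],
             [2.0, 2.0, 2.0]]
    color = [[(255, 0, 0)] * 3,
             [(0, 0, 255)] * 3]
    for point, rgb in rgbd_to_point_cloud(depth, color, fx=1.0, fy=1.0, cx=1.0, cy=0.5):
        print(point, rgb)
```

The output is an unordered set of colored 3D points, so the same data can be consumed either as an RGB-D image or as a point cloud.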
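The memory cost noted for voxel grids follows directly from their dense layout. The minimal sketch below, with hypothetical function names and a cube-shaped bounding volume as a simplifying assumption, voxelizes a point cloud into a boolean occupancy grid; every one of the n³ cells is allocated even when only a few are occupied.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]
Grid = List[List[List[bool]]]


def voxelize(points: Sequence[Point], lo: float, hi: float, n: int) -> Grid:
    """Dense n x n x n occupancy grid over the cube [lo, hi)^3.

    Every cell is allocated whether or not it is occupied, which is why memory
    grows as n**3: doubling n multiplies the cell count by 8.
    """
    size = (hi - lo) / n                               # voxel edge length
    grid = [[[False] * n for _ in range(n)] for _ in range(n)]
    for p in points:
        i, j, k = (int((c - lo) // size) for c in p)   # cell index per axis
        if 0 <= i < n and 0 <= j < n and 0 <= k < n:   # drop out-of-bounds points
            grid[i][j][k] = True
    return grid


def count_occupied(grid: Grid) -> int:
    return sum(cell for plane in grid for row in plane for cell in row)


if __name__ == "__main__":
    pts = [(0.1, 0.1, 0.1), (0.15, 0.12, 0.1), (0.9, 0.9, 0.9)]
    grid = voxelize(pts, lo=0.0, hi=1.0, n=4)
    print(count_occupied(grid), "of", 4 ** 3, "cells occupied")  # 2 of 64 cells occupied
```

At n = 4 the grid already holds 64 cells for two occupied voxels; at n = 256 the same scene would need 16,777,216 cells.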
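An octree avoids much of the cost of a dense grid by subdividing a cube into eight octants only where points are present. The sketch below builds a simple point-region octree; the `OctreeNode` class, the `max_depth` stopping rule, and the choice to stop splitting at one point per node are illustrative assumptions rather than the node layout of any particular octree library.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class OctreeNode:
    """Hypothetical node type: an axis-aligned cube plus the points inside it."""
    center: Point
    half: float                                    # half of the cube's edge length
    points: List[Point] = field(default_factory=list)
    children: Optional[List["OctreeNode"]] = None  # None marks a leaf


def build(node: OctreeNode, depth: int, max_depth: int) -> None:
    """Recursively split occupied cubes; empty octants are never allocated."""
    if depth == max_depth or len(node.points) <= 1:
        return                                     # leaf: stop subdividing
    cx, cy, cz = node.center
    h = node.half / 2                              # child half-size
    buckets: Dict[Tuple[bool, bool, bool], List[Point]] = {}
    for p in node.points:
        key = (p[0] >= cx, p[1] >= cy, p[2] >= cz)  # which of the 8 octants
        buckets.setdefault(key, []).append(p)
    node.children = []
    for (bx, by, bz), pts in buckets.items():      # occupied octants only
        child_center = (cx + (h if bx else -h),
                        cy + (h if by else -h),
                        cz + (h if bz else -h))
        child = OctreeNode(child_center, h, pts)
        build(child, depth + 1, max_depth)
        node.children.append(child)


def count_nodes(node: OctreeNode) -> int:
    return 1 + sum(count_nodes(c) for c in (node.children or []))


if __name__ == "__main__":
    pts = [(0.1, 0.1, 0.1), (0.15, 0.12, 0.1), (0.9, 0.9, 0.9)]
    root = OctreeNode(center=(0.5, 0.5, 0.5), half=0.5, points=pts)
    build(root, depth=0, max_depth=2)
    print(count_nodes(root), "octree nodes")  # 4 octree nodes
```

For these three points, depth 2 corresponds to the resolution of a 4 x 4 x 4 dense grid (64 cells), whereas the octree allocates only 4 nodes, because empty octants are never created.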
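Meshes and graphs are closely related: the vertices and edges of a triangle mesh form a graph, which is what allows graph-based methods to operate on mesh data. The sketch below derives the undirected edge set and vertex adjacency lists from a triangle face list. The function names are hypothetical, the tetrahedron is a toy input, and the Euler characteristic check V - E + F = 2 holds only for closed meshes of genus zero.

```python
from typing import Dict, List, Set, Tuple

Face = Tuple[int, int, int]
Edge = Tuple[int, int]


def mesh_edges(faces: List[Face]) -> Set[Edge]:
    """Collect each undirected edge once, stored as (smaller, larger) vertex index."""
    edges: Set[Edge] = set()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):     # the three sides of the triangle
            edges.add((min(u, v), max(u, v)))
    return edges


def adjacency(num_vertices: int, edges: Set[Edge]) -> Dict[int, List[int]]:
    """Graph view of the mesh: vertex index -> sorted neighboring vertex indices."""
    adj: Dict[int, List[int]] = {i: [] for i in range(num_vertices)}
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    return {i: sorted(nbrs) for i, nbrs in adj.items()}


if __name__ == "__main__":
    # Hypothetical toy mesh: a tetrahedron with 4 vertices and 4 triangular faces.
    vertices = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
    faces = [(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)]
    edges = mesh_edges(faces)
    print(len(vertices), "vertices,", len(edges), "edges,", len(faces), "faces")
    print("V - E + F =", len(vertices) - len(edges) + len(faces))  # 2 for a closed genus-0 mesh
    print(adjacency(len(vertices), edges))
```

Storing each edge as a sorted index pair ensures that an edge shared by two faces is counted once, which is what the graph view requires.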

2.3. Motivation Behind Combining LLMs and 3D Vision

- RQ1: What are the dominant architectural paradigms and cross-modal alignment strategies for integrating the symbolic, semantic reasoning of Large Language Models with the raw geometric and spatial data from diverse 3D sensors (e.g., LiDAR, RGB-D) to achieve robust spatial grounding and object referencing in robotic systems?

- RQ2: What architectural frameworks and semantic grounding techniques enable Large Language Models to interpret and reason over heterogeneous, non-visual sensor data—such as tactile force distributions, thermal signatures, and acoustic cues—thereby enriching a robot’s 3D world model to enhance situational awareness and allow for more nuanced physical interaction under conditions where visual data is ambiguous or unreliable?

- RQ3: Given the inherent challenges of 3D data scarcity and the modality gap between unstructured point clouds and structured language, what emerging methodologies—spanning open-vocabulary pre-training, procedural text-to-3D generation, and the fusion of non-visual sensory inputs (tactile, thermal, auditory)—are being employed to create more generalizable and robust robotic perception systems?

| Models | RGB-D (Euclidean) | Voxel (Euclidean) | Multi-View (Euclidean) | Point Cloud (Non-Euclidean) | Graph (Non-Euclidean) | Mesh (Non-Euclidean) |
|---|---|---|---|---|---|---|
| WildRefer [42] | ✓ | - | - | ✓ | - | - |
| CrossGLG¹ [43] | ✓ | - | - | - | - | - |
| 3DMIT [44] | - | - | - | ✓ | - | - |
| LiDAR-LLM [45] | - | - | - | ✓ | - | - |
| SceneVerse [46] | - | - | - | ✓ | - | - |
| Agent3D-Zero [47] | - | - | ✓ | ✓ | - | - |
| PointLLM [48] | - | - | - | ✓ | - | - |
| QueSTMaps [49] | ✓ | - | - | ✓ | - | - |
| Chat-3D [50] | - | - | - | ✓ | - | - |
| ConceptFusion [51] | ✓ | - | - | ✓ | - | - |
| RREx-BoT [52] | - | - | - | ✓ | - | - |
| PLA [53] | ✓ | - | - | ✓ | - | - |
| OpenScene [54] | - | - | ✓ | ✓ | - | - |
| ULIP [55] | - | - | ✓ | ✓ | - | - |
| PolarNet [56] | ✓ | - | ✓ | ✓ | - | - |

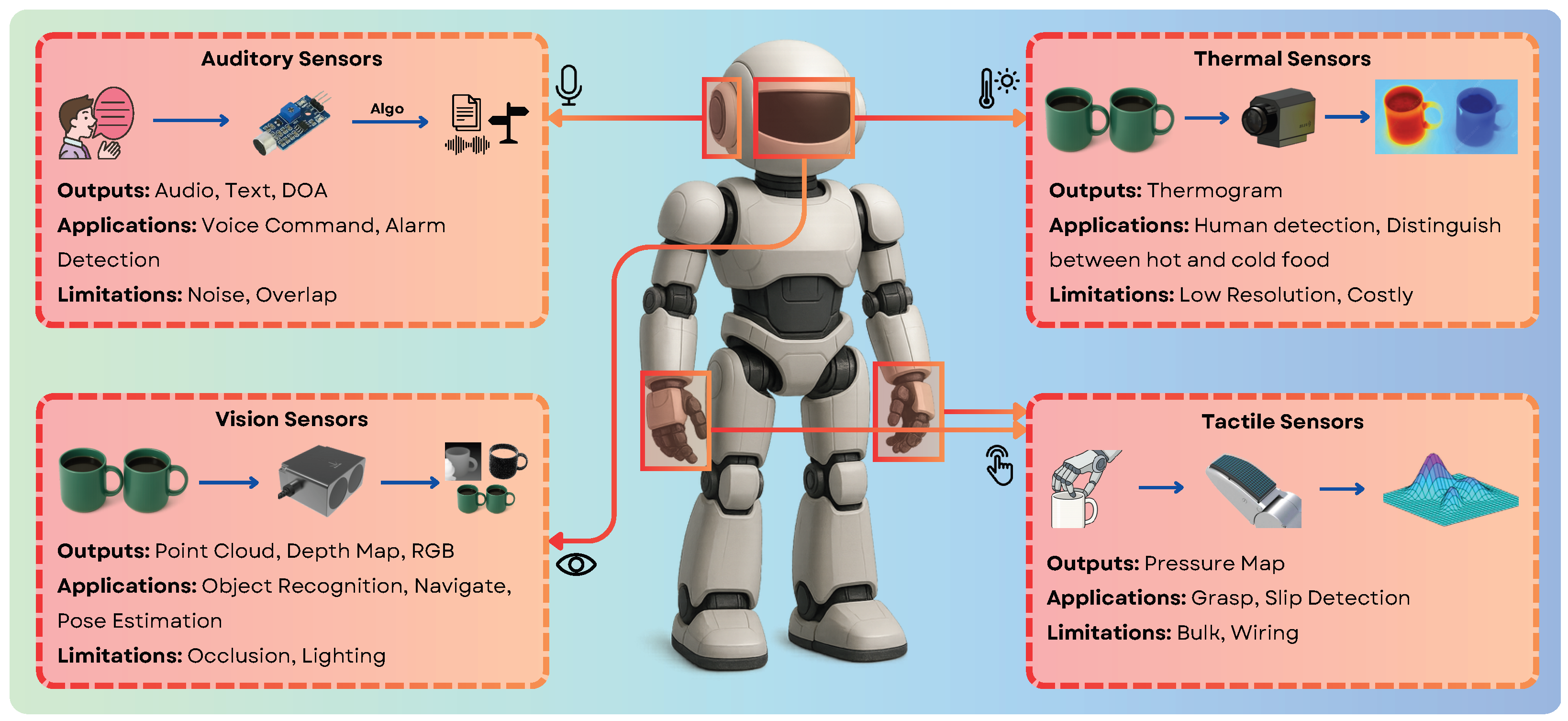

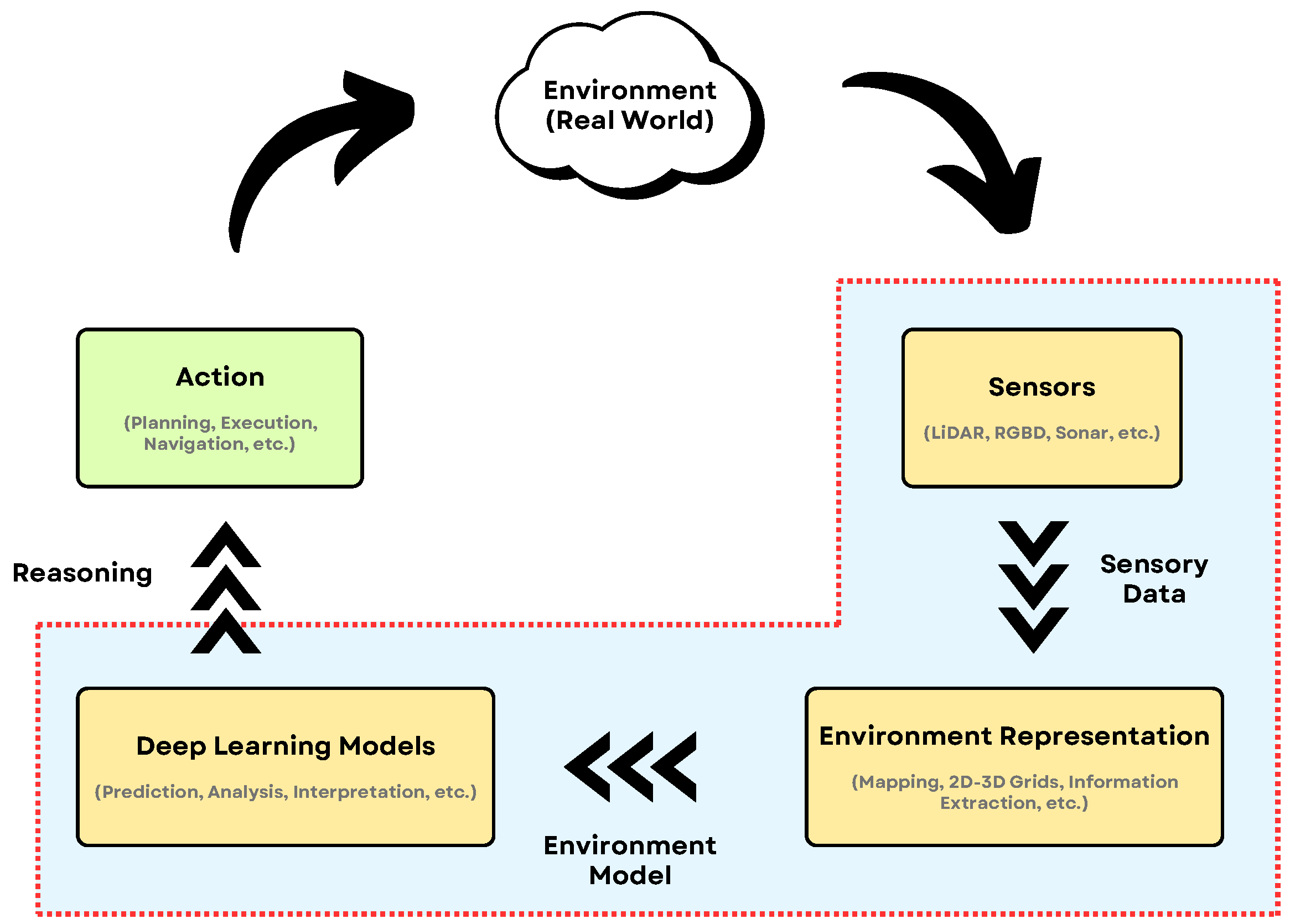

3. Sensing Technologies for Robotic Perception

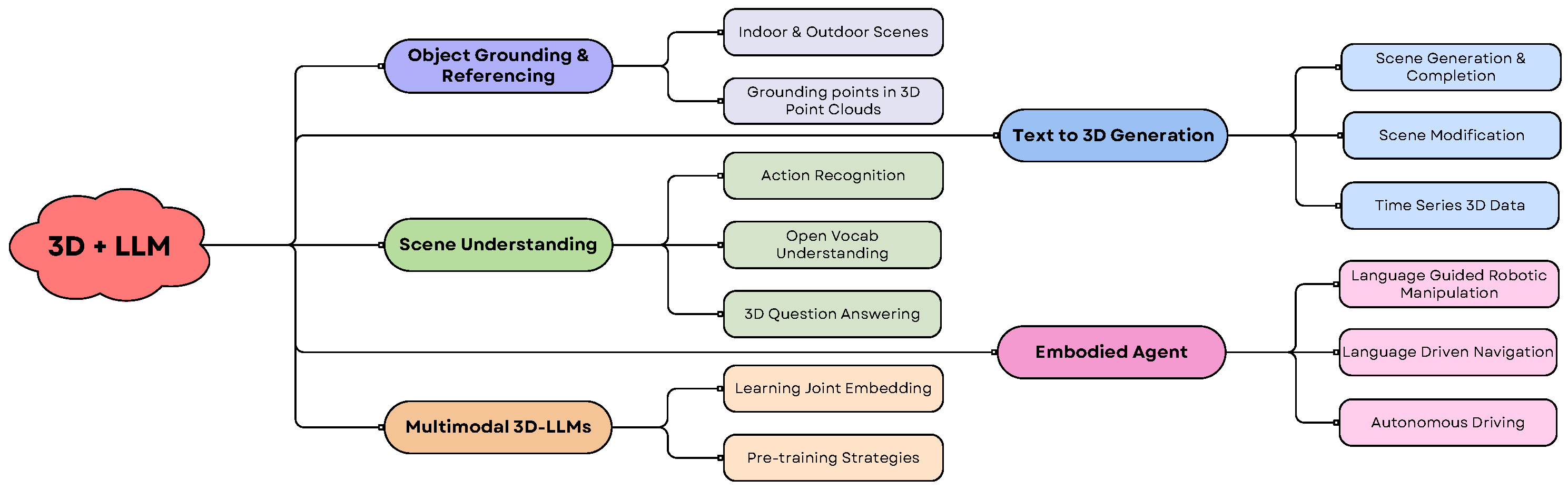

4. LLMs and 3D Vision Advancements and Applications

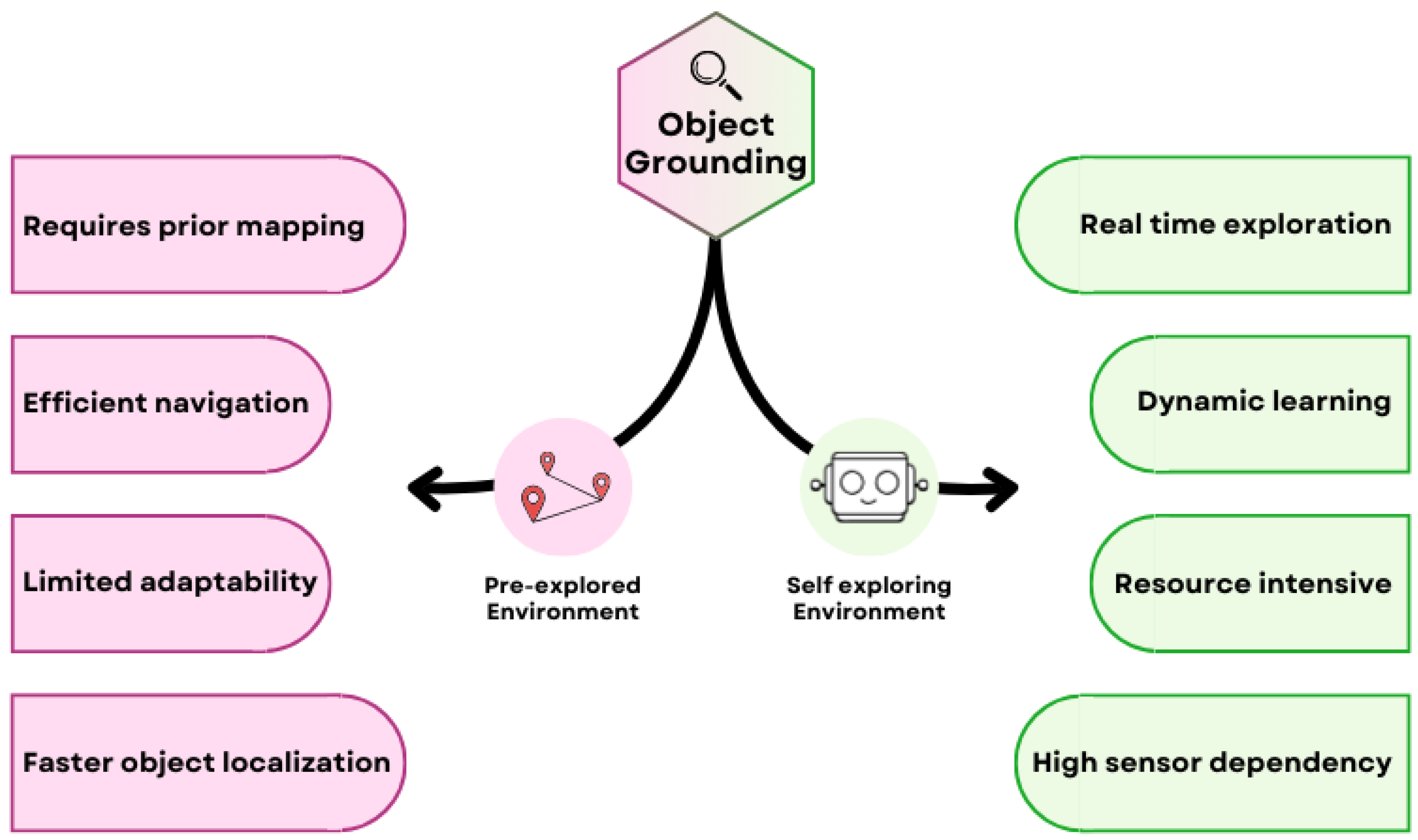

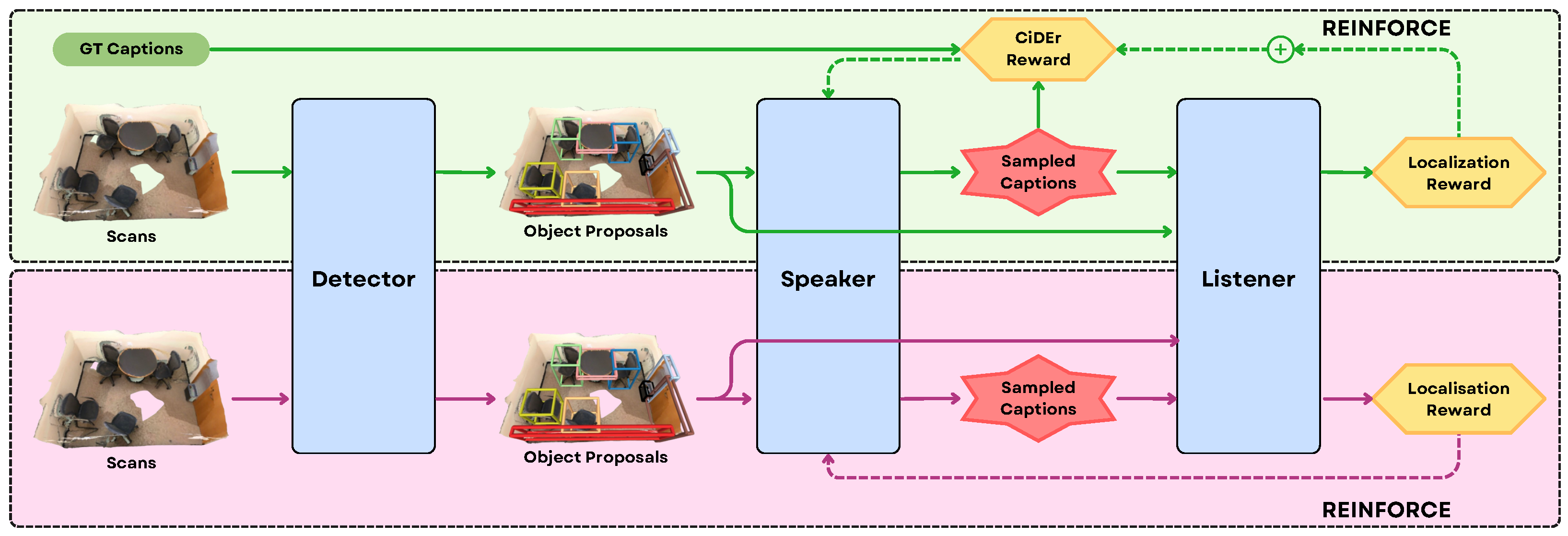

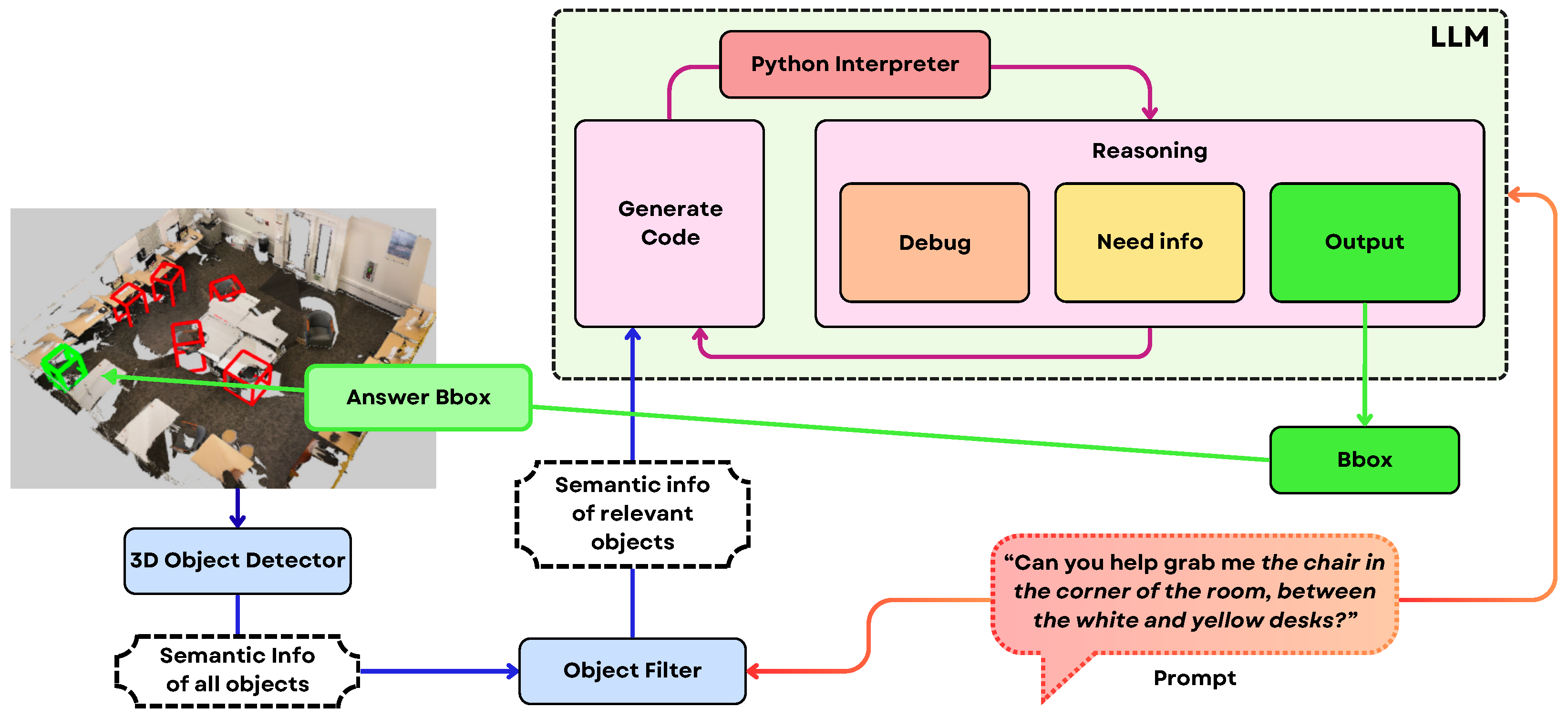

4.1. Localization and Grounding

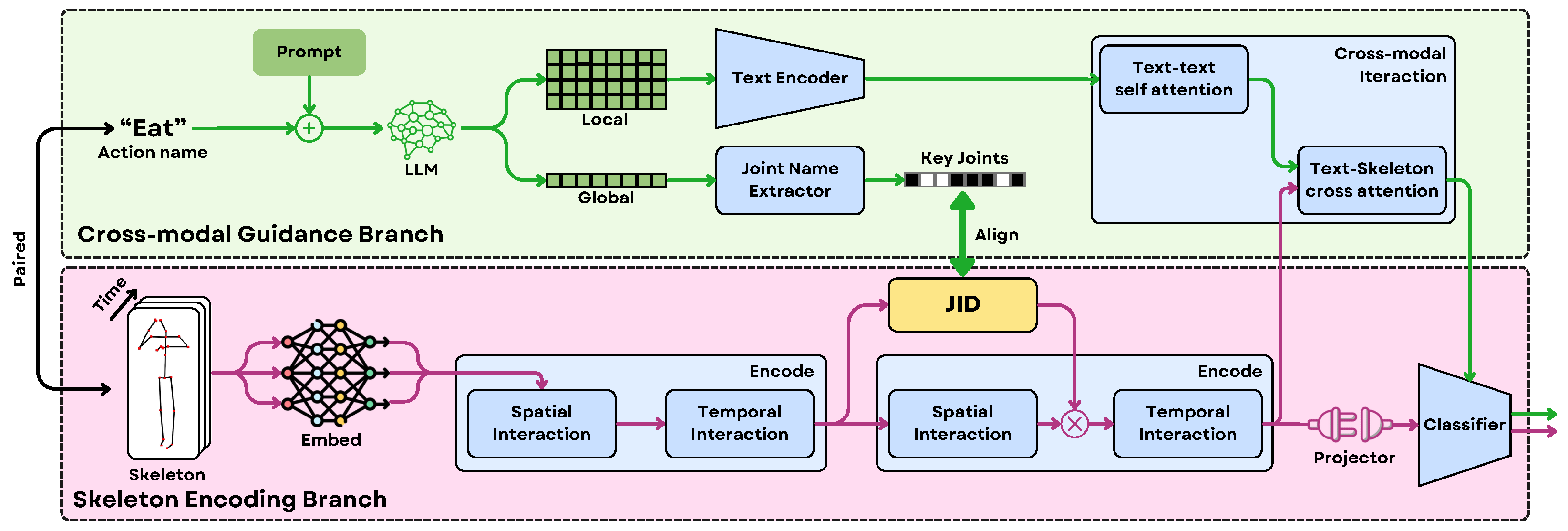

4.2. Dynamic Scenes

4.3. Indoor and Outdoor Scene Understanding

4.4. Open Vocabulary Understanding and Pretraining

4.5. Text to 3D

4.6. Multimodality

4.7. Embodied Agent

5. Datasets

6. Evaluation Metrics

7. Challenges and Limitations

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. Gpt-4 technical report. arXiv 2023, arXiv:2303.08774. [Google Scholar] [CrossRef]

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. Llama: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971. [Google Scholar] [CrossRef]

- Chiang, W.L.; Li, Z.; Lin, Z.; Sheng, Y.; Wu, Z.; Zhang, H.; Zheng, L.; Zhuang, S.; Zhuang, Y.; Gonzalez, J.E.; et al. Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90%* ChatGPT Quality. 2023. Available online: https://vicuna.lmsys.org (accessed on 10 July 2025).

- Zhu, D.; Chen, J.; Shen, X.; Li, X.; Elhoseiny, M. Minigpt-4: Enhancing vision-language understanding with advanced large language models. arXiv 2023, arXiv:2304.10592. [Google Scholar]

- Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; Bi, X.; et al. Deepseek-r1: Incentivizing reasoning capability in llms via reinforcement learning. arXiv 2025, arXiv:2501.12948. [Google Scholar]

- Devlin, J. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Lan, Z. Albert: A lite bert for self-supervised learning of language representations. arXiv 2019, arXiv:1909.11942. [Google Scholar]

- Sanh, V. DistilBERT, a distilled version of BERT: Smaller, faster, cheaper and lighter. arXiv 2019, arXiv:1910.01108. [Google Scholar]

- Liu, Y. Roberta: A robustly optimized bert pretraining approach. arXiv 2019, arXiv:1907.11692. [Google Scholar]

- Joshi, M.; Chen, D.; Liu, Y.; Weld, D.S.; Zettlemoyer, L.; Levy, O. Spanbert: Improving pre-training by representing and predicting spans. Trans. Assoc. Comput. Linguist. 2020, 8, 64–77. [Google Scholar] [CrossRef]

- Radford, A.; Narasimhan, K. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 15 April 2025).

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models are Unsupervised Multitask Learners. OpenAI Blog 2019, 1, 9. [Google Scholar]

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2020; Volume 33, pp. 1877–1901. [Google Scholar]

- Meta. Llama 3.1. Available online: https://ai.meta.com/blog/meta-llama-3-1/ (accessed on 5 February 2025).

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 27730–27744. [Google Scholar]

- Lewis, M. Bart: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. arXiv 2019, arXiv:1910.13461. [Google Scholar]

- Wikipedia. GPT-4. Available online: https://en.wikipedia.org/wiki/GPT-4 (accessed on 19 December 2024).

- Liu, Y.; Wang, Q.; Zhuang, Y.; Hu, H. A Novel Trail Detection and Scene Understanding Framework for a Quadrotor UAV with Monocular Vision. IEEE Sens. J. 2017, 17, 6778–6787. [Google Scholar] [CrossRef]

- Gu, Y.; Wang, Q.; Kamijo, S. Intelligent Driving Data Recorder in Smartphone Using Deep Neural Network-Based Speedometer and Scene Understanding. IEEE Sens. J. 2019, 19, 287–296. [Google Scholar] [CrossRef]

- Huang, Z.; Lv, C.; Xing, Y.; Wu, J. Multi-Modal Sensor Fusion-Based Deep Neural Network for End-to-End Autonomous Driving with Scene Understanding. IEEE Sens. J. 2021, 21, 11781–11790. [Google Scholar] [CrossRef]

- Ni, J.; Ren, S.; Tang, G.; Cao, W.; Shi, P. An Improved Shared Encoder-Based Model for Fast Panoptic Segmentation. IEEE Sens. J. 2024, 24, 22070–22083. [Google Scholar] [CrossRef]

- Wu, Y.; Tian, R.; Swamy, G.; Bajcsy, A. From Foresight to Forethought: VLM-In-the-Loop Policy Steering via Latent Alignment. arXiv 2025, arXiv:2502.01828. [Google Scholar]

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763. [Google Scholar]

- Lu, H.; Liu, W.; Zhang, B.; Wang, B.; Dong, K.; Liu, B.; Sun, J.; Ren, T.; Li, Z.; Yang, H.; et al. Deepseek-vl: Towards real-world vision-language understanding. arXiv 2024, arXiv:2403.05525. [Google Scholar]

- Jia, C.; Yang, Y.; Xia, Y.; Chen, Y.T.; Parekh, Z.; Pham, H.; Le, Q.; Sung, Y.H.; Li, Z.; Duerig, T. Scaling up visual and vision-language representation learning with noisy text supervision. In Proceedings of the International Conference on Machine Learning, Online, 18 July 2021; pp. 4904–4916. [Google Scholar]

- Kim, W.; Son, B.; Kim, I. Vilt: Vision-and-language transformer without convolution or region supervision. In Proceedings of the International Conference on Machine Learning, Online, 18 July 2021; pp. 5583–5594. [Google Scholar]

- Chen, S.; Wu, Z.; Zhang, K.; Li, C.; Zhang, B.; Ma, F.; Yu, F.R.; Li, Q. Exploring embodied multimodal large models: Development, datasets, and future directions. Inf. Fusion 2025, 122, 103198. [Google Scholar] [CrossRef]

- Zhang, D.; Yu, Y.; Dong, J.; Li, C.; Su, D.; Chu, C.; Yu, D. Mm-llms: Recent advances in multimodal large language models. arXiv 2024, arXiv:2401.13601. [Google Scholar]

- Yin, S.; Fu, C.; Zhao, S.; Li, K.; Sun, X.; Xu, T.; Chen, E. A survey on multimodal large language models. arXiv 2023, arXiv:2306.13549. [Google Scholar] [CrossRef]

- Kamath, U.; Keenan, K.; Somers, G.; Sorenson, S. Multimodal LLMs. In Large Language Models: A Deep Dive: Bridging Theory and Practice; Springer Nature: Cham, Switzerland, 2024; pp. 375–421. [Google Scholar] [CrossRef]

- Wang, Y.; Chen, W.; Han, X.; Lin, X.; Zhao, H.; Liu, Y.; Zhai, B.; Yuan, J.; You, Q.; Yang, H. Exploring the reasoning abilities of multimodal large language models (mllms): A comprehensive survey on emerging trends in multimodal reasoning. arXiv 2024, arXiv:2401.06805. [Google Scholar] [CrossRef]

- Ahn, M.; Brohan, A.; Brown, N.; Chebotar, Y.; Cortes, O.; David, B.; Finn, C.; Fu, C.; Gopalakrishnan, K.; Hausman, K.; et al. Do as I can, not as I say: Grounding language in robotic affordances. arXiv 2022, arXiv:2204.01691. [Google Scholar] [CrossRef]

- Huang, W.; Xia, F.; Xiao, T.; Chan, H.; Liang, J.; Florence, P.; Zeng, A.; Tompson, J.; Mordatch, I.; Chebotar, Y.; et al. Inner monologue: Embodied reasoning through planning with language models. arXiv 2022, arXiv:2207.05608. [Google Scholar] [CrossRef]

- Zeng, A.; Attarian, M.; Ichter, B.; Choromanski, K.; Wong, A.; Welker, S.; Tombari, F.; Purohit, A.; Ryoo, M.; Sindhwani, V.; et al. Socratic models: Composing zero-shot multimodal reasoning with language. arXiv 2022, arXiv:2204.00598. [Google Scholar] [CrossRef]

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67. [Google Scholar]

- Vaswani, A.; Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaisek, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017. [Google Scholar]

- Alexey, D. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Ye, T.; Dong, L.; Xia, Y.; Sun, Y.; Zhu, Y.; Huang, G.; Wei, F. Differential transformer. arXiv 2024, arXiv:2410.05258. [Google Scholar] [PubMed]

- Ahmed, E.; Saint, A.; Shabayek, A.E.R.; Cherenkova, K.; Das, R.; Gusev, G.; Aouada, D.; Ottersten, B. A survey on deep learning advances on different 3D data representations. arXiv 2018, arXiv:1808.01462. [Google Scholar]

- Li, C.; Zhang, C.; Cho, J.; Waghwase, A.; Lee, L.H.; Rameau, F.; Yang, Y.; Bae, S.H.; Hong, C.S. Generative ai meets 3d: A survey on text-to-3d in aigc era. arXiv 2023, arXiv:2305.06131. [Google Scholar]

- Bronstein, M.M.; Bruna, J.; LeCun, Y.; Szlam, A.; Vandergheynst, P. Geometric deep learning: Going beyond euclidean data. IEEE Signal Process. Mag. 2017, 34, 18–42. [Google Scholar] [CrossRef]

- Lin, Z.; Peng, X.; Cong, P.; Zheng, G.; Sun, Y.; Hou, Y.; Zhu, X.; Yang, S.; Ma, Y. Wildrefer: 3d object localization in large-scale dynamic scenes with multi-modal visual data and natural language. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 456–473. [Google Scholar]

- Yan, T.; Zeng, W.; Xiao, Y.; Tong, X.; Tan, B.; Fang, Z.; Cao, Z.; Zhou, J.T. Crossglg: Llm guides one-shot skeleton-based 3d action recognition in a cross-level manner. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 113–131. [Google Scholar]

- Li, Z.; Zhang, C.; Wang, X.; Ren, R.; Xu, Y.; Ma, R.; Liu, X.; Wei, R. 3dmit: 3d multi-modal instruction tuning for scene understanding. In Proceedings of the 2024 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Niagara Falls, ON, Canada, 15–19 July 2024; pp. 1–5. [Google Scholar]

- Yang, S.; Liu, J.; Zhang, R.; Pan, M.; Guo, Z.; Li, X.; Chen, Z.; Gao, P.; Guo, Y.; Zhang, S. Lidar-llm: Exploring the potential of large language models for 3d lidar understanding. arXiv 2023, arXiv:2312.14074. [Google Scholar] [CrossRef]

- Jia, B.; Chen, Y.; Yu, H.; Wang, Y.; Niu, X.; Liu, T.; Li, Q.; Huang, S. Sceneverse: Scaling 3d vision-language learning for grounded scene understanding. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 289–310. [Google Scholar]

- Zhang, S.; Huang, D.; Deng, J.; Tang, S.; Ouyang, W.; He, T.; Zhang, Y. Agent3d-zero: An agent for zero-shot 3d understanding. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 186–202. [Google Scholar]

- Xu, R.; Wang, X.; Wang, T.; Chen, Y.; Pang, J.; Lin, D. Pointllm: Empowering large language models to understand point clouds. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 131–147. [Google Scholar]

- Mehan, Y.; Gupta, K.; Jayanti, R.; Govil, A.; Garg, S.; Krishna, M. QueSTMaps: Queryable Semantic Topological Maps for 3D Scene Understanding. arXiv 2024, arXiv:2404.06442. [Google Scholar] [CrossRef]

- Wang, Z.; Huang, H.; Zhao, Y.; Zhang, Z.; Zhao, Z. Chat-3d: Data-efficiently tuning large language model for universal dialogue of 3d scenes. arXiv 2023, arXiv:2308.08769. [Google Scholar]

- Jatavallabhula, K.M.; Kuwajerwala, A.; Gu, Q.; Omama, M.; Chen, T.; Maalouf, A.; Li, S.; Iyer, G.; Saryazdi, S.; Keetha, N.; et al. Conceptfusion: Open-set multimodal 3d mapping. arXiv 2023, arXiv:2302.07241. [Google Scholar]

- Sigurdsson, G.A.; Thomason, J.; Sukhatme, G.S.; Piramuthu, R. Rrex-bot: Remote referring expressions with a bag of tricks. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 5203–5210. [Google Scholar]

- Ding, R.; Yang, J.; Xue, C.; Zhang, W.; Bai, S.; Qi, X. Pla: Language-driven open-vocabulary 3d scene understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7010–7019. [Google Scholar]

- Peng, S.; Genova, K.; Jiang, C.; Tagliasacchi, A.; Pollefeys, M.; Funkhouser, T. Openscene: 3d scene understanding with open vocabularies. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 815–824. [Google Scholar]

- Xue, L.; Gao, M.; Xing, C.; Martín-Martín, R.; Wu, J.; Xiong, C.; Xu, R.; Niebles, J.C.; Savarese, S. Ulip: Learning a unified representation of language, images, and point clouds for 3d understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 1179–1189. [Google Scholar]

- Chen, S.; Garcia, R.; Schmid, C.; Laptev, I. Polarnet: 3d point clouds for language-guided robotic manipulation. arXiv 2023, arXiv:2309.15596. [Google Scholar]

- Giuili, A.; Atari, R.; Sintov, A. ORACLE-Grasp: Zero-Shot Task-Oriented Robotic Grasping using Large Multimodal Models. arXiv 2025, arXiv:2505.08417. [Google Scholar]

- Zheng, D.; Huang, S.; Li, Y.; Wang, L. Learning from Videos for 3D World: Enhancing MLLMs with 3D Vision Geometry Priors. arXiv 2025, arXiv:2505.24625. [Google Scholar] [CrossRef]

- Wang, S.; Yu, Z.; Jiang, X.; Lan, S.; Shi, M.; Chang, N.; Kautz, J.; Li, Y.; Alvarez, J.M. OmniDrive: A Holistic LLM-Agent Framework for Autonomous Driving with 3D Perception, Reasoning and Planning. arXiv 2024, arXiv:2405.01533. [Google Scholar]

- Bai, Y.; Wu, D.; Liu, Y.; Jia, F.; Mao, W.; Zhang, Z.; Zhao, Y.; Shen, J.; Wei, X.; Wang, T.; et al. Is a 3d-tokenized llm the key to reliable autonomous driving? arXiv 2024, arXiv:2405.18361. [Google Scholar] [CrossRef]

- Yang, J.; Chen, X.; Madaan, N.; Iyengar, M.; Qian, S.; Fouhey, D.F.; Chai, J. 3d-grand: A million-scale dataset for 3d-llms with better grounding and less hallucination. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 10–17 June 2025; pp. 29501–29512. [Google Scholar]

- Qian, G.; Li, Y.; Peng, H.; Mai, J.; Hammoud, H.; Elhoseiny, M.; Ghanem, B. Pointnext: Revisiting pointnet++ with improved training and scaling strategies. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 23192–23204. [Google Scholar]

- Liu, M.; Li, X.; Ling, Z.; Li, Y.; Su, H. Frame mining: A free lunch for learning robotic manipulation from 3d point clouds. arXiv 2022, arXiv:2210.07442. [Google Scholar] [CrossRef]

- Sun, C.; Han, J.; Deng, W.; Wang, X.; Qin, Z.; Gould, S. 3D-GPT: Procedural 3D Modeling with Large Language Models. arXiv 2023, arXiv:2310.12945. [Google Scholar] [CrossRef]

- Kuka, V. Multimodal Foundation Models: 2024’s Surveys to Understand the Future of AI. Available online: https://www.turingpost.com/p/multimodal-resources (accessed on 1 October 2025).

- Han, X.; Chen, S.; Fu, Z.; Feng, Z.; Fan, L.; An, D.; Wang, C.; Guo, L.; Meng, W.; Zhang, X.; et al. Multimodal fusion and vision-language models: A survey for robot vision. Inf. Fusion 2026, 126, 103652. [Google Scholar] [CrossRef]

- Li, S.; Tang, H. Multimodal alignment and fusion: A survey. arXiv 2024, arXiv:2411.17040. [Google Scholar] [CrossRef]

- Lin, C.Y.; Hsieh, P.J.; Chang, F.A. Dsp based uncalibrated visual servoing for a 3-dof robot manipulator. In Proceedings of the 2016 IEEE International Conference on Industrial Technology (ICIT), Taipei, Taiwan, 14–17 March 2016; pp. 1618–1621. [Google Scholar]

- Martinez González, P.; Castelán, M.; Arechavaleta, G. Vision Based Persistent Localization of a Humanoid Robot for Locomotion Tasks. Int. J. Appl. Math. Comput. Sci. 2016, 26, 669–682. [Google Scholar] [CrossRef]

- Garcia-Garcia, A.; Orts, S.; Oprea, S.; Rodríguez, J.; Azorin-Lopez, J.; Saval-Calvo, M.; Cazorla, M. Multi-sensor 3D Object Dataset for Object Recognition with Full Pose Estimation. Neural Comput. Appl. 2017, 28, 941–952. [Google Scholar] [CrossRef]

- Zhang, L.; Guo, Z.; Chen, H.; Shuai, L. Corner-Based 3D Object Pose Estimation in Robot Vision. In Proceedings of the 2016 8th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 27–28 August 2016; pp. 363–368. [Google Scholar] [CrossRef]

- Wen, K.; Wu, W.; Kong, X.; Liu, K. A Comparative Study of the Multi-state Constraint and the Multi-view Geometry Constraint Kalman Filter for Robot Ego-Motion Estimation. In Proceedings of the 2016 8th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, 27–28 August 2016; pp. 466–471. [Google Scholar]

- Vicente, P.; Jamone, L.; Bernardino, A. Robotic Hand Pose Estimation Based on Stereo Vision and GPU-enabled Internal Graphical Simulation. J. Intell. Robot. Syst. 2016, 83, 339–358. [Google Scholar] [CrossRef]

- Masuta, H.; Motoyoshi, T.; Koyanagi, K.; Oshima, T.; Lim, H.o. Direct Perception of Easily Visible Information for Unknown Object Grasping. In Proceedings of the 9th International Conference on Intelligent Robotics and Applications, ICIRA 2016, Tokyo, Japan, 22–24 August 2016; Volume 9835, pp. 78–89. [Google Scholar] [CrossRef]

- Kent, D.; Behrooz, M.; Chernova, S. Construction of a 3D object recognition and manipulation database from grasp demonstrations. Auton. Robot. 2015, 40, 175–192. [Google Scholar] [CrossRef]

- Gunatilake, A.; Piyathilaka, L.; Tran, A.; Vishwanathan, V.K.; Thiyagarajan, K.; Kodagoda, S. Stereo Vision Combined with Laser Profiling for Mapping of Pipeline Internal Defects. IEEE Sens. J. 2021, 21, 11926–11934. [Google Scholar] [CrossRef]

- Du, Y.C.; Muslikhin, M.; Hsieh, T.H.; Wang, M.S. Stereo Vision-Based Object Recognition and Manipulation by Regions with Convolutional Neural Network. Electronics 2020, 9, 210. [Google Scholar] [CrossRef]

- Haque, A.U.; Nejadpak, A. Obstacle avoidance using stereo camera. arXiv 2017, arXiv:1705.04114. [Google Scholar] [CrossRef]

- Kumano, M.; Ohya, A.; Yuta, S. Obstacle avoidance of autonomous mobile robot using stereo vision sensor. In Proceedings of the 2nd International Symposium on Robotics and Automation, San Francisco, CA, USA, 24–28 April 2000. [Google Scholar]

- Howard, A. Real-time stereo visual odometry for autonomous ground vehicles. In Proceedings of the 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 3946–3952. [Google Scholar] [CrossRef]

- Zhao, Q.; Luo, B.; Zhang, Y. Stereo-based multi-motion visual odometry for mobile robots. arXiv 2019, arXiv:1910.06607. [Google Scholar]

- Nguyen, C.D.T.; Park, J.; Cho, K.Y.; Kim, K.S.; Kim, S. Novel Descattering Approach for Stereo Vision in Dense Suspended Scatterer Environments. Sensors 2017, 17, 1425. [Google Scholar] [CrossRef] [PubMed]

- He, Y.; Chen, S. Advances in sensing and processing methods for three-dimensional robot vision. Int. J. Adv. Robot. Syst. 2018, 15, 1729881418760623. [Google Scholar] [CrossRef]

- Hebert, M.; Krotkov, E. 3D measurements from imaging laser radars: How good are they? Image Vis. Comput. 1992, 10, 170–178. [Google Scholar] [CrossRef]

- Honegger, D.; Meier, L.; Tanskanen, P.; Pollefeys, M. An open source and open hardware embedded metric optical flow cmos camera for indoor and outdoor applications. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 1736–1741. [Google Scholar]

- Besl, P.J. Active, optical range imaging sensors. Mach. Vis. Appl. 1988, 1, 127–152. [Google Scholar] [CrossRef]

- Kim, M.Y.; Ahn, S.T.; Cho, H.S. Bayesian sensor fusion of monocular vision and laser structured light sensor for robust localization of a mobile robot. J. Inst. Control Robot. Syst. 2010, 16, 381–390. [Google Scholar] [CrossRef]

- Yang, R.; Chen, Y. Design of a 3-D infrared imaging system using structured light. IEEE Trans. Instrum. Meas. 2010, 60, 608–617. [Google Scholar] [CrossRef]

- Cui, Y.; Schuon, S.; Chan, D.; Thrun, S.; Theobalt, C. 3D shape scanning with a time-of-flight camera. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 1173–1180. [Google Scholar]

- Sansoni, G.; Trebeschi, M.; Docchio, F. State-of-The-Art and Applications of 3D Imaging Sensors in Industry, Cultural Heritage, Medicine, and Criminal Investigation. Sensors 2009, 9, 568–601. [Google Scholar] [CrossRef]

- Boyer, K.L.; Kak, A.C. Color-encoded structured light for rapid active ranging. IEEE Trans. Pattern Anal. Mach. Intell. 1987, PAMI-9, 14–28. [Google Scholar] [CrossRef]

- Chen, S.; Li, Y.F.; Zhang, J. Vision processing for realtime 3-D data acquisition based on coded structured light. IEEE Trans. Image Process. 2008, 17, 167–176. [Google Scholar] [CrossRef]

- Wei, B.; Gao, J.; Li, K.; Fan, Y.; Gao, X.; Gao, B. Indoor mobile robot obstacle detection based on linear structured light vision system. In Proceedings of the 2008 IEEE International Conference on Robotics and Biomimetics, Bangkok, Thailand, 22–25 February 2009; pp. 834–839. [Google Scholar]

- King, S.J.; Weiman, C.F. Helpmate autonomous mobile robot navigation system. In Mobile Robots V, Proceedings of the Advances in Intelligent Robotics Systems, Boston, MA, USA, 4–9 November 1990; SPIE: Bellingham, WA, USA, 1991; Volume 1388, pp. 190–198. [Google Scholar]

- Silberman, N.; Fergus, R. Indoor scene segmentation using a structured light sensor. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 601–608. [Google Scholar]

- Sarafraz, A.; Haus, B.K. A structured light method for underwater surface reconstruction. ISPRS J. Photogramm. Remote Sens. 2016, 114, 40–52. [Google Scholar]

- Johnson-Roberson, M.; Bryson, M.; Friedman, A.; Pizarro, O.; Troni, G.; Ozog, P.; Henderson, J.C. High-resolution underwater robotic vision-based mapping and three-dimensional reconstruction for archaeology. J. Field Robot. 2017, 34, 625–643. [Google Scholar]

- Zhang, L.; Curless, B.; Seitz, S.M. Rapid shape acquisition using color structured light and multi-pass dynamic programming. In Proceedings of the First International Symposium on 3D Data Processing Visualization and Transmission, Padova, Italy, 19–21 June 2002; pp. 24–36. [Google Scholar]

- Park, J.; DeSouza, G.N.; Kak, A.C. Dual-beam structured-light scanning for 3-D object modeling. In Proceedings of the Third International Conference on 3-D Digital Imaging and Modeling, Quebec City, QC, Canada, 28 May–1 June 2001; pp. 65–72. [Google Scholar]

- Park, D.J.; Kim, J.H. 3D hand-eye robot vision system using a cone-shaped structured light for the SICE-ICASE International Joint Conference 2006 (SICE-ICCAS 2006). In Proceedings of the 2006 SICE-ICASE International Joint Conference, Busan, Republic of Korea, 18–21 October 2006; pp. 2975–2980. [Google Scholar]

- Stuff, A. LiDAR Comparison Chart. Available online: https://autonomoustuff.com/lidar-chart (accessed on 25 April 2025).

- Robots, G. How to Select the Right LiDAR. Available online: https://www.generationrobots.com/blog/en/how-to-select-the-right-lidar/ (accessed on 25 April 2025).

- Ma, Y.; Fang, Z.; Jiang, W.; Su, C.; Zhang, Y.; Wu, J.; Wang, Z. Gesture Recognition Based on Time-of-Flight Sensor and Residual Neural Network. J. Comput. Commun. 2024, 12, 103–114. [Google Scholar] [CrossRef]

- Farhangian, F.; Sefidgar, M.; Landry, R.J. Applying a ToF/IMU-Based Multi-Sensor Fusion Architecture in Pedestrian Indoor Navigation Methods. Sensors 2021, 21, 3615. [Google Scholar] [CrossRef] [PubMed]

- Bostelman, R.; Hong, T.; Madhavan, R. Obstacle detection using a Time-of-Flight range camera for Automated Guided Vehicle safety and navigation. Integr. Comput.-Aided Eng. 2005, 12, 237–249. [Google Scholar] [CrossRef]

- Horio, M.; Feng, Y.; Kokado, T.; Takasawa, T.; Yasutomi, K.; Kawahito, S.; Komuro, T.; Nagahara, H.; Kagawa, K. Resolving Multi-Path Interference in Compressive Time-of-Flight Depth Imaging with a Multi-Tap Macro-Pixel Computational CMOS Image Sensor. Sensors 2022, 22, 2442. [Google Scholar] [CrossRef]

- Li, F.; Chen, H.; Pediredla, A.; Yeh, C.; He, K.; Veeraraghavan, A.; Cossairt, O. CS-ToF: High-resolution compressive time-of-flight imaging. Opt. Express 2017, 25, 31096–31110. [Google Scholar] [CrossRef]

- Lee, S.; Yasutomi, K.; Morita, M.; Kawanishi, H.; Kawahito, S. A Time-of-Flight Range Sensor Using Four-Tap Lock-In Pixels with High near Infrared Sensitivity for LiDAR Applications. Sensors 2020, 20, 116. [Google Scholar] [CrossRef]

- Agresti, G.; Zanuttigh, P. Deep Learning for Multi-Path Error Removal in ToF Sensors. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018. [Google Scholar]

- Son, K.; Liu, M.Y.; Taguchi, Y. Learning to remove multipath distortions in Time-of-Flight range images for a robotic arm setup. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3390–3397. [Google Scholar] [CrossRef]

- Su, S.; Heide, F.; Wetzstein, G.; Heidrich, W. Deep End-to-End Time-of-Flight Imaging. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 13–18 June 2018; pp. 6383–6392. [Google Scholar] [CrossRef]

- Du, Y.; Deng, Y.; Zhou, Y.; Jiao, F.; Wang, B.; Xu, Z.; Jiang, Z.; Guan, X. Multipath interference suppression in indirect time-of-flight imaging via a novel compressed sensing framework. arXiv 2025, arXiv:2507.19546. [Google Scholar]

- Buratto, E.; Simonetto, A.; Agresti, G.; Schäfer, H.; Zanuttigh, P. Deep Learning for Transient Image Reconstruction from ToF Data. Sensors 2021, 21, 1962. [Google Scholar] [CrossRef]

- Liu, X.; Zhang, Z. LiDAR data reduction for efficient and high quality DEM generation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 173–178. [Google Scholar]

- Yu, J.; Zhang, A.; Zhong, Y.; Nguyen, T.T.; Nguyen, T.D. An Indoor Mobile Robot 2D Lidar Mapping Based on Cartographer-Slam Algorithm. Taiwan Ubiquitous Inf. 2022, 7, 795–804. [Google Scholar]

- Kim, J.; Park, B.j.; Kim, J. Empirical Analysis of Autonomous Vehicle’s LiDAR Detection Performance Degradation for Actual Road Driving in Rain and Fog. Sensors 2023, 23, 2972. [Google Scholar] [CrossRef]

- Otgonbayar, Z.; Kim, J.; Jekal, S.; Kim, C.G.; Noh, J.; Oh, W.C.; Yoon, C.M. Designing a highly near infrared-reflective black nanoparticles for autonomous driving based on the refractive index and principle. J. Colloid Interface Sci. 2024, 667, 663–678. [Google Scholar] [CrossRef] [PubMed]

- IntertialLabs. Why Have 905 and 1550 nm Become the Standard for LiDAR? Available online: https://inertiallabs.com/why-have-905-and-1550-nm-become-the-standard-for-lidars/ (accessed on 1 October 2025).

- Eureka. LIDAR Systems: 905 nm vs 1550 nm Laser Diode Safety and Performance. Available online: https://eureka.patsnap.com/article/lidar-systems-905nm-vs-1550nm-laser-diode-safety-and-performance (accessed on 1 October 2025).

- Jin, J.; Wang, S.; Zhang, Z.; Mei, D.; Wang, Y. Progress on flexible tactile sensors in robotic applications on objects properties recognition, manipulation and human-machine interactions. Soft Sci. 2023, 3, 8.

- ROS Wiki. The BioTac—Multimodal Tactile Sensor. Available online: https://wiki.ros.org/BioTac (accessed on 25 April 2025).

- GelSight. GelSight Datasheet. Available online: https://www.gelsight.com/wp-content/uploads/2022/09/GelSight_Datasheet_GSMini_9.20.22b.pdf (accessed on 25 April 2025).

- Shadow Robot. Tactile Telerobot—The Story So Far. Available online: https://www.shadowrobot.com/blog/tactile-telerobot-the-story-so-far/ (accessed on 25 April 2025).

- Yu, J.; Zhang, K.; Deng, Y. Recent progress in pressure and temperature tactile sensors: Principle, classification, integration and outlook. Soft Sci. 2021, 1, 6.

- Xia, W.; Zhou, C.; Oztireli, C. RETRO: REthinking Tactile Representation Learning with Material PriOrs. arXiv 2025, arXiv:2505.14319.

- Hu, G.; Hershcovich, D.; Seifi, H. HapticCap: A multimodal dataset and task for understanding user experience of vibration haptic signals. arXiv 2025, arXiv:2507.13318.

- Fu, L.; Datta, G.; Huang, H.; Panitch, W.C.H.; Drake, J.; Ortiz, J.; Mukadam, M.; Lambeta, M.; Calandra, R.; Goldberg, K. A touch, vision, and language dataset for multimodal alignment. arXiv 2024, arXiv:2402.13232.

- Cao, G.; Jiang, J.; Bollegala, D.; Li, M.; Luo, S. Multimodal zero-shot learning for tactile texture recognition. Robot. Auton. Syst. 2024, 176, 104688.

- Tejwani, R.; Velazquez, K.; Payne, J.; Bonato, P.; Asada, H. Cross-modality Force and Language Embeddings for Natural Human-Robot Communication. arXiv 2025, arXiv:2502.02772.

- Hu, J.S.; Chan, C.Y.; Wang, C.K.; Wang, C.C. Simultaneous localization of mobile robot and multiple sound sources using microphone array. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 29–34.

- Albustanji, R.N.; Elmanaseer, S.; Alkhatib, A.A.A. Robotics: Five Senses plus One—An Overview. Robotics 2023, 12, 68.

- Guo, X.; Ding, S.; Peng, T.; Li, K.; Hong, X. Robot Hearing Through Optical Channel in a Cocktail Party Environment. Authorea 2022.

- Thermal Engineering. How Does Thermal Vision Technology Assist in Search and Rescue. Available online: https://www.thermal-engineering.org/how-does-thermal-vision-technology-assist-in-search-and-rescue/ (accessed on 25 April 2025).

- Castro Jiménez, L.E.; Martínez-García, E.A. Thermal Image Sensing Model for Robotic Planning and Search. Sensors 2016, 16, 1253.

- Chiu, S.Y.; Tseng, Y.C.; Chen, J.J. Low-Resolution Thermal Sensor-Guided Image Synthesis. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision Workshops (WACVW), Waikoloa, HI, USA, 3–7 January 2023; pp. 60–69.

- Sun, Y.; Zuo, W.; Liu, M. RTFNet: RGB-Thermal Fusion Network for Semantic Segmentation of Urban Scenes. IEEE Robot. Autom. Lett. 2019, 4, 2576–2583.

- Zhao, G.; Huang, J.; Yan, X.; Wang, Z.; Tang, J.; Ou, Y.; Hu, X.; Peng, T. Open-Vocabulary RGB-Thermal Semantic Segmentation. In Computer Vision—ECCV 2024: 18th European Conference, Milan, Italy, September 29–October 4, 2024, Proceedings, Part LXXIV; Springer: Berlin/Heidelberg, Germany, 2024; pp. 304–320.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2017; Volume 30.

- Driess, D.; Xia, F.; Sajjadi, M.S.; Lynch, C.; Chowdhery, A.; Ichter, B.; Wahid, A.; Tompson, J.; Vuong, Q.; Yu, T.; et al. PaLM-E: An embodied multimodal language model. arXiv 2023, arXiv:2303.03378.

- Lu, J.; Batra, D.; Parikh, D.; Lee, S. ViLBERT: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2019; Volume 32.

- Chen, D.; Wu, Q.; Nießner, M.; Chang, A.X. D3Net: A unified speaker-listener architecture for 3D dense captioning and visual grounding. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 487–505.

- Jiang, L.; Zhao, H.; Shi, S.; Liu, S.; Fu, C.W.; Jia, J. PointGroup: Dual-set point grouping for 3D instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4867–4876.

- Fang, J.; Tan, X.; Lin, S.; Vasiljevic, I.; Guizilini, V.; Mei, H.; Ambrus, R.; Shakhnarovich, G.; Walter, M.R. Transcrib3D: 3D Referring Expression Resolution through Large Language Models. arXiv 2024, arXiv:2404.19221.

- Schult, J.; Engelmann, F.; Hermans, A.; Litany, O.; Tang, S.; Leibe, B. Mask3D: Mask transformer for 3D semantic instance segmentation. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 8216–8223.

- Garg, S.; Babu V, M.; Dharmasiri, T.; Hausler, S.; Sünderhauf, N.; Kumar, S.; Drummond, T.; Milford, M. Look No Deeper: Recognizing Places from Opposing Viewpoints under Varying Scene Appearance using Single-View Depth Estimation. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4916–4923.

- Pavlova, M.; Krägeloh-Mann, I.; Sokolov, A.; Birbaumer, N. Recognition of point-light biological motion displays by young children. Perception 2001, 30, 925–933.

- Gu, Q.; Kuwajerwala, A.; Morin, S.; Jatavallabhula, K.M.; Sen, B.; Agarwal, A.; Rivera, C.; Paul, W.; Ellis, K.; Chellappa, R.; et al. ConceptGraphs: Open-Vocabulary 3D Scene Graphs for Perception and Planning. In Proceedings of the 2024 IEEE International Conference on Robotics and Automation (ICRA), Yokohama, Japan, 13–17 May 2024; pp. 5021–5028.

- Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual instruction tuning. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2023; Volume 36, pp. 34892–34916.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Chang, A.; Dai, A.; Funkhouser, T.; Halber, M.; Niessner, M.; Savva, M.; Song, S.; Zeng, A.; Zhang, Y. Matterport3D: Learning from RGB-D data in indoor environments. arXiv 2017, arXiv:1709.06158.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229.

- Abdelreheem, A.; Eldesokey, A.; Ovsjanikov, M.; Wonka, P. Zero-shot 3D shape correspondence. In Proceedings of the SIGGRAPH Asia 2023 Conference Papers, Sydney, NSW, Australia, 12–15 December 2023; pp. 1–11.

- Azuma, D.; Miyanishi, T.; Kurita, S.; Kawanabe, M. ScanQA: 3D question answering for spatial scene understanding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19129–19139.

- Deng, Z.; Gao, Q.; Ju, Z.; Yu, X. Skeleton-Based Multifeatures and Multistream Network for Real-Time Action Recognition. IEEE Sens. J. 2023, 23, 7397–7409.

- Yuan, Z.; Ren, J.; Feng, C.M.; Zhao, H.; Cui, S.; Li, Z. Visual Programming for Zero-shot Open-Vocabulary 3D Visual Grounding. arXiv 2024, arXiv:2311.15383.

- Chen, D.; Chang, A.X.; Nießner, M. ScanRefer: 3D object localization in RGB-D scans using natural language. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 202–221.

- Jin, Z.; Hayat, M.; Yang, Y.; Guo, Y.; Lei, Y. Context-aware alignment and mutual masking for 3D-language pre-training. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 10984–10994.

- Wu, Z.; Song, S.; Khosla, A.; Yu, F.; Zhang, L.; Tang, X.; Xiao, J. 3D ShapeNets: A deep representation for volumetric shapes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1912–1920.

- Uy, M.A.; Pham, Q.H.; Hua, B.S.; Nguyen, T.; Yeung, S.K. Revisiting point cloud classification: A new benchmark dataset and classification model on real-world data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1588–1597.

- Poole, B.; Jain, A.; Barron, J.T.; Mildenhall, B. DreamFusion: Text-to-3D using 2D diffusion. arXiv 2022, arXiv:2209.14988.

- Mildenhall, B.; Srinivasan, P.P.; Tancik, M.; Barron, J.T.; Ramamoorthi, R.; Ng, R. NeRF: Representing scenes as neural radiance fields for view synthesis. Commun. ACM 2021, 65, 99–106.

- Zhou, X.; Ran, X.; Xiong, Y.; He, J.; Lin, Z.; Wang, Y.; Sun, D.; Yang, M.H. GALA3D: Towards text-to-3D complex scene generation via layout-guided generative Gaussian splatting. arXiv 2024, arXiv:2402.07207.

- Qi, Z.; Fang, Y.; Sun, Z.; Wu, X.; Wu, T.; Wang, J.; Lin, D.; Zhao, H. GPT4Point: A unified framework for point-language understanding and generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 26417–26427.

- Zhang, Q.; Zhang, J.; Xu, Y.; Tao, D. Vision transformer with quadrangle attention. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 3608–3624.

- Wang, C.; Zhong, H.; Chai, M.; He, M.; Chen, D.; Liao, J. Chat2Layout: Interactive 3D Furniture Layout with a Multimodal LLM. arXiv 2024, arXiv:2407.21333.

- Liu, D.; Huang, X.; Hou, Y.; Wang, Z.; Yin, Z.; Gong, Y.; Gao, P.; Ouyang, W. Uni3D-LLM: Unifying Point Cloud Perception, Generation and Editing with Large Language Models. arXiv 2024, arXiv:2402.03327.

- Wang, W.; Bao, H.; Dong, L.; Bjorck, J.; Peng, Z.; Liu, Q.; Aggarwal, K.; Mohammed, O.K.; Singhal, S.; Som, S.; et al. Image as a foreign language: BEiT pretraining for vision and vision-language tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 19175–19186.

- Jain, J.; Yang, J.; Shi, H. VCoder: Versatile vision encoders for multimodal large language models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 27992–28002.

- Chen, F.; Han, M.; Zhao, H.; Zhang, Q.; Shi, J.; Xu, S.; Xu, B. X-LLM: Bootstrapping advanced large language models by treating multi-modalities as foreign languages. arXiv 2023, arXiv:2305.04160.

- Hsu, W.N.; Bolte, B.; Tsai, Y.H.H.; Lakhotia, K.; Salakhutdinov, R.; Mohamed, A. HuBERT: Self-supervised speech representation learning by masked prediction of hidden units. IEEE/ACM Trans. Audio Speech Lang. Process. 2021, 29, 3451–3460.

- Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust speech recognition via large-scale weak supervision. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 28492–28518.

- Wu, Y.; Chen, K.; Zhang, T.; Hui, Y.; Berg-Kirkpatrick, T.; Dubnov, S. Large-scale contrastive language-audio pretraining with feature fusion and keyword-to-caption augmentation. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

- Yu, X.; Tang, L.; Rao, Y.; Huang, T.; Zhou, J.; Lu, J. Point-BERT: Pre-training 3D point cloud transformers with masked point modeling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19313–19322.

- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging properties in self-supervised vision transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 10–17 October 2021; pp. 9650–9660.

- Guzhov, A.; Raue, F.; Hees, J.; Dengel, A. AudioCLIP: Extending CLIP to image, text and audio. In Proceedings of the ICASSP 2022—2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Singapore, 23–27 May 2022; pp. 976–980.

- Hong, Y.; Zhen, H.; Chen, P.; Zheng, S.; Du, Y.; Chen, Z.; Gan, C. 3D-LLM: Injecting the 3D world into large language models. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2023; Volume 36, pp. 20482–20494.

- Chen, S.; Guhur, P.L.; Tapaswi, M.; Schmid, C.; Laptev, I. Learning from unlabeled 3D environments for vision-and-language navigation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 638–655.

- Seita, D.; Wang, Y.; Shetty, S.J.; Li, E.Y.; Erickson, Z.; Held, D. ToolFlowNet: Robotic manipulation with tools via predicting tool flow from point clouds. In Proceedings of the Conference on Robot Learning, Atlanta, GA, USA, 6–9 November 2023; pp. 1038–1049.

- Qin, Y.; Huang, B.; Yin, Z.H.; Su, H.; Wang, X. DexPoint: Generalizable point cloud reinforcement learning for sim-to-real dexterous manipulation. In Proceedings of the Conference on Robot Learning, Atlanta, GA, USA, 6–9 November 2023; pp. 594–605.

- Reed, S.; Zolna, K.; Parisotto, E.; Colmenarejo, S.G.; Novikov, A.; Barth-Maron, G.; Gimenez, M.; Sulsky, Y.; Kay, J.; Springenberg, J.T.; et al. A generalist agent. arXiv 2022, arXiv:2205.06175.

- Guhur, P.L.; Chen, S.; Pinel, R.G.; Tapaswi, M.; Laptev, I.; Schmid, C. Instruction-driven history-aware policies for robotic manipulations. In Proceedings of the Conference on Robot Learning, Atlanta, GA, USA, 6–9 November 2023; pp. 175–187.

- Jang, E.; Irpan, A.; Khansari, M.; Kappler, D.; Ebert, F.; Lynch, C.; Levine, S.; Finn, C. BC-Z: Zero-shot task generalization with robotic imitation learning. In Proceedings of the Conference on Robot Learning, Auckland, New Zealand, 14–18 December 2022; pp. 991–1002.

- Liu, S.; James, S.; Davison, A.J.; Johns, E. Auto-Lambda: Disentangling dynamic task relationships. arXiv 2022, arXiv:2202.03091.

- Hong, Y.; Zheng, Z.; Chen, P.; Wang, Y.; Li, J.; Gan, C. MultiPLY: A multisensory object-centric embodied large language model in 3D world. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 26406–26416.

- Huang, T.; Dong, B.; Yang, Y.; Huang, X.; Lau, R.W.; Ouyang, W.; Zuo, W. CLIP2Point: Transfer CLIP to point cloud classification with image-depth pre-training. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 22157–22167.

- Guo, Z.; Zhang, R.; Zhu, X.; Tang, Y.; Ma, X.; Han, J.; Chen, K.; Gao, P.; Li, X.; Li, H.; et al. Point-Bind & Point-LLM: Aligning point cloud with multi-modality for 3D understanding, generation, and instruction following. arXiv 2023, arXiv:2309.00615.

- Ji, J.; Wang, H.; Wu, C.; Ma, Y.; Sun, X.; Ji, R. JM3D & JM3D-LLM: Elevating 3D Representation with Joint Multi-modal Cues. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 2475–2492.

- Karnchanachari, N.; Geromichalos, D.; Tan, K.S.; Li, N.; Eriksen, C.; Yaghoubi, S.; Mehdipour, N.; Bernasconi, G.; Fong, W.K.; Guo, Y.; et al. Towards Learning-Based Planning: The nuPlan Benchmark for Real-World Autonomous Driving. arXiv 2024, arXiv:2403.04133.

- Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. ScanNet: Richly-annotated 3D reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5828–5839.

- Achlioptas, P.; Abdelreheem, A.; Xia, F.; Elhoseiny, M.; Guibas, L. ReferIt3D: Neural Listeners for Fine-Grained 3D Object Identification in Real-World Scenes. In Proceedings of the 16th European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020.

- Wald, J.; Avetisyan, A.; Navab, N.; Tombari, F.; Nießner, M. RIO: 3D object instance re-localization in changing indoor environments. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7658–7667.

- Zhu, Z.; Ma, X.; Chen, Y.; Deng, Z.; Huang, S.; Li, Q. 3D-VisTA: Pre-trained transformer for 3D vision and text alignment. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 2911–2921.

- Liao, Y.; Xie, J.; Geiger, A. KITTI-360: A novel dataset and benchmarks for urban scene understanding in 2D and 3D. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 3292–3310.

- Yeshwanth, C.; Liu, Y.C.; Nießner, M.; Dai, A. ScanNet++: A high-fidelity dataset of 3D indoor scenes. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 12–22.

- Baruch, G.; Chen, Z.; Dehghan, A.; Dimry, T.; Feigin, Y.; Fu, P.; Gebauer, T.; Joffe, B.; Kurz, D.; Schwartz, A.; et al. ARKitScenes: A diverse real-world dataset for 3D indoor scene understanding using mobile RGB-D data. arXiv 2021, arXiv:2111.08897.

- Ramakrishnan, S.K.; Gokaslan, A.; Wijmans, E.; Maksymets, O.; Clegg, A.; Turner, J.; Undersander, E.; Galuba, W.; Westbury, A.; Chang, A.X.; et al. Habitat-Matterport 3D dataset (HM3D): 1000 large-scale 3D environments for embodied AI. arXiv 2021, arXiv:2109.08238.

- Mao, Y.; Zhang, Y.; Jiang, H.; Chang, A.; Savva, M. MultiScan: Scalable RGBD scanning for 3D environments with articulated objects. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 9058–9071.

- Zheng, J.; Zhang, J.; Li, J.; Tang, R.; Gao, S.; Zhou, Z. Structured3D: A large photo-realistic dataset for structured 3D modeling. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part IX; Springer: Cham, Switzerland, 2020; pp. 519–535.

- Deitke, M.; VanderBilt, E.; Herrasti, A.; Weihs, L.; Ehsani, K.; Salvador, J.; Han, W.; Kolve, E.; Kembhavi, A.; Mottaghi, R. ProcTHOR: Large-Scale Embodied AI Using Procedural Generation. In Proceedings of the Advances in Neural Information Processing Systems; Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K., Oh, A., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 5982–5994.

- Hwang, S.; Park, J.; Kim, N.; Choi, Y.; Kweon, I.S. Multispectral Pedestrian Detection: Benchmark Dataset and Baselines. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

- Ma, X.; Yong, S.; Zheng, Z.; Li, Q.; Liang, Y.; Zhu, S.C.; Huang, S. SQA3D: Situated question answering in 3D scenes. arXiv 2022, arXiv:2210.07474.

- Song, S.; Yu, F.; Zeng, A.; Chang, A.X.; Savva, M.; Funkhouser, T. Semantic scene completion from a single depth image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1746–1754.

- Qi, Y.; Wu, Q.; Anderson, P.; Wang, X.; Wang, W.Y.; Shen, C.; Hengel, A.v.d. REVERIE: Remote embodied visual referring expression in real indoor environments. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9982–9991.

- Zhu, F.; Liang, X.; Zhu, Y.; Yu, Q.; Chang, X.; Liang, X. SOON: Scenario oriented object navigation with graph-based exploration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 12689–12699.

- Liu, J.; Liu, Z.; Wu, G.; Ma, L.; Liu, R.; Zhong, W.; Luo, Z.; Fan, X. Multi-interactive feature learning and a full-time multi-modality benchmark for image fusion and segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 8115–8124.

- Liu, J.; Shahroudy, A.; Perez, M.; Wang, G.; Duan, L.Y.; Kot, A.C. NTU RGB+D 120: A large-scale benchmark for 3D human activity understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 2684–2701.

- Cong, P.; Zhu, X.; Qiao, F.; Ren, Y.; Peng, X.; Hou, Y.; Xu, L.; Yang, R.; Manocha, D.; Ma, Y. STCrowd: A multimodal dataset for pedestrian perception in crowded scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19608–19617.

- FLIR. FLIR Thermal Datasets for Algorithm Training. Available online: https://www.flir.in/oem/adas/dataset/ (accessed on 27 April 2025).

- Wang, T.; Mao, X.; Zhu, C.; Xu, R.; Lyu, R.; Li, P.; Chen, X.; Zhang, W.; Chen, K.; Xue, T.; et al. EmbodiedScan: A holistic multi-modal 3D perception suite towards embodied AI. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19757–19767.

- Li, M.; Chen, X.; Zhang, C.; Chen, S.; Zhu, H.; Yin, F.; Yu, G.; Chen, T. M3DBench: Let’s instruct large models with multi-modal 3D prompts. arXiv 2023, arXiv:2312.10763.

- Yuan, Z.; Yan, X.; Li, Z.; Li, X.; Guo, Y.; Cui, S.; Li, Z. Toward Fine-Grained 3-D Visual Grounding Through Referring Textual Phrases. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 19411–19422.

- Yan, X.; Yuan, Z.; Du, Y.; Liao, Y.; Guo, Y.; Cui, S.; Li, Z. Comprehensive visual question answering on point clouds through compositional scene manipulation. IEEE Trans. Vis. Comput. Graph. 2023, 30, 7473–7485.

- Kuznetsova, A.; Rom, H.; Alldrin, N.; Uijlings, J.; Krasin, I.; Pont-Tuset, J.; Kamali, S.; Popov, S.; Malloci, M.; Kolesnikov, A.; et al. The Open Images Dataset V4: Unified image classification, object detection, and visual relationship detection at scale. Int. J. Comput. Vis. 2020, 128, 1956–1981.

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

- Changpinyo, S.; Sharma, P.; Ding, N.; Soricut, R. Conceptual 12M: Pushing web-scale image-text pre-training to recognize long-tail visual concepts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 3558–3568.

- Schuhmann, C.; Vencu, R.; Beaumont, R.; Kaczmarczyk, R.; Mullis, C.; Katta, A.; Coombes, T.; Jitsev, J.; Komatsuzaki, A. LAION-400M: Open dataset of CLIP-filtered 400 million image-text pairs. arXiv 2021, arXiv:2111.02114.

- Schuhmann, C.; Beaumont, R.; Vencu, R.; Gordon, C.; Wightman, R.; Cherti, M.; Coombes, T.; Katta, A.; Mullis, C.; Wortsman, M.; et al. LAION-5B: An open large-scale dataset for training next generation image-text models. In Advances in Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 25278–25294.

- Desai, K.; Kaul, G.; Aysola, Z.; Johnson, J. RedCaps: Web-curated image-text data created by the people, for the people. arXiv 2021, arXiv:2111.11431.

- Chen, Z.; Luo, Y.; Wang, Z.; Baktashmotlagh, M.; Huang, Z. Revisiting domain-adaptive 3D object detection by reliable, diverse and class-balanced pseudo-labeling. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 3714–3726.

- Saito, K.; Watanabe, K.; Ushiku, Y.; Harada, T. Maximum classifier discrepancy for unsupervised domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 13–18 June 2018; pp. 3723–3732.

- Zhang, B.; Wang, Y.; Zhang, C.; Jiang, J.; Luo, X.; Wang, X.; Zhang, Y.; Liu, Z.; Shen, G.; Ye, Y.; et al. FogFusion: Robust 3D object detection based on camera-LiDAR fusion for autonomous driving in foggy weather conditions. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2025, 09544070251327229.

- Maanpää, J.; Taher, J.; Manninen, P.; Pakola, L.; Melekhov, I.; Hyyppä, J. Multimodal end-to-end learning for autonomous steering in adverse road and weather conditions. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 699–706.

- Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the ACL Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; pp. 65–72.

- Lin, C.Y. ROUGE: A package for automatic evaluation of summaries. In Text Summarization Branches Out; Association for Computational Linguistics: Barcelona, Spain, 2004; pp. 74–81.

- Vedantam, R.; Lawrence Zitnick, C.; Parikh, D. CIDEr: Consensus-based image description evaluation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4566–4575.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.

- Wijmans, E.; Datta, S.; Maksymets, O.; Das, A.; Gkioxari, G.; Lee, S.; Essa, I.; Parikh, D.; Batra, D. Embodied question answering in photorealistic environments with point cloud perception. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6659–6668.

- Rubner, Y.; Tomasi, C.; Guibas, L.J. The earth mover’s distance as a metric for image retrieval. Int. J. Comput. Vis. 2000, 40, 99–121.

- Achlioptas, P.; Diamanti, O.; Mitliagkas, I.; Guibas, L. Learning representations and generative models for 3D point clouds. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 40–49.

- Yokoyama, N.; Ha, S.; Batra, D. Success weighted by completion time: A dynamics-aware evaluation criteria for embodied navigation. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 1562–1569.

- Singh, S.U.; Namin, A.S. A survey on chatbots and large language models: Testing and evaluation techniques. Nat. Lang. Process. J. 2025, 10, 100128.

- Deriu, J.; Rodrigo, A.; Otegi, A.; Echegoyen, G.; Rosset, S.; Agirre, E.; Cieliebak, M. Survey on evaluation methods for dialogue systems. Artif. Intell. Rev. 2021, 54, 755–810.

- Norton, A.; Admoni, H.; Crandall, J.; Fitzgerald, T.; Gautam, A.; Goodrich, M.; Saretsky, A.; Scheutz, M.; Simmons, R.; Steinfeld, A.; et al. Metrics for Robot Proficiency Self-Assessment and Communication of Proficiency in Human-Robot Teams. ACM Trans. Hum.-Robot Interact. 2022, 11, 29.

- Bai, Y.; Ding, Z.; Taylor, A. From Virtual Agents to Robot Teams: A Multi-Robot Framework Evaluation in High-Stakes Healthcare Context. arXiv 2025, arXiv:2506.03546.

- Chang, A.X.; Funkhouser, T.; Guibas, L.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. ShapeNet: An information-rich 3D model repository. arXiv 2015, arXiv:1512.03012.

- Goyal, A.; Law, H.; Liu, B.; Newell, A.; Deng, J. Revisiting point cloud shape classification with a simple and effective baseline. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 3809–3820.

- Zini, J.E.; Awad, M. On the explainability of natural language processing deep models. ACM Comput. Surv. 2022, 55, 103.

- iMerit. Managing Uncertainty in Multi-Sensor Fusion: Bayesian Approaches for Robust Object Detection and Localization. Available online: https://imerit.net/resources/blog/managing-uncertainty-in-multi-sensor-fusion-bayesian-approaches-for-robust-object-detection-and-localization/ (accessed on 1 October 2025).

- Pfeifer, T.; Weissig, P.; Lange, S.; Protzel, P. Robust factor graph optimization—A comparison for sensor fusion applications. In Proceedings of the 2016 IEEE 21st International Conference on Emerging Technologies and Factory Automation (ETFA), Berlin, Germany, 6–9 September 2016; pp. 1–4.

- Xu, J.; Yang, G.; Sun, Y.; Picek, S. A Multi-Sensor Information Fusion Method Based on Factor Graph for Integrated Navigation System. IEEE Access 2021, 9, 12044–12054.

- Ziv, Y.; Matzliach, B.; Ben-Gal, I. Sensor Fusion for Target Detection Using LLM-Based Transfer Learning Approach. Entropy 2025, 27, 928.

- Dey, P.; Merugu, S.; Kaveri, S. Uncertainty-aware fusion: An ensemble framework for mitigating hallucinations in large language models. In Proceedings of the Companion Proceedings of the ACM on Web Conference 2025, Sydney, NSW, Australia, 28 April–2 May 2025; pp. 947–951.

- Kweon, W.; Jang, S.; Kang, S.; Yu, H. Uncertainty Quantification and Decomposition for LLM-based Recommendation. In Proceedings of the ACM on Web Conference 2025, Sydney, NSW, Australia, 28 April–2 May 2025.

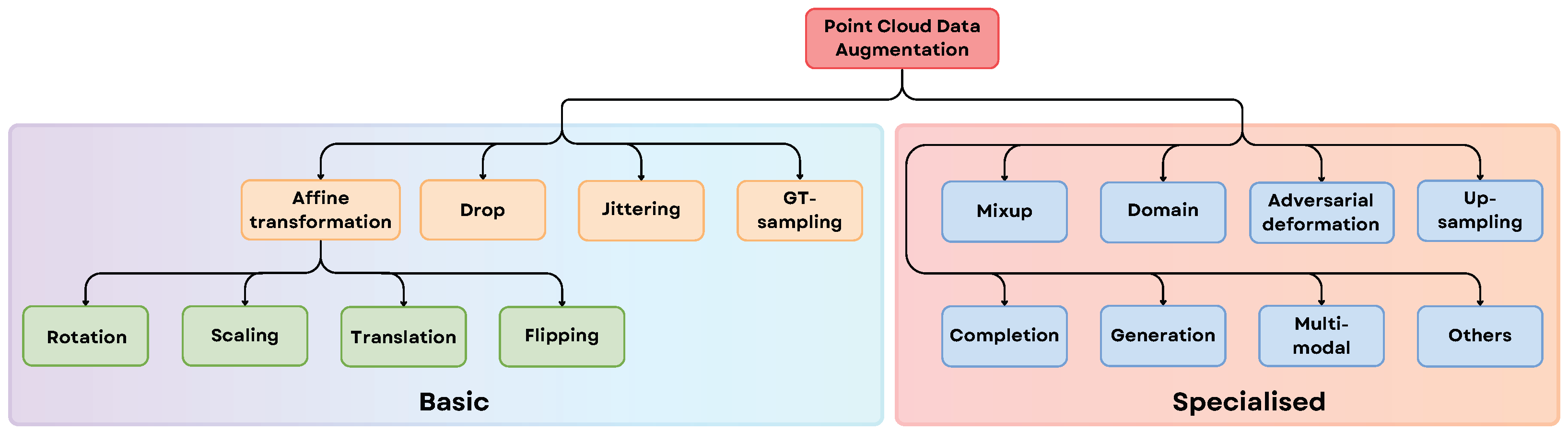

- Zhu, Q.; Fan, L.; Weng, N. Advancements in point cloud data augmentation for deep learning: A survey. Pattern Recognit. 2024, 153, 110532.

- Cuskley, C.; Woods, R.; Flaherty, M. The Limitations of Large Language Models for Understanding Human Language and Cognition. Open Mind 2024, 8, 1058–1083.

- Burtsev, M.; Reeves, M.; Job, A. The Working Limitations of Large Language Models. Available online: https://sloanreview.mit.edu/article/the-working-limitations-of-large-language-models/ (accessed on 27 April 2025).

- Cai, Y.; Rostami, M. Dynamic transformer architecture for continual learning of multimodal tasks. arXiv 2024, arXiv:2401.15275.

- Kumar, S.; Parker, J.; Naderian, P. Adaptive transformers in RL. arXiv 2020, arXiv:2004.03761.

| Metric | Traditional 3D Methods | LLM-3D Pipeline |
|---|---|---|
| FLOPs | 10–200 GFLOPs per inference | 1–5+ TFLOPs per inference |
| Power (W) | 20–80 W (embedded GPUs, edge devices) | 250+ W (high-performance GPUs/clusters) |
| Latency | 10–100 ms | 200 ms |
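
The power and latency rows above can be combined into a rough energy-per-inference estimate, since energy equals average power multiplied by time. The sketch below is a back-of-the-envelope calculation only: it assumes constant average power over one inference and treats the table's lower bounds ("250+ W", "1–5+ TFLOPs") as exact values. The function names are illustrative and not part of any library.

```python
def energy_per_inference_j(power_w: float, latency_ms: float) -> float:
    """Energy in joules for one inference, assuming constant average power draw."""
    return power_w * (latency_ms / 1000.0)


def implied_throughput_tflops(tflops_per_inference: float, latency_ms: float) -> float:
    """Sustained compute rate (TFLOP/s) needed to finish one inference within the latency."""
    return tflops_per_inference / (latency_ms / 1000.0)


if __name__ == "__main__":
    # Traditional 3D methods: 20-80 W, 10-100 ms (ranges taken from the table)
    low = energy_per_inference_j(20, 10)
    high = energy_per_inference_j(80, 100)
    # LLM-3D pipeline: 250+ W at ~200 ms (lower bounds treated as exact)
    llm = energy_per_inference_j(250, 200)
    print(f"traditional: {low:.1f}-{high:.1f} J, LLM-3D: >= {llm:.0f} J per inference")
    # 1-5 TFLOPs completed within 200 ms implies a sustained rate of 5-25 TFLOP/s
    print(implied_throughput_tflops(1, 200), implied_throughput_tflops(5, 200))
```

With these inputs the traditional range works out to about 0.2–8 J per inference and the LLM-3D pipeline to at least 50 J, i.e. roughly 6 to 250 times more energy per inference under these simplifying assumptions.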

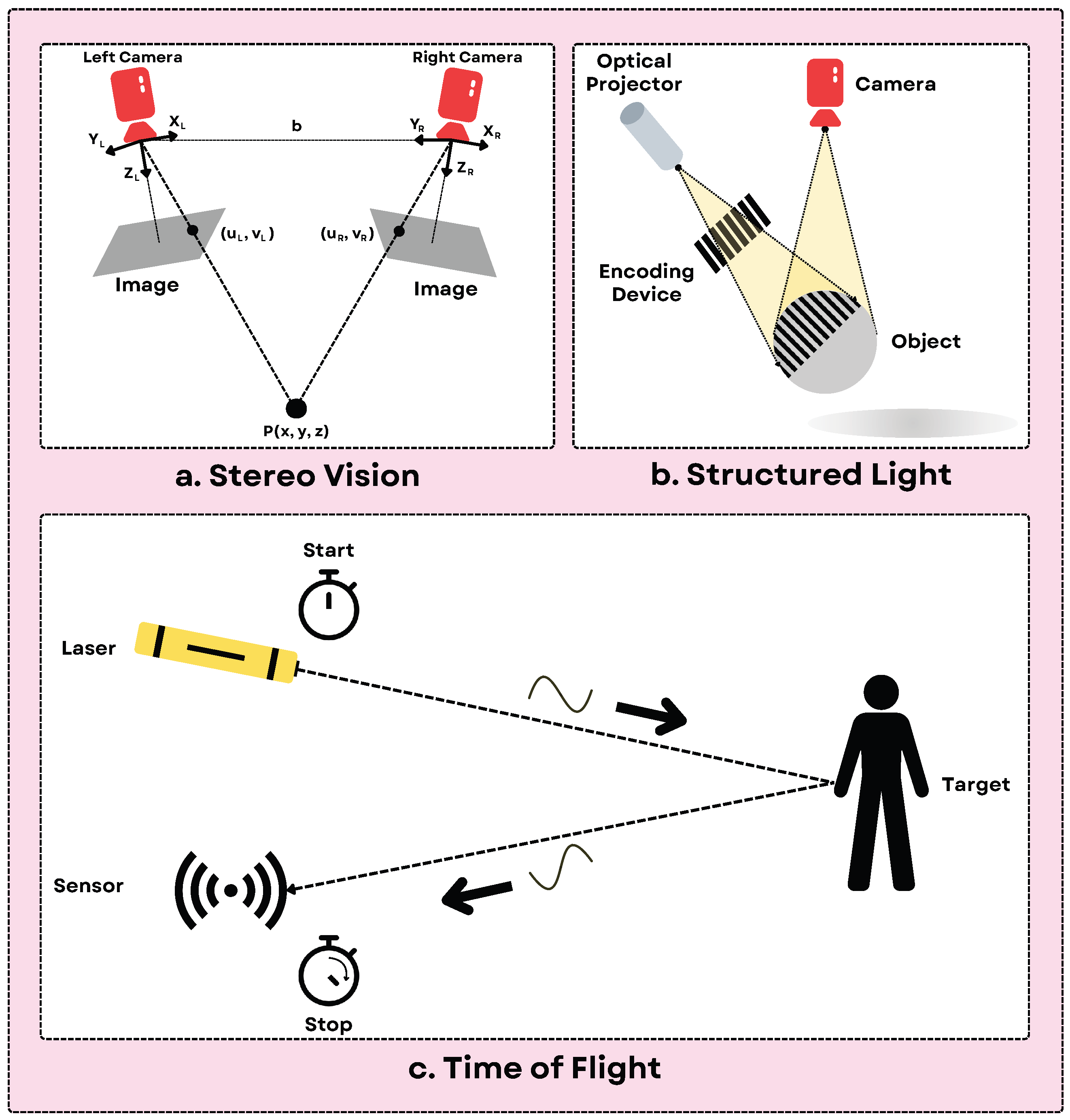

| Method | Principle | Modality | Type |
|---|---|---|---|
| Stereo Vision | Triangulation | Passive | Direct |
| Structured Lighting | Triangulation | Active | Direct |
| Shape from Shading | Monocular Images | Passive | Indirect |
| Shape from Texture | Monocular Images | Passive | Indirect |
| Time of Flight | Time Delay | Active | Direct |
| Interferometry | Time Delay | Active | Direct |
| LiDAR | Time Delay/Phase Shift | Active | Direct |
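
The "Principle" column reduces to a few textbook relations. The sketch below illustrates three of them under idealized assumptions: triangulation for a rectified stereo pair (depth Z = f·B/d, with focal length f in pixels, baseline B, and disparity d; structured light triangulates in the same way using the projector-camera baseline), pulsed time of flight (range = c·Δt/2), and continuous-wave phase-shift ranging (range = c·Δφ/(4π·f_mod), unambiguous only up to c/(2·f_mod)). The numeric values in the usage lines are hypothetical and do not describe any sensor discussed in this review.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s in vacuum; in air it is about 0.03% lower


def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Triangulated depth for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero disparity = point at infinity)")
    return focal_px * baseline_m / disparity_px


def pulsed_tof_range_m(round_trip_s: float) -> float:
    """Range from a pulse's round-trip time; halved because light travels out and back."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def phase_shift_range_m(phase_rad: float, modulation_hz: float) -> float:
    """Range from the phase shift of a continuous-wave modulated signal."""
    return SPEED_OF_LIGHT * phase_rad / (4.0 * math.pi * modulation_hz)


def ambiguity_range_m(modulation_hz: float) -> float:
    """Maximum unambiguous range; beyond it the measured phase wraps past 2*pi."""
    return SPEED_OF_LIGHT / (2.0 * modulation_hz)


if __name__ == "__main__":
    print(stereo_depth_m(700.0, 0.12, 42.0))  # hypothetical stereo rig: about 2 m
    print(pulsed_tof_range_m(66.7e-9))        # about 10 m
    print(phase_shift_range_m(math.pi, 10e6), ambiguity_range_m(10e6))  # ~7.5 m, ~15 m
```

The stereo relation also explains why passive stereo loses precision with distance: depth is inversely proportional to disparity, so a one-pixel disparity error matters far more for distant points than for near ones.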

| Technology | Sensor Example | Key Specs (Resolution/Rate/Points) | Typical Range (m) | Integrated IMU |
|---|---|---|---|---|
| Stereo Vision | Bumblebee 2 | 648 × 488 @ 48 fps | 0.1–20 | × |
| | Bumblebee XB3 | 1280 × 960 @ 16 fps | 0.1–20 | × |
| | Nerian SP1 | 1440 × 1440 @ 40 fps | - | × |
| | DUO3D Stereo Camera | 640 × 480 @ 30 fps | - | ✓ |
| | OrSens3D Camera | 640 × 640 @ 15 fps | - | × |
| Structured Light | Microsoft Kinect v1 | 320 × 240 @ 30 fps | 1.2–3.5 | × |
| | PrimeSense Carmine | 640 × 480 @ 30 fps | 0.35–3 | × |
| | Orbbec Astra Pro | 640 × 480 @ 30 fps | 0.6–8 | × |
| | Intel RealSense D435 | 1280 × 720 @ 90 fps | 0.1–10+ | × |
| | Intel RealSense R200 | 640 × 480 @ 60 fps | 3–4+ | × |
| | Intel RealSense R399 | 640 × 480 @ 60 fps | 0.1–1.2 | × |
| | Intel RealSense ZR300 | 480 × 360 @ 60 fps | 0.5–2.8 | ✓ |
| Time-of-Flight | Microsoft Azure Kinect | 1024 × 1024 @ 30 fps | 0.5–3.86 (NFOV) | ✓ |
| | Microsoft Kinect v2 | 512 × 424 @ 30 fps | 0.5–4.5 | × |
| | SICK Visionary-T | 144 × 176 @ 30 fps | 1–7 | × |
| | Basler ToF ES Camera | 640 × 480 @ 20 fps | 0–13 | × |
| | MESA SR4000 | 176 × 144 @ 54 fps | 5/10 | × |
| | MESA SR4500 | 176 × 144 @ 30 fps | 0.8–9 | × |
| | Argos3D P100 | 160 × 120 @ 160 fps | 3 | × |
| | Argos3D P330 | 352 × 287 @ 40 fps | 0.1–10 | × |
| | Sentis3D M520 | 160 × 120 @ 160 fps | 0.1–5 | × |
| LiDAR | Velodyne VLP-16 (Puck) | 16 ch, ∼300k pts/s | ∼100 | Optional/Ext. |
| | Ouster OS1-64 | 64 ch, ∼1.3M pts/s | ∼100–120 | ✓ |
| | Hesai PandarXT-32 | 32 ch, ∼640k pts/s | ∼120 | ✓ |
| | Livox Mid-70 | Non-repetitive scanning (NRS), ∼100k pts/s | ∼70 | ✓ |
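
The stereo, structured-light, and time-of-flight cameras above output a per-pixel depth image rather than a point cloud. Converting one into the other is a pinhole back-projection, X = (u − c_x)·Z/f_x and Y = (v − c_y)·Z/f_y, where (u, v) is the pixel position and (f_x, f_y, c_x, c_y) are the camera intrinsics. The sketch below assumes a depth image already in metres, ignores lens distortion, and uses placeholder intrinsics; real values would come from each sensor's own calibration.

```python
import numpy as np


def depth_to_point_cloud(depth_m: np.ndarray, fx: float, fy: float,
                         cx: float, cy: float) -> np.ndarray:
    """Back-project an H x W depth image (metres) to an N x 3 camera-frame point cloud.

    Pixels with depth <= 0 (no return / invalid measurement) are discarded.
    """
    h, w = depth_m.shape
    # u holds column indices and v holds row indices; both arrays have shape (h, w)
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth_m.astype(np.float64)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]


if __name__ == "__main__":
    # Hypothetical 480 x 640 frame: a flat wall 1.5 m away with one invalid pixel
    depth = np.full((480, 640), 1.5)
    depth[0, 0] = 0.0
    cloud = depth_to_point_cloud(depth, fx=600.0, fy=600.0, cx=319.5, cy=239.5)
    print(cloud.shape)  # (307199, 3)
```

The function is vectorized because depth cameras produce far more samples than spinning LiDARs: at the listed 1280 × 720 @ 90 fps, the RealSense D435 delivers about 83M depth samples per second, compared with ∼1.3M points/s for the Ouster OS1-64.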

| Type | Sensor | Principle/Specs | Output | Applications | Limitations |
|---|---|---|---|---|---|
| Tactile | GelSight | Optical camera; elastomer deformation | High-res surface, texture, force map | Dexterous manipulation, object ID, slip detection | Bulky, wear, calibration, high processing load |
| | BioTac/Weiss | Biomimetic; piezoresistive array | Force, vibration, temperature | Grasp stability, material ID, HRI | Wiring, calibration drift, signal interpretation |
| | FlexiForce | Thin-film piezoresistive | Single-point force | Contact detection, basic force sensing | Low spatial information, shear wear |
| Audio | ReSpeaker/UMA-8 | MEMS mic array (4–8 mics) | Multi-channel audio, DOA, text | Voice command, speaker localization, ambient sound monitoring | Noise handling, source separation, non-speech interpretation |
| | Built-in mic arrays | Custom array configuration | Similar to above | Same as above | Proprietary processing, limited flexibility |
| | Single mic | Condenser/MEMS | Mono audio | Sound recording, basic event detection | No directional information, noise sensitivity |
| Thermal | FLIR Lepton | LWIR, 160 × 120 px | Low-res thermal image | Presence detection, human detection | Low resolution and frame rate, emissivity dependence |
| | FLIR Boson | LWIR, 320 × 256 or 640 × 512 px | Medium/high-res thermal image | Surveillance, machine monitoring, HRI | High cost, lower resolution than visible-light cameras |
| | Seek CompactPRO | LWIR, 320 × 240 px | Medium-res thermal image | Easy integration, human detection, diagnostics | Varying performance, requires adaptation |
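
The audio rows list direction of arrival (DOA) as an output of microphone arrays. For a single far-field microphone pair, DOA follows from the inter-microphone delay τ via sin θ = c·τ/d, where d is the microphone spacing and c the speed of sound. The sketch below estimates τ from the peak of the plain cross-correlation. It assumes a 0.1 m spacing, 48 kHz sampling, and c = 343 m/s, and resolves delay only to whole samples; practical arrays often use more microphones and more robust weightings such as GCC-PHAT, so this is an illustration rather than a description of any listed device.

```python
import numpy as np

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at roughly 20 degrees C


def estimate_lag_samples(ref: np.ndarray, other: np.ndarray) -> int:
    """Number of samples by which `other` lags `ref`, from the cross-correlation peak."""
    corr = np.correlate(other, ref, mode="full")
    # Index i of a "full" correlation corresponds to lag i - (len(ref) - 1)
    return int(np.argmax(corr)) - (len(ref) - 1)


def doa_degrees(lag_samples: int, fs_hz: float, mic_spacing_m: float) -> float:
    """Far-field angle from broadside for a two-microphone pair: sin(theta) = c * tau / d.

    Positive angles point toward the reference microphone's side.
    """
    tau = lag_samples / fs_hz
    s = np.clip(SPEED_OF_SOUND * tau / mic_spacing_m, -1.0, 1.0)  # guard against noise
    return float(np.degrees(np.arcsin(s)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fs = 48_000
    ref = rng.standard_normal(2048)
    other = np.concatenate([np.zeros(3), ref[:-3]])  # simulate a 3-sample arrival delay
    lag = estimate_lag_samples(ref, other)
    print(lag, doa_degrees(lag, fs, mic_spacing_m=0.1))
```

With d = 0.1 m and fs = 48 kHz, the largest physically possible delay is about 14 samples, so each one-sample step corresponds to several degrees of angle; this coarse resolution is one reason practical systems interpolate the correlation peak.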

| Dataset | Remarks |
|---|---|
| ScanNet [192] | 2.5M views in 1513 scenes with 3D camera poses, reconstructions, and semantic annotations. |
| Sr3D¹ [193] | 83,572 synthetic utterances, built on top of ScanNet [192]. |
| Sr3D+¹ [193] | 83,572 synthetic utterances, enhanced with fewer-distractor utterances. |
| Nr3D¹ [193] | 41,503 human utterances, built on top of ScanNet [192]. |
| ScanRefer¹ [159] | 51,583 descriptions of 11,046 objects from 800 ScanNet scenes [192]. |
| 3RScan [194] | 1482 RGB-D scans of 478 environments with 6DoF mappings and temporal changes. |
| ScanScribe¹ [195] | 2995 RGB-D scans of 1185 scenes with 278K paired descriptions. |
| KITTI360Pose¹ [196] | 150K+ images and 1B 3D points with coherent 2D-3D semantic annotations. |
| ScanNet++ [197] | 460 scenes, 280,000 captured DSLR images, and over 3.7M iPhone RGB-D frames. |
| ARKitScenes [198] | 5047 captures of 1661 unique scenes with oriented bounding boxes of room-defining objects. |
| HM3D [199] | 1000 3D reconstructions of multi-floor residences and private indoor spaces. |
| MultiScan [200] | 273 scans of 117 scenes with 10,957 objects, part-level semantics, and mobility annotations. |
| Structured3D² [201] | 3500 scenes, 21,835 rooms, and 196K renderings, with "primitive + relationship" structures. |
| ProcTHOR² [202] | 10,000 procedurally generated 3D scenes for training embodied AI agents. |
| Matterport3D [152] | 10,800 panoramas and 194,400 RGB-D images of 90 scenes with 2D/3D semantics. |
| ModelNet² [161] | 151,128 3D CAD models belonging to 660 unique object categories. |
| KAIST [203] | 95K color-thermal image pairs with 103K annotations and 1182 unique pedestrians. |
| ScanObjectNN [162] | 15,000 3D objects in 15 categories with point-based attributes and semantic labels. |
| ScanQA [156] | 40K question-answer pairs from 800 indoor scenes drawn from the ScanNet [192] dataset. |
| SQA3D [204] | 650 scenes [192] with 6.8K situations, 20.4K descriptions, and 33.4K reasoning questions. |
| SUNCG² [205] | 45,622 scenes, 49,884 floors, 404,058 rooms, and 5.7M object instances across 84 categories. |
| REVERIE [206] | 10,318 panoramas of 86 buildings with 4140 objects and 21,702 crowd-sourced instructions. |
| FAO [207] | 1500 aligned IR-visible image pairs across 14 classes, covering harsh conditions. |
| FMB [208] | 4K sets of annotated instructions with 40K trajectories. |
| nuPlan [191] | 1282 h of driving scenarios with auto-labeled object tracks and traffic light data. |
| NTU RGB+D 120 [209] | 114K+ RGB+D human action samples across 120 action classes, totaling 8M+ frames. |
| STCrowd [210] | Synchronized LiDAR and camera data with 219K pedestrians, averaging 20 persons per frame. |
| FLIR ADAS [211] | RGB and thermal frames captured under various weather conditions for ADAS. |
| 3D-Grand [61] | 40,087 household scenes paired with 6.2 million densely grounded scene-language instructions. |
| EmbodiedScan [212] | Over 5k scans, 1M egocentric RGB-D views, 1M language prompts, and 160k 3D-oriented boxes spanning 760 categories. |
| M3DBench [213] | Over 320K language pairs across 700 scenes, using a prompting format that interleaves language with visual cues. |
| SceneVerse [46] | 68K scenes with 2.5M vision-language pairs generated using human annotations and a scene-graph-based approach. |
| PhraseRefer [214] | 227K phrase-level annotations from 88K sentences across the Nr3D, Sr3D [193], and ScanRefer [159] datasets. |
| CLEVR3D [215] | 171K questions about object attributes and spatial relationships, generated from 8771 3D scenes. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mehta, V.; Sharma, C.; Thiyagarajan, K. Large Language Models and 3D Vision for Intelligent Robotic Perception and Autonomy. Sensors 2025, 25, 6394. https://doi.org/10.3390/s25206394

Mehta V, Sharma C, Thiyagarajan K. Large Language Models and 3D Vision for Intelligent Robotic Perception and Autonomy. Sensors. 2025; 25(20):6394. https://doi.org/10.3390/s25206394

Chicago/Turabian style: Mehta, Vinit, Charu Sharma, and Karthick Thiyagarajan. 2025. "Large Language Models and 3D Vision for Intelligent Robotic Perception and Autonomy" Sensors 25, no. 20: 6394. https://doi.org/10.3390/s25206394

APA style: Mehta, V., Sharma, C., & Thiyagarajan, K. (2025). Large Language Models and 3D Vision for Intelligent Robotic Perception and Autonomy. Sensors, 25(20), 6394. https://doi.org/10.3390/s25206394