Research on Optimization of RIS-Assisted Air-Ground Communication System Based on Reinforcement Learning

Abstract

1. Introduction

1.1. Related Work

1.2. Contributions

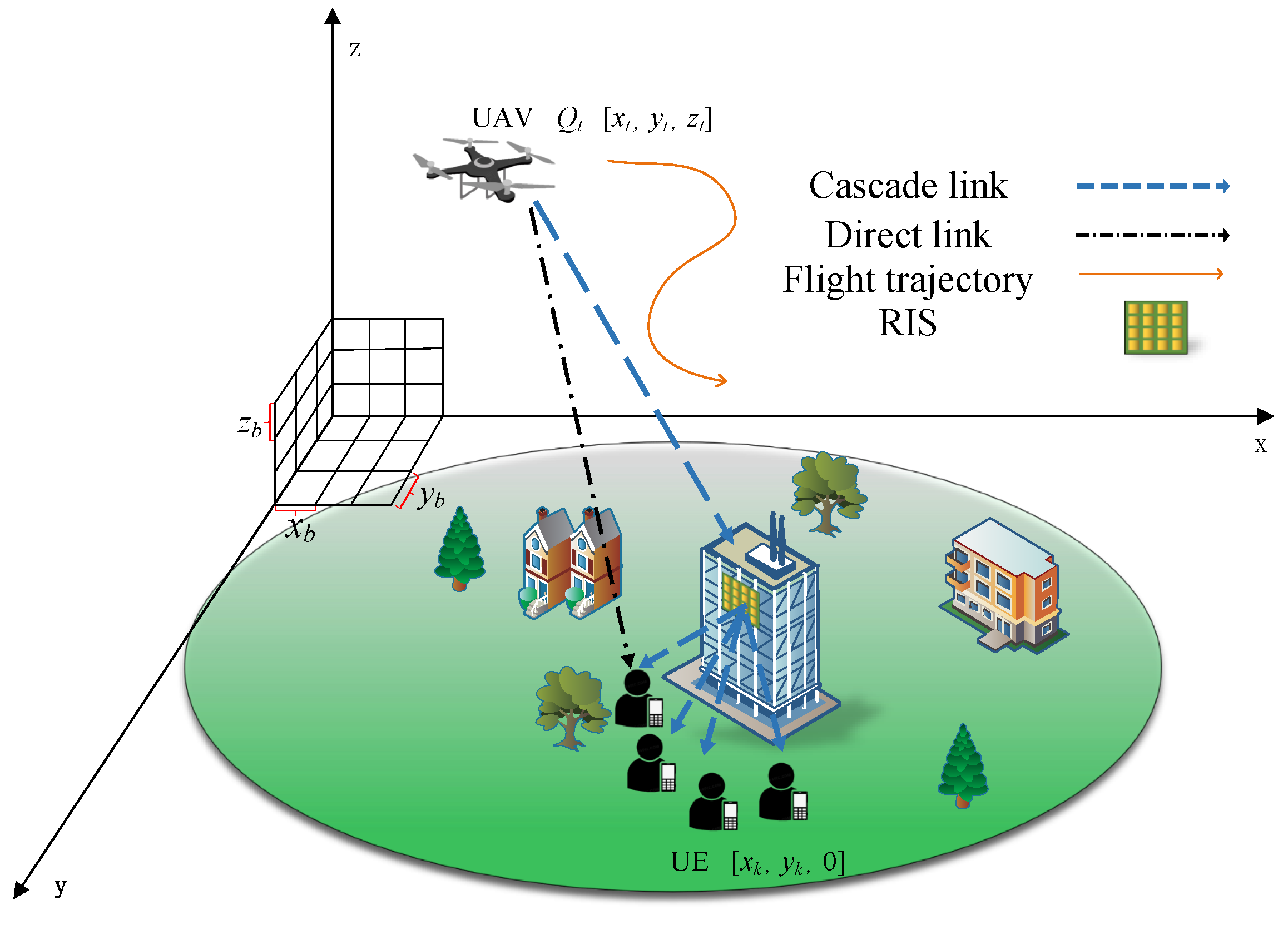

- A joint optimization framework is proposed for RIS-assisted UAV air-to-ground wireless communication networks in 3D space. By jointly optimizing the 3D coordinates of the UAVs, the RIS phase shift matrix, and the UAV transmission power, the framework improves the system sum-rate and throughput and exploits the complementary strengths of the RIS and the UAVs;

- A joint optimization method is proposed that combines water-filling-based BS transmission power optimization with D3QN. Because the BS transmission power optimization is a convex problem, the water-filling algorithm solves it efficiently; the D3QN algorithm handles the optimization over the discrete action space, jointly optimizing the 3D coordinates of the UAVs and the RIS phases. This combination addresses the limitations of traditional methods on high-dimensional non-convex optimization problems;

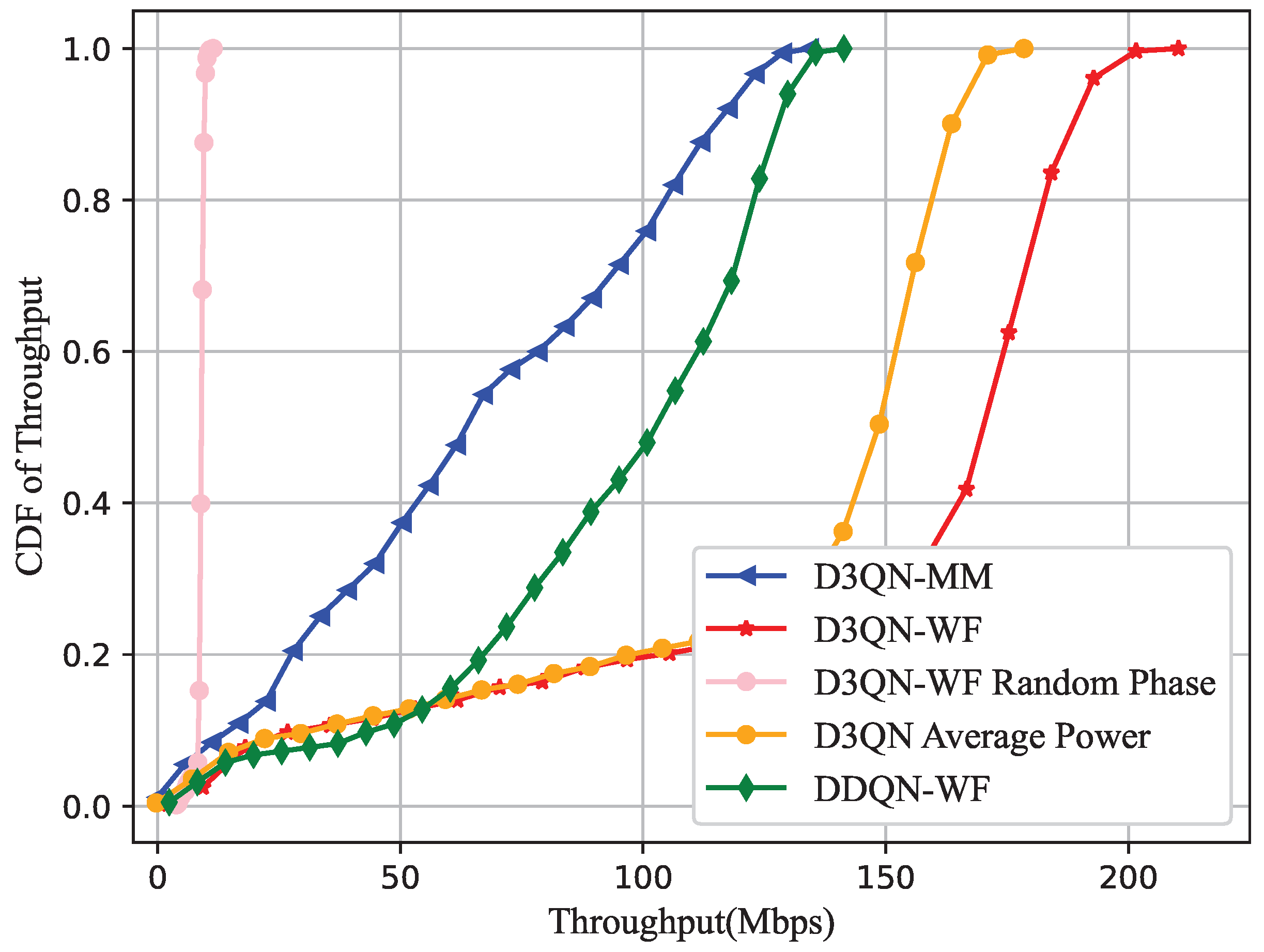

- Detailed simulation results are provided to demonstrate the effectiveness of the proposed algorithm in improving the system sum-rate and throughput. Compared with DDQN-WF, the D3QN-WF algorithm handles multi-dimensional action spaces better, converging faster and more stably. The proposed method increases the system sum-rate by 15.9% and the throughput by 50.1%, offering a new approach to the dynamic optimization of future intelligent communication networks.
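The water-filling step named in the contributions can be illustrated with a minimal sketch. It assumes the textbook formulation (maximize the sum of log2(1 + g_k p_k) under a total power budget); the function name `water_filling` and the argument `gains` (per-channel gain-to-noise ratios) are hypothetical and are not taken from the paper.

```python
import numpy as np


def water_filling(gains, total_power):
    """Textbook water-filling: maximize sum(log2(1 + gains[k] * p[k]))
    subject to sum(p) == total_power and p >= 0 (requires total_power > 0)."""
    gains = np.asarray(gains, dtype=float)
    floors = 1.0 / gains              # inverse gains act as the "floor" heights
    order = np.argsort(floors)        # strongest channels first
    floors_sorted = floors[order]
    n = len(gains)
    # Shrink the active set until the water level exceeds every active floor.
    for active in range(n, 0, -1):
        level = (total_power + floors_sorted[:active].sum()) / active
        if level > floors_sorted[active - 1]:
            break
    power = np.zeros(n)
    power[order[:active]] = level - floors_sorted[:active]
    return power


# Two channels with gains 2.0 and 1.0 sharing one unit of power.
allocation = water_filling([2.0, 1.0], total_power=1.0)
```

Stronger channels receive more power, and channels whose floor lies above the water level receive none, which is why the closed-form solution is cheap compared with a learned power policy.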

2. System Model

2.1. Channel Model

2.2. Downlink Signal Transmission Modeling and Optimization

2.3. Throughput Model

3. DRL-Based Algorithms

- State: The state of the system at time slot t consists of the 3D coordinates of the UAV and the phase shift matrix of the RIS at time slot t. S denotes the state space.

- Action: The action of the system at time slot t consists of the spatial action of the UAV, which includes horizontal and vertical movement, and the adjustment of the RIS phase matrix, in which the phase of each RIS element is adjusted in discrete steps within a predetermined range. Each action belongs to the action space A.

- State Transition Probability: The optimization of UAV path planning and RIS phase adjustment can be modeled as an MDP, in which the state transition probability depends only on the current state and action.

- Reward: After each action, the UAV receives a reward from the environment, which it uses to evaluate the action and adjust its policy toward higher returns, thereby improving the sum rate of the system. The reward obtained after taking an action in a given state at time slot t is the downlink sum rate of the system at time slot t. The goal of the MDP is to maximize the expected cumulative return; the maximum return is obtained when the system reaches the state that achieves the maximum rate.
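The state, action, and reward definitions above can be sketched in code. Everything specific here is an assumption made for illustration: the seven-move UAV action set, the 2-bit phase quantization, the one-element-per-step phase update, and the names `step_state`, `phase_shift_matrix`, and `sum_rate_reward` are hypothetical, and the reward uses the standard Shannon sum rate over per-user SINR values.

```python
import numpy as np

# Hypothetical discretization: hover, +/-x, +/-y (horizontal), +/-z (vertical).
UAV_MOVES = np.array([
    [0, 0, 0],
    [1, 0, 0], [-1, 0, 0],
    [0, 1, 0], [0, -1, 0],
    [0, 0, 1], [0, 0, -1],
], dtype=float)
PHASE_LEVELS = 4                       # assumed 2-bit RIS phase resolution
PHASE_STEP = 2 * np.pi / PHASE_LEVELS


def step_state(position, phase_idx, move_id, element_id, phase_delta, step_m=1.0):
    """Apply one discrete action and return the next (position, phase_idx)."""
    next_position = position + step_m * UAV_MOVES[move_id]
    next_phase_idx = phase_idx.copy()
    next_phase_idx[element_id] = (phase_idx[element_id] + phase_delta) % PHASE_LEVELS
    return next_position, next_phase_idx


def phase_shift_matrix(phase_idx):
    """Diagonal RIS phase shift matrix with unit-modulus entries."""
    return np.diag(np.exp(1j * PHASE_STEP * phase_idx))


def sum_rate_reward(sinr, bandwidth_hz=1e6):
    """Reward as the downlink sum rate in bit/s over linear SINR values."""
    return bandwidth_hz * np.sum(np.log2(1.0 + np.asarray(sinr, dtype=float)))


position = np.array([0.0, 0.0, 50.0])
phases = np.zeros(4, dtype=int)
position, phases = step_state(position, phases, move_id=5, element_id=2, phase_delta=-1)
```

Because the next state is computed from the current state and action alone, the transition satisfies the Markov property stated in the list.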

Algorithm 1: D3QN-WF algorithm
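Since the steps of Algorithm 1 are not reproduced here, the following is only a sketch of the two standard ingredients that the name D3QN (Dueling Double Deep Q-Network) implies: the dueling aggregation of value and advantage streams, and the double-DQN target in which the online network selects the next action and the target network evaluates it. The function names are hypothetical, the arrays stand in for network outputs, and `gamma=0.9` assumes that the decay factor in the hyperparameter table is the discount factor.

```python
import numpy as np


def dueling_q(value, advantage):
    """Combine V(s), shape (batch, 1), with A(s, a), shape (batch, n_actions):
    Q(s, a) = V(s) + A(s, a) - mean_a A(s, a)."""
    return value + advantage - advantage.mean(axis=1, keepdims=True)


def double_dqn_target(reward, done, q_online_next, q_target_next, gamma=0.9):
    """Double-DQN target: the online network picks the action,
    the target network supplies its value."""
    best_action = np.argmax(q_online_next, axis=1)
    bootstrap = q_target_next[np.arange(len(reward)), best_action]
    return reward + gamma * (1.0 - done) * bootstrap


q = dueling_q(np.array([[1.0], [2.0]]), np.array([[1.0, 3.0], [0.0, 0.0]]))
y = double_dqn_target(
    reward=np.array([1.0, 1.0]),
    done=np.array([0.0, 1.0]),
    q_online_next=np.array([[0.0, 2.0], [5.0, 1.0]]),
    q_target_next=np.array([[10.0, 20.0], [30.0, 40.0]]),
)
```

Decoupling action selection from action evaluation is what reduces the overestimation bias of plain DQN, and subtracting the mean advantage keeps the value and advantage streams identifiable.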

4. Complexity of Simulation Analysis

5. Simulation Result

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BS | base station |
| UAV | unmanned aerial vehicle |
| RIS | reconfigurable intelligent surface |
| ZF | zero-forcing |
| DRL | deep reinforcement learning |
| 6G | sixth generation |
| 5G | fifth generation |
| ML | machine learning |
| RL | reinforcement learning |
| DDQN | double deep Q-network |
| D3QN | dueling double deep Q-network |
| D3QN-WF | dueling double deep Q-network water-filling algorithm |
| DDQN-WF | double deep Q-network water-filling algorithm |
| SINR | signal-to-interference-plus-noise ratio |
| CSI | channel state information |
| LoS | line-of-sight |
| NLoS | non-line-of-sight |
| AoI | area of interest |
| MDP | Markov decision process |


| Physical Meaning | Parameter | Value |
|---|---|---|
| Noise Power | | -80 dBm |
| Bandwidth | B | 1 MHz |
| Path Loss Exponent | | 4 |
| Path Channel Gain | | -40 dBm |
| UAV Transmit Power | | 30 dBm |
| Rice Factor | | |
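As a rough sanity check on the simulation parameters in the table above, the values can be combined into a single-link budget. This is only a sketch under strong assumptions: it uses a plain log-distance path loss model rather than the paper's RIS-assisted channel, treats the listed path channel gain as the reference gain at 1 m in dB, and picks a hypothetical link distance of 100 m.

```python
import math


def dbm_to_watt(dbm):
    """Convert a power level from dBm to watts."""
    return 10 ** ((dbm - 30) / 10)


def received_power_dbm(tx_dbm, ref_gain_db, path_loss_exp, distance_m):
    """Log-distance model: reference gain at 1 m plus distance-dependent loss."""
    return tx_dbm + ref_gain_db - 10 * path_loss_exp * math.log10(distance_m)


# Values taken from the table above.
tx_dbm, noise_dbm, ref_gain_db = 30.0, -80.0, -40.0
path_loss_exp, bandwidth_hz = 4.0, 1e6
distance_m = 100.0  # hypothetical distance, not from the paper

rx_dbm = received_power_dbm(tx_dbm, ref_gain_db, path_loss_exp, distance_m)
snr = dbm_to_watt(rx_dbm) / dbm_to_watt(noise_dbm)
rate_bps = bandwidth_hz * math.log2(1 + snr)
```

Under these assumptions the received power is -90 dBm, which is 10 dB below the noise floor (a linear SNR of about 0.1), so a direct link with path loss exponent 4 supports only a low rate at this distance.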

| Physical Meaning | Parameter | Value |
|---|---|---|
| Learning Rate | | |
| Decay Factor | | 0.9 |
| Replay Buffer | F | 1500 |
| Greedy Policy | | 0.1 |
| Update Step | O | 750 |
| Mini-batch Size | Mini-batch size | 75 |
| Activation Function | Activation function | ReLU |
| Optimizer | Optimizer | RMSProp |
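The hyperparameters in the table above map onto the usual DQN training scaffolding roughly as follows. This sketch assumes that the greedy policy value 0.1 is the exploration probability of an epsilon-greedy policy and that the update step 750 is the period for synchronizing the target network; the class and function names are hypothetical.

```python
import random
from collections import deque

import numpy as np

BUFFER_SIZE = 1500          # replay buffer capacity F
BATCH_SIZE = 75             # mini-batch size
TARGET_UPDATE_STEPS = 750   # update step O (assumed target-sync period)
EPSILON = 0.1               # assumed exploration probability


class ReplayBuffer:
    """Fixed-capacity experience buffer; the oldest transitions are evicted first."""

    def __init__(self, capacity=BUFFER_SIZE):
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state):
        self.memory.append((state, action, reward, next_state))

    def sample(self, batch_size=BATCH_SIZE):
        return random.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


def epsilon_greedy(q_values, epsilon=EPSILON):
    """Pick a random action with probability epsilon, else the greedy one."""
    if random.random() < epsilon:
        return random.randrange(len(q_values))
    return int(np.argmax(q_values))


def should_sync_target(step, period=TARGET_UPDATE_STEPS):
    """True on the steps where the target network copies the online weights."""
    return step > 0 and step % period == 0
```

A typical loop would call `epsilon_greedy` on the online network's Q-values, `push` the resulting transition, train on `sample()` once the buffer holds at least `BATCH_SIZE` transitions, and copy weights whenever `should_sync_target(step)` is true.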


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yao, Y.; Liu, X.; Huang, S.; Yue, X. Research on Optimization of RIS-Assisted Air-Ground Communication System Based on Reinforcement Learning. Sensors 2025, 25, 6382. https://doi.org/10.3390/s25206382
