Data-Driven Optimization of Healthcare Recommender System Retraining Pipelines in MLOps with Wearable IoT Data

Abstract

1. Introduction

2. Related Work

2.1. Preliminary

2.1.1. Recommender Systems

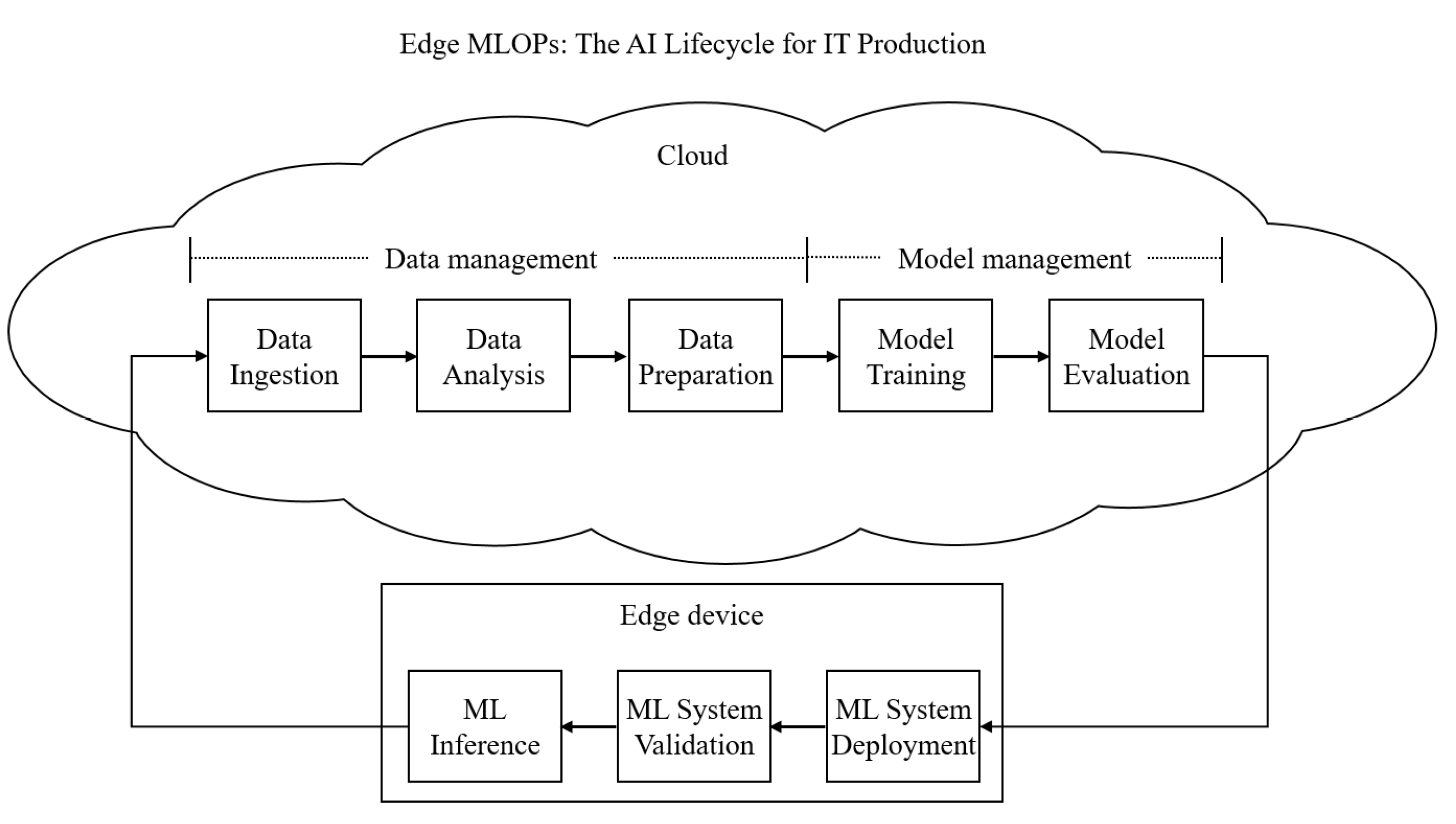

2.1.2. Edge MLOps

2.2. Model Retraining and Continuous Learning

2.3. Data Management in ML

2.4. Data Reduction

2.5. Feature Selection

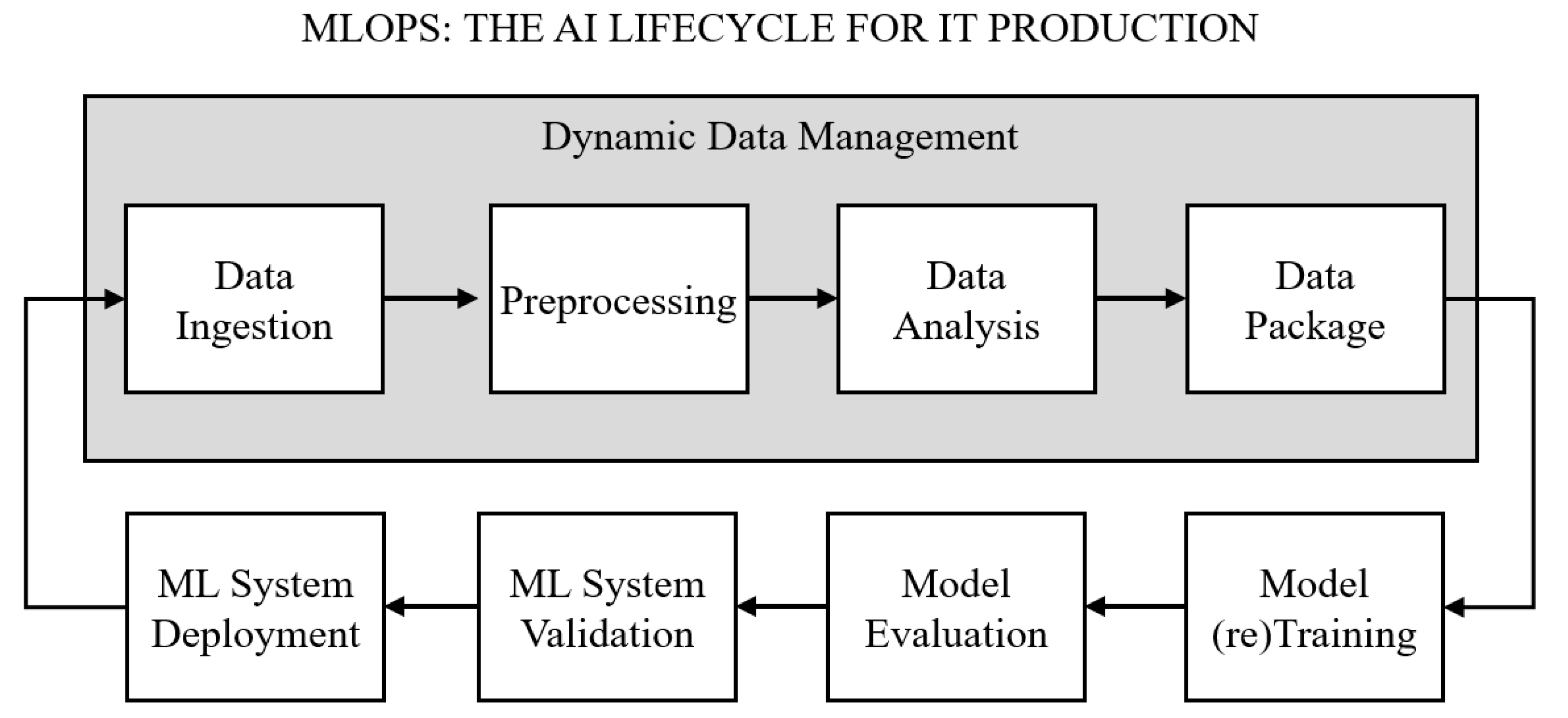

3. Data-Driven Optimization with DDM

3.1. Overview of DDM

- Feature selection module: identifies and retains the user-relevant features that contribute most to effective model training.

- Data reduction module: implemented using matrix factorization, this module removes redundant portions of the training data, compressing the dataset and improving computational efficiency.

3.2. Raw Data

- Old Data: datasets previously used for training recommender models, which preserve historical patterns of user behavior.

- New Data: data collected during service operation, which capture the most recent user behavior.

3.3. Preprocessing

3.4. Data Analysis

| Algorithm 1: Dynamic Data Management (DDM) Workflow | |

| 1 | Input: New_data (csv), Old_data (csv) |

| 2 | Output: Data_version_N (csv) |

| 3 | NewData, OldData ← read_csv(New_data), read_csv(Old_data) |

| 4 | function dynamic_data_management(OldData, NewData): |

| 5 | NewData_preprocessed ← preprocess(NewData) |

| 6 | UserPatternData, FeatureImportances ← analyze_data(OldData, NewData_preprocessed) |

| 7 | DataVersion_N ← package_data(UserPatternData, NewData_preprocessed, FeatureImportances) |

| 8 | return DataVersion_N |

| 9 | end function |

| 10 | function analyze_data(OldData, NewData_preprocessed): |

| 11 | UserPatternData ← data_reduction_module(OldData) |

| 12 | FeatureImportances ← feature_selection_module(UserPatternData, NewData_preprocessed) |

| 13 | return UserPatternData, FeatureImportances |

| 14 | end function |

| 15 | function package_data(UserPatternData, NewData_preprocessed, FeatureImportances): |

| 16 | DataVersion_N ← combine(UserPatternData, NewData_preprocessed) |

| 17 | DataVersion_N ← apply_weights_and_filter (DataVersion_N, FeatureImportances) |

| 18 | return DataVersion_N |

| 19 | end function |

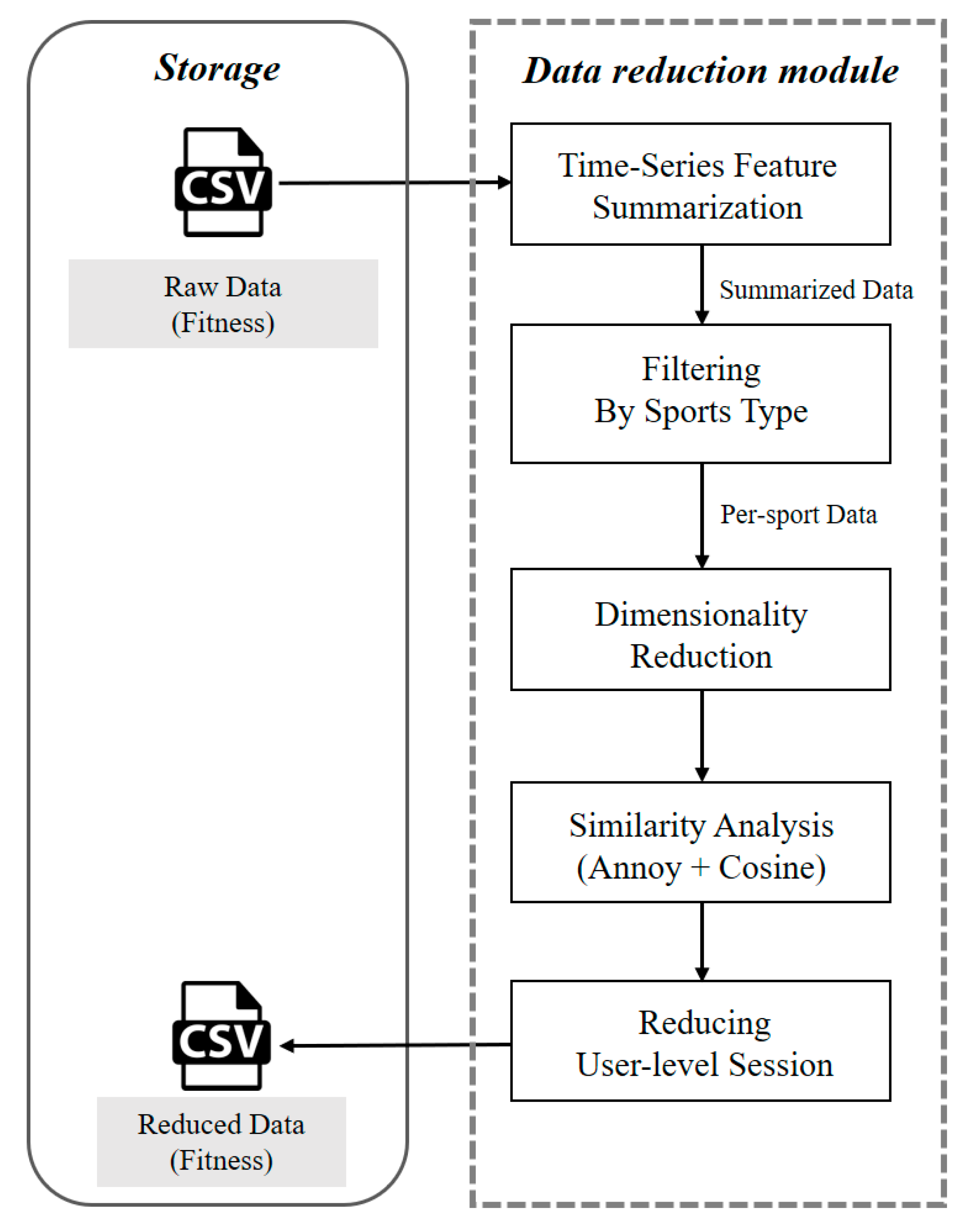

| 20 | function data_reduction_module(data) |

| 21 | SummarizedData ← summarize_time_series_features(data) |

| 22 | perSportData ← filter_by_sports_type(SummarizedData) |

| 23 | ReducedData ← apply_pca(perSportData) |

| 24 | UserPatternData ← analyze_similarity(ReducedData) |

| 25 | return UserPatternData |

| 26 | end function |

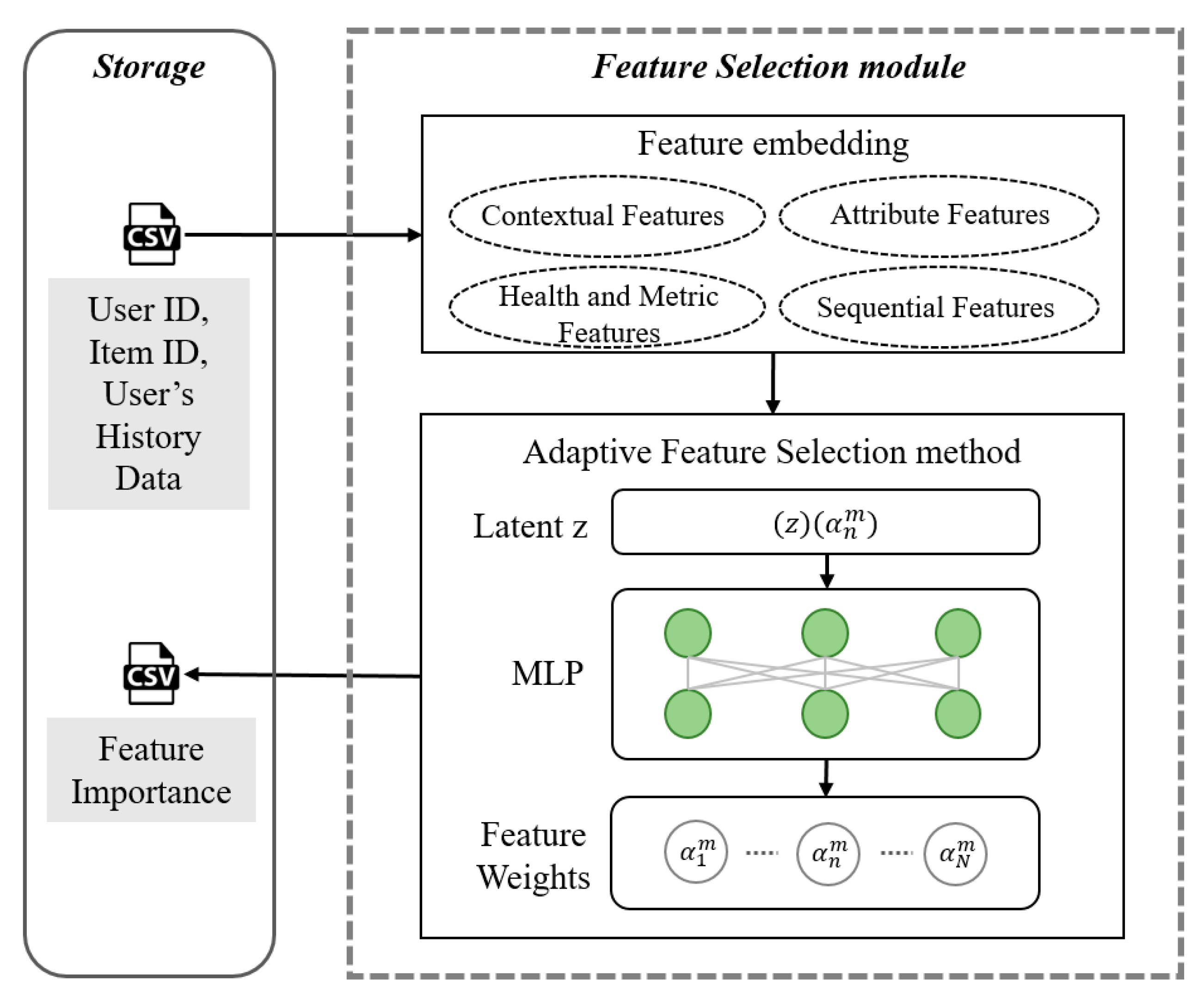

| 27 | function feature_selection_module(UserPatternData, NewData_preprocessed) |

| 28 | EmbeddedFeatures ← combine_features(attributeFeatures, contextualFeatures, sequentialFeatures, healthMetricFeatures) |

| 29 | FeatureWeights ← calculate_feature_weights(EmbeddedFeatures) |

| 30 | FeatureImportance ← select_important_features(FeatureWeights) |

| 31 | return FeatureImportance |

| 32 | end function |
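The top-level flow of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch, not the authors' implementation: records are assumed to be plain dictionaries of numeric features, the three modules are passed in as callables, and `min_weight` is a hypothetical parameter. How `apply_weights_and_filter` behaves is not specified in the algorithm, so scaling each retained feature by its importance and dropping the rest is only one plausible reading.

```python
from typing import Callable, Dict, List

Record = Dict[str, float]


def dynamic_data_management(
    old_data: List[Record],
    new_data: List[Record],
    preprocess: Callable[[List[Record]], List[Record]],
    reduce_old: Callable[[List[Record]], List[Record]],
    select_features: Callable[[List[Record], List[Record]], Dict[str, float]],
    min_weight: float = 0.0,  # hypothetical cut-off, not taken from the paper
) -> List[Record]:
    """Build one data version from old and new data (Algorithm 1, lines 4-19)."""
    # Line 5: only the new data is preprocessed.
    new_pre = preprocess(new_data)

    # Lines 10-14: reduce the old data, then derive feature importances.
    user_patterns = reduce_old(old_data)
    importances = select_features(user_patterns, new_pre)

    # Line 16: combine reduced old data with preprocessed new data.
    combined = user_patterns + new_pre

    # Line 17 (assumed behaviour): keep features above the cut-off and
    # scale each retained value by its importance.
    kept = [name for name, weight in importances.items() if weight > min_weight]
    return [{name: row[name] * importances[name] for name in kept} for row in combined]
```

Passing the modules as arguments keeps the orchestration independent of any particular reduction or selection technique, which mirrors the separation between `analyze_data` and `package_data` in the algorithm.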

3.4.1. Data Reduction Module

- Time-Series Feature Summarization: Each session is condensed into a fixed-length vector using statistical measures such as mean, standard deviation, minimum, and maximum. This reduces the computational burden of analyzing raw sequences.

- Filtering by Sports Type: The distribution of activities is analyzed, and sports categories with sufficient data volume are selectively retained. This reduces heterogeneity across exercise patterns (e.g., varying heart rate profiles) and enhances analytical reliability.

- Dimensionality Reduction: Principal Component Analysis (PCA) is applied to the selected activity-specific data to reduce the feature space while preserving key information and improving the efficiency of similarity assessment.

- Similarity Analysis: Reduced vectors are indexed using Annoy, and cosine similarity is applied to enable efficient large-scale comparisons of session-level exercise patterns.

- Reducing User-Level Sessions: For each user’s dataset, highly similar sessions are consolidated by retaining a single representative instance, thereby reducing redundancy while preserving the integrity of user-specific distributions.
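The summarization and session-consolidation steps above can be illustrated with a short sketch. It rests on several assumptions: sessions are dictionaries mapping a signal name to its samples, the PCA step is omitted for brevity, a brute-force pairwise comparison stands in for the Annoy index, and the greedy "keep the first representative" rule and the 0.99 default threshold are illustrative choices rather than the paper's exact procedure.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List, Sequence


def summarize_session(series: Dict[str, Sequence[float]]) -> List[float]:
    """Condense each signal into mean, std, min, max (a fixed-length vector)."""
    vector: List[float] = []
    for name in sorted(series):  # sorted so every session uses the same layout
        values = series[name]
        vector.extend([mean(values), pstdev(values), min(values), max(values)])
    return vector


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def reduce_user_sessions(vectors: Sequence[Sequence[float]], threshold: float = 0.99) -> List[int]:
    """Return indices of sessions to keep for one user.

    A session is kept only if its similarity to every already-kept session
    is below the threshold, so each group of near-duplicates leaves one
    representative.
    """
    kept: List[int] = []
    for i, vector in enumerate(vectors):
        if all(cosine_similarity(vector, vectors[j]) < threshold for j in kept):
            kept.append(i)
    return kept
```

For large datasets the quadratic comparison in `reduce_user_sessions` becomes the bottleneck, which is the motivation for an approximate nearest-neighbor index such as Annoy in the module described above.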

3.4.2. Feature Selection Module

- Attribute Features: user-related attributes.

- Contextual Features: exercise-related context such as intensity, type, and recovery status.

- Health and Metric Features: physiological measures including heart rate and blood pressure.

- Sequential Features: temporally ordered activity patterns.
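A minimal sketch of how the four feature groups might be combined, weighted, and filtered is given below. The source does not state how feature weights are computed, so the absolute Pearson correlation with a target signal is used here purely as a stand-in scoring rule; the function names follow Algorithm 1, while `target` and `top_k` are hypothetical parameters introduced for illustration.

```python
import math
from typing import Dict, List, Sequence

FeatureTable = Dict[str, Sequence[float]]


def combine_features(*groups: FeatureTable) -> FeatureTable:
    """Merge attribute, contextual, sequential, and health-metric feature groups."""
    combined: Dict[str, Sequence[float]] = {}
    for group in groups:
        combined.update(group)
    return combined


def abs_pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Absolute Pearson correlation; 0.0 when either input is constant."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0.0 or var_y == 0.0:
        return 0.0
    return abs(cov / math.sqrt(var_x * var_y))


def calculate_feature_weights(features: FeatureTable, target: Sequence[float]) -> Dict[str, float]:
    """Score each feature against the target and normalize scores to sum to 1."""
    scores = {name: abs_pearson(column, target) for name, column in features.items()}
    total = sum(scores.values())
    if total == 0.0:
        return {name: 0.0 for name in scores}
    return {name: score / total for name, score in scores.items()}


def select_important_features(weights: Dict[str, float], top_k: int) -> List[str]:
    """Return the names of the top_k features by weight, highest first."""
    ranked = sorted(weights, key=lambda name: weights[name], reverse=True)
    return ranked[:top_k]
```

Because the weights are normalized, they can be compared across retraining rounds even when the set of candidate features changes.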

3.4.3. Data Package

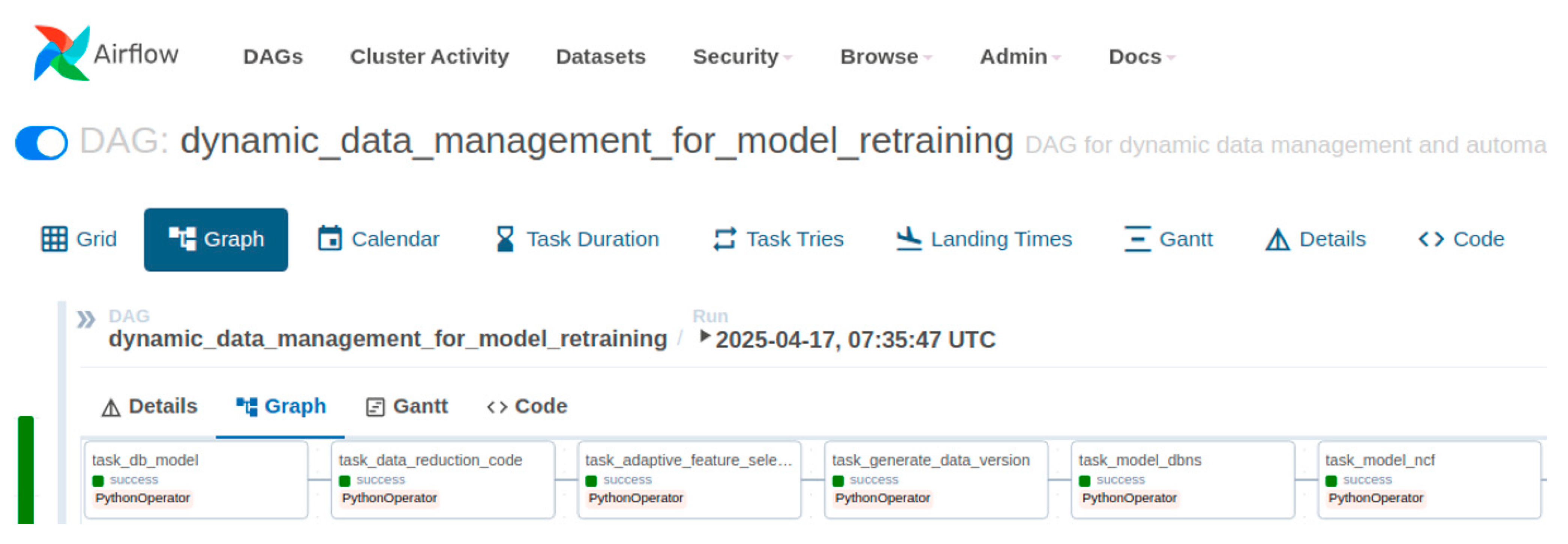

3.5. Implementation of the MLOps Environment

4. Experiments and Results

4.1. Experimental Environment

4.2. Experimental Data

- Stage 1 (Initial Training): 55% of the earliest sessions, used to train the initial model.

- Stage 2 (First Retraining): the Stage 1 model is retrained on 55% old data + 15% new data, where old data preserves historical patterns and new data reflects recent user behavior.

- Stage 3 (Second Retraining): the model is updated with 70% old data + 15% new data.

- Stage 4 (Third Retraining): the model is updated with 85% old data + 15% new data.
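The staged split can be expressed as a short chronological partitioning routine. The sketch below assumes the sessions are already sorted from oldest to newest; `build_stages` and its parameters are illustrative names, and integer percentages are used so that the boundaries are exact.

```python
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def build_stages(
    sessions: Sequence[T], first_pct: int = 55, step_pct: int = 15
) -> List[Tuple[List[T], List[T]]]:
    """Return one (old_data, new_data) pair per stage.

    Stage 1 has no old data: the earliest first_pct percent is its training set.
    Each later stage treats everything seen so far as old data and the next
    step_pct percent as new data.
    """
    n = len(sessions)
    cut = n * first_pct // 100
    stages: List[Tuple[List[T], List[T]]] = [([], list(sessions[:cut]))]
    pct = first_pct
    while pct + step_pct <= 100:
        next_cut = n * (pct + step_pct) // 100
        stages.append((list(sessions[:cut]), list(sessions[cut:next_cut])))
        cut, pct = next_cut, pct + step_pct
    return stages
```

With a total of 253,020 sessions (a figure inferred here from the reported counts, 215,067 + 37,953, rather than stated directly), this yields old/new sizes of 0/139,161, 139,161/37,953, 177,114/37,953, and 215,067/37,953, matching the unreduced counts in the data-version table.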

4.3. Evaluation Metrics

4.3.1. Metrics for Time-Series Prediction

4.3.2. Metrics for Recommendation Accuracy

4.3.3. Metrics for Resource Efficiency

4.4. Experimental Results

4.4.1. Results of Data Reduction

4.4.2. Results of Feature Selection

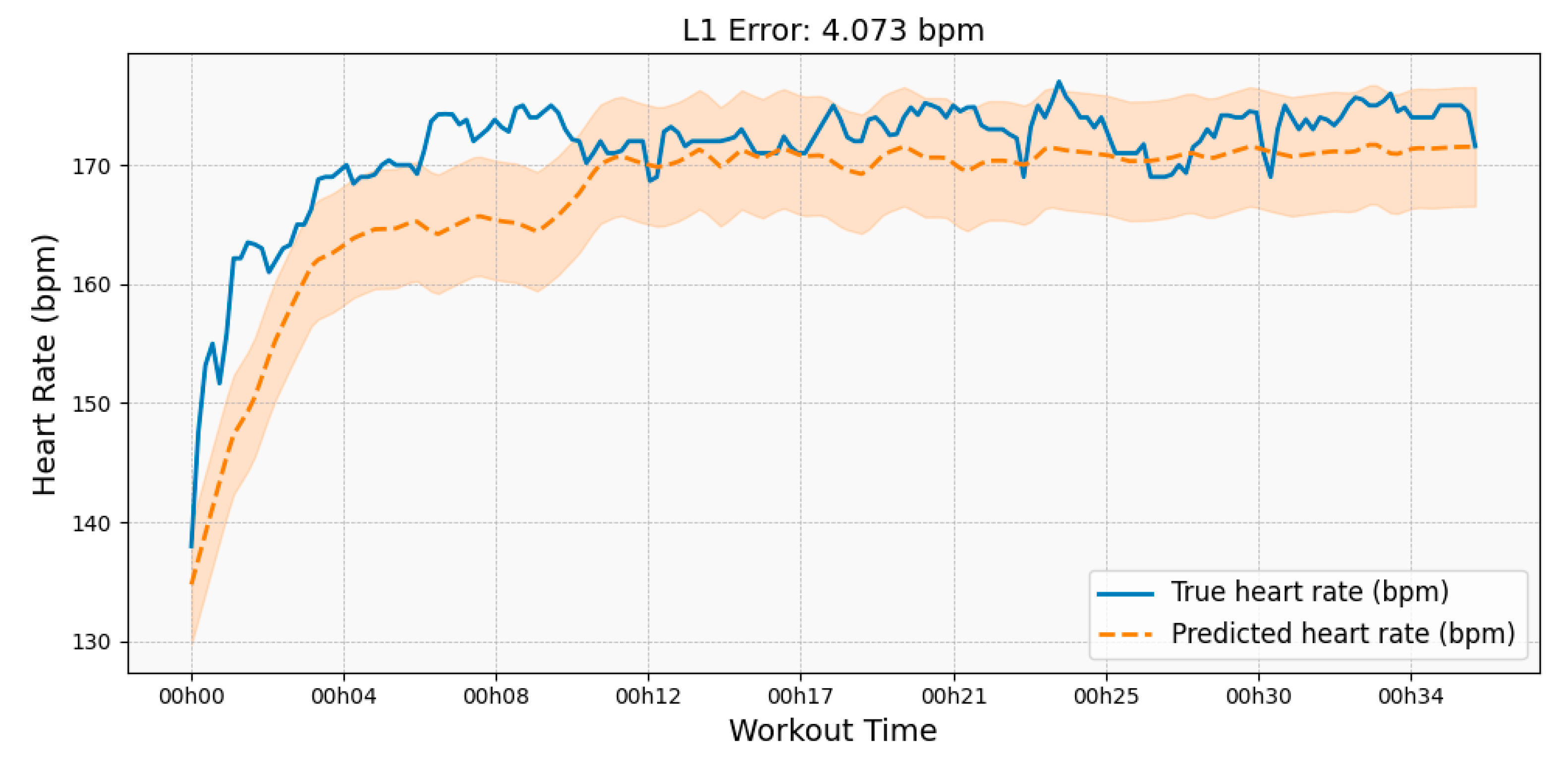

4.4.3. Results of Time-Series Prediction

4.4.4. Results of Recommender Model

4.4.5. Resource Efficiency

- Retraining from scratch: all old and new data are used at every retraining step.

- Simple data merging: old and new data are blended in predetermined ratios for retraining.

- Proposed DDM: old data are reduced, and adaptively selected key features are retained to generate dynamic data versions for retraining.
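The difference between the three strategies lies mainly in how the retraining set is assembled, which the following sketch makes concrete at the row level. It is illustrative only: `old_ratio` and `seed` are hypothetical parameters, random sampling is just one way to blend data at a predetermined ratio, and the feature-selection half of DDM is left out.

```python
import random
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")


def retrain_from_scratch(old: Sequence[T], new: Sequence[T]) -> List[T]:
    """Use all old and new data at every retraining step."""
    return list(old) + list(new)


def simple_data_merging(
    old: Sequence[T], new: Sequence[T], old_ratio: float = 0.5, seed: int = 0
) -> List[T]:
    """Blend a fixed fraction of randomly sampled old data with all new data."""
    rng = random.Random(seed)  # seeded so the sample is reproducible
    sample_size = int(len(old) * old_ratio)
    return rng.sample(list(old), sample_size) + list(new)


def ddm_version(
    old: Sequence[T], new: Sequence[T], reduce_old: Callable[[Sequence[T]], Sequence[T]]
) -> List[T]:
    """Keep only the reduced (de-duplicated) old data, plus all new data."""
    return list(reduce_old(old)) + list(new)
```

Unlike the fixed-ratio merge, the size of the DDM set depends on how much redundancy `reduce_old` finds, so it adapts to the data rather than to a preset proportion.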

4.4.6. Ablation Study

5. Discussion

5.1. Latency and Memory Efficiency

5.2. Extending Scalability and Generalizability

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Package | Version |
|---|---|
| Apache Airflow | 2.7.2 |
| Python | 3.9 |
| TensorFlow | 2.18 |
| Scikit-learn | 1.2.2 |
| Docker | 27.3.1 |
| Kubernetes | 1.32.0 |

| FitRec Data | | | | |
|---|---|---|---|---|
| Data Version | Old Data | Reduced Old Data (reduction rate) | New Data | Total Data |
| version 1 | - | - | 139,161 | 139,161 |
| version 2 | 139,161 | 118,285 (15%) | 37,953 | 156,238 |
| version 3 | 177,114 | 144,233 (17%) | 37,953 | 182,186 |
| version 4 | 215,067 | 176,339 (18%) | 37,953 | 214,292 |

| FitRec Data | | |
|---|---|---|
| Data Version | MAE | RMSE |
| version 1 | 5.2 | 12.5 |
| version 2 | 6.7 | 15.1 |
| version 3 | 4.5 | 10.1 |
| version 4 | 3.9 | 8.7 |

| Training Phase | Recall | Precision | F1 Score | NDCG |
|---|---|---|---|---|
| Stage 1 | 0.25 | 0.11 | 0.15 | 0.0821 |
| Stage 2 | 0.34 | 0.14 | 0.19 | 0.1134 |
| Stage 3 | 0.51 | 0.20 | 0.28 | 0.1416 |
| Stage 4 | 0.52 | 0.22 | 0.30 | 0.1480 |
| Full training | 0.54 | 0.24 | 0.33 | 0.1572 |

| FitRec Data | | | |
|---|---|---|---|
| Data Version | Retraining from Scratch | Simple Data Merging | Ours |
| version 1 | 15 | 15 | 15 |
| version 2 | 21 | 18 | 17 |
| version 3 | 25 | 20 | 19 |
| version 4 | 31 | 24 | 21 |

| Similarity Threshold | Reduction Rate (%) | NDCG | Retraining Time |
|---|---|---|---|
| 100% | 0% | 0.0912 | 31 |
| 99.5% | 7% | 0.0937 | 27 |
| 99% | 14% | 0.0924 | 21 |
| 97.5% | 30% | 0.0908 | 17 |
| 95% | 48% | 0.0895 | 14 |
| 90% | 65% | 0.0762 | 11 |

| Model | RMSE | NDCG | Retraining Time |
|---|---|---|---|
| DDM | 8.7 | 0.1480 | 21 |
| Without Data Reduction | 9.8 | 0.1394 | 24 |
| Without Feature Selection | 11.4 | 0.1312 | 25 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Park, Y.; Mun, J.; Lee, Y.; Um, J.; Choi, J.; Choi, J. Data-Driven Optimization of Healthcare Recommender System Retraining Pipelines in MLOps with Wearable IoT Data. Sensors 2025, 25, 6369. https://doi.org/10.3390/s25206369
