1. Introduction

Sleep is a biological necessity that plays a critical role in maintaining physiological and psychological health. Impaired sleep quality and undiagnosed or untreated sleep disorders can have detrimental consequences for both physical and mental well-being and contribute to a wide array of chronic diseases [1]. Among the many factors that degrade sleep quality, irregular respiratory patterns, such as sleep apnea, hypopnea, and other forms of disordered respiration, are particularly significant. These patterns are closely linked with sleep-related disorders, including obstructive sleep apnea (OSA) and central sleep apnea (CSA), as well as chronic conditions such as cardiovascular disease and diabetes [2]. Consequently, their early and accurate detection and diagnosis are essential for effective intervention [3].

According to the guidelines of the American Academy of Sleep Medicine (AASM), sleep is classified into five stages: Wake, N1 (light non-REM), N2 (deeper non-REM), N3 (deepest non-REM), and REM (rapid eye movement) [4]. Each stage is characterized by specific physiological and neurological signatures, reflecting distinct brain activity, muscle tone, and ocular movements. A healthy sleep architecture is defined by a characteristic structure and proportion of time spent in each stage; disruptions to this distribution are frequently associated with underlying sleep disorders or other pathologies [5]. A comprehensive assessment of sleep quality therefore requires joint analysis of respiratory patterns and the distribution of sleep stages.

Polysomnography (PSG), the clinical gold standard for sleep evaluation, offers high accuracy in sleep stage scoring and respiratory event detection. However, PSG is constrained by its substantial cost, the inconvenience of extensive sensor attachment, and limited feasibility for routine, longitudinal, or home-based use [6]. These practical limitations have spurred growing academic and industrial interest in non-contact, non-invasive sleep monitoring technologies. Earlier approaches relied primarily on classical signal processing techniques [7,8], but these methods were often susceptible to noise, adapted poorly to individual physiological variation, and performed poorly in uncontrolled, real-world environments [9].

To overcome these challenges, deep learning-based approaches have emerged as promising alternatives. These models can infer sleep-related conditions and physiological states from ambient or tracheal respiratory sounds alone, enabling tasks such as automated snore detection [10,11], OSA screening [12,13], and sleep stage estimation [14,15]. Such approaches also leverage ubiquitous sensing devices such as smartphones and bedside microphones, which reduces patient burden, improves comfort, and makes scalable nightly monitoring outside clinical settings feasible [16].

Despite this promise, most existing deep learning studies in this domain adopt an end-to-end framework, typically feeding epoch-level log-Mel spectrograms directly into deep neural networks to classify sleep stages or detect apneic events. While effective for prediction, these models extract unstructured, implicit features from the entire audio signal. They lack a fine-grained, explicit analysis of specific respiratory patterns, such as the timing of inhale and exhale phases or variations in respiratory cycle length and regularity. Consequently, their explainability is limited: it is difficult to discern how specific physiological features contribute to a prediction, which hinders clinical interpretation and trust.

The preceding work [17] partially addressed these interpretability issues through a deep learning-based audio segmentation model. This model outperformed traditional signal processing methods in segmenting respiratory events, even in noisy environments. It enabled the extraction of fundamental respiratory indicators, such as respiratory cycles, from sleep respiratory sounds, thereby supporting a more quantitative analysis of nocturnal respiratory patterns. Unlike deep models that learn opaque implicit representations, this model explicitly tokenizes and analyzes discrete respiratory events, which makes each segment interpretable within a clinically meaningful context. However, its utility has been largely confined to the segmentation of respiratory intervals, and it does not derive higher-level physiological information directly relevant to sleep architecture.

In this study, we propose a systematic framework that significantly extends the concept of respiratory event segmentation to achieve full sleep-stage distribution estimation. The proposed framework integrates three sequential components:

1. Respiratory Sound Segmentation: High-fidelity segmentation of sleep respiratory sounds into distinct respiratory cycles using a state-of-the-art Transformer-based pre-trained speech model, fine-tuned for this specific task.

2. Respiratory Feature Extraction: Derivation of a diverse suite of signal-based and statistical features from the segmented respiratory events, designed to capture both static and dynamic aspects of respiratory behavior.

3. Sleep-Stage Distribution Prediction: Clinically meaningful estimation of the proportions of time spent in Wake, Light (N1+N2), Deep (N3), and REM sleep stages through a series of dedicated regression models.

By leveraging the well-established correlation between respiratory dynamics and sleep stages [18], the proposed framework quantitatively estimates overall sleep-stage distributions directly from detailed sleep respiratory patterns. Its modular architecture enhances both interpretability and explainability compared with conventional end-to-end models. We evaluate the framework on the public PSG-Audio dataset with subject-wise cross-validation.

2. Proposed Methods

The proposed approach analyzes sleep respiratory sounds in a modular and interpretable manner. Rather than using a direct end-to-end classification pipeline, we decompose the problem of sleep stage proportion estimation into distinct, logically ordered stages that align with known physiological processes of respiration and sleep.

Figure 1 illustrates the overall workflow of the proposed framework, and the complete procedure is summarized in Algorithm 1. The framework has three major components: (1) a Respiratory Sound Segmentation module that detects distinct respiratory cycles from raw respiratory sounds; (2) a Respiratory Feature Extraction module that computes a set of statistical and signal-derived metrics from these segmented events; and (3) a Sleep-Stage Distribution Prediction module that estimates the percentage of time spent in each sleep stage.

As summarized in Figure 1, the pipeline decomposes the task into physiologically grounded stages that map raw audio to a subject-level sleep architecture. First, overnight tracheal audio is split into overlapping 20 s windows and converted to log-Mel spectrograms; a fine-tuned Whisper-based Transformer performs frame-wise inhale/exhale/silence classification, with majority voting across overlaps to enforce temporal consistency [19]. Supervision for respiratory onsets is derived from synchronized abdominal effort signals in the PSG-Audio dataset [20]. Second, two window-level respiratory metrics, Respiratory Count (inhale onsets per minute) and Respiratory Period (median inhale-to-inhale duration), are computed and then aggregated across the full night into a 76-dimensional, physiologically interpretable feature vector capturing central tendency, dispersion, temporal dynamics, frequency-domain descriptors, and distributional shape. Finally, four stage-specific regressors map this vector to the proportions of Wake, Light (N1+N2), Deep (N3), and REM; the four outputs are normalized to sum to one, yielding a coherent, simplex-constrained estimate of sleep-stage distribution. This modular design makes every step traceable to measurable respiratory quantities and improves clinical interpretability relative to end-to-end spectrogram classifiers.

Algorithm 1: Estimating sleep-stage distribution from respiratory audio

Input: overnight tracheal audio; window length W = 20 s; hop H; pretrained Whisper-based segmentation model; post-processing rules; feature map; per-stage regressors.

Output: sleep-stage proportion vector over Wake, Light, Deep, and REM.

1. Step 1: Spectrogramization and frame-wise segmentation
2. Split the audio into overlapping windows of length W with hop H
3. Convert each window to a log-Mel spectrogram
4. Obtain frame posteriors over {inhale, exhale, silence}
5. Fuse overlapping predictions by majority voting to get a single label per global frame
6. Step 2: Event parsing and onset extraction
7. Merge consecutive identical labels into runs; identify candidate transitions into inhale runs
8. Apply the post-processing rules (min-duration, hysteresis, refractory) to suppress spurious transitions
9. Record inhale-onset timestamps
10. Step 3: Window-level respiratory metrics
11. For each window i:
12. Respiratory Count: number of onsets in window i (breaths/min)
13. Respiratory Period: median inter-onset interval, using onsets within/near window i
14. Step 4: Episode-level feature aggregation
15. Compute the 76-dimensional feature vector from the window-level metrics via the feature map
16. (e.g., mean, std, IQR, autocorr, spectral entropy, skewness, kurtosis, CV, cross-metrics)
17. Step 5: Stage-wise regression and simplex projection
18. For each stage s: predict the raw proportion with the regressor for stage s
19. Normalize the four predictions to the simplex, with a small constant in the denominator
20. return the normalized sleep-stage proportion vector

2.1. Respiratory Sound Segmentation

The first module segments continuous respiratory audio into distinct respiratory cycles. This is achieved with a fine-tuned version of WhisperSeg [21], a Transformer-based model derived from the Whisper speech recognition architecture and adapted here to the characteristics of respiratory sounds.

2.1.1. Audio Preprocessing

Raw tracheal audio recordings are first preprocessed by splitting them into fixed-duration, overlapping 20 s segments. Each segment undergoes transformation into a log-Mel spectrogram, a dense time-frequency representation of the audio signal. This spectrogram effectively captures the spectral characteristics and temporal evolution of the respiratory sound, serving as the primary input for the downstream segmentation model.
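The windowing step can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `split_into_windows` and the 10 s hop are assumptions, since the text specifies only the 20 s window length and that windows overlap. The log-Mel conversion itself is omitted and would typically be delegated to an audio library.

```python
import numpy as np


def split_into_windows(audio, sample_rate, window_s=20.0, hop_s=10.0):
    """Split a 1-D audio array into overlapping fixed-length windows.

    Returns a list of (start_sample, window) pairs. The hop of 10 s is an
    assumed value; the source text does not state the hop size.
    A trailing remainder shorter than one hop is not covered by any window.
    If the recording is shorter than one window, it is returned as a single
    (shorter) window.
    """
    win = int(round(window_s * sample_rate))
    hop = int(round(hop_s * sample_rate))
    last_start = max(len(audio) - win, 0)
    windows = []
    for start in range(0, last_start + 1, hop):
        windows.append((start, audio[start:start + win]))
    return windows
```

With a 50 s recording and these assumed settings, consecutive windows start every 10 s and share half of their samples, so most frames are later classified twice.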

2.1.2. Audio Segmentation Model

Respiratory sound segmentation is formulated as frame-wise multi-class classification. Overlapping 20 s audio windows are converted into log-Mel spectrograms and passed to a fine-tuned Whisper-based Transformer [19,21]. For each frame, the model outputs posterior probabilities over three classes: inhale, exhale, and silence.

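Let $N$ be the number of frames per segment and $C = 3$ the number of classes. With one-hot labels $y_{n,c}$ and predicted probabilities $\hat{y}_{n,c}$ for frame $n$ and class $c$, the cross-entropy loss is minimized:

$$\mathcal{L}_{\mathrm{CE}} = -\frac{1}{N} \sum_{n=1}^{N} \sum_{c=1}^{C} y_{n,c} \log \hat{y}_{n,c}$$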

Since windows overlap, a given frame may be classified multiple times. We apply majority voting across overlaps to produce a single hard label per frame. This suppresses transient noise and yields temporally smoother label sequences.
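The fusion of overlapping predictions might look like the sketch below. The function name `fuse_by_majority`, the `(start_frame, labels)` input layout, and the tie-breaking rule are assumptions made for illustration; the text only states that a majority vote is taken across overlaps.

```python
from collections import Counter


def fuse_by_majority(window_preds, n_frames):
    """Fuse per-window frame labels into one label per global frame.

    window_preds: list of (start_frame, labels), where labels is the list of
    hard labels predicted for consecutive frames of that window.
    Ties are resolved in favour of the label that was voted first
    (an assumed rule). Frames covered by no window are returned as None.
    """
    votes = [Counter() for _ in range(n_frames)]
    for start, labels in window_preds:
        for offset, label in enumerate(labels):
            idx = start + offset
            if idx < n_frames:
                votes[idx][label] += 1
    fused = []
    for counter in votes:
        fused.append(counter.most_common(1)[0][0] if counter else None)
    return fused
```

A frame that one window labels as inhale and two overlapping windows label as exhale is therefore resolved to exhale, which is how isolated misclassifications get suppressed.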

Downstream features require discrete respiratory events rather than frame-level labels. We therefore convert the smoothed label sequence into a sequence of inhale onsets via a simple post-processing pass: consecutive frames with identical labels are first merged into runs, and a candidate onset is then identified whenever the sequence transitions from {silence, exhale} to an inhale run. We apply minimum-duration constraints and hysteresis to suppress spurious transitions, and impose a short refractory period to prevent duplicate detections. The first frame of each validated inhale run is recorded as the onset timestamp $t_k$. Although the exhale and silence predictions are not used directly as features, they stabilize the boundaries and reduce false onsets in noisy segments. This process yields an ordered sequence of inhale-onset timestamps $\{t_1, \dots, t_K\}$, which serve as the sole input to the subsequent feature extraction module.
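A minimal sketch of this post-processing pass is given below. The function name `extract_inhale_onsets` and all numeric thresholds (frame duration, minimum inhale duration, refractory period) are hypothetical, since the text does not report them, and the hysteresis rule is left out for brevity.

```python
from itertools import groupby


def extract_inhale_onsets(labels, frame_s=0.02, min_inhale_s=0.2, refractory_s=1.0):
    """Convert a fused frame-label sequence into inhale-onset times in seconds.

    All thresholds are assumed values for illustration only.
    """
    onsets = []
    frame = 0
    prev_label = None
    # groupby merges consecutive identical labels into runs.
    for label, run in groupby(labels):
        length = len(list(run))
        onset_time = frame * frame_s
        # Candidate onset: a {silence, exhale} -> inhale transition.
        is_transition = label == "inhale" and prev_label in ("silence", "exhale")
        # Minimum-duration constraint on the inhale run.
        long_enough = length * frame_s >= min_inhale_s
        # Refractory period relative to the last accepted onset.
        outside_refractory = not onsets or onset_time - onsets[-1] >= refractory_s
        if is_transition and long_enough and outside_refractory:
            onsets.append(onset_time)
        prev_label = label
        frame += length
    return onsets
```

Note that an inhale run at the very start of the sequence is not counted, because no preceding silence or exhale run exists to define a transition.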

2.2. Respiratory Feature Extraction

2.2.1. Segment Feature Extraction

Given the inhale-onset sequence produced by the segmentation module, we derive two core respiratory metrics for each 20 s segment: Respiratory Count and Respiratory Period. These metrics provide a compact yet physiologically interpretable description of local respiratory dynamics.

Respiratory Count

Respiratory Count is defined as the number of inhale onsets detected within a segment:

$$\mathrm{RC}_i = \left|\{\, t_k : t_k \in \text{segment } i \,\}\right|$$

where $t_k$ denotes the $k$-th inhale onset. This can be expressed as breaths per minute, a standard indicator of respiratory rate. Abnormalities in this rate are associated with sleep-disordered breathing such as OSA and CSA [22].

Respiratory Period

Respiratory Period captures cycle duration by measuring the interval between consecutive inhale onsets:

$$\mathrm{RP}_i = \operatorname{median}\{\, t_{k+1} - t_k \,\}$$

where the median is taken over consecutive inhale onsets $t_k, t_{k+1}$ in segment $i$. We use the median to mitigate the effect of transient irregularities or artifacts. Prolonged or highly variable respiratory periods can indicate apneic events or respiratory instability during sleep [23].

Anchoring features on inhale onsets provides a sharp and consistently detectable landmark across subjects and recording conditions, more robust than exhalation offsets which are often diffuse. Although only the inhale timestamps appear in the formulas, the preceding exhale and silence frame predictions are crucial for reliable onset detection, since they stabilize boundaries and suppress false positives. The resulting metrics form the foundation for higher-level feature aggregation over an entire sleep episode, leading to the comprehensive 76-dimensional respiratory feature vector described in the next subsection.
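The two segment-level metrics can be computed from the onset timestamps as in the following sketch. The function name `window_metrics` and the choice to return `None` when a segment contains fewer than two onsets are assumptions; the text does not say how such segments are handled.

```python
from statistics import median


def window_metrics(onsets, win_start, win_len=20.0):
    """Return (Respiratory Count in breaths/min, Respiratory Period in s)
    for the segment [win_start, win_start + win_len).

    The period is None when fewer than two onsets fall in the segment
    (an assumed convention).
    """
    in_window = [t for t in onsets if win_start <= t < win_start + win_len]
    count_per_min = len(in_window) * 60.0 / win_len
    intervals = [b - a for a, b in zip(in_window, in_window[1:])]
    period = median(intervals) if intervals else None
    return count_per_min, period
```

For example, five onsets in a 20 s segment correspond to 15 breaths per minute, and a single unusually long interval among otherwise regular ones leaves the median period unchanged.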

2.2.2. Sleep Episode Feature Extraction

After respiratory event segmentation and the computation of per-segment metrics, these measurements are aggregated across the full duration of the sleep episode. This aggregation yields a global respiratory profile that captures both the macro-structural and dynamic aspects of an individual's nocturnal respiratory behavior.

We compute 76 statistical and signal-derived features from the two primary metrics, Respiratory Count and Respiratory Period. These features summarize not only central tendencies but also higher-order variations, distributional characteristics, and temporal patterns over the entire recording period. Specifically, the extracted features encompass the following:

Time-domain statistics: These include the mean (e.g., average respiratory rate throughout the night), standard deviation (quantifying variability in breathing), maximum and minimum values (identifying extreme events), and the interquartile range (reflecting the spread and central distribution).

Temporal dynamics: Features like the slope of moving averages (revealing trends in respiratory rate), autocorrelation coefficients (indicating periodicity and predictability of respiratory cycles), and zero-crossing rate (characterizing signal complexity and changes in the respiratory waveform components).

Frequency-domain descriptors: Metrics such as the dominant frequency (identifying the primary respiratory rhythm) and spectral entropy (quantifying the regularity or randomness of the respiratory signal’s frequency content).

Shape-related statistics: These include skewness (assessing the asymmetry of the distribution of respiratory durations), kurtosis (indicating the tailedness, which can highlight extreme respiratory events), and the coefficient of variation (providing a standardized measure of relative variability).

Cross-feature interactions: Derived metrics like ratios and differences between Respiratory Count and Period (e.g., inhale–exhale ratio, which may indicate airway obstruction or altered respiratory effort patterns).

Collectively, these descriptors form a 76-dimensional feature vector per subject, a structured and physiologically interpretable summary of the entire overnight respiratory pattern. This vector encodes both rapid, short-term fluctuations (e.g., irregular bursts of breathing) and long-term stability (e.g., consistency of respiratory cycle lengths).

Critically, each feature within this vector can be directly interpreted physiologically. For example, an increased variability in Respiratory Period might reflect fragmented sleep, transient hypopneas, or even frank apneic events. This highly structured and interpretable representation provides an explicit, explainable basis for the subsequent, downstream prediction of sleep stage proportions.
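To make the aggregation concrete, the sketch below computes a handful of the descriptor types listed above for one metric series. It covers only a subset and is not the authors' 76-feature definition: the function name `summarize_series`, the exact estimators (population standard deviation, excess kurtosis, lag-1 autocorrelation, least-squares trend), and the zero-variance guards are all assumptions.

```python
import numpy as np


def summarize_series(x):
    """Summarize a night-long series of one window-level metric.

    Returns a dict with an illustrative subset of time-domain,
    temporal-dynamics, and shape statistics.
    """
    x = np.asarray(x, dtype=float)
    mean, std = x.mean(), x.std()
    centered = x - mean
    q25, q75 = np.percentile(x, [25, 75])
    safe_std = std if std > 0 else 1.0  # avoid division by zero
    energy = np.sum(centered ** 2)
    return {
        "mean": mean,
        "std": std,
        "min": x.min(),
        "max": x.max(),
        "iqr": q75 - q25,
        "cv": std / mean if mean != 0 else 0.0,
        "skewness": np.mean((centered / safe_std) ** 3),
        "kurtosis": np.mean((centered / safe_std) ** 4) - 3.0,
        "autocorr_lag1": (np.sum(centered[:-1] * centered[1:]) / energy
                          if energy > 0 else 0.0),
        # Slope of a straight-line fit over the window index.
        "trend_slope": np.polyfit(np.arange(len(x)), x, 1)[0],
    }
```

Applying such a function to both the Respiratory Count and the Respiratory Period series, and adding frequency-domain and cross-metric terms, would build up a subject-level vector of the kind described here.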

2.3. Sleep-Stage Distribution Prediction

The final stage of the proposed framework involves estimating the proportion of time spent in each distinct sleep stage, utilizing the subject-level respiratory feature vector generated previously. The framework adopts a separate, dedicated regression model trained for each target sleep stage—namely Wake, Light (N1+N2 combined), Deep (N3), and REM.

Let $\mathbf{x} \in \mathbb{R}^{76}$ denote the 76-dimensional input feature vector for a given sleep episode. For each sleep stage $s \in \{\text{Wake}, \text{Light}, \text{Deep}, \text{REM}\}$, the objective is to learn an independent regression function $f_s$ that predicts the proportion of total sleep time spent in that stage, i.e., $\hat{p}_s = f_s(\mathbf{x})$.

The outputs of the four independent models are then normalized so that they sum to one. This step is necessary because independently trained regressors are not constrained to produce a valid distribution; after normalization, the predictions represent a complete distribution over the sleep architecture.
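The normalization step can be sketched as follows. The function name `normalize_to_simplex`, the clipping of negative raw predictions to zero, and the value of the stabilizing constant `eps` are assumptions for illustration; Algorithm 1 mentions only that a small constant is used.

```python
def normalize_to_simplex(raw, eps=1e-8):
    """Rescale raw per-stage predictions so that they sum to (almost) one.

    raw: dict mapping stage name to the raw regressor output.
    Negative outputs are clipped to zero first (an assumed choice), and
    eps guards against division by zero when all outputs are zero.
    """
    clipped = {stage: max(value, 0.0) for stage, value in raw.items()}
    total = sum(clipped.values()) + eps
    return {stage: value / total for stage, value in clipped.items()}
```

For instance, raw outputs of 0.12, 0.55, 0.20, and 0.23 sum to 1.10; dividing by that total rescales the Light estimate from 0.55 to about 0.50 while preserving the ratios between stages.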

We adopt TabPFN [24] as our primary regressor (see Figure 2). TabPFN is a Transformer-based foundation model for tabular prediction that performs amortized inference using a prior learned over synthetic training tasks, yielding strong accuracy and data efficiency on small-to-medium tabular datasets without extensive hyperparameter tuning. Concretely, TabPFN jointly embeds the labeled support samples and the query features, and performs in-context learning within a 12-layer Transformer encoder, where each layer sequentially applies feature-wise attention, sample-wise attention, and an MLP sublayer, with constraints preventing query samples from attending to each other. After these layers, an additional linear transformation maps the outputs to a piece-wise constant predictive distribution, from which point estimates of the target can be derived. This architecture captures nonlinear feature interactions and target dependencies while remaining comparatively robust under limited sample sizes, an appealing property in physiological studies with subject-wise evaluation. For comparison, we also evaluated Random Forest, Support Vector Regression, Linear Regression, Decision Tree Regressor, k-Nearest Neighbors, and a shallow Multi-Layer Perceptron under the identical cross-validation protocol.

Each of these models is independently trained on the same rich respiratory feature input vector but is specifically optimized for a different scalar target—the proportion of time in a particular sleep stage. This strategic approach allows for stage-specific specialization, which is particularly advantageous given that the underlying respiratory correlates may differ significantly across stages (e.g., the characteristic irregularity during REM sleep versus the pronounced stability during Deep sleep).

We emphasize that this is a per-stage regression approach: for each stage $s$, a separate model is trained using the common 76-dimensional input vector and a scalar target $p_s$, the true proportion of time in stage $s$. The full set of predictions is then normalized to produce a coherent proportion vector representing the entire sleep architecture. This design improves modularity and allows each model to be optimized for the characteristics of its sleep stage.

3. Experimental Setup

3.1. Overview

To validate the proposed system, we conducted two sets of experiments, one for each of its core functional components. The first evaluates the accuracy of respiratory event segmentation and the precision of the respiratory metrics derived from sleep audio. The second assesses how well the system predicts sleep-stage distribution from the aggregated, interpretable respiratory features. All experiments were implemented in Python 3.11 with PyTorch 2.5.1 (CUDA 12.1, cuDNN 9.1.0) on Ubuntu 20.04, and training was performed on a single NVIDIA A6000 GPU (48 GB VRAM) with an Intel Xeon W-2295 CPU @ 3.00 GHz and 64 GB RAM (NVIDIA and Intel, both Santa Clara, CA, USA).

3.2. Dataset

For all experimental tasks, we used the PSG-Audio dataset [20]. This publicly available dataset is well suited to our study because it contains synchronized multimodal recordings from a cohort of 287 subjects. Each subject's recording spans an average duration of 5.3 h. The dataset includes the following:

Tracheal sleep audio recordings: Passive acoustic data captured from a microphone placed near the trachea, providing direct information about respiratory sounds.

Abdominal respiratory effort signals: Physiologically validated signals obtained from a sensor placed on the abdomen, serving as a gold-standard reference for individual respiratory cycles and events.

Expert-labeled sleep stages (hypnograms): Derived from full polysomnography (PSG) by certified sleep technologists according to AASM guidelines, providing the ground truth for sleep architecture analysis.

This synchronized multimodal information has predominantly been used to develop sleep apnea and snoring detection algorithms [26,27]. In our study, the synchronization is essential both for training the respiratory segmentation model with precise ground truth and for evaluating the sleep stage proportion predictions against clinical standards.

3.3. Task 1: Respiratory Sound Segmentation

The first experimental task is dedicated to thoroughly evaluating the accuracy of segmenting continuous sleep respiratory audio into its fundamental respiratory events, specifically, inhale, exhale, and silence intervals. Furthermore, it assesses the precision of the crucial respiratory metrics directly derived from these segmentations: the respiratory rate (Respiratory Count) and the respiratory cycle duration (Respiratory Period). Both are physiologically meaningful and clinically vital indicators of respiratory function during sleep.

For this task, we systematically segmented the tracheal audio recordings from the PSG-Audio dataset into overlapping 20 s windows using a sliding window approach, resulting in approximately 228,000 such audio segments used for model training and evaluation.

To construct the ground truth annotations for respiratory event segmentation, we used the synchronized abdominal respiratory effort signals from the PSG-Audio dataset. We first applied a moving average filter (with a window size of 25 samples) to smooth the abdominal effort signal, reducing high-frequency noise and motion artifacts. We then applied the findpeaks algorithm [28] to identify local maxima and minima within the smoothed signal. These points correspond to the physiological onset of inhalation and exhalation, respectively. These temporal markers were then mapped to frame-level labels in the audio timeline, serving as the supervision targets for our segmentation model.
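The smoothing and extremum detection can be illustrated with the sketch below. It is a simplified stand-in, not the cited findpeaks routine: the function names `smooth` and `local_extrema` are hypothetical, extrema are found by a plain comparison with the two neighbouring samples, and no prominence or distance criteria are applied.

```python
import numpy as np


def smooth(signal, width=25):
    """Moving-average filter with the 25-sample window stated in the text.

    mode="same" keeps the output length equal to the input length; samples
    near the edges are averaged against implicit zeros.
    """
    kernel = np.ones(width) / width
    return np.convolve(np.asarray(signal, dtype=float), kernel, mode="same")


def local_extrema(signal):
    """Return (maxima_idx, minima_idx) of strict local extrema.

    A sample is a maximum (minimum) if it is strictly greater (smaller)
    than both neighbours; the first and last samples are never reported.
    """
    s = np.asarray(signal, dtype=float)
    interior = np.arange(1, len(s) - 1)
    left, mid, right = s[:-2], s[1:-1], s[2:]
    maxima = interior[(mid > left) & (mid > right)]
    minima = interior[(mid < left) & (mid < right)]
    return maxima, minima
```

Dividing the returned sample indices by the effort signal's sampling rate gives timestamps that can then be mapped onto the audio frame grid. On real recordings, a library peak finder with prominence and minimum-distance settings would likely be more robust than this neighbour comparison.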

The core segmentation model is based on Whisper-Large [19], a Transformer-based architecture originally developed for robust speech recognition, which we fine-tuned for this task. The log-Mel spectrograms of the segmented audio served as input, and the objective was to classify each audio frame into one of three classes: inhale, exhale, or silence. To mitigate the noisy predictions that often occur at frame boundaries and to ensure temporal consistency, we applied a majority voting strategy across overlapping frames in the output.

To benchmark the proposed model, we implemented two traditional signal processing baselines. The first uses the Fast Fourier Transform (FFT) to estimate dominant respiratory frequencies. In this approach, raw audio signals are filtered, dynamically compressed, and smoothed using a Gaussian kernel before spectral analysis. The prominent frequency components are then used to infer respiratory cycles [29]. The second method is based on smoothed peak detection within the energy envelope of the respiratory sound. Inspired by [30], this technique transforms the audio into a log-Mel spectrogram, computes an intensity envelope, and then applies Gaussian smoothing followed by peak detection. To improve alignment with physiological events, the initially detected peaks are refined using BFGS optimization.

The dataset was randomly split into training and evaluation sets at a 9:1 ratio on a subject-independent basis. This prevents data leakage and tests the model's ability to generalize to new, unseen individuals. The model was trained for 154,900 steps within a single epoch, optimizing the cross-entropy loss. This fine-tuning procedure adapted the general-purpose Whisper model to the specialized task of respiratory event segmentation in noisy, real-world sleep audio.

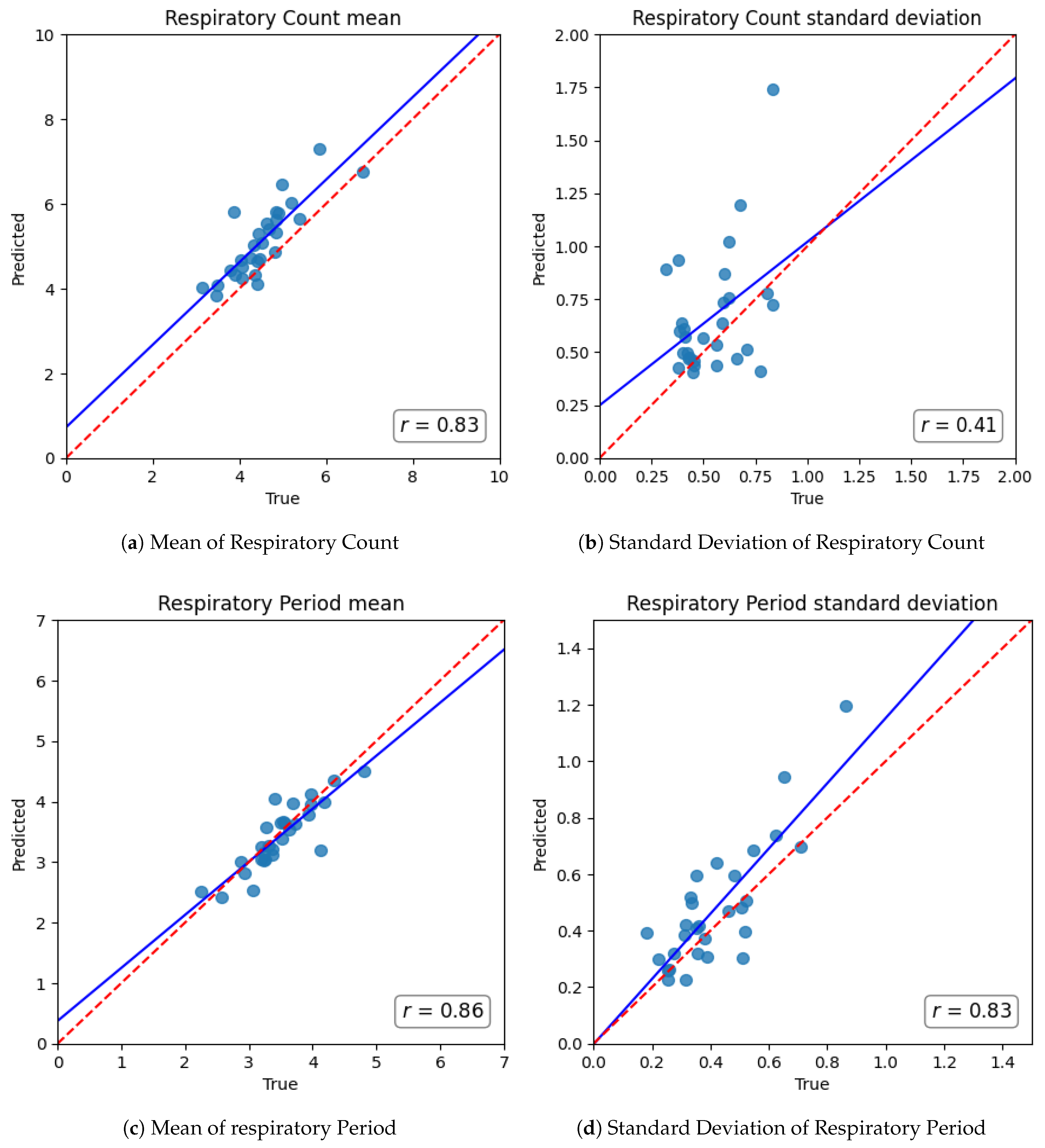

For performance evaluation, we focused on two downstream metrics derived directly from the segmentation output: Respiratory Count and Respiratory Period. Respiratory Count is defined as the number of inhalation onsets per minute, which is equivalent to the respiratory rate. Respiratory Period is computed as the median interval between consecutive inhale onsets, capturing the duration of the respiratory cycle. The predicted values were compared against those computed from the ground-truth sensor annotations. We quantified performance using Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE). RMSE is more sensitive to large errors, which makes it suitable for evaluating clinical applicability, particularly in segments with irregular or disordered breathing, while MAE provides a direct measure of average prediction deviation.
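The difference between the two error measures is easy to see in a small sketch. The function names `rmse` and `mae` and the sample values are illustrative only and do not come from the reported results.

```python
import math


def rmse(pred, true):
    """Root mean squared error between two equal-length sequences."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, true)) / len(true))


def mae(pred, true):
    """Mean absolute error between two equal-length sequences."""
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)


# Hypothetical respiratory rates (breaths/min) for four segments:
# three exact predictions and one large miss of 6 breaths/min.
predicted = [15.0, 12.0, 18.0, 9.0]
reference = [15.0, 12.0, 18.0, 15.0]
print(mae(predicted, reference))   # 1.5
print(rmse(predicted, reference))  # 3.0
```

The single large miss doubles the RMSE relative to the MAE, which is why RMSE is the more informative of the two when occasional large errors in irregular segments matter.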

3.4. Task 2: Sleep-Stage Distribution Prediction

The second experimental task estimates the overall distribution of macro sleep stages across a subject's full night of sleep, relying exclusively on features derived from continuous nocturnal respiratory sounds. Consistent with prior work on wearable- and audio-based staging, we adopt a four-class mapping: Wake, Light (N1+N2), Deep (N3), and REM [14,31,32]. The original PSG-Audio dataset provides expert-labeled hypnograms according to AASM guidelines, in which each 30 s epoch is annotated as Wake, N1, N2, N3, or REM. For this study, we consolidated the two light non-REM stages (N1 and N2) into a single Light category, yielding four macro stages in total. This aggregation is widely adopted in prior research because N1 and N2 share similar physiological characteristics, and distinguishing them reliably from non-EEG modalities such as audio is challenging.

In this setting, our objective is to predict the proportion of total sleep time spent in each of the four stages, rather than to perform short-term epoch-by-epoch classification. To generate the predictive features, we used the output of the respiratory event segmentation module (Task 1). Specifically, we calculated the Respiratory Count and Respiratory Period for each 20 s audio segment across the entire night. These metrics were then aggregated across the complete sleep session to form a subject-level representation of an individual's nocturnal respiratory behavior. From each subject's full sleep episode recording, we extracted a total of 76 features, as described in Section 2.2.

For this task, the ground truth sleep stage proportions were derived directly from the expert-labeled sleep stages provided within the PSG-Audio dataset. The total duration of each subject’s recording was first determined, and then the accumulated time spent in Wake, Light, Deep, and REM stages was calculated. These accumulated times were subsequently converted into proportions of the total recording time, serving as the scalar target values for our regression models.
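Deriving the regression targets from a hypnogram might look like the sketch below. The label codes in `STAGE_MAP` and the function name `stage_proportions` are assumptions; the dataset's actual annotation codes may differ. Because every epoch lasts 30 s, the fraction of epochs equals the fraction of recording time.

```python
from collections import Counter

# Assumed annotation codes mapped to the four macro stages.
STAGE_MAP = {"W": "Wake", "N1": "Light", "N2": "Light", "N3": "Deep", "R": "REM"}
MACRO_STAGES = ("Wake", "Light", "Deep", "REM")


def stage_proportions(hypnogram):
    """Return the proportion of recording time per macro stage.

    hypnogram: sequence of per-epoch labels, one per 30 s epoch.
    """
    counts = Counter(STAGE_MAP[label] for label in hypnogram)
    total = sum(counts.values())
    return {stage: counts.get(stage, 0) / total for stage in MACRO_STAGES}
```

A ten-epoch toy hypnogram with two Wake, one N1, four N2, two N3, and one REM epoch yields targets of 0.2, 0.5, 0.2, and 0.1 for Wake, Light, Deep, and REM.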

To identify the most effective modeling approach, we compared seven regressors: Linear Regression (LR), Decision Tree Regressor (DTR), Random Forest Regressor (RFR), Support Vector Regression (SVR), k-Nearest Neighbors (kNN), Multi-Layer Perceptron (MLP), and TabPFN [24]. The first six models were implemented with scikit-learn [33] using the hyperparameters listed in Table 1, while TabPFN, a pretrained Transformer-based foundation model for tabular data, was used with its default pretrained weights. This suite spans linear, tree-based, kernel, instance-based, neural, and foundation-model approaches, providing a balanced basis to evaluate accuracy and data efficiency on our 76-feature representation.

To obtain an unbiased estimate of generalizability, we employed 5-fold subject-wise cross-validation. In each fold, the regressors were trained on data from 80% of the subjects and tested on the remaining 20%, so that no subject appeared in both the training and test sets. Model performance was then averaged across all five folds to assess generalizability to unseen individuals.
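A subject-wise split of this kind can be sketched without any library support as shown below. The function name `subject_wise_folds`, the fixed seed, and the round-robin assignment of shuffled subjects to folds are assumptions; the text does not describe how subjects were assigned.

```python
import random


def subject_wise_folds(subject_ids, n_folds=5, seed=0):
    """Yield (train_indices, test_indices) pairs with no subject in both sets.

    subject_ids: one subject identifier per sample.
    Subjects, not samples, are shuffled and dealt into folds, so every
    sample of a given subject lands on the same side of each split.
    """
    subjects = sorted(set(subject_ids))
    random.Random(seed).shuffle(subjects)
    for k in range(n_folds):
        test_subjects = set(subjects[k::n_folds])
        train_idx = [i for i, s in enumerate(subject_ids) if s not in test_subjects]
        test_idx = [i for i, s in enumerate(subject_ids) if s in test_subjects]
        yield train_idx, test_idx
```

With 287 subjects and one feature vector per subject, each test fold contains 57 or 58 subjects, matching the roughly 80%/20% split described above. Libraries such as scikit-learn offer grouped splitters that serve the same purpose.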

For evaluation, we computed the Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE) between the predicted and true sleep stage proportions for each of the four stages. These metrics provide direct, quantitative insight into the model’s capability to accurately approximate clinically meaningful sleep architecture solely based on comprehensive respiratory patterns.

5. Discussion

5.1. Clinical and Physiological Implications

This study demonstrates that subtle respiratory sound patterns carry significant information about sleep architecture, enabling accurate and non-intrusive sleep monitoring. By explicitly segmenting and analyzing respiratory cycles, our framework achieves a level of performance and interpretability that compares favorably with prior audio-based sleep staging approaches. Our findings reinforce long-standing observations that respiration changes with sleep depth: breathing tends to become slower and more regular in deep N3 sleep, whereas it is more irregular during REM and wakefulness [34]. Our system exploits these physiological differences to estimate overall sleep stage composition directly from respiratory sounds.

The high fidelity of our respiratory segmentation module is fundamental to the framework’s success. Our fine-tuned Transformer model accurately distinguished inhale/exhale phases, even in realistic, noisy conditions, significantly outperforming classical signal-processing methods. The marked reduction in error for both respiratory rate and cycle duration indicates that deep learning can overcome limitations of earlier heuristic approaches, which often required high-quality audio or close-contact sensors to function reliably [14]. This advance is crucial because it ensures that downstream features reflect physiological respiration rather than artifacts. Practically, the ability to track respiratory rate and variability with sub-minute resolution means our system can capture transient respiratory irregularities that might indicate sleep instability or arousals. This granularity was difficult to attain with traditional methods, which often faltered in low signal-to-noise settings [14]. Our approach, by contrast, maintained accuracy across a full night, suggesting robust handling of real-world respiratory variability.
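To make the link between segmentation output and respiratory rate or variability concrete, the sketch below derives cycle durations from labeled segments. It rests on several assumptions that are not specified in this section: segments are given as hypothetical `(start_s, end_s, label)` tuples, a respiratory cycle is taken as the interval between consecutive inhale onsets, and variability is summarized by the coefficient of variation of the cycle period. It does not reproduce our actual feature set.

```python
import statistics

def cycle_durations(segments):
    """Durations (s) between consecutive inhale onsets (assumed cycle definition)."""
    onsets = [start for start, _end, label in segments if label == "inhale"]
    return [b - a for a, b in zip(onsets, onsets[1:])]

def respiratory_summary(segments):
    """Rate and variability over one analysis window; None if too few cycles."""
    durations = cycle_durations(segments)
    if len(durations) < 2:
        return None
    mean_period = statistics.mean(durations)
    return {
        "rate_bpm": 60.0 / mean_period,       # breaths per minute
        "mean_period_s": mean_period,         # average cycle duration
        "period_cv": statistics.stdev(durations) / mean_period,  # relative variability
    }

# Hypothetical, perfectly regular breathing with a 4 s cycle.
segments = [
    (0.0, 1.5, "inhale"), (1.5, 3.5, "exhale"), (3.5, 4.0, "silence"),
    (4.0, 5.5, "inhale"), (5.5, 7.5, "exhale"), (7.5, 8.0, "silence"),
    (8.0, 9.5, "inhale"), (9.5, 11.5, "exhale"), (11.5, 12.0, "silence"),
    (12.0, 13.5, "inhale"),
]
summary = respiratory_summary(segments)
```

Applying such a summary to short sliding windows is one way sub-minute tracking could be realized; an irregular stretch of breathing would show up as a rise in `period_cv`.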

Building on this solid respiratory foundation, the sleep stage proportion estimator yielded promising accuracy. The model’s predictions for time spent in Wake, Light, Deep, and REM sleep were within only a few percentage points of the true values, which is notable given the use of a single audio modality. The particularly low error for Deep sleep is significant and likely stems from the distinctive respiratory signatures of slow-wave sleep, which is characterized by pronounced regularity and slower respiration [34]. In essence, our findings support the view that respiratory sound alone is a strong proxy for sleep architecture, echoing earlier studies showing that different sleep stages manifest unique sound and respiratory profiles [35]. The ability to estimate sleep architecture from audio could have substantial clinical value; for example, capturing reductions in REM or Deep sleep proportion (often associated with aging or disorders) might not require full PSG and could potentially be achieved with a bedroom microphone.

Moreover, by outputting an easily interpretable summary of sleep (percentages of each stage), our system aligns with how clinicians and sleep researchers typically evaluate sleep quality, namely through stage distribution and sleep efficiency rather than raw epoch-by-epoch labels. While minute-by-minute sleep stage classification models can also derive these proportions by aggregating epoch-level predictions over a full night, most prior end-to-end approaches offer limited insight into why a certain stage was predicted for a given epoch, as they often rely on black-box mappings from sound to labels [14]. In contrast, our framework’s predictions can be directly traced back to concrete, physiologically meaningful features, such as a higher average respiratory rate or greater variability in respiratory period. This explicit link significantly enhances trust and transparency, which is particularly vital in medical contexts, allowing clinicians to understand the physiological basis of the assessment.
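For reference, the aggregation of epoch-level labels into stage proportions mentioned above can be sketched as follows. The label strings and the function name are hypothetical; the merging of N1 and N2 into Light follows the grouping used in this study, while the mapping of N3 to Deep is assumed from the AASM stage definitions.

```python
from collections import Counter

# Map AASM stages to the four coarse stages (N1 and N2 merged into Light).
TO_COARSE = {"W": "Wake", "N1": "Light", "N2": "Light", "N3": "Deep", "REM": "REM"}
COARSE_STAGES = ("Wake", "Light", "Deep", "REM")

def stage_proportions(epoch_labels):
    """Fraction of epochs spent in each coarse stage over one night."""
    counts = Counter(TO_COARSE[label] for label in epoch_labels)
    total = sum(counts.values())
    return {stage: counts.get(stage, 0) / total for stage in COARSE_STAGES}

# Hypothetical night of 10 scored epochs (illustrative only).
epochs = ["W", "W", "N1", "N2", "N2", "N2", "N2", "N3", "N3", "REM"]
props = stage_proportions(epochs)
```

The same function applies to ground-truth hypnograms and to the output of an epoch-level classifier, which is what makes proportion-level comparison between the two kinds of model possible.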

5.2. Technical Advances of Transformer-Based Segmentation

To better contextualize these improvements, we highlight the specific technical advances of our segmentation approach. Traditional methods such as FFT-based frequency estimation or envelope peak detection rely on hand-crafted heuristics, operate on short local windows, and are easily confounded by noise or irregular respiration [7,29]. Such methods often misidentify respiratory cycles in the presence of snoring, background noise, or variable respiratory patterns, leading to substantial errors in downstream metrics.

In contrast, the Transformer-based model utilized here, built on the Whisper architecture [19], incorporates self-attention mechanisms that capture long-range temporal dependencies and complex dynamics across entire segments of sleep audio [21]. Pretrained on massive speech datasets, the model inherits robust feature representations that transfer effectively to respiratory sounds, improving resilience to environmental variability. Fine-tuning adapts these representations for precise inhale/exhale/silence classification, while majority voting across overlapping frames enforces physiologically plausible segmentation boundaries and mitigates transient noise-induced errors.
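The smoothing effect of majority voting across overlapping frames can be illustrated with a small sketch. The exact voting scheme is not detailed in this section, so the layout below is an assumption: each analysis window emits one label per frame, windows are shifted by a fixed hop, and the final label of a frame is the most frequent label among all windows covering it. Names and example values are hypothetical.

```python
from collections import Counter, defaultdict

def majority_vote(window_preds, hop):
    """window_preds[k] holds per-frame labels for the window starting at frame k * hop."""
    votes = defaultdict(Counter)
    for k, labels in enumerate(window_preds):
        for j, label in enumerate(labels):
            votes[k * hop + j][label] += 1
    # Ties resolve to the label that was voted first (Counter insertion order).
    return [votes[i].most_common(1)[0][0] for i in sorted(votes)]

# Hypothetical predictions: windows of 3 frames with a hop of 1 frame.
window_preds = [
    ["inhale", "inhale", "exhale"],   # frames 0-2; last label is a transient error
    ["inhale", "inhale", "exhale"],   # frames 1-3
    ["inhale", "exhale", "exhale"],   # frames 2-4
    ["exhale", "exhale", "exhale"],   # frames 3-5
]
smoothed = majority_vote(window_preds, hop=1)
```

In this toy case the first window mislabels frame 2 as exhale, but the two other windows covering that frame outvote it, so the inhale-to-exhale boundary stays at a single, consistent position.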

These design choices explain the superior accuracy observed in our experiments. Empirically, the proposed approach achieved substantially lower RMSE and MAE in estimating both respiratory counts and periods compared to classical baselines, demonstrating that deep sequence models can overcome the fragility of traditional signal-processing pipelines [14,17]. Furthermore, the explicit segmentation outputs allow the derivation of clinically interpretable metrics that directly inform sleep stage estimation, bridging the gap between raw acoustic patterns and meaningful physiological insights.

Overall, the integration of Transformer-based segmentation into our framework offers a powerful, explainable, and scalable alternative to classical methods, enabling reliable capture of fine-grained respiratory patterns that are essential for sleep architecture analysis.

5.3. Limitations and Future Directions

This study has several limitations that should be acknowledged when interpreting the findings. First, our evaluation relied exclusively on a single public dataset (PSG-Audio). Although subject-wise cross-validation reduces optimistic bias, true generalizability across recording devices, environments, and populations remains unproven. Moreover, the cohort may not fully represent the diversity of age groups, BMI distributions, or severities of sleep-disordered breathing, raising the possibility of subgroup-specific performance differences. In addition, our analysis was based on single-night recordings, without assessing night-to-night variability or linking predictions to established clinical outcomes such as the apnea–hypopnea index, sleep efficiency, or patient-reported symptoms. Finally, real-world deployment factors (including robustness to commodity microphones, far-field bedroom acoustics, on-device latency, and privacy considerations) were not evaluated in this work. Addressing these aspects will be essential for translation into daily use.

From a technical perspective, the framework is intentionally restricted to respiratory audio. While this design emphasizes transparency and deployability, it may limit robustness in segments with weak respiratory sounds, substantial background noise, or overlapping sounds such as snoring and speech. Incorporating complementary low-burden modalities (e.g., accelerometry, PPG, radar) could improve stability while preserving interpretability. Another limitation lies in the ground-truth labels: polysomnographic staging is known to exhibit inter-scorer variability, and our aggregation of N1 and N2 into a single Light stage may obscure physiologically meaningful differences. Finally, the pipeline is sequential, so segmentation errors can propagate to feature extraction and regression without explicit uncertainty quantification. Future work should explore uncertainty-aware prediction, label-noise sensitivity analyses, and finer-grained staging.

6. Conclusions

In this study, we developed and evaluated a novel, interpretable, and robust framework for estimating sleep stage proportions directly from nocturnal respiratory audio. Our modular pipeline, which incorporates precise respiratory sound segmentation, comprehensive feature extraction, and stage-specific regression models, demonstrated superior performance compared to conventional methods in respiratory analysis and yielded promising accuracy in predicting Wake, Light, Deep, and REM sleep proportions.

A key strength of our approach lies in its inherent transparency and physiological interpretability. By explicitly modeling and quantifying key respiratory dynamics, our system offers a path beyond opaque black-box predictions, providing clinically meaningful insights into how respiratory patterns may correlate with sleep architecture. This can enable a deeper understanding for clinicians and may facilitate user trust in home-based monitoring solutions. The method’s robustness across varying respiratory patterns and its ability to generalize across subjects in cross-validation suggest potential for practical, real-world application.

This work represents a promising step towards more accessible, contact-free, and explainable sleep health monitoring. Building upon this foundation, future research will focus on validating the system on more diverse populations, integrating multi-modal physiological signals for enhanced accuracy, and optimizing the framework for efficient, real-time deployment on consumer-grade hardware. Ultimately, this work aims to contribute to making comprehensive sleep assessment more widely accessible, supporting proactive health management and potentially improving the quality of life for many.