Seismic Intensity Prediction with a Low-Computational-Cost Transformer-Based Tracking Method

Abstract

1. Introduction

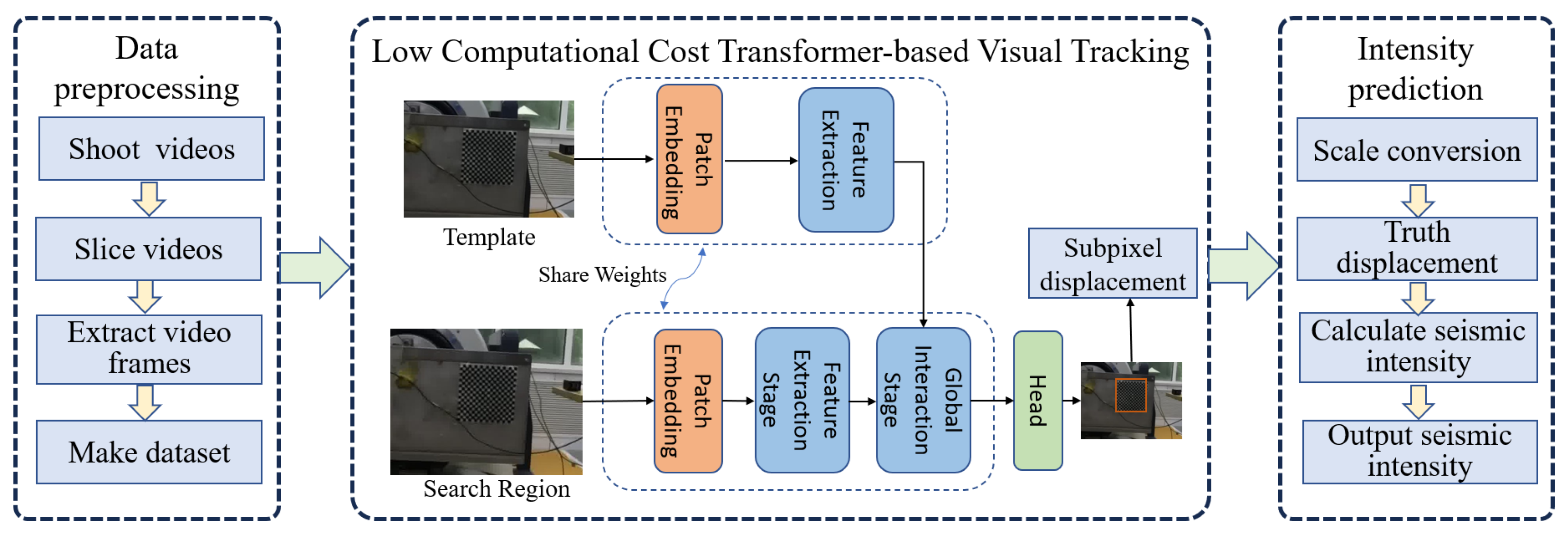

- A low-computational-cost transformer-based tracking method (LCCTV) is proposed for fast seismic intensity prediction from surveillance videos.

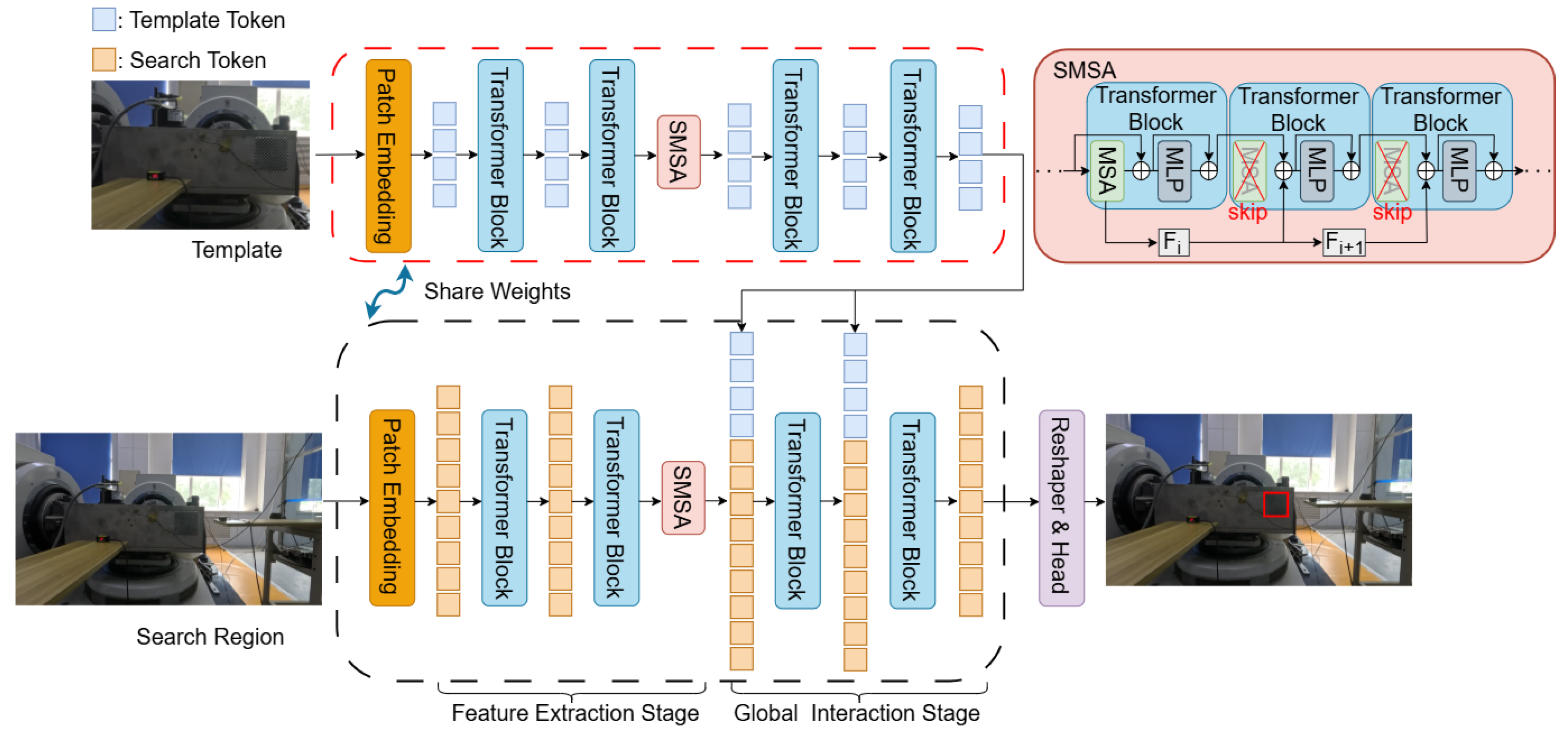

- An efficient module (SMSA, Skip Multi-head Self-Attention) is designed to skip the MSA (Multi-head Self-Attention) computation in layers with high similarity. This reduces redundant self-attention operations and further lowers the overall computational cost of the model.

- An earthquake video dataset captured in laboratory environments is introduced as an open benchmark. On this dataset, the proposed LCCTV method outperforms the compared state-of-the-art trackers, reaching the highest average intensity-prediction accuracy (80.5%). It can also run on edge devices in real time, demonstrating its practicality and effectiveness for earthquake intensity prediction.
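The SMSA contribution listed above (skipping MSA in layers with high similarity) can be illustrated with a minimal sketch. The text here does not say how similarity is measured, what threshold is used, or what replaces a skipped attention step, so everything below is an assumption: cosine similarity between the flattened attention outputs of adjacent layers, a hypothetical threshold of 0.95, and a skipped layer that keeps only its residual path and MLP. The names `select_skippable_layers` and `forward` are illustrative and not taken from the authors' code, and the toy sub-layers are stand-ins rather than real attention.

```python
import math
from typing import Callable, List, Sequence, Set, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_skippable_layers(attn_outputs: Sequence[Vector], threshold: float) -> Set[int]:
    """Indices of layers whose attention output is at least `threshold`-similar
    to the previous layer's output (assumed skipping criterion)."""
    return {
        i
        for i in range(1, len(attn_outputs))
        if cosine_similarity(attn_outputs[i - 1], attn_outputs[i]) >= threshold
    }


def forward(
    tokens: Vector,
    attn_layers: Sequence[Layer],
    mlp_layers: Sequence[Layer],
    skip: Set[int],
) -> Tuple[Vector, int]:
    """Run a residual encoder stack. In skipped layers the attention branch is
    bypassed and only the MLP branch runs. Returns (output, attention calls)."""
    x = list(tokens)
    attn_calls = 0
    for i, (attn, mlp) in enumerate(zip(attn_layers, mlp_layers)):
        if i not in skip:
            x = [xi + ai for xi, ai in zip(x, attn(x))]
            attn_calls += 1
        x = [xi + mi for xi, mi in zip(x, mlp(x))]
    return x, attn_calls


# Toy calibration data: layer 1 nearly repeats layer 0, layer 2 differs.
calibration = [[1.0, 0.0], [0.99, 0.01], [0.0, 1.0]]
skip = select_skippable_layers(calibration, threshold=0.95)

# Stand-in sub-layers (not real attention): scale the input / add nothing.
toy_attn = [lambda x: [0.1 * v for v in x]] * 3
toy_mlp = [lambda x: [0.0 for _ in x]] * 3
output, attn_calls = forward([1.0, 2.0], toy_attn, toy_mlp, skip)
print(skip, attn_calls)
```

In this toy run only the middle layer is flagged, so attention is evaluated in two of the three layers. A real tracker would replace the stand-in callables with actual MSA and MLP blocks and would pick the skip set from measured layer statistics.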

2. Data Description and Processing

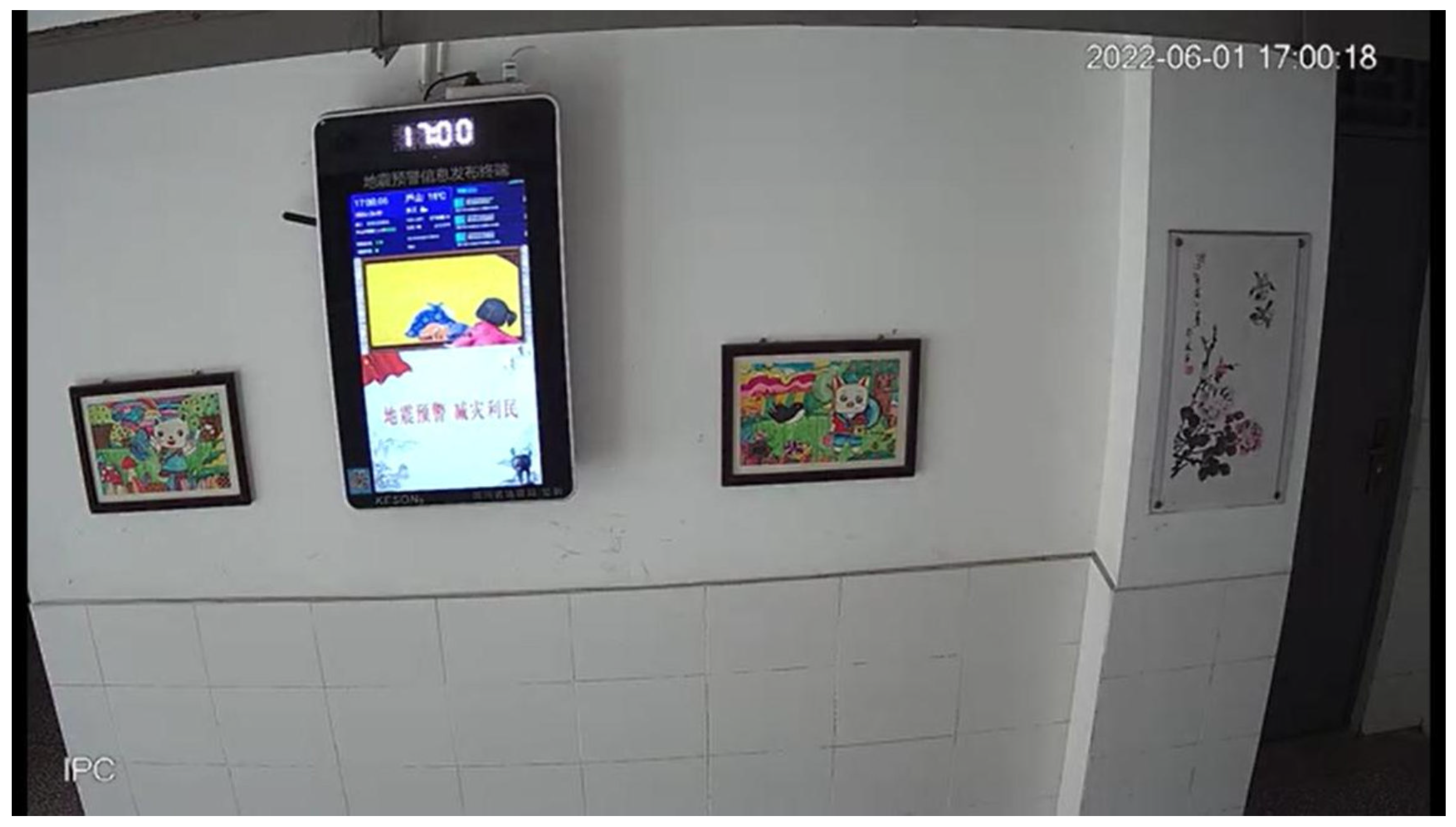

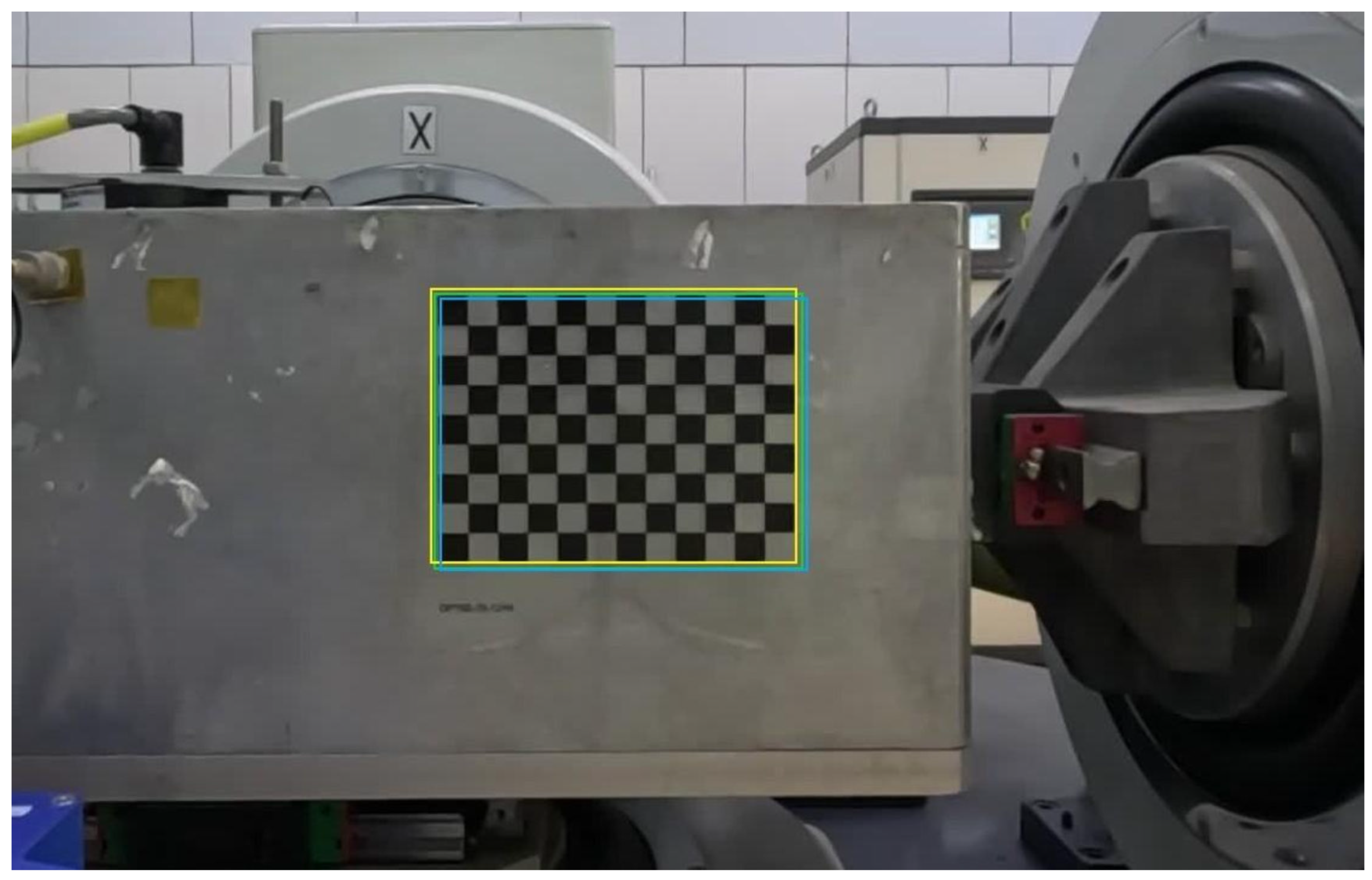

2.1. Data Collection

2.2. Data Processing

3. Methods

3.1. Overview

3.2. Low-Computational-Cost Transformer-Based (LCCTV) Visual Tracking

3.2.1. Feature Extraction

3.2.2. Global Interaction

3.2.3. Head and Loss

3.3. Earthquake Intensity Prediction

4. Experiment

4.1. Experimental Environment

4.2. Implementation Details

4.3. Algorithm Comparison

4.3.1. Generic Tracking Benchmark

4.3.2. Seismic Dataset Evaluation

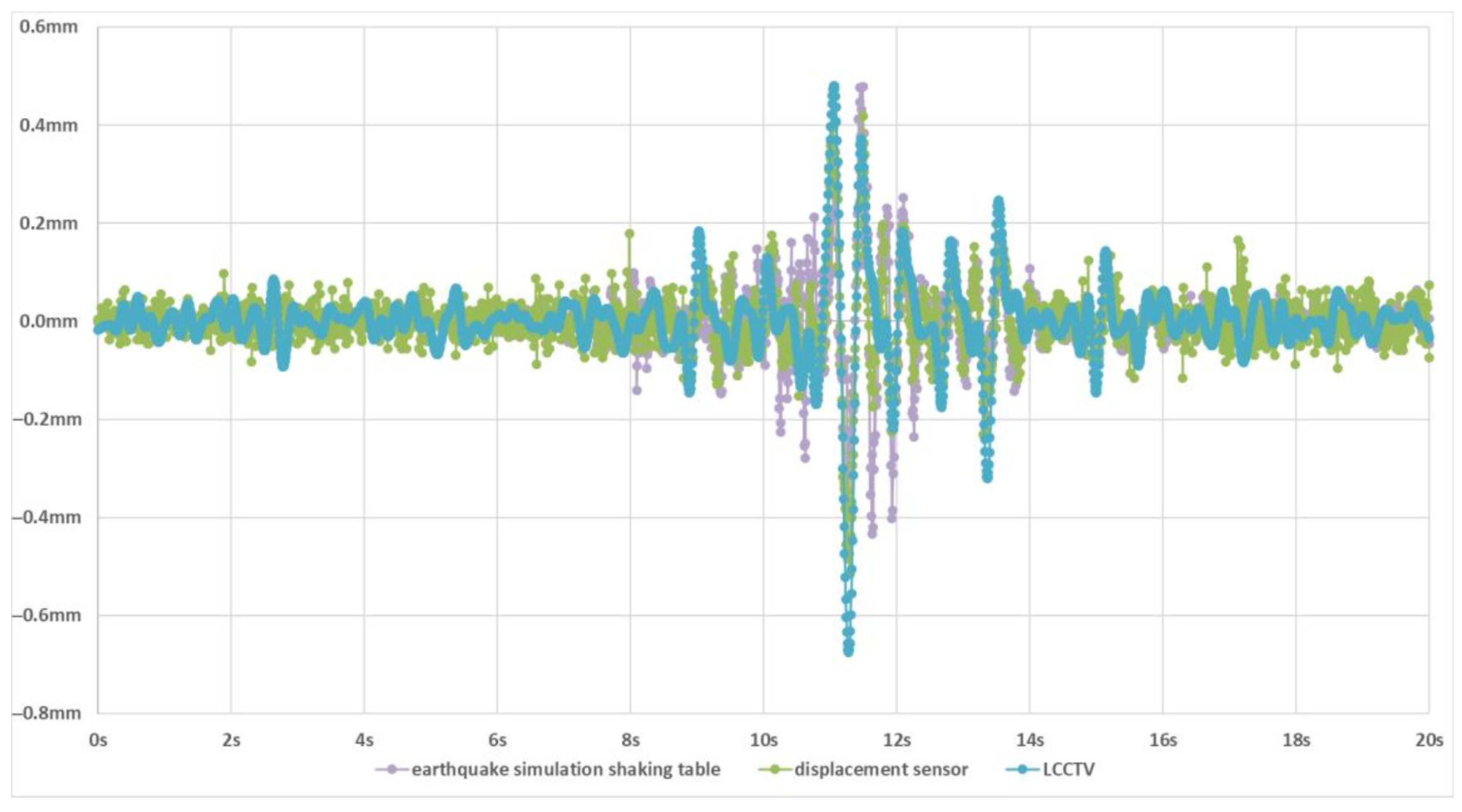

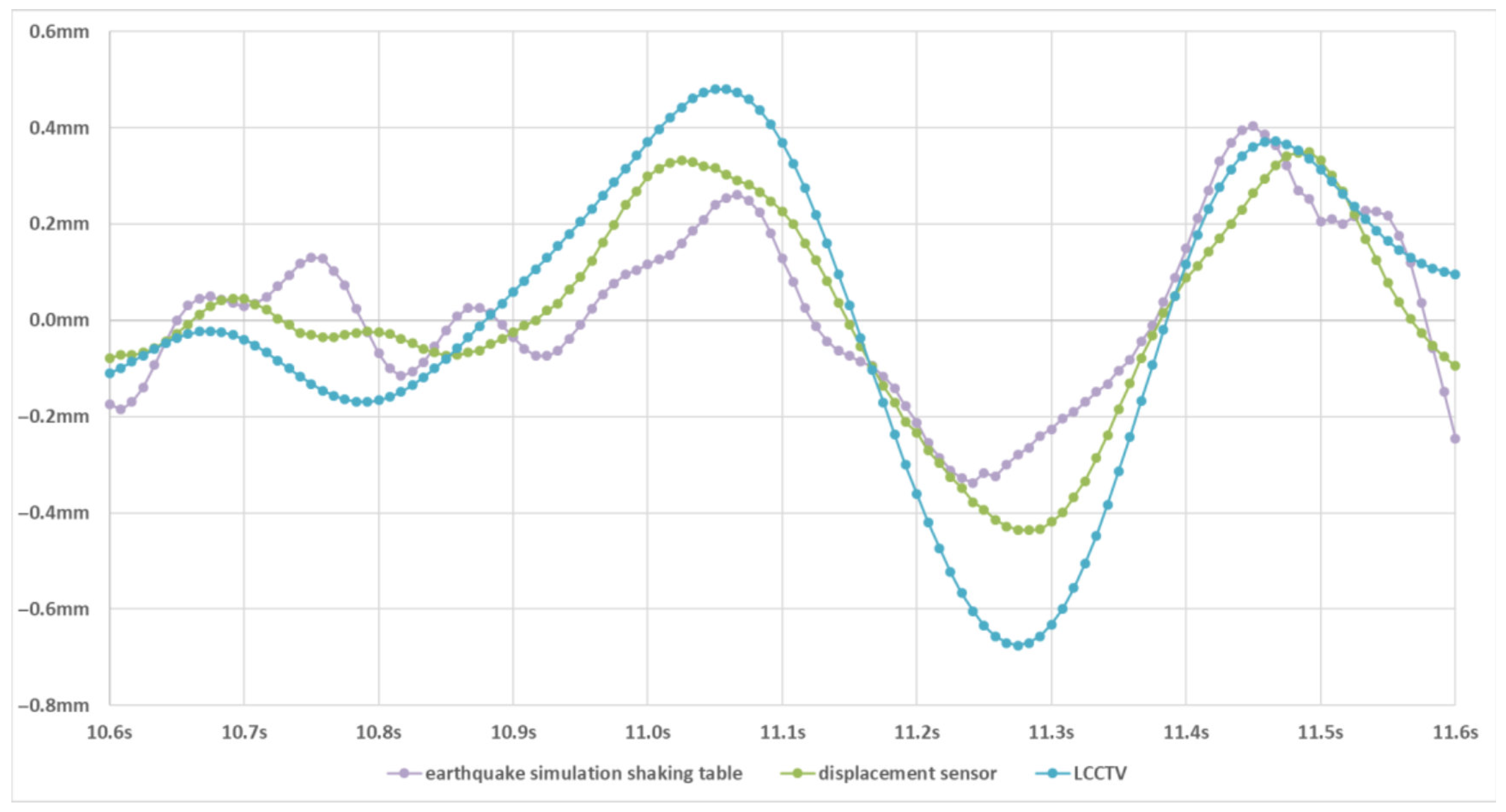

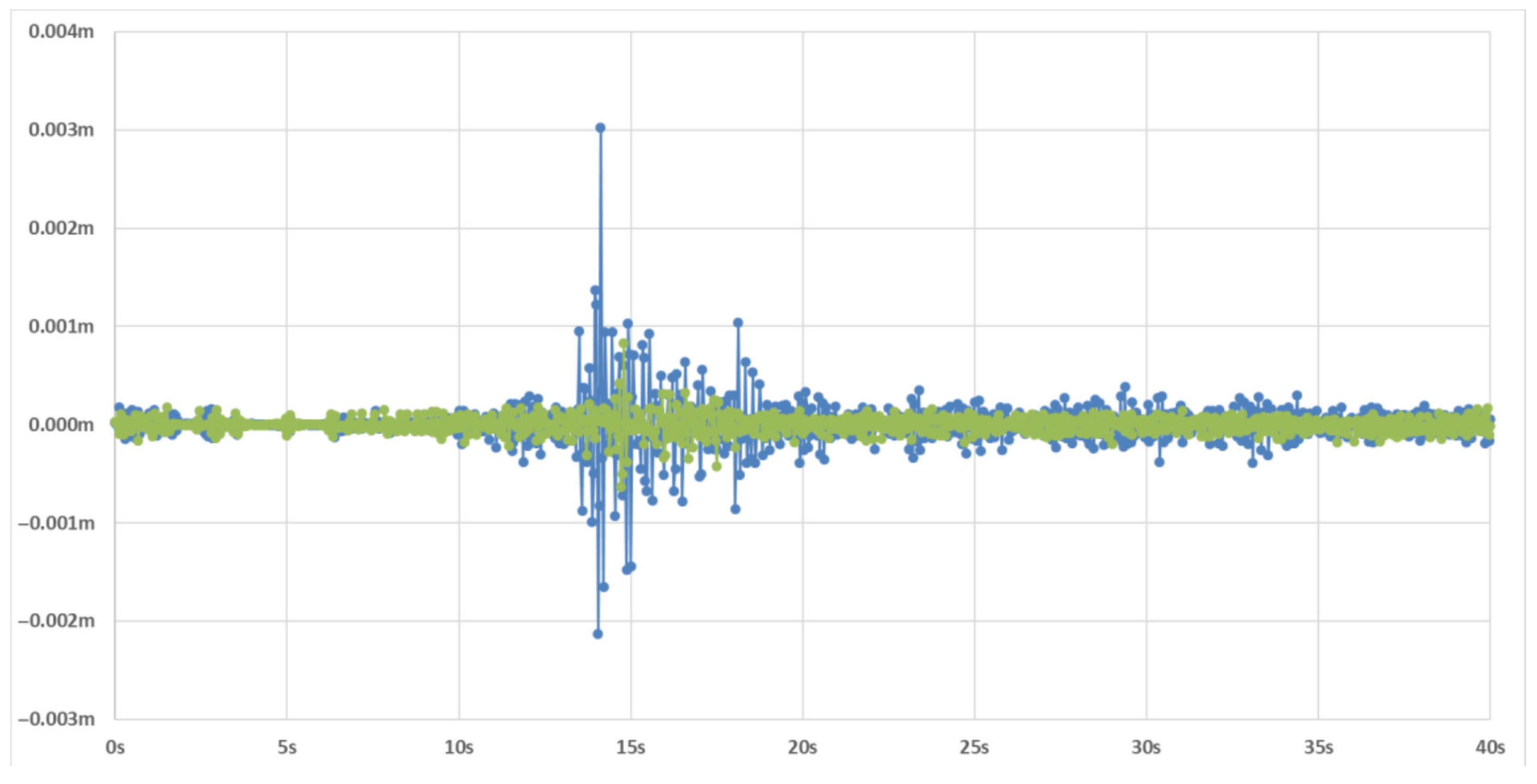

4.3.3. Visualization and Analysis of Earthquake Waveform Diagrams

4.3.4. Analysis of Real Earthquake Scenarios

4.4. Ablation Study

4.5. Failure Case and Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Kontogianni, V.A.; Stiros, S.C. Earthquakes and seismic faulting: Effects on tunnels. Turk. J. Earth Sci. 2003, 12, 153–156.

2. Sharma, S.; Judd, W.R. Underground opening damage from earthquakes. Eng. Geol. 1991, 30, 263–276.

3. Scislo, L. High activity earthquake swarm event monitoring and impact analysis on underground high energy physics research facilities. Energies 2022, 15, 3705.

4. Schaumann, M.; Gamba, D.; Morales, H.G.; Corsini, R.; Guinchard, M.; Scislo, L.; Wenninger, J. The effect of ground motion on the LHC and HL-LHC beam orbit. Nucl. Instrum. Methods Phys. Res. Sect. A Accel. Spectrometers Detect. Assoc. Equip. 2023, 1055, 168495.

5. Wu, Y.M.; Zhao, L. Magnitude estimation using the first three seconds P-wave amplitude in earthquake early warning. Geophys. Res. Lett. 2006, 33.

6. Lee, J.; Khan, I.; Choi, S.; Kwon, Y.W. A smart IoT device for detecting and responding to earthquakes. Electronics 2019, 8, 1546.

7. Katakami, S.; Iwata, N. Enhancing Railway Earthquake Early Warning Systems with a Low Computational Cost STA/LTA-Based S-Wave Detection Method. Sensors 2024, 24, 7452.

8. Noda, S.; Iwata, N.; Korenaga, M. Improving the Rapidity of Magnitude Estimation for Earthquake Early Warning Systems for Railways. Sensors 2024, 24, 7361.

9. Cua, G.; Heaton, T. The Virtual Seismologist (VS) method: A Bayesian approach to earthquake early warning. In Earthquake Early Warning Systems; Springer: Berlin/Heidelberg, Germany, 2007; pp. 97–132.

10. Wu, T.; Liu, Z.; Yan, S. Detection and Monitoring of Mining-Induced Seismicity Based on Machine Learning and Template Matching: A Case Study from Dongchuan Copper Mine, China. Sensors 2024, 24, 7312.

11. Abdalzaher, M.S.; Soliman, M.S.; El-Hady, S.M. Seismic intensity estimation for earthquake early warning using optimized machine learning model. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5914211.

12. Sun, W.F.; Pan, S.Y.; Liu, Y.H.; Kuo-Chen, H.; Ku, C.S.; Lin, C.M.; Fu, C.C. A Deep-Learning-Based Real-Time Microearthquake Monitoring System (RT-MEMS) for Taiwan. Sensors 2025, 25, 3353.

13. Mousavi, S.M.; Ellsworth, W.L.; Zhu, W.; Chuang, L.Y.; Beroza, G.C. Earthquake transformer—An attentive deep-learning model for simultaneous earthquake detection and phase picking. Nat. Commun. 2020, 11, 3952.

14. Zhang, X.; Zhang, M.; Tian, X. Real-time earthquake early warning with deep learning: Application to the 2016 M 6.0 Central Apennines, Italy earthquake. Geophys. Res. Lett. 2021, 48, 2020GL089394.

15. Chen, Y.; Jiang, T.; Wu, W.; Jiang, J. Application of seismic damage rapid identification algorithm in Yingcheng M4.9 earthquake in Hubei Province. Earthq. Eng. Eng. Vib. 2023, 43, 229–238.

16. Du, H.; Zhang, F.; Lu, Y.; Lin, X.; Deng, S.; Cao, Y. Recognition of the earthquake damage to buildings in the 2021 Yangbi, Yunnan MS 6.4 earthquake area based on multi-source remote sensing images. J. Seismol. Res. 2021, 44, 490–498.

17. Wang, X.; Wittich, C.E.; Hutchinson, T.C.; Bock, Y.; Goldberg, D.; Lo, E.; Kuester, F. Methodology and validation of UAV-based video analysis approach for tracking earthquake-induced building displacements. J. Comput. Civ. Eng. 2020, 34, 04020045.

18. Hong, C.S.; Wang, C.C.; Tai, S.C.; Chen, J.F.; Wang, C.Y. Earthquake detection by new motion estimation algorithm in video processing. Opt. Eng. 2011, 50, 017202.

19. Huang, C.; Yao, T. Earthquake Detection Based on Video Analysis. Artif. Intell. Robot. Res. 2019, 8, 126–139.

20. Liu, Z.; Xue, J.; Wang, N.; Bai, W.; Mo, Y. Intelligent damage assessment for post-earthquake buildings using computer vision and augmented reality. Sustainability 2023, 15, 5591.

21. Yang, J.; Lu, G.; Wu, Y.; Peng, F. Method for Indoor Seismic Intensity Assessment Based on Image Processing Techniques. J. Imaging 2025, 11, 129.

22. Wen, W.; Xu, T.; Hu, J.; Ji, D.; Yue, Y.; Zhai, C. Seismic damage recognition of structural and non-structural components based on convolutional neural networks. J. Build. Eng. 2025, 102, 112012.

23. Jing, Y.; Ren, Y.; Liu, Y.; Wang, D.; Yu, L. Automatic extraction of damaged houses by earthquake based on improved YOLOv5: A case study in Yangbi. Remote Sens. 2022, 14, 382.

24. Zhu, J.; Tang, H.; Chen, X.; Wang, X.; Wang, D.; Lu, H. Two-stream beats one-stream: Asymmetric siamese network for efficient visual tracking. Proc. AAAI Conf. Artif. Intell. 2025, 39, 10959–10967.

25. Cui, Y.; Song, T.; Wu, G.; Wang, L. MixFormerV2: Efficient fully transformer tracking. Adv. Neural Inf. Process. Syst. 2023, 36, 58736–58751.

26. Vig, J. A multiscale visualization of attention in the transformer model. arXiv 2019, arXiv:1906.05714.

27. Vig, J.; Belinkov, Y. Analyzing the structure of attention in a transformer language model. arXiv 2019, arXiv:1906.04284.

28. Ye, B.; Chang, H.; Ma, B.; Shan, S.; Chen, X. Joint feature learning and relation modeling for tracking: A one-stream framework. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 341–357.

29. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

30. Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

31. GB/T17742-2020; The Chinese Seismic Intensity Scale. National Standardization Administration of the People’s Republic of China: Beijing, China, 2020.

32. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

33. Chen, X.; Ding, M.; Wang, X.; Xin, Y.; Mo, S.; Wang, Y.; Han, S.; Luo, P.; Zeng, G.; Wang, J. Context autoencoder for self-supervised representation learning. Int. J. Comput. Vis. 2024, 132, 208–223.

34. Huang, L.; Zhao, X.; Huang, K. GOT-10k: A large high-diversity benchmark for generic object tracking in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1562–1577.

35. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

36. Fan, H.; Lin, L.; Yang, F.; Chu, P.; Deng, G.; Yu, S.; Bai, H.; Xu, Y.; Liao, C.; Ling, H. LaSOT: A high-quality benchmark for large-scale single object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5374–5383.

37. Muller, M.; Bibi, A.; Giancola, S.; Alsubaihi, S.; Ghanem, B. TrackingNet: A large-scale dataset and benchmark for object tracking in the wild. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 300–317.

38. Kang, B.; Chen, X.; Wang, D.; Peng, H.; Lu, H. Exploring lightweight hierarchical vision transformers for efficient visual tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 9612–9621.

39. Kristan, M.; Leonardis, A.; Matas, J.; Felsberg, M.; Pflugfelder, R.; Kämäräinen, J.K.; Danelljan, M.; Zajc, L.Č.; Lukežič, A.; Drbohlav, O.; et al. The eighth visual object tracking VOT2020 challenge results. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 547–601.

40. Blatter, P.; Kanakis, M.; Danelljan, M.; Van Gool, L. Efficient visual tracking with exemplar transformers. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 1571–1581.

41. Chen, X.; Kang, B.; Wang, D.; Li, D.; Lu, H. Efficient visual tracking via hierarchical cross-attention transformer. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23 October 2022; pp. 461–477.

42. Borsuk, V.; Vei, R.; Kupyn, O.; Martyniuk, T.; Krashenyi, I.; Matas, J. FEAR: Fast, efficient, accurate and robust visual tracker. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 644–663.

43. Yan, B.; Peng, H.; Wu, K.; Wang, D.; Fu, J.; Lu, H. LightTrack: Finding lightweight neural networks for object tracking via one-shot architecture search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 15180–15189.

44. Cao, Z.; Fu, C.; Ye, J.; Li, B.; Li, Y. HiFT: Hierarchical feature transformer for aerial tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 15457–15466.

45. Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. ATOM: Accurate tracking by overlap maximization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 4660–4669.

46. Danelljan, M.; Bhat, G.; Shahbaz Khan, F.; Felsberg, M. ECO: Efficient convolution operators for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 6638–6646.

47. The China Earthquake Administration Released the Seismic Intensity Map of Magnitude 6.1 in Lushan, Sichuan Province. 2022. Available online: https://www.cea.gov.cn/cea/xwzx/fzjzyw/5661356/index.html (accessed on 4 August 2025).

| Methods | Source | LaSOT AUC | LaSOT PNorm | LaSOT P | Speed GPU (fps) | Speed OrinNX (fps) |
|---|---|---|---|---|---|---|
| LCCTV | Ours | 65.3 | 75.4 | 69.9 | 170 | 22 |
| AsymTrack-B [24] | AAAI’25 | 64.7 | 73.0 | 67.8 | 197 | 41 |
| MixFormerV2 [25] | NeurIPS’23 | 60.6 | 69.9 | 60.4 | 167 | 30 |
| HiT-Base [38] | ICCV’23 | 64.6 | 73.3 | 68.1 | 175 | 39 |
| E.T.Track [40] | WACV’23 | 59.1 | - | - | 53 | 21 |
| HCAT [41] | ECCVW’22 | 59.3 | 68.7 | 61.0 | 205 | 34 |
| FEAR [42] | ECCV’22 | 53.5 | - | 54.5 | 143 | 46 |
| LightTrack [43] | CVPR’21 | 53.8 | - | 53.7 | 110 | 38 |
| HiFT [44] | ICCV’21 | 45.1 | 52.7 | 42.1 | 213 | 50 |
| ATOM [45] | CVPR’19 | 51.5 | 57.6 | 50.5 | 83 | 21 |
| ECO [46] | CVPR’17 | 32.4 | 33.8 | 30.1 | 113 | 22 |

| Tracker | II | III | IV | V | VI | Average |
|---|---|---|---|---|---|---|
| LCCTV (ours) | 66.7 | 66.7 | 84.6 | 89.5 | 95.0 | 80.5 |
| AsymTrack-B [24] | 66.7 | 53.3 | 76.9 | 78.9 | 75.0 | 70.2 |
| MixFormerV2 [25] | 66.7 | 46.7 | 73.1 | 68.4 | 70.0 | 65.0 |
| HiT-Base [38] | 66.7 | 60.0 | 80.8 | 73.7 | 80.0 | 72.2 |
| E.T.Track [40] | 33.3 | 40.0 | 69.2 | 63.2 | 70.0 | 55.1 |
| HCAT [41] | 33.3 | 46.7 | 73.1 | 63.2 | 65.0 | 56.2 |
| FEAR [42] | 33.3 | 33.3 | 57.7 | 52.6 | 50.0 | 45.4 |
| LightTrack [43] | 33.3 | 40.0 | 61.5 | 57.9 | 55.0 | 49.6 |
| HiFT [44] | 0.0 | 26.7 | 46.2 | 42.1 | 50.0 | 33.0 |
| ATOM [45] | 33.3 | 33.3 | 50.0 | 47.4 | 45.0 | 41.8 |
| ECO [46] | 0.0 | 20.0 | 38.5 | 31.6 | 35.0 | 25.0 |
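The Average column in the tracker comparison above appears to be the unweighted mean of the five per-intensity accuracies, so each intensity degree counts equally regardless of how many videos it contains. This is an inference from the numbers, not something stated in the table. The short sketch below recomputes it for three trackers. Because the per-intensity values are already rounded to one decimal, a recomputed mean can differ from the printed one by 0.1 for some rows.

```python
from statistics import mean
from typing import Dict, List

# Per-intensity accuracies (%) for degrees II, III, IV, V, VI, copied from the table.
per_intensity: Dict[str, List[float]] = {
    "LCCTV (ours)": [66.7, 66.7, 84.6, 89.5, 95.0],
    "AsymTrack-B": [66.7, 53.3, 76.9, 78.9, 75.0],
    "HiT-Base": [66.7, 60.0, 80.8, 73.7, 80.0],
}


def macro_average(accuracies: List[float]) -> float:
    """Unweighted mean over intensity degrees, rounded to one decimal."""
    return round(mean(accuracies), 1)


averages = {name: macro_average(accs) for name, accs in per_intensity.items()}
for name, value in averages.items():
    print(f"{name}: {value}")
```

For these three rows the recomputed values match the Average column (80.5, 70.2, and 72.2).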

| Intensity Degree | Sample Size | Accuracy (%) | Variance | Std. Dev. | 95% Confidence Interval |
|---|---|---|---|---|---|
| II | 3 | 66.7 | 0.222 | 0.471 | (0.000,1.000) |
| III | 15 | 66.7 | 0.222 | 0.471 | (0.406,0.928) |
| IV | 26 | 84.6 | 0.130 | 0.361 | (0.700,0.992) |
| V | 19 | 89.5 | 0.094 | 0.307 | (0.747,1.000) |
| VI | 20 | 95.0 | 0.047 | 0.218 | (0.848,1.000) |
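The statistics in the table above are consistent with treating each video as a Bernoulli trial: variance p(1 - p), standard deviation as its square root, and a Student-t interval p ± t · s / √n clipped to [0, 1]. The table does not state the procedure, so this reading is an assumption checked only against the printed numbers. The counts of correct predictions are likewise inferred from accuracy × sample size, and the t quantiles are standard table values hard-coded to keep the sketch free of external dependencies.

```python
import math
from typing import Dict, Tuple

# Two-sided 95% Student-t critical values (0.975 quantile) keyed by degrees of freedom.
T_CRIT_975: Dict[int, float] = {2: 4.303, 14: 2.145, 18: 2.101, 19: 2.093, 25: 2.060}

# (correct predictions, sample size) per intensity degree; correct counts are inferred.
samples: Dict[str, Tuple[int, int]] = {
    "II": (2, 3),
    "III": (10, 15),
    "IV": (22, 26),
    "V": (17, 19),
    "VI": (19, 20),
}


def accuracy_stats(correct: int, n: int) -> Tuple[float, float, float, Tuple[float, float]]:
    """Return accuracy, Bernoulli variance, std. dev., and a clipped 95% t-interval."""
    p = correct / n
    variance = p * (1.0 - p)
    std = math.sqrt(variance)
    half_width = T_CRIT_975[n - 1] * std / math.sqrt(n)
    interval = (max(0.0, p - half_width), min(1.0, p + half_width))
    return p, variance, std, interval


stats = {degree: accuracy_stats(c, n) for degree, (c, n) in samples.items()}
for degree, (p, var, std, (low, high)) in stats.items():
    print(f"{degree}: acc={p:.3f} var={var:.3f} std={std:.3f} ci=({low:.3f},{high:.3f})")
```

With only three samples at intensity II, the unclipped interval is wider than [0, 1], which is why that row spans the whole range and carries little information.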

| Setting | MACs (G) | Params (M) | Speed GPU (fps) | LaSOT AUC |
|---|---|---|---|---|
| w/ SMSA | 14.15 | 54.88 | 170 | 65.3 |
| w/o SMSA | 16.01 | 62.13 | 152 | 64.6 |
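To make the ablation above easier to read, the relative effect of SMSA can be computed directly from the two rows. The sketch below is plain arithmetic on the table values, taking the variant without SMSA as the baseline. The dictionary keys are illustrative names, not identifiers from the authors' code.

```python
from typing import Dict

ablation: Dict[str, Dict[str, float]] = {
    "w/ SMSA": {"macs_g": 14.15, "params_m": 54.88, "fps_gpu": 170.0, "lasot": 65.3},
    "w/o SMSA": {"macs_g": 16.01, "params_m": 62.13, "fps_gpu": 152.0, "lasot": 64.6},
}


def relative_change(baseline: float, value: float) -> float:
    """Percentage change of `value` relative to `baseline`."""
    return (value - baseline) / baseline * 100.0


base, smsa = ablation["w/o SMSA"], ablation["w/ SMSA"]
changes = {
    key: relative_change(base[key], smsa[key])
    for key in ("macs_g", "params_m", "fps_gpu")
}
lasot_gain = smsa["lasot"] - base["lasot"]  # absolute difference in points

for key, value in changes.items():
    print(f"{key}: {value:+.1f}%")
print(f"LaSOT: {lasot_gain:+.1f} points")
```

On these numbers, SMSA lowers MACs and parameters by roughly 11.6% and 11.7%, raises GPU speed by about 11.8%, and the LaSOT score is 0.7 points higher.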

| Truth | II | III | III | III | III | III |
|---|---|---|---|---|---|---|
| LCCTV (ours) | III | IV | IV | II | IV | IV |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, H.; Bai, Z.; Bai, R.; Zhao, L.; Lin, M.; Han, Y. Seismic Intensity Prediction with a Low-Computational-Cost Transformer-Based Tracking Method. Sensors 2025, 25, 6269. https://doi.org/10.3390/s25206269
