Abstract

With the proliferation of mobile terminals and the rapid growth of network applications, fine-grained traffic identification has become increasingly challenging. Methods based on machine learning and deep learning have achieved remarkable results, but they heavily rely on the distribution of training data, which makes them ineffective in handling unseen samples. In this paper, we propose AG-ZSL, a zero-shot learning framework based on traffic behavior and attribute representations for general encrypted traffic classification. AG-ZSL primarily learns two mapping functions: one that captures traffic behavior embeddings from burst-based traffic interaction graphs, and the other that learns attribute embeddings from traffic attribute descriptions. Then, the framework minimizes the distance between these embeddings within the shared feature space. The gradient rejection algorithm and K-Nearest Neighbors are introduced to implement a two-stage method for general traffic classification. Experimental results on IoT datasets demonstrate that AG-ZSL achieves exceptional performance in classifying both known and unknown traffic, highlighting its potential for enhancing secure and efficient traffic management at the network edge.

1. Introduction

Traffic identification, critical for network management, plays a key role in improving the quality of service, preventing network intrusions and optimizing resource allocation [1,2,3,4]. In edge computing environments, where real-time processing and efficient resource management are essential, traffic classification faces unique challenges. The rapid emergence of privacy-enhancing technologies and protocols has further complicated this task [5]. Over 70% of network traffic now uses encryption algorithms to protect privacy [6], concealing the characteristics of traffic generated by malicious activities. Encrypted malicious traffic often appears statistically similar to benign traffic, making it difficult to classify using methods reliant on specific traffic patterns [7].

Furthermore, traditional traffic classification methods, primarily based on machine learning or deep learning, typically rely on supervised learning with pre-collected traffic data [8]. These approaches struggle to adapt to dynamic and resource-constrained edge computing environments, as they cannot encompass all known traffic categories. Moreover, with the proliferation of network protocols and services, the number of unknown traffic categories continues to grow [5]. Consequently, there is an urgent need for innovative approaches to traffic identification [9,10].

To address this issue, it is crucial to consider the prediction of unknown traffic during classification, a task commonly referred to as zero-shot learning (ZSL) [11]. One of the key concepts of ZSL is transfer learning [12], which involves adding an intermediate representation layer to bridge unknown and known classes, thereby leveraging knowledge from known classes to predict the categories of unseen samples.

Currently, research on zero-shot learning for encrypted traffic classification is relatively limited. Early studies primarily focused on the binary classification problem [9], dividing traffic into two categories: known traffic and unknown traffic. Although these methods consider the impact of unknown data, the practical value of binary classification remains controversial, as traffic classification requires finer-grained distinctions. Works [10,13,14] apply clustering algorithms to classify unknown traffic, but a common issue is the difficulty in determining the optimal number of clusters. Additionally, some researchers use reinforcement learning [15] and attention mechanisms [16] to identify unknown traffic. To address the data acquisition problem, studies [8] improve model performance by synthesizing unknown data. However, these methods lack interpretability.

Considering that ZSL often uses intermediate representations to connect unknown and known classes, a natural idea is to bridge them by studying shared characteristics. Notably, traffic is not naturally occurring; it is structured data designed by humans. Thus, traffic inherently contains common attributes that can be leveraged to transfer knowledge from seen classes to unseen classes. Specifically, the training data can be used to find unique patterns by learning relationships between datagrams and attributes, enabling fine-grained classification.

In this paper, we divide the task of identifying traffic into two stages. First, we determine whether the network traffic belongs to unseen classes. If it is identified as belonging to the unknown category, we further map the traffic to an attribute label (the description of the attribute label is presented in Section 4.1.2). Otherwise, we perform a fine-grained classification to identify the ground-truth label of the traffic. The gradient rejection strategy [10] and the KNN algorithm are introduced into the framework. The gradient rejection strategy is used to determine whether the traffic belongs to an unseen class, while KNN maps unknown traffic to attribute labels based on intermediate attribute representations. In summary, we propose a ZSL-based framework to solve the issue of identifying general encrypted traffic. The framework consists of two components: (1) Graph neural network (GNN) for traffic behavior embedding. Traffic bursts often reveal details of traffic interaction behavior [17]. Therefore, we first extract a traffic interaction graph based on bursts and then utilize a GNN to extract features, generating the representation of traffic behavior. (2) Traffic attribute description. Attributes represent the most fundamental information of traffic and are not changed by environmental factors. Attributes cover various aspects of traffic, such as actions, transmission patterns, packet rates, and other characteristics. An attribute description is a sequence composed of multiple attributes of the flow, which is then embedded using simple recurrent units (SRU) [18] to generate an attribute representation. The contributions of this paper are as follows:

- Conceptually, we propose a ZSL-based framework for general encrypted traffic classification that uses attribute representations as intermediates to address the challenge of predicting unknown categories. This framework enables knowledge transfer from known to unknown categories through attribute-based representations, effectively enhancing model generalization.

- Methodologically, the framework leverages traffic attribute representations to transform traditional classification labels into attribute labels that facilitate knowledge transfer. These attributes, which include basic traffic characteristics, are integrated with traffic behavior representations to enable robust classification of unknown categories.

- Innovatively, a novel burst-based traffic interaction graph is introduced to capture detailed traffic interaction features, where nodes represent datagrams and edges encode the interaction relationships between endpoints.

- Experimentally, extensive experiments on public datasets demonstrate that AG-ZSL achieves state-of-the-art performance in both fine-grained classification and zero-shot prediction.

2. Related Work

2.1. Traffic Classification

Research on traffic identification has been widely applied across various domains and can be broadly divided into three categories: statistics-based, machine learning-based, and deep learning-based approaches. For discussion purposes, we summarize the related works in Table 1.

Table 1.

Related works in encrypted traffic identification.

2.1.1. Statistical Methods

The earliest traffic classification methods were based on specific attribute values and statistics, such as port-based techniques and deep packet inspection. However, these methods relied heavily on prior knowledge, and with the increasing use of dynamic allocation protocols, they have gradually become less effective.

2.1.2. Machine Learning Methods

Machine learning methods primarily collected packet attribute values through side-channel information to classify traffic based on their states, which mitigates the impact of random content on accuracy. Taylor et al. [19] utilized packet sizes for training classifiers, while Al-Naami et al. [20] focused on temporal data. Panchenko et al. [21] used packet size and direction to classify traffic. These approaches rely heavily on expert knowledge and feature engineering, which leads to their ineffectiveness at classifying data with unknown labels.

2.1.3. Deep Learning Methods

Wang [22] was the first to apply computer vision techniques to traffic classification by converting datagram bytes into grayscale images, enabling CNNs to classify encrypted traffic. Building on this approach, Bhat et al. designed Var-CNN [7], which accounts for the multidimensional characteristics of traffic by using convolutional kernels with various sizes to extract features. Natural language processing algorithms have also been widely applied to traffic classification tasks. Luo et al. [23] leveraged transformer models to capture semantic relationships for traffic classification. Huoh et al. [24] analyzed the relationships between traffic bytes and packet interactions for application classification. Mimetic-All [25] used burst traffic and communication protocols as contextual inputs, serving as an additional modality for application classification. He et al. [26] applied ALBERT to encrypted traffic classification, achieving an accuracy of 93.27%. However, these methods did not fully consider the characteristics of datagram interactions, resulting in suboptimal model performance.

Compared to other models, GNNs are capable of handling non-Euclidean data, such as the relationships between packet length and direction of transmission in a flow. By mapping traffic into a graph structure, GNNs can capture features such as temporal and sequential relationships between datagrams. Additionally, GNNs handle variable-length traffic without the padding or truncation required by CNNs or RNNs, thus avoiding the resulting precision loss. To fully utilize the meta information embedded in packet data and packet order, researchers have begun adopting GNNs for traffic identification. Huoh et al. [27] were the first to convert encrypted traffic into a graph structure, preserving its temporal and structural information as well as overcoming the limitations of fixed input shapes required by traditional methods. This approach significantly improved classification accuracy. Shen et al. [28] captured the multidimensional interaction features between clients and servers through graph structures, transforming the DApp traffic classification problem into a graph classification task. Yang et al. [29] transformed the traffic sessions into graphs and proposed a semi-supervised classifier. Pang et al. [30] proposed a chained graph model to maintain the chained compositional sequence of the datagrams. Compared to previous methods, our approach emphasizes burst characteristics in the graph structure design, which more effectively highlights traffic interaction features.

2.2. Zero-Shot Learning

Zero-shot learning addresses the challenge of classifying unseen classes without labeled data by learning transferable semantic representations from known to unknown classes. In contrast, traditional deep learning-based traffic classification models are trained in a supervised manner using closed-world data, with performance typically reliant on class balance and the amount of labeled data available in the dataset [12,31].

Current research has explored the use of ZSL for classifying unknown encrypted traffic. Payap et al. [32] applied triplet networks in traffic classification, using contrastive learning to optimize the loss function and address challenges caused by network condition variations and data distribution inconsistencies. Hu et al. [8] proposed a framework that leveraged attributes from known classes and GAN-generated data to enhance the classification capability for unknown classes. Yang et al. [9] developed the GradBP algorithm, which detected novel and unseen traffic patterns using gradient backpropagation. Zhang et al. [10] introduced the HyperVision system, which utilized a flow interaction graph to detect unknown encrypted traffic. Wu et al. [16] proposed a model based on the self-attention mechanism to classify known and unknown traffic. Fu et al. [13] and Zhao et al. [14] both classified unknown traffic through clustering. In comparison to these methods, AG-ZSL connects known and unknown classes by intermediate semantic representations, and the use of the gradient rejection strategy can further enhance the interpretability of the approach.

3. Preliminary

In real-world scenarios, unseen traffic primarily originates from two sources. First, new applications or protocols generate unknown traffic, and second, traffic undergoes “morphing” after encryption, causing significant differences in feature distribution compared to training data, which increases the difficulty of traffic classification. However, some traffic features exhibit similarities during transmission, meaning that the characteristics of unknown traffic may partially overlap with those of known traffic. This provides a theoretical basis for knowledge transfer in zero-shot classification.

Compared to other methods, AG-ZSL additionally defines traffic attribute labels, which are attribute sets consisting of multiple attribute values that serve as transferable knowledge for unknown traffic classification. Network traffic needs to be fully represented, for which a burst-based traffic interaction graph is designed. The graph helps capture the temporal and spatial information within sessions and is learned by a GNN to generate the graph vector. Then, SRU generates the attribute vector based on the attribute labels of known traffic. In the model training phase, the objective of AG-ZSL is to improve the similarity between the traffic graph vector and its corresponding attribute vector. To avoid the impact of inconsistencies in data distribution on the training objective, we use the Euclidean distance [11] to measure the similarity between the two vectors. Below, we provide detailed explanations of the traffic interaction graph, attribute description, and objective of the framework.

3.1. Traffic Interaction Graph

The burst-based traffic interaction graph is used for a fine-grained representation of traffic. A burst is a collection of adjacent packets within a flow that have the same direction. From the application layer perspective, bursts reflect traffic characteristics and effectively illustrate traffic transmission patterns. Typically, when two endpoints communicate, the complete content is transmitted in the form of bursts. Therefore, bursts can capture the structural information and sequential relationships associated with traffic categories.

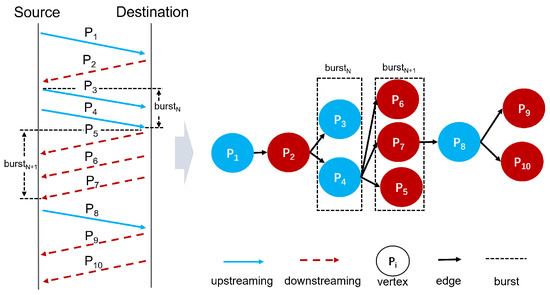

We design a traffic interaction graph based on bursts, as shown in Figure 1. In this graph, each node represents a packet, denoted as . The value of each node corresponds to the length of the associated packet. Upstream packets and downstream packets are marked in blue and red, respectively. Each edge represents the relationship between two consecutive bursts. Importantly, each packet is connected with the last packet of the previous burst. For instance, in Figure 1, the last packet of is , which is connected to every packet in . The dashed lines indicate the boundaries of bursts. Additionally, the directions of the arrows indicate the chronological relationship between packets.

Figure 1.

Traffic interaction graph based on burst.
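The construction described above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names `split_bursts` and `build_burst_graph` are hypothetical, packet direction is encoded as the sign of the packet length (positive for upstream, negative for downstream, an assumption made here for compactness), and only the cross-burst edges stated in the text are created.

```python
from itertools import groupby


def split_bursts(signed_lengths):
    """Group consecutive same-direction packets into bursts of packet indices.

    Direction is taken from the sign of each length (assumption of this sketch).
    """
    bursts, start = [], 0
    for _, group in groupby(signed_lengths, key=lambda s: s > 0):
        size = len(list(group))
        bursts.append(list(range(start, start + size)))
        start += size
    return bursts


def build_burst_graph(signed_lengths):
    """Return (node_values, edges, bursts) for one flow.

    Node values are packet lengths. Each directed edge goes from the last
    packet of the previous burst to a packet of the current burst, so edge
    direction follows chronological order.
    """
    bursts = split_bursts(signed_lengths)
    node_values = [abs(s) for s in signed_lengths]
    edges = []
    for previous, current in zip(bursts, bursts[1:]):
        anchor = previous[-1]  # last packet of the previous burst
        edges.extend((anchor, packet) for packet in current)
    return node_values, edges, bursts


# Hypothetical flow: two upstream packets, three downstream, one upstream.
values, edges, bursts = build_burst_graph([100, 200, -1500, -1500, -300, 60])
```

Because the edge list grows with the number of packets rather than a fixed input shape, flows of any length map to a graph without padding or truncation.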

3.2. Attribute Description

Traditional methods usually focus on single tasks, such as classifying network types, applications, or anonymity, with each task relying on some attributes. Since traffic is human-defined data, typically generated by terminal activities and transmitted over the network only when protocol requirements are met, the same category often exhibits similar patterns. For example, datasets used for service classification can also be applied in application identification. By combining attributes from multiple tasks into a unified attribute description, the commonalities among these traffic types can be better revealed and leveraged for more fine-grained traffic classification.

To describe traffic more accurately, it is essential to analyze the factors that may affect datagrams during flow generation and transmission. Following [8], traffic actions and protocols are crucial for traffic transmission patterns. Therefore, we consider and as two factors for the traffic attribute description. In addition, through the analysis of existing data, we find significant differences between normal and malicious traffic in terms of transmission mode and frequency (the analysis of transmission mode and frequency is presented in Section 5.2.3). Hence, in this framework, we define the traffic attribute description to include five factors: , , , , and . Among these, refers to the behavior generating the traffic, refers to the transmission pattern of the traffic, indicates whether the traffic is normal or anomalous, refers to the type of protocol applied in the traffic, and refers to the number of packets transmitted per unit time. The corresponding attribute values for each factor are shown in Table 2.

Table 2.

Ground-truth label and attribute labels used in datasets.

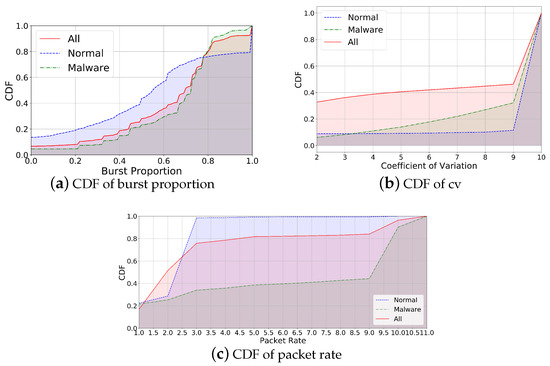

Among these attributes, , , and are objective attributes that can be directly labeled. However, and require further data statistics and analysis. The attribute values for include burst, spike, and steady. Here, burst indicates a high proportion of bursts within a flow, spike represents noticeable peaks in traffic transmission, and steady denotes a generally stable flow during transmission. The thresholds for spike and steady are determined by calculating the Coefficient of Variation (CV).

Here, represents the standard deviation of all packets within a flow, and represents the average packet size within the flow. refers to the number of packets transmitted per unit time in a flow. The packet rate is defined as the number of packets per second. includes two attribute values: fast and slow. In Section 5.2.3, we will analyze the threshold corresponding to the attribute value of and .
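As a concrete illustration, the two statistics can be computed per flow as below, taking the coefficient of variation in its standard form (standard deviation of the packet sizes divided by their mean). The helper names, the use of the population standard deviation, and the handling of zero-duration flows are assumptions of this sketch; the thresholds that turn these numbers into attribute values are not reproduced here.

```python
import statistics


def coefficient_of_variation(packet_sizes):
    """CV = standard deviation / mean of the packet sizes in one flow.

    The population standard deviation is used (assumption of this sketch).
    """
    mean = statistics.fmean(packet_sizes)
    if mean == 0:
        return 0.0
    return statistics.pstdev(packet_sizes) / mean


def packet_rate(timestamps):
    """Packets per second over the lifetime of one flow.

    A flow whose packets share one timestamp is treated as lasting one
    second (assumption of this sketch, to avoid division by zero).
    """
    duration = max(timestamps) - min(timestamps)
    if duration <= 0:
        return float(len(timestamps))
    return len(timestamps) / duration


# Hypothetical flow: packet sizes in bytes and arrival times in seconds.
cv = coefficient_of_variation([2, 4, 4, 4, 5, 5, 7, 9])
rate = packet_rate([0.0, 0.5, 1.0, 2.0])
```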

3.3. Goal

Let = represent known labels, and represent unknown labels. Similarly, represents known traffic, and represents unknown traffic. The attribute label is defined as A = , where with . represents the specific value of the attribute. Assuming the training set contains N samples, each sample consists of seen traffic , ground-truth label , and the attribute label , denoted as .

The goal of AG-ZSL is to train two models: the traffic behavior representation model F and the attribute representation model M, with the training objectives outlined as follows:

where denotes the Euclidean distance. represents the known traffic behavior embedding, and represents the attribute embedding. The two models are trained by minimizing the distance between their generated embeddings, ensuring semantic alignment between the input traffic and the corresponding attribute description based on the training set. This approach enables fine-grained classification. Additionally, when encountering unknown classes, F and M can be used to predict attribute labels for samples, providing a basis for the subsequent analysis of unknown traffic.

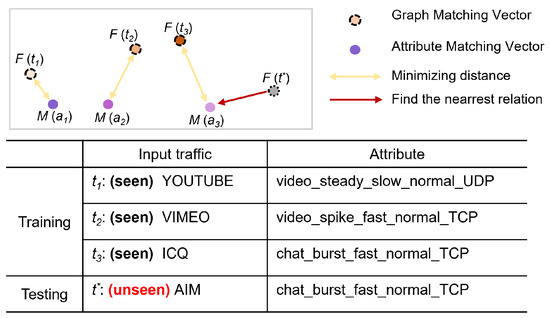

The inference phase includes two objectives: first, to determine whether the traffic is unknown, and second, to perform fine-grained classification by identifying the ground-truth label of known traffic or mapping unknown traffic to attribute labels. Figure 2 illustrates an example of AG-ZSL predicting unknown traffic, where , , represent YOUTUBE, VIMEO, and ICQ traffic, respectively, , , are their corresponding attribute sets, and represents unknown traffic. The models F and M have been trained, and the attribute representations , and are saved. Using KNN, the attribute representation that is closest to can be found, with the corresponding attribute description serving as the attribute label for the unknown class.

Figure 2.

The training and testing objectives of AG-ZSL.
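The lookup step in this example amounts to a nearest-neighbor search over the saved attribute representations. A minimal sketch follows; the function name `nearest_attributes`, the two-dimensional vectors, and the bank keys are all hypothetical placeholders rather than values from the paper.

```python
import math


def nearest_attributes(match_vector, attribute_bank, k=1):
    """Return the k attribute descriptions whose saved embeddings are closest
    (in Euclidean distance) to the given traffic embedding."""
    ranked = sorted(
        attribute_bank.items(),
        key=lambda item: math.dist(match_vector, item[1]),
    )
    return [name for name, _ in ranked[:k]]


# Hypothetical saved attribute embeddings for three known classes.
bank = {
    "attributes_of_youtube": [0.9, 0.1],
    "attributes_of_vimeo": [0.8, 0.3],
    "attributes_of_icq": [-0.5, 0.7],
}

# Hypothetical embedding of an unknown flow.
predicted = nearest_attributes([0.85, 0.15], bank, k=1)
```

With `k=1` the unknown flow simply inherits the attribute description of its closest saved embedding; larger `k` returns a ranked shortlist that could be inspected manually.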

4. The Proposed Framework

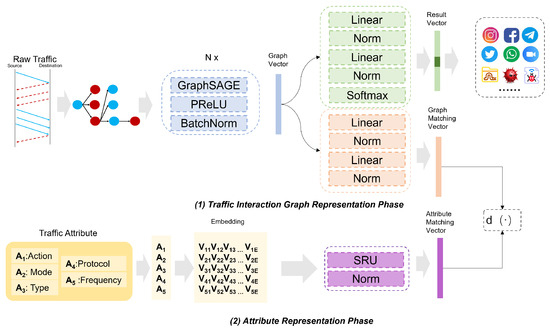

The AG-ZSL architecture is illustrated in Figure 3 and Figure 4. The framework primarily consists of GNN and SRU, and it includes two stages: the model training phase and the zero-shot prediction phase. We train the framework under a multi-task method, with one task focusing on classifying the ground-truth labels of traffic, and the other task aiming to minimize the distance between traffic behavior representations and attribute representations. In the inference phase, AG-ZSL predicts either the ground-truth label or the attribute label for new traffic.

Figure 3.

AG-ZSL training phase.

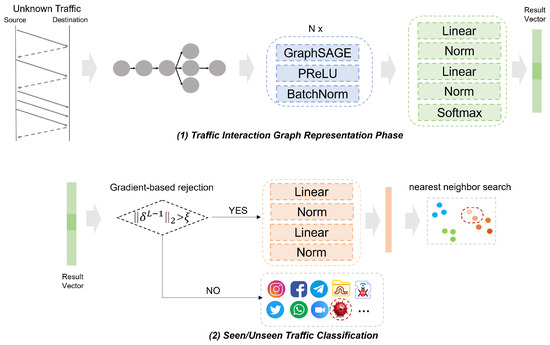

Figure 4.

AG-ZSL inference phase.

4.1. Model Training

The objective of the training phase is to generate two types of vectors: the result vector, which can be utilized for fine-grained classification based on traffic behavior, and the graph matching vector, which is designed to align with attribute representations. These two representations are based on the graph vector and are formed by different linear layers. As illustrated in Figure 3, the training phase is divided into two parts: traffic interaction graph representation and attribute representation.

4.1.1. Traffic Behavior Representation

AG-ZSL employs GraphSAGE for the fine-grained representation of network traffic. GraphSAGE is an inductive learning model based on graph-structured data, and its core idea is to generate representations for target nodes by aggregating the features of neighboring nodes [33]. Aggregation functions are used to combine the features of these neighboring nodes with the features of target nodes to generate new feature representations. Based on this method, AG-ZSL can better learn the representation of burst traffic from adjacent neighbors. When it comes to training, the first step is to construct a burst-based traffic interaction graph from the raw traffic to serve as the input to the model. The packets are divided into different flows based on the five-tuple (source IP, destination IP, source port, destination port, protocol). Each flow is then transformed into a burst-based traffic interaction graph using the method mentioned in Section 3.1, denoted as , where represents the process of generating the traffic interaction graph. This graph is then fed into a multi-layer GraphSAGE model. At each layer, GraphSAGE aggregates information from the current node and its neighbors to generate new node embeddings.
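The flow-splitting step can be illustrated with a short sketch. The dictionary field names and the function names are hypothetical, and the key is normalized so that both directions of a conversation fall into the same flow; that normalization is an assumption of this sketch, made because bursts are defined over packets in both directions.

```python
from collections import defaultdict


def flow_key(packet):
    """Direction-agnostic five-tuple key (normalization is an assumption)."""
    endpoint_a = (packet["src_ip"], packet["src_port"])
    endpoint_b = (packet["dst_ip"], packet["dst_port"])
    first, second = sorted((endpoint_a, endpoint_b))
    return (first, second, packet["proto"])


def group_flows(packets):
    """Split a packet list into flows, keeping arrival order inside each flow."""
    flows = defaultdict(list)
    for packet in packets:
        flows[flow_key(packet)].append(packet)
    return dict(flows)


# Hypothetical packets: a request, its reply, and an unrelated UDP packet.
packets = [
    {"src_ip": "10.0.0.1", "src_port": 5000, "dst_ip": "10.0.0.2", "dst_port": 443, "proto": "TCP", "length": 120},
    {"src_ip": "10.0.0.2", "src_port": 443, "dst_ip": "10.0.0.1", "dst_port": 5000, "proto": "TCP", "length": 1500},
    {"src_ip": "10.0.0.1", "src_port": 6000, "dst_ip": "10.0.0.3", "dst_port": 53, "proto": "UDP", "length": 80},
]
flows = group_flows(packets)
```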

Following each GraphSAGE layer, the PReLU activation function is applied to introduce non-linearity into the network. The advantage of using PReLU is that when the output of GraphSAGE is negative, the activation function’s output is not always zero, which can help alleviate the vanishing gradient problem [34]. In addition, batch normalization is used to ensure that the input distribution remains consistent, thereby stabilizing the learning process. The readout function is employed to aggregate the features of all nodes, producing a global graph embedding vector.
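To make the layer computation concrete, the sketch below implements one mean-aggregator GraphSAGE layer followed by PReLU, plus a readout, in plain Python. It is a simplified illustration under several assumptions: the weights are passed in by hand instead of being learned, the PReLU slope is fixed, batch normalization is omitted, and a mean readout is used because the text does not name the readout function.

```python
def prelu(x, alpha=0.25):
    """PReLU: identity for non-negative inputs, slope alpha otherwise."""
    return x if x >= 0 else alpha * x


def sage_mean_layer(features, neighbors, w_self, w_neigh):
    """One GraphSAGE layer with a mean aggregator.

    features:  list of node feature vectors
    neighbors: dict mapping a node index to the indices it aggregates from
    w_self, w_neigh: weight matrices (rows = output dims), equivalent to one
                     matrix applied to concat(own features, neighbor mean)
    """
    dim = len(features[0])
    output = []
    for node, own in enumerate(features):
        nbrs = neighbors.get(node, [])
        if nbrs:
            mean = [sum(features[u][d] for u in nbrs) / len(nbrs) for d in range(dim)]
        else:
            mean = [0.0] * dim  # isolated node: no neighbor signal
        row = []
        for ws, wn in zip(w_self, w_neigh):
            z = sum(a * b for a, b in zip(ws, own)) + sum(a * b for a, b in zip(wn, mean))
            row.append(prelu(z))
        output.append(row)
    return output


def mean_readout(node_embeddings):
    """Average all node embeddings into one graph-level vector."""
    count = len(node_embeddings)
    dim = len(node_embeddings[0])
    return [sum(h[d] for h in node_embeddings) / count for d in range(dim)]


# Hypothetical three-node graph with scalar features and unit weights.
hidden = sage_mean_layer(
    features=[[1.0], [3.0], [-4.0]],
    neighbors={1: [0], 2: [0, 1]},
    w_self=[[1.0]],
    w_neigh=[[1.0]],
)
graph_vector = mean_readout(hidden)
```

The third node ends up with a negative pre-activation, and PReLU scales it instead of zeroing it, which is the property the text credits with easing vanishing gradients.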

The graph embedding vector is then fed into a network , which consists of linear layers and normalization layers, to generate the result vector. This result vector is subsequently passed through the softmax function to predict the ground-truth label. In parallel, we design an additional linear network , which generates a graph-matching vector. This vector will be utilized in the subsequent attribute-matching phase.

4.1.2. Attribute Representation

Each traffic flow, in addition to the ground-truth label, also has an attribute label, which is an attribute description composed of five attribute values. First, all the attribute values that appear during the training phase are collected and a dictionary is constructed based on these values. Then, the attribute labels are encoded into vectors using the dictionary. These vectors serve as the input to the SRU, which outputs the attribute matching vector.
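The dictionary construction and encoding can be sketched as follows. The function names and the example attribute labels are hypothetical (only values such as steady, spike, fast, and slow are taken from the text), and the resulting index sequences would still need an embedding layer before reaching the SRU.

```python
def build_vocabulary(attribute_labels):
    """Assign an integer index to every attribute value seen in training,
    in order of first appearance."""
    vocabulary = {}
    for label in attribute_labels:
        for value in label:
            vocabulary.setdefault(value, len(vocabulary))
    return vocabulary


def encode_label(label, vocabulary):
    """Turn one attribute label (a sequence of values) into index form."""
    return [vocabulary[value] for value in label]


# Hypothetical five-value attribute labels for two training classes.
training_labels = [
    ("streaming", "steady", "normal", "TCP", "fast"),
    ("scanning", "spike", "anomalous", "TCP", "slow"),
]
vocabulary = build_vocabulary(training_labels)
encoded = [encode_label(label, vocabulary) for label in training_labels]
```

Because the dictionary is built from training data only, an unseen class can be described at inference time as long as each of its attribute values already appears in some known class.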

The training phase consists of two objectives: the first is to maximize the classification accuracy based on the result vector using cross-entropy loss, and the second is to minimize the distance between the input traffic and its attribute description while maximizing the distance between the input traffic and other attribute descriptions.

where represents the cross-entropy loss, represents the distance loss between the traffic representation and its attribute representation, i and j represent different sample indices, denotes the prediction vector based on the graph vector, P stands for the probability function, represents the model parameters, indicates the margin for distance, denotes the loss weighting factor, and represents the function that generates the graph vector.
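One way to combine the two objectives is sketched below. It is an assumed form, not the paper's exact loss: the distance term uses a common hinge construction (pull the matching pair together, push non-matching pairs at least a margin apart), and the default `margin` and `weight` values, like the function names, are hypothetical.

```python
import math


def cross_entropy(probabilities, label):
    """Negative log-likelihood of the true class."""
    return -math.log(probabilities[label])


def margin_distance_loss(graph_vector, own_attribute, other_attributes, margin):
    """Distance to the matching attribute embedding, plus a hinge penalty for
    every non-matching embedding that lies closer than the margin."""
    pull = math.dist(graph_vector, own_attribute)
    push = sum(
        max(0.0, margin - math.dist(graph_vector, other))
        for other in other_attributes
    )
    return pull + push


def total_loss(probabilities, label, graph_vector, own_attribute,
               other_attributes, margin=1.0, weight=0.5):
    """Classification loss plus weighted distance loss (assumed combination)."""
    distance = margin_distance_loss(graph_vector, own_attribute, other_attributes, margin)
    return cross_entropy(probabilities, label) + weight * distance


# Hypothetical sample: uncertain classifier output, distant matching attribute.
loss = total_loss(
    probabilities=[0.5, 0.5],
    label=0,
    graph_vector=[0.0, 0.0],
    own_attribute=[3.0, 4.0],
    other_attributes=[[0.0, 0.5]],
)
```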

4.2. Zero-Shot Prediction

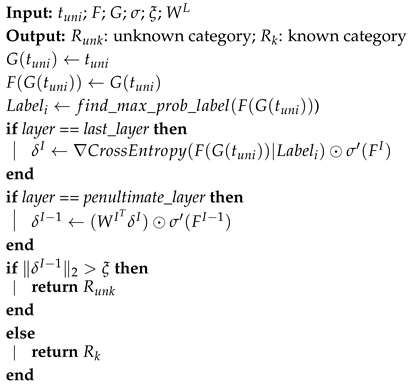

As illustrated in Figure 4, the zero-shot prediction phase begins by formalizing the test data as , which serves as the input to the model. This prediction phase consists of two parts. First, the framework determines whether the traffic is unknown. AG-ZSL employs a gradient rejection strategy to identify whether the traffic belongs to an unknown class, as outlined in Algorithm 1. This strategy leverages backpropagation gradients, using “shadow training” during inference to compute the gradient of the current classification result with respect to the penultimate layer’s weights. This reflects the model’s sensitivity near the decision boundary. A large gradient corresponds to input data near the decision boundary, indicating a possible unknown category. Following [9], the gradient rejection computation is given as follows:

where and represent the gradients of the last layer and the penultimate layer of neural network z, represents the partial derivative, and are the derivatives of the activation function with respect to the output of layer I and layer , denotes the threshold for determining whether the traffic is unseen, and is the weight between layer and layer I. If > , the traffic is classified as an unknown class. In this case, is applied to represent , generating the graph matching vector. KNN is then performed on this vector to find the closest attribute representation vector . We then infer that the attribute label of is .
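The intuition behind the rejection test can be shown with a deliberately simplified stand-in. The sketch below is not Algorithm 1: it looks only at the last linear softmax layer, uses the predicted class as a pseudo-label for the "shadow" backward pass, and compares the raw gradient norm against a hypothetical threshold whose scale is an assumption. For such a layer the weight gradient of the cross-entropy loss is the outer product of (probabilities minus one-hot pseudo-label) and the layer input, so its Frobenius norm factorizes into the product of the two vector norms.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def shadow_gradient_norm(hidden, logits):
    """Norm of the last-layer weight gradient when the predicted class is
    used as the label. Returns (norm, predicted_class)."""
    probabilities = softmax(logits)
    predicted = max(range(len(probabilities)), key=probabilities.__getitem__)
    delta = [p - (1.0 if k == predicted else 0.0) for k, p in enumerate(probabilities)]
    delta_norm = math.sqrt(sum(d * d for d in delta))
    hidden_norm = math.sqrt(sum(h * h for h in hidden))
    return delta_norm * hidden_norm, predicted


def is_unknown(hidden, logits, threshold):
    """Flag the sample as unknown when the gradient norm exceeds the threshold."""
    norm, predicted = shadow_gradient_norm(hidden, logits)
    return norm > threshold, predicted


# Hypothetical inputs: a confident prediction and an ambiguous one.
confident = is_unknown([1.0, 0.0], [10.0, 0.0, 0.0], threshold=0.1)
ambiguous = is_unknown([1.0, 0.0], [1.0, 0.9, 0.8], threshold=0.1)
```

A sample flagged as unknown would then skip the ground-truth classifier and go to the attribute-matching stage, while the others keep their predicted class.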

Algorithm 1: Gradient Rejection Strategy

5. Experiment

In this section, we compare AG-ZSL with existing methods to evaluate the performance of the proposed framework in both fine-grained classification and unknown class prediction.

5.1. Dataset

We evaluate the framework on two public datasets: ISCX-VPN [35] and ToN-IoT [36]. ISCX-VPN includes popular VPN protocols such as OpenVPN and OpenConnect. Considering the encapsulation and service types of the traffic, this dataset contains traffic from 12 different applications. ToN-IoT is collected from a realistic, large-scale network designed at the Cyber Range and IoT Labs of SEIT and UNSW, which includes nine types of malicious traffic. Unlike other studies, in addition to the ground-truth labels, we further mark each traffic with an attribute label. The ground-truth labels and attribute labels for these two datasets are shown in Table 2.

Since the ISCX-VPN dataset is imbalanced, we sample 5000 packets for each application, resulting in a total of 60,471 packets across 4753 flows. The sampled dataset of ISCX-VPN is described as . Similarly, for the ToN-IoT dataset, we sample 4000 packets for each type of malicious traffic, resulting in a total of 36,249 packets across 3326 flows, which is described as . Details of these datasets are provided in Table 3.

Table 3.

Details of the datasets used in the experiments.

To demonstrate the robustness of the proposed method, the experiments include fine-grained classification and zero-shot prediction, which are described as and , respectively. The attribute labels included in and can be found in Appendix A.

5.2. Implementation

5.2.1. Hyperparameter Settings

A key step in model training is determining the hyperparameters to balance model performance between underfitting and overfitting. To efficiently find suitable hyperparameters, the proposed framework utilizes interval search to identify the optimal combination. The implementation details of AG-ZSL are summarized in Table 4.

Table 4.

Hyperparameters for AG-ZSL.

5.2.2. Experiment Setting

All experiments are conducted on an Ubuntu system using PyTorch 1.8.0 with an NVIDIA RTX 3090 (NVIDIA, Santa Clara, CA, USA). For both and , 70% of the data is used for training, 10% for validation, and 20% for testing. All experiments are performed in a controlled environment, and a ten-fold cross-validation method is used to evaluate the framework’s performance.

We evaluate and compare the performance of the methods using four typical metrics, including accuracy (ACC), precision (PRE), recall (REC), and F1 score, and the calculation methods of these metrics are as follows:
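These metrics can be computed from lists of true and predicted labels as in the sketch below. Macro averaging over classes is an assumption here, as is the function name; precision, recall, and F1 follow their usual per-class definitions in terms of true positives, false positives, and false negatives.

```python
def classification_metrics(y_true, y_pred):
    """Return accuracy and macro-averaged precision, recall, and F1."""
    classes = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)

    precisions, recalls, f1_scores = [], [], []
    for c in classes:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1_scores.append(f1)

    count = len(classes)
    return {
        "ACC": accuracy,
        "PRE": sum(precisions) / count,
        "REC": sum(recalls) / count,
        "F1": sum(f1_scores) / count,
    }


# Hypothetical three-class example.
scores = classification_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0])
```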

5.2.3. Attribute Label Threshold Setting

Since the attribute values for and need to be calculated based on statistical analysis, it is necessary to obtain the cumulative distribution function (CDF) of burst ratio, coefficient of variation, and packet rate in and .

Based on the statistical results, we further determine the thresholds corresponding to the attribute values of and . Figure 5a shows the distribution of burst proportion in normal, malicious, and all traffic: 60% of normal traffic has a burst proportion of 61%, while only 30% of malicious traffic meets this condition. Figure 5b shows the distribution of the coefficient of variation in normal, malicious, and all traffic: 30% of malicious traffic has a CV between 2 and 9, while over 90% of normal flows have a CV greater than 9. Figure 5c shows the distribution of packet rates in normal, malicious, and all traffic: over 95% of normal flows have a packet rate of 3, while only 30% of malicious flows have a packet rate of 3.

Figure 5.

Data statistics: CDF of burst proportion, CV, and packet rate.

Through the statistical analysis of normal and malicious traffic, we define the values with significant differences between normal and malicious flows as threshold attribute values. The specific threshold values are shown in Table 4.

5.3. Experimental Results

We evaluate the performance of fine-grained classification and zero-shot prediction for AG-ZSL, using FS-Net [37] and TF [32] as the baseline comparison methods.

5.3.1. Fine-Grained Classification Experiments

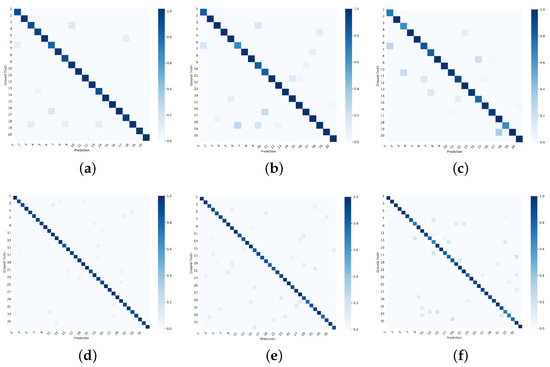

The classification results of the ground-truth label for and are shown in Table 5, while the attribute label results are shown in Figure 6. As shown in Table 5, our method significantly outperforms the baseline methods FS-Net and TF across all metrics. In , the proposed framework achieves an accuracy of 0.9571 and a recall of 0.9613, while FS-Net and TF report accuracies of 0.9133 and 0.9171, and recalls of 0.9254 and 0.9257, respectively. AG-ZSL also demonstrates strong performance in terms of precision and F1 score, highlighting its clear advantage in fine-grained identification of encapsulated traffic. Similarly, in , the proposed framework achieves an accuracy of 0.9659 and a recall of 0.9659, compared to accuracies of 0.9146 and 0.9213 for FS-Net and TF, respectively. This indicates that our method consistently delivers superior classification performance across various scenarios.

Table 5.

Comparison results on and .

Figure 6.

Confusion matrix on and . (a) Confusion matrix of accuracy for AG-ZSL on . (b) Confusion matrix of accuracy for TF on . (c) Confusion matrix of accuracy for FS-Net on . (d) Confusion matrix of accuracy for AG-ZSL on . (e) Confusion matrix of accuracy for TF on . (f) Confusion matrix of accuracy for FS-Net on .

Figure 6 shows the confusion matrix of accuracy for AG-ZSL, TF, and FS-Net on and . The x-axis represents the categories predicted by the model, while the y-axis represents the attribute labels. Given the large number of labels involved in the experiments, the categories are represented by numerical labels, and the corresponding attribute labels can be found in Appendix A. Each diagonal entry is the ratio of correctly predicted samples to the actual number of samples in that category, with darker colors indicating better performance.
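As an illustration of how such a matrix can be computed, the sketch below builds a row-normalized confusion matrix with NumPy, with rows as true categories and columns as predicted categories. The function name `normalized_confusion_matrix` and the toy labels are assumptions made for this example; the plotting code used for Figure 6 is not described in the paper.

```python
import numpy as np

def normalized_confusion_matrix(y_true, y_pred, num_classes):
    """Row-normalized confusion matrix: entry (i, j) is the share of
    samples of true class i that were predicted as class j."""
    counts = np.zeros((num_classes, num_classes), dtype=float)
    for t, p in zip(y_true, y_pred):
        counts[t, p] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    # Leave rows of classes without samples at zero instead of dividing by 0.
    return np.divide(counts, row_sums,
                     out=np.zeros_like(counts), where=row_sums > 0)

# Toy labels for three numerical categories.
y_true = [0, 0, 0, 0, 1, 1, 2, 2, 2, 2]
y_pred = [0, 0, 0, 1, 1, 1, 2, 2, 0, 2]

cm = normalized_confusion_matrix(y_true, y_pred, num_classes=3)
per_class_ratio = np.diag(cm)  # the diagonal values shown in the heat map
print(cm)
print(per_class_ratio)
```

Under this normalization each row sums to 1 for any category that has samples, so off-diagonal mass in a row shows directly which categories a class is confused with.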

In these experiments, AG-ZSL exhibits high classification accuracy across most categories. In contrast, TF and FS-Net misclassify more samples in certain categories, particularly where classes are highly similar. Our method demonstrates a stronger ability to distinguish between traffic classes, especially under complex traffic patterns, further emphasizing its superior performance.

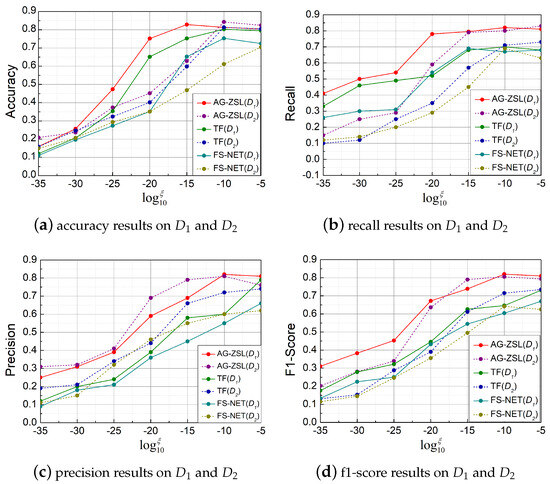

5.3.2. Zero-Shot Prediction Experiments

In , if the traffic to be classified belongs to a known category, the model infers the ground-truth label of the traffic. Otherwise, the model predicts the attribute label. In this experiment, 12 categories from and 20 categories from are sampled to evaluate the model’s performance. From , four categories are selected as unknown classes, while from , six categories are selected as unknown classes. The specific unknown categories are detailed in Appendix A.
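The known/unknown decision described above can be sketched as a two-stage procedure. The code below is a simplified stand-in, not the method of the paper: it replaces the gradient-based rejection strategy with a plain distance threshold, and the embeddings, class names (`class_A`, `class_B`), the threshold value, and the function `two_stage_predict` are all hypothetical. Only the two attribute labels are taken from Appendix A.

```python
import numpy as np

def two_stage_predict(behavior_emb, known_protos, known_labels,
                      attr_embs, attr_labels, threshold):
    """Stage 1: accept or reject the sample as a known class.
    Stage 2: for rejected samples, return the nearest attribute label."""
    behavior_emb = np.asarray(behavior_emb, dtype=float)

    # Stage 1 (assumed rule): score = negative Euclidean distance to the
    # closest known-class prototype; accept when the score is high enough.
    d_known = np.linalg.norm(np.asarray(known_protos, dtype=float)
                             - behavior_emb, axis=1)
    best = int(np.argmin(d_known))
    if -d_known[best] >= threshold:
        return "known", known_labels[best]

    # Stage 2: 1-nearest-neighbor search over attribute embeddings.
    d_attr = np.linalg.norm(np.asarray(attr_embs, dtype=float)
                            - behavior_emb, axis=1)
    return "unknown", attr_labels[int(np.argmin(d_attr))]

# Toy 2-D embeddings, for illustration only.
known_protos = [[0.0, 0.0], [4.0, 0.0]]
known_labels = ["class_A", "class_B"]
attr_embs = [[0.0, 5.0], [5.0, 5.0]]
attr_labels = ["polling_burst_fast_malware_TCP",
               "control_spike_fast_malware_UDP"]

result_known = two_stage_predict([0.2, 0.1], known_protos, known_labels,
                                 attr_embs, attr_labels, threshold=-1.0)
result_unknown = two_stage_predict([4.5, 4.5], known_protos, known_labels,
                                   attr_embs, attr_labels, threshold=-1.0)
print(result_known)
print(result_unknown)
```

In this sketch, lowering the threshold accepts more samples as known classes, while raising it routes more samples to the attribute-label stage.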

Figure 7 illustrates the results of three methods on unseen classes. By adjusting , we can evaluate the model’s performance under different parameters. Figure 7a presents the accuracy in the and . Our method significantly outperforms the baseline methods, especially when is low (between −35 and −20). In , AG-ZSL shows a clear advantage, reaching around 0.84 when equals −15, while the accuracy of TF and FS-Net remains below 0.75. Figure 7c shows precision, where our method maintains superior performance, especially in the low range (−35 to −25).

Figure 7.

Comparison of the results of different methods under various .

Table 6 shows the performance of three methods in zero-shot prediction on . The last four classes represent the unknown classes. It can be seen that AG-ZSL achieves the best results in the prediction of all 12 classes. When only looking at the prediction results of the unknown classes, our method has a maximum improvement of 12% in accuracy.

Table 6.

Result of zero-shot prediction on .

5.3.3. Evaluation of Complexity

In this section, we will analyze the complexity of AG-ZSL, including the trainable parameters and time cost. As shown in Table 7, we present the model size, training time, and inference time. The training time refers to the phase where the model minimizes the Euclidean distance between the traffic behavior representation and the attribute representation. The inference phase includes generating the result vector, executing the gradient rejection strategy, and performing the nearest neighbor search. We define the time consumption of the proposed method as 1, serving as the baseline for the time cost of other methods.

Table 7.

Complexity comparison.

Table 7 shows that our model occupies more space because our approach integrates multiple models, leading to more parameters. In the training phase, AG-ZSL takes significantly longer than the other two methods, as it must generate two representations for each sample and optimize the distance between them. However, in both fine-grained classification and zero-shot prediction tasks, our method outperforms the others, with a maximum recall improvement of 10%.

6. Conclusions

In this paper, we propose AG-ZSL, a zero-shot learning framework designed for the classification of both known and unknown traffic. This framework effectively addresses the challenge of identifying unseen traffic for edge nodes by leveraging both graph and attribute representations. The combination of traffic interaction representation and attribute representation enables the approach both to classify known traffic and to infer the attributes of unknown traffic. To further enhance the generalization of the model, we introduce a gradient-based rejection strategy and a KNN algorithm.

To further validate the effectiveness of AG-ZSL, we plan to expand our experimental scope to include a broader range of datasets, encompassing more diverse types of network traffic. Moreover, by collecting datasets that capture new traffic patterns and protocols, we can better evaluate the model’s generalization capabilities. In addition, we will focus on the impact of data drift on the model.

Author Contributions

Conceptualization, Z.L. and Z.C.; methodology, Z.L. and Z.C.; software, Z.L. and Z.C.; validation, M.H.; formal analysis, L.S.; investigation, all authors; resources, all authors; data curation, L.S.; writing—original draft preparation, Z.L. and Z.C.; writing—review and editing, Z.L. and M.H.; visualization, L.S.; supervision, L.S.; project administration, Z.L.; funding acquisition, Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (62227805, 62071056).

Data Availability Statement

The source code of this paper can be obtained by sending an email to luzikui@bupt.edu.cn or chang030115@126.com.

Conflicts of Interest

The funders had no role in the design of the study.

Appendix A. Attribute Label

The attribute labels in and are shown in Table A1, where the bold labels represent the unknown categories in (which are known in ).

Table A1.

The attribute labels in and .

| Scenario | | | | | |
|---|---|---|---|---|---|
| D1 | | D1 | | | |
| No. | Attribute Label | No. | Attribute Label | No. | Attribute Label |
| 1 | chat_spike_fast_normal_TCP | 1 | injection_steady_fast_malware_DNS | 21 | polling_steady_slow_malware_DNS |
| 2 | audio_steady_fast_normal_TCP | 2 | injection_steady_slow_malware_HTTP | 22 | injection_spike_fast_malware_TCP |
| 3 | file_burst_fast_normal_UDP | 3 | control_burst_fast_malware_TCP | 23 | polling_burst_slow_malware_TCP |
| 4 | audio_steady_slow_normal_SSH | 4 | injection_steady_slow_malware_UDP | 24 | control_spike_fast_malware_UDP |
| 5 | audio_steady_slow_normal_TCP | 5 | control_steady_slow_malware_UDP | 25 | polling_spike_slow_malware_HTTP |
| 6 | audio_burst_slow_normal_TCP | 6 | polling_burst_fast_malware_TCP | 26 | polling_spike_slow_malware_SSH |
| 7 | chat_steady_slow_normal_TCP | 7 | polling_spike_fast_malware_HTTP | 27 | injection_burst_slow_malware_TCP |
| 8 | audio_spike_fast_normal_UDP | 8 | injection_burst_fast_malware_TCP | 28 | polling_steady_fast_malware_SSH |
| 9 | file_spike_fast_normal_TCP | 9 | polling_burst_fast_malware_HTTP | 29 | polling_burst_fast_malware_UDP |
| 10 | email_spike_fast_normal_UDP | 10 | injection_steady_fast_malware_TCP | 30 | injection_steady_slow_malware_FTP |
| 11 | chat_spike_fast_normal_HTTP | 11 | polling_burst_slow_malware_UDP | 31 | control_burst_slow_malware_HTTP |
| 12 | video_spike_slow_normal_HTTP | 12 | polling_steady_slow_malware_FTP | 32 | polling_burst_slow_malware_HTTP |
| 13 | audio_steady_slow_normal_UDP | 13 | injection_steady_fast_malware_HTTP | 33 | polling_burst_slow_malware_IMAP |
| 14 | email_burst_fast_normal_UDP | 14 | polling_burst_fast_malware_POP3 | 34 | polling_steady_fast_malware_HTTP |
| 15 | audio_burst_fast_normal_UDP | 15 | control_spike_slow_malware_UDP | 35 | injection_burst_slow_malware_UDP |
| 16 | file_burst_fast_normal_UDP | 16 | control_spike_fast_malware_TCP | 36 | polling_spike_fast_malware_TCP |
| 17 | chat_spike_slow_normal_UDP | 17 | control_spike_slow_malware_HTTP | | |
| 18 | audio_burst_fast_normal_TCP | 18 | polling_steady_slow_malware_UDP | | |
| 19 | video_burst_slow_normal_UDP | 19 | polling_spike_fast_malware_POP3 | | |
| 20 | video_spike_slow_normal_TCP | 20 | polling_steady_slow_malware_NTP | | |
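Each attribute label in Table A1 is a sequence of five underscore-separated fields. The sketch below shows one way to parse such labels and count the attributes two labels share. The field names (`activity`, `burst_pattern`, `rate`, `nature`, `protocol`) are assumptions inferred from the label values, not names defined in the paper, and the helpers `parse_attribute_label` and `shared_attributes` are hypothetical.

```python
from typing import NamedTuple

class AttributeLabel(NamedTuple):
    # Field names are assumed for illustration; the paper does not name them here.
    activity: str       # e.g. chat, audio, polling, injection
    burst_pattern: str  # e.g. spike, steady, burst
    rate: str           # e.g. fast, slow
    nature: str         # e.g. normal, malware
    protocol: str       # e.g. TCP, UDP, HTTP

def parse_attribute_label(label: str) -> AttributeLabel:
    """Split a label such as 'chat_spike_fast_normal_TCP' into its five fields."""
    parts = label.split("_")
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {label!r}")
    return AttributeLabel(*parts)

def shared_attributes(a: str, b: str) -> int:
    """Count the fields on which two attribute labels agree."""
    return sum(x == y for x, y in
               zip(parse_attribute_label(a), parse_attribute_label(b)))

parsed = parse_attribute_label("chat_spike_fast_normal_TCP")
overlap = shared_attributes("polling_burst_fast_malware_TCP",
                            "polling_burst_slow_malware_TCP")
print(parsed)
print(overlap)
```

Labels that agree on most fields describe similar traffic, which gives an intuition for why a sample from an unseen category can still be placed near a meaningful attribute description.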

References

1. Xu, S.-J.; Geng, G.-G.; Jin, X.-B.; Liu, D.-J.; Weng, J. Seeing Traffic Paths: Encrypted Traffic Classification With Path Signature Features. IEEE Trans. Inf. Forensics Secur. 2022, 17, 2166–2181.

2. He, M.; Huang, Y.; Wang, X.; Wei, P.; Wang, X. A Lightweight and Efficient IoT Intrusion Detection Method Based on Feature Grouping. IEEE Internet Things J. 2024, 11, 2935–2949.

3. Wang, X.; Lu, Z.; Wang, X.; He, M.; Wang, X. GETRF: A General Framework for Encrypted Traffic Identification With Robust Representation Based on Datagram Structure. IEEE Trans. Cogn. Commun. Netw. 2024, 10, 2045–2060.

4. Yao, H.; Liu, C.; Zhang, P.; Wu, S.; Jiang, C.; Yu, S. Identification of Encrypted Traffic Through Attention Mechanism Based Long Short Term Memory. IEEE Trans. Big Data 2022, 8, 241–252.

5. Han, D.; Wang, Z.; Chen, W.; Wang, K.; Yu, R.; Wang, S.; Zhang, H.; Wang, Z.; Jin, M.; Yang, J. Anomaly Detection in the Open World: Normality Shift Detection, Explanation, and Adaptation. In Proceedings of the 30th Annual Network and Distributed System Security Symposium (NDSS), San Diego, CA, USA, 27 February–3 March 2023.

6. Fu, C.; Li, Q.; Shen, M.; Xu, K. Realtime Robust Malicious Traffic Detection via Frequency Domain Analysis. In Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, Virtual, 15–19 November 2021; ACM: New York, NY, USA, 2021; pp. 3431–3446.

7. Bhat, S.; Lu, D.; Kwon, A.; Devadas, S. Var-CNN: A Data-Efficient Website Fingerprinting Attack Based on Deep Learning. Proc. Priv. Enhancing Technol. 2019, 2019, 292–310.

8. Hu, Y.; Cheng, G.; Chen, W.; Jiang, B. Attribute-Based Zero-Shot Learning for Encrypted Traffic Classification. IEEE Trans. Netw. Serv. Manag. 2022, 19, 4583–4599.

9. Yang, L.; Finamore, A.; Jun, F.; Rossi, D. Deep Learning and Zero-Day Traffic Classification: Lessons Learned From a Commercial-Grade Dataset. IEEE Trans. Netw. Serv. Manag. 2021, 18, 4103–4118.

10. Zhang, J.; Li, F.; Ye, F.; Wu, H. Autonomous Unknown-Application Filtering and Labeling for DL-based Traffic Classifier Update. In Proceedings of IEEE INFOCOM 2020, the IEEE Conference on Computer Communications, Virtual, 6–9 July 2020; pp. 397–405.

11. Chen, C.-Y.; Li, C.-T. ZS-BERT: Towards Zero-Shot Relation Extraction with Attribute Representation Learning. arXiv 2021, arXiv:2104.04697.

12. Lampert, C.H.; Nickisch, H.; Harmeling, S. Learning to detect unseen object classes by between-class attribute transfer. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 951–958.

13. Fu, C.; Li, Q.; Xu, K. Detecting Unknown Encrypted Malicious Traffic in Real Time via Flow Interaction Graph Analysis. arXiv 2023, arXiv:2301.13686.

14. Zhao, S.; Zhang, Y.; Sang, Y. Towards Unknown Traffic Identification via Embeddings and Deep Autoencoders. In Proceedings of the 2019 26th International Conference on Telecommunications (ICT), Hanoi, Vietnam, 8–10 April 2019; pp. 85–89.

15. Wang, H.; Wang, Y.; Guo, Y. Unknown network attack detection method based on reinforcement zero-shot learning. J. Phys. Conf. Ser. 2022, 2303, 012008.

16. Wu, B.; Gysel, P.; Divakaran, D.M.; Gurusamy, M. ZEST: Attention-based Zero-Shot Learning for Unseen IoT Device Classification. arXiv 2024, arXiv:2310.08036.

17. Lin, X.; Xiong, G.; Gou, G.; Li, Z.; Shi, J.; Yu, J. ET-BERT: A Contextualized Datagram Representation with Pre-training Transformers for Encrypted Traffic Classification. In Proceedings of the ACM Web Conference 2022 (WWW ’22), Lyon, France, 25–29 April 2022; ACM: New York, NY, USA, 2022; pp. 633–642.

18. Lei, T.; Zhang, Y.; Wang, S.I.; Dai, H.; Artzi, Y. Simple Recurrent Units for Highly Parallelizable Recurrence. arXiv 2018, arXiv:1709.02755.

19. Taylor, V.F.; Spolaor, R.; Conti, M.; Martinovic, I. Robust Smartphone App Identification via Encrypted Network Traffic Analysis. IEEE Trans. Inf. Forensics Secur. 2018, 13, 63–78.

20. Al-Naami, K.; Chandra, S.; Mustafa, A.; Khan, L.; Lin, Z.; Hamlen, K.; Thuraisingham, B. Adaptive Encrypted Traffic Fingerprinting with Bi-Directional Dependence. In Proceedings of the 32nd Annual Conference on Computer Security Applications (ACSAC ’16), Los Angeles, CA, USA, 5–8 December 2016; pp. 177–188.

21. Panchenko, A.; Lanze, F.; Pennekamp, J.; Engel, T.; Zinnen, A.; Henze, M.; Wehrle, K. Website Fingerprinting at Internet Scale. In Proceedings of the Network and Distributed System Security Symposium (NDSS), San Diego, CA, USA, 21–24 February 2016.

22. Wang, W.; Zhu, M.; Wang, J.; Zeng, X.; Yang, Z. End-to-End Encrypted Traffic Classification with One-Dimensional Convolution Neural Networks. In Proceedings of the 2017 IEEE International Conference on Intelligence and Security Informatics (ISI), Beijing, China, 22–24 July 2017; pp. 43–48.

23. Luo, Y.; He, M.; Wang, X.; Jin, L. Network Flow Detection of Semantic Relationship Between Flow and Byte. In Proceedings of the 2022 IEEE Eighth International Conference on Big Data Computing Service and Applications (BigDataService), Newark, CA, USA, 15–18 August 2022; pp. 179–180.

24. Huoh, T.-L.; Luo, Y.; Li, P.; Zhang, T. Flow-Based Encrypted Network Traffic Classification With Graph Neural Networks. IEEE Trans. Netw. Serv. Manag. 2023, 20, 1224–1237.

25. Guarino, I.; Aceto, G.; Ciuonzo, D.; Montieri, A.; Persico, V.; Pescapè, A. Contextual counters and multimodal Deep Learning for activity-level traffic classification of mobile communication apps during COVID-19 pandemic. Comput. Netw. 2022, 219, 109452.

26. He, H.Y.; Yang, Z.G.; Chen, X.N. PERT: Payload Encoding Representation from Transformer for Encrypted Traffic Classification. In Proceedings of the 2020 ITU Kaleidoscope: Industry-Driven Digital Transformation (ITU K), Online, 7–11 December 2020; pp. 1–8.

27. Huoh, T.-L.; Luo, Y.; Zhang, T. Encrypted Network Traffic Classification Using a Geometric Learning Model. In Proceedings of the 2021 IFIP/IEEE International Symposium on Integrated Network Management (IM), Bordeaux, France, 17–21 May 2021; pp. 376–383.

28. Shen, M.; Zhang, J.; Zhu, L.; Xu, K.; Du, X. Accurate Decentralized Application Identification via Encrypted Traffic Analysis Using Graph Neural Networks. IEEE Trans. Inf. Forensics Secur. 2021, 16, 2367–2380.

29. Yang, Y.; Lyu, R.; Gao, Z.; Rui, L.; Yan, Y. Semisupervised Graph Neural Networks for Traffic Classification in Edge Networks. Discret. Dyn. Nat. Soc. 2023, 2023, 2879563.

30. Pang, B.; Fu, Y.; Ren, S.; Wang, Y.; Liao, Q.; Jia, Y. CGNN: Traffic Classification with Graph Neural Network. arXiv 2021, arXiv:2110.09726.

31. Chen, L.; Chen, D.; Shang, Z.; Wu, B.; Zheng, C.; Wen, B.; Zhang, W. Multi-Scale Adaptive Graph Neural Network for Multivariate Time Series Forecasting. arXiv 2023, arXiv:2201.04828.

32. Sirinam, P.; Mathews, N.; Rahman, M.S.; Wright, M. Triplet Fingerprinting: More Practical and Portable Website Fingerprinting with N-shot Learning. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security (CCS ’19), London, UK, 11–15 November 2019; ACM: New York, NY, USA, 2019. 18p.

33. Hamilton, W.L.; Ying, R.; Leskovec, J. Inductive Representation Learning on Large Graphs. arXiv 2018, arXiv:1706.02216.

34. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. arXiv 2015, arXiv:1502.01852.

35. Habibi Lashkari, A.; Draper Gil, G.; Mamun, M.; Ghorbani, A. Characterization of Encrypted and VPN Traffic Using Time-Related Features. In Proceedings of the 2016 International Conference on Information Systems Security and Privacy (ICISSP), Rome, Italy, 19–21 February 2016; pp. 407–414.

36. Moustafa, N.; Ahmed, M.; Ahmed, S. Data Analytics-Enabled Intrusion Detection: Evaluations of ToN_IoT Linux Datasets. In Proceedings of the 2020 IEEE 19th International Conference on Trust, Security and Privacy in Computing and Communications (TrustCom), Guangzhou, China, 29 December 2020–1 January 2021; pp. 727–735.

37. Liu, C.; He, L.; Xiong, G.; Cao, Z.; Li, Z. FS-Net: A Flow Sequence Network For Encrypted Traffic Classification. In Proceedings of IEEE INFOCOM 2019, the IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 1171–1179.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).