ASFT-Transformer: A Fast and Accurate Framework for EEG-Based Pilot Fatigue Recognition

Abstract

1. Introduction

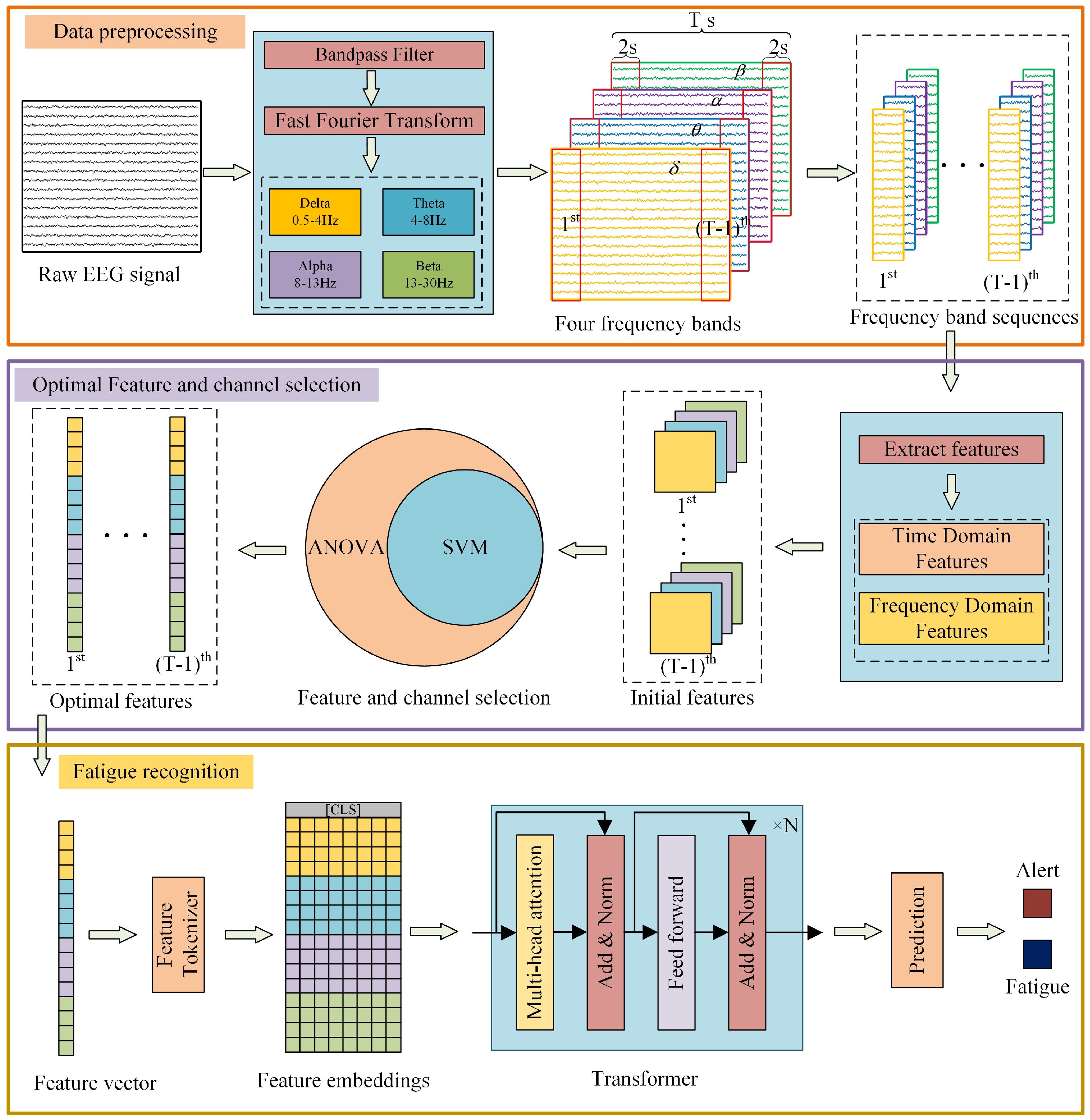

- (1) EEG feature extraction. The EEG signals were first decomposed into four frequency bands, and both time-domain and frequency-domain features were then extracted from each band. This yielded a multi-dimensional description of the raw signals, improving the interpretability of the features and preserving informational completeness for studying how EEG signals correlate with pilot fatigue.

- (2) Pivotal feature and channel selection. A channel and feature selection method based on one-way analysis of variance and a support vector machine (ANOVA-SVM) was proposed to select the channels and features most closely related to pilot fatigue, preventing the classifier from using irrelevant information that may lower accuracy. This step also reduced the data dimensionality, which significantly shortened model training time.

- (3) Classification recognition. The FT-Transformer (Feature Tokenizer + Transformer) was employed to identify pilot fatigue states. For model training, the pivotal features from each time window were aggregated into a feature vector, with each vector serving as an independent sample of the pilot state. These vectors were then fed into the classifier. Together, these steps enable fast detection of pilot fatigue.
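
The per-window feature computation in step (1) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the expansions of the Appendix A abbreviations (MEA as mean, ENE as energy, VAR as variance, RMS as root mean square, CF as centroid frequency, FV as frequency variance, MSF as mean square frequency), the single-number PSD summary (mean spectral power), the sampling rate, and the window length are all assumed, and the band decomposition itself is omitted.

```python
import cmath
import math


def time_domain_features(window):
    """Time-domain descriptors of one single-channel window (assumed definitions)."""
    n = len(window)
    mean = sum(window) / n
    energy = sum(v * v for v in window)
    variance = sum((v - mean) ** 2 for v in window) / n
    rms = math.sqrt(energy / n)
    return {"MEA": mean, "ENE": energy, "VAR": variance, "RMS": rms}


def power_spectrum(window, fs):
    """One-sided periodogram via a naive DFT (adequate for short windows)."""
    n = len(window)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(
            window[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
        )
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2 / n)
    return freqs, power


def frequency_domain_features(window, fs):
    """Spectral descriptors; assumes the window is not identically zero."""
    freqs, power = power_spectrum(window, fs)
    total = sum(power)
    cf = sum(f * p for f, p in zip(freqs, power)) / total       # centroid frequency
    msf = sum(f * f * p for f, p in zip(freqs, power)) / total  # mean square frequency
    fv = msf - cf ** 2                                          # frequency variance
    psd = total / len(power)                                    # mean spectral power
    return {"PSD": psd, "CF": cf, "FV": fv, "MSF": msf}


def window_feature_vector(window, fs):
    """Concatenate the time- and frequency-domain features of one window."""
    features = time_domain_features(window)
    features.update(frequency_domain_features(window, fs))
    return features


fs = 128  # hypothetical sampling rate in Hz
window = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs)]  # 1 s of a 10 Hz tone
features = window_feature_vector(window, fs)
```

For the synthetic 10 Hz tone, the centroid frequency lands at about 10 Hz and the frequency variance is close to zero, which is a quick sanity check before applying the same functions to band-filtered EEG windows.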

2. Methodology

2.1. Overall Framework

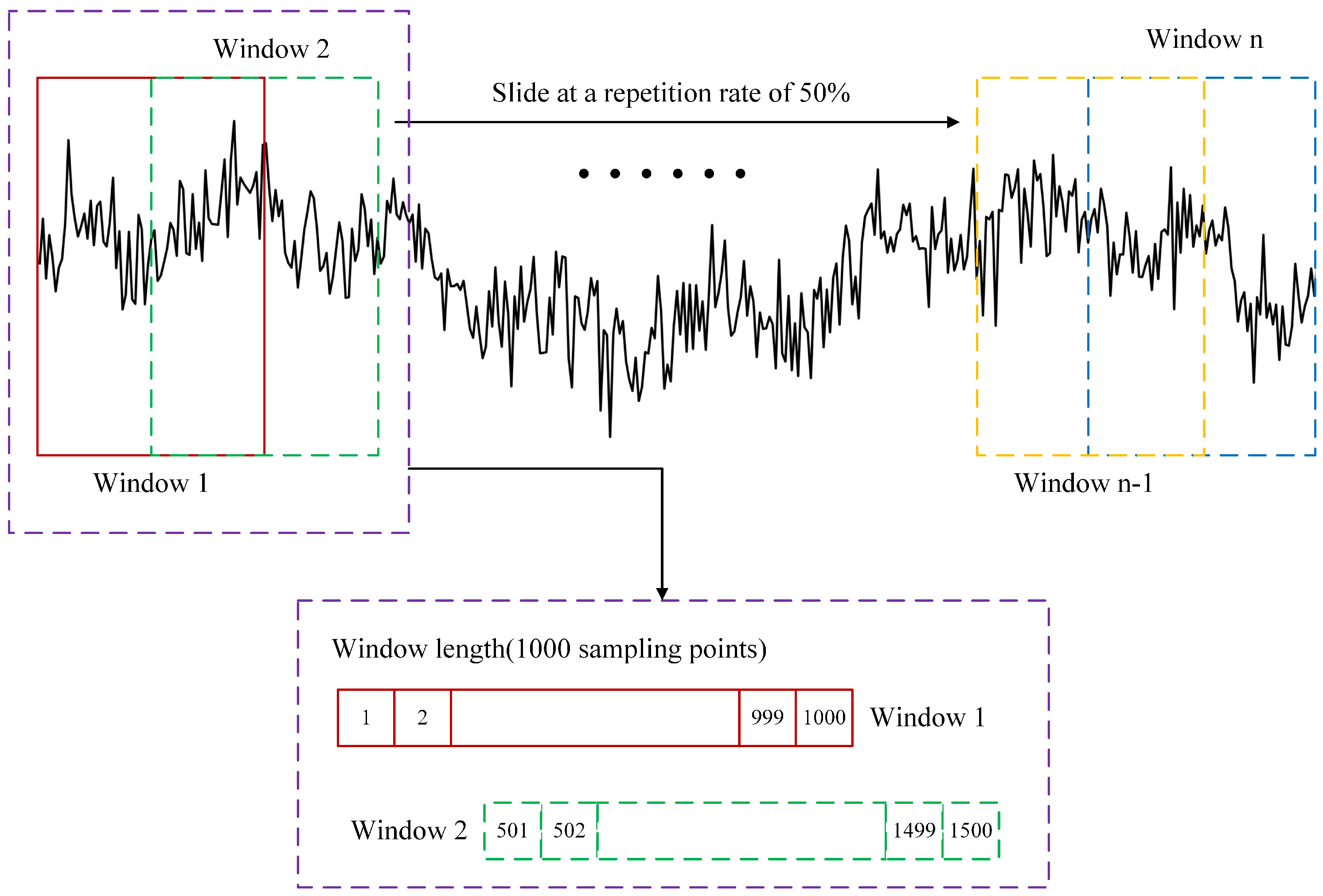

2.2. Data Preprocessing

2.3. Feature Extraction

2.3.1. Time-Domain Features

2.3.2. Frequency-Domain Features

2.4. Pivotal Feature Screening and Channel Selection

2.4.1. Feature Screening

2.4.2. Channel Selection

- (1) Individual SVM classifier training

- (2) Evaluating performance with AUC

- (3) Ranking and selecting pivotal channels
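
The three steps above can be sketched as follows. This is a simplified illustration, not the authors' code: it assumes each channel's SVM has already produced decision scores on held-out windows (the training itself is not shown), computes the AUC from those scores with the rank-based (Mann-Whitney) formulation, and keeps the `top_k` best channels. The function names, the label encoding, the toy scores, and the value of `top_k` are hypothetical.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a fatigue window outscores an alert window."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes are required to compute the AUC")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


def rank_channels(labels, scores_by_channel, top_k):
    """Score every channel by AUC and return the top_k channel names."""
    aucs = {ch: roc_auc(labels, s) for ch, s in scores_by_channel.items()}
    ranking = sorted(aucs, key=aucs.get, reverse=True)
    return ranking[:top_k], aucs


labels = [0, 0, 0, 1, 1, 1]  # assumed encoding: 0 = alert, 1 = fatigue
scores_by_channel = {        # made-up decision scores, one list per channel
    "O2": [-1.2, -0.4, 0.1, 0.3, 0.9, 1.5],
    "C3": [-0.5, 0.6, -0.1, 0.2, 0.8, -0.3],
    "F8": [0.4, -0.2, 0.3, 0.1, -0.6, 0.5],
}
selected, aucs = rank_channels(labels, scores_by_channel, top_k=2)
```

Ranking on a per-channel AUC keeps the selection independent of any single decision threshold, which is presumably why AUC rather than accuracy is used as the channel score.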

2.5. Fatigue Recognition Based on EEG Features

3. Results

3.1. Dataset Description

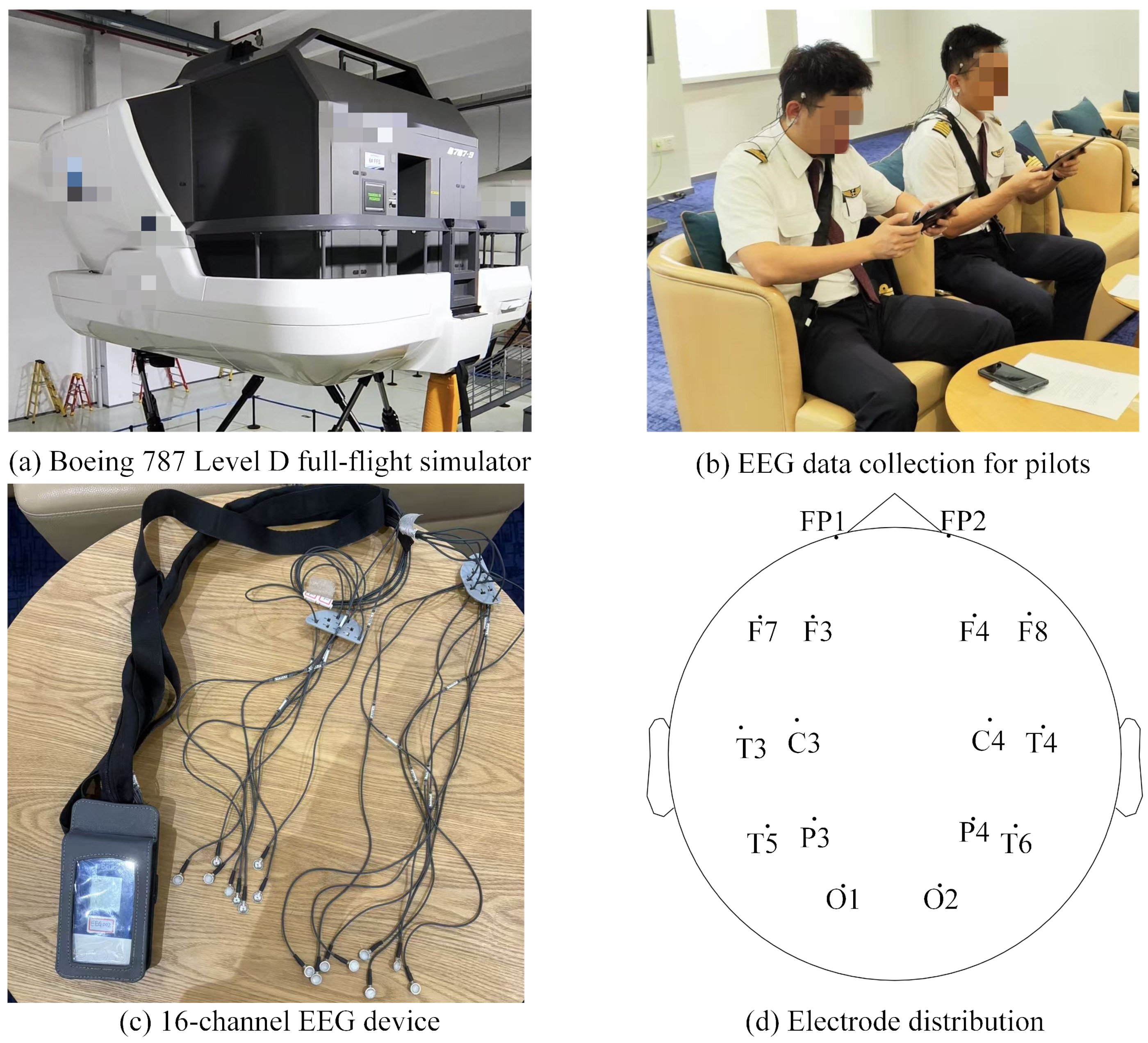

3.1.1. Participants

3.1.2. Procedure

3.1.3. Data Acquisition

3.2. Selection of Significant Features

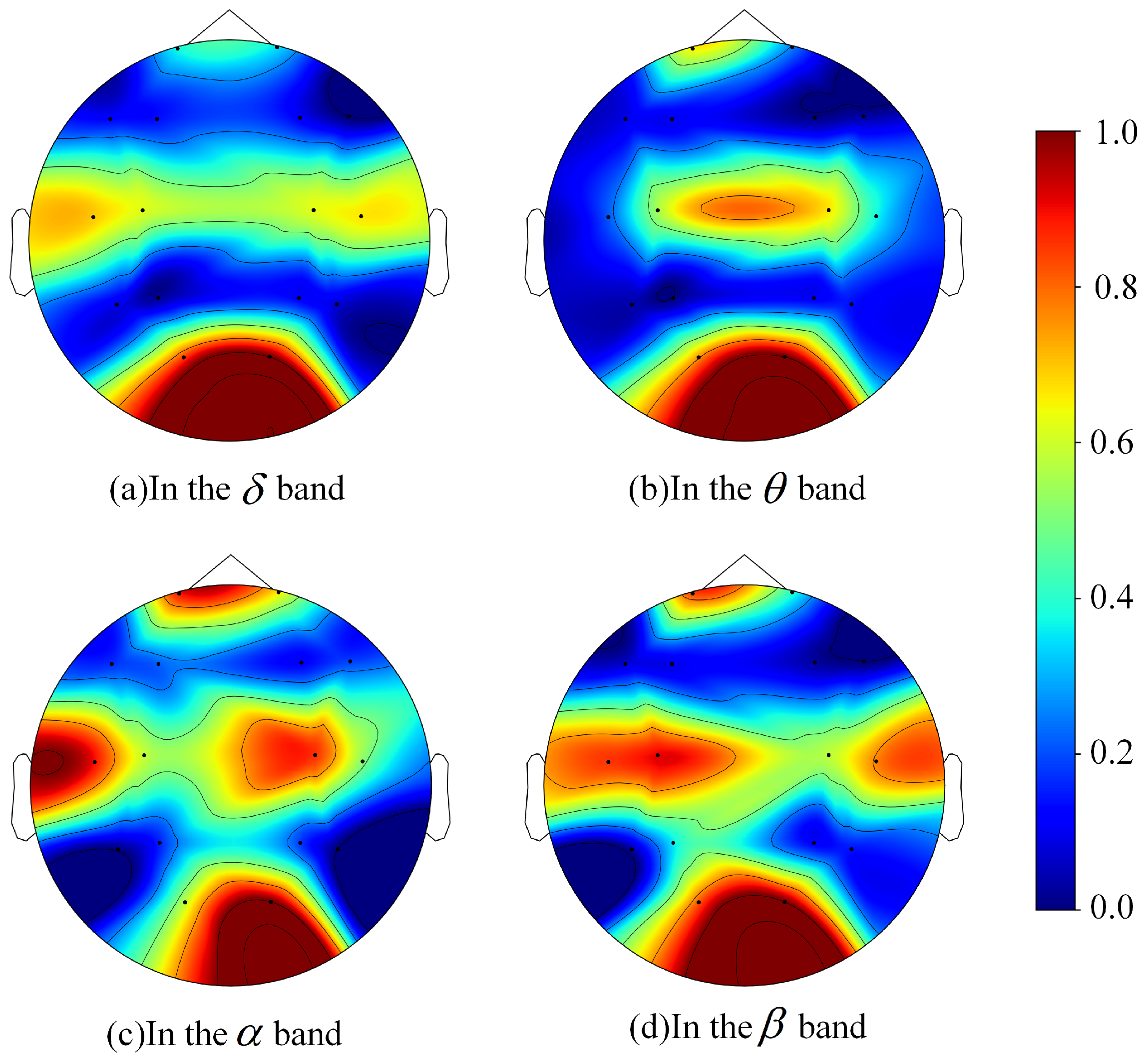

3.3. AUC Calculation for EEG Channels

3.4. Pilot Fatigue Recognition

3.4.1. Dataset Description

3.4.2. Model Setup

3.4.3. Recognition Performances

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANOVA | One-Way Analysis of Variance |
| AUC | Area Under the Curve |
| CAAC | Civil Aviation Administration of China |
| CNN | Convolutional Neural Network |
| EASA | European Union Aviation Safety Agency |
| EBT | Evidence-Based Training |
| ECG | Electrocardiography |
| EEG | Electroencephalography |
| EMG | Electromyography |
| FAA | Federal Aviation Administration |
| FN | False Negative |
| FP | False Positive |
| FRMS | Fatigue Risk Management System |
| FT | Feature Tokenizer |
| ICAO | International Civil Aviation Organization |
| KNN | k-Nearest Neighbors |
| KSS | Karolinska Sleepiness Scale |
| LSTM | Long Short-Term Memory |
| LR | Logistic Regression |
| MBC | Maneuver-Based Checks |
| MHSA | Multi-Head Self-Attention |
| MLP | Multilayer Perceptron |
| RNN | Recurrent Neural Network |
| ROC | Receiver Operating Characteristic |
| SBT | Scenario-Based Training |
| SMS | Safety Management System |
| SSS | Stanford Sleepiness Scale |
| SVM | Support Vector Machine |
| TN | True Negative |
| TP | True Positive |

Appendix A. ANOVA Statistical Results of Features in Different EEG Frequency Bands Under Alertness and Fatigue

| Channels | MEA | ENE | VAR | RMS | PSD | CF | FV | MSF |
|---|---|---|---|---|---|---|---|---|
| FP1 | 0.9724 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| FP2 | 0.9779 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| F3 | 0.9757 | <0.01 (*) | <0.01 (*) | 0.8251 | <0.01 (*) | <0.01 (*) | 0.4220 | <0.01 (*) |
| F4 | 0.9581 | <0.01 (*) | <0.01 (*) | 0.7304 | <0.01 (*) | 0.9579 | 0.1814 | 0.6913 |
| C3 | 0.9953 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| C4 | 0.9591 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| P3 | 0.9909 | 0.2070 | 0.2070 | 0.4118 | 0.1923 | <0.01 (*) | 0.7526 | <0.01 (*) |
| P4 | 0.9516 | 0.3404 | 0.3404 | <0.01 (*) | 0.3583 | <0.01 (*) | 0.6766 | <0.01 (*) |
| O1 | 0.9400 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| O2 | 0.9211 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| F7 | 0.9893 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| F8 | 0.9783 | <0.01 (*) | <0.01 (*) | 0.0673 | <0.01 (*) | 0.1320 | 0.6174 | 0.1885 |
| T3 | 0.9754 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| T4 | 0.9915 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| T5 | 0.9769 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.6768 | <0.01 (*) |
| T6 | 0.9993 | <0.01 (*) | <0.01 (*) | 0.0895 | <0.01 (*) | 0.2799 | 0.4599 | 0.3555 |

| Channels | MEA | ENE | VAR | RMS | PSD | CF | FV | MSF |
|---|---|---|---|---|---|---|---|---|
| FP1 | 0.9627 | 0.5251 | 0.5246 | <0.01 (*) | 0.5596 | 0.3307 | <0.01 (*) | 0.2448 |
| FP2 | 0.9750 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| F3 | 0.9888 | <0.01 (*) | <0.01 (*) | 0.7138 | <0.01 (*) | 0.0652 | 0.2383 | <0.01 (*) |
| F4 | 0.9350 | 0.0672 | 0.0672 | <0.01 (*) | 0.3123 | <0.01 (*) | 0.0649 | <0.01 (*) |
| C3 | 0.9748 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.5767 | <0.01 (*) |
| C4 | 0.9961 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| P3 | 0.9610 | <0.01 (*) | <0.01 (*) | 0.6929 | <0.01 (*) | 0.4533 | 0.9495 | 0.3834 |
| P4 | 0.9595 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.8968 | 0.1558 | 0.8600 |
| O1 | 0.9859 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| O2 | 0.9889 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.2881 | <0.01 (*) |
| F7 | 0.9550 | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.0686 | 0.1455 | <0.01 (*) | 0.0812 |
| F8 | 0.9887 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.2455 | 0.4559 | 0.2359 |
| T3 | 0.9778 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| T4 | 0.9726 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.3887 | <0.01 (*) | 0.6247 |
| T5 | 0.9933 | <0.01 (*) | <0.01 (*) | 0.8864 | <0.01 (*) | 0.8727 | <0.01 (*) | 0.6848 |
| T6 | 0.9912 | 0.6568 | 0.6566 | <0.01 (*) | 0.9070 | <0.01 (*) | 0.4994 | <0.01 (*) |

| Channels | MEA | ENE | VAR | RMS | PSD | CF | FV | MSF |
|---|---|---|---|---|---|---|---|---|
| FP1 | 0.9692 | 0.1182 | 0.1183 | <0.01 (*) | 0.1534 | <0.01 (*) | 0.8561 | <0.01 (*) |
| FP2 | 0.9751 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.0519 | <0.01 (*) |
| F3 | 0.9458 | 0.4699 | 0.4700 | 0.0518 | 0.5761 | <0.01 (*) | 0.6728 | <0.01 (*) |
| F4 | 0.9145 | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.3642 | 0.2280 | <0.01 (*) | 0.2551 |
| C3 | 0.9469 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.8638 | <0.01 (*) | 0.9240 |
| C4 | 0.8831 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.5363 | <0.01 (*) |
| P3 | 0.9899 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.2360 | <0.01 (*) |
| P4 | 0.9403 | 0.2499 | 0.2504 | <0.01 (*) | 0.5821 | 0.2738 | 0.5165 | 0.2631 |
| O1 | 0.9779 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| O2 | 0.9459 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| F7 | 0.9364 | 0.1537 | 0.1537 | 0.0610 | 0.2236 | <0.01 (*) | 0.0654 | <0.01 (*) |
| F8 | 0.9483 | 0.3195 | 0.3196 | <0.01 (*) | 0.3951 | <0.01 (*) | 0.6090 | <0.01 (*) |
| T3 | 0.9429 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.4380 | <0.01 (*) |
| T4 | 0.9591 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.3023 | <0.01 (*) |
| T5 | 0.9602 | 0.1594 | 0.1595 | 0.3931 | 0.2193 | 0.5166 | 0.7923 | 0.4852 |
| T6 | 0.9826 | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.1421 | 0.9477 | 0.8905 | 0.8945 |

| Channels | MEA | ENE | VAR | RMS | PSD | CF | FV | MSF |
|---|---|---|---|---|---|---|---|---|
| FP1 | 0.9796 | 0.1488 | 0.1488 | 0.1846 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| FP2 | 0.9829 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.1145 | <0.01 (*) |
| F3 | 0.9786 | 0.0785 | 0.0785 | 0.4634 | 0.1338 | <0.01 (*) | 0.6512 | <0.01 (*) |
| F4 | 0.9912 | 0.5586 | 0.5585 | <0.01 (*) | 0.3192 | <0.01 (*) | 0.9168 | <0.01 (*) |
| C3 | 0.9653 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| C4 | 0.9722 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| P3 | 0.9965 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| P4 | 0.9449 | <0.01 (*) | <0.01 (*) | <0.01 (*) | 0.3553 | <0.01 (*) | 0.6807 | <0.01 (*) |
| O1 | 0.9605 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| O2 | 0.9521 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| F7 | 0.9527 | 0.5608 | 0.5608 | 0.8829 | 0.7697 | <0.01 (*) | 0.6108 | <0.01 (*) |
| F8 | 0.9773 | <0.01 (*) | 0.0259 | <0.01 (*) | 0.1056 | <0.01 (*) | 0.7110 | <0.01 (*) |
| T3 | 0.9781 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| T4 | 0.9059 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| T5 | 0.9921 | <0.01 (*) | <0.01 (*) | 0.1721 | <0.01 (*) | <0.01 (*) | <0.01 (*) | <0.01 (*) |
| T6 | 0.9719 | 0.4500 | 0.4501 | <0.01 (*) | 0.2209 | <0.01 (*) | 0.3089 | <0.01 (*) |
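
Each entry in the Appendix A tables is a p-value from a one-way ANOVA comparing one feature on one channel between the alert and fatigue conditions, with values below 0.01 marked (*). A minimal sketch of the underlying F statistic is given below, assuming the samples are the per-window feature values of each condition. The function name and the toy numbers are illustrative only, and the p-value itself would be read from the F distribution with the returned degrees of freedom.

```python
def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for two or more sample groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least two groups and more samples than groups")
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of the samples around their own group mean.
    ss_within = sum(sum((v - m) ** 2 for v in g) for g, m in zip(groups, means))
    df_between, df_within = k - 1, n_total - k
    # Assumes ss_within > 0, i.e. the groups are not all constant.
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


alert = [1.0, 2.0, 3.0]    # made-up feature values, alert condition
fatigue = [4.0, 5.0, 6.0]  # made-up feature values, fatigue condition
f_stat, df_b, df_w = one_way_anova_f(alert, fatigue)
```

A large F means the two conditions differ by much more than the within-condition spread, which is what a small p-value in the tables reflects.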

References

1. International Civil Aviation Organization. Manual for the Oversight of Fatigue Management Approaches, Doc 9966; ICAO: Montréal, QC, Canada, 2020.
2. Mannawaduge, C.D.; Pignata, S.; Banks, S.; Dorrian, J. Evaluating fatigue management regulations for flight crew in Australia using a new Fatigue Regulation Evaluation Framework (FREF). Transp. Policy 2024, 151, 75–84.
3. Berberich, J.; Leitner, R. The look of tiredness: Evaluation of pilot fatigue based on video recordings. Aviat. Psychol. Appl. Hum. Factors 2017, 7, 86–94.
4. Marcus, J.H.; Rosekind, M.R. Fatigue in transportation: NTSB investigations and safety recommendations. Inj. Prev. 2017, 23, 232–238.
5. Wingelaar-Jagt, Y.Q.; Wingelaar, T.T.; Riedel, W.J.; Ramaekers, J.G. Fatigue in Aviation: Safety Risks, Preventive Strategies and Pharmacological Interventions. Front. Physiol. 2021, 12, 712628.
6. Federal Aviation Administration. Fatigue Risk Management Systems for Aviation Safety; FAA: Washington, DC, USA, 2013.
7. European Union Aviation Safety Agency. Commission Regulation (EU) No 965/2012 of 5 October 2012–Laying Down Technical Requirements and Administrative Procedures Related to Air Operations Pursuant To Regulation (EC) No 216/2008 of the European Parliament and of the Council; EASA: Cologne, Germany, 2012.
8. Civil Aviation Administration of China. Rules on the Certification of Public Air Carriers of Large Aircraft, CCAR-121; CAAC: Beijing, China, 2021.
9. Maclean, A.W.; Fekken, G.C.; Saskin, P.; Knowles, J.B. Psychometric evaluation of the Stanford Sleepiness Scale. J. Sleep Res. 1992, 1, 35–39.
10. Kaida, K.; Takahashi, M.; Åkerstedt, T.; Nakata, A.; Otsuka, Y.; Haratani, T.; Fukasawa, K. Validation of the Karolinska sleepiness scale against performance and EEG variables. Clin. Neurophysiol. 2006, 117, 1574–1581.
11. van Weelden, E.; Alimardani, M.; Wiltshire, T.J.; Louwerse, M.M. Aviation and neurophysiology: A systematic review. Appl. Ergon. 2022, 105, 103838.
12. Guo, D.; Wang, C.; Qin, Y.; Shang, L.; Gao, A.; Tan, B.; Zhou, Y.; Wang, G. Assessment of flight fatigue using heart rate variability and machine learning approaches. Front. Neurosci. 2025, 19, 1621638.
13. Naim, F.; Mustafa, M.; Sulaiman, N.; Zahari, Z.L. Dual-Layer Ranking Feature Selection Method Based on Statistical Formula for Driver Fatigue Detection of EMG Signals. Trait. Signal 2022, 39, 1079–1088.
14. Blanco, J.A.; Johnson, M.K.; Jaquess, K.J.; Oh, H.; Lo, L.C.; Gentili, R.J.; Hatfield, B.D. Quantifying Cognitive Workload in Simulated Flight Using Passive, Dry EEG Measurements. IEEE Trans. Cogn. Dev. Syst. 2018, 10, 373–383.
15. Luo, H.; Qiu, T.; Liu, C.; Huang, P. Research on fatigue driving detection using forehead EEG based on adaptive multi-scale entropy. Biomed. Signal Process. Control 2019, 51, 50–58.
16. Dehais, F.; Duprès, A.; Blum, S.; Drougard, N.; Scannella, S.; Roy, R.N.; Lotte, F. Monitoring Pilot’s Mental Workload Using ERPs and Spectral Power with a Six-Dry-Electrode EEG System in Real Flight Conditions. Sensors 2019, 19, 1324.
17. Wang, Y.; Han, M.; Peng, Y.; Zhao, R.; Fan, D.; Meng, X.; Xu, H.; Niu, H.; Cheng, J.; Liu, T. LGNet: Learning local–global EEG representations for cognitive workload classification in simulated flights. Biomed. Signal Process. Control 2024, 92, 106046.
18. Zhang, Y.; Guo, H.; Zhou, Y.; Xu, C.; Liao, Y. Recognising drivers’ mental fatigue based on EEG multi-dimensional feature selection and fusion. Biomed. Signal Process. Control 2023, 79, 104237.
19. Zhang, M.; Qian, B.; Gao, J.; Zhao, S.; Cui, Y.; Luo, Z.; Shi, K.; Yin, E. Recent Advances in Portable Dry Electrode EEG: Architecture and Applications in Brain-Computer Interfaces. Sensors 2025, 25, 5215.
20. King, L.; Nguyen, H.; Lal, S.K.L. Early Driver Fatigue Detection from Electroencephalography Signals using Artificial Neural Networks. In Proceedings of the 2006 International Conference of the IEEE Engineering in Medicine and Biology Society, New York, NY, USA, 30 August–3 September 2006; pp. 2187–2190.
21. Feltman, K.A.; Bernhardt, K.A.; Kelley, A.M. Measuring the Domain Specificity of Workload Using EEG: Auditory and Visual Domains in Rotary-Wing Simulated Flight. Hum. Factors 2021, 63, 1271–1283.
22. Jing, D.; Liu, D.; Zhang, S.; Guo, Z. Fatigue driving detection method based on EEG analysis in low-voltage and hypoxia plateau environment. Int. J. Transp. Sci. Technol. 2020, 9, 366–376.
23. Huang, J.; Liu, Y.; Peng, X. Recognition of driver’s mental workload based on physiological signals, a comparative study. Biomed. Signal Process. Control 2022, 71, 103094.
24. Zeng, H.; Li, X.; Borghini, G.; Zhao, Y.; Aricò, P.; Di Flumeri, G.; Sciaraffa, N.; Zakaria, W.; Kong, W.; Babiloni, F. An EEG-Based Transfer Learning Method for Cross-Subject Fatigue Mental State Prediction. Sensors 2021, 21, 2369.
25. Zhang, Y.; Xu, X.; Du, Y.; Zhang, N. TMU-Net: A Transformer-Based Multimodal Framework with Uncertainty Quantification for Driver Fatigue Detection. Sensors 2025, 25, 5364.
26. Jiao, Z.; Gao, X.; Wang, Y.; Li, J.; Xu, H. Deep Convolutional Neural Networks for mental load classification based on EEG data. Pattern Recognit. 2018, 76, 582–595.
27. Shen, X.; Liu, X.; Hu, X.; Zhang, D.; Song, S. Contrastive Learning of Subject-Invariant EEG Representations for Cross-Subject Emotion Recognition. IEEE Trans. Affect. Comput. 2023, 14, 2496–2511.
28. Gao, Z.; Wang, X.; Yang, Y.; Mu, C.; Cai, Q.; Dang, W.; Zuo, S. EEG-Based Spatio–Temporal Convolutional Neural Network for Driver Fatigue Evaluation. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2755–2763.
29. Peivandi, M.; Ardabili, S.Z.; Sheykhivand, S.; Danishvar, S. Deep Learning for Detecting Multi-Level Driver Fatigue Using Physiological Signals: A Comprehensive Approach. Sensors 2023, 23, 8171.
30. Ardabili, S.Z.; Bahmani, S.; Lahijan, L.Z.; Khaleghi, N.; Sheykhivand, S.; Danishvar, S. A Novel Approach for Automatic Detection of Driver Fatigue Using EEG Signals Based on Graph Convolutional Networks. Sensors 2024, 24, 364.
31. Tang, J.; Li, X.; Yang, Y.; Zhang, W. Euclidean space data alignment approach for multi-channel LSTM network in EEG based fatigue driving detection. Electron. Lett. 2021, 57, 836–838.
32. Cao, L.; Wang, W.; Dong, Y.; Fan, C. Advancing classroom fatigue recognition: A multimodal fusion approach using self-attention mechanism. Biomed. Signal Process. Control 2024, 89, 105756.
33. Mehmood, I.; Li, H.; Qarout, Y.; Umer, W.; Anwer, S.; Wu, H.; Hussain, M.; Fordjour Antwi-Afari, M. Deep learning-based construction equipment operators’ mental fatigue classification using wearable EEG sensor data. Adv. Eng. Inform. 2023, 56, 101978.
34. Pan, Y.; Steven Li, Z.; Zhang, E.; Guo, Z. A vigilance estimation method for high-speed rail drivers using physiological signals with a two-level fusion framework. Biomed. Signal Process. Control 2023, 84, 104831.
35. Jia, H.; Xiao, Z.; Ji, P. End-to-end fatigue driving EEG signal detection model based on improved temporal-graph convolution network. Comput. Biol. Med. 2023, 152, 106431.
36. Zheng, W.L.; Lu, B.L. A multimodal approach to estimating vigilance using EEG and forehead EOG. J. Neural Eng. 2017, 14, 026017.
37. Gao, D.; Li, P.; Wang, M.; Liang, Y.; Liu, S.; Zhou, J.; Wang, L.; Zhang, Y. CSF-GTNet: A Novel Multi-Dimensional Feature Fusion Network Based on Convnext-GeLU-BiLSTM for EEG-Signals-Enabled Fatigue Driving Detection. IEEE J. Biomed. Health Inform. 2024, 28, 2558–2568.
38. Gao, D.; Wang, K.; Wang, M.; Zhou, J.; Zhang, Y. SFT-Net: A Network for Detecting Fatigue From EEG Signals by Combining 4D Feature Flow and Attention Mechanism. IEEE J. Biomed. Health Inform. 2024, 28, 4444–4455.
39. Liu, Q.; Liu, Y.; Chen, K.; Wang, L.; Li, Z.; Ai, Q.; Ma, L. Research on Channel Selection and Multi-Feature Fusion of EEG Signals for Mental Fatigue Detection. Entropy 2021, 23, 457.
40. Wang, K.; Mao, X.; Song, Y.; Chen, Q. EEG-based fatigue state evaluation by combining complex network and frequency-spatial features. J. Neurosci. Methods 2025, 416, 110385.
41. Jebelli, H.; Hwang, S.; Lee, S. EEG-based workers’ stress recognition at construction sites. Autom. Constr. 2018, 93, 315–324.
42. Zhou, Y.; Zeng, C.; Mu, Z. Optimal feature-algorithm combination research for EEG fatigue driving detection based on functional brain network. IET Biom. 2023, 12, 65–76.
43. Wang, F.; Wu, S.; Ping, J.; Xu, Z.; Chu, H. EEG Driving Fatigue Detection With PDC-Based Brain Functional Network. IEEE Sens. J. 2021, 21, 10811–10823.
44. Fu, R.; Han, M.; Yu, B.; Shi, P.; Wen, J. Phase fluctuation analysis in functional brain networks of scaling EEG for driver fatigue detection. Promet-Traffic Transp. 2020, 32, 487–495.
45. Wang, F.; Wu, S.; Zhang, W.; Xu, Z.; Zhang, Y.; Chu, H. Multiple nonlinear features fusion based driving fatigue detection. Biomed. Signal Process. Control 2020, 62, 102075.
46. Tuncer, T.; Dogan, S.; Ertam, F.; Subasi, A. A dynamic center and multi threshold point based stable feature extraction network for driver fatigue detection utilizing EEG signals. Cogn. Neurodynamics 2021, 15, 223–237.
47. Cai, H.; Qu, Z.; Li, Z.; Zhang, Y.; Hu, X.; Hu, B. Feature-level fusion approaches based on multimodal EEG data for depression recognition. Inf. Fusion 2020, 59, 127–138.
48. Lin, C.T.; Chuang, C.H.; Huang, C.S.; Tsai, S.F.; Lu, S.W.; Chen, Y.H.; Ko, L.W. Wireless and Wearable EEG System for Evaluating Driver Vigilance. IEEE Trans. Biomed. Circuits Syst. 2014, 8, 165–176.
49. Tang, X.; Li, W.; Li, X.; Ma, W.; Dang, X. Motor imagery EEG recognition based on conditional optimization empirical mode decomposition and multi-scale convolutional neural network. Expert Syst. Appl. 2020, 149, 113285.
50. Subasi, A.; Saikia, A.; Bagedo, K.; Singh, A.; Hazarika, A. EEG-Based Driver Fatigue Detection Using FAWT and Multiboosting Approaches. IEEE Trans. Ind. Inform. 2022, 18, 6602–6609.
51. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.
52. Gorishniy, Y.; Rubachev, I.; Khrulkov, V.; Babenko, A. Revisiting Deep Learning Models for Tabular Data. In Advances in Neural Information Processing Systems; Ranzato, M., Beygelzimer, A., Dauphin, Y., Liang, P., Vaughan, J.W., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2021; Volume 34, pp. 18932–18943.
53. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; Burstein, J., Doran, C., Solorio, T., Eds.; Association for Computational Linguistics: Minneapolis, MN, USA, 2019; pp. 4171–4186.
54. Wang, Q.; Li, B.; Xiao, T.; Zhu, J.; Li, C.; Wong, D.F.; Chao, L.S. Learning Deep Transformer Models for Machine Translation. arXiv 2019, arXiv:1906.01787.
55. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.
56. Zhao, K.; Guo, X. PilotCareTrans Net: An EEG data-driven transformer for pilot health monitoring. Front. Hum. Neurosci. 2025, 19, 1503228.
57. International Civil Aviation Organization. Manual of Evidence-Based Training, Doc 9995; ICAO: Montréal, QC, Canada, 2013.
58. Lin, C.; Pal, N.; Chuang, C.; Ko, L.W.; Chao, C.; Jung, T.; Liang, S. EEG-based subject- and session-independent drowsiness detection: An unsupervised approach. EURASIP J. Adv. Signal Process. 2008, 2008, 519480.
59. Li, X.; Tang, J.; Li, X.; Yang, Y. CWSTR-Net: A Channel-Weighted Spatial–Temporal Residual Network based on nonsmooth nonnegative matrix factorization for fatigue detection using EEG signals. Biomed. Signal Process. Control 2024, 97, 106685.
60. Xu, X.; Gao, H.; Wang, Y.; Qian, L.; Wu, K.; Bezerianos, A.; Wang, W.; Sun, Y. EEG-Based Mental Workload Assessment in a Simulated Flight Task Using Single-Channel Brain Rhythm Sequence. IEEE Trans. Instrum. Meas. 2024, 73, 1–12.
61. Wu, Z.; Zhao, S.; Qian, T.; Zhou, T. Microstructure and surface monitoring of ECG signals for CrTiN-Ni coatings as dry bioelectrodes. Vacuum 2025, 240, 114576.

| Band | | Band | | Band | | Band | |
|---|---|---|---|---|---|---|---|
| Channels | AUC | Channels | AUC | Channels | AUC | Channels | AUC |
| O2 | 0.6393 ± 0.0513 | O2 | 0.5945 ± 0.0517 | O2 | 0.5934 ± 0.0214 | O2 | 0.6068 ± 0.0582 |
| O1 | 0.6172 ± 0.0250 | O1 | 0.5733 ± 0.0479 | T3 | 0.5834 ± 0.0429 | C3 | 0.5989 ± 0.0306 |
| T3 | 0.5998 ± 0.0267 | C4 | 0.5675 ± 0.0459 | FP1 | 0.5821 ± 0.0504 | T3 | 0.5938 ± 0.0305 |
| T4 | 0.5956 ± 0.0529 | FP1 | 0.5648 ± 0.0312 | C4 | 0.5815 ± 0.0246 | FP1 | 0.5902 ± 0.0332 |
| C4 | 0.5904 ± 0.0423 | C3 | 0.5587 ± 0.0547 | FP2 | 0.5625 ± 0.0495 | O1 | 0.5897 ± 0.0424 |
| C3 | 0.5899 ± 0.0411 | T4 | 0.5445 ± 0.0578 | C3 | 0.5573 ± 0.0206 | T4 | 0.5855 ± 0.0593 |
| FP1 | 0.5578 ± 0.0363 | FP2 | 0.5328 ± 0.0557 | O1 | 0.5569 ± 0.0254 | C4 | 0.5686 ± 0.0205 |
| FP2 | 0.5535 ± 0.0486 | T3 | 0.5309 ± 0.0414 | T4 | 0.5527 ± 0.0328 | FP2 | 0.5519 ± 0.0319 |
| F3 | 0.5284 ± 0.0289 | T6 | 0.5244 ± 0.0447 | F3 | 0.5256 ± 0.0525 | P3 | 0.5497 ± 0.0260 |
| F7 | 0.5276 ± 0.0575 | P4 | 0.5215 ± 0.0282 | F8 | 0.5238 ± 0.0299 | T6 | 0.5302 ± 0.0454 |
| F4 | 0.5256 ± 0.0342 | F3 | 0.5210 ± 0.0383 | P4 | 0.5234 ± 0.0451 | P4 | 0.5273 ± 0.0262 |
| P4 | 0.5248 ± 0.0267 | F7 | 0.5208 ± 0.0542 | F7 | 0.5225 ± 0.0353 | F3 | 0.5253 ± 0.0576 |
| T5 | 0.5226 ± 0.0342 | F8 | 0.5162 ± 0.0349 | P3 | 0.5211 ± 0.0469 | F7 | 0.5247 ± 0.0295 |
| T6 | 0.5210 ± 0.0548 | T5 | 0.5162 ± 0.0338 | F4 | 0.5178 ± 0.0259 | T5 | 0.5217 ± 0.0318 |
| P3 | 0.5124 ± 0.0292 | F4 | 0.5143 ± 0.0215 | T5 | 0.5081 ± 0.0542 | F4 | 0.5203 ± 0.0337 |
| F8 | 0.5101 ± 0.0260 | P3 | 0.5130 ± 0.0383 | T6 | 0.5071 ± 0.0583 | F8 | 0.5172 ± 0.0240 |

| Parameters | Ours | Gorishniy [52] |
|---|---|---|
| Embedding Dimension | 64 | 192 |
| Number of Transformer Encoder Layers | 3 | 6 |
| Number of Attention Heads | 8 | 8 |
| Dimension of the Feed-Forward Network’s Hidden Layer | 128 | 384 |
| Dropout Rate | 0.2 | 0.1 |
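
To give a rough sense of what the two configurations above imply for model size, the sketch below counts the weights of the encoder stack only. It assumes a standard Transformer encoder layer (four attention projections with biases, a plain two-layer feed-forward block, and two LayerNorms); the actual FT-Transformer implementation may differ, for example in its activation or normalization placement, and the feature tokenizer and classification head are excluded. The class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EncoderConfig:
    embed_dim: int
    num_layers: int
    num_heads: int
    ffn_hidden_dim: int
    dropout: float

    @property
    def head_dim(self):
        """Per-head width; the embedding must split evenly across heads."""
        if self.embed_dim % self.num_heads != 0:
            raise ValueError("embed_dim must be divisible by num_heads")
        return self.embed_dim // self.num_heads

    def encoder_parameters(self):
        """Approximate weight count of the encoder stack (assumed layer layout)."""
        d, h = self.embed_dim, self.ffn_hidden_dim
        attention = 4 * (d * d + d)               # Q, K, V, output projections + biases
        feed_forward = (d * h + h) + (h * d + d)  # two linear layers + biases
        layer_norms = 2 * (2 * d)                 # two LayerNorms, scale and shift
        return self.num_layers * (attention + feed_forward + layer_norms)


ours = EncoderConfig(embed_dim=64, num_layers=3, num_heads=8,
                     ffn_hidden_dim=128, dropout=0.2)
reference = EncoderConfig(embed_dim=192, num_layers=6, num_heads=8,
                          ffn_hidden_dim=384, dropout=0.1)
ratio = reference.encoder_parameters() / ours.encoder_parameters()
```

Under these assumptions the reduced configuration has roughly 0.1 million encoder weights against about 1.8 million for the reference settings, so the smaller encoder is about 18 times lighter.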

| Methods | Accuracy | Precision | Recall | F1_Score | Average Training Time |
|---|---|---|---|---|---|
| FT-Transformer | 94.79% (±0.84%) | 94.80% (±0.84%) | 94.78% (±0.85%) | 94.79% (±0.86%) | 56 min 32.3 s |
| AFT-Transformer | 95.27% (±0.52%) | 95.28% (±0.51%) | 95.28% (±0.50%) | 95.29% (±0.52%) | 21 min 44.7 s |
| ASFT-Transformer | 97.24% (±0.27%) | 97.25% (±0.27%) | 97.25% (±0.27%) | 97.24% (±0.29%) | 8 min 38.8 s |
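
The four metrics reported in these tables follow from confusion-matrix counts (TP, FP, TN, and FN in the Abbreviations). A minimal sketch for the binary alert/fatigue case is shown below; the counts are made up for illustration, and how the paper averages the scores across classes or runs is not specified here.

```python
def classification_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall, and F1 from binary confusion-matrix counts.

    Assumes at least one predicted positive and one actual positive,
    so none of the denominators is zero.
    """
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp)  # share of predicted fatigue that is truly fatigue
    recall = tp / (tp + fn)     # share of true fatigue that is detected
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical counts: 100 windows with fatigue as the positive class.
metrics = classification_metrics(tp=50, fp=10, tn=35, fn=5)
```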

| Methods | Accuracy | Precision | Recall | F1_Score | Average Training Time |
|---|---|---|---|---|---|
| ASFT-Transformer | 97.24% (±0.27%) | 97.25% (±0.27%) | 97.25% (±0.27%) | 97.24% (±0.29%) | 8 min 38.8 s |
| ResNet | 96.51% (±0.65%) | 96.51% (±0.70%) | 96.49% (±0.63%) | 96.50% (±0.69%) | 5 min 30.0 s |
| MLP | 94.85% (±0.31%) | 94.85% (±0.28%) | 94.87% (±0.33%) | 94.87% (±0.29%) | 2 min 43.4 s |
| XGBoost | 96.03% (±0.26%) | 95.99% (±0.27%) | 96.01% (±0.26%) | 96.00% (±0.26%) | 37.3 s |
| SVM | 84.15% (±0.42%) | 84.18% (±0.39%) | 84.15% (±0.41%) | 84.13% (±0.40%) | 7 min 19.3 s |
| LR | 72.02% (±0.35%) | 72.03% (±0.36%) | 72.02% (±0.35%) | 72.02% (±0.37%) | 12.1 s |
| KNN | 71.53% (±0.45%) | 72.44% (±0.40%) | 71.50% (±0.46%) | 71.46% (±0.48%) | 31.5 s |
| LSTM | 57.68% (±0.53%) | 57.02% (±1.48%) | 55.71% (±2.76%) | 58.73% (±0.93%) | 106 min 9.1 s |
| BiLSTM | 58.89% (±0.54%) | 62.43% (±0.75%) | 60.14% (±2.30%) | 59.64% (±1.57%) | 245 min 30.8 s |
| 1D_CNN | 76.88% (±5.98%) | 77.42% (±1.32%) | 80.21% (±7.35%) | 81.95% (±11.73%) | 41 min 0.5 s |

| Methods | Accuracy | Precision | Recall | F1_Score |
|---|---|---|---|---|
| ASFT-Transformer | 87.72% (±3.76%) | 87.59% (±4.26%) | 91.27% (±5.75%) | 88.94% (±6.60%) |
| AFT-Transformer | 82.93% (±5.01%) | 83.43% (±4.68%) | 83.28% (±6.10%) | 81.34% (±6.78%) |
| FT-Transformer | 79.65% (±5.51%) | 79.56% (±5.08%) | 81.01% (±7.92%) | 81.03% (±6.79%) |
| ResNet | 84.25% (±5.94%) | 84.40% (±5.81%) | 87.68% (±7.12%) | 86.97% (±6.56%) |
| MLP | 78.42% (±4.17%) | 79.29% (±4.23%) | 82.37% (±6.96%) | 80.25% (±5.77%) |
| XGBoost | 84.02% (±3.65%) | 84.86% (±3.88%) | 89.75% (±5.89%) | 85.98% (±5.07%) |
| SVM | 71.24% (±4.45%) | 70.62% (±4.75%) | 75.40% (±6.86%) | 72.58% (±5.52%) |
| LR | 63.82% (±4.37%) | 63.42% (±4.10%) | 67.39% (±6.26%) | 64.39% (±4.57%) |
| KNN | 60.61% (±4.37%) | 60.20% (±4.10%) | 63.11% (±6.26%) | 61.94% (±4.57%) |
| LSTM | 53.17% (±4.96%) | 53.28% (±7.43%) | 60.29% (±9.65%) | 58.56% (±6.28%) |
| BiLSTM | 55.71% (±3.91%) | 56.38% (±3.49%) | 62.77% (±8.75%) | 60.97% (±6.98%) |
| 1D_CNN | 69.91% (±9.84%) | 68.40% (±5.10%) | 75.18% (±9.91%) | 72.74% (±9.80%) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, Jiming, Yi Zhou, Qileng He, and Zhenxing Gao. 2025. "ASFT-Transformer: A Fast and Accurate Framework for EEG-Based Pilot Fatigue Recognition" Sensors 25, no. 19: 6256. https://doi.org/10.3390/s25196256