1. Introduction

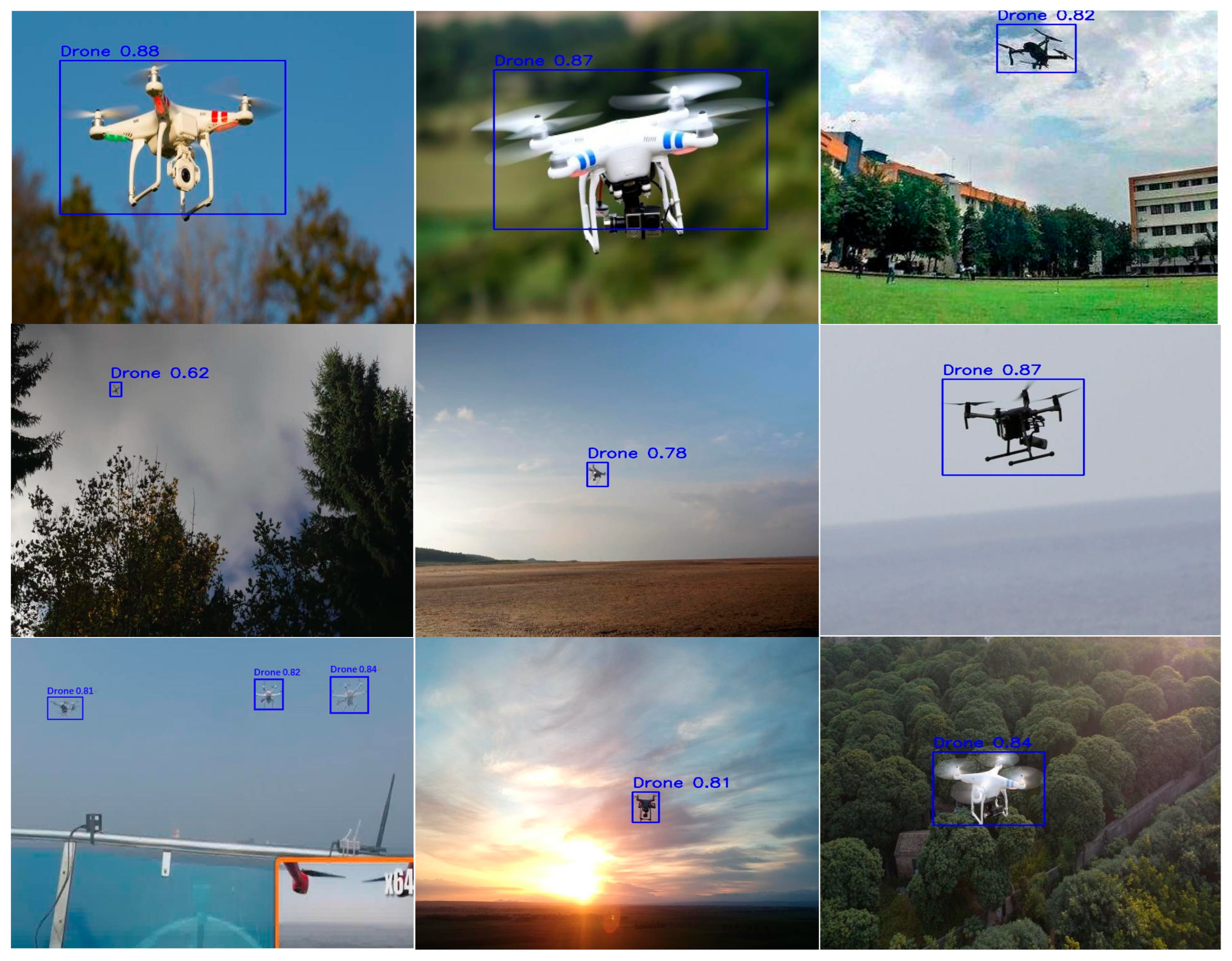

In the field of computer vision, object detection, a fundamental yet challenging undertaking [1], has undergone considerable advancements and found extensive applications across various domains, including surveillance, autonomous driving, and industrial production [2]. Since the advent of region-based convolutional neural networks (R-CNNs) [3], which pioneered region-based deep learning for object detection, the field has evolved through the following two paradigms: two-stage detectors (e.g., Faster R-CNN [4]) emphasizing accuracy, and one-stage detectors (e.g., You Only Look Once (YOLO) [5] and single-shot detectors) prioritizing real-time performance. This paradigm shift reflects the dual demand for precision and efficiency in domains such as unmanned aerial vehicle (UAV) detection, wherein both attributes are critical.

Among these applications, detecting and tracking UAVs has garnered considerable attention owing to the growing use of drones in civilian and military contexts [6,7]. However, practical UAV detection scenarios introduce unique challenges: scale variability, as drones appear as only a few pixels at long distances but occupy large regions in close-range footage [8]; dynamic occlusion, as cluttered backgrounds (e.g., urban landscapes, foliage) or inter-drone overlap degrade feature distinctiveness [9]; and the need for environmental robustness, as low light, motion blur, or adverse weather (e.g., rain and fog) impair detection reliability [10]. These challenges necessitate algorithms that balance spatial feature fidelity and temporal context awareness; existing methods have not effectively addressed this gap.

Object detection algorithms based on deep learning, particularly those in the YOLO series, have become dominant in real-time detection tasks [2]. YOLOv8 has demonstrated excellent performance in both speed and accuracy, leading to the development of variants for specific applications. YOLO-drone [11] optimized the network for detecting tiny UAV targets by introducing a high-resolution detection branch, pruning large target layers, reducing the parameters by 59.9%, and enhancing the detection speed. ITD-YOLOv8 [12] enhanced the perception of the neck network by integrating the global context and multiscale features, while replacing the standard convolution with AXConv to adaptively reduce complexity. However, these improvements focus on spatial feature extraction, thereby overlooking crucial temporal information for video-based UAV detection.

In video-related object detection, challenges such as motion blur and defocus can considerably degrade the algorithm performance. To address these issues, the blur-aid feature aggregation network (BFAN) proposed in [13] aggregates blurred features with minimal additional computation. However, it fails to capture the dynamic motion patterns of UAVs over time. Regarding UAV-captured images, existing methods often exhibit low detection accuracy and inefficient utilization of pre-trained models. The high-quality object detection method in [14] addresses these issues by employing an improved DINO framework combined with masked image modeling. It utilizes a hybrid backbone that integrates convolutional neural networks (CNNs) and vision transformers to extract global and local features more effectively. However, this method faces challenges in satisfying real-time requirements for UAV tracking.

Detecting tiny objects, which are common in UAV applications, has attracted considerable attention. Approaches such as Temporal-YOLOv8 [15] enhance performance by leveraging the temporal context in videos and specialized data augmentation, thereby increasing the mean average precision (mAP) from 0.465 to 0.839. DroneNet [6] introduced a feature information enhancement module to better retain object information, which can be seamlessly integrated into the backbone network. Reference [16] investigated the effectiveness of anchor-matching strategies and imbalance in anchor-based object detection, particularly for small objects. SDS-YOLO [17] improved the localization accuracy by replacing the complete intersection-over-union loss (CIoU loss) with a shape-IoU loss, thereby providing an accurate measurement of overlap between the predicted and actual bounding boxes, which is crucial for detecting UAVs with irregular shapes or in overlapping scenarios. The Adam optimizer, as demonstrated in [18] for high-speed object detection and steering angle prediction in autonomous driving control, is effective in accelerating model convergence by adaptively adjusting learning rates based on parameter gradients. Regarding the integration of recurrent neural networks (RNNs), long short-term memory (LSTM)-based methods have demonstrated strong potential in processing sequential data. The correlation-aware LSTM-based modified YOLO algorithm in [19] achieved 99.7% precision in night-vision object tracking, outperforming the standard YOLOv7. The LSTM-CNN tracker in [20] maintained 92.1% ID consistency during occlusions by modeling tracking as a Markov decision process with a 17-dimensional state representation.

The low-light object tracking system based on the correlation-aware, modified LSTM-based YOLO algorithm offers enhanced precision in object recognition and characterization under low-light conditions. This method combines the sequential data processing of the LSTM with the object detection of the YOLO algorithm to address object detection and tracking in low-light environments. However, similarly to other methods, it may struggle with complex dynamic scenarios such as multiple occlusions or fast-moving targets. In military and surveillance applications, the need for detecting and classifying aerial objects, such as birds, planes, and drones, is rapidly increasing [21]. However, accurately classifying distant objects that appear as small points in images remains challenging. There is an inherent tradeoff between detection accuracy and confidence, thereby making it crucial to achieve precise detection with high confidence for practical UAV detection systems.

Related studies have applied LSTM to target detection [22]. Analogous to traditional target detection algorithms, these methods often fail to fully exploit spatial information, resulting in low detection accuracy under non-ideal conditions, such as occlusion, deformation, wear, and poor lighting. This limitation highlights the need for more advanced algorithms capable of managing complex scenarios, a challenge that is also relevant to UAV detection, wherein dynamic environments and occlusions are prevalent.

Other studies have investigated different aspects of object detection. Reference [23] explores joint salient object detection and presence prediction, highlighting the assumption in existing models that at least one salient object is present in the input image, a premise that often leads to poor saliency maps when processing pure background images. In [24], a system integrating YOLOv8 with the multi-object tracking (MOT) algorithms BoT-SORT and ByteTrack was used to extract multitarget information, thereby improving the redetection capability and robustness to occlusions in dynamic environments. Reference [7] highlighted the importance of artificial intelligence in enhancing real-time drone detection for anti-drone systems.

Numerous studies [25–35] have improved the YOLOv8 framework. Reference [25] evaluated optimized YOLOv8 variants for multiscale object detection in terms of the computational cost, energy consumption, and mAP-50. Reference [26] proposed EDGS-YOLOv8, an improved lightweight UAV detection model that incorporates ghost convolution for size reduction and enhances heads for better multiscale detection. Reference [27] used the RepVGG downsampling modules in the Drone-YOLO backbone to enhance multiscale feature learning. Reference [28] introduced YOLO-GCOF, which combines dynamic group convolution with shuffle transformers for small drone feature extraction. Reference [29] presented DMFF-YOLO, which integrates components to improve small target detection for UAV aerial photography. Reference [30] developed a reparameterization feature redundancy extraction network for UAV detection and improved feature processing during downsampling. Reference [31] proposed RPS-YOLO with a recursive feature pyramid to enhance small-object detection in UAV scenarios. Reference [32] optimized YOLO for UAV detection and classification via RF spectrogram images. Reference [33] investigated fine-grained feature-based object detection using the C2f module. Reference [34] improved small-object detection in UAV images using YOLOv8n with a new loss function. In [35], HSP-YOLOv8 was proposed to address the UAV detection challenges using a small prediction head and specific convolution.

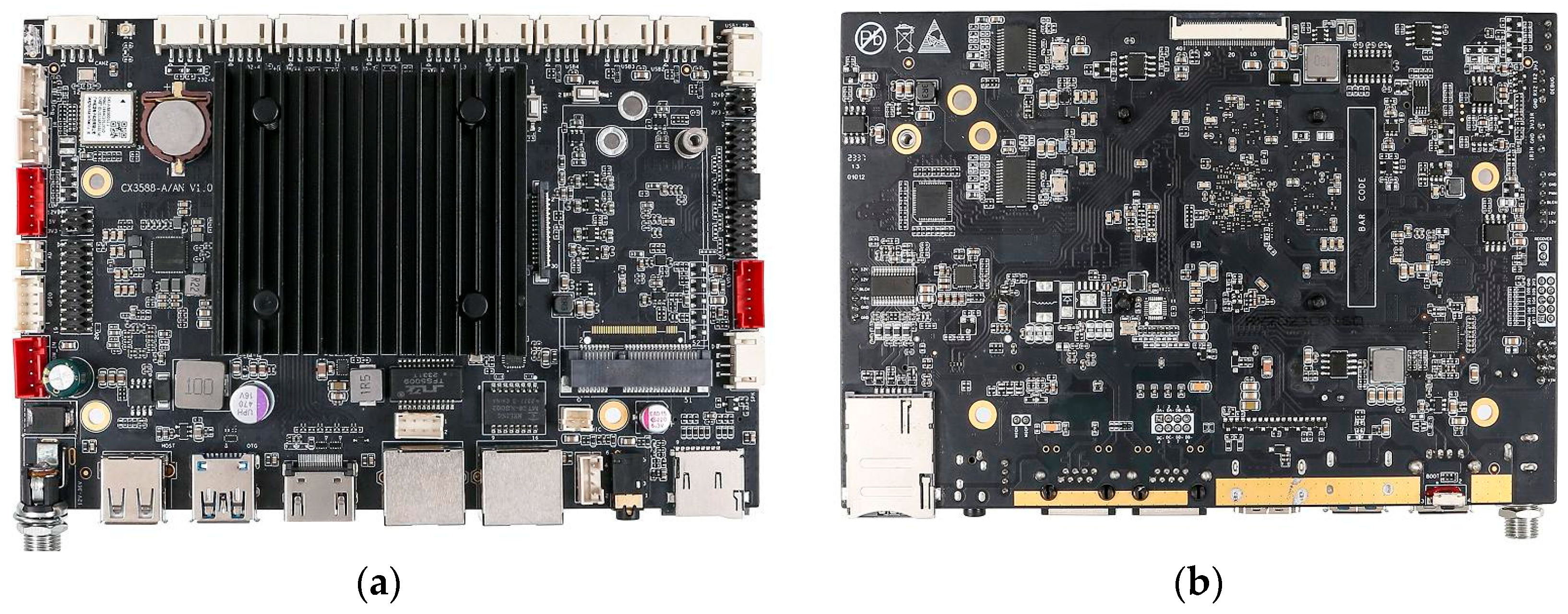

The approaches proposed in [36,37] significantly enhance detection accuracy and efficiency by integrating YOLOv8 with the RK3588 platform, offering a promising direction for UAV target detection.

With the rapid growth of the low-altitude economy, the increasing frequency of unauthorized (“rogue”) drone activities presents serious threats to public safety, airspace regulation, and flight operations. This highlights the urgent need for a drone detection and countermeasure system that offers high precision, strong robustness, and real-time performance. In response to this demand, this study proposes a UAV detection algorithm that integrates LSTM networks with YOLOv8s and is implemented on the RK3588-A embedded platform. The goal is to enhance tracking performance for dynamically moving aerial targets. The proposed method combines the robust spatial feature extraction capabilities of YOLOv8s with the temporal sequence modeling strength of LSTM, significantly improving feature representation in temporally continuous scenarios. During training, a Monte Carlo sampling strategy is employed to increase data diversity and improve the model’s generalization ability. The loss function incorporates a combination of bounding box regression loss, binary cross-entropy, and mean squared error, while the Adam optimizer is used to accelerate convergence. Simulation results confirm that the method achieves strong performance in obstacle detection accuracy, tracking stability, and error control when deployed on the RK3588-A platform. These features make it particularly well-suited for efficient detection and reduction in rogue UAVs in complex low-altitude environments, demonstrating high practical utility and deployment potential, especially in edge computing scenarios supported by the RK3588-A platform.

Advantages and limitations of the existing object tracking techniques.

| Technology Type | Advantages | Disadvantages |
| --- | --- | --- |
| Blur-aid feature aggregation network (BFAN) | High-precision aggregation of blurred features with high computational efficiency | Lack of dynamic and multi-scale processing, limited to a single application scenario |
| High-quality object detection method based on improved DINO and masked image modeling | Extracts features using a hybrid network for high detection accuracy | Complex model, high training cost, weak real-time performance |
| Integrated YOLO algorithm with RK3588 embedded system | Boosts tiny-target detection, cuts parameters, and speeds up detection | Hurts mid-to-large target performance, cannot handle temporal information |
| YOLOv8s combined with LSTM | Spatial-temporal integration boosts robust detection and tracking, with efficient training and strong generalization | Complex structure with difficult parameter tuning, relatively high computational resource requirements |

The methods introduced in this study (integrating YOLOv8s with LSTM, a task-specific loss function design, the Adam optimizer, Monte Carlo randomization, and deployment on the RK3588-A system) address key limitations of existing technologies in the following five areas:

(1) Enhanced Temporal Feature Capture

The original YOLOv8 model has limited temporal feature extraction capabilities. Integrating LSTM enables dynamic temporal attention, capturing UAV state variations during positioning and tracking and thereby addressing a limitation of conventional single-frame detectors.

(2) Optimized Loss Function

A task-specific loss function combining bounding box regression, binary cross-entropy, and mean squared error accurately reflects prediction errors, guides the precise learning of target positions and categories, and improves the detection accuracy over conventional losses.

(3) Improved Optimization Performance

The Adam optimizer, with momentum and adaptive learning rates, adjusts parameter updates, prevents gradient problems, promotes faster and more stable convergence, and reduces training time and resources compared to other optimizers.

(4) Verification of Randomization using Monte Carlo Simulations

Monte Carlo sampling provides statistical validation (mean, standard deviation, and confidence intervals) of model stability and reliability under varied conditions, thereby surpassing single-result evaluations and addressing uncertainty and generalization gaps.

(5) Embedded Hardware Co-optimization

Hardware-aware optimization of the detection algorithm is achieved through the co-design of the RK3588-A embedded platform deployment and the algorithm. The platform’s dedicated neural processing unit (NPU) accelerates efficient computation of the lightweight model and reduces algorithmic complexity, all while maintaining high detection accuracy. This hardware-algorithm co-optimization strategy addresses the performance limitations of conventional algorithms on embedded devices and offers a lightweight, deployable solution for engineering low-altitude UAV control systems.

For comparison, the optimization strategies proposed in this study are referred to as YOLOv8s-LSTM.

2. Materials and Methods

2.1. YOLOv8s Algorithm Model

YOLOv8s reframes the object detection problem as a regression task, in which each grid cell predicts bounding boxes and their confidence scores, along with the class probabilities [25].

For each bounding box, five values must be predicted: the center coordinates $(x, y)$, the width and height $(w, h)$, and the confidence score. The center coordinates $(x, y)$ represent offsets relative to the grid cell, while the width and height $(w, h)$ are expressed as ratios relative to the entire image. The detection equation is expressed as Equation (1) as follows:

$$
b_x = \sigma(t_x) + c_x, \quad b_y = \sigma(t_y) + c_y, \quad b_w = p_w e^{t_w}, \quad b_h = p_h e^{t_h} \tag{1}
$$

where $(b_x, b_y, b_w, b_h)$ denotes the center and size of the decoded box, $(c_x, c_y)$ represents the coordinates of the top-left corner of the current grid cell, $(p_w, p_h)$ the dimensions of the anchor (prior) box, and $(t_x, t_y, t_w, t_h)$ the offsets predicted by the network. The sigmoid function $\sigma(\cdot)$ restricts the center offsets to the [0, 1] range.
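As an illustration of the decoding step described above, the following minimal sketch assumes the common YOLO-style parameterization in which the box center is the sigmoid of the raw offset added to the grid-cell corner, and the box size is the prior size scaled by an exponential factor. The function name `decode_box` and the numeric values are hypothetical and are not taken from the YOLOv8s implementation.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function; maps any real number into the (0, 1) interval."""
    return 1.0 / (1.0 + math.exp(-z))


def decode_box(t, cell, prior):
    """Decode raw network offsets into a box (center x, center y, width, height).

    t     -- (t_x, t_y, t_w, t_h): raw offsets predicted by the network
    cell  -- (c_x, c_y): top-left corner of the grid cell, in grid units
    prior -- (p_w, p_h): width and height of the prior box
    """
    t_x, t_y, t_w, t_h = t
    c_x, c_y = cell
    p_w, p_h = prior
    b_x = sigmoid(t_x) + c_x   # the center cannot leave its grid cell
    b_y = sigmoid(t_y) + c_y
    b_w = p_w * math.exp(t_w)  # the prior is scaled by a positive factor
    b_h = p_h * math.exp(t_h)
    return b_x, b_y, b_w, b_h


# Zero offsets place the center in the middle of the cell and keep the prior size.
box = decode_box((0.0, 0.0, 0.0, 0.0), cell=(3, 4), prior=(2.0, 1.5))
print(box)
```

Because the sigmoid output lies strictly between 0 and 1, the decoded center always stays inside the grid cell that predicted it.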

2.2. Confidence Prediction and Class Prediction

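The confidence score indicates the probability that an object exists within the predicted bounding box and the degree of overlap between the predicted bounding box and the ground truth. It is generally expressed as a scalar ranging from 0 to 1, wherein values closer to 1 indicate a higher likelihood that the bounding box contains an object and that its localization is accurate.

The confidence score is expressed by Equation (2) as follows:

$$
\mathrm{Confidence} = \Pr(\mathrm{Object}) \times \mathrm{IoU}_{\mathrm{pred}}^{\mathrm{truth}} \tag{2}
$$

where $\Pr(\mathrm{Object})$ denotes the probability that an object is in the bounding box, while a larger $\mathrm{IoU}_{\mathrm{pred}}^{\mathrm{truth}}$ denotes a greater degree of alignment between the predicted and actual object locations.

The calculation formula for $\mathrm{IoU}_{\mathrm{pred}}^{\mathrm{truth}}$ is expressed by Equation (3) as follows:

$$
\mathrm{IoU}_{\mathrm{pred}}^{\mathrm{truth}} = \frac{\mathrm{area}(B_{\mathrm{pred}} \cap B_{\mathrm{gt}})}{\mathrm{area}(B_{\mathrm{pred}} \cup B_{\mathrm{gt}})} \tag{3}
$$

where $B_{\mathrm{pred}}$ denotes the predicted bounding box and $B_{\mathrm{gt}}$ the ground truth bounding box.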
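The overlap term can be made concrete with a short sketch. It assumes axis-aligned boxes given as corner coordinates `(x1, y1, x2, y2)`; the object probability of 0.9 is an invented value used only to show how the confidence score combines the two factors.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle.
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    # Width and height are clamped at zero when the boxes do not overlap.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


pred = (0.0, 0.0, 2.0, 2.0)
truth = (1.0, 1.0, 3.0, 3.0)
overlap = iou(pred, truth)    # intersection area 1, union area 7
confidence = 0.9 * overlap    # 0.9 is a made-up object probability
print(round(overlap, 4), round(confidence, 4))
```

A well-localized box with a high object probability therefore scores close to 1, whereas either a poor overlap or a low object probability pulls the confidence down.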

Each grid cell outputs a set of class scores for its predicted bounding box, with each score corresponding to a specific object category. The class score $S_i$ for a specific bounding box is computed as the product of the confidence score and class probability, as expressed in Equation (4):

$$
S_i = \Pr(\mathrm{Class}_i \mid \mathrm{Object}) \times \mathrm{Confidence} \tag{4}
$$

where $\Pr(\mathrm{Class}_i \mid \mathrm{Object})$ denotes the probability that the object in the bounding box belongs to class $i$, given that an object is present.

To convert the raw network outputs into probabilities, assuming that there are $C$ classes and the network outputs a raw class score vector $s = (s_1, s_2, \dots, s_C)$, the conversion formula is expressed by Equation (5) as follows:

$$
P(\mathrm{class}_i \mid \mathrm{box}) = \frac{e^{s_i}}{\sum_{j=1}^{C} e^{s_j}} \tag{5}
$$

where $P(\mathrm{class}_i \mid \mathrm{box})$ denotes the probability that the object within the predicted bounding box belongs to class $i$, $s_i$ the raw score output by the network for class $i$, and $s_j$ the raw score for class $j$.

For real-time detection, an objective of this study, both the confidence score and the class prediction results are used. The class prediction results for a detection box are considered only when the confidence score exceeds a certain threshold. The final detection results comprise the bounding boxes with high confidence scores, each labeled with its highest-probability class.
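The selection rule above can be sketched as follows. The dictionary layout, the threshold of 0.5, and the example scores are assumptions made for illustration; the source does not state the threshold value or the data structures used.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def filter_detections(detections, conf_threshold=0.5):
    """Keep boxes whose confidence exceeds the threshold and label each
    kept box with its most probable class."""
    kept = []
    for det in detections:
        if det["confidence"] <= conf_threshold:
            continue  # class scores of low-confidence boxes are ignored
        probs = softmax(det["raw_scores"])
        best = max(range(len(probs)), key=probs.__getitem__)
        kept.append({
            "box": det["box"],
            "confidence": det["confidence"],
            "class_id": best,
            "class_prob": probs[best],
        })
    return kept


# Two hypothetical detections with three class scores each.
detections = [
    {"box": (10, 20, 50, 60), "confidence": 0.91, "raw_scores": [2.0, 0.5, 0.1]},
    {"box": (70, 80, 90, 95), "confidence": 0.20, "raw_scores": [0.3, 0.2, 0.1]},
]
kept = filter_detections(detections)
print(kept)
```

Only the first detection survives the threshold in this example, and it is assigned the class with index 0 because that class has the largest raw score.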

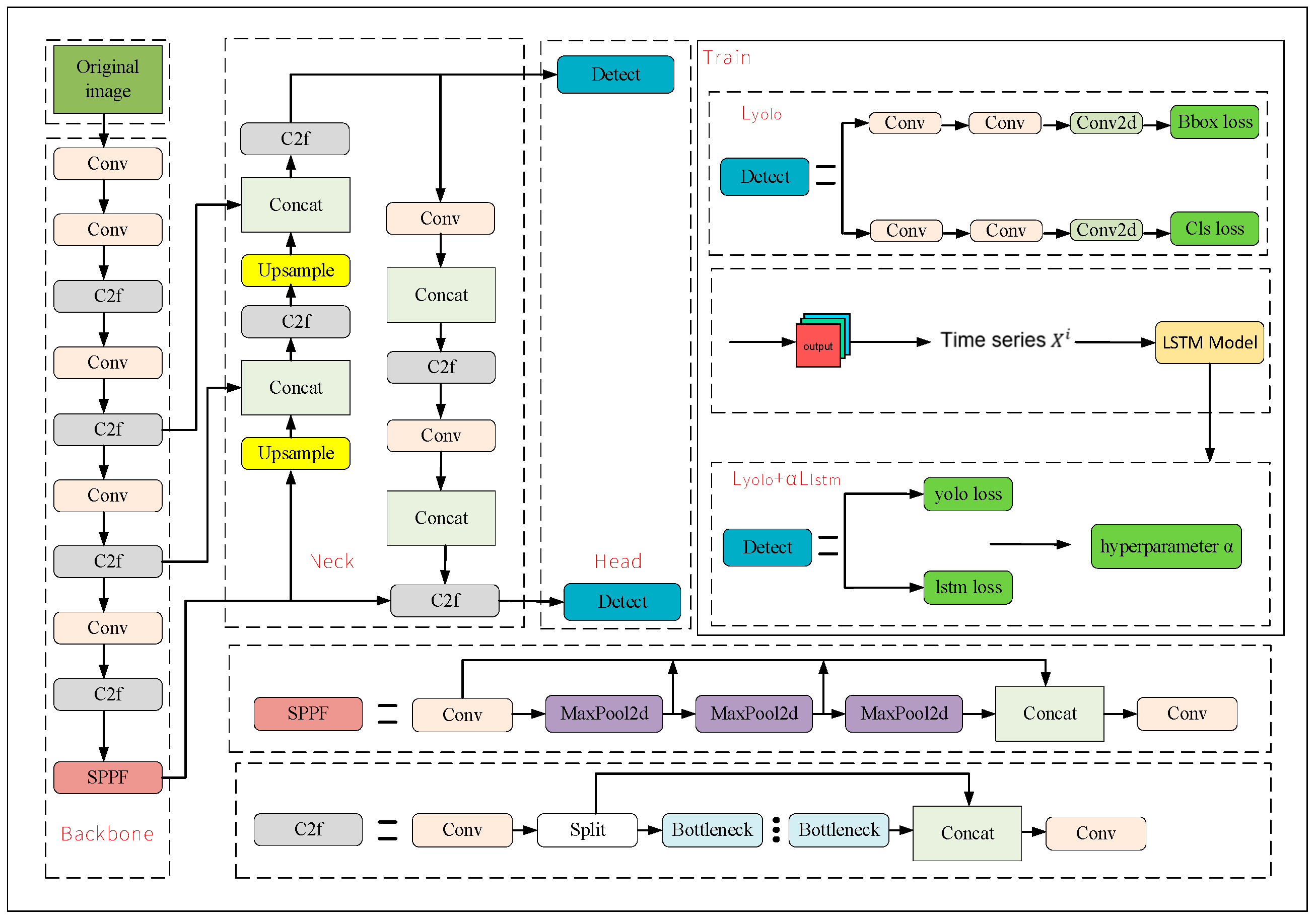

Figure 1 shows the feature map processing pipeline of YOLOv8s.

When using YOLOv8s for frame-by-frame drone tracking, each frame is treated as an independent image, with YOLOv8s detecting the position of the drone in every frame. However, relying solely on per-frame detection can lead to inconsistent detections of the same drone across frames and to reduced accuracy under occlusion or changes in lighting.

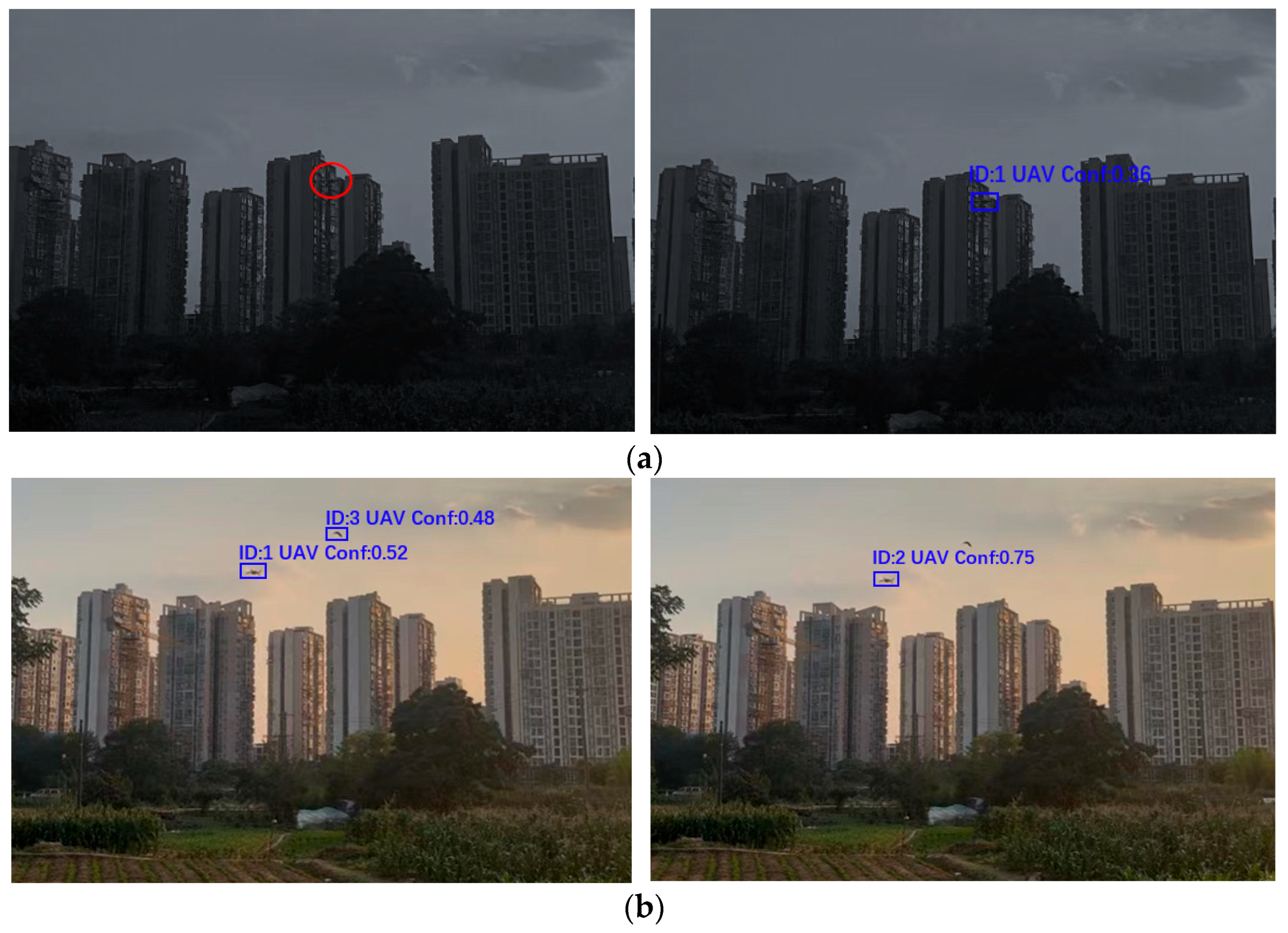

In target tracking, if a frame is lost, the information for that frame is also lost, making it impossible to accurately account for the motion of the target during that interval. This can result in considerable discrepancies between the predicted and actual target positions, thereby affecting subsequent detection and association. Since real-time drone detection relies on continuous tracking, even if a target is detected in the next frame, the previously established tracking trajectory may be interrupted owing to the missing frame. As shown in Figure 2, the YOLOv8s algorithm is combined with a binocular camera for UAV detection, highlighting frame loss. Figure 2a shows that the YOLOv8s algorithm successfully identifies the drone but with a low confidence, while Figure 2b shows that the drone is not detected, thereby indicating frame loss. To address this problem, this study proposes a YOLOv8s-LSTM target-tracking model that enhances drone detection performance and mitigates tracking failure caused by video frame loss.

2.3. YOLOv8s-LSTM Algorithm

The optimized YOLOv8s-LSTM model proposed in this paper provides an innovative approach to addressing the aforementioned issues. First, YOLOv8s is used to perform single-frame target detection on each frame of the video, accurately locating the positions of drones (and interfering targets) and outputting the coordinates of the detection boxes. Subsequently, the coordinates from consecutive frames are concatenated into a time series in chronological order and input into the LSTM model.

Its architecture is illustrated in Figure 3. Compared with the original YOLOv8 [9], the core innovations focus on three aspects to break through the performance bottleneck of single-frame detection in complex scenarios:

(1) Enhancement of backbone and neck networks: On the basis of inheriting the efficient Cross Stage Partial (C2f) module and multi-scale feature fusion strategy of YOLOv8, this framework introduces a hierarchical progressive feature refinement mechanism with the “Convolution (Conv) + C2f” module group as the core. Through multiple rounds of feature transmission and refinement, the spatial feature fidelity of tiny UAV targets is improved.

(2) Temporal modeling module: The proposed architecture embeds an LSTM-based time-series processing branch. After the detection head of YOLOv8 outputs the bounding box coordinates of consecutive frames, these coordinates are aggregated into a time series and input into the LSTM model. This enables the model to learn the target motion patterns and overcomes the defect that YOLOv8 cannot model inter-frame dependencies.

(3) Optimization of training processes: In the training phase, the “Train” sub-module adopts a strategy of dual-path parallel “Conv + C2f” streams to enhance feature diversity, a design not available in the single-branch training of YOLOv8.

Thus, by combining the single-frame detection accuracy of YOLOv8s with the temporal sequence modeling capability of LSTM, efficient tracking of drones in complex scenarios is achieved and the occurrence of target loss is effectively reduced.

A sequence of consecutive video frames $\{F_1, F_2, \dots, F_T\}$, where $T$ denotes the total number of frames in the video or real-time surveillance footage, is processed using YOLOv8s. Each frame $F_t$ ($t = 1, 2, \dots, T$) is input into the YOLOv8s model for detection.

After processing frame $F_t$, YOLOv8s generates a set of detection boxes. For each detected object, the detection box information is represented by the vector $d_t = (x_1, y_1, x_2, y_2, c, p_1, \dots, p_C)$.

Here, $(x_1, y_1)$ and $(x_2, y_2)$ represent the coordinates of the top-left and bottom-right corners of the detection box, respectively; $c$ is the confidence score indicating the presence of an object within the detection box; and $p_j$ is the probability that the object in the detection box belongs to class $j$, where $C$ denotes the total number of object classes.

To utilize this information for UAV localization using LSTM, we focus on the coordinate data from the detection boxes. The coordinate components are extracted as $B_t^{i}$, where $i$ denotes the $i$th UAV target. The ground truth coordinates for each UAV target are denoted as $B_t^{i,\mathrm{gt}}$.

For a specific target, the coordinate information from consecutive frames is organized into a time series $X^{i} = (B_1^{i}, B_2^{i}, \dots, B_T^{i})$, whose elements serve as the input to the LSTM [20].
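One way to turn a target's per-frame boxes into training samples for a sequence model is a sliding window, sketched below. The window length, the helper name `make_sequences`, and the synthetic track are assumptions for illustration; the source does not specify how the time series is segmented.

```python
def make_sequences(track, window):
    """Split one target's per-frame boxes into (history, next box) pairs.

    track  -- list of boxes ordered by frame index
    window -- number of past frames given to the sequence model
    """
    pairs = []
    for start in range(len(track) - window):
        history = track[start:start + window]   # frames start .. start+window-1
        target = track[start + window]          # the frame that follows
        pairs.append((history, target))
    return pairs


# Synthetic track: a box drifting right and down by a fixed step per frame.
track = [(10 + 2 * t, 20 + t, 30 + 2 * t, 40 + t) for t in range(6)]
pairs = make_sequences(track, window=4)
for history, target in pairs:
    print(history[-1], "->", target)
```

Each pair couples a short history of box coordinates with the box observed in the following frame, which matches the next-frame prediction task described for the LSTM branch.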

When processing time-series data, the LSTM unit maintains the cell state $C_t$ and hidden state $h_t$. At each time step $t$, the inputs to the LSTM include the present input $x_t$, the hidden state from the last time step $h_{t-1}$, and the prior cell state $C_{t-1}$.

The LSTM algorithm centers on its cell state and three gating mechanisms: the input, forget, and output gates. These mechanisms enable LSTM to effectively learn long-term dependencies while mitigating the vanishing or exploding gradient problems associated with conventional RNNs.

The cell state is a key component of the LSTM that determines the data storage, as expressed in Equation (6):

$$
C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{6}
$$

where $\odot$ represents element-wise multiplication; $C_t$ denotes the updated cell state at the current time step, integrating the outputs of the forget gate ($f_t$) and input gate ($i_t$); $\tilde{C}_t$ is the candidate cell state generated at the current time step; and $C_{t-1}$ is the cell state from the previous time step.

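The input gate determines the new information stored in the cell state, as expressed in Equation (7):

$$
i_t = \sigma\left(W_i [h_{t-1}, x_t] + b_i\right), \qquad \tilde{C}_t = \tanh\left(W_C [h_{t-1}, x_t] + b_C\right) \tag{7}
$$

where $i_t$ represents the output of the input gate; $\tilde{C}_t$ the candidate cell state; $W_i$ and $W_C$ the weight matrices for the input gate and candidate cell state, respectively; $b_i$ and $b_C$ the corresponding bias terms; and $\tanh$ the hyperbolic tangent function with an output range of −1 to 1.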

The forget gate determines the information to be discarded from the cell state, as expressed in Equation (8):

$$
f_t = \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \tag{8}
$$

where $f_t$ represents the output of the forget gate; $W_f$ the weight matrix of the forget gate; $b_f$ the bias term of the forget gate; $\sigma$ the sigmoid function with an output range of 0 to 1; $h_{t-1}$ the hidden state from the previous time step; and $x_t$ the input at the current time step.

The output gate determines which parts of the cell state are passed as outputs to the next time step, as expressed in Equation (9):

$$
o_t = \sigma\left(W_o [h_{t-1}, x_t] + b_o\right), \qquad h_t = o_t \odot \tanh(C_t) \tag{9}
$$

where $W_o$ represents the weight matrix of the output gate and $b_o$ the bias term of the output gate. The gate output $o_t$ determines how much information from the current cell state $C_t$ is output to the hidden state $h_t$.

Equation (10) expresses how the hidden state $h_t$, obtained from the LSTM at the current time step, is used to predict the bounding box information of the target in the next frame:

$$
\hat{B}_{t+1} = W_{fc} h_t + b_{fc} \tag{10}
$$

where $\hat{B}_{t+1}$ is the predicted bounding box coordinates of the target in the next frame, $W_{fc}$ represents the weight matrix of the fully connected layer, and $b_{fc}$ the bias vector.
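The gate computations and the final linear prediction can be traced in a small pure-Python sketch. It follows the standard LSTM formulation (forget, input, and output gates acting on the concatenation of the previous hidden state and the current input) and is not the authors' implementation; the hidden size of 8, the random initial weights, and all function names are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W, b, v):
    """Return W v + b, with W stored as a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step; returns the new hidden state and cell state."""
    z = h_prev + x_t  # list concatenation, i.e. [h_{t-1}, x_t]
    f_t = [sigmoid(a) for a in affine(p["W_f"], p["b_f"], z)]    # forget gate
    i_t = [sigmoid(a) for a in affine(p["W_i"], p["b_i"], z)]    # input gate
    g_t = [math.tanh(a) for a in affine(p["W_c"], p["b_c"], z)]  # candidate state
    o_t = [sigmoid(a) for a in affine(p["W_o"], p["b_o"], z)]    # output gate
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, g_t)]
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]
    return h_t, c_t


def init_params(input_size, hidden_size, seed=0):
    """Small random weights; untrained, for shape checking only."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    p = {}
    for gate in "fico":
        p["W_" + gate] = mat(hidden_size, hidden_size + input_size)
        p["b_" + gate] = [0.0] * hidden_size
    p["W_fc"] = mat(input_size, hidden_size)  # fully connected output layer
    p["b_fc"] = [0.0] * input_size
    return p


def predict_next_box(sequence, p, hidden_size):
    """Run the LSTM over a box sequence and map the last hidden state to a box."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x_t in sequence:
        h, c = lstm_step(list(x_t), h, c, p)
    return affine(p["W_fc"], p["b_fc"], h)


params = init_params(input_size=4, hidden_size=8)
sequence = [(0.10, 0.20, 0.30, 0.40), (0.12, 0.21, 0.32, 0.41), (0.14, 0.22, 0.34, 0.42)]
next_box = predict_next_box(sequence, params, hidden_size=8)
print(next_box)
```

With untrained weights the output is meaningless as a prediction; the sketch only shows how the cell state carries information across frames and how the last hidden state is projected to four box coordinates.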

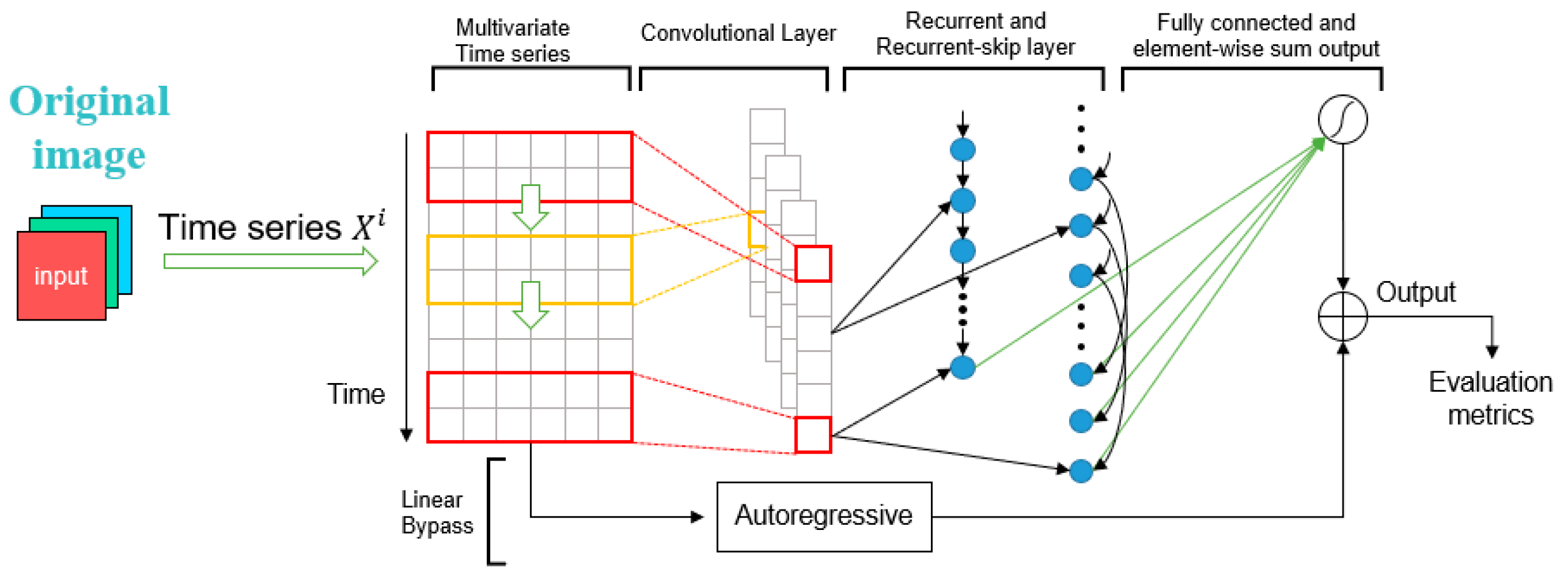

Figure 4 shows the enhanced YOLOv8s-LSTM architecture for multivariate time-series prediction, which improves the forecasting performance by integrating convolutional layers, recurrent units, and an autoregressive component.

2.4. Loss Function Model

The loss function is vital in the integrated YOLOv8s and LSTM models as it gauges the difference between model predictions and ground truth labels, thereby guiding parameter updates during training [38]. As expressed in Equation (11), the combined loss function $L_{\mathrm{total}}$ of the model comprises two components: the detection loss $L_{\mathrm{YOLO}}$ from YOLOv8s and the prediction loss $L_{\mathrm{LSTM}}$ from LSTM, scaled by a hyperparameter $\lambda$ to control their contributions.

$$
L_{\mathrm{total}} = L_{\mathrm{YOLO}} + \lambda L_{\mathrm{LSTM}} \tag{11}
$$

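The YOLOv8s detection loss, as expressed in Equation (12), comprises two components: the bounding-box regression loss $L_{\mathrm{box}}$ and the confidence loss $L_{\mathrm{conf}}$:

$$
L_{\mathrm{YOLO}} = L_{\mathrm{box}} + L_{\mathrm{conf}} \tag{12}
$$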

The bounding box regression loss employs complete intersection over union (CIoU), an enhanced IoU metric that takes into account not only the overlap between predicted and ground truth boxes, but also the center point distance and aspect ratio. $L_{\mathrm{box}}$ is expressed by Equation (13) as follows:

$$
L_{\mathrm{box}} = \frac{1}{N} \sum_{i=1}^{N} \left(1 - \mathrm{CIoU}\left(B_i^{\mathrm{gt}}, B_i^{\mathrm{pred}}\right)\right) \tag{13}
$$

where $B_i^{\mathrm{gt}}$ denotes the ground-truth bounding box; $B_i^{\mathrm{pred}}$ the predicted bounding box; and $N$ the number of bounding boxes involved in the loss computation within a batch.

The equation sums the individual regression losses computed for each bounding box and divides the result by the total number of bounding boxes to obtain the average bounding box regression loss $L_{\mathrm{box}}$. This guides the adjustment of the bounding box prediction parameters during model training.

The confidence loss is computed using the binary cross-entropy loss function, which measures the difference between the predictions of the model and the ground truth labels. The confidence loss is expressed by Equation (14) as follows:

$$
L_{\mathrm{conf}} = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right] \tag{14}
$$

where $N$ denotes the number of samples; $y_i$ the ground-truth label indicating whether an object is present in the detection box; and $\hat{y}_i$ the predicted confidence score.

This equation computes the overall confidence loss by averaging the confidence loss across all detection boxes to optimize the confidence prediction of the detection boxes during model training.

As expressed in Equation (15), the prediction loss of LSTM is calculated using the mean squared error loss, which measures the difference between the predicted bounding box information and the ground truth. The LSTM model parameters are optimized by minimizing the loss function as follows:

$$
L_{\mathrm{LSTM}} = \frac{1}{M} \sum_{k=1}^{M} \left\lVert \hat{B}_k - B_k^{\mathrm{gt}} \right\rVert_2^2 \tag{15}
$$

where $M$ denotes the number of time series samples used to train the LSTM; $\hat{B}_k$ the predicted bounding box; $B_k^{\mathrm{gt}}$ the ground truth bounding box; and $\lVert \cdot \rVert_2^2$ the squared $L_2$-norm that measures the distance between $\hat{B}_k$ and $B_k^{\mathrm{gt}}$, quantifying the magnitude of their difference.

The formula computes the overall loss by averaging the distances between the predicted and ground truth values at each time step.
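The three loss terms can be combined in a compact sketch. The CIoU term follows the widely used definition (IoU minus a normalized center-distance penalty minus an aspect-ratio penalty). The way the terms are weighted, with a single factor `lam` on the LSTM term, is an assumption, since the source only states that a hyperparameter scales the contributions. All function names and inputs are hypothetical.

```python
import math


def ciou(pred, gt, eps=1e-9):
    """Complete IoU of two boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = pred
    gx1, gy1, gx2, gy2 = gt
    iw = max(0.0, min(px2, gx2) - max(px1, gx1))
    ih = max(0.0, min(py2, gy2) - max(py1, gy1))
    inter = iw * ih
    pw, ph = px2 - px1, py2 - py1
    gw, gh = gx2 - gx1, gy2 - gy1
    iou = inter / (pw * ph + gw * gh - inter + eps)
    # Squared distance between box centers.
    rho2 = ((px1 + px2 - gx1 - gx2) ** 2 + (py1 + py2 - gy1 - gy2) ** 2) / 4.0
    # Squared diagonal of the smallest box enclosing both.
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency term and its trade-off weight.
    v = (4.0 / math.pi ** 2) * (math.atan(gw / (gh + eps)) - math.atan(pw / (ph + eps))) ** 2
    alpha = v / (1.0 - iou + v + eps)
    return iou - rho2 / c2 - alpha * v


def bce(labels, probs, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped away from 0 and 1."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


def mse(pred_boxes, gt_boxes):
    """Mean squared L2 distance between predicted and true box vectors."""
    total = 0.0
    for p, g in zip(pred_boxes, gt_boxes):
        total += sum((a - b) ** 2 for a, b in zip(p, g))
    return total / len(pred_boxes)


def total_loss(det_pred, det_gt, labels, probs, seq_pred, seq_gt, lam=1.0):
    """Detection loss (box + confidence) plus lam times the sequence loss."""
    box = sum(1.0 - ciou(p, g) for p, g in zip(det_pred, det_gt)) / len(det_pred)
    return box + bce(labels, probs) + lam * mse(seq_pred, seq_gt)


loss = total_loss(
    det_pred=[(0, 0, 2, 2)], det_gt=[(1, 1, 3, 3)],
    labels=[1, 0], probs=[0.6, 0.4],
    seq_pred=[(0, 0, 2, 2)], seq_gt=[(1, 1, 3, 3)],
    lam=0.5,
)
print(round(loss, 4))
```

Lowering `lam` makes training favor single-frame detection quality, while raising it puts more weight on next-frame prediction accuracy.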

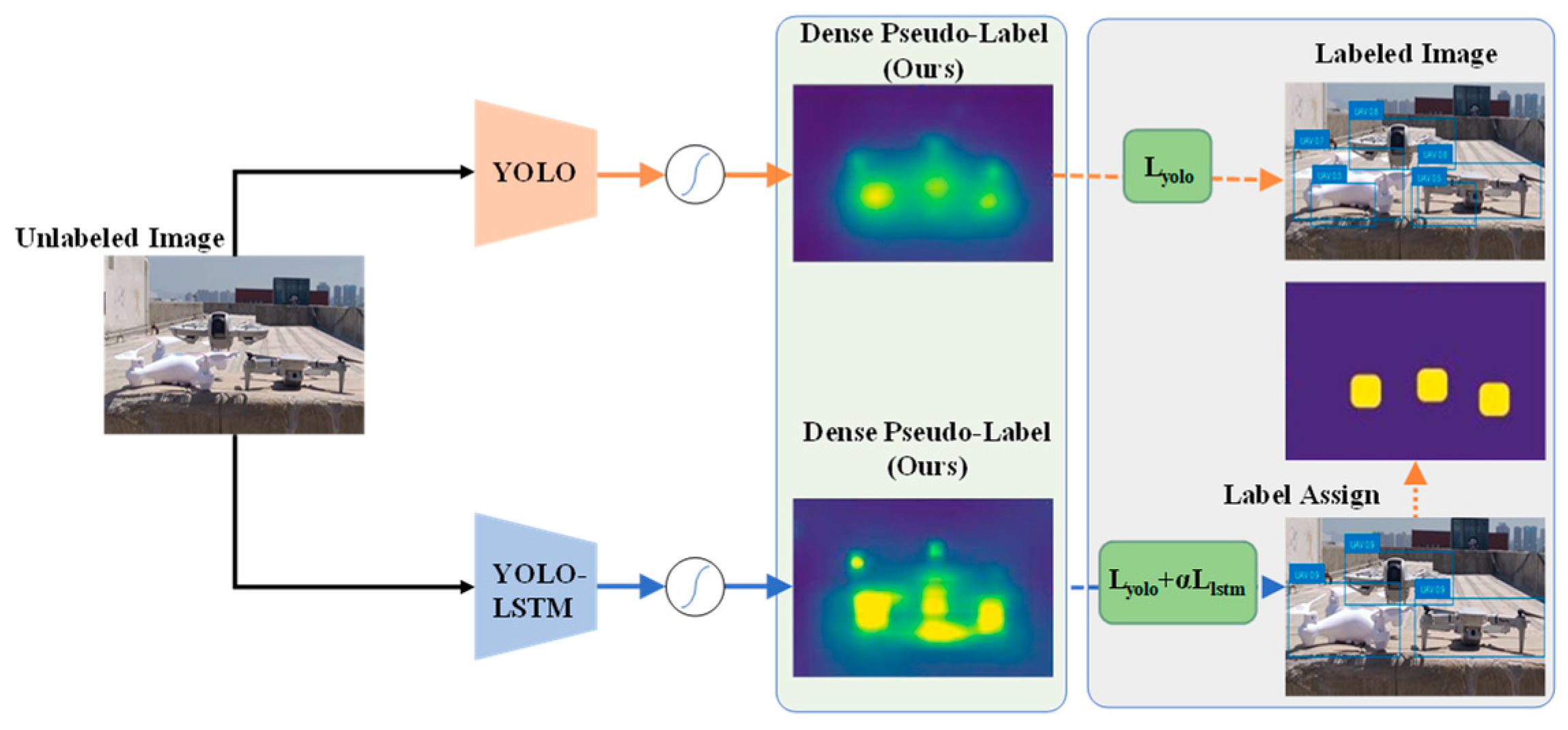

Figure 5 illustrates the workflow of the integrated YOLO-LSTM object-detection framework, which combines the YOLO detector with a temporal LSTM model and employs a pseudo-labeling mechanism to enhance supervision and object detection.

2.5. Adam Optimizer

The Adam optimizer merges the benefits of momentum and adaptive learning rates [

24]. It computes individualized learning rates for different parameters, enabling rapid updates and fast convergence during the initial training phases, while providing stable and controlled adjustments later to prevent overshooting due to overly large learning rates. In the YOLOv8s-LSTM framework, accurately learning spatial features via YOLOv8s and temporal sequence features via LSTM is critical. The adaptively optimized step size and direction allow the model to efficiently converge toward an optimal parameter set within a short training period.

The first-order moment estimation is defined in Equation (16) as follows:

$$
m_t = \beta_1 m_{t-1} + (1 - \beta_1) g_t \tag{16}
$$

where $m_t$ denotes the first-order moment estimate; $\beta_1$ the decay rate for the first-order moment, generally close to 1; $m_{t-1}$ the first-order moment estimate from the previous iteration; and $g_t$ the gradient of the loss function with respect to parameter $\theta$.

The second-order moment estimation is expressed by Equation (17) as follows:

$$
v_t = \beta_2 v_{t-1} + (1 - \beta_2) g_t^2 \tag{17}
$$

where $v_t$ denotes the second-order moment estimate; $\beta_2$ the second-order moment decay rate; $v_{t-1}$ the second-order moment estimate from the previous iteration; and $g_t^2$ the squared gradient of the loss function $L$ with respect to parameter $\theta$.

Because the initial values of the first-order moment estimate $m_0$ and second-order moment estimate $v_0$ are generally set to zero vectors, both estimates are biased toward zero in the early iterations. Hence, bias correction is necessary.

The bias-corrected first-order moment estimate is expressed in Equation (18) as follows:

$$
\hat{m}_t = \frac{m_t}{1 - \beta_1^{t}} \tag{18}
$$

The bias-corrected second-order moment estimate is expressed using Equation (19) as follows:

$$
\hat{v}_t = \frac{v_t}{1 - \beta_2^{t}} \tag{19}
$$

where $t$ denotes the iteration step. As the number of iterations increases, the effect of the bias correction gradually decreases.

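Equation (20) expresses the update of the model parameters $\theta$ using the bias-corrected first-order and second-order moment estimates as follows:

$$
\theta_t = \theta_{t-1} - \frac{\eta}{\sqrt{\hat{v}_t} + \epsilon} \hat{m}_t \tag{20}
$$

where $\eta$ denotes the learning rate and $\epsilon$ a small constant that prevents division by zero.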

Using these steps, the Adam optimizer iteratively updates the model parameter using the gradient of the loss function, thereby minimizing the total loss function and optimizing the YOLOv8s-LSTM model.
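The update steps can be followed on a toy problem. The sketch below applies the standard Adam recurrences (moment estimates, bias correction, parameter update) to a simple quadratic objective; the objective, the learning rate of 0.05, and the iteration count are illustrative choices and are unrelated to the training configuration of the paper.

```python
import math


def adam_step(theta, grad, m, v, t, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a list of parameters.

    t is the 1-based iteration counter used for bias correction.
    Returns the updated parameters and moment estimates.
    """
    new_theta, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(theta, grad, m, v):
        m_i = beta1 * m_i + (1 - beta1) * g        # first-order moment
        v_i = beta2 * v_i + (1 - beta2) * g * g    # second-order moment
        m_hat = m_i / (1 - beta1 ** t)             # bias-corrected moments
        v_hat = v_i / (1 - beta2 ** t)
        new_theta.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_theta, new_m, new_v


# Toy objective: f(theta) = sum((theta_k - target_k)^2), gradient 2 * (theta - target).
target = [3.0, -2.0]
theta = [0.0, 0.0]
m = [0.0, 0.0]
v = [0.0, 0.0]
for t in range(1, 2001):
    grad = [2.0 * (p - q) for p, q in zip(theta, target)]
    theta, m, v = adam_step(theta, grad, m, v, t, lr=0.05)
print([round(p, 3) for p in theta])
```

At the first iteration the bias correction makes the step size approximately equal to the learning rate regardless of the gradient magnitude, which illustrates why the correction matters while the moment estimates are still close to zero.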

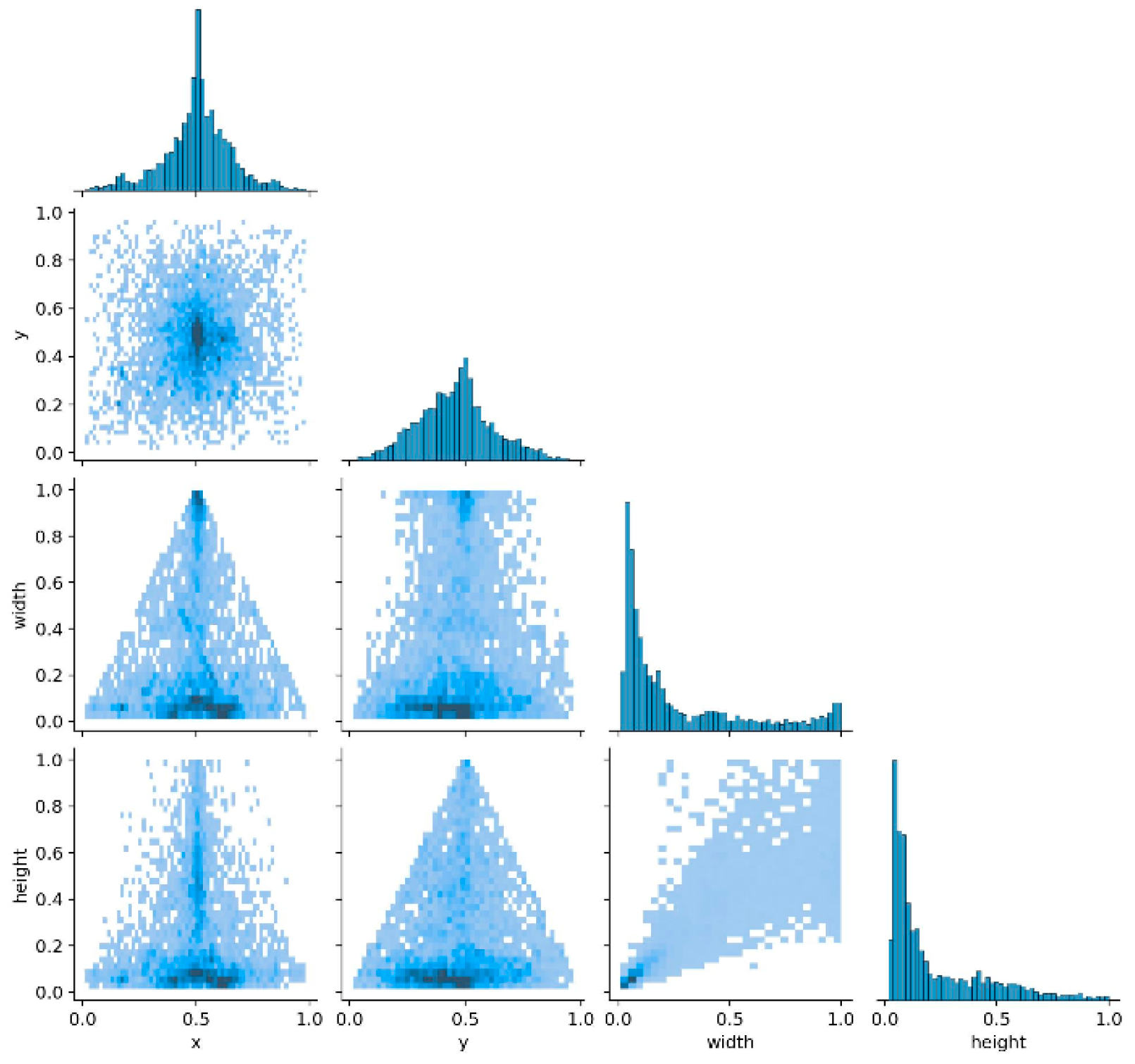

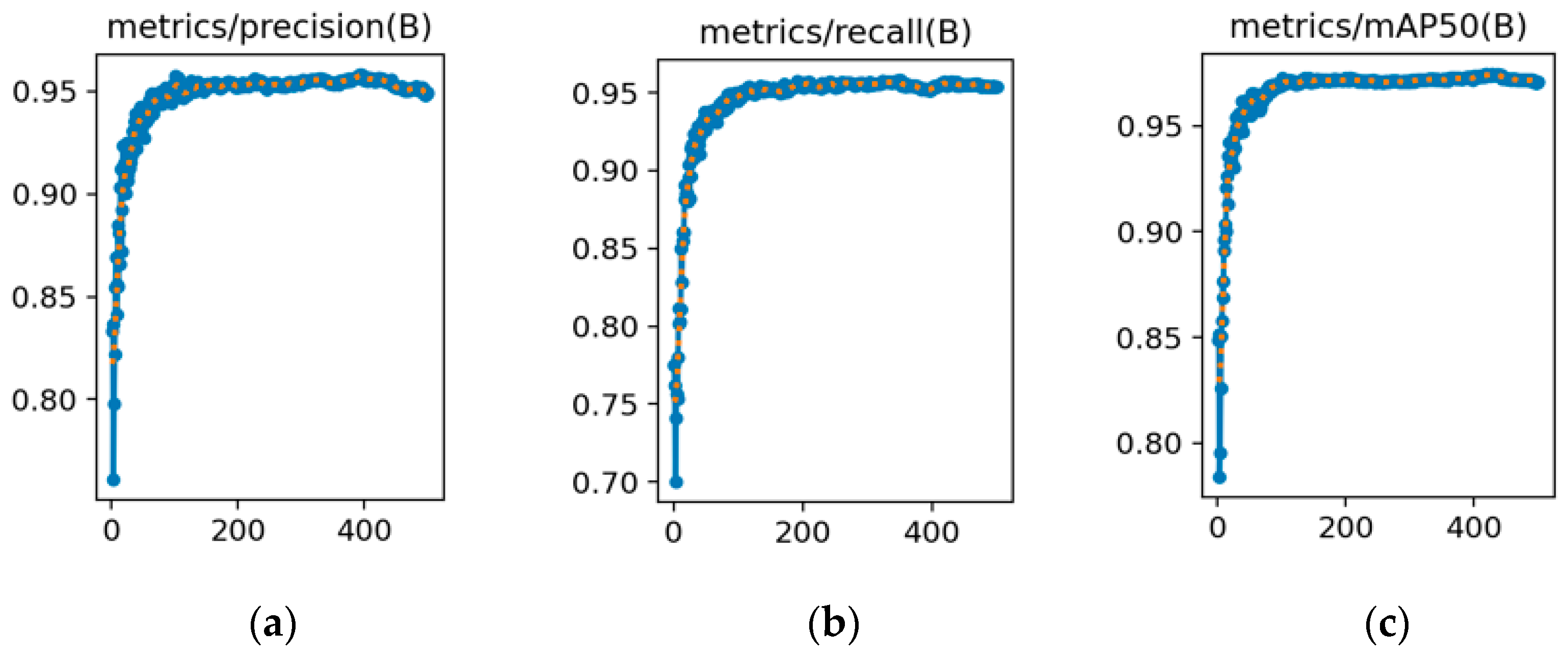

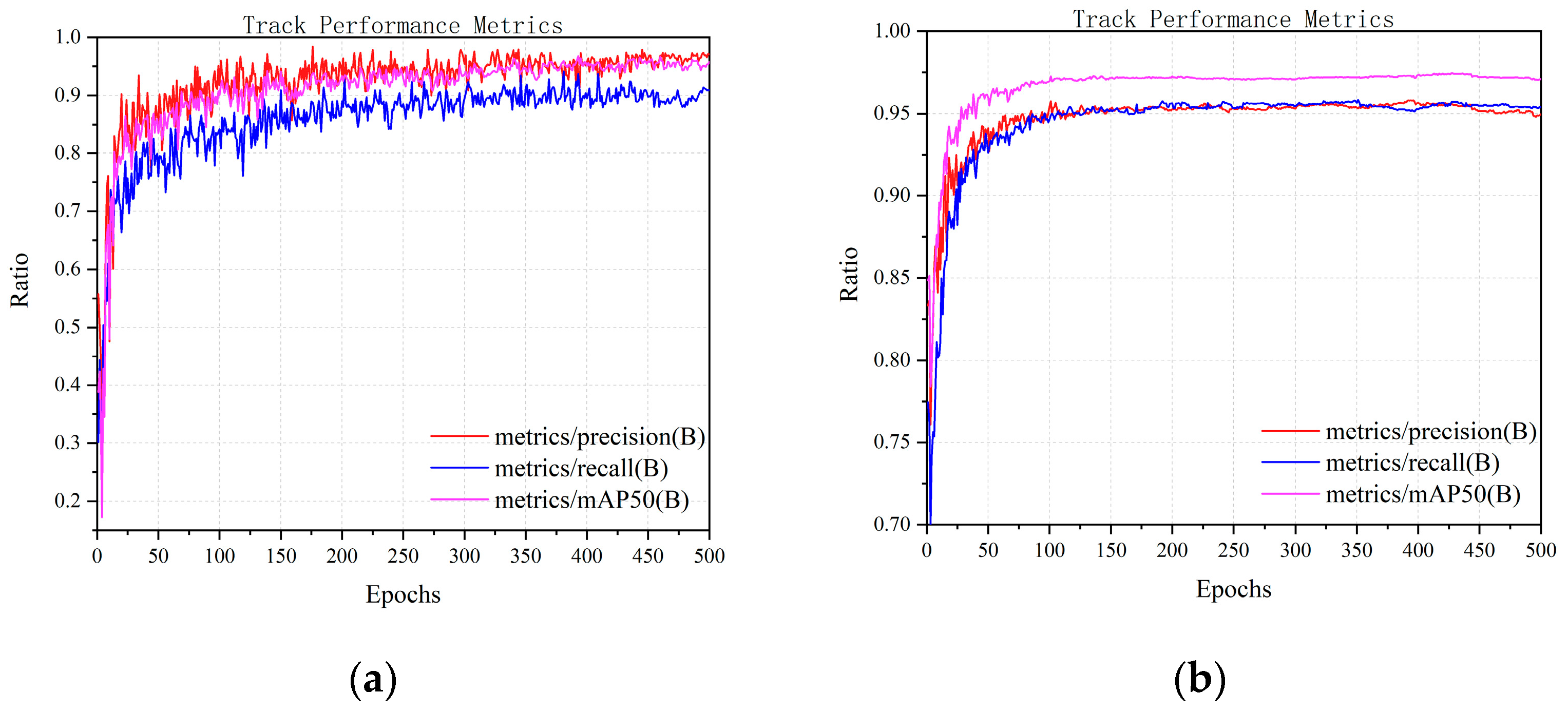

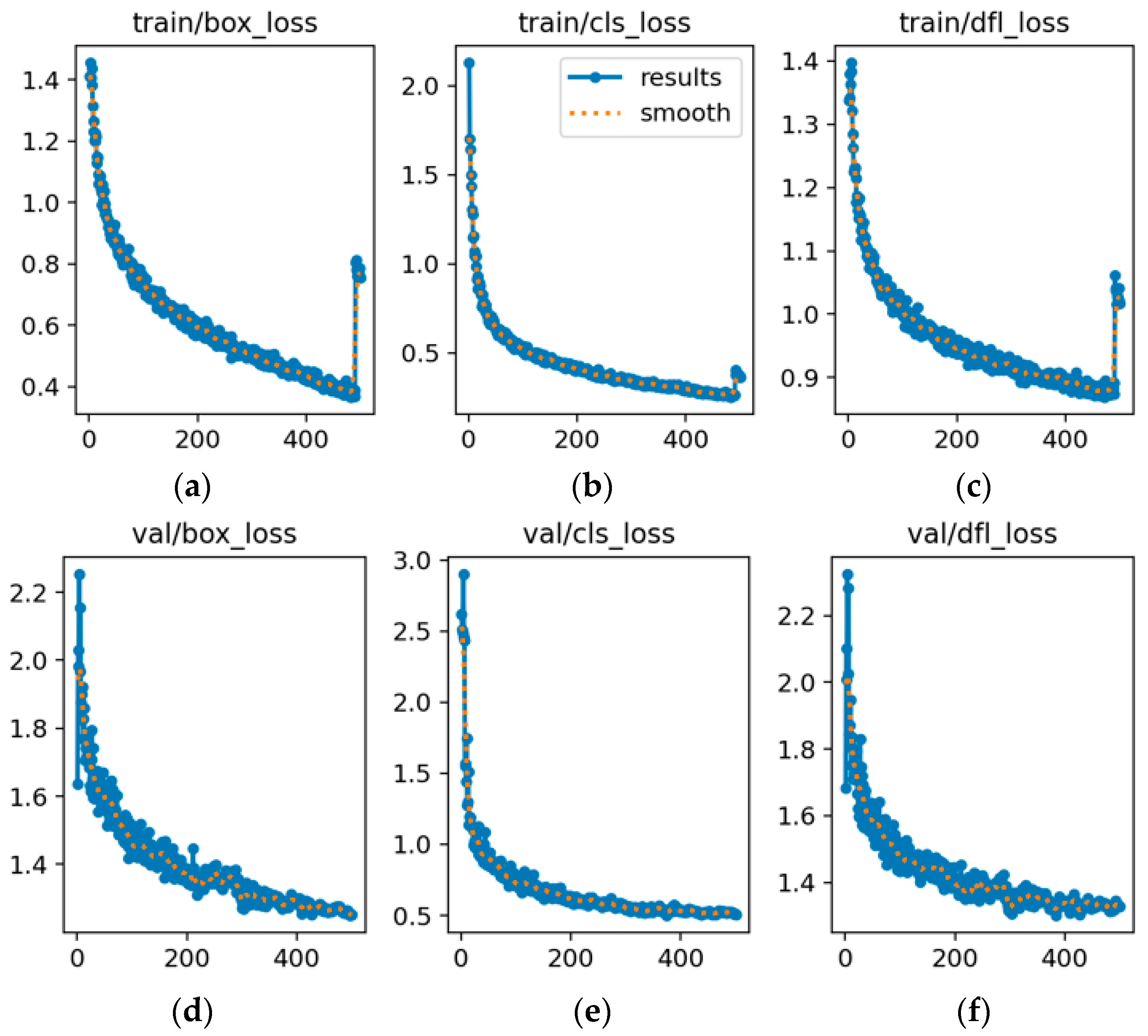

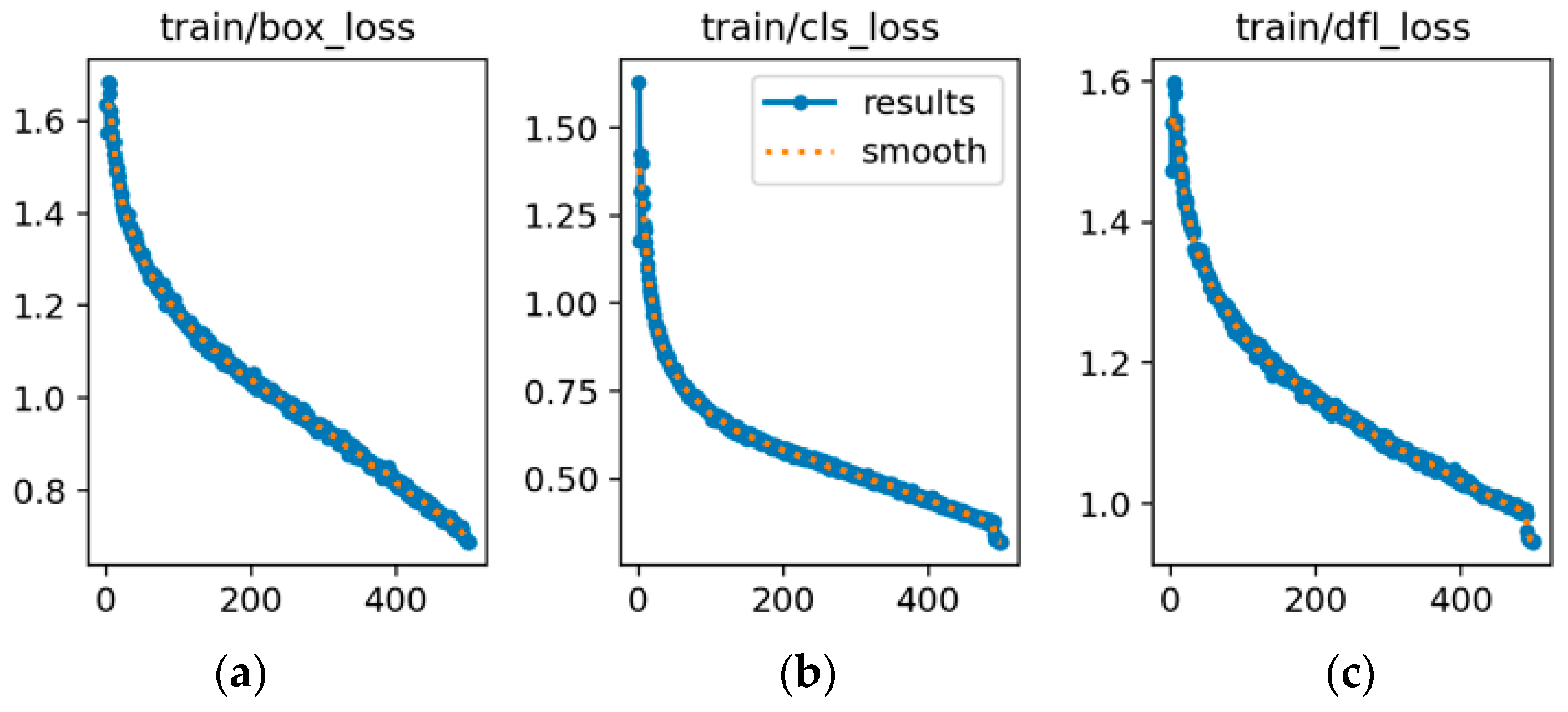

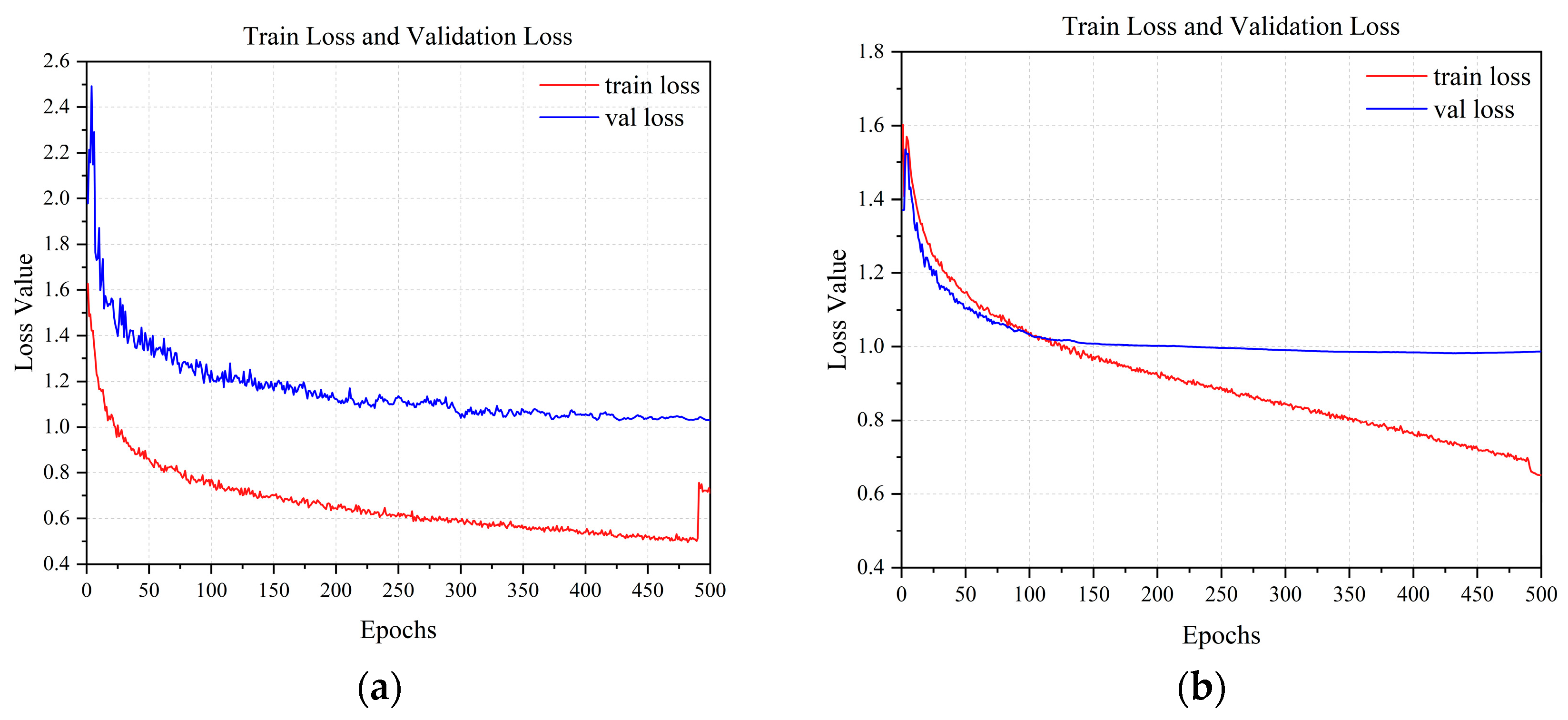

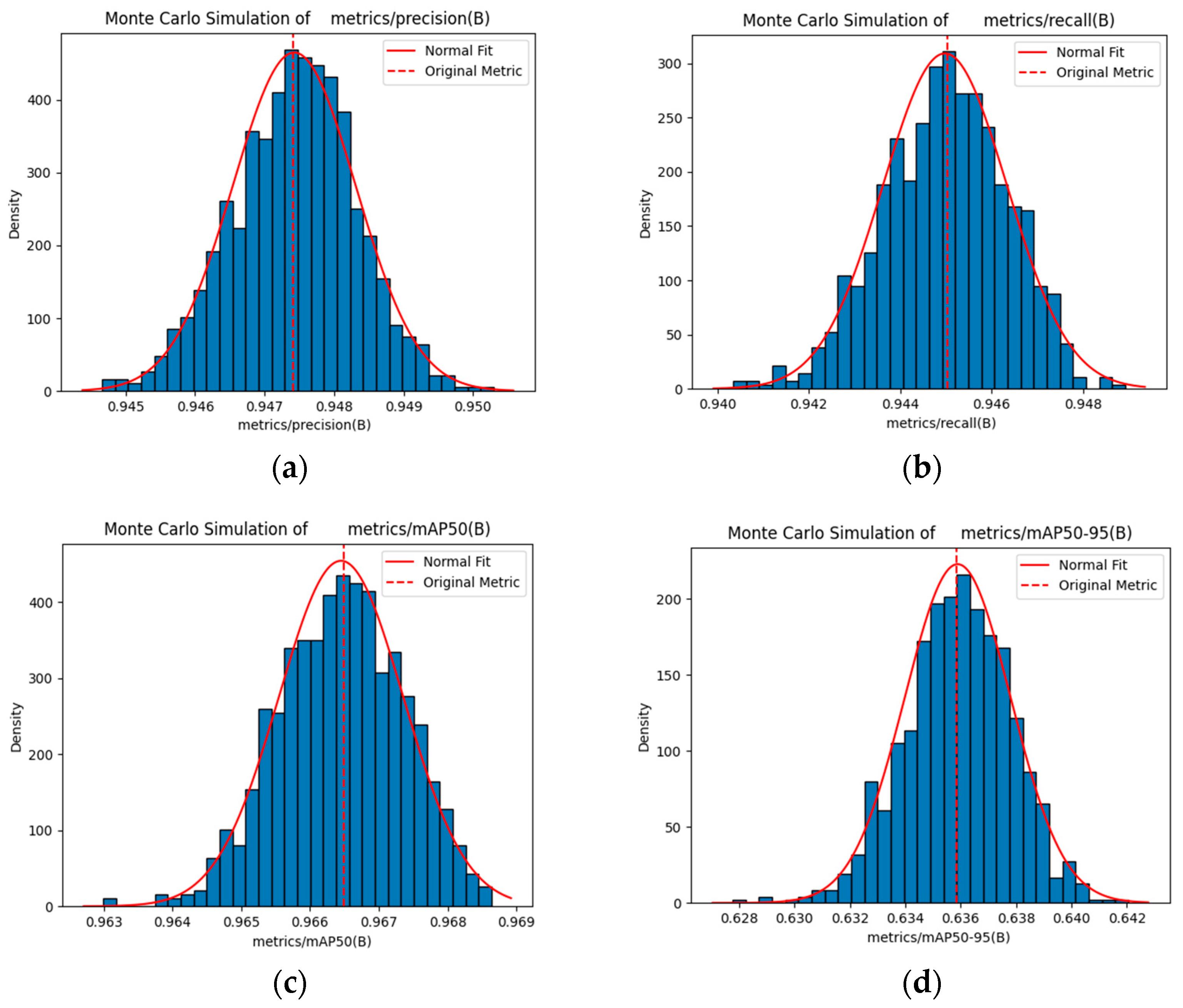

Integrating LSTM with YOLOv8s offers considerable advantages for object detection and localization. An analysis of the object parameter distributions shows that the model adapts well to position and scale variations, effectively managing diverse scenarios. Evaluations using key metrics—mAP50, mAP50-95, precision, and recall—demonstrated considerable improvements in the detection accuracy and object capture capability. Loss analysis further confirmed that the model converged faster and was more stable during training. The Monte Carlo sampling results verified that the model maintains stable performance and high accuracy under different conditions.

4. Conclusions and Future Works

The tracking data were visualized by analyzing the distribution and correlation of the target parameters, including the position (X and Y coordinates), scale (width, height), and category. This provides an intuitive representation of the input data for the model. By optimizing the structure, modifying the loss model during training, and incorporating the Adam optimizer, the results show that integrating the LSTM with the YOLOv8s model considerably enhances adaptability to target locations and scale, enabling the system to manage a broader and more diverse range of detection scenarios.

We offer a thorough analysis of the UAV detection metrics, including key metrics such as mAP50, mAP50-95, precision, and recall. These metrics measure the detection accuracy and target capturing of the model from different perspectives. Furthermore, detailed analysis of the training and validation losses revealed that the integration of LSTM with the YOLOv8s model offers notable advantages in terms of faster loss convergence and improved final stability, thereby highlighting the high training efficiency and stability of the model. When deployed on the RK3588-A embedded platform, the model benefits from the platform’s 6 TOPS NPU acceleration, leading to reduced computational complexity and improved inference speed.

To further validate the reliability of the UAV detection metrics, 1000 sampling iterations were conducted using the Monte Carlo method. Analysis of the average, standard deviation, and 95% confidence interval of the sampled metrics shows that the integrated LSTM and YOLOv8s model maintains stable performance and provides high accuracy under different sampling conditions. Notably, on the RK3588-A platform, these metrics demonstrate consistent stability even in high-density UAV scenarios, with FPS fluctuations within 5% and positioning errors controlled within the 95% confidence interval.
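The summary statistics named above can be computed with a resampling sketch such as the one below. It assumes that each Monte Carlo iteration draws a random sample with replacement from a set of per-image metric values (a bootstrap); the source does not describe the exact sampling scheme, and the scores used here are synthetic.

```python
import random
import statistics


def monte_carlo_summary(per_sample_scores, n_iterations=1000, seed=0):
    """Resample the scores with replacement and summarize the resampled means.

    Returns the mean, standard deviation, and a percentile-based 95%
    confidence interval of the metric across iterations.
    """
    rng = random.Random(seed)
    n = len(per_sample_scores)
    means = []
    for _ in range(n_iterations):
        resample = [per_sample_scores[rng.randrange(n)] for _ in range(n)]
        means.append(statistics.mean(resample))
    means.sort()
    lower = means[int(0.025 * n_iterations)]       # 2.5th percentile
    upper = means[int(0.975 * n_iterations) - 1]   # 97.5th percentile
    return {
        "mean": statistics.mean(means),
        "std": statistics.stdev(means),
        "ci95": (lower, upper),
    }


# Synthetic per-image precision values between 0.80 and 0.89.
scores = [0.80 + 0.01 * (i % 10) for i in range(50)]
summary = monte_carlo_summary(scores)
print(summary)
```

A narrow interval and a small standard deviation across iterations indicate that the reported metric does not depend strongly on which samples happen to be evaluated.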

The comprehensive analyses, including real-world deployment on the RK3588-A platform, demonstrate that the integration of LSTM with YOLOv8s models offers considerable advantages in terms of tracking and localization performance, with tangible improvements in real-time inference speed, computational efficiency, and positioning precision. This result fully highlights the strong application potential of the integrated model in edge computing scenarios, especially suitable for low-altitude airspace management systems built on the RK3588-A embedded platform, and also indicates that it possesses prominent practical value in airspace management and anti-UAV operations.