To rigorously validate the efficacy of the proposed adaptive deep ensemble learning (ADEL) framework, comprehensive experiments are conducted against the following two categories of benchmark methods: state-of-the-art deep learning architectures for signal identification and advanced ensemble learning methodologies. This comparative analysis quantitatively demonstrates ADEL’s superiority under data scarcity and class imbalance conditions.

3.1. Experiment Setup and Evaluation Criteria

Deep learning architectures for RF signal processing demonstrate specialized innovations as follows: MCNet [50] leverages asymmetric convolutions with skip-connections for multi-scale spatiotemporal feature extraction. PETCGDNN [51] integrates phase-aware correction within lightweight CNN–GRU hybrids for parameter-efficient modulation recognition. Adapted 1D CNN/VGG [52] enables temporal signal processing, while MSCANet [53] orchestrates multi-scale attention for noise robustness. CVCNN [40] preserves IQ signal coupling via complex-valued operations, maintaining quadrature relationships lost in real-valued networks. FFTMHA [54] reconstructs high-frequency components through Fourier-attention mechanisms, enabling joint spectral–temporal modelling via RNN feature extraction.

The deep ensemble learning methodologies include composite ensemble learning (CEL) [45]; an ensemble learning method with convolutional neural networks (CNNEL) [46]; two deep ensemble algorithms, hierarchical and multi-stage feature fusion (HMSFF) and cooperative decision (Co_decision) [47]; the deep ensemble learning method for SEI by multi-feature fusion [48], which feeds amplitude, phase and spectral asymmetry features into parallel CNNs and aggregates their predictions through output averaging; and the lightweight transformer-based network GLFormer [32].

To ensure a rigorous and fair comparison, all deep learning models in this study were trained under identical conditions using the Adam optimizer [55] with a fixed learning rate of 1.6 × 10⁻⁴. The training protocol consisted of 200 maximum epochs with an early stopping mechanism (patience = 10) to prevent overfitting, and a consistent batch size of 32 was maintained throughout. For hyperparameter optimization, we conducted a systematic grid search using Optuna, exploring the following parameter spaces: convolutional layer channels ranging from 32 to 128 in increments of 16, transformer attention heads selected from {4, 8, 16} and convolutional layers varying between 3 and 8. This standardized experimental design guarantees that any observed performance differences can be confidently attributed to architectural variations rather than training inconsistencies or parameter selection biases.
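The training protocol and search space described above can be written down compactly. The following is a minimal sketch in plain Python, not the authors' code: the dictionary keys, the `grid_points` helper and the `EarlyStopping` class are illustrative names, and monitoring the validation loss is an assumption, since the text does not state which quantity drives early stopping.

```python
from itertools import product

# Values stated in the text; the key names are illustrative.
TRAIN_CONFIG = {
    "optimizer": "adam",
    "learning_rate": 1.6e-4,
    "max_epochs": 200,
    "patience": 10,
    "batch_size": 32,
}

# Search space from the text: channels 32..128 in steps of 16,
# attention heads in {4, 8, 16}, and 3 to 8 convolutional layers.
SEARCH_SPACE = {
    "conv_channels": list(range(32, 129, 16)),
    "attention_heads": [4, 8, 16],
    "conv_layers": list(range(3, 9)),
}


def grid_points(space):
    """Enumerate every combination in the search space as a dict."""
    keys = list(space)
    return [dict(zip(keys, combo)) for combo in product(*(space[k] for k in keys))]


class EarlyStopping:
    """Stop once the monitored loss has not improved for `patience` consecutive epochs."""

    def __init__(self, patience):
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch; return True if training should stop."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

Under these ranges the full grid contains 7 × 3 × 6 = 126 candidate configurations, which gives a sense of the cost of an exhaustive search.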

The evaluation metrics in the experiments are accuracy, precision, recall and macro-F1. The macro-F1 assigns equal weight to each category, making it particularly suitable for scenarios with class imbalance.
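For reference, the standard definitions of these metrics can be written as follows. The per-class subscript i on the counts and the exact typesetting are assumptions based on the usual conventions, not a reproduction of the original equations.

```latex
\begin{align}
\mathrm{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, \\
\mathrm{Precision}_i &= \frac{TP_i}{TP_i + FP_i}, \\
\mathrm{Recall}_i &= \frac{TP_i}{TP_i + FN_i}, \\
F1_i &= \frac{2\,\mathrm{Precision}_i\,\mathrm{Recall}_i}{\mathrm{Precision}_i + \mathrm{Recall}_i},
\qquad
\text{macro-F1} = \frac{1}{C}\sum_{i=1}^{C} F1_i .
\end{align}
```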

where TP denotes the number of true positives, TN the number of true negatives, FP the number of false positives and FN the number of false negatives, C is the total number of classes and F1_i denotes the F1-score of the i-th class. Following Equations (8)–(10), precision, recall and F1-score are computed for each class. The overall dataset metrics represent the weighted average of these per-class values.
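To make the per-class computation concrete, the sketch below derives precision, recall, F1 and macro-F1 from a confusion matrix in plain Python. The function names and the row-is-true-class convention are assumptions for illustration; they are not taken from the paper.

```python
def per_class_metrics(confusion):
    """Return (precision, recall, f1) lists from a square confusion matrix.

    confusion[i][j] counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    precision, recall, f1 = [], [], []
    for i in range(n):
        tp = confusion[i][i]
        fp = sum(confusion[r][i] for r in range(n)) - tp  # predicted i, true class differs
        fn = sum(confusion[i]) - tp                       # true i, predicted otherwise
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        precision.append(p)
        recall.append(r)
        f1.append(f)
    return precision, recall, f1


def macro_f1(confusion):
    """Unweighted mean of the per-class F1 scores."""
    _, _, f1 = per_class_metrics(confusion)
    return sum(f1) / len(f1)


def accuracy(confusion):
    """Fraction of samples on the diagonal of the confusion matrix."""
    total = sum(sum(row) for row in confusion)
    return sum(confusion[i][i] for i in range(len(confusion))) / total
```

Because macro-F1 averages the per-class scores without weighting by class size, a poorly recognized minority class lowers it as much as a poorly recognized majority class would.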

3.2. Benchmarking Results

The benchmarking results cover the two categories of baselines, deep learning models and deep ensemble learning methods; their outcomes in terms of the evaluation metrics are given in Table 6. All experimental results are reported as the mean ± standard deviation over five independent replicates. Our comparative analysis indicates that ADEL is statistically significantly more accurate than CNN/VGG. The reported intervals (ADEL: 98.25 ± 0.86 vs. CNN/VGG: 96.58 ± 1.11) overlap slightly (97.39–99.11 vs. 95.47–97.69), so the evidence for a robust difference rests on the paired t-test, which yields highly significant results (t = 8.944, p = 0.00086). This p-value (p < 0.001) rejects the null hypothesis of equal mean accuracy, establishing that ADEL's accuracy improvement is both statistically and practically significant. The results in Table 6 demonstrate the superiority of the ADEL framework. To facilitate comparison, the best performance achieved across all replicates is presented in the following sections.
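The paired t-test on five replicates has 4 degrees of freedom, for which the Student's t distribution has a closed-form CDF. The sketch below uses that closed form so that it needs only the standard library; the function names are illustrative, and the per-replicate accuracies themselves are not given in the text, so only the reported t statistic can be checked.

```python
import math


def paired_t_statistic(a, b):
    """t statistic for paired samples a and b (same length n, df = n - 1)."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)  # sample variance of differences
    return mean / math.sqrt(var / n)


def two_sided_p_df4(t):
    """Two-sided p-value for Student's t with exactly 4 degrees of freedom.

    Uses the closed-form CDF for df = 4; other sample sizes need a general
    routine such as the one in a statistics library.
    """
    scaled = t / math.sqrt(1.0 + t * t / 4.0)
    u = t * t / (1.0 + t * t / 4.0)
    cdf = 0.5 + 0.375 * scaled * (1.0 - u / 12.0)
    return 2.0 * min(cdf, 1.0 - cdf)
```

Evaluating `two_sided_p_df4(8.944)` gives approximately 8.6 × 10⁻⁴, in line with the reported p = 0.00086.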

ADEL demonstrates dominant performance across all evaluation metrics, achieving near-perfect scores that reflect exceptional classification consistency: 0.9975 accuracy, 0.9975 precision, 0.9975 recall and 0.9975 macro-F1 score; this apparent equality stems from rounding at the 10⁻⁴ precision level. This balance, which maximizes true positive identification while minimizing false positives and false negatives, exceeds existing methods by substantial margins. Specifically, ADEL outperforms the strongest baseline (CNN/VGG) by 2.55 percentage points in accuracy (0.9975 vs. 0.9720) and 2.52 percentage points in macro-F1 score (0.9975 vs. 0.9723), while surpassing weaker methods like FFTMHA [54] by 24.55 percentage points in accuracy.

Performance degradation in comparative methods (e.g., FFTMHA's 0.7520 accuracy) primarily stems from training data scarcity and class distribution imbalance, which collectively exacerbate model overfitting. Notably, ensemble methods like CNNEL and multi-feature achieve comparable performance to each other (multi-feature: 0.9575 accuracy; CNNEL: 0.9550 accuracy) through handcrafted multi-feature extraction and deep ensemble architectures. Nevertheless, ADEL establishes holistic superiority, with gains of 4.00 percentage points in accuracy and 4.03 in macro-F1 score over multi-feature, and 4.25 percentage points in accuracy and 4.57 in macro-F1 score over CNNEL. This advancement derives from ADEL's heterogeneous deep network architecture, which enables end-to-end multi-scale representation learning, hybrid loss optimization and adaptive ensemble inference, eliminating manual feature engineering while enhancing generalization.

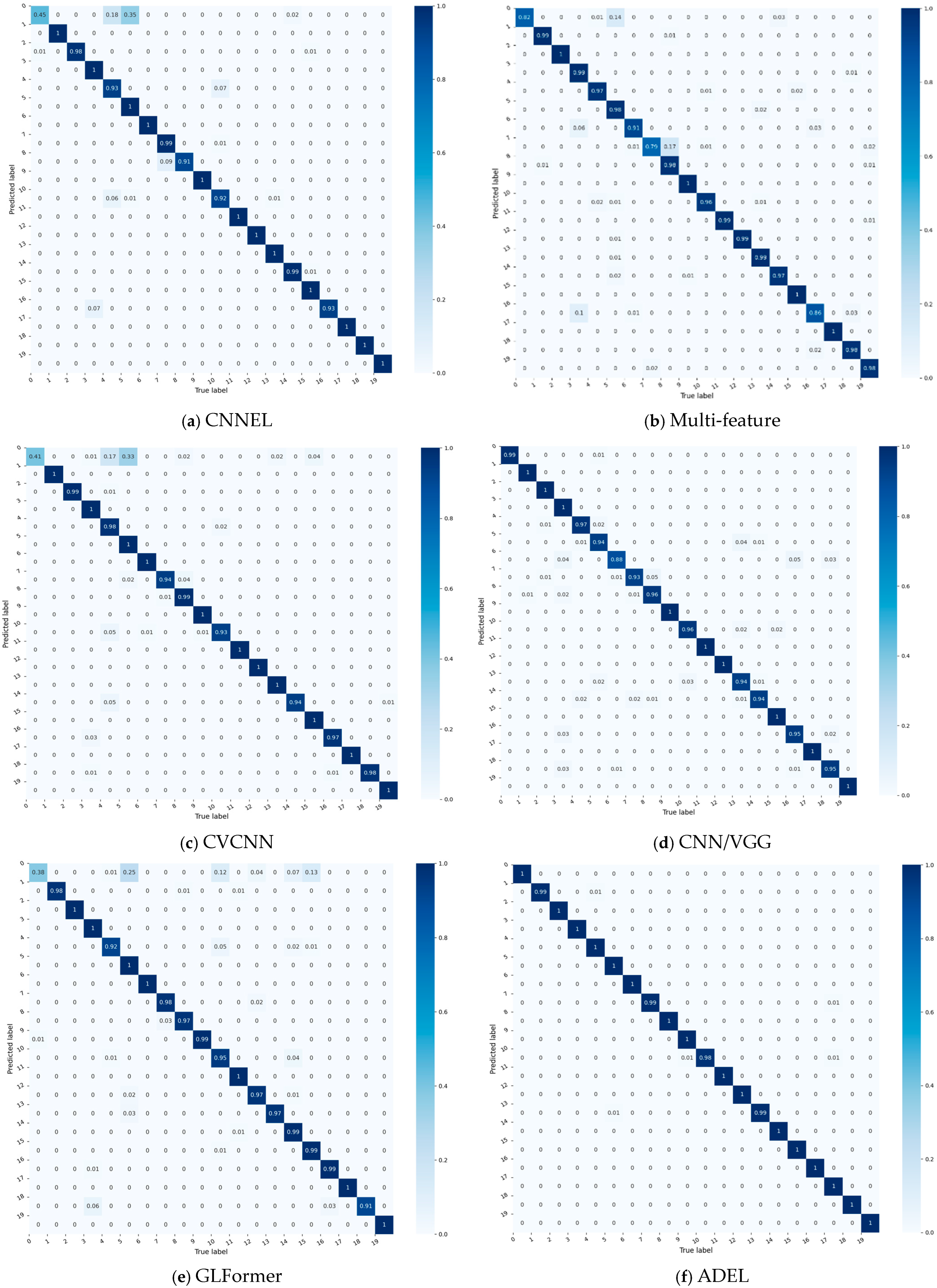

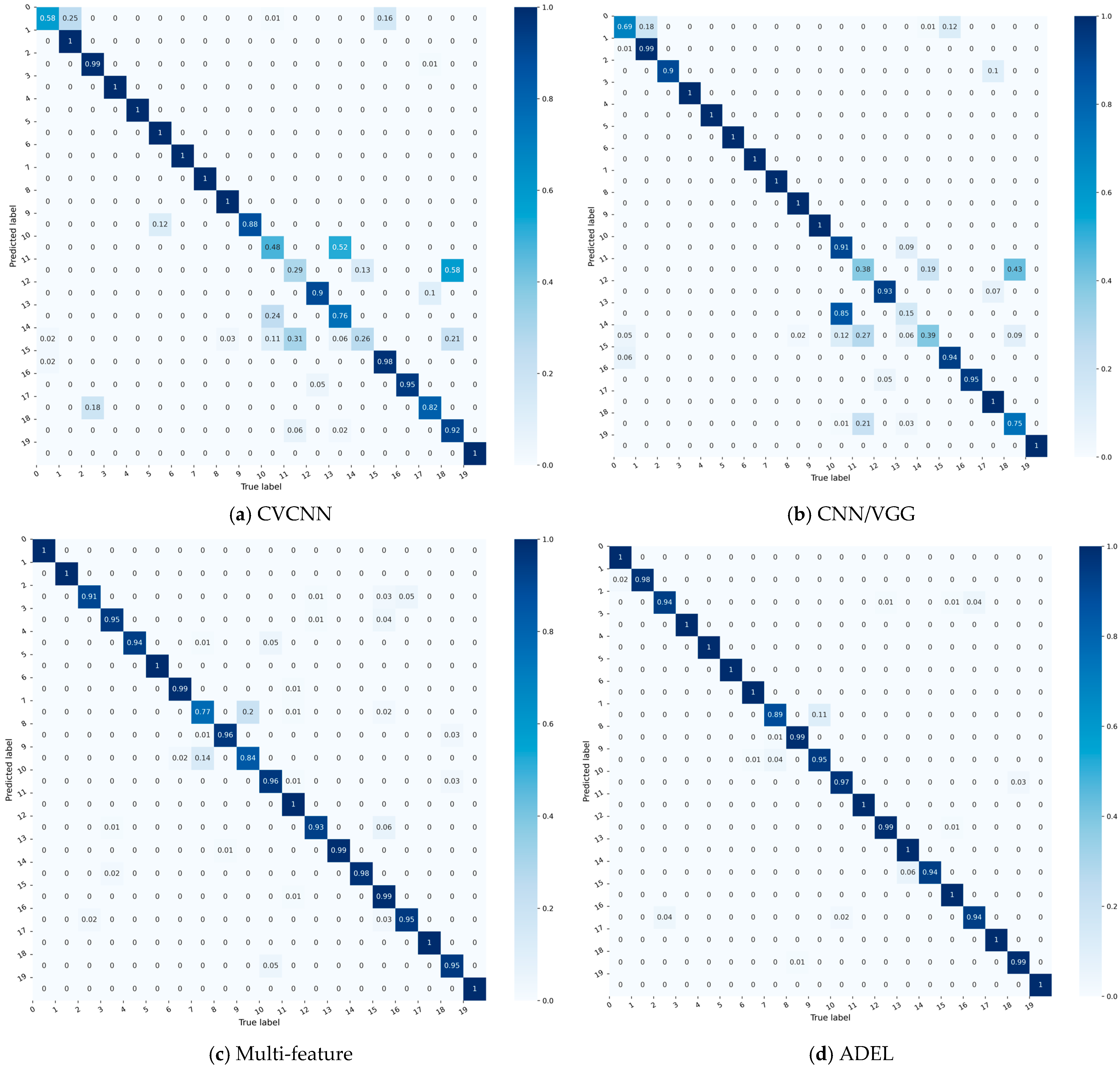

For granular analysis of per-class accuracy, Figure 3 compares confusion matrices of four top-performing baselines (each exceeding 90% overall accuracy).

The confusion matrices of five models, four baselines (Figure 3a–d) and the proposed ADEL (Figure 3f), reveal critical performance patterns through color-encoded probability distributions. CNNEL reveals critical model inequity, with catastrophic failure in Class 0 (accuracy = 45%) alongside perfect performance in 12 classes. Multi-feature shows improved diagonal consistency (79–100%) yet suffers hazardous cross-class confusions (Class 5→0: 14%, Class 8→7: 17%, Class 4→16: 10%). CVCNN exhibits significant performance volatility across the 20 classes, with accuracy ranging from 41% to 100%. This 59-percentage-point span represents severe inconsistency, particularly evidenced by the contrast between Class 0's catastrophic failure (41% accuracy) and the perfect classification achieved for 10 categories (Classes 1, 3, 5, 6, 9, 11, 12, 15, 17 and 19). The CNN/VGG model significantly outperforms all other baselines, demonstrating commendable stability: its minimum per-class accuracy reaches 88%, while 19 of 20 classes (95%) exceed 93% accuracy. Crucially, 75% of classes (15/20) achieve more than 95%, confirming robust generalization across categories. The confusion matrix of the proposed ADEL method demonstrates exceptional classification accuracy across all 20 categories, establishing its superiority over comparative methods. The model shows near-perfect accuracy: 100% for 16 classes and ≥98% for the others (lowest: Class 10 at 98%).

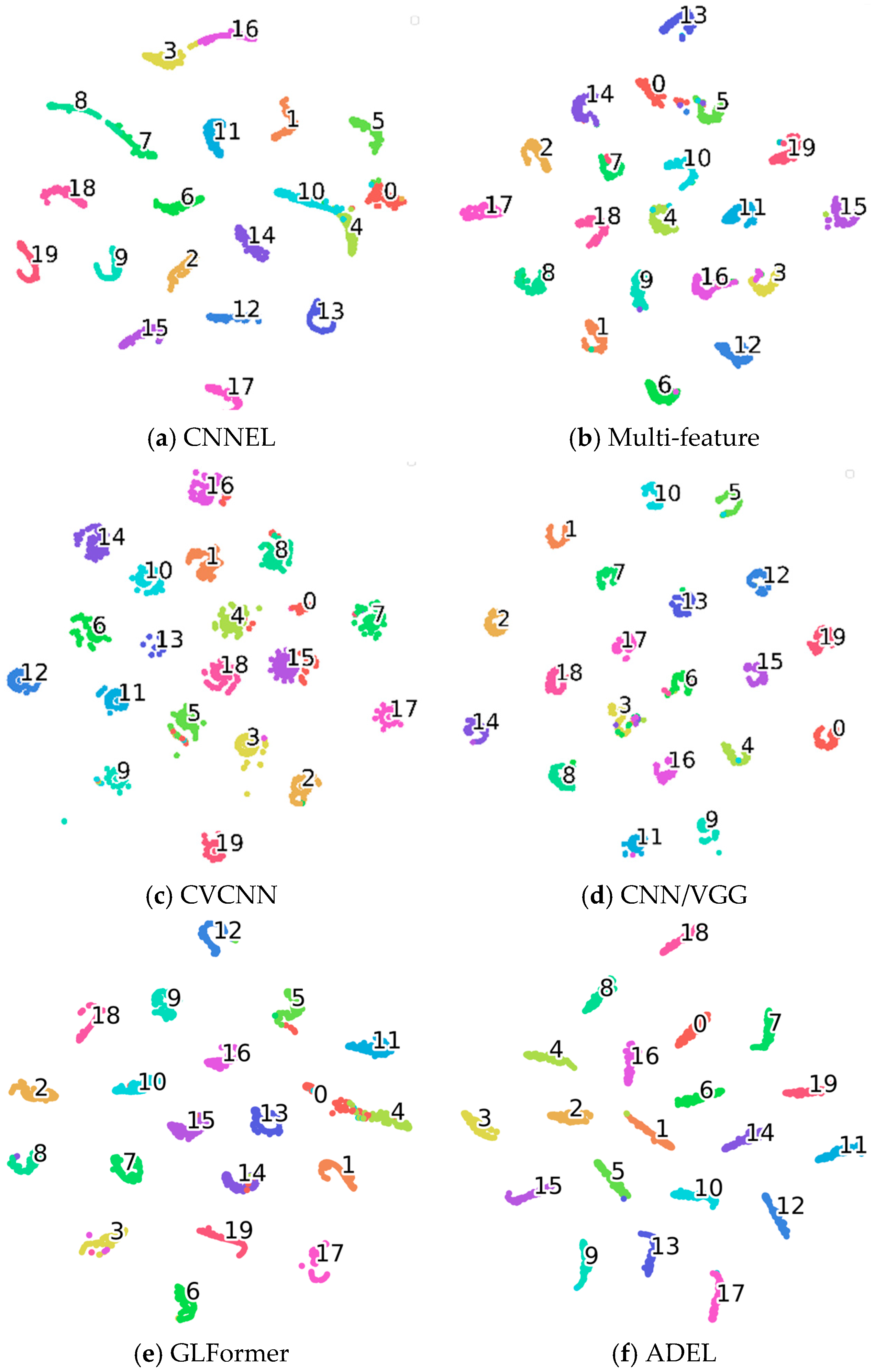

To gain deeper insight into the learned feature representations of each network, we perform dimensionality reduction on the 20-dimensional features from the final fully connected layer using t-Distributed Stochastic Neighbor Embedding (t-SNE). The quality of these feature representations is quantitatively evaluated via silhouette coefficients, with higher values indicating stronger discriminative power. Comparative deep features and silhouette coefficients (SC) for all networks are visualized in Figure 4 and given in Table 7, respectively; the numbers within the clusters denote the respective classes.

Both qualitative and quantitative analyses demonstrate ADEL's superior discriminative power. The t-SNE visualizations (Figure 4) show well-separated clusters with exceptional intra-class compactness and inter-class separation. This is quantitatively validated by the SC analysis, where ADEL achieves the highest SC (0.9184). The compared methods comprise traditional ensemble methods (CNNEL: 0.8558; multi-feature fusion: 0.8734), a hybrid approach (CVCNN: 0.9021), advanced architectures (GLFormer: 0.8701; CNN/VGG: 0.9145) and our ADEL framework (0.9184). Notably, ADEL's 0.0039 SC advantage over CNN/VGG suggests fundamental architectural improvements rather than incremental gains.
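For readers unfamiliar with the silhouette coefficient, the following plain-Python sketch computes its mean over a labeled set of feature vectors. It assumes Euclidean distance and uses illustrative names; the text does not state which distance or which feature space (the 20-dimensional features or the t-SNE embedding) the reported SC values are based on.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient using Euclidean distance.

    points: list of equal-length numeric sequences; labels: one label per point.
    Points in singleton clusters are assigned a silhouette of 0, the usual convention.
    """
    clusters = {}
    for idx, lab in enumerate(labels):
        clusters.setdefault(lab, []).append(idx)
    if len(clusters) < 2:
        raise ValueError("silhouette needs at least two clusters")

    scores = []
    for i, lab in enumerate(labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)
            continue
        # a: mean distance to the other members of the same cluster
        a = sum(math.dist(points[i], points[j]) for j in own if j != i) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster
        b = min(
            sum(math.dist(points[i], points[j]) for j in members) / len(members)
            for other, members in clusters.items()
            if other != lab
        )
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)
```

Values close to 1 indicate compact, well-separated clusters, values near 0 indicate overlapping clusters, and negative values indicate points that sit closer to another cluster than to their own.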

To further validate ADEL's effectiveness, we conducted comprehensive experiments using a LoRa dataset. The LoRa signals were acquired with a USRP N210 software-defined radio (SDR) platform as the receiver, operating at a carrier frequency of 868.1 MHz with a transmission interval of 0.3 s. The receiver sampling rate was configured at 1 MHz. For this evaluation, we utilized a representative subset comprising 20 device classes, with 500 samples per class. The dataset was partitioned using random sampling into training (60%), validation (20%) and testing (20%) subsets to ensure rigorous performance assessment. The full experimental dataset details are available in [56].
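The 60/20/20 partition can be sketched as a seeded random shuffle of sample indices. This is an illustration only: the function name and the seed are hypothetical, and whether the original split was stratified by device class is not stated in the text.

```python
import random


def split_indices(n_samples, train=0.6, val=0.2, seed=0):
    """Randomly partition range(n_samples) into train/validation/test index lists."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility (assumed)
    n_train = round(n_samples * train)
    n_val = round(n_samples * val)
    return (
        indices[:n_train],
        indices[n_train:n_train + n_val],
        indices[n_train + n_val:],
    )


# 20 device classes x 500 samples per class, as stated in the text.
train_idx, val_idx, test_idx = split_indices(20 * 500)
```

With 10,000 samples in total this yields 6000 training, 2000 validation and 2000 test samples.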

Among the aforementioned models achieving accuracy exceeding 80%, CVCNN, CNN/VGG, multi-feature and ADEL attain accuracies of 84.05%, 84.90%, 95.50% and 97.90%, respectively, on the LoRa dataset. Their corresponding confusion matrices are presented in Figure 5. Although these accuracy values are lower than those achieved on the ADS–B dataset, ADEL still outperforms the multi-feature approach by 2.40 percentage points.

Figure 6 presents t-SNE visualizations of the learned feature representations for each network architecture. The corresponding silhouette coefficients (SC) are 0.6235, 0.6289, 0.9035 and 0.9123, respectively. Higher SC values indicate superior feature representation quality, which is corroborated by the tighter cluster separation and reduced inter-class overlap observed in the t-SNE projections.

Table 8 presents the parameter counts and inference latency metrics across the evaluated models. The results demonstrate that while ADEL achieves superior performance relative to comparative approaches, this performance advantage comes at the cost of substantially increased model complexity and longer inference times. These computational considerations warrant careful attention in resource-constrained deployment scenarios.

3.3. Ablation Results

The ADEL framework incorporates the following three core optimization components: categorical cross-entropy loss, center loss and mean squared error (MSE). When MSE is employed, it concurrently activates adaptive weighted inference for ensemble predictions. Ablation study results quantifying these components' contributions are presented in Table 9, where the symbol ✓ denotes component inclusion and ✗ indicates exclusion.

Table 9 reveals substantial performance improvements through hybrid loss optimization. Baseline cross-entropy loss alone yields 93.00% accuracy, while integrating MSE loss (λ = 0.49) raises accuracy by 4.55 percentage points to 97.55%, demonstrating the critical role of signal reconstruction fidelity. Adding center loss instead (λ_center = 0.56) achieves a comparable enhancement (97.10% accuracy), highlighting its efficacy in compressing intra-class variance. Crucially, simultaneously incorporating both auxiliary losses (λ = 0.31, λ_center = 0.85) establishes peak accuracy at 99.75%, a 6.75-percentage-point improvement over the baseline, which validates their complementary mechanisms in refining feature space geometry.
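The role of the two weights can be illustrated with a small sketch. It assumes an additive form, cross-entropy plus λ times the MSE term plus λ_center times the center-loss term, and a center loss defined as half the mean squared distance to the class center; both are common conventions adopted here as assumptions, and the function names are illustrative rather than the authors'.

```python
def center_loss(features, labels, centers):
    """Half the mean squared distance between each feature vector and its class center."""
    total = 0.0
    for feat, lab in zip(features, labels):
        total += sum((f - c) ** 2 for f, c in zip(feat, centers[lab]))
    return 0.5 * total / len(features)


def hybrid_loss(ce, mse, center, lam, lam_center):
    """Assumed combination: cross-entropy plus weighted MSE and center-loss terms."""
    return ce + lam * mse + lam_center * center
```

Setting `lam = 0` or `lam_center = 0` switches off the corresponding term, which mirrors the component exclusion used in the ablation rows.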

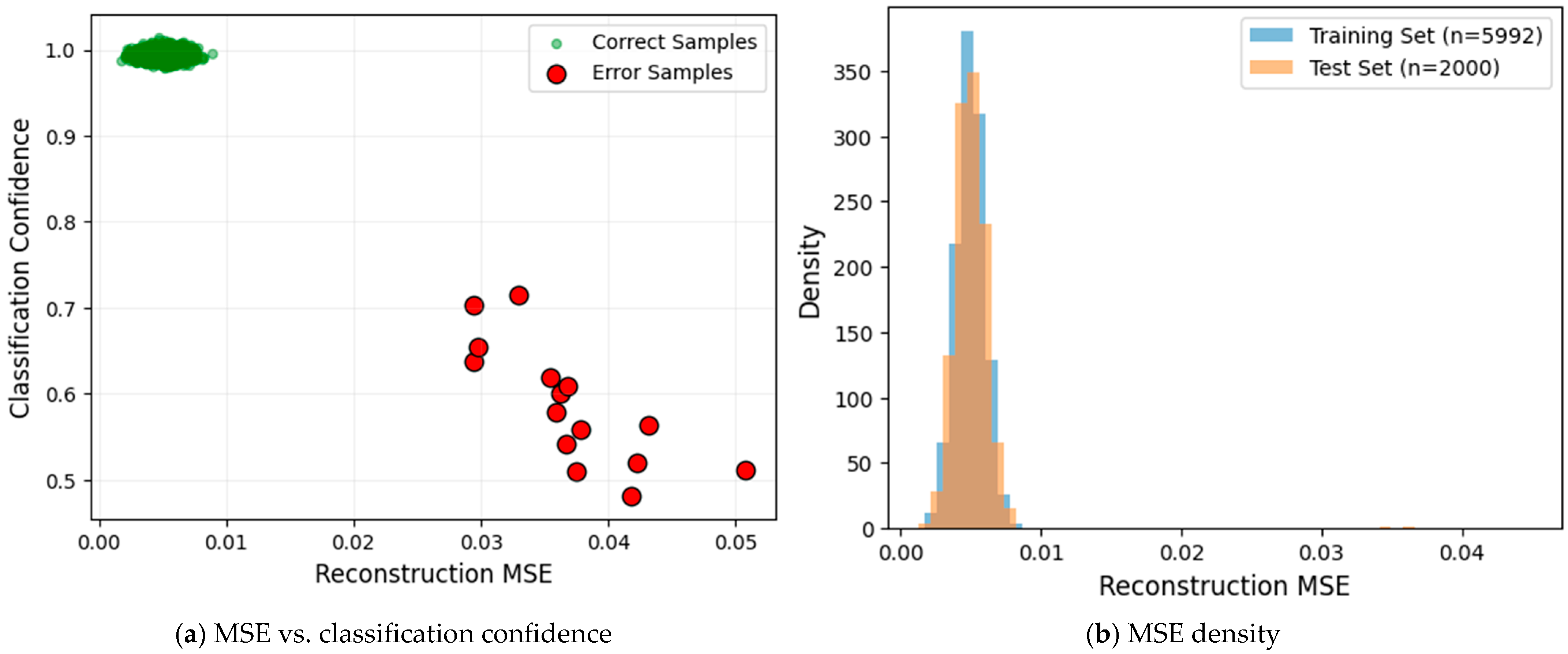

The adaptive weighting mechanism based on reconstruction error (MSE) plays a pivotal role in ADEL's performance. As illustrated in Figure 7a, we observe a strong negative correlation between MSE and classification confidence: correctly classified samples predominantly cluster in the low-MSE/high-confidence region, while the few misclassified samples exhibit significantly higher MSE values. This distinct separation confirms that reconstruction quality reliably indicates classification reliability. Furthermore, Figure 7b demonstrates statistically equivalent MSE distributions between training and test sets. This distributional alignment provides robust evidence that the model learns transferable features rather than memorizing training set artifacts.
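One way to turn reconstruction error into ensemble weights is a softmax over the negative MSE of each member, so that members with lower error contribute more. The sketch below illustrates that idea only: the weighting rule, the `temperature` parameter and the function names are assumptions, since the exact rule is not given in this section.

```python
import math


def mse_weights(mse_values, temperature=1.0):
    """Softmax over negative reconstruction errors: lower MSE gives a larger weight."""
    lowest = min(mse_values)  # subtract the minimum for numerical stability
    scores = [math.exp(-(m - lowest) / temperature) for m in mse_values]
    total = sum(scores)
    return [s / total for s in scores]


def weighted_ensemble(probabilities, mse_values, temperature=1.0):
    """Combine per-model class probabilities using MSE-derived weights.

    probabilities[k] is the class-probability list produced by model k.
    Returns (predicted_class, combined_probabilities).
    """
    weights = mse_weights(mse_values, temperature)
    n_classes = len(probabilities[0])
    combined = [
        sum(w * probs[c] for w, probs in zip(weights, probabilities))
        for c in range(n_classes)
    ]
    return combined.index(max(combined)), combined
```

A smaller `temperature` concentrates the weight on the member with the lowest reconstruction error, while a larger one approaches plain output averaging.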

To quantitatively assess the individual contributions of each component in our hybrid loss function (Equation (1)), we perform a comprehensive parameter sensitivity analysis. As demonstrated in Figure 8, the model's classification accuracy is systematically evaluated across λ and λ_c, with all configurations achieving more than 80% accuracy visualized through both radar chart and heatmap representations.

The radar chart and heatmap analysis provides a systematic evaluation of parameter interactions between λ and λ_c. The visualization demonstrates that model performance depends strongly on both parameters, with particularly notable behavior observed for the λ = 0.40 configuration (red polygon), which achieves optimal accuracy (99.15%) at λ_c = 0.90; this is 17.95 percentage points higher than the same λ = 0.40 configuration at λ_c = 0.80 (81.20%). The heatmap quantitatively validates these findings, showing a well-defined high-performance region (dark blue cells) concentrated at λ = 0.10–0.20 with λ_c ≥ 0.70. Three distinct performance regimes emerge: (1) a peak performance zone (accuracy > 99%) centered at (λ = 0.40, λ_c = 0.90); (2) a transition zone (94–97%) surrounding the peak region; and (3) an anomalous low point at (λ = 0.40, λ_c = 0.80), where accuracy drops sharply to 81.20% despite reasonable performance at higher λ_c values, suggesting complex parameter interactions.

The concordance between these complementary visualization techniques supports the conclusion that the λ = 0.40/λ_c = 0.90 combination represents the optimal parameter configuration on this coarse grid, while also highlighting the importance of avoiding the λ = 0.40 regime when λ_c approaches 0.80. Notably, when implementing a more granular search interval (0.01 versus 0.10), the model maintains its peak performance capability, consistently reaching 99.75% accuracy under optimal parameter combinations.

To better characterize the behavior of these parameters, we performed a fine-grained grid search over λ ∈ [0.1, 0.5] (Δλ = 0.01) and λ_center ∈ [0.5, 1] (Δλ_center = 0.01). As shown in Figure 9, despite persistent fluctuations in performance, classification accuracy consistently exceeds 97% across both lower and upper bounds of λ values.
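The fine-grained grid can be enumerated as in the sketch below, which builds each axis from integer step counts to avoid floating-point drift. A step of 0.01 on both axes is assumed, and `evaluate` is a hypothetical user-supplied function that trains a model for one (λ, λ_center) pair and returns its accuracy.

```python
def grid_axis(start, stop, step, decimals=2):
    """Inclusive grid from start to stop, built from integer steps to avoid float drift."""
    count = round((stop - start) / step)
    return [round(start + k * step, decimals) for k in range(count + 1)]


lam_values = grid_axis(0.1, 0.5, 0.01)         # 41 values of lambda
lam_center_values = grid_axis(0.5, 1.0, 0.01)  # 51 values of lambda_center
grid = [(lam, lam_c) for lam in lam_values for lam_c in lam_center_values]


def best_configuration(evaluate, grid):
    """Return the (lam, lam_center) pair with the highest accuracy under `evaluate`."""
    return max(grid, key=lambda pair: evaluate(*pair))
```

Under these assumptions the grid contains 41 × 51 = 2091 configurations, each of which requires a full training run, so the fine search is considerably more expensive than the 0.10-step search.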