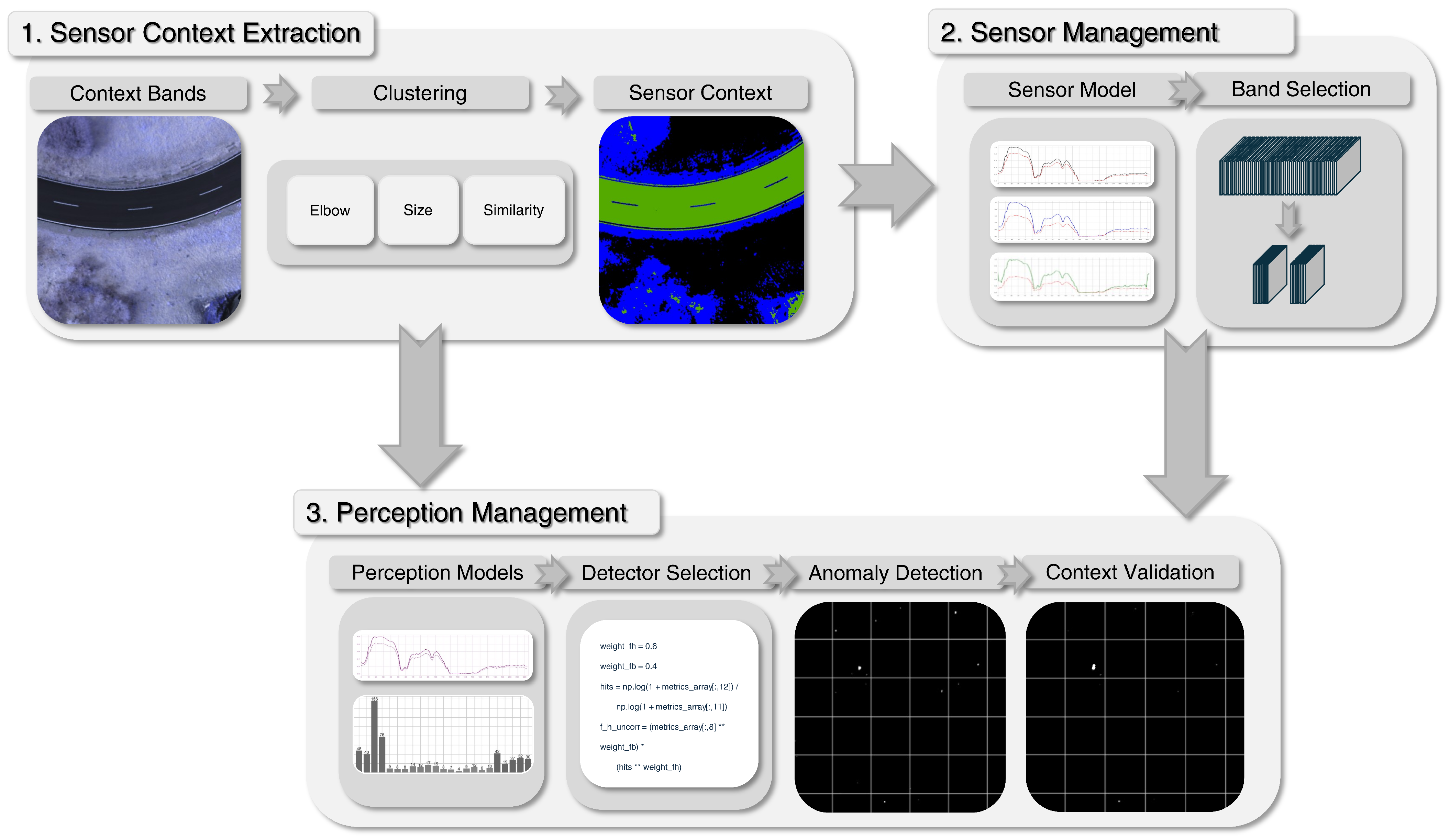

The perception management module extends the principle of sensor management to the level of anomaly detection. Its purpose is to predict and automatically select the most suitable anomaly detector for each input HSI based on the current scene context and target characteristics (see Figure 1). Detection performance strongly depends on both image conditions and target properties, because different anomaly detectors use different mathematical definitions of what constitutes an outlier, each based on a relative comparison to a chosen baseline. As a result, their responses vary with changes in background statistics or texture, which directly influence this comparison and thus the outcome of the detection. To address this challenge, our approach does not rely on a single detector. Instead, perception management exploits this variability by maintaining a pool of complementary detectors and using a trained perception model to predict detector performance and adaptively select the best configuration. Accordingly, the description of perception management is divided into two parts. First, the pool of available anomaly detectors is introduced. Second, the perception model is proposed, which predicts the expected detection performance for all pool detectors and selects the optimal one. Because of their distinct spectral–spatial characteristics, separate detector pools and perception models are maintained for camouflage and UXO targets.

Detectors

The detector pool implemented in perception management is directly aligned with the characteristics of the evaluation dataset, which contains both large-area camouflage targets and small UXO objects under diverse environmental and seasonal conditions. To address these two fundamentally different target types, two detector pools are defined (see Table A1):

For camouflage materials (large structures, often with varying color contrasts due to their camouflage texture), four algorithmic families are used: Local Reed–Xiaoli Detector (LRX), contour-based HDBSCAN (C-HDBSCAN), contour-based Normalized Cross Classification (C-NCC), and a bandpass filter, parameterized into 23 detector configurations.

For UXO (small, point-like targets with limited spatial extent and often uniform contrast), a specialized LRX variant with adjusted parameters is applied, resulting in 7 additional detector configurations.

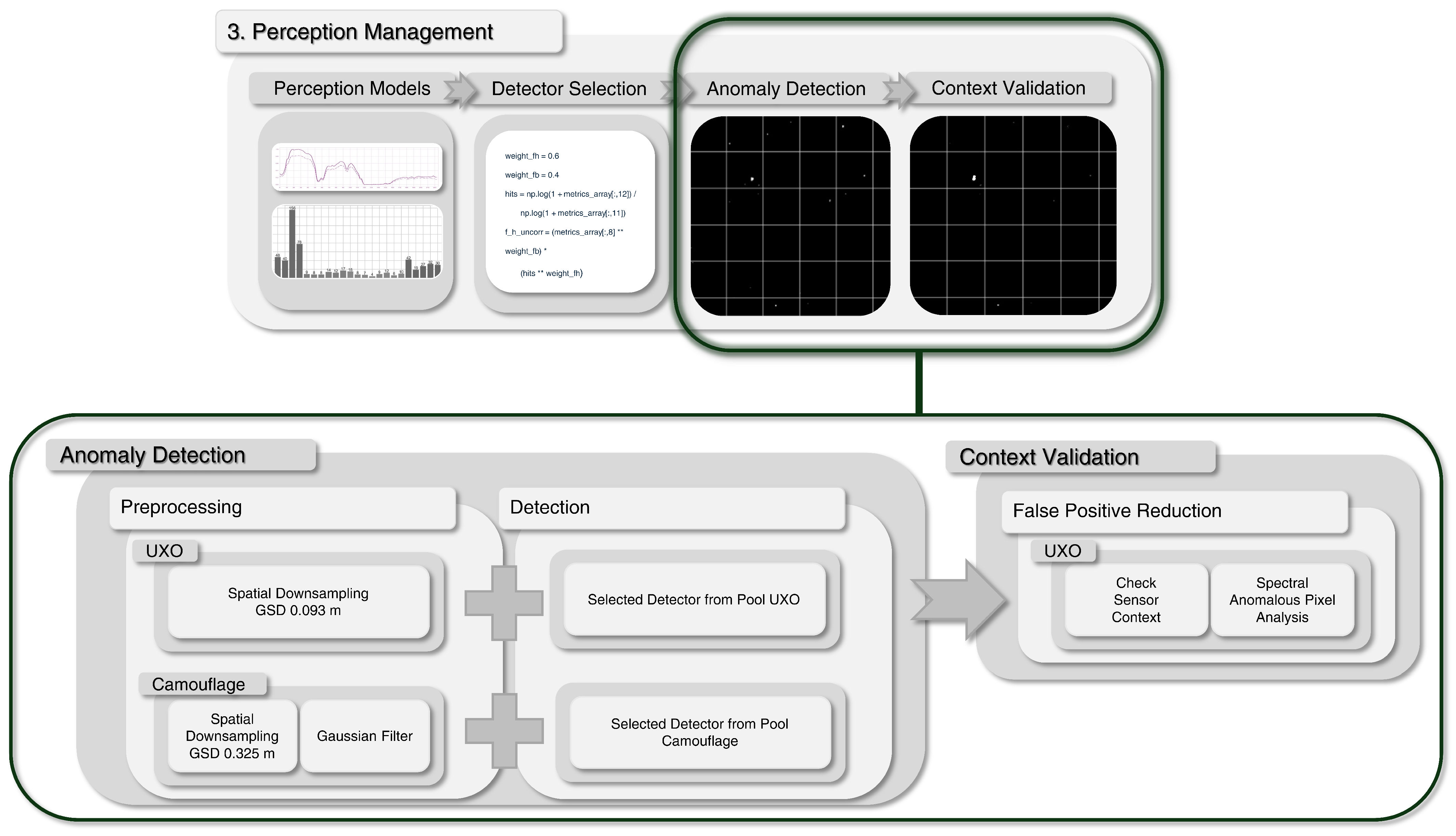

These diverse detector pools ensure that both fundamentally different target types are addressed while providing complementary strengths across heterogeneous environments. The general workflow is illustrated in Figure 4, including preprocessing (downsampling and Gaussian filtering) and subsequent anomaly detection with a context validation step for false positive reduction.

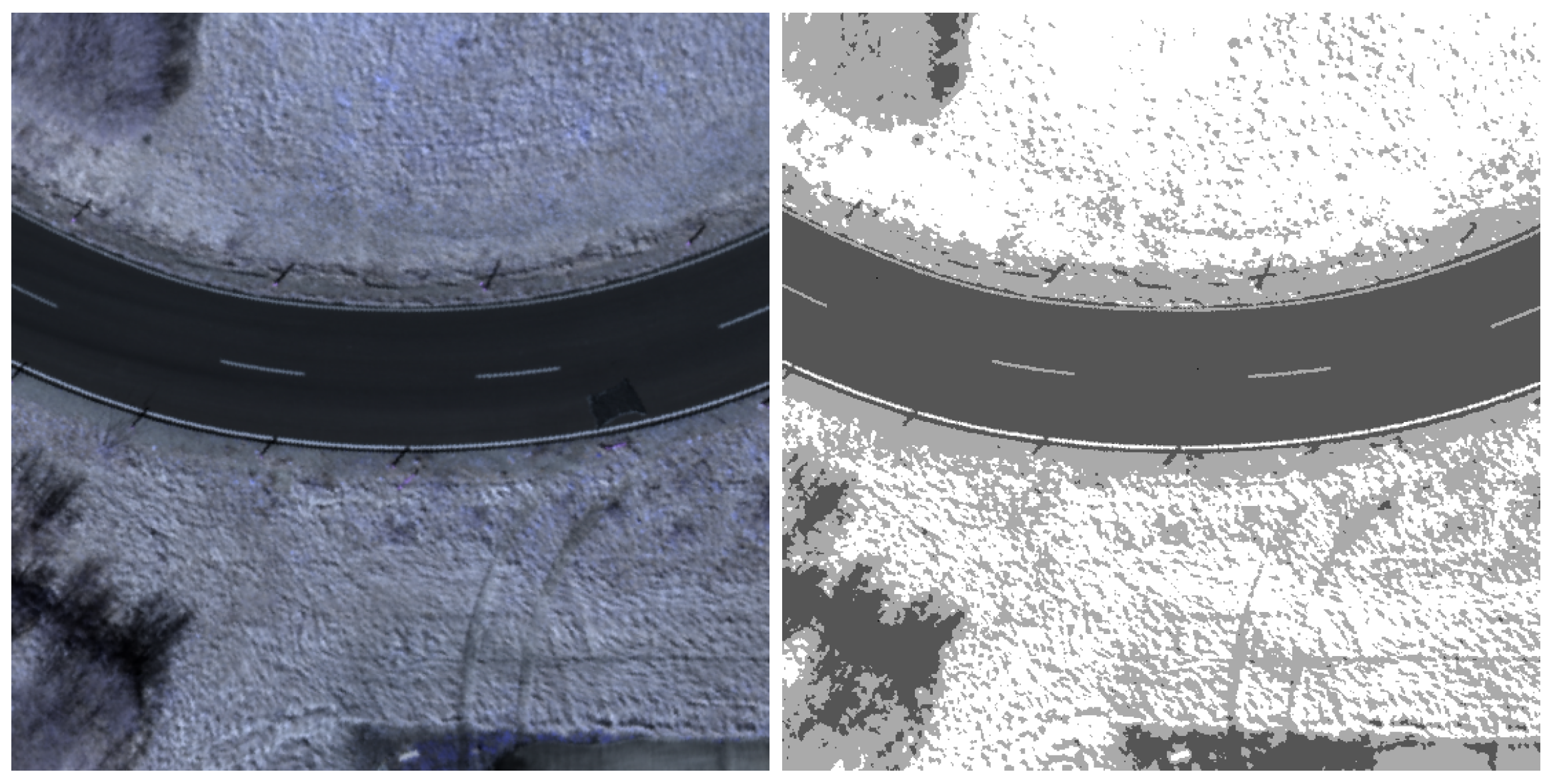

The detection processing for camouflage material starts with spatial downsampling and Gaussian filtering of the selected image band set as part of preprocessing. Spatial downsampling is performed to a GSD of 0.325 m, which represents a trade-off between preserving sufficient spatial resolution for detecting the targets and reducing computational load to ensure efficient processing while maintaining adequate coverage. Subsequently, a Gaussian filter smooths the image and reduces noise to enhance image quality affected by poor lighting conditions or bright reflections such as canopies. For this purpose, the Gaussian filter is applied to the band set with a parameter value of 0.5. The preprocessed images are then processed by one selected anomaly detector out of the 23 camouflage detector configurations of the 4 algorithmic families. Because the 4 algorithmic families are methodologically largely independent, the performance of each detector varies greatly across changing image contexts in diverse image scenes. The methodology of the four algorithmic families is introduced below.

The Reed–Xiaoli Detector, first published in 1990 by [18], is one of the most popular anomaly detectors and a benchmark in HSI [44,45,46]. The detection algorithm models the background by a multivariate Gaussian distribution, assuming a homogeneous background. For this purpose, the detector compares a single pixel under test $x$ with the background pixels $w$ of a defined background, which contains the image pixels within a specified inner window and outer window around $x$. If the defined window covers the entire image, the detector is referred to as the global Reed–Xiaoli Detector; otherwise, as the local one. Then, for each image pixel, the squared Mahalanobis distance $D^2(x)$ is calculated to determine the level of abnormality relative to the background by

$$D^2(x) = (x - \mu)^{\top} \Sigma^{-1} (x - \mu)$$

where $\mu$ is the mean vector of the background and $\Sigma$ the corresponding local covariance matrix that models the specified background. Once the LRX is performed, the detection map is converted into a binary detection mask by applying a percentile threshold that defines the minimum percentile a pixel score must reach to be considered an anomaly.
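The local detector described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the implementation used in this work: the function names, the window half-widths `inner` and `outer`, the diagonal loading `ridge`, and the percentile `q` are assumptions chosen for the example, and the synthetic test cube is hypothetical.

```python
import numpy as np

def local_rx(cube, inner=1, outer=3, ridge=1e-6):
    """Squared Mahalanobis distance of every pixel to its local background.

    cube  : array of shape (H, W, B) with B spectral bands
    inner : half-width of the inner window excluded from the background (assumed)
    outer : half-width of the outer window bounding the background (assumed)
    ridge : small diagonal loading that keeps the covariance invertible (assumed)
    """
    H, W, B = cube.shape
    scores = np.zeros((H, W))
    for i in range(H):
        for j in range(W):
            rows = np.arange(max(i - outer, 0), min(i + outer + 1, H))
            cols = np.arange(max(j - outer, 0), min(j + outer + 1, W))
            rr, cc = np.meshgrid(rows, cols, indexing="ij")
            # Background = outer window minus the inner window around the pixel.
            ring = (np.abs(rr - i) > inner) | (np.abs(cc - j) > inner)
            background = cube[rr[ring], cc[ring]]            # shape (N, B)
            mu = background.mean(axis=0)
            cov = np.cov(background, rowvar=False) + ridge * np.eye(B)
            diff = cube[i, j] - mu
            scores[i, j] = diff @ np.linalg.solve(cov, diff)
    return scores

def percentile_mask(scores, q=99.0):
    """Binary detection mask: pixels at or above the q-th percentile."""
    return scores >= np.percentile(scores, q)

# Hypothetical example: Gaussian background with one strongly deviating pixel.
rng = np.random.default_rng(0)
cube = rng.normal(size=(12, 12, 3))
cube[6, 6] += 10.0
scores = local_rx(cube)
mask = percentile_mask(scores)
```

Solving the linear system with `np.linalg.solve` avoids forming the inverse covariance explicitly; the double loop keeps the sketch readable but would be too slow for full-size images.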

In addition to the LRX, the clustering-based detector C-HDBSCAN is implemented as part of the hSPM. This detector combines contour information, extracted by Felzenszwalb and Huttenlocher image segmentation, with Hierarchical Density-Based Spatial Clustering of Applications with Noise (HDBSCAN). HDBSCAN is a hierarchical density-based clustering algorithm that uses a minimum spanning tree (MST) to find the optimum clusters [47,48]. For that purpose, the Bray–Curtis distance $d$ is calculated for all pairs of data points, and the local density of the data is determined by the mutual reachability distance $d_{\mathrm{mreach}}$ with

$$d_{\mathrm{mreach}}(F, G) = \max\{\mathrm{core}_l(F),\ \mathrm{core}_l(G),\ d(F, G)\}$$

where $F$ and $G$ are the paired data points, and $\mathrm{core}_l$ is the core distance, which represents the distance from a data point to its $l$-th nearest neighbor and describes the data density around the data pairs. Using $d_{\mathrm{mreach}}$, a mutual reachability distance graph is created, where all points are connected and weighted by their assigned distances. From this graph, an MST is computed, which connects all data points while minimizing the total sum of mutual reachability distances. This MST is the input for the hierarchical clustering, which processes the MST at varying levels of detail. Subsequently, each cluster is analyzed with respect to its density, significance, and stability, and the clusters with the highest results are selected. The process of hierarchical clustering can be controlled by setting a required minimum number of pixels per cluster. Some of the pixels may not be assigned to any of the clusters and are, therefore, identified as noise or anomalous data points. This exclusion is used to create a binary anomaly detection mask that is extended with contour information from the Felzenszwalb and Huttenlocher image segmentation. This graph-based segmentation algorithm transforms the image pixels into nodes connected through edges that represent their spatial distance and pixel value, defined as similarity [49]. The algorithm then varies the level of detail of the segments, starting with a single segment for each pixel and terminating once all pixels are assigned according to a defined similarity threshold. Two parameters adjust the level of detail within this segmentation process. Finally, the resulting segments are checked for their size and transformed into a binary mask by thresholding the segments that exceed a defined maximum size. A logical AND is then used to combine the segmentation mask with the result of the clustering step. HDBSCAN was implemented using [50], and [51] was used for the Felzenszwalb algorithm.
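To make the density step concrete, the following sketch computes pairwise Bray–Curtis distances, core distances, and the resulting mutual reachability matrix with NumPy. It only illustrates the standard HDBSCAN definition; the function names, the neighbor count `l`, and the toy data are assumptions, and the MST and cluster extraction steps are deliberately left out.

```python
import numpy as np

def bray_curtis(X):
    """Pairwise Bray-Curtis distance between the rows of X (shape (n, B))."""
    num = np.abs(X[:, None, :] - X[None, :, :]).sum(axis=-1)
    den = np.abs(X[:, None, :] + X[None, :, :]).sum(axis=-1)
    # Pairs whose denominator is zero are assigned distance 0 (assumption).
    return np.divide(num, den, out=np.zeros_like(num), where=den > 0)

def mutual_reachability(X, l=2):
    """Mutual reachability: max(core_l(F), core_l(G), d(F, G)) for every pair."""
    d = bray_curtis(X)
    # Column 0 of each sorted row is the point itself (distance 0),
    # so column l holds the distance to the l-th nearest neighbor.
    core = np.sort(d, axis=1)[:, l]
    return np.maximum(d, np.maximum(core[:, None], core[None, :]))

# Hypothetical toy spectra with two bands each.
X = np.array([[1.0, 1.0], [1.0, 2.0], [5.0, 5.0], [10.0, 1.0]])
d = bray_curtis(X)
m = mutual_reachability(X, l=2)
```

Note that the diagonal of `m` contains each point's core distance rather than zero, which is harmless for building the MST because self-edges are never used.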

A contour-based Normalized Cross Classificator (C-NCC) based on [52] is also implemented alongside LRX and C-HDBSCAN. Here, the classifier, which compares the image spectra to a given spectrum of interest, is applied to the spectral environment vectors of the sensor context in Section 2.1.1. In this way, the NCC can be used for the detection of anomalous targets that deviate from the environment and remain unclassified. For this purpose, the NCC first normalizes the given spectrum of interest and the spectral image. Subsequently, the NormXCorr is calculated for the spectrum of interest and each image pixel, providing a metric to measure their spectral similarity (see Equation (1)). Pixels whose similarity falls below a defined percentile across all spectral environment vectors are identified as anomalous or noise in a binary detection mask. Finally, this detection mask is combined with the contour information extracted by the Felzenszwalb and Huttenlocher image segmentation algorithm, using the same procedure as for C-HDBSCAN.
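The idea of flagging pixels that resemble none of the environment spectra can be sketched as follows. Equation (1) is not reproduced in this section, so the sketch assumes the common zero-mean normalized cross-correlation; taking the best similarity over all environment vectors before thresholding is likewise an assumed reading of "across all spectral environment vectors". All names and the percentile `q` are hypothetical.

```python
import numpy as np

def norm_xcorr(pixels, reference):
    """Zero-mean normalized cross-correlation of each pixel spectrum
    (rows of `pixels`, shape (N, B)) with one reference spectrum (shape (B,))."""
    p = pixels - pixels.mean(axis=1, keepdims=True)
    r = reference - reference.mean()
    denom = np.linalg.norm(p, axis=1) * np.linalg.norm(r)
    # Flat spectra have zero norm; their similarity is set to 0 (assumption).
    return np.divide(p @ r, denom, out=np.zeros(len(p)), where=denom > 0)

def ncc_anomaly_mask(pixels, env_vectors, q=5.0):
    """Flag pixels whose best similarity to any environment vector
    lies below the q-th percentile of all best similarities."""
    sims = np.stack([norm_xcorr(pixels, e) for e in env_vectors], axis=1)
    best = sims.max(axis=1)
    return best < np.percentile(best, q)

# Hypothetical data: 20 pixels matching the environment, 1 with an inverted spectrum.
env_vectors = np.array([[1.0, 2.0, 3.0, 4.0]])
pixels = np.vstack([np.tile([1.0, 2.0, 3.0, 4.0], (20, 1)), [[4.0, 3.0, 2.0, 1.0]]])
mask = ncc_anomaly_mask(pixels, env_vectors, q=5.0)
```

Because the correlation is invariant to gain and offset, a pixel that is merely brighter or darker than an environment spectrum still counts as similar; only a change in spectral shape lowers the score.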

The bandpass filter is the fourth detector implemented for the target group of camouflage materials; it isolates and identifies image pixels that differ significantly from the image background [53]. For this purpose, a passable signal range is defined by an upper and a lower cutoff, and all signals outside this range are removed. In the first step, the bandpass filter creates a single 8-bit image from the spectrally averaged input band set and determines the image center. This image center is defined by the pixel rows $H$ and columns $W$ of the image, which are combined by the Pythagorean theorem. The defined cutoff values are then transformed into the value range of the image by multiplication with this image center value, and the subsequent Fourier transform is prepared by creating a coordinate system whose arrays $x$ and $y$ are used to calculate the Euclidean distances between them. With these distances and the determined image space cutoff values, the actual bandpass filter is created as a binary mask that passes all distances within the cutoff range. The resulting shifted filter $f_{\mathrm{shifted}}$ re-centers the low-frequency components to the center of the image before a 2-dimensional discrete Fourier transform is applied. The product of the latter and the shifted bandpass filter is then transformed back into the initial image space by an inverse 2-dimensional discrete Fourier transform. In the last step, a percentile threshold converts the image into a binary mask depicting the extracted anomalous pixels. The parameter settings of all introduced detectors can be found in Table A1.
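A frequency-domain bandpass of this kind can be sketched with NumPy's FFT routines. The exact equations for the image center and the filter are not reproduced here, so the sketch makes its own assumptions: the cutoffs `low` and `high` are treated as fractions of the half-diagonal `hypot(H/2, W/2)`, and the function names and default values are hypothetical.

```python
import numpy as np

def bandpass_filter(image, low=0.05, high=0.5):
    """Keep only spatial frequencies whose distance from the spectrum center
    lies between the two cutoffs (fractions of the half-diagonal, assumed)."""
    H, W = image.shape
    radius = np.hypot(H / 2, W / 2)          # rows and columns combined via Pythagoras
    y, x = np.ogrid[:H, :W]
    dist = np.hypot(y - H // 2, x - W // 2)  # Euclidean distance to the center
    centered = (dist >= low * radius) & (dist <= high * radius)
    # Move the centered filter to the layout used by fft2 (zero frequency at [0, 0]).
    f_shifted = np.fft.ifftshift(centered)
    filtered = np.fft.ifft2(np.fft.fft2(image) * f_shifted)
    return np.real(filtered)

def percentile_mask(response, q=99.0):
    """Binary mask of the strongest absolute filter responses."""
    magnitude = np.abs(response)
    return magnitude >= np.percentile(magnitude, q)

# Hypothetical check: a constant image has no content inside the passband.
flat = np.full((16, 16), 7.0)
flat_response = bandpass_filter(flat)
```

With a lower cutoff above zero, the constant (zero-frequency) component is always removed, which is why homogeneous background regions produce a response close to zero.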

In addition to the detectors specialized for larger targets such as camouflage material, an LRX with an adjusted window size is used for the smaller UXO targets (see Table A1). The anomaly detection process differs significantly from the previously introduced procedure: besides a reduced downsampling rate, it uses neither a Gaussian filter nor any detection algorithm other than the LRX (see Figure 4). This is due to the small target sizes, which, on the one hand, require a higher spatial resolution. On the other hand, the image smoothing caused by a Gaussian filter reduces the differentiability of small targets, so the filter is not applied. Thus, only spatial downsampling is implemented in addition to the LRX detection algorithm with variable parameter settings. Because the LRX was the only detector that showed higher detection rates with relatively low computational requirements, no other algorithmic family is implemented. However, the statistically driven LRX causes a high false detection rate for UXO, which must be reduced. To address this limitation, the extensive postprocessing step of context validation, the next processing stage of the hSPM architecture, is added after the UXO anomaly detection and is introduced below.

Context Validation

This module exploits the extracted sensor context and the predictions of the sensor model to systematically reduce false positives, as illustrated in Figure 1. In the first step of context validation, the detected anomalies are evaluated with respect to the extracted sensor context and the target deviations $v$ predicted by the sensor model. The general idea is to leverage the cluster labels from the sensor context to filter out anomalous pixels associated with clusters that are classified as irrelevant. Because the K-means clustering in the sensor context works with a fixed number of clusters, the algorithm assigns each data point to one of these clusters based on the smallest distance. Thus, each anomalous pixel has already been assigned to an environment cluster by the previous context extraction. At the same time, the sensor model has estimated the expected target deviation of each target of interest to these clusters, and thus, for each target there exists an environment with the smallest deviation. Following the K-means assignment rule, all anomalies of interest must be assigned to the environment with the smallest distance in the context clustering. This means that environments without a minimum target deviation for any target should not contain anomalous pixels originating from actual targets but only false positives. Hence, all anomalies assigned to those environments in the context clustering are very likely not targets and are excluded from the detection map. In detail, all targets are iterated over to determine and list the environment with the minimum target deviation (see Algorithm 1, Lines 1 to 10). Subsequently, a binary mask is created by excluding all unlisted environments from the context clustering label map of Section 2.1.1 (Lines 11 to 12). This mask is then combined with the anomaly detection map using a logical AND operation, excluding all anomalies assumed to be false positives due to unassigned or irrelevant environmental clusters (Line 13).

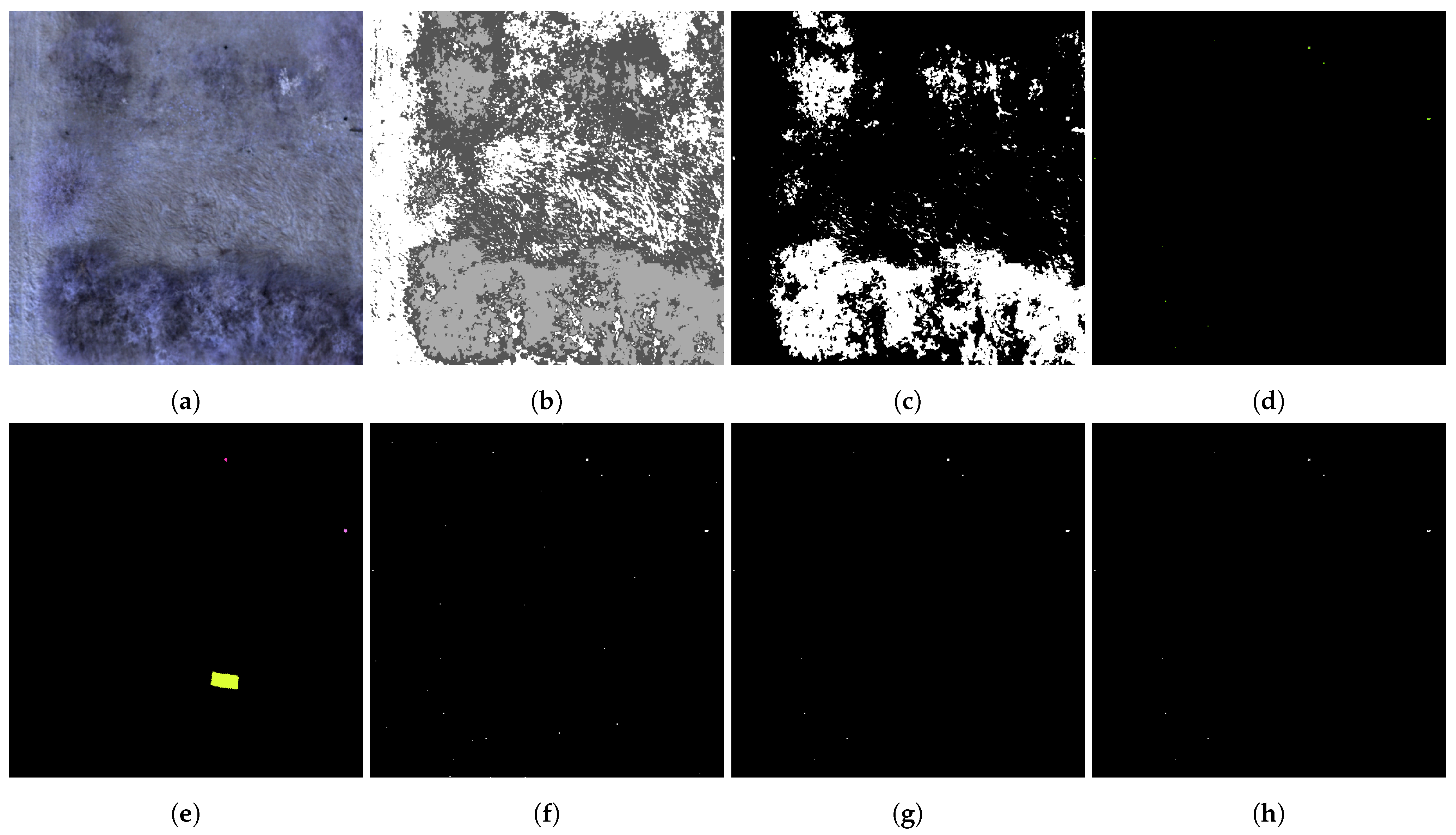

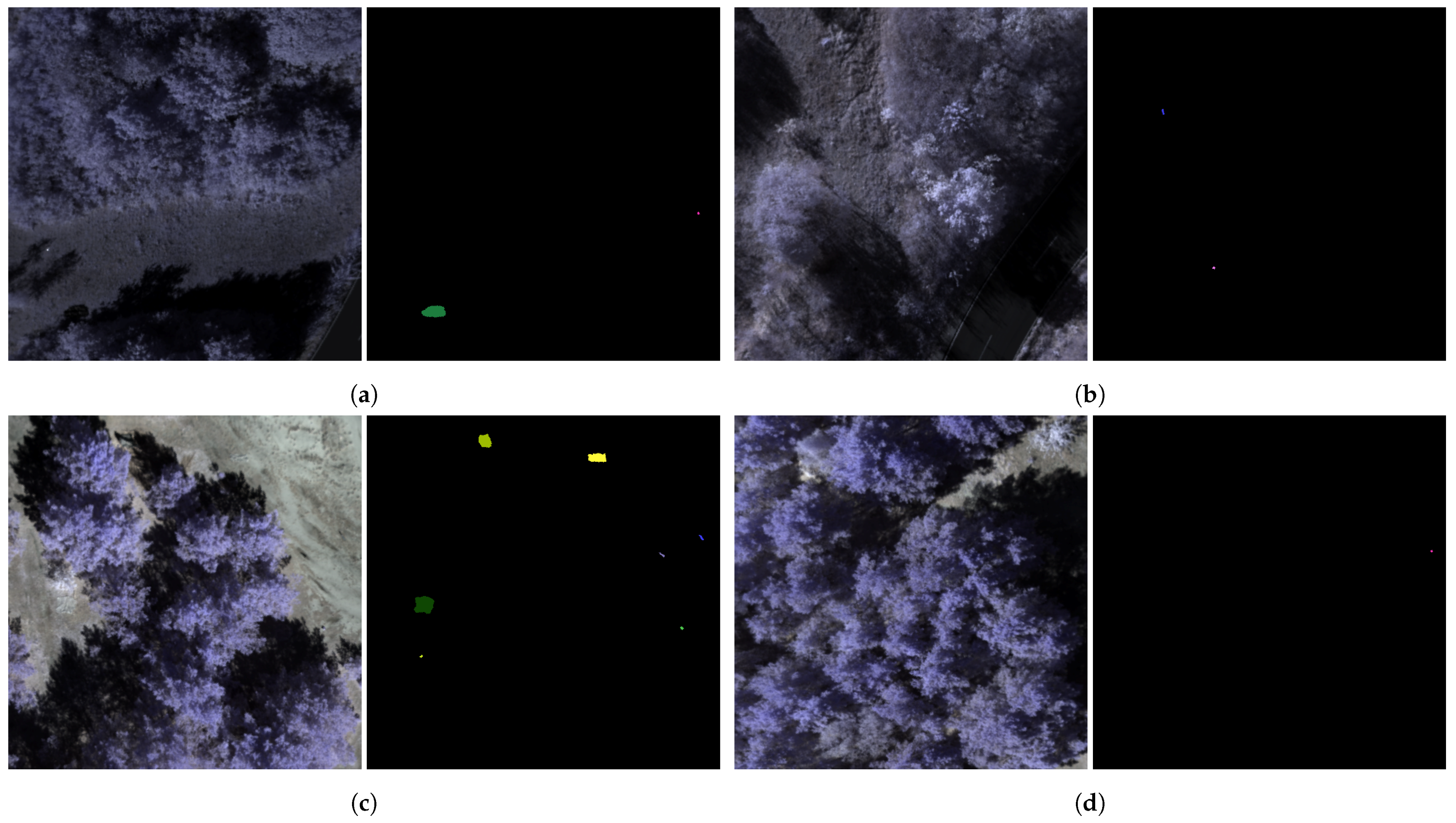

Figure 5c,g show an example of the improved detection performance with reduced false positives obtained by considering the contextual knowledge of the sensor context. The image sample from dataset 1 shows two UXO and a camouflage net. Please note that the latter is not detected by the LRX for UXO because its window size is selected for the much smaller UXO, and it is therefore not counted as a false negative. The detection of the camouflage material is performed in parallel by the previously introduced detectors specialized for camouflage.

| Algorithm 1 Check Sensor Context. |

| Require: detectionMap, envContextClusterLabel, , , |

| Ensure: detectionMapPostEnv, relevantEnvContextVecIndices |

| 1: relevantEnvContextVecIndices |

| 2: for to do |

| 3: tempDist |

| 4: for to do |

| 5: Compute for target in environment: |

| 6: |

| 7: end for |

| 8: Determine k with min. tempDist for t: (tempDist) |

| 9: |

| 10: end for |

| 11: |

| 12: ▷ with elementwise containment check |

| 13: |

| 14: ▷ with elementwise comparison |

| 15: return detectionMapPostEnv, relevantEnvContextVecIndices |
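The logic of Algorithm 1 can be sketched compactly in NumPy. This is an illustrative reading, not the original implementation: it assumes that the predicted target deviations have already been reduced to one scalar magnitude per target and environment (the array `deviation_magnitude` of shape targets × environments), and all names are hypothetical.

```python
import numpy as np

def check_sensor_context(detection_map, env_labels, deviation_magnitude):
    """Remove anomalies that fall into environments not closest to any target.

    detection_map       : boolean array (H, W), True for anomalous pixels
    env_labels          : integer array (H, W) with the context cluster of each pixel
    deviation_magnitude : array (T, K), predicted deviation of target t
                          to environment k (assumed scalar per pair)
    """
    # Lines 1 to 10: for every target, list the environment with minimum deviation.
    relevant_envs = np.unique(np.argmin(deviation_magnitude, axis=1))
    # Lines 11 to 12: binary mask of pixels belonging to a listed environment.
    env_mask = np.isin(env_labels, relevant_envs)
    # Line 13: logical AND with the anomaly detection map.
    return detection_map & env_mask, relevant_envs

# Hypothetical example with 2 targets and 3 environments.
detection_map = np.array([[True, True, False], [True, False, True]])
env_labels = np.array([[0, 1, 2], [2, 1, 0]])
deviation_magnitude = np.array([[0.9, 0.2, 0.5], [0.1, 0.4, 0.8]])
post_env, relevant = check_sensor_context(detection_map, env_labels, deviation_magnitude)
```

In this example, environment 2 is not the closest environment for any target, so the anomaly detected in it is discarded while the others are kept.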

In addition to contextual postprocessing based on sensor context information, a second stage of false positive reduction is applied in the context validation: Spectral Anomalous Pixel Analysis. In this step, anomalous pixels are clustered according to their spectral signatures, grouping them into either potential anomaly targets or presumably spurious noise within the hyperspectral image (HSI). The underlying assumption is that, due to the initially high false alarm rate, the number of false positives remains larger than the number of true anomalous target pixels even after the first reduction stage. These false positives frequently originate from natural background variability, that is, irregularities that can be interpreted as spectral noise. Because of their natural origin, many of these pixels are expected to share similar spectral characteristics and can thus be aggregated into a small number of large spectral clusters. These clusters can be distinguished from actual target clusters by comparing them to the sensor model-predicted target deviation $v$. This deviation is defined as the difference between the spectral signature of each anomalous pixel and the corresponding environmental context signature, as described in Equation (2). The assumption is that noisy clusters will exhibit greater dissimilarity to the predicted deviation than clusters corresponding to actual targets. Therefore, noise clusters can be identified by their larger difference to the expected deviation and removed from the anomaly detection map, thereby lowering the false alarm rate. For each predicted target deviation, the anomalous pixels exhibiting the minimum distance to the sensor model prediction are identified, along with their associated context clusters. These clusters are then assumed to have a high likelihood of containing valid target information. In contrast, clusters not containing any pixel with minimum distance are assumed to be false positives and are excluded. This process is described in detail in Algorithm 2. First, the HDBSCAN algorithm is used due to its capability to form detailed spectral clusters, Line 4 to 6. The clustering process is governed by the parameters min_samples, set to 20, and allow_single_cluster, set to true, which determine whether noisy or anomalous pixels can still form clusters, even in uniformly distributed data. If the number of anomalous pixels falls below min_samples, no cluster is created and the process is terminated. Afterwards, the target deviations of each anomalous pixel to the relevant context clusters are calculated, Line 7 to 12. Subsequently, the distances between the predicted target deviations and the calculated ones are determined using the minimum Euclidean distance and listed, Line 14. All HDBSCAN clusters that include pixels assigned a minimum distance are kept, Line 15. Finally, all non-relevant clusters are excluded from the anomaly detection map, Line 18 to 19. Since the accuracy of the procedure depends on the assumption that the anomalies contain a large number of false positives, this assumption may no longer hold when only a few anomalies are present. In that situation, the similarity structure found by HDBSCAN tends to be misleading: the algorithm may produce overly granular clusters and inadvertently discard true positives. To address this issue, the proposed context validation is always applied in conjunction with the combined LRX configuration and its specific parameters. Each combination of detector configuration and context validation produces distinct detection results and must be taken into account by the downstream perception model.

Figure 5d,h illustrate the clustered anomalous pixels and the resulting detection map. As shown in this example, the map following sensor context validation (g) already contains relatively few anomalous pixels due to the aforementioned limitations. Nevertheless, the clustering step is designed to address these challenges and results in the final refined detection map (h).

| Algorithm 2 Spectral Anomalous Pixel Analysis. |

| Require: HSI, envContextClusterLabel, , , , |

| Ensure: detectionMapFinal, relevantEnvContextVecIndices |

| 1: relevantHDBSCANClusterLabelIndex |

| 2: Perform HDBSCAN clusterer on anomalous pixel: |

| 3: |

| 4: for to do: |

| 5: |

| 6: ▷ with elementwise comparison |

| 7: Calculate pixel deviations to corresponding for each pixel signature , |

| 8: where u denotes the pixel index and the set of u: |

| 9: |

| 10: for all do |

| 11: |

| 12: end for |

| 13: for to do: |

| 14: Get pixel index with min distance: |

| 15: |

| 16: end for |

| 17: end for |

| 18: |

| 19: ▷ with elementwise containment check |

| 20: return detectionMapFinal |
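The cluster selection in Algorithm 2 can be sketched as follows. The sketch assumes that the HDBSCAN labels of the anomalous pixels are already available (computed beforehand with any HDBSCAN implementation), that the deviation of a pixel is the vector difference between its spectrum and the environment vector, and that the predicted deviations are stored as an array of shape targets × environments × bands. These data layouts and all names are assumptions; the handling of HDBSCAN noise labels is left out.

```python
import numpy as np

def spectral_pixel_analysis(spectra, env_of_pixel, cluster_of_pixel,
                            env_vectors, predicted_dev, relevant_envs):
    """Keep only anomalous pixels whose spectral cluster contains a pixel that
    is closest to a predicted target deviation.

    spectra          : (N, B) spectra of the anomalous pixels
    env_of_pixel     : (N,) context cluster index of each anomalous pixel
    cluster_of_pixel : (N,) spectral cluster label of each anomalous pixel
    env_vectors      : (K, B) spectral environment vectors
    predicted_dev    : (T, K, B) predicted target deviations (assumed layout)
    relevant_envs    : iterable of environment indices kept by the first stage
    """
    keep_clusters = set()
    for k in relevant_envs:
        idx = np.flatnonzero(env_of_pixel == k)      # pixels of this environment
        if idx.size == 0:
            continue
        pixel_dev = spectra[idx] - env_vectors[k]    # measured pixel deviations
        for t in range(predicted_dev.shape[0]):
            dist = np.linalg.norm(pixel_dev - predicted_dev[t, k], axis=1)
            closest = idx[np.argmin(dist)]           # pixel with minimum distance
            keep_clusters.add(int(cluster_of_pixel[closest]))
    return np.isin(cluster_of_pixel, list(keep_clusters))

# Hypothetical example: one environment, one target, two spectral clusters.
spectra = np.array([[1.0, 1.0], [1.1, 1.0], [5.0, 5.0], [5.0, 5.1]])
env_of_pixel = np.array([0, 0, 0, 0])
cluster_of_pixel = np.array([0, 0, 1, 1])
env_vectors = np.zeros((1, 2))
predicted_dev = np.array([[[1.0, 1.0]]])
keep = spectral_pixel_analysis(spectra, env_of_pixel, cluster_of_pixel,
                               env_vectors, predicted_dev, relevant_envs=[0])
```

The returned boolean vector marks the anomalous pixels that survive; mapping it back to image coordinates yields the final detection map.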

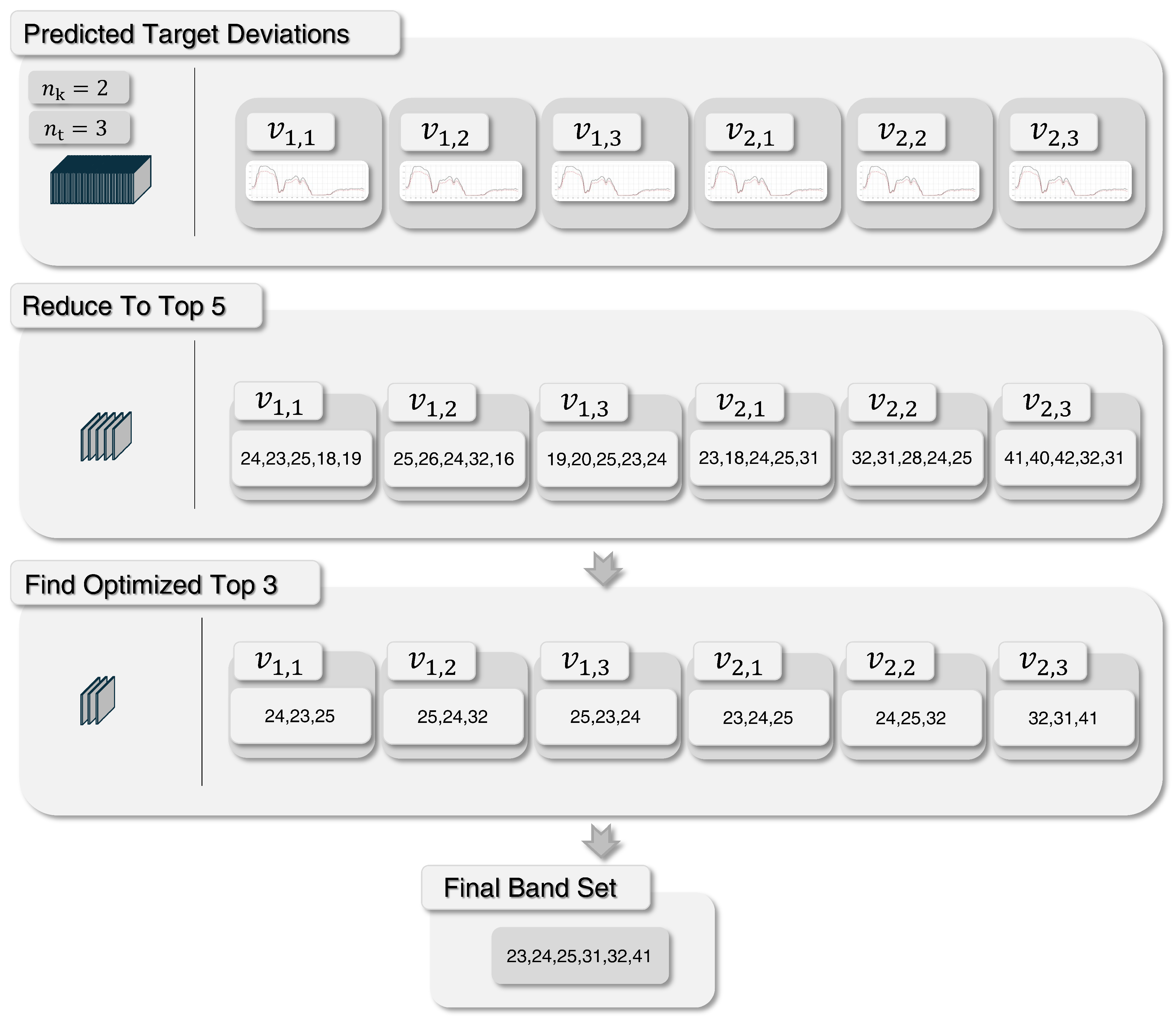

Perception Model and Detector Selection

The perception model and the detector selection are the key components of perception management: together, they select the best-performing anomaly detector from the provided detector pools along with the corresponding pre- and postprocessing procedure. Each of the two target groups has its own perception model, which is identical in methodology and input features but trained only with data for the corresponding target group to address the strongly varying target characteristics. Each perception model selects the most suitable detector configuration for a given input band set from the band selection, which is also defined separately for each target group. The process begins by normalizing the input band set and converting it into an averaged single-band image in 8-bit format. From this image, a feature vector is extracted that serves as the input for predicting detector performance. Reliable prediction requires features that adequately capture the relationships between the targets, the image characteristics, and the properties of the detection algorithms.
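The conversion of a band set into an averaged 8-bit image can be sketched as below. The text does not specify the normalization, so per-band min-max scaling is an assumption, as are the function name and the example values.

```python
import numpy as np

def to_8bit_mean_image(band_set):
    """Normalize each band to [0, 1] (min-max, assumed), average over the
    bands, and scale the result to an 8-bit single-band image."""
    bands = band_set.astype(np.float64)              # shape (H, W, B)
    lo = bands.min(axis=(0, 1), keepdims=True)
    hi = bands.max(axis=(0, 1), keepdims=True)
    span = np.where(hi > lo, hi - lo, 1.0)           # avoid division by zero
    normalized = (bands - lo) / span
    return np.round(255 * normalized.mean(axis=2)).astype(np.uint8)

# Hypothetical 2 x 2 image with two bands of different value ranges.
band_set = np.stack(
    [np.array([[0, 10], [20, 40]]), np.array([[0, 1], [2, 4]])], axis=2
)
gray = to_8bit_mean_image(band_set)
```

Normalizing each band before averaging prevents bands with a large numeric range from dominating the averaged image.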

The feature vector is constructed from Haralick and Local Binary Pattern (LBP) features, which describe texture, contrast, and entropy, and thus provide a representative abstraction of the scene. These features directly influence detector performance and allow the regression model to predict which detector is expected to perform best for a given scene. For the Haralick features, the average values across all four image directions are computed and concatenated to ensure directional invariance. The LBP features are likewise extracted in a directionally invariant manner, using a fixed radius of 111 and 5 sampling points, making them particularly effective for capturing coarser texture and contrast relevant for camouflage detection. In addition to these descriptors, the feature vector also includes the overall predicted mean target deviation as well as the minimum and maximum predicted target deviations from the sensor model. These values encode target-specific discriminability information for the model. The complete feature vector is then passed to a CatBoost regressor, which constitutes the perception model. Prediction proceeds in two steps. First, the regressor outputs a performance estimate for each detector in the pool with its pre- and postprocessing, expressed as a vector where each index corresponds to a specific detector. Second, based on these predicted values, the configuration with the highest score for detection performance is automatically selected. This detector performance is quantified using a newly proposed score derived from the widely used $F_\beta$-score. The $F_\beta$-score is defined as follows:

$$F_\beta = (1 + \beta^2)\,\frac{p \cdot r}{\beta^2 \cdot p + r}$$

where $p$ is the precision, $r$ is the recall, and $\beta$ is the parameter that controls the weight of recall in relation to precision, set to 1.1 for a slight improvement in the overall detection sensitivity. However, the $F_\beta$-score is not a suitable metric for evaluating overall detection performance in scenarios with multiple anomalies, as it only considers the total number of correctly identified pixels, regardless of the individual targets present in the images. This can lead to a detection result with a single well-detected target being rated higher than a result where all targets are detected but less accurately. The latter is clearly preferable when HSI anomaly detection is applied without needing contours, because the subsequent classification can be performed using the spectral signature of individual pixels. The AUC performance measure, which determines the overall detector performance across all possible threshold values, also falls short here. Its calculation includes a threshold range that may not be relevant in actual application scenarios, and it may rank detectors with generally high performance above those that actually perform best within the relevant threshold range. To overcome these shortcomings, we propose a new metric that combines the $F_\beta$-score with the number of correctly detected targets in a scene into a single performance value. Its definition uses the number of correctly detected targets, the total number of targets, and two weights (0.4 and 0.6). The logarithmic term prevents dominance by the number of targets and enables fair comparison across scenes. The weighted exponents ensure a unique mapping between input features and output scores, unlike a weighted sum. Finally, based on the predicted scores, the perception model automatically selects the detector configuration with the highest score for the given HSI scene, ensuring robust and adaptive detection performance. The training configuration of the two perception models used in this study is presented in Section 3.
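As a small worked example of the two building blocks above, the following sketch computes the standard $F_\beta$-score from precision and recall with $\beta = 1.1$ and selects the detector with the highest predicted score. The function names, the detector labels, and the predicted values are hypothetical; the proposed combined metric itself is not implemented here.

```python
import numpy as np

def f_beta(precision, recall, beta=1.1):
    """Standard F-beta score; beta > 1 weights recall more than precision."""
    denom = beta**2 * precision + recall
    if denom == 0:
        return 0.0
    return (1 + beta**2) * precision * recall / denom

def select_detector(predicted_scores, detector_names):
    """Return the name of the detector with the highest predicted score."""
    return detector_names[int(np.argmax(predicted_scores))]

# Hypothetical regressor output for a pool of three detector configurations.
detector_names = ["LRX-a", "C-HDBSCAN-a", "bandpass-a"]
predicted_scores = np.array([0.41, 0.63, 0.27])
best = select_detector(predicted_scores, detector_names)
score = f_beta(0.5, 1.0)
```

With $\beta = 1.1$, a result with precision 0.5 and recall 1.0 scores slightly higher than the mirrored case with precision 1.0 and recall 0.5, which reflects the intended mild preference for detection sensitivity.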