Trunk Detection in Complex Forest Environments Using a Lightweight YOLOv11-TrunkLight Algorithm

Abstract

1. Introduction

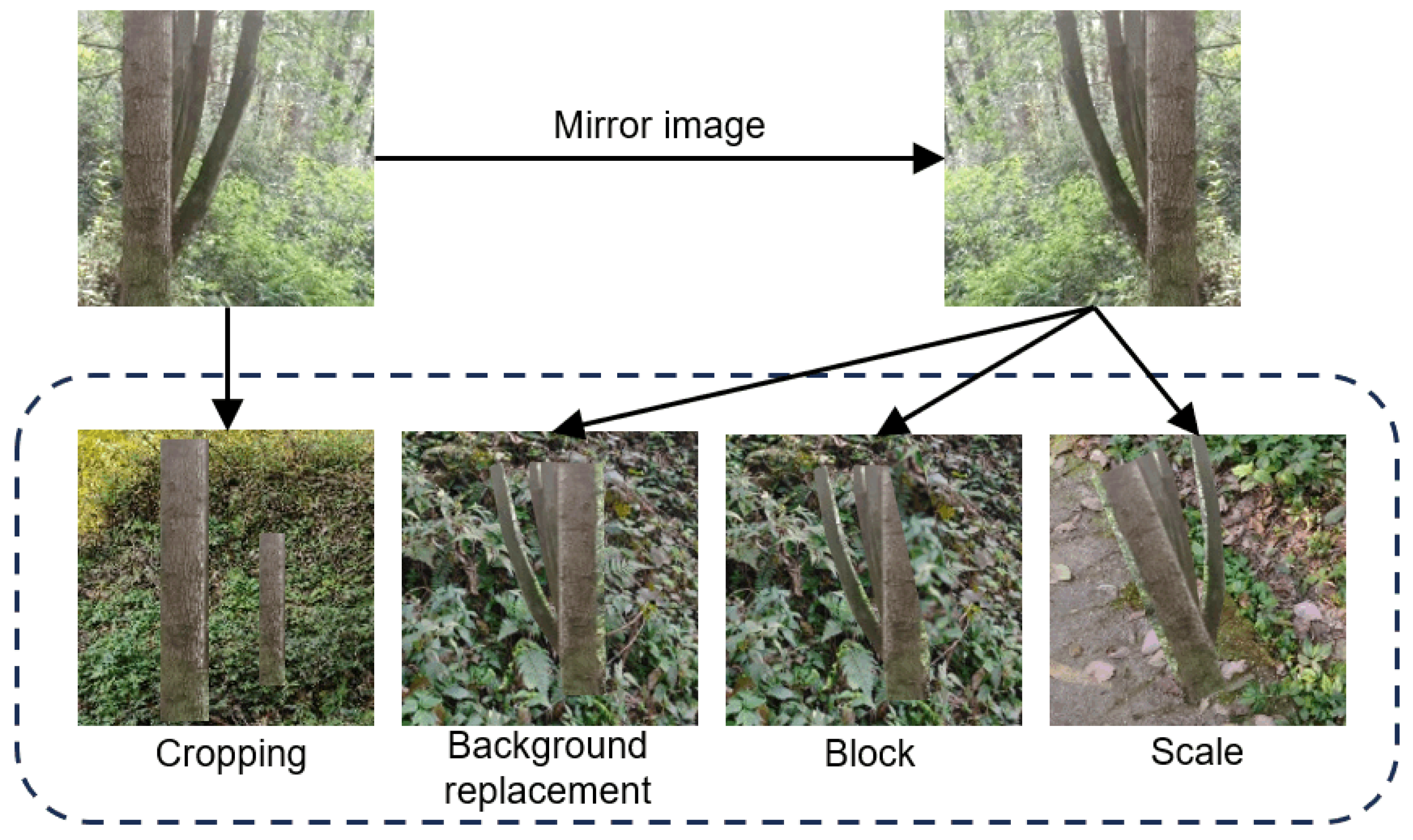

- To overcome the limitations of generic datasets and improve generalization in complex forest environments, we constructed a specialized dataset. Images were collected under various weather conditions and expanded with data augmentation: feature mapping transformation for background segmentation, cropping, and rotation. The processed trunk targets were then randomly scaled and superimposed onto diverse backgrounds to simulate the variability and complexity of real-world forest scenes.
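The scale-and-superimpose step described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: it assumes the trunk cutout already carries an alpha channel from the segmentation step, and the helper names `resize_nearest` and `paste_trunk`, the scale range, and the nearest-neighbour resize are all hypothetical choices.

```python
import numpy as np


def resize_nearest(img, scale):
    """Nearest-neighbour resize of an H x W x C array by a scale factor."""
    h, w = img.shape[:2]
    new_h = max(1, int(round(h * scale)))
    new_w = max(1, int(round(w * scale)))
    rows = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    return img[rows][:, cols]


def paste_trunk(background, trunk_rgba, rng, scale_range=(0.5, 1.0)):
    """Superimpose a randomly scaled RGBA trunk cutout on an RGB background.

    Returns the composite image and a YOLO-style label
    (x_center, y_center, width, height), normalised to [0, 1].
    The scale range is an assumed value, not taken from the paper.
    """
    bg_h, bg_w = background.shape[:2]
    scale = rng.uniform(*scale_range)
    trunk = resize_nearest(trunk_rgba, scale)[:bg_h, :bg_w]  # crop to fit
    t_h, t_w = trunk.shape[:2]

    # Random placement that keeps the cutout fully inside the background.
    top = int(rng.integers(0, bg_h - t_h + 1))
    left = int(rng.integers(0, bg_w - t_w + 1))

    # Alpha compositing: trunk pixels replace the background where alpha is 1.
    alpha = trunk[..., 3:4].astype(np.float32) / 255.0
    out = background.astype(np.float32).copy()
    region = out[top:top + t_h, left:left + t_w]
    out[top:top + t_h, left:left + t_w] = (
        alpha * trunk[..., :3] + (1.0 - alpha) * region
    )

    box = (
        (left + t_w / 2) / bg_w,
        (top + t_h / 2) / bg_h,
        t_w / bg_w,
        t_h / bg_h,
    )
    return out.astype(np.uint8), box


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    background = np.zeros((640, 640, 3), dtype=np.uint8)
    trunk = np.full((200, 60, 4), 255, dtype=np.uint8)  # opaque dummy cutout
    image, label = paste_trunk(background, trunk, rng)
    print(image.shape, label)
```

Returning the bounding box together with the composite means each synthetic image comes with its annotation, so no manual labelling is needed for the pasted targets.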

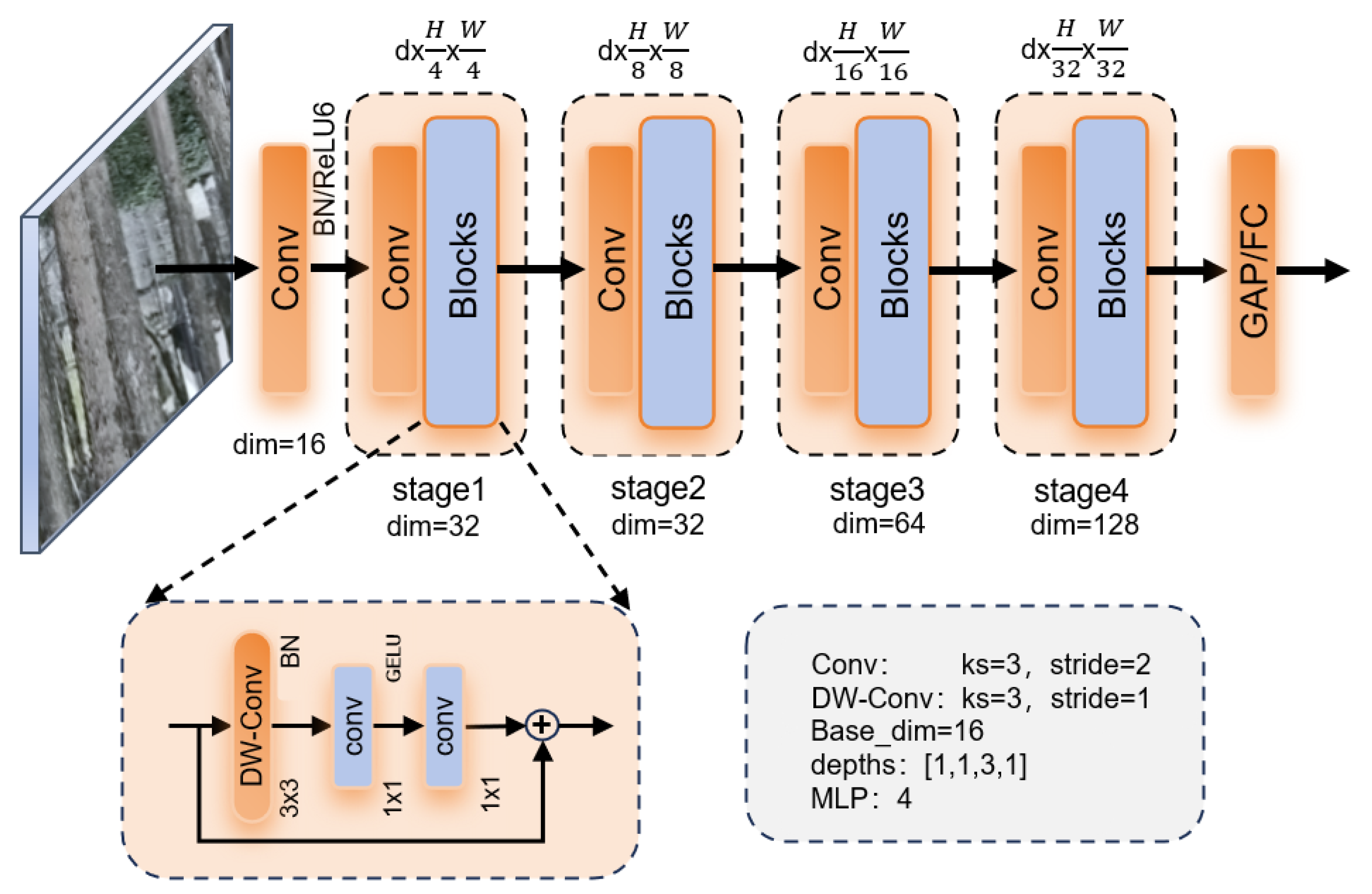

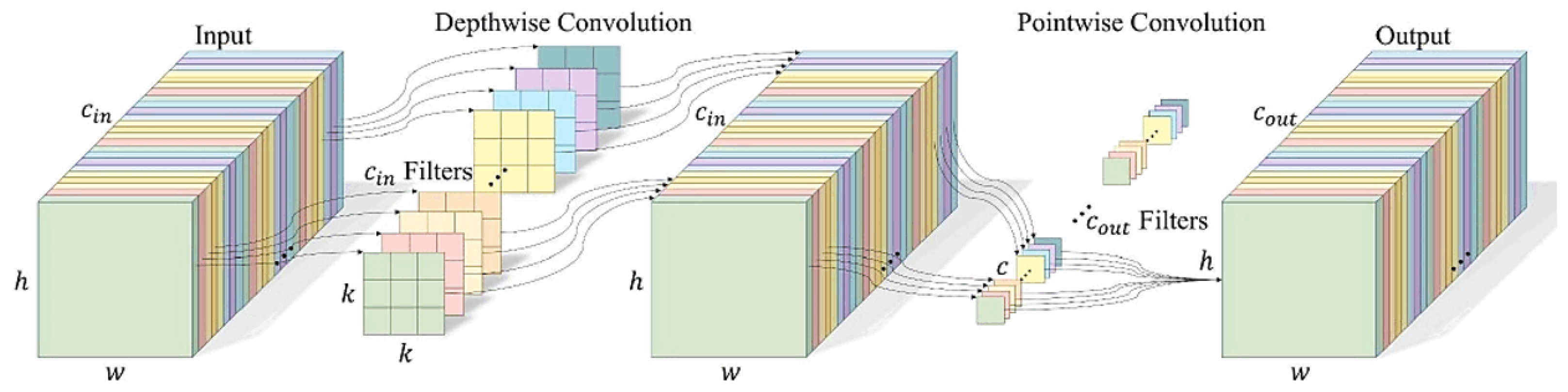

- To balance lightweight design against feature representation power [10,11,12], we propose the StarNet_Trunk backbone network. This architecture replaces traditional residual connections with element-wise multiplication and integrates 3 × 3 depthwise separable convolutions. The design reduces computational complexity and parameter count while maintaining a large receptive field (in the ablation study, adding StarNet_Trunk lowers the parameter count from 2,329,099 to 1,689,315 and the computation from 5.1 to 3.8 GFLOPs), thereby achieving efficient and robust trunk feature extraction without compromising the model’s capacity.
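To make the two ingredients named above concrete, the sketch below combines a 3 × 3 depthwise convolution with an element-wise multiplication of two pointwise branches, and compares the parameter count of a standard convolution with a depthwise separable one. It is an illustration under assumptions, not the StarNet_Trunk implementation: the layer order, the ReLU6 activation, and all function names are hypothetical.

```python
import numpy as np


def depthwise_conv3x3(x, kernels):
    """Depthwise 3 x 3 convolution with zero padding.

    x: (C, H, W); kernels: (C, 3, 3), one filter per channel.
    """
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros(x.shape, dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernels[:, dy, dx, None, None] * padded[:, dy:dy + h, dx:dx + w]
    return out


def pointwise_conv(x, weight):
    """1 x 1 convolution; weight has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def star_block(x, dw_kernels, w1, w2, w_out):
    """Depthwise conv, two pointwise branches fused by multiplication."""
    y = depthwise_conv3x3(x, dw_kernels)
    branch_a = np.clip(pointwise_conv(y, w1), 0.0, 6.0)  # ReLU6 (assumed)
    branch_b = pointwise_conv(y, w2)
    return pointwise_conv(branch_a * branch_b, w_out)  # element-wise product


def standard_conv_params(c_in, c_out, k=3):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(c_in, c_out, k=3):
    """Weights in a depthwise k x k plus a pointwise 1 x 1 convolution."""
    return k * k * c_in + c_in * c_out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(8, 16, 16))
    out = star_block(
        x,
        rng.normal(size=(8, 3, 3)),
        rng.normal(size=(16, 8)),
        rng.normal(size=(16, 8)),
        rng.normal(size=(8, 16)),
    )
    print(out.shape)                      # (8, 16, 16)
    print(standard_conv_params(64, 64))   # 36864
    print(separable_conv_params(64, 64))  # 4672
```

For 64 input and 64 output channels the separable form needs roughly one eighth of the weights of a standard 3 × 3 convolution, which is the source of the parameter savings.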

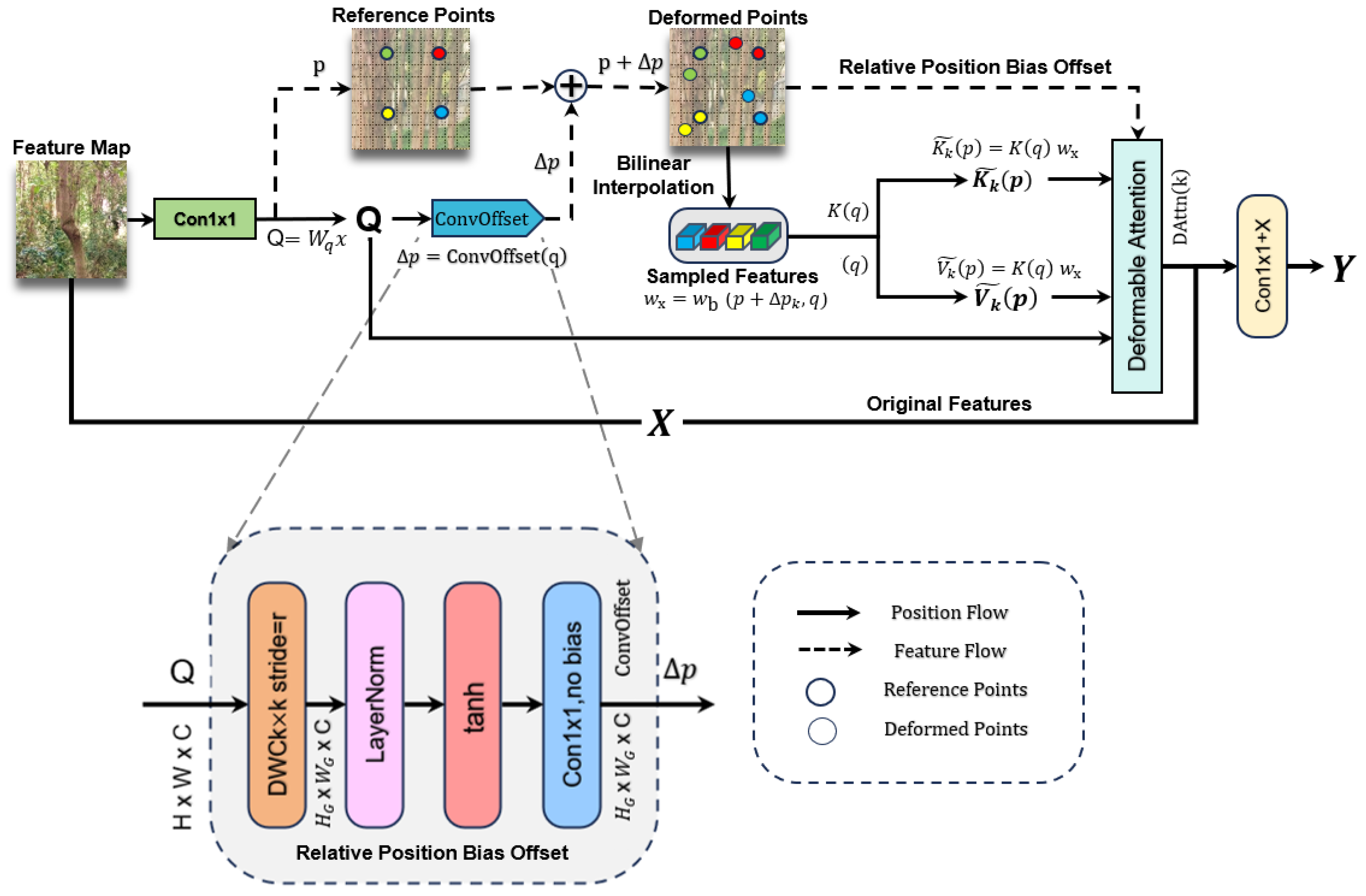

- To improve the model’s adaptability to the geometric deformation of trunks and to complex backgrounds, a limitation not fully addressed by existing attention mechanisms [15,16] or multi-modal methods [14], we designed the C2DA deformable attention module. It incorporates dynamic relative position bias encoding, allowing the model to adjust its receptive field to fit irregular trunk contours. In addition, to optimize the trade-off between detection accuracy and computational efficiency in the prediction head, we developed the dual-path EffiDet detection head. It improves detection speed and reduces parameters through feature decoupling and a dynamic anchor mechanism, which makes it well suited to edge deployment.
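The core idea of deformable attention, sampling features at reference points shifted by offsets and then attending over the samples, can be sketched as below. This is a single-query, single-head illustration under assumptions and not the C2DA module: in a real network the offsets and the position bias are predicted by learned layers, whereas here they are passed in as plain arrays, and every function and parameter name is hypothetical.

```python
import numpy as np


def bilinear_sample(feat, ys, xs):
    """Sample feat (C, H, W) at fractional pixel positions; returns (C, N)."""
    _, h, w = feat.shape
    ys = np.clip(ys, 0, h - 1)
    xs = np.clip(xs, 0, w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = ys - y0
    wx = xs - x0
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return top * (1 - wy) + bottom * wy


def deformable_attention(query, feat, ref_points, offsets, w_k, w_v, pos_bias=None):
    """Attend from one query to features sampled at deformed points.

    query: (D,); feat: (C, H, W); ref_points, offsets: (N, 2) as (y, x) pixels;
    w_k, w_v: (D, C); pos_bias: optional (N,) bias added to the attention logits.
    """
    points = ref_points + offsets  # shift the sampling grid
    sampled = bilinear_sample(feat, points[:, 0], points[:, 1])  # (C, N)
    keys = w_k @ sampled    # (D, N)
    values = w_v @ sampled  # (D, N)

    scores = query @ keys / np.sqrt(query.shape[0])
    if pos_bias is not None:
        scores = scores + pos_bias
    scores = scores - scores.max()  # numerically stable softmax
    weights = np.exp(scores) / np.exp(scores).sum()
    return values @ weights, weights


if __name__ == "__main__":
    feat = np.arange(16, dtype=float).reshape(1, 4, 4)
    ref = np.array([[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]])
    off = np.array([[0.5, 0.5], [0.0, 0.0], [0.25, -0.5]])
    out, weights = deformable_attention(
        np.zeros(2), feat, ref, off, np.ones((2, 1)), np.ones((2, 1))
    )
    print(out, weights)
```

Because the sampling positions are continuous, bilinear interpolation keeps the operation differentiable with respect to the offsets, which is what lets such a module learn where to look.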

2. Materials and Methods

2.1. Dataset Construction

2.1.1. Data Collection and Characterization

2.1.2. Data Preprocessing and Annotation Strategy

2.1.3. Synthetic Data Augmentation

2.2. Modeling

2.2.1. StarNet_Trunk Module

2.2.2. C2DA Module

2.2.3. EffiDet Module

3. Experimentation

3.1. Experimental Environment and Parameter Settings

3.1.1. Experimental Environment Configuration

3.1.2. Training Parameter Settings

3.2. Evaluation Indicators and Standards

Detection Accuracy Indicators

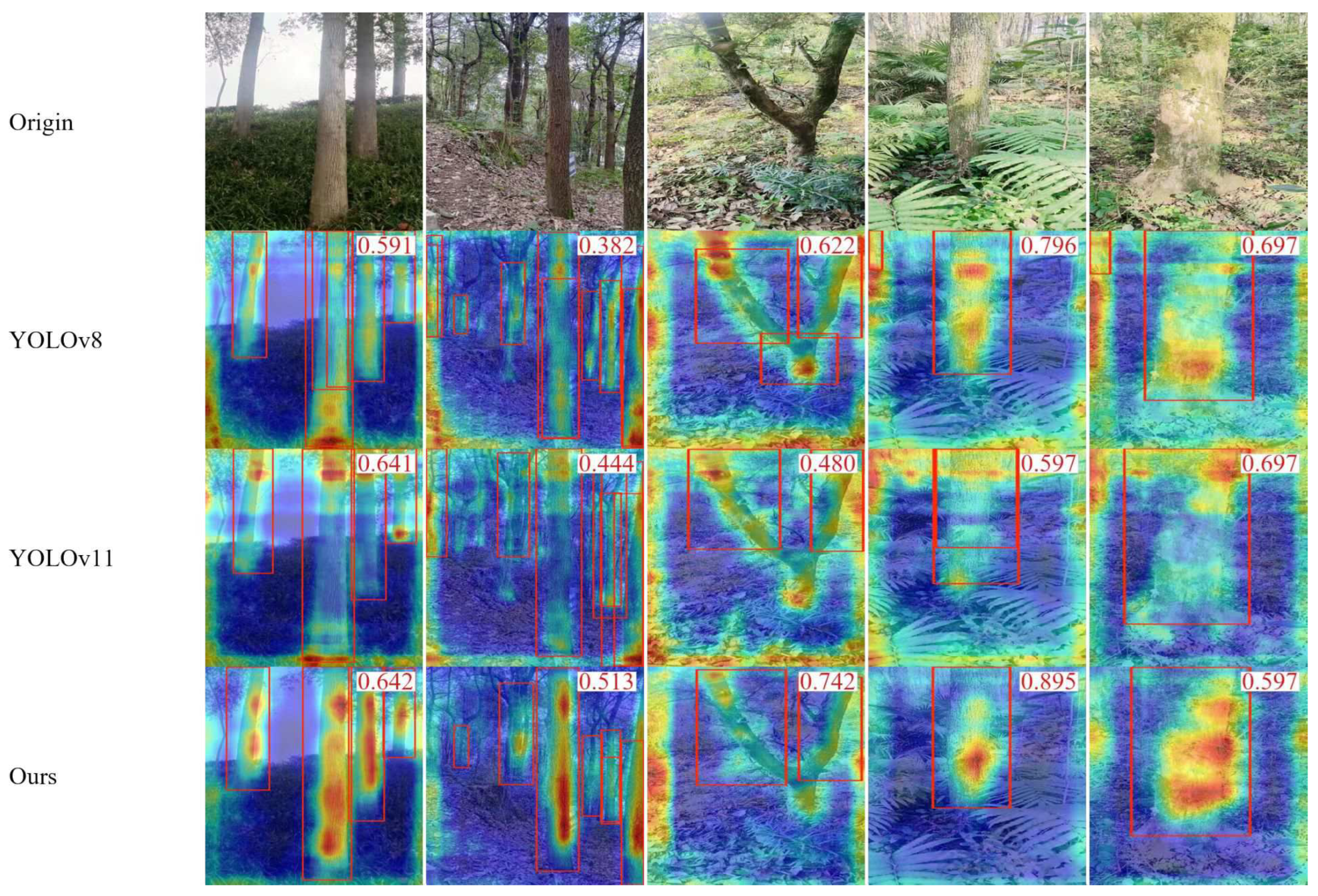

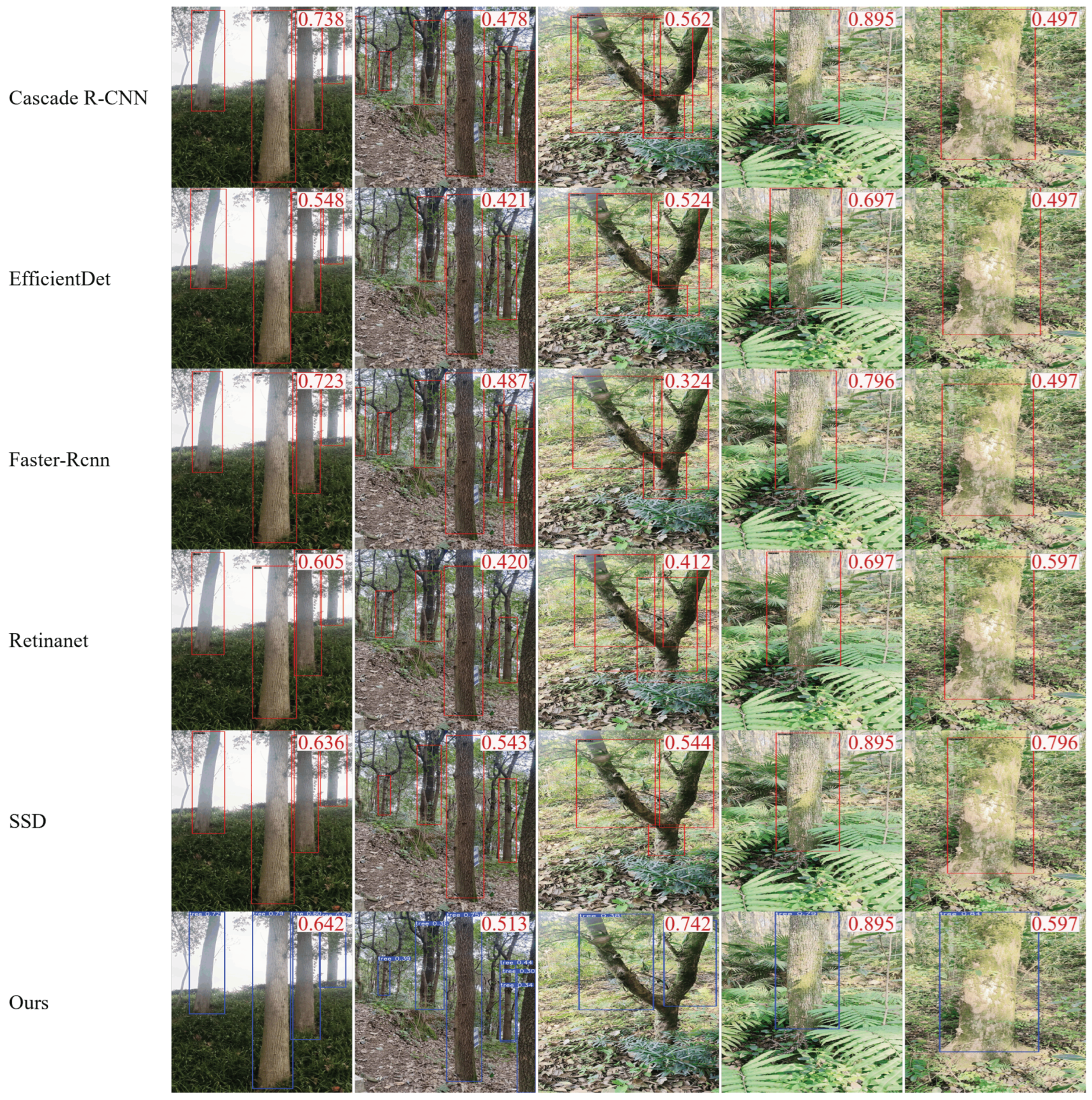
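The result tables report precision (P), recall (R), and mAP at IoU thresholds of 0.50 and 0.50–0.95. As a reminder of how the first two are conventionally obtained, the sketch below matches predictions to ground-truth boxes at a fixed IoU threshold. The greedy, confidence-ordered matching and the function names are assumptions for illustration and may differ from the evaluation code used in the experiments.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truths, iou_threshold=0.5):
    """Precision and recall for one class at a fixed IoU threshold.

    predictions: list of (confidence, box); ground_truths: list of boxes.
    Each ground-truth box can be matched by at most one prediction.
    """
    matched = set()
    true_positives = 0
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truths):
            if idx in matched:
                continue
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        if best_idx is not None and best_iou >= iou_threshold:
            matched.add(best_idx)
            true_positives += 1

    false_positives = len(predictions) - true_positives
    false_negatives = len(ground_truths) - true_positives
    precision = true_positives / (true_positives + false_positives) if predictions else 0.0
    recall = true_positives / (true_positives + false_negatives) if ground_truths else 0.0
    return precision, recall


if __name__ == "__main__":
    truths = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60))]
    print(precision_recall(preds, truths))  # (0.5, 0.5)
```

mAP50 averages the area under the precision-recall curve at an IoU threshold of 0.50, while mAP50–95 further averages that quantity over thresholds from 0.50 to 0.95 in steps of 0.05.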

3.3. Comparative Analysis

3.3.1. Comparison with Mainstream Target Detection Algorithms

3.3.2. Detection Head Comparison Experiment

3.3.3. Backbone Network Assessment

3.4. Ablation Experiments

3.4.1. Component Contribution Analysis

3.4.2. Component Analysis

4. Conclusions

4.1. Research Findings Overview

4.2. Key Innovations and Advantages

4.3. Implications and Future Deployment

5. Limitations and Future Work

5.1. Model Limitations

5.2. Future Research Directions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ambuj; Machavaram, R. Intelligent path planning for autonomous ground vehicles in dynamic environments utilizing adaptive Neuro-Fuzzy control. Eng. Appl. Artif. Intell. 2025, 144, 110119.

- Ma, Y.; Zhao, Y.; Im, J.; Zhao, Y.; Zhen, Z. A deep-learning-based tree species classification for natural secondary forests using unmanned aerial vehicle hyperspectral images and LiDAR. Ecol. Indic. 2024, 159, 111608.

- Savinelli, B.; Tagliabue, G.; Vignali, L.; Garzonio, R.; Gentili, R.; Panigada, C.; Rossini, M. Integrating Drone-Based LiDAR and Multispectral Data for Tree Monitoring. Drones 2024, 8, 744.

- da Silva, D.Q.; dos Santos, F.N.; Sousa, A.J.; Filipe, V. Visible and Thermal Image-Based Trunk Detection with Deep Learning for Forestry Mobile Robotics. J. Imaging 2021, 7, 176.

- Strunk, J.L.; Reutebuch, S.E.; McGaughey, R.J.; Andersen, H.E. An examination of GNSS positioning under dense conifer forest canopy in the Pacific Northwest, USA. Remote Sens. Appl. Soc. Environ. 2025, 37, 101428.

- Zeng, Z.; Miao, J.; Huang, X.; Chen, P.; Zhou, P.; Tan, J.; Wang, X. A Bottom-Up Multi-Feature Fusion Algorithm for Individual Tree Segmentation in Dense Rubber Tree Plantations Using Unmanned Aerial Vehicle–Light Detecting and Ranging. Plants 2025, 14, 1640.

- Gupta, H.; Andreasson, H.; Lilienthal, A.J.; Kurtser, P. Robust Scan Registration for Navigation in Forest Environment Using Low-Resolution LiDAR Sensors. Sensors 2023, 23, 4736.

- Lin, Y.C.; Liu, J.; Fei, S.; Habib, A. Leaf-Off and Leaf-On UAV LiDAR Surveys for Single-Tree Inventory in Forest Plantations. Drones 2021, 5, 115.

- Pereira, T.; Gameiro, T.; Pedro, J.; Viegas, C.; Ferreira, N.M.F. Vision System for a Forestry Navigation Machine. Sensors 2024, 24, 1475.

- Zhang, J.; Tian, M.; Yang, Z.; Li, J.; Zhao, L. An improved target detection method based on YOLOv5 in natural orchard environments. Comput. Electron. Agric. 2024, 219, 108780.

- Liu, Y.; Wang, H.; Liu, Y.; Luo, Y.; Li, H.; Chen, H.; Liao, K.; Li, L. A Trunk Detection Method for Camellia oleifera Fruit Harvesting Robot Based on Improved YOLOv7. Forests 2023, 14, 1453.

- López, P.R.; Dorta, D.V.; Preixens, G.C.; Gonfaus, J.M.; Marva, F.X.R.; Sabaté, J.G. Pay Attention to the Activations: A Modular Attention Mechanism for Fine-Grained Image Recognition. arXiv 2019, arXiv:1907.13075.

- Wu, H.; Mo, X.; Wen, S.; Wu, K.; Ye, Y.; Wang, Y.; Zhang, Y. DNE-YOLO: A method for apple fruit detection in Diverse Natural Environments. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 102220.

- Lin, Y.; Huang, Z.; Liang, Y.; Liu, Y.; Jiang, W. AG-YOLO: A Rapid Citrus Fruit Detection Algorithm with Global Context Fusion. Agriculture 2024, 14, 114.

- Wu, Y.; Chen, J.; Wu, S.; Li, H.; He, L.; Zhao, R.; Wu, C. An improved YOLOv7 network using RGB-D multi-modal feature fusion for tea shoots detection. Comput. Electron. Agric. 2024, 216, 108541.

- Liu, H.; Fan, K.; Ouyang, Q.; Li, N. Real-Time Small Drones Detection Based on Pruned YOLOv4. Sensors 2021, 21, 3374.

- Liu, Y.; Yao, J.; Lu, X.; Xie, R.; Li, L. DeepCrack: A Deep Hierarchical Feature Learning Architecture for Crack Segmentation. Neurocomputing 2019, 338, 139–153.

- O’Hanlon, J.F.; Peasnell, K.V. Residual Income and Value-Creation: The Missing Link. Rev. Account. Stud. 2002, 7, 229–245.

- Xia, Z.; Pan, X.; Song, S.; Li, L.E.; Huang, G. Vision Transformer with Deformable Attention. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022.

- Zhang, X.; Wang, Z.; Wang, X.; Luo, T.; Xiao, Y.; Fang, B.; Xiao, F.; Luo, D. STARNet: An Efficient Spatiotemporal Feature Sharing Reconstructing Network for Automatic Modulation Classification. IEEE Trans. Wirel. Commun. 2024, 23, 13300–13312.

- Xia, Z.; Pan, X.; Song, S.; Li, L.E.; Huang, G. Dat++: Spatially dynamic vision transformer with deformable attention. arXiv 2023, arXiv:2309.01430.

- Sun, G.; Liu, Y.; Ding, H.; Probst, T.; Van Gool, L. Coarse-to-fine feature mining for video semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 3126–3137.

- Mekhalfi, M.L.; Nicolò, C.; Bazi, Y.; Al Rahhal, M.M.; Alsharif, N.A.; Al Maghayreh, E. Contrasting YOLOv5, transformer, and EfficientDet detectors for crop circle detection in desert. IEEE Geosci. Remote Sens. Lett. 2021, 19, 3003205.

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual Attention Network for Image Classification. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6450–6458.

- Zhang, L.; Deng, Y.; Zou, Y. Automatic road damage recognition based on improved YOLOv11 with multi-scale feature extraction and fusion attention mechanism. PLoS ONE 2025, 20, e0327387.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Qian, L.; Chen, J.; Ma, L.; Urakov, T.; Gu, W.; Liang, L. Attention-based Shape-Deformation networks for Artifact-Free geometry reconstruction of lumbar spine from MR images. IEEE Trans. Med. Imaging 2025. Early Access.

- Zhu, H.; Zhao, W.; Li, X.; Hou, B.; Jiao, C.; Ren, Z.; Ma, W.; Jiao, L. A Semantically Non-redundant Continuous-scale Feature Network for Panchromatic and Multispectral Classification. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5407815.

| Parameter | Value |
|---|---|
| Image size (pixel × pixel) | 640 × 640 |
| Training batch | 150 |
| Data loading threads | 8 |
| Initial learning rate | 0.01 |
| Weight decay factor | 0.0004 |
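The training settings in the table above can be collected in a small configuration mapping. The key names below follow the Ultralytics training-argument convention (`imgsz`, `workers`, `lr0`, `weight_decay`); using that toolchain is an assumption, since the training framework is not named here. The "Training batch" entry is stored under a neutral, hypothetical name because the table does not say whether 150 is a batch size or a number of training rounds.

```python
# Hyperparameters transcribed from the training-parameter table.
# Key names assume an Ultralytics-style trainer; adapt them to the framework in use.
TRAIN_CONFIG = {
    "imgsz": 640,            # input image size, 640 x 640 pixels
    "workers": 8,            # data loading threads
    "lr0": 0.01,             # initial learning rate
    "weight_decay": 0.0004,  # weight decay factor
}

# Ambiguous in the source table, so it is deliberately left unmapped.
TRAINING_BATCH = 150

if __name__ == "__main__":
    for name, value in TRAIN_CONFIG.items():
        print(f"{name} = {value}")
    print(f"training batch (unmapped) = {TRAINING_BATCH}")
```

Keeping the ambiguous value out of the mapping avoids silently passing it to the trainer under the wrong argument.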

| Model | mAP50–95 (%) | mAP50 (%) | P (%) | R (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| Faster R-CNN | 54.70 | 94.80 | 92.30 | 87.60 | 60.34 | 247.81 |
| SSD | 52.20 | 91.40 | 91.00 | 82.70 | 24.38 | 87.541 |
| RetinaNet | 50.90 | 92.80 | 96.40 | 81.20 | 36.33 | 177.152 |
| EfficientDet | 52.00 | 93.70 | 87.50 | 84.90 | 18.33 | 79.786 |
| YOLOv8n | 53.60 | 92.80 | 87.10 | 85.90 | 2.87 | 8.1 |
| YOLOv11n | 54.90 | 93.00 | 93.90 | 80.10 | 2.58 | 6.3 |
| Cascade R-CNN | 56.90 | 93.30 | 91.10 | 85.40 | 88.14 | 276.18 |
| Ours | 53.30 | 92.90 | 90.80 | 85.40 | 1.68 | 3.8 |

| Model | mAP50–95 (%) | mAP50 (%) | P (%) | R (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| Aux | 54.80 | 94.10 | 84.00 | 89.20 | 2.582347 | 6.3 |
| Atthead | 44.20 | 83.20 | 82.30 | 74.50 | 2.596011 | 6.5 |
| MAN | 53.80 | 93.90 | 86.00 | 87.90 | 3.774299 | 8.4 |
| SRFD | 54.40 | 93.00 | 88.70 | 85.10 | 2.554027 | 7.6 |
| SEAMHead | 53.70 | 93.00 | 87.70 | 84.70 | 2.490571 | 5.8 |
| WFU | 54.80 | 94.00 | 86.30 | 87.80 | 3.600075 | 8.0 |
| EffiDet | 54.00 | 93.20 | 89.70 | 84.50 | 2.312139 | 5.1 |

| Model | mAP50–95 (%) | mAP50 (%) | P (%) | R (%) | Params (M) | FLOPs (G) |
|---|---|---|---|---|---|---|
| FasterNet | 54.20 | 93.30 | 92.70 | 81.20 | 3.901959 | 9.2 |
| timm | 53.60 | 92.60 | 93.60 | 80.00 | 13.056003 | 33.6 |
| EfficientViT | 55.20 | 92.50 | 88.80 | 82.30 | 3.738051 | 7.9 |
| SPDConv | 54.60 | 92.90 | 87.10 | 85.90 | 4.586827 | 11.3 |
| Swin Transformer | 54.10 | 92.50 | 89.50 | 85.40 | 29.715709 | 77.6 |
| LDConv | 53.70 | 92.70 | 90.40 | 83.20 | 2.166127 | 5.4 |
| StarNet_Trunk | 52.20 | 92.20 | 86.10 | 86.30 | 1.942563 | 5.0 |

| Baseline | C2DA | EffiDet | StarNet_Trunk | Params | FLOPs (G) | mAP50 (%) | FPS |
|---|---|---|---|---|---|---|---|
| √ | | | | 2,582,347 | 6.3 | 93.00 | 242.58 |
| √ | √ | | | 2,599,307 | 6.3 | 94.40 | 218.87 |
| √ | √ | √ | | 2,329,099 | 5.1 | 93.30 | 276.52 |
| √ | √ | √ | √ | 1,689,315 | 3.8 | 92.90 | 275.37 |
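The net effect of the three modules can be read off the first and last rows of the ablation table above. The short calculation below uses only those reported values; the helper name `relative_change` is introduced here for illustration.

```python
def relative_change(before, after):
    """Signed relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# First row (baseline) and last row (all three modules) of the ablation table.
baseline = {"params": 2_582_347, "gflops": 6.3, "map50": 93.00, "fps": 242.58}
full = {"params": 1_689_315, "gflops": 3.8, "map50": 92.90, "fps": 275.37}

for key in ("params", "gflops", "fps"):
    print(f"{key}: {relative_change(baseline[key], full[key]):+.1f}%")
print(f"map50: {full['map50'] - baseline['map50']:+.2f} points")

# Prints:
# params: -34.6%
# gflops: -39.7%
# fps: +13.5%
# map50: -0.10 points
```

In other words, the full model uses about a third fewer parameters and about 40% fewer FLOPs than the baseline, at a cost of 0.10 percentage points of mAP50.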

| Baseline | C2DA | EffiDet | StarNet_Trunk | P (%) | R (%) | mAP50–95 (%) |
|---|---|---|---|---|---|---|
| √ | | | | 93.90 | 80.10 | 54.90 |
| √ | √ | | | 89.00 | 86.20 | 54.40 |
| √ | √ | √ | | 89.00 | 83.00 | 53.20 |
| √ | √ | √ | √ | 90.80 | 85.40 | 53.30 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, S.; Zheng, Y.; Bi, R.; Chen, Y.; Chen, C.; Tian, X.; Liao, B. Trunk Detection in Complex Forest Environments Using a Lightweight YOLOv11-TrunkLight Algorithm. Sensors 2025, 25, 6170. https://doi.org/10.3390/s25196170