ED-Swin Transformer: A Cassava Disease Classification Model Integrated with UAV Images

Abstract

1. Introduction

- (1)

- Leveraging the advantages of UAV data acquisition, we employed an enhanced deep learning image classification model to accurately classify comprehensive cassava disease images captured with UAVs.

- (2)

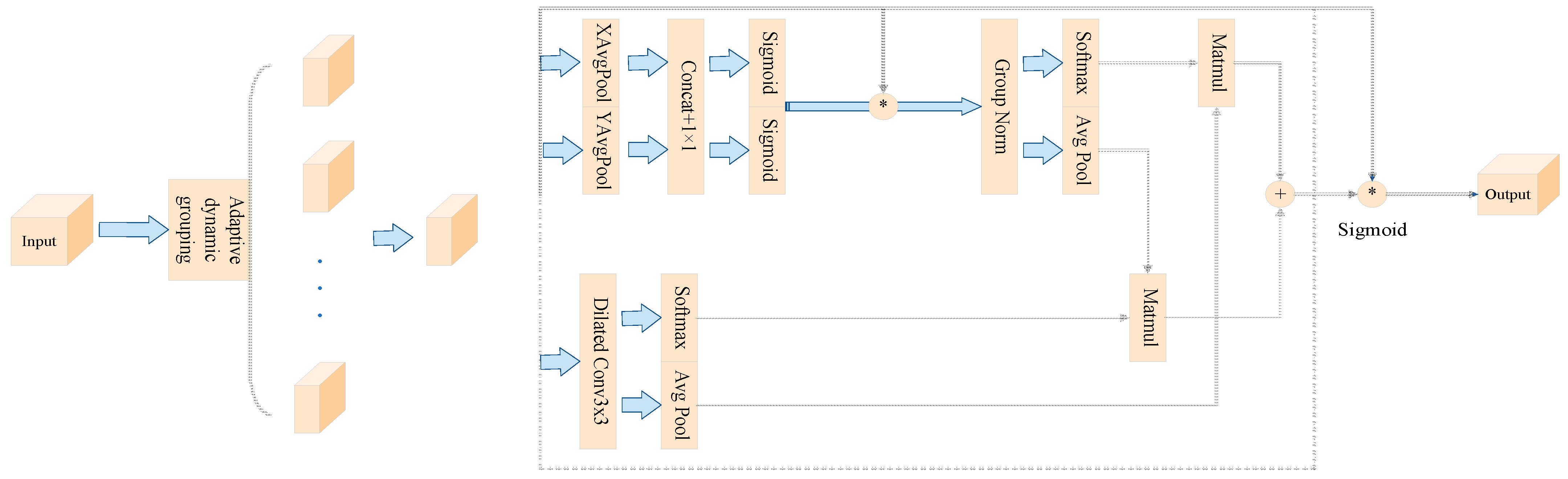

- Firstly, we introduced the EMAGE module to integrate the global distribution characteristics and local texture details of diseased leaves in UAV imagery, effectively mitigating the interference of complex background noise on feature extraction. Additionally, we incorporated dynamic grouping and dilated convolution modules, enabling the model to adaptively adjust the number of groups and fuse multi-scale information. This addresses the issues of missing global information and insufficient detail capture in key disease regions within complex backgrounds.

- (3)

- We designed the DASPP module, which employs deformable dilated convolutions to adaptively match the irregular boundaries of disease regions. This enhances the model’s robustness to morphological variations caused by angles and occlusions in low-altitude UAV imagery.
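The dilated convolution and grouping ideas in contribution (2) can be illustrated with a small, dependency-free sketch. It is not the authors' EMAGE implementation: the zero "same" padding, averaging as the rule that fuses dilation rates (1, 2, 3), and the helper names (`dilated_conv2d_same`, `multi_scale_fuse`, `conv_params`) are hypothetical choices for illustration. The rule that selects the number of groups dynamically is not described in this text, so only the weight cost of a fixed group count is shown. The sketch shows that dilation enlarges the receptive field (a 3 x 3 kernel with dilation d spans 3 + 2(d - 1) pixels per side) without adding weights, while grouping divides the weight count by the number of groups.

```python
def effective_kernel_size(k, dilation):
    """Side length spanned by a k x k kernel whose taps are `dilation` pixels apart."""
    return k + (k - 1) * (dilation - 1)


def dilated_conv2d_same(img, kernel, dilation=1):
    """Stride-1 2D cross-correlation with a dilated square kernel.

    `img` and `kernel` are lists of rows; zero padding keeps the output
    the same size as `img`.
    """
    h, w = len(img), len(img[0])
    k = len(kernel)
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(k):
                for j in range(k):
                    # Taps are spread `dilation` pixels apart around (y, x).
                    yy = y + (i - r) * dilation
                    xx = x + (j - r) * dilation
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[i][j] * img[yy][xx]
            out[y][x] = acc
    return out


def multi_scale_fuse(img, kernel, dilations=(1, 2, 3)):
    """Hypothetical fusion rule: average one kernel applied at several dilation rates."""
    maps = [dilated_conv2d_same(img, kernel, d) for d in dilations]
    h, w = len(img), len(img[0])
    return [[sum(m[y][x] for m in maps) / len(maps) for x in range(w)] for y in range(h)]


def conv_params(c_in, c_out, k, groups=1):
    """Weight count of a k x k (grouped) convolution without bias.

    Dilation does not appear: it changes where taps sample, not how many there are.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channels must be divisible by groups")
    return (c_in // groups) * c_out * k * k


# Usage: a 7x7 impulse image and a 3x3 box kernel.
impulse = [[0.0] * 7 for _ in range(7)]
impulse[3][3] = 1.0
ones = [[1.0] * 3 for _ in range(3)]

print(effective_kernel_size(3, 2))                  # 5
print(dilated_conv2d_same(impulse, ones, 2)[1][1])  # 1.0 (a dilated tap reaches the impulse)
print(multi_scale_fuse(impulse, ones)[3][3])        # 1.0
print(conv_params(64, 64, 3), conv_params(64, 64, 3, groups=4))  # 36864 9216
```

Because the dilation rate never enters `conv_params`, adding dilated branches of the same kernel size leaves the weight count per branch unchanged, which is one plausible reason the parameter counts in the ablation tables barely move.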
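The deformable dilated convolution behind DASPP in contribution (3) can likewise be sketched for a single output position. This assumes the usual deformable-convolution formulation, since this text does not give the authors' equations: each kernel tap samples the input at p0 + d·pk + Δpk, where pk is the regular grid offset of the tap, d is the dilation rate and Δpk is a learned, generally fractional shift, so the value is read with bilinear interpolation. The function names, zero padding outside the image and hand-set offsets are illustrative assumptions. In a trained model the offsets would be predicted by an extra convolution, and the per-rate branch outputs would be concatenated and projected, as in ASPP.

```python
import math


def bilinear(img, y, x):
    """Bilinearly interpolate `img` (list of rows) at fractional (y, x); zero outside."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                # Weight falls off linearly with distance along each axis.
                value += (1 - abs(y - yy)) * (1 - abs(x - xx)) * img[yy][xx]
    return value


def deformable_dilated_at(img, kernel, cy, cx, dilation, offsets):
    """One output of a deformable dilated k x k convolution centred at (cy, cx).

    offsets[i][j] = (dy, dx) shifts tap (i, j) away from its regular dilated
    position; all-zero offsets reduce this to an ordinary dilated convolution.
    """
    k = len(kernel)
    r = k // 2
    out = 0.0
    for i in range(k):
        for j in range(k):
            dy, dx = offsets[i][j]
            y = cy + (i - r) * dilation + dy
            x = cx + (j - r) * dilation + dx
            out += kernel[i][j] * bilinear(img, y, x)
    return out


def daspp_at(img, kernel, cy, cx, rates, offsets_per_rate):
    """ASPP-style parallel deformable branches, one per dilation rate.

    Returns the branch outputs as a list (the concatenation step); a real module
    would follow this with a learned 1x1 projection, omitted here.
    """
    return [
        deformable_dilated_at(img, kernel, cy, cx, d, off)
        for d, off in zip(rates, offsets_per_rate)
    ]


# Usage: a 5x5 ramp image where img[y][x] = 5*y + x, and a 3x3 box kernel.
ramp = [[5.0 * y + x for x in range(5)] for y in range(5)]
box = [[1.0] * 3 for _ in range(3)]
zero = [[(0.0, 0.0)] * 3 for _ in range(3)]
half_right = [[(0.0, 0.5)] * 3 for _ in range(3)]

print(daspp_at(ramp, box, 2, 2, rates=(1, 2), offsets_per_rate=(zero, zero)))  # [108.0, 108.0]
print(deformable_dilated_at(ramp, box, 2, 2, 1, half_right))                     # 112.5
```

Shifting every tap half a pixel to the right raises each sample of the ramp by 0.5, so the output grows by 9 × 0.5; fractional offsets of this kind are what let the sampling grid bend toward irregular lesion boundaries.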

2. Related Work

2.1. Plant Disease Identification

2.2. Attention Mechanism

2.3. Feature Extraction

3. Method

3.1. Swin Transformer

3.2. EMAGE

3.3. DASPP

4. Results

4.1. Data Preparation

4.2. Experimental Parameter Settings and Metric Evaluation

4.3. The Ablation Experiments on the ED-Swin Transformer

4.4. Effectiveness Evaluation of ED-Swin Transformer

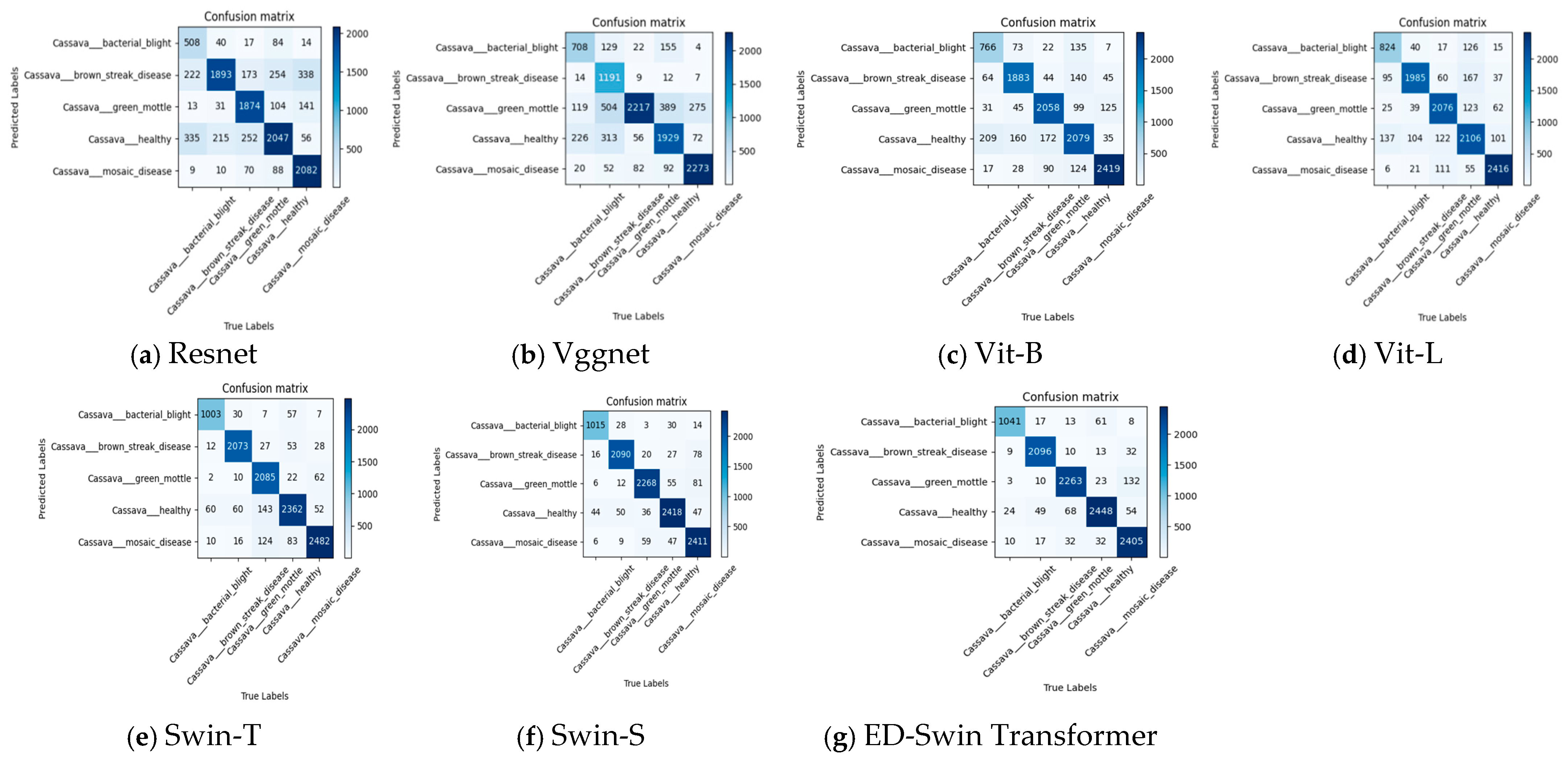

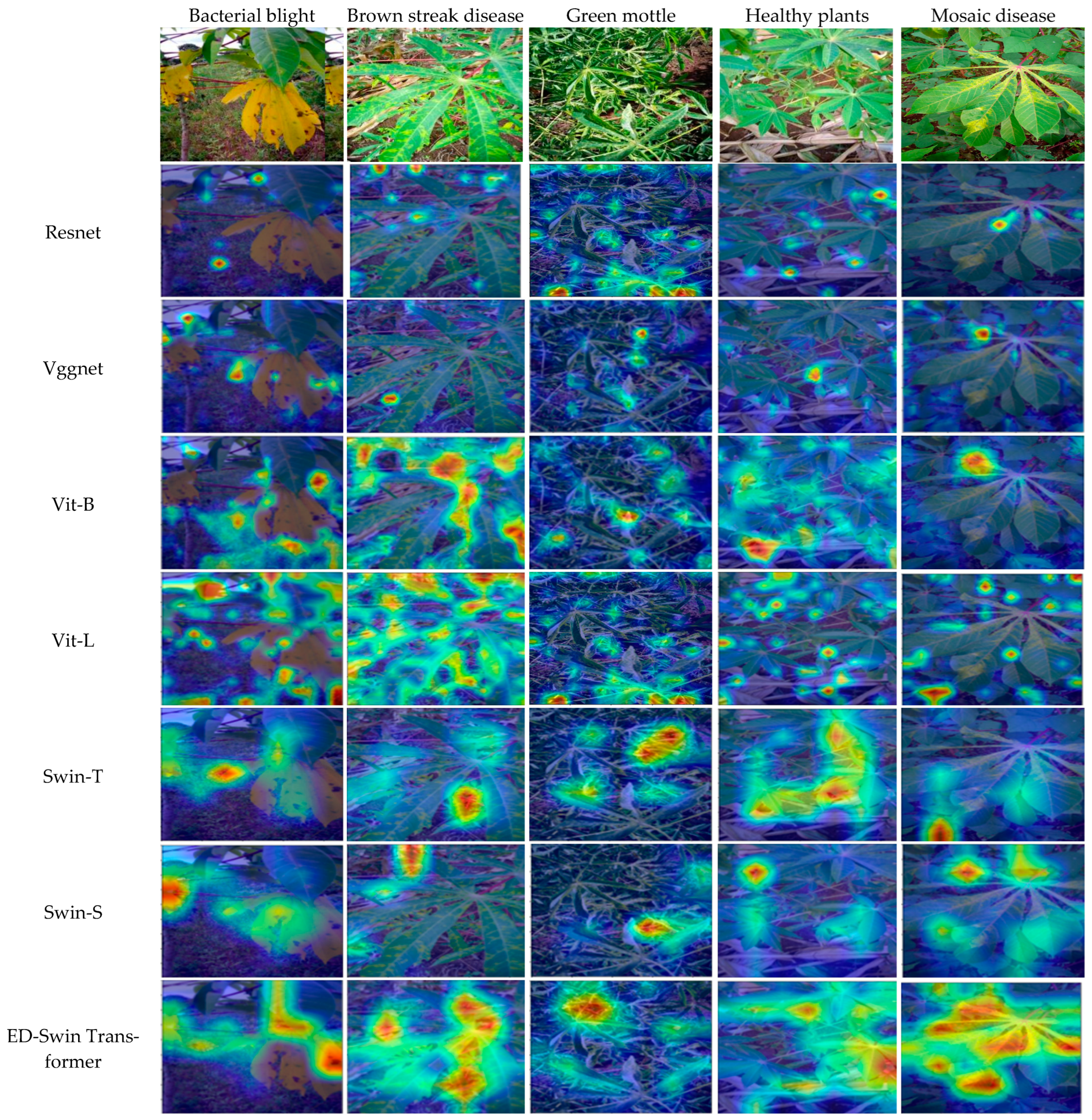

4.5. Visualized Results of Different Models in Cassava Leaf Disease Classification

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Accuracy/% | Precision/% | Recall/% | Specificity/% | F1 Score/% | Params/M | FLOPs/G |
|---|---|---|---|---|---|---|---|
| Swin Transformer | 93.04 | 92.24 | 98.18 | 86.10 | 95.12 | 48.3 | 8.5 |
| Swin Transformer + CBAM | 93.30 | 93.32 | 98.32 | 87.83 | 95.75 | 48.4 | 8.6 |
| Swin Transformer + EMA | 93.74 | 93.00 | 98.26 | 87.42 | 95.55 | 48.3 | 8.5 |
| Swin Transformer + EMAGE | 93.76 | 93.66 | 98.44 | 88.20 | 95.99 | 48.3 | 8.5 |
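The tables report accuracy, precision, recall, specificity and F1 score. A minimal sketch of how such figures are commonly derived from a multi-class confusion matrix follows. The one-vs-rest counting and macro averaging are assumptions, since the averaging scheme is not stated in this text; F1 is taken as the harmonic mean of macro precision and macro recall, which is consistent with the tabulated rows (for the Swin Transformer baseline, 2 × 92.24 × 98.18 / (92.24 + 98.18) ≈ 95.12).

```python
def one_vs_rest(cm, k):
    """(tp, fp, fn, tn) for class k; cm[i][j] counts true class i predicted as j."""
    n = len(cm)
    total = sum(map(sum, cm))
    tp = cm[k][k]
    fp = sum(cm[i][k] for i in range(n)) - tp  # other classes predicted as k
    fn = sum(cm[k]) - tp                       # class k predicted as something else
    tn = total - tp - fp - fn
    return tp, fp, fn, tn


def safe_div(a, b):
    return a / b if b else 0.0


def f1_from(precision, recall):
    """Harmonic mean of precision and recall (works for fractions or percentages)."""
    return safe_div(2 * precision * recall, precision + recall)


def macro_metrics(cm):
    """Macro-averaged one-vs-rest metrics; F1 uses macro precision and recall."""
    n = len(cm)
    precision = recall = specificity = 0.0
    for k in range(n):
        tp, fp, fn, tn = one_vs_rest(cm, k)
        precision += safe_div(tp, tp + fp) / n
        recall += safe_div(tp, tp + fn) / n
        specificity += safe_div(tn, tn + fp) / n
    accuracy = safe_div(sum(cm[k][k] for k in range(n)), sum(map(sum, cm)))
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1_from(precision, recall),
    }


# Toy 2-class confusion matrix, then a check against the baseline row above.
print(macro_metrics([[5, 1], [2, 2]]))
print(round(f1_from(92.24, 98.18), 2))  # 95.12
```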

| Model | Accuracy/% | Precision/% | Recall/% | Specificity/% | F1 Score/% | Params/M | FLOPs/G |
|---|---|---|---|---|---|---|---|
| Swin Transformer | 93.04 | 92.24 | 98.18 | 86.10 | 95.12 | 48.3 | 8.5 |
| Swin Transformer + FPN | 93.24 | 93.32 | 98.28 | 87.28 | 95.74 | 49.8 | 8.9 |
| Swin Transformer + ASPP | 93.42 | 93.40 | 98.48 | 88.30 | 95.87 | 49.1 | 8.2 |
| Swin Transformer + DASPP | 94.08 | 93.76 | 98.48 | 88.92 | 96.06 | 49.3 | 9.3 |

| Model | Accuracy/% | Precision/% | Recall/% | Specificity/% | F1 Score/% | Params/M | FLOPs/G |
|---|---|---|---|---|---|---|---|
| Swin Transformer | 93.04 | 92.24 | 98.18 | 86.10 | 95.12 | 48.3 | 8.5 |
| Swin Transformer + EMAGE | 93.76 | 93.66 | 98.44 | 88.20 | 95.99 | 48.3 | 8.5 |
| Swin Transformer + DASPP | 94.08 | 93.76 | 98.48 | 88.92 | 96.06 | 49.3 | 9.3 |
| ED-Swin Transformer | 94.32 | 94.56 | 98.56 | 89.22 | 96.52 | 49.3 | 9.3 |

| Model | Accuracy/% | Precision/% | Recall/% | Specificity/% | F1 Score/% |
|---|---|---|---|---|---|
| Swin Transformer | 97.03 | 96.14 | 95.8 | 96.19 | 96.00 |
| Swin Transformer + EMAGE | 97.42 | 96.63 | 96.38 | 96.81 | 96.50 |
| Swin Transformer + DASPP | 97.91 | 97.41 | 97.23 | 97.59 | 97.32 |
| ED-Swin Transformer | 98.43 | 98.39 | 97.91 | 98.95 | 98.14 |

| Model (per-class accuracy/%) | Bacterial Blight | Brown Streak Disease | Green Mottle | Healthy Plants | Mosaic Disease |
|---|---|---|---|---|---|
| ResNet | 76.6 | 65.7 | 86.6 | 70.5 | 92.2 |
| VGGNet | 69.5 | 96.6 | 63.3 | 74.3 | 90.2 |
| ViT-B | 80.6 | 84.7 | 89.3 | 81.9 | 92.6 |
| ViT-L | 83.3 | 95.5 | 95.4 | 79.3 | 90.1 |
| Swin-T | 90.9 | 94.5 | 95.6 | 88.2 | 91.4 |
| Swin-S | 93.1 | 93.7 | 93.6 | 93.2 | 93.8 |
| ED-Swin Transformer | 96.1 | 96.1 | 91.1 | 93.2 | 95.1 |
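A quick arithmetic check relates these per-class accuracies to the overall accuracies in the model comparison table that follows. For ResNet, ViT-B, ViT-L, Swin-T and ED-Swin Transformer, the overall accuracy equals the unweighted mean of the five per-class values; for ED-Swin Transformer, (96.1 + 96.1 + 91.1 + 93.2 + 95.1) / 5 = 94.32. This is an observation drawn from the numbers, not a definition stated in the text, and the VGGNet and Swin-S rows do not match exactly (78.78 vs. 78.18 and 93.48 vs. 93.04). The sketch below simply reproduces that check on the tabulated values.

```python
# Per-class accuracies (%) from the table above, in column order:
# Bacterial Blight, Brown Streak Disease, Green Mottle, Healthy Plants, Mosaic Disease.
PER_CLASS = {
    "ResNet": [76.6, 65.7, 86.6, 70.5, 92.2],
    "VGGNet": [69.5, 96.6, 63.3, 74.3, 90.2],
    "ViT-B": [80.6, 84.7, 89.3, 81.9, 92.6],
    "ViT-L": [83.3, 95.5, 95.4, 79.3, 90.1],
    "Swin-T": [90.9, 94.5, 95.6, 88.2, 91.4],
    "Swin-S": [93.1, 93.7, 93.6, 93.2, 93.8],
    "ED-Swin Transformer": [96.1, 96.1, 91.1, 93.2, 95.1],
}

# Overall accuracy (%) from the model comparison table.
OVERALL = {
    "ResNet": 78.32, "VGGNet": 78.18, "ViT-B": 85.82, "ViT-L": 88.72,
    "Swin-T": 92.12, "Swin-S": 93.04, "ED-Swin Transformer": 94.32,
}


def mean_class_accuracy(values):
    """Unweighted (balanced) mean of per-class accuracies."""
    return sum(values) / len(values)


for name, values in PER_CLASS.items():
    mean = round(mean_class_accuracy(values), 2)
    status = "matches" if abs(mean - OVERALL[name]) < 0.005 else "differs"
    print(f"{name}: mean {mean:.2f} vs overall {OVERALL[name]:.2f} -> {status}")
```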

| Model | Accuracy/% | Precision/% | Recall/% | Specificity/% | F1 Score/% | Params/M | FLOPs/G |
|---|---|---|---|---|---|---|---|
| ResNet | 78.32 | 74.04 | 94.24 | 60.69 | 82.93 | 25.5 | 3.8 |
| VGGNet | 78.18 | 74.74 | 94.02 | 60.60 | 83.28 | 138 | 15.4 |
| ViT-B | 85.82 | 85.40 | 96.62 | 75.14 | 90.66 | 86 | 17.6 |
| ViT-L | 88.72 | 87.84 | 97.04 | 78.65 | 92.21 | 304 | 122 |
| Swin-T | 92.12 | 92.08 | 97.98 | 85.32 | 94.94 | 28 | 4.5 |
| Swin-S | 93.04 | 92.24 | 98.18 | 86.10 | 95.12 | 48 | 8.5 |
| ED-Swin Transformer | 94.32 | 94.56 | 98.56 | 89.22 | 96.52 | 49.3 | 9.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Zhou, H.; Liu, K.; Xu, Y. ED-Swin Transformer: A Cassava Disease Classification Model Integrated with UAV Images. Sensors 2025, 25, 2432. https://doi.org/10.3390/s25082432

Zhang J, Zhou H, Liu K, Xu Y. ED-Swin Transformer: A Cassava Disease Classification Model Integrated with UAV Images. Sensors. 2025; 25(8):2432. https://doi.org/10.3390/s25082432

Chicago/Turabian Style: Zhang, Jing, Hao Zhou, Kunyu Liu, and Yuguang Xu. 2025. "ED-Swin Transformer: A Cassava Disease Classification Model Integrated with UAV Images" Sensors 25, no. 8: 2432. https://doi.org/10.3390/s25082432

APA Style: Zhang, J., Zhou, H., Liu, K., & Xu, Y. (2025). ED-Swin Transformer: A Cassava Disease Classification Model Integrated with UAV Images. Sensors, 25(8), 2432. https://doi.org/10.3390/s25082432