YOLOv11-XRBS: Enhanced Identification of Small and Low-Detail Explosives in X-Ray Backscatter Images

Abstract

1. Introduction

2. Dataset Construction

2.1. XRBS Imaging System

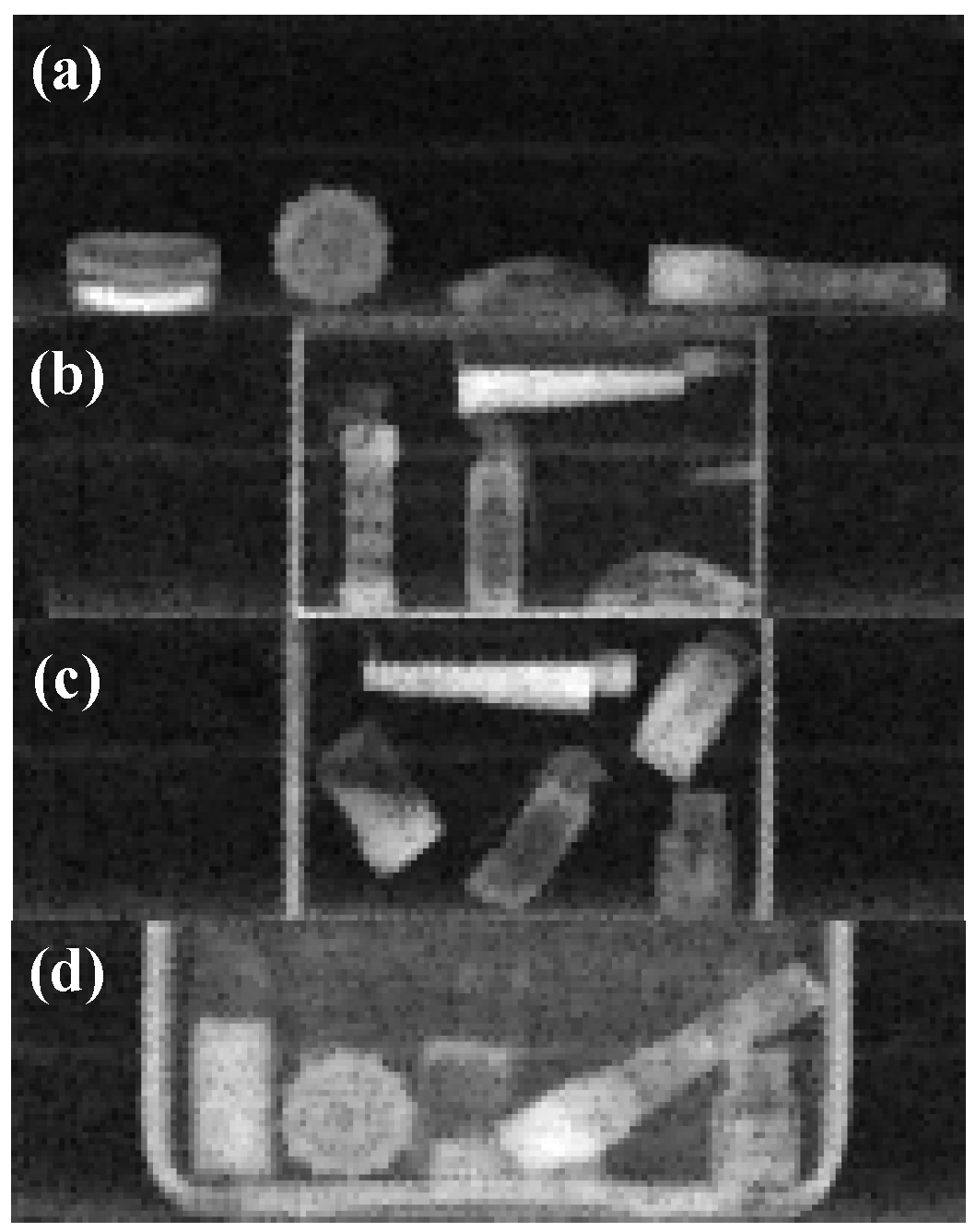

2.2. Dataset Acquisition

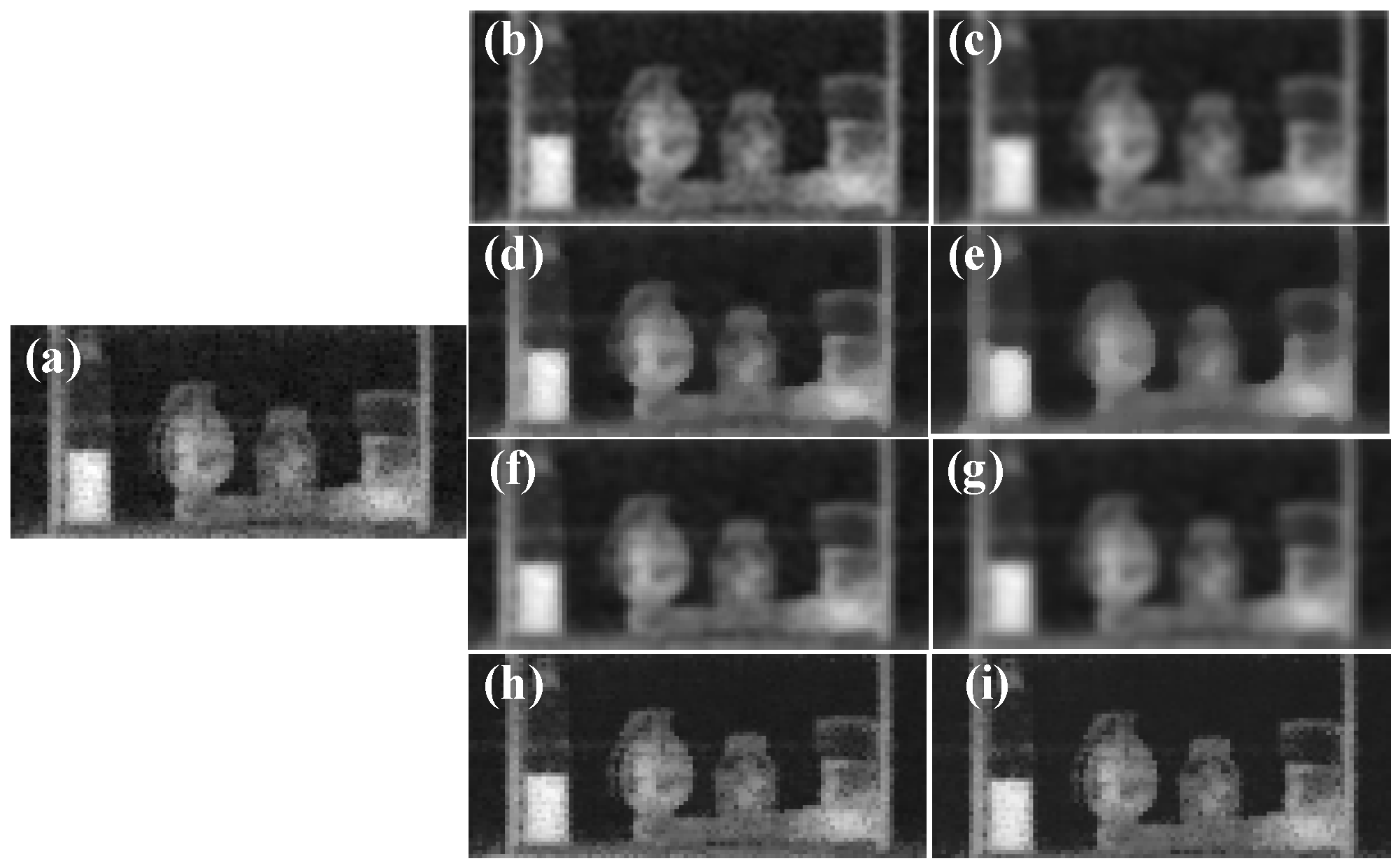

2.3. Image Processing

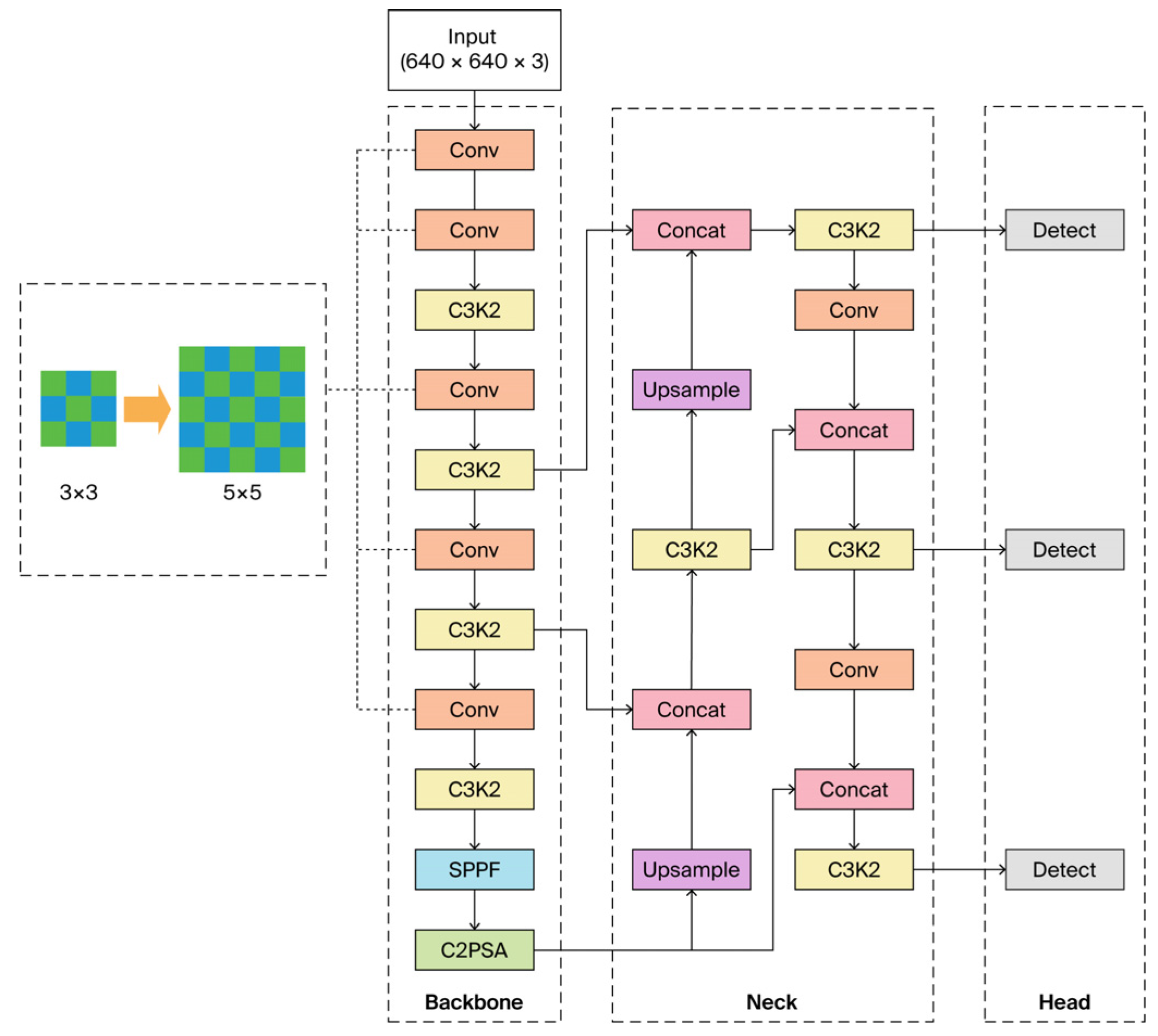

3. Algorithm Optimization

3.1. Adaptive Architecture Refinement

3.2. Size-Aware Focal Loss (SaFL)

3.3. Loss Function Recomposition

4. Results and Discussion

4.1. Ablation Study

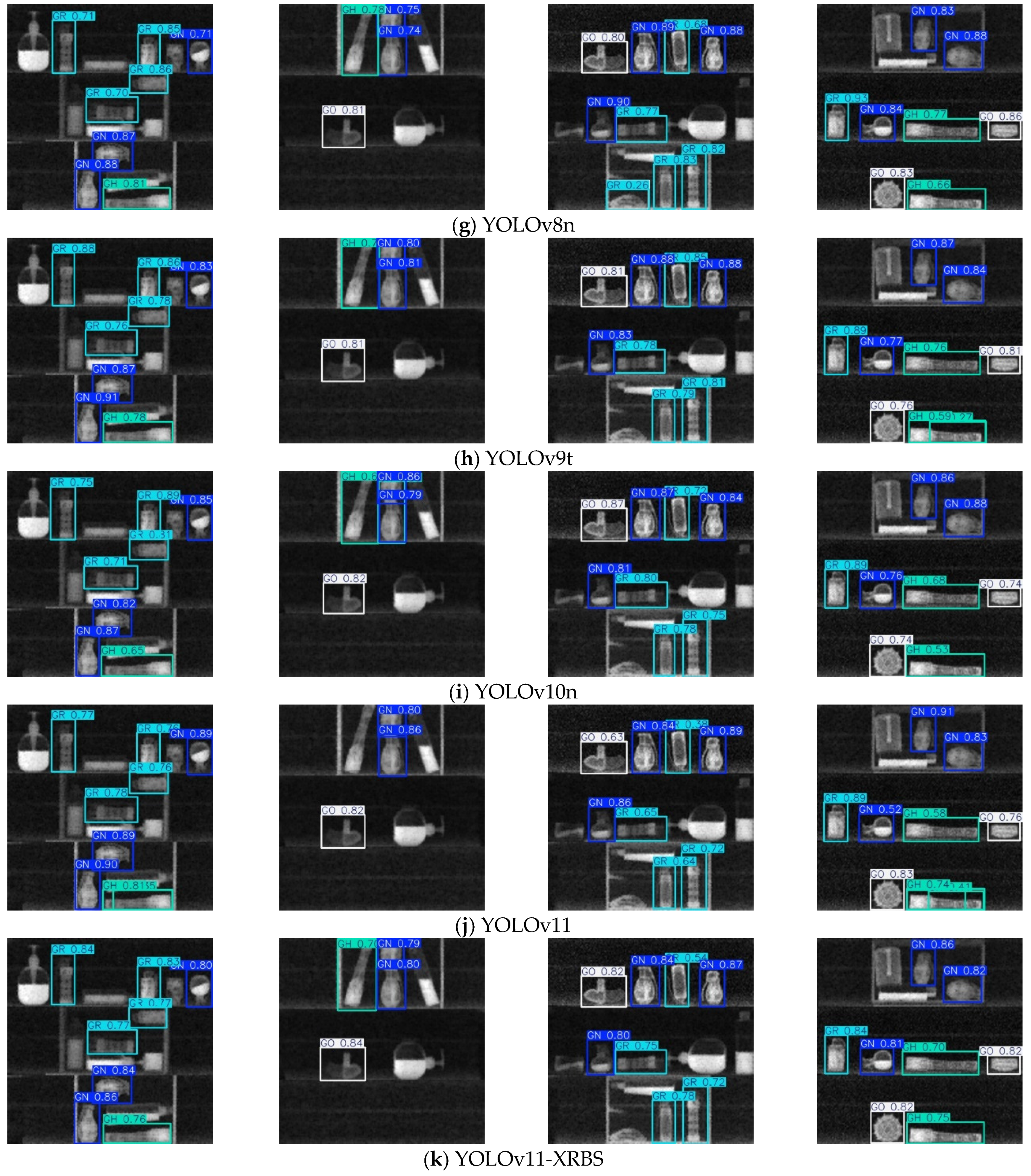

4.2. Performance Comparison

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Dual-Use Research Statement

Conflicts of Interest


| Component | Parameters |
|---|---|
| X-ray Source | 150 kV, 150 W |
| Number of Detectors | 2 |
| Detector Size | 400 mm × 200 mm × 50 mm |
| Spatial Resolution | 1 mm |
| Field of View | >30 cm |

| Filtering Methods | Figure | PSNR | SSIM | LPIPS |
|---|---|---|---|---|
| Mean Filtering | a | 27.95 | 0.86 | 0.20 |
| Mean Filtering | b | 28.08 | 0.88 | 0.22 |
| Mean Filtering | c | 27.60 | 0.88 | 0.24 |
| Median Filtering | a | 29.45 | 0.88 | 0.09 |
| Median Filtering | d | 30.21 | 0.90 | 0.06 |
| Median Filtering | e | 29.31 | 0.91 | 0.10 |
| Gaussian Filtering | a | 29.90 | 0.91 | 0.14 |
| Gaussian Filtering | f | 30.17 | 0.92 | 0.15 |
| Gaussian Filtering | g | 29.60 | 0.92 | 0.19 |
| Bilateral Filtering | a | 33.09 | 0.94 | 0.03 |
| Bilateral Filtering | h | 34.13 | 0.94 | 0.02 |
| Bilateral Filtering | i | 34.54 | 0.98 | 0.01 |
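
The PSNR column in the filtering comparison can be illustrated with a minimal sketch. It assumes the standard definition, PSNR = 10·log10(MAX² / MSE), applied to 8-bit grayscale images stored as nested lists. The function name `psnr` and the `max_value` parameter are illustrative and are not taken from the paper, which does not state how its metrics were computed.

```python
import math


def psnr(reference, filtered, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized grayscale images.

    Assumption: pixel values are on a 0..max_value scale (8-bit by default).
    """
    same_rows = len(reference) == len(filtered)
    if not same_rows or any(len(r) != len(f) for r, f in zip(reference, filtered)):
        raise ValueError("images must have the same shape")

    pixel_count = sum(len(row) for row in reference)
    squared_error = sum(
        (a - b) ** 2
        for ref_row, flt_row in zip(reference, filtered)
        for a, b in zip(ref_row, flt_row)
    )
    mse = squared_error / pixel_count
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 2x2 example: every pixel differs by 1 grey level, so MSE = 1.
reference = [[10, 20], [30, 40]]
filtered = [[11, 21], [31, 41]]
print(f"{psnr(reference, filtered):.2f} dB")
```

A higher PSNR means the filtered image is closer to the reference. Under this definition, the bilateral filtering rows would be the closest to the reference among the four methods listed.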

| Variant | Precision (%) | Recall (%) | mAP (%) |
|---|---|---|---|
| Baseline YOLOv11 | 91.0 | 92.1 | 93.8 |
| Add SaFL | 91.3 | 92.3 | 94.1 |
| Add SaFL and Loss Function Recomposition | 91.9 | 92.8 | 94.5 |
| Add SaFL, Loss Function Recomposition and Adaptive Architecture Refinement | 92.9 | 93.5 | 94.8 |

| Model | Precision (%) | Recall (%) | F1 Score (%) | mAP (%) | COCO mAP (%) |
|---|---|---|---|---|---|
| VGGNet | 84.3 | 84.9 | 85.7 | 86.3 | 63.8 |
| RetinaNet | 89.1 | 89.7 | 90.1 | 91.4 | 66.2 |
| DETR | 90.7 | 91.7 | 92.5 | 93.6 | 69.3 |
| Faster R-CNN | 90.3 | 91.0 | 90.8 | 92.1 | 66.2 |
| SSD512 | 87.2 | 87.8 | 88.1 | 89.7 | 65.7 |
| YOLOv6n | 91.6 | 92.1 | 91.8 | 93.0 | 68.0 |
| YOLOv8n | 92.7 | 93.1 | 92.9 | 94.3 | 68.6 |
| YOLOv9t | 92.3 | 92.4 | 92.3 | 93.4 | 66.6 |
| YOLOv10n | 86.8 | 85.9 | 86.3 | 91.0 | 66.6 |
| YOLOv11 | 91.0 | 92.1 | 92.7 | 93.8 | 68.4 |
| YOLOv11-XRBS | 92.9 | 93.5 | 93.2 | 94.8 | 72.2 |
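
As a small worked example of how the F1 Score column relates to precision and recall, the sketch below assumes the conventional harmonic-mean definition, F1 = 2PR / (P + R). The helper name `f1_score` is illustrative. The two input pairs are copied from the comparison table, and the paper's own evaluation code is not shown here.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both on the same scale, here percent)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# (precision %, recall %) pairs taken from the comparison table.
rows = {
    "YOLOv8n": (92.7, 93.1),
    "YOLOv11-XRBS": (92.9, 93.5),
}

for model, (precision, recall) in rows.items():
    print(f"{model}: F1 = {f1_score(precision, recall):.1f}")
# prints:
# YOLOv8n: F1 = 92.9
# YOLOv11-XRBS: F1 = 93.2
```

Because the harmonic mean always lies between its two inputs, this check is a quick way to sanity-test any reported precision, recall, and F1 triple.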

| Model | mAP (%) | AP (GN) | AP (GR) | AP (GO) | AP (GH) |
|---|---|---|---|---|---|
| VGGNet | 86.3 | 91.2 | 88.4 | 86.7 | 79.5 |
| RetinaNet | 91.4 | 96.8 | 94.1 | 91.3 | 83.7 |
| DETR | 93.6 | 97.4 | 98.4 | 93.5 | 85.1 |
| Faster R-CNN | 92.1 | 97.3 | 95.8 | 90.3 | 85.6 |
| SSD512 | 89.7 | 95.3 | 92.2 | 90.3 | 80.3 |
| YOLOv6n | 93.0 | 94.8 | 91.2 | 92.5 | 87.9 |
| YOLOv8n | 94.3 | 97.6 | 97.0 | 94.4 | 88.3 |
| YOLOv9t | 93.4 | 97.0 | 96.3 | 92.7 | 87.5 |
| YOLOv10n | 91.0 | 97.0 | 94.9 | 91.0 | 81.0 |
| YOLOv11 | 93.8 | 97.1 | 97.7 | 92.8 | 88.6 |
| YOLOv11-XRBS | 94.8 | 98.1 | 98.6 | 93.3 | 89.6 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, B.; Yang, Z.; Wang, X.; Mu, B.; Xu, J.; Li, H. YOLOv11-XRBS: Enhanced Identification of Small and Low-Detail Explosives in X-Ray Backscatter Images. Sensors 2025, 25, 6130. https://doi.org/10.3390/s25196130
