Using Android Smartphones to Collect Precise Measures of Reaction Times to Multisensory Stimuli

Abstract

1. Introduction

- We propose a reproducible methodology to assess the suitability of Android smartphones for conducting audio–tactile RT-based paradigms. This methodology includes evaluating the device’s timing precision in synchronized auditory and tactile delivery, as well as in RT logging.

- We introduce a novel approach to improve RT measurement precision on smartphones by combining touchscreen and accelerometer data.

- An Android app, Dynaspace, was developed to implement audio–tactile interaction tasks, such as the one used to study peripersonal space.

- Experimental results show that multisensory effects on RTs typically observed under controlled laboratory conditions can be replicated using a top-performing Android smartphone.

2. Related Work

3. Development Software and Measurement Chain for the Proposed Methods

3.1. Environment of Protocol Development

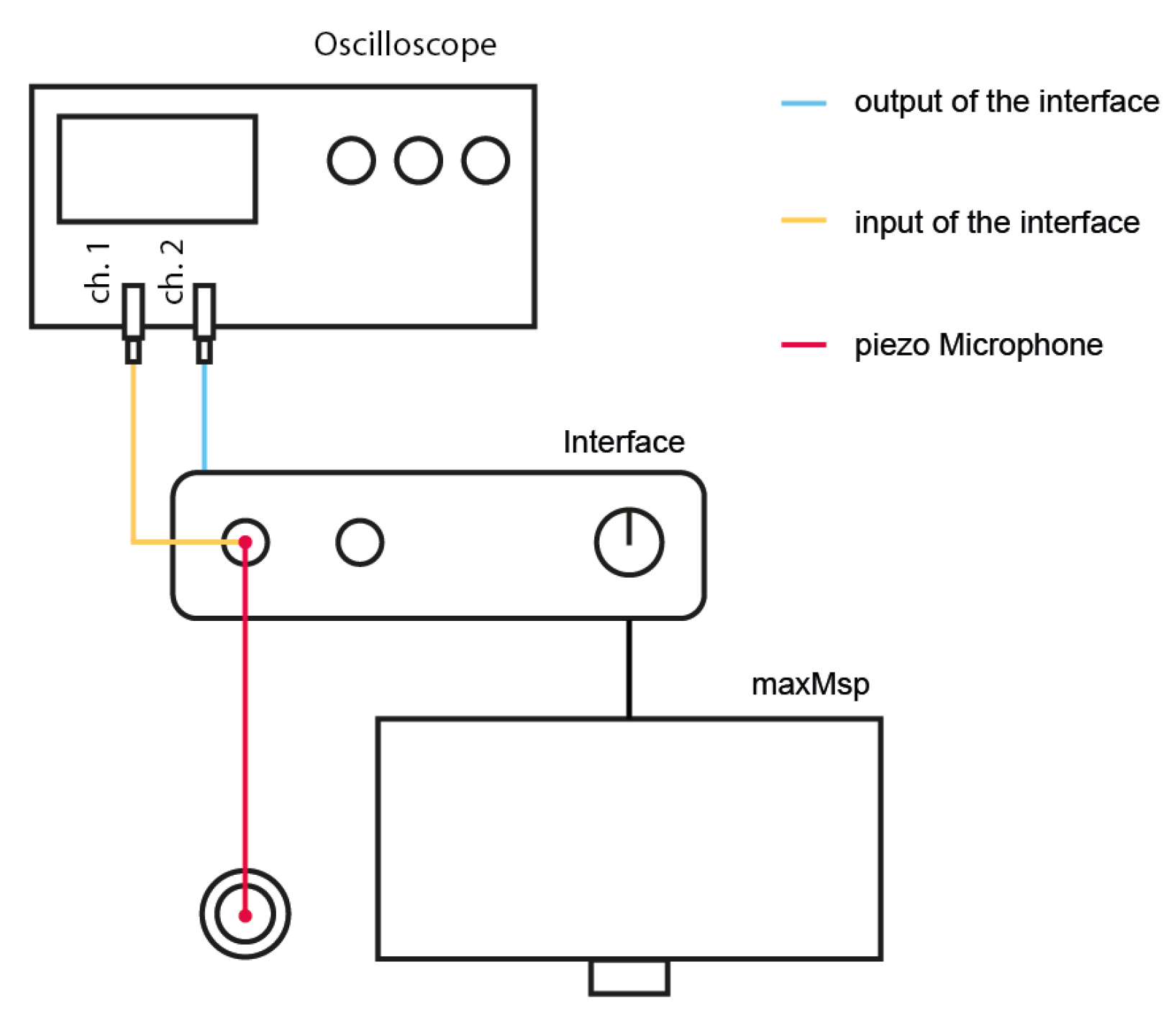

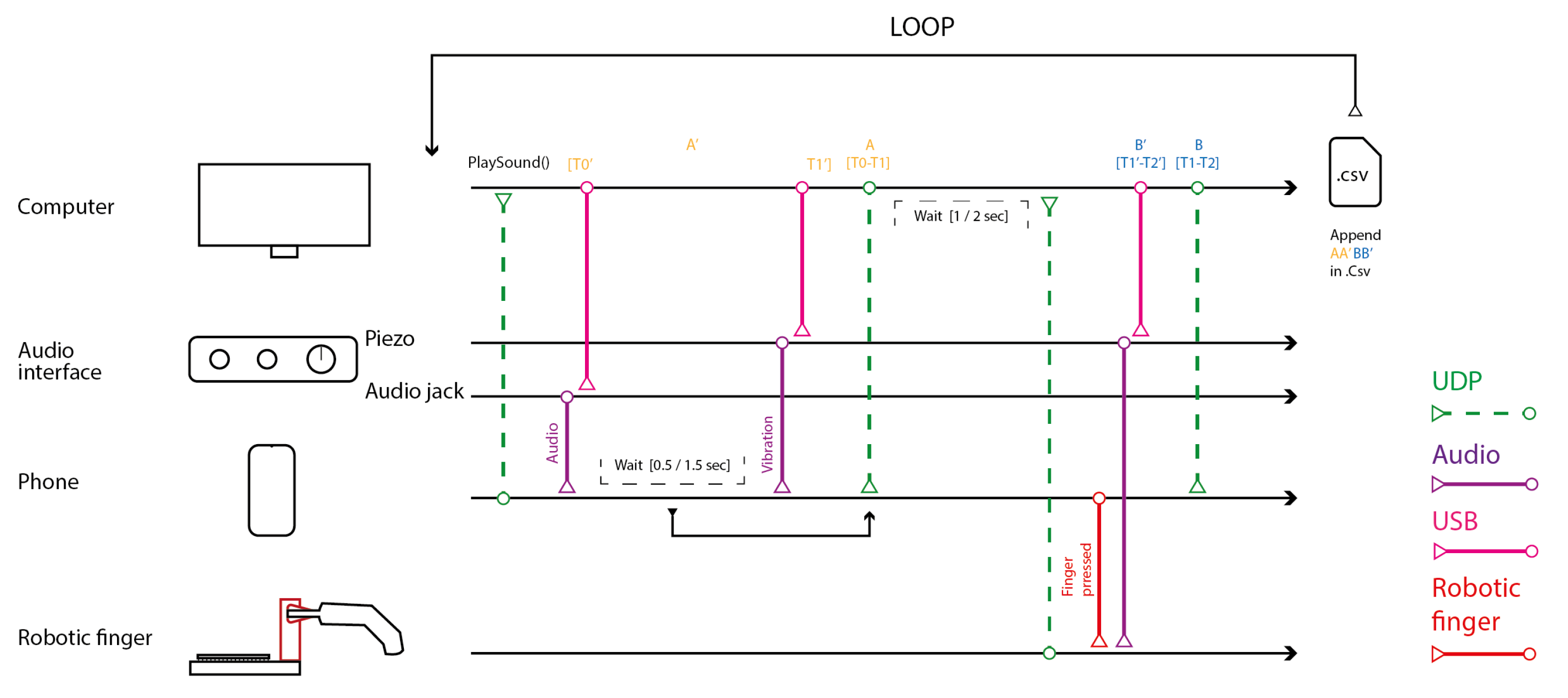

3.2. Evaluation of Timing Error Introduced by the Measurement Chain

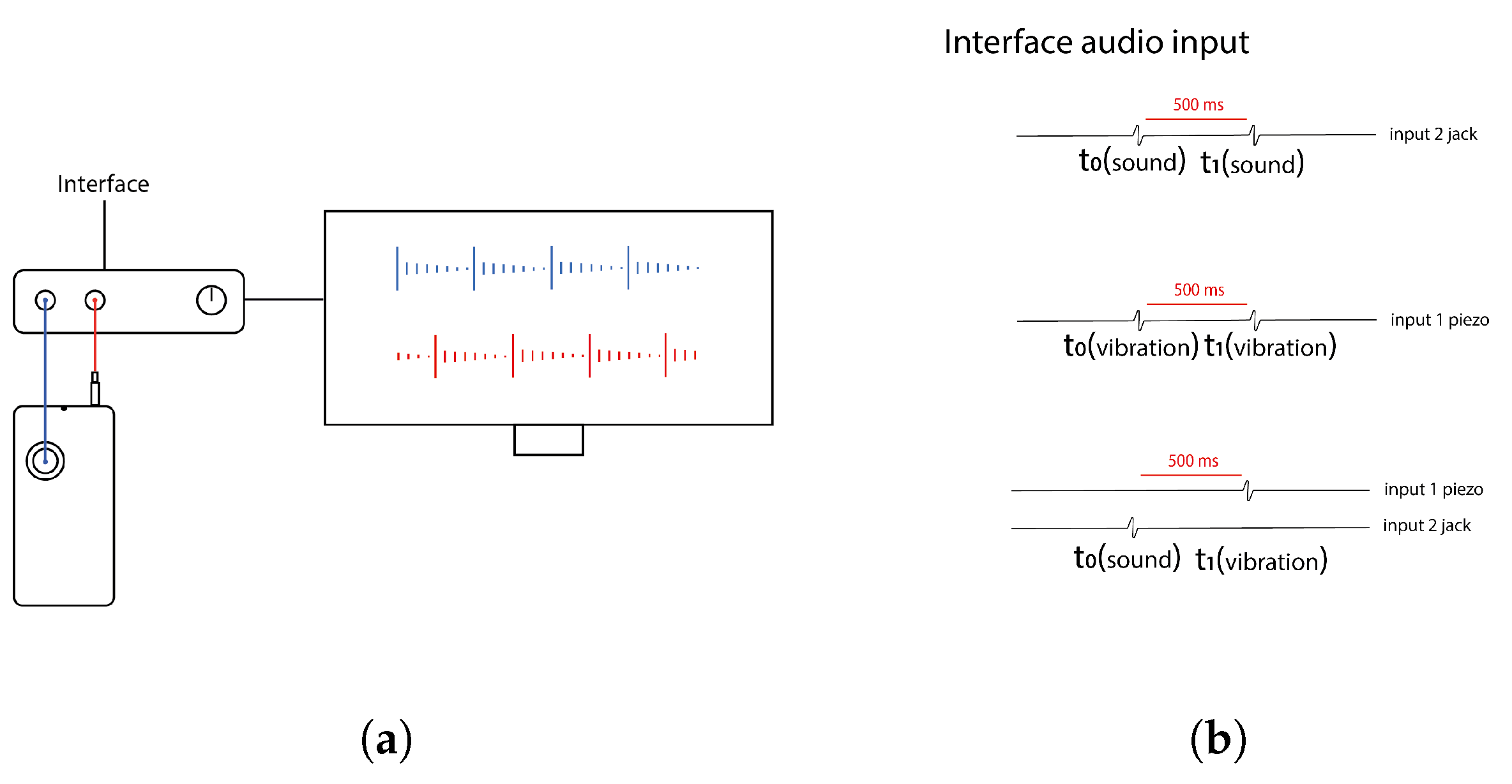

4. Assessment of a Smartphone’s Performance for Synchronized Audio–Tactile Stimulus Delivery

    // Tactile stimulation only
    while(start){
        emitVibration();
        delay(500);
    }

    // Auditory stimulation only
    while(start){
        emitSound();
        delay(500);
    }

    // Alternating auditory and tactile stimulation
    while(start){
        i++;
        if(i%2)
            emitSound();
        else
            emitVibration();
        delay(500);
    }
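
Timing logs from loops such as those above can be summarised with the same four statistics reported per stimulus type in the paper's results table (mean, minimum, maximum, and standard deviation of the timing error). The following Python sketch is illustrative only. It assumes that the measurement chain yields a list of stimulus onset timestamps in milliseconds, and that the error is the deviation of each inter-onset interval from a nominal 500 ms. The name `timing_summary` and its parameters are hypothetical and are not taken from the paper.

```python
from statistics import mean, stdev


def timing_summary(onsets_ms, expected_interval_ms=500.0):
    """Summarise how far measured inter-onset intervals deviate from the nominal one.

    onsets_ms: stimulus onset timestamps in ms (assumed output of the measurement chain).
    expected_interval_ms: nominal interval between stimuli (assumed to be 500 ms).
    """
    # Interval between each pair of consecutive onsets.
    intervals = [later - earlier for earlier, later in zip(onsets_ms, onsets_ms[1:])]
    # Signed error of each interval relative to the nominal interval.
    errors = [interval - expected_interval_ms for interval in intervals]
    return {
        "mean_ms": mean(errors),   # accuracy: average error
        "min_ms": min(errors),
        "max_ms": max(errors),
        "sd_ms": stdev(errors),    # precision: sample standard deviation of the error
    }


if __name__ == "__main__":
    # Made-up onset times, for illustration only.
    print(timing_summary([0.0, 501.0, 1003.0, 1502.0]))
```

A small mean error with a large standard deviation would indicate a device that is accurate on average but imprecise from trial to trial, which matters more for RT paradigms than a constant offset.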

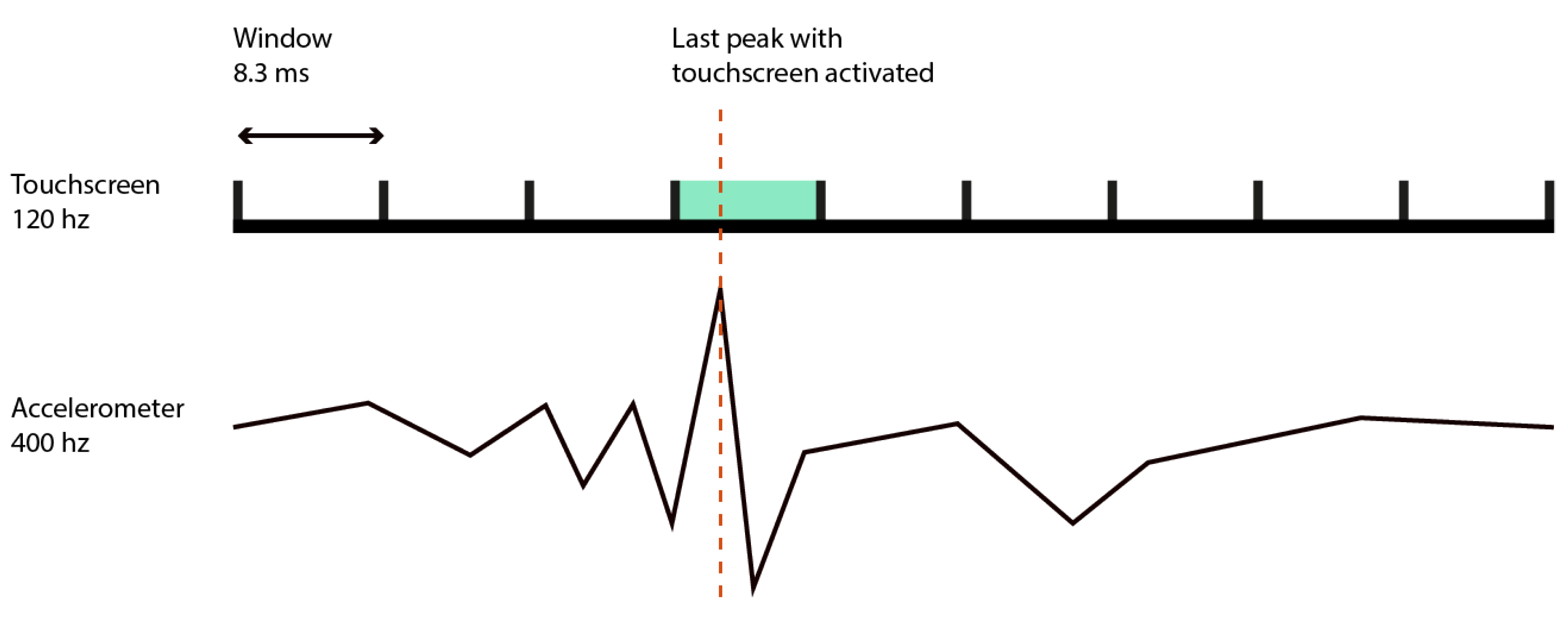

5. Enhancement of Reaction Time Measurement Precision
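
A minimal sketch of how touchscreen and accelerometer data might be combined is given here for illustration; it is not the algorithm used in the paper. It assumes that the touchscreen timestamp arrives late because of the lower touch sampling rate, and that the tap produces a detectable jump in accelerometer magnitude shortly before that timestamp. The function `refine_tap_time`, the 50 ms search window, the 100 ms baseline, and the threshold factor `k` are all hypothetical choices.

```python
from statistics import mean, pstdev


def refine_tap_time(touch_time_ms, accel_times_ms, accel_magnitude,
                    window_ms=50.0, baseline_ms=100.0, k=5.0):
    """Estimate tap onset from accelerometer data preceding a touch event.

    Hypothetical heuristic: the onset is the first accelerometer sample, within
    `window_ms` before the touch timestamp, whose magnitude exceeds the baseline
    mean by more than `k` baseline standard deviations. Timestamps are assumed
    to be sorted and expressed on the same clock as the touch timestamp.
    """
    search_start = touch_time_ms - window_ms
    samples = list(zip(accel_times_ms, accel_magnitude))

    # Baseline: quiet period immediately before the search window.
    baseline = [m for t, m in samples
                if search_start - baseline_ms <= t < search_start]
    if len(baseline) < 2:
        return touch_time_ms  # not enough data: keep the touchscreen timestamp

    threshold = mean(baseline) + k * pstdev(baseline)

    # First sample in the search window that rises above the threshold.
    for t, m in samples:
        if search_start <= t <= touch_time_ms and m > threshold:
            return t
    return touch_time_ms  # no clear onset found: keep the touchscreen timestamp


if __name__ == "__main__":
    # Synthetic 400 Hz accelerometer trace with a tap at 232.5 ms (made-up data).
    times = [i * 2.5 for i in range(121)]
    mags = [12.0 if t >= 232.5 else 9.81 + 0.01 * (-1) ** i
            for i, t in enumerate(times)]
    print(refine_tap_time(241.0, times, mags))
```

The fallback to the touchscreen timestamp keeps the estimate no worse than the touch event alone when the accelerometer signal is missing or ambiguous.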

6. Assessment of Five Smartphones’ Suitability for Audio–Tactile Reaction Time Paradigms

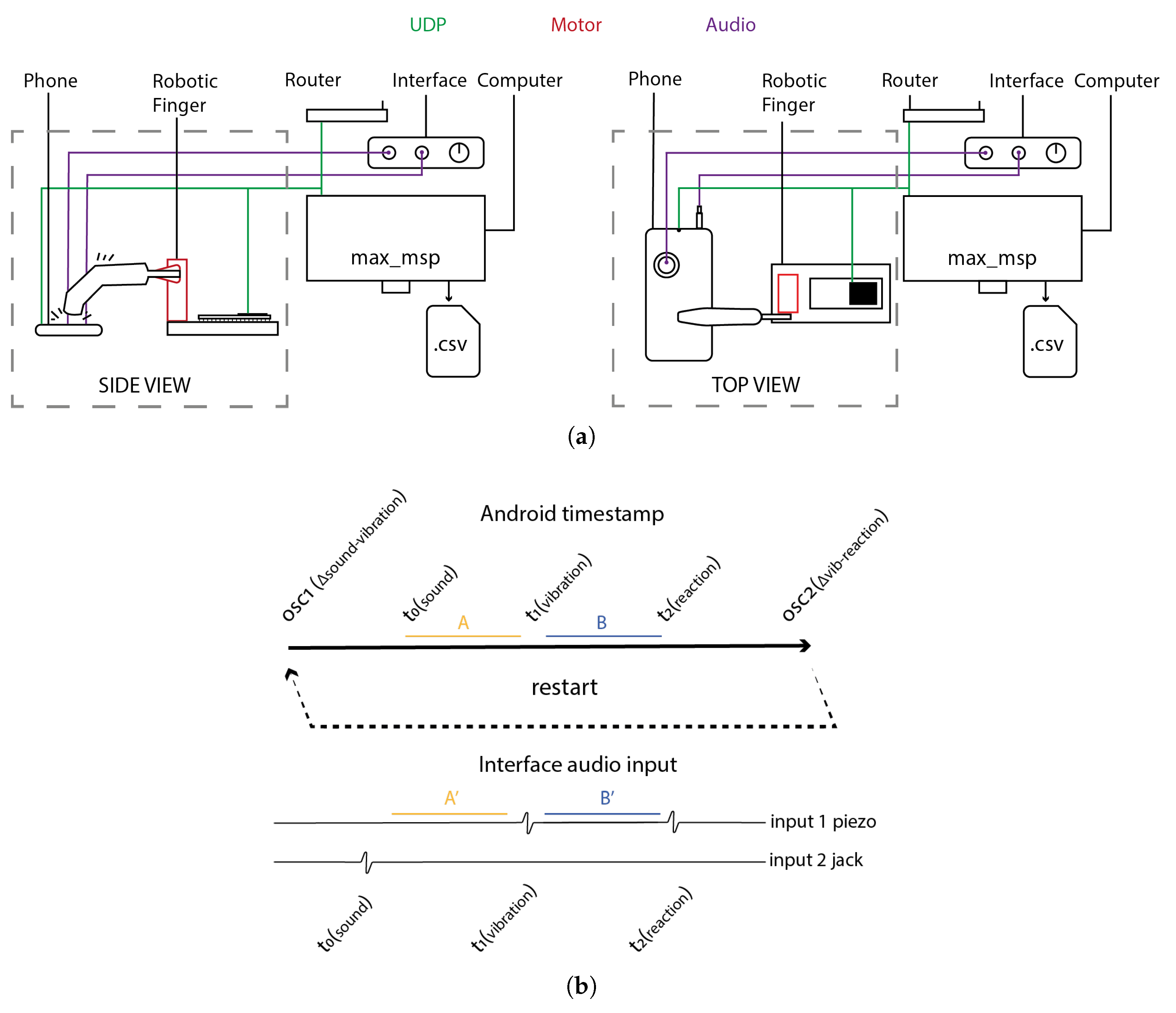

6.1. Methodology

    while(start){
        delta_1 = rand(500,2500);
        sendOSC(delta_1);
        emitSound();
        delay(delta_1);
        emitVibration();
        while(!catchOnset.get()){}   // busy-wait until an onset is captured
        timeOnset = catchOnset.get();
        catchOnset.reset();
        delta_2 = timeOnset - delta_1;
        sendOSC(delta_2);
    }
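
The arithmetic of one iteration of the loop above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' firmware: it assumes that `timeOnset` is measured from the moment the sound is emitted and that all values are in milliseconds, so that `delta_2` is the time of the captured onset relative to the programmed vibration time. The function `run_trial` and the `detect_onset_ms` callback are hypothetical stand-ins for the hardware.

```python
import random


def run_trial(rng, detect_onset_ms):
    """Simulate one trial and return (delta_1, delta_2) in ms.

    rng: a random.Random instance used to draw the sound-to-vibration delay.
    detect_onset_ms: hypothetical callback standing in for the onset capture;
        it receives delta_1 and returns the onset time measured from the
        sound emission (an assumption about the reference point).
    """
    delta_1 = rng.randint(500, 2500)        # random delay, both bounds included here
    time_onset = detect_onset_ms(delta_1)   # stands in for catchOnset.get()
    delta_2 = time_onset - delta_1          # onset relative to the vibration command
    return delta_1, delta_2


if __name__ == "__main__":
    rng = random.Random(0)
    # Made-up device that responds 34 ms after the vibration command.
    for _ in range(3):
        print(run_trial(rng, lambda d: d + 34))
```

Because `delta_1` is drawn afresh on every trial, the onset cannot be anticipated from the sound, which is why the delay is subtracted before the value is sent.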

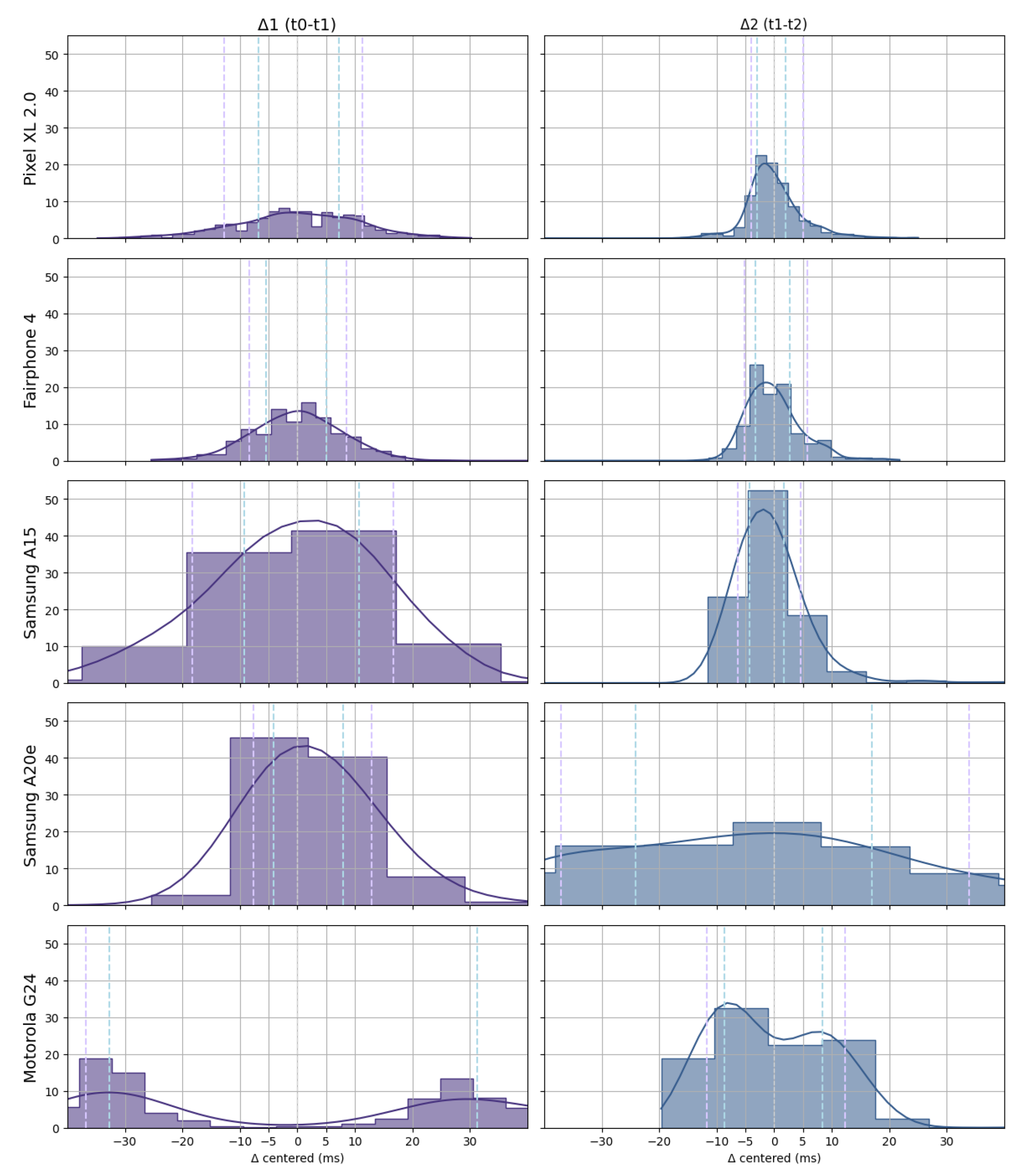

6.2. Results

6.3. Indicator of Smartphone Performances

7. Behavioral Validation

7.1. Methods

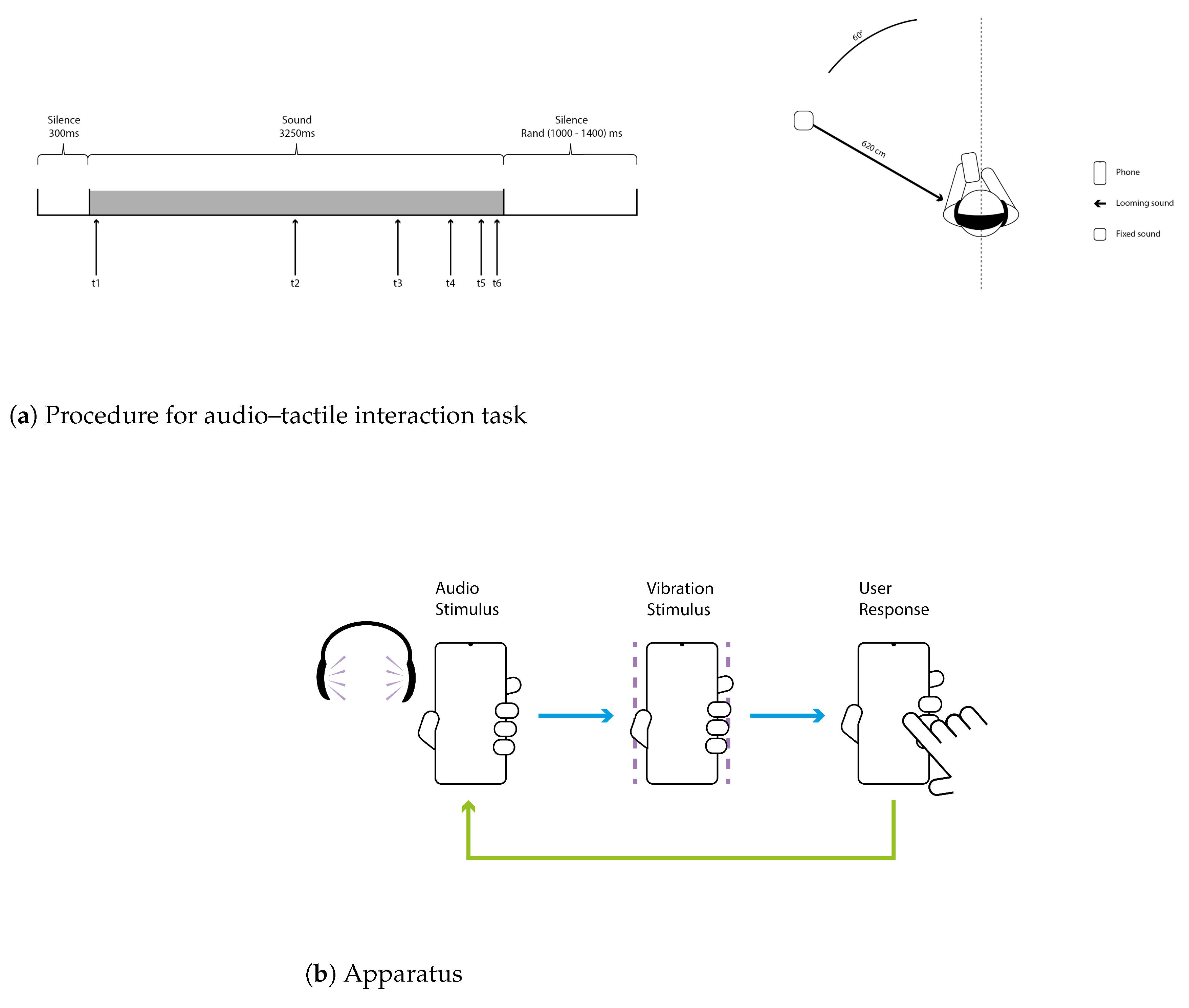

7.1.1. Dynaspace: Implementation of the Audio–Tactile Paradigm

7.1.2. Participants

7.1.3. Experimental Setup and Stimuli

7.1.4. Experimental Procedure

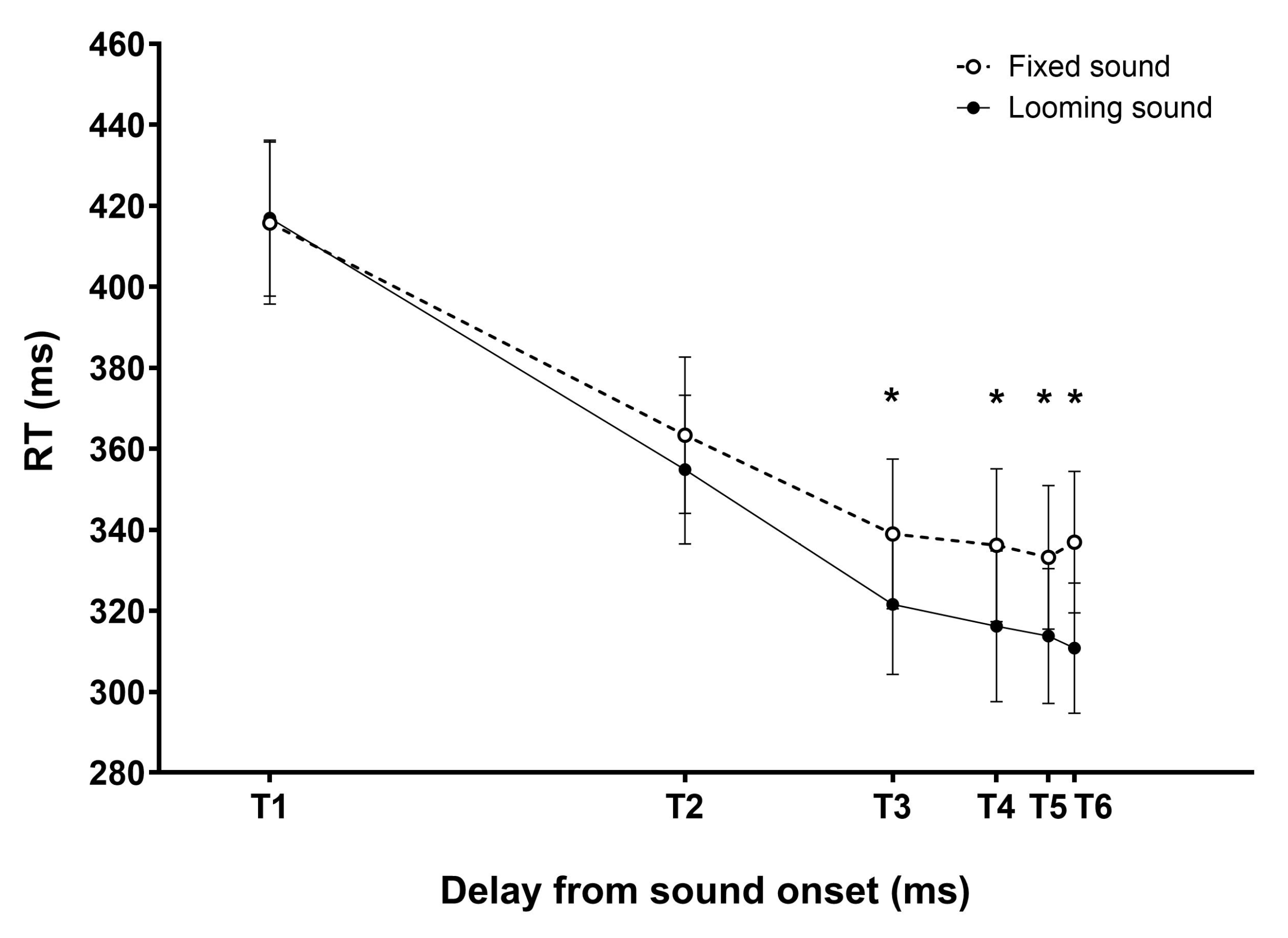

7.2. Results

8. Discussion

9. Limitations and Future Work

10. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | Accuracy | Precision | | |
|---|---|---|---|---|
| Stimulus | Mean (ms) | Min (ms) | Max (ms) | SD (ms) |
| Auditory | +12.66 | −0.021 | +2 | 0.963 |
| Tactile | +1.41 | −19.833 | +23.125 | 3.625 |
| Auditory + Tactile | +32.085 | +28.776 | +37.188 | 0.2001 |

| Manufacturer | Model | Year | Android | RAM (GB) | Processor |
|---|---|---|---|---|---|
| Google | Pixel 2 XL | 2017 | v11 | 4 | Snapdragon 835 |
| Samsung | Galaxy A20e | 2019 | v11 | 3 | Exynos 7884 |
| Samsung | Galaxy A15 | 2023 | v14 | 4 | MediaTek Dimensity 6100 |
| Fairphone | 4 | 2021 | v13 | 8 | Snapdragon 750G |
| Motorola | G24 | 2024 | v14 | 4 | MediaTek Helio G85 |

| | Samsung A20 | Samsung A15 | Fairphone 4 5G | Motorola G24 | Pixel 2 XL |
|---|---|---|---|---|---|
| In/out latency | ~120 ms | ~80 ms | 60 ms | ~110 ms | 30 ms |
| hasLowLatencyFeature | false | true | true | true | true |
| hasProFeature | false | false | true | false | true |
| Accelerometer frequency | ~100 Hz | 320 Hz | >400 Hz | >400 Hz | >400 Hz |
| Touchscreen frequency | 120 Hz | 90 Hz | 60 Hz | 90 Hz | 120 Hz |
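
The accelerometer and touchscreen frequencies in the table above translate directly into a temporal resolution: a sensor sampled at frequency f reports a new value every 1000/f ms, so an event can be time-stamped up to one sampling period late. The short Python sketch below works this out for a few of the tabulated values. It considers ideal periodic sampling only and ignores any further operating system or driver latency; the function name `sampling_period_ms` is hypothetical.

```python
def sampling_period_ms(frequency_hz):
    """Return the interval between two consecutive samples, in milliseconds."""
    return 1000.0 / frequency_hz


if __name__ == "__main__":
    # Frequencies taken from the table; 400 Hz is the lower bound of ">400 Hz".
    examples = {
        "touchscreen at 60 Hz": 60,
        "touchscreen at 90 Hz": 90,
        "touchscreen at 120 Hz": 120,
        "accelerometer at 100 Hz": 100,
        "accelerometer at 320 Hz": 320,
        "accelerometer at 400 Hz": 400,
    }
    for label, frequency in examples.items():
        print(f"{label}: one sample every {sampling_period_ms(frequency):.2f} ms")
```

On the devices whose accelerometer runs above 400 Hz, the accelerometer period is at most 2.5 ms, several times shorter than the touchscreen period, which is consistent with using accelerometer data to refine touchscreen-based RTs.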


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Roussel, U.; Fléty, E.; Agon, C.; Viaud-Delmon, I.; Taffou, M. Using Android Smartphones to Collect Precise Measures of Reaction Times to Multisensory Stimuli. Sensors 2025, 25, 6072. https://doi.org/10.3390/s25196072
