Abstract

Accurate and reliable vehicle localization is essential for autonomous driving in complex outdoor environments. Traditional feature-based visual–inertial odometry (VIO) suffers from sparse features and sensitivity to illumination, limiting robustness in outdoor scenes. Deep learning-based optical flow offers dense and illumination-robust motion cues. However, existing methods rely on simple bidirectional consistency checks that yield unreliable flow in low-texture or ambiguous regions. Global navigation satellite system (GNSS) measurements can complement VIO, but often degrade in urban areas due to multipath interference. This paper proposes a multi-sensor fusion system that integrates monocular VIO with GNSS measurements to achieve robust and drift-free localization. The proposed approach employs a hybrid VIO framework that utilizes a deep learning-based optical flow network, with an enhanced consistency constraint that incorporates local structure and motion coherence to extract robust flow measurements. The extracted optical flow serves as visual measurements, which are then fused with inertial measurements to improve localization accuracy. GNSS updates further enhance global localization stability by mitigating long-term drift. The proposed method is evaluated on the publicly available KITTI dataset. Extensive experiments demonstrate its superior localization performance compared to previous similar methods. The results show that the filter-based multi-sensor fusion framework with optical flow refined by the enhanced consistency constraint ensures accurate and reliable localization in large-scale outdoor environments.

1. Introduction

Advancements in autonomous driving sensor performance and efficient processing capabilities for large-scale multi-sensor data have contributed significantly to the development of autonomous vehicle technology. These improvements have also facilitated the widespread adoption of deep learning-based approaches, which effectively address the limitations of traditional methods and improve robustness in complex environments. A typical autonomous driving architecture utilizing onboard sensors consists of perception, localization and mapping, path planning, decision-making, and vehicle control [1]. To achieve a fully autonomous driving system, each component must maintain accuracy and completeness while operating in a seamlessly integrated manner. In particular, localization, which estimates the position of the vehicle, is crucial for both ensuring the performance of autonomous driving systems and enabling safe navigation in urban environments [2]. However, achieving accurate and drift-free vehicle localization remains challenging due to sensor noise, signal degradation, and environmental factors. This challenge has motivated extensive research into methods capable of robustly integrating multiple sensor modalities, including visual, inertial measurement unit (IMU), and global navigation satellite system (GNSS) data, to overcome the limitations of conventional approaches.

The widely used approach for vehicle localization involves fusing in-vehicle sensor data with satellite-based information. A navigation system integrating GNSS and IMU has been extensively utilized in various vehicle localization algorithms [3,4,5]. The IMU, an onboard vehicle sensor, continuously tracks position and orientation at a high frame rate from a given initial position. However, it suffers from drift over time due to accumulated errors. In contrast, GNSS provides global positioning information using satellite signals, making it free from drift errors. However, GNSS-based navigation systems are susceptible to environmental factors such as multipath interference or signal outages. The GNSS/IMU fusion method mitigates IMU drift errors by applying GNSS measurement corrections, thus improving vehicle position accuracy. This complementary relationship enables robust short-term motion estimation with long-term position corrections. However, fusion performance is heavily dependent on the reliability of GNSS updates, which can vary significantly with the surrounding environment. In environments such as tunnels or dense urban areas with tall buildings, satellite signals remain susceptible to interference, leading to deterioration in the performance of GNSS/IMU integration [6,7]. As alternative positioning systems, pseudolite-based approaches have been investigated to complement GNSS in urban and smart city environments [8]. Nevertheless, in such challenging urban environments, GNSS/IMU integration alone is insufficient to ensure reliable localization, highlighting the need for additional sophisticated fusion strategies.

To overcome these limitations, intelligent vehicles can improve navigation accuracy by fusing sensor data from onboard sensors, with visual–inertial odometry (VIO) emerging as a promising approach for multi-sensor integration and heterogeneous data fusion. VIO, which fuses IMU data to solve the scale recovery problem in monocular camera-based visual odometry (VO), estimates the pose of the sensors by compensating for IMU drift using image measurements. This technology is crucial for vehicles and is also applicable in mobile robotics and virtual reality [9]. With advancements in computer vision, VIO has evolved from traditional image feature-based approaches to more recent methods that utilize deep neural networks [10,11]. Feature-based approaches focus on extracting robust feature points that maintain consistency across image frames, achieving real-time performance [12,13]. However, factors such as featureless images, low-light conditions, and fast movement can degrade the performance of feature-based VIO [14]. In particular, feature-based VIO is limited by sparse features, sensitivity to illumination and texture variations, and can fail in challenging visual conditions, representing an unresolved problem in the field. To address such issues, learning-based methods employ deep neural networks to directly infer motion from raw image sequences, allowing them to remain effective even under poor lighting or blurred images. Approaches leveraging deep learning have been applied either as hybrids replacing parts of traditional VIO architectures or as end-to-end pose estimation methods, offering various alternatives to traditional VIO. However, existing deep optical flow-based approaches often rely on simple bidirectional flow consistency constraints that yield unreliable flow in low-texture or ambiguous regions, a problem that remains critical in challenging scenarios. Furthermore, end-to-end approaches exhibit lower pose estimation performance compared to traditional methods.

This paper proposes a multi-sensor fusion system that integrates monocular-based VIO and GNSS measurements for vehicle localization. The proposed method adopts a hybrid approach to VIO, replacing traditional image feature extraction by leveraging a deep optical flow network. The optical flow network predicts dense optical flow from image pairs and extracts robust optical flow from the predicted flow using an enhanced consistency constraint. The extracted flow is used as visual measurements, which are then fused with IMU data in the VIO system, and a multi-sensor fusion framework is formed by incorporating GNSS measurement. To integrate the sensor data and estimate the system state, an extended Kalman filter (EKF) is employed. Finally, the performance of the proposed method is validated using the publicly available KITTI dataset [15]. The main contributions of the proposed approach are summarized as follows:

- An integrated framework combining a hybrid VIO approach employing deep networks with GNSS measurements is proposed. The enhanced consistency constraint is applied to the predicted optical flow to selectively extract high-confidence measurements, which are then incorporated into the VIO framework. The framework is applied to real sensor-based datasets, enhancing scalability through sensor fusion while maintaining general applicability.

- A filter-based multi-sensor fusion strategy is proposed to enhance the localization accuracy of the vehicle. The proposed strategy can be applied to large-scale outdoor environments and prevent the accumulation of errors over time during long-term driving.

- The proposed method is tested on real-world data and compared with leading approaches. The results demonstrate the superiority of the proposed method in terms of localization accuracy.

The remainder of this paper is organized as follows: Section 2 discusses the existing works on VO/VIO and multi-sensor fusion approaches. Section 3 introduces the proposed system in detail. Section 4 focuses on presenting the experimental setup and provides a comprehensive analysis of the results. Finally, Section 5 presents the conclusion of the paper and potential future works.

2. Related Work

2.1. Representative VO/VIO Methods

Traditional feature-based VO/VIO approaches have utilized feature detectors such as SIFT [16], SURF [17], or ORB [18] to extract feature points from images. The extracted keypoints are continuously tracked across successive camera frames using tracking algorithms such as Kanade–Lucas–Tomasi (KLT) [19]. The movement of these keypoints in image pixels can be represented by sparse optical flow [20], which can then be used for camera pose estimation. Many VO/VIO methods and simultaneous localization and mapping (SLAM) techniques have been developed based on this approach. PTAM [9], which uses the FAST corner detector [21], divides the tracking and mapping sequences, applying the keypoint idea and optimizing the map using bundle adjustment. ORB-SLAM [10] overcomes the passive initialization drawback of PTAM with a parallel-thread structure divided into tracking, local mapping, and loop closure, making it one of the most widely used methods. However, monocular camera-based VO systems face the scale recovery problem, which can be overcome by combining the system with an IMU in VIO. MSCKF [22] is a filter-based approach that merges measurements from multiple camera frames as constraints. ROVIO [23] applies a direct method using photometric error in monocular VIO and updates the state using an EKF. VINS-Mono [13] is an optimization-based approach that employs IMU pre-integration [24] and uses a sliding window to maintain robust pose estimation performance. However, these feature-based approaches can still suffer from degraded VO/VIO performance due to factors such as featureless images, low-light conditions, and fast movement, which can affect the feature point extraction process.

Recent advancements in deep learning for computer vision have highlighted the use of convolutional neural networks (CNNs) as an alternative to traditional handcrafted features by applying them to images. Deep networks for end-to-end VO/SLAM have been proposed to directly predict relative poses between image frames through supervised learning [25,26,27] or unsupervised learning [28,29,30]. VINet [31] proposed a deep network architecture for learning end-to-end VIO sequences. However, in terms of pose estimation performance, end-to-end methods are inferior compared to traditional VO/VIO approaches. As a hybrid approach that combines deep learning algorithms with geometry-based VO, DF-VO [32] estimates optical flow and depth from deep networks, recovers scale, and estimates poses by applying geometry constraints. Based on DF-VO, a dynamic object-aware VO system, Dynamic VO [33], maintains the lightweight optical flow backbone while incorporating semantic segmentation to identify and filter dynamic objects. In addition, an extended DF-VO framework, Deep Depth-Flow odometry [34], integrates a high-pricision flow network, RAFT [35] with IMU data fusion. PLD-SLAM [36] proposed a method utilizing deep learning-based segmentation and K-means clustering to filter dynamic objects. However, long-term navigation sequences and large-scale outdoor environments with non-static objects in urban settings still lead to drift errors in these methods. Moreover, employing high-precision flow networks or integrating additional deep models generally increases computational overhead.

2.2. Global-Aware Multi-Sensor Fusion Methods

GNSS provides globally referenced location information and can be combined with various vehicle onboard sensors to address drift issues that local estimation methods may face during long-term navigation [37]. The fusion of visual, IMU, and GNSS information has shown the potential to offer long-term localization solutions in outdoor environments [38]. Recent hybrid localization frameworks combine inertial measurements with sporadic position updates to enhance robustness and reduce drift [39]. Similarly, IC-GVINS [40] integrates GNSS and visual-inertial odometry in a tightly-coupled, INS-centric framework, achieving robust real-time pose estimation even under GNSS outages. GVINS [41] tightly integrates GNSS raw measurements with VINS, performing global pose estimation in both indoor and outdoor environments. In [42], GNSS measurements are used to enhance VINS-Mono through graph optimization. However, GNSS signal-free conditions may compromise the robustness and accuracy of these approaches. The combination of VIO and GNSS can alleviate drift and scale uncertainties, but challenges still exist in system fusion due to failures of individual systems, particularly in complex urban environments.

As global recognition fusion methods using deep learning, ref. [43] proposed a method that fuses GNSS and IMU data using a recurrent neural network (RNN). In [2], an approach was proposed that uses long-short-term memory (LSTM) to fuse GNSS and IMU data for vehicle localization. In [44], an end-to-end global positioning system modeling sequential data with LSTM was proposed. These deep learning-based methods introduce novel flexibility in modeling complex sensor dynamics, but their dependency on locally collected data and limited generalization across unseen environments remain challenges. Continued efforts are needed to enhance the robustness and adaptability of these models for deployment in diverse and dynamically changing environments.

3. Methodology

3.1. Overview of Proposed System

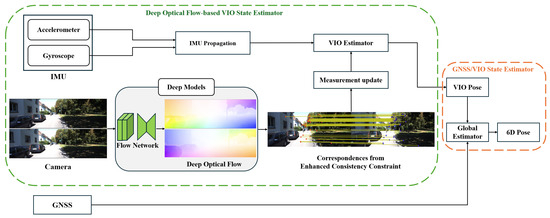

Figure 1 presents an overview of the proposed multi-sensor fusion system. Depending on the input sensor configuration, the system is divided into two components. The deep optical flow-based VIO state estimator fuses image and IMU data to estimate the VIO pose. IMU accelerometer and gyroscope measurements are propagated between consecutive image frames. A deep learning-based optical flow prediction network is employed to extract deep optical flow for all the image pixels. From this dense information, reliable and robust optical flow is extracted through the enhanced consistency constraint, forming the visual measurement model. The VIO estimator then fuses the IMU and camera data within the EKF framework to estimate the state of the system. The GNSS/VIO state estimator combines global GNSS data and the pose from the VIO estimator to estimate the final vehicle pose. The proposed system integrates multi-sensor information based on the EKF framework, balancing local accuracy and global consistency.

Figure 1.

Framework of the proposed multi-sensor fusion localization system.

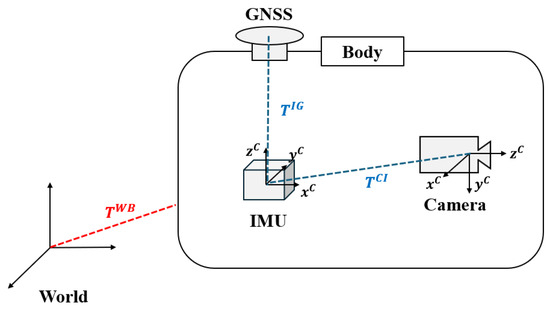

The IMU and camera attached to the vehicle each have their own coordinate systems, while GNSS uses earth-centered earth-fixed (ECEF) rectangular coordinates based on the Earth’s center of mass for reference. Therefore, to fuse multi-sensor data, the different coordinate systems of each sensor must be aligned, and the extrinsic parameters between the sensors are assumed to be known. The coordinate systems used in the proposed approach are defined as the world frame , the body frame , the IMU frame , the camera frame , the ECEF frame , and the global frame of GNSS . Figure 2 illustrates the relationships among the major coordinate frames. In the camera frame, the pixel frame is denoted as , and the normalized image frame is denoted as . The homogeneous representation of a point in the pixel frame is expressed as . The relationship between the pixel frame and the normalized frame is defined using the intrinsic matrix from the pinhole camera model K as [45]. The rotation matrix represents the rotation from frame B to frame A. In this paper, it is assumed that the sensors are rigidly connected, with the camera frame defined as the body frame.

Figure 2.

Relationships of the coordinate frames and transformation.

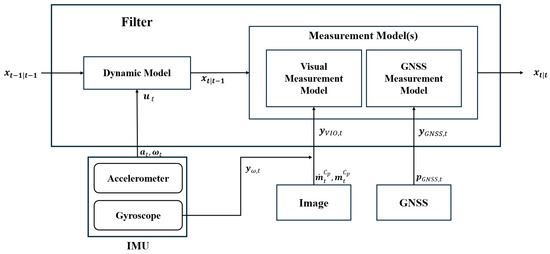

Figure 3 presents a filter architecture and the overall data flow of the proposed method. The system state is propagated using IMU measurements, including accelerometer and gyroscope data. Visual measurements are obtained from optical flow and the corresponding image pixels, combined with gyroscope data, while GNSS measurements provide global position updates. These sensor inputs are integrated within the EKF framework to produce robust and accurate vehicle pose estimates.

Figure 3.

Filter architecture and data flow.

3.2. Deep Optical Flow-Based VIO

3.2.1. IMU Measurement Propagation

Accounting for biased random walk and noise, the inertial measurement model for the acceleration and angular velocity at time t is formulated as:

where and denote the acceleration and angular velocity measurements, respectively, and represents the gravity vector in the world frame. Acceleration noise and angular velocity noise are assumed to be Gaussian white noise, given by and , respectively. The acceleration bias and gyroscope bias are modeled as random walk processes, described as:

where and represent the noise of acceleration bias and gyroscope bias, respectively, which are assumed to be Gaussian distributions, expressed as and .

For the construction of the EKF in the proposed method, the state vector is defined as:

where represents the position, denotes the velocity, and is a unit quaternion that defines the orientation of the body frame B expressed in the world frame W.

Since the IMU operates at a higher frame rate than the camera, its measurements are integrated between consecutive image frames. The state propagation equations for position, velocity, and quaternion over the time interval are given by:

where

Note that the measurements of acceleration and angular velocity are expressed in the body frame B, obtained by applying the rotation matrix to transform from the IMU frame to the body frame.

The dynamic model of the state is given by:

where denotes a control input, and represents the process noise, with . Let denote the estimation of state at time t, with . The time update for EKF is defined as follows:

where

here, is the estimated error covariance matrix and represents the noise covariance matrix of the process. is the Jacobian of the process model with respect to , and is the Jacobian of the process model with respect to .

3.2.2. Enhanced Corresponding Optical Flow from Deep Network

Given an image pair , optical flow describes the 2D to 2D motion of a pixel in image to its corresponding location in image . A deep learning-based optical flow network learns pixel-wise correspondences and estimates dense optical flow for the entire image. Since obtaining labeled ground truth data for all pixels is challenging, the network is trained and evaluated using synthetic image datasets. Advances in deep learning-based optical flow network models have surpassed the accuracy of traditional hand-crafted methods.

Accurately estimated optical flow enables robust state estimation through measurement updates. However, advanced flow prediction models designed for high accuracy often involve deep architectures incorporating RNN or attention mechanisms from transformer networks, which increase inference time [46]. Based on the comparative analysis of deep optical flow models and traditional approaches presented in [47], the proposed method incorporates PWC-Net [48] into the VIO framework. Following standard optical flow training conventions [49], the model is trained and fine-tuned using synthetic datasets and subsequently fine-tuned on the KITTI dataset.

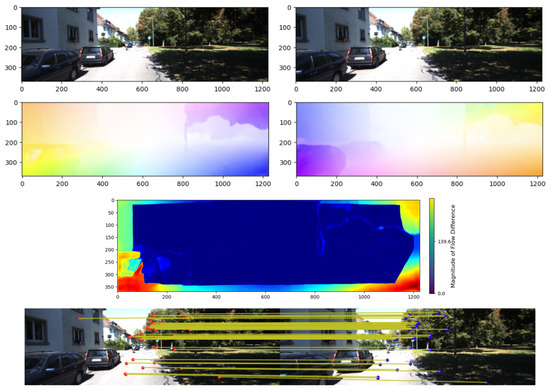

However, using the optical flow of all pixels as measurements can be computationally expensive and degrade performance due to the inclusion of noise. To address this limitation, a consistency-based filtering method is often employed [32]. Given an image pair, the network predicts bidirectional forward–backward optical flow. The corresponding flows are compared to assess the reliability of the estimated motion at each pixel, which yields the flow difference. This approach assumes that correct correspondences should yield nearly inverse flows, i.e., they should cancel each other out when composed. Nevertheless, this consistency constraint applies the same criterion to all pixels without considering their motion context or underlying appearance. As a result, it can derive unreliable flows in low-texture areas and overlook spurious flows caused by motion ambiguity or occlusion areas. In addition, in regions with weak gradients or subtle motion signals, minor inconsistencies are more indicative of inherent uncertainty rather than genuine estimation errors.

To improve the reliability of visual measurements derived from dense optical flow, an enhanced flow consistency metric is proposed that incorporates both local image structure and motion coherence. In contrast to relying solely on bidirectional flow agreement, the proposed approach introduces a normalization strategy that accounts for spatial texture strength, motion magnitude, and neighborhood-level flow uniformity. This enables a more context-aware evaluation of flow reliability, leading to a normalized flow consistency score that reflects local structural and motion characteristics. The enhanced flow consistency score is defined as:

where denotes a pixel point in frame , is the forward flow from frame to , and is the backward flow. The term identifies the corresponding pixel in the subsequent frame. The denominator incorporates three key components: the magnitude of the image gradient , which reflects local texture richness at pixel ; the magnitude of the predicted forward flow , indicating motion strength; and the contextual consistency term , which evaluates the coherence of flow vectors within a local neighborhood. The contextual term is computed as:

where represents a neighboring pixel within a local window centered at . The contextual term computes the average flow deviation between the central pixel and its neighbors, thereby penalizing flow vectors that significantly differ from their surrounding motion patterns. This encourages the selection of flow estimates that are not only directionally consistent but also spatially coherent, effectively suppressing unreliable measurements in regions. Parameters , , and are weighting factors that balance the contribution of texture strength, motion magnitude, and local flow coherence, respectively, in the normalization term. The constant is introduced to prevent division by zero and to ensure numerical stability in regions with minimal gradient or motion. These hyperparameters can be tuned to emphasize specific cues depending on the characteristics of the scene or the target application. By integrating these spatial and motion-aware cues, the proposed consistency score selectively retains flow vectors that exhibit both directional agreement and contextual stability. This leads to more robust visual observations, particularly in environments with heterogeneous motion or low-texture areas.

Figure 4 illustrates the process of extracting high-confidence optical flow from two consecutive input images. The deep flow network computes dense bidirectional optical flow, and (11) is then applied to remove unreliable matches, leaving only robust flow vectors with their corresponding pixel locations. These results serve as inputs to the visual measurement model. The corresponding 2D-2D matches are illustrated in the figure to visualize the retained high-confidence optical flow.

Figure 4.

Process of extracting corresponding optical flow using a deep learning-based optical flow network.

3.2.3. Visual Measurement Model

The selected optical flow in Section 3.2.2 is obtained by filtering out noisy flows from the dense optical flow of the entire image, resulting in a more accurate representation of camera motion. These optical flow measurements are then fused with the dynamic model of the IMU to estimate the state. This paper follows the derivations of [50,51] for the estimation process.

A 3D point in the camera coordinate system can be represented using the 3D point observation model that relates it to the world coordinate system, given by . Taking the time derivative of this equation results in the following expression:

where denotes the optical flow in the camera frame, and are the extrinsic parameters of the camera observation model, which transform the world coordinate system to the camera coordinate system, and is the skew-symmetric matrix obtained from the angular velocity vector.

Since the measurement of the optical flow is on the image plane, the depth remains unknown. To solve this problem, applying the relationship to (13) leads to the following derivation:

where is the homogeneous form of optical flow, and is related to the system states.

The depth can be eliminated by multiplying both sides of (14) by , resulting in:

The measurement equation can be derived by relating the parameters to the system model. Since it is assumed that the IMU and camera are mounted on a rigid body, the angular velocity is obtained from the rotation matrix , which represents the transformation from the IMU frame to the camera frame. With the measurement considered at time t, the measurement model is in the following form:

where denotes the measurement noise, which includes the optical flow measurement noise and the angular velocity measurement noise . Considering that the measurement noise is mutually independent at time t, the measurement equation is then derived as:

The optical flow extracted through the enhanced consistency constraint from image pairs can have multiple values, providing additional measurement information. For each of the extracted flows, (17) is stacked, forming a comprehensive model for the measurement update. The measurement update of EKF is expressed as follows:

where is the stacked measurement model of constructed from the selected optical flow measurements in the image. represents the Jacobian of with respect to the state vector, represents the Jacobian of with respect to the noise vector , and denotes the noise covariance matrix. These matrices are defined as follows:

Since the measurement includes optical flow derived from 2D camera motion on the image plane and angular velocity, the measurement update directly refines the velocity and orientation of the state. To improve position accuracy and integrate global position information, a GNSS measurement update is performed, as described in the following section.

3.3. GNSS Measurement Model

In general, GNSS provides absolute longitude, latitude, and altitude with respect to the Earth, making it an excellent complement to VIO. For multi-sensor fusion, the GNSS measurements provided in the global coordinate system and the VIO’s local coordinate system need to be aligned. The proposed method compares two GNSS positions measured at different times to estimate the heading angle, and integrates the gyro yaw rate over time to determine the relative heading. By comparing the gyro-based heading with the GNSS-derived heading, the initial heading can be determined, allowing the alignment of the two coordinate systems.

When the position information is received from GNSS, its measurement model is defined as follows [52]:

where represents the Gaussian noise model for GNSS measurement noise, and denotes the position in the ECEF rectangular coordinates. is obtained using the following equation:

where represent the latitude, longitude, and altitude provided by GNSS. The following parameters are defined above: is the length from the Earth’s center to the surface; is the Earth eccentricity; and the Earth’s ellipsoid semi-major and semi-minor axes are a = 6,378,137 m and b = 6,356,752.3142 m, respectively. The transition matrix from the ECEF frame to the global frame G is given as:

To fuse the GNSS measurement in the global frame with the VIO frame, the integrated system obtains the GNSS measurement model by applying to . The rotation matrix is derived from the extrinsic parameters of the sensors. The measurement model of the GNSS is then expressed as follows:

where represents the measurement noise of the GNSS, with . The measurement update is formulated as follows:

where represents the Jacobian of with respect to the state vector, given by:

4. Experimental Results

In this section, the experimental setup and results of the proposed method are described, including both quantitative and qualitative analyses.

4.1. Experimental Setup

The proposed method is implemented on an Intel Core i7-13620H CPU laptop (Intel Corporation, Santa lara, CA, USA) with 16 GB RAM and NVIDIA GeForce RTX 4050 laptop (NVIDIA Corporation, Santa lara, CA, USA) GPU. For the flow network, PWC-Net [48] is employed using the PyTorch 1.13.0 [53] framework, pre-trained with standard optical flow training conventions [49]. The network model is initialized with random weights and pre-trained on FlyingChairs [54]. Fine-tuning is performed on a combination of FlyingThings2D [55] and Sintel [56]. Moreover, further fine-tuning is performed on the KITTI dataset [15].

The proposed method is evaluated and compared with other methods in terms of trajectory accuracy in the KITTI dataset, which is commonly used for algorithm evaluation in autonomous driving scenarios. The sensor platform used in the dataset is equipped with two grayscale and two color cameras operating at 10 Hz, and a 100 Hz GNSS/INS navigation system for data collection. Additionally, the dataset provides ground truth camera poses. The proposed method uses a 10 Hz GNSS observation frequency. The coordinate systems of the sensors are defined as follows: the camera follows a right–down–forward convention, and the GNSS/IMU adopts a forward–left–up convention. The proposed method sets the camera as the body frame to fuse sensor data. Furthermore, most of the runtime of the proposed method was spent on optical flow network inference, with an average processing time of 156 ms per image frame.

4.2. Results

The proposed method is evaluated and compared with other methods in terms of localization accuracy on sequences from the KITTI odometry dataset. The methods compared in the experiments are categorized into VO, VIO, and GNSS-VIO approaches. In particular, the proposed method utilizes deep learning-based optical flow and is compared with VO methods, which are classified into pure deep learning-based methods, geometry-based methods, and hybrid methods. Pure deep learning-based methods include Depth-VO-Feat [57] and SC-SFMLearner [58], the geometry-based method is ORB-SLAM2 [12], and the hybrid methods are DF-VO [11] and Dynamic VO [33]. For VI-SLAM methods, RLD-SLAM [59] is compared, and for GNSS-VIO methods, Global-VINS [60] is compared. To further assess the impact of the enhanced correspondence, the variant that relies solely on the forward–backward flow consistency is denoted Proposed (w/o EFC), whereas the complete method including the enhanced flow consistency is denoted Proposed.

The root mean square error (RMSE) of the absolute trajectory error (ATE) is used as an evaluation metric. A smaller RMSE indicates a smaller difference between the predicted trajectory and the ground truth, making it an appropriate metric for localization evaluation. Table 1 presents the experimental results from the sequences of the KITTI dataset. In the table, ORB-SLAM2 is shown with two results, as there is a significant performance difference depending on whether loop closure is included in the algorithm. The best result for each sequence is highlighted in bold. The results indicate that the overall localization accuracy of the feature-based approach, ORB-SLAM2 with loop closure, is superior to that of pure deep learning-based VO methods. The hybrid VO approach, DF-VO, which employs optical flow and depth networks, delivers competitive performance due to scale correction from the depth network. Additionally, the multi-sensor fusion approach, integrating additional sensors, provides improved accuracy. Global-VINS, a GNSS-VIO approach, achieves higher accuracy than the VIO-based RLD-SLAM. Moreover, the results indicate that the incorporation of the enhanced flow consistency leads to improved accuracy. As shown in the RMSE results, the proposed method demonstrates strong performance compared to other methods through the multi-sensor fusion approach.

Table 1.

Quantitative comparison of the results on KITTI dataset (RMSE [m]). The best result is in bold.

The localization results of the RMSE show that the hybrid VO approach, which incorporates a depth network, outperforms pure deep learning-based VO methods. This demonstrates the significant benefit of integrating depth information for more accurate scale estimation and trajectory estimation. Furthermore, the multi-sensor fusion approach, which combines the strengths of visual odometry with additional sensor data, shows substantial improvements in localization accuracy. The proposed method, which integrates hybrid VIO and GNSS through multi-sensor fusion, competes effectively with existing methods and demonstrates superior performance in large-scale outdoor driving environments. Notably, the proposed approach achieves robust localization without drift, even under challenging conditions, marking a significant advancement in the field of autonomous navigation.

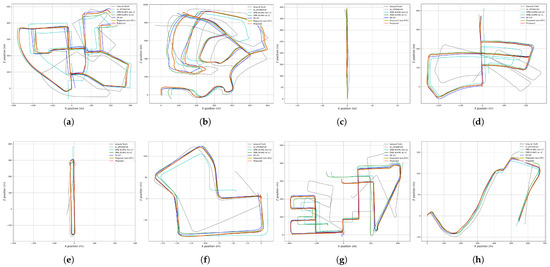

To further evaluate the accuracy of pose estimation, the relative pose error (RPE) is compared. In contrast to ATE, which depends on the total number of frames in a trajectory, RPE is independent of this accumulation and evaluates frame-to-frame relative pose error. The proposed method, employing a hybrid approach with an optical flow network, computes the pose error between image frames when visual measurements are updated, allowing comparison with other visual-based approaches. Figure 5 compares the trajectories of eight sequences of the KITTI dataset, showing that the proposed method closely follows the ground truth. Table 2 presents the numerical evaluation results for RPE in terms of translation and rotation, with the best results highlighted in bold. As shown in the table, the proposed method achieves comparable or superior RPE performance compared to other methods, demonstrating strong frame-to-frame translation and rotation pose estimation accuracy.

Figure 5.

Qualitative comparison of trajectories from KITTI dataset: (a) Sequence 00. (b) Sequence 02. (c) Sequence 04. (d) Sequence 05. (e) Sequence 06. (f) Sequence 07. (g) Sequence 08. (h) Sequence 10.

Table 2.

Comparison of RPE (translation and rotation) results across sequences 00-10 of KITTI dataset.

To evaluate the global localization accuracy of the proposed method, a comparison with the GNSS data is performed. The RMSE, mean, standard deviation (Std), maximum (Max), and minimum (Min) values for the GNSS, proposed method, and ground truth, as measured in sequence 04 of the KITTI dataset, are compared and presented in Table 3. The results show that the proposed method improves the localization accuracy of the raw GNSS measurements by leveraging multi-sensor fusion. Although GNSS provides global location information without error accumulation, but still includes localization errors. By integrating additional sensor data, overall accuracy is improved. These results highlight the effectiveness of the proposed method in enhancing GNSS-based localization accuracy.

Table 3.

Comparison of GNSS and the proposed method accuracy on sequence 04 of KITTI dataset [m].

The enhanced flow consistency constraint introduced in Section 3.2.2 improves the robustness of visual measurements, as demonstrated by the accuracy gains of the proposed method over its variant without this constraint. Furthermore, the GNSS measurement model in Section 3.3 helps mitigate long-term drift in the evaluated KITTI sequences, resulting in lower RMSE values. Notably, the proposed method achieves competitive or superior performance even compared to existing GNSS-VIO approaches, underscoring the effectiveness of the fusion design. These results highlight how each design step in the filter architecture contributes to the overall localization accuracy.

The methods introduced in Section 3 are directly reflected in the results presented here. Specifically, the IMU propagation and visual measurement update (Section 3.2.1–Section 3.2.3) form the VIO estimator, while the GNSS measurement model in Section 3.3 is further integrated to constitute the complete fusion system. The enhanced flow consistency constraint introduced in Section 3.2.2 improves the robustness of visual measurements, which is validated by the accuracy gains of the proposed method over its variant without this constraint. By jointly leveraging these components, the system achieves robust state estimation, including both position and orientation through quaternion representation, leading to improved RMSE and RPE performance. Furthermore, the GNSS integration effectively mitigates long-term drift in the KITTI sequences, resulting in lower RMSE values. Notably, the proposed method achieves competitive or superior performance even compared to existing GNSS-VIO approaches, underscoring the effectiveness of the fusion design. These results highlight how each design step in the filter architecture contributes to the localization accuracy.

Furthermore, under the enhanced flow consistency constraint, more robust optical flow can be extracted from the deep prediction model. While this improves the accuracy of visual measurements and subsequent state estimation, it introduces an additional computational burden, representing a trade-off between runtime efficiency and flow prediction quality. This general consideration highlights the balance between accuracy and computational cost inherent in the use of advanced optical flow models within the proposed VIO and multi-sensor fusion framework.

Overall, the experimental results demonstrate that the proposed multi-sensor fusion method, leveraging the deep learning-based optical flow network, effectively improves vehicle localization accuracy in outdoor environments. The filter-based multi-sensor fusion strategy, which combines the hybrid VIO approach incorporating the deep optical flow network with GNSS measurements, addresses long-term error accumulation in large-scale outdoor environments by enhancing the scalability and robustness of the pose estimation framework. Tested on real-world data, the proposed method shows improvements in localization accuracy compared to standards approaches in frame-to-frame accuracy and GNSS-based localization accuracy.

5. Discussion and Conclusions

This paper proposes a multi-sensor fusion method that integrates monocular VIO with GNSS measurements to enhance vehicle localization accuracy in outdoor environments. Leveraging a deep learning-based optical flow network, the proposed method effectively addresses challenges related to long-term drift and error accumulation in large-scale outdoor environments. The fusion of GNSS and hybrid VIO, which employs deep optical flow, provides reliable pose estimation. The experimental evaluation on the KITTI dataset demonstrates that the proposed method outperforms similar approaches, providing strong frame-to-frame accuracy and improved GNSS-based localization performance. The results also highlight the filter-based fusion strategy, which successfully integrates data from multiple sensors to achieve an accurate and drift-free localization system.

Although the proposed method demonstrates promising results in outdoor environments, future research could extend the evaluation to more challenging real-world scenarios, such as urban canyons or tunnels, where GNSS signals are frequently degraded or completely blocked. In these environments, maintaining consistent VIO estimation and incorporating sporadic GNSS updates through tight fusion strategies could provide more robust localization performance. Such evaluations would further validate the generalization capability of the proposed framework and highlight its practical applicability under extreme navigation scenarios. In addition, the proposed method exhibits a computational bottleneck primarily in the flow inference step, where both forward and backward flows are estimated from the input images, resulting in substantial runtime overhead. To address this limitation, future work could involve strategies for reducing computational cost, including input image resampling to lower resolution prior to inference, adoption of lightweight network architectures, and selective flow estimation within regions of interest guided by IMU dynamic estimation, thereby improving the overall efficiency of the algorithm. These lightweight strategies will also be explored to further improve computational efficiency for real-time embedded systems deployment. Finally, although the evaluation of the proposed method was performed on a high-performance platform, the use of these efficiency-oriented strategies would allow deployment on typical autonomous vehicle computing units, enabling practical performance assessment in real-world navigation scenarios.

Funding

This research was funded by Halmstad University.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Dataset available on request from the authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| GNSS | Global navigation satellite system |

| VIO | Visual-inertial odometry |

| IMU | Inertial measurement units |

| VO | Visual odometry |

| EKF | Extended Kalman filter |

| SLAM | Simultaneous localization and mapping |

| CNNs | Convolutional neural networks |

| RNN | Recurrent neural network |

| LSTM | Long-short-term memory |

| ECEF | Earth-centered earth-fixed |

| RMSE | Root mean square error |

| ATE | Absolute trajectory error |

| RPE | Relative pose error |

References

- Van Brummelen, J.; O’Brien, M.; Gruyer, D.; Najjaran, H. Autonomous vehicle perception: The technology of today and tomorrow. Transp. Res. Part C Emerg. Technol. 2018, 89, 384–406. [Google Scholar] [CrossRef]

- Badue, C.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; Jesus, L.; Berriel, R.; Paixao, T.M.; Mutz, F.; et al. Self-driving cars: A survey. Expert Syst. Appl. 2021, 165, 113816. [Google Scholar] [CrossRef]

- Liu, Y.; Luo, Q.; Zhou, Y. Deep learning-enabled fusion to bridge GPS outages for INS/GPS integrated navigation. IEEE Sens. J. 2022, 22, 8974–8985. [Google Scholar] [CrossRef]

- Zhang, T.; Yuan, M.; Wang, L.; Tang, H.; Niu, X. A robust and efficient IMU array/GNSS data fusion algorithm. IEEE Sens. J. 2024, 24, 26278–26289. [Google Scholar] [CrossRef]

- Zhang, Y. A fusion methodology to bridge GPS outages for INS/GPS integrated navigation system. IEEE Access 2019, 7, 61296–61306. [Google Scholar] [CrossRef]

- Meng, X.; Tan, H.; Yan, P.; Zheng, Q.; Chen, G.; Jiang, J. A GNSS/INS integrated navigation compensation method based on CNN-GRU+ IRAKF hybrid model during GNSS outages. IEEE Trans. Instrum. Meas. 2024, 73, 2510015. [Google Scholar] [CrossRef]

- Zhang, H.; Xiong, H.; Hao, S.; Yang, G.; Wang, M.; Chen, Q. A novel multidimensional hybrid position compensation method for INS/GPS integrated navigation systems during GPS outages. IEEE Sens. J. 2023, 24, 962–974. [Google Scholar] [CrossRef]

- Liu, T.; Liu, J.; Wang, J.; Zhang, H.; Zhang, B.; Ma, Y.; Sun, M.; Lv, Z.; Xu, G. Pseudolites to support location services in smart cities: Review and prospects. Smart Cities 2023, 6, 2081–2105. [Google Scholar] [CrossRef]

- Klein, G.; Murray, D. Parallel tracking and mapping for small AR workspaces. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; IEEE: New York, NY, USA, 2007; pp. 225–234. [Google Scholar]

- Mur-Artal, R.; Montiel, J.M.M.; Tardos, J.D. ORB-SLAM: A versatile and accurate monocular SLAM system. IEEE Trans. Robot. 2015, 31, 1147–1163. [Google Scholar] [CrossRef]

- Zhan, H.; Weerasekera, C.S.; Bian, J.-W.; Garg, R.; Reid, I. DF-VO: What should be learnt for visual odometry? arXiv 2021, arXiv:2103.00933. [Google Scholar] [CrossRef]

- Mur-Artal, R.; Tardos, J.D. Orb-slam2: An open-source slam system for monocular, stereo, and rgb-d cameras. IEEE Trans. Robot. 2017, 33, 1255–1262. [Google Scholar] [CrossRef]

- Qin, T.; Li, P.; Shen, S. Vins-mono: A robust and versatile monocular visual-inertial state estimator. IEEE Trans. Robot. 2018, 34, 1004–1020. [Google Scholar] [CrossRef]

- Yang, N.; Wang, R.; Gao, X.; Cremers, D. Challenges in monocular visual odometry: Photometric calibration, motion bias, and rolling shutter effect. IEEE Robot. Autom. Lett. 2018, 3, 2878–2885. [Google Scholar] [CrossRef]

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? the kitti vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: New York, NY, USA, 2012; pp. 3354–3361. [Google Scholar]

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up robust features. In Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Proceedings, Part I; Springer: Berlin/Heidelberg, Germany, 2006; pp. 404–417. [Google Scholar]

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: New York, NY, USA, 2011; pp. 2564–2571. [Google Scholar]

- Tomasi, C.; Kanade, T. Detection and tracking of point. Int. J. Comput. Vis. 1991, 9, 3. [Google Scholar]

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In Proceedings of the IJCAI’81: 7th International Joint Conference on Artificial Intelligence, Vancouver, BC, Canada, 24–28 August 1981; Volume 2, pp. 674–679. [Google Scholar]

- Rosten, E.; Drummond, T. Fusing points and lines for high performance tracking. In Proceedings of the Tenth IEEE International Conference on Computer Vision (ICCV’05) Volume 1, Beijing, China, 17–21 October 2005; IEEE: New York, NY, USA, 2005; Volume 2, pp. 1508–1515. [Google Scholar]

- Mourikis, A.I.; Roumeliotis, S.I. A multi-state constraint Kalman filter for vision-aided inertial navigation. In Proceedings of the 2007 IEEE International Conference on Robotics and Automation, Roma, Italy, 10–14 April 2007; IEEE: New York, NY, USA, 2007; pp. 3565–3572. [Google Scholar]

- Bloesch, M.; Omari, S.; Hutter, M.; Siegwart, R. Robust visual inertial odometry using a direct EKF-based approach. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; IEEE: New York, NY, USA, 2015; pp. 298–304. [Google Scholar]

- Forster, C.; Carlone, L.; Dellaert, F.; Scaramuzza, D. On-manifold preintegration for real-time visual–inertial odometry. IEEE Trans. Robot. 2016, 33, 1–21. [Google Scholar] [CrossRef]

- Wu, Z.; Zhu, Y. Swformer-VO: A Monocular Visual Odometry Model Based on Swin Transformer. IEEE Robot. Autom. Lett. 2024, 9, 4766–4773. [Google Scholar] [CrossRef]

- Wang, S.; Clark, R.; Wen, H.; Trigoni, N. Deepvo: Towards end-to-end visual odometry with deep recurrent convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: New York, NY, USA, 2017; pp. 2043–2050. [Google Scholar]

- Franccani, A.O.; Maximo, M.R.O. Transformer-based model for monocular visual odometry: A video understanding approach. IEEE Access 2025, 13, 13959–13971. [Google Scholar] [CrossRef]

- Cimarelli, C.; Bavle, H.; Sánchez-López, J.L.; Voos, H. Raum-vo: Rotational adjusted unsupervised monocular visual odometry. Sensors 2022, 22, 2651. [Google Scholar] [CrossRef]

- Han, S.; Li, M.; Tang, H.; Song, Y.; Tong, G. UVMO: Deep unsupervised visual reconstruction-based multimodal-assisted odometry. Pattern Recognit. 2024, 153, 110573. [Google Scholar] [CrossRef]

- Li, R.; Wang, S.; Long, Z.; Gu, D. Undeepvo: Monocular visual odometry through unsupervised deep learning. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; IEEE: New York, NY, USA, 2018; pp. 7286–7291. [Google Scholar]

- Clark, R.; Wang, S.; Wen, H.; Markham, A.; Trigoni, N. Vinet: Visual-inertial odometry as a sequence-to-sequence learning problem. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31. Number 1. [Google Scholar]

- Zhan, H.; Weerasekera, C.S.; Bian, J.-W.; Reid, I. Visual odometry revisited: What should be learnt? In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: New York, NY, USA, 2020; pp. 4203–4210. [Google Scholar]

- Cho, H.M.; Kim, E. Dynamic object-aware visual odometry (VO) estimation based on optical flow matching. IEEE Access 2023, 11, 11642–11651. [Google Scholar] [CrossRef]

- Kang, J. Deep depth-flow odometry with inertial sensor fusion. Electron. Lett. 2025, 61, e70409. [Google Scholar] [CrossRef]

- Teed, Z.; Deng, J. Recurrent all-pairs field transforms for optical flow. In Proceedings of the European Conference on Computer Vision (ECCV), Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020; pp. 402–419. [Google Scholar]

- Zhang, C.; Huang, T.; Zhang, R.; Yi, X. PLD-SLAM: A new RGB-D SLAM method with point and line features for indoor dynamic scene. ISPRS Int. J. Geo-Inf. 2021, 10, 163. [Google Scholar] [CrossRef]

- Wu, X.; Huang, F.; Wang, Y.; Jiang, H. A vins combined with dynamic object detection for autonomous driving vehicles. IEEE Access 2022, 10, 91127–91136. [Google Scholar] [CrossRef]

- Surber, J.; Teixeira, L.; Chli, M. Robust visual-inertial localization with weak GPS priors for repetitive UAV flights. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: New York, NY, USA, 2017; pp. 6300–6306. [Google Scholar][Green Version]

- D’ippolito, F.; Garraffa, G.; Sferlazza, A.; Zaccarian, L. A hybrid observer for localization from noisy inertial data and sporadic position measurements. Nonlinear Anal. Hybrid Syst. 2023, 49, 101360. [Google Scholar] [CrossRef]

- Niu, X.; Tang, H.; Zhang, T.; Fan, J.; Liu, J. IC-GVINS: A robust, real-time, INS-centric GNSS-visual-inertial navigation system. IEEE Robot. Autom. Lett. 2022, 8, 216–223. [Google Scholar] [CrossRef]

- Cao, S.; Lu, X.; Shen, S. GVINS: Tightly coupled GNSS-visual-inertial fusion for smooth and consistent state estimation. IEEE Trans. Robot. 2022, 38, 2004–2021. [Google Scholar] [CrossRef]

- Yu, Y.; Gao, W.; Liu, C.; Shen, S.; Liu, M. A gps-aided omnidirectional visual-inertial state estimator in ubiquitous environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3 November 2019; pp. 7750–7755. [Google Scholar]

- Dai, H.-F.; Bian, H.-W.; Wang, R.-Y.; Ma, H. An INS/GNSS integrated navigation in GNSS denied environment using recurrent neural network. Def. Technol. 2020, 16, 334–340. [Google Scholar] [CrossRef]

- Yusefi, A.; Durdu, A.; Aslan, M.F.; Sungur, C. LSTM and filter based comparison analysis for indoor global localization in UAVs. IEEE Access 2021, 9, 10054–10069. [Google Scholar] [CrossRef]

- Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision; Cambridge University Press: Cambridge, UK, 2003. [Google Scholar]

- Jiang, S.; Campbell, D.; Lu, Y.; Li, H.; Hartley, R. Learning to estimate hidden motions with global motion aggregation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, BC, Canada, 10–17 October 2021; pp. 9772–9781. [Google Scholar]

- Kang, J.M.; Sjanic, Z.; Hendeby, G. Optical Flow Revisited: How good is dense deep learning based optical flow? In Proceedings of the 2023 IEEE Symposium Sensor Data Fusion and International Conference on Multisensor Fusion and Integration (SDF-MFI), Bonn, Germany, 27–29 November 2023; IEEE: New York, NY, USA, 2023; pp. 1–6. [Google Scholar]

- Sun, D.; Yang, X.; Liu, M.-Y.; Kautz, J. Pwc-net: Cnns for optical flow using pyramid, warping, and cost volume. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8934–8943. [Google Scholar]

- Ilg, E.; Mayer, N.; Saikia, T.; Keuper, M.; Dosovitskiy, A.; Brox, T. Flownet 2.0: Evolution of optical flow estimation with deep networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2462–2470. [Google Scholar]

- Bleser, G.; Hendeby, G. Using optical flow for filling the gaps in visual-inertial tracking. In Proceedings of the 2010 18th European Signal Processing Conference, Aalborg, Denmark, 23–27 August 2010; IEEE: New York, NY, USA, 2010; pp. 1836–1840. [Google Scholar]

- Kang, J.M.; Sjanic, Z.; Hendeby, G. Visual-Inertial Odometry Using Optical Flow from Deep Learning. In Proceedings of the 2024 27th International Conference on Information Fusion (FUSION), Venice, Italy, 8–11 July 2024; pp. 1–8. [Google Scholar]

- Grewal, M.S.; Weill, L.R.; Andrews, A.P. Global Positioning Systems, Inertial Navigation, and Integration; John Wiley & Sons: Hoboken, NJ, USA, 2007. [Google Scholar]

- Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic Differentiation in Pytorch. 2017. Available online: https://openreview.net/forum?id=BJJsrmfCZ (accessed on 28 September 2025).

- Dosovitskiy, A.; Fischer, P.; Ilg, E.; Hausser, P.; Hazirbas, C.; Golkov, V.; Van Der Smagt, P.; Cremers, D.; Brox, T. Flownet: Learning optical flow with convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 2758–2766. [Google Scholar]

- Mayer, N.; Ilg, E.; Hausser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4040–4048. [Google Scholar]

- Butler, D.J.; Wulff, J.; Stanley, G.B.; Black, M.J. A naturalistic open source movie for optical flow evaluation. In Proceedings of the Computer Vision–ECCV 2012: 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; Proceedings, Part VI 12; Springer: Berlin/Heidelberg, Germany, 2012; pp. 611–625. [Google Scholar]

- Zhan, H.; Garg, R.; Weerasekera, C.S.; Li, K.; Agarwal, H.; Reid, I. Unsupervised learning of monocular depth estimation and visual odometry with deep feature reconstruction. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 340–349. [Google Scholar]

- Bian, J.; Li, Z.; Wang, N.; Zhan, H.; Shen, C.; Cheng, M.-M.; Reid, I. Unsupervised scale-consistent depth and ego-motion learning from monocular video. In Advances in Neural Information Processing Systems 32 (NeurIPS 2019); NeurIPS: San Diego, CA, USA, 2019. [Google Scholar]

- Zheng, Z.; Lin, S.; Yang, C. RLD-SLAM: A robust lightweight VI-SLAM for dynamic environments leveraging semantics and motion information. IEEE Trans. Ind. Electron. 2024, 71, 14328–14338. [Google Scholar] [CrossRef]

- Adham, M.; Chen, W.; Li, Y.; Liu, T. Towards Robust Global VINS: Innovative SemanticAware and Multi-Level Geometric Constraints Approach for Dynamic Feature Filtering in Urban Environments. IEEE Trans. Intell. Veh. 2024. early access. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).