Forehead and In-Ear EEG Acquisition and Processing: Biomarker Analysis and Memory-Efficient Deep Learning Algorithm for Sleep Staging with Optimized Feature Dimensionality

Abstract

1. Introduction

- An in-depth review of wearable EEG solutions utilizing forehead and in-ear placements, emphasizing their effectiveness in accurate and unobtrusive sleep stage classification and disorder detection.

- The development of a custom experimental setup for reliable EEG signal acquisition from both the forehead and in-ear regions.

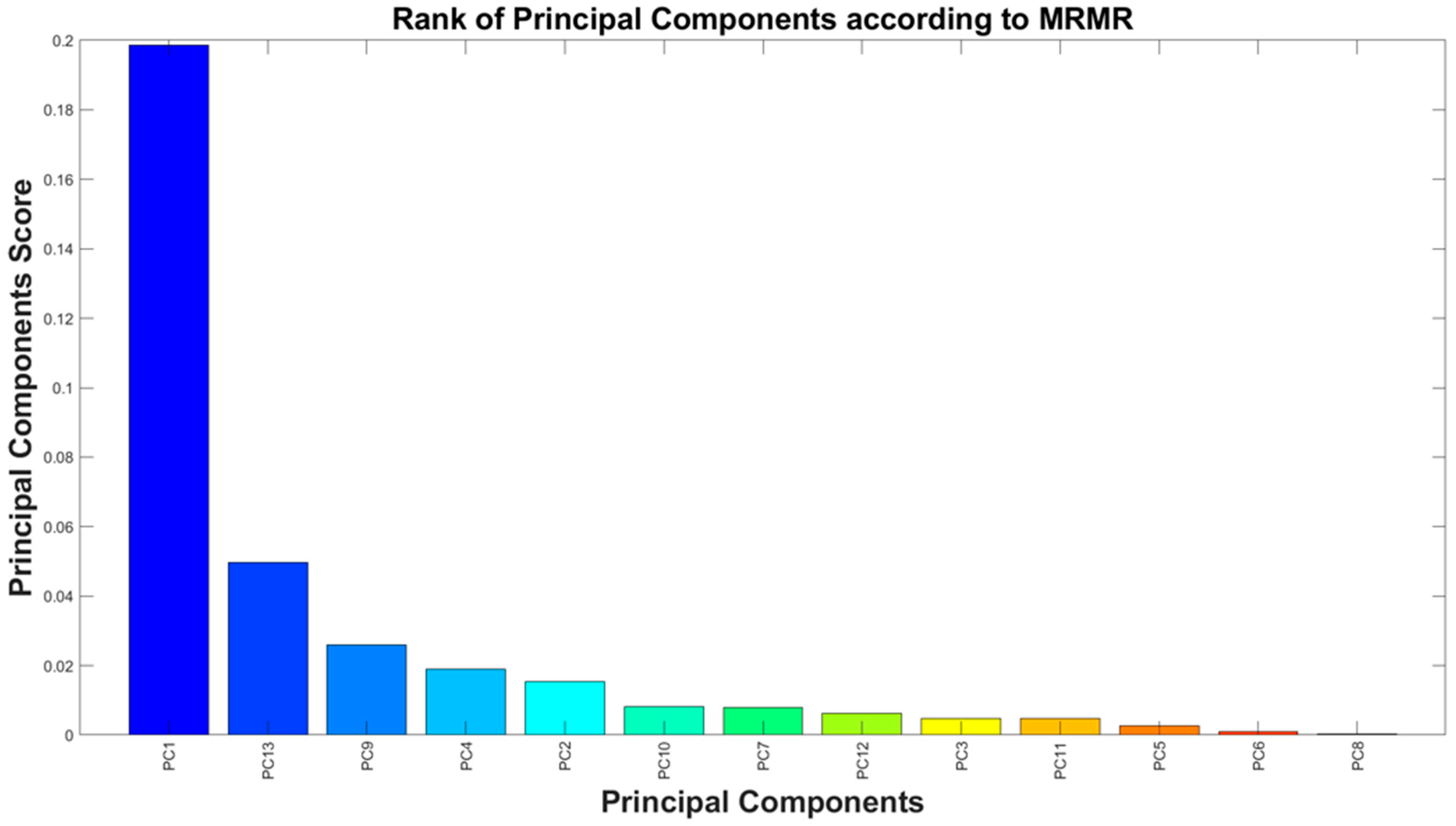

- The identification of a feature set whose physiological relevance is supported by an extensive literature review, followed by dimensionality reduction through mRMR and PCA using data from an open-access EEG database.

- A MATLAB-based (version 24.1) feature extraction tool was developed to process forehead EEG signals collected under various experimental conditions, enabling the analysis of correlations and trends related to the subject’s physiological state.

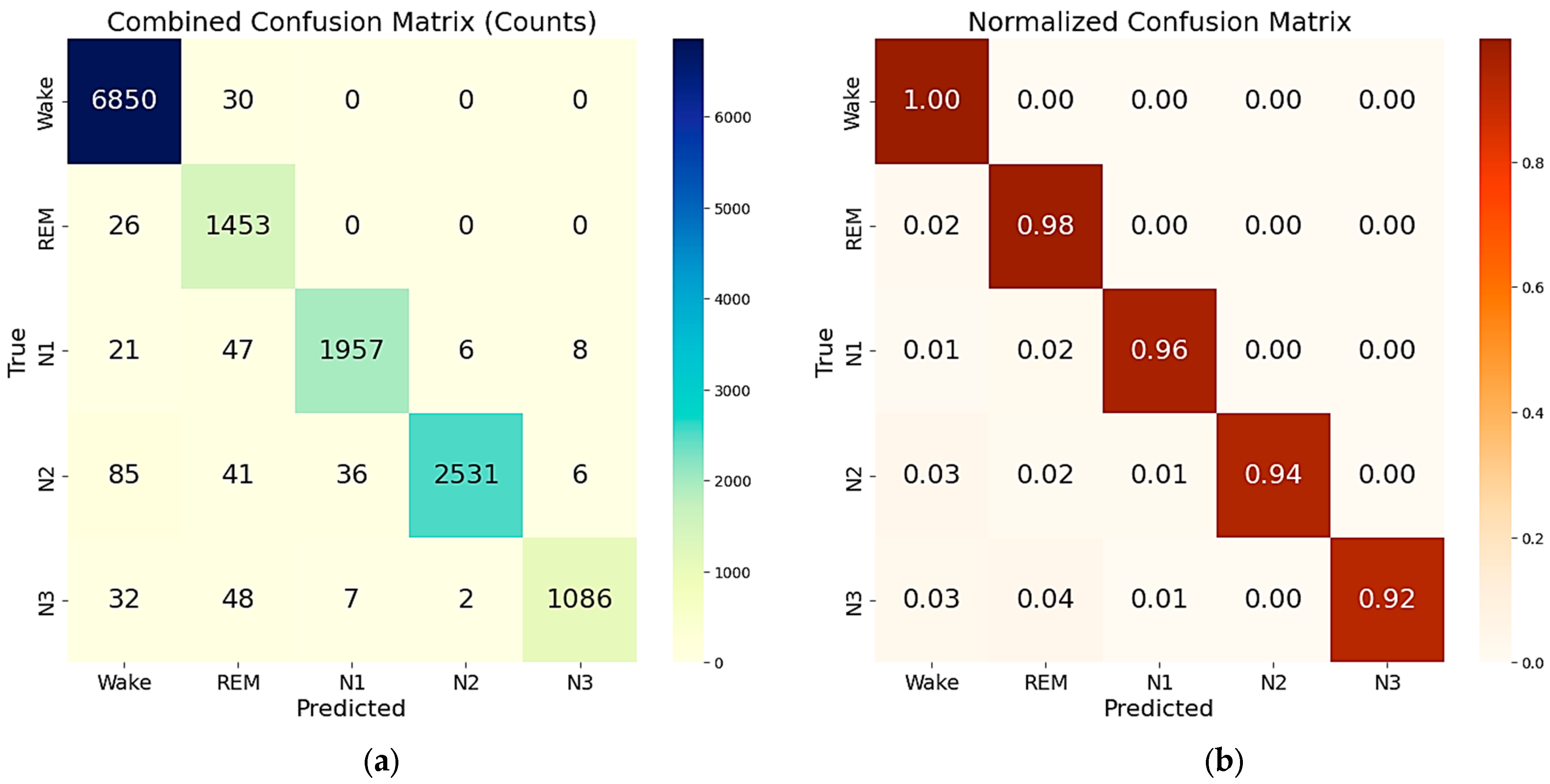

- A two-step ensemble classification approach, built on LSTM models, was implemented and trained for 5-class sleep staging.
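The mRMR step named in the contributions above can be illustrated with a minimal sketch. It assumes discrete (already binned) features and uses the difference form of the criterion (relevance minus mean redundancy), which is one common variant and not necessarily the one used by the authors. The function names `mutual_information` and `mrmr_select` and the toy data are hypothetical.

```python
from collections import Counter
from math import log


def mutual_information(xs, ys):
    """Mutual information (in nats) between two discrete sequences."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    return sum(
        (c / n) * log((c / n) / ((px[x] / n) * (py[y] / n)))
        for (x, y), c in joint.items()
    )


def mrmr_select(features, labels, k):
    """Greedy mRMR, difference form: relevance minus mean redundancy.

    `features` is a list of columns, each a discrete sequence.
    Returns the indices of the selected columns in selection order.
    """
    relevance = [mutual_information(col, labels) for col in features]
    selected = []
    while len(selected) < min(k, len(features)):
        best_idx, best_score = None, float("-inf")
        for i, col in enumerate(features):
            if i in selected:
                continue
            if selected:
                redundancy = sum(
                    mutual_information(col, features[j]) for j in selected
                ) / len(selected)
            else:
                redundancy = 0.0
            score = relevance[i] - redundancy
            if score > best_score:  # strict ">" keeps the first index on ties
                best_idx, best_score = i, score
        selected.append(best_idx)
    return selected


# Toy data (hypothetical): column 1 duplicates column 0,
# column 2 carries complementary information about the label.
a = [0, 0, 0, 0, 1, 1, 1, 1]
b = [0, 0, 1, 1, 0, 0, 1, 1]
labels = [2 * ai + bi for ai, bi in zip(a, b)]
chosen = mrmr_select([a, list(a), b], labels, k=2)
print(chosen)
```

In this toy case the duplicated column is skipped in the second round because its redundancy with the first pick cancels its relevance. Continuous EEG features would need to be discretized, or the mutual information estimated differently, before a sketch like this applies.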

2. Literature Analysis

2.1. Overview of Forehead EEG Acquisition Systems and Algorithms for Sleep Monitoring

2.2. Overview of Ear and In-Ear EEG Acquisition Systems and Algorithms for Sleep Monitoring

3. Materials and Methods

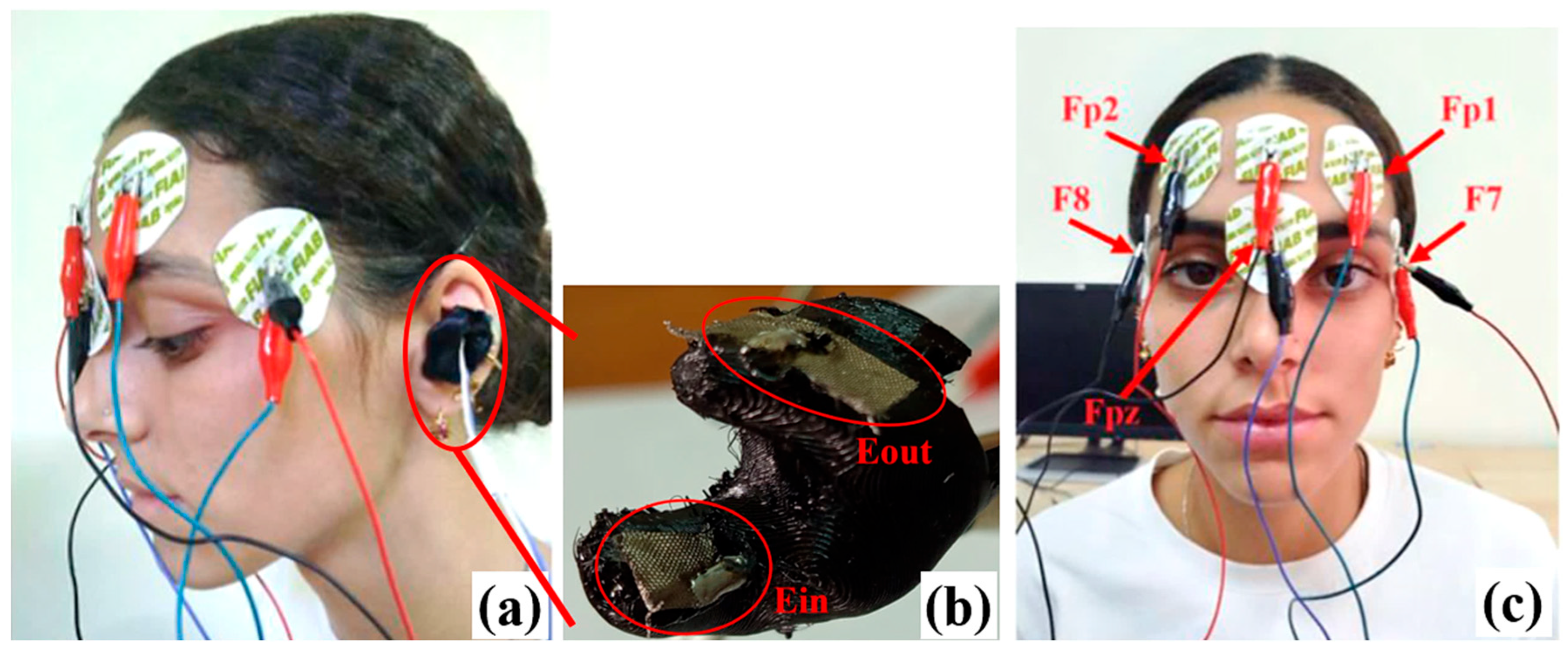

3.1. Experimental Setups and Methodologies for EEG Acquisition

3.2. Selection and Analysis for Sleep Staging and Detecting Disorders of Sleep

4. Results

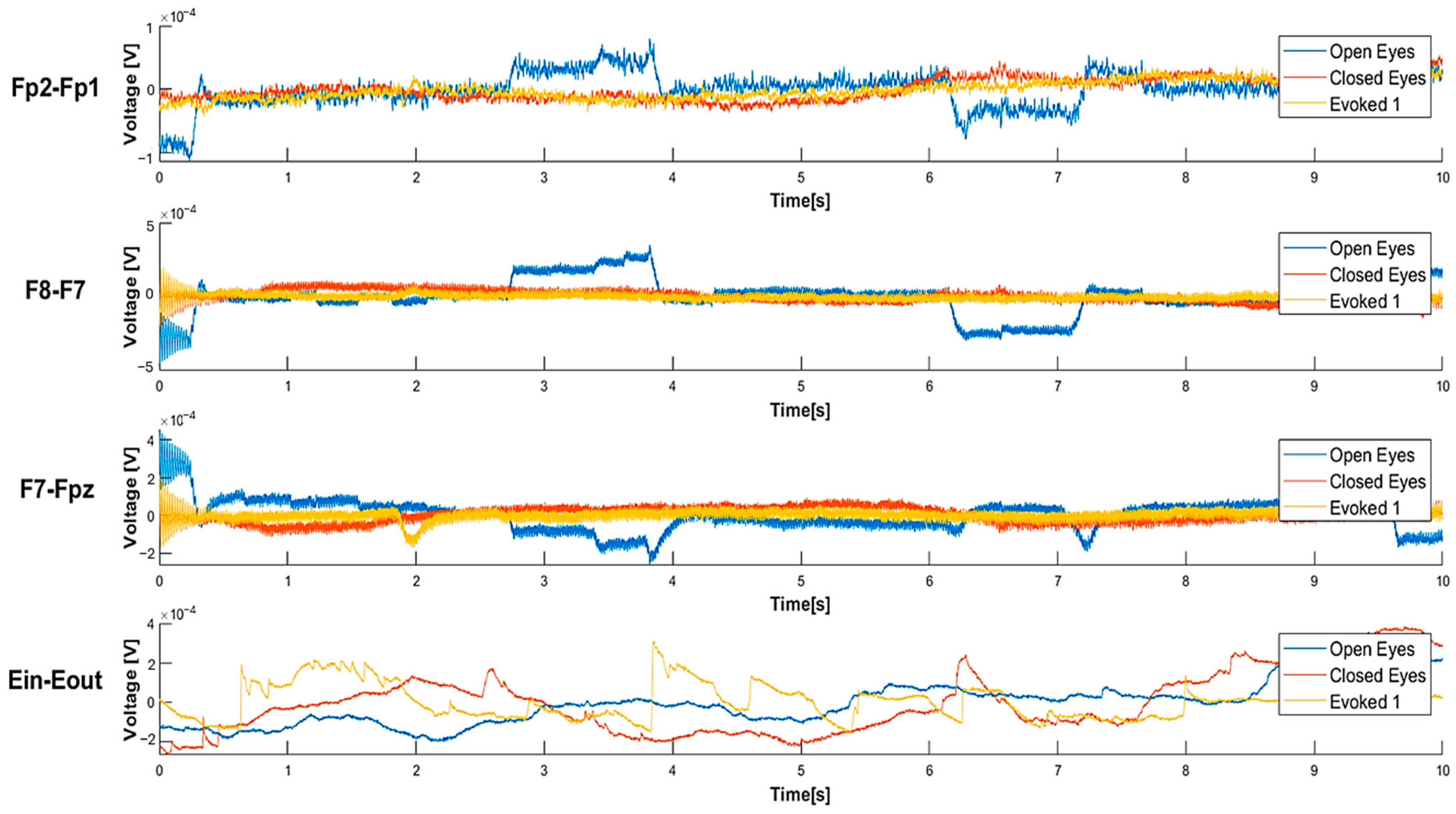

4.1. Experimental Tests on the Acquisition of the EEG from the Forehead, Ear, and In-Ear

- The DAR is the ratio of delta wave power to alpha wave power.

- The DTR is the ratio of delta wave power to theta wave power.

- The DTABR is defined as the ratio of the sum of delta and theta wave power (slow waves) to the sum of alpha and beta wave power (fast waves).
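The three ratios defined above reduce to simple arithmetic once the band powers are known. The sketch below assumes the band powers have already been computed (for example from a PSD estimate); the function name `band_ratios` and the numeric values are illustrative only.

```python
def band_ratios(power):
    """Return DAR, DTR and DTABR from a mapping of band name -> band power."""
    delta, theta = power["delta"], power["theta"]
    alpha, beta = power["alpha"], power["beta"]
    return {
        "DAR": delta / alpha,                        # delta / alpha
        "DTR": delta / theta,                        # delta / theta
        "DTABR": (delta + theta) / (alpha + beta),   # slow waves / fast waves
    }


# Illustrative band powers in arbitrary units (not measured data).
example = band_ratios({"delta": 8.0, "theta": 4.0, "alpha": 2.0, "beta": 2.0})
print(example)  # {'DAR': 4.0, 'DTR': 2.0, 'DTABR': 3.0}
```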

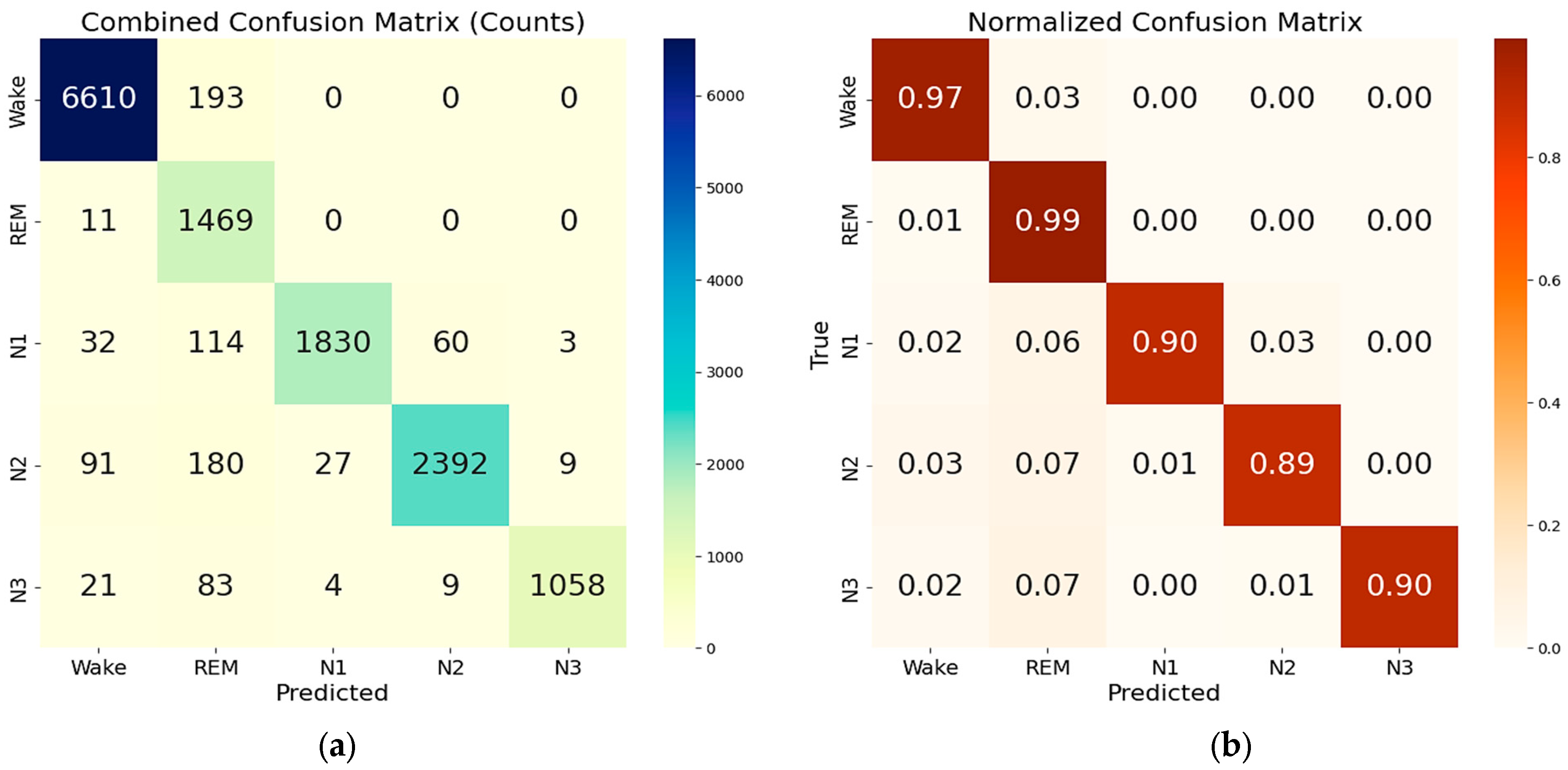

4.2. Training and Testing of Sleep Staging Algorithms

5. Discussions

5.1. Feature Insights and Trends from Acquired EEG Signals

5.2. Analysis of Sleep Stage Variability Using the Coefficient of Variation Metrics

5.3. Development of a Deep Learning Algorithm for Sleep Staging

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| EEG | Electroencephalography |
| mRMR | Minimum Redundancy Maximum Relevance |
| PCA | Principal Component Analysis |
| LSTM | Long Short-Term Memory |
| BOAS | Bitbrain Open Access Sleep |
| PSG | Polysomnography |
| ECG | Electrocardiogram |
| EOG | Electrooculogram |
| EMG | Electromyogram |
| SpO2 | Blood Oxygen Saturation |
| PPG | Photoplethysmography |
| ML | Machine Learning |
| DL | Deep Learning |
| VEP | Visual Evoked Potentials |
| LS | Light Sleep |
| DS | Deep Sleep |
| RF | Random Forest |
| REM | Rapid Eye Movement |
| NREM | Non-Rapid Eye Movement |
| JSD-FSI | Jensen-Shannon Divergence Feature-based Similarity Index |
| AASM | The American Academy of Sleep Medicine |
| LIBS | Lightweight In-ear Biosignal Sensing System |
| NMF | Non-Negative Matrix Factorization |
| ADCs | Analog-to-Digital Converters |
| FFT | Fast Fourier Transform |
| PRVEP | Pattern-Reversal Visual Evoked Potential |
| RMS | Root Mean Square |
| ZCR | Zero-Crossing Rate |
| AAC | Average Amplitude Change |
| IQR | Interquartile Range |
| SSI | Simple Square Integral |
| RSP | Relative Spectral Power |
| SWI | Slow Wave Index |
| ASI | Alpha Slow Wave Index |
| SEFd | Spectral Edge Frequency Difference |
| AP | Absolute Power |
| SVD | Singular Value Decomposition |
| LZC | Lempel–Ziv Complexity |
| MI | Mutual Information |
| PSD | Power Spectral Density |
| DAR | Delta–Alpha Ratio |
| DTR | Delta–Theta Ratio |
| DTABR | Delta–Theta–Alpha–Beta Ratio |
| PCs | Principal Components |
| DSI | Delta Slow Wave Index |
| TSI | Theta Slow Wave Index |
| CV | Coefficient of Variation |

References

1. Gerstenslager, B.; Slowik, J.M. Sleep Study. In StatPearls; StatPearls Publishing: Treasure Island, FL, USA, 2020.

2. Kwon, S.; Kim, H.; Yeo, W.-H. Recent Advances in Wearable Sensors and Portable Electronics for Sleep Monitoring. iScience 2021, 24, 102461.

3. Radhakrishnan, B.L.; Kirubakaran, E.; Jebadurai, I.J.; Selvakumar, A.I.; Peter, J.D. Efficacy of Single-Channel EEG: A Propitious Approach for In-Home Sleep Monitoring. Front. Public Health 2022, 10, 839838.

4. Kanas, N. Stress, Sleep, and Cognition in Microgravity. In Behavioral Health and Human Interactions in Space; Springer International Publishing: Cham, Switzerland, 2023; pp. 1–50. ISBN 978-3-031-16722-5.

5. Morphew, E. Psychological and Human Factors in Long Duration Spaceflight. McGill J. Med. 2020, 6, 74–80.

6. Roveda, J.M.; Fink, W.; Chen, K.; Wu, W.-T. Psychological Health Monitoring for Pilots and Astronauts by Tracking Sleep-Stress-Emotion Changes. In Proceedings of the 2016 IEEE Aerospace Conference, Big Sky, MT, USA, 5–12 March 2016; IEEE: New York, NY, USA, 2016; pp. 1–9.

7. Di Rienzo, M.; Vaini, E.; Lombardi, P. Wearable Monitoring: A Project for the Unobtrusive Investigation of Sleep Physiology Aboard the International Space Station. In Proceedings of the 2015 Computing in Cardiology Conference (CinC), Nice, France, 6–9 September 2015; IEEE: New York, NY, USA, 2015; pp. 125–128.

8. De Fazio, R.; Mastronardi, V.M.; De Vittorio, M.; Spongano, L.; Fachechi, L.; Rizzi, F.; Visconti, P. A Sensorized Face Mask to Monitor Sleep and Health of the Astronauts: Architecture Definition, Sensing Section Development and Biosignals’ Acquisition. In Proceedings of the 2024 9th International Conference on Smart and Sustainable Technologies (SpliTech), Bol and Split, Croatia, 25–28 June 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

9. Eldele, E.; Chen, Z.; Liu, C.; Wu, M.; Kwoh, C.-K.; Li, X.; Guan, C. An Attention-Based Deep Learning Approach for Sleep Stage Classification With Single-Channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 809–818.

10. De Fazio, R.; Cascella, I.; Visconti, P.; De Vittorio, M.; Al-Naami, B. EEG Signal Acquisition from the Forehead and Ears through Textile-Based 3D-Printed Electrodes to Be Integrated into a Sensorized Face-Mask for Astronauts’ Sleep Monitoring. In Proceedings of the 2024 Second Jordanian International Biomedical Engineering Conference (JIBEC), Amman, Jordan, 27 November 2024; IEEE: New York, NY, USA, 2024; pp. 16–21.

11. Simões, H.; Pires, G.; Nunes, U.; Silva, V. Feature Extraction and Selection for Automatic Sleep Staging Using EEG. In Proceedings of the 7th International Conference on Informatics in Control, Automation and Robotics, Funchal, Portugal, 15–18 June 2010; SciTePress—Science and Technology Publications: Setúbal, Portugal, 2010; pp. 128–133.

12. Metzner, C.; Schilling, A.; Traxdorf, M.; Schulze, H.; Krauss, P. Sleep as a Random Walk: A Super-Statistical Analysis of EEG Data across Sleep Stages. Commun. Biol. 2021, 4, 1385.

13. Hussain, I.; Hossain, M.A.; Jany, R.; Bari, M.A.; Uddin, M.; Kamal, A.R.M.; Ku, Y.; Kim, J.-S. Quantitative Evaluation of EEG-Biomarkers for Prediction of Sleep Stages. Sensors 2022, 22, 3079.

14. Fell, J.; Röschke, J.; Mann, K.; Schäffner, C. Discrimination of Sleep Stages: A Comparison between Spectral and Nonlinear EEG Measures. Electroencephalogr. Clin. Neurophysiol. 1996, 98, 401–410.

15. Ma, Y.; Shi, W.; Peng, C.-K.; Yang, A.C. Nonlinear Dynamical Analysis of Sleep Electroencephalography Using Fractal and Entropy Approaches. Sleep Med. Rev. 2018, 37, 85–93.

16. Matsumori, S.; Teramoto, K.; Iyori, H.; Soda, T.; Yoshimoto, S.; Mizutani, H. HARU Sleep: A Deep Learning-Based Sleep Scoring System With Wearable Sheet-Type Frontal EEG Sensors. IEEE Access 2022, 10, 13624–13632.

17. Onton, J.A.; Simon, K.C.; Morehouse, A.B.; Shuster, A.E.; Zhang, J.; Peña, A.A.; Mednick, S.C. Validation of Spectral Sleep Scoring with Polysomnography Using Forehead EEG Device. Front. Sleep 2024, 3, 1349537.

18. Arnal, P.J.; Thorey, V.; Debellemaniere, E.; Ballard, M.E.; Bou Hernandez, A.; Guillot, A.; Jourde, H.; Harris, M.; Guillard, M.; Van Beers, P.; et al. The Dreem Headband Compared to Polysomnography for Electroencephalographic Signal Acquisition and Sleep Staging. Sleep 2020, 43, zsaa097.

19. Carneiro, M.R.; De Almeida, A.T.; Tavakoli, M. Wearable and Comfortable E-Textile Headband for Long-Term Acquisition of Forehead EEG Signals. IEEE Sens. J. 2020, 20, 15107–15116.

20. Wang, Z.; Ding, Y.; Chen, H.; Wang, Z.; Chen, C.; Chen, W. Multi-Modal Flexible Headband for Sleep Monitoring. In Proceedings of the 2024 IEEE 20th International Conference on Body Sensor Networks (BSN), Chicago, IL, USA, 15 October 2024; IEEE: New York, NY, USA, 2024; pp. 1–4.

21. Guo, H.; Di, Y.; An, X.; Wang, Z.; Ming, D. A Novel Approach to Automatic Sleep Stage Classification Using Forehead Electrophysiological Signals. Heliyon 2022, 8, e12136.

22. Leino, A.; Korkalainen, H.; Kalevo, L.; Nikkonen, S.; Kainulainen, S.; Ryan, A.; Duce, B.; Sipila, K.; Ahlberg, J.; Sahlman, J.; et al. Deep Learning Enables Accurate Automatic Sleep Staging Based on Ambulatory Forehead EEG. IEEE Access 2022, 10, 26554–26566.

23. Palo, G.; Fiorillo, L.; Monachino, G.; Bechny, M.; Wälti, M.; Meier, E.; Pentimalli Biscaretti Di Ruffia, F.; Melnykowycz, M.; Tzovara, A.; Agostini, V.; et al. Comparison Analysis between Standard Polysomnographic Data and In-Ear-Electroencephalography Signals: A Preliminary Study. Sleep Adv. 2024, 5, zpae087.

24. IGE-3.1 Quick Start Guide. Available online: https://docs.idunguardian.com/en/page-1c-igeb-quickstart (accessed on 17 September 2025).

25. Screening Del Sonno SOMNOscreen Plus—SOMNOmedics Italia. Available online: http://www.somnomedics.it/somnoscreen-plus.html (accessed on 17 September 2025).

26. Looney, D.; Goverdovsky, V.; Rosenzweig, I.; Morrell, M.J.; Mandic, D.P. Wearable In-Ear Encephalography Sensor for Monitoring Sleep: Preliminary Observations from Nap Studies. Ann. Am. Thorac. Soc. 2016, 13, 2229–2233.

27. Mandekar, S.; Holland, A.; Thielen, M.; Behbahani, M.; Melnykowycz, M. Advancing towards Ubiquitous EEG, Correlation of In-Ear EEG with Forehead EEG. Sensors 2022, 22, 1568.

28. Frey, J. Comparison of a Consumer Grade EEG Amplifier with Medical Grade Equipment in BCI Applications. In Proceedings of the International BCI Meeting, Pacific Grove, CA, USA, 30 May–3 June 2016.

29. Tabar, Y.R.; Mikkelsen, K.B.; Shenton, N.; Kappel, S.L.; Bertelsen, A.R.; Nikbakht, R.; Toft, H.O.; Henriksen, C.H.; Hemmsen, M.C.; Rank, M.L.; et al. At-Home Sleep Monitoring Using Generic Ear-EEG. Front. Neurosci. 2023, 17, 987578.

30. Nguyen, A.; Alqurashi, R.; Raghebi, Z.; Banaei-kashani, F.; Halbower, A.C.; Vu, T. A Lightweight and Inexpensive In-Ear Sensing System For Automatic Whole-Night Sleep Stage Monitoring. In Proceedings of the 14th ACM Conference on Embedded Network Sensor Systems CD-ROM, Stanford, CA, USA, 14 November 2016; ACM: New York, NY, USA, 2016; pp. 230–244.

31. Texas Instruments ADS1299EEGFE-PDK Evaluation Board—Datasheet. Available online: https://www.ti.com/lit/ug/slau443b/slau443b.pdf?ts=1727425648363 (accessed on 27 September 2024).

32. International Society for Clinical Electrophysiology of Vision; Odom, J.V.; Bach, M.; Brigell, M.; Holder, G.E.; McCulloch, D.L.; Mizota, A.; Tormene, A.P. ISCEV Standard for Clinical Visual Evoked Potentials: (2016 Update). Doc. Ophthalmol. 2016, 133, 1–9.

33. Depoortere, H.; Francon, D.; Granger, P.; Terzano, M.G. Evaluation of the Stability and Quality of Sleep Using Hjorth’s Descriptors. Physiol. Behav. 1993, 54, 785–793.

34. Marino, S.; Silveri, G.; Bonanno, L.; De Salvo, S.; Cartella, E.; Miladinović, A.; Ajčević, M.; Accardo, A. Linear and Non-Linear Analysis of EEG During Sleep Deprivation in Subjects with and Without Epilepsy. In XV Mediterranean Conference on Medical and Biological Engineering and Computing—MEDICON 2019; Henriques, J., Neves, N., De Carvalho, P., Eds.; IFMBE Proceedings; Springer International Publishing: Cham, Switzerland, 2020; Volume 76, pp. 125–132. ISBN 978-3-030-31634-1.

35. Aamodt, A.; Sevenius Nilsen, A.; Markhus, R.; Kusztor, A.; HasanzadehMoghadam, F.; Kauppi, N.; Thürer, B.; Storm, J.F.; Juel, B.E. EEG Lempel-Ziv Complexity Varies with Sleep Stage, but Does Not Seem to Track Dream Experience. Front. Hum. Neurosci. 2023, 16, 987714.

36. Li, H.; Peng, C.; Ye, D. A Study of Sleep Staging Based on a Sample Entropy Analysis of Electroencephalogram. Bio-Med. Mater. Eng. 2015, 26, S1149–S1156.

37. Aboalayon, K.A.I.; Ocbagabir, H.T.; Faezipour, M. Efficient Sleep Stage Classification Based on EEG Signals. In Proceedings of the IEEE Long Island Systems, Applications and Technology (LISAT) Conference 2014, Farmingdale, NY, USA, 2 May 2014; IEEE: New York, NY, USA, 2014; pp. 1–6.

38. Jan Dijk, D.; Beersma, D.G.M.; Daan, S.; Bloem, G.M.; Hoofdakker, R.H. Quantitative Analysis of the Effects of Slow Wave Sleep Deprivation during the First 3 h of Sleep on Subsequent EEG Power Density. Eur. Arch. Psychiatr. Neurol. Sci. 1987, 236, 323–328.

39. Wu, J.; Zhou, Q.; Li, J.; Chen, Y.; Shao, S.; Xiao, Y. Decreased Resting-State Alpha-Band Activation and Functional Connectivity after Sleep Deprivation. Sci. Rep. 2021, 11, 484.

40. Peng, H.; Hu, B.; Zheng, F.; Fan, D.; Zhao, W.; Chen, X.; Yang, Y.; Cai, Q. A Method of Identifying Chronic Stress by EEG. Pers. Ubiquitous Comput. 2013, 17, 1341–1347.

41. Jobert, M.; Schulz, H.; Jähnig, P.; Tismer, C.; Bes, F.; Escola, H. A Computerized Method for Detecting Episodes of Wakefulness During Sleep Based on the Alpha Slow-Wave Index (ASI). Sleep 1994, 17, 37–46.

42. You, Y.; Zhong, X.; Liu, G.; Yang, Z. Automatic Sleep Stage Classification: A Light and Efficient Deep Neural Network Model Based on Time, Frequency and Fractional Fourier Transform Domain Features. Artif. Intell. Med. 2022, 127, 102279.

43. Van Hese, P.; Philips, W.; De Koninck, J.; Van De Walle, R.; Lemahieu, I. Automatic Detection of Sleep Stages Using the EEG. In Proceedings of the 2001 Conference 23rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Istanbul, Turkey, 25–28 October 2001; IEEE: New York, NY, USA, 2001; pp. 1944–1947.

44. Ferreira, C.; Deslandes, A.; Moraes, H.; Cagy, M.; Pompeu, F.; Basile, L.F.; Piedade, R.; Ribeiro, P. Electroencephalographic Changes after One Nigth of Sleep Deprivation. Arq. Neuro-Psiquiatr. 2006, 64, 388–393.

45. Kozhemiako, N.; Mylonas, D.; Pan, J.Q.; Prerau, M.J.; Redline, S.; Purcell, S.M. Sources of Variation in the Spectral Slope of the Sleep EEG. eNeuro 2022, 9, ENEURO.0094-22.2022.

46. Imtiaz, S.A.; Rodriguez-Villegas, E. A Low Computational Cost Algorithm for REM Sleep Detection Using Single Channel EEG. Ann. Biomed. Eng. 2014, 42, 2344–2359.

47. Berry, R.B.; Brooks, R.; Gamaldo, C.E.; Harding, S.M.; Lloyd, R.M.; Marcus, C.L.; Vaughn, B.V. The AASM Manual for the Scoring of Sleep and Associated Events: Rules, Terminology and Technical Specifications: Version 2.3; American Academy of Sleep Medicine: Darien, IL, USA, 2015.

48. Danker-Hopfe, H.; Anderer, P.; Zeitlhofer, J.; Boeck, M.; Dorn, H.; Gruber, G.; Heller, E.; Loretz, E.; Moser, D.; Parapatics, S.; et al. Interrater Reliability for Sleep Scoring According to the Rechtschaffen & Kales and the New AASM Standard. J. Sleep Res. 2009, 18, 74–84.

49. Rosenberg, R.S.; Van Hout, S. The American Academy of Sleep Medicine Inter-Scorer Reliability Program: Sleep Stage Scoring. J. Clin. Sleep Med. 2013, 9, 81–87.

50. Esparza-Iaizzo, M.; Sierra-Torralba, M.; Klinzing, J.G.; Minguez, J.; Montesano, L.; López-Larraz, E. Automatic Sleep Scoring for Real-Time Monitoring and Stimulation in Individuals with and without Sleep Apnea. bioRxiv 2024.

51. Bitbrain Open Access Sleep Dataset—OpenNeuro. Available online: https://openneuro.org/datasets/ds005555/versions/1.0.0 (accessed on 23 September 2025).

52. Darbellay, G.A.; Vajda, I. Estimation of the Information by an Adaptive Partitioning of the Observation Space. IEEE Trans. Inform. Theory 1999, 45, 1315–1321.

53. Ding, C.; Peng, H. Minimum Redundancy Feature Selection from Microarray Gene Expression Data. J. Bioinform. Comput. Biol. 2005, 3, 185–205.

54. Gorlova, S.; Ichiba, T.; Nishimaru, H.; Takamura, Y.; Matsumoto, J.; Hori, E.; Nagashima, Y.; Tatsuse, T.; Ono, T.; Nishijo, H. Non-Restorative Sleep Caused by Autonomic and Electroencephalography Parameter Dysfunction Leads to Subjective Fatigue at Wake Time in Shift Workers. Front. Neurol. 2019, 10, 66.

55. Huang, C.-S.; Lin, C.-L.; Ko, L.-W.; Liu, S.-Y.; Sua, T.-P.; Lin, C.-T. A Hierarchical Classification System for Sleep Stage Scoring via Forehead EEG Signals. In Proceedings of the 2013 IEEE Symposium on Computational Intelligence, Cognitive Algorithms, Mind, and Brain (CCMB), Singapore, 16–19 April 2013; IEEE: New York, NY, USA, 2013; pp. 1–5.

56. Popovic, D.; Khoo, M.; Westbrook, P. Automatic Scoring of Sleep Stages and Cortical Arousals Using Two Electrodes on the Forehead: Validation in Healthy Adults. J. Sleep Res. 2014, 23, 211–221.

57. Taran, S.; Sharma, P.C.; Bajaj, V. Automatic Sleep Stages Classification Using Optimize Flexible Analytic Wavelet Transform. Knowl.-Based Syst. 2020, 192, 105367.

58. Krystal, A.D.; Edinger, J.D.; Wohlgemuth, W.K.; Marsh, G.R. NREM Sleep EEG Frequency Spectral Correlates of Sleep Complaints in Primary Insomnia Subtypes. Sleep 2002, 25, 626–636.

59. Michielli, N.; Acharya, U.R.; Molinari, F. Cascaded LSTM Recurrent Neural Network for Automated Sleep Stage Classification Using Single-Channel EEG Signals. Comput. Biol. Med. 2019, 106, 71–81.

60. Carli, F.D.; Nobili, L.; Beelke, M.; Watanabe, T.; Smerieri, A.; Parrino, L.; Terzano, M.G.; Ferrillo, F. Quantitative Analysis of Sleep EEG Microstructure in the Time–Frequency Domain. Brain Res. Bull. 2004, 63, 399–405.

61. Knyazev, G.G.; Slobodskoj-Plusnin, J.Y.; Bocharov, A.V. Event-Related Delta and Theta Synchronization during Explicit and Implicit Emotion Processing. Neuroscience 2009, 164, 1588–1600.

62. Zhang, D.; Wang, L.; Luo, Y.; Luo, Y. Individual Differences in Detecting Rapidly Presented Fearful Faces. PLoS ONE 2012, 7, e49517.

63. Liu, C.; Tan, B.; Fu, M.; Li, J.; Wang, J.; Hou, F.; Yang, A. Automatic Sleep Staging with a Single-Channel EEG Based on Ensemble Empirical Mode Decomposition. Phys. A Stat. Mech. Its Appl. 2021, 567, 125685.

64. Xiao, W.; Linghu, R.; Li, H.; Hou, F. Automatic Sleep Staging Based on Single-Channel EEG Signal Using Null Space Pursuit Decomposition Algorithm. Axioms 2022, 12, 30.

| Reference | Electrode Position | Material of the Electrode | Number of Channels | Device Objective | Battery Life |
|---|---|---|---|---|---|
| S. Matsumori et al. [16] | 2–7 channels from equally spaced forehead electrodes | Ag | 3 | Sleep staging | 12 h |
| J.A. Onton et al. [17] | Fp1-AFz, Fp2-Fp1, and Fp2-AFz | Hydrogel | 3 | Sleep staging | 14 h |
| P.J. Arnal et al. [18] | O1, O2, FpZ, F7, and F8 | Ag/AgCl | 5 | Sleep staging and quality | 25 h |
| M.R. Carneiro et al. [19] | AF8, AF10, FP10, FP2, FP1, FP9, AF7, AF9 | Conductive stretchable ink | 24 | EEG acquisition | 24 h |
| Z. Wang et al. [20] | F7, F8, T3, T4, O1, and O2 | Flexible, claw-shaped dry electrodes | 6 | Sleep monitoring | N.A. |
| H. Guo et al. [21] | Fh1, Fh2 | Dry electrodes | 3 | Sleep staging | N.A. |
| A. Leino et al. [22] | Fp1/Fp2 | Ag/AgCl | 1 | Sleep staging | N.A. |

| Reference | Electrode Position | Type of Electrodes | Number of Channels | Device Objective | Algorithm |
|---|---|---|---|---|---|
| G. Palo et al. [23] | In-ear | Dryode ink electrodes | 1 | To compare the in-ear EEG with standard PSG for sleep staging | JSD-FSI |
| D. Looney et al. [26] | In-ear (diametrically opposite) | Flexible conductive fabric | 2 | Sleep staging | AASM (The American Academy of Sleep Medicine) sleep-scoring |
| S. Mandekar et al. [27] | Out-ear (spaced 120° apart) | Flexible conductive fabric | 3 | EEG acquisition | Alpha band power correlation |
| Y.R. Tabar et al. [29] | In-ear | Titanium, IrO2 | 2 | Sleep monitoring | RF Classifier |
| A. Nguyen et al. [30] | In-ear | Conductive silver leaves, adhesive gel, and fabric | 1 | Sleep staging | NMF |

| Principal Component | Explained Variance [%] | Cumulative Variance [%] |
|---|---|---|
| PC1 | 16.9 | 16.9 |
| PC2 | 13.2 | 30.1 |
| PC3 | 12.2 | 42.3 |
| PC4 | 10.8 | 53.1 |
| PC5 | 8.7 | 61.8 |
| PC6 | 7.5 | 69.3 |
| PC7 | 6.6 | 75.9 |
| PC8 | 6.0 | 81.8 |
| PC9 | 4.9 | 86.7 |
| PC10 | 4.3 | 91.1 |
| PC11 | 3.8 | 94.9 |
| PC12 | 2.9 | 97.8 |
| PC13 | 2.2 | 100.0 |
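As a reading aid for the explained-variance table above, the sketch below accumulates the per-component percentages and returns the smallest number of principal components that reaches a chosen cumulative threshold. The thresholds are illustrative assumptions, since the retention criterion is not stated here, and the helper name `components_for` is hypothetical.

```python
from itertools import accumulate

# Explained variance per component [%], copied from the table above.
explained = [16.9, 13.2, 12.2, 10.8, 8.7, 7.5, 6.6, 6.0, 4.9, 4.3, 3.8, 2.9, 2.2]


def components_for(threshold_pct, explained_pct=explained):
    """Smallest number of leading PCs whose cumulative variance >= threshold."""
    for k, cumulative in enumerate(accumulate(explained_pct), start=1):
        if cumulative >= threshold_pct:
            return k
    return len(explained_pct)  # threshold not reached: keep every component


for threshold in (80, 90, 95):
    print(threshold, components_for(threshold))
```

With these values, 8 components pass 80%, 10 pass 90%, and 12 are needed for 95%, which reflects how slowly the variance concentrates across the 13 components.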

| Analysis Objective | Features |
|---|---|
| Sleep staging [13,14,26,29,30,37,44,45,55,56,57,58,59] | Maximum Value, Minimum Value, Mean Value, Median, Root Mean Square, 25th, 50th, and 75th Percentiles, Variance, Skewness, Kurtosis [55], Hjorth Parameters [56], ZCR [26], AAC, Clearance Factor [29], Interquartile Range [30], SSI [37], Total Power [57], Power Ratios [13], Dominant Frequency [44], Slow Wave Indexes [45,59], Harmonic Parameters [14], Band Energy [13], Spectral Slope [58] |
| Sleep deprivation and disorders [13,15,26,33] | ZCR [26], Total Power [12,33], Band Energy [15] |
| Psychological stress due to sleep deprivation [13,56,57,60] | Hjorth Parameters [56], RSP [43], Spectral Entropy, LZ Complexity, Rényi Entropy, SVD Entropy [13,57,60] |

| Channel | Open Eyes | Closed Eyes | Evoked1 |
|---|---|---|---|
| Fp2-Fp1 | 2.60 × 10⁻¹⁰ W | 6.89 × 10⁻¹¹ W | 4.69 × 10⁻¹¹ W |
| F8-F7 | 5.39 × 10⁻⁹ W | 3.39 × 10⁻¹⁰ W | 5.91 × 10⁻¹⁰ W |
| F7-Fpz | 1.59 × 10⁻⁹ W | 3.24 × 10⁻¹⁰ W | 2.90 × 10⁻¹⁰ W |
| Ein-Eout | 7.41 × 10⁻⁹ W | 5.58 × 10⁻⁹ W | 4.37 × 10⁻⁹ W |

| Class | Precision | Recall | F1-Score | Support for Each Class |
|---|---|---|---|---|
| Wake | 0.979 | 0.974 | 0.977 | 6752 |
| REM | 0.747 | 0.991 | 0.852 | 1478 |
| N1 | 0.993 | 0.903 | 0.946 | 2039 |
| N2 | 0.967 | 0.902 | 0.934 | 2699 |
| N3 | 0.992 | 0.917 | 0.953 | 1175 |
| Macro avg | 0.936 | 0.938 | 0.932 | 14,143 (total support) |
| Weighted avg | 0.955 | 0.947 | 0.949 | 14,143 (total support) |
| Accuracy | 0.947 (i.e., 94.7%) | | | 14,143 (total support) |
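The summary rows of the classification report above follow from the per-class rows. The sketch below recomputes the macro and weighted F1-scores from the tabulated per-class values, plus one per-class F1 from its precision and recall. The values are copied from the table; the variable names are illustrative.

```python
def f1_score(precision, recall):
    """F1 is the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# class: (precision, recall, F1, support), copied from the table above
report = {
    "Wake": (0.979, 0.974, 0.977, 6752),
    "REM": (0.747, 0.991, 0.852, 1478),
    "N1": (0.993, 0.903, 0.946, 2039),
    "N2": (0.967, 0.902, 0.934, 2699),
    "N3": (0.992, 0.917, 0.953, 1175),
}

total_support = sum(support for *_, support in report.values())

# Macro average: every class counts equally, regardless of its size.
macro_f1 = sum(f1 for _, _, f1, _ in report.values()) / len(report)

# Weighted average: each class is weighted by its number of epochs.
weighted_f1 = sum(f1 * n for _, _, f1, n in report.values()) / total_support

rem_f1 = f1_score(*report["REM"][:2])

print(total_support, round(macro_f1, 3), round(weighted_f1, 3), round(rem_f1, 3))
```

The macro average sits below the weighted one because REM, the class with the lowest precision, counts as much as the far larger Wake class in the macro figure.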

| Class | Precision | Recall | F1-Score | Support for Each Class |
|---|---|---|---|---|
| Wake | 0.977 | 0.969 | 0.973 | 6752 |
| REM | 0.711 | 0.992 | 0.828 | 1478 |
| N1 | 0.958 | 0.914 | 0.936 | 2039 |
| N2 | 0.983 | 0.914 | 0.936 | 2699 |
| N3 | 0.964 | 0.899 | 0.930 | 1175 |
| Macro avg | 0.919 | 0.925 | 0.916 | 14,143 (total support) |
| Weighted avg | 0.946 | 0.936 | 0.938 | 14,143 (total support) |
| Accuracy | 0.936 (i.e., 93.6%) | | | 14,143 (total support) |

| Class | Precision | Recall | F1-Score | Support for Each Class |
|---|---|---|---|---|
| Wake | 0.992 | 0.993 | 0.993 | 6816 |
| REM | 0.882 | 0.991 | 0.934 | 1484 |
| N1 | 0.990 | 0.957 | 0.974 | 2039 |
| N2 | 0.992 | 0.967 | 0.979 | 2699 |
| N3 | 0.993 | 0.940 | 0.966 | 1175 |
| Macro avg | 0.970 | 0.970 | 0.969 | 14,213 (total support) |
| Weighted avg | 0.980 | 0.979 | 0.979 | 14,213 (total support) |
| Accuracy | 0.979 (i.e., 97.9%) | | | 14,213 (total support) |

| Feature | Open Eyes | Closed Eyes | Evoked1 | Evoked2 |
|---|---|---|---|---|
| Variance [V²] | 5.6126 × 10⁻¹⁰ | 2.7623 × 10⁻¹⁰ | 9.2886 × 10⁻¹¹ | 1.3600 × 10⁻⁹ |
| Hjorth activity [V²] | 5.6126 × 10⁻¹⁰ | 2.7623 × 10⁻¹⁰ | 9.2886 × 10⁻¹¹ | 1.3600 × 10⁻⁹ |
| Hjorth mobility [Hz] | 353.03 | 366.35 | 570.07 | 563.47 |
| Hjorth complexity | 4.7210 | 4.9504 | 2.7321 | 2.6554 |
| RSP in alpha band | 8.4320 × 10⁻³ | 4.0915 × 10⁻² | 6.2681 × 10⁻² | 3.3350 × 10⁻² |
| DSI | 220.19 | 6.9247 | 2.6467 | 11.752 |
| TSI | 1.1033 × 10⁻² | 0.1213 | 0.2635 | 9.6001 × 10⁻² |
| ASI | 1.1308 × 10⁻² | 5.2976 × 10⁻² | 0.1166 | 5.7637 × 10⁻² |
| Spectral slope in delta band [V/Hz] | −6.0904 | −3.0129 | −2.2365 | −3.2671 |
| Spectral slope in theta band [V/Hz] | −1.4609 | −2.6125 | −1.2880 | −1.2904 |
| Spectral slope in alpha band [V/Hz] | −0.6966 | −0.6409 | −1.0234 | −0.5779 |
| Spectral slope in beta band [V/Hz] | 1.0776 | −6.6134 × 10⁻² | −2.3219 × 10⁻² | −0.2998 |
| Spectral slope in gamma band [V/Hz] | −0.36971 | −0.4077 | −0.6696 | 1.0208 |
| Delta–theta ratio | 392.23 | 11.283 | 4.5284 | 19.349 |
| Delta–alpha ratio | 555.32 | 24.840 | 8.3635 | 33.929 |
| Delta–beta ratio | 130.06 | 11.605 | 2.3912 | 7.3553 |
| Delta–gamma ratio | 126.81 | 16.769 | 3.2509 | 7.3014 |
| Theta–delta ratio | 1.1569 × 10⁻² | 0.1295 | 0.3051 | 0.1052 |
| Theta–alpha ratio | 1.6319 | 2.9363 | 2.4523 | 2.0191 |
| Theta–beta ratio | 0.3858 | 1.3408 | 0.6628 | 0.5853 |
| Theta–gamma ratio | 0.4040 | 1.8459 | 0.8758 | 0.5804 |
| Spectral Entropy | 8.8508 × 10⁻² | 0.1904 | 0.4576 | 0.3377 |
| Rényi Entropy | 2.6029 | 1.5355 | 1.2446 | 2.2815 |
| SVD Entropy | 0.1610 | 0.4183 | 0.7065 | 0.6595 |
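The Hjorth rows in the feature table above can be related to their textbook definitions: activity is the signal variance (which is why it matches the variance row), mobility is the square root of the ratio between the variance of the first derivative and that of the signal, and complexity is the mobility of the derivative divided by the mobility of the signal. The sketch below is a minimal standard-library version. The derivative is approximated by a first difference scaled by the sampling rate `fs`, which is an assumption; the scaling used in the authors' MATLAB tool is not stated here.

```python
from math import pi, sin, sqrt
from statistics import pvariance


def hjorth(signal, fs):
    """Return (activity, mobility, complexity) of a uniformly sampled signal."""
    # First and second derivatives approximated by scaled finite differences.
    dx = [(b - a) * fs for a, b in zip(signal, signal[1:])]
    ddx = [(b - a) * fs for a, b in zip(dx, dx[1:])]

    activity = pvariance(signal)                   # variance of the signal
    mobility = sqrt(pvariance(dx) / activity)      # std(dx) / std(x)
    mobility_dx = sqrt(pvariance(ddx) / pvariance(dx))
    complexity = mobility_dx / mobility            # equals 1 for a pure sinusoid
    return activity, mobility, complexity


# Sanity check on a synthetic 10 Hz unit-amplitude tone (not EEG data).
fs = 1000
tone = [sin(2 * pi * 10 * n / fs) for n in range(fs)]
activity, mobility, complexity = hjorth(tone, fs)
print(activity, mobility, complexity)
```

For a pure tone the mobility approaches the angular frequency (about 62.8 s⁻¹ for 10 Hz) and the complexity approaches 1, so complexity values well above 1, as in the table, indicate a broadband rather than sinusoidal signal.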

| Sleep Stage | Median CV (%) | Mean CV (%) | Standard Deviation |
|---|---|---|---|
| Wake | 125.05 | 1371.63 | 6967.04 |
| N2 | 101.07 | 1259.30 | 4897.27 |
| N1 | 93.11 | 420.19 | 1378.86 |
| REM | 87.20 | 5116.69 | 28,199.65 |
| N3 | 71.56 | 1041.35 | 9146.77 |
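The large gap between the median and mean CV in the table above is the typical signature of a few features whose mean is close to zero, since the coefficient of variation divides the standard deviation by the mean. The sketch below illustrates the effect on made-up numbers. The feature names and values are hypothetical, and the population standard deviation is an assumption (the sample version would work equally well).

```python
from statistics import mean, median, pstdev


def cv_percent(values):
    """Coefficient of variation in percent: 100 * std / |mean|."""
    m = mean(values)
    # Raises ZeroDivisionError when the mean is exactly zero.
    return 100 * pstdev(values) / abs(m)


# Hypothetical per-feature samples within one sleep stage (not measured data).
per_feature = {
    "stable": [2, 4, 4, 4, 5, 5, 7, 9],          # mean 5, std 2 -> CV 40%
    "moderate": [1, 2, 3],                        # mean 2
    "near_zero_mean": [-1.0, 1.0, -1.0, 1.2],     # mean 0.05 -> very large CV
}

cvs = [cv_percent(v) for v in per_feature.values()]
print("median CV:", median(cvs))
print("mean CV:", mean(cvs))
```

A single near-zero-mean feature is enough to push the mean CV far above the median, which is why the median is the more robust summary of per-stage variability.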

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

De Fazio, R.; Yalçınkaya, Ş.E.; Cascella, I.; Del-Valle-Soto, C.; De Vittorio, M.; Visconti, P. Forehead and In-Ear EEG Acquisition and Processing: Biomarker Analysis and Memory-Efficient Deep Learning Algorithm for Sleep Staging with Optimized Feature Dimensionality. Sensors 2025, 25, 6021. https://doi.org/10.3390/s25196021
