Towards Optimal Sensor Placement for Cybersecurity: An Extensible Model for Defensive Cybersecurity Sensor Placement Evaluation

Abstract

1. Introduction

- A novel and detailed problem formulation of the defensive cybersecurity optimal sensor placement problem.

- A novel, extensible mathematical model for the quantitative evaluation of defensive cybersecurity sensor configurations against cyber attack. The model captures both the locations of sensor data sources and the sensor analytics/rules used, a combination that, to our knowledge, has not been explored before.

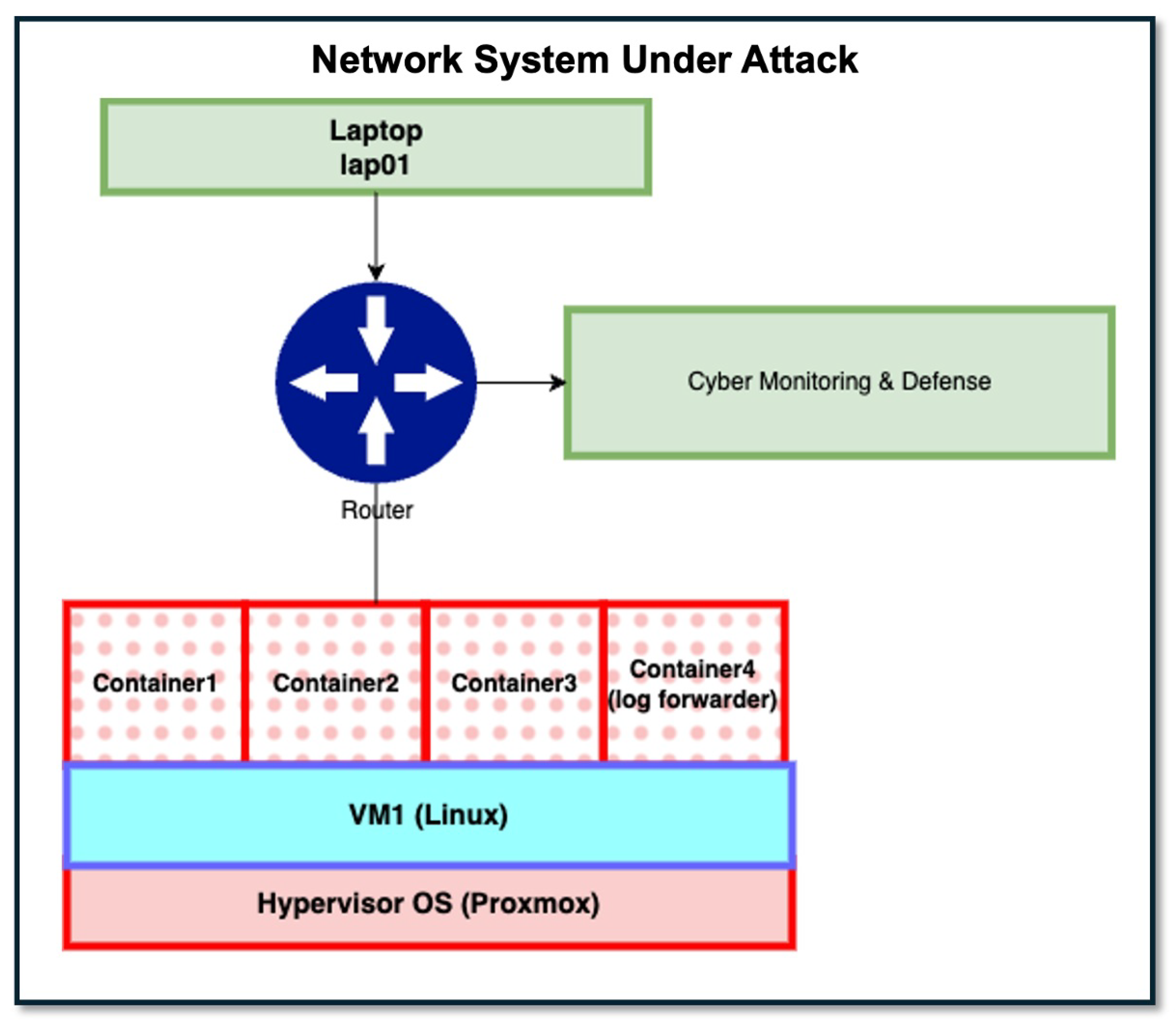

- A detailed case study demonstrating model usage on a representative network system that is protected by a configuration of defensive cybersecurity sensors and threatened by multi-step attacks. These attacks employ real cyber attack techniques taken from the MITRE ATT&CK knowledge base against known vulnerabilities recorded in the National Vulnerability Database (NVD).

- A discussion of how the model can be extended and used to support future optimal sensor placement (OSP) research for defensive cybersecurity.

2. Related Work

3. The Cybersecurity Sensor Placement Problem

3.1. Preliminary Concepts

3.2. Definitions

3.3. Problem Formulation

4. An Extensible Mathematical Model for Cybersecurity Sensor Placement Risk Evaluation

4.1. The Component Vulnerability-to-Threat Sub-Model

4.2. The Sensor Detection Sub-Model

4.3. The Cyber Threat Propagation Sub-Model

4.3.1. Single Attack Propagation Sub-Model

Algorithm 1 Single attack propagation sub-model algorithm

1: procedure Single-attack-propagation-trial()

2: ▹ Size of attack sequence

3: ▹ Activate attack entry step

4: for to n do

5: ▹ Set of vulns. on attacked component

6: if exploit of vulnerability not required then

7: ▹ Living-off-the-land exploit

8: else if then

9:

10: else

11: Compute

12: if then ▹ Sensor enabled to detect attack step

13: Compute ▹ Detection probability

14: else

15:

16: ▹ Probability of attack step success

17: ▹ Random draw

18: if then

19: if then

20: ▹ Activate next attack step

21: else

22: return 1 ▹ Attack attains goal

23: else

24: return 0 ▹ Attack step failure, attack fails

25: procedure Execute-single-attack-propagation-trials()

26: ▹ Initialize vector of trial results

27: for to do

28:

29:

30: return
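The mathematical expressions in Algorithm 1 did not survive extraction, so the following Python sketch reconstructs only its control flow from the surviving step comments. It is a minimal illustration under stated assumptions, not the authors' implementation: the `AttackStep` fields, the exploit-success probability `p_exploit`, and the rule that a step succeeds with probability equal to the exploit-success probability times the probability of evading detection are hypothetical choices made here.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class AttackStep:
    """One step of an attack trace (hypothetical field names)."""
    technique: str                     # MITRE ATT&CK technique ID, e.g. "T1068"
    requires_exploit: bool             # False for living-off-the-land steps
    has_vulnerability: bool = False    # does the attacked component expose a targeted vuln?
    p_exploit: float = 0.0             # assumed exploit-success probability if it does
    p_detect: Optional[float] = None   # None when no enabled sensor covers this step


def step_success_probability(step: AttackStep) -> float:
    if not step.requires_exploit:
        p_v = 1.0                      # living-off-the-land: no exploit needed
    elif not step.has_vulnerability:
        p_v = 0.0                      # exploit needed but nothing to exploit
    else:
        p_v = step.p_exploit
    p_d = 0.0 if step.p_detect is None else step.p_detect
    # Assumption: the step succeeds only if the exploit succeeds AND the step
    # evades detection, with the two events treated as independent.
    return p_v * (1.0 - p_d)


def single_attack_propagation_trial(steps: Sequence[AttackStep], rng: random.Random) -> int:
    """Return 1 if the attack reaches its goal in this trial, else 0."""
    for step in steps:
        if rng.random() >= step_success_probability(step):  # random draw
            return 0                   # attack step failure, attack fails
    return 1                           # every step succeeded: attack attains goal


def execute_single_attack_propagation_trials(
    steps: Sequence[AttackStep], n_trials: int, seed: Optional[int] = None
) -> List[int]:
    rng = random.Random(seed)          # seeded for reproducible trial vectors
    return [single_attack_propagation_trial(steps, rng) for _ in range(n_trials)]
```

Each trial returns a 0/1 outcome, so the vector returned by `execute_single_attack_propagation_trials` is a set of Bernoulli samples whose mean estimates the probability that the attack trace reaches its goal.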

4.3.2. Multi-Attack Aggregation Sub-Model

Algorithm 2 Multi-attack aggregation sub-model algorithm

1: procedure Multi-attack-trace-pai()

2: ▹ Initialize product of complements

3: for all in do

4:

5:

6:

7: ▹ Update product of complements

8: ▹ Compute complement of product of complements

9: return p

10: procedure Single-attack-trace-pai()

11: ▹ Size of vector of trial outcomes

12:

13: for to n do

14: ▹ Update sum of trial outcomes

15: ▹ Compute expected probability

16: return p
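The two procedures of Algorithm 2 can be sketched in Python as follows. The sketch assumes (hypothetically, since lines 4 to 6 of the algorithm were lost) that Multi-attack-trace-pai calls Single-attack-trace-pai on each trace's vector of trial outcomes, and that attack traces succeed independently, so the combined value is $p = 1 - \prod_{j}(1 - p_j)$. The function names mirror the procedure names; everything else is illustrative.

```python
from typing import Iterable, Sequence


def single_attack_trace_pai(outcomes: Sequence[int]) -> float:
    """Expected attack-success probability for one trace: mean of 0/1 trial outcomes."""
    n = len(outcomes)                  # size of vector of trial outcomes
    if n == 0:
        raise ValueError("need at least one trial outcome")
    total = 0
    for x in outcomes:
        total += x                     # update sum of trial outcomes
    return total / n                   # expected probability


def multi_attack_trace_pai(trace_outcomes: Iterable[Sequence[int]]) -> float:
    """P(at least one trace succeeds), assuming traces are independent."""
    product_of_complements = 1.0       # initialize product of complements
    for outcomes in trace_outcomes:
        p_j = single_attack_trace_pai(outcomes)
        product_of_complements *= 1.0 - p_j   # update product of complements
    return 1.0 - product_of_complements       # complement of the product
```

For example, two traces whose trial outcomes average 0.5 and 0.25 combine to 1 − 0.5 × 0.75 = 0.625.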

4.4. Cybersecurity Sensor Placement Risk Model Extensibility

5. Case Study for Model Demonstration

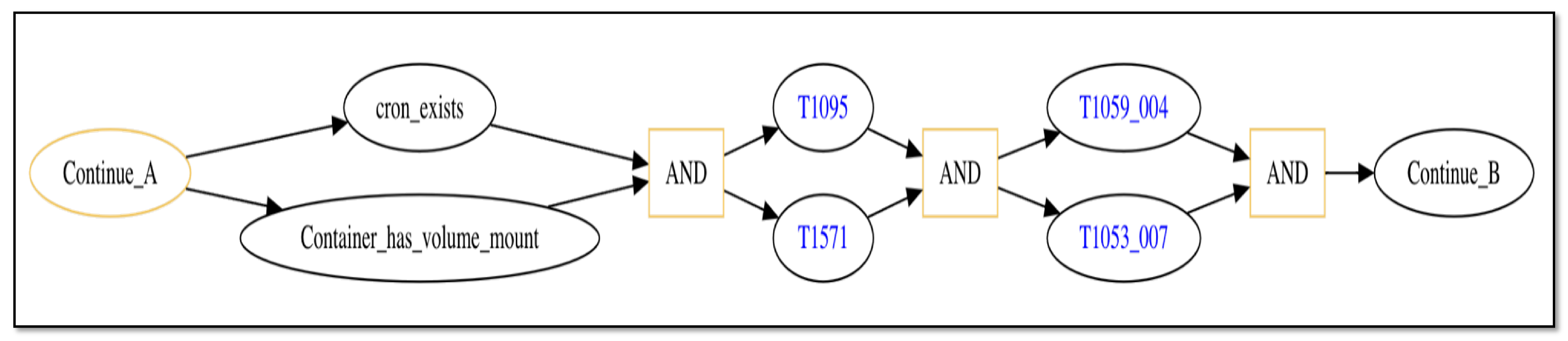

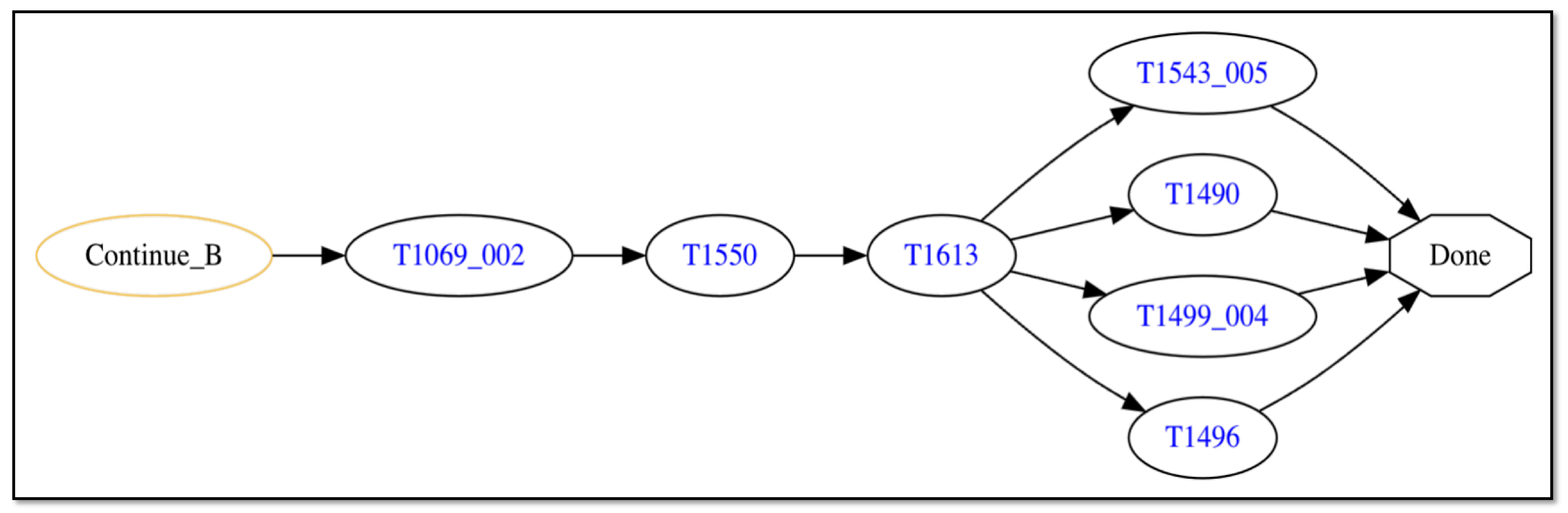

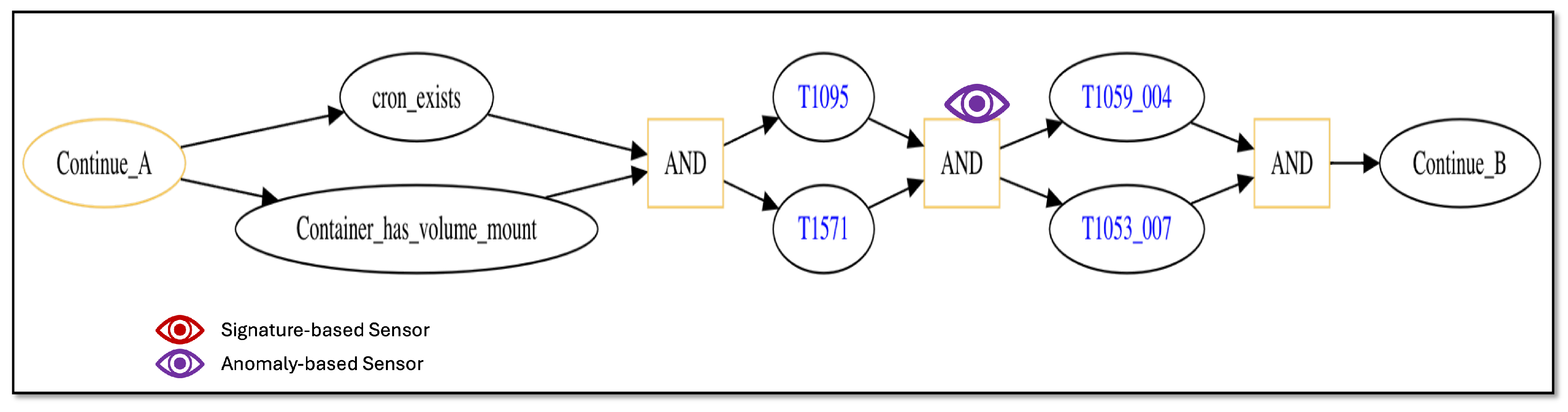

5.1. Attack Threat Trace: Disrupt User-Facing Containerized Services

5.2. Attack Threat Trace: Disrupt Cybersecurity Sensor and Monitoring Infrastructure

5.3. System Vulnerabilities Targeted for Attack

5.4. Defensive Cybersecurity Sensors

5.5. Case Study Scenario Variant: Inclusion of Zero-Day Effects

6. Experiments

7. Discussion of Practical Considerations, Model Limitations, and Future Work

7.1. Applying the Model to Real-World Network Systems

7.1.1. Obtain Network System Environment Information

7.1.2. Determine Attack Threats of Concern

7.1.3. Specify Sensor Detection Rates/Probabilities

7.1.4. Model Complexity and Scalability

7.2. Model Limitations

7.3. Future Work Directions

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hassani, S.; Dackermann, U. A systematic review of optimization algorithms for structural health monitoring and optimal sensor placement. Sensors 2023, 23, 3293. [Google Scholar] [CrossRef]

- Huang, J.; McBean, E.A.; James, W. Multi-objective optimization for monitoring sensor placement in water distribution systems. In Proceedings of the Eighth Annual Water Distribution Systems Analysis Symposium (WDSA), Cincinnati, OH, USA, 27–30 August 2006; American Society of Civil Engineers: Reston, VA, USA, 2006; Volume 8, pp. 1–14. [Google Scholar]

- Zhen, T.; Klise, K.A.; Cunningham, S.; Marszal, E.; Laird, C.D. A mathematical programming approach for the optimal placement of flame detectors in petrochemical facilities. Process Saf. Environ. Prot. 2019, 132, 47–58. [Google Scholar] [CrossRef]

- Chen, G.; Shi, W.; Yu, L.; Huang, J.; Wei, J.; Wang, J. Wireless Sensor Placement Optimization for Bridge Health Monitoring: A Critical Review. Buildings 2024, 14, 856. [Google Scholar] [CrossRef]

- Hu, C.; Li, M.; Zeng, D.; Guo, S. A survey on sensor placement for contamination detection in water distribution systems. Wirel. Netw. 2018, 24, 647–661. [Google Scholar] [CrossRef]

- Wu, H.; Liu, Z.; Hu, J.; Yin, W. Sensor placement optimization for critical-grid coverage problem of indoor positioning. Int. J. Distrib. Sens. Netw. 2020, 16, 1550147720979922. [Google Scholar] [CrossRef]

- Zhang, H. Optimal sensor placement. In Proceedings of the 1992 IEEE International Conference on Robotics and Automation, Nice, France, 12–14 May 1992; IEEE Computer Society: Washington, DC, USA, 1992; Volume 2, pp. 1825–1830. [Google Scholar]

- Abidi, B.R. Automatic sensor placement. In Proceedings of the Intelligent Robots and Computer Vision XIV: Algorithms, Techniques, Active Vision, and Materials Handling, Philadelphia, PA, USA, 23–26 October 1995; SPIE: Bellingham, WA, USA, 1995; Volume 2588, pp. 387–398. [Google Scholar]

- Shi, Z.; Law, S.S.; Zhang, L.M. Optimum sensor placement for structural damage detection. J. Eng. Mech. 2000, 126, 1173–1179. [Google Scholar] [CrossRef]

- Baruh, H.; Choe, K. Sensor placement in structural control. J. Guid. Control Dyn. 1990, 13, 524–533. [Google Scholar] [CrossRef]

- Khan, A.; Ceglarek, D.; Ni, J. Sensor location optimization for fault diagnosis in multi-fixture assembly systems. ASME J. Manuf. Sci. Eng. 1998, 120, 781–792. [Google Scholar] [CrossRef]

- Inayat, U.; Zia, M.F.; Mahmood, S.; Khalid, H.M.; Benbouzid, M. Learning-based methods for cyber attacks detection in IoT systems: A survey on methods, analysis, and future prospects. Electronics 2022, 11, 1502. [Google Scholar] [CrossRef]

- Khoei, T.T.; Slimane, H.O.; Kaabouch, N. A comprehensive survey on the cyber-security of smart grids: Cyber-attacks, detection, countermeasure techniques, and future directions. Commun. Netw. 2022, 14, 119–170. [Google Scholar] [CrossRef]

- Zhang, J.; Pan, L.; Han, Q.; Chen, C.; Wen, S.; Xiang, Y. Deep learning based attack detection for cyber-physical system cybersecurity: A survey. IEEE/CAA J. Autom. Sin. 2021, 9, 377–391. [Google Scholar] [CrossRef]

- Ghosh, A.; Albanese, M.; Mukherjee, P.; Alipour-Fanid, A. Improving the Efficiency of Intrusion Detection Systems by Optimizing Rule Deployment Across Multiple IDSs. In Proceedings of the 21st International Conference on Security and Cryptography—Volume 1: SECRYPT. INSTICC, Dijon, France, 8–10 July 2024; SciTePress: Setúbal, Portugal, 2024; pp. 536–543. [Google Scholar] [CrossRef]

- Elastic. SIEM from Elastic. Available online: https://www.elastic.co/security/siem (accessed on 22 April 2025).

- Splunk. Splunk Advanced Threat Detection. Available online: https://www.splunk.com/en_us/solutions/advanced-threat-detection.html (accessed on 22 April 2025).

- Hylender, C.D.; Langlois, P.; Pinto, A.; Widup, S. 2025 Data Breach Investigations Report. 2025. Available online: https://www.verizon.com/business/resources/reports/dbir/ (accessed on 20 September 2025).

- MITRE ATT&CK Framework. Available online: https://attack.mitre.org/ (accessed on 27 November 2024).

- National Institute of Standards and Technology. National Vulnerability Database. Available online: https://nvd.nist.gov/ (accessed on 20 January 2025).

- Rao, A.S.; Radanovic, M.; Liu, Y.; Hu, S.; Fang, Y.; Khoshelham, K.; Palaniswami, M.; Ngo, T. Real-time monitoring of construction sites: Sensors, methods, and applications. Autom. Constr. 2022, 136, 104099. [Google Scholar] [CrossRef]

- Sun, C.; Li, V.O.; Lam, J.C.; Leslie, I. Optimal citizen-centric sensor placement for air quality monitoring: A case study of city of Cambridge, the United Kingdom. IEEE Access 2019, 7, 47390–47400. [Google Scholar] [CrossRef]

- Klise, K.; Nicholson, B.L.; Laird, C.D.; Ravikumar, A.; Brandt, A.R. Sensor placement optimization software applied to site-scale methane-emissions monitoring. J. Environ. Eng. 2020, 146, 04020054. [Google Scholar] [CrossRef]

- Krichen, M. Anomalies detection through smartphone sensors: A review. IEEE Sens. J. 2021, 21, 7207–7217. [Google Scholar] [CrossRef]

- Sheatsley, R.; Durbin, M.; Lintereur, A.; McDaniel, P. Improving radioactive material localization by leveraging cyber-security model optimizations. IEEE Sens. J. 2021, 21, 9994–10006. [Google Scholar] [CrossRef]

- Weston, M.; Geng, S.; Chandrawat, R. Food sensors: Challenges and opportunities. Adv. Mater. Technol. 2021, 6, 2001242. [Google Scholar] [CrossRef]

- Bhardwaj, J.; Krishnan, J.P.; Marin, D.F.L.; Beferull-Lozano, B.; Cenkeramaddi, L.R.; Harman, C. Cyber-physical systems for smart water networks: A review. IEEE Sens. J. 2021, 21, 26447–26469. [Google Scholar] [CrossRef]

- Andronie, M.; Lăzăroiu, G.; Ștefănescu, R.; Uță, C.; Dijmărescu, I. Sustainable, smart, and sensing technologies for cyber-physical manufacturing systems: A systematic literature review. Sustainability 2021, 13, 5495. [Google Scholar] [CrossRef]

- Mason, S. Heuristic reasoning strategy for automated sensor placement. Photogramm. Eng. Remote Sens. 1997, 63, 1093–1101. [Google Scholar]

- Krishna, M.; Chowdary, S.M.B.; Nancy, P.; Arulkumar, V. A survey on multimedia analytics in security systems of cyber physical systems and IoT. In Proceedings of the 2nd International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 7–9 October 2021; IEEE: New York, NY, USA, 2021; pp. 1–7. [Google Scholar]

- Kiraz, M.; Sivrikaya, F.; Albayrak, S. A Survey on Sensor Selection and Placement for Connected and Automated Mobility. IEEE Open J. Intell. Transp. Syst. 2024, 5, 692–710. [Google Scholar] [CrossRef]

- Balestrieri, E.; Daponte, P.; Vito, L.; Picariello, F.; Tudosa, I. Sensors and measurements for UAV safety: An overview. Sensors 2021, 21, 8253. [Google Scholar] [CrossRef]

- Lykou, G.; Moustakas, D.; Gritzalis, D. Defending airports from UAS: A survey on cyber-attacks and counter-drone sensing technologies. Sensors 2020, 20, 3537. [Google Scholar] [CrossRef]

- Domingo-Perez, F.; Lazaro-Galilea, J.L.; Wieser, A.; Martin-Gorostiza, E.; Salido-Monzu, D.; Llana, A. Sensor placement determination for range-difference positioning using evolutionary multi-objective optimization. Expert Syst. Appl. 2016, 47, 95–105. [Google Scholar] [CrossRef]

- Iosa, M.; Picerno, P.; Paolucci, S.; Marone, G. Wearable inertial sensors for human movement analysis: A five-year update. Expert Rev. Med. Devices 2021, 18 (Suppl. S1), 79–94. [Google Scholar]

- Alrowais, F.; Mohamed, H.G.; Al-Wesabi, F.N.; Al Duhayyim, M.; Hilal, A.M.; Motwakel, A. Cyber attack detection in healthcare data using cyber-physical system with optimized algorithm. Comput. Electr. Eng. 2023, 108, 108636. [Google Scholar] [CrossRef]

- AlZubi, A.A.; Mohammed, A.; Alarifi, A. Cyber-attack detection in healthcare using cyber-physical system and machine learning techniques. Soft Comput. 2021, 25, 12319–12332. [Google Scholar] [CrossRef]

- Lachure, J.; Doriya, R. Securing Water Distribution Systems: Leveraging Sensor Networks Against Cyber-Physical Attacks Using Advanced Chicken Swarm Optimization. IEEE Sens. J. 2024, 24, 39894–39913. [Google Scholar] [CrossRef]

- Pardhasaradhi, B.; Yakkati, R.R.; Cenkeramaddi, L.R. Machine learning-based screening and measurement to measurement association for navigation in GNSS spoofing environment. IEEE Sens. J. 2022, 22, 23423–23435. [Google Scholar] [CrossRef]

- Wang, W.; Li, G.; Chu, Z.; Li, H.; Faccio, D. Two-Factor Authentication Approach Based on Behavior Patterns for Defeating Puppet Attacks. IEEE Sens. J. 2024, 24, 8250–8264. [Google Scholar] [CrossRef]

- Cui, H.; Dong, X.; Deng, H.; Dehghani, M.; Alsubhi, K.; Aljahdali, H.M.A. Cyber attack detection process in sensor of DC micro-grids under electric vehicle based on Hilbert–Huang transform and deep learning. IEEE Sens. J. 2020, 21, 15885–15894. [Google Scholar] [CrossRef]

- Meira-Goes, R.; Rômulo, E.K.; Kwong, R.H.; Lafortune, S. Synthesis of sensor deception attacks at the supervisory layer of cyber–physical systems. Automatica 2020, 121, 109172. [Google Scholar] [CrossRef]

- Zhao, C.; Lin, H.; Li, Y.; Liang, S.; Lam, J. Event-based state estimation against deception attacks: A detection based approach. IEEE Sens. J. 2023, 23, 23020–23029. [Google Scholar]

- Tong, X.; Wang, J.; Zhang, C.; Wang, R.; Ge, Z.; Liu, W.; Zhao, Z. A content-based Chinese spam detection method using a capsule network with long-short attention. IEEE Sens. J. 2021, 21, 25409–25420. [Google Scholar] [CrossRef]

- Al-Khateeb, H.; Epiphaniou, G.; Reviczky, A.; Karadimas, P.; Heidari, H. Proactive threat detection for connected cars using recursive Bayesian estimation. IEEE Sens. J. 2017, 18, 4822–4831. [Google Scholar]

- Jeon, W.; Xie, Z.; Zemouche, A.; Rajamani, R. Simultaneous cyber-attack detection and radar sensor health monitoring in connected ACC vehicles. IEEE Sens. J. 2020, 21, 15741–15752. [Google Scholar] [CrossRef]

- Sun, R.; Luo, Q.; Chen, Y. Online transportation network cyber-attack detection based on stationary sensor data. Transp. Res. Part C Emerg. Technol. 2023, 149, 104058. [Google Scholar] [CrossRef]

- Hong, A.E.; Malinovsky, P.P.; Damodaran, S.K. Towards attack detection in multimodal cyber-physical systems with sticky HDP-HMM based time series analysis. Digit. Threat. Res. Pract. 2024, 5, 1–21. [Google Scholar] [CrossRef]

- Noel, S.; Jajodia, S. Optimal IDS sensor placement and alert prioritization using attack graphs. J. Netw. Syst. Manag. 2008, 16, 259–275. [Google Scholar] [CrossRef]

- Babatope, L.O.; Babatunde, L.; Ayobami, I. Strategic sensor placement for intrusion detection in network-based IDS. Int. J. Intell. Syst. Appl. 2014, 6, 61. [Google Scholar] [CrossRef]

- Venkatesan, S.; Albanese, M.; Jajodia, S. Disrupting stealthy botnets through strategic placement of detectors. In Proceedings of the 2015 IEEE Conference on Communications and Network Security (CNS), Florence, Italy, 28–30 September 2015; IEEE: New York, NY, USA, 2015; pp. 95–103. [Google Scholar]

- Venkatesan, S.; Albanese, M.; Chiang, C.Y.J.; Sapello, A.; Chadha, R. DeBot: A novel network-based mechanism to detect exfiltration by architectural stealthy botnets. Secur. Priv. 2018, 1, e51. [Google Scholar] [CrossRef]

- Lanz, Z. Cybersecurity risk in US critical infrastructure: An analysis of publicly available US government alerts and advisories. Int. J. Cybersecur. Intell. Cybercrime 2022, 5, 43–70. [Google Scholar] [CrossRef]

- Mavroeidis, V.; Bromander, S. Cyber threat intelligence model: An evaluation of taxonomies, sharing standards, and ontologies within cyber threat intelligence. In Proceedings of the 2017 European Intelligence and Security Informatics Conference (EISIC), Athens, Greece, 11–13 September 2017; IEEE: New York, NY, USA, 2017; pp. 91–98. [Google Scholar]

- Microsoft. Sysmon V15.15. Available online: https://learn.microsoft.com/en-us/sysinternals/downloads/sysmon (accessed on 5 January 2025).

- The TCPdump Group. TCPDump and LibPCap. Available online: https://www.tcpdump.org/ (accessed on 5 January 2025).

- NMap Project. NPCap. Available online: https://npcap.com/ (accessed on 5 January 2025).

- Elastic NV. Elasticsearch. Available online: https://www.elastic.co/elasticsearch (accessed on 5 January 2025).

- González-Granadillo, G.; González-Zarzosa, S.; Diaz, R. Security information and event management (SIEM): Analysis, trends, and usage in critical infrastructures. Sensors 2021, 21, 4759. [Google Scholar] [CrossRef]

- Akowuah, F.; Kong, F. Real-Time Adaptive Sensor Attack Detection in Autonomous Cyber-Physical Systems. In Proceedings of the 2021 IEEE 27th Real-Time and Embedded Technology and Applications Symposium (RTAS), Nashville, TN, USA, 18–21 May 2021; pp. 237–250. [Google Scholar] [CrossRef]

- Tidjon, L.N.; Frappier, M.; Mammar, A. Intrusion Detection Systems: A Cross-Domain Overview. IEEE Commun. Surv. Tutor. 2019, 21, 3639–3681. [Google Scholar] [CrossRef]

- Sudar, K.M.; Nagaraj, P.; Deepalakshmi, P.; Chinnasamy, P. Analysis of intruder detection in big data analytics. In Proceedings of the 2021 International Conference on Computer Communication and Informatics (ICCCI), Coimbatore, India, 27–29 January 2021; IEEE: New York, NY, USA, 2021; pp. 1–5. [Google Scholar]

- Aslan, Ö.A.; Samet, R. A Comprehensive Review on Malware Detection Approaches. IEEE Access 2020, 8, 6249–6271. [Google Scholar] [CrossRef]

- Thang, N.M. Improving efficiency of web application firewall to detect code injection attacks with random forest method and analysis attributes HTTP request. Program. Comput. Softw. 2020, 46, 351–361. [Google Scholar] [CrossRef]

- Torrano-Gimenez, C.; Perez-Villegas, A.; Alvarez, G. A self-learning anomaly-based web application firewall. In Proceedings of the Computational Intelligence in Security for Information Systems: CISIS′09, 2nd International Workshop Burgos, Spain, September 2009 Proceedings; Springer: Berlin/Heidelberg, Germany, 2009; pp. 85–92. [Google Scholar]

- Ahmad, A.; Anwar, Z.; Hur, A.; Ahmad, H.F. Formal reasoning of web application firewall rules through ontological modeling. In Proceedings of the 2012 15th International Multitopic Conference (INMIC), Islamabad, Pakistan, 13–15 December 2012; IEEE: New York, NY, USA, 2012; pp. 230–237. [Google Scholar]

- Applebaum, S.; Gaber, T.; Ahmed, A. Signature-based and machine-learning-based web application firewalls: A short survey. Procedia Comput. Sci. 2021, 189, 359–367. [Google Scholar] [CrossRef]

- Pałka, D.; Zachara, M. Learning web application firewall-benefits and caveats. In Proceedings of the Availability, Reliability and Security for Business, Enterprise and Health Information Systems: IFIP WG 8.4/8.9 International Cross Domain Conference and Workshop, ARES 2011, Vienna, Austria, 22–26 August 2011; Proceedings 6. Springer: Berlin/Heidelberg, Germany, 2011; pp. 295–308. [Google Scholar]

- Apache. Apache Flink. Available online: https://flink.apache.org/ (accessed on 28 February 2025).

- Damodaran, S.; Davis, D. Cyber SEAL: Cyber Streaming Effects and Analytic Languages. Available online: https://www.mitre.org/news-insights/publication/cyber-seal-cyber-streaming-effects-and-analytic-languages (accessed on 28 February 2025).

- Kaplan, S.; Garrick, B.J. On the quantitative definition of risk. Risk Anal. 1981, 1, 11–27. [Google Scholar] [CrossRef]

- Mell, P.; Scarfone, K.; Romanosky, S. Common vulnerability scoring system. IEEE Secur. Priv. 2006, 4, 85–89. [Google Scholar] [CrossRef]

- Forum of Incident Response and Security Teams, Inc. CVSS: Common Vulnerability Scoring System v4.0. Available online: https://www.first.org/cvss (accessed on 20 January 2025).

- SecurityWeek. Record-Breaking Number of Vulnerabilities Disclosed in 2017. Available online: https://www.securityweek.com/record-breaking-number-vulnerabilities-disclosed-2017-report (accessed on 20 January 2025).

- Sun, N.; Zhang, J.; Rimba, P.; Gao, S.; Zhang, L.Y.; Xiang, Y. Data-driven cybersecurity incident prediction: A survey. IEEE Commun. Surv. Tutor. 2018, 21, 1744–1772. [Google Scholar] [CrossRef]

- Strom, B.E.; Applebaum, A.; Miller, D.P.; Nickels, K.C.; Pennington, A.G.; Thomas, C.B. MITRE ATT&CK: Design and philosophy. Technical Report; The MITRE Corporation: McLean, VA, USA, 2018. [Google Scholar]

- Lippmann, R.P.; Riordan, J.; Yu, T.; Watson, K. Continuous Security Metrics for Prevalent Network Threats: Introduction and First Four Metrics; Lincoln Laboratory, MIT: Lexington, MA, USA, 2012. [Google Scholar]

- Lippmann, R.P.; Riordan, J.F. Threat-based risk assessment for enterprise networks. Linc. Lab. J. 2016, 22, 33–45. [Google Scholar]

- Wagner, N.; Şahin, C.Ş.; Winterrose, M.; Riordan, J.; Pena, J.; Hanson, D.; Streilein, W.W. Towards automated cyber decision support: A case study on network segmentation for security. In Proceedings of the 2016 IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016; IEEE: New York, NY, USA, 2016; pp. 1–10. [Google Scholar]

- MITRE. MITRE Cyber Analytics Repository. Available online: https://car.mitre.org/ (accessed on 22 April 2025).

- SIGMA. Sigma—Generic Signature Format for SIEM Systems. Available online: https://github.com/SigmaHQ/sigma (accessed on 22 April 2025).

- Ben-Gal, I. Bayesian Networks. In Encyclopedia of Statistics in Quality and Reliability; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2007. [Google Scholar]

- Barr-Smith, F.; Ugarte-Pedrero, X.; Graziano, M.; Spolaor, R.; Martinovic, I. Survivalism: Systematic analysis of Windows malware living-off-the-land. In Proceedings of the 2021 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 24–27 May 2021; IEEE: New York, NY, USA, 2021; pp. 1557–1574. [Google Scholar]

- Microsoft. Windows 10 Release Information. Available online: https://learn.microsoft.com/en-us/windows/release-health/release-information (accessed on 27 April 2025).

- Ubuntu. Ubuntu Releases. Available online: https://releases.ubuntu.com/ (accessed on 27 April 2025).

- MITRE. Replication Through Removable Media. Available online: https://attack.mitre.org/techniques/T1091 (accessed on 20 April 2025).

- MITRE. Input Capture: Key Logging. Available online: https://attack.mitre.org/techniques/T1056/001 (accessed on 20 April 2025).

- OpenBSD. scp Command. Available online: https://man.openbsd.org/scp (accessed on 28 February 2025).

- MITRE. Valid Accounts: Local Accounts. Available online: https://attack.mitre.org/techniques/T1078/003/ (accessed on 20 April 2025).

- MITRE. Remote Services: SSH. Available online: https://attack.mitre.org/techniques/T1021/004/ (accessed on 20 April 2025).

- MITRE. Exploitation for Privilege Escalation. Available online: https://attack.mitre.org/techniques/T1068/ (accessed on 20 April 2025).

- OpenBSD. cron Command. Available online: https://man.openbsd.org/cron (accessed on 20 April 2025).

- Ubuntu. ReverseShell. Available online: https://wiki.ubuntu.com/ReverseShell (accessed on 20 April 2025).

- MITRE. Non-Application Layer Protocol. Available online: https://attack.mitre.org/techniques/T1095/ (accessed on 20 April 2025).

- MITRE. Non-Standard Port. Available online: https://attack.mitre.org/techniques/T1571/ (accessed on 20 April 2025).

- Linux. ncat—Concatenate and Redirect Sockets. Available online: https://man7.org/linux/man-pages/man1/ncat.1.html (accessed on 20 April 2025).

- MITRE. Scheduled Task/Job: Container Orchestration Job. Available online: https://attack.mitre.org/techniques/T1053/007/ (accessed on 20 April 2025).

- MITRE. Command and Scripting Interpreter: Unix Shell. Available online: https://attack.mitre.org/techniques/T1059/004/ (accessed on 20 April 2025).

- MITRE. Permission Groups Discovery: Domain Groups. Available online: https://attack.mitre.org/techniques/T1069/002/ (accessed on 20 April 2025).

- MITRE. Use Alternate Authentication Material. Available online: https://attack.mitre.org/techniques/T1550/ (accessed on 20 April 2025).

- MITRE. Container and Resource Discovery. Available online: https://attack.mitre.org/techniques/T1613/ (accessed on 20 April 2025).

- MITRE. Create or Modify System Process: Container Service. Available online: https://attack.mitre.org/techniques/T1543/005/ (accessed on 20 April 2025).

- MITRE. Inhibit System Recovery. Available online: https://attack.mitre.org/techniques/T1490/ (accessed on 20 April 2025).

- MITRE. Endpoint Denial of Service: Application or System Exploitation. Available online: https://attack.mitre.org/techniques/T1499/004/ (accessed on 20 April 2025).

- MITRE. Resource Hijacking. Available online: https://attack.mitre.org/techniques/T1496/ (accessed on 20 April 2025).

- National Vulnerability Database. CVE-2024-5290. Available online: https://nvd.nist.gov/vuln/detail/CVE-2024-5290 (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2024-1724. Available online: https://nvd.nist.gov/vuln/detail/CVE-2024-1724 (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2023-5536. Available online: https://nvd.nist.gov/vuln/detail/CVE-2023-5536 (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2023-32629. Available online: https://nvd.nist.gov/vuln/detail/CVE-2023-32629 (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2023-2640. Available online: https://nvd.nist.gov/vuln/detail/CVE-2023-2640 (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2023-30549. Available online: https://nvd.nist.gov/vuln/detail/CVE-2023-30549 (accessed on 2 June 2025).

- MITRE. MITRE Cyber Analytics Repository: Analytic Coverage Comparison. Available online: https://car.mitre.org/coverage/ (accessed on 2 May 2025).

- Whittaker, Z. This Hacker’s iPhone Charging Cable Can Hijack Your Computer. Available online: https://techcrunch.com/2019/08/12/iphone-charging-cable-hack-computer-def-con/ (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2023-6080. Available online: https://nvd.nist.gov/vuln/detail/CVE-2023-6080 (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2023-7016. Available online: https://nvd.nist.gov/vuln/detail/CVE-2023-7016 (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2023-5993. Available online: https://nvd.nist.gov/vuln/detail/CVE-2023-5993 (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2023-32544. Available online: https://nvd.nist.gov/vuln/detail/CVE-2023-32544 (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2023-29244. Available online: https://nvd.nist.gov/vuln/detail/CVE-2023-29244 (accessed on 2 June 2025).

- National Vulnerability Database. CVE-2023-47145. Available online: https://nvd.nist.gov/vuln/detail/CVE-2023-47145 (accessed on 2 June 2025).

- Ross, R.S. Risk Management Framework for Information Systems and Organizations: A System Life Cycle Approach for Security and Privacy; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2018.

- Hickernell, F.J.; Jiang, L.; Liu, Y.; Owen, A.B. Guaranteed conservative fixed width confidence intervals via Monte Carlo sampling. In Monte Carlo and Quasi-Monte Carlo Methods 2012; Springer: Berlin/Heidelberg, Germany, 2013; pp. 105–128. [Google Scholar]

- Casella, G.; Berger, R. Statistical Inference; Chapman and Hall/CRC: Boca Raton, FL, USA, 2024. [Google Scholar]

- Tenable. Nessus Vulnerability Scanner. Available online: https://www.tenable.com/products/nessus (accessed on 2 June 2025).

- RedSEAL. RedSEAL Platform. Available online: https://www.redseal.net/ (accessed on 3 May 2025).

- Konsta, A.M.; Lafuente, A.L.; Spiga, B.; Dragoni, N. Survey: Automatic generation of attack trees and attack graphs. Comput. Secur. 2024, 137, 103602. [Google Scholar] [CrossRef]

- Kaynar, K. A taxonomy for attack graph generation and usage in network security. J. Inf. Secur. Appl. 2016, 29, 27–56. [Google Scholar] [CrossRef]

- Python Software Foundation. Python Programming Language Version 3.9.0. Available online: https://www.python.org/downloads/release/python-390/ (accessed on 2 June 2025).

- Gambo, M.L.; Almulhem, A. Zero Trust Architecture: A Systematic Literature Review. arXiv 2025, arXiv:2503.11659. [Google Scholar]

- Schulz, A.E.; Kotson, M.C.; Zipkin, J.R. Cyber Network Mission Dependencies; Technical Report; MIT Lincoln Laboratory: Lexington, MA, USA, 2015. [Google Scholar]

- Hemberg, E.; Zipkin, J.R.; Skowyra, R.W.; Wagner, N.; O’Reilly, U.M. Adversarial co-evolution of attack and defense in a segmented computer network environment. In Proceedings of the Genetic and Evolutionary Computation Conference, Kyoto, Japan, 15–19 July 2018; pp. 1648–1655. [Google Scholar]

- Wagner, N.; Şahin, C.Ş.; Pena, J.; Streilein, W.W. Automatic generation of cyber architectures optimized for security, cost, and mission performance: A nature-inspired approach. In Advances in Nature-Inspired Computing and Applications; Springer: Cham, Switzerland, 2019; pp. 1–25. [Google Scholar]

- Winterrose, M.L.; Carter, K.M.; Wagner, N.; Streilein, W.W. Adaptive attacker strategy development against moving target cyber defenses. In Advances in Cyber Security Analytics and Decision Systems; Springer: Cham, Switzerland, 2020; pp. 1–14. [Google Scholar]

| MITRE ATT&CK Technique | Vulnerabilities Targeted | Reason |
|---|---|---|
| T1091 | None | Laptop already compromised by malware on connected USB |
| T1056_001 | None | Installed key logger steals credentials |
| T1078_003 | None | Copy file to container allowed after login |
| T1021_004 | None | ssh to container allowed after login |
| T1068 | Ubuntu vulnerabilities | Escalation of privilege exploit attempt on container |
| T1095 | None | Communication via non-application layer protocol (TCP) enabled by privilege escalation |
| T1571 | None | Non-standard port use allowed after login |
| T1053_007 | None | cron script modification enabled by privilege escalation |
| T1059_004 | None | cron job execution allowed in normal container environment |
| T1069_002 | None | Discovery of Kubernetes credential file enabled by privilege escalation |
| T1550 | None | Access to credentials file enabled by privilege escalation |
| T1613 | None | Discovery of container services enabled by privilege escalation |
| T1543_005 | None | Modification of service enabled by privilege escalation |
| T1490 | None | Deletion of service enabled by privilege escalation |
| T1499_004 | None | Crashing of service enabled by privilege escalation |
| T1496 | None | Deletion of service-providing containers and/or creation of non-useful containers enabled by privilege escalation |

| CVE | Date Published | CVSS Score | CVSS Version | Reference |
|---|---|---|---|---|
| CVE-2024-5290 | 7 August 2024 | 7.8 | 3.1 | [106] |
| CVE-2024-1724 | 25 July 2024 | 8.2 | 3.1 | [107] |
| CVE-2023-5536 | 11 December 2023 | 6.4 | 3.1 | [108] |
| CVE-2023-32629 | 25 July 2023 | 7.8 | 3.1 | [109] |
| CVE-2023-2640 | 25 July 2023 | 7.8 | 3.1 | [110] |
| CVE-2023-30549 | 25 April 2023 | 7.8 | 3.1 | [111] |
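To show how a vulnerability table like the one above could feed the set of vulnerabilities on the attacked component (line 5 of Algorithm 1), the sketch below encodes the six CVEs as data. Only the CVE identifiers, dates, and CVSS v3.1 scores come from the table; the assumption that every container (Container1 to Container4) runs Ubuntu and carries all six CVEs, and the names `COMPONENT_VULNS`, `EXPLOIT_TECHNIQUES`, and `targetable_vulns`, are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class Vulnerability:
    cve_id: str
    published: str   # ISO date, from the table's "Date Published" column
    cvss: float      # CVSS v3.1 base score


# The six Ubuntu vulnerabilities listed in the case-study table.
UBUNTU_VULNS: FrozenSet[Vulnerability] = frozenset({
    Vulnerability("CVE-2024-5290", "2024-08-07", 7.8),
    Vulnerability("CVE-2024-1724", "2024-07-25", 8.2),
    Vulnerability("CVE-2023-5536", "2023-12-11", 6.4),
    Vulnerability("CVE-2023-32629", "2023-07-25", 7.8),
    Vulnerability("CVE-2023-2640", "2023-07-25", 7.8),
    Vulnerability("CVE-2023-30549", "2023-04-25", 7.8),
})

# Hypothetical inventory: every container is assumed to run Ubuntu with all six CVEs.
COMPONENT_VULNS: Dict[str, FrozenSet[Vulnerability]] = {
    f"Container{i}": UBUNTU_VULNS for i in range(1, 5)
}

# Baseline scenario: only privilege escalation (T1068) targets vulnerabilities.
EXPLOIT_TECHNIQUES = {"T1068"}


def targetable_vulns(technique: str, component: str) -> FrozenSet[Vulnerability]:
    """Vulnerabilities on `component` that `technique` can target (empty if none)."""
    if technique not in EXPLOIT_TECHNIQUES:
        return frozenset()             # living-off-the-land step: no exploit involved
    return COMPONENT_VULNS.get(component, frozenset())
```

An empty result for an exploit-requiring technique corresponds to the case in Algorithm 1 where an exploit is needed but nothing on the component can be exploited.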

| Sensor # | Sensor Type | Sensor Data Source | Technique Monitored |
|---|---|---|---|
| 1 | Signature-based | Laptop (lap01) | T1056_001 |
| 2 | Anomaly-based | Network communication from laptop (lap01) to container | T1078_003 |
| 3 | Anomaly-based | Network communication from laptop (lap01) to container | T1021_004 |
| 4 | Signature-based | Container1 | T1068 |
| 5 | Signature-based | Container2 | T1068 |
| 6 | Signature-based | Container3 | T1068 |
| 7 | Signature-based | Container4 | T1068 |
| 8 | Anomaly-based | Network communication from laptop (lap01) to container | T1095 AND T1571 |

| Sensor # | Sensor Type | Detection Probability Estimate |
|---|---|---|
| 1 | Signature-based | 0.02 |
| 2 | Anomaly-based | 0.5 |
| 3 | Anomaly-based | 0.5 |
| 4 | Signature-based | 0.45 |
| 5 | Signature-based | 0.45 |
| 6 | Signature-based | 0.45 |
| 7 | Signature-based | 0.45 |
| 8 | Anomaly-based | 0.5 |
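
To make the role of these estimates concrete, the sketch below runs a heavily simplified Monte Carlo trial in the spirit of the single attack propagation sub-model: the attacker walks a fixed, linear sequence of steps, and each step watched by an enabled sensor is independently detected with that sensor's estimated probability, which stops the attack. The `ATTACK_PATH` sequence and its step-to-sensor mapping are hypothetical, and the sketch omits vulnerability exploitation, alternative attack paths, and every other element of the full model, so its numbers are not comparable to the reported configuration results. Under these assumptions the estimate should converge to the product of (1 - p) over the enabled sensors on the path.

```python
import random

# Detection probability estimates from the table above, keyed by sensor number
DETECTION_P = {1: 0.02, 2: 0.5, 3: 0.5, 4: 0.45, 5: 0.45, 6: 0.45, 7: 0.45, 8: 0.5}

# HYPOTHETICAL linear attack path: (technique, sensor able to observe it, or None)
ATTACK_PATH = [
    ("T1091", None),
    ("T1056_001", 1),
    ("T1078_003", 2),
    ("T1021_004", 3),
    ("T1068", 4),  # privilege escalation on Container1
]


def single_trial(enabled: set, rng: random.Random) -> bool:
    """Return True if the attack completes without any enabled sensor detecting it."""
    for _technique, sensor in ATTACK_PATH:
        if sensor in enabled and rng.random() < DETECTION_P[sensor]:
            return False  # detected: the attack is stopped at this step
    return True


def estimate_success(enabled: set, n_trials: int = 10_000, seed: int = 0) -> float:
    """Monte Carlo estimate of the probability of attack success."""
    rng = random.Random(seed)
    return sum(single_trial(enabled, rng) for _ in range(n_trials)) / n_trials


def exact_success(enabled: set) -> float:
    """Closed form under the same assumptions: product of (1 - p) over enabled sensors on the path."""
    prob = 1.0
    for _technique, sensor in ATTACK_PATH:
        if sensor in enabled:
            prob *= 1.0 - DETECTION_P[sensor]
    return prob


if __name__ == "__main__":
    enabled = {1, 2, 3, 4}
    print(estimate_success(enabled), exact_success(enabled))
```

The closed form exists only because this toy path is linear and the detections are independent; once branching paths and exploit success probabilities are involved, simulation is the practical way to estimate the probability of attack success.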

| MITRE ATT&CK Technique | Vulnerabilities Targeted | Reason |
|---|---|---|
| T1091 | Windows 10 vulnerabilities | Attempt to install malware onto laptop by malicious USB charging cable (zero-day exploit) |
| T1056_001 | None | Installed key logger steals credentials |
| T1078_003 | None | Copy file to container allowed after login |
| T1021_004 | None | ssh to container allowed after login |
| T1068 | Ubuntu vulnerabilities | Escalation of privilege exploit attempt on container (zero-day exploit) |
| T1095 | None | Communication via non-application layer protocol (TCP) enabled by privilege escalation |
| T1571 | None | Non-standard port use allowed after login |
| T1053_007 | None | cron script modification enabled by privilege escalation |
| T1059_004 | None | cron job execution allowed in normal container environment |
| T1069_002 | None | Discovery of Kubernetes credential file enabled by privilege escalation |
| T1550 | None | Access to credentials file enabled by privilege escalation |
| T1613 | None | Discovery of container services enabled by privilege escalation |
| T1543_005 | None | Modification of service enabled by privilege escalation |
| T1490 | None | Deletion of service enabled by privilege escalation |
| T1499_004 | None | Crashing of service enabled by privilege escalation |
| T1496 | None | Deletion of service-providing containers and/or creation of non-useful containers enabled by privilege escalation |
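
The "Vulnerabilities Targeted" column separates steps that must exploit a vulnerability (here only T1091 and T1068, both described as zero-day exploit attempts) from live-off-the-land steps that abuse access the attacker already has. The short Python sketch below transcribes that column into a lookup and partitions the techniques on that basis; the `TARGETS` mapping and the `requires_exploit` helper are illustrative names, not part of the published model.

```python
# Transcription of the "Vulnerabilities Targeted" column (None = no exploit required)
TARGETS = {
    "T1091": "Windows 10 vulnerabilities",
    "T1056_001": None,
    "T1078_003": None,
    "T1021_004": None,
    "T1068": "Ubuntu vulnerabilities",
    "T1095": None,
    "T1571": None,
    "T1053_007": None,
    "T1059_004": None,
    "T1069_002": None,
    "T1550": None,
    "T1613": None,
    "T1543_005": None,
    "T1490": None,
    "T1499_004": None,
    "T1496": None,
}


def requires_exploit(technique: str) -> bool:
    """True if the step succeeds only by exploiting a vulnerability on the target."""
    return TARGETS[technique] is not None


# Dictionaries keep insertion order, so both lists follow the table's row order.
exploit_steps = [t for t in TARGETS if requires_exploit(t)]
lotl_steps = [t for t in TARGETS if not requires_exploit(t)]

if __name__ == "__main__":
    print("exploit:", exploit_steps)
    print("live-off-the-land:", len(lotl_steps), "steps")
```

Only the two exploit steps depend on the vulnerabilities present on the attacked component; the other fourteen correspond to the live-off-the-land branch of Algorithm 1.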

| CVE | Date Published | CVSS Score | CVSS Version | Reference |
|---|---|---|---|---|
| CVE-2023-6080 | 18 October 2024 | 7.8 | 3.1 | [114] |
| CVE-2023-7016 | 27 February 2024 | 7.8 | 3.1 | [115] |
| CVE-2023-5993 | 11 December 2024 | 7.8 | 3.1 | [116] |
| CVE-2023-32544 | 19 January 2024 | 5.5 | 3.1 | [117] |
| CVE-2023-29244 | 19 January 2024 | 7.8 | 3.1 | [118] |
| CVE-2023-47145 | 7 January 2024 | 7.8 | 3.1 | [119] |
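
The CVSS scores in the two CVE tables can be read against the qualitative severity rating scale defined in the CVSS v3.1 specification: None (0.0), Low (0.1 to 3.9), Medium (4.0 to 6.9), High (7.0 to 8.9), and Critical (9.0 to 10.0). The Python sketch below applies that scale as a reading aid only; the helper name `cvss3_severity` is illustrative, and nothing in the sketch implies that the model itself uses these labels.

```python
def cvss3_severity(score: float) -> str:
    """Map a CVSS v3.x base score to its qualitative severity rating."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


# CVSS v3.1 base scores transcribed from the two CVE tables
FIRST_TABLE = {
    "CVE-2024-5290": 7.8, "CVE-2024-1724": 8.2, "CVE-2023-5536": 6.4,
    "CVE-2023-32629": 7.8, "CVE-2023-2640": 7.8, "CVE-2023-30549": 7.8,
}
SECOND_TABLE = {
    "CVE-2023-6080": 7.8, "CVE-2023-7016": 7.8, "CVE-2023-5993": 7.8,
    "CVE-2023-32544": 5.5, "CVE-2023-29244": 7.8, "CVE-2023-47145": 7.8,
}

if __name__ == "__main__":
    for name, table in (("first", FIRST_TABLE), ("second", SECOND_TABLE)):
        labels = {cve: cvss3_severity(score) for cve, score in table.items()}
        print(name, labels)
```

Five of the six CVEs in each table rate High; CVE-2023-5536 (6.4) and CVE-2023-32544 (5.5) rate Medium.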

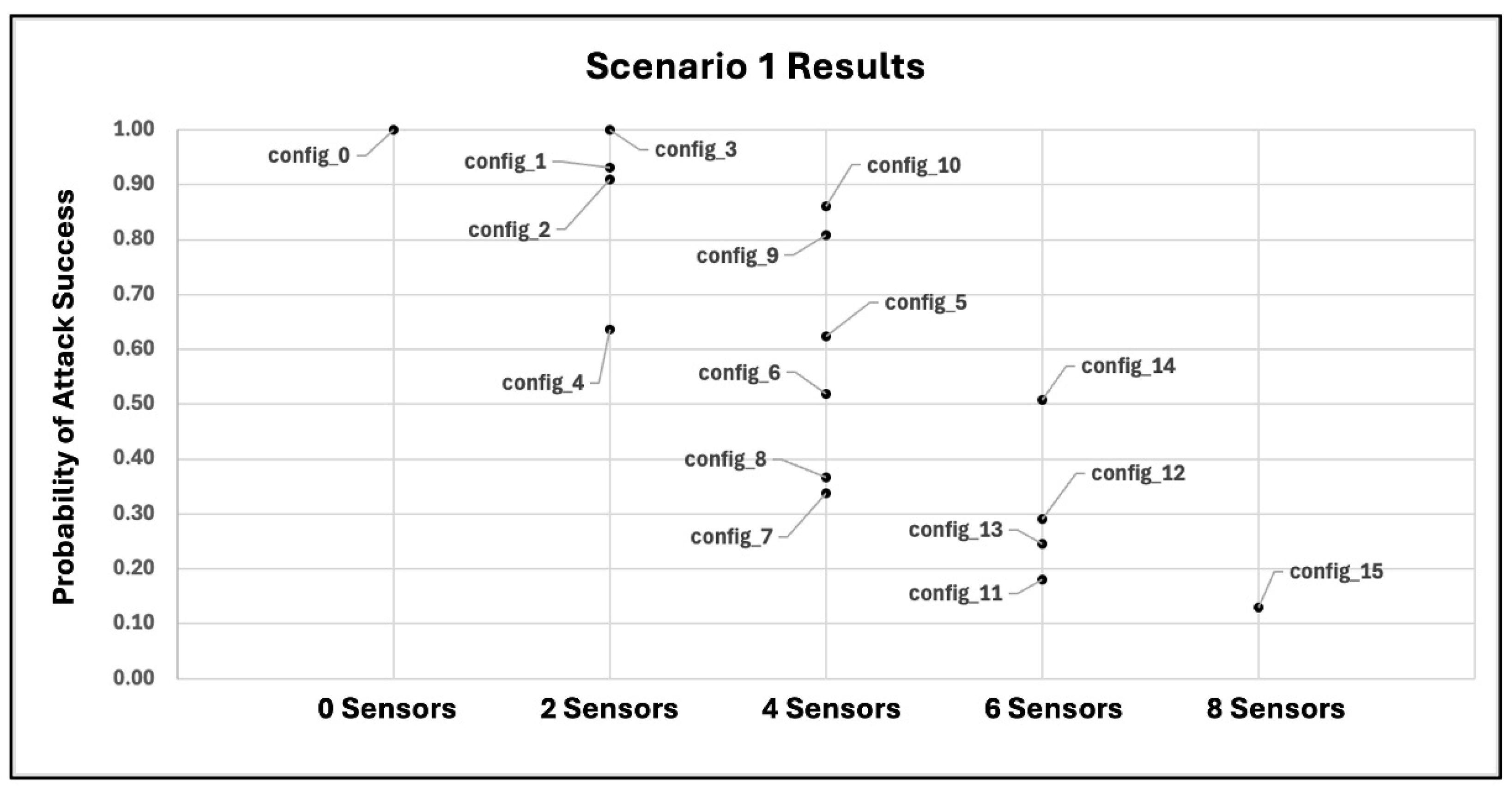

| Sensor Placement Configuration ID | Sensor Instances | Sensor Types (Sig. = signature-based; Anom. = anomaly-based) |
|---|---|---|
| config_0 | None | None |
| config_1 | Sensors #1–#2 | 1 Sig., 1 Anom. |
| config_2 | Sensors #3–#4 | 1 Sig., 1 Anom. |
| config_3 | Sensors #5–#6 | 2 Sig. |
| config_4 | Sensors #7–#8 | 1 Sig., 1 Anom. |
| config_5 | Sensors #1–#4 | 2 Sig., 2 Anom. |
| config_6 | Sensors #5–#8 | 3 Sig., 1 Anom. |
| config_7 | Sensors #3,#4,#7,#8 | 2 Sig., 2 Anom. |
| config_8 | Sensors #1,#2,#7,#8 | 2 Sig., 2 Anom. |
| config_9 | Sensors #3–#6 | 3 Sig., 1 Anom. |
| config_10 | Sensors #1,#2,#5,#6 | 3 Sig., 1 Anom. |
| config_11 | Sensors #1–#4,#7,#8 | 3 Sig., 3 Anom. |
| config_12 | Sensors #1,#2,#5–#8 | 4 Sig., 2 Anom. |
| config_13 | Sensors #3–#8 | 4 Sig., 2 Anom. |
| config_14 | Sensors #1–#6 | 4 Sig., 2 Anom. |
| config_15 | Sensors #1–#8 | 5 Sig., 3 Anom. |
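
Each configuration is a subset of the eight sensors, so its Sensor Types entry follows from the sensor table (sensors 1, 4, 5, 6 and 7 are signature-based; sensors 2, 3 and 8 are anomaly-based). The Python sketch below expands the compact sensor notation, including ranges such as #1–#4, and recomputes the signature/anomaly counts for every row. It also makes explicit that eight sensors admit 2^8 = 256 possible subsets, of which the table evaluates 16. The parsing helpers are illustrative and assume the notation exactly as it appears in the table.

```python
# Sensor types from the sensor table: "S" = signature-based, "A" = anomaly-based
SENSOR_TYPE = {1: "S", 2: "A", 3: "A", 4: "S", 5: "S", 6: "S", 7: "S", 8: "A"}

# (configuration ID, sensor instances, reported sensor types), transcribed from the table
ROWS = [
    ("config_0", "None", "None"),
    ("config_1", "Sensors #1–#2", "1 Sig., 1 Anom."),
    ("config_2", "Sensors #3–#4", "1 Sig., 1 Anom."),
    ("config_3", "Sensors #5–#6", "2 Sig."),
    ("config_4", "Sensors #7–#8", "1 Sig., 1 Anom."),
    ("config_5", "Sensors #1–#4", "2 Sig., 2 Anom."),
    ("config_6", "Sensors #5–#8", "3 Sig., 1 Anom."),
    ("config_7", "Sensors #3,#4,#7,#8", "2 Sig., 2 Anom."),
    ("config_8", "Sensors #1,#2,#7,#8", "2 Sig., 2 Anom."),
    ("config_9", "Sensors #3–#6", "3 Sig., 1 Anom."),
    ("config_10", "Sensors #1,#2,#5,#6", "3 Sig., 1 Anom."),
    ("config_11", "Sensors #1–#4,#7,#8", "3 Sig., 3 Anom."),
    ("config_12", "Sensors #1,#2,#5–#8", "4 Sig., 2 Anom."),
    ("config_13", "Sensors #3–#8", "4 Sig., 2 Anom."),
    ("config_14", "Sensors #1–#6", "4 Sig., 2 Anom."),
    ("config_15", "Sensors #1–#8", "5 Sig., 3 Anom."),
]


def parse_sensors(spec: str) -> list:
    """Expand e.g. 'Sensors #1–#4,#7,#8' into [1, 2, 3, 4, 7, 8]."""
    if spec == "None":
        return []
    body = spec.removeprefix("Sensors ").replace("#", "")
    ids = []
    for part in body.split(","):
        if "–" in part:  # the en dash marks an inclusive range
            lo, hi = part.split("–")
            ids.extend(range(int(lo), int(hi) + 1))
        else:
            ids.append(int(part))
    return ids


def parse_types(text: str) -> tuple:
    """Turn e.g. '3 Sig., 1 Anom.' into (3, 1)."""
    sig = anom = 0
    if text != "None":
        for part in text.split(","):
            count, kind = part.split()
            if kind.startswith("Sig"):
                sig = int(count)
            else:
                anom = int(count)
    return sig, anom


def type_counts(sensors: list) -> tuple:
    """Count (signature-based, anomaly-based) sensors in a configuration."""
    sig = sum(1 for s in sensors if SENSOR_TYPE[s] == "S")
    return sig, len(sensors) - sig


if __name__ == "__main__":
    for cfg, spec, reported in ROWS:
        ok = type_counts(parse_sensors(spec)) == parse_types(reported)
        print(cfg, parse_sensors(spec), "consistent" if ok else "MISMATCH")
    print(f"evaluated {len(ROWS)} of {2 ** len(SENSOR_TYPE)} possible subsets")
```

Every row's reported counts agree with the sensor table. Because the number of subsets doubles with each added sensor, exhaustive evaluation quickly becomes impractical, which is what motivates searching the configuration space rather than enumerating it.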

| Sensor Placement Configuration ID | Probability of Attack Success | Std. Error | 95% Confidence Interval |
|---|---|---|---|
| config_0 | 1.00 | 0.0000 | [1.000, 1.000] |
| config_1 | 0.93 | 0.0026 | [0.925, 0.935] |
| config_2 | 0.91 | 0.0029 | [0.904, 0.916] |
| config_3 | 1.00 | 0.0000 | [1.000, 1.000] |
| config_4 | 0.64 | 0.0048 | [0.631, 0.649] |
| config_5 | 0.62 | 0.0049 | [0.610, 0.630] |
| config_6 | 0.52 | 0.0050 | [0.510, 0.530] |
| config_7 | 0.34 | 0.0047 | [0.331, 0.349] |
| config_8 | 0.37 | 0.0048 | [0.361, 0.379] |
| config_9 | 0.81 | 0.0039 | [0.802, 0.818] |
| config_10 | 0.86 | 0.0035 | [0.853, 0.867] |
| config_11 | 0.18 | 0.0038 | [0.172, 0.188] |
| config_12 | 0.29 | 0.0045 | [0.281, 0.299] |
| config_13 | 0.25 | 0.0043 | [0.242, 0.258] |
| config_14 | 0.51 | 0.0050 | [0.500, 0.520] |
| config_15 | 0.13 | 0.0034 | [0.123, 0.137] |
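
The standard errors and 95% confidence intervals in this table are consistent with treating each entry as a Monte Carlo proportion estimate, with SE = sqrt(p(1 - p)/n) and a normal-approximation interval p ± 1.96·SE, if n = 10,000 trials per configuration (for example, p = 0.52 gives SE ≈ 0.0050). That trial count is inferred from the reported values rather than stated in this section, so `N_TRIALS` below is an assumption. The Python sketch reproduces several rows under that assumption; the helper names are illustrative.

```python
import math

Z_95 = 1.96        # two-sided 95% quantile of the standard normal (approx.)
N_TRIALS = 10_000  # ASSUMPTION: inferred from the reported standard errors


def bernoulli_se(p_hat: float, n_trials: int) -> float:
    """Standard error of a proportion estimated from n independent trials."""
    return math.sqrt(p_hat * (1.0 - p_hat) / n_trials)


def normal_ci(p_hat: float, n_trials: int, z: float = Z_95) -> tuple:
    """Normal-approximation (Wald) interval, clipped to [0, 1]."""
    se = bernoulli_se(p_hat, n_trials)
    return max(0.0, p_hat - z * se), min(1.0, p_hat + z * se)


if __name__ == "__main__":
    for cfg, p in [("config_1", 0.93), ("config_4", 0.64), ("config_15", 0.13)]:
        lo, hi = normal_ci(p, N_TRIALS)
        print(f"{cfg}: SE={bernoulli_se(p, N_TRIALS):.4f}, 95% CI=[{lo:.3f}, {hi:.3f}]")
```

With p = 1.00 the formula gives a zero standard error and a degenerate interval, matching config_0 and config_3; a Wilson or Clopper-Pearson interval would be the usual alternative when estimates sit at the boundary.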

| Sensor Placement Configuration ID | Probability of Attack Success | Std. Error | 95% Confidence Interval |
|---|---|---|---|
| config_0 | 1.00 | 0.0000 | [1.000, 1.000] |
| config_1 | 0.93 | 0.0026 | [0.925, 0.935] |
| config_2 | 0.92 | 0.0027 | [0.915, 0.925] |
| config_3 | 1.00 | 0.0000 | [1.000, 1.000] |
| config_4 | 0.66 | 0.0047 | [0.651, 0.669] |
| config_5 | 0.64 | 0.0048 | [0.631, 0.649] |
| config_6 | 0.61 | 0.0049 | [0.600, 0.620] |
| config_7 | 0.37 | 0.0048 | [0.361, 0.379] |
| config_8 | 0.39 | 0.0049 | [0.380, 0.400] |
| config_9 | 0.88 | 0.0033 | [0.874, 0.886] |
| config_10 | 0.90 | 0.0030 | [0.894, 0.906] |
| config_11 | 0.20 | 0.0040 | [0.192, 0.208] |
| config_12 | 0.35 | 0.0048 | [0.341, 0.359] |
| config_13 | 0.33 | 0.0047 | [0.321, 0.339] |
| config_14 | 0.59 | 0.0049 | [0.580, 0.600] |
| config_15 | 0.18 | 0.0038 | [0.172, 0.188] |
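
The two results tables report the same sixteen configurations. If the estimates in the two tables come from independent simulation runs (an assumption), the two-sample statistic z = (p_B - p_A) / sqrt(SE_A² + SE_B²) indicates which configurations differ by more than sampling noise. The Python sketch below applies it to the rounded values transcribed from the tables, labeling the first table A and the second B; the function names are illustrative, and with sixteen simultaneous comparisons a multiple-comparison correction would be a reasonable refinement.

```python
import math

# (probability of attack success, std. error), transcribed from the first (A) and second (B) results tables
TABLE_A = {
    "config_0": (1.00, 0.0), "config_1": (0.93, 0.0026), "config_2": (0.91, 0.0029),
    "config_3": (1.00, 0.0), "config_4": (0.64, 0.0048), "config_5": (0.62, 0.0049),
    "config_6": (0.52, 0.0050), "config_7": (0.34, 0.0047), "config_8": (0.37, 0.0048),
    "config_9": (0.81, 0.0039), "config_10": (0.86, 0.0035), "config_11": (0.18, 0.0038),
    "config_12": (0.29, 0.0045), "config_13": (0.25, 0.0043), "config_14": (0.51, 0.0050),
    "config_15": (0.13, 0.0034),
}
TABLE_B = {
    "config_0": (1.00, 0.0), "config_1": (0.93, 0.0026), "config_2": (0.92, 0.0027),
    "config_3": (1.00, 0.0), "config_4": (0.66, 0.0047), "config_5": (0.64, 0.0048),
    "config_6": (0.61, 0.0049), "config_7": (0.37, 0.0048), "config_8": (0.39, 0.0049),
    "config_9": (0.88, 0.0033), "config_10": (0.90, 0.0030), "config_11": (0.20, 0.0040),
    "config_12": (0.35, 0.0048), "config_13": (0.33, 0.0047), "config_14": (0.59, 0.0049),
    "config_15": (0.18, 0.0038),
}


def z_statistic(a: tuple, b: tuple) -> float:
    """Two-sample z statistic for the difference p_B - p_A of independent estimates."""
    (p_a, se_a), (p_b, se_b) = a, b
    diff = p_b - p_a
    se_diff = math.hypot(se_a, se_b)
    if se_diff == 0.0:  # both estimates have zero sampling error (e.g. p = 1.00)
        return 0.0 if diff == 0.0 else math.copysign(math.inf, diff)
    return diff / se_diff


def significant_changes(z_crit: float = 1.96) -> list:
    """Configurations whose estimates differ at roughly the 5% level (two-sided)."""
    return [cfg for cfg in TABLE_A
            if abs(z_statistic(TABLE_A[cfg], TABLE_B[cfg])) > z_crit]


if __name__ == "__main__":
    for cfg in TABLE_A:
        print(f"{cfg}: z = {z_statistic(TABLE_A[cfg], TABLE_B[cfg]):.2f}")
    print("significant:", significant_changes())
```

Under these assumptions every configuration except config_0, config_1 and config_3 differs significantly, and in each case the second table's probability of attack success is at least as high as the first's. For config_2 the two 95% intervals overlap, yet z ≈ 2.52, a reminder that overlapping intervals do not by themselves rule out a significant difference.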

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wagner, N.; Damodaran, S.K.; Reavey, M. Towards Optimal Sensor Placement for Cybersecurity: An Extensible Model for Defensive Cybersecurity Sensor Placement Evaluation. Sensors 2025, 25, 6022. https://doi.org/10.3390/s25196022
