MS-YOLOv11: A Wavelet-Enhanced Multi-Scale Network for Small Object Detection in Remote Sensing Images

Abstract

1. Introduction

1. Frequency-domain detail preservation: A 2D Haar wavelet transform is embedded at the input stage to explicitly decompose the image into a low-frequency approximation component and three high-frequency detail sub-bands. This process suppresses background noise while highlighting subtle edges and textures that are prone to being obscured.

2. Lightweight receptive field expansion: Small-kernel depthwise separable convolutions are independently applied to each sub-band. This approach exponentially expands the receptive field without introducing redundant parameters, preserving global context while avoiding excessive smoothing of small objects.

3. Adaptive cross-scale fusion: A Mix Structure Block (MSB) is designed, which includes the following:

   - An MSPLCK module that employs parallel dilated convolutions () for dense sampling, capturing multi-scale contextual information of extremely small targets;

   - An EPA module that utilizes dual channel-space attention mechanisms with residual connections to adaptively weight features, dynamically suppressing background interference and enhancing target features.
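The parameter and receptive-field arguments behind the second and third contributions can be checked with some simple arithmetic. The sketch below is illustrative only: the function names are hypothetical, and the channel count (64), kernel size (3), and dilation rates (1, 2, 3) are assumed values chosen for the example, not settings taken from the paper.

```python
def conv_params(k, c_in, c_out):
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Weights in a k x k depthwise convolution followed by a 1 x 1
    pointwise convolution (bias ignored)."""
    return k * k * c_in + c_in * c_out


def effective_kernel(k, dilation):
    """Span, in input pixels, covered by a k-tap kernel with the given dilation."""
    return k + (k - 1) * (dilation - 1)


if __name__ == "__main__":
    # Assumed example sizes, not values from the paper.
    k, channels = 3, 64
    print("standard conv weights:      ", conv_params(k, channels, channels))
    print("depthwise separable weights:", depthwise_separable_params(k, channels, channels))
    for d in (1, 2, 3):  # hypothetical dilation rates
        print(f"dilation {d}: effective kernel {effective_kernel(k, d)}")
```

With these assumed sizes the separable form needs roughly one eighth of the weights of a standard convolution, while each extra unit of dilation widens a 3-tap kernel by two pixels at no parameter cost.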

2. Related Work

2.1. Object Detection Methods

2.2. Deep Learning Algorithms for Small Object Detection

3. Proposed Model

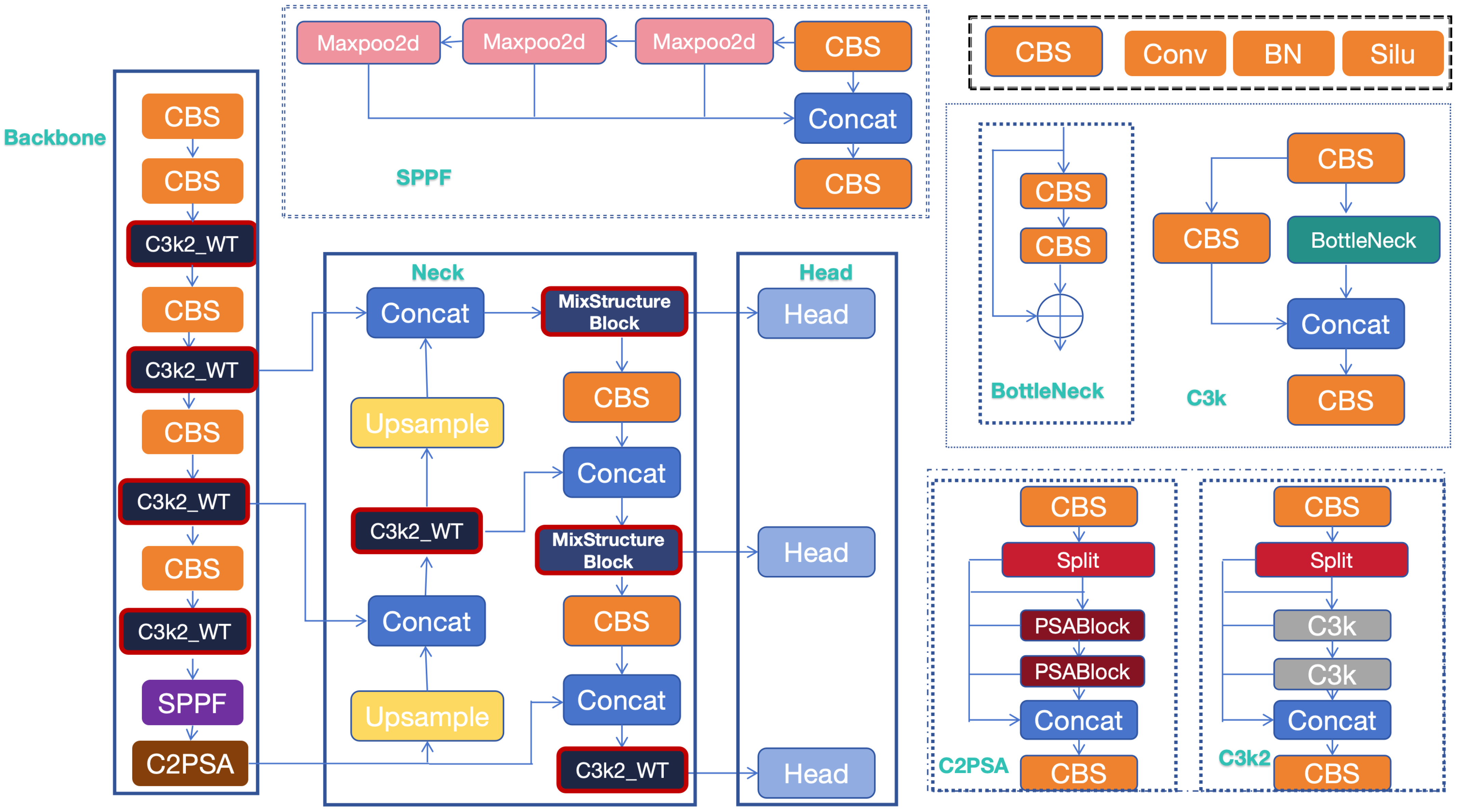

3.1. Overview of MS-YOLO Model

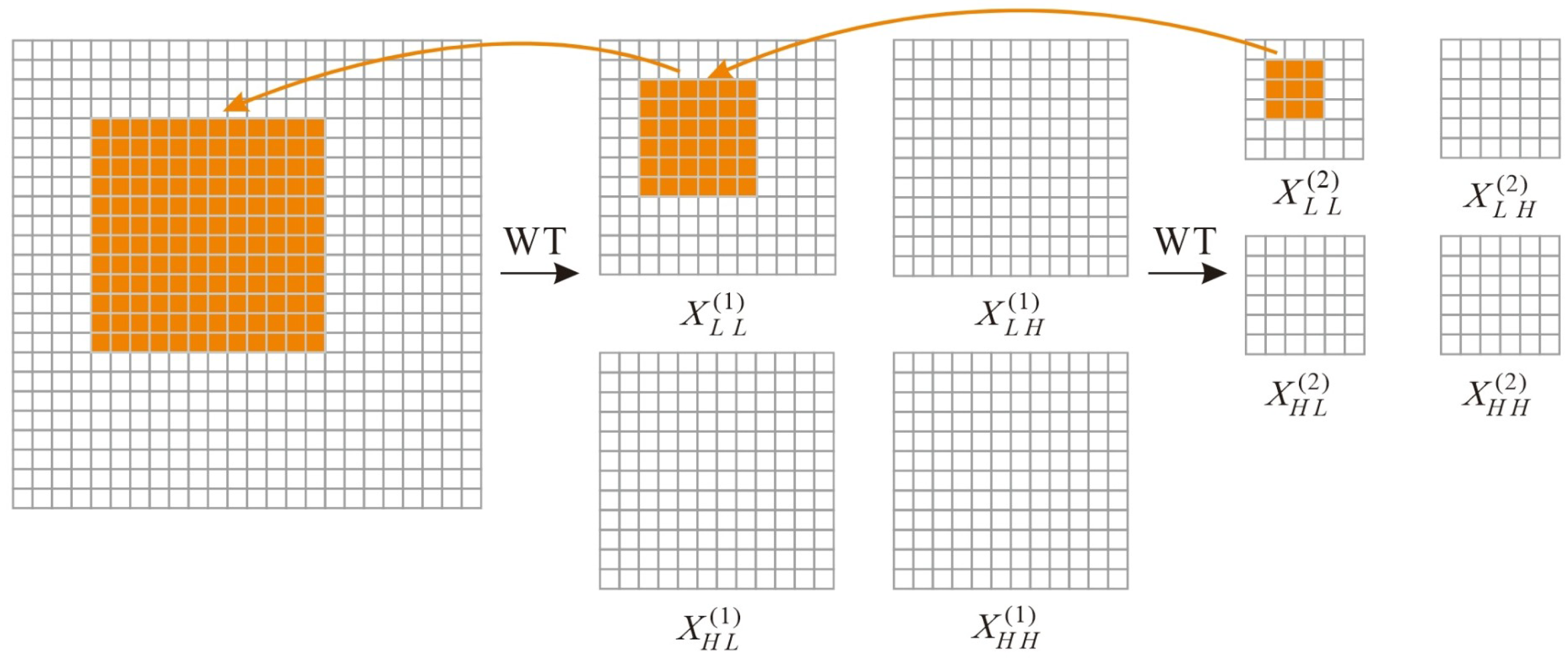

3.2. Algorithm Principle of Wavelet Transform

Algorithm 1. 2D Haar Wavelet Transform for Image Decomposition

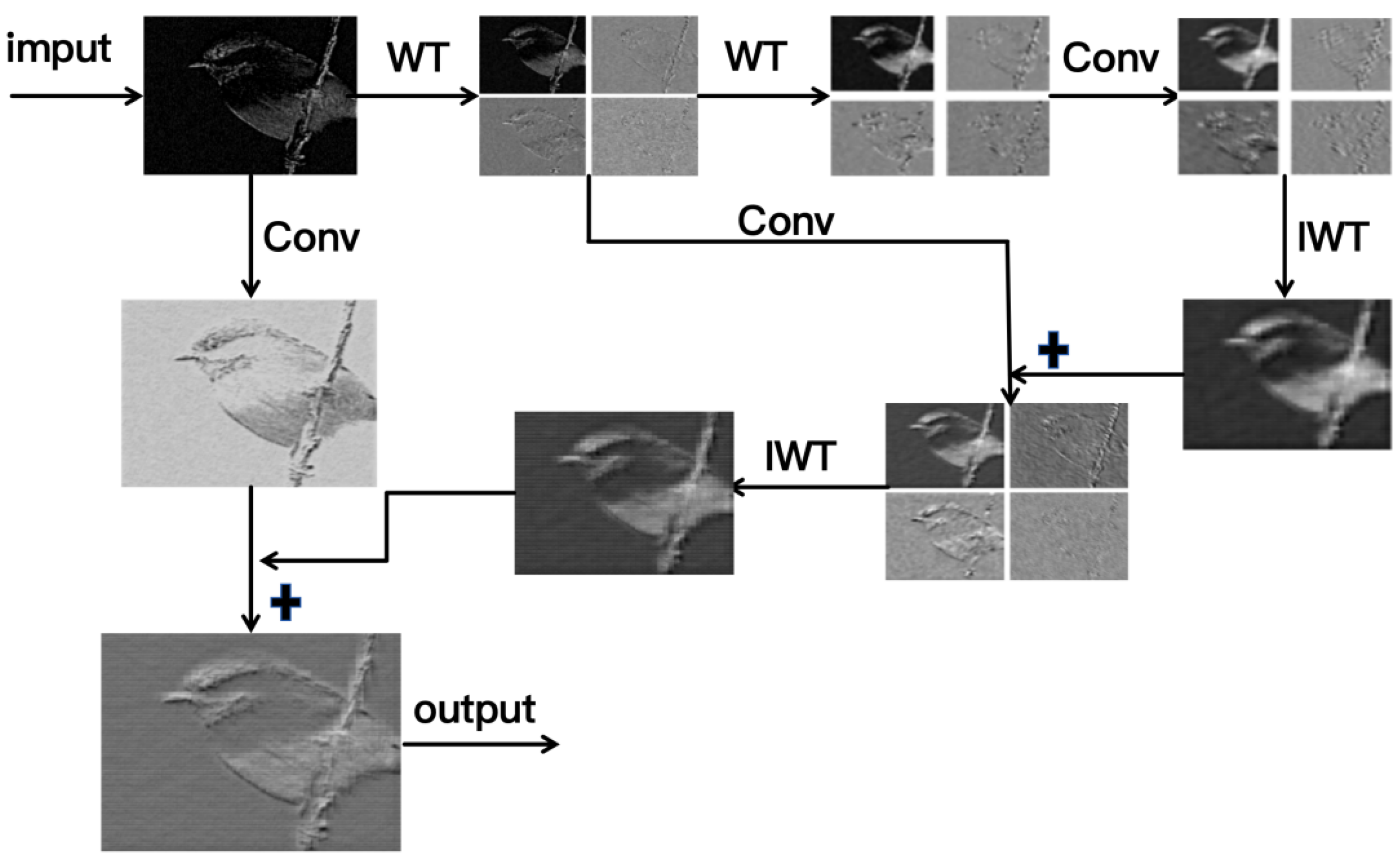
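For orientation, a single-level 2D Haar decomposition can be sketched in a few lines of plain Python. This is the standard orthonormal Haar transform applied to non-overlapping 2 × 2 blocks, written under the assumption of a single-channel image with even height and width. It is not a reproduction of the authors' Algorithm 1, and the function name and sub-band names are hypothetical.

```python
def haar_dwt2(image):
    """Single-level 2D Haar transform of a 2D list with even height and width.

    Returns four half-resolution sub-bands: (approx, horiz, vert, diag).
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("height and width must both be even")

    approx, horiz, vert, diag = [], [], [], []
    for i in range(0, rows, 2):
        a_row, h_row, v_row, d_row = [], [], [], []
        for j in range(0, cols, 2):
            # One 2 x 2 block: a b on the top row, c d on the bottom row.
            a, b = image[i][j], image[i][j + 1]
            c, d = image[i + 1][j], image[i + 1][j + 1]
            a_row.append((a + b + c + d) / 2)  # low-frequency approximation
            h_row.append((a + b - c - d) / 2)  # top minus bottom: horizontal edges
            v_row.append((a - b + c - d) / 2)  # left minus right: vertical edges
            d_row.append((a - b - c + d) / 2)  # diagonal detail
        approx.append(a_row)
        horiz.append(h_row)
        vert.append(v_row)
        diag.append(d_row)
    return approx, horiz, vert, diag


if __name__ == "__main__":
    bands = haar_dwt2([[1, 2], [3, 4]])
    print(bands)  # ([[5.0]], [[-2.0]], [[-1.0]], [[0.0]])
```

The division by 2 keeps the transform orthonormal, so the sum of squared values is the same before and after decomposition (30 in the example above), and a flat region produces zeros in all three detail sub-bands.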

3.3. Wavelet Transform Convolutional Neural Network

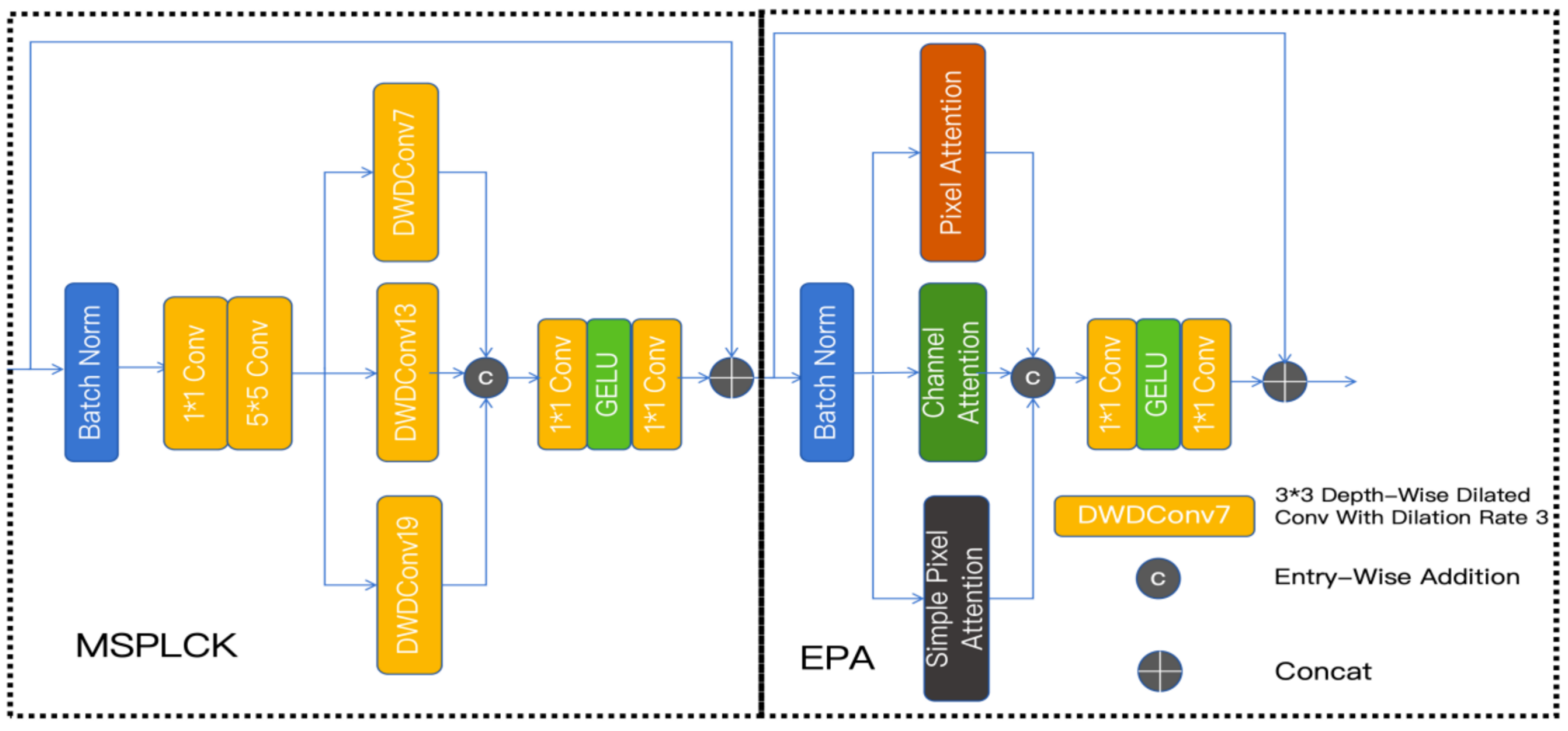

3.4. Mix Structure Block

3.4.1. Multi-Scale Parallel Large Convolutional Kernel Module (MSPLCK)

3.4.2. Enhanced Parallel Attention Module

4. Experiment

4.1. Datasets

4.2. Evaluation Metrics

- mAP@0.50: mAP at a single IoU threshold of 0.50;

- mAP@0.75: mAP at a stricter IoU threshold of 0.75;

- mAP@0.50:0.95: Average mAP computed over multiple IoU thresholds from 0.50 to 0.95 in steps of 0.05;

- mAP(small): Measures mAP@0.50 for small objects only, defined as those with an area of less than 32 × 32 pixels according to the COCO standard.
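The quantities these metrics depend on can be sketched as follows. The example uses axis-aligned boxes in `(x1, y1, x2, y2)` form for simplicity, which is an assumption made here; evaluation on oriented boxes needs a rotated-polygon IoU instead. The helper names are hypothetical, and the 32 × 32 area limit is the COCO definition of a small object.

```python
def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds averaged by mAP@0.50:0.95 (0.50, 0.55, ..., 0.95).
IOU_THRESHOLDS = [round(0.50 + 0.05 * i, 2) for i in range(10)]


def is_small(box, max_area=32 * 32):
    """True if the box area is below the COCO small-object limit."""
    return (box[2] - box[0]) * (box[3] - box[1]) < max_area


if __name__ == "__main__":
    pred, truth = (0, 0, 10, 10), (5, 0, 15, 10)
    iou = box_iou(pred, truth)
    # A prediction counts as a match at a threshold only if its IoU reaches it.
    matched_at = [t for t in IOU_THRESHOLDS if iou >= t]
    print(f"IoU = {iou:.3f}, matched at thresholds: {matched_at}")
    print("small object:", is_small(truth))
```

In the usage example the two boxes overlap by one third, so the prediction would not count as a true positive even at the loosest threshold of 0.50.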

4.3. Experiment Setup

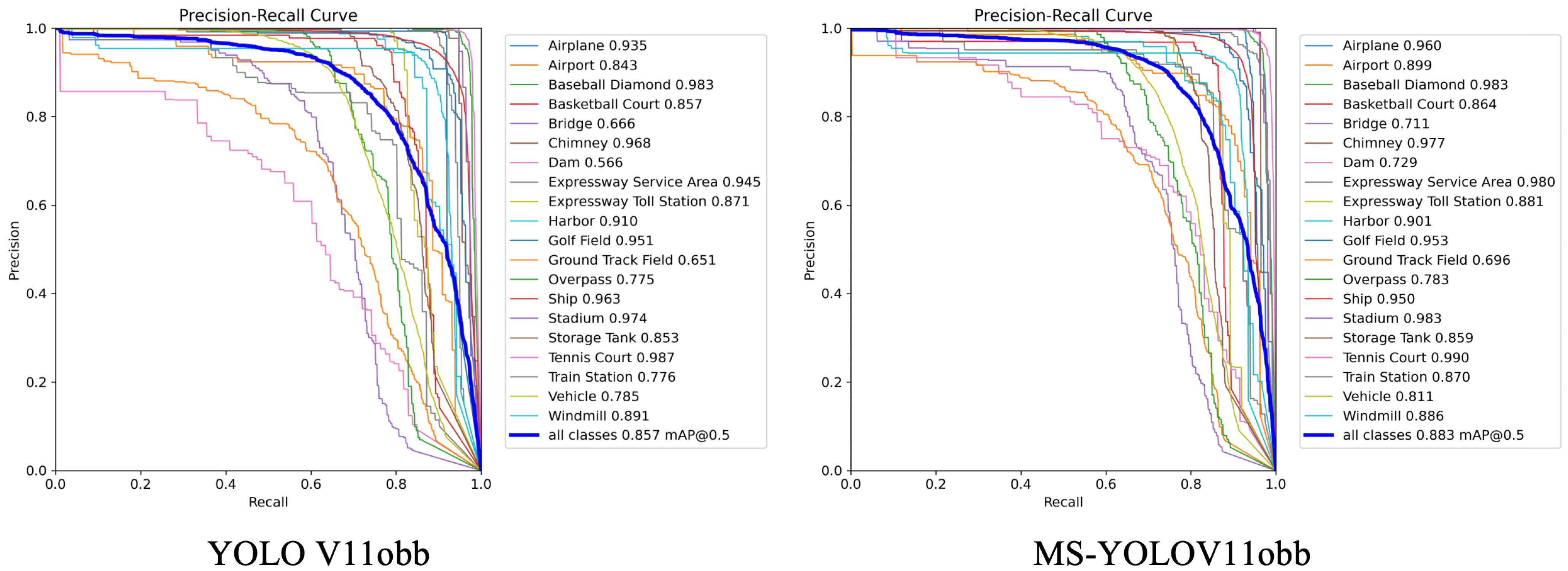

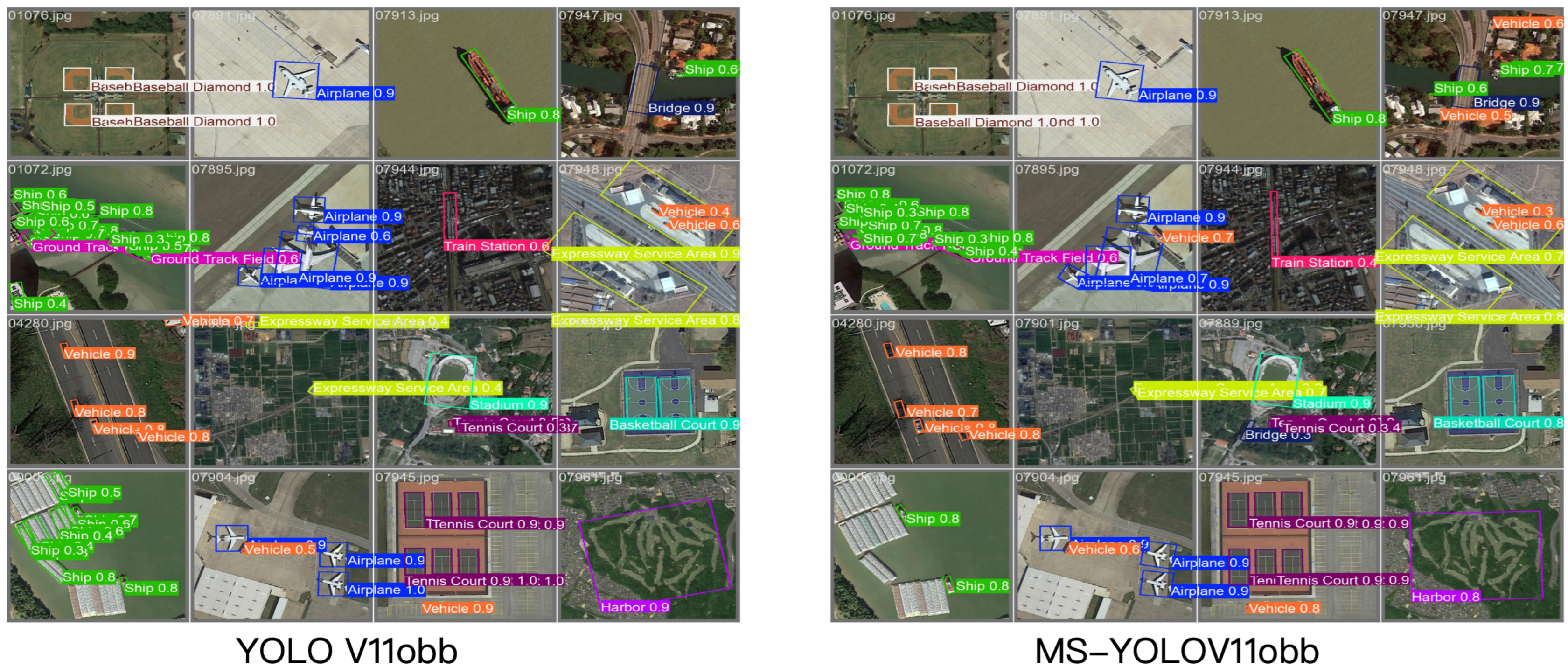

4.4. Experiment Results

4.5. Ablation Experiments

4.6. Comparison with Other Detection Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, J.; Pei, Y.; Zhao, S.; Xiao, R.; Sang, X.; Zhang, C. A review of remote sensing for environmental monitoring in China. Remote Sens. 2020, 12, 1130.

2. Wellmann, T.; Lausch, A.; Andersson, E.; Knapp, S.; Cortinovis, C.; Jache, J.; Scheuer, S.; Kremer, P.; Mascarenhas, A.; Kraemer, R.; et al. Remote sensing in urban planning: Contributions towards ecologically sound policies? Landsc. Urban Plan. 2020, 204, 103921.

3. Yamazaki, F.; Matsuoka, M. Remote sensing technologies in post-disaster damage assessment. J. Earthq. Tsunami 2007, 1, 193–210.

4. Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A survey of convolutional neural networks: Analysis, applications, and prospects. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6999–7019.

5. Padilla, R.; Netto, S.L.; Da Silva, E.A.B. A survey on performance metrics for object-detection algorithms. In Proceedings of the 2020 International Conference on Systems, Signals and Image Processing (IWSSIP), Niteroi, Brazil, 1–3 July 2020; pp. 237–242.

6. Wang, L.; Bai, J.; Li, W.; Jiang, J. Research Progress of YOLO Series Target Detection Algorithms. J. Comput. Eng. Appl. 2023, 59, 15.

7. Liu, Z.; Gao, Y.; Du, Q. Yolo-class: Detection and classification of aircraft targets in satellite remote sensing images based on yolo-extract. IEEE Access 2023, 11, 109179–109188.

8. Betti, A.; Tucci, M. YOLO-S: A lightweight and accurate YOLO-like network for small target detection in aerial imagery. Sensors 2023, 23, 1865.

9. Qu, J.; Tang, Z.; Zhang, L.; Zhang, Y.; Zhang, Z. Remote sensing small object detection network based on attention mechanism and multi-scale feature fusion. Remote Sens. 2023, 15, 2728.

10. Wang, Y.-Q. An analysis of the Viola-Jones face detection algorithm. Image Process. Line 2014, 4, 128–148.

11. Dadi, H.S.; Pillutla, G.K.M. Improved face recognition rate using HOG features and SVM classifier. IOSR J. Electron. Commun. Eng. 2016, 11, 34–44.

12. Zhou, X.; Koltun, V.; Krähenbühl, P. Probabilistic two-stage detection. arXiv 2021, arXiv:2103.07461.

13. Li, B.; Liu, Y.; Wang, X. Gradient harmonized single-stage detector. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 8577–8584.

14. Liu, B.; Zhao, W.; Sun, Q. Study of object detection based on Faster R-CNN. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 6233–6236.

15. Zhang, G.; Yu, W.; Hou, R. Mfil-fcos: A multi-scale fusion and interactive learning method for 2d object detection and remote sensing image detection. Remote Sens. 2024, 16, 936.

16. Yuan, Z.; Gong, J.; Guo, B.; Wang, C.; Liao, N.; Song, J.; Wu, Q. Small object detection in uav remote sensing images based on intra-group multi-scale fusion attention and adaptive weighted feature fusion mechanism. Remote Sens. 2024, 16, 4265.

17. Li, J.; Huang, K. Lightweight multi-scale feature fusion network for salient object detection in optical remote sensing images. Electronics 2024, 14, 8.

18. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

19. Yilmaz, E.N.; Navruz, T.S. Real-Time Object Detection: A Comparative Analysis of YOLO, SSD, and EfficientDet Algorithms. In Proceedings of the 2025 7th International Congress on Human-Computer Interaction, Optimization and Robotic Applications (ICHORA), Ankara, Turkiye, 23–24 May 2025; pp. 1–9.

20. Sun, G.; Zhang, Y.; Sun, W.; Liu, Z.; Wang, C. Multi-scale prediction of the effective chloride diffusion coefficient of concrete. Constr. Build. Mater. 2011, 25, 3820–3831.

21. Wang, C.-Y.; Liao, H.-Y.M.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W.; Yeh, I.-H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

22. Choi, S.R.; Lee, M. Transformer architecture and attention mechanisms in genome data analysis: A comprehensive review. Biology 2023, 12, 1033.

23. Elsken, T.; Metzen, J.H.; Hutter, F. Neural architecture search: A survey. J. Mach. Learn. Res. 2019, 20, 1–21.

24. Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9759–9768.

25. Wang, S.; Park, S.; Kim, J.; Kim, J. Safety Helmet Monitoring on Construction Sites Using YOLOv10 and Advanced Transformer Architectures with Surveillance and Body-Worn Cameras. J. Constr. Eng. Manag. 2025, 151, 04025186.

26. Cheng, Y.; Wang, W.; Zhang, W.; Yang, L.; Wang, J.; Ni, H.; Guan, T.; He, J.; Gu, Y.; Tran, N.N. A multi-feature fusion and attention network for multi-scale object detection in remote sensing images. Remote Sens. 2023, 15, 2096.

27. Chen, J.; Kao, S.-h.; He, H.; Zhuo, W.; Wen, S.; Lee, C.-H.; Chan, S.-H.G. Run, don’t walk: Chasing higher FLOPS for faster neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 12021–12031.

28. Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. Detrs beat yolos on real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16965–16974.

29. Liu, J.; Cao, Y.; Wang, Y.; Guo, C.; Zhang, H.; Dong, C. SO-RTDETR for Small Object Detection in Aerial Images. Preprints 2024, 2024101980.

30. Peng, H.; Xie, H.; Liu, H.; Guan, X. LGFF-YOLO: Small object detection method of UAV images based on efficient local–global feature fusion. J. Real-Time Image Process. 2024, 21, 167.

31. Wei, J.; Liang, J.; Song, J.; Zhou, P. YOLO-PBESW: A Lightweight Deep Learning Model for the Efficient Identification of Indomethacin Crystal Morphologies in Microfluidic Droplets. Micromachines 2024, 15, 1136.

32. Cao, X.; Su, Y.; Geng, X.; Wang, Y. YOLO-SF: YOLO for fire segmentation detection. IEEE Access 2023, 11, 111079–111092.

33. Zhang, Y.; Xiang, T.; Hospedales, T.M.; Lu, H. Deep mutual learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4320–4328.

34. Xie, X.; Lang, C.; Miao, S.; Cheng, G.; Li, K.; Han, J. Mutual-assistance learning for object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 15171–15184.

35. Jiao, Y.; Yao, H.; Xu, C. Dual instance-consistent network for cross-domain object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 7338–7352.

36. Yao, B.; Zhang, C.; Meng, Q.; Sun, X.; Hu, X.; Wang, L.; Li, X. SRM-YOLO for Small Object Detection in Remote Sensing Images. Remote Sens. 2025, 17, 2099.

37. Park, S.; Kim, J.; Kim, J.; Wang, S. Fault diagnosis of air handling units in an auditorium using real operational labeled data across different operation modes. J. Comput. Civ. Eng. 2025, 39, 04025065.

38. Finder, S.E.; Amoyal, R.; Treister, E.; Freifeld, O. Wavelet convolutions for large receptive fields. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September 2024; pp. 363–380.

39. Ding, X.; Zhang, X.; Han, J.; Ding, G. Scaling up your kernels to 31×31: Revisiting large kernel design in cnns. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11963–11975.

40. Lu, L.; Xiong, Q.; Xu, B.; Chu, D. Mixdehazenet: Mix structure block for image dehazing network. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–10.

41. Li, K.; Wan, G.; Cheng, G.; Meng, L.; Han, J. Object detection in optical remote sensing images: A survey and a new benchmark. ISPRS J. Photogramm. Remote Sens. 2020, 159, 296–307.

42. Xia, G.-S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A large-scale dataset for object detection in aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983.

43. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

44. Hou, L.; Lu, K.; Xue, J.; Li, Y. Shape-adaptive selection and measurement for oriented object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Oxford, UK, 19–21 May 2021; Volume 36, pp. 923–932.

45. Ding, J.; Xue, N.; Long, Y.; Xia, G.-S.; Lu, Q. Learning RoI transformer for oriented object detection in aerial images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2849–2858.

46. Xu, Y.; Fu, M.; Wang, Q.; Wang, Y.; Chen, K.; Xia, G.-S.; Bai, X. Gliding vertex on the horizontal bounding box for multi-oriented object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 1452–1459.

47. Yang, X.; Yang, X.; Yang, J.; Ming, Q.; Wang, W.; Tian, Q.; Yan, J. Learning high-precision bounding box for rotated object detection via kullback-leibler divergence. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–14 December 2021; Volume 34, pp. 18381–18394.

48. Li, Z.; Hou, B.; Wu, Z.; Ren, B.; Yang, C. FCOSR: A simple anchor-free rotated detector for aerial object detection. Remote Sens. 2023, 15, 5499.

49. Li, W.; Chen, Y.; Hu, K.; Zhu, J. Oriented reppoints for aerial object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1829–1838.

50. Yang, W.; Li, Q.; Li, Q.; Huang, H. Remote Sensing Image Object Detection Method with Multi-Scale Feature Enhancement Module. In Proceedings of the 2025 5th International Conference on Neural Networks, Information and Communication Engineering (NNICE), Guangzhou, China, 10–12 January 2025; pp. 457–460.

51. Xu, C.; Ding, J.; Wang, J.; Yang, W.; Yu, H.; Yu, L.; Xia, G.-S. Dynamic coarse-to-fine learning for oriented tiny object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7318–7328.

52. Yu, J.; Huang, W.; Shen, W. An Improved YOLOv8 Algorithm for Oriented Object Detection in Remote Sensing Images. In Proceedings of the 2024 6th International Conference on Robotics and Computer Vision (ICRCV), Wuxi, China, 20–22 September 2024; pp. 88–92.

| Category | Component | Specification/Version |
|---|---|---|
| Hardware Environment | CPU | Intel Xeon Platinum 8369B @ 2.90 GHz (Intel, Santa Clara, CA, USA) |
| | RAM | 512 GB DDR4 |
| | GPU | NVIDIA A100-SXM4-80 GB (x1) |
| | GPU Memory | 80 GB |
| Software Environment | OS | Ubuntu 20.04.6 LTS |
| | CUDA | 11.1 |
| | cuDNN | 8.0.5 |
| | Python | 3.8.20 |
| | PyTorch | 1.9.0+cu111 |
| | Framework | Ultralytics YOLOv11 (v8.3.54) |

| Model | Metric | D01 | D02 | D03 | D04 | D05 | D06 | D07 | D08 | D09 | D10 | D11 | D12 | D13 | D14 | D15 | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv11obb | P | 0.9347 | 0.9031 | 0.9337 | 0.8154 | 0.9741 | 0.6699 | 0.7465 | 0.8449 | 0.6605 | 0.8644 | 0.6602 | 0.5024 | 0.7561 | 0.6880 | 0.7112 | 0.7738 |
| | R | 0.8871 | 0.8572 | 0.5970 | 0.7122 | 0.9001 | 0.5329 | 0.5665 | 0.8359 | 0.4426 | 0.8325 | 0.7171 | 0.5672 | 0.5050 | 0.4929 | 0.7117 | 0.6772 |
| | F1 | 0.9103 | 0.8795 | 0.7283 | 0.7603 | 0.9231 | 0.5936 | 0.6441 | 0.8403 | 0.5268 | 0.8481 | 0.6820 | 0.5328 | 0.6177 | 0.5561 | 0.7114 | 0.7170 |
| | AP | 0.9308 | 0.9016 | 0.7674 | 0.7764 | 0.9462 | 0.5871 | 0.6314 | 0.5333 | 0.4800 | 0.8824 | 0.7221 | 0.5749 | 0.5913 | 0.5021 | 0.6815 | 0.7219 |
| MS-YOLOv11obb | P | 0.9401 | 0.7720 | 0.9421 | 0.8379 | 0.9980 | 0.6610 | 0.7744 | 0.8719 | 0.6875 | 0.9000 | 0.7417 | 0.5218 | 0.8463 | 0.6909 | 0.8933 | 0.8013 |
| | R | 0.8863 | 0.7531 | 0.8759 | 0.7402 | 0.8651 | 0.4723 | 0.5736 | 0.8107 | 0.4311 | 0.8456 | 0.7749 | 0.6009 | 0.8875 | 0.5432 | 0.8917 | 0.6970 |
| | F1 | 0.9124 | 0.7624 | 0.7240 | 0.7860 | 0.9001 | 0.5509 | 0.6590 | 0.8402 | 0.5239 | 0.8719 | 0.7580 | 0.5945 | 0.6935 | 0.6082 | 0.8925 | 0.7389 |
| | AP | 0.9339 | 0.7885 | 0.7692 | 0.8065 | 0.9254 | 0.5589 | 0.6635 | 0.8686 | 0.4856 | 0.9009 | 0.8019 | 0.6424 | 0.6718 | 0.5518 | 0.9410 | 0.7532 |

| Model | Metric | D01 | D02 | D03 | D04 | D05 | D06 | D07 | D08 | D09 | D10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv11obb | P | 0.9862 | 0.8046 | 0.9764 | 0.9394 | 0.8489 | 0.9767 | 0.6326 | 0.9326 | 0.9708 | 0.9041 |
| | R | 0.9162 | 0.7786 | 0.9533 | 0.7902 | 0.5552 | 0.9473 | 0.5591 | 0.9167 | 0.8031 | 0.8431 |
| | F1 | 0.9499 | 0.7914 | 0.9647 | 0.8584 | 0.6713 | 0.9618 | 0.5936 | 0.9246 | 0.8791 | 0.8726 |
| | AP | 0.9355 | 0.8429 | 0.9829 | 0.8567 | 0.6657 | 0.9682 | 0.5661 | 0.9449 | 0.8711 | 0.9103 |
| MS-YOLOv11obb | P | 0.9874 | 0.8452 | 0.9835 | 0.9317 | 0.8458 | 0.9729 | 0.7281 | 0.9499 | 0.8900 | 0.8588 |
| | R | 0.9295 | 0.8358 | 0.9383 | 0.8333 | 0.6434 | 0.9649 | 0.6842 | 0.9356 | 0.8036 | 0.8387 |
| | F1 | 0.9575 | 0.8405 | 0.9604 | 0.8798 | 0.7309 | 0.9689 | 0.7055 | 0.9427 | 0.8446 | 0.8487 |
| | AP | 0.9600 | 0.8993 | 0.9833 | 0.8637 | 0.7113 | 0.9767 | 0.7285 | 0.9803 | 0.8814 | 0.9008 |

| Model | Metric | D11 | D12 | D13 | D14 | D15 | D16 | D17 | D18 | D19 | D20 | AVG |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv11obb | P | 0.9316 | 0.7697 | 0.9176 | 0.9391 | 0.9636 | 0.9497 | 0.9828 | 0.7352 | 0.9535 | 0.9315 | 0.9023 |
| | R | 0.8819 | 0.5579 | 0.6523 | 0.9062 | 0.9474 | 0.7126 | 0.9627 | 0.7921 | 0.5786 | 0.8563 | 0.7955 |
| | F1 | 0.9060 | 0.6469 | 0.7626 | 0.9224 | 0.9554 | 0.8142 | 0.9727 | 0.7626 | 0.7202 | 0.8923 | 0.8411 |
| | AP | 0.9507 | 0.6507 | 0.7750 | 0.9629 | 0.9740 | 0.8527 | 0.9875 | 0.7757 | 0.7850 | 0.8910 | 0.8575 |
| MS-YOLOv11obb | P | 0.9293 | 0.8027 | 0.8514 | 0.9409 | 0.9911 | 0.9339 | 0.9756 | 0.8392 | 0.9173 | 0.9158 | 0.9045 |
| | R | 0.9113 | 0.6065 | 0.6910 | 0.9098 | 0.9524 | 0.7766 | 0.9634 | 0.8534 | 0.6676 | 0.8839 | 0.8312 |
| | F1 | 0.9202 | 0.6910 | 0.7629 | 0.9251 | 0.9713 | 0.8480 | 0.9695 | 0.8463 | 0.7728 | 0.8996 | 0.8643 |
| | AP | 0.9528 | 0.6961 | 0.7826 | 0.9503 | 0.9833 | 0.8587 | 0.9904 | 0.8702 | 0.8113 | 0.8858 | 0.8833 |

| Wavelet Base | mAP@50 (%) | mAP@50:95 (%) | mAP(small) (%) | FPS | Params (M) |
|---|---|---|---|---|---|
| Haar (Ours) | 88.33 | 70.90 | 84.21 | 447.8 | 2.87 |
| Db4 | 88.15 | 70.75 | 83.95 | 421.5 | 2.87 |
| Db8 | 88.41 | 70.88 | 84.10 | 415.2 | 2.87 |
| Sym4 | 88.28 | 70.80 | 83.87 | 423.1 | 2.87 |
| Sym8 | 88.50 | 71.02 | 84.35 | 412.7 | 2.87 |
| Bior1.3 | 87.95 | 70.45 | 83.70 | 440.1 | 2.87 |
| Bior2.4 | 88.45 | 70.95 | 84.28 | 418.3 | 2.87 |

| Model Variant | Parameters | mAP@50 (%) | mAP@75 (%) | mAP@50:95 (%) | FPS |
|---|---|---|---|---|---|
| YOLOv11-OBB | 2,802,647 | 85.75 | 71.86 | 66.66 | 302.17 |
| YOLOv11-OBB + WTConv | 2,741,591 | 86.96 | 73.62 | 68.88 | 420.35 |
| YOLOv11-OBB + Mix | 3,254,025 | 87.81 | 75.79 | 70.50 | 376.43 |
| MS-YOLOv11 | 2,865,383 | 88.33 | 76.19 | 70.90 | 447.81 |

| Method | Backbone | SH | STO | VE | mAP |
|---|---|---|---|---|---|
| Faster R-CNN-O [43] | R-50 | 79.4 | 67.6 | 46.2 | 62.0 |
| SASM [44] | R-50 | 83.6 | 63.9 | 43.6 | 62.2 |
| RoI Transformer [45] | R-50 | 81.2 | 62.5 | 43.8 | 64.8 |
| Gliding Vertex [46] | R-50 | 81.0 | 62.5 | 43.2 | 62.9 |
| KLD [47] | R-50 | 80.9 | 68.0 | 47.8 | 64.6 |
| FCOSR [48] | R-50 | 81.0 | 62.5 | 43.1 | 63.4 |
| Oriented RepPoints [49] | R-50 | 85.1 | 65.3 | 48.0 | 66.3 |
| LSKNet-S * [50] | LSK-S | 81.2 | 70.9 | 49.8 | 71.6 |
| † DCFL [51] | R-50 | - | - | 50.9 | 71.0 |
| YOLO V8obb * [52] | CSP | - | - | - | 86.3 |
| YOLO V8obb | CSP | 94.91 | 81.95 | 79.78 | 84.96 |
| YOLO V11obb | CSP | 96.29 | 85.27 | 78.50 | 85.75 |
| MS-YOLOv11obb | CSP | 95.03 | 85.87 | 81.13 | 88.33 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, H.; Li, X.; Wang, L.; Zhang, Y.; Wang, Z.; Lu, Q. MS-YOLOv11: A Wavelet-Enhanced Multi-Scale Network for Small Object Detection in Remote Sensing Images. Sensors 2025, 25, 6008. https://doi.org/10.3390/s25196008
