1. Introduction

The precise delineation of agricultural parcels is the basis for land management, subsidy control, precision agriculture, and compliance with environmental regulations [1,2]. This task is difficult in fragmented landscapes, where parcels vary in shape, size, farming practices, and phenology, and where fixed physical boundaries are rare [3]. Conventional pipelines typically rely on high-resolution RGB or multispectral single-date images, which require significant manual effort to obtain accurate results and still miss important seasonal features [4,5]. Single-date inputs are either spectrally flat, as is the case with RGB images, or temporally blind, as is the case with a single multispectral scene, so they lack the phenological cues that are important for boundary delineation [6]. Deep learning offers workarounds, but these usually require large annotated datasets and extensive training, which limits their use [7,8].

In the past, field delineation relied on manual digitisation or on semi-automated methods based on aerial photography and cadastral data, which were accurate but labour-intensive, time-consuming, and costly, especially in areas with highly fragmented parcels or rapid changes in land use [9]. The advent of satellite remote sensing has transformed data availability, enabling large-scale, timely, and cost-effective monitoring [10]. Modern delineation algorithms can be divided into three categories: edge detection, region-based, and integrated hybrid methods [11]. A recent comprehensive review of agricultural parcel and boundary delineation confirms that the overwhelming majority of methods rely on spatial or spectral features, with deep learning models dominating recent work, while temporal phenology is typically treated only as an auxiliary input [12]. This imbalance underscores that phenology remains underused in field delineation, despite the increasing availability of dense satellite image time series.

Harmonic analysis provides a compact, interpretable summary of seasonal dynamics and has proven useful in vegetation monitoring, land use mapping, and anomaly detection [13,14]. It decomposes the NDVI time series into a mean, an amplitude, and a phase, isolating cyclical vegetation dynamics from long-term trends; in this work, these components drive the recolouring step. Modern zero-shot segmentation models (e.g., the Segment Anything Model, SAM) can propose segmentation masks without domain-specific training, but their effectiveness depends on how clearly the input expresses boundary-relevant contrasts [15]. Cylindrical colour spaces (HSV, HWB, LCH) are a natural way to represent complementary cues (timing, seasonal range, mean level) as separable channels for both human and algorithmic interpretation [16]. A common challenge in remote sensing is persistent cloud cover and irregular acquisition, which can create gaps in the NDVI time series and reduce the robustness of seasonal signal extraction. This limitation can increasingly be mitigated: harmonic regression based on the Fourier series has been shown to interpolate through noisy or incomplete records while preserving phenological dynamics, which makes it a promising tool for agricultural monitoring even under cloud-prone conditions [17,18]. Unlike linear interpolation or compositing, harmonic regression yields smooth, periodic representations of vegetation dynamics and is robust against residual atmospheric noise such as haze and cirrus clouds [19]. Studies [17,18] have demonstrated its effectiveness for filling temporal gaps in Landsat and Sentinel time series.

Amin et al. [20] propose an operational ResUNet-a d7 multi-task network using freely available Sentinel-2 monthly syntheses, coupled with a Gaussian Mixture post-processing step to refine the boundaries between adjacent parcels. Evaluated at 14 sites in France over two years, their system attains a weighted F1 score of 92% and is explicitly designed for early-season delineation with single-date composites, which limits dependence on long, cloud-free time series and heavy preprocessing [20]. The study also documents practical constraints of the approach and argues for methods that can operate with minimal temporal input, especially early in the season. These observations motivated our choice to encode phenology compactly, via harmonic NDVI, and to keep the segmenter zero-shot and training-free.

In parallel, recent works also target crop mapping, which is methodologically relevant because it demonstrates how temporal cues and ensembles improve agricultural inference at scale. Hosseini et al. [21] developed a stacking ensemble on Google Earth Engine (GEE) that fuses Sentinel-2 and Landsat-8/9 monthly composites. Four base learners are combined using a Minimum-Distance meta-classifier, achieving an overall accuracy of 94.24% in 2021 and maintaining 90.97–91.82% overall accuracy when transferred to other years without retraining [21]. Although [21] focuses on classifying Cropping Intensity Patterns rather than on boundary extraction, its findings strengthen our premise that temporally informed representations are decisive in agricultural remote sensing.

Similarly, the authors of [22] present an ensemble-based framework for corn and soybean mapping on GEE. Their pipeline first injects phenological priors by restricting the analysis to phenophases with maximal separability, then augments the spectra with Gray-Level Co-occurrence Matrix (GLCM) texture features, and finally performs feature-importance filtering before voting across three supervised classifiers [22]. Validation is performed at field scale in Illinois and Indiana, USA, and the study underscores three design lessons that translate to delineation: phenology-aware timing increases class separability; texture and spatial cues complement spectra when intra-class variability is high; and simple ensembles can be robust and scalable in GEE [22]. Our method likewise uses a compact temporal signal, exposes it as perceptually separable channels, and then leverages a zero-shot segmenter.

Amin et al. [20] demonstrate that strong geometry can be recovered without long time series when the signal is well structured, while ensemble crop-mapping efforts [21,22] show that phenology and aggregation across learners boost robustness and transferability at scale. The same harmonic encodings generated by our method can be paired with supervised classifiers to enable crop mapping, since amplitude–phase–mean combinations carry crop-specific signatures. In this way, the proposed method is not only suitable for boundary extraction but also provides a lightweight, interpretable basis for crop classification.

In this work, we propose a method that integrates harmonic NDVI recolouring with zero-shot segmentation to improve field boundary delineation in fragmented agricultural landscapes. This study addresses a specific question: does the zero-shot delineation of fields in fragmented farmland improve if phenology is explicitly represented in the input image? We test this on a 5 × 5 km area of interest (AOI) and compare colour spaces and time encodings with object-wise and pixel-wise metrics. We stratify the results by parcel size to show how performance varies with parcel area. The specific objectives of this research are as follows:

To evaluate the effectiveness of encoding harmonic NDVI components (mean, amplitude, phase) into cylindrical colour spaces (HSV, HWB, LCH) to improve automatic segmentation of agricultural parcels using SAM.

To quantitatively evaluate segmentation performance against manually digitized parcels using pixel- and object-based metrics, such as Intersection-over-Union (IoU), precision, recall, and F1 score.

To investigate how perceptual recolouring affects segmentation accuracy for different parcel configurations, including different sizes, shapes, and landscape contexts.

The remainder of this paper is organized as follows. Section 2 describes the study area, data sources, and methodological steps, including harmonic modelling, cylindrical colour space recolouring, and the use of SAM for segmentation. The experimental results are then presented: pixel-based and object-based metrics are reported, and error structures such as fragmentation, over-segmentation, and under-segmentation are examined. Section 4 discusses the broader implications of the findings, including contributions and limitations, and finally, the paper concludes by summarizing the main contributions and outlining directions for future research.

2. Materials and Methods

2.1. Study Area and PlanetScope Imagery

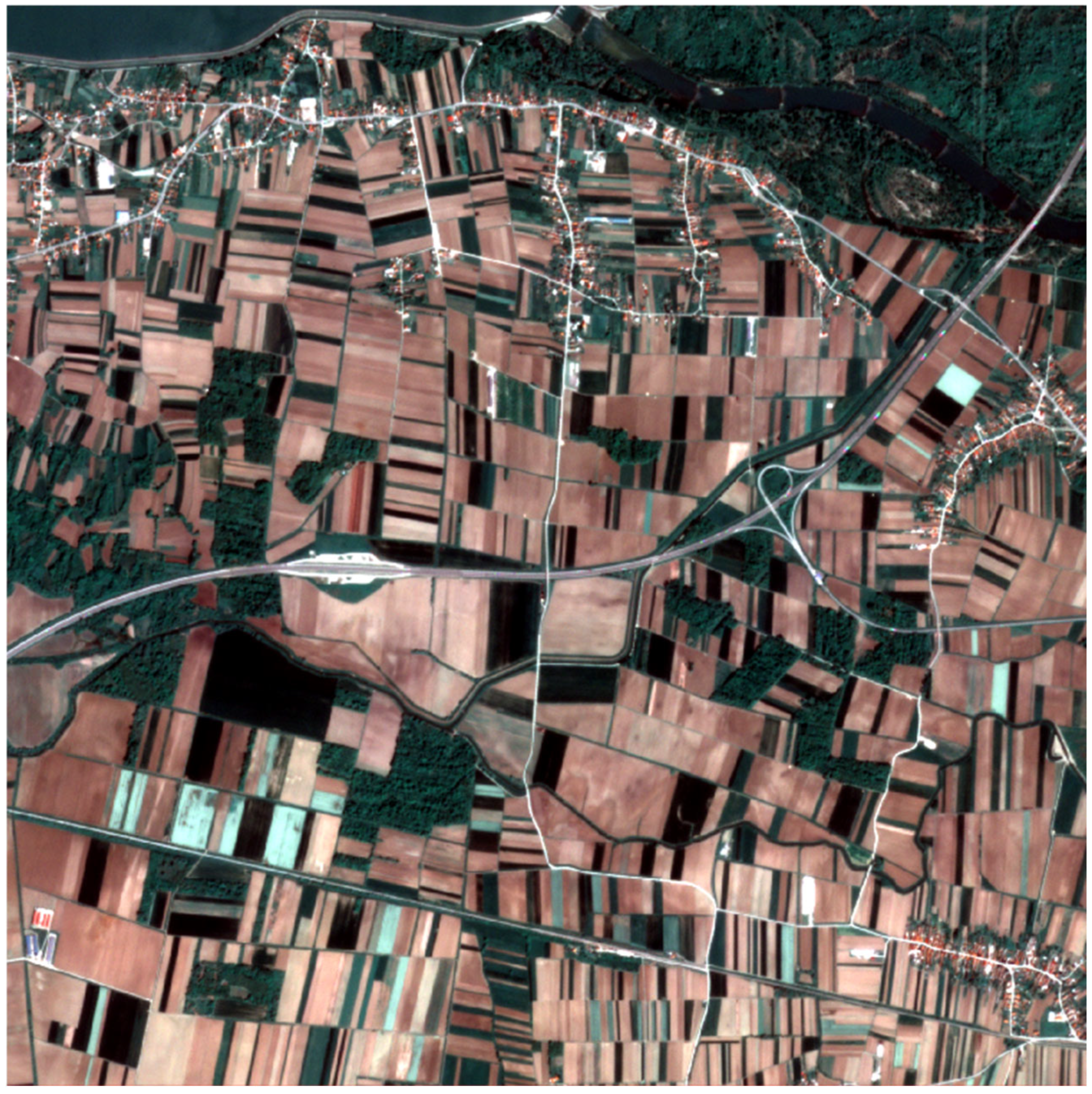

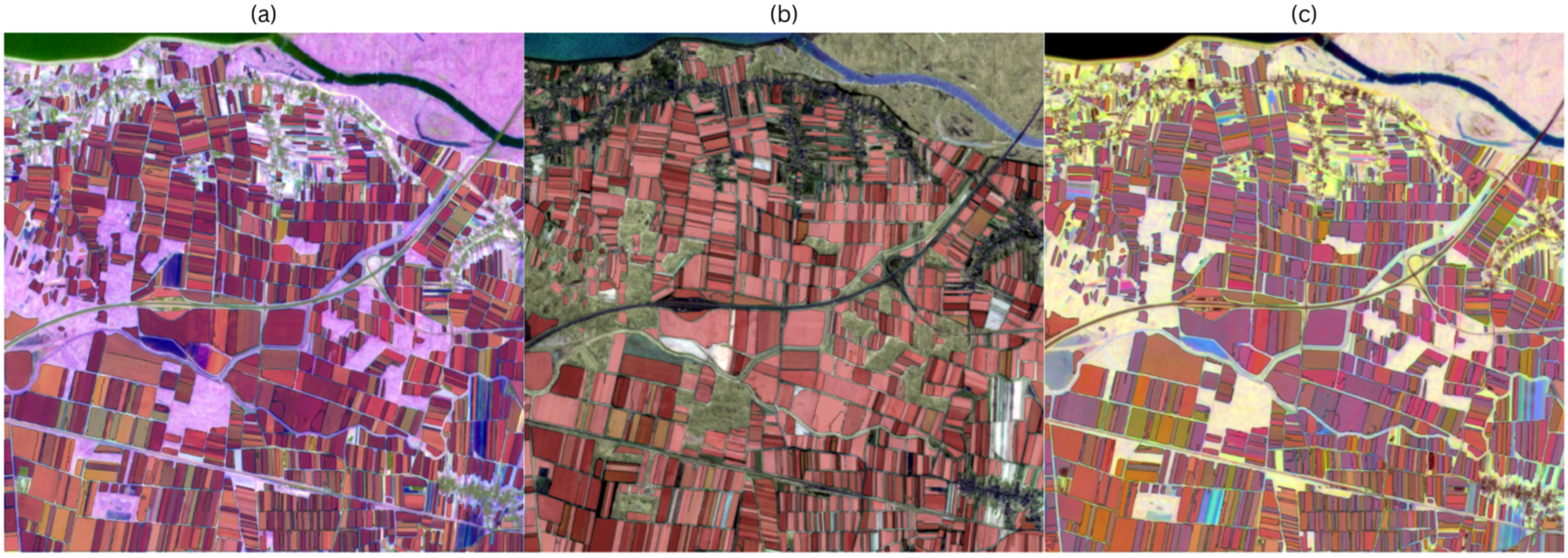

The analysis was carried out in a 5 km × 5 km area near Varaždin in northern Croatia. As the PlanetScope scenes show (Figure 1), the landscape consists mainly of small agricultural parcels with few hard physical boundaries. Apart from roads and occasional drainage lines, field boundaries are usually not marked by hedges, fences, or rows of trees; instead, they manifest as subtle shifts in tone, texture, seeding direction, and phenological stage between neighbouring strips.

The scene (Figure 1) shows elongated, rectangular fields. A motorway bisects the area of interest (AOI) and divides it into two sectors. The northern sector is characterized by smaller agricultural parcels, areas close to the city, the river Drava and its canals, and scattered forests; the southern sector is dominated by larger arable fields with additional forest areas. Forest areas appear as dark green patches, while pale and reddish-brown tones indicate bare ground. Internal parcel boundaries are generally not expressed as physical features but appear as tonal shifts associated with the phenological stage of the crop. In terms of size, the overwhelming majority of parcels are small: ~70% are smaller than 10,000 m².

The AOI contains the following main classes:

Agricultural parcels, mainly small fields with a median area of ~0.6 ha.

Grassland and meadows.

Forests and wooded areas.

Urban and peri-urban areas concentrated in the northern and south-eastern parts of the AOI.

Open water bodies and drainage channels concentrated in the northern part of the AOI.

Twenty-two cloud-free SuperDove scenes were acquired for the period from January 2023 to July 2024, covering the AOI at 3 m resolution in eight spectral bands. The average interval between images is 27 days, providing a representative seasonal sample of two full crop cycles. The scenes were obtained through Planet’s Education & Research Program. They were delivered orthorectified and atmospherically corrected in EPSG:32633, and no additional radiometric preprocessing was performed.

2.2. Harmonic Analysis

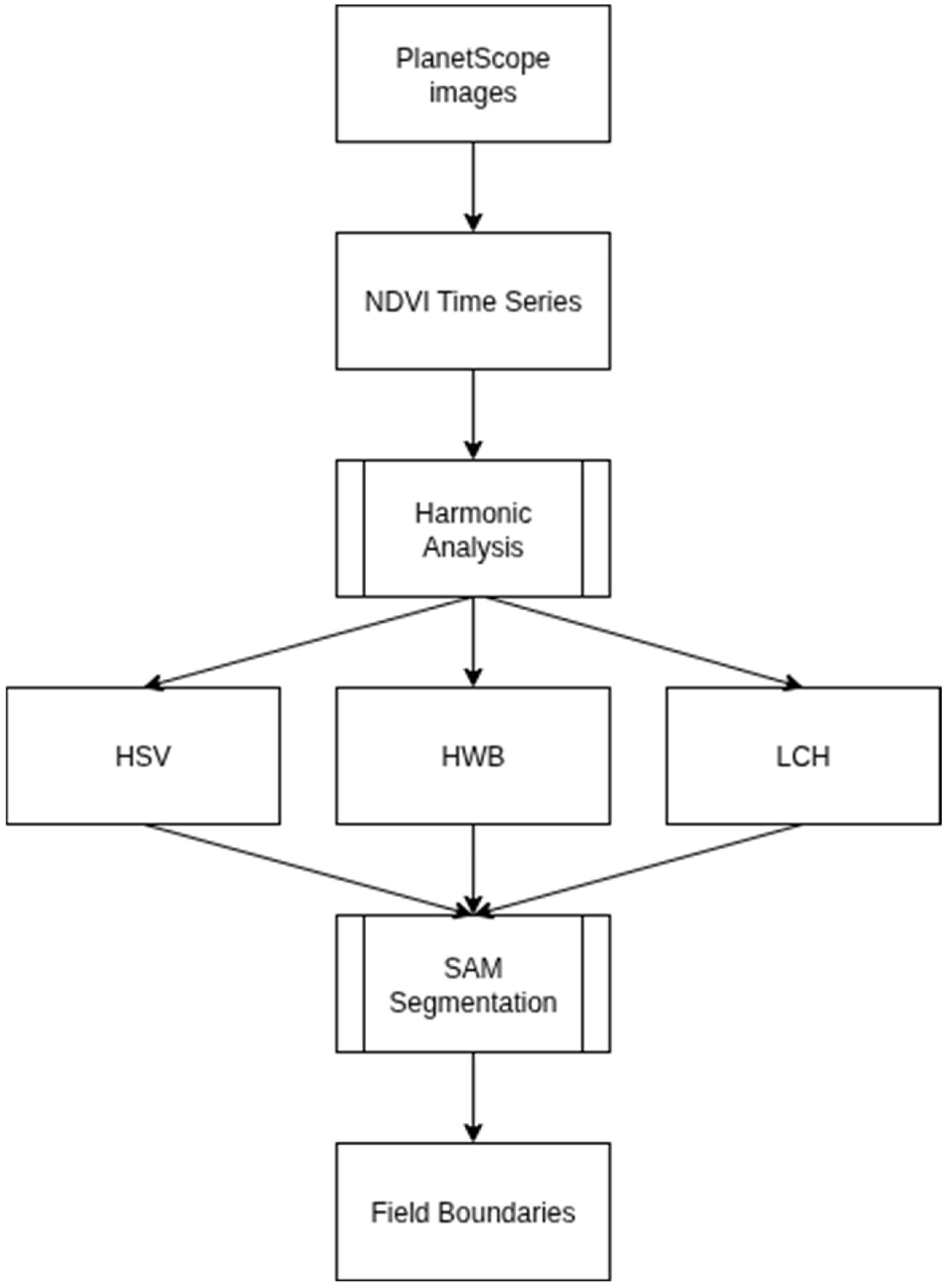

The overall workflow of the proposed method is summarized in Figure 2. Starting from PlanetScope images, NDVI time series are computed and subjected to harmonic analysis to extract the mean, amplitude, and phase. These components are then mapped into cylindrical colour spaces to generate recoloured composites optimized for boundary detection. SAM is subsequently applied to these composites, producing delineated field boundaries for evaluation.

Neighbouring fields tend to have similar reflectance on any single day, especially near peak greenness, which makes their shared edges visually indistinct. In contrast, NDVI trajectories differ in phase and amplitude between crops and management regimes. These temporal descriptors are estimated by harmonic decomposition and mapped into cylindrical colour spaces to recolour entire parcels by phenology, so that neighbouring fields with similar single-date reflectance receive distinct, stable colours in the composite.

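The NDVI was calculated for the entire AOI from the 22 cloud-free scenes using the standard formula [23]:

$$\mathrm{NDVI} = \frac{\rho_{\mathrm{NIR}} - \rho_{\mathrm{Red}}}{\rho_{\mathrm{NIR}} + \rho_{\mathrm{Red}}},$$

where $\rho_{\mathrm{NIR}}$ and $\rho_{\mathrm{Red}}$ are the near-infrared and red surface reflectances. Processing was performed with Rasterio and NumPy in a single pass over the stacked grids. Acquisition times were encoded as ordinal day values and stored as a one-dimensional vector aligned with the temporal stack.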
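A minimal sketch of the NDVI and time-encoding step follows, assuming the red and near-infrared reflectances have already been read (e.g., with Rasterio) into NumPy arrays of shape (T, H, W). The SuperDove band indices are not stated here, so the sketch takes the arrays directly; the function names and the NaN handling for zero denominators are illustrative assumptions.

```python
from datetime import date

import numpy as np


def ndvi_stack(red: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """Compute NDVI = (NIR - Red) / (NIR + Red) for a (T, H, W) reflectance stack.

    Pixels where NIR + Red == 0 are returned as NaN (an assumption, not from the text).
    """
    red = red.astype(np.float32)
    nir = nir.astype(np.float32)
    denom = nir + red
    with np.errstate(divide="ignore", invalid="ignore"):
        ndvi = (nir - red) / denom
    ndvi[denom == 0] = np.nan
    return ndvi


def ordinal_days(acquisition_dates: list[date]) -> np.ndarray:
    """Encode acquisition dates as ordinal day numbers (unit: days), one per stack layer."""
    return np.array([d.toordinal() for d in acquisition_dates], dtype=np.float64)
```

Because `date.toordinal()` counts days, differences between entries are acquisition gaps in days, which is the unit assumed by the detrending regression.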

Since slow drifts (e.g., in background brightness, or calibration differences between acquisitions) can interfere with a sinusoidal fit, the first step removes the pixel-specific linear trend; a single harmonic is then fitted to the residual variability.

The 22-element NDVI series $y(t_j)$ of each pixel is regressed on time using ordinary least squares:

$$y(t_j) = \beta_0 + \beta_1 t_j + \varepsilon_j, \qquad j = 1, \dots, 22.$$

Time $t$ is expressed as ordinal day values (unit: days). The residual series

$$r(t_j) = y(t_j) - \left(\hat{\beta}_0 + \hat{\beta}_1 t_j\right),$$

where $\hat{\beta}_0$ and $\hat{\beta}_1$ are the fitted intercept and slope, is passed to the harmonic stage. Because the acquisition times are irregular, the sampled sine and cosine terms are not orthogonal to a constant, and measurement noise adds further imbalance; a constant term is therefore kept in the harmonic model to capture any small bias that remains after detrending. Linear detrending is common in time-series modelling when the primary objective is to isolate seasonal cyclicity rather than to capture multi-year fluctuations. Higher-order polynomials or splines can introduce spurious oscillations in sparsely sampled data [17,18] and risk absorbing variance that should be modelled by the harmonic terms themselves.

The residuals $r(t_j)$ of each pixel are then modelled as a single annual sinusoid with bias:

$$r(t_j) \approx a_0 + a_1 \cos\tau_j + b_1 \sin\tau_j,$$

where

$$\tau_j = \frac{2\pi\, d_j}{365.25},$$

with $\tau$ being the annual angular time and $d_j$ the day of the year of acquisition $j$. The temporal axis was encoded using two formulations. The first employs an annual radian transformation, in which every day of the year is mapped onto the [0, 2π) interval by assuming a cycle length of 365.25 days. This assumption follows established practice in Fourier-based vegetation modelling [18], where the tropical year provides a mathematically consistent basis for representing periodic ecological signals.

The mapping sets the fundamental frequency to one cycle per year, so that the fitted coefficients have a direct phenological meaning. The parameters $a_0$, $a_1$, and $b_1$ are estimated by non-linear least squares using the standard Levenberg–Marquardt solver; the fit is unweighted and unbounded and uses default tolerances. The irregular acquisition times are passed in directly, and no temporal resampling or interpolation is performed. The fits are run without additional failure-handling logic, and the fitted coefficients ($a_1$, $b_1$) are cached in a harmonics.npz file to avoid recalculation.
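The per-pixel fit described above can be sketched with SciPy's `curve_fit`, which uses the MINPACK Levenberg–Marquardt solver when `method="lm"` is requested for an unbounded problem. This is an illustrative sketch, not the authors' code: the helper names are hypothetical, `np.polyfit` stands in for the OLS detrend, and the `tau` argument selects the time axis (annual radians from the day of year, or ordinal days passed directly for the second encoding).

```python
import numpy as np
from scipy.optimize import curve_fit

TROPICAL_YEAR_DAYS = 365.25  # cycle length assumed by the annual radian encoding


def detrend_linear(t_days: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Subtract the OLS line beta0 + beta1 * t from one pixel's NDVI series."""
    beta1, beta0 = np.polyfit(t_days, y, deg=1)  # polyfit returns the highest degree first
    return y - (beta0 + beta1 * t_days)


def harmonic(tau, a0, a1, b1):
    """Single harmonic with bias: a0 + a1*cos(tau) + b1*sin(tau)."""
    return a0 + a1 * np.cos(tau) + b1 * np.sin(tau)


def annual_radians(day_of_year) -> np.ndarray:
    """Annual angular time: day of year scaled by 2*pi / 365.25."""
    return 2.0 * np.pi * np.asarray(day_of_year, dtype=float) / TROPICAL_YEAR_DAYS


def fit_pixel(tau: np.ndarray, residuals: np.ndarray) -> tuple[float, float, float]:
    """Unweighted, unbounded Levenberg-Marquardt fit with default tolerances; returns (a0, a1, b1)."""
    params, _ = curve_fit(harmonic, tau, residuals, p0=[0.0, 0.0, 0.0], method="lm")
    a0, a1, b1 = (float(p) for p in params)
    return a0, a1, b1
```

For the annual encoding, a pixel would be processed as `fit_pixel(annual_radians(doy), detrend_linear(t_days, y))`; for the ordinal-day encoding, `t_days` itself is passed as `tau`. The fitted `a1` and `b1` arrays could then be cached with `np.savez`.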

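Amplitude and phase were calculated from the fitted coefficients:

$$A = \sqrt{a_1^2 + b_1^2}, \qquad \phi = \operatorname{atan2}(b_1, a_1),$$

so that $a_1\cos\tau + b_1\sin\tau = A\cos(\tau - \phi)$. The constant term $a_0$ represents the residual offset after detrending and is not used in the recolouring. The third colour channel uses the empirical NDVI mean over the 22 dates.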
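Because the model is linear in $a_0$, $a_1$, and $b_1$, the unweighted least-squares problem solved by Levenberg–Marquardt has a closed-form minimiser, so the whole stack can also be processed at once with `np.linalg.lstsq`. The sketch below is such a vectorized alternative, assuming a NaN-free (cloud-free) stack; it is not the authors' implementation, and the function name and optional cache path are hypothetical.

```python
from typing import Optional

import numpy as np


def harmonic_descriptors(ndvi: np.ndarray, t_days: np.ndarray, tau: np.ndarray,
                         cache_path: Optional[str] = None):
    """Return (mean NDVI, amplitude A, phase phi), each (H, W), for a (T, H, W) NDVI stack.

    t_days: (T,) ordinal days used for the linear detrend.
    tau:    (T,) time axis passed to cos/sin (annual radians or raw ordinal days).
    """
    T, H, W = ndvi.shape
    Y = ndvi.reshape(T, -1).astype(np.float64)      # (T, N): one column per pixel

    # 1) OLS linear detrend of every pixel; centring t improves conditioning
    #    without changing the residuals.
    tc = t_days - t_days.mean()
    D = np.column_stack([np.ones(T), tc])
    beta, *_ = np.linalg.lstsq(D, Y, rcond=None)    # (2, N)
    R = Y - D @ beta

    # 2) Single harmonic with bias fitted to the residuals: a0 + a1*cos + b1*sin.
    X = np.column_stack([np.ones(T), np.cos(tau), np.sin(tau)])
    coef, *_ = np.linalg.lstsq(X, R, rcond=None)    # rows: a0, a1, b1
    a1, b1 = coef[1].reshape(H, W), coef[2].reshape(H, W)

    if cache_path is not None:                      # e.g. "harmonics.npz"
        np.savez(cache_path, a1=a1, b1=b1)

    amplitude = np.hypot(a1, b1)
    phase = np.arctan2(b1, a1)                      # a1*cos + b1*sin = A*cos(tau - phi)
    mean_ndvi = Y.mean(axis=0).reshape(H, W)        # empirical mean, not a0
    return mean_ndvi, amplitude, phase
```

Up to solver tolerance, this should return the same coefficients as the per-pixel Levenberg–Marquardt fit, while avoiding a Python loop over roughly 2.8 million pixels in a 5 km AOI at 3 m.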

Since the harmonic fit uses annual radians τ, ϕ is phenologically interpretable: it encodes the calendar timing within a single year, while A summarizes the seasonal NDVI range. The second approach uses a linear ordinal-day encoding, in which time is expressed directly in ordinal days without forced periodicity. This alternative is motivated by the fact that crop types often deviate from strictly annual rhythms: crop rotations and double harvests can create shorter cycles or make them irregular relative to the astronomical year.

For this second encoding, the harmonic values are also calculated, with the residuals $r(t_j)$ fitted as

$$r(t_j) \approx a_0 + a_1 \cos t_j + b_1 \sin t_j,$$

with $t$ in days passed directly to sin and cos. This implicitly sets the period to 2π days, so the resulting ϕ is only a relative phase offset: in our experiments it proved more effective for visual separation, but it cannot be directly interpreted as a day of the year.

2.3. Perceptual Recolouring

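In the recolouring, ϕ is the harmonic phase, A is the first harmonic amplitude, and $\mathrm{NDVI}_{\mathrm{mean}}$ is the empirical mean over the 22 observations. Min–max scaling was selected because cylindrical colour spaces require inputs within [0, 1] to map consistently to hue, saturation, and value; standardization would generate unbounded values, which would distort the perceptual mapping. To avoid contrast shifts between tiles, AOI-wide scalers are used, i.e., each descriptor $x \in \{\phi, A, \mathrm{NDVI}_{\mathrm{mean}}\}$ is scaled with a single minimum and maximum computed over the whole AOI:

$$\tilde{x} = \frac{x - \min_{\mathrm{AOI}} x}{\max_{\mathrm{AOI}} x - \min_{\mathrm{AOI}} x}.$$

In the main experiments, the HSV channels are then $H = \tilde{\phi}$, $S = \tilde{A}$, and $V = \widetilde{\mathrm{NDVI}}_{\mathrm{mean}}$.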

In the Hue–Saturation–Value (HSV) recolouring, hue encodes the phase of the seasonal NDVI cycle, so that parcels that peak at different times appear in different colours. As mentioned earlier, under the ordinal-day encoding the hue carries only a relative phase offset, which proved beneficial for visual separation but does not represent an absolute calendar date. Saturation increases with the seasonal range: fields with stronger NDVI variation are more vivid, while weakly seasonal and perennial vegetation appears pastel. Value is directly related to the average greenness of the time series, so greener parcels appear brighter even if their timing is similar.
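A minimal sketch of the AOI-wide min–max scaling and the HSV mapping, assuming the three descriptors are already available as (H, W) arrays; it uses Matplotlib's `hsv_to_rgb`, and the helper names are hypothetical. Hue is circular, so under min–max scaling the smallest and largest phase values both map to red; whether the original pipeline treated this wrap-around specially is not stated.

```python
import numpy as np
from matplotlib.colors import hsv_to_rgb


def minmax_aoi(x: np.ndarray) -> np.ndarray:
    """Scale to [0, 1] using one min/max computed over the whole AOI (NaNs ignored)."""
    lo, hi = np.nanmin(x), np.nanmax(x)
    if hi == lo:  # constant input: avoid division by zero
        return np.zeros_like(x, dtype=np.float32)
    return ((x - lo) / (hi - lo)).astype(np.float32)


def hsv_composite(phase: np.ndarray, amplitude: np.ndarray, mean_ndvi: np.ndarray) -> np.ndarray:
    """Hue <- phase, saturation <- amplitude, value <- mean NDVI; returns (H, W, 3) RGB in [0, 1]."""
    hsv = np.stack([minmax_aoi(phase), minmax_aoi(amplitude), minmax_aoi(mean_ndvi)], axis=-1)
    return hsv_to_rgb(hsv).astype(np.float32)
```

Computing the minimum and maximum once over the AOI, rather than per tile, is what keeps a given phase mapped to the same hue in every 512 × 512 tile.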

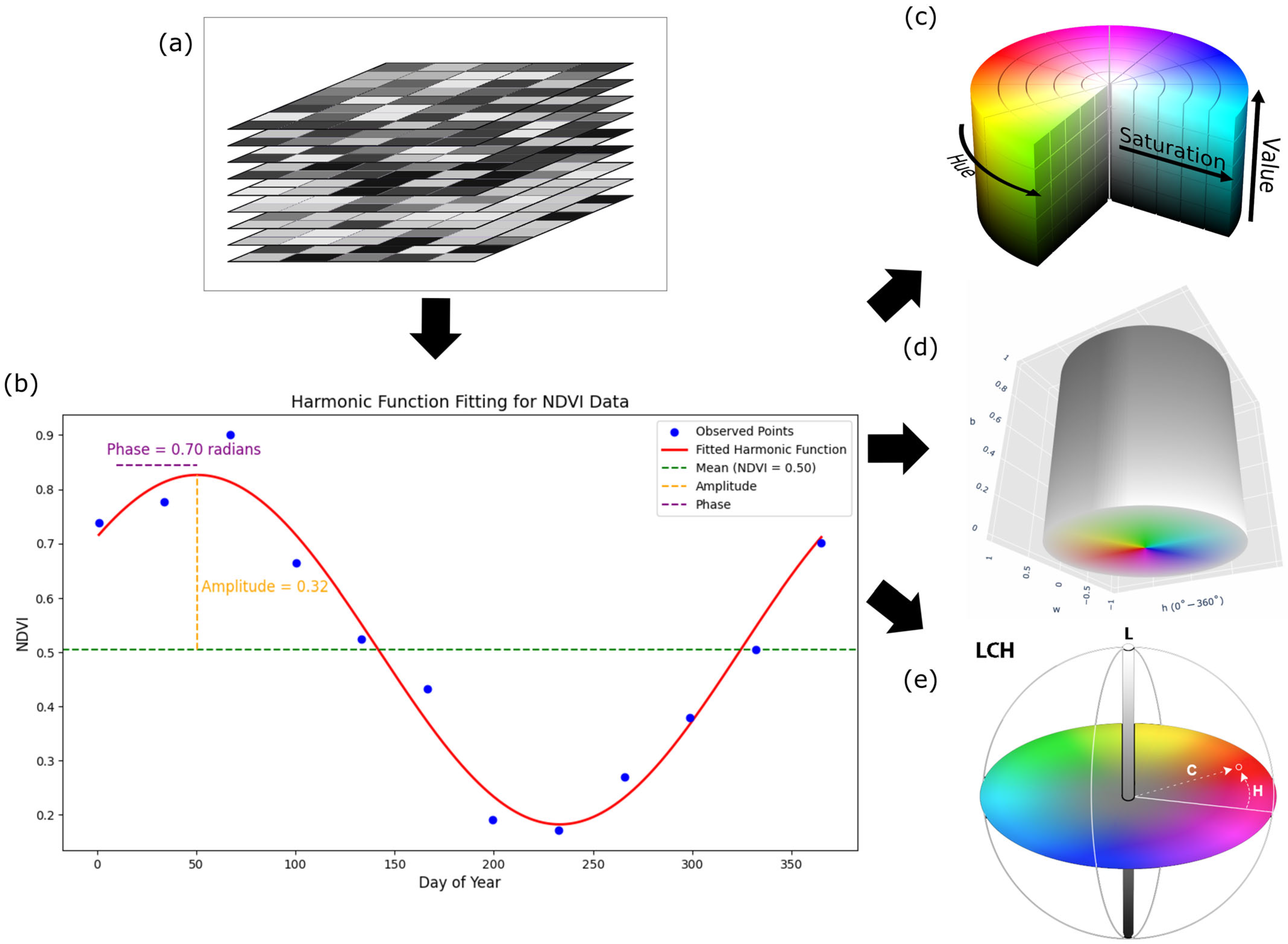

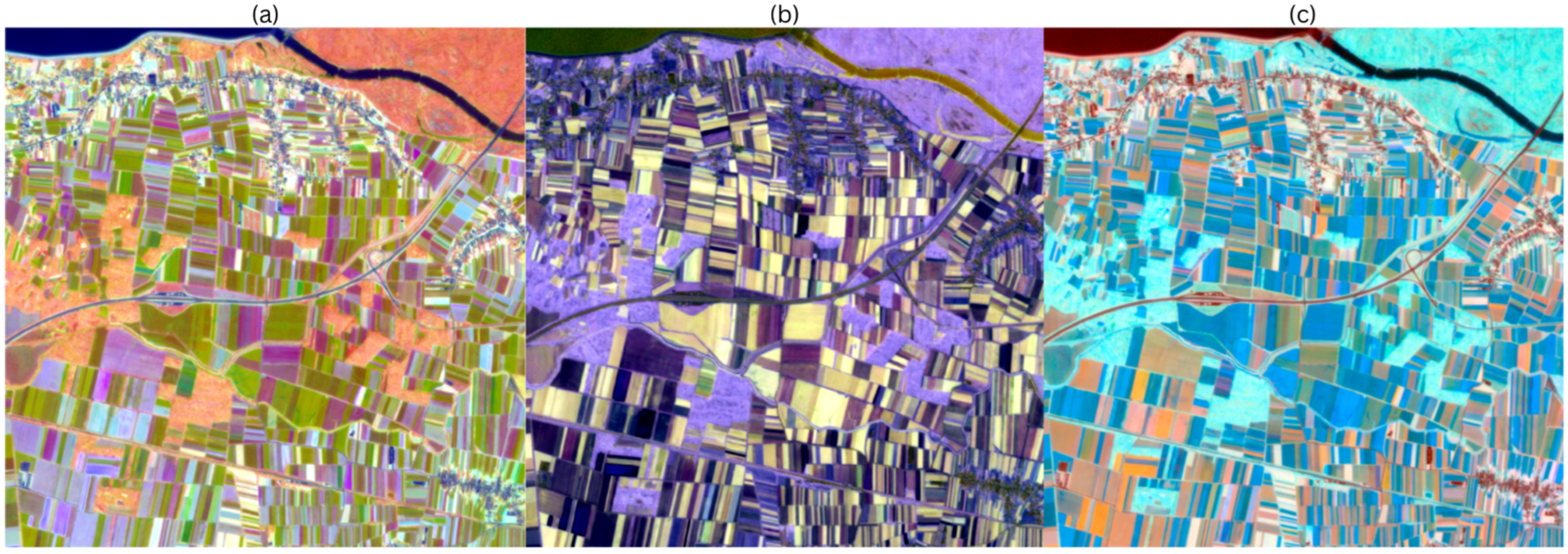

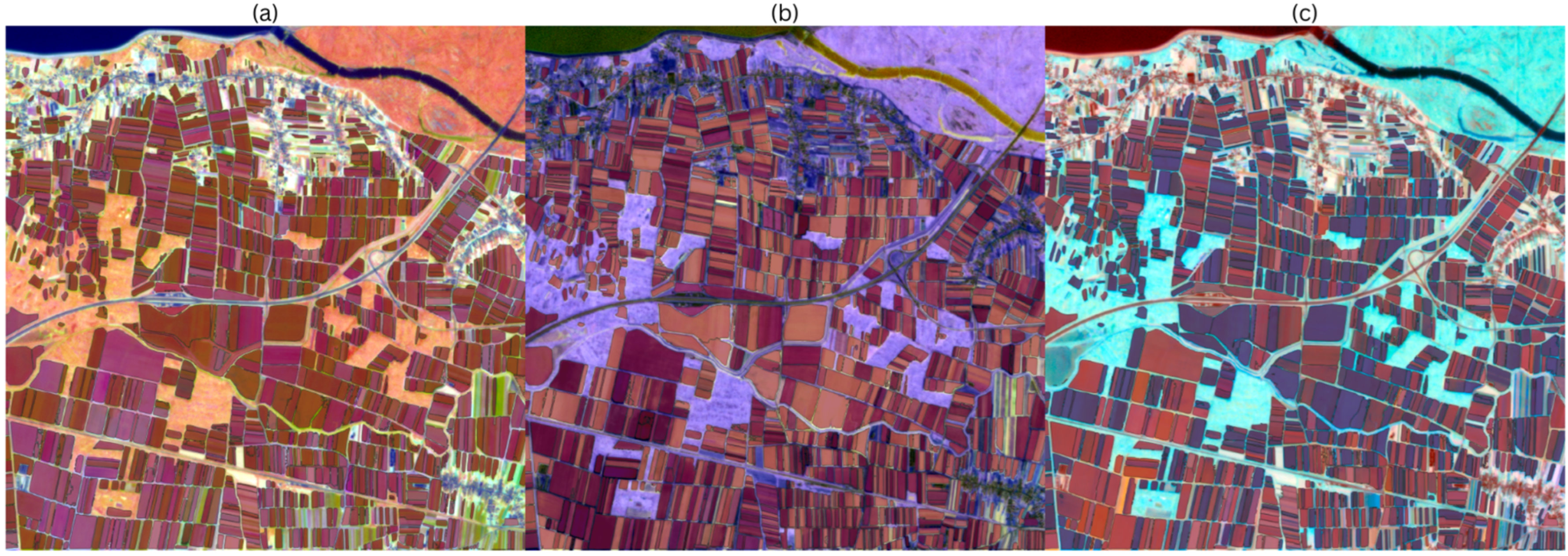

Figure 3 illustrates the entire workflow of recolouring from a multitemporal NDVI stack to different cylindrical colour spaces.

Figure 3a shows the input in the form of a stack of NDVI images acquired on different days. By stacking the NDVI images, a time series is obtained for each pixel.

Figure 3b shows the observed NDVI values of a pixel over the course of a year. A single harmonic model is fitted to the samples, and three values are derived: the amplitude, which quantifies the seasonal range; the phase, which encodes the timing of the seasonal peak; and the mean, which is the empirical NDVI average over all acquisitions for that pixel.

Figure 3c shows the mapping of the above values to the HSV colour space to produce a single three-band image suitable for segmentation. The phase (timing) is mapped to the hue, the amplitude to the saturation, and the value is determined by the empirical mean NDVI values. The assignment of the phase to the hue separates the neighbouring parcels by the timing, while the saturation and the value modulate the contrast by the seasonal range and the mean value.

In the Hue–Whiteness–Blackness (HWB) colour space, the hue again separates parcels according to phenological timing. Brightness is directly proportional to the amplitude (blackness is the complement of the normalized amplitude), i.e., strongly seasonal fields appear bright and areas with low seasonality appear darker. The whiteness comes from the raw NDVI mean, which desaturates greener parcels more. The result is a palette with clear temporal separation, brightness controlled by seasonality, and saturation softened by the average greenness.

Figure 3d illustrates the mapping of the above parameters into the HWB colour space to produce a single three-band image.
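Matplotlib has no HWB converter, so the sketch below implements the HWB to sRGB algorithm from the CSS Color Module Level 4 specification: the pure hue is mixed with white and black, and whiteness plus blackness above 1 yields a grey. The channel assignment (hue from phase, whiteness from mean NDVI, blackness as the complement of amplitude) follows the description above; inputs are assumed to be already in [0, 1], and the function names are hypothetical.

```python
import numpy as np
from matplotlib.colors import hsv_to_rgb


def hwb_to_rgb(hue, white, black) -> np.ndarray:
    """HWB -> sRGB as in CSS Color Module Level 4; hue, white, black in [0, 1], output (..., 3)."""
    hue, white, black = (np.asarray(v, dtype=np.float64) for v in (hue, white, black))
    total = white + black
    scale = np.where(total > 1.0, total, 1.0)  # W + B > 1 is normalised to sum to 1 (grey)
    w, b = white / scale, black / scale
    pure = hsv_to_rgb(np.stack([hue, np.ones_like(hue), np.ones_like(hue)], axis=-1))
    return pure * (1.0 - w - b)[..., None] + w[..., None]


def hwb_composite(phase01, amplitude01, mean_ndvi01) -> np.ndarray:
    """Hue <- phase, whiteness <- mean NDVI, blackness <- 1 - amplitude (brightness tracks amplitude)."""
    return hwb_to_rgb(phase01, mean_ndvi01, 1.0 - np.asarray(amplitude01, dtype=np.float64))
```

Setting blackness to 1 − amplitude is what makes weakly seasonal areas darker, while a high mean NDVI pulls colours towards white.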

In the Lightness–Chroma–Hue (LCH) colour space, lightness represents the mean greenness, chroma the seasonal strength, and the hue angle the timing. Because chroma is stretched over its full range, parcels with strong seasonality receive high colour purity, while weakly seasonal areas collapse towards grey. To avoid clipping outside the sRGB gamut, chroma values were adaptively scaled to the maximum valid range, preserving hue and lightness relationships without distortion.

Figure 3e illustrates the assignment of the three factors to the LCH colour space. The phase corresponds to the hue; chroma is the normalized amplitude; and the lightness is the mean NDVI. The LCH triplet is then rendered using the standard LCH–LAB–RGB conversion, in which the timing, seasonal range, and baseline greenness are encoded as separate visual channels.
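The LCH rendering with in-gamut chroma can be sketched as follows. The conversion chain (LCh to CIELAB to XYZ under D65 to linear sRGB to gamma-encoded sRGB) uses the standard CIE and sRGB constants. The chroma scaling is an assumption: the text states only that chroma was adaptively (iteratively) scaled, and here a per-pixel bisection shrinks chroma just until the colour fits the sRGB gamut, keeping lightness and hue fixed. The maximum chroma `c_max` and the hue angle of 2π times the normalized phase are hypothetical choices.

```python
import numpy as np

WHITE_D65 = np.array([0.95047, 1.0, 1.08883])  # reference white (Xn, Yn, Zn)
XYZ_TO_LINEAR_SRGB = np.array([
    [3.2404542, -1.5371385, -0.4985314],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0556434, -0.2040259, 1.0572252],
])


def lch_to_linear_rgb(L, C, h_rad) -> np.ndarray:
    """CIE LCh(ab) -> CIELAB -> XYZ (D65) -> linear sRGB, without any clipping."""
    a, b = C * np.cos(h_rad), C * np.sin(h_rad)
    fy = (L + 16.0) / 116.0
    f = np.stack([fy + a / 500.0, fy, fy - b / 200.0], axis=-1)
    delta = 6.0 / 29.0
    xyz = np.where(f > delta, f ** 3, 3.0 * delta ** 2 * (f - 4.0 / 29.0)) * WHITE_D65
    return xyz @ XYZ_TO_LINEAR_SRGB.T


def in_gamut(rgb_linear, tol=1e-9) -> np.ndarray:
    return np.all((rgb_linear >= -tol) & (rgb_linear <= 1.0 + tol), axis=-1)


def encode_srgb(rgb_linear) -> np.ndarray:
    """Linear sRGB -> gamma-encoded sRGB (IEC 61966-2-1 transfer curve)."""
    v = np.clip(rgb_linear, 0.0, 1.0)
    return np.where(v <= 0.0031308, 12.92 * v, 1.055 * v ** (1.0 / 2.4) - 0.055)


def lch_composite(phase01, amplitude01, mean_ndvi01, c_max=100.0, iters=20) -> np.ndarray:
    """Lightness <- mean NDVI, chroma <- amplitude, hue <- phase; chroma shrunk per pixel into sRGB."""
    L = 100.0 * np.asarray(mean_ndvi01, dtype=np.float64)
    C = c_max * np.asarray(amplitude01, dtype=np.float64)
    h = 2.0 * np.pi * np.asarray(phase01, dtype=np.float64)
    # Per-pixel chroma factor k in [0, 1]: keep k = 1 where already in gamut, otherwise bisect.
    lo = np.where(in_gamut(lch_to_linear_rgb(L, C, h)), 1.0, 0.0)
    hi = np.ones_like(C)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        ok = in_gamut(lch_to_linear_rgb(L, C * mid, h))
        lo, hi = np.where(ok, mid, lo), np.where(ok, hi, mid)
    return encode_srgb(lch_to_linear_rgb(L, C * lo, h))
```

A per-pixel factor keeps lightness and hue exact but compresses chroma only where the gamut demands it; a single global factor would instead preserve chroma ratios across pixels at the cost of overall colour purity.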

2.4. Segmentation with Segment Anything Model

We applied SAM independently to the three recoloured composites (HSV, HWB, and LCH), generating a segmentation mask at the native 3 m resolution for each. The composites were passed to SAM as Float32 RGB arrays, with no normalization or contrast stretching beyond the recolouring mappings described above. No land cover masks were applied, and inference was performed on the entire AOI.

Tiles of 512 × 512 px were used for inference. Tile generation, error handling, multi-scale cropping, and tile merging were performed automatically by the segment-geospatial wrapper around SAM's Automatic Mask Generator. The following parameters were defined according to [24,25] and coarsely adjusted within reasonable ranges to produce satisfactory results:

These values closely follow [25], and our settings fall within the typical ranges used with SAM. The parameters were not cherry-picked for performance but selected to balance coverage and precision in fragmented fields.

SAM ViT-H was used, with the weights sam_vit_h_4b8939.pth. Masks across overlapping tiles were merged automatically by SAM's internal non-maximum suppression (NMS) and deduplication logic. Outputs were not merged across variants: each colour space was processed separately for each of the two time encodings, resulting in six independent prediction sets. The instance masks were then converted to vector polygons. No holes were filled, no morphological operations were performed, and no edge smoothing was applied. To assess whether tiling and internal NMS introduced edge artefacts, a seam–interior analysis was conducted. Tile boundaries were buffered by 16 pixels and rasterized to create seam and interior zones. Ground truth and predictions were rasterized at the native 3 m resolution, and pixel-wise IoU, precision, recall, and F1 score were computed separately in the seam and interior zones to quantify whether segmentation accuracy was systematically degraded near tile edges.
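The seam–interior comparison can be sketched as below, under the simplifying assumption of a regular, non-overlapping 512-pixel tile grid (the actual tiling of the segment-geospatial wrapper may overlap). The zone mask marks pixels within 16 pixels of any internal tile boundary, and the pixel metrics use the TP/FP/FN definitions given in Section 2.5. All names are hypothetical.

```python
import numpy as np


def _near_internal_edges(size: int, tile: int, buffer_px: int) -> np.ndarray:
    """1-D mask of positions within buffer_px of an internal tile edge (edges at multiples of tile)."""
    near = np.zeros(size, dtype=bool)
    for edge in range(tile, size, tile):
        near[max(edge - buffer_px, 0):min(edge + buffer_px, size)] = True
    return near


def seam_mask(shape: tuple[int, int], tile: int = 512, buffer_px: int = 16) -> np.ndarray:
    rows = _near_internal_edges(shape[0], tile, buffer_px)
    cols = _near_internal_edges(shape[1], tile, buffer_px)
    return rows[:, None] | cols[None, :]


def pixel_metrics(gt: np.ndarray, pred: np.ndarray) -> dict:
    """IoU, precision, recall, F1 from boolean masks; true negatives are ignored."""
    tp = np.count_nonzero(gt & pred)
    fp = np.count_nonzero(~gt & pred)
    fn = np.count_nonzero(gt & ~pred)
    nan = float("nan")
    return {
        "iou": tp / (tp + fp + fn) if tp + fp + fn else nan,
        "precision": tp / (tp + fp) if tp + fp else nan,
        "recall": tp / (tp + fn) if tp + fn else nan,
        "f1": 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else nan,
    }


def seam_vs_interior(gt: np.ndarray, pred: np.ndarray, tile: int = 512, buffer_px: int = 16) -> dict:
    seam = seam_mask(gt.shape, tile, buffer_px)
    return {"seam": pixel_metrics(gt[seam], pred[seam]),
            "interior": pixel_metrics(gt[~seam], pred[~seam])}
```

A systematic drop of the seam scores relative to the interior scores would indicate tiling artefacts; similar values suggest that NMS and deduplication handled the tile borders adequately.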

2.5. Validation and Accuracy Metrics

The reference layer consists of hand-digitized parcel polygons for the AOI. The ground truth contains 1304 polygons covering ~13 km². Both the ground truth and the image data share the same spatial reference and were automatically aligned. The ground truth was digitized by the authors for this AOI and serves exclusively as a reference for the segmentation experiments conducted in this article.

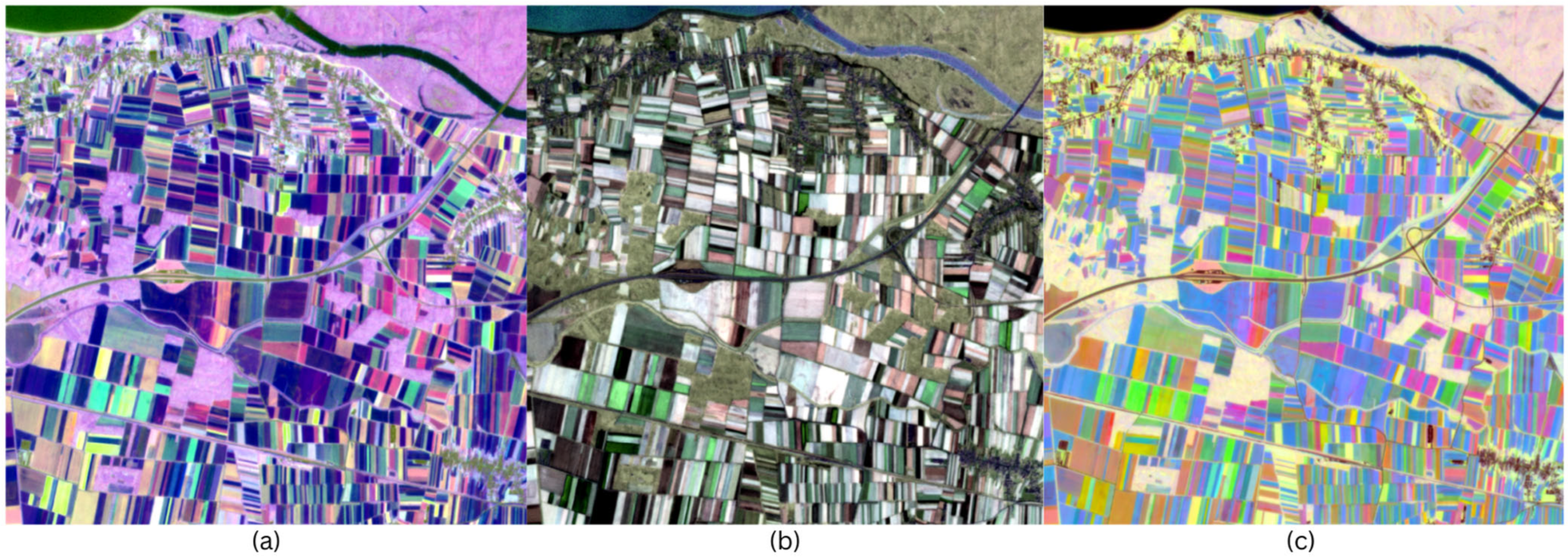

Figure 4 shows the ground truth overlaid on the RGB composite of the scene. All accuracy metrics are calculated within the AOI boundaries, and the derived geometries are clipped to the AOI extent to avoid edge artefacts. The same evaluation pipeline and the same threshold values were used for all three colour spaces. The object-related metrics are calculated in vector space, and the pixel-related metrics are calculated by rasterising the ground truth and predictions to the native resolution of 3 m.

Prior to evaluation, predicted polygons with an area of less than 30 m² were removed. In addition, an IoU-based preselection was applied: for each predicted polygon, the best IoU against all ground-truth parcels is computed, and only predictions with a best IoU > 0.50 are retained. In this way, only predictions that plausibly match agricultural parcels are included in the evaluation. In future iterations, this IoU-based selection step could be replaced by a simple classifier (e.g., Random Forest or Support Vector Machines) trained on ~100 labelled parcels.

For the object-based metrics, the retained predictions are matched one-to-one to the ground truth. Iterating over the ground-truth polygons in order of occurrence, a spatial index of the predictions is queried to obtain candidate polygons whose bounding boxes intersect the current ground-truth parcel. Among candidates that are not yet matched, the IoU is evaluated and the polygon with the highest value is selected. If this IoU is at least 0.50, the ground-truth/prediction pair is registered as a match and both objects are marked as used. Each ground-truth parcel can be matched with at most one prediction, and each prediction can participate in at most one match. True positives (TPs) are predictions that have been matched to a ground-truth parcel, false positives (FPs) are predictions that have not been matched to any ground-truth parcel, and false negatives (FNs) are ground-truth polygons that have not been matched to any prediction.
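The preselection and the greedy one-to-one matching can be sketched with Shapely geometries in a projected CRS (areas in m²). For brevity, a plain bounding-box test stands in for the spatial-index query, and the function names are hypothetical; the thresholds follow the text (area < 30 m² removed, best IoU > 0.50 retained, matches registered at IoU ≥ 0.50).

```python
from shapely.geometry import box  # used only in the usage example at the bottom


def iou(a, b) -> float:
    union = a.union(b).area
    return a.intersection(b).area / union if union > 0 else 0.0


def bboxes_overlap(a, b) -> bool:
    ax0, ay0, ax1, ay1 = a.bounds
    bx0, by0, bx1, by1 = b.bounds
    return ax0 <= bx1 and bx0 <= ax1 and ay0 <= by1 and by0 <= ay1


def prefilter(preds, gts, min_area=30.0, min_iou=0.5):
    """Drop predictions smaller than min_area; keep those whose best IoU with any GT exceeds min_iou."""
    kept = []
    for p in preds:
        if p.area < min_area:
            continue
        best = max((iou(p, g) for g in gts if bboxes_overlap(p, g)), default=0.0)
        if best > min_iou:
            kept.append(p)
    return kept


def greedy_match(gts, preds, threshold=0.5):
    """One-to-one matching in ground-truth order; returns (matches, TP, FP, FN)."""
    used, matches = set(), []
    for gi, g in enumerate(gts):
        best_iou, best_pi = 0.0, None
        for pi, p in enumerate(preds):
            if pi in used or not bboxes_overlap(g, p):  # stand-in for the spatial-index query
                continue
            v = iou(g, p)
            if v > best_iou:
                best_iou, best_pi = v, pi
        if best_pi is not None and best_iou >= threshold:
            used.add(best_pi)
            matches.append((gi, best_pi, best_iou))
    tp = len(matches)
    return matches, tp, len(preds) - tp, len(gts) - tp


# Usage: two reference parcels, four raw predictions (one too small, one with IoU 0.4).
gts = [box(0, 0, 10, 10), box(20, 0, 30, 10)]
preds = [box(0, 0, 10, 9), box(21, 0, 30, 10), box(0, 0, 10, 4), box(50, 50, 51, 51)]
kept = prefilter(preds, gts)
matches, tp, fp, fn = greedy_match(gts, kept)
```

Because the matching is greedy in ground-truth order, the result can depend on polygon ordering when several parcels compete for the same prediction; an optimal assignment (e.g., Hungarian matching) would remove that dependence.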

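These counts were used to calculate the following metrics:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}, \qquad F_1 = \frac{2 \cdot \mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}.$$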

To quantify where each colour-space representation helps or fails, the object-based metrics were also stratified by ground-truth parcel area into three categories:

Parcels with an area of less than 5000 m².

Parcels with an area between 5000 m² and 20,000 m².

Parcels with an area of more than 20,000 m².

Within each of these categories, the following were counted:

TP—ground truth parcels in the category that were matched to exactly one prediction with IoU > 0.50.

FN—ground truth parcels in the category with no matching predictions.

FP—predictions that did not match any ground truth parcels.

These counts were used to calculate recall, precision, F1, and the mean IoU for each category. True negatives (TNs) are not shown, as the evaluation is object-based and ground-truth anchored, and the TN would represent the background, which is irrelevant to the calculated metrics.
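Stratifying the ground-truth-anchored counts by parcel area could look like the sketch below. It assumes that each ground-truth parcel carries the IoU of its match (NaN when unmatched, i.e., an FN) and that the mean IoU is averaged over matched parcels only. The text does not specify how FPs are attributed to size classes, so this sketch reports only TP, FN, recall, and mean IoU; parcels of exactly 20,000 m² fall into the largest class here, which is an arbitrary boundary choice.

```python
import numpy as np

SIZE_EDGES_M2 = (5000.0, 20000.0)           # < 5000 | 5000-20000 | > 20000 m^2
SIZE_LABELS = ("small", "medium", "large")  # hypothetical class names


def stratify_by_size(gt_areas_m2: np.ndarray, matched_iou: np.ndarray) -> dict:
    """Per-class TP, FN, recall and mean IoU; matched_iou is NaN for unmatched GT parcels."""
    classes = np.digitize(gt_areas_m2, SIZE_EDGES_M2)  # 0, 1 or 2
    matched = ~np.isnan(matched_iou)
    out = {}
    for k, label in enumerate(SIZE_LABELS):
        sel = classes == k
        tp = int(np.count_nonzero(sel & matched))
        fn = int(np.count_nonzero(sel & ~matched))
        out[label] = {
            "tp": tp,
            "fn": fn,
            "recall": tp / (tp + fn) if tp + fn else float("nan"),
            "mean_iou": float(matched_iou[sel & matched].mean()) if tp else float("nan"),
        }
    return out
```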

Pixel-wise metrics are also calculated by rasterizing both the ground truth and each prediction set. The vector polygons were burnt in as binary masks (parcel = 1, background = 0); where prediction polygons overlapped, the raster stored their union. The following counts are calculated on this grid:

TP—cells where the ground truth mask and the prediction mask both equal 1, correctly covered parcel area.

FP—cells where the ground truth mask is zero, and the prediction mask is one, polygons in the non-agricultural area.

FN—cells where the ground truth mask is equal to one, and the prediction mask is equal to zero, missing parcel areas.

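As mentioned above, TNs are not counted, as they represent the background, which is irrelevant for the calculated metrics. Using these counts, precision, recall, F1, and IoU are calculated as follows:

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \quad \mathrm{Recall} = \frac{TP}{TP + FN}, \quad F_1 = \frac{2\,TP}{2\,TP + FP + FN}, \quad \mathrm{IoU} = \frac{TP}{TP + FP + FN}.$$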

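IoU measures the overlap between prediction and ground truth relative to their combined extent, so it penalizes both missed parcel area and spurious predicted area.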

To quantify fragmentation, the number of predicted polygons associated with each ground-truth parcel is calculated. A ground-truth polygon is considered fragmented if more than one predicted polygon is associated with it. The fragmentation metrics are stratified by parcel area.

In addition to fragmentation counts, object-level geometric error metrics are also calculated. These include the Global Over-Classification Error (GOC), Global Under-Classification Error (GUC), and Global Total Classification Error (GTC) [12].

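Let $S_i$ denote the $i$-th predicted parcel and $O_i$ the ground-truth parcel with which $S_i$ has the largest intersection. For each prediction, the over-classification error is defined as in [12] as

$$OC(S_i) = 1 - \frac{|S_i \cap O_i|}{|O_i|},$$

the under-classification error as

$$UC(S_i) = 1 - \frac{|S_i \cap O_i|}{|S_i|},$$

and the total classification error, as per [12], as

$$TC(S_i) = \sqrt{\frac{OC(S_i)^2 + UC(S_i)^2}{2}},$$

where $|\cdot|$ denotes area. Global error measures were obtained as area-weighted means across all predictions to quantify segmentation accuracy in terms of spatial overreach, omission, and overall geometric consistency [12]:

$$GOC = \frac{\sum_i OC(S_i)\,|S_i|}{\sum_i |S_i|}, \qquad GUC = \frac{\sum_i UC(S_i)\,|S_i|}{\sum_i |S_i|}, \qquad GTC = \frac{\sum_i TC(S_i)\,|S_i|}{\sum_i |S_i|}.$$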
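A sketch of the per-prediction errors and their area-weighted global means, assuming the OC/UC/TC definitions given above, Shapely polygons, and a non-empty ground-truth list; the function name is hypothetical. A prediction that intersects no ground-truth parcel simply receives OC = UC = TC = 1.

```python
import math

from shapely.geometry import box  # used only in the usage example at the bottom


def global_classification_errors(preds, gts):
    """Area-weighted GOC, GUC, GTC over all predictions S_i (weights |S_i|)."""
    sums = [0.0, 0.0, 0.0]
    total_area = 0.0
    for s in preds:
        inter = [s.intersection(o).area for o in gts]
        k = max(range(len(gts)), key=inter.__getitem__)  # O_i: largest intersection with S_i
        oc = 1.0 - inter[k] / gts[k].area
        uc = 1.0 - inter[k] / s.area
        tc = math.sqrt((oc ** 2 + uc ** 2) / 2.0)
        for j, e in enumerate((oc, uc, tc)):
            sums[j] += e * s.area
        total_area += s.area
    return tuple(v / total_area for v in sums)


# Usage: one prediction covers half of a 10 x 10 parcel, another covers it plus an equal area outside.
goc, guc, gtc = global_classification_errors(
    [box(0, 0, 10, 5), box(0, 0, 20, 10)], [box(0, 0, 10, 10)]
)
```

In the example, the small prediction contributes only over-classification error and the large one only under-classification error, and the area weighting lets the larger polygon dominate the global values.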

Statistical significance testing was conducted to assess whether the observed performance differences between variants were systematic rather than due to random variation. Because each parcel was evaluated under all six colour-space and time-encoding variants, the scores form a repeated-measures design. A nonparametric Friedman test was applied as an omnibus test on parcel-level IoU and F1 scores within each size category. The Friedman test evaluates whether at least one method differs in rank distribution across parcels [26]. Where the test was significant, pairwise Wilcoxon signed-rank tests were performed between all method pairs, with Holm correction to control the family-wise error rate [26,27]. This combination provides a robust alternative to paired t-tests, which assume normally distributed differences and are less suited to bounded and skewed performance metrics such as IoU and F1 [26,27].
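The omnibus-plus-post-hoc procedure can be sketched with SciPy's `friedmanchisquare` and `wilcoxon`, with the Holm step-down adjustment written out explicitly. The input layout (one score array per variant, aligned by parcel) and the function names are assumptions for illustration.

```python
from itertools import combinations

import numpy as np
from scipy.stats import friedmanchisquare, wilcoxon


def holm_adjust(pvals) -> np.ndarray:
    """Holm step-down adjusted p-values, returned in the input order."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    adjusted = np.empty(m)
    running_max = 0.0
    for rank, idx in enumerate(np.argsort(p)):
        running_max = max(running_max, (m - rank) * p[idx])
        adjusted[idx] = min(running_max, 1.0)
    return adjusted


def compare_variants(scores: dict, alpha: float = 0.05) -> dict:
    """scores: variant name -> per-parcel IoU (or F1) array, aligned by parcel across variants."""
    names = list(scores)
    stat, p_omnibus = friedmanchisquare(*(scores[n] for n in names))
    result = {"friedman_stat": float(stat), "friedman_p": float(p_omnibus), "pairwise": {}}
    if p_omnibus >= alpha:  # post-hoc tests only after a significant omnibus test
        return result
    pairs = list(combinations(names, 2))
    raw = [wilcoxon(scores[a], scores[b]).pvalue for a, b in pairs]
    for pair, p_raw, p_adj in zip(pairs, raw, holm_adjust(raw)):
        result["pairwise"][pair] = (float(p_raw), float(p_adj))
    return result
```

With six variants, the post-hoc stage runs 15 pairwise tests, so the Holm correction matters: the smallest raw p-value is multiplied by 15 before it is compared with α.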

To quantify potential biases introduced by manual annotation, the AOI was independently re-digitized by a second operator. The two datasets were compared parcel by parcel to measure inter-annotator consistency. For every matched pair of parcels, the IoU and GTC were calculated, and precision, recall, and F1 score were calculated by rasterizing each parcel pair at the native 3 m PlanetScope resolution. The matched pairs were grouped into the same three size categories as above.

4. Discussion

4.1. Comparison with Previous Works

Recolouring multitemporal NDVI into cylindrical colour spaces and segmenting the resulting image with a zero-shot segmenter yields reliable field delineation without retraining. The approach generates consistently parcel-coherent colour fields for SAM. The three colour spaces emphasize different aspects of the time series, leading to systematic precision–recall trade-offs. The harmonic decomposition produces per-pixel amplitude and phase patches that are robust to slow drifts and can be remapped directly into perceptual colour spaces. In practice, HWB tends to produce the fewest fragments, HSV favours coverage, and LCH lies in between.

Classical edge detectors rely on local gradients, while region-based and integrated methods merge or split regions by spectral similarity. Our method differs in that it generates contrast from time, not just space, which is useful for boundaries. A compact harmonic model represents the temporal progression (phase), the seasonal range (amplitude), and the mean greenness as separable visual channels. Conceptually, this behaves like a regional cue in which parcel-coherent colours emerge, which SAM uses as an implicit region during mask proposal. Compared with heavily supervised methods, this method is lightweight and interpretable; it requires no labelled data and no fine-tuning, and it exploits seasonality, which other methods typically ignore.

Building on this zero-shot foundation, recent customized SAM variants such as [28,29] show clear ways to improve performance. A complementary supervised path is [30], which reports performance improvements and strong zero-shot generalization across resolutions and regions. While our pipeline is label-free and interpretable, [30] suggests a backbone that could be paired with recoloured input. The approach of [29] can be combined with our recolourings without retraining, which offers a cost-effective way to sharpen boundaries while preserving parcel-coherent interiors. The YOLOv11 segmentation head of [30] could be used as a topology repair model.

Beyond delineation, the harmonic NDVI encodings used in this research also carry crop-specific information: phase reflects growth timing, amplitude captures seasonal intensity, and the mean tracks baseline greenness. These descriptors are discriminative for crop classification, suggesting that our method can be extended by pairing the recoloured composites with supervised classifiers. In such a setup, the segmentation step would provide parcel boundaries, while the harmonic features embedded in the colour channels would serve as inputs for crop identification, linking field geometry and crop type in a unified workflow.

4.2. Error Analysis and Methodological Uncertainties

In all colour spaces, the dominant errors are over-segmentation, fragmentation, and under-segmentation. These errors explain the combination of high recall and high correspondence IoU with lower precision: boundaries are usually well placed on the best-matching mask, but additional polygons reduce precision. Light fine-tuning of SAM can reduce these errors [28,29,30]. In particular, ref. [28] couples a DeepLabV3+ prompter with SAM and fine-tunes the decoder. A hybrid approach could be tested directly by keeping the recoloured composites as inputs and training a prompter on a modest labelled subset to place prompts inside parcel interiors. Similarly, ref. [29] shows that enhancing the inputs with principal component analysis (PCA), high-frequency decomposition, and guided filtering can make the SAM embeddings more boundary-aware, which could reduce under-segmentation in densely packed strips.
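The link between over-segmentation, high recall, and reduced precision can be illustrated with a simple object-level scorer. The following sketch assumes label rasters for the reference and the prediction, greedy one-to-one matching in descending IoU order, and an IoU threshold of 0.5; these are illustrative choices and not necessarily the evaluation protocol used in this study. It compares every object pair, so it is meant for small examples rather than full scenes.

```python
import numpy as np


def object_scores(ref, pred, iou_thr=0.5):
    """Object-based precision, recall and F1 from two label rasters.

    ref, pred: int arrays (H, W); 0 = background, positive values = object IDs.
    A predicted object is a true positive if it is matched one-to-one to a
    reference object with IoU >= iou_thr (greedy, highest IoU first).
    """
    ref_ids = [i for i in np.unique(ref) if i != 0]
    pred_ids = [j for j in np.unique(pred) if j != 0]
    pairs = []
    for i in ref_ids:
        r = ref == i
        for j in pred_ids:
            p = pred == j
            inter = np.logical_and(r, p).sum()
            if inter:
                pairs.append((inter / np.logical_or(r, p).sum(), i, j))
    matched_ref, matched_pred = set(), set()
    for iou, i, j in sorted(pairs, reverse=True):
        if iou >= iou_thr and i not in matched_ref and j not in matched_pred:
            matched_ref.add(i)
            matched_pred.add(j)
    tp = len(matched_pred)
    precision = tp / len(pred_ids) if pred_ids else 0.0
    recall = tp / len(ref_ids) if ref_ids else 0.0
    f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
    return precision, recall, f1
```

For example, if a 4 × 4 reference parcel is split by the prediction into a 4 × 3 and a 4 × 1 fragment, the large fragment matches with IoU 0.75, so recall is 1.0, while the unmatched sliver halves precision to 0.5.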

HSV makes phase differences clearly visible via hue and strongly pushes seasonal crops towards saturated colours, maximizing detection but also promoting over-segmentation on large, uniform parcels. HWB encodes the mean NDVI as whiteness and the amplitude as inverse blackness, which dampens mottling, suppresses duplicate masks, and improves object-level precision. The perceptual uniformity of LCH often yields cleaner, more uniform parcel fills and smoother hue transitions across gradual timing gradients. However, using the full chroma range pushes some colours outside the sRGB gamut, where the converter clips the out-of-range values. For this reason, chroma was scaled iteratively during rendering. This adjustment preserved the relative relationships between hue, luminance, and chroma, so that seasonality strength remained interpretable while clipping artifacts were avoided.
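The iterative chroma adjustment can be sketched as a single global chroma factor that is shrunk until every pixel converts into the sRGB gamut; scaling all pixels by the same factor keeps their relative chroma intact. This is one plausible reading of the procedure, not the exact rendering code. The conversion uses the standard CIELAB-to-XYZ equations with a D65 white point and the usual XYZ-to-linear-sRGB matrix. The gamut test is done on linear values, since the sRGB transfer function maps [0, 1] onto [0, 1]. The function names, the shrink factor of 0.95, and the small tolerance for rounding at the gamut edge are assumptions.

```python
import numpy as np

# D65 reference white and the standard XYZ -> linear sRGB matrix
_WHITE = np.array([0.95047, 1.0, 1.08883])
_XYZ_TO_LINEAR_RGB = np.array([
    [3.2404542, -1.5371385, -0.4985314],
    [-0.9692660, 1.8760108, 0.0415560],
    [0.0556434, -0.2040259, 1.0572252],
])


def lch_to_linear_rgb(L, C, h):
    """Convert CIE LCh(ab) (L in [0, 100], h in radians) to linear sRGB, shape (..., 3)."""
    a, b = C * np.cos(h), C * np.sin(h)
    fy = (L + 16.0) / 116.0
    f = np.stack([fy + a / 500.0, fy, fy - b / 200.0], axis=-1)
    delta = 6.0 / 29.0
    # Inverse of the CIELAB companding function
    t = np.where(f > delta, f ** 3, 3 * delta ** 2 * (f - 4.0 / 29.0))
    return (t * _WHITE) @ _XYZ_TO_LINEAR_RGB.T


def fit_chroma_scale(L, C, h, step=0.95, max_iter=200, tol=1e-6):
    """Shrink one global chroma factor until every pixel lies inside the sRGB gamut."""
    scale = 1.0
    for _ in range(max_iter):
        rgb = lch_to_linear_rgb(L, scale * C, h)
        if np.all((rgb >= -tol) & (rgb <= 1.0 + tol)):
            return scale
        scale *= step  # same factor for all pixels preserves relative chroma
    return scale
```

A per-pixel clip would instead flatten the most saturated pixels independently, which is exactly the loss of relative seasonality strength the global factor avoids.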

Using ordinal days instead of mapping the day of year to annual radians changes how the phase is represented. Mapping to the annual cycle has the advantage of direct phenological interpretation, since the phase corresponds to a calendar angle tied to a 365.25-day year (an approximation of the tropical year) [18]. In our area of interest, fitting on ordinal days produced stronger contrast between parcels and higher SAM separability than the annual radian model. A possible explanation is that relaxing the annual-period constraint allows the sinusoid to absorb sub-annual management signals, such as double cropping, which exaggerates inter-parcel differences and facilitates segmentation. This suggests that alternative encodings, such as the ordinal day encoding, may offer greater agroclimatic sensitivity in fragmented agricultural systems.
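The two time axes can be made explicit with the standard library. The following sketch assumes Python `datetime.date` timestamps: the annual encoding maps day of year to θ = 2π·DOY/365.25, while the ordinal encoding uses a continuous day count (`date.toordinal()`, where 1 January of year 1 is day 1). For the annual model NDVI = c + A cos(θ - φ), the peak occurs at θ = φ, so the phase converts back to a calendar day as DOY_peak = φ·365.25/(2π). How the sinusoid's frequency was set when fitting on ordinal days is not reproduced here, and the helper names are hypothetical.

```python
import math
from datetime import date

YEAR_DAYS = 365.25  # year length used by the annual-radian model


def doy_to_radians(d: date) -> float:
    """Annual encoding: map day of year onto the annual cycle in radians."""
    return 2.0 * math.pi * d.timetuple().tm_yday / YEAR_DAYS


def ordinal_day(d: date) -> int:
    """Ordinal encoding: continuous day count (proleptic Gregorian, 0001-01-01 = 1)."""
    return d.toordinal()


def phase_to_peak_doy(phase: float) -> float:
    """For NDVI = c + A*cos(theta - phase), the peak lies at theta = phase."""
    return (phase % (2.0 * math.pi)) * YEAR_DAYS / (2.0 * math.pi)
```

For example, a phase of π corresponds to a peak around day 182.6, i.e., early July, which is what makes the annual encoding directly readable as a calendar angle.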

Note that in natural colour imagery, brightness differences typically stem from crop type and growth stage, whereas in our method brightness is directly proportional to the NDVI amplitude: strongly seasonal fields appear brighter and weakly seasonal areas darker. This mapping reduces the influence of short-lived spectral changes, such as flowering, and instead emphasizes the strength of seasonal vegetation dynamics. Consequently, the brightness of the recoloured composites should be interpreted as a phenological indicator rather than as a visual analogue of crop reflectance properties.
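The brightness behaviour can be checked on the HWB mapping described above (phase as hue, mean NDVI as whiteness, amplitude as inverse blackness). The following sketch converts HWB to sRGB following the CSS Color Module Level 4 definition, built on Python's `colorsys.hsv_to_rgb`. The normalization constants and the weight of 0.5 applied to whiteness and blackness are hypothetical choices: without such a weight, whiteness + blackness would often reach 1 and collapse pixels to grey. With blackness = 0.5·(1 - normalized amplitude), the brightest channel equals 0.5 + 0.5·(normalized amplitude), so strongly seasonal fields render brighter.

```python
import colorsys
import math


def hwb_to_rgb(hue, whiteness, blackness):
    """HWB -> sRGB, all values in [0, 1] (CSS Color 4 conversion)."""
    if whiteness + blackness >= 1.0:
        gray = whiteness / (whiteness + blackness)
        return (gray, gray, gray)
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)  # fully saturated, full-value hue
    scale = 1.0 - whiteness - blackness
    return tuple(c * scale + whiteness for c in (r, g, b))


def harmonic_to_hwb_rgb(phase, amplitude, mean, amp_max, mean_max, weight=0.5):
    """Hypothetical mapping: phase -> hue, mean NDVI -> whiteness, amplitude -> 1 - blackness."""
    hue = (phase % (2.0 * math.pi)) / (2.0 * math.pi)
    mean_n = min(max(mean / mean_max, 0.0), 1.0)
    amp_n = min(max(amplitude / amp_max, 0.0), 1.0)
    # The weight keeps whiteness + blackness below 1 except in the extreme case.
    return hwb_to_rgb(hue, weight * mean_n, weight * (1.0 - amp_n))
```

Two pixels with the same phase and mean NDVI then differ only in brightness, and the one with the larger amplitude is brighter.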

4.3. Input Data and Environmental Uncertainties

This method has several strengths: it requires no training, is quick to apply, and is interpretable. In principle, it is also independent of the vegetation index and the sensor. The cost advantage is also convincing: using 22 cloud-free images and no labelling outside the evaluation set, the pipeline delivers strong results with little technical effort. The improvements outlined above are straightforward and incremental, and do not compromise the simplicity of the method.

Limitations remain. The results were derived from a single area in Croatia, a single sensor family, and a single harmonic seasonal model, so generalization to other landscapes requires further testing. In addition, hand-digitized ground truth tends to contain biases at parcel boundaries. These factors do not undermine the observations; rather, they motivate broader validation across landscapes, agricultural contexts, and additional sensors such as Sentinel-2, together with a series of robustness checks.

In addition, multitemporal datasets are inherently sensitive to atmospheric disturbances, residual cloud contamination, and imperfect radiometric normalization, all of which may reduce the stability of harmonic fitting and the consistency of phase and amplitude estimates. Small misregistrations can produce artificial boundary signals, particularly in fragmented landscapes where parcels are only a few pixels wide. The assumption of a single dominant seasonal harmonic does not capture more complex or irregular crop cycles, such as double cropping or intercropping, which could lead to mischaracterization of parcel-level dynamics.
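One common way to relax the single-harmonic assumption is to add a semi-annual term, which gives the model two seasonal peaks per year. The following sketch is a hypothetical extension that was not evaluated in this study: it compares the least-squares residual of one- and two-harmonic fits on a synthetic double-cropping NDVI series. The helper names and the synthetic curve are illustrative.

```python
import numpy as np


def harmonic_design(doy, n_harmonics):
    """Design matrix [1, cos(k*theta), sin(k*theta)] for k = 1..n_harmonics."""
    theta = 2.0 * np.pi * np.asarray(doy, dtype=float) / 365.25
    cols = [np.ones_like(theta)]
    for k in range(1, n_harmonics + 1):
        cols += [np.cos(k * theta), np.sin(k * theta)]
    return np.column_stack(cols)


def fit_rmse(ndvi, doy, n_harmonics):
    """Least-squares harmonic fit of one NDVI series; returns the RMS residual."""
    X = harmonic_design(doy, n_harmonics)
    coef, *_ = np.linalg.lstsq(X, ndvi, rcond=None)
    return float(np.sqrt(np.mean((ndvi - X @ coef) ** 2)))
```

On a series with two peaks per year, the one-harmonic fit leaves most of the seasonal signal in the residual, whereas the two-harmonic fit reproduces it. On real data, the extra terms would also absorb noise and residual clouds, so the number of harmonics would have to be weighed against the number of available acquisitions.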

Within these limits, the pipeline shows promising results, but careful attention to preprocessing quality, sensor consistency, and agro-ecological context is critical to ensure robust transferability to larger and more diverse agricultural regions.

5. Conclusions

This work proposes a method that recolours harmonic NDVI time series into cylindrical colour spaces to expose parcel-level structure to a zero-shot segmenter. In a 5 × 5 km landscape without strong physical boundaries, the approach consistently renders parcels with a coherent appearance and enables effective delineation without additional training or fine-tuning.

Among the tested colour spaces, HSV prioritizes coverage: encoding phase as hue increases boundary contrast between fields with asynchronous phenology and delivers the highest recall and the best pixel-wise F1 score and IoU. HWB produces the cleanest masks: its amplitude-driven luminance reduces fragmentation and achieves the highest precision and the best overall object-based F1 score, especially for medium and large parcels. LCH typically lies between the other two colour spaces.

In summary, the proposed method of harmonic NDVI recolouring combined with SAM segmentation provides a solid, transparent basis for parcel delineation. It is quick to deploy, easy to explain, and adaptable to different operational objectives. This approach is well positioned to deliver value today, while providing a clear path for future improvements. Beyond delineation, the harmonic descriptors (phase, amplitude, and mean) embedded in the recoloured composites also hold strong discriminative properties for crop classification, suggesting that our method can be extended to jointly delineate fields and identify crop types in a single, scalable workflow.