Abstract

Although many deep learning-based image restoration networks have emerged in various image restoration tasks, most can only perform well in a specific type of restoration task and still face challenges in the general performance of image restoration. The fundamental reason for this problem is that different types of degradation require different frequency features, and the image needs to be adaptively reconstructed according to the characteristics of input degradation. At the same time, we noticed that the previous image restoration network ignored the reconstruction of the edge contour details of the image, resulting in unclear contours of the restored image. Therefore, we proposed an edge-aware guided adaptive frequency navigation network, EAFormer, which extracts edge information in the image by applying different edge detection operators and reconstructs the edge contour details of the image more accurately during the restoration process. The adaptive frequency navigation perceives different frequency components in the image and interactively participates in the subsequent restoration process with high- and low-frequency feature information, better retaining the global structural information of the image and making the restored image more visually coherent and realistic. We verified the versatility of EAFormer in five classic image restoration tasks, and many experimental results also show that our model has advanced performance.

1. Introduction

In digital image processing, image restoration is a crucial task that involves the reconstruction of damaged or missing parts, ensuring that the restored content is visually consistent with the original image. With the development of digital photography technology, people’s requirements for image quality are also increasing. However, in practical applications, due to the imperfect imaging system of the camera, unsatisfactory ambient lighting conditions, compression during transmission, and poor weather conditions that blur visual clarity, the images we obtain are often subject to varying degrees of degradation, such as noise interference, blur, compression artifacts, rain and fog interference, etc. These problems seriously affect the visual quality of the image and the performance of various visual tasks.

In recent years, deep learning has opened up new possibilities for image restoration. Among previous explorations, generative adversarial networks [1,2] use adversarial training to fill in missing image information; multi-stage networks [3,4] progressively refine the restoration result; various types of convolutions [5,6] analyze image features from different dimensions; and attention mechanisms [7] focus on key areas to improve restoration accuracy. However, the architectures and functional units of these networks are mostly centered on mining spatial information. In practical restoration scenarios, a purely spatial-domain strategy reaches its limits: it ignores the difference in frequency characteristics between clean and degraded images and struggles to locate the key restoration information contained in the frequency sub-bands, which hinders restoration. Some researchers have found that different restoration tasks attend to different frequency sub-bands [8]. For example, low-light enhancement and dehazing depend more on low-frequency components, whereas for rain removal and deblurring the main difference between the target image and the degraded image lies in the mid- and high-frequency components. Although earlier work has shown that better results can be achieved by exploiting low- and high-frequency information in restoration tasks [9,10], it remains unclear how to let a model autonomously select the frequency components best suited to each task. To address this problem, we explore general multi-task image restoration. In our experiments, we found that edge filtering shows great potential in many aspects of image restoration.

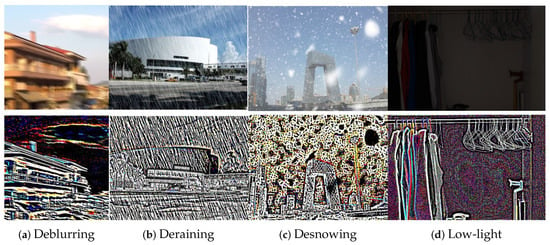

As shown in Figure 1, even if affected by the four types of degradation shown above, edge filtering can effectively extract image boundary features, separate degradation factors, and retain the image texture structure, which is crucial for maintaining the details and clarity of the image after restoration. Using the image preprocessed by edge filtering as the input of each stage can obtain a restoration effect more consistent with visual perception than directly adding the degraded image to the restoration process [11,12].

Figure 1.

Edge information extraction.

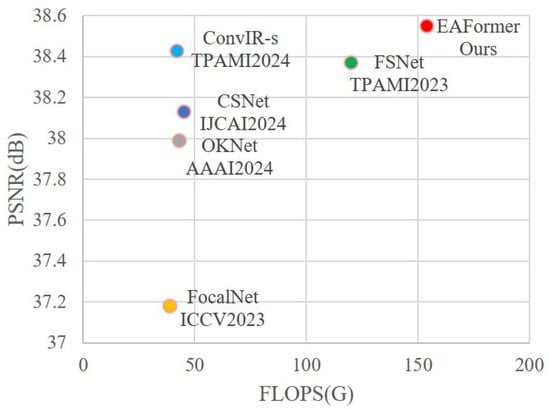

Therefore, we propose EAFormer, a general image restoration network architecture that uses edge-aware prior information to guide adaptive frequency navigation. It can effectively combine the edge information and frequency characteristics of the image. The edge information focuses on the accurate restoration of local details and structures, while the frequency characteristics focus on the overall coherence of the image and the natural transition of texture. Specifically, we divide the network into three parts: encoder, bottleneck, and decoder. The encoder part is responsible for extracting the image’s feature representation and capturing the image’s multi-level information, including low-level texture information and high-level semantic information. With the assistance of the Dynamic Multi-edge Feature Extraction Filter (DMFEF), the encoder can pay more attention to the edge and structure information in the image, providing rich contextual features for subsequent modules. As the middle link of the network, the bottleneck part not only transmits the features extracted by the encoder but also integrates the edge-aware prior information. We introduce the Braided Dual-stream Channel Attention (BDCA) and the adaptive frequency navigation module (AFNM) at this stage. BDCA adopts a dual-stream structure, processing two different feature streams separately and capturing the features of different subspaces in parallel, which helps the model identify the importance of different regions in the image, thereby re-weighting the feature map and emphasizing those regions that are more critical to the final task. In order to limit the computational overhead, we only deploy this attention mechanism at the bottleneck. AFNM autonomously adjusts the frequency response of the feature map according to the incoming image information, strengthens essential structural information, and suppresses unnecessary noise and artifacts. 
The decoder part converts the high-level features extracted by the encoder and bottleneck parts back to the image space. We adopt an asymmetric decoder structure that combines encoder features with decoder features from coarse to fine at multiple scales through skip connections, which retains the image’s detailed information and enhances its semantic consistency. Our goal is to build a robust model capable of high-quality restoration in multiple scenarios. We therefore compared EAFormer with recent powerful image restoration models [9,10,13,14,15,16], and it achieved a lead on multiple datasets and restoration tasks. Taking the CSD [17] dataset for snow removal as an example, our model outperforms the state-of-the-art algorithms, as shown in Figure 2.

Figure 2.

Our method outperforms the state-of-the-art image restoration models on the snow removal task, achieving a gain of 0.18 dB compared to FSNet [10].

The main contributions of this work are as follows:

- We proposed an image restoration network, EAFormer, guided by edge information and autonomous selective frequency navigation, which can effectively focus on the local and overall features of the image and smoothly restore various degraded images.
- We designed an edge feature extraction filter with dynamic weight adjustment to achieve adaptive edge perception, making it more flexible in processing images with different textures and structures.
- We designed the Braided Dual-stream Channel Attention (BDCA) to independently learn features in different subspaces and reorganize and fuse them, which not only retains the advantages of each feature but also enhances the expressiveness of the features through interaction.
- We designed the adaptive frequency navigation module (AFNM), which decomposes the high- and low-frequency components of the image and adaptively modulates different degradation types, effectively improving the generalization performance of the model.
- All the above modules we designed are highly compatible and can be seamlessly integrated into any image restoration network architecture.

2. Related Work

2.1. CNN-Based Restoration Networks

CNN was introduced into the field of image restoration due to its success in image recognition tasks. CNN can automatically learn the hierarchical features of images, thereby achieving image restoration without manually designing features. CNN has been widely used in tasks such as denoising [5], deblurring [18], and deraining [19].

Most early CNN-based image restoration models [20,21,22] are non-blind, which means that they focus on restoring certain types of image damage without estimating the cause of the damage. For example, known blur kernel information is used to restore image clarity, or a known noise type and noise level are used for image denoising. Such methods are often limited in performance when facing unknown degradation types. DRUNet [23] combines U-Net and ResNet with a noise level map input to handle diverse noise levels with a single model, serving as an effective denoiser prior. Subsequently, CNN-based methods for blind scenarios [24,25] were developed, which restore images without knowing the cause or extent of the damage and are therefore more challenging. In blind denoising, DnCNN [5] was proposed to process images with unknown noise levels. These models no longer rely on fixed noise models but learn the characteristics of noise directly from data so that they can adapt to different noise levels and types. For blind deblurring, researchers have proposed models such as VBDeblurNet [26] and NR-IQA [27], along with DMPHN [18], which employs a hierarchical multi-patch structure with residual learning for fine-to-coarse deblurring, and MIMO-UNet [28], which uses a single U-shaped network with a multi-input encoder, multi-output decoder, and asymmetric fusion to balance accuracy and efficiency. These models do not rely on known blur kernels but restore images by learning an end-to-end mapping from blurred to clear images. This approach requires the model to identify and compensate for various blur types, including motion blur and defocus blur. However, for a given degradation, a blind model may not perform better than a non-blind model. For relatively complex degradation patterns, such as irregular motion blur or non-uniform noise, the training data of a blind model are more diverse, and the features are more difficult to learn.

2.2. Transformer-Based Restoration Networks

The most significant advantage of Transformer over CNN is its self-attention mechanism, which can effectively capture long-range dependencies in the input sequence, which is of great significance for low-level visual tasks. In these tasks, the global information and local details of the image are equally important, and traditional CNNs may not be able to effectively utilize global information due to the limitations of their local receptive field.

In recent years, Transformer has also been widely used in image restoration tasks. Uformer [29] retains the U-shaped encoder–decoder structure. On the encoder side, it sequentially reduces the image space dimensions, just like accurately filtering information and extracting key features from massive image data; while in the decoder stage, it reverses the operation and gradually reshapes the image space dimensions to achieve fine restoration of image reconstruction. In each stage of the encoder and decoder, Uformer introduces a self-attention module, which enables the model to capture long-range dependencies of images at different scales and directly pass encoder features to the decoder through cross-scale connections, which helps to retain more detailed information during the restoration process. Another efficient image restoration model, SwinIR [30] adopts an encoder–decoder architecture and utilizes a shifted window multi-head self-attention mechanism. It divides the image window for self-attention calculation and shifts the interaction. It also performs feature fusion between the encoder and decoder and at different stages of the decoder, which can capture multi-scale features from low-level to high-level. Beyond the aforementioned models, other Transformer-based designs have also achieved notable performance in specific image restoration scenarios: DDABNet [31] is based on multi-supervision and hybrid attention, achieving excellent results in the field of image deblurring; Transfer CLIP [32] integrates noisy images and their multi-scale features from the frozen ResNet encoder of CLIP into the decoder, demonstrating better denoising performance; GRL [33] focuses on image features at multiple levels and shows good performance in the restoration of real-world images; Retinexformer [34] uses illumination representation to guide the restoration of low-light images under different illumination conditions, enabling better capture of long-range dependencies. 
However, with the increase in image resolution, the temporal and spatial complexity of the traditional self-attention mechanism increases quadratically, becoming a heavy burden on computing resources. In this context, Restormer [7] stood out and proposed the Multi-Dconv Head Transposed Attention mechanism, which cleverly reshaped the attention computing paradigm, significantly reduced computing overhead, successfully solved the problem of large image restoration, and brought image restoration quality to a new level. Although the current Transformer-based image restoration models have made significant progress in multiple restoration tasks, most of them learn features through supervised learning and need to transform to unsupervised or self-supervised learning in the future.

3. Method

In this section, we will give an overview of the image processing pipeline of EAFormer, followed by details of the Dynamic Multi-edge Feature Extraction Filter (DMFEF), Braided Dual-stream Channel Attention (BDCA), and adaptive frequency navigation module (AFNM).

3.1. Overall Pipeline

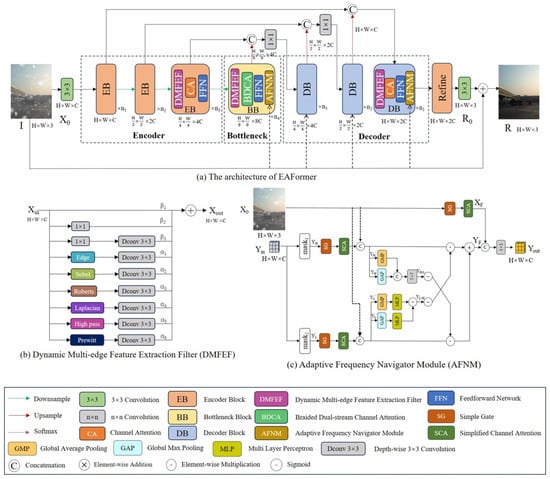

The EAFormer we proposed is an encoder–decoder architecture. The encoder module we designed consists of the DMFEF, channel attention, and a feedforward network. At the bottleneck, we replaced the channel attention with our designed BDCA. In order to find the most helpful feature combinations and frequency components for various tasks in the feature space, we added our designed AFNM at the end of the bottleneck module and the decoder module, as shown in Figure 3.

Figure 3.

(a) Overview of the proposed EAFormer. The encoder–decoder architecture of the model uses the edge information of the DMFEF and the high- and low-frequency information of the AFNM to learn universal image restoration. (b) The DMFEF dynamically allocates the weights of convolution and edge operators on the path to filter out key information for guiding subsequent processing. (c) The AFNM decomposes the high- and low-frequency information of the input-degraded image and fuses the complementary information of different frequency components.

The degraded input image $I$ first passes through a 3 × 3 convolution to obtain shallow features, and then passes through each encoder level and its downsampling layer to obtain the encoder feature maps. The output of each encoder level is joined through a skip connection with the upsampled decoder output at the corresponding position and serves as the input of the next decoder level. This skip connection acts as a cross-layer feature reconstruction: by fusing feature maps from different levels, it supplements information between layers and enriches the deep features. After the last decoder level, the features pass through the feature refinement module, which uses the same structure as the encoder module, and a 3 × 3 convolution then produces the residual image $I_{res}$. Adding $I_{res}$ to the degraded image $I$ gives the final restoration result $R$:

$$R = I + I_{res}$$

3.2. Dynamic Multi-Edge Feature Extraction Filter

Edge filters have been shown to have attractive performance in image restoration tasks such as image dehazing [35] and denoising [11], and edge feature extraction filters are more helpful in improving image feature expression in image restoration tasks than ordinary convolution [12]. In order to further explore the potential of filters in image restoration tasks, we design a dynamic multi-edge feature extraction filter (DMFEF) with general image restoration performance.

As shown in Figure 3b, we include an ordinary convolution to preserve basic feature extraction capability. In addition, the filter integrates a variety of classic edge detection operators, including the Sobel, Roberts, Prewitt, and Laplacian operators. These operators are implemented as learnable convolution kernels to detect horizontal, vertical, and diagonal image edges. By dynamically adjusting the weights of these operators, the model adaptively optimizes feature extraction according to the image content and degradation conditions. Because the deblurring task requires highlighting and enhancing the high-frequency information in the image, we also introduce a high-pass filter. In the DMFEF, every filter path carries its own weight. The parameters of the Edge, Sobel, Roberts, Laplacian, Highpass, and Prewitt filters are each loaded into a 3 × 3 depth-wise separable convolution, and these paths are used alongside a 1 × 1 point-wise convolution and a plain 3 × 3 depth-wise separable convolution.
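The idea of weighting several edge operators can be illustrated with a minimal NumPy sketch. It is not the DMFEF itself: the kernel values are the textbook forms of each operator, the softmax over `logits` is one assumed way to obtain path weights, and the names `filter3x3` and `dynamic_edge_filter` are hypothetical. The sketch works on a single-channel image, whereas the module described above loads the operators into learnable depth-wise separable convolutions.

```python
import numpy as np

# Textbook 3x3 kernels; the exact values used in the paper are not given
# in the text, so these are assumptions for illustration only.
KERNELS = {
    "sobel_x":   np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float),
    "prewitt_x": np.array([[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]], dtype=float),
    "roberts":   np.array([[0, 0, 0], [0, 1, 0], [0, 0, -1]], dtype=float),
    "laplacian": np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float),
    "highpass":  np.array([[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]], dtype=float),
}

def filter3x3(img, kernel):
    """Same-size 3x3 cross-correlation of a 2-D image with zero padding."""
    h, w = img.shape
    padded = np.pad(img, 1)
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def dynamic_edge_filter(img, logits):
    """Weighted sum of edge responses; weights are a softmax over `logits`."""
    names = list(KERNELS)
    z = np.asarray(logits, dtype=float)
    weights = np.exp(z - z.max())
    weights /= weights.sum()
    responses = np.stack([filter3x3(img, KERNELS[n]) for n in names])
    combined = np.tensordot(weights, responses, axes=1)   # (H, W)
    return combined, dict(zip(names, weights))
```

In a trained network the logits would be learned (or predicted from the input), so that, for instance, a deblurring model could place more weight on the high-pass path.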

In Figure 1, we visualize the effect of the DMFEF in different restoration scenarios. The rain-streak image in Figure 1b has high contrast at the edges of the fine lines. After filtering, the edges of the rain streaks are clearly enhanced. This information is passed to the subsequent rain removal stage so that the model can accurately locate and remove the degradation. The rain removal dataset simulates rain by superimposing continuous streaks of almost uniform thickness. To better simulate the perspective effect of a camera, in which near objects appear large and far objects appear small, as well as the varying particle sizes of snowflakes, the snow removal dataset simulates snowfall by superimposing masks of different sizes and shapes with uneven density on the original image, as shown in Figure 1c. These irregular characteristics are more difficult to capture than those of rain removal scenes, which may help explain why previous standard image restoration models such as MPRNet [4], SwinIR [30], Uformer [29], Restormer [7], and NAFNet [36] have not achieved better performance in snow removal tasks. We use the features of the snow image preprocessed by the DMFEF as prior knowledge and pass them to the subsequent model; the snow and non-snow areas are effectively separated. For the image taken under dark conditions in Figure 1d, which is seriously lacking in detail and contrast, filtering significantly improves the local contrast of the low-contrast areas.

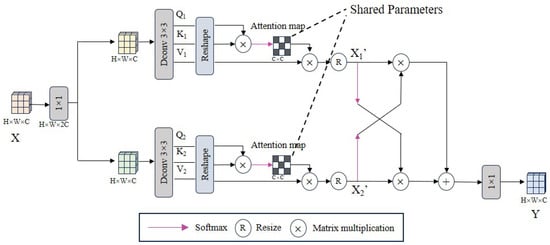

3.3. Braided Dual-Stream Channel Attention

The core component of the bottleneck module is the Braided Dual-stream Channel Attention (BDCA), as shown in Figure 4. The design of BDCA is inspired by the position of the bottleneck module at the junction of the encoder and the decoder: within the BDCA module, we simulate the subsequent interaction between the low-level image features from the encoder and the high-level image features from the decoder.

Figure 4.

Information flow processing of BDCA.

First, the input passes through a 1 × 1 convolution that expands the number of channels to twice that of the input. This operation helps the model capture richer feature representations and provides more room for subsequent feature interactions. Then, the features are split along two independent paths and mapped channel by channel with a 3 × 3 depth-wise separable convolution, so that each path has its own query (Q), key (K), and value (V) projections. Here, the split operation diverts the information from the input X along the two independent paths.

This split processing not only enhances the model’s ability to capture local features but also provides finer control for subsequent feature fusion. The queries and keys of the two paths, $Q_1$, $K_1$ and $Q_2$, $K_2$, are reshaped and multiplied to obtain a shared attention map of size C × C that enhances relevant features and suppresses irrelevant ones. We do not compute the attention maps of the two branches separately but adopt a shared attention map, because the two branches are essentially homologous; that is, both come from the input X. Computing the attention maps independently easily fails to capture global context information, especially in scenes where background features or weather conditions produce regions whose pixel values are similar to one another yet differ from the background. This limitation hinders the model’s overall understanding of the image, especially in severe weather conditions such as rain, fog, or snow, which usually cause similar occlusion and brightness changes. Finally, by weighting the values $V_1$ and $V_2$ of the two paths with the shared attention map, we obtain the adjusted values of both paths, which dynamically adjusts the importance of each channel feature according to the global context information and cross-channel correlation. After the softmax function is applied, the two paths can adjust each other’s feature contribution. This interaction helps the model capture and utilize the complementary information between the two paths, thereby achieving more effective feature fusion. In this process, the attention map is shared between the two paths, and two learnable parameters, one per path, control the magnitude of the dot product of the query and key on that path.
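A rough NumPy sketch of a shared C × C channel attention map applied to two value streams is given below. How the two paths’ query-key products are merged into one map is not recoverable from the text; summing the temperature-scaled logits before the softmax is an assumption made here, and the function name `shared_channel_attention` and the temperatures `t1`, `t2` are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def shared_channel_attention(q1, k1, v1, q2, k2, v2, t1=1.0, t2=1.0):
    """All inputs have shape (C, H*W); t1 and t2 are per-path temperatures.

    Assumption: the two paths' logits are summed to form one shared map.
    """
    logits = t1 * (q1 @ k1.T) + t2 * (q2 @ k2.T)   # (C, C)
    attn = softmax(logits, axis=-1)                # one map shared by both paths
    return attn @ v1, attn @ v2, attn
```

Because the attention map has size C × C rather than (H·W) × (H·W), its cost grows linearly with the number of pixels, which is the usual motivation for channel-wise attention in restoration networks.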

3.4. Adaptive Frequency Navigation Module

Images under different degradation factors differ in how their frequencies are perceived [8], so it is necessary to navigate the boundary between high and low frequencies under different degradation conditions. The design of the AFNM is shown in Figure 3c. First, the input feature $X$ is converted from the spatial domain to the frequency domain, and the boundary between high and low frequencies under different degradation conditions is then determined by a learnable spectrum mask size. The mask separates the frequencies: the low-frequency components are mainly distributed in the center of the spectrum, while the high-frequency components are distributed on its periphery. The low-frequency components within the mask and the high-frequency components outside the mask are then transformed back to the spatial domain to obtain the low-frequency features $X_{low}$ and the high-frequency features $X_{high}$. Specifically, this process can be defined as:

$$X_{low} = \mathcal{F}^{-1}\big(M \odot \mathcal{F}(X)\big), \qquad X_{high} = \mathcal{F}^{-1}\big((1 - M) \odot \mathcal{F}(X)\big)$$

where ⊙ represents element-wise multiplication, the mask $M$ is a filter obtained by padding the periphery of the adaptive spectrum mask with zeros so that it has the same shape as $\mathcal{F}(X)$, and $\mathcal{F}$ and $\mathcal{F}^{-1}$ represent the fast Fourier transform and its inverse.
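The frequency split can be sketched with NumPy’s FFT routines. This is only an illustration under stated assumptions: the mask is a centered square whose half-width `radius` is a fixed integer rather than a learned quantity, the input is a single 2-D map, and the function name `split_frequencies` is hypothetical.

```python
import numpy as np

def split_frequencies(x, radius):
    """Split a 2-D map into low- and high-frequency parts.

    A square mask of half-width `radius` (assumed smaller than half the
    map size) is placed around the zero frequency of the shifted spectrum.
    """
    h, w = x.shape
    spectrum = np.fft.fftshift(np.fft.fft2(x))      # zero frequency at the center
    cy, cx = h // 2, w // 2
    mask = np.zeros((h, w))
    mask[max(cy - radius, 0):cy + radius + 1,
         max(cx - radius, 0):cx + radius + 1] = 1.0
    low = np.fft.ifft2(np.fft.ifftshift(spectrum * mask)).real
    high = np.fft.ifft2(np.fft.ifftshift(spectrum * (1.0 - mask))).real
    return low, high
```

Since the two masks sum to one everywhere, the low- and high-frequency parts add back to the input, so the decomposition itself loses no information; only the position of the boundary changes with `radius`.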

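Next, the low-frequency features $X_{low}$ and the high-frequency features $X_{high}$ are each processed by SimpleGate [36] and Simplified Channel Attention [36]. Taking $X_{low}$ as an example, the process is as follows:

$$\hat{X}_{low} = \mathrm{SCA}\big(\mathrm{SG}(X_{low})\big), \qquad \mathrm{SG}(X) = X_a \odot X_b, \qquad \mathrm{SCA}(X) = X \odot W\,\mathrm{GAP}(X)$$

where GAP represents global average pooling, SG represents SimpleGate, SCA represents Simplified Channel Attention, $W$ is a point-wise convolution, and $X_a$ and $X_b$ are obtained by splitting $X$ along the channel dimension.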
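The two NAFNet-style operations named here are simple enough to sketch in NumPy. The sketch assumes channel-first arrays of shape (C, H, W); the matrix `w` and bias `b` stand in for the point-wise convolution inside Simplified Channel Attention and are hypothetical parameters.

```python
import numpy as np

def simple_gate(x):
    """x: (C, H, W) with even C -> (C/2, H, W).

    Splits the channels into two halves and multiplies them element-wise.
    """
    a, b = np.split(x, 2, axis=0)
    return a * b

def simplified_channel_attention(x, w, b):
    """x: (C, H, W); w: (C, C) and b: (C,) stand in for a 1x1 convolution."""
    pooled = x.mean(axis=(1, 2))          # global average pooling -> (C,)
    scale = w @ pooled + b                # point-wise convolution on the pooled vector
    return x * scale[:, None, None]       # rescale every channel
```

Note that SimpleGate halves the channel count, so a block that applies it typically doubles the channels beforehand to keep the output width unchanged.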

The processed high- and low-frequency features are then handled in the interactive spirit of BDCA to achieve bidirectional feature complementation. We apply global average pooling and global maximum pooling to both the low-frequency and the high-frequency features. For one branch, the pooling results are concatenated along the channel dimension and passed through a 7 × 7 convolution. For the other branch, the two pooling results are each passed through a multi-layer perceptron, and the nonlinearly transformed average and maximum features are added. Subsequently, a sigmoid is applied on both paths to generate a spatial gating map and a channel gating map with values between 0 and 1, which are used to modulate the high- and low-frequency features.

Finally, the adaptively adjusted high- and low-frequency feature maps are concatenated along the channel dimension and reduced by a 1 × 1 convolution to achieve feature fusion. The entire module keeps the output the same size as the input.
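The gating and fusion steps can be sketched as follows. Several details are assumptions rather than facts from the text: the spatial gate is computed from the low-frequency branch and applied to the high-frequency branch (and the channel gate the other way round), the spatial gate pools across channels in the style of CBAM, and all weights (`kernel`, `w1`, `w2`, `w_fuse`) and function names are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def spatial_gate(x, kernel):
    """x: (C, H, W); kernel: (2, 7, 7). Returns an (H, W) map with values in [0, 1]."""
    pooled = np.stack([x.mean(axis=0), x.max(axis=0)])      # (2, H, W)
    _, h, w = pooled.shape
    padded = np.pad(pooled, ((0, 0), (3, 3), (3, 3)))
    out = np.zeros((h, w))
    for dy in range(7):                                     # naive 7x7 convolution
        for dx in range(7):
            taps = kernel[:, dy, dx][:, None, None]
            out += (taps * padded[:, dy:dy + h, dx:dx + w]).sum(axis=0)
    return sigmoid(out)

def channel_gate(x, w1, w2):
    """x: (C, H, W); w1: (R, C) and w2: (C, R) form a two-layer perceptron."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)
    return sigmoid(mlp(x.mean(axis=(1, 2))) + mlp(x.max(axis=(1, 2))))   # (C,)

def cross_modulate(low, high, kernel, w1, w2, w_fuse):
    """Gate each branch with the map computed from the other, then fuse."""
    s = spatial_gate(low, kernel)           # assumed: from the low-frequency branch
    c = channel_gate(high, w1, w2)          # assumed: from the high-frequency branch
    high_mod = high * s[None, :, :]
    low_mod = low * c[:, None, None]
    stacked = np.concatenate([low_mod, high_mod], axis=0)   # (2C, H, W)
    return np.einsum("oc,chw->ohw", w_fuse, stacked)        # 1x1 conv: 2C -> C
```

With `w_fuse` of shape (C, 2C), the output has the same (C, H, W) shape as each input branch, matching the statement that the module preserves the feature size.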

4. Experiments

In this section, we use 16 datasets to verify the performance of EAFormer in 5 classic image restoration tasks: image deraining, image deblurring, image denoising, image desnowing, and low-light image enhancement. To make the results easier to read, the best result in each table is shown in bold, and the second-best result is underlined.

4.1. Implementation Details

We trained a separate model for each image restoration task. All experiments share the same training settings. The numbers of encoder, bottleneck, and decoder blocks follow Figure 3a, and the number of refinement blocks is 4. The number of channels C is 48. We used the AdamW optimizer with weight decay, and the learning rate was decayed through cosine annealing. The loss function is the L1 loss:

$$\mathcal{L}_1 = \frac{1}{N} \sum_{i=1}^{N} \left| R_i - T_i \right|$$

where N represents the number of pixels in the image, $R_i$ represents the i-th pixel of the restored image, and $T_i$ represents the i-th pixel of the target image. We adopted horizontal and vertical flipping as data augmentation and trained in a progressive learning manner. As training progresses, the patch size increases from 128 × 128 to 160 × 160, 192 × 192, 224 × 224, and finally 256 × 256, for a total of 300 K iterations. Our model is trained on an NVIDIA GeForce RTX 4090 and tested on an NVIDIA Tesla A800.
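The training ingredients described above can be sketched in a few lines of Python. The L1 loss and the list of patch sizes come from the text; the cosine schedule uses the standard annealing formula with caller-supplied endpoints, and the equal-length stages in `patch_size_at` are an assumption, since the iteration at which each patch size starts is not stated.

```python
import math
import numpy as np

PATCH_SIZES = [128, 160, 192, 224, 256]   # progressive patch sizes from the text

def l1_loss(restored, target):
    """Mean absolute error over all pixels."""
    return float(np.mean(np.abs(restored - target)))

def cosine_lr(step, total_steps, lr_max, lr_min):
    """Cosine annealing from lr_max (step 0) to lr_min (step total_steps)."""
    cos = 0.5 * (1.0 + math.cos(math.pi * step / total_steps))
    return lr_min + (lr_max - lr_min) * cos

def patch_size_at(step, total_steps=300_000):
    """Patch size for a given step, assuming equal-length stages (hypothetical)."""
    stage = min(step * len(PATCH_SIZES) // total_steps, len(PATCH_SIZES) - 1)
    return PATCH_SIZES[stage]
```

Starting with small patches keeps early iterations cheap, while the larger patches near the end expose the model to wider spatial context.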

4.2. Image Deraining Results

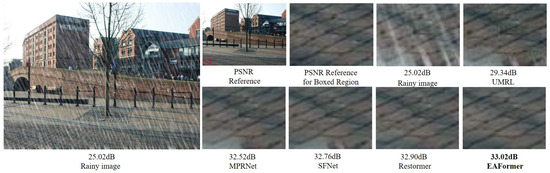

For the image deraining task, we calculated the PSNR and SSIM metrics of the Y channel in the YCbCr color space and compared the performance with recent deraining models on the public datasets Test100 [37], Rain100L [38], and Test1200 [39]. The results in Table 1 show that the deraining performance of our proposed EAFormer is generally stronger than that of Restormer [7]. Figure 5 shows our deraining restoration example.
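For reference, a minimal NumPy sketch of PSNR computed on the luma (Y) channel is shown below. It assumes RGB inputs scaled to [0, 1] and the ITU-R BT.601 studio-range conversion commonly used in deraining evaluation scripts; whether the paper’s evaluation uses exactly this convention is an assumption, and the function names are hypothetical.

```python
import numpy as np

def rgb_to_y(rgb):
    """rgb: float array in [0, 1], shape (H, W, 3) -> BT.601 luma in [16, 235]."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return 16.0 + 65.481 * r + 128.553 * g + 24.966 * b

def psnr(a, b, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical inputs."""
    mse = np.mean((a - b) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))

def psnr_y(restored, target):
    """PSNR between the Y channels of two RGB images in [0, 1]."""
    return psnr(rgb_to_y(restored), rgb_to_y(target))
```

Evaluating on Y alone ignores chroma errors, so these scores are not directly comparable with the three-channel PSNR used for the other tasks.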

Table 1.

We compare the deraining performance of general-purpose image restoration frameworks in recent years on the datasets Test100 [37], Rain100L [38], and Test1200 [39], with a focus on the PSNR [40] and SSIM [41,42] metrics.

| Method | Test100 PSNR | Test100 SSIM | Rain100L PSNR | Rain100L SSIM | Test1200 PSNR | Test1200 SSIM |
|---|---|---|---|---|---|---|
| UMRL [19] | 24.41 | 0.829 | 29.18 | 0.923 | 30.55 | 0.910 |
| MPRNet [4] | 30.27 | 0.897 | 36.40 | 0.965 | 32.91 | 0.916 |
| SFNet [9] | 31.47 | 0.919 | 38.21 | 0.974 | 32.55 | 0.911 |
| Restormer [7] | 32.00 | 0.923 | 38.99 | 0.978 | 33.19 | 0.926 |
| EAFormer (ours) | 32.02 | 0.924 | 39.10 | 0.977 | 33.29 | 0.927 |

Figure 5.

Image deraining results [4,7,9,19] on Test2800 [43].

4.3. Image Deblurring Results

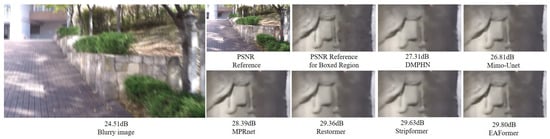

In the image deblurring task, we evaluate the deblurring performance of EAFormer on two types of deblurring: single-image motion deblurring and defocus deblurring. We use two synthetic datasets, GoPro [44] and HIDE [45], and two real datasets, RealBlur-R [46] and RealBlur-J [46], to evaluate single-image motion deblurring, and we train and evaluate defocus deblurring on the DPDD [47] dataset. We calculate PSNR and SSIM on three channels (unless explicitly stated otherwise, the metrics below are calculated on three channels by default). For a fair comparison, our reported results exclude the TLC [48] trick that NAFNet [36] uses to reduce the inconsistency between training and testing. The results of single-image motion deblurring and defocus deblurring are shown in Table 2 and Table 3. Figure 6 and Figure 7 visualize the results of our method.

Table 2.

Image motion deblurring effects on GoPro [44], HIDE [45], RealBlur-R [46], and RealBlur-J [46].

Table 3.

Comparison of defocus deblurring results on DPDD [47] dataset.

Figure 6.

Single image motion deblurring results [4,7,18,28,49] on GoPro [44].

Figure 7.

Defocus deblurring results [7,50,51,52] on DPDD [47].

4.4. Image Denoising Results

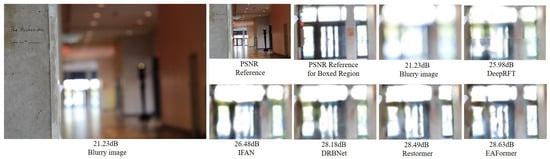

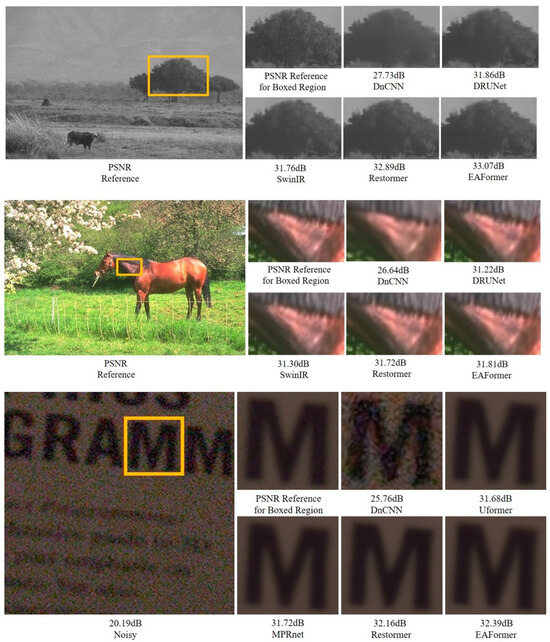

Table 4, Table 5, and Table 6 verify the denoising performance of EAFormer on grayscale images, color images, and real images, respectively. For Gaussian denoising on color and grayscale images, we trained a separate model for each noise level σ. In terms of PSNR, in color image denoising on the CBSD68 [53] dataset, EAFormer outperforms the baseline model Restormer [7] by 0.05 dB at one of the tested noise levels; in grayscale image denoising on the BSD68 [53] dataset, EAFormer leads Restormer [7] by 0.06 dB at one of the tested noise levels; and in real image denoising on the SIDD [54] dataset, EAFormer is 0.07 dB better than Restormer [7]. To ensure consistent experimental conditions, Restormer [7] was also progressively trained with patch sizes of 128, 160, 192, 224, and 256. The tables also indicate the noise level at which our model denoises best. We visualize the denoising results on color, grayscale, and real images in Figure 8.

Table 4.

PSNR on the grayscale image datasets Set12 [5], BSD68 [53], and Urban100 [55] at the tested noise levels σ.

Table 5.

PSNR on the color image datasets CBSD68 [53], Kodak24 [56], McMaster [57], and Urban100 [55]; our model shows an overall improvement over the baseline denoising model Restormer [7] at two of the tested noise levels σ.

Table 6.

We performed real image denoising on the SIDD [54] dataset and found that our model achieved a PSNR gain of 0.07 dB over the state-of-the-art real image denoising model Restormer [7].

Figure 8.

The first four rows show the results [5,7,23,30] of grayscale image denoising and color image denoising of BSD68 [53] and CBSD68 [53], respectively. The last two rows show the real image denoising examples [4,5,7,29] of SIDD [54].

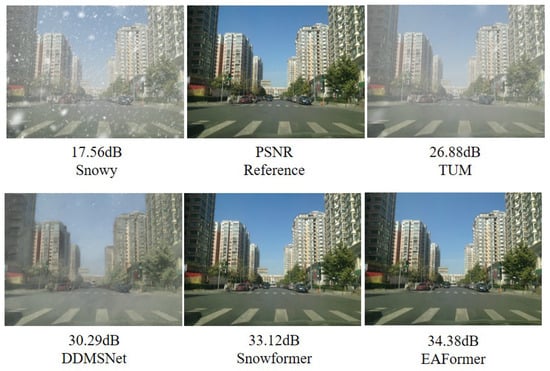

4.5. Image Desnowing Results

We calculated PSNR and SSIM on the public snow removal dataset CSD2000 [17]. The results in Table 7 show that our model improves snow removal performance over the recent general-purpose restoration model DSANet [58] and gains 0.12 dB in PSNR over the recent ConvIR-S [59] model. The visual results of snow removal are shown in Figure 9, where EAFormer achieves a more realistic restoration of the blue building than Snowformer [60], removing more of the shadows from the building.

Table 7.

Comparison of snow removal results in CSD2000 [17].

Figure 9.

Desnowing results [60,61,62] on CSD [17].

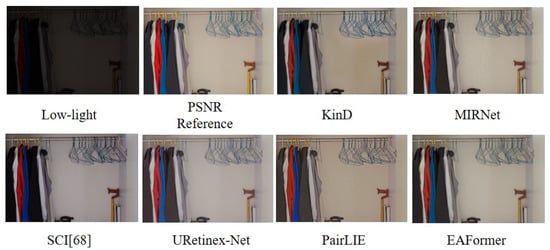

4.6. Low-Light Enhancement Results

We compared EAFormer with the existing state-of-the-art low-light enhancement model PairLIE [63] on the low-light datasets LOL-V1 [64] and LOL-V2 [65]. As shown in Table 8, our model’s PSNR and SSIM scores are significantly better than those of PairLIE [63]. As shown in Figure 10, KinD [66]-enhanced images have obvious dark areas and inaccurate clothing color recovery; MIRNet [67] lacks detail repair, with unclear clothing textures and blurry background walls; SCI [68] has issues with color fidelity, clothing color deviation, and overexposed areas; URetinex-Net [69] has uneven brightness adjustment and poor detail preservation; and PairLIE [63] has inaccurate color restoration and artificial-looking brightness enhancement. In contrast, EAFormer achieves the most realistic low-light enhancement and is closest to the reference image. The fine clothing texture and overall scene structure are well presented, and the brightness enhancement is uniform and natural, without excessive or insufficient local exposure. The true color of objects is accurately restored under low-light conditions, making the clothing and background wall colors in the enhanced image very close to those of the reference image.

Table 8.

Low-light enhancement results in LOL-V1 [64] and LOL-V2 [65].

Figure 10.

Low-light enhancement results [63,66,67,68,69] on LOL-V1 [64] and LOL-V2 [65].

4.7. Ablation Studies

In this section, all ablation experiments are based on deraining results on Rain100L [38]. We verify the effectiveness of the proposed BDCA, AFNM, and DMFEF, and the results are shown in Table 9. In network (A), we remove the DMFEF from the encoder, bottleneck, decoder, and reconstruction modules of EAFormer to verify whether the edge information provided by the edge filter can guide the subsequent restoration process. In network (B), we replace the BDCA, which appears only in the bottleneck, with ordinary channel attention. In network (C), we remove the AFNM from the bottleneck and decoder to test whether it improves the overall performance of the model. In addition, under a unified training strategy, we replace our modules with modules of similar function from other networks [8,11,12] to verify the role of the proposed components more systematically. The results are shown in Table 10.
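As a rough illustration of the kind of edge prior that network (A) removes, the sketch below extracts a gradient-magnitude edge map with the classical Sobel operator. Using Sobel here is an assumption made for illustration; this section does not specify which edge detection operators the DMFEF applies, and the function names are hypothetical.

```python
import numpy as np

# Classical 3x3 Sobel kernels for horizontal and vertical gradients.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=np.float64)
SOBEL_Y = SOBEL_X.T


def filter2d_same(img, kernel):
    """Cross-correlate a 2-D image with a 3x3 kernel, keeping the input size.

    Borders are handled by replicating the edge pixels.
    """
    img = np.asarray(img, dtype=np.float64)
    padded = np.pad(img, 1, mode="edge")
    out = np.zeros_like(img)
    h, w = img.shape
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def sobel_edge_map(img):
    """Gradient magnitude of a grayscale image from the two Sobel responses."""
    gx = filter2d_same(img, SOBEL_X)
    gy = filter2d_same(img, SOBEL_Y)
    return np.hypot(gx, gy)


if __name__ == "__main__":
    step = np.zeros((8, 8))
    step[:, 4:] = 1.0  # vertical step edge between columns 3 and 4
    print(sobel_edge_map(step)[0])
```

On the step image, the response is nonzero only in the two columns adjacent to the intensity jump, which is the kind of contour cue an edge-guided module can pass to later stages.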

Table 9.

Ablation studies of each module.

Table 10.

We define A→B to only represent the process of replacing module A with module B. The other modules are consistent with the structure of EAFormer. This table shows the indicators after module replacement.

The results of network (A) show that the performance of the model degrades without edge information as prior knowledge. This indicates that edge information is indeed an effective prior, and that a model using it can better capture the key structures in the image. Network (B) shows that our proposed BDCA adapts better to complex image features than ordinary channel attention, bringing a gain of 0.04 dB. Network (C) shows that the lack of adaptive frequency selection and modulation limits the flexibility and effectiveness of the model in dealing with different types of image degradation: removing the AFNM component leads to a decrease in the image restoration metrics.
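For context on the baseline used in network (B), the following is a minimal sketch of one common form of "ordinary" channel attention, a squeeze-and-excitation style gate. That this is the exact variant used in the ablation is an assumption, the weight shapes and reduction ratio are hypothetical, and the proposed BDCA itself is not reproduced here.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(features, w_reduce, w_expand):
    """Squeeze-and-excitation style channel attention.

    features: array of shape (C, H, W)
    w_reduce: array of shape (C // r, C), bottleneck projection
    w_expand: array of shape (C, C // r), projection back to C channels
    Returns the reweighted features and the per-channel weights.
    """
    squeezed = features.mean(axis=(1, 2))          # global average pool, (C,)
    hidden = np.maximum(w_reduce @ squeezed, 0.0)  # ReLU bottleneck
    weights = sigmoid(w_expand @ hidden)           # one gate in (0, 1) per channel
    return features * weights[:, None, None], weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    channels, reduction = 8, 4                     # hypothetical sizes
    feats = rng.normal(size=(channels, 16, 16))
    w1 = rng.normal(size=(channels // reduction, channels))
    w2 = rng.normal(size=(channels, channels // reduction))
    out, gates = channel_attention(feats, w1, w2)
    print(out.shape, gates.round(3))
```

Each channel is scaled by a single scalar derived from its global average, so this baseline cannot react to spatially varying structure within a channel.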

In Table 10, we first compare our AFNM with the AFLB module, which provides the frequency modulation function in [8]; our AFNM yields a 0.10 dB PSNR improvement. We also compare against the filter modules Adaptive Filter and ECB from [11,12]. When applied to EAFormer, these filters give weaker restoration performance than the DMFEF.

5. Limitations

We calculated the FLOPs and Params of each model for a uniform input image size of 256 × 256, and also calculated the PSNR on the Rain100L [38] dataset, as shown in Table 11. While our model achieves excellent performance across a variety of image restoration tasks, it also incurs a higher computational load and parameter count. However, for many practical applications, image restoration quality is more critical than processing speed. For example, in fields such as medical image restoration and digital restoration of cultural relics, accurately restoring image details to ensure reliability for subsequent analysis or preservation takes precedence over processing efficiency. For tasks where quality takes precedence over speed, the trade-off between performance and computational cost achieved by the current model is therefore acceptable. In the future, we will strive to further optimize the network architecture while maintaining the model’s current performance, identify and remove redundant structures, and explore more efficient lightweight designs to reduce module size. This will reduce computational overhead while preserving restoration quality, thereby improving the model’s practicality and generalization.

Table 11.

Comparison of the performance of different networks in terms of FLOPs and Params.
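To show how figures of this kind scale with input size, here is a minimal sketch that counts the parameters and multiply-accumulate operations (MACs) of a single standard convolution layer at a 256 × 256 output resolution. The layer configuration is hypothetical and does not correspond to any specific EAFormer layer; note also that profiling tools differ on whether one MAC is reported as one or two FLOPs, and this section does not state which convention Table 11 follows.

```python
def conv2d_params(c_in, c_out, kernel_size, bias=True):
    """Number of learnable parameters in a standard 2-D convolution."""
    return c_out * (c_in * kernel_size * kernel_size + (1 if bias else 0))


def conv2d_macs(c_in, c_out, kernel_size, out_h, out_w):
    """Multiply-accumulate operations for one forward pass (bias ignored)."""
    return c_in * kernel_size * kernel_size * c_out * out_h * out_w


if __name__ == "__main__":
    # Hypothetical layer: 3x3 convolution, 48 -> 48 channels, 256x256 output.
    params = conv2d_params(48, 48, 3)
    macs = conv2d_macs(48, 48, 3, 256, 256)
    print(f"params: {params:,}")          # 20,784
    print(f"MACs:   {macs / 1e9:.3f} G")  # 1.359 G
```

Parameter count is independent of resolution, whereas the operation count grows linearly with the number of output pixels, which is why FLOPs are always quoted for a fixed input size.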

In addition, the data in Table 4 show that the denoising performance of our model is relatively limited when facing Gaussian noise with . We speculate that under the influence of high-intensity Gaussian noise, the edge and texture information of the image may be masked by the noise, making it difficult for the edge detection module to accurately identify and extract edge information. Inaccurate edge information will directly affect the subsequent image processing steps. On the other hand, our adaptive frequency navigation module is highly dependent on the accurate analysis of the frequency components of the image. Under the influence of such strong noise, it is difficult for the module to distinguish between signals and noise in the high-frequency area, resulting in a decrease in the actual denoising performance.
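The speculation above can be illustrated with a small numerical experiment: white Gaussian noise spreads its energy across all frequencies, so as the noise level rises it increasingly dominates the high-frequency band where edges and textures live. The sketch below is only an illustration under assumed settings (a synthetic low-frequency image, a radial cutoff of 0.25 cycles per pixel, and noise levels of 15 and 50 on a 0-255 scale); it does not reproduce the AFNM.

```python
import numpy as np


def high_frequency_energy_fraction(img, cutoff=0.25):
    """Fraction of non-DC spectral energy above a radial frequency cutoff.

    Frequencies are in cycles per pixel, so they lie in [-0.5, 0.5).
    """
    img = np.asarray(img, dtype=np.float64)
    spectrum = np.fft.fftshift(np.fft.fft2(img - img.mean()))
    power = np.abs(spectrum) ** 2
    h, w = img.shape
    fy = np.fft.fftshift(np.fft.fftfreq(h))[:, None]
    fx = np.fft.fftshift(np.fft.fftfreq(w))[None, :]
    radius = np.sqrt(fx ** 2 + fy ** 2)
    total = power.sum()
    if total == 0:
        return 0.0
    return power[radius > cutoff].sum() / total


if __name__ == "__main__":
    n = 64
    x = np.arange(n)
    # Synthetic smooth image: 4 horizontal cycles across the width.
    clean = 0.5 + 0.25 * np.sin(2 * np.pi * 4 * x / n)[None, :] * np.ones((n, 1))
    noise = np.random.default_rng(0).normal(size=(n, n))
    for sigma in (0, 15, 50):  # hypothetical noise levels on a 0-255 scale
        noisy = clean + (sigma / 255.0) * noise
        frac = high_frequency_energy_fraction(noisy)
        print(f"sigma={sigma:2d}: high-frequency energy fraction = {frac:.3f}")
```

In this toy setting the clean image has essentially no energy above the cutoff, so whatever appears there is noise, and a module that relies on high-frequency content has less genuine signal to work with as the noise level grows.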

6. Conclusions

This paper proposes a general image restoration model with a multi-stage encoder-decoder architecture that performs well in image deraining, deblurring, denoising, desnowing, and low-light enhancement tasks. Our method introduces edge information to guide the subsequent decomposition of images into high-frequency and low-frequency components, which makes up for the shortcomings of traditional methods in edge preservation. When processing images with complex textures or blurred boundaries, it accurately extracts edge features to ensure that the restored image has clear contours and natural textures. At the same time, the adaptive frequency navigation module we designed can adaptively filter and modulate high-frequency and low-frequency boundaries according to the type of image degradation, providing customized restoration strategies for diverse degraded images. Whether it is a low-light enhancement task that focuses on low-frequency information or a deraining or deblurring task that relies on mid- and high-frequency differential restoration, this module can adjust the frequency response accurately, enhance key structural information, and suppress noise and artifact interference to a certain extent, thereby ensuring that the restored image has excellent visual coherence and realism. For example, it can highlight the contours and details of the subject in low-light image enhancement, and restore scene clarity and color saturation in rain removal. Finally, we rigorously verified the effectiveness of EAFormer on 16 datasets across five restoration tasks. Although EAFormer performs well in multiple restoration tasks, we found that its denoising performance still hits a bottleneck under high-intensity noise. Despite these shortcomings, EAFormer has significant advantages in the field of cultural relic image restoration: it can accurately restore the delicate texture of cultural relics and enhance the clarity of structural details. In the future, we will strive to reduce the computational load while maintaining restoration accuracy, and to create an efficient model suited to resource-constrained edge computing scenarios.

Author Contributions

Conceptualization, D.Z.; methodology, W.X.; software, W.X.; validation, W.X., W.W. and W.Z.; formal analysis, D.Z.; investigation, W.X.; resources, D.Z.; data curation, W.W.; writing—original draft preparation, W.X.; writing—review and editing, D.Z.; visualization, W.X.; supervision, D.Z.; project administration, W.X.; funding acquisition, D.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Our code is available at https://github.com/shdjak/xwj-EAFormer (accessed on 11 September 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Kupyn, O.; Budzan, V.; Mykhailych, M.; Mishkin, D.; Matas, J. Deblurgan: Blind motion deblurring using conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8183–8192.

2. Tao, X.; Gao, H.; Shen, X.; Wang, J.; Jia, J. Scale-recurrent network for deep image deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8174–8182.

3. Wang, X.; Chen, H.; Gou, H.; He, J.; Wang, Z.; He, X.; Qing, L.; Sheriff, R.E. RestorNet: An efficient network for multiple degradation image restoration. Knowl.-Based Syst. 2023, 282, 111116.

4. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H.; Shao, L. Multi-stage progressive image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14821–14831.

5. Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a gaussian denoiser: Residual learning of deep cnn for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

6. Quan, Y.; Yao, X.; Ji, H. Single image defocus deblurring via implicit neural inverse kernels. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 12600–12610.

7. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

8. Cui, Y.; Zamir, S.W.; Khan, S.; Knoll, A.; Shah, M.; Khan, F.S. AdaIR: Adaptive All-in-One Image Restoration via Frequency Mining and Modulation. arXiv 2024, arXiv:2403.14614.

9. Cui, Y.; Tao, Y.; Bing, Z.; Ren, W.; Gao, X.; Cao, X.; Huang, K.; Knoll, A. Selective frequency network for image restoration. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

10. Cui, Y.; Ren, W.; Cao, X.; Knoll, A. Image restoration via frequency selection. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 46, 1093–1108.

11. Fan, D.; Yue, T.; Zhao, X.; Chang, L. LIR: A Lightweight Baseline for Image Restoration. arXiv 2024, arXiv:2402.01368.

12. Zhang, X.; Zeng, H.; Zhang, L. Edge-oriented convolution block for real-time super resolution on mobile devices. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 4034–4043.

13. Cui, Y.; Ren, W.; Knoll, A. Omni-Kernel Network for Image Restoration. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 26–27 February 2024; Volume 38, pp. 1426–1434.

14. Guo, H.; Li, J.; Dai, T.; Ouyang, Z.; Ren, X.; Xia, S.T. Mambair: A simple baseline for image restoration with state-space model. In Proceedings of the 18th European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2025; pp. 222–241.

15. Cui, Y.; Ren, W.; Cao, X.; Knoll, A. Focal network for image restoration. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 13001–13011.

16. Khudjaev, N.; Tsoy, R.; A Sharif, S.; Myrzabekov, A.; Kim, S.; Lee, J. Dformer: Learning Efficient Image Restoration with Perceptual Guidance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 6363–6372.

17. Chen, W.T.; Fang, H.Y.; Hsieh, C.L.; Tsai, C.C.; Chen, I.; Ding, J.J.; Kuo, S.Y. All snow removed: Single image desnowing algorithm using hierarchical dual-tree complex wavelet representation and contradict channel loss. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4196–4205.

18. Zhang, H.; Dai, Y.; Li, H.; Koniusz, P. Deep stacked hierarchical multi-patch network for image deblurring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5978–5986.

19. Yasarla, R.; Patel, V.M. Uncertainty guided multi-scale residual learning-using a cycle spinning cnn for single image de-raining. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8405–8414.

20. Zhang, K.; Zuo, W.; Zhang, L. FFDNet: Toward a fast and flexible solution for CNN-based image denoising. IEEE Trans. Image Process. 2018, 27, 4608–4622.

21. Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a deep convolutional network for image super-resolution. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part IV 13; Springer: Cham, Switzerland, 2014; pp. 184–199.

22. Dong, C.; Deng, Y.; Loy, C.C.; Tang, X. Compression artifacts reduction by a deep convolutional network. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 576–584.

23. Zhang, K.; Li, Y.; Zuo, W.; Zhang, L.; Van Gool, L.; Timofte, R. Plug-and-play image restoration with deep denoiser prior. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6360–6376.

24. Tai, Y.; Yang, J.; Liu, X.; Xu, C. Memnet: A persistent memory network for image restoration. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4539–4547.

25. Zhang, Y.; Dong, L.; Yang, H.; Qing, L.; He, X.; Chen, H. Weakly-supervised contrastive learning-based implicit degradation modeling for blind image super-resolution. Knowl.-Based Syst. 2022, 249, 108984.

26. Zhao, Q.; Wang, H.; Yue, Z.; Meng, D. A deep variational Bayesian framework for blind image deblurring. Knowl.-Based Syst. 2022, 249, 109008.

27. Song, T.; Li, L.; Wu, J.; Dong, W.; Cheng, D. Quality-aware blind image motion deblurring. Pattern Recognit. 2024, 153, 110568.

28. Cho, S.J.; Ji, S.W.; Hong, J.P.; Jung, S.W.; Ko, S.J. Rethinking coarse-to-fine approach in single image deblurring. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4641–4650.

29. Wang, Z.; Cun, X.; Bao, J.; Zhou, W.; Liu, J.; Li, H. Uformer: A general u-shaped transformer for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17683–17693.

30. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. Swinir: Image restoration using swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1833–1844.

31. Shi, Y.; Huang, Z.; Chen, J.; Ma, L.; Wang, L.; Hua, X.; Hong, H. DDABNet: A dense Do-conv residual network with multisupervision and mixed attention for image deblurring. Appl. Intell. 2023, 53, 30911–30926.

32. Cheng, J.; Liang, D.; Tan, S. Transfer CLIP for Generalizable Image Denoising. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–24 June 2024; pp. 25974–25984.

33. Li, Y.; Fan, Y.; Xiang, X.; Demandolx, D.; Ranjan, R.; Timofte, R.; Van Gool, L. Efficient and explicit modelling of image hierarchies for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 18278–18289.

34. Cai, Y.; Bian, H.; Lin, J.; Wang, H.; Timofte, R.; Zhang, Y. Retinexformer: One-stage retinex-based transformer for low-light image enhancement. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 12504–12513.

35. Wang, C.; Shen, H.Z.; Fan, F.; Shao, M.W.; Yang, C.S.; Luo, J.C.; Deng, L.J. EAA-Net: A novel edge assisted attention network for single image dehazing. Knowl.-Based Syst. 2021, 228, 107279.

36. Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple baselines for image restoration. In Proceedings of the 17th European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 17–33.

37. Zhang, H.; Sindagi, V.; Patel, V.M. Image de-raining using a conditional generative adversarial network. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 3943–3956.

38. Yang, W.; Tan, R.T.; Feng, J.; Liu, J.; Guo, Z.; Yan, S. Deep joint rain detection and removal from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1357–1366.

39. Zhang, H.; Patel, V.M. Density-aware single image de-raining using a multi-stream dense network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 695–704.

40. Li, L.; Song, S.; Lv, M.; Jia, Z.; Ma, H. Multi-Focus Image Fusion Based on Fractal Dimension and Parameter Adaptive Unit-Linking Dual-Channel PCNN in Curvelet Transform Domain. Fractal Fract. 2025, 9, 157.

41. Cao, Z.-H.; Liang, Y.-J.; Deng, L.-J.; Vivone, G. An Efficient Image Fusion Network Exploiting Unifying Language and Mask Guidance. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 1–18.

42. Zhan, C.; Wang, C.; Lu, B.; Yang, W.; Zhang, X.; Wang, G. NGSTGAN: N-Gram Swin Transformer and Multi-Attention U-Net Discriminator for Efficient Multi-Spectral Remote Sensing Image Super-Resolution. Remote Sens. 2025, 17, 2079.

43. Fu, X.; Huang, J.; Zeng, D.; Huang, Y.; Ding, X.; Paisley, J. Removing rain from single images via a deep detail network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3855–3863.

44. Nah, S.; Kim, T.H.; Lee, K.M. Deep multi-scale convolutional neural network for dynamic scene deblurring. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3883–3891.

45. Shen, Z.; Wang, W.; Lu, X.; Shen, J.; Ling, H.; Xu, T.; Shao, L. Human-aware motion deblurring. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5572–5581.

46. Rim, J.; Lee, H.; Won, J.; Cho, S. Real-world blur dataset for learning and benchmarking deblurring algorithms. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXV 16. Springer: Cham, Switzerland, 2020; pp. 184–201.

47. Abuolaim, A.; Brown, M.S. Defocus deblurring using dual-pixel data. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part X 16. Springer: Cham, Switzerland, 2020; pp. 111–126.

48. Chu, X.; Chen, L.; Chen, C.; Lu, X. Improving image restoration by revisiting global information aggregation. In Proceedings of the 17th European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 53–71.

49. Tsai, F.J.; Peng, Y.T.; Lin, Y.Y.; Tsai, C.C.; Lin, C.W. Stripformer: Strip transformer for fast image deblurring. In Proceedings of the 17th European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 146–162.

50. Mao, X.; Liu, Y.; Shen, W.; Li, Q.; Wang, Y. Deep residual fourier transformation for single image deblurring. arXiv 2021, arXiv:2111.11745.

51. Lee, J.; Son, H.; Rim, J.; Cho, S.; Lee, S. Iterative filter adaptive network for single image defocus deblurring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 2034–2042.

52. Ruan, L.; Chen, B.; Li, J.; Lam, M. Learning to deblur using light field generated and real defocus images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16304–16313.

53. Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision, ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 416–423.

54. Abdelhamed, A.; Lin, S.; Brown, M.S. A high-quality denoising dataset for smartphone cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1692–1700.

55. Huang, J.B.; Singh, A.; Ahuja, N. Single image super-resolution from transformed self-exemplars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5197–5206.

56. Franzen, R. Kodak Lossless True Color Image Suite. 15 November 1999. Available online: http://r0k.us/graphics/kodak (accessed on 7 July 2025).

57. Zhang, L.; Wu, X.; Buades, A.; Li, X. Color demosaicking by local directional interpolation and nonlocal adaptive thresholding. J. Electron. Imaging 2011, 20, 023016.

58. Cui, Y.; Knoll, A. Dual-domain strip attention for image restoration. Neural Netw. 2024, 171, 429–439.

59. Cui, Y.; Ren, W.; Cao, X.; Knoll, A. Revitalizing convolutional network for image restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9423–9438.

60. Chen, S.; Ye, T.; Liu, Y.; Chen, E. SnowFormer: Context interaction transformer with scale-awareness for single image desnowing. arXiv 2022, arXiv:2208.09703.

61. Zhang, K.; Li, R.; Yu, Y.; Luo, W.; Li, C. Deep dense multi-scale network for snow removal using semantic and depth priors. IEEE Trans. Image Process. 2021, 30, 7419–7431.

62. Chen, W.T.; Huang, Z.K.; Tsai, C.C.; Yang, H.H.; Ding, J.J.; Kuo, S.Y. Learning multiple adverse weather removal via two-stage knowledge learning and multi-contrastive regularization: Toward a unified model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17653–17662.

63. Fu, Z.; Yang, Y.; Tu, X.; Huang, Y.; Ding, X.; Ma, K.K. Learning a simple low-light image enhancer from paired low-light instances. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22252–22261.

64. Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

65. Yang, W.; Wang, W.; Huang, H.; Wang, S.; Liu, J. Sparse gradient regularized deep retinex network for robust low-light image enhancement. IEEE Trans. Image Process. 2021, 30, 2072–2086.

66. Zhang, Y.; Zhang, J.; Guo, X. Kindling the darkness: A practical low-light image enhancer. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1632–1640.

67. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H.; Shao, L. Learning enriched features for real image restoration and enhancement. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXV 16. Springer: Cham, Switzerland, 2020; pp. 492–511.

68. Ma, L.; Ma, T.; Liu, R.; Fan, X.; Luo, Z. Toward fast, flexible, and robust low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5637–5646.

69. Wu, W.; Weng, J.; Zhang, P.; Wang, X.; Yang, W.; Jiang, J. Uretinex-net: Retinex-based deep unfolding network for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5901–5910.

70. Chen, X.; Li, Z.; Pu, Y.; Liu, Y.; Zhou, J.; Qiao, Y.; Dong, C. A comparative study of image restoration networks for general backbone network design. In Proceedings of the 18th European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2025; pp. 74–91.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).