Multimodal Large Language Model-Enabled Machine Intelligent Fault Diagnosis Method with Non-Contact Dynamic Vision Data

Abstract

1. Introduction

- (1) Sensing Paradigm and Deployment: Traditional methods require sensors to be in physical contact with the equipment under test. This makes installation cumbersome, maintenance costly, and deployment in hard-to-reach areas extremely challenging. The added sensor mass may even alter the system’s own dynamic characteristics (the mass-loading effect). In contrast, dynamic vision technology, represented by event cameras, offers a completely non-contact solution. It enables remote sensing from a safe distance, which simplifies deployment and keeps the measurement non-intrusive [6].

- (2) Data Fidelity and Information Dimension: Traditional accelerometers are limited by their sampling frequency. This often makes them inadequate for capturing the high-frequency transient signals caused by incipient faults and can lead to signal aliasing or information loss. Dynamic vision sensors, especially event cameras, offer microsecond-level temporal resolution. Their event-driven mechanism captures these high-speed transient processes while ignoring the static background, so key fault features are acquired with extremely low data redundancy [7].

- (3) Environmental Adaptability and Interference Immunity: Strong electromagnetic environments in industrial sites pose a severe challenge for traditional electronic sensors, because electromagnetic interference (EMI) often overwhelms weak fault signals. As an optical measurement technique, dynamic vision is inherently immune to such interference. Furthermore, its high dynamic range (HDR) allows it to operate stably under extreme or changing lighting conditions. Together, these properties give it strong environmental robustness and signal reliability [8].

- (4) Monitoring Scope and Diagnostic Efficiency: Traditional methods are essentially based on point sensing, where each sensor provides information from only a single location. To obtain the complete vibration pattern of a device, an expensive and complex sensor array must be deployed. In contrast, a single dynamic vision sensor can cover a wide field of view, enabling synchronous, full-field monitoring of multiple components or even an entire surface. This leap from point monitoring to surface monitoring significantly improves efficiency. It also provides rich spatial information for comprehensive diagnostics such as operational deflection shape analysis [9].

2. Materials and Methods

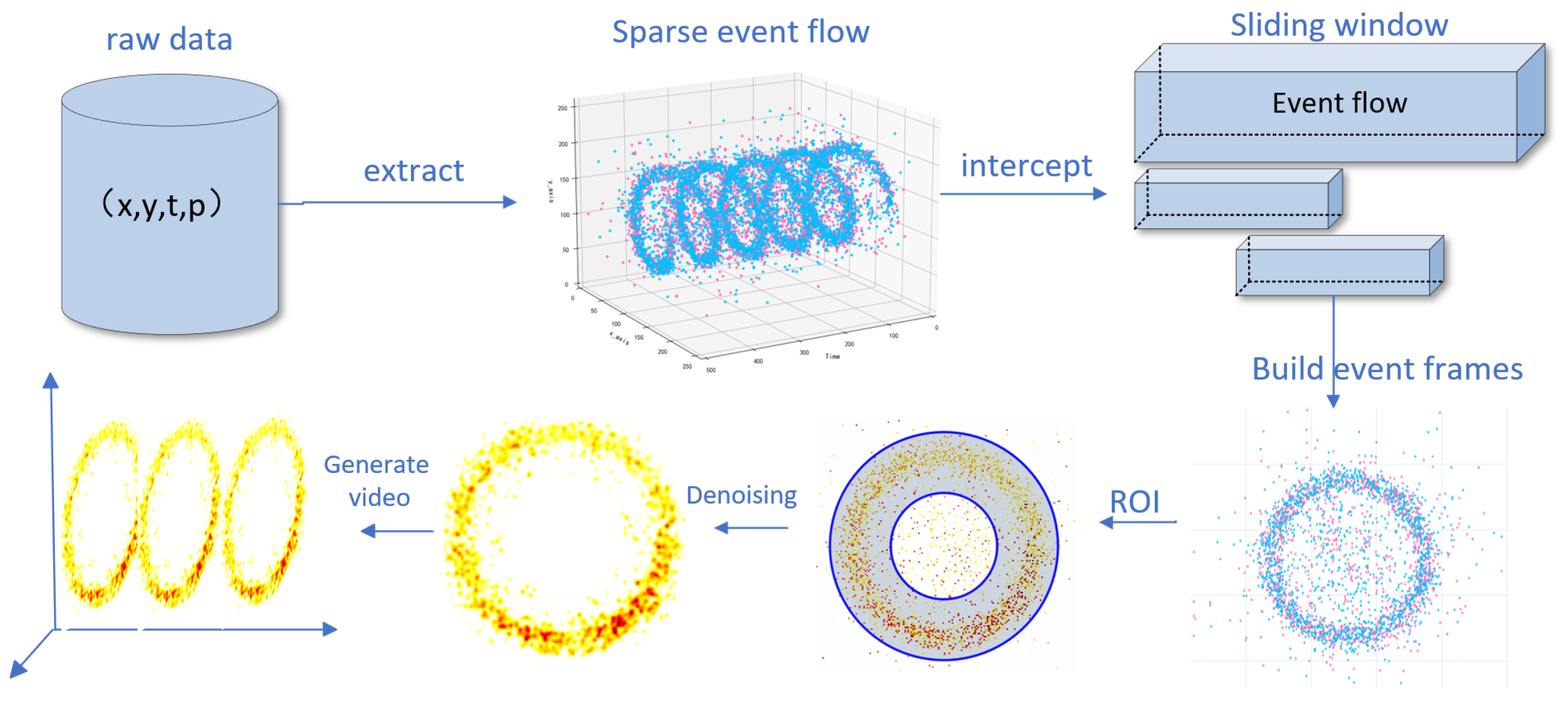

2.1. From Event Camera Raw Data to Training-Ready Video

2.1.1. Time Surface Representation

- (1) Timestamp Normalization: To eliminate the influence of absolute timestamps and to focus on the relative recency of events within the current time window, we first linearly normalize the timestamps of all events within the window to the interval $[0, 1]$:

  $$\tilde{t}_i = \frac{t_i - t_{\mathrm{start}}}{t_{\mathrm{end}} - t_{\mathrm{start}}}$$

  where $t_i$ is the original timestamp of event $e_i$, while $t_{\mathrm{start}}$ and $t_{\mathrm{end}}$ are the start and end times of the current time window $W$, respectively. The variable $\tilde{t}_i$ represents the normalized timestamp.

- (2) Exponential Decay Kernel: The contribution of each event to the time surface is defined by an exponential decay kernel function. This function assigns a higher weight to more recent events, thereby creating a “brighter” trace on the surface. Its mathematical expression is:

  $$w_i = \exp\left(-\frac{1 - \tilde{t}_i}{\tau}\right)$$

  where $w_i$ is the weight of event $e_i$, $\tilde{t}_i$ is its normalized timestamp, and $\tau$ is a predefined time decay constant that controls the “memory” duration of the event history.

- (3) Surface Update with Polarity Separation: Based on the polarity of each event, the corresponding surface of active events (SAE) is updated at the pixel location $(x_i, y_i)$ by taking the historical maximum value. This operation ensures that, at the same pixel location, only the event with the highest weight (i.e., the most recent one) can refresh the surface value, so the surface records the “trace” of the last activation at each pixel. The update rule is as follows [14]:

  $$S_p(x_i, y_i) = \max\left(S_p^{\mathrm{prev}}(x_i, y_i),\; w_i\right)$$

  where $S_p$ represents the time surface for polarity $p$ ($S_{+}$ is the positive-polarity surface, and $S_{-}$ is the negative-polarity surface), $w_i$ is the decay-kernel weight of event $e_i$, and $S_p^{\mathrm{prev}}(x_i, y_i)$ is the value of that pixel before the update.

- (4) Final Surface Normalization: After iterating through all events within a time window, we perform a min–max normalization on the generated positive and negative time surfaces separately. This operation enhances the contrast of the SAE frames and standardizes their value range for subsequent processing by linearly scaling the pixel values of each surface to the interval $[0, 1]$. For any given time surface $S$, the normalized surface $\hat{S}$ is calculated as follows [15]:

  $$\hat{S}(x, y) = \frac{S(x, y) - \min(S)}{\max(S) - \min(S)}$$

  This step is executed only if the surface contains valid events (i.e., $\max(S) > 0$).
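The four steps above can be sketched in a few lines of dependency-free Python. This is a minimal illustration, not the authors' implementation: it assumes events arrive as `(x, y, t, p)` tuples with polarity encoded as `+1`/`-1`, that timestamps are normalized to `[0, 1]`, that the kernel has the form `exp(-(1 - t_norm) / tau)`, and that `tau = 0.3` is a reasonable placeholder value. The function names are hypothetical.

```python
import math


def min_max_normalize(surface):
    """Step 4: linearly rescale a surface to [0, 1]; skip surfaces without events."""
    values = [v for row in surface for v in row]
    lo, hi = min(values), max(values)
    if hi <= 0.0 or hi == lo:  # no valid events, or a constant surface
        return surface
    return [[(v - lo) / (hi - lo) for v in row] for row in surface]


def build_time_surfaces(events, width, height, t_start, t_end, tau=0.3):
    """Return {+1: surface, -1: surface} for one time window (t_end > t_start).

    events: iterable of (x, y, t, p) tuples, p in {+1, -1} (assumed encoding).
    tau: decay constant in normalized time units (assumed value).
    """
    surfaces = {
        1: [[0.0] * width for _ in range(height)],
        -1: [[0.0] * width for _ in range(height)],
    }
    span = t_end - t_start
    for x, y, t, p in events:
        if not (t_start <= t <= t_end):
            continue
        t_norm = (t - t_start) / span        # step 1: map the timestamp to [0, 1]
        w = math.exp(-(1.0 - t_norm) / tau)  # step 2: recent events weigh more
        surf = surfaces[p]
        surf[y][x] = max(surf[y][x], w)      # step 3: keep the historical maximum
    return {p: min_max_normalize(s) for p, s in surfaces.items()}


if __name__ == "__main__":
    demo = [(0, 0, 0.0, 1), (1, 0, 10.0, 1), (0, 0, 5.0, 1), (1, 1, 10.0, -1)]
    for polarity, surface in build_time_surfaces(demo, 2, 2, 0.0, 10.0).items():
        print(polarity, surface)
```

Because the weight grows monotonically with the timestamp, taking the maximum at a pixel is equivalent to keeping the most recent event there, which is the behavior step (3) describes.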

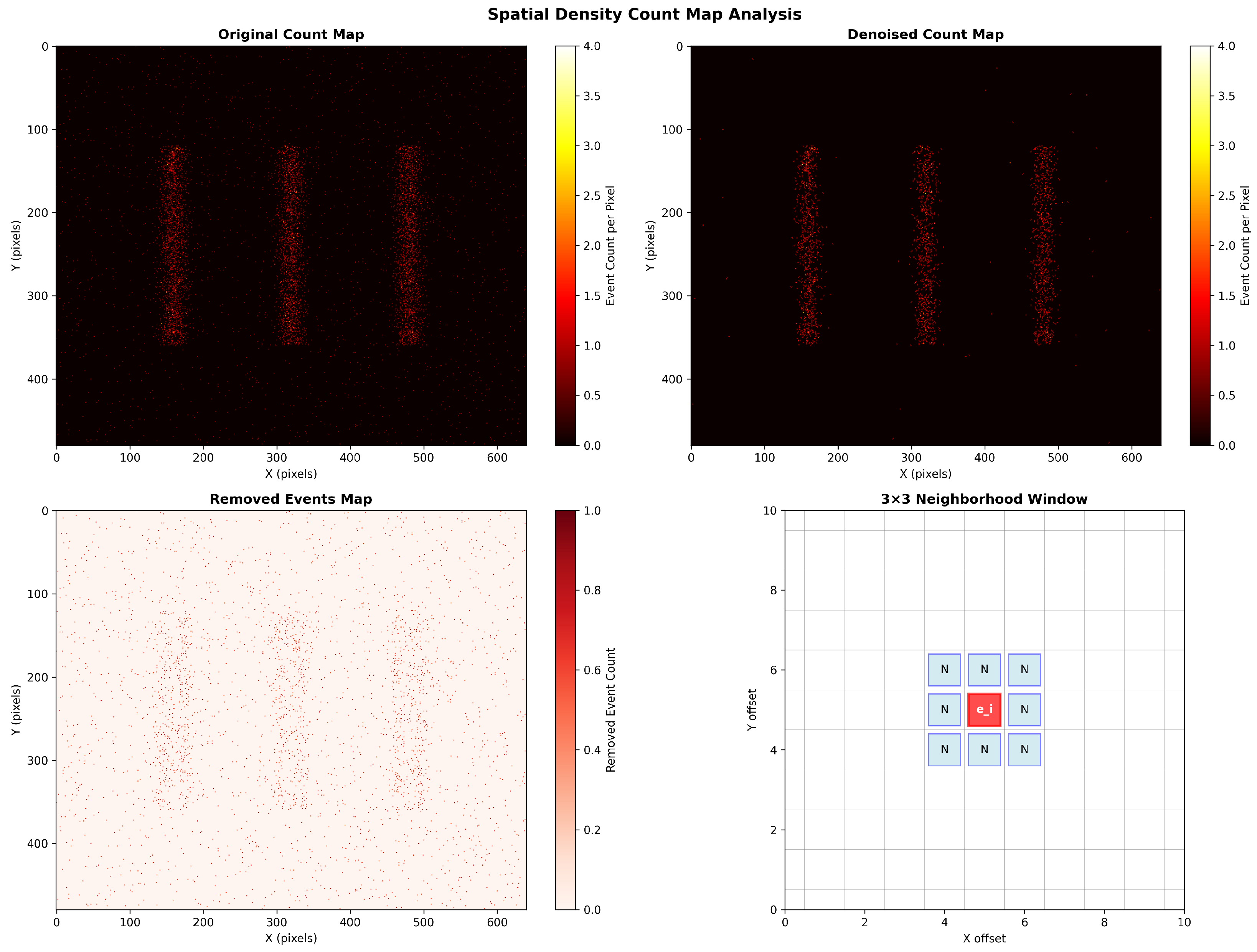

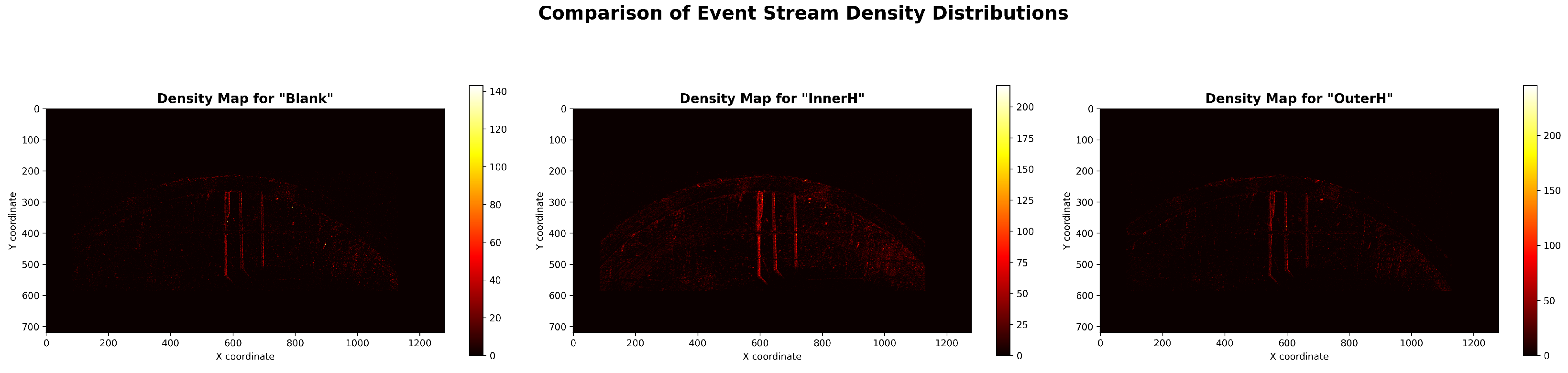

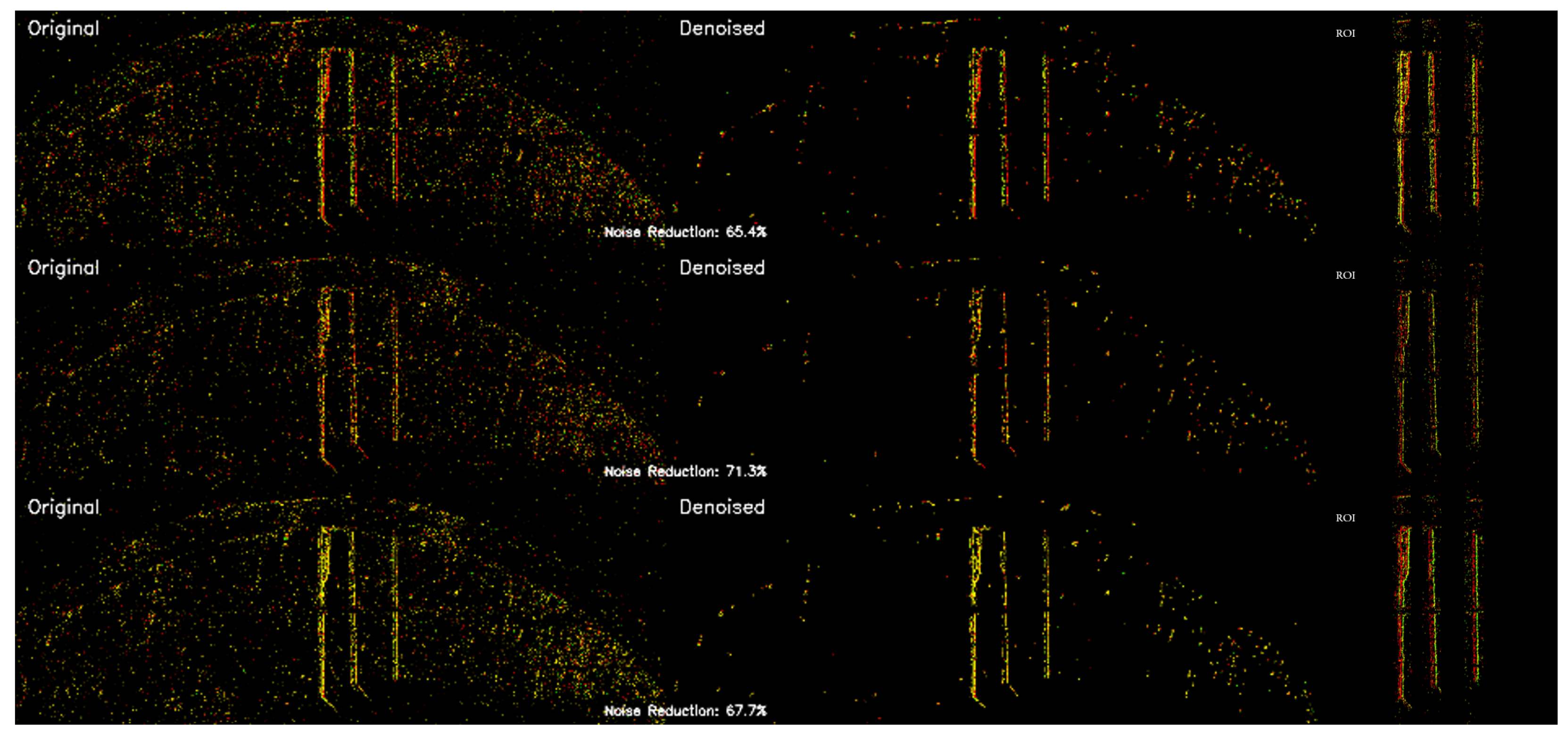

2.1.2. Spatial Density-Based Denoising

2.1.3. ROI Cropping

- (1) Coarse ROI Determination via Event Density Statistics: The objective of this stage is to automatically localize the macroscopic region containing the key bearing structures in a data-driven manner. This process operates directly on the entire raw event stream over a period of time and is based on a 1D projection analysis of event density [18]. First, we construct the event density histograms, $H_x$ and $H_y$, by projecting all events onto the x- and y-axes, respectively:

  $$H_x(u) = \sum_{i=1}^{N} \delta(x_i, u), \qquad H_y(v) = \sum_{i=1}^{N} \delta(y_i, v)$$

  where $\delta(\cdot, \cdot)$ is the Kronecker delta function and $N$ is the total number of events.

  Subsequently, on the x-axis density histogram, we perform a convolution with a 3-point uniform averaging kernel, $k = \left[\tfrac{1}{3}, \tfrac{1}{3}, \tfrac{1}{3}\right]$, to obtain a smoothed density curve, $\tilde{H}_x = H_x * k$. A multi-constraint peak detection algorithm is then applied to robustly identify the peak locations $P$ corresponding to the three core vertical lines of the bearing:

  $$P = \mathrm{FindPeaks}\left(\tilde{H}_x;\; h_{\min},\, d_{\min},\, p_{\min}\right)$$

  where $h_{\min}$, $d_{\min}$, and $p_{\min}$ are the thresholds for height, distance, and prominence, respectively.

  Concurrently, the vertical boundaries of the effective event area, $y_{\min}$ and $y_{\max}$, are determined from the y-axis density histogram using an adaptive threshold $\theta_y$. A similar thresholding strategy is applied locally for each detected x-axis peak to determine its precise left and right boundaries. Through this automated analysis, we can ascertain the optimal ROI parameters for data under various operating conditions, which are then used for the initial coarse filtering of the raw sparse event stream.

- (2) Content-based Dynamic Fine-Tuning: Following the Stage 1 filtering and the subsequent generation of time surface (SAE) frames, we execute a second stage of dynamic cropping to ensure the final output video frames are as compact as possible. This stage operates on the generated SAE frame sequence, $\{F_t\}_{t=1}^{T}$. We obtain an aggregated activity map, $A$, by projecting the SAE sequence along the time axis:

  $$A(x, y) = \sum_{t=1}^{T} \mathbb{1}\left(F_t(x, y) > \epsilon\right)$$

  where $\mathbb{1}(\cdot)$ is the indicator function and $\epsilon$ is an activity threshold. Then, based on this aggregated map, a minimal bounding box that encloses all non-zero pixels is calculated, with an additional small padding parameter $\Delta_{\mathrm{pad}}$. Finally, every frame in the sequence is uniformly cropped according to this bounding box and resized to the final target dimensions.
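The two cropping stages can be illustrated with a small, dependency-free sketch. It is written under several assumptions: the helper names are hypothetical, the peak detector is a simplified stand-in that applies only the height and distance constraints (the prominence constraint is omitted), and frames are plain nested lists. In practice a library routine such as `scipy.signal.find_peaks`, which accepts `height`, `distance`, and `prominence` arguments, could take its place; the text does not state which implementation the authors used.

```python
def density_histogram(coords, size):
    """Stage 1: count events per coordinate value (1D projection of the stream)."""
    hist = [0] * size
    for c in coords:
        hist[c] += 1
    return hist


def smooth3(hist):
    """3-point uniform moving average, zero-padded at both borders."""
    padded = [0] + list(hist) + [0]
    return [(padded[i] + padded[i + 1] + padded[i + 2]) / 3.0
            for i in range(len(hist))]


def find_peaks_simple(curve, min_height, min_distance):
    """Local maxima above min_height, thinned so kept peaks are min_distance apart.

    Simplified stand-in: taller peaks win; prominence is not checked.
    """
    candidates = [
        i for i in range(1, len(curve) - 1)
        if curve[i] >= min_height
        and curve[i] > curve[i - 1]
        and curve[i] >= curve[i + 1]
    ]
    kept = []
    for i in sorted(candidates, key=lambda idx: curve[idx], reverse=True):
        if all(abs(i - j) >= min_distance for j in kept):
            kept.append(i)
    return sorted(kept)


def active_bounding_box(frames, threshold, pad):
    """Stage 2: inclusive box (x0, y0, x1, y1) around pixels active in any frame."""
    height, width = len(frames[0]), len(frames[0][0])
    xs, ys = [], []
    for y in range(height):
        for x in range(width):
            # Equivalent to a non-zero entry in the aggregated activity map.
            if any(frame[y][x] > threshold for frame in frames):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (max(min(xs) - pad, 0), max(min(ys) - pad, 0),
            min(max(xs) + pad, width - 1), min(max(ys) + pad, height - 1))


if __name__ == "__main__":
    x_coords = [1, 2, 2, 2, 3, 6, 7, 7, 7, 7, 8, 11, 12, 12, 12, 13]
    curve = smooth3(density_histogram(x_coords, 15))
    print(find_peaks_simple(curve, min_height=1.5, min_distance=3))
```

Clamping the padded box to the frame borders keeps the crop valid when activity touches an edge.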

2.2. Construction of the Fine-Tuning Dataset

2.2.1. Dataset Format Specification

2.2.2. Synonymous Instruction Augmentation

2.2.3. Template-Based Parametric Instruction Generation

- (1) Random Template Sampling: A template is sampled uniformly at random from the library T.

- (2) Parametric Instantiation: The specific parameter value of the sample (for example, the 5 Hz rotation frequency used in the instructions listed here) is inserted into the placeholder(s) of the sampled template to generate the final instruction text.

- “At 5 Hz, use the video to determine if the bearing is in the innerH, outerH, or normal state.”

- “With the video at 5 Hz rotation, classify the bearing as innerH, outerH, or normal.”

- “From this video at 5 Hz, please indicate: is the bearing innerH, outerH, or normal?”

- “Examine the video at 5 Hz and specify: innerH, outerH, or normal.”
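A minimal sketch of the two-step generation procedure follows. The template strings mirror the four examples above; the `{freq}` placeholder name, the `make_record` helper, the file path, and the choice to store `videos` as a list are illustrative assumptions. The record keys follow the four fields of the dataset format specification (`instruction`, `input`, `output`, `videos`).

```python
import json
import random

# Hypothetical template library T, built from the four example instructions.
TEMPLATES = [
    "At {freq} Hz, use the video to determine if the bearing is in the "
    "innerH, outerH, or normal state.",
    "With the video at {freq} Hz rotation, classify the bearing as innerH, "
    "outerH, or normal.",
    "From this video at {freq} Hz, please indicate: is the bearing innerH, "
    "outerH, or normal?",
    "Examine the video at {freq} Hz and specify: innerH, outerH, or normal.",
]


def make_record(video_path, freq_hz, label, rng=random):
    """Build one fine-tuning sample from a video, its rotation frequency, and label."""
    template = rng.choice(TEMPLATES)             # step 1: uniform random sampling
    instruction = template.format(freq=freq_hz)  # step 2: parametric instantiation
    return {
        "instruction": instruction,
        "input": "",            # unused, per the dataset format specification
        "output": label,
        "videos": [video_path],
    }


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the sketch is reproducible
    record = make_record("videos/sample_0001.mp4", 5, "innerH", rng)
    print(json.dumps(record, indent=2))
```

Passing a seeded `random.Random` instance keeps the sampled wording reproducible across dataset builds.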

2.2.4. Enhancing Model Generalization Through Instruction Augmentation

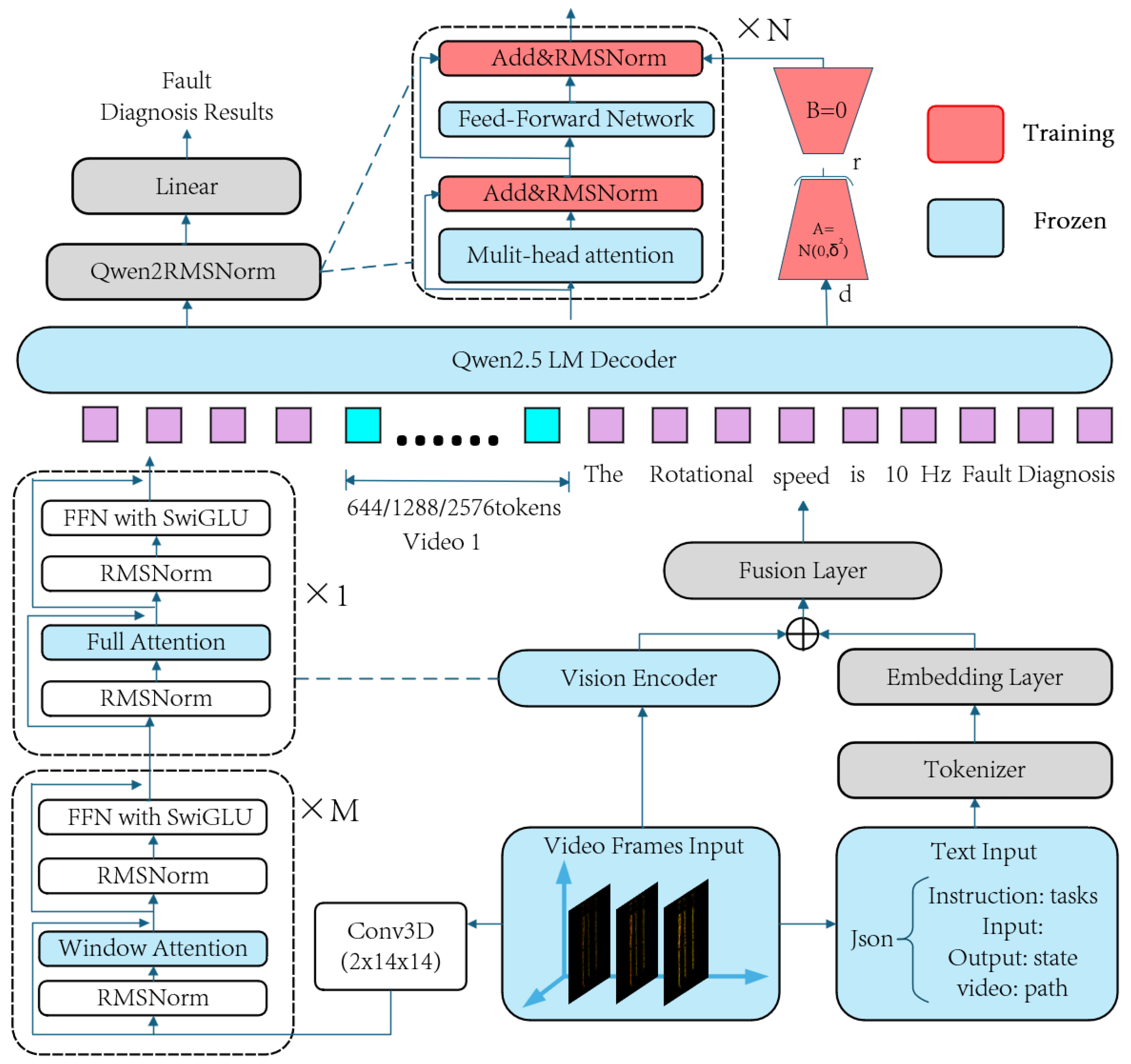

2.3. Lightweight Video Fine-Tuning of Qwen2.5-VL

- (1) Multimodal Feature Encoding: We encode the features from different modalities.

  - For video spatiotemporal features, the video sequence is processed by a Conv3D module to capture local features. It is then fed into a hierarchical vision transformer encoder, composed of M window attention modules and one full attention module, to extract dynamic visual feature vectors (video tokens) that span from local to global contexts.

  - For text instructions, the JSON-formatted text is vectorized using a standard tokenizer and an embedding layer.

- (2) Cross-Modal Feature Fusion: This framework adopts an additive fusion strategy. The feature vectors from both video and text are combined through vector addition (⊕) and then passed through a Fusion Layer for non-linear transformation. This process generates a unified multimodal input sequence for the language model decoder.

- (3) LoRA-based Decoder Fine-Tuning: This is the core of our PEFT methodology. During fine-tuning, the original weight matrices within the Qwen2.5 LM decoder (primarily in the multi-head attention and feed-forward network layers) are kept frozen. We employ the low-rank adaptation (LoRA) technique, which injects a trainable bypass path composed of two low-rank matrices, $A$ and $B$, in parallel with each frozen matrix $W$. The model’s update, $\Delta W$, is approximated by the product $BA$, such that the new output is $h = Wx + BAx$. For initialization, matrix $A$ follows a Gaussian distribution $\mathcal{N}(0, \sigma^2)$, while matrix $B$ is initialized to zero, so that $\Delta W = BA = 0$ and the model starts fine-tuning from the pretrained behavior [26,27].

- (4) Task-Specific Output Layer: The feature vectors output by the decoder are normalized by a Qwen2RMSNorm layer and then fed into a trainable Linear output head, which maps them to the final fault diagnosis results.
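The LoRA bypass in step (3) can be illustrated with a toy, dependency-free layer. This is a sketch under stated assumptions rather than the training code: the class name `LoRALinear` is hypothetical, `sigma = 0.01` is a placeholder, and the `alpha / rank` scaling follows the convention of the original LoRA paper, which the text does not spell out. The hyperparameter table lists a rank of 8 and a scaling factor of 16; the demo uses tiny matrices for readability.

```python
import random


def matvec(matrix, vector):
    """Multiply a nested-list matrix by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a trainable low-rank bypass B @ A."""

    def __init__(self, weight, rank, alpha, sigma=0.01, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight  # frozen: never updated during fine-tuning
        # A (rank x d_in) is drawn from N(0, sigma^2); B (d_out x rank) starts at zero.
        self.a = [[rng.gauss(0.0, sigma) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank  # assumed scaling convention

    def forward(self, x):
        base = matvec(self.weight, x)               # W x
        delta = matvec(self.b, matvec(self.a, x))   # B (A x)
        return [h + self.scale * d for h, d in zip(base, delta)]


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1, alpha=2)
    # With B = 0 the bypass contributes nothing, so the output equals W x.
    print(layer.forward([1.0, 1.0]))
```

Only `a` and `b` would receive gradients in training, which is why the number of trainable parameters scales with the rank rather than with the size of `W`.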

3. Results

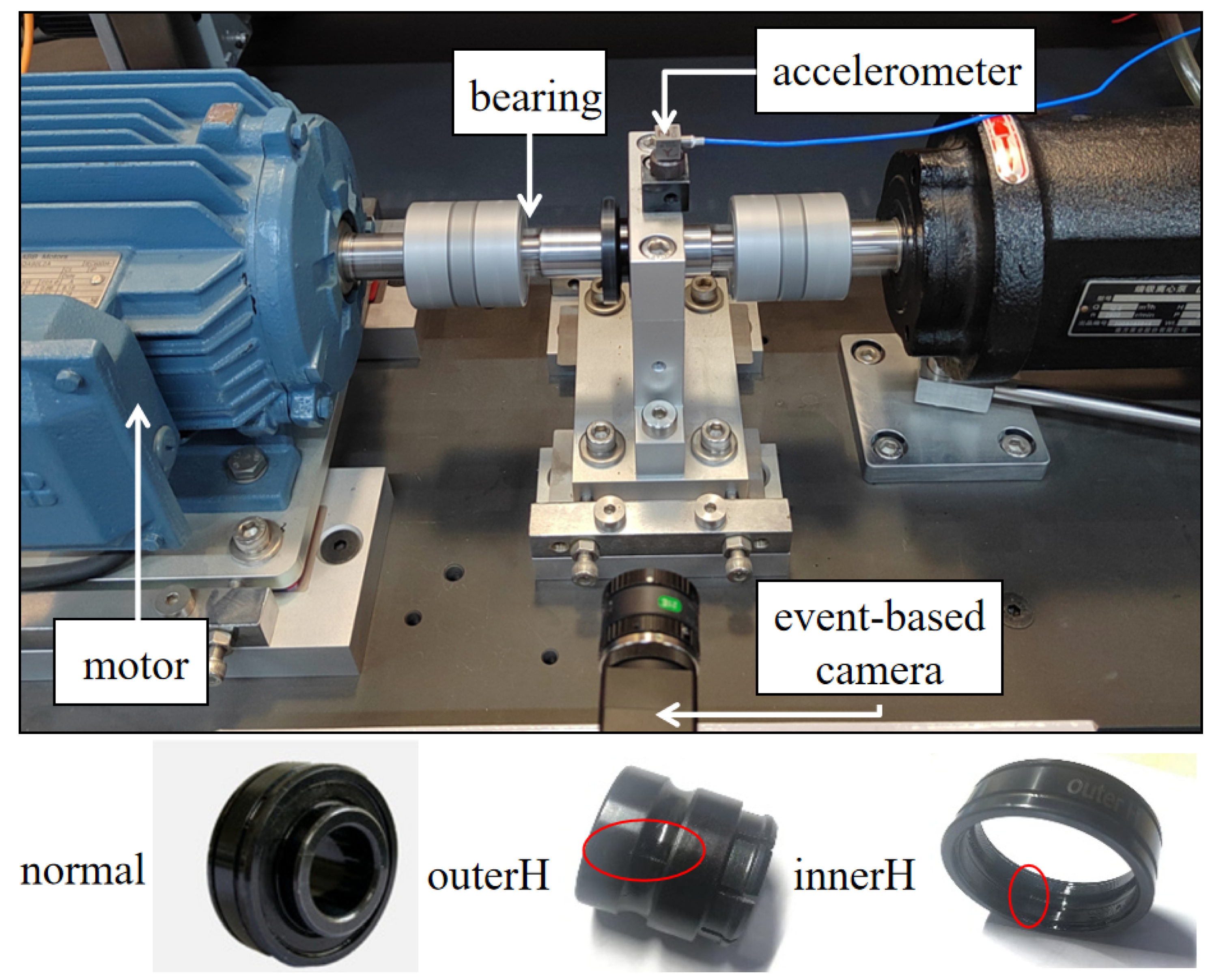

3.1. Dataset Introduction

3.2. Experimental Environment

3.3. Data Processing

- Scheme 1 (Image-based Diagnosis): This scheme uses keyframes randomly extracted from the original videos via OpenCV as the model’s input.

- Scheme 2 (Video-based Diagnosis): This scheme directly uses the unmodified raw video sequences as input.

3.4. Hyperparameter Settings

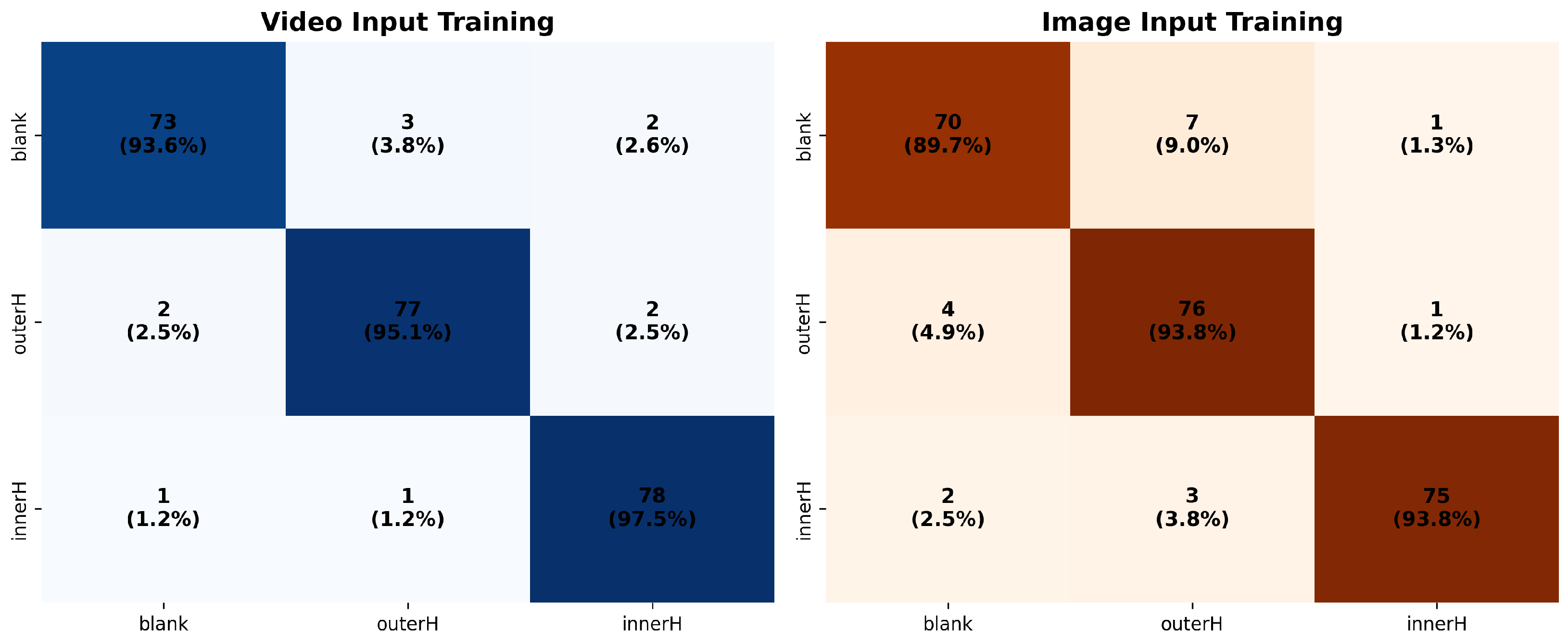

3.5. Evaluation of Diagnostic Accuracy Under Various Operating Conditions

- Scheme 1 (Event Frame-based Diagnosis): The overall diagnostic accuracy on the test set is 92.47%. Specifically, the per-class identification accuracies for the normal state, outer race fault (outerH), and inner race fault (innerH) are 89.74%, 93.83%, and 93.75%, respectively.

- Scheme 2 (Video-based Diagnosis): The overall diagnostic accuracy reaches 95.44%, showing a significant improvement over Scheme 1. Its per-class identification accuracies for the normal, outer race fault, and inner race fault categories are 93.59%, 95.18%, and 97.50%, respectively, demonstrating a comprehensive performance advantage across all classes.
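The relationship between the per-class and overall figures for Scheme 1 can be checked with a few lines of arithmetic. The test-set class sizes come from the dataset split table; the correct-prediction counts are not reported in the text and are inferred here as the integers that reproduce the stated per-class percentages, so they should be read as an assumption.

```python
# Test-set class sizes, taken from the dataset split table.
test_sizes = {"normal": 78, "outerH": 81, "innerH": 80}

# Correct predictions for Scheme 1: NOT reported in the text; inferred as the
# integer counts that reproduce the stated per-class accuracies.
correct = {"normal": 70, "outerH": 76, "innerH": 75}

per_class = {c: round(100 * correct[c] / test_sizes[c], 2) for c in test_sizes}
overall = round(100 * sum(correct.values()) / sum(test_sizes.values()), 2)

print(per_class)  # per-class accuracy in percent
print(overall)    # overall accuracy in percent
```

The overall accuracy is the support-weighted mean of the per-class accuracies, so it sits between the lowest and highest class values.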

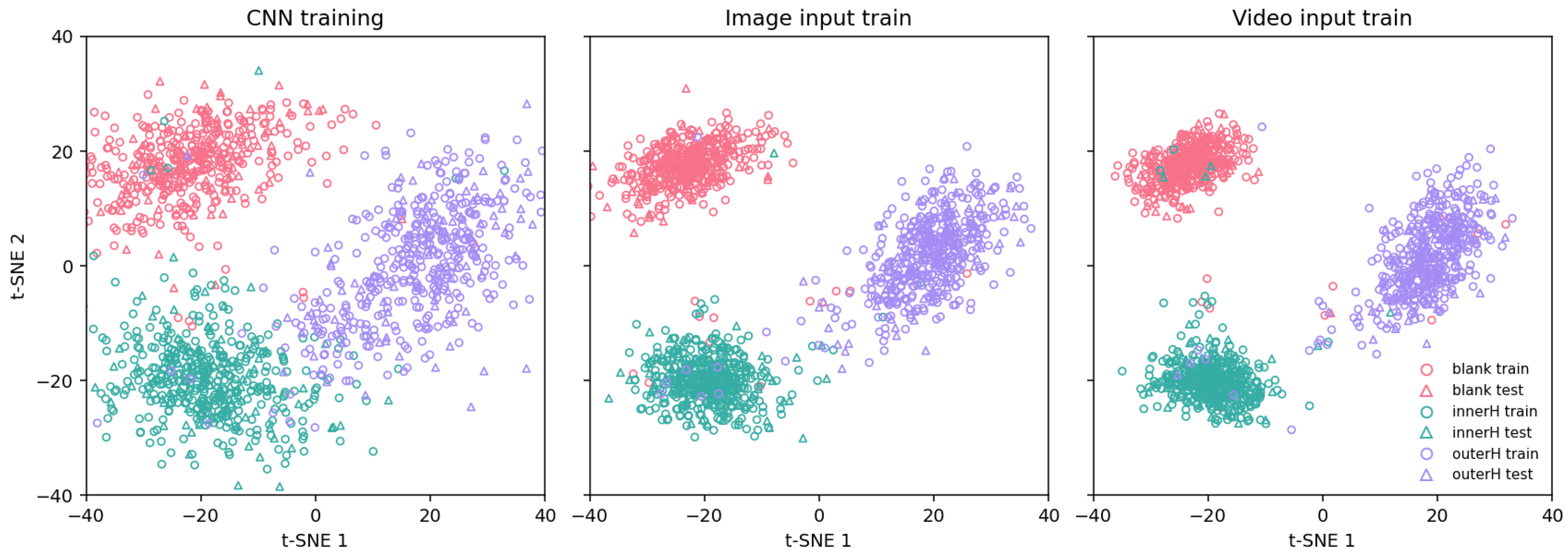

- For the baseline CNN model: The t-SNE plot shows that although the feature vectors form three general clusters, there is significant overlap between the different classes. In particular, the feature distributions for the outer race fault (outerH) and inner race fault (innerH) states are quite close. This indicates that the discriminative power of static spatial features extracted solely by a basic CNN is relatively limited, making it difficult to delineate clear decision boundaries.

- For Scheme 1 (Event Frame-based): The t-SNE plot similarly forms three discernible clusters, showing a significant improvement in feature separability compared to the baseline CNN model. As seen in the plot, the inter-cluster overlap is substantially reduced, with the majority of samples correctly aggregated within their respective class clusters. This demonstrates that the sparse spatial information captured by the event camera, which embodies motion changes, provides the model with more discriminative features than static images. Nevertheless, despite the good overall separation, a few instances of sample confusion can still be observed at the cluster boundaries, indicating that while its feature discrimination is strong, it has not yet reached an ideal state of complete separation.

- For Scheme 2 (Video-based): The t-SNE visualization reveals three highly separable clusters. The large inter-cluster distances and high intra-cluster compactness are highly consistent with the quantitative accuracy of 95.44%, robustly demonstrating that the dynamic spatiotemporal features learned from the complete video sequence possess the most powerful discriminative ability.

3.6. End-to-End Processing Latency Analysis

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Li, W.; Daman, A.A.A.; Smith, W.; Zhu, H.; Cui, S.; Borghesani, P.; Peng, Z. Wear Performance and Wear Monitoring of Nylon Gears Made Using Conventional and Additive Manufacturing Techniques. J. Dyn. Monit. Diagn. 2025, 4, 101–110.

2. Lei, Y.; Chen, B.; Jiang, Z.; Wang, Z.; Sun, N.; Gao, R.X. Applications of machine learning to machine fault diagnosis: A review and roadmap. Mech. Syst. Signal Process. 2020, 138, 106587.

3. Zhang, W.; Xu, M.; Yang, H.; Wang, X. Data-Driven Deep Learning Approach for Thrust Prediction of Solid Rocket Motors. Measurement 2023, 225, 114051.

4. Thakuri, S.K.; Li, H.; Ruan, D.; Wu, X. The RUL Prediction of Li-Ion Batteries Based on Adaptive LSTM. J. Dyn. Monit. Diagn. 2025, 4, 53–64.

5. Chen, X.; Li, X.; Yu, S.; Lei, Y.; Li, N.; Yang, B. Dynamic Vision Enabled Contactless Cross-Domain Machine Fault Diagnosis With Neuromorphic Computing. IEEE/CAA J. Autom. Sin. 2024, 11, 788–790.

6. Chen, Z.; O’Brien, R.; Hajj, M.R.; Mba, D.; Peng, Z. Vision-based sensing for machinery diagnostics and prognostics: A review. Mech. Syst. Signal Process. 2023, 186, 109867.

7. Zhang, W.; Li, X.; Ma, H.; Luo, Z. Transfer learning using deep representation regularization in remaining useful life prediction across operating conditions. Reliab. Eng. Syst. Saf. 2021, 211, 107556.

8. Gallego, G.; Delbruck, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K.; et al. Event-based vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 154–180.

9. Zhang, W.; Jiang, N.; Yang, S.; Li, X. Federated transfer learning for remaining useful life prediction in prognostics with data privacy. Meas. Sci. Technol. 2025, 36, 076107.

10. Li, S.; Zhang, C.; Du, J.; Cong, X.; Zhang, L.; Jiang, Y.; Wang, L. Fault Diagnosis for Lithium-ion Batteries in Electric Vehicles Based on Signal Decomposition and Two-dimensional Feature Clustering. Green Energy Intell. Transp. 2022, 1, 100009.

11. Yang, Y.; Pan, L.; Liu, L. Event Camera Data Dense Pre-training. In Proceedings of the European Conference on Computer Vision (ECCV), Milan, Italy, 29 September–4 October 2024.

12. Li, H.; Abhayaratne, C. AW-GATCN: Adaptive Weighted Graph Attention Convolutional Network for Event Camera Data Joint Denoising and Object Recognition. arXiv 2025, arXiv:2305.12345.

13. Lagorce, X.; Orchard, G.; Galluppi, F.; Mirri, B.J.; Benosman, R.B. HOTS: A Hierarchy of Event-Based Time-Surfaces for Pattern Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1346–1359.

14. Zhu, Z.; Wang, Z.; Daniilidis, K. Motion Equivariant Networks for Event Cameras with the Temporal Normalization Transform. arXiv 2019, arXiv:1902.06820.

15. Raghunathan, N.; Suga, Y.; Aoki, Y. Optical Flow Estimation by Matching Time Surface with Event-Based Cameras. Sensors 2021, 21, 1150.

16. Wang, L.; Wang, X.; Wang, G.; Lu, T.; Yang, X. Ev-IMO: Motion Segmentation From Single Event-Based Camera with Image-Domain Warping. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 5974–5987.

17. Duarte, L.; Safeea, M.; Neto, P. Event-based tracking of human hands. Sens. Rev. 2021, 41, 382–389.

18. Guang, R.; Li, X.; Lei, Y.; Li, N.; Yang, B. Non-Contact Machine Vibration Sensing and Fault Diagnosis Method Based on Event Camera. In Proceedings of the 2023 CAA Symposium on Fault Detection, Supervision and Safety for Technical Processes (SAFEPROCESS), Yibin, China, 22–24 September 2023; pp. 1–5.

19. Scheerlinck, C.; Rebecq, H.; Gehrig, D.; Barnes, N.; Mahony, R.E.; Scaramuzza, D. Fast Image Reconstruction with an Event Camera. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Snowmass, CO, USA, 1–5 March 2020; pp. 156–163.

20. Maaz, M.; Rasheed, H.; Khan, S.; Khan, F.S. Video-ChatGPT: Towards Detailed Video Understanding via Large Vision and Language Models. arXiv 2023, arXiv:2306.05424.

21. Zhang, W.; Li, X. Data privacy preserving federated transfer learning in machinery fault diagnostics using prior distributions. Struct. Health Monit. 2021, 21, 1329–1344.

22. Ma, R.; Li, W.; Shang, F. Investigating Public Fine-Tuning Datasets: A Complex Review of Current Practices from a Construction Perspective. arXiv 2024, arXiv:2407.08475.

23. Zhang, W.; Wang, Z.; Li, X. Blockchain-based decentralized federated transfer learning methodology for collaborative machinery fault diagnosis. Reliab. Eng. Syst. Saf. 2023, 229, 108885.

24. Huang, K.; Chen, Q. Motor Fault Diagnosis and Predictive Maintenance Based on a Fine-Tuned Qwen2.5-7B Model. Processes 2025, 13, 2051.

25. Yang, S.; Ling, L.; Li, X.; Han, J.; Tong, S. Industrial Battery State-of-Health Estimation with Incomplete Limited Data Toward Second-Life Applications. J. Dyn. Monit. Diagn. 2024, 3, 246–257.

26. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

27. Aitsam, M.; Goyal, G.; Bartolozzi, C.; Di Nuovo, A. Vibration Vision: Real-Time Machinery Fault Diagnosis with Event Cameras. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Cham, Switzerland, 2024; pp. 293–306.

28. Bai, S.; Chen, K.; Liu, X.; Wang, J.; Ge, W.; Song, S.; Dang, K.; Wang, P.; Wang, S.; Tang, J.; et al. Qwen2.5-VL Technical Report. arXiv 2025, arXiv:2502.13923.

29. Li, M.; Zhang, P.; Wang, X.; Liu, Y.; Gao, Z. A Novel Method for Bearing Fault Diagnosis Based on an Event-Driven Camera and Spiking Neural Network. Sensors 2023, 23, 8730.

30. Cui, S.; Li, W.; Zhu, H.; Peng, Z. Bearing Fault Diagnosis Based on Event-Driven Vision Sensing and Spatiotemporal Feature Learning. IEEE Trans. Ind. Inform. 2023, 19, 11250–11261.

31. Taseski, D.I.; Li, W.; Peng, Z.; Borghesani, P. Event-based vision for non-contact, high-speed bearing fault diagnosis. Mech. Syst. Signal Process. 2024, 205, 110789.

32. Wang, Z.; Liu, Q.; Zhou, J.; Peng, Z. High-Fidelity Vibration Mode Extraction for Rotating Machinery Using Event-Based Spatiotemporal Correlation. IEEE Trans. Instrum. Meas. 2024, 73, 4005112.

33. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

34. Tong, Z.; Song, Y.; Wang, J.; Wang, L. VideoMAE: Masked Autoencoders are Data-Efficient Learners for Self-Supervised Video Pre-Training. Adv. Neural Inf. Process. Syst. 2022, 35, 10098–10112.

35. Ligan, B.; Jbilou, K.; Kalloubi, F.; Ratnani, A. Parameter-Efficient Fine-Tuning of Multispectral Foundation Models for Hyperspectral Image Classification. arXiv 2025, arXiv:2505.15334.

36. Li, X.; Yu, S.; Lei, Y.; Li, N.; Yang, B. Intelligent Machinery Fault Diagnosis with Event-Based Camera. IEEE Trans. Ind. Inform. 2024, 20, 380–389.

37. Aarthi, E.; Jagadeesh, S.; Lingineni, S.L.; Dharani, N.P.; Pushparaj, T.; Satyanarayana, A.N. Detecting Parkinson’s Disease using High-Dimensional Feature-Based Support Vector Regression. In Proceedings of the 2024 International Conference on Intelligent Algorithms for Computational Intelligence Systems (IACIS), Hassan, India, 23–24 August 2024; pp. 1–7.

38. Li, X.; Yu, S.; Lei, Y.; Li, N.; Yang, B. Dynamic Vision-Based Machinery Fault Diagnosis with Cross-Modality Feature Alignment. IEEE/CAA J. Autom. Sin. 2024, 11, 2068–2081.

39. Chen, C.; Zhao, K.; Leng, J.; Liu, C.; Fan, J.; Zheng, P. Integrating large language model and digital twins in the context of industry 5.0: Framework, challenges and opportunities. Robot. Comput.-Integr. Manuf. 2025, 94, 102982.

| Field | Data Type | Content Definition |
|---|---|---|
| instruction | Text | Natural language instruction for the task. |
| input | Text | Additional context for the query (unused). |
| output | Text | Expected answer (bearing health status). |
| videos | Video Data | MP4 video from event data. |

| Diagnosis Scheme | Data Type | Train/Val/Test Samples | Diagnosis Capability |
|---|---|---|---|
| Event Frame-based | Image | 1077 / 232 / 239 | Basic diagnosis |
| Video-based | Video | 1077 / 232 / 239 | Diagnosis with time series |

| Dataset Split | Fault Class | Videos |
|---|---|---|
| Training Set | normal | 352 |
| | innerH | 360 |
| | outerH | 365 |
| | Subtotal | 1077 |
| Validation Set | normal | 76 |
| | innerH | 77 |
| | outerH | 79 |
| | Subtotal | 232 |
| Test Set | normal | 78 |
| | innerH | 80 |
| | outerH | 81 |
| | Subtotal | 239 |
| Total | | 1548 |

| Parameter Type | Parameter | Value | Description |
|---|---|---|---|
| Language Module | | 1024 | Max input length for the language module |
| Adapter Parameters | | 2048 | Number of neurons in the adapter |
| LoRA Parameters | | 8 | The rank of the LoRA low-rank matrices |
| | | 16 | The learning rate scaling factor for LoRA |
| | | 0.1 | Dropout rate for the LoRA layers |
| Training Parameters | | | Learning rate |
| | | 2 | Batch size |
| | | 10 | Warmup steps for the learning rate scheduler |
| | | 0.01 | Weight decay |
| | | 1 | Gradient accumulation steps |
| | | 1 | Loss weight parameter |
| | | 1 | Loss weight parameter |
| | | 4000 | Number of training iterations |

| Training Scheme | Epoch | Acc. | W. Prec. | W. Recall | W. F1 | Kappa |
|---|---|---|---|---|---|---|
| Image Input | 1 | 85.21% | 85.35% | 85.21% | 85.26% | 77.82% |
| | 2 | 88.34% | 88.47% | 88.34% | 88.39% | 82.51% |
| | 3 | 90.89% | 91.02% | 90.89% | 90.94% | 86.34% |
| | 4 | 92.47% | 92.61% | 92.47% | 92.50% | 88.70% |
| Video Input | 1 | 87.45% | 87.58% | 87.45% | 87.49% | 81.18% |
| | 2 | 91.56% | 91.68% | 91.56% | 91.60% | 87.34% |
| | 3 | 93.87% | 93.95% | 93.87% | 93.90% | 90.81% |
| | 4 | 95.40% | 95.41% | 95.40% | 95.39% | 93.09% |
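The metric columns in the table above (accuracy, weighted precision, weighted recall, weighted F1, and Cohen's kappa) can all be derived from a confusion matrix. The sketch below uses the standard definitions; the `metrics` helper and the 3×3 matrix are hypothetical and are not the authors' results.

```python
def metrics(cm):
    """Compute summary metrics from cm[i][j] = count of true class i predicted as j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    support = [sum(cm[i]) for i in range(n)]                        # true counts
    predicted = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # predicted counts
    accuracy = sum(cm[i][i] for i in range(n)) / total

    w_prec = w_rec = w_f1 = 0.0
    for i in range(n):
        prec = cm[i][i] / predicted[i] if predicted[i] else 0.0
        rec = cm[i][i] / support[i] if support[i] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        weight = support[i] / total  # weight each class by its support
        w_prec += weight * prec
        w_rec += weight * rec
        w_f1 += weight * f1

    # Cohen's kappa: agreement beyond what the class marginals give by chance.
    expected = sum(support[i] * predicted[i] for i in range(n)) / total ** 2
    kappa = (accuracy - expected) / (1 - expected)
    return {"accuracy": accuracy, "w_precision": w_prec, "w_recall": w_rec,
            "w_f1": w_f1, "kappa": kappa}


if __name__ == "__main__":
    # Hypothetical confusion matrix (rows: true class, columns: predicted class).
    example = [[8, 1, 1],
               [1, 9, 0],
               [0, 1, 9]]
    for name, value in metrics(example).items():
        print(f"{name}: {value:.4f}")
```

Support-weighted recall is algebraically identical to accuracy, which is why the Acc. and W. Recall columns coincide in every row of the table.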

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, Z.; Sun, C.; Li, X. Multimodal Large Language Model-Enabled Machine Intelligent Fault Diagnosis Method with Non-Contact Dynamic Vision Data. Sensors 2025, 25, 5898. https://doi.org/10.3390/s25185898
