1. Introduction

3D semantic occupancy perception, which provides a dense volumetric model of objects and drivable space, is a fundamental task in autonomous driving that directly enables robust motion planning and precise obstacle avoidance. In recent years, vision-based approaches have made remarkable progress in this domain, driven by innovations in architecture, efficiency, and supervision paradigms. Architectural advancements have explored a wide range of 3D representations. For example, BEVFormer introduced spatio-temporal transformers for Bird’s-Eye View (BEV) grids [

1], while SurroundOcc demonstrated strong multi-camera performance using dense volumetric grids [

2]. VoxFormer leveraged sparse voxel transformers for semantic scene completion [

3], and others have refined 2D-to-3D lifting, such as decoupling height in occupancy queries [

4] or applying depth-aware strategies for monocular prediction [

5]. To improve computational efficiency, SparseOcc reduced redundancy through sparse representations [

6], while SHTOcc addressed long-tail voxel distributions to enhance performance [

7]. In terms of supervision, OccNeRF used neural radiance fields to guide occupancy learning [

8], UniOcc combined geometric and semantic rendering [

9], and SfmOcc introduced a novel structure-from-motion pipeline for pseudo ground truth generation [

10]. This rapid progress has been supported by standardized evaluation on large-scale benchmarks such as OpenOccupancy [

11]. Despite these advances, pure vision-based approaches suffer from fundamental limitations: inaccurate depth estimation leading to volumetric errors; high sensitivity to variations in ambient lighting; and lack of robustness in adverse weather conditions such as rain, snow, or fog.

Motivated by the inherent challenges of vision-centric occupancy perception, methodologies centered on LiDAR sensors have been developed. LiDAR provides accurate depth measurements and captures the 3D structure of the environment, exhibiting robust performance across varying light conditions and inclement weather. Recent studies have demonstrated the significant potential of LiDAR for predicting 3D semantic occupancy. For instance, PointOcc [

12] introduced a cylindrical tri-perspective view specifically designed for LiDAR’s data distribution, achieving efficient prediction. Similarly, SSC-RS [

13] proposed a novel framework that decouples the learning of semantic and geometric representations before fusing them in Bird’s-Eye View (BEV), a strategy effective for sparse point clouds. Further advancements include approaches like OccMamba [

14], which employs state-space models to process large-scale 3D data efficiently, while LMSCNet [

15] presents a lightweight architecture for real-time semantic completion. Others have explored novel representations, such as local deep implicit functions [

16], to create continuous scene models that avoid voxelization artifacts. Despite these advances, significant challenges persist for LiDAR-based occupancy prediction. A primary limitation is the inherent sparsity of LiDAR points, which restricts the resolution of the predicted occupancy grid. Furthermore, training these models demands dense supervision, with the manual annotation of 3D ground truth being a notoriously expensive and time-consuming bottleneck. Consequently, many approaches rely on pseudo-labels generated from accumulated laser scans. However, this reliance on pseudo-labels from aggregated scans is inherently flawed. This strategy not only introduces systemic biases but also confines the model’s learning to the intrinsic sparsity of the sensor, as it is supervised by a denser version of its own input modality. Therefore, these limitations necessitate the development of novel frameworks capable of performing dense LiDAR-based occupancy prediction within a self-supervised paradigm, thereby removing the reliance on costly manual annotations.

To address these challenges, we propose LiGaussOcc, a novel self-supervised framework for LiDAR-based occupancy prediction that leverages differentiable Gaussian rendering to enable annotation-free training. We first voxelize multi-frame LiDAR point clouds through VoxelNet [

17], and we employ the SECOND network [

18] for encoding 3D voxel features. Compared with camera-based occupancy prediction, this process eliminates the depth errors and memory consumption associated with 2D-to-3D transformations [

1,

19]. Next, we introduce a novel module, Empty Voxel Inpainting (EVI), to optimize empty voxel features for addressing LiDAR sparsity and enhancing occupancy density. Furthermore, the Adaptive Feature Fusion (AFF) module is designed to enhance the dense voxel features of the EVI module with an adaptive fusion mechanism. During the training stage, we introduce the Gaussian Primitive from Voxels (GPV) module, which is tasked with generating Gaussian parameters (e.g., position

, covariance

, opacity

) for each voxel. Leveraging the prevalence of multi-view cameras on autonomous vehicles, these parameters are then used to render the 3D voxel representation onto 2D image planes, generating the dense depth and semantic maps required for our self-supervised objective. A naive approach to supervising the predicted occupancy would be to directly compute the loss between the rendered images and the corresponding camera images, similar to the 3D Gaussian Splatting [

20] method. However, LiDAR point clouds lack the rich color information found in images. Another potential supervision method mentioned in GaussRender [

21] is to utilize ground truth annotations from datasets. Although this approach can render 2D feature maps from different viewpoints, it either relies on expensive manual annotations [

11] or uses depth information from aggregated multi-frame laser scans [

2,

22], which is clearly not suitable for training LiDAR-based occupancy prediction. To resolve these issues, we employ adjacent-view photometric loss as a supervision signal for self-supervised depth information with following GaussianOcc [

23], and we obtain dense 2D semantic pseudo-labels through the vision language models (VLMs) as mentioned in OccNerf [

8]. This dual-stream supervision strategy circumvents the need for error-prone 3D pseudo-labels, simultaneously mitigating the challenges of high annotation costs and inherent LiDAR sparsity.

The contributions of our paper are summarized as follows:

A novel self-supervised and dense LiDAR-based framework for 3D semantic occupancy prediction is proposed. To the best of the authors’ knowledge, this is the first LiDAR-based architecture with Gaussian rendering for the 3D semantic occupancy prediction task. The proposed framework efficiently processes LiDAR data to generate dense 3D voxel representations that compensate for LiDAR modality limitations, thereby improving performance.

For the inference pipeline, we propose two novel modules to facilitate a unified and fine-grained 3D semantic occupancy perception: an Empty Voxel Inpainting (EVI) module that densifies the initial sparse voxel features, and an Adaptive Feature Fusion (AFF) module that subsequently refines them via an adaptive fusion mechanism.

To enable self-supervision, we introduce the Gaussian Primitive from Voxels (GPV) module, which serves as a bridge between the 3D and 2D domains. By predicting the parameters for 3D Gaussian Splatting, the GPV module facilitates the rendering of LiDAR voxels into dense 2D depth and semantic maps, thereby allowing for effective self-supervision using 2D annotations.

Through extensive evaluation on the nuScenes-OpenOccupancy benchmark, we show that our self-supervised method achieved 30.4% Intersection over Union (IoU) and 14.1% mean IoU (mIoU). This performance reached 91.6% of the fully supervised LiDAR-based L-CONet, while entirely eliminating the need for costly manual 3D annotations. These results demonstrate the practicality and scalability of our approach for real-world 3D perception applications.

3. Method

This section presents the architecture and methodology of the proposed LiGaussOcc framework. We begin by introducing the core rendering principle, 3D Gaussian Splatting, which underpins our self-supervised learning paradigm. We then detail the components of the LiDAR-only inference pipeline, including the novel Empty Voxel Inpainting (EVI) and Adaptive Feature Fusion (AFF) modules, both designed to address the inherent sparsity of LiDAR data. Next, we describe the self-supervised training mechanism, in which the Gaussian Primitive from Voxels (GPV) module transforms voxel features into differentiable Gaussian primitives. These primitives are rendered into 2D depth and semantic maps, which are supervised using photometric consistency loss () and semantic pseudo-labels provided by vision foundation models (), respectively.

3.1. Preliminary for 3D Gaussian Splatting

In this study, 3D Gaussian Splatting [

20] is employed as a foundational method for achieving fully self-supervised 3D semantic occupancy prediction. This innovative technique represents and renders 3D scenes using a collection of point primitives, with each primitive modeled by the following distribution:

where

denotes the unique mean position of a primitive, and where

represents the 3D covariance matrix. For differentiable optimization,

is decomposed into a learnable scaling matrix

and a rotation matrix

:

Subsequently, the 3D Gaussians are projected onto a 2D image plane via a view transformation

W and the Jacobian

J of the projective approximation, thereby yielding the corresponding 2D covariance:

Following the 2D projection, an alpha-blend rendering technique is adopted to determine the final pixel color. The accumulated color

is formulated as a weighted sum:

where

denotes spherical harmonics (SH) representing the color associated with each Gaussian, and where

is the product of learned opacity and the 2D Gaussian defined by covariance Σ

’.

In summary, each Gaussian primitive is parameterized by these essential elements:

3.2. Architecture

The proposed LiGaussOcc is a novel, self-supervised framework for LiDAR-based occupancy prediction that employs differentiable Gaussian rendering technology to generate dense representations. The overall architecture is illustrated in

Figure 1 and consists of two primary stages. The top section depicts the inference pipeline: multi-sweep LiDAR point clouds are voxelized and passed through the proposed EVI and AFF modules to generate a dense representation, from which the final 3D occupancy grid is predicted. The bottom section outlines the self-supervised training stage: voxel features are processed by the GPV module to generate parameters for 3D Gaussian Splatting. These parameters are rendered into 2D depth and semantic maps, which are supervised using photometric consistency (

) and pseudo-labels derived from foundation models (

), respectively.

For inference, unstructured LiDAR point clouds are initially encoded into sparse 3D voxel features

through voxelization [

17] and the Second network [

18], where

D,

H,

W denotes the volumetric dimensions of the scene, and where

C represents the feature dimension of each LiDAR voxel. To address the inherent sparsity of LiDAR voxels, an Empty Voxel Inpainting (EVI) module is introduced to regularize empty voxels, thereby generating dense LiDAR occupancy; then, an Adaptive Feature Fusion (AFF) module is proposed to refine the final LiDAR voxel features.

To facilitate dense supervision for the generated dense LiDAR occupancy during the training phase, a novel self-supervision mechanism is designed within the 2D dense domain, leveraging 3D Gaussian Splatting [

20]. Specifically, a Gaussian Attributes Generation from Voxel (GAGV) module is first designed to extract the essential attributes for 3D Gaussian Splatting from the voxel features. Subsequently, splatting rendering is performed to project these voxels from the occupancy fields onto 2D image planes, yielding both depth maps

and semantic maps

. Concurrently, following [

8,

23], multi-view RGB images enable supervision through adjacent-view photometric loss

for

, while pre-trained foundation models generate semantic pseudo-labels

to supervise

.

The decoupled architecture in

Figure 1 represents an optimal design, strategically engineered to resolve the dual challenges of LiDAR sparsity and the need for training without manual annotations. The inference pipeline (top) is dedicated to the spatial domain, progressively densifying the sparse LiDAR input into a coherent voxel representation using the EVI and AFF modules. The training pipeline (bottom), in contrast, operates as a supervision bridge. It projects the 3D voxel features into the 2D image domain via differentiable Gaussian rendering, a critical step that enables a self-supervised paradigm. This allows the network, which starts with sparse 3D data, to be guided by powerful, dense supervision signals derived from cameras. This strategic separation is crucial: it allows the inference path to focus solely on the geometric task of densification, while the complex machinery of cross-domain supervision is handled entirely offline during training.

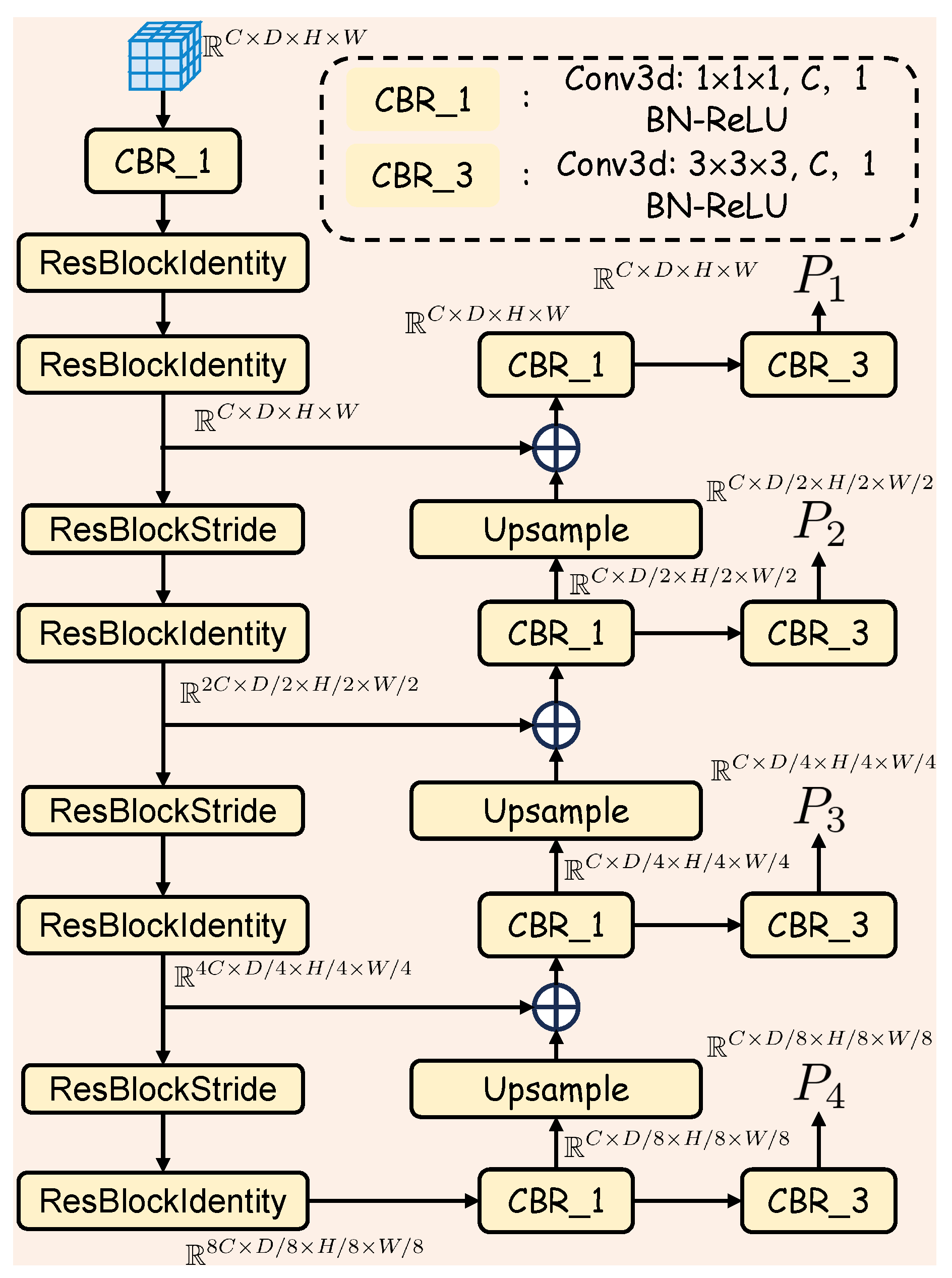

3.3. EVI Module

To overcome the inherent sparsity of LiDAR data for dense occupancy prediction, we introduce the Empty Voxel Inpainting (EVI) module, an encoder–decoder architecture inspired by principles from image inpainting [

38,

39]. As illustrated in

Figure 2, with the detailed components shown in

Figure 3, the module transforms the sparse voxel grid into a dense feature representation via a two-stage process. First, a 3D ResNet-based [

40,

41] encoder is employed to extract multi-scale 3D voxel features

, where

m denotes the scale level, and where

,

,

, and

represent the depth, height, width, and channel dimensions of the

m-th scale feature map, respectively. This encoder progressively downsamples the voxel features, enlarging the receptive field at each stage. This enables the network to learn high-level contextual information from broader spatial regions, allowing it to infer the underlying structure of the scene even from sparsely populated points. Subsequently, a decoder path, structured similarly to a 3D Feature Pyramid Network (FPN) [

42,

43], upsamples the encoded features

from the

j-th level, using 3D deconvolution layers. These upsampled features are fused with the corresponding encoder features

via skip connections. This fusion is critical, as it enables the decoder to leverage both global contextual information and fine-grained spatial detail. The combined features are then further refined using 3D convolutions. The final output consists of densified multi-scale feature maps

, where

M is set to 4 in our LiGaussOcc.

The EVI module employs an encoder–decoder architecture with skip connections, a topology proven effective in image inpainting for its ability to synthesize plausible content. This design is optimal for handling LiDAR sparsity because it fuses two critical streams of information. The encoder path captures high-level semantic context across progressively larger receptive fields, while the skip connections preserve and reintroduce fine-grained spatial details to the decoder. This fusion of contextual and spatial information enables the module to plausibly reconstruct features within unobserved empty voxels, ultimately yielding a coherent and dense scene representation.

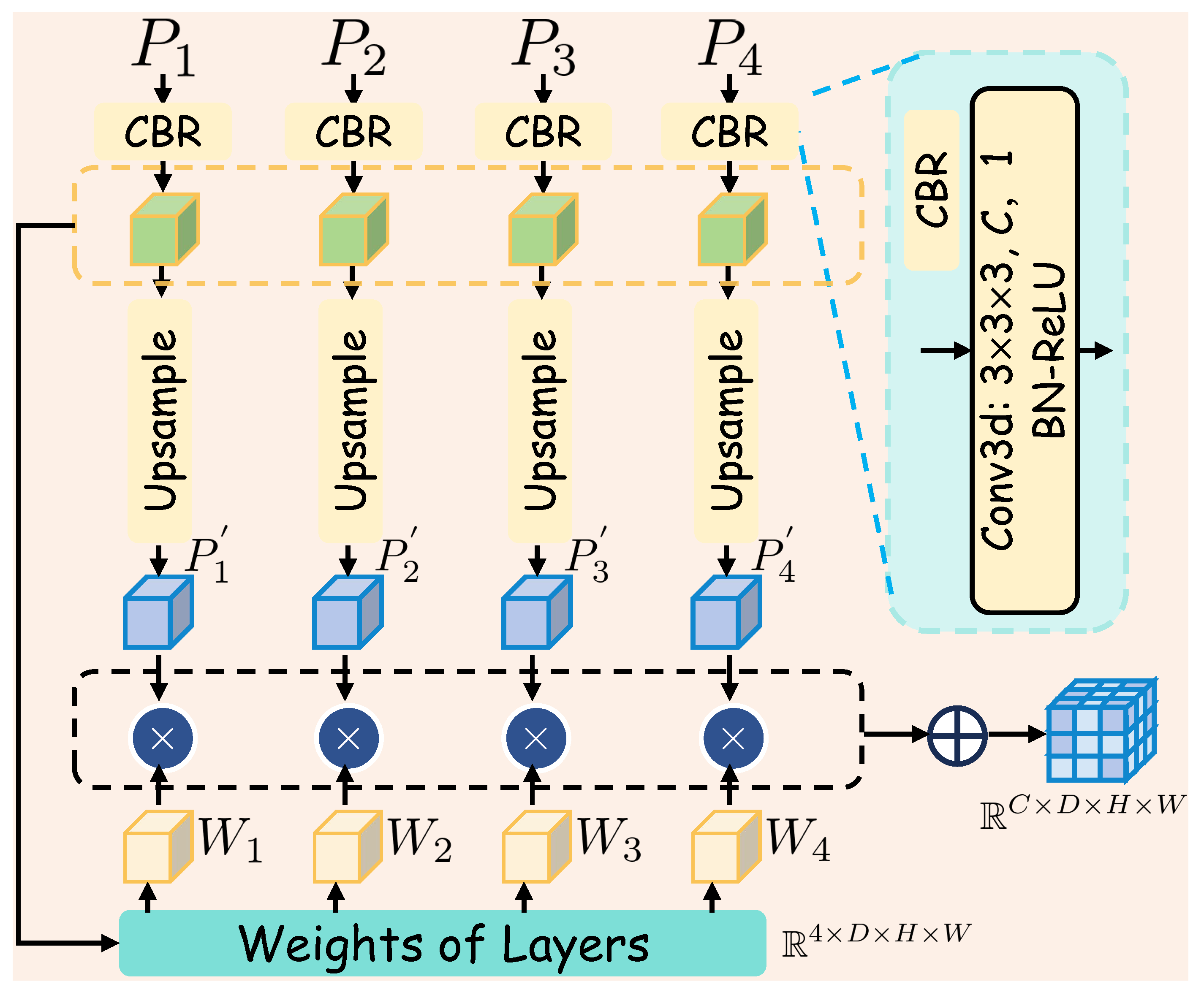

3.4. AFF

As illustrated in

Figure 4, the multi-scale LiDAR voxel features from each level,

, are fused with learned weights through an adaptive fusion mechanism. This adaptive fusion dynamically combines information from different scales to synthesize the final dense occupancy representations

, effectively inpainting the empty voxels by leveraging diverse contextual and detailed information across the feature hierarchy. The fusion mechanism is formulated as follows:

where

Here, represents the upsample operation, and denotes the weight encoding network, which primarily consists of a 3D convolutional network and normalization layers. The weight is computed via a sigmoid function and is used to balance the contribution from different scale voxel features, with values ranging from 0 to 1.

The superiority of the proposed adaptive fusion mechanism over simpler alternatives, such as summation or concatenation, was quantitatively validated by our ablation study. The results confirm that by using learnable weights to dynamically emphasize features from the most informative scales, our network produces a more robust and refined final representation capable of handling the diverse structures in driving environments.

3.5. GPV Module

To facilitate the conversion of voxel-based occupancy representations into differentiable Gaussian primitives, the Gaussian Primitive from Voxels (GPV) module is designed with a focus on simplified Gaussian parameterization, as depicted in

Figure 1. This approach aims to prevent unstable configurations during the learning process and to streamline optimization. The requisite attributes for each Gaussian primitive are extracted from its associated voxel at position

. These attributes include the following:

the 3D position : directly inherited from the voxel’s grid coordinates , eliminating learnable position offsets;

scale S: defined as a diagonal matrix , where s is a scalar factor determined by the voxel’s dimensions;

color logits c: directly derived from the model’s final occupancy semantic prediction for the corresponding voxel, preserving class probability distributions;

opacity o: a learned parameter with implementing MLP for voxel features;

rotation matrix R: fixed with identity matrix I, reducing the covariance matrix to , and ensuring spherical symmetry.

3.6. Rendering with Gaussian Splatting

To enhance the efficiency of the rendering pipeline, a 3D Gaussian Splatting approach is employed to project occupancy voxels onto the 2D image domain. This process involves transforming the 3D covariance matrix

into the image plane with a given viewing transformation

W, and the projected 2D covariance is defined as

where

J represents the Jacobian matrix, the affine approximation of the projective transformation [

20]. The resulting 2D covariance represents the spatial distribution and shape of the Gaussian splats, directly governing per-pixel opacity

and transmittance

computations during rasterization. For pixel

p, the aggregated semantic color

, where

denotes the number of semantic classes, and the rendered depth

are derived by summing contributions from all the overlapping Gaussians:

where

N indicates the total number of overlapping Gaussians,

represents the opacity via density

and ray interval

,

denotes cumulative transmittance to address occlusion, and

denotes the distance of the

i-th Gaussian to the camera. Thus, the rendered semantic images

and depth images

can be represented as follows:

where

represents the associated pixel set of each camera. This differentiable rendering mechanism enables direct projection of 3D semantic occupancy fields into geometrically consistent dense 2D representations; the 3D spatial information is effectively translated into 2D views, facilitating pixel-level supervision for LiDAR-based occupancy prediction.

3.7. Adjacent-View Photometric Consistency for Depth Label

To enforce geometrically consistent depth learning across multi-sensor platforms, our approach, by following [

23], establishes adjacent-view photometric consistency constraints leveraging reprojection geometry. For each target view

i and adjacent view

j, an overlap mask is computed to isolate mutually visible regions while preserving scene completeness through unprojection. The pixel

in view

i is reprojected to coordinate

in view

j via

where

,

denote intrinsic matrices,

,

represent camera-to-world extrinsics, and

is the predicted depth map of

ith camera. Differentiable splatting renders the warped image

, which is similar to Equation (

10). Accurate depth prediction ensures that

photometrically aligns with the raw image

I, providing depth-aware supervision.

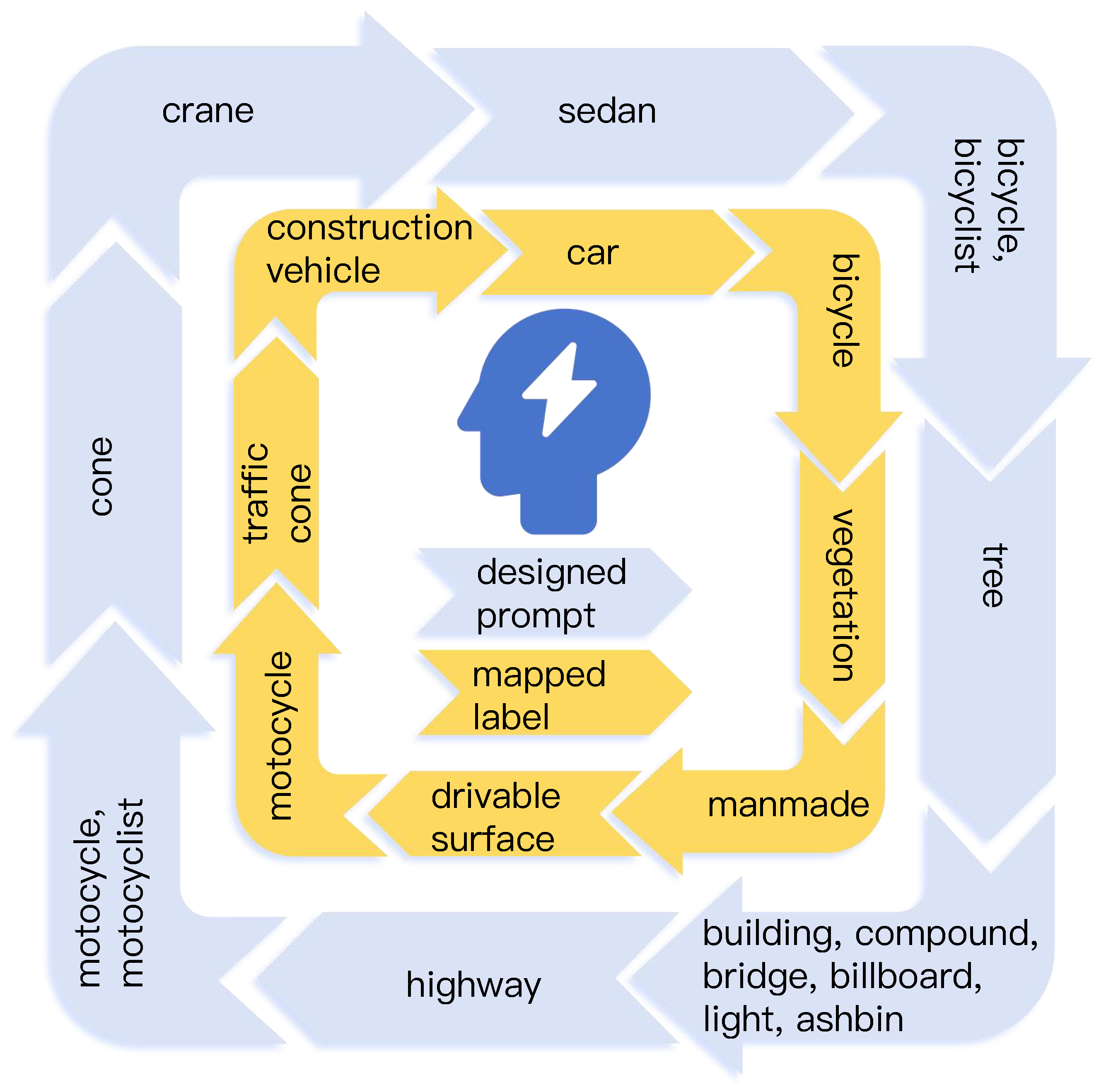

3.8. Foundation Models for Semantic Label

By following [

8], we leverage a pre-trained open-vocabulary model Grounded-SAM to generate 2D semantic segmentation labels. The pre-trained open-vocabulary model enables us to obtain 2D labels that closely match the semantics of the given category names. The detailed promote design is shown in

Figure 5. The design of these text prompts is crucial for maximizing the model’s performance. An abstract class name—for example, the ‘manmade’ category—can be ambiguous to the model. We found that providing a list of concrete, descriptive synonyms including ‘building’, ‘compound’, and ‘bridge’ elicits far more accurate and reliable segmentation masks. This process significantly improves the quality of the pseudo-labels that underpin our self-supervised training.

An uncertain label is given if the corresponding pixel does not belong to any category. Each pixel has

, where

K is the number of categories; the per-pixel label

is given by

where

is a function that maps the index of

to the category label according to the phrase. By following Equation (

9),

denotes the pseudo-label.

3.9. Loss Function

The depth loss

and the semantic loss

are shown as below:

where

is set to 0.85 for balancing the two loss terms of

, and where

represents the cross-entropy loss function. Our overall loss function

is expressed as

4. Results

This section presents the experimental validation of our proposed LiGaussOcc framework. We first describe the experimental setup, including the dataset, evaluation metrics, and implementation details. We then present our main quantitative results, comparing our method against state-of-the-art fully supervised approaches. Finally, we provide a series of ablation studies to analyze the individual contributions of our key modules and supervision strategies.

4.1. Experimental Setup

4.1.1. Dataset and Metrics

We evaluated our proposed LiGaussOcc framework on the nuScenes-OpenOccupancy dataset [

11,

44], a large-scale benchmark for 3D occupancy prediction. The dataset includes 1000 driving scenes, split into 700 for training, 150 for validation, and 150 for testing. Ground truth was provided as dense voxel grids of size

with 0.2 m resolution, covering 16 semantic categories and a free-space class. Following the standard evaluation protocol [

11], we adopted Intersection over Union (IoU) to assess geometric completion and mean IoU (mIoU) for semantic prediction. These metrics are defined as

where

,

, and

denote the number of true positives, false positives, and false negatives, and where

S is the number of semantic classes.

4.1.2. Implementation Details

Our model processed a sequence of 10 accumulated LiDAR sweeps, which were voxelized and then passed through a voxel-based encoder for feature extraction [

17,

18]. The EVI module, designed to densify the sparse LiDAR features, was constructed using a 3D ResNet encoder and a 3D FPN decoder [

40,

41,

42,

43], enabling the inpainting of empty voxels based on contextual information. The AFF module employed a lightweight weight-generation network comprising a

3D convolution followed by normalization to adaptively fuse multi-scale features. Gaussian primitives were initialized with a scale of 0.1. For training supervision, we adopted 2D pseudo-labels generated using the semantic mapping approach from OccNeRF [

8] and depth estimation from GaussianOcc [

23].

The model was implemented using the MMDetection3D framework [

45]. Training was performed for 20 epochs using the AdamW optimizer [

46], which included a weight decay of

to regularize the model and reduce overfitting. An initial learning rate of

was used, with a batch size of 8 distributed across eight NVIDIA L20 GPUs. In addition, standard LiDAR data augmentation techniques from the MMDetection3D pipeline were employed to further mitigate overfitting.

4.2. Main Results

A comprehensive evaluation was conducted to benchmark our proposed self-supervised framework, LiGaussOcc, against state-of-the-art methods, with the results detailed in

Table 1. Our method achieved a highly competitive IoU of 30.4 and mIoU of 14.1. It is critical to note that these results were obtained without relying on any manually annotated 3D ground truth for supervision, which fundamentally distinguishes our approach from traditional fully supervised methods and marks a significant contribution to the field. The significance of our method’s performance is further highlighted by its consistent and substantial gains across several key semantic classes critical for autonomous driving safety. As shown in

Table 1, our method achieved state-of-the-art results among even some fully supervised methods in static environmental classes, including drivable surface (35.4% IoU), sidewalk (22.6% IoU), man-made structures (19.6% IoU), and vegetation (23.8% IoU). This consistent outperformance across multiple diverse categories, rather than just an aggregate mIoU improvement, provides strong evidence for the robustness and statistically significant superiority of our proposed framework. In addition, these improvements are also critical for autonomous driving safety, as a precise and coherent understanding of the static scene is fundamental for trajectory prediction and motion planning.

The effectiveness of our framework was further validated through direct comparisons with leading methods across different modalities. When compared with leading LiDAR-only methods, the result was particularly remarkable. Our self-supervised LiGaussOcc achieved 91.6% of the performance of the fully supervised state-of-the-art L-CONet. Closing the performance gap to this extent, without any 3D labels, demonstrates the immense potential and effectiveness of our proposed annotation-free training paradigm for LiDAR occupancy prediction. Against representative camera-only approaches, LiGaussOcc established a clear advantage in geometric and semantic understanding. For instance, it surpassed C-CONet by a significant 13.7% in mIoU. This highlights the inherent benefits of the LiDAR modality for 3D perception and validates our model’s ability to effectively process these sparse inputs into a dense, accurate representation. The ability of our self-supervised framework to achieve results nearly on a par with fully supervised LiDAR methods, while significantly outperforming camera-based ones and demonstrating proficiency in static scene understanding, highlights the power of our annotation-free paradigm. This represents a valuable and practical step towards building scalable, data-efficient, and robust 3D perception systems for autonomous driving.

The qualitative results of LiGaussOcc are presented in

Figure 6. The visualizations illustrate that LiGaussOcc yielded 3D occupancy predictions with fewer false positives. Moreover, our method demonstrated robust performance in perceiving static scene elements, accurately predicting classes like drivable surface, man-made structures, vegetation, and sidewalk in close alignment with the ground truth.

4.3. Ablation Study

Ablation of Core Components. A comprehensive ablation study was conducted to validate the individual contributions of our core components, the EVI and AFF modules, with the quantitative results presented in

Table 2. Our analysis started from the full LiGaussOcc model, and it describes the performance degradation as each component was individually ablated. Firstly, to evaluate the impact of the EVI module, we replaced it with a standard downsampling block composed of four 3D convolutions. This change caused a substantial performance drop, with the mIoU decreasing by 11.3%. This result underscores the critical role of our EVI module in effectively processing sparse point cloud features to generate a high-quality scene representation. Secondly, we examined the effectiveness of the AFF module. By removing it and exclusively using the

feature from the EVI module for subsequent processing (as depicted in

Figure 2), the mIoU decreased by 6.4%. This degradation confirms that the feature fusion performed by the AFF module is essential for refining the occupancy prediction. These results demonstrate that each proposed module provides a distinct and critical contribution. The fact that the complete model achieved the highest score highlights a clear synergistic effect, validating our core components design.

Ablation of Supervision Method. Ablation studies were conducted to evaluate the impact of different supervision methods on the overall model performance, including ground truth supervision, volume rendering supervision, and our splatting rendering supervision, as presented in

Table 3. First;y, we established a fully supervised upper bound by training our network architecture directly with the ground truth 3D labels from the OpenOccupancy dataset [

11]. This fully supervised setup achieved a strong mIoU of 15.8. In contrast, our proposed method, which leverages 3D Gaussian Splatting for self-supervision, yielded a competitive mIoU of 14.1. This result is highly favorable compared to the alternative supervision strategies. It represents a 2.9% relative improvement over the baseline that uses volume rendering, a technique similar to that in OccNerf [

8], confirming the superiority of our chosen rendering technique for generating high-quality supervisory signals. Most critically, our self-supervised result achieved 89.2% of the performance of the fully supervised upper bound. This demonstrates that our proposed framework effectively captures a substantial portion of the performance achievable with perfect 3D labels, marking a significant step towards annotation-free and data-efficient 3D perception.

Ablation of AFF Module. An ablation study was conducted to validate the fusion strategy of our proposed Adaptive Feature Fusion (AFF) module, with the results presented in

Table 4. To evaluate its effectiveness, AFF was compared against two common fusion baselines adapted from methods in multi-modal fusion [

47]. The baselines were as follows: (1) summation-based fusion, where multi-scale voxel features are upsampled and then combined via element-wise addition before being processed by an MLP; and (2) concatenation-based fusion, where the upsampled features are concatenated along the channel dimension and, subsequently, fed into an MLP. The experimental results clearly demonstrate the superiority of our proposed AFF module. It achieved the best performance, with 30.4 in SC IoU and 14.1 in mIoU. Specifically, AFF outperformed the stronger summation-based baseline by 3.7% in mIoU, and it surpassed the concatenation-based method by a more significant 7.6%. This validates that the adaptive weighting mechanism within our AFF module provides a more effective and robust feature fusion than conventional, non-adaptive strategies.

5. Discussion

Our experiments validated that LiGaussOcc effectively addresses the key challenges of LiDAR sparsity and annotation dependency in 3D occupancy prediction. By integrating a novel self-supervised paradigm with Gaussian rendering, our framework achieved a competitive 30.4% IoU and 14.1% mIoU on the nuScenes-OpenOccupancy benchmark. This performance was notably close to fully supervised methods, achieving 91.6% of L-CONet’s mIoU and, thereby, highlighting the viability of our annotation-free approach as a scalable alternative to methods reliant on expensive manual 3D labels.

Several key design choices underpinned the strong performance of our framework. The effectiveness of the inference architecture was validated through ablation studies (

Table 2), which showed that removing the EVI module resulted in an 11.3% drop in mIoU, while ablating the AFF module led to a 6.4% degradation. These results demonstrate the critical role of these modules in densifying and refining sparse features. Additionally, Gaussian rendering supervision outperformed the volume rendering alternatives by 2.9% mIoU, highlighting its efficacy in generating dense 2D supervisory signals from sparse inputs. This advantage is reflected in the strong per-class results for static scene understanding, including a state-of-the-art 35.4% IoU for drivable surfaces.

When contextualized against other state-of-the-art methods, the merits of our approach become more evident. Our method achieved 14.1% mIoU, outperforming the camera-based C-CONet, which reached 12.4% mIoU, thereby confirming the superior geometric fidelity of the LiDAR modality. Furthermore, although our supervision strategy draws inspiration from vision-based methods such as OccNeRF, our framework is specifically designed to handle the sparsity challenges of LiDAR data, a constraint not encountered by camera-based approaches that operate on dense image features.

Despite these strengths, we acknowledge several limitations of the current framework. Firstly, performance remains limited for small and distant objects (e.g., 3.3% IoU for bicycles), primarily due to the inherent sparsity of LiDAR signals. Secondly, a key limitation lies in the reliance of our training paradigm on camera data, which can be unreliable under adverse conditions. Nonetheless, this limitation is mitigated by the scale and diversity of the nuScenes dataset, enabling the model to learn generalizable features despite occasional noisy frames. Camera-based signals serve as an indispensable and scalable teacher for learning a mapping from LiDAR structures to dense 3D semantics. This task is otherwise infeasible using sparse LiDAR data alone in a self-supervised setting. Finally, a critical consideration for real-world deployment is the computational cost of the 3D CNN backbone during inference, which can hinder real-time performance. While the Gaussian rendering-based training process is computationally intensive, it is conducted offline and does not affect deployment efficiency.

These limitations suggest clear and promising directions for future research. One key direction is to enhance perception robustness in adverse weather and for small objects by incorporating 4D radar. We plan to explore early-fusion strategies, where a dedicated network branch processes the radar’s velocity and weather-resilient data. These features could then be fused with LiDAR data at the voxel level using a cross-attention mechanism, allowing the model to dynamically weigh sensor inputs based on environmental conditions. Furthermore, addressing the computational bottleneck is critical for real-world deployment. Future work will, therefore, focus on model compression, specifically using knowledge distillation to transfer the representation from our EVI module into a lightweight student network to bridge the gap to real-time on-vehicle deployment.

6. Conclusions

In this paper, we introduced LiGaussOcc, a novel self-supervised framework designed to address the significant challenges of sparsity and 3D annotation dependency in LiDAR-based semantic occupancy prediction. Our core contribution is a new paradigm that utilizes 3D Gaussian Splatting to differentiably render LiDAR voxel features onto 2D image planes, enabling effective self-supervision from multi-view cameras without any 3D ground truth labels. The framework is further enhanced by specialized modules, EVI and AFF, designed to handle the inherent sparsity of LiDAR data during inference. Our extensive experiments conducted on the nuScenes-OpenOccupancy benchmark validated the effectiveness of our approach. LiGaussOcc achieved a highly competitive mIoU of 14.1% while also attaining state-of-the-art performance on critical static environmental classes such as drivable surfaces and vegetation. This performance was remarkably close to that of fully supervised state-of-the-art methods, demonstrating the viability of our annotation-free paradigm.

Despite the promising results achieved by LiGaussOcc, several limitations remain and define a clear roadmap for future research. The reliance on camera data for training introduces potential vulnerabilities under adverse environmental conditions, and the 3D CNN backbone imposes a significant computational burden that may hinder real-time deployment. Future work will address these challenges by exploring more robust supervision strategies, such as 4D radar integration and improving runtime efficiency through model compression techniques, including quantization and knowledge distillation. In conclusion, by successfully adapting differentiable rendering for LiDAR-based self-supervision, LiGaussOcc opens up a promising direction for developing scalable, annotation-free, and data-efficient 3D perception systems tailored for autonomous driving applications.