1. Introduction

Unmanned aerial vehicles (UAVs) have emerged as a revolutionary technology in defence, commercial, and scientific fields over the past decade. In particular, UAV swarms have played a significant role in areas such as intelligence, surveillance, search and rescue, agricultural inspection, natural disaster monitoring, and communication networks [

1,

2]. The scalability of UAV swarms makes them suitable for complex, collaborative missions where multiple UAVs must operate simultaneously and effectively in dynamic and uncertain environments.

The basic requirement for the safe and efficient operation of UAV swarms is that each UAV not only plans its trajectory autonomously but also flies in coordination with other UAVs to avoid collisions, resource wastage, and communication bottlenecks [

3,

4]. This coordination can depend on both centralised and decentralised control architectures. Centralised systems rely on a central controller that manages the planning of all UAVs, while decentralised systems have each UAV relying on local information and communicating with neighbouring UAVs. Understanding this distinction is crucial for planning the trajectories of UAV swarms.

Moreover, it is essential to differentiate between trajectory planning/design and path planning: path planning primarily focuses on finding the shortest route, while trajectory planning incorporates time, velocity, acceleration, and the physical constraints of the UAV [

5,

6]. Trajectory planning in swarm missions is often modelled as a Multiple Travelling Salesman Problem (MTSP), where multiple UAVs must cover different targets while considering mission time, energy constraints, and inter-UAV safety. To address these challenges, the research community has proposed various approaches to trajectory planning. Three major paradigms stand out:

Traditional Algorithms (TA): Deterministic methods such as Dijkstra [7], A* [8], and Dubins Curves [9], which rely on complete environmental information and provide optimal or near-optimal paths in well-structured scenarios [10,11,12].

Biologically Inspired Algorithms (BIA): Approaches inspired by natural phenomena, such as bird flocking or the pheromone trails of ants, including PSO [13], ACO [14], GA [15], and ABC [16], which provide global optimisation in large and complex search spaces [17].

Modern AI-based Algorithms (AI-A): Machine learning [18], deep learning [19], reinforcement learning (RL) [20], multi-agent RL (MARL) [21], and graph neural networks enable UAV swarms to perform adaptive decision making, collaborative coordination, and intelligent behaviour in dynamic, uncertain environments [22,23]. In particular, modern approaches such as Active Inference [24], based on Bayesian foundations, are introducing new directions in trajectory planning through predictive processing [25].

These approaches are interconnected and form a continuum. TAs provide a foundational structure, BIAs offer global exploration and diversity, and AI-based techniques enable real-time adaptability and intelligent decision making. In modern research, these methods are being integrated into hybrid frameworks to simultaneously address complex aspects of trajectory design, such as scalability, collision avoidance, and mission-level optimisation.

The main objective of this paper is to present a systematic, comprehensive, and analytical review of all the essential aspects of UAV swarm trajectory planning, highlighting the clear connections and differences between various approaches.

This study outlines the fundamental concepts of centralised and decentralised control architectures and their practical applications.

The fundamental difference between trajectory design and path planning is clarified, and MTSP is introduced as a central mathematical framework that has been effectively adopted in UAV swarm trajectory planning.

The study discusses online and offline training/testing approaches, detailing how AI-based methods can be trained using an offline-generated BIA-based dataset and subsequently enhanced through online testing and minor adaptations in real-world missions.

The study clarifies decision making and collision avoidance as core challenges of UAV swarm trajectory planning and analyses various scientific approaches to solving these problems using geometric, physics-based, and AI-driven techniques.

This investigation provides a comparative analysis and critically evaluates the strengths and limitations of each approach, ultimately outlining future directions for UAV swarm research.

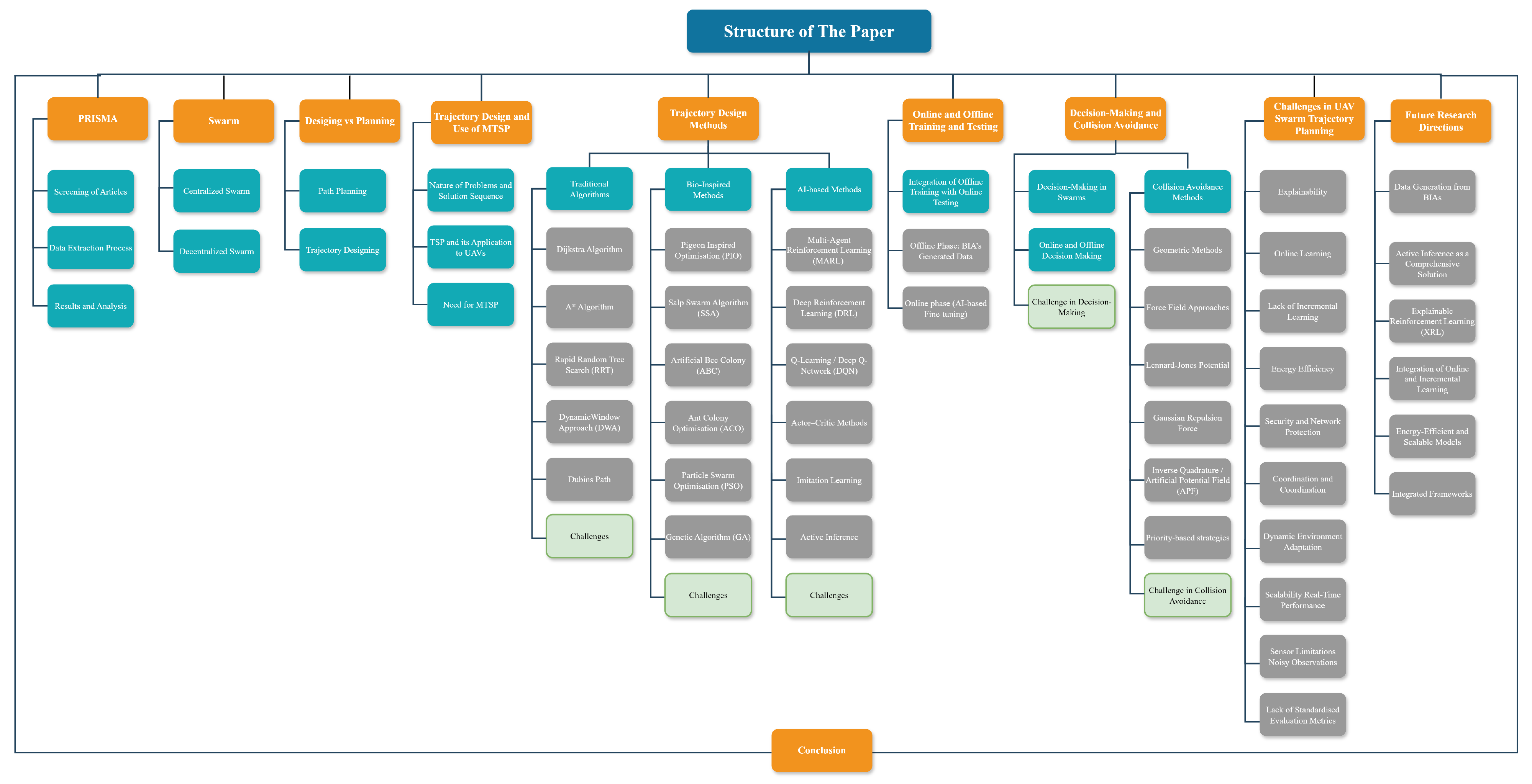

The structure of the paper is depicted in Figure 1 and is organised as follows: First, centralised and decentralised swarm approaches are discussed, followed by the distinction between trajectory design and path planning. Next, the MTSP problem and its application to UAV swarms are described. TAs, BIAs, and modern AI-based strategies are then presented. Subsequently, aspects of online and offline training/testing, decision making, and collision avoidance are reviewed. Finally, the paper highlights the primary challenges and potential future directions of UAV swarms.

2. Method

This study adopted a formal methodology for conducting systematic reviews following the PRISMA guidelines [

26]. The methodology consists of several steps, which are detailed in

Figure 2 and explained below.

A systematic search for relevant research articles for this review was conducted in two reliable electronic databases: Web of Science and Scopus. The search process included keywords with “OR” and “AND” operators, incorporating terms such as the following, with the intention of comprehensively identifying all possible and relevant research articles: (“UAV swarm” OR “drone swarm” OR “multi-UAV”) AND (“trajectory design” OR “path planning” OR “trajectory optimisation”) AND (“algorithm” OR “control” OR “strategy”).

A total of 1743 research articles were retrieved during this phase of the search. The authors then independently screened and selected these articles. Using Zotero 7 software, 832 articles were excluded as duplicate records, while 661 articles were excluded because they provided only a general overview and did not meet the study’s objectives. Therefore, only those articles that met the inclusion criteria were considered for review.

2.1. Screening of Articles

One author initially screened the research articles identified through the keyword search based on their titles and abstracts. A total of 911 studies were critically reviewed during this phase. All articles relevant to the topic of this study were included, while irrelevant studies were excluded.

If there was no consensus between the two authors regarding the selection or exclusion of a particular article, the entire article was carefully reviewed. If disagreement persisted, the final decision was made by a third, impartial reviewer to ensure transparency and objectivity.

2.2. Eligibility Criteria for Selection of Articles

This review included research articles that met the following criteria:

The article used keywords such as “UAV swarm”, “drone swarm”, or “multi-UAV”.

The article included research related to “trajectory design”, “path planning”, or “trajectory optimisation”.

The article proposed a practical method or technique related to “algorithm”, “control”, or “strategy”.

The research focused on issues such as collision avoidance, path optimisation, overlapping, and interference.

The study should cover topics that are relevant to the practical application of UAV swarms.

This criterion is established to include only articles that focus on solving the problems of effective, safe, and practical UAV swarm trajectory design and control in real-world contexts.

2.3. Data Extraction Process

The extraction of information from the selected research articles is carried out in a systematic and standardised manner. For this purpose, a pre-prepared data extraction form is used, in which the following points are compiled from each study:

Name of the author(s);

Year of publication;

Objective of the study;

Method or algorithm used;

Platform or simulator used;

Key findings and recommendations of the study;

Research limitations.

Data extraction is performed independently by two authors to minimise bias and ensure the accuracy of the results. In the event of any disagreement, the final decision is made after consulting with a third author. All the extracted information is compiled into a systematic table, which facilitates comparative analysis later.

2.4. Results and Analysis

A total of 250 research articles are ultimately included in this systematic review, as per our selection criteria. Of these, 20 are review articles that helped us identify other research studies related to the topic [27,28,29,30,31,32,33,34,35,36,37,38,39,40,41]. Additionally, 75 articles are excluded because they do not meet the inclusion criteria.

The selected articles are divided into three main categories based on their research orientation: TAs, BIAs, and modern AI-based approaches.

The performance of the algorithms presented in each category is evaluated based on several standard metrics, including the following: overlapping and interference of paths, obstacle avoidance, and optimisation quality.

The study utilises various tables to present the performance of each algorithm or hybrid approach visually. In these tables, the performance of each approach is presented, allowing for easy comparison of different techniques.

3. Centralised Swarm vs. Decentralised Swarm

A swarm is a concept derived from nature, such as a flock of birds, a school of fish, or a colony of ants. It involves several autonomous units (agents) working both in a coordinated and uncoordinated manner, without any central control, and using only local information [

42].

In the field of UAVs, a swarm refers to multiple drones or UAVs working together, communicating with each other, and operating under a collective goal, such as surveillance, search and rescue, or enemy identification [

43,

44]. There are two basic methods of controlling a swarm.

3.1. Centralised Swarm

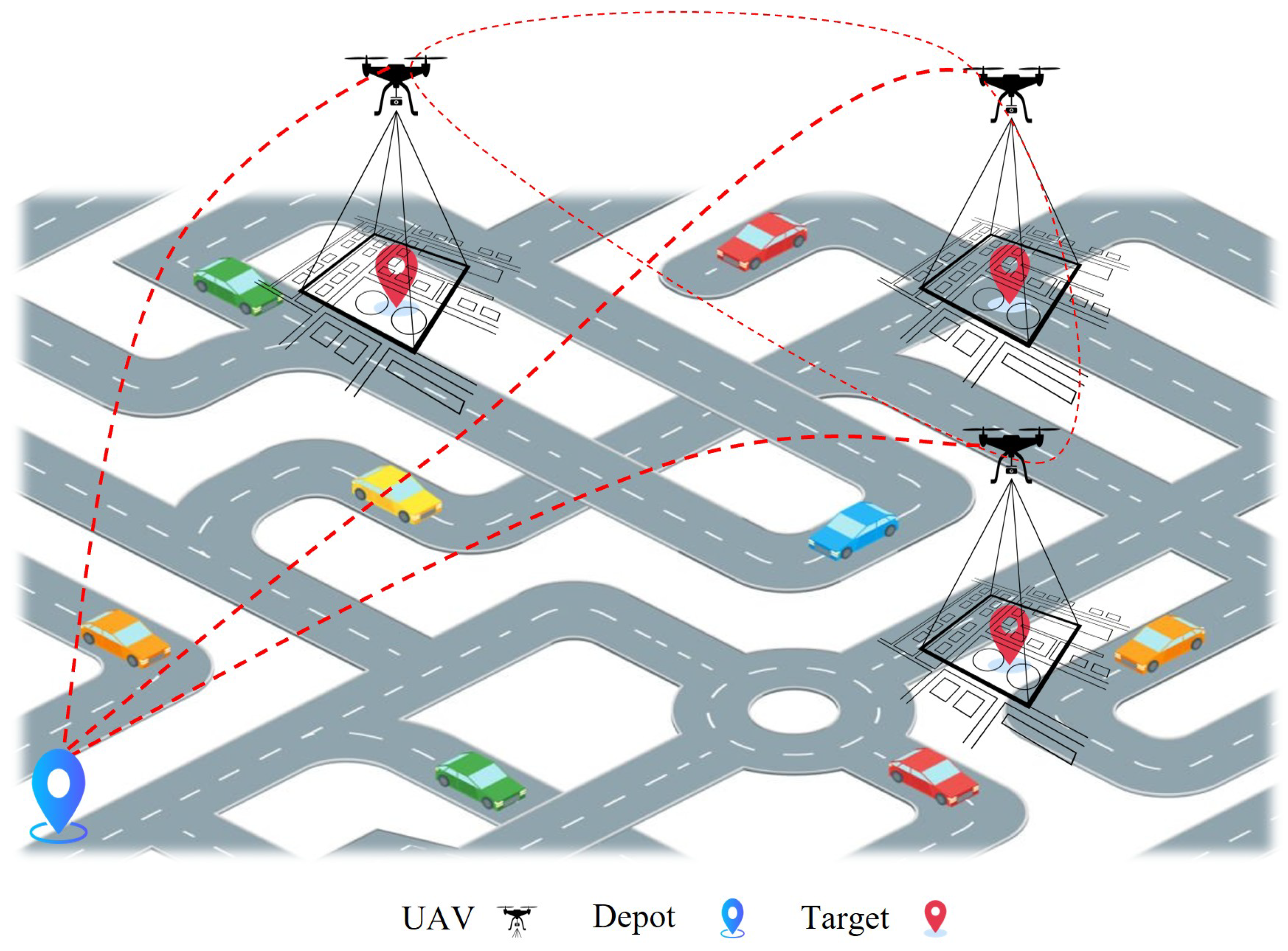

A swarm of centrally controlled UAVs is a system in which all drones or UAV subunits are controlled by a single central system, such as a Ground Control Station or a cloud server, as depicted in

Figure 3. This central station holds full responsibility for observation, control, and decision making. Each UAV receives specific instructions, tasks are distributed from this central unit, and each drone follows its defined flight plan or direction. Equation (1) presents the states of the centralised swarm:

$$x_i(t+1) = f\big(x_i(t),\, u(t)\big) \tag{1}$$

where:

$x_i(t)$ is the position of UAV $i$ at time $t$;

$u(t)$ is the control signal sent to all UAVs by the centralised controller;

$f(\cdot)$ is the state-update function of the UAV.

Figure 3.

Illustration of a centralised controller.


Examples of centralised control have also emerged in both research and practical applications. The authors of [45,46] presented a comparative analysis of the performance of centralised control, in which a cloud-based control system guided 12 drones cooperatively. The results showed that centralised control excels in decision making; however, scalability remains a significant challenge. Similarly, the authors of [

47] introduced a centralised control-based hybrid AI system for ground surveillance. This system utilises Proximal Policy Optimisation (PPO)-based reinforcement learning models, where the centralised controller assigns specific search and tracking tasks to different UAVs. The results demonstrate that this centralised structure is effective for both search and continuous tracking.

The authors of [

48] highlighted that the centralised task assignment mode is the most widely used, in which the Ground Control Station distributes tasks, and each UAV completes its flight. Although this improves the quality of decision making and ensures that the system follows a coherent strategy, as the number of UAVs increases, challenges such as network communication, real-time response capability, and computational scalability arise. Ultimately, the researchers who published [

45,

47] agree that centralised control has its advantages, but its challenges cannot be ignored.

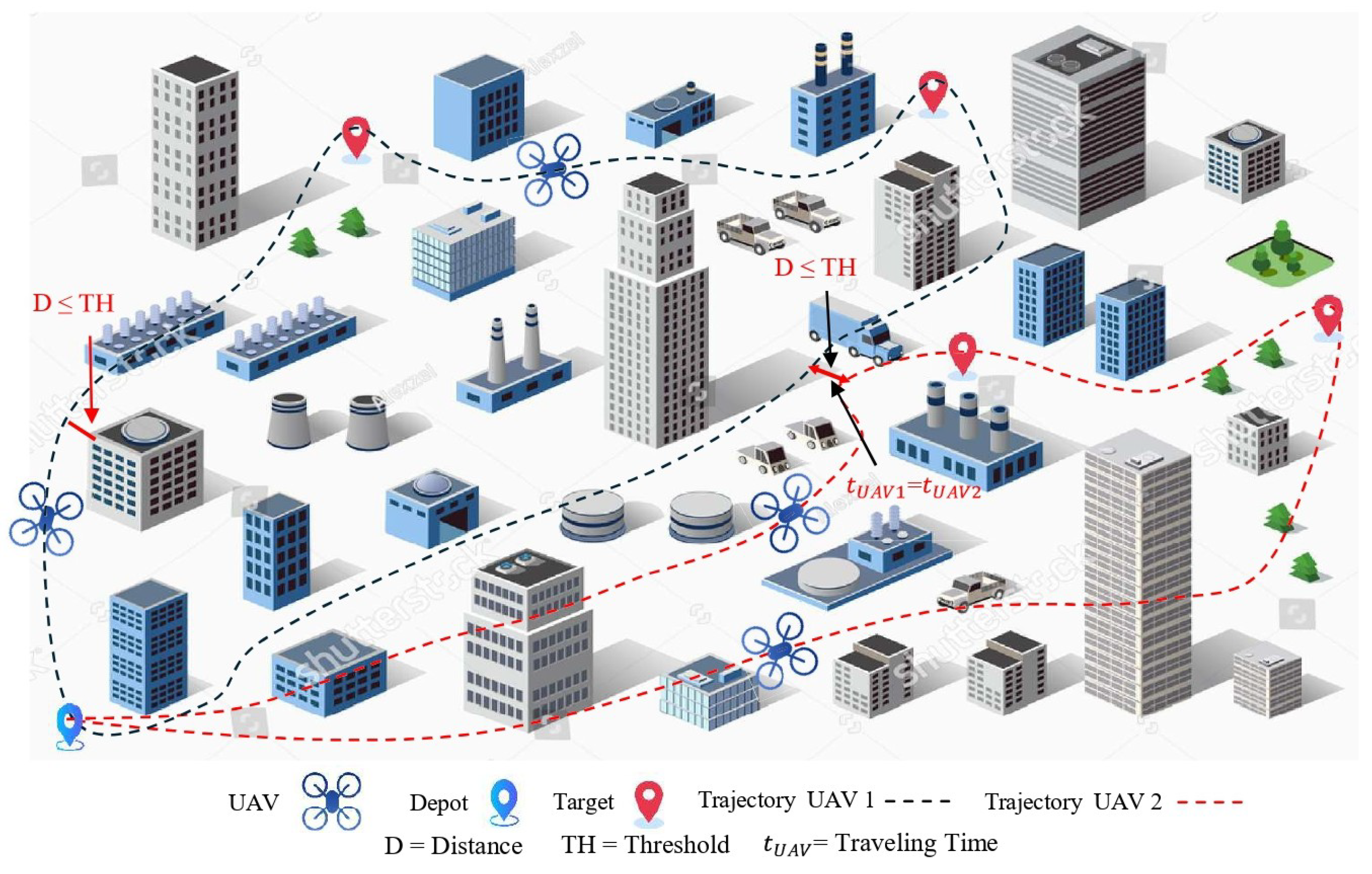

3.2. Decentralised Swarm

A decentralised UAV swarm is a model in which each UAV makes decisions based on its local information and signals received from neighbouring UAVs, as shown in

Figure 4. In this approach, there is no “single point of failure”, meaning that, if a single UAV fails, the rest of the system continues to function. Various studies have highlighted the robustness and resilience of decentralised models. For example, the authors of [

48,

49] present UAV coordination models based on decentralised algorithms, which demonstrate the advantages of efficient, low-latency control using local information. This suggests that decentralised structures are more suitable for UAV swarms where the communication network is limited or uncertain [

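50]. Equation (2) presents the coordination of the decentralised swarm:

$$x_i(t+1) = x_i(t) + \sum_{j \in \mathcal{N}_i} w_{ij}\,\big(x_j(t) - x_i(t)\big) \tag{2}$$

where:

$x_i(t)$ denotes the position of UAV $i$ at time $t$;

$\mathcal{N}_i$ is the set of neighbouring UAVs of UAV $i$;

$w_{ij}$ is the magnitude of the influence that UAV $j$ has on UAV $i$.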
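The neighbour-based coordination described above can be sketched in a few lines. This is a minimal illustration that assumes a linear consensus rule, in which each UAV moves toward its neighbours in proportion to their influence; the function name `consensus_step`, the weight table `w`, and the example positions are hypothetical and are not taken from the reviewed studies.

```python
def consensus_step(x, neighbours, w):
    """One decentralised update: each UAV uses only its neighbours' positions.

    x          -- list of position tuples, one per UAV, at time t
    neighbours -- dict mapping UAV index i to a list of neighbour indices
    w          -- nested list, w[i][j] = influence of UAV j on UAV i (assumed)
    """
    x_next = []
    for i, xi in enumerate(x):
        new = list(xi)
        for j in neighbours[i]:
            for d in range(len(xi)):
                # Move UAV i toward neighbour j, scaled by the influence weight.
                new[d] += w[i][j] * (x[j][d] - xi[d])
        x_next.append(tuple(new))
    return x_next


# Three UAVs on a line; each one communicates with adjacent UAVs only.
x = [(0.0, 0.0), (4.0, 0.0), (8.0, 0.0)]
neighbours = {0: [1], 1: [0, 2], 2: [1]}
w = [[0.25] * 3 for _ in range(3)]
x1 = consensus_step(x, neighbours, w)
```

No UAV needs the full swarm state here: the outer UAVs drift toward the middle one, and the middle UAV stays in place because its two neighbours pull equally in opposite directions.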

Figure 4.

Illustration of a decentralised controller.


4. Trajectory Design vs. Path Planning

Although these two terms seem similar, there are several fundamental differences between them and their different uses have been repeatedly highlighted in research. For example, refs. [

51,

52,

53] define path planning as a method that focuses primarily on finding the shortest path from a starting point to a target, while refs. [

54,

55,

56,

57] define trajectory design as the planning of a complete and safe flight path with time, velocity, and acceleration.

4.1. Path Planning

The goal of path planning is to find a path from a starting point to a destination with the shortest distance, as shown in

Figure 5. This method is primarily used in static environments. It focuses on finding the shortest path based on local or global maps. Simple yet effective algorithms, such as those presented by Dijkstra [

58] or A* [

8], are used to obtain a path with the shortest distance.

The cost function, which is used to minimise the total length of the path, is given below:

$$J_{\text{path}} = \sum_{i=1}^{n-1} d(p_i, p_{i+1}) \tag{3}$$

where:

$p_i$ represents the waypoints of the path;

$d(p_i, p_{i+1})$ is the distance between two consecutive points;

$n$ is the total number of points the path passes through.
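As a small worked example of this path-length cost, the sketch below sums the Euclidean distances between consecutive waypoints. The Euclidean metric and the name `path_length` are assumptions made for illustration; a planner could equally use a grid or time-based distance.

```python
import math


def path_length(waypoints):
    """Sum of Euclidean distances between consecutive waypoints."""
    return sum(math.dist(p, q) for p, q in zip(waypoints, waypoints[1:]))


# Three waypoints: a 3-4-5 leg followed by a straight 6-unit leg.
route = [(0, 0), (3, 4), (3, 10)]
total = path_length(route)
```

For this route the two legs have lengths 5 and 6, so the total cost is 11.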

4.2. Trajectory Designing

Trajectory design involves planning a fully dynamic flight path, including speed, time, angle, and acceleration as shown in

Figure 6. This method is commonly used in autonomous drones and robots, where the flight must be not only accurate but also smooth and energy-efficient. For this purpose, a cost function is commonly used to minimise the flight speed and its change (acceleration) [27]. The function given below is based on this principle:

$$J_{\text{traj}} = \int_{0}^{T} \big( \lVert v(t) \rVert^2 + \lVert a(t) \rVert^2 \big)\, dt \tag{4}$$

where:

$v(t)$ is the velocity;

$a(t)$ is the acceleration;

$T$ is the mission duration.
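To make the difference from pure path length concrete, the following sketch approximates a velocity-plus-acceleration cost from sampled positions. It assumes an unweighted integrand of squared speed plus squared acceleration, a fixed sampling interval `dt`, and finite differences; the function name `trajectory_cost` and the sample trajectories are hypothetical.

```python
def trajectory_cost(positions, dt):
    """Riemann-sum approximation of the integral of |v|^2 + |a|^2 (assumed form)."""
    # Finite-difference velocities between consecutive position samples.
    vel = [tuple((b - a) / dt for a, b in zip(p, q))
           for p, q in zip(positions, positions[1:])]
    # Finite-difference accelerations between consecutive velocities.
    acc = [tuple((b - a) / dt for a, b in zip(u, v))
           for u, v in zip(vel, vel[1:])]

    def sq(vec):
        return sum(c * c for c in vec)

    return dt * (sum(sq(v) for v in vel) + sum(sq(a) for a in acc))


# Same start, same end, same duration, but different motion profiles.
smooth = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
jerky = [(0.0, 0.0), (2.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
cost_smooth = trajectory_cost(smooth, 1.0)
cost_jerky = trajectory_cost(jerky, 1.0)
```

Both trajectories cover the same 3-unit path, so a path planner would rate them equally, whereas this cost penalises the stop-and-go profile (10 versus 3 in this example).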

Figure 6.

Illustration of trajectory planning/designing.


Table 1 provides a comparative overview of the main differences, application areas, and key technical aspects between path planning and trajectory designing. This comparison reveals that path planning is typically employed to find a safe path in a static environment. In contrast, trajectory design provides a smoother and more time-efficient path in a dynamic and uncertain environment, making it more flexible and better suited to modern UAV missions.

5. UAV Trajectory Design Issues and Use of MTSP

5.1. Nature of Problems and Solution Sequence

Trajectory design for UAVs is a complex problem, especially when targets at multiple locations must be reached and the mission duration or energy is limited [59]. The following logical sequence is adopted to solve this problem:

1. Mission Definition: Target points, time limit, and objectives are specified.
2. Modelling: Targets are modelled as nodes, paths as edges, and distance/time as weights [60,61].
3. Problem Classification:
   - If there is one UAV → TSP [62,63].
   - If there are multiple UAVs → MTSP [64,65].
4. Trajectory Optimisation: A solution is derived using an appropriate heuristic or AI algorithm, which includes collision avoidance, energy limits, and other practical requirements [64,65].
5. Simulation or Practical Testing: The performance of the obtained solution is tested.

5.2. TSP and Its Application to UAVs

If there is only one UAV and it has to visit $n$ destinations, the problem becomes the Travelling Salesman Problem (TSP) [62,63].

The objective of TSP is to visit all the destinations in the shortest distance or time and finally return to the starting point. The UAV path shown in Figure 5 is a practical example of solving the same TSP problem, where the UAV visits all the targets (waypoints) in a specific order to minimise the total distance.

$$\min \sum_{i} \sum_{j \neq i} c_{ij}\, x_{ij} \tag{5}$$

where:

$c_{ij}$ is the cost of travelling, i.e., time and distance from location $i$ to $j$;

$x_{ij} = 1$ if the path from $i$ to $j$ is chosen, and $0$ otherwise.
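For a handful of targets, the TSP objective can be evaluated exhaustively, which makes the meaning of the cost and the chosen legs easy to see. The sketch below is a brute-force illustration only (it scales factorially and is not a method proposed in the reviewed studies); the cost matrix and the name `tsp_brute_force` are made up for the example, with node 0 assumed to be the starting point.

```python
from itertools import permutations


def tsp_brute_force(cost):
    """Return (best_cost, best_tour) for a closed tour that starts and ends at node 0."""
    n = len(cost)
    best = (float("inf"), None)
    for order in permutations(range(1, n)):
        tour = (0, *order, 0)
        # Total cost is the sum of c_ij over the legs selected by this tour.
        total = sum(cost[i][j] for i, j in zip(tour, tour[1:]))
        if total < best[0]:
            best = (total, tour)
    return best


# Asymmetric costs, e.g. flight time with wind; row i, column j = cost from i to j.
cost = [
    [0, 2, 9, 10],
    [1, 0, 6, 4],
    [15, 7, 0, 8],
    [6, 3, 12, 0],
]
best_cost, best_tour = tsp_brute_force(cost)
```

With these values the cheapest closed tour is 0 → 2 → 3 → 1 → 0 at a cost of 21, which is why heuristic and learning-based solvers are needed once the number of targets grows.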

5.3. When It Comes to Congestion: The Need for MTSP

TSP becomes inadequate in the presence of more than one UAV. Therefore, we use the MTSP, which assigns paths to multiple UAVs such that they collectively visit all the destinations in the shortest distance or time, and each UAV eventually returns to its starting point (depot) [64,65]. The UAV trajectory shown in Figure 6 is a practical example of solving the same MTSP problem, where each UAV visits a certain number of targets to minimise the total cost.

5.3.1. Definition and Mathematical Model of MTSP

MTSP is an extended model, in which the following are true:

m UAVs (salesmen);

n targets (tasks or cities);

Each target is assigned to only one UAV;

All UAVs start and return from a depot.

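Objective of MTSP:

$$\min \sum_{k=1}^{m} \sum_{i} \sum_{j \neq i} c_{ij}\, x_{ij}^{k} \tag{6}$$

where:

$x_{ij}^{k} = 1$ if UAV $k$ goes from location $i$ to $j$, and $0$ otherwise;

$c_{ij}$ is the distance or time value;

$m$ is the total number of UAVs.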

5.3.2. Application of MTSP to UAV Swarms

The use of MTSP in UAV swarms provides the following benefits:

Parallelism: All UAVs perform separate missions simultaneously.

Load Balancing: Fair distribution of targets is possible.

Time Efficiency: The total mission time is reduced.

Collision Avoidance: Obstacles are detected and avoided to ensure safe navigation.

While TSP is a suitable model for a single UAV, MTSP provides an efficient and workable framework for UAV swarms [60,61]. It enables missions to be completed in less time and with greater efficiency.

6. Different Trajectory Design Methods

Trajectory design is a complex problem, especially when it comes to UAVs or multi-agent systems. There are different strategies to solve this problem, which can be divided into three basic types.

6.1. Traditional Algorithms Used in UAV Swarms (In the Context of MTSP)

These TAs are usually used in static or known environments.

Well-known algorithms include:

Rapidly-Exploring Random Trees (RRT) [67];

Dynamic Window Approach (DWA) [68].

UAV swarm-based trajectory design leverages TAs to identify optimal and safe paths to targets. In MTSP scenarios, these algorithms efficiently generate individual UAV trajectories, as illustrated in Algorithm 1, which depicts TAs’ operations.

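| Algorithm 1 UAV swarm trajectory design using MTSP. |

- 1: Input: $V = \{v_0, v_1, \dots, v_n\}$ (locations, with $v_0$ as the base station); $U = \{u_1, \dots, u_m\}$ (set of UAVs); $c_{ij}$ (cost between locations).
- 2: Output: Paths $P_k$ for each UAV $u_k$, such that every location other than $v_0$ is visited by exactly one UAV.
- 3: Initial Step: Set $v_0$ as the starting location for each UAV, and mark all $v_i$ as unvisited (except $v_0$).
- 4: while unvisited nodes exist in $V$ do
- 5: for each UAV $u_k \in U$ do
- 6: Select the nearest unvisited node: $v^{*} = \arg\min_{v_j\ \text{unvisited}} c_{ij}$, where $v_i$ is the current location of $u_k$.
- 7: Add $v^{*}$ to path $P_k$ and mark it as visited.
- 8: end for
- 9: end while
- 10: Return: UAVs return to base $v_0$, with final paths $P_1, \dots, P_m$.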
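The greedy procedure in Algorithm 1 can be sketched as runnable code. This is a minimal illustration under stated assumptions: costs are Euclidean distances, UAVs take turns in a fixed round-robin order, and ties are broken by the lower node index. The name `greedy_mtsp` and the example coordinates are hypothetical.

```python
import math


def greedy_mtsp(locations, num_uavs):
    """Round-robin nearest-neighbour assignment; index 0 is the base station."""
    unvisited = set(range(1, len(locations)))
    paths = [[0] for _ in range(num_uavs)]  # every UAV starts at the base
    while unvisited:
        for path in paths:
            if not unvisited:
                break
            here = locations[path[-1]]
            # Nearest unvisited node; the index breaks ties deterministically.
            nearest = min(unvisited,
                          key=lambda j: (math.dist(here, locations[j]), j))
            path.append(nearest)
            unvisited.remove(nearest)
    for path in paths:
        path.append(0)  # every UAV returns to the base station
    return paths


# Base at the origin, two targets on each side of it.
locations = [(0, 0), (1, 0), (-1, 0), (2, 0), (-2, 0)]
paths = greedy_mtsp(locations, 2)
```

In this example the two UAVs split the targets by side, giving the paths `[0, 1, 3, 0]` and `[0, 2, 4, 0]`. A greedy rule of this kind is fast but gives no optimality guarantee, which is one motivation for the bio-inspired and AI-based methods discussed later.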

6.1.1. Dijkstra Algorithm and Its Role in UAV Swarms

Dijkstra’s algorithm is a classic graph search technique that finds the least-cost or shortest path from one point to all other points. It is beneficial in UAV trajectory design once the MTSP has been solved, as it provides an efficient and shortest path for each UAV to reach its assigned targets [

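7]. Thus, this algorithm helps to reduce both the time and total cost of mission completion. This concept can be expressed mathematically as:

$$d(v) = \min_{u:\,(u,v) \in E} \big( d(u) + w(u,v) \big) \tag{7}$$

where:

$d(v)$ is the least cost from the source node to node $v$;

$w(u,v)$ is the weight (distance or time) of the edge from $u$ to $v$;

$E$ is the set of edges of the graph.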

Research on UAV path planning has proposed basic algorithms that typically determine the optimal path from a cost map in a static 2D or 3D grid environment, yielding effective results in simple scenarios. However, these methods are generally limited to single-UAV operations and cannot coordinate large-scale UAV swarms [

69]. In the same vein, another study designed a pathfinding model for a group of 3–10 UAVs, taking into account battery limits, charging stations and coverage constraints, which provides more effective coverage and better mission completion. However, path overlap remains a key challenge [

70]. In another study, the initial paths obtained from classical Dijkstra are improved by PSO to enhance collision avoidance and path selection, resulting in better performance in complex scenarios with reduced path overlap and outperforming classical Dijkstra [

58]. Additionally, dynamic-planning-based methods, which utilise local replanning with the Bresenham algorithm, have been proposed to avoid unknown obstacles in both static and dynamic environments. They are mainly effective for single UAVs and are capable of handling sudden changes and new obstacles [

7]. Although Dijkstra-based methods provide reliable routing for UAVs, classical Dijkstra suffers from synchronisation problems and a lack of coordination in large-scale UAV swarms, which makes it inadequate for large systems. However, its modern variants, such as multi-UAV Dijkstra and Dijkstra + PSO [58], overcome these weaknesses and provide more reliable solutions within UAV swarms with better coverage, effective collision avoidance, and less interference.
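For reference, a compact sketch of classical single-source Dijkstra is given below, using a binary heap from the Python standard library. The graph, node names, and edge weights are made up for illustration; the multi-UAV and PSO-enhanced variants cited above are not reproduced here.

```python
import heapq


def dijkstra(graph, source):
    """Least cost from source to every reachable node (non-negative weights)."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist.get(u, float("inf")):
            continue  # stale heap entry, a shorter route to u was already found
        for v, w in graph.get(u, []):
            nd = d + w
            # Relaxation: keep the cheaper of the known cost and the route via u.
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                heapq.heappush(heap, (nd, v))
    return dist


# Hypothetical waypoint graph: node -> list of (neighbour, cost) pairs.
graph = {
    "depot": [("A", 4), ("B", 1)],
    "B": [("A", 2), ("C", 5)],
    "A": [("C", 1)],
    "C": [],
}
dist = dijkstra(graph, "depot")
```

Here the direct edge from the depot to A costs 4, but the route through B costs only 3, so the algorithm reports 3 for A and 4 for C.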

6.1.2. A* Algorithm

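The A* algorithm is a heuristic-guided version of Dijkstra, which uses the heuristic function $h(n)$ to speed up the search process [71]. It considers the least-cost path, as well as the estimated remaining distance, in the graph-based search, making it more computationally efficient than the classical Dijkstra algorithm. The cost function in the A* algorithm can be expressed as:

$$f(n) = g(n) + h(n) \tag{8}$$

where:

$g(n)$ is the cost of the path from the start node to node $n$;

$h(n)$ is the heuristic estimate of the remaining cost from node $n$ to the goal;

$f(n)$ is the estimated total cost of a path through node $n$.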

The TA grid-based A* algorithm is utilised for UAV scheduling and routing, providing efficient coordination of 3–10 UAVs while minimising mission overlap through temporal offset batching. To improve upon this, Jump Point Search (JPS)-Enhanced A* is introduced, which finds paths faster by skipping unnecessary nodes and gives better results in environments with static obstacles. However, some path overlap is reported during Moving Window Search [72]. As a further development, the 3D A* algorithm provided efficient navigation in complex three-dimensional environments using octree-based space partitioning and reduced collisions through per-UAV deflection layers. Still, its performance remained relatively limited in unpredictable dynamic scenarios [73]. In the same sequence, Classification A* implemented local A* on each UAV by dividing the workspace into sectors, which reduced the computing time and achieved better results [74]. Overall, A* and its variants provide fast, reliable, and effective solutions for UAV trajectory design; however, challenges such as scalability and limited replanning capacity in large-scale UAV swarms and highly dynamic environments remain, which require more hybrid and adaptive approaches to overcome.
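A minimal grid-based A* sketch is shown below to illustrate how the heuristic guides the search. It assumes a 4-connected occupancy grid with unit step cost and a Manhattan-distance heuristic; the function name `astar` and the example grids are hypothetical, and none of the JPS, 3D, or sector-based variants discussed above are implemented.

```python
import heapq


def astar(grid, start, goal):
    """Length of the shortest 4-connected path on a grid; 1 marks an obstacle."""
    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    g = {start: 0}
    heap = [(h(start), start)]  # entries are (f = g + h, cell)
    while heap:
        f, cell = heapq.heappop(heap)
        if cell == goal:
            return g[cell]
        if f > g[cell] + h(cell):
            continue  # stale entry, a cheaper route to this cell is known
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[0])
            if inside and grid[nr][nc] == 0:
                ng = g[cell] + 1
                if ng < g.get((nr, nc), float("inf")):
                    g[(nr, nc)] = ng
                    heapq.heappush(heap, (ng + h((nr, nc)), (nr, nc)))
    return None  # goal not reachable


# A wall forces the UAV to detour around the right-hand side.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
steps = astar(grid, (0, 0), (2, 0))
blocked = astar([[0, 1], [1, 0]], (0, 0), (1, 1))
```

The straight-line distance between start and goal is 2 cells, but the wall makes the shortest feasible path 6 steps long; when the goal is fully enclosed the function returns `None`.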

6.1.3. Rapidly-Exploring Random Trees (RRT)

RRT is a sampling-based path planning algorithm that rapidly grows new branches through random sampling in a given configuration space, to explore as much accessible space as possible [

67,

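75]. The following function is used to select the nearest node and extend it in a randomly chosen direction:

$$x_{\text{new}} = x_{\text{near}} + \epsilon \cdot \frac{x_{\text{rand}} - x_{\text{near}}}{\lVert x_{\text{rand}} - x_{\text{near}} \rVert} \tag{9}$$

where:

$x_{\text{near}}$: current node in the tree that is closest to $x_{\text{rand}}$;

$x_{\text{rand}}$: randomly chosen point in the direction in which the tree is expanded;

$\epsilon$: step size that determines the extent of the expansion;

$\lVert x_{\text{rand}} - x_{\text{near}} \rVert$: Euclidean distance between the two points, which normalises the direction.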

The initial research utilises Multi-platform Space–Time RRT, which enables UAVs to operate in static and cluttered 3D environments with space and time constraints. This model provides smooth and flyable paths, where path overlap is significantly reduced by strictly enforcing the time and separation of each UAV. Another study [

76] adopted multi-RRT with kinodynamic constraints and Bézier curves, which not only provided smoother and shorter paths for 3–10 UAVs but also improved upon methods such as classical RRT and Theta-RRT [

77], while ensuring collision avoidance. Meanwhile, RRT is utilised for single-UAV scenarios in photogrammetry and aerial survey, where real-time obstacle avoidance is possible with the aid of stereo cameras, and safe navigation at speeds of 6 m/s is demonstrated in practical missions [

78]. Furthermore, a hybrid method is introduced that combines iterative RRT with the Salp Swarm Algorithm (SSA), in which SSA intelligently guides the expansion of nodes. This approach reduces path length, decreases the number of iterations and nodes used, improves computational efficiency, and further minimises overlap between UAV paths [

79]. Overall, RRT-based algorithms are highly effective in UAV trajectory planning, particularly in complex, dynamic, or partially known environments. Their main strength is fast search; however, the randomness and non-smooth nature of classical RRT often create limitations, which is why modern research is integrating these techniques with Bézier smoothing or SSA-guided approaches to enable smoother, collision-free, and computationally efficient trajectories for UAV swarms.
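The core RRT extension step (find the nearest tree node, then step toward the random sample) can be sketched as follows. This is an illustrative fragment rather than a full planner: collision checking, kinodynamic constraints, and smoothing are omitted, and clamping to the sample when it lies closer than one step is an added assumption. The names `nearest` and `steer` are hypothetical.

```python
import math


def nearest(tree, x_rand):
    """Tree node with the smallest Euclidean distance to the random sample."""
    return min(tree, key=lambda node: math.dist(node, x_rand))


def steer(x_near, x_rand, step):
    """Move from x_near toward x_rand by at most `step`."""
    dist = math.dist(x_near, x_rand)
    if dist <= step:
        # Assumption: if the sample is within one step, connect to it directly
        # (this also avoids dividing by zero when the points coincide).
        return tuple(x_rand)
    # Unit direction (x_rand - x_near) / dist, scaled by the step size.
    return tuple(a + step * (b - a) / dist for a, b in zip(x_near, x_rand))


tree = [(0.0, 0.0), (10.0, 0.0)]
x_rand = (3.0, 4.0)
x_near = nearest(tree, x_rand)
x_new = steer(x_near, x_rand, 1.0)
```

With a step size of 1, the new node lies one unit from `(0.0, 0.0)` along the direction of the sample, at approximately `(0.6, 0.8)`.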

6.1.4. Dynamic Window Approach (DWA)

DWA is a real-time local planning algorithm that selects a safe and feasible motion from the window of velocities $v$ and angular velocities $\omega$ that the UAV can reach from its current state. This method is effective because it enables the UAV to avoid collisions even in rapidly changing conditions and complex or partially unknown environments. It analyses possible movements based on velocity and angle, assesses the safety and feasibility of each path, and instantly selects the path that provides the least risk and the most efficiency [

80,

81]. In DWA, the objective function is used to select the optimal path, considering various factors such as target alignment, obstacle distance, and speed. Its mathematical expression is as follows:

$$G(v, \omega) = \alpha \cdot \text{heading}(v, \omega) + \beta \cdot \text{dist}(v, \omega) + \gamma \cdot \text{vel}(v, \omega) \tag{10}$$

where:

$v$: velocity, $\omega$: angular velocity;

$\text{heading}(v, \omega)$: target alignment;

$\text{dist}(v, \omega)$: distance from the obstacle;

$\text{vel}(v, \omega)$: current speed of the UAV;

$\alpha, \beta, \gamma$: weights that describe the relative influence of heading, clearance, and speed in decision making.

This function (Equation (10)) combines these parameters to produce a score for each possible move, based on which the most suitable move is selected.

DWA has been adopted in various scenarios in UAV swarms to enable quick response and collision avoidance. Several studies have shown that DWA-based approaches not only make the routes safer during missions but also significantly improve the overall efficiency of UAVs. For example, the authors of [

82] combined DWA with ORCA (Optimal Reciprocal Collision Avoidance), resulting in a 17% reduction in mission time and a 27.9% reduction in path length. A study [

83] utilised DWA with gradient-field costs to enable UAVs to navigate more effectively around non-convex obstacles, although gradient sensitivity occasionally led to local minima. Similarly, the authors of [

84] combined DWA with global planners such as Jump Point Search (JPS), where the combination of local collision avoidance and global route guidance provided smoother paths. Overall, DWA is a reliable method for real-time local motion planning, enabling UAVs to make swift decisions in dynamic and partially known environments. It provides collision-free trajectories in a short time and improves mission duration. However, for large-scale coordination and nonlinear interactions in complex UAV swarms, DWA typically requires integration with global planners or AI-based intelligence to provide more scalable and adaptive solutions.
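The scoring idea behind DWA can be illustrated with a short sketch that evaluates a few candidate velocity pairs and keeps the best one. Several simplifications are assumed here and are not taken from the cited studies: a planar unicycle motion model, a one-step prediction, a fixed safety radius of 0.5, a heading term defined as π minus the bearing error, and arbitrary weights. The function name `dwa_select` is hypothetical.

```python
import math


def dwa_select(pose, goal, obstacles, candidates, dt=1.0,
               alpha=1.0, beta=0.5, gamma=0.1, safety_radius=0.5):
    """Return the (v, omega) candidate with the highest weighted score."""
    x, y, theta = pose
    best, best_score = None, -math.inf
    for v, omega in candidates:
        # Predict the pose after dt with a unicycle model (assumed).
        th = theta + omega * dt
        nx, ny = x + v * math.cos(th) * dt, y + v * math.sin(th) * dt
        clearance = min(math.dist((nx, ny), o) for o in obstacles)
        if clearance <= safety_radius:
            continue  # inadmissible: the predicted pose is too close to an obstacle
        # Heading term: larger when the UAV points toward the goal.
        bearing = math.atan2(goal[1] - ny, goal[0] - nx)
        err = abs(math.atan2(math.sin(bearing - th), math.cos(bearing - th)))
        heading = math.pi - err
        score = alpha * heading + beta * clearance + gamma * v
        if score > best_score:
            best, best_score = (v, omega), score
    return best


# Goal straight ahead, with an obstacle slightly right of the direct line.
candidates = [(1.0, 0.0), (1.0, 0.5), (1.0, -0.5)]
choice = dwa_select((0.0, 0.0, 0.0), (10.0, 0.0), [(1.2, -0.2)], candidates)
```

Flying straight or turning right would bring the UAV inside the safety radius, so only the left turn `(1.0, 0.5)` remains admissible and is selected. In a full implementation the candidate set would be sampled from the velocities reachable under the UAV's acceleration limits.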

6.1.5. Dubins Path

The Dubins path is a classical geometric trajectory planning model designed for vehicles with limited turning radius, and is particularly suitable for fixed-wing UAVs where zero-radius turns are not possible [9,85]. The model searches for a minimum-length path that consists of only three basic movements: straight ahead ($S$), left turn ($L$), or right turn ($R$). The combinations of these movements create different possible paths, which can be expressed mathematically as:

$$\mathcal{D} = \{LSL,\ RSR,\ LSR,\ RSL,\ RLR,\ LRL\} \tag{11}$$

This set represents all the basic possible paths that the model evaluates for minimum distance or cost. In this way, the model compares the performance of each combination and selects the most efficient route, which saves both time and energy in UAV navigation and path planning.

The Dubins path model has been adopted in several studies in UAV trajectory planning. The authors of [

86] developed a Dubins-based motion planning framework for fixed-wing UAVs, which is found to be effective for constrained turns and short-path planning. Another study in [

87] designed minimum-turn paths for UAVs, which improve trajectory smoothness and mission efficiency in different environments. Furthermore, the authors of [

88] integrated Dubins paths into cooperative UAV swarms, providing collision-free trajectories in a multi-agent path-planning scenario despite the turning constraints.

The Dubins path model is a crucial technique for fixed-wing UAV swarms because it incorporates physical constraints, like turning radius, directly into the trajectory planning process. However, this model has limitations; it can only handle straight segments and constant-radius turns, making it less suitable for dynamic replanning. Therefore, it is often integrated with advanced methods or hybrid approaches in more complex scenarios.

Table 2 presents a comparative overview of different TAs in MTSP, showing that each algorithm plays a unique role in specific environments and scenarios. Observations indicate that a combination of different algorithms yields more effective, flexible, and situationally superior results in UAV swarm missions.

6.2. Bio-Inspired Methods Used in UAV Swarm

BIAs are inspired by simple yet effective behaviours found in nature. These heuristic-based methods are highly effective in solving NP-hard problems, such as the MTSP, particularly when designing trajectories for UAV swarms. Algorithm 2 illustrates the general operational framework of BIAs. Some well-known algorithms are as follows:

Pigeon-Inspired Optimisation (PIO) [

89].

Salp Swarm Algorithm (SSA) [

90].

Artificial Bee Colony (ABC) [

16].

Ant Colony Optimisation (ACO) [

14].

Particle Swarm Optimisation (PSO) [

13].

Genetic Algorithm (GA) [

15].

| Algorithm 2 General flow of BIAs for UAV swarm and MTSP. |

- 1: Input: $V = \{v_0, v_1, \dots, v_n\}$: hotspots (where $v_0$ is the base station); $U = \{u_1, \dots, u_m\}$: set of UAVs; $c_{ij}$: cost (distance, time, or energy) between locations; algorithm-specific parameters (e.g., pheromone $\tau$ for ACO, velocity $v$ for PSO, etc.).
- 2: Output: Optimal paths $P_1, \dots, P_m$ that minimise the total cost $\sum_{k=1}^{m} \sum_{(i,j) \in P_k} c_{ij}$, with each city visited by only one UAV (except the base station).
- 3: Initial step:
- 4: Create an initial population/colony/cluster for each BIA, $S = \{s_1, \dots, s_N\}$, where each solution $s$ is a set of possible paths for the UAVs.
- 5: Set initial algorithm parameters (pheromone level, inertia weight, learning coefficients, etc.).
- 6: while termination criterion is not met (e.g., max iterations or convergence) do
- 7: for each solution $s \in S$ do
- 8: Evaluate the total cost (fitness) of $s$.
- 9: Update pheromone (for ACO): $\tau_{ij} \leftarrow (1 - \rho)\,\tau_{ij} + \Delta\tau_{ij}$.
- 10: Update velocity and position (for PSO): $v \leftarrow w\,v + c_1 r_1 (p_{\text{best}} - x) + c_2 r_2 (g_{\text{best}} - x)$, $x \leftarrow x + v$.
- 11: Apply selection, crossover, mutation (for GA).
- 12: end for
- 13: Update best solution (global best or optimal).
- 14: end while
- 15: Output: Extract best solution $s^{*}$, providing optimal or near-optimal MTSP paths for UAVs.

6.2.1. Pigeon-Inspired Optimisation (PIO)

PIO is a BIA based on the navigation abilities, memory, and tendency of pigeons to use the Earth’s magnetic field. PIO can be used to navigate UAVs in the right direction toward the global target, providing speed and accuracy in path planning. The algorithm was first introduced by the authors of [

89], who described it in two main steps: the map and compass operator, inspired by pigeons’ direction recognition and magnetic sensing, and the landmark operator, which reflects pigeons’ memory and ability to fly to a target.

In recent research, PIO has been applied to various engineering and optimisation problems. The authors of [

91] applied PIO to UAV path planning and showed that it can derive paths to the target in less time than TAs. Similarly, Sharma and Panda [

92] used PIO in multiobjective trajectory design, where PIO struck a balance between collision avoidance and energy efficiency. Furthermore, the authors of [

93] adapted PIO for UAV swarms to provide effective navigation toward the global target even in dynamic and uncertain environments. In the UAV swarm MTSP scenario, the compass-based formula in PIO is used to guide each UAV to the global best position ($X_g$). This enables coordinated movement of UAVs and efficient multi-target allocation, minimising the total travel distance while maintaining swarm coordination and avoiding redundant paths. This compass-based update formula is mathematically expressed as:

$$V_i(t) = V_i(t-1)\,e^{-Rt} + \mathrm{rand}\cdot\left(X_g - X_i(t-1)\right), \qquad X_i(t) = X_i(t-1) + V_i(t) \tag{12}$$

where:

$X_i(t)$, $V_i(t)$: position and velocity of UAV (pigeon) $i$ at iteration $t$;

$R$: map and compass factor;

$\mathrm{rand}$: random number between 0 and 1;

$X_g$: global best position.

Equation (12) ensures that over time, each UAV gradually moves from its current position to the global optimal position, allowing the entire swarm to complete the MTSP mission in a coordinated and efficient manner.
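The compass-style update can be illustrated with a minimal Python sketch. It assumes the map-and-compass operator as it is commonly written in the PIO literature (velocity decays by a factor `exp(-R * t)` and is pulled towards the global best by a random weight). The function name `map_compass_step`, the default `R = 0.2`, and the continuous list-based encoding are illustrative assumptions; decoding such positions into discrete MTSP routes is not shown.

```python
import math
import random


def map_compass_step(positions, velocities, global_best, t, R=0.2, rng=random):
    """One map-and-compass update for every pigeon (candidate solution).

    Assumed form: v <- v * exp(-R * t) + rand * (x_g - x),  x <- x + v.
    R is the map-and-compass factor (hypothetical default).
    """
    decay = math.exp(-R * t)
    new_positions, new_velocities = [], []
    for x, v in zip(positions, velocities):
        r = rng.random()  # one random weight per pigeon, in [0, 1)
        v_next = [decay * vj + r * (gj - xj) for vj, xj, gj in zip(v, x, global_best)]
        x_next = [xj + vj for xj, vj in zip(x, v_next)]
        new_velocities.append(v_next)
        new_positions.append(x_next)
    return new_positions, new_velocities
```

With zero initial velocity, each call moves a pigeon part of the way along the straight line towards `global_best`, which mirrors the gradual convergence described above.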

6.2.2. Salp Swarm Algorithm (SSA)

The Salp Swarm Algorithm (SSA) is a bio-inspired optimisation method modelled on the movement of a swarm of salps in the ocean, where a leader salp moves towards a target and the rest of the salps follow it. SSA was first introduced by the authors of [

90], and consists of two stages: the movement of the leader salp that controls the exploration, and the movement of the follower salp that fine-tunes the exploitation.

SSA has demonstrated its effectiveness in various engineering applications over the past few years. For example, the authors of [

94] utilised SSA for UAV path planning and showed that it can identify the most efficient paths even in complex and dynamic environments. Similarly, the authors of [

95] implemented SSA in multiobjective optimisation, where energy consumption and path length are optimised simultaneously. Furthermore, the authors of [

96] extended SSA to complex problems, such as UAV swarm coordination and MTSP, and demonstrated its flexibility.

Leader salp update equation:

$$x_j^{1} = F_j \pm c_1\left((ub_j - lb_j)\,c_2 + lb_j\right)$$

Leader salp update equation components:

$x_j^{1}$: new position of the leader salp in dimension $j$;

$F_j$: position of the target (food source) in dimension $j$;

$ub_j$: upper bound in dimension $j$;

$lb_j$: lower bound in dimension $j$;

$c_1$: exploration coefficient, which decreases with time;

$c_2$, $c_3$: random numbers between 0 and 1; $c_3$ selects the sign of the update.

If $c_3$ selects the positive branch, the swarm moves towards the target.

If $c_3$ selects the negative branch, the swarm moves away from the target, which maintains diversity.

In SSA, the movement of the leader salp controls the overall direction and behaviour of the entire swarm. In the context of a UAV swarm, the leader can be a UAV that determines the general movement of the swarm towards the target, while the rest of the UAVs follow it. This mechanism is well suited to maintaining a balance between exploration and exploitation in complex path planning problems, such as MTSP.
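The leader update can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the exploration coefficient follows the schedule `2 * exp(-(4l/L)^2)` commonly used in the SSA literature, the sign is switched at a hypothetical threshold of 0.5 on the random number `c3`, and positions are clamped to the bounds. The names `c1_schedule` and `update_leader` are illustrative.

```python
import math
import random


def c1_schedule(iteration, max_iterations):
    """Exploration coefficient that decreases with time (assumed schedule)."""
    return 2.0 * math.exp(-((4.0 * iteration / max_iterations) ** 2))


def update_leader(food, lower, upper, c1, rng=random, threshold=0.5):
    """Move the leader around the food source (target), dimension by dimension.

    threshold is an assumption: c3 >= threshold takes the '+' branch,
    otherwise the '-' branch.
    """
    leader = []
    for fj, lb, ub in zip(food, lower, upper):
        c2, c3 = rng.random(), rng.random()
        step = c1 * ((ub - lb) * c2 + lb)
        xj = fj + step if c3 >= threshold else fj - step
        leader.append(min(max(xj, lb), ub))  # keep the leader inside the bounds
    return leader
```

Early iterations give a large `c1` and therefore wide steps around the target (exploration), while later iterations shrink the step and refine the position (exploitation).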

6.2.3. Artificial Bee Colony (ABC)

ABC is a popular bio-inspired optimisation algorithm inspired by the natural foraging behaviour of honeybees. The authors of [

97] introduced ABC, which consists of three types of bees: employed (worker), onlooker, and scout bees. Each bee plays a role in the process of finding new food (solutions), exchanging information, and making better choices. The ABC algorithm has been successfully applied to various complex problems in engineering and robotics. For example, ref. [

16] uses it for numerical optimisation, while [

98] shows in UAV path planning that crowd-based cooperation accelerates the search for better paths. In the same vein, ref. [

99] applied the ABC approach to multiobjective optimisation in UAV swarms, where the optimal speed and path are determined while considering constraints such as energy, time, and distance.

These studies present the current state of the problem and possible search paths, illustrating that each UAV requires both local and global information to determine the optimal direction. This concept is mathematically represented in the following equation, which is the basic formula for generating a new solution:

$$v_{ij} = x_{ij} + \phi_{ij}\left(x_{ij} - x_{kj}\right) \tag{14}$$

where:

$x_{ij}$: current solution (the current path or speed of the UAV);

$x_{kj}$: neighbouring solutions (other UAVs or alternative paths);

$\phi_{ij}$: a random value that diversifies the search.

This update mechanism, as explained in Equation (

14), describes how each UAV combines its current state with neighbouring information to generate a new solution. By applying this equation, improved paths and speeds are achieved, providing fast and effective solutions to complex problems, such as the MTSP. This enables each UAV to determine the optimal path or trajectory in a cooperative manner. The collective intelligence of the UAVs yields faster and more efficient solutions to complex problems.
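A minimal Python sketch of this neighbour-based update is given below. It assumes the standard ABC rule in which one randomly chosen dimension of the current solution is perturbed relative to a randomly chosen neighbour, with the random factor drawn from [-1, 1]. The function name `abc_neighbour` and the list-of-lists encoding are illustrative assumptions.

```python
import random


def abc_neighbour(solutions, i, rng=random):
    """Generate a candidate from solution i and one random neighbour k != i.

    Assumed rule: v_ij = x_ij + phi * (x_ij - x_kj) for a single dimension j.
    """
    x_i = solutions[i]
    k = rng.choice([idx for idx in range(len(solutions)) if idx != i])
    j = rng.randrange(len(x_i))
    phi = rng.uniform(-1.0, 1.0)  # random value that diversifies the search
    candidate = list(x_i)         # copy, so the current solution is untouched
    candidate[j] = x_i[j] + phi * (x_i[j] - solutions[k][j])
    return candidate
```

In a full ABC loop, the candidate would replace the current solution only if its cost is lower (greedy selection); that step is omitted here.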

6.2.4. Ant Colony Optimisation (ACO)

ACO is another important BIA inspired by the natural path-finding behaviour of ants, where ants leave pheromone trails and use them to find the best path. The authors of [

100] introduced ACO, and it remains a benchmark method for many optimisation problems today.

The authors of [

101] utilised ACO for cooperative search and surveillance missions in UAVs, demonstrating that pheromone-based learning enables effective navigation for UAVs even in dynamic environments. Furthermore, the authors of [

102] modified ACO to solve UAV-based MTSP and observed that it provides better scalability in parallel UAV coordination.

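In MTSP, each UAV is considered as an “ant” searching for the best possible path to reach its target. The initial state of the problem includes all possible paths, and each UAV explores different ones. In this search process, each UAV learns from its own and other UAVs’ previous movements to choose the best path for the future. The following probability equation decides this selection:

$$P_{ij}^{k} = \frac{[\tau_{ij}]^{\alpha}\,[\eta_{ij}]^{\beta}}{\sum_{l \in N_i^{k}} [\tau_{il}]^{\alpha}\,[\eta_{il}]^{\beta}} \tag{15}$$

where:

$P_{ij}^{k}$: probability that UAV $k$ moves from target $i$ to target $j$;

$\tau_{ij}$: pheromone level, which indicates the previous success of a path;

$\eta_{ij}$: heuristic information ($\eta_{ij} = 1/d_{ij}$, with $d_{ij}$ the distance between $i$ and $j$), which gives the immediate attractiveness of the route;

$\alpha$, $\beta$: weights of the pheromone and heuristic terms;

$N_i^{k}$: set of targets not yet visited by UAV $k$.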

Equation (

15) helps each UAV calculate which of the following cities or targets is most suitable to choose. The probability of selecting a route with a higher pheromone level and shorter distance increases, while the probability of choosing a path with a lower pheromone level and longer distance decreases.

ACO’s pheromone trails provide UAVs with a “collective memory”, which is updated after each iteration. This means that, when a UAV passes a good route, it leaves pheromones along that route, which other UAVs sense and incorporate into their decisions. This collaboration results in the emergence of optimal routes in the final graph, where each UAV reaches its assigned targets in the shortest distance, time, and energy.
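The selection rule and the collective-memory update can be sketched as follows. This is an illustrative Python example that assumes the usual ACO transition rule (pheromone raised to `alpha`, inverse distance raised to `beta`) and a simple evaporate-then-deposit pheromone update on a symmetric graph. The function names and the parameters `rho` (evaporation rate) and `q` (deposit constant) are assumptions, not values from the reviewed studies.

```python
def transition_probabilities(current, unvisited, tau, dist, alpha=1.0, beta=2.0):
    """Probability of moving from `current` to each unvisited target."""
    weights = {
        j: (tau[current][j] ** alpha) * ((1.0 / dist[current][j]) ** beta)
        for j in unvisited
    }
    total = sum(weights.values())
    return {j: w / total for j, w in weights.items()}


def update_pheromone(tau, route, cost, rho=0.1, q=1.0):
    """Evaporate all trails, then deposit q / cost on every edge of `route`."""
    n = len(tau)
    for i in range(n):
        for j in range(n):
            tau[i][j] *= 1.0 - rho
    for a, b in zip(route, route[1:]):
        tau[a][b] += q / cost
        tau[b][a] += q / cost  # symmetric graph assumed
```

For example, with uniform pheromone and two candidate targets at distances 1 and 2, `beta = 2` gives selection probabilities of 0.8 and 0.2, so the nearer target is preferred but the farther one is still explored.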

This sequence (initial state → decision making through Equation (15) → pheromone updates → optimised paths) helps solve complex problems like the MTSP efficiently and consistently.

6.2.5. Particle Swarm Optimisation (PSO)

PSO is a popular bio-inspired metaheuristic algorithm inspired by the collective behaviour of flocks of birds and schools of fish. The authors of [

103,

104] introduced PSO, in which each possible solution is considered a “particle” that explores the solution space by continuously updating its velocity and position.

In recent years, PSO has been widely adopted in UAV path planning and swarm coordination problems. The authors of [

105] utilised PSO in the trajectory optimisation of UAVs and demonstrated that the algorithm quickly finds near-optimal paths, even in dynamic environments. Similarly, the authors of [

106] implemented PSO in UAV-based multi-target assignment (MTSP) and observed that this approach provides better load balancing while maintaining a low computational cost. Furthermore, the authors of [

107] used an improved version of PSO in UAV swarm collision avoidance, and the results showed that PSO-based coordination is effective in both safety and efficiency.

In MTSP, each particle represents a possible path or velocity and learns from its personal best and the group’s global best. The following equation controls the velocity update:

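$$v_i^{t+1} = \omega v_i^{t} + c_1 r_1\left(p_i - x_i^{t}\right) + c_2 r_2\left(g - x_i^{t}\right) \tag{16}$$

where:

$\omega v_i^{t}$: inertial component, which maintains the current direction and velocity;

$c_1 r_1 (p_i - x_i^{t})$: cognitive component, which drives movement towards the personal best position $p_i$;

$c_2 r_2 (g - x_i^{t})$: social component, which drives movement towards the collective best solution $g$;

$c_1$, $c_2$: learning coefficients;

$r_1$, $r_2$: random factors that diversify the search.

The following equation then updates the position:

$$x_i^{t+1} = x_i^{t} + v_i^{t+1} \tag{17}$$

where:

$x_i^{t}$: position of particle $i$ at iteration $t$;

$v_i^{t+1}$: updated velocity obtained from Equation (16).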

Together, these two Equations (

16) and (

17) show a process in which each UAV continuously improves its position and velocity, first by taking advantage of its own experience and then by taking advantage of the collective experience of the group. Thus, the collective intelligence of PSO facilitates the identification of optimal paths in MTSP, utilising the minimum distance, time, and energy, and enables real-time swarm coordination.
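The two-step update can be written compactly in Python. The sketch below assumes the standard PSO form (inertia, cognitive, and social terms followed by a position step). The function name `pso_step` and the default values for the inertia weight `w` and the learning coefficients `c1` and `c2` are illustrative assumptions.

```python
import random


def pso_step(x, v, p_best, g_best, w=0.7, c1=1.5, c2=1.5, rng=random):
    """One velocity and position update for a single particle."""
    r1, r2 = rng.random(), rng.random()  # random factors in [0, 1)
    v_next = [
        w * vj + c1 * r1 * (pj - xj) + c2 * r2 * (gj - xj)
        for xj, vj, pj, gj in zip(x, v, p_best, g_best)
    ]
    x_next = [xj + vj for xj, vj in zip(x, v_next)]
    return x_next, v_next
```

When a particle already sits on both its personal best and the global best, only the inertial term remains, so the velocity shrinks by the factor `w` at every step. Positions here are continuous; mapping them to discrete MTSP routes requires an additional encoding that is not shown.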

6.2.6. Genetic Algorithm (GA)

GA is a popular evolutionary optimisation technique based on the principles of natural evolution, such as selection, crossover, and mutation. Goldberg [

15,

18] introduced GA as a general framework for complex optimisation problems. Since then, GA has been widely used in various fields, including robotics and UAV path planning.

GA has repeatedly proven its usefulness in UAVs and swarm operations. The authors of [

108,

109] utilised GA for UAV mission planning and demonstrated how chromosome-based encoding reduces the total cost (in terms of time and distance) by optimising multiple paths. Furthermore, the researchers who published [

110] utilised GA for UAV trajectory optimisation in the context of the MTSP, which demonstrated significant improvements in load balancing and mission completion time among UAVs. Similarly, the studies [

111,

112] implemented GA in UAV-based collision-free path planning, and the results showed that GA-based approaches remain efficient and scalable even in large search spaces.

In MTSP, the GA represents each possible UAV path as a chromosome, where genes represent the sequence of cities or targets that the UAV can visit. The goal of the GA is to find the solution among these paths that provides the least cost (distance or time). The following fitness function is used to measure this performance:

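$$F(x) = \frac{1}{C(x)} \tag{18}$$

where:

$F(x)$: fitness of chromosome $x$;

$C(x)$: total cost (distance or time) of the path encoded by chromosome $x$.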

Equation (

18) ensures that the lower the cost of the path, the higher its fitness. As a result, the GA naturally prefers low-cost and high-fitness paths.

The GA iteratively generates new solutions:

1. Crossover: creates a new path by combining two existing paths.

2. Mutation: creates diversity by making minor changes to the path.

3. Selection: selects paths with better fitness for the next generation.

With each iteration, weaker solutions are eliminated and stronger solutions become more dominant, until the population converges on an optimal or near-optimal solution. The final part presents the results of this evolutionary process, where non-conflicting and low-cost paths for the UAVs emerge. Thus, GA’s evolutionary search enables the solution of complex problems, such as MTSP, quickly and efficiently, whether the problem involves trajectory planning, path allocation, or real-time swarm coordination.
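The chromosome encoding and the genetic operators can be illustrated with a short Python sketch. It makes several assumptions: a single-UAV chromosome (a permutation of targets that starts and ends at base station 0), an inverse-cost fitness, order crossover, and swap mutation. A multi-UAV encoding would additionally need breakpoints or a second chromosome part, which is not shown. All function names are illustrative.

```python
import random


def route_cost(route, dist, base=0):
    """Total cost of base -> route -> base for one UAV."""
    tour = [base] + list(route) + [base]
    return sum(dist[a][b] for a, b in zip(tour, tour[1:]))


def fitness(route, dist):
    """Inverse-cost fitness: cheaper routes score higher (assumed form)."""
    return 1.0 / route_cost(route, dist)


def order_crossover(parent1, parent2, rng=random):
    """Copy a slice from parent1, fill the rest in parent2's order."""
    n = len(parent1)
    a, b = sorted(rng.sample(range(n), 2))
    child = [None] * n
    child[a:b + 1] = parent1[a:b + 1]
    remaining = iter(g for g in parent2 if g not in child)
    return [g if g is not None else next(remaining) for g in child]


def swap_mutation(route, rng=random):
    """Exchange two randomly chosen targets."""
    mutated = list(route)
    i, j = rng.sample(range(len(mutated)), 2)
    mutated[i], mutated[j] = mutated[j], mutated[i]
    return mutated
```

Both operators preserve the permutation property, so every child still visits each target exactly once, which is the constraint the MTSP imposes on each UAV's route.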

6.3. Challenges in Bio-Inspired Algorithms

In the context of UAV swarms, several BIAs have been effectively adopted to solve complex combinatorial problems such as the MTSP. A specific natural phenomenon or organism inspires each algorithm, which then performs in UAV swarms with its unique mechanisms and advantages. However, each algorithm also has some limitations, which subsequent methods aim to address and improve.

Table 3 summarises these algorithms, describing the basic motivation of each algorithm, its role in UAV swarms/MTSP, and the main challenges.

This evolutionary sequence illustrates that each new algorithm overcomes the weaknesses of its predecessors to some extent. For example, PIO relies on basic GPS-like navigation behaviour; however, it often fails to reach the global optimum. This shortcoming is partially addressed by SSA, which introduced a simple leader–follower strategy; however, it also proved to be limited in more complex and dynamic environments.

Then, ABC improved exploration by modelling the foraging activity of worker bees; however, it took longer in large search spaces due to slow convergence. ACO introduced collective learning through cooperative pheromone trails; however, it suffered from problems such as premature convergence and pheromone evaporation.

PSO provided an effective yet simple coordination mechanism by combining individual and collective best (personal best and global best). Still, it often became stuck in local minima due to the difficulty in maintaining diversity. Finally, GA emerged with an evolutionary mechanism that provides substantial diversity through crossover and mutation, offering a highly reliable and robust solution to complex combinatorial problems, such as MTSP [

18,

110].

However, a fundamental limitation of GA is that it is primarily suited for offline scenarios, where all the data is already available. In online situations such as real-time UAV coordination, the computational complexity and latency of GA can limit quick decision making. Therefore, while GA performs well in offline mission planning, either lightweight algorithms or hybrid approaches may be more effective for online decision making [

105,

111,

113].

6.4. AI-Based and Innovative Methods

In recent years, artificial-intelligence-based methods have emerged as a crucial alternative for solving complex combinatorial problems, such as UAV swarm trajectory design and the MTSP. These innovative approaches have provided more adaptive, scalable, and data-driven solutions than TAs. AI-based algorithms enable UAVs to make autonomous decisions in changing environments and derive optimal routes in complex situations [

114,

115].

These AI-based approaches have ushered in a new era for UAV-based MTSP and trajectory planning, where UAVs not only operate according to pre-programmed rules but also adapt and perform effectively in complex, real-world scenarios, with the ability to learn and make autonomous decisions. Algorithm 3 outlines the operational framework of AI-based methods for UAV swarms.

| Algorithm 3 AI Techniques for UAV Swarm and MTSP |

- 1: Input: hotspots (including the base station); set of UAVs; cost (distance, time, or energy) between locations; AI-specific parameters (e.g., learning rate, neural network structure, etc.)
- 2: Output: paths that minimise the total cost, with each city visited by only one UAV (except the base station).
- 3: Initial step:
- 4: Initialise neural network weights or the reinforcement learning environment.
- 5: Set starting locations for each UAV.
- 6: while not converged (e.g., max epochs, acceptable error) do
- 7: for each UAV do
- 8: Input current state (current location, previous path, etc.) into the AI model.
- 9: Output next location for the UAV.
- 10: Add the next location to the UAV path.
- 11: Update model parameters based on the UAV’s decision (reinforcement learning: update Q-value or loss function).
- 12: end for
- 13: end while
- 14: Return: extract the best solution, providing optimal or near-optimal MTSP paths for UAVs.

6.4.1. Multi-Agent Reinforcement Learning (MARL)

MARL is an extension of traditional reinforcement learning in which multiple agents learn and act together in the same environment [

116,

121]. In MARL, each agent not only receives rewards and observations from the environment, but is also influenced by the presence and decisions of other agents. This feature is particularly suitable for UAV swarms because each UAV acts as an agent that determines its trajectory and decisions by taking into account the behaviour of other UAVs.

In recent years, MARL has been widely used for UAV swarm trajectory planning, MTSP, and cooperative decision making. For example, the study [

122] proposed a MARL-based framework for UAV swarms, which enables UAVs to jointly find optimal routes and share tasks (i.e., task allocation). Similarly, the authors of [

123] used a MARL model based on centralised training and decentralised execution (CTDE) for UAV collision avoidance, which provides better coordination in real time decisions. Furthermore, the authors of [

124] demonstrated that MARL enables UAVs to be cooperative and adaptive in dynamic MTSP scenarios, particularly in environments where targets and routes change over time.

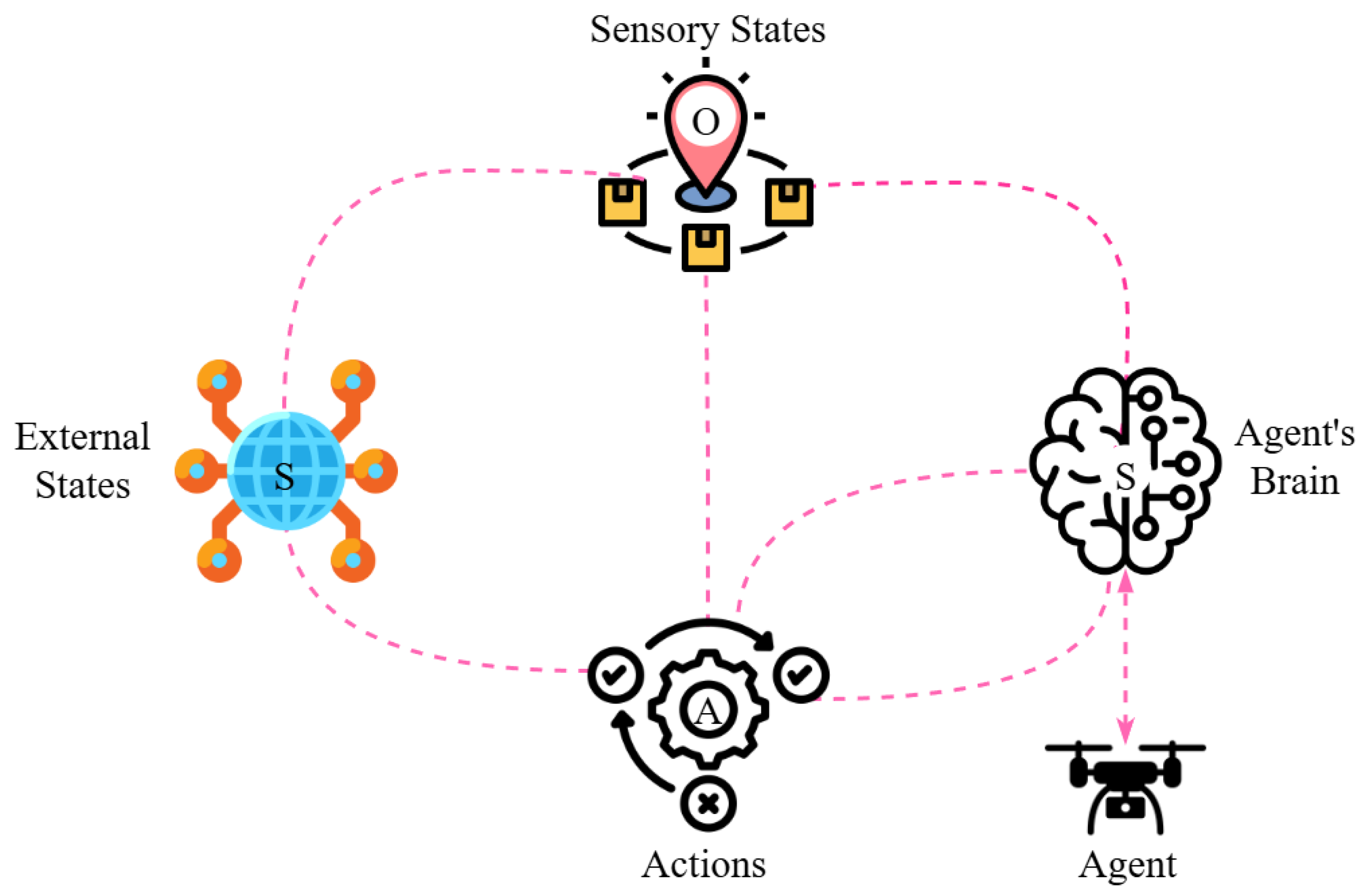

Figure 7 illustrates the concept of multi-agent reinforcement learning (MARL), where each UAV makes autonomous decisions based on its local observations and the rewards it receives. Each UAV learns not only from its own experience but also from the behaviour of other UAVs, allowing for better collective decision making. This process can be described mathematically by the following objective function:

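$$\max_{\pi_i} J_i(\pi_i, \pi_{-i}) = \mathbb{E}_{\pi_i,\,\pi_{-i}}\left[\sum_{t=0}^{\infty} \gamma^{t}\, r_{i,t}\right] \tag{19}$$

where:

$\pi_i$: policy of agent $i$, which chooses an action based on current observations;

$r_{i,t}$: reward received by agent $i$ at time $t$;

$\gamma$: discount factor, which maintains the importance of long-term rewards;

$\pi_{-i}$: policies of all other agents, which influence the environment and decisions.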

Figure 7.

Example of a MARL framework for UAV trajectory planning.

Equation (

19) specifies that each UAV optimises its policy in such a way that the long-term total reward is maximised, while also taking into account the behaviour of other UAVs.

MARL’s frequently updated decisions enable UAVs to learn from each other, taking paths that avoid collisions, reduce time and distance, and successfully solve complex problems, such as MTSP, in dynamic and uncertain environments.

Thus, MARL provides an effective solution for UAV swarms, enabling them to adapt in real time and collectively adopt the best strategy [

42,

124].

6.4.2. Deep Reinforcement Learning (DRL)

DRL is a modern learning method where an agent observes the environment, performs actions, and improves its policy based on rewards [

20,

125]. DRL combines the principles of classical reinforcement learning with deep neural networks, allowing it to learn efficiently even on high-dimensional inputs such as images, sensor data, and complex state spaces.

DRL has been widely used in complex combinatorial optimisation problems such as UAV trajectory design and MTSP. For example, the article [

126] proposes a policy framework based on DRL for UAV swarms, allowing UAVs to perform dynamic task allocation and real-time trajectory adjustments. Similarly, the study [

114] demonstrated that DRL enables UAVs to make adaptive routing decisions in response to changing situations during mission execution. Furthermore, the research presented in [

22] achieved significant improvements in both load balancing and mission completion time by implementing DRL in an MTSP setting. This process can be described mathematically by the following objective function:

$$J(\pi) = \mathbb{E}_{\pi}\left[\sum_{t=0}^{\infty} \gamma^{t}\, r_t\right] \tag{20}$$

where:

$\pi$: policy that describes the strategy for choosing the action;

$r_t$: reward received at time $t$;

$\gamma$: discount factor that balances long-term and short-term rewards.

Equation (20) explains that in DRL, the UAV optimises its policy $\pi$ in such a way that the long-term total reward is maximised. After each observation, the UAV estimates which action in the current state will yield the most benefit in the future and updates its decisions accordingly.
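The quantity being maximised can be made concrete with a small Python sketch. It assumes the usual discounted-return definition and estimates the objective by averaging returns over sampled episodes (a Monte Carlo estimate). The function names and the example reward lists are illustrative, not data from the reviewed studies.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of gamma**t * r_t over one episode, computed backwards."""
    total = 0.0
    for r in reversed(rewards):
        total = r + gamma * total
    return total


def estimate_objective(episodes, gamma=0.99):
    """Monte Carlo estimate of the objective: mean discounted return."""
    returns = [discounted_return(rewards, gamma) for rewards in episodes]
    return sum(returns) / len(returns)
```

With `gamma = 0.5`, the reward sequence `[1, 1, 1]` yields 1 + 0.5 + 0.25 = 1.75, which shows how later rewards count for less. Applying the same computation to each agent's own reward sequence gives the per-agent quantity that appears inside the MARL objective in Equation (19).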

The result of this iterative process is that the UAVs have learned from the environment and adopted better paths and target preferences for the MTSP. This has not only increased mission performance but also reduced execution time. Thus, DRL enables UAV swarms to operate effectively in dynamic and uncertain environments and automatically select the best paths [

115,

117].

6.4.3. Q-Learning/Deep Q-Network (DQN)

Q-Learning is a classical value-based reinforcement learning technique that learns the expected reward for each state-action pair and ultimately produces an optimal policy [

127].

Figure 8 demonstrates the fundamental framework of Q-Learning and DQN. After receiving state and reward from the environment, agents update the Q-Table to learn which action is best in which state, and this knowledge helps to improve subsequent decisions. The principle of this update is described in the following equation:

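$$Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha\left[r_t + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t)\right] \tag{21}$$

where:

$Q(s_t, a_t)$: estimated value of action $a_t$ in the current state $s_t$;

$r_t$: reward received after executing the action at time $t$;

$s_{t+1}$: next state;

$\max_{a'} Q(s_{t+1}, a')$: value of the highest-valued possible action in the next state;

$\alpha$: learning rate, which determines the weight of new and old Q-values;

$\gamma$: discount factor, which determines the importance of future rewards.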

Figure 8.

Illustrative example of Q-Learning/DQN approach for UAV-based MTSP.

Equation (

21) describes how Q-Learning updates the Q-value by combining new information with old information. The UAVs repeat this process, learning which action will provide the highest reward in each situation. As a result, the UAVs adopt routes and task allocations that not only reduce distance and time but also avoid collisions in a dynamic and uncertain environment. Thus, Q-Learning enables both real-time route optimisation and dynamic task allocation in MTSP, improving the overall performance of the swarm [

117,

128].
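A tabular version of the update in Equation (21) can be sketched in Python as follows. The example assumes a dictionary-backed Q-table keyed by (state, action) pairs with unseen entries defaulting to zero. The function name `q_update`, the state labels, and the action names are hypothetical.

```python
from collections import defaultdict


def q_update(Q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """Apply one Q-Learning update and return the new Q-value."""
    best_next = max(Q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    Q[(state, action)] += alpha * (td_target - Q[(state, action)])
    return Q[(state, action)]


# Usage: hypothetical hotspot labels as states and two discrete actions.
Q = defaultdict(float)
q_update(Q, "hotspot_A", "go", 1.0, "hotspot_B", ["go", "stay"], alpha=0.5)
```

Starting from an empty table, the first update with `alpha = 0.5` and a reward of 1 moves the Q-value halfway from 0 to the target, that is, to 0.5. A DQN replaces the table with a neural network that approximates the same values.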

Q-Learning enhances the discrete decision making capabilities of UAVs, whereas DQN addresses large state spaces. The work in [

128] proposes DQN-based trajectory planning for UAVs and observes better performance in complex urban settings. DQN combines the same principle with deep neural networks to address high-dimensional state spaces, as demonstrated by the authors of [

20] for human-level decision making.

Recent research has used Q-Learning and DQN for complex combinatorial optimisation problems such as UAV trajectory planning and the MTSP. For example, the study [

118] used DQN in UAV swarms to improve real-time path selection and reduce mission completion time in dynamic scenarios. Similarly, the work [

22] presented a Q-Learning-based task allocation approach for multi-UAV MTSP, which significantly improved load balancing among cooperative UAVs. Furthermore, the research presented in [

129] employed a DQN-based approach for UAV collision avoidance and adaptive navigation, yielding promising results in complex environments.

6.4.4. Actor–Critic Methods

Actor–Critic is one of the primary reinforcement learning methods that combines policy-based and value-based approaches [

130,

131]. These methods consist of two main parts, as illustrated in

Figure 9:

Actor: chooses an action and learns a policy $\pi_\theta(a \mid s)$.

Critic: estimates the value of the selected action ($V(s)$ or $Q(s, a)$) and provides feedback to the actor.

These methods are particularly suitable for problems where the action space is continuous, such as speed, angle, or throttle control, because they require precise and smooth control at each step [

132]. In the initial scenario, UAVs must not only decide which path to take but also make smooth adjustments to speed and angle while following that path, so that mission time is short and energy use is efficient. In such cases, the Policy Gradient update rule is used, which adjusts the policy parameters in such a way that the expected total reward is maximised:

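$$\nabla_\theta J(\theta) = \mathbb{E}_{\pi_\theta}\left[\nabla_\theta \log \pi_\theta(a \mid s)\, A(s, a)\right]$$

where:

$\theta$: policy parameters;

$\pi_\theta(a \mid s)$: probability of choosing action $a$ in state $s$;

$A(s, a)$: advantage function, which expresses the utility of an action relative to the average.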
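The effect of the policy gradient rule can be seen in a deliberately simplified Python sketch. It assumes a softmax policy over a small discrete action set whose parameters are the action logits themselves, with no state dependence, and takes a single-sample gradient step weighted by a given advantage. Continuous-control actors such as those in DDPG or SAC use neural networks instead; the function names and learning rate here are illustrative.

```python
import math


def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def policy_gradient_step(theta, action, advantage, lr=0.1):
    """theta <- theta + lr * advantage * grad log pi(action).

    For a softmax over logits, d log pi(a) / d theta_k = 1[k == a] - pi(k).
    """
    probs = softmax(theta)
    grad_log_pi = [(1.0 if k == action else 0.0) - p for k, p in enumerate(probs)]
    return [t + lr * advantage * g for t, g in zip(theta, grad_log_pi)]
```

A positive advantage raises the probability of the sampled action and a negative advantage lowers it, which is how the critic's feedback shapes the actor.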

Actor–critic methods have been used in UAV swarm research through several advanced implementations:

Proximal Policy Optimisation (PPO): The article [

132] introduced PPO, which is a stable and sample-efficient Actor–Critic algorithm. For UAVs, PPO-based frameworks have been successfully adopted for dynamic mission planning and MTSP coordination [

115].

Deep Deterministic Policy Gradient (DDPG): The authors of [

133] proposed DDPG for continuous control. In UAV swarms, DDPG is utilised to learn continuous parameters, such as velocity and angle, resulting in smoother trajectories.

Soft Actor–Critic (SAC): It is an Actor–Critic variant based on maximum entropy RL, which provides a better balance between exploration and exploitation. SAC has shown promising results in UAV collision avoidance and coverage scenarios [

134].

Hybrid Multi-Agent Actor–Critic Approaches: Huang et al. [

135] used the Actor–Critic architecture in the multi-agent counterfactual advantage (MACA) framework, which reduced collisions in UAV swarms by 90% and improved cooperative behaviour.

Actor–critic methods enable UAV swarms to make adaptive decisions in complex and continuous action domains. In the context of problems such as MTSP, these approaches would allow UAVs to manage the trade-off between local observations and global mission objectives; however, they also present challenges in terms of computational complexity and scalability in large-scale swarms [

117,

131].

6.4.5. Imitation Learning

Imitation Learning is a learning method based on the principle that a model learns to make better decisions by following the demonstrations of experts [

119,

136]. As depicted in

Figure 10, it uses data provided by human operators or expert agents to learn a new policy that performs the same actions as the expert. This method is more efficient than reinforcement learning, because it learns from expert demonstrations rather than “trial-and-error”.

Imitation Learning is particularly effective in the context of UAV trajectory planning and the MTSP. For example, Kim et al. [

137] employed an Imitation Learning framework for UAV swarms, enabling UAVs to replicate expert trajectories and enhance cooperative formation flying. Similarly, Wan et al. [

138] proposed the DAgger (Dataset Aggregation) algorithm, which enhances learning robustness through iterative expert corrections in UAV navigation and decision making. Furthermore, Pan et al. [

139] combined Imitation Learning with deep neural networks in UAV-based MTSP missions to significantly reduce planning time and increase mission efficiency. Imitation Learning approaches have been combined in multi-agent setups for UAV swarms, as in Zhang et al. [

140], who developed a hybrid imitation–reinforcement learning framework that initialises UAVs with expert data and then further improves performance through reinforcement learning.

In behaviour cloning, the goal of the model is to replicate the behaviour of the expert with maximum accuracy. To achieve this goal, a specialised loss function is used, which measures the difference between the predicted action and the actual action of the expert. The mathematical expression for this loss is as follows:

$$L(\theta) = \mathbb{E}_{(s, a) \sim D}\left[\left\lVert \pi_\theta(s) - a \right\rVert^{2}\right]$$

where:

$D$: training dataset, consisting of pairs $(s, a)$, where $s$ is the state and $a$ is the expert action;

$\pi_\theta(s)$: action predicted by the policy network;

$a$: actual action of the expert;

$\lVert \pi_\theta(s) - a \rVert^{2}$: squared error between the prediction and the actual action.

This loss function teaches the policy to replicate the expert’s actions as accurately as possible. The higher this error, the greater the loss, and the model will reduce this difference by updating its parameters $\theta$.
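The behaviour cloning loss can be computed directly, as in the following minimal Python sketch. It assumes the policy is any callable that maps a state vector to an action vector and that the dataset is a list of (state, expert action) pairs. The function name `bc_loss` and the toy policy in the usage lines are hypothetical.

```python
def bc_loss(policy, dataset):
    """Mean squared error between predicted and expert actions."""
    total = 0.0
    for state, expert_action in dataset:
        predicted = policy(state)
        total += sum((p - a) ** 2 for p, a in zip(predicted, expert_action))
    return total / len(dataset)


# Usage: a toy one-dimensional policy that doubles the state value.
toy_policy = lambda state: [2.0 * state[0]]
demonstrations = [([1.0], [2.0]), ([2.0], [3.0])]
loss = bc_loss(toy_policy, demonstrations)
```

In this toy case the first prediction matches the expert and the second is off by 1, so the mean squared error is 0.5. Training would adjust the policy parameters to drive this value down.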

Imitation Learning not only enables UAVs to learn rapidly from expert demonstrations but also provides data-efficient and low-cost training for complex multi-target missions such as MTSP. However, expert data collection and domain shift can be a challenge in large-scale UAV swarms [

119,

136].

6.4.6. Active Inference

In Active Inference, decisions are based on the principles of free energy or surprise minimisation, which are inspired by theoretical models of the human brain and have been adapted to machines. It is an emerging probabilistic decision making framework based on Bayesian theory, integrating prediction, planning, and action under a unified framework [

120,

141]. In this approach, illustrated in

Figure 11, the agent constructs a generative model of the world. Through this model, the agent minimises the gap between its expected sensory input and the actual observations. This gap is called free energy, and minimising it allows the agent to make more adaptive decisions. This function measures the deviation between the agent’s belief and the model, expressed mathematically as:

$$F = \mathbb{E}_{q(s)}\left[\ln q(s) - \ln p(s, o)\right]$$

where:

$q(s)$: posterior belief of the agent about a state $s$;

$p(s, o)$: generative model, which represents the joint probability of state $s$ and observation $o$.

Figure 11.

Representation of the Active Inference framework for UAV trajectory planning.

This function forces UAVs to learn in such a way that the difference between their belief and the actual model is minimised.
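For a discrete state space, the free energy can be evaluated in a few lines of Python. The sketch below assumes a fixed observation, a belief `q` given as a list of probabilities over states, and a generative model given as the list of joint probabilities of each state with that observation. The function name and the numbers in the usage lines are illustrative.

```python
import math


def free_energy(q, joint):
    """F = sum_s q(s) * (ln q(s) - ln p(s, o)) for one fixed observation o."""
    return sum(
        qs * (math.log(qs) - math.log(ps))
        for qs, ps in zip(q, joint)
        if qs > 0.0  # states with zero belief contribute nothing
    )


# Usage: two hidden states, joint probabilities with the observed o.
joint = [0.3, 0.1]
evidence = sum(joint)                       # p(o)
posterior = [p / evidence for p in joint]   # exact Bayesian posterior
f_exact = free_energy(posterior, joint)
f_rough = free_energy([0.5, 0.5], joint)
```

When the belief equals the exact posterior, the free energy reaches its minimum, which is the negative log evidence (surprise) of the observation. Any other belief, such as the uniform one above, gives a larger value, so lowering the free energy pulls the belief towards the posterior.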

In the context of MTSP, this method enables UAVs to design a trajectory and path based on predictions, thereby enhancing adaptation and facilitating real-time adjustments during the mission. As a result, UAV swarms reach their targets with greater precision and coordination, using minimal energy, regardless of the uncertain environment.

Applications of Active Inference have emerged in UAV research in recent years. For example, a goal-directed approach includes the TSPWP world model, which provides dictionary-based planning for effective flight by minimising surprises to a UAV in areas with wireless coverage. This model shows better results than Q-Learning in terms of decision making speed and stability, although further experiments are needed for full integration at the swarm level [

142]. In the same vein, Active-MGDBN (a hybrid of Gaussian Dynamic Bayesian Network) is introduced, which provides autonomous path planning and self-supervision, and increases flight flexibility and speed by suggesting optimal paths based on assumptions in an unknown network environment. At the same time, it does not require training on specific datasets, as it is capable of learning autonomously [

25]. Another model is inspired by the decision-making style of human drivers, where decisions are made based on Bayesian cognition and free-energy minimisation. Although this model has not yet been directly applied to UAVs, its theoretical relevance makes it readily extensible to challenges such as UAV collision avoidance [

143]. Smith et al. [

144] showed that Active Inference enables UAVs to make successful decisions even in partially observable and dynamic environments. In contrast, Pezzulo et al. [

145] provided predictive awareness to UAVs during missions through Bayesian Active Inference models. Furthermore, Millidge et al. [

146] proposed a deep Active Inference framework that combines generative models with deep neural networks for UAVs, showing encouraging results in complex scenarios such as multi-target planning. Overall, Active Inference gives a UAV swarm adaptive and prediction-driven decision-making capabilities. It provides a strong theoretical foundation through which UAVs can learn stable navigation in uncertain environments and effectively achieve speed, coordination, and continuously updated strategies during complex missions.

6.5. Challenges in AI-Based Algorithms

In the context of UAV swarms, various AI-based methods are employed to solve complex problems such as the MTSP. Each algorithm has strengths that stem from its learning style and neural processing, but each also has weaknesses. In recent years, several research works have demonstrated how one method succeeds another and overcomes its shortcomings, ultimately leading to the emergence of a generative and explainable short language model as a robust and unified framework [116, 117, 144].

Table 4 presents a comparison of the basic concepts and roles of different AI-based methods in UAV swarms and MTSP. It shows that each method is effective in specific situations but has its limitations; therefore, a combination of different AI techniques can be more flexible and scalable, and can provide better results in uncertain environments.

This study begins with MARL, which is designed for multi-agent coordination and cooperative task allocation in UAV swarms [121, 122, 124]. MARL gave UAVs the ability to learn and cooperate; however, it still had problems such as scalability, communication overhead, and multi-agent credit assignment.

Then came DRL, which can learn entire mission-level policies [20, 126]. However, DRL requires large amounts of data, time, and computational resources. This limitation is alleviated by value-based methods such as Q-Learning/DQN, which are effective for small and discrete action spaces but are not suitable for continuous UAV control [128].

Next are Actor–Critic methods, which combine policy and value learning and are effective for continuous actions, such as speed and angle [132, 133]. However, these methods can be unstable without careful hyperparameter tuning. Imitation Learning went a step further, enabling UAVs to learn rapidly from expert data [137, 138]. However, it demonstrates limited adaptability when new or unforeseen situations arise.

To address these problems, Active Inference emerged as a promising solution, based on Bayesian generative models that combine observation, prediction, and action into a unified framework [120, 144]. Active Inference works effectively even with limited data, providing UAVs with adaptive decision-making capabilities in uncertain environments and enabling real-time mission execution.

Overall, this progressive evolution demonstrates how each approach addresses the weaknesses of the previous one, and ultimately, Active Inference emerges as a state-of-the-art, adaptable, and computationally efficient method for complex multi-agent problems, such as UAV swarm trajectory planning and MTSP.

Table 5 illustrates when and where different approaches are used to solve complex problems such as UAV trajectory planning and MTSP. While TAs are simple and computationally efficient, they are limited to static situations. BIAs are helpful for more complex and large-scale optimisation; however, they require parameter tuning and computational resources. AI-based approaches, particularly DRL and Active Inference, are most promising in high-dimensional and uncertain scenarios; however, they require specialised expertise, advanced computational setups, and often large training datasets.

6.6. Hybrid Methods

Efficient trajectory design for UAV networks can be achieved using hybrid techniques that combine methods such as 2-OPT, genetic algorithms (GA), and Active Inference. Initially, the 2-OPT algorithm is employed to generate offline training examples, where UAV paths are optimised based on minimum distance and time. These data are then used to train a world model, enabling the UAV to self-supervise its environment and select an online policy through Active Inference [25]. Another study proposed a GA-based hybrid approach to generate repulsion forces in UAV swarm paths, thereby reducing collisions, overlaps, and interference among UAVs while producing optimal paths under the challenges of MTSP [147]. The data generated by 2-OPT are fed into an Active Inference model, allowing UAVs to analyse online situations, adapt their policies accordingly, and perform fast, stable, and reliable path planning. This hybrid framework enables UAVs not only to learn from offline training but also to make optimal decisions in real time through online Active Inference, resulting in significant improvements in network performance, overall capacity, and the sustainability of route planning [142].
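To make the role of 2-OPT concrete, the following is a minimal sketch of the local search for a single closed tour. It is not the implementation used in the cited works: the function names `tour_length` and `two_opt`, the Euclidean cost, and the four example targets are assumptions chosen for illustration, and mission time is not modelled.

```python
import math


def tour_length(points, order):
    """Length of the closed tour that visits points in the given order."""
    n = len(order)
    return sum(
        math.dist(points[order[i]], points[order[(i + 1) % n]])
        for i in range(n)
    )


def two_opt(points, order):
    """Reverse tour segments for as long as a reversal shortens the tour."""
    best = list(order)
    best_len = tour_length(points, best)
    improved = True
    while improved:
        improved = False
        # Position 0 (the depot) is kept fixed; segments best[i..j] are reversed.
        for i in range(1, len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                cand_len = tour_length(points, candidate)
                if cand_len < best_len - 1e-12:
                    best, best_len = candidate, cand_len
                    improved = True
    return best, best_len


# Hypothetical targets: corners of a unit square, initially visited
# in an order whose legs cross each other.
targets = [(0.0, 0.0), (1.0, 1.0), (1.0, 0.0), (0.0, 1.0)]
initial_order = [0, 1, 2, 3]
route, length = two_opt(targets, initial_order)
print(route, length)
```

In a hybrid pipeline of the kind described above, each improved route could then be stored as an offline training example for the world model; how the examples are encoded is left open here.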

7. Online and Offline Training and Testing: In the Context of UAV Swarms

In offline training, the UAV swarm trajectory planning model is trained on previously collected data (trajectory sets, mission requirements, obstacle maps). This process is often conducted in a simulator or controlled environment to enable UAVs to learn effective policies before they are deployed on a mission. Once the model has completed training, it is deployed in the field [142, 148].

In online training, the model receives new observations in real time and continuously updates its policy. This method is essential in dynamic and uncertain environments because it enables UAVs to make adaptive decisions during the mission [142, 149].

Table 6 gives a comparative overview of key aspects of offline and online learning in UAV swarms. The comparison shows that offline training is safer and less complex but less flexible. In contrast, online training provides real-time adaptation at the expense of greater computational requirements and an increased risk of field errors.

7.1. Integration of Offline Training with Online Testing

An effective strategy for UAV swarm missions is to utilise offline training for initial learning, followed by validation and fine-tuning of the model in the field through online testing.

7.1.1. Offline Phase: BIA-Generated Data with Supervised/Unsupervised Learning

In the offline phase, the goal is to train an AI policy using data generated from BIAs (such as GA, PSO, or ACO) to learn expert-level performance [150]. To achieve this, a dataset is first defined, which consists of states and their corresponding actions:

$$D = \{(s_i, a_i)\}_{i=1}^{N},$$

where $D$ is the trajectory plan generated by the BIAs, and each pair $(s_i, a_i)$ represents a particular state and its corresponding expert action.

Based on this dataset, a loss function is defined so that the AI policy $\pi_\theta$ can accurately replicate the expert’s actions. The following optimisation problem is solved to minimise this loss:

$$\min_{\theta} \; \mathbb{E}_{(s,a)\sim D}\left[\mathcal{L}\big(\pi_\theta(s), a\big)\right],$$

where:

$D$: dataset generated by algorithms such as GA, PSO, or ACO;

$s$: state of the environment (e.g., location of the UAV or remaining targets);

$a$: action (trajectory segment or assignment) recommended by the BIAs;

$\pi_\theta$: AI-based policy that is learning to predict actions for these states.

Through this process, the AI policy can learn from the expert algorithm’s decisions to enhance path planning and task allocation in the MTSP, enabling effective and autonomous decision making without requiring expert assistance in the future.
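As a rough illustration of this offline phase, the sketch below fits a small tabular softmax policy to expert (state, action) pairs by stochastic gradient descent on a cross-entropy loss. The source does not specify the policy class or the loss, so the integer state and action encodings, the tabular policy, the hyperparameters, and the names `train_bc` and `greedy_action` are all assumptions made for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_bc(dataset, n_states, n_actions, lr=0.5, epochs=200):
    """Fit a tabular softmax policy to expert pairs (behaviour cloning).

    Each step lowers the cross-entropy loss -log pi(a | s) for one pair.
    """
    theta = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(epochs):
        for s, a in dataset:
            probs = softmax(theta[s])
            for k in range(n_actions):
                # d(-log pi(a|s)) / d theta[s][k] = probs[k] - 1[k == a]
                grad = probs[k] - (1.0 if k == a else 0.0)
                theta[s][k] -= lr * grad
    return theta


def greedy_action(theta, s):
    """Most probable action under the learned policy in state s."""
    probs = softmax(theta[s])
    return max(range(len(probs)), key=probs.__getitem__)


# Hypothetical expert data: state index -> index of the next target
# chosen by a BIA such as GA, PSO, or ACO.
expert_pairs = [(0, 2), (1, 0), (2, 1), (0, 2), (1, 0)]
theta = train_bc(expert_pairs, n_states=3, n_actions=3)
predicted = [greedy_action(theta, s) for s in range(3)]
print(predicted)
```

A tabular policy only memorises the states it has seen; a practical system would presumably replace it with a function approximator so that the policy generalises to unseen states.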

7.1.2. Online Phase (AI-Based Fine-Tuning)

During the online phase, the model learns from the environment in real time to further refine the policy it has previously learned. This process is achieved through RL-based fine-tuning, where the UAV updates its policy based on its observations and the rewards it receives, thereby improving mission performance [151]. The following policy update equation is used for this purpose:

$$\theta \leftarrow \theta + \alpha \, r_t \, \nabla_\theta \log \pi_\theta(a_t \mid s_t), \tag{27}$$

where:

$\theta$: parameters of the AI model, which are updated during the learning process;

$(s_t, a_t)$: current state and currently selected action;

$r_t$: reward received after the action, which reflects the effectiveness of the action;

$\alpha$: learning rate, which determines how much impact each update will have.

Equation (27) ensures that the UAV updates its policy toward actions with higher expected rewards.

In MTSP scenarios, this update mechanism enables real-time path and speed optimisation, rapid adaptation to new targets, and improved inter-UAV coordination, enhancing overall mission success.
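The online update can be sketched with a REINFORCE-style rule, in which the policy parameters move along the gradient of the log-probability of the chosen action, scaled by the reward and a learning rate. This is an illustrative assumption about the form of the update rather than the authors' exact method; the softmax policy, the single-state toy task, the reward values, and the name `reinforce_step` are hypothetical.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_step(theta, action, reward, alpha):
    """One update: theta <- theta + alpha * reward * grad log pi(action)."""
    probs = softmax(theta)
    return [
        t + alpha * reward * ((1.0 if k == action else 0.0) - probs[k])
        for k, t in enumerate(theta)
    ]


random.seed(0)
theta = [0.0, 0.0]      # one logit per candidate manoeuvre
rewards = [1.0, 0.0]    # hypothetical: manoeuvre 0 is the better choice
for _ in range(500):
    probs = softmax(theta)
    action = random.choices([0, 1], weights=probs)[0]
    theta = reinforce_step(theta, action, rewards[action], alpha=0.1)

final_probs = softmax(theta)
print(final_probs)
```

Because only rewarded actions change the parameters in this toy setting, the probability of the better manoeuvre never decreases. A real mission would condition the policy on the state and would usually subtract a baseline from the reward to reduce variance.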

In this approach, BIAs (e.g., GA, PSO, ACO) are employed to generate initial datasets and trajectories, which subsequently serve as training inputs for AI-based models. This dual strategy not only provides UAV swarms with a robust initial policy but also enables them to perform adaptive decision making in real time, significantly increasing both mission success and safety [150, 151, 152].

8. Decision Making and Collision Avoidance in UAV Swarms

8.1. Decision Making in Swarms

Decision making is a fundamental challenge in UAV swarm systems, as each UAV must not only focus on its mission (such as task execution or trajectory following) but also make real-time decisions while cooperating with other UAVs. The accuracy of these decisions is critical for mission success, collision avoidance, efficient energy use, and overall system stability [153, 154].

Decision making is typically described at two levels: local decision making, where each UAV makes decisions based on its local information (such as sensor data and the positions of nearby UAVs), and collective decision making, where UAVs share data and act according to a global strategy [155].

8.2. Online and Offline Decision Making

Offline decision making: In offline decision making, UAVs rely on pre-trained policies or role-based models, which are often trained on simulations or historical data. This approach is computationally lightweight and suitable for predictable missions (such as mapping or fixed survey paths) [156].

Online decision making: Online decision making is more dynamic: UAVs continuously observe the environment, share information, and make decisions in real time based on the current situation (such as sudden obstacles, changing weather conditions, or a new mission target). This approach makes UAV swarms more adaptive and resilient, but it requires more computational power and a robust communication structure [154].

Recent research is moving towards combining both methods in a hybrid manner: UAVs are first given a basic decision policy through offline learning, which is then continuously improved through online decision making during the mission [152, 157].

Table 7 illustrates a comparison of offline and online decision making in UAV swarms, showing that offline methods have low computational demands and rely on pre-trained policies. In contrast, online decision making offers greater adaptability and flexibility in real time, but requires more computational resources.

8.3. A Challenge in Decision Making: Collision Avoidance

When multiple UAVs fly together on close or shared paths, the risk of collision increases. This is a fundamental challenge for UAV swarms, as even a minor collision can not only damage a UAV but also cause the entire mission to fail. Therefore, collision avoidance strategies are considered an integral part of decision making. Recent research has shown that various approaches are used to improve collision avoidance in UAV swarms, including geometric, potential field-based, optimisation-driven, and AI-assisted methods [158, 159].

Collision avoidance refers to the techniques by which UAVs avoid each other or obstacles to maintain mission safety. Decision making in UAV swarms is not limited to path selection; it is a continuous, information-driven, and protective process involving real-time perception and mutual coordination. Especially in dynamic and uncertain environments, online decision making and collision avoidance are inseparable [160].

8.4. Modern and Scientific Methods for Collision Avoidance

Several approaches have been developed for collision avoidance in UAV swarms, which can be categorised into the following groups.

8.4.1. Geometric Methods

These methods are based on the geometry of the velocity and position of UAVs. For example, in the velocity obstacle method (VOM), each UAV predicts its future position based on the current position and velocity of other UAVs, and adjusts its velocity to avoid potential collisions [