Pixel-Level Segmentation of Retinal Breaks in Ultra-Widefield Fundus Images with a PraNet-Based Machine Learning Model

Abstract

1. Introduction

- Emphasis on the clinical significance of pixel-level delineation—enabling precise treatment planning and prophylactic interventions, and addressing the unmet clinical need for AI-based retinal break analysis.

- Training on a large, diverse, unfiltered dataset (34,867 UWF images) without excluding poor-quality or artifact-laden cases, thereby enhancing real-world applicability.

- Robust segmentation performance, achieving high Dice coefficient and IoU across heterogeneous cases, validated on an independent clinical test set.

2. Materials and Methods

2.1. Dataset

2.2. Annotation

2.3. Data Preprocessing and Augmentation

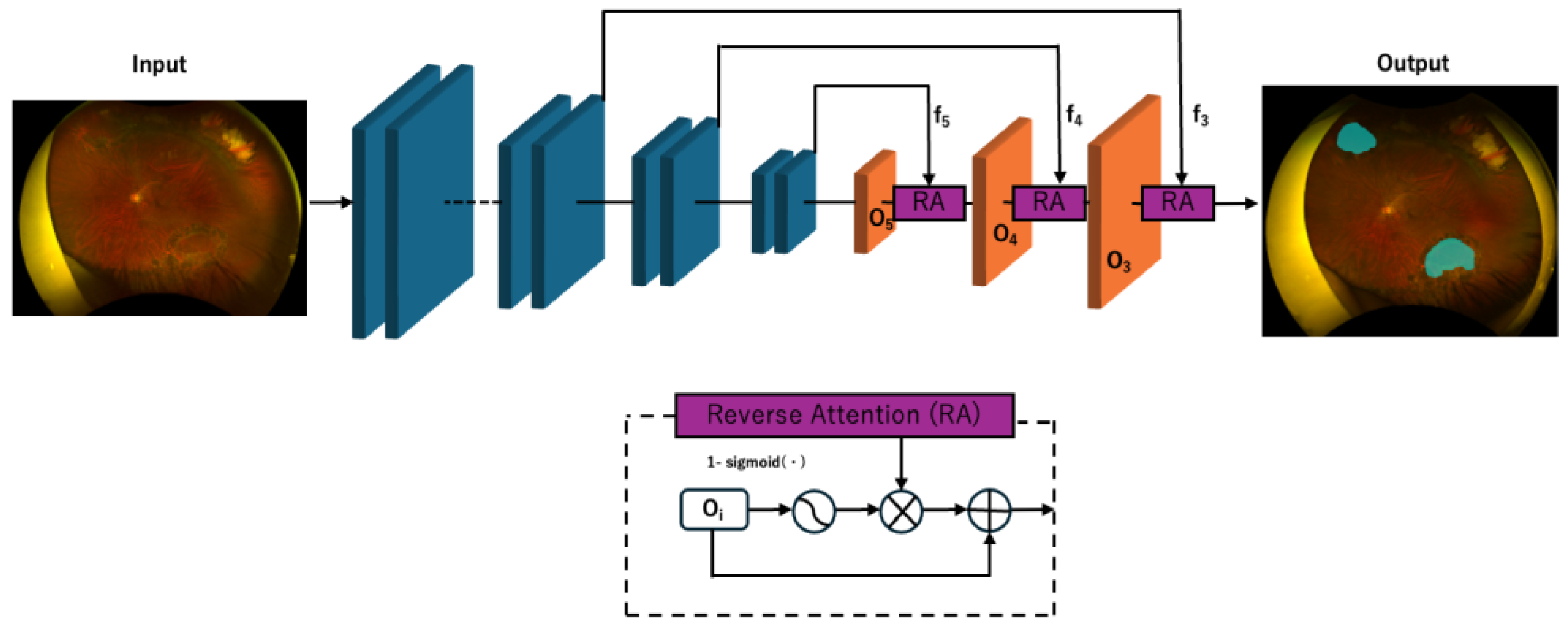

2.4. Deep Learning Model Development

2.5. Hyperparameter Optimization

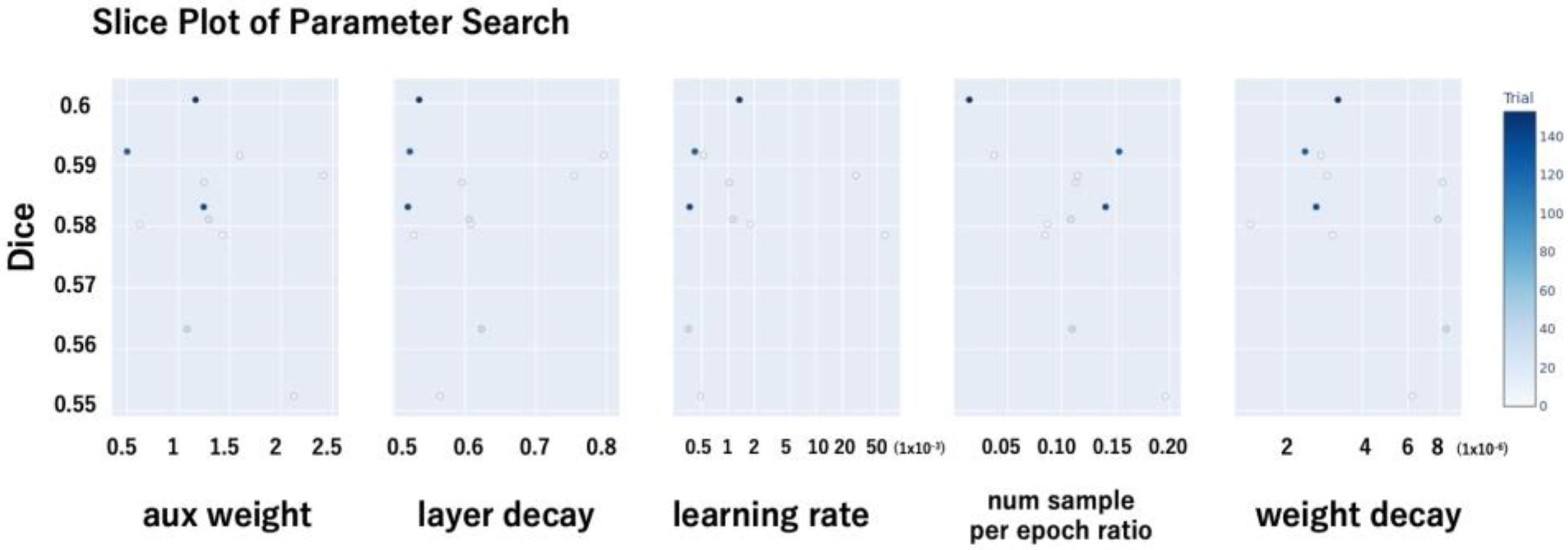

- Auxiliary loss weight (aux weight): This parameter controls the contribution of the auxiliary loss in the PraNet architecture, which is intended to facilitate learning by providing additional supervision to intermediate layers.

- Layer-wise learning rate decay (layer_decay): A decay factor was applied to assign smaller learning rates to deeper layers, thereby preserving the pretrained weights of the backbone network.

- Initial learning rate (lr): The base learning rate used during training.

- Negative sample ratio per epoch (num_sample_per_epoch_ratio): To address class imbalance—specifically, the lower prevalence of retinal break images—this parameter defined the number of negative (non-break) images randomly sampled per epoch relative to the number of positive (break) images. For instance, a ratio of 0.5 implies that for every 500 positive images, 250 negative images were sampled per epoch.

- Weight decay: The L2 regularization coefficient used to mitigate overfitting.

The hyperparameter search was conducted in a distributed manner. The optimal configuration was taken from the trial that achieved the highest Dice coefficient (Figure 2).
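
The negative-sampling ratio described in the list above can be illustrated with a short sketch. This is not the authors' training code: the helper name `sample_epoch`, the file names, and the choice to redraw negatives without replacement each epoch while keeping every positive image are all assumptions made for illustration.

```python
import random


def sample_epoch(positives, negatives, ratio, seed=None):
    """Return one epoch's image list: all positives plus a random subset of negatives.

    Hypothetical helper. `ratio` plays the role of num_sample_per_epoch_ratio,
    i.e. the number of negatives sampled per positive image.
    """
    rng = random.Random(seed)
    # Never request more negatives than are available.
    n_neg = min(len(negatives), round(ratio * len(positives)))
    epoch = list(positives) + rng.sample(list(negatives), n_neg)
    rng.shuffle(epoch)
    return epoch


# Made-up file names, sized to match the 500-positive example in the text.
positives = [f"break_{i}.png" for i in range(500)]
negatives = [f"normal_{i}.png" for i in range(5000)]

epoch = sample_epoch(positives, negatives, ratio=0.5, seed=0)
n_negative = sum(name.startswith("normal_") for name in epoch)
print(len(epoch), n_negative)  # 750 250
```

Redrawing the negative subset every epoch would let the model see many different non-break images over the course of training while keeping each epoch roughly balanced. Whether the original pipeline resampled per epoch in exactly this way is not stated here.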

2.6. Performance Evaluation

2.6.1. Primary Outcome—Pixel-Level Segmentation Performance

- Accuracy: the proportion of all pixels (lesion and background) correctly classified by the model. This metric was calculated as (TP + TN)/(TP + TN + FP + FN), where TP is true positive pixels, TN is true negative pixels, FP is false positive pixels, and FN is false negative pixels.

- Precision: the proportion of pixels predicted as lesion that were truly lesion pixels, calculated as TP/(TP + FP). This reflects the model’s ability to avoid false positives in the lesion class.

- Recall: the proportion of true lesion pixels that were correctly identified, calculated as TP/(TP + FN). This reflects the model’s ability to detect lesion pixels without omission.

- IoU: the ratio of the area of overlap to the area of union between the predicted lesion mask and the ground truth mask, calculated as TP/(TP + FP + FN). Because TN does not appear in the formula, IoU is far less affected than overall accuracy by the large number of background pixels.

- Dice score: the harmonic mean of precision and recall for the lesion class, calculated as 2 × TP/(2 × TP + FP + FN). The Dice score emphasizes correct prediction of lesion pixels and is widely used as a primary segmentation quality metric.

- Centroid distance score: the Euclidean distance (in pixels) between the centroid of the predicted lesion mask and the centroid of the corresponding ground truth mask. The centroid of each mask was computed as the mean (x, y) coordinate of all pixels within that mask. The distance was then normalized by the image width (1536 pixels) to yield a unitless value between 0 and 1. Lower scores indicate greater spatial localization accuracy, independent of lesion size.
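
The formulas in the list above can be sketched in plain Python for a single pair of binary masks. This is a minimal illustration, not the evaluation code used in the study: the function names, the nested-list mask format, and the handling of empty masks (zero-denominator metrics reported as 0.0, centroid distance left undefined) are assumptions.

```python
import math


def pixel_metrics(pred, truth):
    """Pixel-level metrics for two binary masks given as nested lists of 0/1."""
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1

    def ratio(num, den):
        # Assumption: a metric with a zero denominator is reported as 0.0.
        return num / den if den else 0.0

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "iou": ratio(tp, tp + fp + fn),
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
    }


def centroid(mask):
    """Mean (x, y) coordinate of the foreground pixels, or None for an empty mask."""
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not points:
        return None
    n = len(points)
    return sum(x for x, _ in points) / n, sum(y for _, y in points) / n


def centroid_distance_score(pred, truth, image_width):
    """Euclidean distance between mask centroids, divided by the image width."""
    cp, ct = centroid(pred), centroid(truth)
    if cp is None or ct is None:
        return None  # assumption: undefined when either mask is empty
    return math.dist(cp, ct) / image_width


# Toy 4 x 6 masks: a 2 x 2 lesion and a prediction shifted one pixel to the right.
truth = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
]
pred = [
    [0, 0, 0, 0, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0, 0],
    [0, 0, 0, 0, 0, 0],
]

metrics = pixel_metrics(pred, truth)
score = centroid_distance_score(pred, truth, image_width=6)
print({k: round(v, 3) for k, v in metrics.items()})
# {'accuracy': 0.833, 'precision': 0.5, 'recall': 0.5, 'iou': 0.333, 'dice': 0.5}
print(round(score, 3))  # 0.167
```

In the toy case, accuracy stays high (0.833) even though only half of the lesion is recovered, which is why the overlap metrics are more informative for small lesions. For any single image, Dice and IoU are linked by Dice = 2 × IoU/(1 + IoU), so the two always rank predictions identically.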

2.6.2. Secondary Outcome—Lesion-Wise Detection Performance

3. Results

3.1. Dataset Composition

3.2. Pixel-Level Segmentation Performance

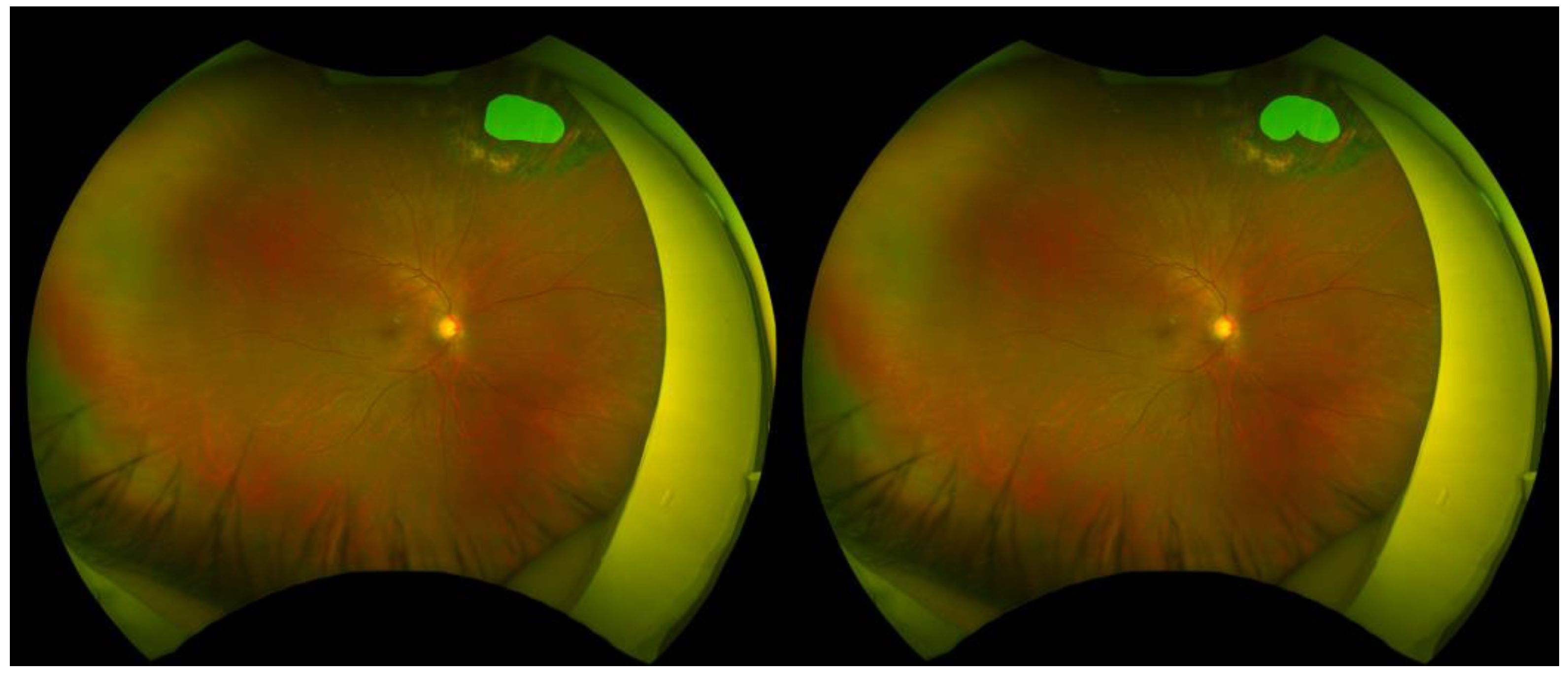

3.3. Representative Segmentation Examples

3.4. Background Data—Distribution of Breaks in Test Set

3.5. Lesion-Wise Detection Performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UWF | Ultra-Widefield Fundus |
| IoU | Intersection over Union |
| PPV | Positive Predictive Value |
| RRD | Rhegmatogenous Retinal Detachment |
| RPE | Retinal Pigment Epithelium |
| AI | Artificial Intelligence |
| DLS | Deep Learning Systems |
| CNN | Convolutional Neural Network |
| AUC | Area Under the Curve |
| CAMs | Class Activation Maps |
| AMMs | Attention Modulation Modules |
| PPD | Parallel Partial Decoder |


| Dataset | Retinal Break | No Retinal Break |
|---|---|---|
| Training | 806 | 33,907 (1.5% ultimately used) |
| Validation | 81 | 0 |
| Test | 73 | 0 |
| Total | 960 | 33,907 |

| Metric | Mean ± SD | Median |
|---|---|---|
| Accuracy | 0.996 ± 0.010 | 0.999 |
| Precision | 0.635 ± 0.301 | 0.760 |
| Recall | 0.756 ± 0.281 | 0.864 |
| IoU | 0.539 ± 0.277 | 0.616 |
| Dice score | 0.652 ± 0.282 | 0.763 |
| Centroid distance score | 0.081 ± 0.170 | 0.013 |

| Category | Total Number of Retinal Breaks | Average per Image (±SD) |
|---|---|---|
| AI-Predicted Retinal Breaks | 131 | 1.699 ± 1.310 |
| Annotated Retinal Breaks | 104 | 1.425 ± 0.809 |

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | 92 | 12 |
| Actual Negative | 32 | — |

| Metric | Value |
|---|---|
| Sensitivity (Recall) | 0.885 |
| Positive Predictive Value (PPV) | 0.742 |
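
The two lesion-wise values can be reproduced from the confusion-matrix counts reported above (92 true positives, 12 false negatives, 32 false positives). The snippet is only a worked check of the arithmetic; the variable names are illustrative. Because no true-negative lesion count is defined, specificity is not computed.

```python
# Lesion-wise counts taken from the confusion matrix.
tp, fn, fp = 92, 12, 32

sensitivity = tp / (tp + fn)  # 92 / 104
ppv = tp / (tp + fp)          # 92 / 124

print(round(sensitivity, 3), round(ppv, 3))  # 0.885 0.742
```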

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Takayama, T.; Uto, T.; Tsuge, T.; Kondo, Y.; Tampo, H.; Chiba, M.; Kaburaki, T.; Yanagi, Y.; Takahashi, H. Pixel-Level Segmentation of Retinal Breaks in Ultra-Widefield Fundus Images with a PraNet-Based Machine Learning Model. Sensors 2025, 25, 5862. https://doi.org/10.3390/s25185862
