DynaNet: A Dynamic Feature Extraction and Multi-Path Attention Fusion Network for Change Detection

Abstract

1. Introduction

- We propose a Dynamic Feature Extractor (DFE) that enhances change-related features and suppresses background noise through a cross-temporal gating mechanism, enabling accurate extraction of fine-grained changes from bi-temporal images.

- We design a Contextual Attention Module (CAM) that leverages global context to sharpen the focus on key change regions while suppressing background noise, improving the accuracy and robustness of fine-grained change detection in complex environments.

- We introduce a Multi-Branch Attention Fusion Module (MBAFM) that models long-range dependencies and fuses multi-level features. By integrating self- and cross-attention, it enhances the structural relationships between buildings and their surroundings, improving change region recognition, boundary clarity, and robustness to noise.

- We provide a high-resolution Inner-CD dataset containing 600 pairs of 256 × 256 pixel images with a spatial resolution of 0.5–2 m to facilitate future research in building change detection.
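The gating idea behind the DFE can be illustrated with a minimal sketch. The formulation below is an assumption made for illustration, not the DFE as published: the gate is a sigmoid applied to a 1 × 1 convolution of the absolute bi-temporal feature difference, and the names `cross_temporal_gate`, `weight`, and `bias` are hypothetical.

```python
import numpy as np


def sigmoid(x):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-x))


def cross_temporal_gate(feat_t1, feat_t2, weight, bias=0.0):
    """Gate bi-temporal features by their absolute difference (assumed form).

    feat_t1, feat_t2: arrays of shape (C, H, W) from the two acquisition dates.
    weight: array of shape (C, C), a hypothetical 1x1-convolution kernel.
    bias: scalar offset; a negative value keeps the gate low where nothing changed.
    """
    diff = np.abs(feat_t1 - feat_t2)  # large where the two dates disagree
    # A 1x1 convolution is a per-pixel linear map over the channel axis.
    logits = np.einsum("oc,chw->ohw", weight, diff) + bias
    gate = sigmoid(logits)  # values in (0, 1)
    # The same gate scales both dates: changed regions pass, background is damped.
    return feat_t1 * gate, feat_t2 * gate, gate


# Toy input: two identical feature maps except for a 2x2 patch.
t1 = np.ones((2, 4, 4))
t2 = t1.copy()
t2[:, 1:3, 1:3] += 1.0
_, _, g = cross_temporal_gate(t1, t2, 4.0 * np.eye(2), bias=-2.0)
print(np.round(g[0], 3))
```

In this toy input the gate is larger inside the changed patch than in the unchanged background, which is the behaviour the first contribution describes. In a trained network `weight` and `bias` would be learned rather than fixed by hand.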

2. Related Works

3. Method

3.1. Overall Architecture

3.2. Dynamic Feature Extractor

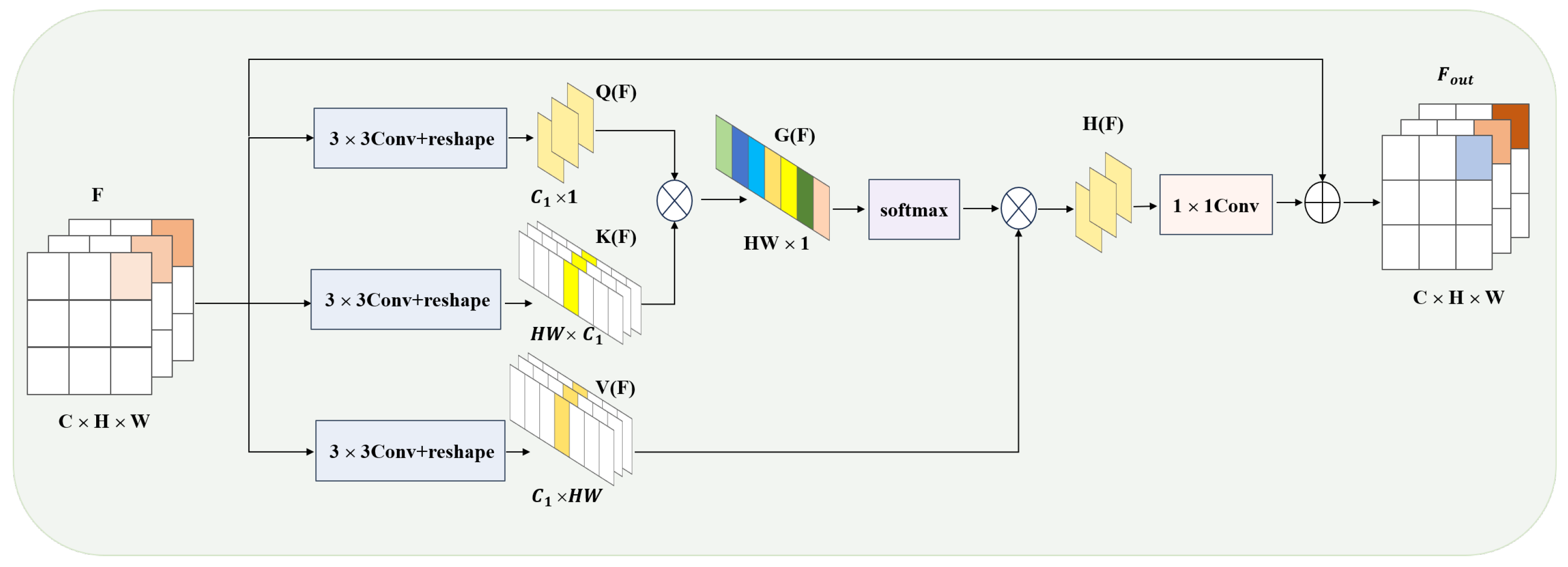

3.3. Contextual Attention Module

3.4. Multi-Branch Attention Fusion Module

3.5. Loss Functions

4. Experimental Results and Analysis

4.1. Dataset Introduction

4.2. Implementation Details and Evaluation Metrics

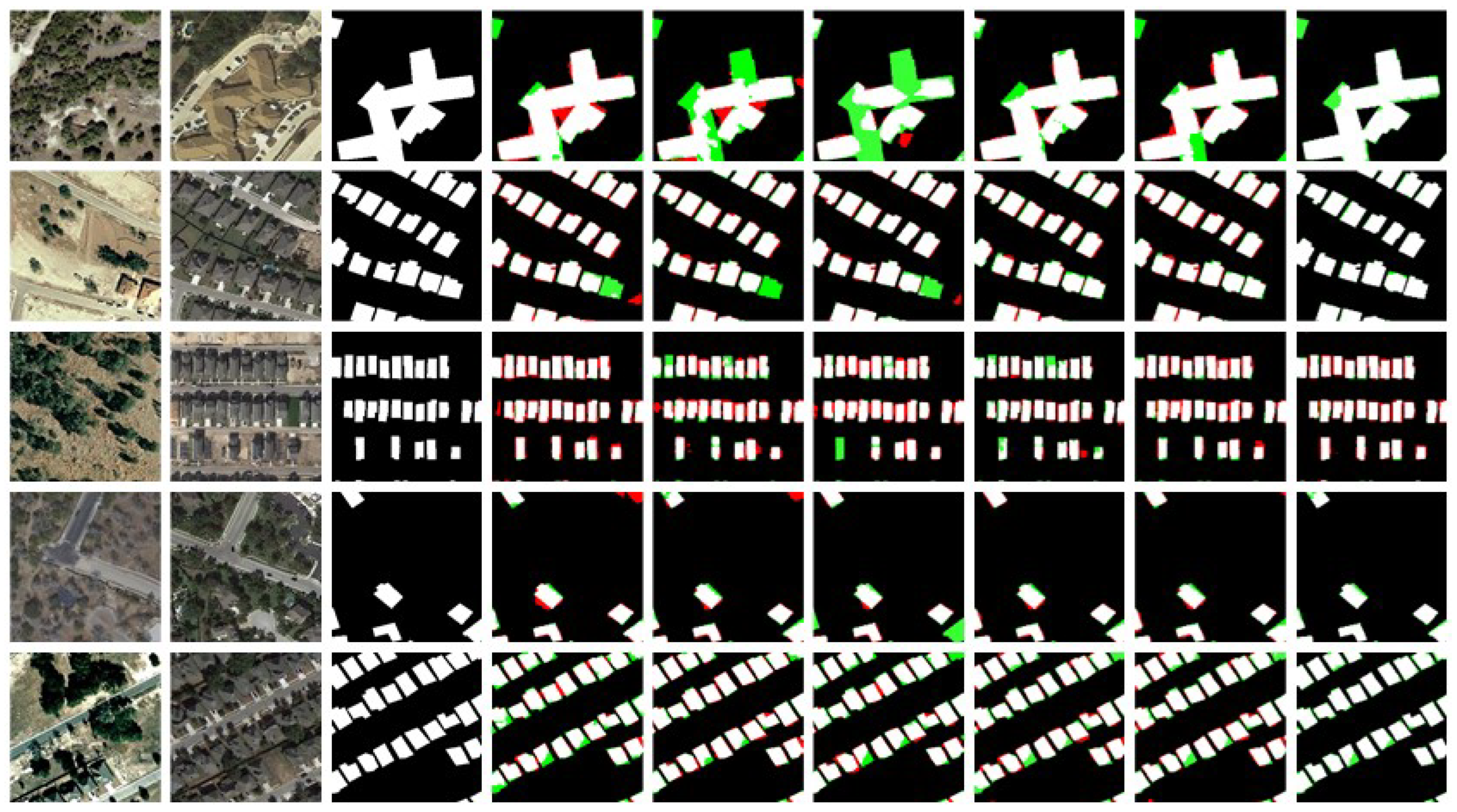

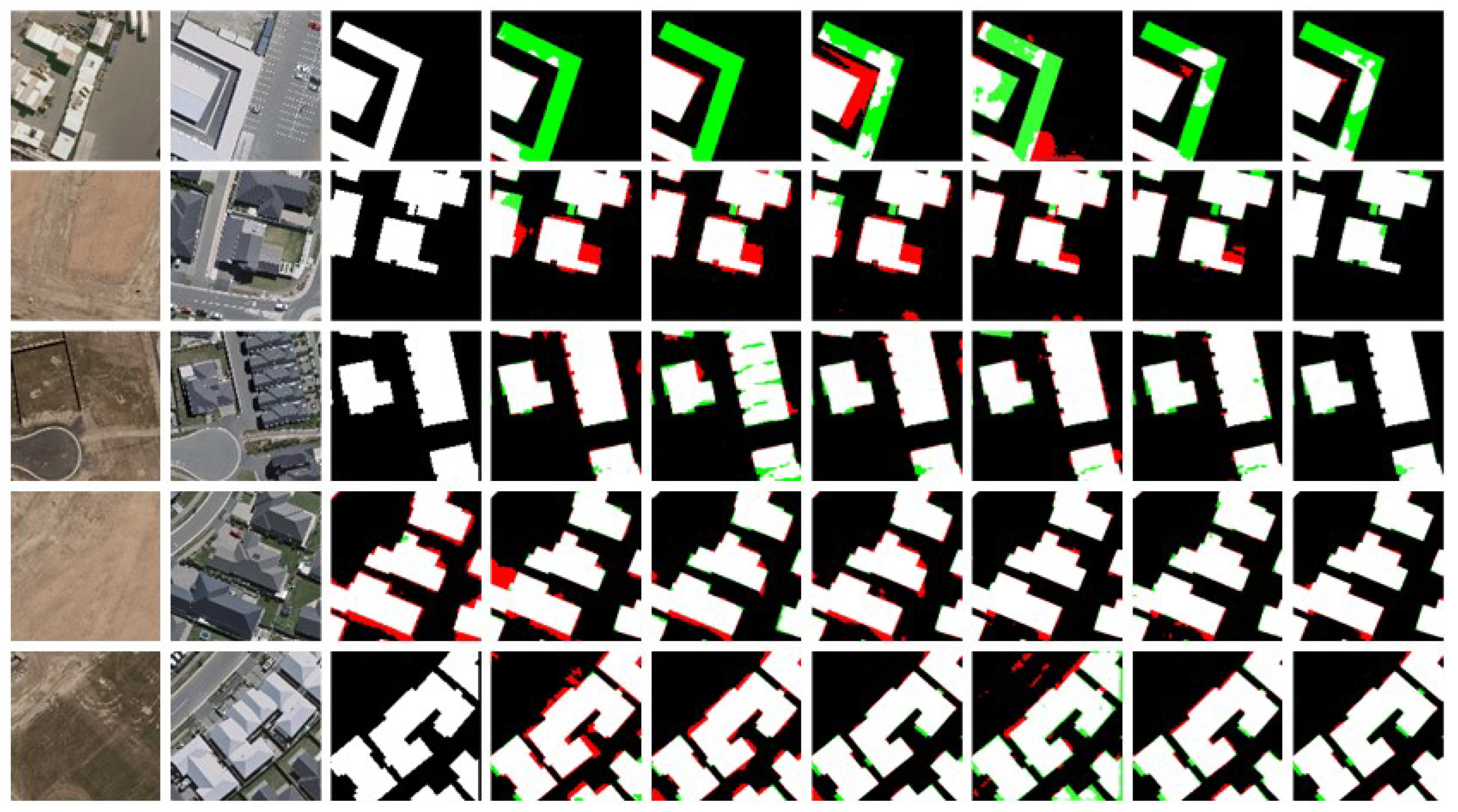

4.3. Comparison Experiments

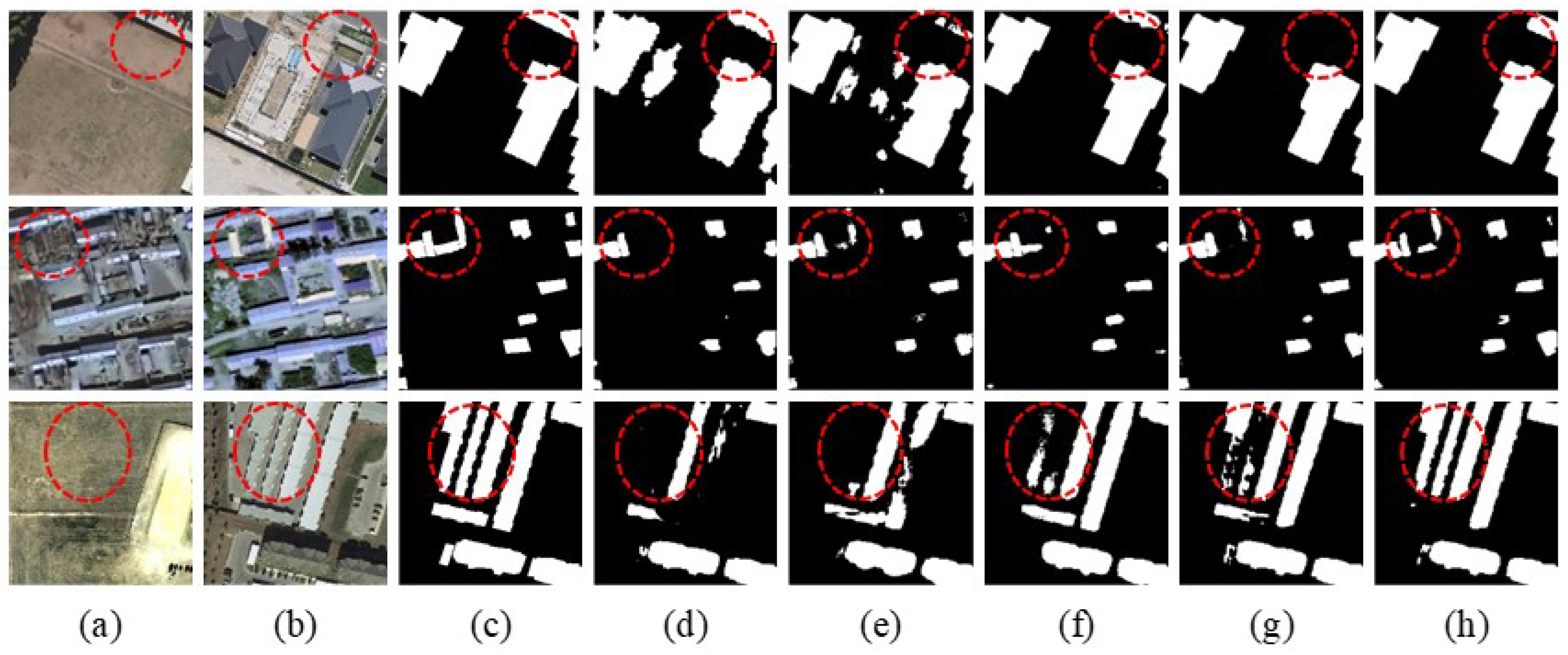

4.4. Ablation Experiments and Visualization

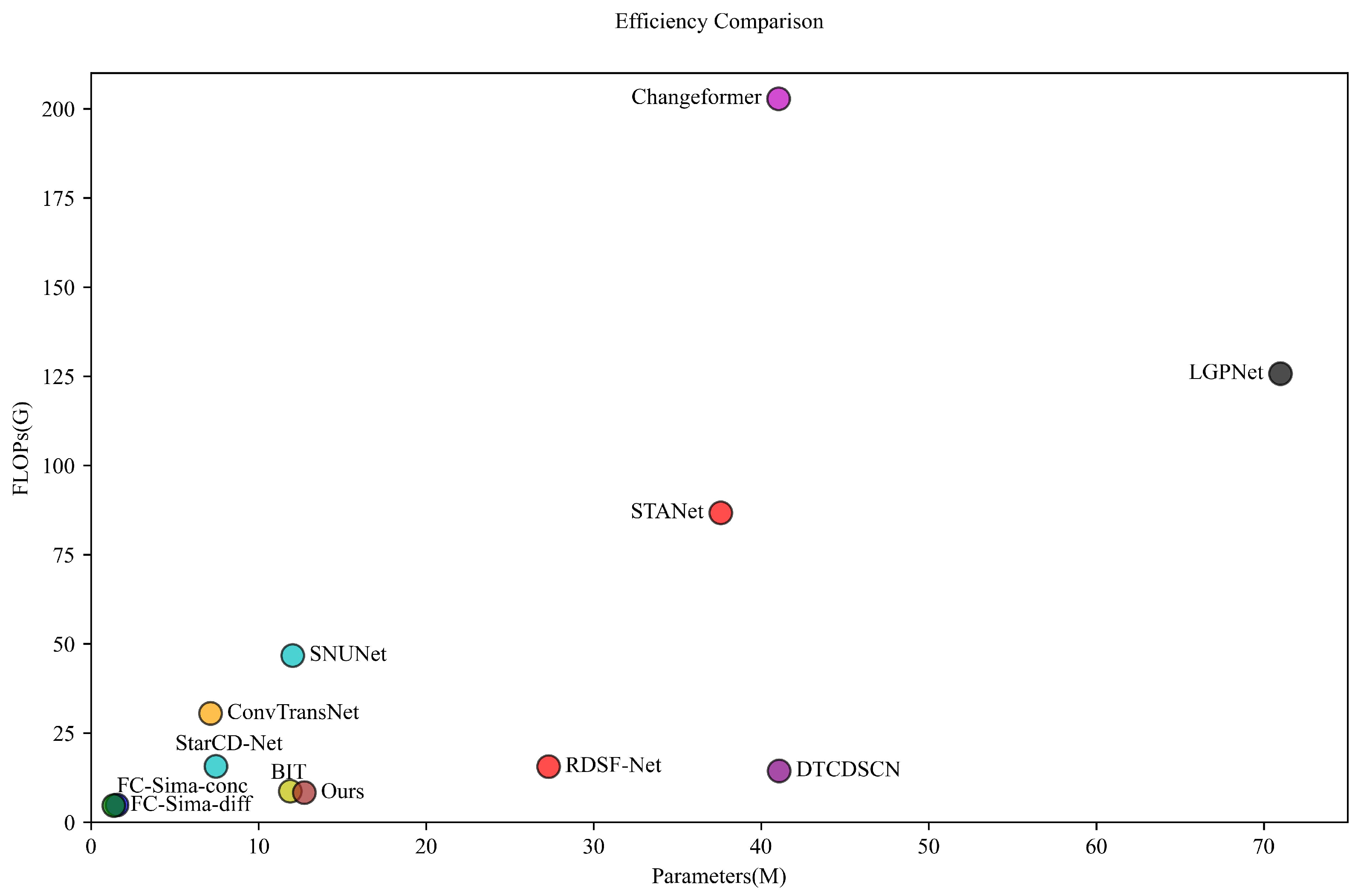

4.5. Efficiency Comparison

5. Discussion

- (1) By introducing the DFE module, DynaNet filters out irrelevant features and focuses on meaningful changes. The cross-temporal gating mechanism within DFE selectively enhances relevant changes while suppressing background noise, which improves change detection accuracy. This contributes significantly to the model’s robustness in complex change scenarios, especially where objects undergo shape or spatial changes.

- (2) The CAM module brings global context into feature fusion, strengthening the model’s ability to concentrate on key areas of change. Global attention helps the network cope with environmental interference and with subtle changes in remote sensing imagery that are often hard to identify. The global context that CAM provides helps the model capture important change signals, improving both precision and recall.

- (3) The dual-branch attention fusion mechanism of MBAFM combines self-attention and cross-attention, allowing the network to capture long-range dependencies and interactions across scales and regions. This capability is particularly important for building change detection, because buildings and surrounding structures often interact and their changes may span several spatial scales. These attention mechanisms enable DynaNet to identify building changes precisely, even in scenes with complex building layouts and diverse environmental contexts.
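The interplay of self-attention and cross-attention described in item (3) can be sketched as follows. This is a simplified illustration under stated assumptions, not the MBAFM itself: the learned query, key, and value projections and the multi-level fusion are omitted, the branches are combined by simple addition and concatenation, and the names `attention` and `fuse_bitemporal` are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(query, key, value):
    """Scaled dot-product attention over (N, D) token matrices."""
    scores = query @ key.T / np.sqrt(query.shape[-1])
    return softmax(scores, axis=-1) @ value


def fuse_bitemporal(tokens_t1, tokens_t2):
    """Combine self- and cross-attention for two dates (assumed fusion rule).

    tokens_t1, tokens_t2: arrays of shape (N, D), one row per spatial location.
    Returns an (N, 2 * D) array.
    """
    # Self-attention: each date attends to itself (long-range context).
    self_t1 = attention(tokens_t1, tokens_t1, tokens_t1)
    self_t2 = attention(tokens_t2, tokens_t2, tokens_t2)
    # Cross-attention: queries from one date, keys and values from the other.
    cross_t1 = attention(tokens_t1, tokens_t2, tokens_t2)
    cross_t2 = attention(tokens_t2, tokens_t1, tokens_t1)
    # Sum the two branches per date, then concatenate the dates.
    return np.concatenate([self_t1 + cross_t1, self_t2 + cross_t2], axis=-1)


rng = np.random.default_rng(0)
a = rng.normal(size=(16, 8))  # 16 tokens (a flattened 4x4 map), 8 channels
b = rng.normal(size=(16, 8))
print(fuse_bitemporal(a, b).shape)
```

Every token attends to every other token, so the cost grows quadratically with the number of spatial locations, which is one source of the computational overhead discussed under the limitations.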

- (1) Performance in complex environments: DynaNet performs well in typical building change detection tasks, but its performance may degrade in highly complex environments, such as areas with dynamic weather conditions, seasonal vegetation changes, or rapid urban development. Future work could explore integrating more advanced pre-trained vision models or multi-modal data to improve robustness and accuracy without altering the core structure of DynaNet.

- (2) Model complexity and computational cost: DynaNet achieves its performance by combining the DFE, CAM, and MBAFM modules. This modular design also increases computational complexity and memory requirements, which may limit real-time applications or deployment on resource-constrained devices. Future research could focus on designing more lightweight architectures, pruning redundant connections, or optimizing attention mechanisms to reduce computational cost while maintaining performance.

- (3) Generalization across datasets: Although DynaNet demonstrates strong performance on the Inner-CD dataset, its generalization across different geographic regions or imaging conditions has not been fully validated. Future studies could investigate cross-dataset evaluation and domain adaptation techniques to ensure broader applicability.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Cheng, G.; Huang, Y.; Li, X.; Lyu, S.; Xu, Z.; Zhao, H.; Zhao, Q.; Xiang, S. Change Detection Methods for Remote Sensing in the Last Decade: A Comprehensive Review. Remote Sens. 2024, 16, 2355.

2. Lu, Y.; Coops, N.C.; Hermosilla, T. Estimating urban vegetation fraction across 25 cities in Pan-Pacific using Landsat time series data. ISPRS J. Photogramm. Remote Sens. 2017, 126, 11–23.

3. Bruzzone, L.; Prieto, D.F. Automatic Analysis of the Difference Image for Unsupervised Change Detection. IEEE Trans. Geosci. Remote Sens. 2000, 38, 1171–1182.

4. Feranec, J.; Hazeu, G.W.; Christensen, S.; Jaffrain, G. Corine Land Cover Change Detection in Europe (case studies of the Netherlands and Slovakia). Land Use Policy 2007, 24, 234–247.

5. Hamidi, E.; Peter, B.G.; Muñoz, D.F.; Moftakhari, H.; Moradkhani, H. Fast flood extent monitoring with SAR change detection using Google Earth Engine. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–19.

6. Gong, M.; Zhao, J.; Liu, J.; Miao, Q.; Jiao, L. Change detection in synthetic aperture radar images based on deep neural networks. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 125–138.

7. Yu, Q.; Zhang, M.; Yu, L.; Wang, R.; Xiao, J. SAR Image Change Detection Based on Joint Dictionary Learning with Iterative Adaptive Threshold Optimization. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5234–5249.

8. Amitrano, D.; Guida, R.; Iervolino, P. Semantic Unsupervised Change Detection of Natural Land Cover with Multitemporal Object-Based Analysis on SAR Images. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5494–5514.

9. Wang, L.; Wang, L.; Wang, Q.; Atkinson, P.M. SSA-SiamNet: Spectral-Spatial-Wise Attention-Based Siamese Network for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5510018.

10. Luo, F.; Zhou, T.; Liu, J.; Guo, T.; Gong, X.; Ren, J. Multiscale Diff-Changed Feature Fusion Network for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 5502713.

11. Yang, Z.; Xu, W.; Chen, N.; Chen, Y.; Wu, K.; Xie, M.; Xu, H.; Zheng, E. SDA-YOLO: Multi-Scale Dynamic Branching and Attention Fusion for Self-Explosion Defect Detection in Insulators. Electronics 2025, 14, 3070.

12. Khelifi, L.; Mignotte, M. Deep Learning for Change Detection in Remote Sensing Images: Comprehensive Review and Meta-Analysis. IEEE Access 2020, 8, 126385–126400.

13. Zhang, S.; Zhang, Y.; Yu, Z.; Yang, S.; Kang, H.; Xu, J. MCA-GAN: A Multi-Scale Contextual Attention GAN for Satellite Remote-Sensing Image Dehazing. Electronics 2025, 14, 3099.

14. Johnson, R.D.; Kasischke, E.S. Change Vector Analysis: A Technique for the Multispectral Monitoring of Land Cover and Condition. Int. J. Remote Sens. 1998, 19, 411–426.

15. Byrne, G.F.; Crapper, P.F.; Mayo, K.K. Monitoring Land-Cover Change by Principal Component Analysis of Multitemporal Landsat Data. Remote Sens. Environ. 1980, 10, 175–184.

16. Nemmour, H.; Chibani, Y. Multiple Support Vector Machines for Land Cover Change Detection: An Application for Mapping Urban Extensions. ISPRS J. Photogramm. Remote Sens. 2006, 61, 125–133.

17. Im, J.; Jensen, J. A Change Detection Model Based on Neighborhood Correlation Image Analysis and Decision Tree Classification. Remote Sens. Environ. 2005, 99, 326–340.

18. Habib, T.; Inglada, J.; Mercier, G.; Chanussot, J. Support Vector Reduction in SVM Algorithm for Abrupt Change Detection in Remote Sensing. IEEE Geosci. Remote Sens. Lett. 2009, 6, 606–610.

19. Daudt, R.C.; Le Saux, B.; Boulch, A. Fully Convolutional Siamese Networks for Change Detection. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4063–4067.

20. Fang, S.; Li, K.; Shao, J.; Li, Z. SNUNet-CD: A Densely Connected Siamese Network for Change Detection of VHR Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

21. Zheng, Z.; Ma, A.; Zhang, L.; Zhong, Y. Change is Everywhere: Single-Temporal Supervised Object Change Detection in Remote Sensing Imagery. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 15173–15182.

22. Fang, S.; Li, K.; Li, Z. Changer: Feature Interaction is What You Need for Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–11.

23. Chen, H.; Shi, Z. A Spatial-Temporal Attention-Based Method and a New Dataset for Remote Sensing Image Change Detection. Remote Sens. 2020, 12, 1662.

24. Zhang, C.; Yue, P.; Tapete, D.; Jiang, L.; Shangguan, B.; Huang, L.; Liu, G. A Deeply Supervised Image Fusion Network for Change Detection in High Resolution Bi-temporal Remote Sensing Images. ISPRS J. Photogramm. Remote Sens. 2020, 166, 183–200.

25. Feng, Y.; Jiang, J.; Xu, H.; Zheng, J. Change Detection on Remote Sensing Images Using Dual-Branch Multilevel Intertemporal Network. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

26. Han, C.; Wu, C.; Guo, H.; Hu, M.; Chen, H. HANet: A Hierarchical Attention Network for Change Detection with Bitemporal Very-High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2023, 16, 3867–3878.

27. J, E.; Wang, L.; Zhao, C.; Mathiopoulos, P.; Ohtsuki, T.; Adachi, F. SAR Images Change Detection Based on Attention Mechanism-Convolutional Wavelet Neural Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 1, 12133–12149.

28. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An Image Is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual, 3–7 May 2021.

29. Bandara, W.G.C.; Patel, V.M. A Transformer-Based Siamese Network for Change Detection. In Proceedings of the IGARSS 2022 - 2022 IEEE International Geoscience and Remote Sensing Symposium, Kuala Lumpur, Malaysia, 17–22 July 2022; pp. 207–210.

30. Wang, Q.; Jing, W.; Chi, K.; Yuan, Y. Cross-Difference Semantic Consistency Network for Semantic Change Detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–12.

31. Li, W.; Xue, L.; Wang, X.; Li, G. ConvTransNet: A CNN–Transformer Network for Change Detection with Multiscale Global–Local Representations. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–15.

32. Tang, X.; Zhang, T.; Ma, J.; Zhang, X.; Liu, F.; Jiao, L. WNet: W-Shaped Hierarchical Network for Remote-Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–14.

33. Chen, H.; Qi, Z.; Shi, Z. Remote Sensing Image Change Detection with Transformers. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

34. Zhou, J.; Zhang, P.; Zhou, Z. CIMF-Net: A Change Indicator-enhanced Multiscale Fusion Network for Remote Sensing Change Detection. IEEE Access 2025, 1, 66843–66854.

35. Liu, M.; Chai, Z.; Deng, H.; Liu, R. A CNN-transformer network with multi-scale context aggregation for fine-grained cropland change detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4297–4306.

36. Shi, Q.; Liu, M.; Li, S.; Liu, X.; Wang, F.; Zhang, L. A Deeply Supervised Attention Metric-Based Network and an Open Aerial Image Dataset for Remote Sensing Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 5604816.

37. Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

38. Peng, D.; Zhang, Y.; Guan, H. End-to-End Change Detection for High-Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382.

39. Zhang, X.; Yue, Y.; Gao, W.; Yun, S.; Su, Q.; Yin, H.; Zhang, Y. DifUnet++: A Satellite Images Change Detection Network Based on Unet++ and Differential Pyramid. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1–5.

40. Lei, T.; Wang, J.; Ning, H.; Wang, X.; Xue, D.; Wang, Q.; Nandi, A.K. Difference Enhancement and Spatial-Spectral Nonlocal Network for Change Detection in VHR Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 4507013.

41. Lei, T.; Geng, X.; Ning, H.; Lv, Z.; Gong, M.; Jin, Y.; Nandi, A.K. Ultralightweight Spatial–Spectral Feature Cooperation Network for Change Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 4402114.

42. Liu, Y.; Li, S.; He, Z.; Liu, K. Edge and Flow Guided Iterative CNN for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2025, 63, 4406422.

43. Tan, H.; He, L.; Du, W.; Liu, H.; Chen, H.; Zhang, Y.; Yang, H. PRX-Change: Enhancing Remote Sensing Change Detection through Progressive Feature Refinement and Cross-Attention Interaction. Int. J. Appl. Earth Obs. Geoinf. 2024, 132, 104008.

44. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

45. Chen, H.; Wu, C.; Du, B.; Zhang, L.; Wang, L. Change Detection in Multisource VHR Images via Deep Siamese Convolutional Multiple-Layers Recurrent Neural Network. IEEE Trans. Geosci. Remote Sens. 2019, 58, 2848–2864.

46. Yan, T.; Wan, Z.; Zhang, P.; Cheng, G.; Lu, H. TransY-Net: Learning Fully Transformer Networks for Change Detection of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

47. Zhang, C.; Wang, L.; Cheng, S.; Li, Y. SwinSUNet: Pure Transformer Network for Remote Sensing Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

48. Xia, L.; Chen, J.; Luo, J.; Zhang, J.; Yang, D.; Shen, Z. Building Change Detection Based on an Edge-Guided Convolutional Neural Network Combined with a Transformer. Remote Sens. 2022, 14, 4524.

49. Li, Q.; Zhong, R.; Du, X.; Du, Y. TransUNetCD: A Hybrid Transformer Network for Change Detection in Optical Remote-Sensing Images. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–19.

50. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models from Natural Language Supervision. In Proceedings of the International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021.

51. Li, X.; Li, D.; Fang, J.; Feng, X. Remote Sensing Image Change Detection Method Based on Change Guidance and Bidirectional Mamba Network. Acta Opt. Sin. 2025, 45, 0528002.

52. Milletari, F.; Navab, N.; Ahmadi, S. V-Net: Fully Convolutional Neural Networks for Volumetric Medical Image Segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571.

53. Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction From an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2019, 57, 574–586.

54. Zeng, C.; Xue, X.; Li, C.; Xu, X.; Zhao, S.; Xu, Y. StarCD-Net: A Remote Sensing Change Detection Method Combining StarNet and Differential Operators. IEEE Access 2025, 13, 65466–65477.

55. Song, X.; Hua, Z.; Li, J. Remote Sensing Image Change Detection Transformer Network Based on Dual-Feature Mixed Attention. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

56. Wang, S.; Cheng, D.; Yuan, G.; Li, J. RDSF-Net: Residual Wavelet Mamba-based Differential Completion and Spatio-Frequency Extraction Remote Sensing Change Detection Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 1, 1–16.

Comparison results on the LEVIR-CD dataset.

| Methods | F1 (%) | Pre (%) | Rec (%) | IoU (%) |
|---|---|---|---|---|
| FC-Siam-conc [19] | 86.31 | 89.53 | 83.31 | 77.21 |
| FC-Siam-diff [19] | 87.35 | 89.53 | 82.45 | 75.14 |
| STANet [23] | 89.17 | 90.68 | 87.70 | 80.45 |
| SNUNet [20] | 88.30 | 91.25 | 85.55 | 79.06 |
| ChangeFormer [29] | 89.82 | 91.85 | 87.88 | 81.52 |
| BIT [33] | 89.96 | 91.74 | 88.25 | 81.76 |
| StarCD-Net [54] | 90.23 | 91.43 | 90.15 | 82.30 |
| LGPNet [35] | 89.37 | 92.13 | 86.32 | 80.74 |
| DMATNet [55] | 89.97 | 90.78 | 89.17 | 81.83 |
| DTCDSCN [44] | 87.67 | 88.53 | 86.83 | 78.05 |
| ConvTransNet [31] | 90.43 | 91.47 | 87.64 | 82.56 |
| WNet [32] | 90.67 | 91.16 | 90.18 | 82.93 |
| RDSF-Net [56] | 91.23 | 91.34 | 90.45 | 83.79 |
| DynaNet (Ours) | 92.38 | 93.45 | 91.46 | 84.57 |
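The Pre, Rec, F1, and IoU columns are presumably computed with the standard pixel-level definitions for the change class. That is an assumption, since the definitions themselves are not reproduced in this text. Under it, a minimal sketch of the computation from a predicted and a ground-truth binary change mask looks like this; the function name `change_metrics` is hypothetical.

```python
import numpy as np


def change_metrics(pred, target):
    """Pixel-level precision, recall, F1, and IoU for the change class.

    pred, target: binary masks of equal shape (1 or True means "changed").
    Returns fractions in [0, 1]; multiply by 100 for percentages.
    """
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = int(np.sum(pred & target))    # changed pixels correctly detected
    fp = int(np.sum(pred & ~target))   # unchanged pixels flagged as changed
    fn = int(np.sum(~pred & target))   # changed pixels that were missed
    # Guard each ratio against an empty denominator.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


# Toy masks: 2 true positives, 1 false positive, 1 false negative.
pred = [[1, 1, 0], [0, 1, 0]]
target = [[1, 0, 0], [0, 1, 1]]
print(change_metrics(pred, target))
```

With these definitions, IoU and F1 computed from the same confusion counts are tied by IoU = F1 / (2 − F1), so the two columns always rank methods in the same order on a given test set.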

Comparison results on the WHU-CD dataset.

| Methods | F1 (%) | Pre (%) | Rec (%) | IoU (%) |
|---|---|---|---|---|
| FC-Siam-conc [19] | 84.76 | 83.62 | 86.45 | 73.56 |
| FC-Siam-diff [19] | 85.77 | 86.53 | 85.02 | 75.07 |
| STANet [23] | 88.23 | 89.40 | 87.10 | 78.85 |
| SNUNet [20] | 89.50 | 87.60 | 88.49 | 79.06 |
| ChangeFormer [29] | 89.47 | 93.33 | 83.79 | 80.52 |
| BIT [33] | 89.96 | 92.24 | 88.25 | 83.48 |
| StarCD-Net [54] | 92.35 | 91.43 | 90.74 | 85.30 |
| LGPNet [35] | 87.07 | 90.84 | 85.53 | 79.74 |
| DMATNet [55] | 90.88 | 91.38 | 89.68 | 81.70 |
| DTCDSCN [44] | 71.95 | 85.13 | 85.12 | 77.06 |
| ConvTransNet [31] | 92.11 | 92.66 | 91.57 | 85.38 |
| WNet [32] | 91.25 | 92.37 | 90.15 | 83.91 |
| RDSF-Net [56] | 92.14 | 94.65 | 90.53 | 85.67 |
| DynaNet (Ours) | 94.35 | 93.28 | 92.46 | 87.57 |

Comparison results on the Inner-CD dataset.

| Methods | F1 (%) | Pre (%) | Rec (%) | IoU (%) |
|---|---|---|---|---|
| FC-Siam-conc [19] | 75.94 | 73.99 | 77.99 | 61.26 |
| FC-Siam-diff [19] | 78.79 | 77.54 | 78.32 | 68.07 |
| STANet [23] | 83.16 | 90.04 | 78.08 | 71.78 |
| SNUNet [20] | 86.36 | 87.60 | 85.16 | 75.25 |
| ChangeFormer [29] | 86.54 | 89.26 | 83.98 | 76.27 |
| BIT [33] | 85.15 | 87.46 | 84.93 | 75.68 |
| StarCD-Net [54] | 85.41 | 85.07 | 86.26 | 76.53 |
| LGPNet [35] | 85.35 | 84.75 | 86.48 | 74.52 |
| DMATNet [55] | 87.48 | 92.24 | 83.19 | 77.75 |
| DTCDSCN [44] | 85.00 | 84.87 | 85.32 | 73.91 |
| ConvTransNet [31] | 86.73 | 88.52 | 85.29 | 76.79 |
| WNet [32] | 87.78 | 89.73 | 85.92 | 78.23 |
| RDSF-Net [56] | 87.24 | 89.67 | 84.75 | 77.96 |
| DynaNet (Ours) | 90.92 | 93.80 | 87.84 | 80.37 |

Ablation results for the DFE, CAM, and MBAFM modules.

| No. | DFE / CAM / MBAFM (✓ = enabled) | LEVIR-CD F1 (%) | LEVIR-CD IoU (%) | WHU-CD F1 (%) | WHU-CD IoU (%) | Inner-CD F1 (%) | Inner-CD IoU (%) |
|---|---|---|---|---|---|---|---|
| 1 | – | 83.29 | 71.37 | 77.65 | 63.58 | 78.71 | 65.42 |
| 2 | ✓ | 85.53 | 75.21 | 80.54 | 70.55 | 80.11 | 69.16 |
| 3 | ✓ | 86.71 | 75.00 | 81.94 | 72.36 | 81.45 | 68.42 |
| 4 | ✓ | 87.01 | 77.59 | 84.34 | 75.91 | 83.27 | 70.34 |
| 5 | ✓ ✓ | 89.01 | 79.59 | 88.62 | 83.89 | 86.79 | 73.17 |
| 6 | ✓ ✓ | 90.21 | 81.48 | 90.94 | 81.28 | 87.94 | 75.36 |
| 7 | ✓ ✓ | 90.72 | 82.21 | 92.29 | 85.69 | 88.85 | 78.10 |
| 8 | ✓ ✓ ✓ | 92.38 | 84.57 | 94.35 | 87.57 | 90.92 | 80.37 |

Ablation results for DEConv and CAM.

| No. | DEConv / CAM (✓ = enabled) | LEVIR-CD F1 (%) | LEVIR-CD IoU (%) | WHU-CD F1 (%) | WHU-CD IoU (%) | Inner-CD F1 (%) | Inner-CD IoU (%) |
|---|---|---|---|---|---|---|---|
| 1 | – | 90.21 | 81.48 | 90.94 | 81.28 | 87.94 | 75.36 |
| 2 | ✓ | 91.96 | 83.56 | 93.13 | 86.25 | 88.73 | 78.82 |
| 3 | ✓ | 90.67 | 82.01 | 92.36 | 83.72 | 88.42 | 77.54 |
| 4 | ✓ ✓ | 92.38 | 84.57 | 94.35 | 87.57 | 90.92 | 80.37 |

Parameters, computational cost, and F1 difference relative to DynaNet.

| Methods | Params (M) | FLOPs (G) | ΔF1 (%) |
|---|---|---|---|
| FC-Siam-conc [19] | 1.55 | 4.86 | −14.98 |
| FC-Siam-diff [19] | 1.35 | 4.73 | −12.13 |
| STANet [23] | 37.59 | 86.77 | −7.76 |
| SNUNet [20] | 12.03 | 46.79 | −5.13 |
| ChangeFormer [29] | 41.03 | 202.86 | −4.38 |
| BIT [33] | 11.89 | 8.71 | −5.77 |
| LGPNet [35] | 70.99 | 125.79 | −3.57 |
| StarCD-Net [54] | 7.46 | 15.74 | −5.51 |
| DTCDSCN [44] | 41.07 | 14.42 | −5.92 |
| ConvTransNet [31] | 7.13 | 30.53 | −3.19 |
| RDSF-Net [56] | 27.30 | 15.60 | −3.68 |
| DynaNet (Ours) | 12.72 | 8.36 | 0 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, X.; Li, D.; Fang, J.; Feng, X. DynaNet: A Dynamic Feature Extraction and Multi-Path Attention Fusion Network for Change Detection. Sensors 2025, 25, 5832. https://doi.org/10.3390/s25185832
