A Systematic Review of Techniques for Artifact Detection and Artifact Category Identification in Electroencephalography from Wearable Devices

Abstract

1. Introduction

- RQ-I: have any studies addressed the specific challenges of artifact management in wearable EEG systems?

- RQ-II: which algorithms are available in the literature for artifact detection and artifact category identification in EEG signals acquired by wearable devices?

- RQ-III: which parameters are used to assess the performance of artifact detection and artifact category identification algorithms?

- RQ-IV: which assessment metrics are employed, and which reference signals are used to assess the performance of artifact detection and artifact category identification algorithms?

2. Materials and Methods

Search Strategy

3. Results

3.1. PRISMA

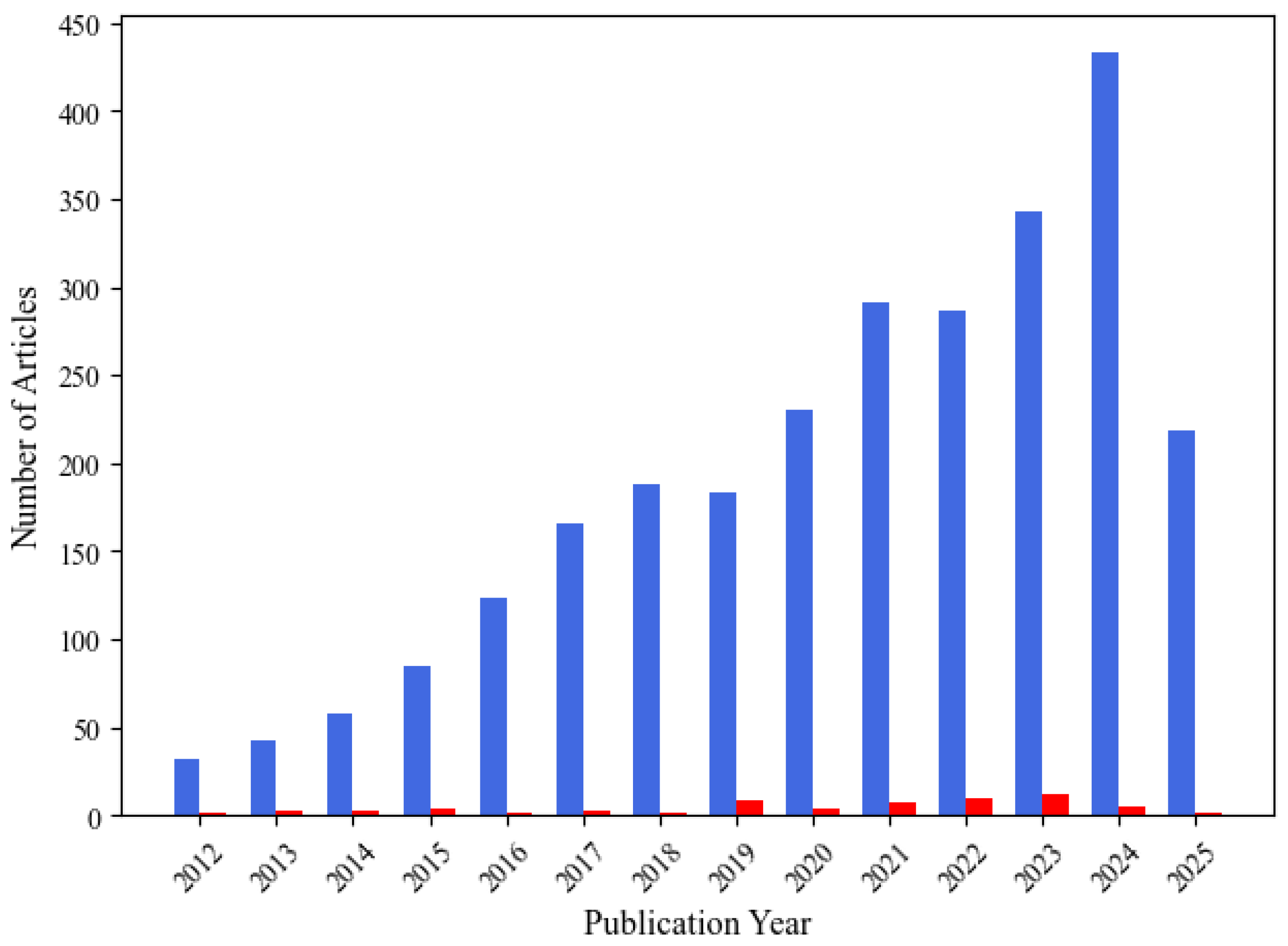

3.2. Temporal Trends in Wearable EEG and Artifact Management

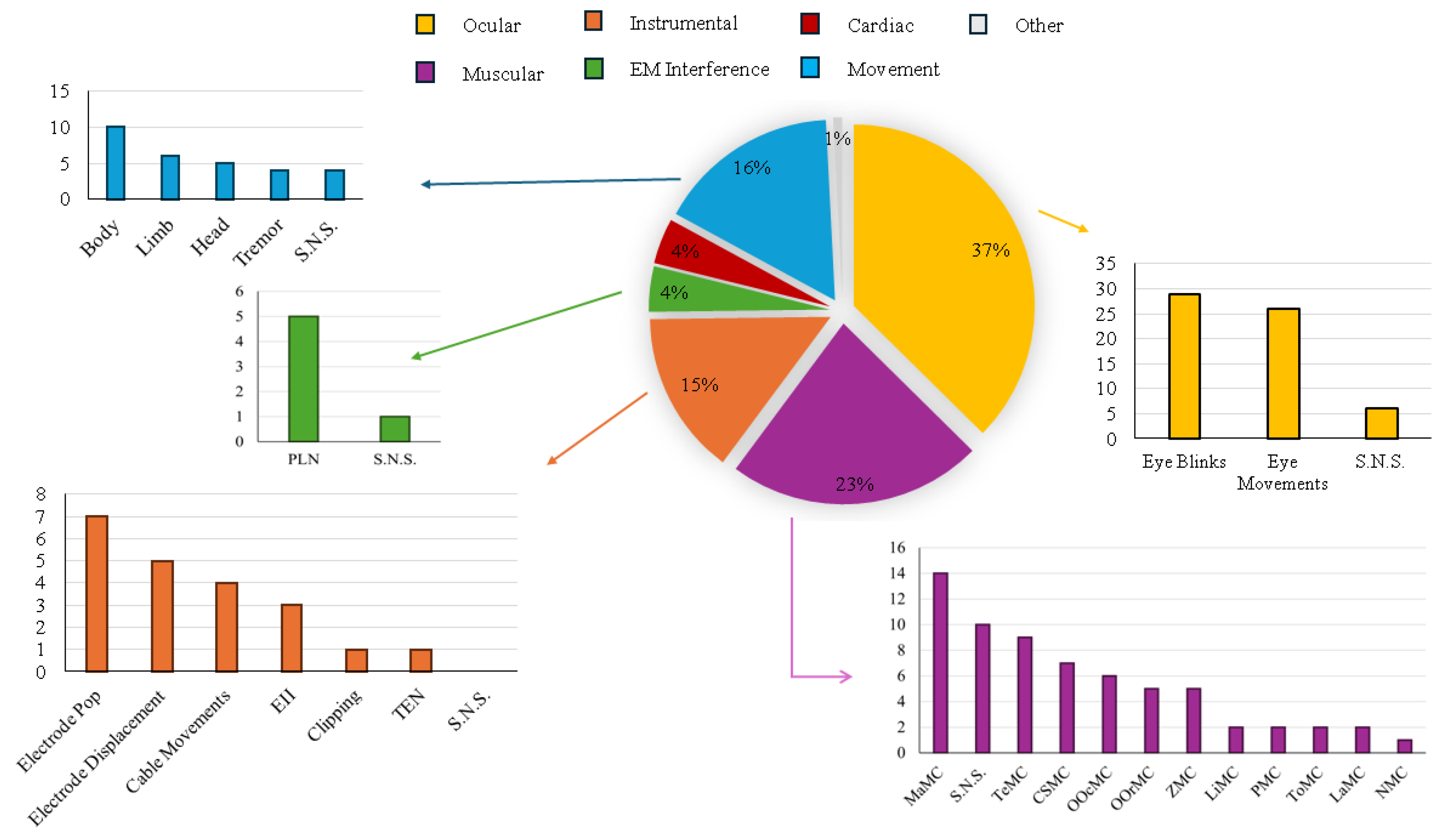

3.3. Acquisition Setup and Performance Assessment Methods of the Algorithms Across the Studies

3.3.1. Grid for Collecting Acquisition Setup Parameters and Performance Assessment Methods

3.3.2. Results of the Acquisition Setup Parameter Collection

3.3.3. Results of the Performance Assessment Methods Collection

3.4. Parameters of Artifact Detection Pipelines Across the Studies

3.4.1. ASR and Its Relevance for Wearable EEG Artifact Management

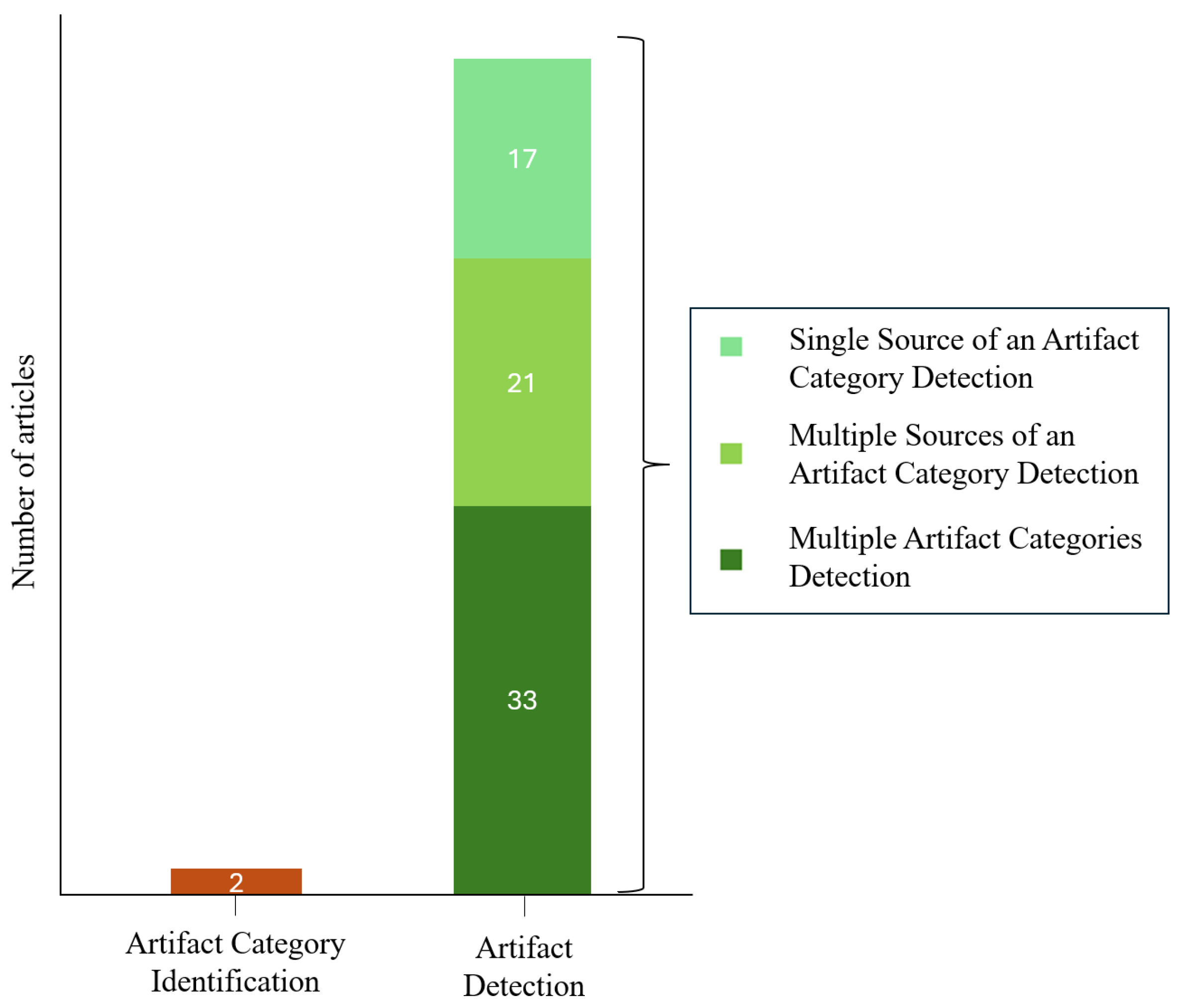

3.5. Artifact Detection vs. Artifact Category Identification Strategies

4. Discussion

4.1. Specific Challenges of Artifact Management in Wearable EEG Systems

4.2. Algorithms for Artifact Detection and Classification

4.3. Performance Assessment Parameters, Metrics, and Reference Signals

4.4. Emerging Directions and Limitations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| ACF | Autocorrelation Function |

| ADJUST | Artefact Detector based on the Joint Use of Spatial and Temporal features |

| AMRC | Amplitude Modulation Rate of Change |

| ANC | Adaptive Noise Canceler |

| ANFIS | Adaptive Neuro-Fuzzy Inference System |

| ANOVA | Analysis of Variance |

| APF | Adaptive Predictor Filter |

| ASR | Artifact Subspace Reconstruction |

| AUC | Area Under the Curve |

| BC | Binary Classification |

| BiGRU | Bidirectional Gated Recurrent Unit |

| BM | Belief Matching |

| BPF | Band-Pass Filter |

| CC | Correlation Coefficient |

| CCA | Canonical Correlation Analysis |

| CCR | Correct Classification Rate |

| CNN | Convolutional Neural Network |

| CSED | Cumulative Sum of Squared Error Difference |

| CSMC | Corrugator Supercilii Muscle Contraction |

| DWT | Discrete Wavelet Transform |

| DSNR | Difference in Signal-to-Noise Ratio |

| DTW | Dynamic Time Warping |

| EAWICA | Enhanced Automatic Wavelet ICA |

| EC | Eyes Closed |

| ECG | Electrocardiogram |

| EEG | Electroencephalogram |

| EEMD | Ensemble Empirical Mode Decomposition |

| EII | Electric Impedance Imbalance |

| EM | Expectation–Maximization |

| EMD | Empirical Mode Decomposition |

| EMG | Electromyogram |

| EMI | Electromagnetic Interference |

| EO | Eyes Open |

| EOG | Electrooculogram |

| ERP | Event-Related Potential |

| ETI | Electrode-Tissue Impedance |

| FC | Fully Connected |

| FCBF | Fast Correlation-Based Filter |

| FD | Fractal Dimension |

| FDR | False Discovery Rate |

| FIR | Finite Impulse Response |

| FMEMD | Fast Multivariate Empirical Mode Decomposition |

| FFT | Fast Fourier Transform |

| FORCe | Fully Online and automated artifact Removal for brain-Computer interfacing (wavelet + ICA (SOBI) + thresholding) |

| FP-h | False Positive rate per hour |

| FPM | False Positive rate per Minute |

| FPR | False Positive Rate |

| FTR | Frequency-Tagging Response |

| GAN | Generative Adversarial Network |

| GRU | Gated Recurrent Unit |

| GRU-MARSC | Gated Recurrent Unit-based Multi-type Artifact Removal algorithm for Single-Channel |

| GSTV | Group Sparsity Total Variation |

| GWO | Gray Wolf Optimization |

| HPF | High-Pass Filter |

| HPO | HyperParameter Optimization |

| ICA | Independent Component Analysis |

| ICA-W | Independent Component Analysis–Wavelet |

| ICs | Independent Components |

| I-CycleGAN | Improved CycleGAN |

| IMDL | Integrated Minimum Description Length |

| IMFs | Intrinsic Mode Functions |

| IMU | Inertial Measurement Unit |

| ISD | Index of Spectral Deformation |

| KNN | k-Nearest Neighbors |

| LDA | Linear Discriminant Analysis |

| LiMC | Limb Muscle Contraction |

| LaMC | Laryngeal Muscle Contraction |

| LLMS | Leaky Least Mean Squares |

| LMM | Local Maximal and Minimal |

| LMS | Least Mean Square |

| LogP | Log Power |

| LPF | Low-Pass Filter |

| LZC | Lempel–Ziv Complexity |

| MAD | Median Absolute Deviation |

| MAE | Mean Absolute Error |

| MCA | Morphological Component Analysis |

| MCAF | Multi-Channel Adaptive Filtering |

| MARSC | Multi-type Artifact Removal algorithm for Single-Channel |

| MC | Multi-class Classification |

| MCC | Matthews Correlation Coefficient |

| MCCP | Minimal Cost-Complexity Pruning |

| MEMD | Multivariate Empirical Mode Decomposition |

| MFE | Morphological Feature Extraction |

| MI | Mutual Information |

| MMC | Multi-class Multi-output Classification |

| MaMC | Masseter Muscle Contraction |

| MeMC | Mentalis Muscle Contraction |

| MODWT | Maximal Overlap Discrete Wavelet Transform |

| MRA | MultiResolution Analysis |

| MSC | Magnitude-Squared Coherence |

| MSDW | Multi-window Summation of Derivatives within a Window |

| MSE | Mean Square Error |

| MV-EMD | Multivariate EMD with CCA |

| M-mDistEn | Multiscale Modified-Distribution Entropy |

| MLP | Multilayer Perceptron |

| mRMR | Minimum Redundancy Maximum Relevance |

| NA-MEMD | Noise-Assisted Multivariate Empirical Mode Decomposition |

| NMC | Nasalis Muscle Contraction |

| NLMS | Normalized Least Mean Square |

| NSR | Noise-to-Signal Ratio |

| OD | Outlier Detection |

| OOcMC | Orbicularis Oculi Muscle Contraction |

| OOrMC | Orbicularis Oris Muscle Contraction |

| OS-EHO | Opposition Searched–Elephant Herding Optimization |

| PCA | Principal Component Analysis |

| PCMC | Posterior Cervical Muscle Contraction |

| PLN | Power-Line Noise |

| PMC | Pharyngeal Muscle Contraction |

| PGA | Principal Geodesic Analysis |

| PSD | Power Spectral Density |

| PSNR | Peak Signal-to-Noise Ratio |

| PPV | Positive Predictive Value |

| QDA | Quadratic Discriminant Analysis |

| RLS | Recursive Least Squares |

| RMS | Root Mean Square |

| RMSE | Root Mean Squared Error |

| RCs | Reconstructed Components |

| ResCNN | Residual Convolutional Neural Network |

| ResUnet1D | 1D Residual U-Net Semantic Segmentation Network |

| RNN | Recurrent Neural Network |

| RP | Relative Power |

| RRMSE | Relative Root Mean Squared Error |

| RRMSEf | Relative Root Mean Squared Error in frequency domain |

| RRMSEt | Relative Root Mean Squared Error in time domain |

| RSD | Relative Spectral Difference |

| SAR | Signal-to-Artifact Ratio |

| SBF | Stop-Band Filter |

| SD | Standard Deviation |

| SDW | Summation of Derivatives within a Window |

| ShMC | Shoulder Muscle Contraction |

| SSA | Singular Spectrum Analysis |

| SubMC | Submentalis Muscle Contraction |

| SE | Shannon Entropy |

| SEF | Spectral Edge Frequency |

| SG | Savitzky–Golay Filter |

| SOBI | Second Order Blind Identification |

| SNR | Signal-to-Noise Ratio |

| SVM | Support Vector Machine |

| SSVEP | Steady-State Visual Evoked Potentials |

| SWT | Stationary Wavelet Transform |

| STFT | Short-Time Fourier Transform |

| SVD | Singular Value Decomposition |

| TEN | Thermal Electronics Noise |

| TeMC | Temporalis Muscle Contraction |

| ToMC | Tongue Muscle Contraction |

| TFA | Time–Frequency Analysis |

| TPR | True Positive Rate |

| TPOT | Tree-based Pipeline Optimization Tool |

| vEOG | vertical Electrooculogram |

| VME | Variational Mode Extraction |

| VME-DWT | Variational Mode Extraction with Discrete Wavelet Transform |

| WICs | Wavelet Independent Components |

| wICA | Wavelet-enhanced Independent Component Analysis |

| WT | Wavelet Transform |

| ZCR | Zero Crossing Rate |

| ZMC | Zygomaticus Muscle Contraction |

References

- Värbu, K.; Muhammad, N.; Muhammad, Y. Past, present, and future of EEG-based BCI applications. Sensors 2022, 22, 3331. [Google Scholar] [CrossRef]

- Blinowska, K.; Durka, P. Electroencephalography (eeg). Wiley Encycl. Biomed. Eng. 2006, 10, 9780471740360. [Google Scholar]

- Amer, N.S.; Belhaouari, S.B. Eeg signal processing for medical diagnosis, healthcare, and monitoring: A comprehensive review. IEEE Access 2023, 11, 143116–143142. [Google Scholar] [CrossRef]

- Sharmila, A. Epilepsy detection from EEG signals: A review. J. Med. Eng. Technol. 2018, 42, 368–380. [Google Scholar] [CrossRef] [PubMed]

- Kowalski, J.W.; Gawel, M.; Pfeffer, A.; Barcikowska, M. The diagnostic value of EEG in Alzheimer disease: Correlation with the severity of mental impairment. J. Clin. Neurophysiol. 2001, 18, 570–575. [Google Scholar] [CrossRef]

- Geraedts, V.J.; Boon, L.I.; Marinus, J.; Gouw, A.A.; van Hilten, J.J.; Stam, C.J.; Tannemaat, M.R.; Contarino, M.F. Clinical correlates of quantitative EEG in Parkinson disease: A systematic review. Neurology 2018, 91, 871–883. [Google Scholar] [CrossRef]

- Karameh, F.N.; Dahleh, M.A. Automated classification of EEG signals in brain tumor diagnostics. In Proceedings of the 2000 American Control Conference ACC, (IEEE cat. No. 00CH36334). Chicago, IL, USA, 28–30 June 2000; Volume 6, pp. 4169–4173. [Google Scholar]

- Murugesan, M.; Sukanesh, R. Automated detection of brain tumor in EEG signals using artificial neural networks. In Proceedings of the 2009 International Conference on Advances in Computing, Control, and Telecommunication Technologies, Trivandrum, Kerala, India, 28–29 December 2009; pp. 284–288. [Google Scholar]

- Preuß, M.; Preiss, S.; Syrbe, S.; Nestler, U.; Fischer, L.; Merkenschlager, A.; Bertsche, A.; Christiansen, H.; Bernhard, M.K. Signs and symptoms of pediatric brain tumors and diagnostic value of preoperative EEG. Child’s Nerv. Syst. 2015, 31, 2051–2054. [Google Scholar] [CrossRef]

- Finnigan, S.; Van Putten, M.J. EEG in ischaemic stroke: Quantitative EEG can uniquely inform (sub-) acute prognoses and clinical management. Clin. Neurophysiol. 2013, 124, 10–19. [Google Scholar] [CrossRef]

- Soufineyestani, M.; Dowling, D.; Khan, A. Electroencephalography (EEG) technology applications and available devices. Appl. Sci. 2020, 10, 7453. [Google Scholar] [CrossRef]

- Biasiucci, A.; Franceschiello, B.; Murray, M.M. Electroencephalography. Curr. Biol. 2019, 29, R80–R85. [Google Scholar] [CrossRef]

- Nidal, K.; Malik, A.S. EEG/ERP Analysis: Methods and Applications; CRC Press: Boca Raton, FL, USA, 2014. [Google Scholar]

- Hammond, D.C. What is neurofeedback: An update. J. Neurother. 2011, 15, 305–336. [Google Scholar] [CrossRef]

- Hammond, D.C. Neurofeedback treatment of depression and anxiety. J. Adult Dev. 2005, 12, 131–137. [Google Scholar] [CrossRef]

- Marzbani, H.; Marateb, H.R.; Mansourian, M. Neurofeedback: A comprehensive review on system design, methodology and clinical applications. Basic Clin. Neurosci. 2016, 7, 143. [Google Scholar]

- Ros, T.; Moseley, M.J.; Bloom, P.A.; Benjamin, L.; Parkinson, L.A.; Gruzelier, J.H. Optimizing microsurgical skills with EEG neurofeedback. BMC Neurosci. 2009, 10, 87. [Google Scholar] [CrossRef] [PubMed]

- Xiang, M.Q.; Hou, X.H.; Liao, B.G.; Liao, J.W.; Hu, M. The effect of neurofeedback training for sport performance in athletes: A meta-analysis. Psychol. Sport Exerc. 2018, 36, 114–122. [Google Scholar] [CrossRef]

- Farwell, L.A.; Donchin, E. Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 1988, 70, 510–523. [Google Scholar] [CrossRef] [PubMed]

- Pfurtscheller, G.; Neuper, C. Motor imagery and direct brain-computer communication. Proc. IEEE 2001, 89, 1123–1134. [Google Scholar] [CrossRef]

- Nijholt, A.; Contreras-Vidal, J.L.; Jeunet, C.; Väljamäe, A. Brain-Computer Interfaces for Non-Clinical (Home, Sports, Art, Entertainment, Education, Well-Being) Applications. Front. Comput. Sci. 2022, 4, 860619. [Google Scholar] [CrossRef]

- Cannard, C.; Wahbeh, H.; Delorme, A. Electroencephalography correlates of well-being using a low-cost wearable system. Front. Hum. Neurosci. 2021, 15, 745135. [Google Scholar] [CrossRef]

- Flanagan, K.; Saikia, M.J. Consumer-grade electroencephalogram and functional near-infrared spectroscopy neurofeedback technologies for mental health and wellbeing. Sensors 2023, 23, 8482. [Google Scholar] [CrossRef]

- Wang, Q.; Sourina, O.; Nguyen, M.K. Eeg-based “serious” games design for medical applications. In Proceedings of the 2010 International Conference on Cyberworlds, Singapore, 20–22 October 2010; pp. 270–276. [Google Scholar]

- de Queiroz Cavalcanti, D.; Melo, F.; Silva, T.; Falcão, M.; Cavalcanti, M.; Becker, V. Research on brain-computer interfaces in the entertainment field. In Proceedings of the International Conference on Human-Computer Interaction, Copenhagen, Denmark, 23–28 July 2023; pp. 404–415. [Google Scholar] [CrossRef]

- Mao, X.; Li, M.; Li, W.; Niu, L.; Xian, B.; Zeng, M.; Chen, G. Progress in EEG-Based Brain Robot Interaction Systems. Comput. Intell. Neurosci. 2017, 2017, 1742862. [Google Scholar] [CrossRef] [PubMed]

- Douibi, K.; Le Bars, S.; Lemontey, A.; Nag, L.; Balp, R.; Breda, G. Toward EEG-based BCI applications for industry 4.0: Challenges and possible applications. Front. Hum. Neurosci. 2021, 15, 705064. [Google Scholar] [CrossRef] [PubMed]

- Jeunet, C.; Glize, B.; McGonigal, A.; Batail, J.M.; Micoulaud-Franchi, J.A. Using EEG-based brain computer interface and neurofeedback targeting sensorimotor rhythms to improve motor skills: Theoretical background, applications and prospects. Neurophysiol. Clin. 2019, 49, 125–136. [Google Scholar] [CrossRef]

- Cheron, G.; Petit, G.; Cheron, J.; Leroy, A.; Cebolla, A.; Cevallos, C.; Petieau, M.; Hoellinger, T.; Zarka, D.; Clarinval, A.M.; et al. Brain oscillations in sport: Toward EEG biomarkers of performance. Front. Psychol. 2016, 7, 246. [Google Scholar] [CrossRef] [PubMed]

- Sugden, R.J.; Pham-Kim-Nghiem-Phu, V.L.L.; Campbell, I.; Leon, A.; Diamandis, P. Remote collection of electrophysiological data with brain wearables: Opportunities and challenges. Bioelectron. Med. 2023, 9, 12. [Google Scholar] [CrossRef]

- Casson, A.J. Wearable EEG and beyond. Biomed. Eng. Lett. 2019, 9, 53–71. [Google Scholar] [CrossRef]

- Mihajlović, V.; Grundlehner, B.; Vullers, R.; Penders, J. Wearable, wireless EEG solutions in daily life applications: What are we missing? IEEE J. Biomed. Health Inform. 2014, 19, 6–21. [Google Scholar]

- Skyrme, T.; Dale, S. Brain-Computer Interfaces 2025–2045: Technologies, Players, Forecasts; IDTechEx: Cambridge, UK, 2024; Available online: https://www.idtechex.com/en/research-report/brain-computer-interfaces/1024 (accessed on 20 May 2025).

- Gokhale, S. Brain Computer Interface Market Size, Share, Trends, Report 2024–2034; Precedence Research: Ottawa, ON, Canada, 2024; Available online: https://www.precedenceresearch.com/brain-computer-interface-market (accessed on 20 May 2025).

- Senkler, B.; Schellack, S.K.; Glatz, T.; Freymueller, J.; Hornberg, C.; Mc Call, T. Exploring urban mental health using mobile EEG—A systematic review. PLoS Ment. Health 2025, 2, e0000203. [Google Scholar]

- Rossini, P.M.; Di Iorio, R.; Vecchio, F.; Anfossi, M.; Babiloni, C.; Bozzali, M.; Bruni, A.C.; Cappa, S.F.; Escudero, J.; Fraga, F.J.; et al. Early diagnosis of Alzheimer’s disease: The role of biomarkers including advanced EEG signal analysis. Report from the IFCN-sponsored panel of experts. Clin. Neurophysiol. 2020, 131, 1287–1310. [Google Scholar]

- Chin, T.I.; An, W.; Yibeltal, K.; Workneh, F.; Pihl, S.; Jensen, S.K.; Asmamaw, G.; Fasil, N.; Teklehaimanot, A.; North, K.; et al. Implementation of a mobile EEG system in the acquisition of resting EEG and visual evoked potentials among young children in rural Ethiopia. Front. Hum. Neurosci. 2025, 19, 1552410. [Google Scholar]

- Galván, P.; Velázquez, M.; Rivas, R.; Benitez, G.; Barrios, A.; Hilario, E. Health diagnosis improvement in remote community health centers through telemedicine. Med. Access@ Point Care 2018, 2, 1–4. [Google Scholar]

- Shivaraja, T.; Chellappan, K.; Kamal, N.; Remli, R. Personalization of a mobile eeg for remote monitoring. In Proceedings of the 2022 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES), Kuala Lumpur, Malaysia, 7–9 December 2022; pp. 328–333. [Google Scholar]

- D’Angiulli, A.; Lockman-Dufour, G.; Buchanan, D.M. Promise for personalized diagnosis? Assessing the precision of wireless consumer-grade electroencephalography across mental states. Appl. Sci. 2022, 12, 6430. [Google Scholar] [CrossRef]

- Lopez, K.L.; Monachino, A.D.; Vincent, K.M.; Peck, F.C.; Gabard-Durnam, L.J. Stability, change, and reliable individual differences in electroencephalography measures: A lifespan perspective on progress and opportunities. NeuroImage 2023, 275, 120116. [Google Scholar] [CrossRef] [PubMed]

- Amaro, J.; Ramusga, R.; Bonifacio, A.; Frazao, J.; Almeida, A.; Lopes, G.; Chokhachian, A.; Santucci, D.; Morgado, P.; Miranda, B. Advancing Mobile Neuroscience: A Novel Wearable Backpack for Multi-Sensor Research in Urban Environments. bioRxiv 2025. [Google Scholar] [CrossRef]

- Höller, Y. Quantitative EEG in cognitive neuroscience. Brain Sci. 2021, 11, 517. [Google Scholar] [CrossRef] [PubMed]

- He, C.; Chen, Y.Y.; Phang, C.R.; Stevenson, C.; Chen, I.P.; Jung, T.P.; Ko, L.W. Diversity and Suitability of the State-of-the-Art Wearable and Wireless EEG Systems Review. IEEE J. Biomed. Health Inform. 2023, 27, 3830–3843. [Google Scholar] [CrossRef]

- Zhang, J.; Li, J.; Huang, Z.; Huang, D.; Yu, H.; Li, Z. Recent progress in wearable brain–computer interface (BCI) devices based on electroencephalogram (EEG) for medical applications: A review. Health Data Sci. 2023, 3, 0096. [Google Scholar]

- Lopez-Gordo, M.A.; Sanchez-Morillo, D.; Valle, F.P. Dry EEG electrodes. Sensors 2014, 14, 12847–12870. [Google Scholar] [CrossRef]

- Apicella, A.; Arpaia, P.; Isgro, F.; Mastrati, G.; Moccaldi, N. A survey on EEG-based solutions for emotion recognition with a low number of channels. IEEE Access 2022, 10, 117411–117428. [Google Scholar] [CrossRef]

- Klug, M.; Gramann, K. Identifying key factors for improving ICA-based decomposition of EEG data in mobile and stationary experiments. Eur. J. Neurosci. 2021, 54, 8406–8420. [Google Scholar] [CrossRef]

- Gudikandula, N.; Janapati, R.; Sengupta, R.; Chintala, S. Recent Advancements in Online Ocular Artifacts Removal in EEG based BCI: A Review. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT), Mandi, India, 6–11 July 2024; pp. 1–6. [Google Scholar] [CrossRef]

- Mannan, M.M.N.; Kamran, M.A.; Jeong, M.Y. Identification and removal of physiological artifacts from electroencephalogram signals: A review. IEEE Access 2018, 6, 30630–30652. [Google Scholar] [CrossRef]

- Sadiya, S.; Alhanai, T.; Ghassemi, M.M. Artifact detection and correction in eeg data: A review. In Proceedings of the 2021 10th International IEEE/EMBS Conference on Neural Engineering (NER), Virtual Conference, 4–6 May 2021; pp. 495–498. [Google Scholar]

- Jung, C.Y.; Saikiran, S.S. A review on EEG artifacts and its different removal technique. Asia-Pac. J. Converg. Res. Interchange 2016, 2, 43–60. [Google Scholar] [CrossRef]

- Islam, M.K.; Rastegarnia, A.; Yang, Z. Methods for artifact detection and removal from scalp EEG: A review. Neurophysiol. Clin. Neurophysiol. 2016, 46, 287–305. [Google Scholar] [CrossRef]

- Prakash, V.; Kumar, D. Artifact Detection and Removal in EEG: A Review of Methods and Contemporary Usage. In Proceedings of the International Conference on Artificial-Business Analytics, Quantum and Machine Learning, Bengaluru, India, 14–15 July 2023; pp. 263–274. [Google Scholar]

- Agounad, S.; Tarahi, O.; Moufassih, M.; Hamou, S.; Mazid, A. Advanced Signal Processing and Machine/Deep Learning Approaches on a Preprocessing Block for EEG Artifact Removal: A Comprehensive Review. Circuits Syst. Signal Process. 2024, 44, 3112–3160. [Google Scholar] [CrossRef]

- Seok, D.; Lee, S.; Kim, M.; Cho, J.; Kim, C. Motion artifact removal techniques for wearable EEG and PPG sensor systems. Front. Electron. 2021, 2, 685513. [Google Scholar] [CrossRef]

- Urigüen, J.A.; Garcia-Zapirain, B. EEG artifact removal—State-of-the-art and guidelines. J. Neural Eng. 2015, 12, 031001. [Google Scholar] [CrossRef] [PubMed]

- Zhang, C.; Sabor, N.; Luo, J.; Pu, Y.; Wang, G.; Lian, Y. Automatic removal of multiple artifacts for single-channel EEG. J. Shanghai Jiaotong Univ. (Sci.) 2021, 27, 437–451. [Google Scholar] [CrossRef]

- Inoue, R.; Sugi, T.; Matsuda, Y.; Goto, S.; Nohira, H.; Mase, R. Recording and characterization of EEGS by using wearable EEG device. In Proceedings of the 2019 19th International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 15–18 October 2019; pp. 194–197. [Google Scholar]

- Cui, H.; Li, C.; Liu, A.; Qian, R.; Chen, X. A dual-branch interactive fusion network to remove artifacts from single-channel EEG. IEEE Trans. Instrum. Meas. 2023, 73, 4001912. [Google Scholar] [CrossRef]

- Kaongoen, N.; Jo, S. Adapting Artifact Subspace Reconstruction Method for SingleChannel EEG using Signal Decomposition Techniques. In Proceedings of the 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023; pp. 1–4. [Google Scholar]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef]

- Rethlefsen, M.L.; Kirtley, S.; Waffenschmidt, S.; Ayala, A.P.; Moher, D.; Page, M.J.; Koffel, J.B. PRISMA-S: An extension to the PRISMA statement for reporting literature searches in systematic reviews. Syst. Rev. 2021, 10, 39. [Google Scholar] [CrossRef]

- Sweeney, K.T.; Ayaz, H.; Ward, T.E.; Izzetoglu, M.; McLoone, S.F.; Onaral, B. A methodology for validating artifact removal techniques for physiological signals. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 918–926. [Google Scholar] [CrossRef]

- Matiko, J.W.; Beeby, S.; Tudor, J. Real time eye blink noise removal from EEG signals using morphological component analysis. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 13–16. [Google Scholar]

- Peng, H.; Hu, B.; Shi, Q.; Ratcliffe, M.; Zhao, Q.; Qi, Y.; Gao, G. Removal of ocular artifacts in EEG—An improved approach combining DWT and ANC for portable applications. IEEE J. Biomed. Health Inform. 2013, 17, 600–607. [Google Scholar] [CrossRef] [PubMed]

- Mihajlović, V.; Patki, S.; Grundlehner, B. The impact of head movements on EEG and contact impedance: An adaptive filtering solution for motion artifact reduction. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 5064–5067. [Google Scholar]

- Zhao, Q.; Hu, B.; Shi, Y.; Li, Y.; Moore, P.; Sun, M.; Peng, H. Automatic identification and removal of ocular artifacts in EEG—Improved adaptive predictor filtering for portable applications. IEEE Trans. Nanobioscience 2014, 13, 109–117. [Google Scholar] [CrossRef] [PubMed]

- Majmudar, C.A.; Mahajan, R.; Morshed, B.I. Real-time hybrid ocular artifact detection and removal for single channel EEG. In Proceedings of the 2015 IEEE International Conference on Electro/Information Technology (EIT), Dekalb, IL, USA, 21–23 May 2015; pp. 330–334. [Google Scholar]

- Kim, B.H.; Jo, S. Real-time motion artifact detection and removal for ambulatory BCI. In Proceedings of the 3rd International Winter Conference on Brain-Computer Interface (BCI 2015), Gangwon-do, Republic of Korea, 12–14 January 2015; pp. 1–4. [Google Scholar]

- Abd Rahman, F.; Othman, M. Real time eye blink artifacts removal in electroencephalogram using savitzky-golay referenced adaptive filtering. In Proceedings of the International Conference for Innovation in Biomedical Engineering and Life Sciences (ICIBEL 2015), Putrajaya, Malaysia, 6–8 December 2015; pp. 68–71. [Google Scholar]

- D’Rozario, A.L.; Dungan, G.C.; Banks, S.; Liu, P.Y.; Wong, K.K.; Killick, R.; Grunstein, R.R.; Kim, J.W. An automated algorithm to identify and reject artefacts for quantitative EEG analysis during sleep in patients with sleep-disordered breathing. Sleep Breath. 2015, 19, 607–615. [Google Scholar] [CrossRef] [PubMed]

- Chang, W.D.; Cha, H.S.; Kim, K.; Im, C.H. Detection of eye blink artifacts from single prefrontal channel electroencephalogram. Comput. Methods Programs Biomed. 2016, 124, 19–30. [Google Scholar] [CrossRef]

- Zhao, S.; Zhao, Q.; Zhang, X.; Peng, H.; Yao, Z.; Shen, J.; Yao, Y.; Jiang, H.; Hu, B. Wearable EEG-based real-time system for depression monitoring. In Proceedings of the Brain Informatics: International Conference, BI 2017, Beijing, China, 16–18 November 2017; pp. 190–201. [Google Scholar]

- Thammasan, N.; Hagad, J.L.; Fukui, K.i.; Numao, M. Multimodal stability-sensitive emotion recognition based on brainwave and physiological signals. In Proceedings of the 2017 Seventh International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW), San Antonio, TX, USA, 23–26 October 2017; pp. 44–49. [Google Scholar]

- Hu, H.; Guo, S.; Liu, R.; Wang, P. An adaptive singular spectrum analysis method for extracting brain rhythms of electroencephalography. PeerJ 2017, 5, e3474. [Google Scholar] [CrossRef]

- Dehzangi, O.; Melville, A.; Taherisadr, M. Automatic eeg blink detection using dynamic time warping score clustering. In Proceedings of the Advances in Body Area Networks I: Post-Conference Proceedings of BodyNets 2017, Dalian, China, 12–13 September 2017; Springer: Cham, Switzerland, 2019; pp. 49–60. [Google Scholar]

- Cheng, J.; Li, L.; Li, C.; Liu, Y.; Liu, A.; Qian, R.; Chen, X. Remove diverse artifacts simultaneously from a single-channel EEG based on SSA and ICA: A semi-simulated study. IEEE Access 2019, 7, 60276–60289. [Google Scholar] [CrossRef]

- Goldberger, A.L.; Amaral, L.; Glass, L.; Hausdorff, J.; Ivanov, P.C.; Mark, R.; Mietus, J.; Moody, G.; Peng, C.; Stanley, H. PhysioBank, PhysioToolkit, and Physionet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, E215–E220. [Google Scholar] [CrossRef]

- Leeb, R.; Lee, F.; Keinrath, C.; Scherer, R.; Bischof, H.; Pfurtscheller, G. Brain–computer communication: Motivation, aim, and impact of exploring a virtual apartment. IEEE Trans. Neural Syst. Rehabil. Eng. 2007, 15, 473–482. [Google Scholar] [CrossRef]

- Brunner, C.; Leeb, R.; Müller-Putz, G.; Schlögl, A.; Pfurtscheller, G. BCI Competition 2008–Graz data set A. IEEE Dataport 2008, 16, 34. [Google Scholar]

- Val-Calvo, M.; Álvarez-Sánchez, J.R.; Ferrández-Vicente, J.M.; Fernández, E. Optimization of real-time EEG artifact removal and emotion estimation for human-robot interaction applications. Front. Comput. Neurosci. 2019, 13, 80. [Google Scholar]

- Grosselin, F.; Navarro-Sune, X.; Vozzi, A.; Pandremmenou, K.; de Vico Fallani, F.; Attal, Y.; Chavez, M. Quality assessment of single-channel EEG for wearable devices. Sensors 2019, 19, 601. [Google Scholar] [CrossRef] [PubMed]

- Rosanne, O.; Albuquerque, I.; Gagnon, J.F.; Tremblay, S.; Falk, T.H. Performance comparison of automated EEG enhancement algorithms for mental workload assessment of ambulant users. In Proceedings of the 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER), San Francisco, CA, USA, 20–23 March 2019; pp. 61–64. [Google Scholar]

- Blum, S.; Jacobsen, N.S.; Bleichner, M.G.; Debener, S. A Riemannian modification of artifact subspace reconstruction for EEG artifact handling. Front. Hum. Neurosci. 2019, 13, 141. [Google Scholar] [CrossRef] [PubMed]

- Butkevičiūtė, E.; Bikulčienė, L.; Sidekerskienė, T.; Blažauskas, T.; Maskeliūnas, R.; Damaševičius, R.; Wei, W. Removal of movement artefact for mobile EEG analysis in sports exercises. IEEE Access 2019, 7, 7206–7217. [Google Scholar] [CrossRef]

- Albuquerque, I.; Rosanne, O.; Gagnon, J.F.; Tremblay, S.; Falk, T.H. Fusion of spectral and spectro-temporal EEG features for mental workload assessment under different levels of physical activity. In Proceedings of the 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER), San Francisco, CA, USA, 20–23 March 2019; pp. 311–314. [Google Scholar]

- Liu, Y.; Zhou, Y.; Lang, X.; Liu, Y.; Zheng, Q.; Zhang, Y.; Jiang, X.; Zhang, L.; Tang, J.; Dai, Y. An efficient and robust muscle artifact removal method for few-channel EEG. IEEE Access 2019, 7, 176036–176050. [Google Scholar] [CrossRef]

- Casadei, V.; Ferrero, R.; Brown, C. Model-based filtering of EEG alpha waves for enhanced accuracy in dynamic conditions and artifact detection. In Proceedings of the 2020 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Dubrovnik, Croatia, 25–28 May 2020; pp. 1–6. [Google Scholar]

- Islam, M.S.; El-Hajj, A.M.; Alawieh, H.; Dawy, Z.; Abbas, N.; El-Imad, J. EEG mobility artifact removal for ambulatory epileptic seizure prediction applications. Biomed. Signal Process. Control 2020, 55, 101638. [Google Scholar]

- Noorbasha, S.K.; Sudha, G.F. Removal of EOG artifacts from single channel EEG–an efficient model combining overlap segmented ASSA and ANC. Biomed. Signal Process. Control 2020, 60, 101987. [Google Scholar]

- Dey, E.; Roy, N. Omad: On-device mental anomaly detection for substance and non-substance users. In Proceedings of the 2020 IEEE 20th International Conference on Bioinformatics and Bioengineering (BIBE), Cincinnati, OH, USA, 26–28 October 2020; pp. 466–471. [Google Scholar]

- Liu, A.; Liu, Q.; Zhang, X.; Chen, X.; Chen, X. Muscle artifact removal toward mobile SSVEP-based BCI: A comparative study. IEEE Trans. Instrum. Meas. 2021, 70, 4005512. [Google Scholar] [CrossRef]

- Kumaravel, V.P.; Kartsch, V.; Benatti, S.; Vallortigara, G.; Farella, E.; Buiatti, M. Efficient artifact removal from low-density wearable EEG using artifacts subspace reconstruction. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Guadalajara, Mexico, 26–30 July 2021; pp. 333–336. [Google Scholar]

- Shahbakhti, M.; Beiramvand, M.; Nazari, M.; Broniec-Wójcik, A.; Augustyniak, P.; Rodrigues, A.S.; Wierzchon, M.; Marozas, V. VME-DWT: An efficient algorithm for detection and elimination of eye blink from short segments of single EEG channel. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 408–417. [Google Scholar]

- Sha’abania, M.; Fuadb, N.; Jamalb, N. Eye Blink Artefact Removal of Single Frontal EEG Channel Algorithm using Ensemble Empirical Mode Decomposition and Outlier Detection. Signal 2021, 22, 23. [Google Scholar]

- Aung, S.T.; Wongsawat, Y. Analysis of EEG signals contaminated with motion artifacts using multiscale modified-distribution entropy. IEEE Access 2021, 9, 33911–33921. [Google Scholar] [CrossRef]

- Noorbasha, S.K.; Sudha, G.F. Removal of motion artifacts from EEG records by overlap segmentation SSA with modified grouping criteria for portable or wearable applications. In Proceedings of the Soft Computing and Signal Processing: Proceedings of 3rd ICSCSP 2020, Hyderabad, India, 22–23 February 2020; Springer: Singapore, 2021; Volume 1, pp. 397–409. [Google Scholar]

- Ingolfsson, T.M.; Cossettini, A.; Benatti, S.; Benini, L. Energy-efficient tree-based EEG artifact detection. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, Scotland, UK, 11–15 July 2022; pp. 3723–3728. [Google Scholar]

- Chen, H.; Zhang, H.; Liu, C.; Chai, Y.; Li, X. An outlier detection-based method for artifact removal of few-channel EEGs. J. Neural Eng. 2022, 19, 056028. [Google Scholar] [CrossRef]

- Occhipinti, E.; Davies, H.J.; Hammour, G.; Mandic, D.P. Hearables: Artefact removal in Ear-EEG for continuous 24/7 monitoring. In Proceedings of the 2022 International Joint Conference on Neural Networks (IJCNN), Padua, Italy, 18–23 July 2022; pp. 1–6. [Google Scholar]

- Paissan, F.; Kumaravel, V.P.; Farella, E. Interpretable CNN for single-channel artifacts detection in raw EEG signals. In Proceedings of the 2022 IEEE Sensors Applications Symposium (SAS), Sundsvall, Sweden, 1–3 August 2022; pp. 1–6. [Google Scholar]

- Zhang, H.; Zhao, M.; Wei, C.; Mantini, D.; Li, Z.; Liu, Q. EEGdenoiseNet: A benchmark dataset for end-to-end deep learning solutions of EEG denoising. arXiv 2020, arXiv:2009.11662. [Google Scholar] [CrossRef] [PubMed]

- Peh, W.Y.; Yao, Y.; Dauwels, J. Transformer convolutional neural networks for automated artifact detection in scalp EEG. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; pp. 3599–3602. [Google Scholar]

- Brophy, E.; Redmond, P.; Fleury, A.; De Vos, M.; Boylan, G.; Ward, T. Denoising EEG signals for real-world BCI applications using GANs. Front. Neuroergonomics 2022, 2, 805573. [Google Scholar] [CrossRef] [PubMed]

- Xiao, Z.; Tan, X.; Wang, T. A modified artifact subspace rejection algorithm based on frequency properties for meditation detection application. In Proceedings of the 2022 12th International Conference on Information Technology in Medicine and Education (ITME), Xiamen, China, 18–20 November 2022; pp. 429–433. [Google Scholar]

- Arpaia, P.; De Benedetto, E.; Esposito, A.; Natalizio, A.; Parvis, M.; Pesola, M. Comparing artifact removal techniques for daily-life electroencephalography with few channels. In Proceedings of the 2022 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Taormina, Messina, Italy, 22–24 June 2022; pp. 1–6. [Google Scholar]

- Noorbasha, S.K.; Sudha, G.F. Electrical Shift and Linear Trend Artifacts Removal from Single Channel EEG Using SWT-GSTV Model. In Proceedings of the International Conference on Soft Computing and Signal Processing, Hyderabad, India, 18–19 June 2021; pp. 469–478. [Google Scholar]

- Zhang, W.; Yang, W.; Jiang, X.; Qin, X.; Yang, J.; Du, J. Two-stage intelligent multi-type artifact removal for single-channel EEG settings: A GRU autoencoder based approach. IEEE Trans. Biomed. Eng. 2022, 69, 3142–3154. [Google Scholar] [CrossRef] [PubMed]

- Jayas, T.; Adarsh, A.; Muralidharan, K.; Gubbi, J.; Pal, A. Computer Aided Detection of Dominant Artifacts in Ear-EEG Signal. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Honolulu, Oahu, HI, USA, 1–4 October 2023; pp. 4423–4428. [Google Scholar] [CrossRef]

- Narmada, A.; Shukla, M. A novel adaptive artifacts wavelet Denoising for EEG artifacts removal using deep learning with Meta-heuristic approach. Multimed. Tools Appl. 2023, 82, 40403–40441. [Google Scholar] [CrossRef]

- Mahmud, S.; Hossain, M.S.; Chowdhury, M.E.; Reaz, M.B.I. MLMRS-Net: Electroencephalography (EEG) motion artifacts removal using a multi-layer multi-resolution spatially pooled 1D signal reconstruction network. Neural Comput. Appl. 2023, 35, 8371–8388. [Google Scholar]

- Jiang, Y.; Wu, D.; Cao, J.; Jiang, L.; Zhang, S.; Wang, D. Eyeblink detection algorithm based on joint optimization of VME and morphological feature extraction. IEEE Sens. J. 2023, 23, 21374–21384. [Google Scholar]

- Klados, M.A.; Bamidis, P.D. A semi-simulated EEG/EOG dataset for the comparison of EOG artifact rejection techniques. Data Brief 2016, 8, 1004–1006. [Google Scholar] [CrossRef]

- Kumaravel, V.P.; Farella, E. IMU-integrated Artifact Subspace Reconstruction for Wearable EEG Devices. In Proceedings of the 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Istanbul, Turkey, 5–8 December 2023; pp. 2508–2514. [Google Scholar]

- Li, Y.; Liu, A.; Yin, J.; Li, C.; Chen, X. A segmentation-denoising network for artifact removal from single-channel EEG. IEEE Sens. J. 2023, 23, 15115–15127. [Google Scholar]

- Shoeb, A.H. Application of Machine Learning to Epileptic Seizure Onset Detection and Treatment. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2009. [Google Scholar]

- Yin, J.; Liu, A.; Li, C.; Qian, R.; Chen, X. A GAN guided parallel CNN and transformer network for EEG denoising. IEEE J. Biomed. Health Inform. 2023, 70, 4005512. [Google Scholar]

- O’Sullivan, M.E.; Lightbody, G.; Mathieson, S.R.; Marnane, W.P.; Boylan, G.B.; O’Toole, J.M. Development of an EEG artefact detection algorithm and its application in grading neonatal hypoxic-ischemic encephalopathy. Expert Syst. Appl. 2023, 213, 118917. [Google Scholar]

- Chen, J.; Pi, D.; Jiang, X.; Xu, Y.; Chen, Y.; Wang, X. Denosieformer: A transformer-based approach for single-channel EEG artifact removal. IEEE Trans. Instrum. Meas. 2023, 73, 2501116. [Google Scholar]

- Hermans, T.; Smets, L.; Lemmens, K.; Dereymaeker, A.; Jansen, K.; Naulaers, G.; Zappasodi, F.; Van Huffel, S.; Comani, S.; De Vos, M. A multi-task and multi-channel convolutional neural network for semi-supervised neonatal artefact detection. J. Neural Eng. 2023, 20, 026013. [Google Scholar]

- Bahadur, I.N.; Boppana, L. Efficient architecture for ocular artifacts removal from EEG: A Novel approach based on DWT-LMM. Microelectron. J. 2024, 150, 106284. [Google Scholar] [CrossRef]

- Arpaia, P.; De Benedetto, E.; Esposito, A.; Natalizio, A.; Parvis, M.; Pesola, M.; Sansone, M. Artifacts Removal from Low-Density EEG Measured with Dry Electrodes. In Proceedings of the 2024 IEEE International Conference on Metrology for eXtended Reality, Artificial Intelligence and Neural Engineering (MetroXRAINE), St Albans, UK, 21–23 October 2024; pp. 195–200. [Google Scholar]

- Ingolfsson, T.M.; Benatti, S.; Wang, X.; Bernini, A.; Ducouret, P.; Ryvlin, P.; Beniczky, S.; Benini, L.; Cossettini, A. Minimizing artifact-induced false-alarms for seizure detection in wearable EEG devices with gradient-boosted tree classifiers. Sci. Rep. 2024, 14, 2980. [Google Scholar] [CrossRef]

- Saleh, M.; Xing, L.; Casson, A.J. EEG artifact removal at the edge using AI hardware. IEEE Sens. Lett. 2024, 9, 7003004. [Google Scholar]

- Nair, S.; James, B.P.; Leung, M.F. An optimized hybrid approach to denoising of EEG signals using CNN and LMS filtering. Electronics 2025, 14, 1193. [Google Scholar] [CrossRef]

- Islam, M.K.; Rastegarnia, A.; Sanei, S. Signal artifacts and techniques for artifacts and noise removal. In Signal Processing Techniques for Computational Health Informatics; Springer: Cham, Switzerland, 2020; pp. 23–79. [Google Scholar]

- Kaya, I. A brief summary of EEG artifact handling. In Brain-Computer Interface; IntechOpen: London, UK, 2019. [Google Scholar] [CrossRef]

- Villasana, F.C. Getting to Know EEG Artifacts and How to Handle Them in BrainVision Analyzer 2. Brain Products. Available online: https://pressrelease.brainproducts.com/eeg-artifacts-handling-in-analyzer/ (accessed on 3 October 2023).

- Kane, N.; Acharya, J.; Beniczky, S.; Caboclo, L.; Finnigan, S.; Kaplan, P.W.; Shibasaki, H.; Pressler, R.; Van Putten, M.J. A revised glossary of terms most commonly used by clinical electroencephalographers and updated proposal for the report format of the EEG findings. Revision 2017. Clin. Neurophysiol. Pract. 2017, 2, 170–185. [Google Scholar]

- Amin, U.; Nascimento, F.A.; Karakis, I.; Schomer, D.; Benbadis, S.R. Normal variants and artifacts: Importance in EEG interpretation. Epileptic Disord. 2023, 25, 591–648. [Google Scholar] [CrossRef]

- Xing, L.; Casson, A.J. Deep autoencoder for real-time single-channel EEG cleaning and its smartphone implementation using tensorflow lite with hardware/software acceleration. IEEE Trans. Biomed. Eng. 2024, 71, 3111–3122. [Google Scholar] [CrossRef]

- Cho, H.; Ahn, M.; Ahn, S.; Kwon, M.; Jun, S.C. EEG datasets for motor imagery brain–computer interface. GigaScience 2017, 6, gix034. [Google Scholar] [CrossRef]

- Wu, X.; Zhang, W.; Fu, Z.; Cheung, R.; Chan, R. Ear-EEG Recording for Brain Computer Interface of Motor Task; IEEE: Piscataway, NJ, USA, 2020. [Google Scholar] [CrossRef]

- Obeid, I.; Picone, J. The temple university hospital EEG data corpus. Front. Neurosci. 2016, 10, 196. [Google Scholar] [CrossRef]

- Zheng, W.L.; Lu, B.L. Investigating Critical Frequency Bands and Channels for EEG-based Emotion Recognition with Deep Neural Networks. IEEE Trans. Auton. Ment. Dev. 2015, 7, 162–175. [Google Scholar] [CrossRef]

- Schelter, B.; Winterhalder, M.; Maiwald, T.; Brandt, A.; Schad, A.; Timmer, J.; Schulze-Bonhage, A. Do false predictions of seizures depend on the state of vigilance? A report from two seizure-prediction methods and proposed remedies. Epilepsia 2006, 47, 2058–2070. [Google Scholar] [CrossRef] [PubMed]

- Kaya, M.; Binli, M.K.; Ozbay, E.; Yanar, H.; Mishchenko, Y. A large electroencephalographic motor imagery dataset for electroencephalographic brain computer interfaces. Sci. Data 2018, 5, 180211. [Google Scholar] [CrossRef] [PubMed]

- Torkamani-Azar, M.; Kanik, S.D.; Aydin, S.; Cetin, M. Prediction of reaction time and vigilance variability from spatio-spectral features of resting-state EEG in a long sustained attention task. IEEE J. Biomed. Health Inform. 2020, 24, 2550–2558. [Google Scholar] [CrossRef]

- Reichert, C.; Tellez Ceja, I.F.; Sweeney-Reed, C.M.; Heinze, H.J.; Hinrichs, H.; Dürschmid, S. Impact of stimulus features on the performance of a gaze-independent brain-computer interface based on covert spatial attention shifts. Front. Neurosci. 2020, 14, 591777. [Google Scholar] [CrossRef]

- Rantanen, V.; Ilves, M.; Vehkaoja, A.; Kontunen, A.; Lylykangas, J.; Mäkelä, E.; Rautiainen, M.; Surakka, V.; Lekkala, J. A survey on the feasibility of surface EMG in facial pacing. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1688–1691. [Google Scholar]

- Kanoga, S.; Nakanishi, M.; Mitsukura, Y. Assessing the effects of voluntary and involuntary eyeblinks in independent components of electroencephalogram. Neurocomputing 2016, 193, 20–32. [Google Scholar] [CrossRef]

- Delorme, A.; Makeig, S. EEGLAB: An open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J. Neurosci. Methods 2004, 134, 9–21. [Google Scholar] [CrossRef]

- He, P.; Kahle, M.; Wilson, G.; Russell, C. Removal of ocular artifacts from EEG: A comparison of adaptive filtering method and regression method using simulated data. In Proceedings of the 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference (EMBC 2005), Shanghai, China, 1–4 September 2005; pp. 1110–1113. [Google Scholar]

- Yeung, N.; Bogacz, R.; Holroyd, C.B.; Cohen, J.D. Detection of synchronized oscillations in the electroencephalogram: An evaluation of methods. Psychophysiology 2004, 41, 822–832. [Google Scholar] [CrossRef] [PubMed]

- Kim, H.; Chang, C.Y.; Kothe, C.; Iversen, J.R.; Miyakoshi, M. Juggler’s ASR: Unpacking the principles of artifact subspace reconstruction for revision toward extreme MoBI. J. Neurosci. Methods 2025, 420, 110465. [Google Scholar] [CrossRef] [PubMed]

- Gorjan, D.; Gramann, K.; De Pauw, K.; Marusic, U. Removal of movement-induced EEG artifacts: Current state of the art and guidelines. J. Neural Eng. 2022, 19, 011004. [Google Scholar]

- Giangrande, A.; Botter, A.; Piitulainen, H.; Cerone, G.L. Motion artifacts in dynamic EEG recordings: Experimental observations, electrical modelling, and design considerations. Sensors 2024, 24, 6363. [Google Scholar] [CrossRef]

- Chi, Y.M.; Cauwenberghs, G. Wireless non-contact EEG/ECG electrodes for body sensor networks. In Proceedings of the 2010 International Conference on Body Sensor Networks (BSN 2010), Singapore, 7–9 June 2010; pp. 297–301. [Google Scholar]

- Benatti, S.; Milosevic, B.; Tomasini, M.; Farella, E.; Schoenle, P.; Bunjaku, P.; Rovere, G.; Fateh, S.; Huang, Q.; Benini, L. Multiple biopotentials acquisition system for wearable applications. In Proceedings of the Special Session on Smart Medical Devices-From Lab to Clinical Practice (DATE 2015), Lisbon, Portugal, 12–15 January 2015; Volume 2, pp. 260–268. [Google Scholar]

- Tomasini, M.; Benatti, S.; Milosevic, B.; Farella, E.; Benini, L. Power line interference removal for high-quality continuous biosignal monitoring with low-power wearable devices. IEEE Sens. J. 2016, 16, 3887–3895. [Google Scholar]

- Xu, J.; Mitra, S.; Van Hoof, C.; Yazicioglu, R.F.; Makinwa, K.A. Active electrodes for wearable EEG acquisition: Review and electronics design methodology. IEEE Rev. Biomed. Eng. 2017, 10, 187–198. [Google Scholar] [CrossRef]

- Kalevo, L.; Miettinen, T.; Leino, A.; Kainulainen, S.; Korkalainen, H.; Myllymaa, K.; Töyräs, J.; Leppänen, T.; Laitinen, T.; Myllymaa, S. Effect of sweating on electrode-skin contact impedances and artifacts in EEG recordings with various screen-printed Ag/AgCl electrodes. IEEE Access 2020, 8, 50934–50943. [Google Scholar]

- JCGM. International Vocabulary of Metrology—Basic and General Concepts and Associated Terms (VIM), 3rd ed.; Bureau International des Poids et Mesures (BIPM): Sèvres, France, 2012. [Google Scholar]

- Anders, P.; Müller, H.; Skjæret-Maroni, N.; Vereijken, B.; Baumeister, J. The influence of motor tasks and cut-off parameter selection on artifact subspace reconstruction in EEG recordings. Med. Biol. Eng. Comput. 2020, 58, 2673–2683. [Google Scholar]

- Mullen, T.R.; Kothe, C.A.; Chi, Y.M.; Ojeda, A.; Kerth, T.; Makeig, S.; Jung, T.P.; Cauwenberghs, G. Real-time neuroimaging and cognitive monitoring using wearable dry EEG. IEEE Trans. Biomed. Eng. 2015, 62, 2553–2567. [Google Scholar] [CrossRef]

- Chang, C.Y.; Hsu, S.H.; Pion-Tonachini, L.; Jung, T.P. Evaluation of artifact subspace reconstruction for automatic artifact components removal in multi-channel EEG recordings. IEEE Trans. Biomed. Eng. 2019, 67, 1114–1121. [Google Scholar] [CrossRef]

| Article | Focused Artifact Category (Source) | Experimental Sample | Task Description | Channel Setup No. & Type (Location) | Reference Signal | Assessment Parameters | Assessment Metrics (Results) | Algorithm |

|---|---|---|---|---|---|---|---|---|

| Sweeney et al. (2012) [64] | Instrumental (Cable movements) [64] | R: 6 subjects 4 trials × 540 s | Resting-state | 2 n.r. (Fpz, Fp1) | No contaminated channel | Accuracy | SNR (ΔSNR: (a) 5.1 dB; (b) 9.7 dB; (c) 8.9 dB), Correlation (improvement rate: (a) 37.66%; (b) 83.13%; (c) 76.5%) | (a) Adaptive Filter; (b) Kalman Filter; (c) EEMD-ICA |

| Matiko et al. (2013) [65] | Ocular (Eye blinks) | R: n.r. 60 trials × 1 s | n.r. | 1 dry (Fp1) | Raw EEG | Selectivity | CC (improvement rate: 30.56%) | MCA based on STFT |

| | | | | | | Operational speed | Latency (26.90 ms) | |

| Peng et al. (2013) [66] | Ocular (Eye movements, blinks) | S: 50 trials × 20 s | n.a. | 1 n.a. (n.a.) | Initial EEG | Accuracy | MSE (0.00531), MAE (frequency: = 0.02233, = 0.01436, = 0.00382, = 0.00055; time: 0.00531) | DWT + ANC |

| | | R: 25 subjects 1 trial × 120 s | Resting-state | 3 dry (Fp1, Fp2, Fpz) | Raw EEG | Selectivity | Frequency domain correlation (numerical values n.r.) | |

| | | | | | | Operational speed | Latency (numerical values n.r.) | |

| | | R: 22 subjects 1 trial × 40 s | Resting-state | n.r. | Raw EEG | Selectivity | Frequency domain correlation (numerical values n.r.) | |

| Mihajlovic et al. (2014) [67] | Movement (Head) | R: 6 subjects 3 trials × 60 s | Motor tasks | 4 dry (C3, C4, Cz and Pz) | EEG baseline | Accuracy | Spectral Score (reduction rate: ∼60–70%), Distribution Score (reduction rate: ∼70–80%) | BPF + leaky least-mean square MCAF |

| Zhao et al. (2014) [68] | Ocular (Eye blinks) | S: 50 trials × 30 s | n.a. | 1 n.a. (n.a.) | Initial EEG | Accuracy | MSE (0.6443), MAE (: 0.2501; : 0.1545; : 0.0975; : 0.0174) | DWT + APF |

| | | R: 20 subjects 1 trial × 120 s | Resting-state | 3 dry (Fp1, Fp2, Fpz) | EEG baseline | Selectivity | Frequency domain correlation (numerical values n.r.) | |

| | | | | | | Operational speed | Latency (5000 points, 1 s) | |

| Majmudar et al. (2015) [69] | Ocular (Eye blinks) | R: 3 subjects 1 trial × 45 s | Resting-state | 2 wet (Fp1, Fp2) | Raw EEG | Selectivity | TFA (numerical values n.r.), MSC plot (f > 16 Hz: ∼1; f < 16 Hz: <1), CC (0.39 ± 0.25), MI (0.91 ± 0.12) | Algebraic approach + DWT |

| | | | | | | Operational speed | Latency (improvement rate: ∼25%) | |

| Kim et al. (2015) [70] | Movement (Body, limb) | R: 5 subjects 1 trial × 300 s | Resting-state; dual-task | 14 wet (AF3, F7, F3, FC5, T7, P7, O1, O2, P8, T8, FC6, F4, F8, AF4) | PSD at SSVEP and P300 frequency | Accuracy | SNR (ΔSNR: 0.26 ± 0.11 (SSVEP); 0.07 ± 0.10 (P300)) | Fast ICA + Kalman filter + SVM |

| Rahman et al. (2015) [71] | Ocular (Eye blinks) | S: n.r. | n.a. | 1 n.a. (n.a.) | Initial EEG | Accuracy | SNR (20.23 dB), MSE () | SG filter + ANFIS |

| | | R: n.r. 1 trial × 55 s | Resting-state | 14 n.r. (only FP1 is reported) | EOG | Accuracy | SNR (16.98 dB), MSE () | |

| | | | | | | Selectivity | CC (measured Fp1/estimated Fp1: 0.1478; measured EOG/estimated eye blink: 0.9899) | |

| D’Rozario et al. (2015) [72] | Ocular (Eye movements, blinks); Muscular (S.N.S.); Movement (S.N.S.) | R: 24 subjects 2–4 trials × 28,800 s | Sleep | (a) 6 wet (C3, C4, Fz, Cz, Pz, and Oz), (b) 5 wet (C3, Fz, Cz, Pz and O2) | Artifacts identified by visual inspection | Classification Performance Metrics | Cohen’s kappa (0.53 ± 0.16), Classification Accuracy (93.5 ± 3.0%), TPR (68.7 ± 7.6%), FPR (4.3 ± 1.8%) | SD-based automated artifact detection and removal |

| Chang et al. (2016) [73] | Ocular (Eye blinks) | R: 24 subjects 10 trials × 15 s | Cognitive task | 3 wet (Fp1, Fp2, vEOG) | vEOG | Classification Performance Metrics | TPR (∼99%), FPR (∼10%) | MSDW |

| Zhao et al. (2017) [74] | Ocular (S.N.S.); Muscular (S.N.S.) | R: 170 subjects 1 trial × 72/90 s | Resting-state; audio stimulation | 3 n.r. (Fp1, Fp2, Fpz) | n.c. | Accuracy | Temporal trend comparison (numerical values n.r.) | Wavelet transform + Kalman filter |

| Thammasan et al. (2017) [75] | Ocular (Eye movements); Muscular (S.N.S.); EMI (PLN) | R: 9 subjects 24 trials × 67/112 s | Resting-state; audio stimulation | 8 soft dry (Fp1, Fp2, F3, F4, F7, F8, T7, T8) | Artifacts identified by visual inspection | Classification Performance Metrics on other topics | Enhancement on Classification Accuracy (n.r.), Enhancement on MCC (n.r.) | Automatic rejection based on Regression (pop_rejtrend), Joint Probability (pop_jointprob), Kurtosis (pop_rejkurt), FFT (pop_rejcont) |

| Hu et al. (2017) [76] | Ocular (Eye movements, blinks); Instrumental (EII, TEN) | S: n.r. × 8 s | n.a. | 1 n.a. (n.a.) | Initial EEG | Classification Performance Metrics | Classification accuracy (95.8%) | Adaptive SSA |

| | | R: 3 subjects 1 trial × 120 s | Resting-state | 3 wet (frontal electrodes) | n.c. | Selectivity | Power spectrum differences (numerical values n.r.) | |

| Dehzangi et al. (2018) [77] | Ocular (Eye blinks) | R: 5 subjects 4 trials × 240/360 s | Cognitive task | 7 wet (F7, Fz, F8, T7, T8, Pz, O2) | Artifact labels | Accuracy | DTW distances (multi-score detection performance: 87.4 ± 8.1%) | DTW score + K-means clustering + SVM |

| Cheng et al. (2019) [78] | Cardiac [79]; Ocular (Eye movements, blinks) [80,81]; Muscular (LiMC) [80,81] | SS: 11 subjects n.r. × 10 s | Resting-state; motor-imagery | n.r. wet (n.r.) | No contaminated EEG | Accuracy | RRMSE (triple contamination: SNR = 0.5: 0.23 ± 0.06; SNR = 1.0: 0.18 ± 0.04; SNR = 1.5: 0.15 ± 0.03) | SSA + ICA |

| | | | | | | Selectivity | CC (triple contamination: SNR = 0.5: 0.78 ± 0.06; SNR = 1.0: 0.82 ± 0.04; SNR = 1.5: 0.85 ± 0.03) | |

| Val-Calvo et al. (2019) [82] | Ocular (Eye blinks) | SS: 15 subjects 15 trials × n.r. | Video stimulation | 8 n.r. (AF3, T7, TP7, P7, AF4, T8, TP8, P8) | No contaminated EEG | Selectivity | CORR (0.87 (all bands), 0.86 ()), MI (0.66 (all bands), 0.64 ()) | EAWICA |

| | | | | | | Accuracy | RMSE (0.27 (all bands), 0.29 ()) | |

| Grosselin et al. (2019) [83] | Ocular (Eye movements, blinks); Muscular (MaMC, TeMC); Instrumental (Clipping, electrode pop); Movement (Body) | R1: 3 subjects n.r. | Resting-state | 32 wet (n.r.) | EEG baseline | Accuracy | SNR-based accuracy (SNR < 0 dB: 99.8%; 0 ≤ SNR < 10 dB: 82.5%; SNR ≥ 10 dB: 43.13%) | Classification-based approach |

| | | R2: 21 subjects n.r. | Resting-state | 2 dry (P3, P4) | | | | |

| | | R3: 10 subjects n.r. × 60 s | Resting-state (alert condition) | 2 wet (P3, P4) | | Classification Performance Metrics | Classification Accuracy (92.2 ± 2.2%) | |

| | | R4: 10 subjects n.r. × 60 s | Resting-state (alert condition) | 2 dry (P3, P4) | | | | |

| Rosanne et al. (2019) [84] | Ocular (Eye blinks); Movement (Body, limb) | R: 48 subjects 6 trials × 1200 s | Dual-task | 8 n.r. (FP1, FP2, AF7, AF8, T9, T10, P3, P4) | Raw EEG | Classification Performance Metrics on other topics | Enhancement on Classification Accuracy (no movement: (b) 10%; medium physical activity: (b) 4%; high physical activity: (a) 4%) | (a) ASR + wICA + Random Forest, (b) ASR + ADJUST + Random Forest |

| Inoue et al. (2019) [59] | Ocular (Eye movements, blinks); Muscular (OOcMC); Movement (Body) | R: 10 subjects 1 trial × n.r. | Resting-state; Motor task | 8 n.r. (F3, C3, T3, O1, F4, C4, T4, O2) | Recorded video | n.c. | n.c. | Automatic detection algorithm based on frequency analysis |

| Blum et al. (2019) [85] | Ocular (Eye blinks) | R: 27 subjects 1 trial × n.r. | Resting-state; dual-task | 24 wet (n.r.) | EEG baseline | Accuracy | SNR (numerical values n.r.) | Riemannian ASR |

| | | | | | | Sensitivity | Blink amplitude (similarity value: 0.15) | |

| | | | | | | Operational speed | Latency (5.6 ± 0.7 s) | |

| Butkevičiūtė et al. (2019) [86] | Movement (Body, limb) | SS: n.r. 10 trials × 60 s | Motor tasks | n.r. | No contaminated EEG | Selectivity | Pearson’s correlation coefficient (0.055 ± 0.058) | BEADS + EMD |

| Albuquerque et al. (2019) [87] | Ocular (S.N.S.); Movement (Body, limb) | R: 47 subjects 2 trials × n.r. | Motor task | 8 dry (T9, AF7, FP1, FP2, AF8, T10) | n.r. | Accuracy | PSD (ANOVA: 0.8715 ± 0.0699; mRMR: 0.8706 ± 0.0701), AMRC (ANOVA: 0.8815 ± 0.0521; mRMR: 0.8440 ± 0.0608) | wICA |

| Liu et al. (2019) [88] | Muscular (S.N.S.) | SS: 31 subjects n.r. | Resting-state | 6 wet (n.r.) | No contaminated EEG | Accuracy | RRMSE (numerical values n.r.) | FMEMD-CCA |

| | | | | | | Selectivity | CC (numerical values n.r.) | |

| Casadei et al. (2020) [89] | Other (generic large artifacts); Instrumental (Electrode pop) | R: 1 subject n.r. | n.r. | 1 n.r. (O2) | Band-pass filtered EEG | Selectivity | Amplitude and phase consistency (numerical values n.r.) | Model-based amplitude estimation |

| Islam et al. (2020) [90] | Instrumental (Cable movements); Movement (Body, limb) | R: 6 subjects 9 trials × 240 s | Resting-state; motor tasks | 21 dry (Fp1, Fp2, F7, F3, Fz, F4, F8, A1, T3, C3, Cz, C4, T4, A2, T5, P3, Pz, P4, T6, O1, O2) | EEG baseline | Accuracy | Artifact reduction rate (6.96 ± 2.96%), SNR (ΔSNR: 10.74 ± 4.24 dB), RMSE (ΔRMSE: 48.71 ± 36.14 mV) | Infomax ICA |

| | | | | | | Selectivity | PSD distortion (improvement: 51.00 ± 21.36%), correlation (improvement: 77.31 ± 12.57%), coherence (improvement: 94.82 ± 5.54%) | |

| | | SS: 5 subjects n.r. | Resting-state | n.r. | No contaminated EEG | Classification Performance Metrics | Classification Accuracy (90.8 ± 4.7%), TPR (84.4 ± 22.8%), FPR (45.1 ± 59.7%) | |

| Noorbasha et al. (2020) [91] | Ocular (S.N.S.) | SS: 3 subjects 1 trial × 120 s | Resting-state | 3 wet (frontal electrodes) | No contaminated EEG | Accuracy | SNR-based RRMSE (SNR = 8 dB, RRMSE = 98%), MAE (ΔMAE: −17.43 ± 1.11 dB) | Ov-ASSA + ANC |

| Dey et al. (2020) [92] | Ocular (Eye blinks); Muscular (CSMC) | R: 20 subjects 10 trials × 10 s | Resting-state | 19 wet (n.r.) | n.r. | Classification Performance Metrics | Classification Accuracy (82.1 ± 2.9%), F1-score (0.800 ± 0.023) | MLP-based model |

| | | | | | | Inference time | n.r. | |

| | | | | | n.a. | Hardware efficiency | Power consumption (over 70% reduction of model size with >3% loss in accuracy) | |

| Liu et al. (2021) [93] | Muscular (MaMC, TeMC) | R: 10 subjects 24 trials × 7 s | SSVEP | 8 wet (POz, PO3, PO4, PO5, PO6, Oz, O1, O2) | EMG reference | Classification Performance Metrics | Classification Accuracy improvement (1-channel 24.42%, 3-channels 15.72%) | RLS Adaptive Filter |

| Kumaravel et al. (2021) [94] | Ocular (Eye blinks); Movement (Head, body, limb) | R: 6 subjects 3 trials × 25 s | SSVEP | 8 wet (n.r.) | EEG baseline | Selectivity | SSVEP analysis (FTR improvement: 2 Hz–Correction 18.7%, 4 Hz–Removal 67.5%, 8 Hz–Removal 49.5%) | ASR |

| Shahbakhti et al. (2021) [95] | Ocular (Eye blinks) | SS: 1368 trials × 4104 s | n.a. | 1 n.a. (n.a.) | Initial EEG | Classification Performance Metrics | TPR (95.77 ± 4.14%), FPR (0.0057 ± 0.007) | VME + DWT |

| | | | | | | Accuracy | RRMSE (0.135 ± 0.031) | |

| | | | | | | Selectivity | CC (0.955 ± 0.024), PSD difference (: , : , : , : , : ) | |

| | | R: 32 subjects 3000 trials × 9000 s | Motor-imagery; attention task | 1 wet (frontal electrode) | Raw EEG | Classification Performance Metrics | TPR (95.3 ± 2.3%), FPR (0.0074 ± 0.0024) | |

| Sha’bani et al. (2021) [96] | Ocular (Eye blinks) | SS: 36 subjects n.r. × 1280 s | Resting-state | n.r. (focus on AF3) | No contaminated EEG | Accuracy | RMSE (7.62 ± 2.51) | EEMD + OD + cubic spline interpolation |

| | | | | | | Selectivity | Pearson’s correlation (0.802 ± 0.102), PSD differences (: 7.11 ± 2.90; : 1.68 ± 0.79; : 1.99 ± 1.41; : 10.09 ± 13.29; : 7.80 ± 9.77), SAR (∼12) | |

| Aung et al. (2021) [97] | Instrumental (Cable movements) | R: 6 subjects 24 trials × 540 s | Resting-state | 2 n.r. (Fpz, Fp1) | No contaminated channel | Classification Performance Metrics | Classification Accuracy (86.2 ± 5.9%), TPR (84.8 ± 6.3%), FPR (2.0 ± 4.5%) | M-mDistEn |

| Zhang et al. (2021) [58] | Ocular (Eye blinks); Muscular (MaMC, TeMC, PMC, LaMC, ToMC, NMC); Movement (Tremor); EMI (PLN, S.N.S.) | SS: n.r. n.r. × 10 s | n.a. | 1 n.a. (n.a.) | Initial EEG | Accuracy | RMSE (Non-Blink zones: 0.59 ± 0.07; Blink zones: 2.81 ± 0.38) | DWT + CCA |

| | | | | | | Selectivity | CC (Non-Blink zones: 0.947 ± 0.003; Blink zones: 0.167 ± 0.027) | |

| | | R: 23 subjects 1 trial × n.r. | Sleep | 23 n.r. (focus on C4, P7, FT9, FP1) | Raw EEG | Selectivity | CC (0.923 ± 0.048), MI (1.00 ± 0.33), MSC plot (numerical values n.r.) | |

| Noorbasha et al. (2021) [98] | Instrumental (Cable movements) [79] | R: 6 subjects 4 trials × 540 s | Resting-state | 2 n.r. (P2, P1) | No contaminated EEG | Accuracy | SNR (ΔSNR: 1.6 dB (0.79% overlap)), RRMSE (improvement rate: 15.62% (0.79% overlap)) | SSA with modified grouping |

| | | | | | | Operational speed | Latency (0.84 s (0.79% overlap)) | |

| Ingolfsson et al. (2022) [99] | Ocular (Eye movements); Instrumental (Electrode pop, displacement); Muscular (MaMC, TeMC); Movement (Tremor) | R: 213 subjects n.r. | n.r. | 22 n.r. (focus on F7, T3, T3, T5, F8, T4, T4, T6) | Artifact labels | Classification Performance Metrics | Classification Accuracy (87.8 ± 1.5%), F1-score (0.850 ± 0.019) | DWT + MMC |

| Chen et al. (2022) [100] | Ocular (Eye movements, blinks); Muscular (MaMC, TeMC, CSMC); Movement (Head); EMI (PLN) | R: 32 subjects 6 trials × 720 s | Audio and video stimulation | 8 wet (F3, F4, C3, C4, T3, T4, O1, O2) | Raw EEG | Selectivity | PSD differences (numerical values n.r.) | MRA + CCA + SVM OD |

| | | SS: 32 subjects n.r. × 1200 s | n.r. | 8 wet (F3, F4, C3, C4, T3, T4, O1, O2) | No contaminated EEG | Accuracy | NSR-based RRMSE (NSR = 6 dB, Ocular: 12.7 ± 2.2; Muscular and Movement: 14.2 ± 2.5; PLN continuous: 10.3 ± 1.6; PLN intermittent: 12.0 ± 1.9) | |

| | | R: 12 subjects 440 trials × 880 s | Video stimulation | 3 wet (Cz, Pz, Oz) | Artifact-related ICs | Selectivity | ERP peak amplitudes (numerical values n.r.) | |

| Occhipinti et al. (2022) [101] | Muscular (MaMC, TeMC, PMC, LaMC, ToMC); Movement (Body) | R: 12 subjects 1 trial × 120 s | Resting-state; cognitive tasks | 1 wet (into the ear canal) | Raw EEG | Selectivity | Amplitude and mean power reduction rate (numerical values n.r.) | NA-MEMD |

| Paissan et al. (2022) [102] | Ocular (Eye movements, blinks) [103]; Muscular (CSMC, ZMC, OOrMC, OOcMC, MaMC) [103] | SS: 105 subjects 1 trial × n.r. | Resting-state; motor tasks | n.r. | No contaminated channel | Classification Performance Metrics | SNR-based classification accuracy (SNR = 3 dB) (classification accuracy = 75%) | 1D-CNN with HPO |

| Peh et al. (2022) [104] | Ocular (Eye movements); Muscular (MaMC, TeMC, S.N.S.); Instrumental (Electrode pop); Movement (Tremor) | R: 310 subjects 1 trial × n.r. | Resting-state; dual-task | 19 n.r. (Fp1, F3, C3, P3, F7, T3, T5, O1, Fz, Cz, Pz, Fp2, F4, C4, P4, F8, T4, T6, O2) | Artifact labels | Classification Performance Metrics | Balanced Accuracy (muscular: 0.95; instrumental: 0.73; ocular: 0.83; movement: 0.86), TPR (49.2 ± 10.3%), FPR (3.0 ± 1.6%) | CNN with BM loss |

| Brophy et al. (2022) [105] | Ocular (S.N.S.); Muscular (S.N.S.); EMI (PLN) | SS: n.r. | Motor-imagery | n.r. | No contaminated EEG | Accuracy | RRMSE (numerical values n.r.) | GAN |

| | | | | | | Selectivity | CC (numerical values n.r.), PSD differences (numerical values n.r.) | |

| Xiao et al. (2022) [106] | Cardiac; Ocular (Eye movements); Muscular (S.N.S.); Instrumental (EII); EMI (PLN) | R: 28 subjects 40 trials × 20–60 s | Resting-state | 22 wet (n.r.) | Raw EEG | Selectivity | Spectrum differences (numerical values n.r.) | Modified ASR method based on spectral properties |

| | | | | | n.a. | Hardware efficiency | Power consumption (numerical values n.r.) | |

| Arpaia et al. (2022) [107] | Ocular (Eye movements, blinks); Muscular (S.N.S.) | R: 13 subjects 1 trial × 900–2700 s | Resting-state | 27 n.r. | EEG baseline | Accuracy | RMSE (numerical values n.r.) | ASR |

| | | | | | | Selectivity | SD differences (numerical values n.r.) | |

| Noorbasha et al. (2022) [108] | Instrumental (Electrode pop, EII) | R: n.r. 5 trials × 5 s | Resting-state | 18 n.r. | Raw EEG | Accuracy | MAE (0.0282 ± 0.0211) | SWT + GSTV |

| | | | | | | Selectivity | PSD differences (numerical values n.r.) | |

| | | SS: 22 trials × 5 s | n.a. | 1 n.a. (n.a.) | Initial EEG | Accuracy | RRMSE (SNR = 6 dB, 0.45 ± 0.05) | |

| | | | | | | Selectivity | CC (SNR = 6 dB, 0.86 ± 0.03) | |

| Zhang et al. (2022) [109] | Cardiac; Ocular (Eye movements, blinks); Muscular (S.N.S.); Instrumental (Electrode displacement) | SS: 27 subjects 2 trials × 30 s | Resting-state | 19 n.r. (Fp1, Fp2, F3, F4, C3, C4, P3, P4, O1, O2, F7, F8, T3, T4, T5, T6, Fz, Cz, Pz) | No contaminated EEG | Accuracy | RRMSE (mixed artifacts: 0.60) | GRU-MARSC |

| | | | | | | Selectivity | CC (mixed artifacts: 0.81), PSD differences (numerical values n.r.) | |

| | | | | | | Classification Performance Metrics | Classification Accuracy (98.52%), PPV (98.22%), TPR (98.81%) | |

| | | | | | | Operational speed | Latency (10,250 samples, 11.05 s) | |

| Jayas et al. (2023) [110] | Ocular (Eye movements, blinks) Muscular (S.N.S.) | R: 6 subjects n.r. | Motor task | 8 wet (4 in each ear, 2 in front and back of the ear, 2 in upper and bottom) | Co-registered scalp-EEG | Accuracy | RMS (n.r.), SNR (n.r.), ZCR (n.r.), Max Gradient (n.r.) | Classification model based on Random Forest |

| Selectivity | Skewness (n.r.), Kurtosis (n.r.), Spectral Entropy (n.r.), ACF (n.r.) | |||||||

| Classification Performance Metrics | Classification accuracy (76.70%), F1-score (0.85%) | |||||||

| Narmada et al. (2023) [111] | Cardiac; Ocular (Eye movements); Muscular (LiMC) | SS: (a) 22 subjects n.r. × 8 s; (b) 9 subjects 576 trials × 8 s | (a) n.r.; (b) Motor-imagery | (a) n.r.; (b) 22 n.r. | No contaminated EEG | Accuracy | MAE (Cardiac: 1.13 ± 0.64; Muscular: 1.22 ± 1.18; Ocular: 0.81 ± 0.19), PSNR (Cardiac: 44.76 ± 2.13; Muscular: 44.74 ± 3.88; Ocular: 46.05 ± 0.95), RMS (Cardiac: 1.17 ± 0.64; Muscular: 1.29 ± 1.20; Ocular: 0.85 ± 0.19) | Deep learning + adaptive wavelet |

| Selectivity | CC (Cardiac: 1.174 ± 0.006; Muscular: 1.137 ± 0.036; Ocular: 1.177 ± 0.0003) | |||||||

| Efficiency | CSED (Cardiac: 1373.9 ± 0.65; Muscular: 1374.8 ± 5.16; Ocular: 1378.8 ± 2.68) | |||||||

| Mahmud et al. (2023) [112] | Instrumental (Cable Movement) [64] | R: 6 subjects 4 trials × 540 s | Resting-state | 2 n.r. (Fpz, Fp1h) | No contaminated channel | Accuracy | DSNR (26.641 dB), MAE (0.056 ± 0.025), artifact reduction rate (90.52%) | Deep learning + adaptive wavelet |

| Selectivity | PSD comparison (numerical values n.r.) | |||||||

| Jiang et al. (2023) [113] | Ocular (Eye blinks) | SS: 27 subjects 1 trial × n.r. | Resting-state | 1 dry (Fp1) | No contaminated EEG | Classification Performance Metrics | TPR (92.86%), FPM (0.85) | VME + MFE + GWO |

| R: 9 subjects n.r. × 480–900 s | Resting-state | 1 n.r. (Fp1/Fp2) | Expert-annotated blinks | Classification Performance Metrics | CCR (97.63%), TPR (92.64%), FPM (0.02), FDR (2.37%) | |||

| Cui et al. (2023) [60] | Cardiac [79]; Ocular (Eye movements, blinks) [103]; Muscular (CSMC, ZMC, OOrMC, OOcMC, MaMC) [103]; Movement (Body) [64] | SS: 158 subjects 1 trial × n.r. | Resting-state | 1 n.a. (n.a.) | No contaminated EEG | Accuracy | RRMSE (Muscular: 0.356; Ocular: 0.210; Cardiac: 0.273; Movement: 0.262), SNR (Muscular: 9.463; Ocular: 14.653; Cardiac: 10.275; Movement: 11.951) | EEGIFNet |

| Selectivity | CC (Muscular: 0.926; Ocular: 0.974; Cardiac: 0.951; Movement: 0.945) | |||||||

| Efficiency | CSED (flop of 100.784 M) | |||||||

| Kaongoen et al. (2023) [61] | Ocular (Eye movements, blinks); Instrumental (Cable movements) | SS: (i) 24 subjects 1 trial × 540 s [79] (ii) 33 subjects n.r. [114] | Resting-state | 1 n.a. (n.a.) | No contaminated EEG | Accuracy (n.r.) | MSE ( MSE: 9.39 ± 1.45), SNR ( SNR: 15.24 ± 0.52) | WT + ASR |

| Selectivity (n.r.) | CC (0.210 ± 0.095) | |||||||

| Kumaravel et al. (2023) [115] | n.c. | R1: 6 subjects 3 trials × 25 s | SSVEP | 8 dry (n.r.) | Raw EEG | Selectivity | FTR (2 Hz: 1.3 ± 0.3; 4 Hz: 3.0 ± 0.8; 8 Hz: 5.5 ± 1.5) | IMU-ASR |

| R2: 37 subjects 4 trials × 600 s | Motor task | 120 wet (n.r.) | Raw EEG | Selectivity | Brain ICs (No.: 6 ± 3), muscle ICs (No.: 12 ± 9) | |||

| Li et al. (2023) [116] | Ocular (Eye movements, blinks); Muscular (CSMC, ZMC, OOrMC, OOcMC, MaMC); Movement (S.N.S.) | SS: (i) 27 subjects, 2 trials × n.r. [114] (ii) 105 subjects, 1 trial × n.r. [103] | (i) Resting-state (ii) Resting-state, motor tasks | (i) 19 n.r. (FP1, FP2, F3, F4, C3, C4, P3, P4, O1, O2, F7, F8, T3, T4, T5, T6, Fz, Cz, Pz); (ii) n.r. | Raw EEG | Accuracy | RRMSE (0.4129 ± 0.0979), SNR (6.0321 ± 1.8962) | ResUnet1D-RNN |

| Selectivity | CC (90.75% ± 4.27) | |||||||

| R [117]: 23 subjects, 23–26 trials × n.r. | Resting-state | n.r. | Raw EEG | Selectivity | Waveform and PSD qualitative analysis (numerical values n.r.) | |||

| Yin et al. (2023) [118] | Ocular (Eye movements, blinks) | SS: 27 subjects from [114] n.r. | Resting-state | 8 n.r. (FP1, FP2, F3, F4, F7, F8, T3, T4) | No contaminated EEG | Accuracy | SNR (11.123 ± 1.306), RRMSE (0.340 ± 0.044) | GCTNet Generator (CNN and Transformer Blocks) |

| Selectivity | CC (0.929 ± 0.015) | |||||||

| O’Sullivan et al. (2023) [119] | Instrumental (Poor electrode contact); Muscular (S.N.S.); Movement (S.N.S.); Cardiac | R: 51 subjects n.r. | Daily activities | 9 disposable (F3, F4, C3, C4, Cz, T3, T4, O1, O2) | Raw EEG | Classification Performance Metrics | AUC (0.844), MCC (0.649), Classification Sensitivity (0.794), Classification Specificity (0.894) | CNN deep learning architecture |

| Chen et al. (2023) [120] | Ocular (Eye movements, blinks); Muscular (Head movement) | SS: 52 subjects from [103] n.r. | Motor-imagery | 1 n.r. (n.r.) | No contaminated EEG | Accuracy | RRMSE (0.444) | Denoiseformer (Transformer-based encoder and self-attention mechanism) |

| Selectivity | CC (0.859) | |||||||

| Hermans et al. (2023) [121] | Instrumental (Device interference, electrodes); Muscular (S.N.S.); Movement (S.N.S.); Cardiac | R: 133 subjects n.r. | Daily activities | 8 n.r. (Fp1, Fp2, C3, C4, T3, T4, O1, O2) | Raw EEG | Classification Performance Metrics | Classification Accuracy (96.6%), F1-score (86.2), Miss Rate (11.7) | Semi-supervised multi-task CNN (encoder + decoder) |

| Bahadur et al. (2024) [122] | Ocular (Eye movements, blinks) | SS: 27 subjects n.r. | Resting-state | 19 n.r. | No contaminated EEG | Accuracy | RMSE (2.22 ± 0.27) | DWT + LMM |

| Selectivity | CC (0.93 ± 0.02) | |||||||

| n.a. | Hardware efficiency | Silicon area (area: 5181.73 μm², power: 446.06 W) | ||||||

| Arpaia et al. (2024) [123] | Ocular (Eye movements, blinks); Muscular (TeMC, MaMC); Movement (Head) | R: 2 subjects 50 trials × 40 s | Resting-state | 8 dry (Fz, C3, Cz, C4, Pz, PO7, PO8, Oz) | EEG baseline | Accuracy | RRMSE (4-channels (k = 9): contaminated 5.0 ± 0.0, clean 4.5 ± 2.0; 3-channels (k = 8): contaminated 6.0 ± 4.0, clean 5.5 ± 3.5; 2-channels (k = 9): contaminated 3.0 ± 0.0, clean 3.0 ± 0.0) | MEMD + ASR |

| Ingolfsson et al. (2024) [124] | Ocular (Eye movements); Muscular (MaMC, TeMC, S.N.S.); Movement (Tremor) | R: 22 subjects n.r. | Resting-state | 4 n.r. (F7-T7, T7-P7, F8-T8, T8-P8) | Artifact labels | Classification Performance Metrics | Classification Accuracy (93.95%), Sensitivity (61.27 ± 5.66%), FPR (FP-h) (<0.58) | XGBoost-based model |

| Saleh et al. (2024) [125] | Ocular (Eye movements) [114]; Muscular (CSMC, ZMC, OOrMC, OOcMC, MaMC) [103]; Instrumental (Electrode displacement) [64] | SS: 138 subjects 1 trial × n.r. | Resting-state; motor tasks | 64 n.r. (n.r.) | No contaminated EEG | Accuracy (n.r.) | RRMSEt (Ocular: 0.52 ± 0.10; Movement: 0.70 ± 0.10; Muscular: 0.58 ± 0.16; Clean EEG: 0.30 ± 0.07), RRMSEf (Ocular: 0.53 ± 0.20; Movement: 0.72 ± 0.17; Muscular: 0.59 ± 0.18; Clean EEG: 0.42 ± 0.11) | Deep Convolutional Autoencoder |

| Selectivity | CC (Ocular: 0.86 ± 0.06; Movement: 0.71 ± 0.11; Muscular: 0.80 ± 0.12; Clean EEG: 0.95 ± 0.02) | |||||||

| Nair et al. (2025) [126] | Ocular (Eye movements); Muscular (MeMC, SubMc) | R: 197 subjects 2 trials × 72,000 s | Resting-state | 2 n.r. (n.r.) | Raw EEG | Accuracy (n.r.) | RMSE (3.0 ± 0.5 V), SNR (22.5 ± 1.2 dB) | CNN + LMS (OBC Radix-4 DA) |

| Selectivity | CC (0.93 ± 0.02) | |||||||
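
Several of the accuracy and selectivity metrics reported above (RRMSE, SNR, CC) compare a processed signal against a reference signal. The following is a minimal sketch of how such metrics are commonly computed in the time domain, assuming a clean reference and a denoised signal of equal length. The function names (`rms`, `rrmse`, `snr_db`, `cc`) are illustrative and do not come from any of the reviewed studies, and individual studies may adopt different definitions or normalizations.

```python
import math


def rms(x):
    """Root mean square of a sequence of samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def rrmse(reference, processed):
    """Relative RMSE: RMS of the residual divided by RMS of the reference."""
    residual = [p - r for r, p in zip(reference, processed)]
    return rms(residual) / rms(reference)


def snr_db(reference, processed):
    """SNR in dB, treating (processed - reference) as the residual noise."""
    residual = [p - r for r, p in zip(reference, processed)]
    return 20.0 * math.log10(rms(reference) / rms(residual))


def cc(reference, processed):
    """Pearson correlation coefficient between reference and processed signals."""
    n = len(reference)
    mean_r = sum(reference) / n
    mean_p = sum(processed) / n
    cov = sum((r - mean_r) * (p - mean_p) for r, p in zip(reference, processed))
    var_r = sum((r - mean_r) ** 2 for r in reference)
    var_p = sum((p - mean_p) ** 2 for p in processed)
    return cov / math.sqrt(var_r * var_p)


if __name__ == "__main__":
    # Toy example (assumed data): a 10 Hz sinusoid as the "clean" reference
    # and the same sinusoid plus a small 50 Hz residual as the "denoised" output.
    fs = 250  # sampling rate in Hz (assumption)
    t = [i / fs for i in range(fs)]
    clean = [math.sin(2 * math.pi * 10 * ti) for ti in t]
    denoised = [c + 0.1 * math.sin(2 * math.pi * 50 * ti) for c, ti in zip(clean, t)]
    print(f"RRMSE = {rrmse(clean, denoised):.3f}")
    print(f"SNR   = {snr_db(clean, denoised):.2f} dB")
    print(f"CC    = {cc(clean, denoised):.3f}")
```

Under these definitions, a lower RRMSE and a higher SNR indicate a closer match to the reference, while CC by construction lies between −1 and 1.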

| Public Dataset | Data Type | Signal Category | Experimental Protocol | Participants | Hardware | Signal Processing |

|---|---|---|---|---|---|---|

| EEG datasets for motor-imagery brain–computer interface [133] | R | Reference (Physiological EEG and Ocular and EMG Artifact) | Environmental Conditions: laboratory setting, with background noise level held between 37 and 39 decibels; Subject Position: sitting in a chair with armrests in front of a monitor; Stimulus/Task: (i) motor-imagery movement of left and right hands, (ii) resting state EO, and (iii) artifact recordings (eye blinking, eyeball movement up/down and left/right, head movement, and jaw clenching) | No. and Type: 52 healthy subjects; Age: 24.8 ± 3.9 years; Sex: 19 F, 33 M | Device: Biosemi ActiveTwo system; Electrodes No.: 64; Electrodes Type: n.r. | Common average reference, fourth-order Butterworth filter [8–30 Hz] |

| BCI Competition 2008 Graz data set B [80] | R | Reference (Physiological EEG and EOG Artifact) | Environmental Conditions: n.r.; Subject Position: sitting in an armchair in front of an LCD computer monitor placed approximately 1 m away at eye level; Stimulus/Task: (i) motor-imagery movement of the left and right hands, (ii) rest EO (while looking at a fixation cross on the screen), (iii) rest EC, (iv) eye movements (eye blinking, rolling, up–down, left–right movements) | No. and Type: 10 healthy subjects; Age: 24.7 ± 3.3 years; Sex: 6 M, 4 F | Device: Easycap; Electrodes No.: 3 bipolar recordings extracted from the 22 total electrodes, with EOG recorded from 3 monopolar electrodes; Electrodes Type: n.r. | BPF [0.5–100 Hz] and notch filter at 50 Hz |

| BCI Competition 2008 Graz data set A [81] | R | Reference (Physiological EEG and EOG Artifact) | Environmental Conditions: n.r.; Subject Position: sitting in a comfortable armchair in front of a computer screen; Stimulus/Task: (i) four motor-imagery tasks (left and right hand, both feet, and tongue), (ii) EO (looking at a fixation cross on the screen), (iii) EC, (iv) eye movements | No. and Type: 9 healthy subjects; Age: n.r.; Sex: n.r. | Device: n.r.; Electrodes No.: n.r.; Electrodes Type: n.r. | n.r. |

| Ear-EEG Recording for Brain Computer Interface of Motor Task [134] | R | Reference (Physiological EEG) | Environmental Conditions: n.r.; Subject Position: sitting in front of a computer monitor; Stimulus/Task: subjects were asked to imagine and grasp the left or right hand according to the direction of an arrow shown on the computer monitor | No. and Type: 6 healthy subjects; Age: 22–28 years; Sex: 2 M, 4 F | Device: Neuroscan Quick Cap (Model C190); Electrodes No.: n.r. for the cap-EEG, 8 ear electrodes for the ear-EEG; Electrodes Type: n.r. | BPF [0.5–100 Hz] together with a notch filter |

| A Methodology for Validating Artifact Removal Techniques for Physiological Signals [64] | R | Reference (Physiological EEG and Cable Motion Artifact) | Environmental Conditions: n.r.; Subject Position: n.r.; Stimulus/Task: subjects were asked to keep their eyes closed and maintain a stationary head position throughout the experiment, and a motion artifact was then induced at one of the electrodes by pulling on the connecting lead | No. and Type: 6 healthy subjects; Age: 27.0 ± 4.3 years; Sex: 3 M, 3 F | Device: Electro-cap International; Electrodes No.: 2 electrodes considered out of the 256 electrodes composing the EEG device; Electrodes Type: wet | n.r. |

| The impact of the MIT-BIH Arrhythmia Database [79] | R | Reference (Physiological ECG) | Environmental Conditions: clinical setting at Boston’s Beth Israel Hospital (BIH; now the Beth Israel Deaconess Medical Center); Subject Position: n.r.; Stimulus/Task: n.r. | No. and Type: 23 healthy subjects and 24 participants with uncommon but clinically important arrhythmia (47 subjects in total); Age: 23 to 89 years; Sex: 25 M, 22 F | Device: n.r.; Electrodes No.: n.r.; Electrodes Type: n.r. | n.r. |

| TUH EEG Artifact Corpus dataset [135] | R | Reference (Physiological EEG) | Environmental Conditions: archival records acquired in clinical settings at Temple University Hospital (TUH); Subject Position: n.r.; Stimulus/Task: n.r. | No. and Type: archival recordings of 10,874 healthy and clinical subjects; Age: 1 to 90 years; Sex: 51% F, 49% M | Device: n.r.; Electrodes No.: n.r.; Electrodes Type: n.r. | n.r. |

| SEED database [136] | R | Reference (Physiological EEG and EOG Artifact) | Environmental Conditions: n.r.; Subject Position: n.r.; Stimulus/Task: subjects were asked to watch 15 film clips designed to elicit positive, neutral, and negative emotions | No. and Type: 15 healthy subjects; Age: 23.3 ± 2.4 years; Sex: 7 M, 8 F | Device: ESI NeuroScan System for EEG signal acquisition and SMI eye-tracking glasses for eye movements; Electrodes No.: n.r.; Electrodes Type: n.r. | BPF [0–75 Hz] |

| CHB-MIT Scalp EEG Database [117] | R | Reference (Physiological EEG) | Environmental Conditions: n.r.; Subject Position: n.r.; Stimulus/Task: EEG recordings were acquired during and after seizures | No. and Type: 22 pediatric subjects with intractable seizures; Age: 1.5 to 22 years; Sex: 5 M, 17 F | Device: n.r.; Electrodes No.: n.r.; Electrodes Type: n.r. | n.r. |

| Freiburg EEG dataset [137] | R | Reference (Physiological EEG) | Environmental Conditions: clinical setting in the Epilepsy Center of the University Hospital of Freiburg, Germany; Subject Position: n.r.; Stimulus/Task: EEG recordings were made during invasive pre-surgical epilepsy monitoring | No. and Type: 21 patients suffering from medically intractable focal epilepsy; Age: n.r.; Sex: n.r. | Device: Neurofile NT digital video EEG system; Electrodes No.: 128 depth electrodes; Electrodes Type: n.r. | No notch filter or BPF was applied |